6

Evaluating Predictive Systems

The ability to identify potentially invasive species could yield enormous benefits. Quarantine measures could be streamlined to search primarily for designated species at points of entry, and detection efforts within the United States could be focused on the habitats in which the species are likely to reside. Such identification ability would replace the searches for often vaguely defined threats (poorly characterized invasive species) with searches for organisms that are legitimate causes for concern and action.

A determination that a given nonindigenous plant or plant pest has the potential to become invasive in the United States presumes that information about it and its introduction is known, such as its characteristics; the specific event that brings it into the United States; where, when, and how it is brought in; that it can become established, proliferate, and spread in its new environment; and the harm it would do. The same criteria apply to nonindigenous organisms introduced as biological control agents (Strong and Pemberton 2000).

Prediction in this sense is not explanation. Explanation identifies why a phenomenon or event has occurred; in science, explanation is often based on statistical analysis of experimental observations. Much of the material in Chapters 2-4 is a review of factors that explain, to various extents, why some organisms are able to persist and spread, outcompete natives, and alter ecosystems. In contrast, prediction is the generation of statements about the likelihood that events will occur (Williamson 1996).

The committee concluded that a scientifically based system to predict invasiveness must satisfy three general criteria:

- It must be transparent, be open to review, and have been evaluated by peers.
- It must have a logical framework that includes independent factors (identified through critical observation, experimentation, or both) important in the invasion process.
- Use of the framework must be repeatable and lead to the same outcome, regardless of who makes the predictions.

This chapter examines approaches for predicting the invasiveness of plants and the arthropod and pathogenic pests of plants in the United States. Most predictive systems rely principally on observational data (sometimes coupled with traits, origins, and the like). The data may be grouped taxonomically on coarse or fine levels (for example, all plants, animals, or microorganisms; or according to family, genus, or species), by characteristic (such as reproductive mode, mode of propagation, or environmental range), or by locale and climate of origin. The committee suggests a broader framework for organizing information used in predictive systems; the framework is based on the sequential steps of invasion: arrival, persistence or establishment, and proliferation and spread.

This chapter also addresses systems of prediction that are augmented by an assessment of the value or character of invasions and an assessment of the certainty of or confidence in their occurrence. Predictions based on this broader dimension of information and analysis are risk assessments, specifically ecological risk assessments.

Predicting biotic invasions has been based largely on identification of species that already have a record of invasiveness. This approach seems almost obvious, especially for plant pathogens. For example, the fungal pathogens of cereals are equally likely to be infectious on a given wheat variety in the United States, in Australia, and in western Europe. It would be imprudent to assume otherwise unless there were specific information to the contrary. Similar examples occur for arthropod pests. Even though the Russian wheat aphid has not yet produced the devastation in the United States that it produces elsewhere, it is a pest of wheat in many new ranges (Hughes 1996). No prolonged monitoring was deemed necessary to enforce control of the aphid once it appeared in the United States. Plant species known to be invasive have routinely sparked awareness of their invasive potential in unexploited new ranges. The World’s Worst Weeds, the compendium by Holm et al. (1991), is empirical testimony to the recurrence of some species as invaders in many ranges. The first detection of one of these species in a new range should spark immediate eradication efforts. The record of a plant’s invasiveness in other geographic areas beyond its native range remains the most reliable predictor of its ability to establish and invade. The same is true for arthropods and pathogens if host plants they can use elsewhere also occur in the United States.

Expert judgment of a species’ history in new ranges has several limitations. First, expert judgment is subjective: given the same information, experts’ evaluations of the threat of an invasion can differ. Second, some species considered potential invaders in the United States simply on the basis of their record elsewhere have yet to become problems here despite their repeated entry (Reed 1977). The failure so far to be a problem has multiple potential causes. On the basis of the information in Chapters 3 and 4, it is apparent that there is a high degree of stochasticity in the outcome of any immigration. A species with a record of invasion could eventually become invasive in an additional new range once stochastic forces were overcome or avoided. Finally, a further dilemma is posed by arthropods and plant pathogens that cause little or no recognizable damage to plants in their native habitats and therefore have been ignored but have the potential to cause substantial damage to those plants’ susceptible North American relatives.

PREDICTION BASED ON CLIMATE-MATCHING

Even before ecology was formalized as a science, the ability to predict the geographic and ecological ranges of species by using climatic similarities was actively sought (von Humboldt and Bonpland 1807, Grace 1987). The extension of this reasoning to predict the potential new range of an introduced species and even whether it would survive at all in a new locale has long been practiced (Johnston 1924, Wilson et al. 1992). Such reasoning is supported by comparison of the climates in native and naturalized ranges for some species. Most striking in this regard is the extensive list of plant species that are native along the border of the Mediterranean Basin and are now naturalized in locales with climates similar to the Mediterranean Region: coastal areas of southern California and Chile, southwest Australia, and the Cape of Good Hope. Furthermore, species from each of the other four regions have become naturalized in one or more of the others (Kruger et al. 1989, Fox 1990).

There have been repeated attempts to develop “climate-matching” models by comparing the voluminous records of the earth’s climates collected at numerous locations (Busby 1986, Panetta and Dodd 1987, Panetta and Mitchell 1991, Cramer and Solomon 1993). The challenge (aside from verifying the accuracy of data from meteorological stations scattered around the globe) has been to integrate these data in an ecologically meaningful way. More important, weather is the stochastic expression of climate, and the persistence of organisms, whether native or introduced, is much influenced by this variation about the mean characteristics of any climate (Mack 1995).

CLIMEX is one example of the models developed to predict the potential ranges of introduced species (Sutherst et al. 1999). The model in its revised forms has been used repeatedly to predict the geographic distributions of plants, microbial pathogens, and arthropods under both current conditions and global climate-change scenarios (Sutherst et al. 1989, 1998, 1999). Its most extensive applications have been useful for predicting the fate of immigrant species that are candidates for release in biological control (by identifying possible sites to collect biocontrol species and sites where they might be released and persist), and in predicting the fate and new ranges of introduced pests (Sutherst 1991a,b).

In its basic composition, CLIMEX contains an open-ended meteorological database of about 2500 locations that span the world. Meteorological data include average monthly maximal and minimal temperatures, relative humidity, and precipitation. The model interpolates monthly average climatic data into weekly values. CLIMEX has two main operating modes: “Match Climates” and “Compare Locations”. The “Match Climates” mode compares meteorological conditions among locations without reference to species. Either a specific meteorological variable (such as minimal winter temperature) or a suite of meteorological variables can be compared between locations for a specific period or the entire year. The model allows users to search the meteorological database in asking “Does Location X [a potential new locale] have a climate similar to that of Location Y [a species’ native or other locale] under preset standards of similarity?” That basic task is common to many climate-matching software programs (Kriticos and Randall 2001).
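The question posed in the “Match Climates” mode can be illustrated with a minimal sketch. It is not the CLIMEX algorithm: the similarity measure, the scale values, the 0.7 threshold, and every function and variable name are assumptions chosen for illustration, and the monthly values are invented.

```python
from math import exp

def variable_similarity(monthly_x, monthly_y, scale):
    """Mean closeness of two 12-month series; 1.0 means identical."""
    if len(monthly_x) != 12 or len(monthly_y) != 12:
        raise ValueError("expected 12 monthly values per location")
    closeness = [exp(-abs(x - y) / scale) for x, y in zip(monthly_x, monthly_y)]
    return sum(closeness) / 12

def climate_match(location_x, location_y, scales):
    """Average the per-variable similarities over the variables named in `scales`."""
    scores = [
        variable_similarity(location_x[name], location_y[name], scale)
        for name, scale in scales.items()
    ]
    return sum(scores) / len(scores)

def is_similar(location_x, location_y, scales, threshold=0.7):
    """Answer the yes/no question under a preset standard of similarity."""
    return climate_match(location_x, location_y, scales) >= threshold

# Hypothetical scales: the difference at which closeness falls to about 0.37.
SCALES = {"min_temp_c": 5.0, "max_temp_c": 5.0, "precip_mm": 40.0}

# Invented monthly averages for a species' current locale (Location Y) ...
location_y = {
    "min_temp_c": [8, 9, 10, 12, 14, 17, 19, 19, 18, 15, 11, 9],
    "max_temp_c": [16, 17, 19, 21, 24, 28, 31, 31, 28, 24, 19, 16],
    "precip_mm": [80, 70, 60, 40, 20, 5, 1, 2, 15, 45, 75, 85],
}
# ... and for a candidate locale (Location X), 1 degree C warmer and 10% drier.
location_x = {
    "min_temp_c": [t + 1 for t in location_y["min_temp_c"]],
    "max_temp_c": [t + 1 for t in location_y["max_temp_c"]],
    "precip_mm": [0.9 * p for p in location_y["precip_mm"]],
}

print(round(climate_match(location_x, location_y, SCALES), 3))
print(is_similar(location_x, location_y, SCALES))
```

Restricting `SCALES` to a single entry, such as minimum temperature, corresponds to comparing one meteorological variable rather than a suite of them.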

The “Compare Locations” function is more applicable to the common need in predicting future invaders: it is used to predict potential distributions of species under current climatic regimes. Predictions are based on the climate in a species’ current range, on experimentally determined tolerances of a species with respect to key environmental characteristics (for example, Vickery 1974), and on life-history information, such as senescence or diapause. Information on a species is used to find other locales in which the species potentially could persist.

A major limitation in the use of climate-matching (to compare a current range with a potential range) as a predictor of a species’ potential new range(s) lies in the assumption that climate is the main, if not the only, determinant of a species’ distribution. Distributions are also strongly influenced by the biotic component of any environment (Crawley 1992, Mack 1996a) and by chance dispersal; that is, a species might be absent from a locale (even in the general native range) through the vagaries of species dispersal rather than through environmental limitation (Sutherst and Maywald 1985, Davis et al. 1998). Furthermore, this system does not attempt to measure the impact of a species in its new range.

In addition, some species’ distributions are comparatively uniform or at least quite narrowly defined: the climatic range that they can tolerate is not reflected in their native or current new distributions. For example, Sorghum halepense (Johnson grass) has extended its range into southern Canada, thereby far exceeding the climatic range that would have been predicted for it by considering its native subtropical range (Warwick et al. 1984). Such complications diminish or at least complicate predictions made by climate-matching models (Sutherst et al. 2000).

Furthermore, climate-matching programs rely on comparing average meteorological data; this approach assumes that the variances about the means are the same (Sutherst et al. 2000). Most important, no current climate-matching model fully incorporates the extent to which the climate at any site varies randomly in observed range extremes.
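A small numerical sketch shows why comparing averages alone can mislead. The temperatures, the lethal threshold, and the function name are invented for illustration; the two sites are constructed to share a mean while differing in variance.

```python
from statistics import mean, pstdev

def summarize_minima(winter_minima_c, lethal_threshold_c):
    """Mean, spread, and count of years colder than the lethal threshold."""
    return {
        "mean": mean(winter_minima_c),
        "sd": pstdev(winter_minima_c),
        "lethal_years": sum(1 for t in winter_minima_c if t < lethal_threshold_c),
    }

# Invented annual minimum temperatures (degrees C) at two sites.
site_a = [-4.0, -5.0, -6.0, -5.0, -4.0, -6.0]
site_b = [2.0, -12.0, 1.0, -13.0, 3.0, -11.0]
LETHAL_C = -10.0  # hypothetical cold tolerance of the species

for name, minima in (("A", site_a), ("B", site_b)):
    print(name, summarize_minima(minima, LETHAL_C))
```

A comparison of means would treat the two sites as equivalent, yet only the second site has years that fall below the assumed tolerance.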

CLIMEX illustrates an approach that can narrow the estimate of the potential range of an introduced species or predict potential ranges from which a persistent nonindigenous species might emerge. Such models yield, in effect, a first evaluation or screening of potential range. Even if a species’ native range appears to have a climate similar to a climate in the United States, the similarity by no means ensures that if it arrives, it will persist, much less that it will become invasive. Evaluation based particularly on the biotic component of a potential target range is also needed. Nevertheless, climate-matching deserves support and further evaluation (Kriticos and Randall 2001).

PREDICTION BASED ON TRAITS

Recurrence of some species as invaders in widely separated parts of the globe has long prompted the hypothesis that some species possess traits or attributes that enhance the probability that they will flourish in a new range. The hypothesis has been evaluated by searching for associations of life-history traits among these “repeat offenders” (mostly plants, but see Ehrlich 1989). For example, traits associated with known invasive pathogens include a short latent period, a long infectious period, a high rate of spore or propagule production, a high spore or propagule survival rate within and between growing seasons, efficient long-distance dispersal of spores, a wide host range, and environmental plasticity (Campbell and Madden 1990; van den Bosch et al. 1999). Examples of such pathogens with short latent periods and high rates of spore production are Phytophthora infestans, the cause of potato late blight, and Puccinia graminis, the cause of stem rust in wheat. P. graminis and Phakopsora pachyrhizi, the cause of soybean rust, are examples of pathogens with very efficient long-distance dispersal of spores. An invasive pathogen with a wide host range is plum pox virus (PPV). Synchytrium endobioticum, the cause of potato wart, is an example of a pathogen with very long survival times in the soil in the absence of a host plant. It is not known, however, if such information might be used to prospectively evaluate pathogens that are newly identified or not widespread.

As early as 1879 Henslow observed that many widespread British plants were self-pollinating and associated this trait with their independence from animal pollinators. Firm scientific foundations for work on the traits of invading plant species can be traced to Salisbury’s long-term studies on the ecology of weeds in Britain (Salisbury 1961). Attention to this issue received a major boost with publication of the highly influential symposium volume The Genetics of Colonizing Species, edited by Baker and Stebbins (1965). Contributors to that volume provided much insight in identifying ecological and genetic characteristics most commonly found in invading species.

Baker (1965, 1974) compiled a list of traits found in the “ideal weed” (which is roughly analogous to the plant invaders dealt with here), including rapid development to reproductive maturity, high reproductive capacity, small and easily dispersed seeds, broad environmental tolerance, and high phenotypic plasticity. As Mack (1996b) has pointed out, the potential value of such characteristics to an invader is readily perceived, and many of the world’s plant invaders do indeed possess some of them (reviewed in Brown and Marshall 1986). However, many species with no record of producing an invasion also possess many of the traits listed by Baker. In addition, the failure of surveys among floras to find support for “ideal weed” characteristics among invaders has led to challenges to the strength of earlier generalizations about the traits associated with plant invaders (Noble 1989, Perrins et al. 1992a,b, Williamson 1996). Weeds that are invasive but have none of the traits attributed to invasiveness include Watsonia bulbillifera (wild watsonia) and Homeria miniata (two-leaf cape tulip) in Australia (Parsons and Cuthbertson 1992) and Gunnera tinctoria in Ireland (Clement and Foster 1994). Baker’s list was also heavily biased toward traits commonly found in weeds of agricultural and ruderal habitats. Those habitats make up only a portion of the diverse environments invaded by plants; not surprisingly, diverse colonizing strategies are associated with invaders.

The shortcomings of attempts to use traits as a predictive tool have fueled skepticism about whether it would ever be possible to predict successfully which species would become invaders (Crawley 1987, Williamson and Fitter 1996). Traits commonly found among invaders are often assumed to contribute to these species’ invasions, although this assumption is rarely demonstrated by appropriate ecological and demographic studies (Schierenbeck et al. 1994, Rejmanek and Richardson 1996). The design of such investigations is formidable because isolating features of an organism’s life history that contribute substantially to fitness is difficult. Ideally, an investigator would be able to specify the combination of traits that cause invasiveness. However, evolutionary divergence is often so great that most life-history traits differ to some extent among even related taxa, and this divergence makes inferences difficult about the key features responsible for invasiveness. The less closely related the taxa, the more acute the problem becomes; however, even species comparisons involving congeners often reveal large differences in ecology and life history.

The difficulties of making predictions among broad taxonomic groups were recently demonstrated by Goodwin et al. (1999). They examined predictive capability on the basis of three plant traits related putatively to invasiveness: life form (annual or perennial), stem height, and flowering period. They then compared invasive and noninvasive European plant species in their new range in New Brunswick, Canada. Two of the traits (height and flowering period) were significant components of a predictive model, but the model’s predictions were no better than random. A second analysis that was based on the same variables as the first plus geographic range produced a model that contained only geographic range. This model produced predictions decidedly better than random, but the results nevertheless are disheartening: the species that are most likely to be transported to a new environment are also the ones most likely to become invasive because of their wider environmental tolerances.

Difficulties in prediction arise because organisms depend not only on individual characteristics, but also on interactions with their environment. That perspective is explicitly recognized in the familiar interaction term: genotype × environment = phenotype (Falconer 1981). The phenotype expressed in a particular environment governs the fitness of a genotype (Heywood and Levin 1984, Bradshaw 1984). Different environments elicit different phenotypic responses from the same genotype. By analogy, species responses vary among environments, so invasive potential depends on the environmental circumstances that an introduced genotype confronts. The importance of the environment is generally recognized in ecology and animal behavior, where there are numerous demonstrations of so-called “context-dependent” responses of organisms to various ecological conditions (Heywood and Levin 1984, Sultan 1987). Thus, simply identifying the traits of a species and ignoring the environmental context in which the species occurs limits the information about whether the species can persist, let alone become invasive. Many species fail to display traits usually associated with invasive potential (high reproductive performance, wide geographic range, and so on) in their native ranges but behave differently in novel ecological settings. The contrast in responses between native and introduced populations arises without necessarily involving changes in life-history traits. That outcome is strong evidence of the importance of environmental context in predicting whether an introduced species will become invasive.

Assessing the outcome of “species × environment” interactions in terms of invasive potential is considerably more difficult than identifying traits displayed by a known invader. It requires knowledge of the range of environments that potential invaders are likely to encounter in their adopted homes and experimentation as to the species’ responses to the new environments. Experiments are time-consuming, and simulating environmental variation is a formidable task, especially in an environmental growth chamber or experimental garden (Patterson et al. 1979, 1980, 1982). To predict invasiveness accurately, the experimenter would study potential invaders in an array of environments (Prince and Carter 1985, Mack 1996b). That approach entails risk: the experimental introductions themselves could escape, thereby sparking invasions.

Despite those difficulties, trait-based approaches hold appeal as a means of providing a general guideline regarding invasive potential (Reichard and Hamilton 1997). However, the predictive power of the approaches is directly proportional to the amount of ecological information available on attributes of an organism and a receiving environment. Trait-based approaches require an appreciation that the life histories of organisms are molded by evolution, which results in the “optimization” of fitness for a variety of environments. Individual traits rarely operate in isolation but are instead parts of life-history syndromes that represent an integration of traits in response to environment over time. Thus, an immigrant becomes an invader because it has adaptive suites of morphological, physiological, and ecological traits that arise and are conserved during the course of evolution.

The phylogenetic distribution of invaders may not be random among the earth’s biotas. For example, among flowering-plant families, naturalized and invasive species, particularly those deemed weeds in agriculture, are more commonly represented among dicotyledonous than among monocotyledonous families (Parsons and Cuthbertson 1992). Indeed, it is probably the general observation that some groups appear more frequently than others on the lists of naturalized species that has motivated the search for traits associated with invasiveness. The search continues despite the recognition that diverse ecological strategies are associated with species’ becoming invasive.

One way in which the identity of traits relevant to invasiveness has long been pursued is to survey large numbers of invasive species for taxonomic patterns. For example, Heywood (1989) concluded that most agricultural weeds come from the large families Asteraceae and Poaceae. Heywood (1989) and Cronk and Fuller (1995) identified these families and Fabaceae as containing many species that are invaders of natural areas. However, as pointed out by Daehler (1998), such surveys do not establish whether the number of invaders is higher in specific families than might be expected by chance alone.
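The question of whether a family holds more invaders than chance alone would produce can be sketched as a one-sided binomial test. This is an illustration only, not a reconstruction of the analysis in Daehler (1998): the function names are hypothetical, the counts are invented, and the test assumes that every species has the same chance of being an invader regardless of family.

```python
from math import comb

def binomial_upper_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

def excess_invaders_p_value(invaders_in_family, species_in_family,
                            invaders_total, species_total):
    """Chance of seeing at least this many invaders in the family if every
    species were equally likely to be an invader (the null assumption)."""
    overall_rate = invaders_total / species_total
    return binomial_upper_tail(invaders_in_family, species_in_family, overall_rate)

# Invented counts: 1,000 invaders among 10,000 species overall (rate 0.1).
# A family of 100 species would then be expected to hold about 10 invaders.
print(excess_invaders_p_value(10, 100, 1000, 10000))  # near the expectation
print(excess_invaders_p_value(30, 100, 1000, 10000))  # well above it
```

Under these assumptions, a large family can contribute many invaders in absolute terms without being overrepresented; only a count well above the expected number yields a small tail probability.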

Surveys of the above type, as in much ecological research, can reveal useful information, but they generally do not consider the phylogenetic relationships of taxa. Invaders rarely constitute a random sample of species or traits, so attempts to search for the correlated evolution of traits associated with invasiveness can suffer from phylogenetic nonindependence in that species are used as independent data points. Nonindependence tends to inflate degrees of freedom and increases the likelihood of type I errors (that incorrectly identify positive associations) in investigations searching for associations between traits and invasiveness. Recently, Crawley et al. (1996) used phylogenetically independent contrasts (PICs) and a molecular phylogeny of angiosperms to investigate ecological traits that distinguish native and introduced plant species in Britain. They found that introduced plants were taller, had larger seeds, were more likely to flower early or late in the flowering season, and were more likely to be insect-pollinated than their native counterparts. A lively debate has developed over the pros and cons of using PICs for addressing ecological questions (Harvey et al. 1996, Westoby et al. 1995); the availability of new phylogenetic data will aid in determining which traits are functionally associated with invasiveness.

Ideally, if invasiveness were found to have originated on multiple occasions within a phylogeny, it would be possible to determine whether particular traits are associated with the change in ecological behavior. Recurrent patterns among different lineages would help to identify key traits that initiate the evolution of invasiveness.

On the basis of a multivariate approach, results of several studies suggest that traits of invaders can, at least in some cases, be predicted (see Reichard and Hamilton 1997, Rejmanek and Richardson 1996). For example, Rejmanek and Richardson (1996) were able to predict the invasiveness of pines (Pinus) on the basis of a few simple biological traits. A discriminant analysis of 10 life-history traits in 24 cultivated pine species, half of which were considered invasive, demonstrated that seed mass, minimal juvenile period, and the interval between large seed crops were all closely associated with invasiveness.

What accounts for the abovementioned apparent success in predicting invasiveness? Most earlier work on traits associated with invasiveness involved large-scale surveys in which many taxa that differed widely in phylogeny and ecology were represented. Many factors are confounding in such datasets and are likely to obscure relations between life-history traits and invasiveness, because invasiveness might arise for diverse reasons in large taxonomically heterogeneous samples. Rejmanek and Richardson (1996) restricted their analysis to one taxonomic group of woody plants, thus minimizing that problem.

Another limitation in the analysis of traits is the bias of immigration (Mack et al. 2000). Comparisons of invasive and noninvasive members of any taxon are severely handicapped by the happenstance that has led some species to be transported to new environments many times, while some of their relatives have never been carried outside their native environments. We have no empirical basis for predicting the role of the latter group in new environments or which of their traits facilitate, deter, or play no role in their persistence there.

Although there is generally good understanding of the factors and processes that contribute to invasiveness, the component traits lose predictive power when applied to a taxonomically diverse array of species. Predictive systems have so far focused almost exclusively on plants, and the successful systems are restricted to relatively small taxonomic groups. There is no consensus as to whether the current predictive systems for plants are sufficiently accurate or applicable in a comprehensive manner (Mack et al. 2000).

A SCIENTIFIC BASIS FOR PREDICTION?

Although neither a species’ past performance in new environments nor its assemblage of traits is an adequate guide to forming comprehensive predictions on a species’ invasive potential, these tools have value. The key question is whether there is a scientific means to improve on those tools for species that have no immigration history.

The considerable fundamental knowledge about the factors and processes that drive biological invasions should enable the fundamental structure of a predictive system to be described. The committee strove to identify those factors and processes evident in the science-based literature on invaders (hereafter referred to as “characteristics”) that could be used to predict invasiveness. It was not assumed that a functional predictive system could be developed now; rather, the committee considered whether it was possible to develop such a system at all. The framework that we developed can be considered a testable hypothesis that, if it is to be used as a predictive system, must be empirically evaluated. From the outset, the committee determined that the characteristics must reflect the interactions of species traits (genotype) with environmental factors and that environmental factors would be both natural and human-mediated. The initial question asked was, “What general characteristics are indicative of or associated with organisms that arrive, persist, and eventually invade in new environments?” Groups of committee members worked independently to identify such characteristics for the major taxa considered (arthropods, plants, and plant pathogens) and then attempted to delineate how to quantify these characteristics and how the quantifications would be used to determine a likelihood for each of the stages of an invasion.

The committee identified combinations of species traits, environmental factors, and their interactions for organisms that were most likely to arrive at a foreign destination, persist, and invade. There was near unanimity on the characteristics associated with arrival among the three taxonomic groups (Table 6-1a). For example, historical evidence of introduction elsewhere, high rate of movement from a source to a specific destination, high survival during transit, and escape from regulatory safeguards were viewed as common characteristics of nonindigenous species that were likely to arrive at new destinations. The ability to identify the characteristics that serve as predictors declined for the other two stages of the invasion process; this difficulty reflects the fact that the processes of arrival are strongly influenced by human activities and are reasonably well known, whereas the processes of persistence and invasion are often highly idiosyncratic (Tables 6-1b and 6-1c).

Having identified characteristics thought to be useful for predicting the likelihood of each of the three stages of the invasion process, the committee asked how we might know, measure, or assign parameters to these characteristics. For example, a history of long-distance movement was identified as key information for predicting the arrival of a nonindigenous species. The logic here is straightforward: if the organism has been intercepted repeatedly, there is a high likelihood that it will arrive again. Thus, measuring the history of long-distance movement could be as simple as “yes” or “no” and based on historical records. Three other categories of information were also identified as likely predictors of arrival: the rate of movement from a source to a potential destination, survival during transit, and escape from safeguards (such as inspection or control mea-

TABLE 6-1a. Characteristics of Nonindigenous Pathogens, Plants, and Arthropods Thought to be Indicative of Likelihood of Arrival in New Habitat or Range and Information Needed to Functionalize the Characteristics

| Characteristic | Information Needed: Pathogen | Information Needed: Plant | Information Needed: Arthropod |
| --- | --- | --- | --- |
| History | • Is there historical (archival) evidence of repeated introduction of the pathogen? | • Has the plant been frequently and recently intercepted outside its native range? | • Has the arthropod been frequently and recently intercepted or detected in North America? |
| Rate of movement from source to potential destination | • Is the pathogen commonly associated with the import (commodity, plant, or conveyance) in or coming from the country of origin? • Is the volume of the imported material great? • Does the pathogen have a wide geographic range? • Can it be moved passively (airborne or birds)? | • Does the taxon have a wide geographic range? • Does the plant occur in a wide array of environments? • Does the plant have demonstrated or alleged human value? • Are the frequency and volume of trade between the plant’s existing range and its potential new introduction point high? | • Does the arthropod have a strong association with the imported product? • Is the volume of imported material large? • Does the arthropod have a wide geographic range (proportional to likelihood of transport)? • Does the arthropod, at some times, have high population densities in its native range? |
| Survival during movement | • Can the pathogen survive transit? | • Does the plant have a durable resting stage (seeds or vegetative structures)? | • Is the arthropod likely to survive transport? |
| Escape from safeguards | • Can the pathogen be detected, and is there a recognized detection or mitigation procedure either at the point of origin or at the destination? | (Information for plants is inadequate) | • Can the arthropod be detected, and is there a recognized procedure for detection or mitigation either at the point of origin or at the destination? |

TABLE 6-1b. Characteristics of Nonindigenous Pathogens, Plants, and Arthropods Thought to be Indicative of Likelihood of Establishment in New Habitat or Range and Information Needed to Functionalize the Characteristics

|

|

Information Needed |

||

|

Characteristic |

Pathogen |

Plant |

Arthropod |

|

History |

|

• Is there a history of naturalization? |

• Is there a history of establishment in a similar environment elsewhere outside its native range? |

|

Environment suitability |

• Is there a similarity in the physical habitat between the point(s) of origin or other naturalized range of the pathogen and potential destinations? |

• Is climate similar between the current geographic range and potential destinations? |

• Is climate similar between the current geographic range and potential destinations? |

|

Resources-hosthabitat finding |

• Is a suitable (susceptible) host that can maintain a base level of pathogen reproduction present? • Are potential hosts in spatial and temporal synchrony with the pathogen? • Does dissemination depend on a vector, and, if so, is the vector present? • Is the pathogen excessively virulent? |

(Not considered a major restrictor for plants) |

• Are potential hosts spatially and temporally available to the arthropod? • Is there biotic resistance, mostly in the form of natural enemies that would limit survival? |

|

Overcoming demographic and environmental stochasticity |

• Does the pathogen have a means of surviving adverse habitat or environmental conditions? • Is the inoculum pressure high (multiple foci, large population, or large number of propagules)? |

• Is the propagule pressure (number of founders, frequency of introductions, or spatial distribution) high? • Is the time to first reproduction short? • Does the plant have a dormant period? • Is there uniparental reproduction? |

• Is the propagule pressure (number of colonists, possible introductions per year, and possible locations) high? • Is there uniparental reproduction? • Does the arthropod have a high growth rate? |

TABLE 6-1c. Characteristics of Nonindigenous Pathogens, Plants, and Arthropods Thought to be Indicative of Likelihood of Invasion in New Habitat or Range and Information Needed to Functionalize the Characteristics.a

|

|

Information Needed |

||

|

Characteristic |

Pathogen |

Plant |

Arthropod |

|

History |

• Is there a history of the pathogen causing detrimental effects to plants elsewhere? |

• Is there a history of invasiveness elsewhere? |

• Is there a history of thriving elsewhere outside native range? |

|

Host-habitat availability |

• Is the host range wide? • Are susceptible hosts widespread? • Has the pathogen demonstrated diversity in pathogenic traits? |

• Does the plant occur with disturbance or in cultivation? |

• Are potential hosts contiguously distributed? |

|

Dispersal |

• Does the pathogen have means of rapid and widespread dispersal? |

• Does the plant have a means of rapid and efficient dispersal? • Does the plant possess a fleshy fruit? |

• Does the arthropod have an effective means of dispersal (natural or human-assisted)? |

|

Growth |

• Does the pathogen have a high reproductive capacity? |

• Does the plant have a high reproductive output through seeds or asexual propagules? • Is the plant closely related to native flora? |

• Is there biotic resistance that will limit growth? |

|

aNote that species must persist (Table 6-1b) before invasion can occur. |

|||

sures). For each of those characteristics, information that would be required to assign parameters to the characteristic was identified. For example, to assign parameters to an insect’s movement from a source to a potential destination, the committee thought it important to know whether the insect has a strong association with an imported material, whether it has a broad geographic host range, whether it has high densities periodically in its native range, and the volume of

imported material with which it is associated. That information is important because immigration via an imported material will be a function of the infestation rate and the quantity of the material or product being imported. Infestation rate will depend in part on the insect’s density in its native range, and density is often associated with geographic range. Furthermore, the likelihood that the species will be transported appears to be related to the size of its geographic range (Ehrlich 1989), which could be related to the likelihood of being inadvertently included in exported cargo.

Identification of the requisite information and data was more difficult for the persistence and invasion stages (Tables 6-1b and 6-1c). More important, it was not clear how to use the information and to assign parameters. In fact, it was rarely clear on what scale the parameters should even be arrayed. For example, even if information on rate of movement were available, we could not identify how it should be arranged to provide a unit that described movement, or even whether it should be “yes” or “no”; “high”, “medium” or “low”; or some number in the interval 1-10. This dilemma was encountered for each of the characteristics identified.

Another example reinforces the point mentioned above. An essential characteristic leading to the survival of a newly arrived nonindigenous species is the ability to overcome demographic and environmental stochasticity (see Chapters 3 and 4). For plants, the needed data include whether the propagule pressure is high, whether the time to first reproduction is short, whether the plant has a dormant period, and whether there is uniparental reproduction. Answering those questions leads immediately to further questions: When is propagule pressure high? What is a short time to first reproduction compared with a long time or an intermediate time? How long need the dormant period be, and when must it occur? Even if the primary questions were answered and answers to the secondary questions were available, it is not clear how answers to the primary questions would be used to obtain a way to account for demographic and environmental stochasticity. In fact, it is not obvious what this accounting should be: should it be dichotomous, categorical, or continuous?
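To make the scaling question concrete, the following Python sketch encodes one characteristic from Table 6-1b, time to first reproduction, on dichotomous, categorical, and continuous scales. The function names and every cutoff are hypothetical assumptions chosen only for illustration; the committee identified no such thresholds.

```python
# Illustrative only: every cutoff used below is a hypothetical assumption.

def dichotomous(years_to_first_reproduction, cutoff_years):
    """Return True ("short") if reproduction begins within the cutoff."""
    return years_to_first_reproduction <= cutoff_years


def categorical(years_to_first_reproduction, short_max, intermediate_max):
    """Place the same value into one of three ordered classes."""
    if years_to_first_reproduction <= short_max:
        return "short"
    if years_to_first_reproduction <= intermediate_max:
        return "intermediate"
    return "long"


def continuous(years_to_first_reproduction, longest_years):
    """Rescale to the interval 0-1; values near 1 mean earlier reproduction."""
    clipped = min(max(years_to_first_reproduction, 0.0), longest_years)
    return 1.0 - clipped / longest_years


years = 2.0  # hypothetical plant that first reproduces at two years
for cutoff in (1.0, 3.0):
    # The same plant is "short" under one cutoff and not under the other.
    print(cutoff, dichotomous(years, cutoff))
print(categorical(years, short_max=1.0, intermediate_max=5.0))
print(continuous(years, longest_years=10.0))
```

Under these assumed cutoffs, the same plant counts as having a short time to first reproduction with a three-year cutoff but not with a one-year cutoff, which is the difficulty described above: the answer depends on a scale and a threshold that have not been established.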

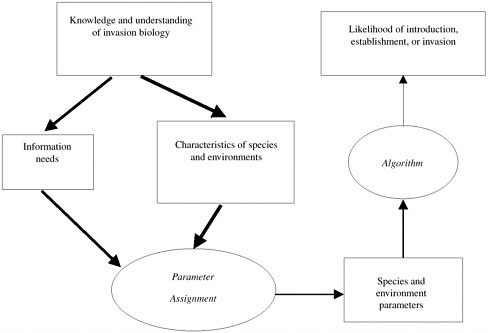

The dilemma faced in using a predictive system for invasiveness is summarized in Figure 6-1. Characteristics of nonindigenous organisms that could contribute to invasiveness and characteristics of the environments into which they are introduced are reasonably well known. Less well understood are the types of data needed to quantify these characteristics. Poorly known is how to assign parameters to the characteristics and how the parameters are to be used in algorithms to determine the likelihood of each stage of the invasion process. Even if those limitations are surmounted, much of the needed information is usually unavailable for species that might be introduced. The lack of data not only prevents the framework from being used, but also keeps the level of uncertainty in the framework from being estimated.

FIGURE 6-1 General structure of system for predicting invasiveness of nonindigenous species. Knowledge and understanding of invasion biology allow identification of the genotypic and environmental characteristics of invasive species and of information required to assign parameters to these characteristics. Parameters are then used in an algorithm to estimate likelihood of stages of invasion. Ellipses represent processes in system; boxes represent state variables. Width of arrows represents level of certainty in process; thicker arrows mean higher levels of confidence.

Although not the entire answer to the problems raised above, the results of deliberate experimentation would contribute substantially to their solution. For example, controlled experimental field screening for potentially invasive species could be pursued for plant species whose features are associated with establishment and rapid spread without cultivation and whose immigration history is unknown. In addition to averting the release of a potentially invasive species, results of field trials would substantially enhance the ability to detect common patterns of plant performance in new environments. Similar efforts to acquire life history and population level data in situations that approximate field settings would be beneficial for predicting the fate of nonindigenous arthropods and pathogens that are of concern, including species proposed for deliberate introduction. The logistical difficulties and costs of implementing such studies are likely to be greater for arthropods and pathogens than for plants, but extending this approach to arthropods and pathogens would similarly provide valuable information about the capacity of these organisms for population growth and spread and improve the ability to predict invasiveness.

Quantifying a species’ performance in environments it has not before encountered can only be accomplished through experimentation (Hairston 1989, Mack 1996a). Our inadequate knowledge of the early stages in an invasion demonstrates the need for experimentation based on carefully constructed hypotheses about a species in specific ranges under measured environmental conditions. For example, detailed demographic information among populations and cohorts occurring in different environments is essential if we are to predict the outcome of these critical periods in the course of an invasion (such as Mack and Pyke 1983, 1984; Grevstad 1999a,b). Such a protocol already exists in the USDA Agricultural Research Service plant germplasm introduction and testing laboratories. These laboratories evaluate new accessions, including pasture and range species, for their likelihood to contribute to U.S. agriculture. Their goal is to identify species that will grow profitably in the United States—that is, free of predators, pathogens and competitors (USDA 1984). Species deemed free of such hazards should however be reviewed carefully before release because many features that are prized in land reclamation, rangeland management and landscape horticulture are the same features that could enhance these species’ persistence outside cultivation in the United States (McArthur et al. 1990). A geographically broad network of experimental gardens to identify species that could readily spread and persist upon release should be established. Extending these ideas to arthropods and pathogens, including those under consideration for deliberate introduction, would be valuable, although practical considerations associated with experiments in field settings may be more difficult to overcome. 
Well-designed studies that obtain information on life history traits, interactions with native species and population level parameters in a realistic environmental context could provide valuable information about the potential for population growth and spread of an arthropod or pathogen in a new habitat, and increase our overall understanding and ability to predict invasiveness.

RISK ASSESSMENT

From our discussion thus far, it is clear that we do not have a comprehensive taxonomically based ability to predict with sufficiently high accuracy whether, when, or how a nonindigenous species will become established in a new range. Others have reached the same conclusion (Mack et al. 2000, Williamson 1996). However, our knowledge of the factors and processes that mediate invasion can be used in a related endeavor: risk assessment. Risk assessment can provide valuable insights into both the likelihood of an invasion and its consequences, and these insights can prove useful for forming management strategies and policies.

Risk is the product of the likelihood of an event or process and its consequences. As a result of the joint consideration of likelihood and outcomes, events with low to moderate likelihood but serious consequences can carry high risk, whereas events with high likelihood but insignificant consequences often carry little, if any, risk. Although predicting the likelihood of invasion by nonindigenous species has much appeal, from a practical perspective it is risk that must be evaluated. Risk incorporates the economic and environmental outcomes of invasions, and these outcomes are of primary importance to society.
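A minimal Python sketch of this definition follows. The two events, their likelihoods, and the consequence values (in arbitrary loss units) are hypothetical numbers chosen only to show how a rare but severe event can outrank a likely but minor one.

```python
def risk(likelihood, consequence):
    """Risk as the product of an event's likelihood and its consequence."""
    if not 0.0 <= likelihood <= 1.0:
        raise ValueError("likelihood must be a probability between 0 and 1")
    return likelihood * consequence


# Hypothetical events; consequences are in arbitrary loss units.
rare_but_severe = risk(likelihood=0.02, consequence=1_000_000)
likely_but_minor = risk(likelihood=0.90, consequence=100)

print(rare_but_severe > likely_but_minor)  # True
```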

A further rationale for engaging in risk assessment rather than prediction of invasiveness itself arises from the uncertainty that will accompany most predictions of invasiveness compared with assessments of risk. Uncertainty refers to the degree of confidence in a prediction or assessment (Kammen and Hassenzahl 1999). If only the likelihood of a nonindigenous species’ becoming established in a new environment is considered, it is difficult to apply the level of uncertainty in this prediction fully in decision-making. But if the consequences of an invasion are considered, uncertainty can be incorporated into the evaluation of risk. Greater caution might be used in response to the uncertainty about events and processes that can have serious consequences. Thus, with respect to the practical application of predicting biological invasions, it is the risk posed by invasion by a particular species that should be evaluated.

Several systems have been devised for evaluating the likelihood that a nonindigenous species will become invasive, and they all share a qualitative structure. They assess the likelihood that a nonindigenous species will arrive, become established, and proliferate and spread with a set of species traits and environmental characteristics. In that regard, they are similar to the predictive structure shown in Figure 6-1. Usually, the characteristics are described in a dichotomous fashion (for example, present or absent), and the likelihood measures are categorical (for example, high, medium, or low). The systems also rely on the qualitative assessment of consequences, usually by indicating whether consequences will occur. A collective measure of consequences is determined by summing the expected occurrences of individual consequences (such as crop loss, decreased market, or direct impact on endangered species). The estimate of likelihood of establishment and expectation of consequences are combined to identify a categorical estimate of risk.

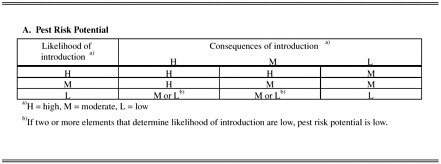

Two specific pest risk-assessment procedures are portrayed in Figures 6-2 and 6-3. Figure 6-2 depicts a generic system used by the Animal and Plant Health Inspection Service (USDA/APHIS 1997). The likelihood of pest introduction is based on the estimated quantity of imported material and “pest opportunity”, which is a synthesis of the arrival and establishment stages of the invasion process. It is somewhat incongruous that pest opportunity is measured as a sum of scores that have a probabilistic basis. Because these scores represent subjectively determined probabilities, their joint consideration should reflect the multiplication of the chains of independent probabilities to determine their joint likelihoods. The

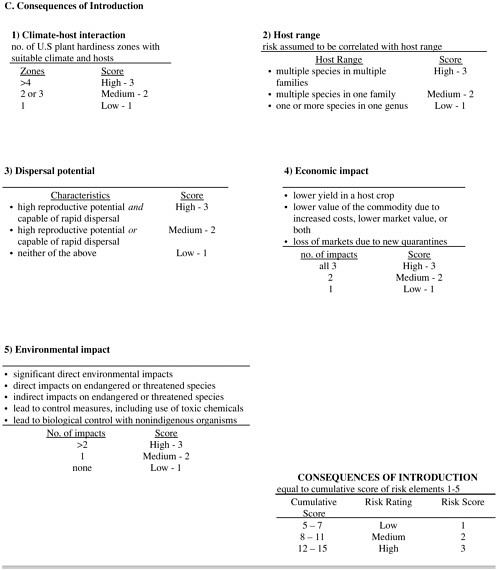

FIGURE 6-2 Qualitative pest risk-assessment procedure used by USDA/APHIS (1997). Risk potential (A) is based on joint consideration (addition) of likelihood of introduction and consequences of introduction. Likelihood of introduction (B) is based on quantity of imported material and qualitative assessment of the likelihood that nonindigenous species will survive or find suitable host or habitat. Consequences of introduction (C) are based on qualitative assessments of climate-matching, pest host range and dispersal, and economic and environmental impacts.

consequences of introduction are determined as the sum of scores for five “risk elements”. Three risk elements refer to the invasion process, and two refer to the consequences of invasion. Thus, in this system, consequences are assessed as a composite measure of the spread and abundance of a newly introduced species and impacts that stem from the invasion. On the basis of the characteristics listed in Table 6-1, it is questionable whether the three risk elements that putatively capture the likelihood of invasion by an introduced organism are reliable. The overall pest risk does, however, reflect the combined influences of likelihood and consequences of introduction.
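The contrast between adding scores and multiplying probabilities can be sketched in Python. The score values (1, 3, 5) and the probability bands they stand for (low, p < 0.01; moderate, 0.01 ≤ p ≤ 0.1; high, p > 0.1) are those reported for the cape tulip assessment in Box 6-2. The representative probability chosen inside each band and the five example ratings are assumptions made purely for illustration.

```python
import math

# Scores as reported in Box 6-2 for low, moderate, and high likelihood.
SCORE = {"low": 1, "moderate": 3, "high": 5}
# Assumed representative probability within each band (illustrative only).
REPRESENTATIVE_P = {"low": 0.005, "moderate": 0.05, "high": 0.5}

# Hypothetical ratings for five sequential events on a single pathway.
ratings = ["high", "high", "high", "high", "low"]

# Additive treatment: the one "low" event barely lowers the total.
summed_score = sum(SCORE[r] for r in ratings)

# Chain treatment: independent events that must all occur are multiplied.
joint_probability = math.prod(REPRESENTATIVE_P[r] for r in ratings)
weakest_link = min(REPRESENTATIVE_P[r] for r in ratings)

print(summed_score)                       # 21 of a possible 25
print(joint_probability)
print(joint_probability <= weakest_link)  # True
```

Under these assumed values, the summed score is 21 of a possible 25, yet the joint probability is below 0.01, the upper bound of the low band. A product of probabilities can never exceed its smallest factor, so a single unlikely event caps the likelihood of the whole chain, whereas a sum does not register that constraint.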

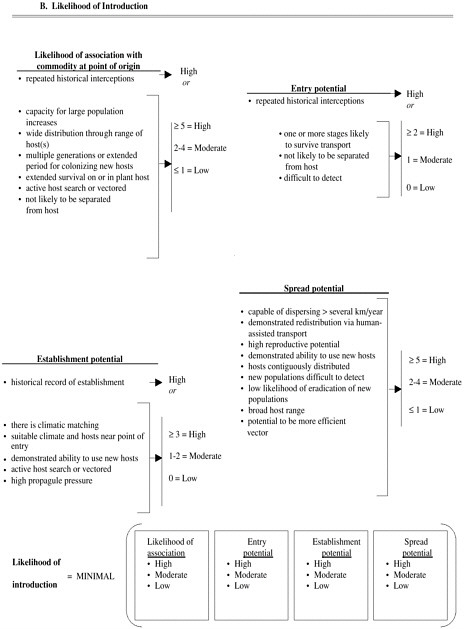

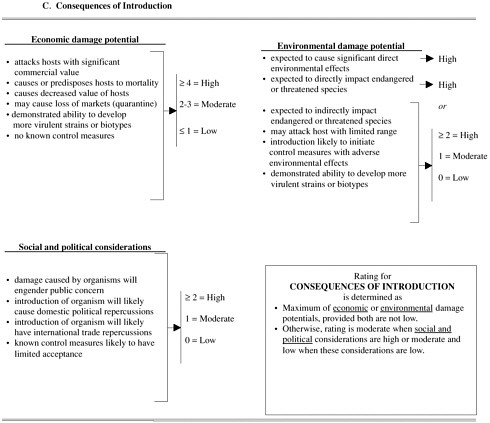

FIGURE 6-3 Qualitative pest risk-assessment procedure proposed for use with imported solid-wood packing materials (USDA 2000). Pest risk potential (A) is based on qualitative assessment of likelihood of pest introduction (B) and consequences of introduction (C). Likelihood of introduction (B) is based on series of processes and is similar to result of multiplying probabilities of independent events to obtain joint probability. Likelihood of each process (such as association with commodity at point of origin or entry potential) is assigned score of high, medium, or low on basis of whether one or more criteria are deemed true. For example, entry potential is considered high if there is history of repeated interceptions or if two of following three criteria are true: one or more life stages of pest likely to survive transport, pest not likely to be separated from host, and pest is difficult to detect. Consequences of introduction (C) are essentially sum of individual consequences, although consequences do not carry identical weights. Each consequence (such as economic damage potential) is assigned score of high, moderate, or low on the basis of whether one or more criteria are true. Pest risk potential is based on joint consideration of likelihood of introduction and consequences of introduction.

The second pest risk-assessment protocol (Figure 6-3) has been proposed for use with solid-wood packing materials (USDA/APHIS-FS 2000). This system more clearly delineates the invasion process (likelihood of introduction) and its consequences. It also models the process for determining the likelihood of introduction as akin to a set of independent events that must all occur for the immigrant population to develop an invasive population (that is, the multiplication of probabilities). As with the first system, the overall risk reflects the combined influences of the likelihood of introduction and consequences of introduction.

Because risk-assessment systems use the same structure as that depicted in Figure 6-1 to assess the likelihood of introduction, they share the limitations identified for a scientifically based prediction of invasion. Foremost among the limitations is that risk-assessment systems require subjective determination of characteristics of nonindigenous species and the environments into which they might be introduced, and they identify the risk of introduction by subjectively placing species and environmental characteristics into likelihood categories. If the characteristics for assessing the likelihood of introduction shown in Figure 6-3 are used as an example, it is not clear how one would determine whether an organism has a high capacity for increase, what the capacity is for dispersing, or what constitutes an extended period for colonizing new hosts. Those determinations are made subjectively and are thus susceptible to bias. Furthermore, none of the mapping systems used to identify likelihood of introduction based on those characteristics has been fully validated. Even validation of the Australian weed risk-assessment system (see Box 6-1) can be viewed as somewhat equivocal. Thus, these components of current pest risk-assessment systems cannot yet satisfy the science-based criteria of repeatability and peer review for validity. Simberloff and Alexander (1998) came to similar conclusions when they reviewed risk-assessment systems.

Similar criticisms can be leveled against the procedures by which the component of the risk-assessment systems dealing with consequences is assembled. Much of the information required to assess consequences is based on expert judgment, which is often subjective, can vary substantially among evaluators, and can be influenced by political or other external factors. To our knowledge, the algorithms used to categorize the overall consequences have not been validated. Furthermore, uncertainty is never explicitly incorporated into the evaluation process. In some cases (such as in the International Plant Protection Convention protocol), uncertainty is recorded but apparently then ignored.

Pest risk-assessment systems have value, provided that the reasoning used is underlain by careful documentation. The process of conducting a qualitative risk assessment is at least as valuable as the specific risk values that are produced, because the process, when carefully documented, provides a mechanism for assembling and synthesizing relevant information and knowledge. Furthermore, the process of risk assessment catalyzes a thorough consideration of the relevant events. When a consistent and logical presentation justifies the parameters used in a risk assessment and its conclusions, assessments can meet the scientific criteria of transparency and openness. Many risk assessments use slightly different characteristics and methods for determining likelihood, depending on the specifics of the situation being considered. That variability is a strength of the risk-assessment systems, not a shortcoming.

In the absence of careful documentation, specific risk values are worthless. That is a serious problem because any specific value imparts to some policymakers and a large part of the public a scientific aura and a sense of knowledge that might not be warranted. Pest risk assessments that lack careful documentation can do more harm than if no assessment had been undertaken.

A recent risk assessment of the introduction of the cape tulip (Homeria spp.) (USDA/APHIS 1999) illustrates both the limitations and the strengths of qualitative risk assessment (see Box 6-2). Determination of the likelihood of introduction is the principal weak point in the assessment. The most apparent problem is the lack of rationale for the scores given in the pathway analysis. The analysis fails the test of transparency; there is little way to critique the scores other than to say that they are unsubstantiated. A weakness in the protocol used is that scores are summed rather than viewed as the likelihoods of events in a sequential chain. For grain imports and ornamental plant shipments, the risk assessment indicated only a low likelihood that Homeria will escape detection at the point of entry. If

|

BOX 6-1 Western Australia Weed Risk-Assessment System Some states in Australia have established their own weed-assessment system in addition to using the federal system developed by the Australian Quarantine and Inspection Service (AQIS). The system for Western Australia (WA) is perhaps the most elaborate of these state systems (Randall and Stuart 2000). In establishing their own systems, states in Australia have recognized that environments at one end of the Australian continent differ enormously from those elsewhere in the country, and a nonindigenous species could well be invasive in one state or region and be innocuous (or even fail to become established) in another. The WA system was designed primarily to evaluate requests for the deliberate introduction of plants into the state, but its protocol could also be applied to accidental introductions. Unlike the AQIS system, the system for WA is not trait-based but relies extensively on the history of nonindigenous species in WA and elsewhere. Expert judgment (for example, “assessors decision points”) is used to determine the extent to which a species with a history of naturalization might pose a threat in WA. An essential component of the system is the large database of species and their ecology that has been compiled by R. Randall, plant profiler, for Western Australia Agriculture. In addition, the protocol uses the results from a climate-matching program (CLIMEX) in determining whether the species could persist anywhere in the state, on the basis of the similarity of climates in the native range and potential new ranges in WA. Although the system appears to operate as a basis of allowing or prohibiting any proposed species entry, a risk assessment is performed, in effect, through determination, with a full weed assessment, that a species is an important weed and poses an immediate or imminent threat. 
The WA system includes an essential but often overlooked component of assessment—correct identification of the species. The assessment of nonindigenous species worldwide contains celebrated cases in which evaluation and control were delayed by the misidentification of species that proved harmful. For plants |

that is true, the overall likelihood of introduction should also be low. The analysis of consequences is more robust, and justification, including relevant literature, is provided for each of the rankings. Furthermore, although the characteristics used to determine the likelihood of invading (as part of the consequences evaluation) might be challenged, it is clear what characters were used and how they were determined.

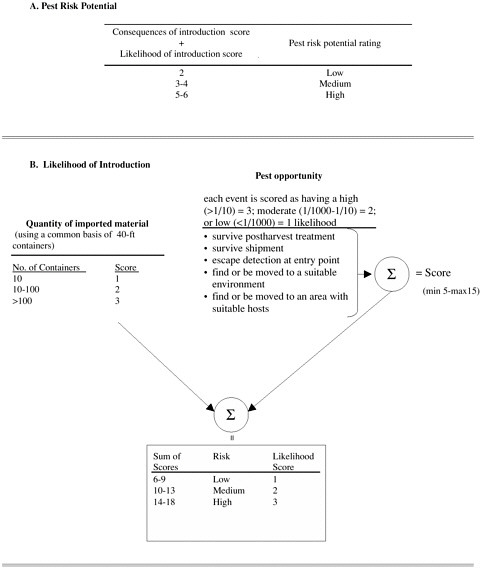

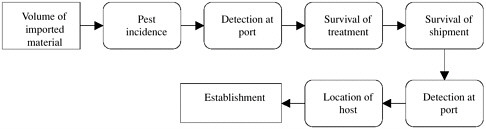

Quantitative scenario analysis (Kaplan 1993, Firko 1995a) has been used to produce quantitative assessments for the likelihood of establishment. The process involves identifying and enumerating all potential pathways by which establishment can occur. Probability distributions are used to model the likelihood of each link in the chain and combined to estimate the overall likelihood of a nonindigenous species becoming established (Figure 6-4). Managing the risk consists

|

that are being deliberately introduced, the correct identification cannot be entrusted totally to the applicant. The past performance of any congeners of the species is also considered in the evaluation. Having a congener with a record as a weed elsewhere does not automatically cause a species to be prohibited entry, but it does prompt further assessment. Use of records of congeneric weeds prompts further investigation at several points in the flow diagram. An important feature of the WA system is that final approval of entry of a species is not usually automatic, especially for species for which there is no record. There are in effect several layers of examination and evaluation that each species must pass through before import approval is given. This multilayer system contains benefits for both the public and the applicant for species entry. Only a species that appears on a federal or WA prohibited list is automatically excluded from further evaluation. A species that is already naturalized in the state may be allowed entry (that is, entry of additional populations), although there is provision that these introductions may be denied. As a result, blocking their entry can reduce the amount of genetic variation in a species in the state. Although a record of a species as a “significant weed” (one demonstrated in the scientific literature to have an impact in agriculture or natural ecosystems) does not automatically prohibit a species from entry into the state, it does prompt a full weed assessment. Such assessments are more detailed examinations of the record of behavior of the species than simply whether it appears as a weed anywhere. A decision can be made after this assessment to prohibit or permit the species’ entry. One apparent anomaly concerns the history of established domestic and international trade in the species; it is not clear to what degree a species with such a trade history would be permitted entry. 
The strength of this system appears to depend on the comprehensiveness of the database maintained on nonindigenous species; that is, expert judgment here is based largely on known behavior of the species outside WA (Randall and Stuart 2000). |

of reducing the likelihood of events in the chain. Although this method is quantitative, it often requires substantial subjectivity in assigning the probability distributions used to represent events along a pathway. Ideally, empirical data would be used to select the distributions, but such data are often not available.
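The general shape of such an analysis can be sketched with a small Monte Carlo simulation of the kind named in the caption of Figure 6-4. In the Python sketch below, the pathway links, the choice of triangular distributions, and every numerical value are hypothetical assumptions for illustration only. They are not drawn from any actual assessment, and the choice of distribution is exactly the kind of subjective step noted above.

```python
import random
import statistics

# Hypothetical pathway links with (low, mode, high) bounds for a triangular
# distribution describing uncertainty in each probability. Illustrative only.
LINKS = [
    ("unit is infested at origin", (0.001, 0.01, 0.05)),
    ("pest survives transit", (0.2, 0.5, 0.9)),
    ("pest escapes detection at entry", (0.1, 0.3, 0.7)),
    ("pest finds suitable host or habitat", (0.01, 0.05, 0.2)),
]
# Hypothetical annual import volume in units per year: (low, mode, high).
VOLUME = (50_000, 100_000, 200_000)


def simulate(n_draws=10_000, seed=1):
    """Draw establishment rates (events per year) for one pathway."""
    rng = random.Random(seed)
    rates = []
    for _ in range(n_draws):
        # random.triangular takes its arguments as (low, high, mode).
        rate = rng.triangular(VOLUME[0], VOLUME[2], VOLUME[1])
        for _name, (low, mode, high) in LINKS:
            # Every link must occur, so the probabilities are multiplied.
            rate *= rng.triangular(low, high, mode)
        rates.append(rate)
    return rates


def summarize(rates):
    """Report the mean, median, and 95th percentile of the simulated rates."""
    ordered = sorted(rates)
    return {
        "mean": statistics.fmean(ordered),
        "median": statistics.median(ordered),
        "p95": ordered[int(0.95 * (len(ordered) - 1))],
    }


print(summarize(simulate()))
```

Reporting a distribution rather than a single number keeps the uncertainty in each link visible in the result. Lowering the upper bound of any one link in this sketch, for example detection at entry, shows how risk management acts on the chain.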

As currently used, the method considers only the arrival and initial survival of a nonindigenous species, processes that usually are much better understood than the proliferation and spread phase of an invasion. Quantitative scenario analysis should be expanded to include all aspects of the invasion process, especially when consequences are high. The array of information suggested by the committee in Table 6-1 could be incorporated in scenario analysis to reflect a more comprehensive and realistic perspective on the risk posed by the introduction of pests and of organisms intended as biological control agents.

|

BOX 6-2 Summary of a Risk Assessment of Cape Tulip Introduction Homeria, or cape tulip, of which there are 30-40 species, are native to South Africa and are widely propagated as ornamentals. An assessment was initiated by a request to import oats from Australia that might contain Homeria spp. In Australia, these species are weeds. They produce glycosides that are poisonous to livestock and humans. Potential impacts of cape tulips include livestock poisoning, reduced crop yield, changes in plant community structure, human poisoning, and increased use of herbicides to control the plants after their establishment. Species in this genus already occur in the United States outside cultivation. The likelihood of establishment was assessed by identifying three potential pathways and assigning qualitative likelihoods to each of five events for each pathway. The likelihood of each event was judged to be low (p < 0.01), moderate (0.01 ≤ p ≤ 0.1), or high (p > 0.1), with numerical scores of 1, 3, and 5, respectively. The pathways, pathway events, and scores appear in Table 6-2. Note that this table is based on the risk elements for “pest opportunity” in Figure 6-2. Numerical scores for the likelihoods were summed, and the likelihood of establishment was determined by using the sum. If the sum of scores was 7-14, the likelihood of introduction was judged to be low (1); 15-24, moderate (2); and above 25, high (3). On the basis of a total of 63, the likelihood of establishment was judged to be high. The consequences of establishment were based on the summation of four quantitative criteria, each of which was given a ranking of low, medium, or high (1,2, or 3, respectively). The first criterion was the suitability of the habitat for widespread distribution. On the basis of relevant literature and predictions from CLIMEX (Sutherest et al., 1991a) this was rated high with a score of 3 (suitable climate would allow the weed to establish in four or more plant-hardiness zones). 
The second criterion was the ability to disperse and spread, which was also rated high, on the basis of the plants’ having a high reproductive potential and highly mobile propagules. A checklist of characters thought to influence reproduction and dispersal was consulted to derive the rating. Characters contributing to dispersal and spread were prolific seed production; reproduction by corms; dispersal by wind, water, machinery, animals, and humans; and the ability of seeds and corms to tolerate some stress. The third criterion was an economic-impact rating that was based on potential reduced crop yield, lower commodity values, and possible loss of markets. The impact was rated as high on the basis of the potential economic loss from stock poisoning and reduced crop yield. The fourth criterion was environmental impact, which was also scored as high on the basis of potential impact on community structure and human health and the impact of control practices.

The total score for the consequences assessment was 12, leading to an overall rating of high consequences (score of 3). The final measure of risk was based on the sum of the score for risk of establishment (3) and the score for consequences (3). That sum led to an overall evaluation of high risk.

An example of scenario analysis that takes a broad approach is a risk assessment of threats to a wetland system in Ottawa, Ontario, which included sorting out the nature and magnitude of several factors, only one of which was a nonindigenous plant (Foran and Ferenc 1999, Moore 1998). The scenario analysis broadly evaluated the ecosystem, the factors influencing the system, and the known, unknown, and expected responses of the system to stresses. By creating a matrix with all identifiable components in the stress (cause) and effect profile, the assessment was able to focus on the most relevant pathways and effects. The assessment concluded that the nonindigenous plant posed the greatest risk to the wetland system. This assessment intentionally included all parts of the source-exposure pathway-response relationships and did not focus on only one part or one pathway.
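The additive scoring summarized in Box 6-2 can be sketched in a few lines of Python. This is only an illustration of the arithmetic as the box describes it, not the assessors' own procedure: the function names are hypothetical, and treating a sum of exactly 25 as high is an assumption, because the box gives the bands as 15-24 and "above 25." The box does not state the cutoffs used to convert the consequence total or the final sum into ratings, so those ratings are entered as reported rather than computed.

```python
# Sketch of the additive scoring described in Box 6-2.
# Function names are hypothetical; cutoffs come from the box text.

def event_score(p):
    """Map an event probability to the box's score of 1, 3, or 5."""
    if p < 0.01:
        return 1  # low
    if p <= 0.1:
        return 3  # moderate
    return 5      # high


def likelihood_rating(total):
    """Convert a summed event score to a rating of 1 (low) to 3 (high).

    The box lists 7-14 as low, 15-24 as moderate, and "above 25" as high.
    Treating exactly 25 as high is an assumption made for this sketch.
    """
    if total <= 14:
        return 1
    if total <= 24:
        return 2
    return 3


def consequence_total(ratings):
    """Sum the four consequence ratings, each of which must be 1, 2, or 3."""
    if len(ratings) != 4 or any(r not in (1, 2, 3) for r in ratings):
        raise ValueError("expected four ratings, each 1, 2, or 3")
    return sum(ratings)


# Values reported in the box for cape tulip.
likelihood = likelihood_rating(63)               # summed event scores of 63
consequences = consequence_total([3, 3, 3, 3])   # all four criteria rated high
consequence_rating = 3                           # as reported; cutoffs not given
overall = likelihood + consequence_rating        # final measure of risk
```

Keeping each step as a separate function exposes every cutoff to inspection, which is the kind of documentation that the repeatability criterion calls for.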

The advantage of a scenario system is that it captures the ecological context, as recommended in present ecological risk assessment (Suter 1993, USEPA 1998). All the information is used, and a line of evidence is set aside only after compelling evidence warrants that action. The greatest limitations are that the analysis can be data-intensive and still remain blind to novel and unknown conditions. Modeling and use of related data can overcome some problems related to missing data, and peer review might be able to address some of the issues related to unknown conditions.

FIGURE 6-4 Generic pathway for quantitative scenario analysis. Rectangles are rates and rounded rectangles are probabilities. Analysis consists of describing probabilities using probability distributions and parameterizing the volume(s). Monte Carlo simulation is used to estimate rate of establishment.
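A minimal Python sketch can make the kind of pathway calculation described in the Figure 6-4 caption concrete. Everything specific in it is a labeled assumption rather than part of the figure: the three pathway events, the choice of triangular and beta distributions, and all parameter values are hypothetical placeholders. Only the overall structure follows the caption, in which an import volume (a rate) is multiplied through a chain of event probabilities, each described by a distribution, and Monte Carlo sampling yields a distribution for the rate of establishment.

```python
import random


def simulate_establishment_rate(n_trials=10_000, seed=0):
    """Monte Carlo estimate of establishments per year along one pathway.

    All distributions and parameters are hypothetical placeholders.
    Returns the mean and the 95th percentile of the simulated rates.
    """
    rng = random.Random(seed)
    rates = []
    for _ in range(n_trials):
        # Rate entering the pathway: units imported per year (assumed values).
        volume = rng.triangular(500, 2000, 1000)  # low, high, mode
        # Event probabilities, each drawn from an assumed beta distribution.
        p_infested = rng.betavariate(2, 50)     # unit carries the organism
        p_survives = rng.betavariate(5, 20)     # organism survives transit
        p_establishes = rng.betavariate(1, 30)  # survivor founds a population
        # Each rate is the preceding rate multiplied by the next probability.
        rates.append(volume * p_infested * p_survives * p_establishes)
    rates.sort()
    mean_rate = sum(rates) / n_trials
    p95_rate = rates[int(0.95 * n_trials)]
    return mean_rate, p95_rate


mean_rate, p95_rate = simulate_establishment_rate(n_trials=2000, seed=1)
print(f"mean: {mean_rate:.3f} per year, 95th percentile: {p95_rate:.3f} per year")
```

Reporting an upper percentile alongside the mean is one way to convey the uncertainty that the sampled distributions carry through the pathway.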

Pest risk assessment meets a crucial need in that it furnishes a framework for gathering and synthesizing information about a nonindigenous species and about the consequences of its becoming invasive. Those strengths have been recognized, and principles for conducting pest risk analyses have been outlined (Gray et al. 1998). We have discussed several of these principles, but three warrant recapitulation. First, because expert judgment must be used for estimating the characteristics and parameters used in pest risk assessments, the judgments must be clearly identified and supported. Second, the components in pest risk assessments must be completely documented, including the data that are considered and used; models, their assumptions, and results; and sources and justification of parameter values and other relevant facts. Third, pest risk assessments should be subject to peer review. Although risk assessment is not a scientific exercise, it is science-based, and the most reliable method of evaluating its scientific credibility is peer review; such review should strengthen and refine analysis and not be viewed as an attack to be deflected.

KEY FINDINGS

-

Predicting the invasion of introduced organisms in a new range has been based largely on identifying species that already have a record of invasiveness. Thus, the record of a plant’s invasiveness is currently the most reliable predictor of its ability to establish and become invasive in the United States. The same is true for arthropods and pathogens if host plants that they have colonized elsewhere exist in the United States.

-

Climate-matching simulations can narrow the estimated potential range of an introduced species or estimate potential ranges from which invasive species might emerge. Such models form, in effect, a first evaluation or screening of a species’ potential new ranges. Even if a species’ native range appears to have a climate similar to a climate in the United States, the similarity by no means ensures that if the species arrives it will persist, let alone become invasive. Evaluation based particularly on the biotic components of a potential target range is also needed, but it is difficult to incorporate this component into a simulation.

-

Trait-based approaches for predicting invasiveness hold much appeal as a means of providing a general guideline regarding any species’ invasive potential. However, the predictive power of these approaches is directly proportional to the amount of ecological information available on a species’ attributes, the species’ new environment, and the often stochastic circumstances surrounding its entry. The expression of a species’ attributes is context-specific (that is, environment-specific).

-

The phylogenetic distribution of invaders is not random among the earth’s biotas. However, evolutionary divergence is often so great that even closely related taxa can differ greatly in many life-history traits. That divergence makes it difficult to infer the key features responsible for invasiveness. The more distantly related the taxa, the more acute the problem; however, even species comparisons involving congeners often reveal large differences in ecology and life history. The availability of new detailed phylogenetic data will aid in determining which traits are functionally associated with invasiveness.

-

Predictive systems have so far focused almost exclusively on plants, and the successful systems are restricted to relatively small taxonomic groups. There is no consensus as to whether the current predictive systems for plants are also conceptually appropriate for plant pests and for defining the risk posed by introducing organisms for biological control.

-

There is considerable fundamental knowledge about the factors and processes that drive biological invasions, and a fundamental structure of a predictive system can be defined. Construction of comprehensive predictive systems is stymied, however, by the lack of adequate experimentally derived information on the behavior of species in different environments. Such information would place useful bounds on the functional elements in any predictive system.

-

Until a comprehensive system for predicting invasion is developed, the uncertainty inherent in prediction can be evaluated most practically in the context of risk assessment. Its strength is that it incorporates the economic and environmental outcomes of invasions; these are the outcomes that are of primary importance to society. Risk assessments that are carefully documented and that explain the logic of their rating systems are more likely to result in repeatable conclusions in the hands of different experts. The committee advocates the creation of a comprehensive predictive system for identifying future invasive species based entirely on the interactions among a given species’ biology, the environment it enters, and the circumstances of its residence in the new range. Risk assessment is an interim approach. Through its attention to species that may have substantial environmental or economic consequences in a new range, risk assessment reduces dependence on the fundamental biological information needed in a purely predictive system. Species that are deemed to have a high risk of damage are highlighted even if the understanding of their biology is incomplete. Finally, risk assessments that are carefully documented and transparent in the logic used to assemble their ratings would allow public scrutiny and independent evaluation.