The Federal Partnership with U.S. Industry in U.S. Computer Research: History and Recent Concerns

Kenneth Flamm

The University of Texas at Austin

In 1999, two distinguished advisory panels, a congressional budget analysis, and an administration budget proposal1 either explicitly or implicitly focused on recent concerns that declines in federal and industry support for computer research pose potential problems for the long-term health of the information technology sector in the United States. This paper analyzes extant data on changing patterns of computer R&D funding, and how these changes are correlated with changes in the internal structure of the U.S. computer industry. We begin by briefly reviewing the history of federal support for computer R&D in the United States, drawing largely on the author’s 1987 analysis of the development of government-supported computer research programs worldwide,2 and update that analysis to the present. Disaggregated estimates of R&D funding, over time, are then constructed and presented. The proposition that much R&D activity has migrated out of computer hardware production and into semiconductor production is measured against available data. A final section produces a net assessment of recent trends, summarizes recent proposals that address these trends, and offers some concluding observations on the problems that these proposals are likely to encounter.

The United States government has been an important patron of information technology research throughout its history, beginning with the development of the first electronic digital computers during the Second World War, and continuing up through the present.3 Much has changed since the birth of this technology in the 1940s, however, and in the last several years prominent voices have articulated new concerns over changing relationships between government, industry, and information technology research. This paper surveys available empirical data on computer research activity with an eye to the legitimacy of these worries. Before examining the present, we briefly survey the past.

THE BIG PICTURE4

In the first decade after the war, most significant computer research and development projects in the United States depended—directly or indirectly—on funding from the United States government, mainly from the U.S. military. The second decade of the computer, from the mid-1950s through the mid-1960s, saw rapid growth in commercial applications and utilization of computers. By the mid-1960s, business applications accounted for a vastly larger share of computer use. However, the government continued to dominate the market for high-performance computers, and government-funded technology projects pushed much of the continuing advance at the frontiers of information technology over this period.

From the mid-1960s through the mid-1970s, commercial applications of vastly cheaper computing power exploded. By the mid-1970s, the commercial market had become the dominant force driving the technological development of the U.S. computer industry. The government role, increasingly, was focused on the very high-end, most advanced computers, on funding basic research, and on bankrolling long-term, leading-edge technology projects.

From the mid-1970s to the beginning of the 1990s, the commercial market continued to balloon in size relative to government markets for computers, leaving the government an increasingly niche segment in a huge, wide market for computers of all shapes and sizes. Only in small niches, particularly in the very-highest-performance computers and data communications networks, did government continue to play a role as a quantitatively or qualitatively dominant customer for leading-edge technology. The government also remained the primary funder of basic research and of long-range computer technology projects.

The 1990s saw some significant changes in the pattern of federal funding for computer research in the United States, and a sharp turn in the government’s R&D relationships with industry. Indeed, the industry itself seems to have undergone some abrupt changes in its patterns of R&D funding. Both sets of changes, at some fundamental level, are what have motivated recent expressions of concern over the magnitude and direction of information technology R&D in the United States. Before examining the basis for these concerns, we will briefly mention some of the major players within the U.S. government responsible for bankrolling the IT revolution.

DEFENSE

What was arguably the first electronic digital computer was built in Britain during World War II to assist in cracking German codes, and it was the intelligence community that dominated the development of the very fastest computers, and the components needed to make those computers, in the United States in the early years. Through the 1950s and 1960s, the National Security Agency was the primary funder of what came to be called supercomputers.

From the early 1960s on, a unique new U.S. defense agency came to dominate the U.S. computer R&D agenda. The Advanced Research Projects Agency (ARPA, later renamed DARPA with a “Defense” prefix added on), under a succession of technology leaders rotated in from the U.S. computer community, devised a strategy of focusing on long-term, innovative, and highly experimental research projects that ultimately generated an outstanding record of accomplishment. The DARPA-funded projects of the 1960s and 1970s are the building blocks of today’s bread-and-butter computing. Such advances as time sharing and distributed computing, computer networks, the ARPAnet (the precursor of today’s Internet), expert systems, speech recognition, advanced computer interfaces (like the mouse), computer graphics, computer-based engineering design and productivity tools, advanced microelectronics design, manufacturing and test tools—all drew significantly on results of an astounding portfolio of people and projects.

That is not to say that everything the Department of Defense touched turned to gold. DARPA occasionally burned serious resources on large-scale, “political” projects that didn’t seem to produce much in the way of results. The so-called Strategic Computing Initiative, the centerpiece of DARPA computer research in the late 1980s, is a prime example of a massively expensive program that seems to have ultimately slipped beneath the waves with barely a ripple. Other Defense customers have funded even larger, marquee R&D programs with what have been decidedly mixed results: the Office of the Secretary of Defense did not have a lot to show for its billion-dollar VHSIC (Very High Speed Integrated Circuit) program of the 1980s, or its various ADA programming language initiatives of the 1980s and 1990s. Indeed, the Defense-defined ADA language seems destined to become the Latin of computer languages, used only by the most faithful within the inner sancta of the Pentagon.

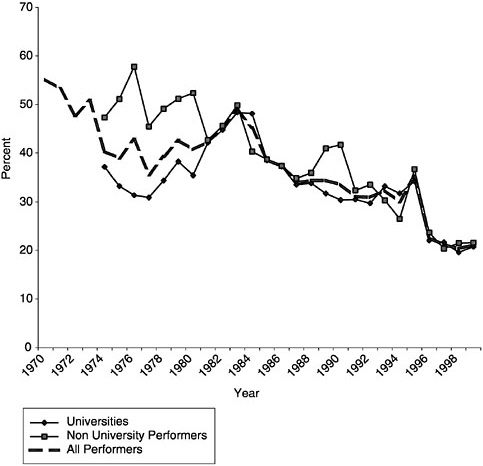

Overall, DoD’s role in federal funding of computer research has declined significantly over the years. Detailed NSF data on federal funding of math and computer science research (that is, excluding development expenditure) begin in the 1970s. Figure 1 shows the growth of federal spending in this area over 30 years, expressed in 1996 dollars (using the fiscal year GDP deflators compiled by NSF). Note that overall, federal spending on basic research in the area has gone from exceeding 50 percent of the total in the mid-1970s, to a one-third/two-thirds split today. The growth in basic research funding has certainly been considerably less impressive than growth in applied research.5

Within the relatively slow-growing federal basic computer research budget, DoD’s role has shrunk even more sharply over the decades. Figure 2 shows that in the early 1970s, over 50 percent of federally funded basic computer research came from DoD. Today, that figure is about 20 percent.6

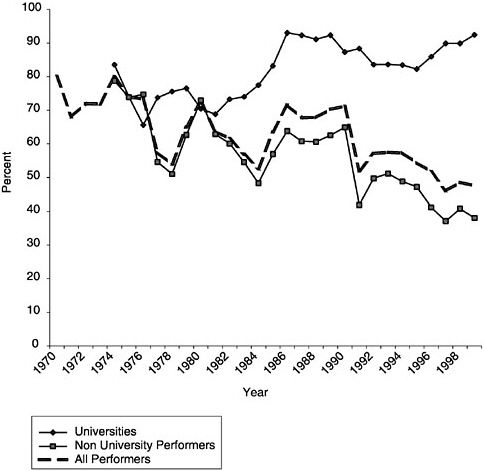

Similarly, Figure 3 shows that DoD’s share of applied federal computer research funds has fallen from as much as 80 percent in the early seventies, to under 50 percent today.7 Interestingly, even as DoD’s overall share of applied federal research funding outside of universities was falling—mainly because other agencies’ funds for applied computer research in industrial and nonacademic settings exploded (see Figure 1) —DoD remained the clearly dominant funder of applied research in universities. Better than 90 percent of federally funded applied math and computer science research in universities for 1999 came from DoD, preserving its role as the primary funder of computer research (aggregating basic and applied) in universities.

In short, the major trend affecting DoD in recent years has been a dramatic shrinkage in its relative importance as a funder of computer research within the federal government. This has happened at a time when the overall federal research portfolio was shifting away from basic and toward applied research, and when applied computer research funded by agencies other than DoD, channeled mainly to industrial and nonacademic performers, was surging dramatically. Next, we briefly consider the other federal patrons of computer research.

FIGURE 2 DoD share of federal math and CS basic research dollars.

THE OTHERS

Department of Energy/Atomic Energy Commission

Historically, the second-largest federal funder of computer research and development has been the Department of Energy, and its predecessor agency, the Atomic Energy Commission. This was true 50 years ago, and it remains true today. Energy’s special interest has been in funding the development and use of supercomputers, and the modeling and operating system software for nuclear weapons design that runs on these ultra-high-performance computers. In the 1950s and 1960s, these machines bore the names of now defunct companies (Engineering Research Associates, Philco, Control Data, Cray Research) and of IBM. In the eighties and nineties, now-familiar new names were added to the Energy roster (along with IBM): Intel, Silicon Graphics, and Sun Microsystems. The focus also shifted toward massively parallel supercomputing using large numbers of processors lashed together.

FIGURE 3 DoD share of federal math and CS applied research dollars.

In addition to providing the second-largest slug of computer research funding in the federal budget, Energy was particularly creative in supporting development and production of horrendously expensive new hardware. A variety of means (advance purchase agreements, prepayment for hardware, direct subsidy of development expenditure) have been used to underwrite creation of these top-of-the-line machines.

NASA

In the sixties and seventies, NASA was probably the third-most significant federal player in computers. While the scale of its efforts was significantly smaller than that of DoD and even Energy, it was highly focused and had great impact on a number of areas. Principal among these were artificial intelligence, computer simulation of physical design (the NASTRAN finite-element structural modeling software that is today an industry standard was originally developed for NASA), image processing, large-scale system software development (the Space Shuttle was a major landmark for large-scale complex systems development projects), and radiation-hardened, reliable computing hardware. Today, however, NASA influence has dwindled along with its budget, and it is now the fifth-largest player in funding computer research.

NSF

The National Science Foundation was a late arrival on the computer scene. Computer science did not emerge as a separate academic discipline until the 1960s, and with its orientation toward academically defined disciplines, the NSF initially only funded problem-oriented computer applications in traditional academic disciplines. The first organized thrust into computing came in 1967, with a large facilities investment program designed to strengthen computing hardware resources available in the nation’s universities and colleges. In the mid-1970s, computer science finally took off as a supported discipline. From 1985 on, another large infrastructure investment was launched, this time in making supercomputers available to academics outside of the traditional defense and nuclear weapons communities. Finally, in the 1990s, NSF was given the leadership role in the new High Performance Computing and Communications Initiative, which was merged into a single, larger interagency IT R&D budget for FY 2001.

Today, interagency briefings typically show NSF with the largest share of IT R&D in the federal budget,8 but this is an arguably misleading assertion. (For one thing, substantial computer R&D support by the individual services within DoD, and lower-profile computer R&D efforts embedded within other programs elsewhere within the federal budget are clearly being omitted from such IT “crosscuts.”) A safer claim is that NSF is today the dominant force in basic computer research (with close to 60 percent of federal basic computer research funds, and close to half of all basic math and computer science research funding). Within research more broadly writ—i.e., including applied research—NSF today has a solid hold on the number-three spot, after DoD and Energy.

NIH

The National Institutes of Health in recent years have come to occupy an increasingly prominent role as a supporter of computer research, in no small part because of the growing role of computers and computing technology in automated genetic sequencing and analysis. It is by no means a new role, however. In earlier decades, NIH had played a small but focused role in supporting the development of artificial intelligence and expert systems for interpreting data and diagnostics. In a sense, the recent upsurge in the NIH presence in computers represents a continuation of this activity in a new and promising area of technology. Today, NIH is the fourth largest player in federal computer research, basic and applied. With roughly two-thirds of its funding going into basic research, it is number three in basic research, after NSF and DoD.

THE U.S. GOVERNMENT AND ACADEMIC COMPUTER RESEARCH

Thus far, we have seen that there has been significant growth in federal funds for math and computer research over the last several decades, more than doubling in real terms from 1991 to 1999 alone. Federal math and computer science research obligations approached $2.5 billion in 1999. We did, however, note that the agencies involved in providing these funds have altered their relative shares of this pie over the years, and that overall, there has been a marked shift away from basic research and toward more applied projects.

Does this mean that the federal role in academia, which we tend to identify with more basic research activities, has declined? Briefly, the answer is no. Based on NSF data, Figure 4 shows the share of federal funds in math and computer science research and development undertaken in universities, and in university-administered federally funded research and development centers (FFRDCs).9 Though there has been some downward trend most recently, the federal share of all math and computer R&D funds in universities has fluctuated between 65 and 75 percent over the last fifteen years, and shows no sign of fundamental change.

In short, federal and non-federal funding of computer R&D in universities have increased at roughly similar rates over the long haul. Given the continuing predominance of federal funding in universities and their FFRDCs, however, the data in Figures 1 and 4 together suggest that the math and computer science R&D undertaken in academic institutions has shifted away from more basic projects and toward more applied research and development.

FIGURE 4 Federal share of university math & CS funding.

THE U.S. GOVERNMENT AND INDUSTRIAL COMPUTER RESEARCH

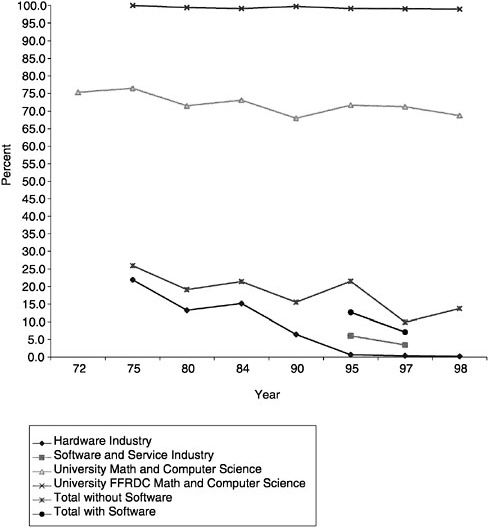

The same sort of assertion of continuity does not apply to federal computer research funds going to the industrial sector. In the 1950s, the federal government paid for perhaps 60 percent of research and development undertaken by firms producing computer hardware. By the mid-1960s, the federal share had declined to roughly a third of total R&D.10 By 1975, NSF data show that firms loosely classified as being computer hardware producers (“office, computing, and accounting machines,” or OCAM) received federal R&D funds accounting for 22 percent of their total effort, and by 1980 that share had further declined to 13 percent.11 (See Figure 5.) After increasing slightly, to 15 percent of OCAM R&D in the mid-1980s, the federal share plunged to 6 percent by 1990, plunged again to 0.6 percent by 1995, and had dropped by another third, to 0.4 percent, by 1997.

Clearly, after years of slow, gradual erosion, the role of federal funds in computer hardware industry R&D plunged to virtually nil in the nineties. Further, since the industry R&D effort is so much larger in the aggregate than research undertaken in universities, even lumping robust federal support for university R&D efforts with industry R&D would not arrest the precipitous decline of the nineties in an overall U.S. aggregate. Adding university math and computer science research to computer hardware industry R&D, the federal share of the total went from 26 percent in 1975, hovered in the 16 to 22 percent range from 1980 through 1995, then dropped to 10 percent in 1997 (Figure 5). Beginning in 1995, industrial R&D by computer software and service firms has been measured in NSF statistics, but folding in that, too, does not arrest a sharp decline from 1995 to 1997.

A positive interpretation of these changes might be to suggest that while federal funding grew at a robust enough rate, the computer industry grew at a truly phenomenal rate in the nineties, resulting in a federal share of computer R&D that dropped sharply in the late nineties. In other words, while the federal slice of the pie increased, the overall R&D pie grew much faster, driven by the explosive computer industry growth of the 1990s.

FIGURE 5 Federal share of computer R&D.

COMPUTER R&D UNDER THE MICROSCOPE

Unfortunately, the latter suggestion turns out to be untrue. Indeed, surprisingly and perhaps even shockingly, U.S. computer hardware industry R&D seems to have actually declined in real terms during the 1990s, according to NSF statistics! Rather than being a bigger federal slice of an even bigger industry pie, federal funds seem to have become a truly tiny piece of a much smaller hardware industry pie.

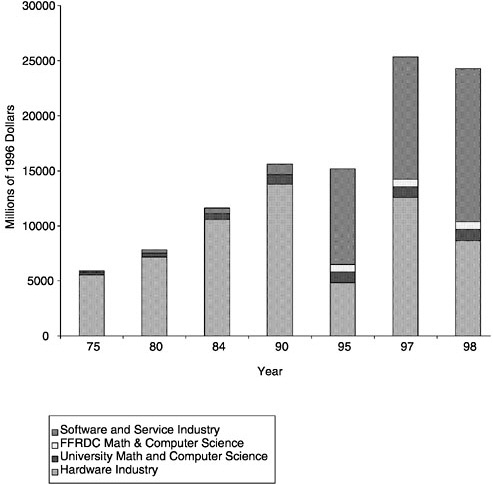

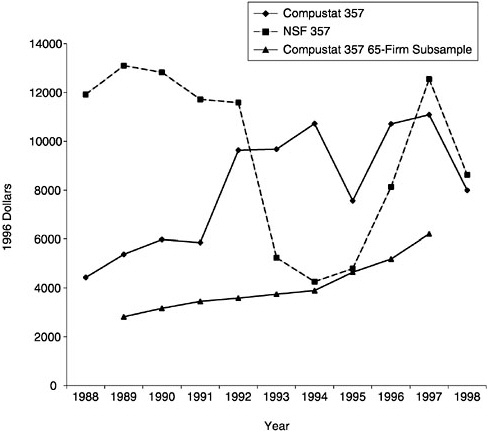

Figure 6 tells this surprising story. NSF estimates (in constant 1996 dollars) of industry-funded R&D in the computer hardware (and software, when available) sectors are shown in comparison with each other and with the size of math and computer science R&D undertaken in universities and university-administered FFRDCs. (Data is from the same NSF sources as in earlier figures.) Both OCAM industry R&D and university math and computer science research grow in real terms through 1990. But from 1990 through 1995, OCAM industry R&D drops by about two-thirds. Even if software and service industry R&D is added on to hardware (OCAM) for 1995, total industry-funded R&D drops from 1990 to 1995.

FIGURE 6 Computer-related R&D in 1996 dollars.

After some recovery in 1997, computer hardware industry R&D drops again in 1998. Even with increasing software and services industry R&D added on, the total again declines from 1997 to 1998. By 1998, OCAM total R&D has still not recovered to its 1990 level, while software industry R&D has increased by al-

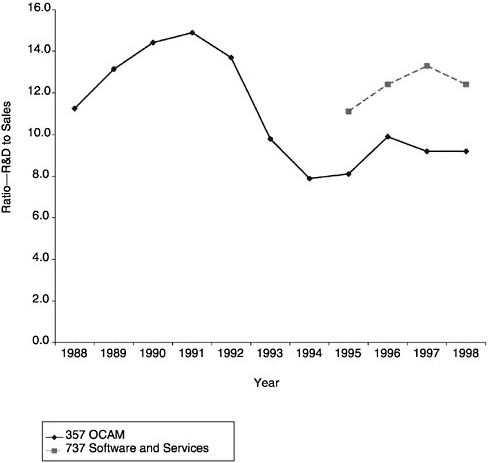

FIGURE 7 Ratio of R&D to sales for computer firms per NSF statistics.

most one-half of its 1995 level. Math and computer science research in universities and their FFRDCs, by contrast, is relatively stable in the 1990s, as was the federal share of the total.

Industrial computer R&D seems to have not only fallen in absolute terms in the 1990s, but also relative to sales by computer hardware producers. Figure 7 shows that the research intensity of computer hardware makers tracked by NSF statistics fell sharply in the 1990s.

At least superficially, then, we are left with a somewhat troubling picture of a high-tech computer industry that cut back its investment in R&D over most of the 1990s for which we have data. How and why did this happen? Before exploring this in greater detail, it is helpful to discuss whether this trend might possibly be dismissed as a statistical artifact.

THE IBM EFFECT

The NSF statistics on industry R&D we have been examining classify R&D at the company level, imputing all R&D to the primary industry of the company. Industrial classification of a company is generally based on employment, and it is therefore possible for companies to switch industries from one year to the next if one activity of the company increases and surpasses another that previously might have been the company’s largest.

NSF officials have in the past suggested that this was going on in statistics for the computer hardware sector in the 1990s, and consideration of the case of IBM makes concrete how this could cause large shifts in reported R&D. Investment analysts covering IBM typically reported in their market research that in the mid-1990s, IBM’s largest revenue-producing sector shifted from hardware to software and services. Corporate R&D figures reported in IBM’s annual reports suggest that this could have large consequences for NSF industry R&D estimates. In 1975, IBM’s R&D amounted to about 55 percent of total company funds for OCAM R&D in NSF statistics. By 1985, the relative share of IBM R&D had fallen slightly, to 50 percent, and in 1990 it was still about 45 percent. Thus, a transfer of IBM out of hardware and into software in the NSF statistics could very well have reduced OCAM R&D significantly in 1995 purely as a result of the classification change.
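The mechanics of this classification effect can be illustrated with a minimal sketch. It assumes, purely for illustration, that each firm is assigned to whichever activity accounts for the largest share of its business and that all of its R&D is then imputed to that industry. The firm names, shares, and R&D figures below are hypothetical and are not drawn from NSF or IBM data.

```python
from collections import defaultdict

def industry_rd_totals(firms):
    """Sum R&D by industry, imputing each firm's entire R&D to its primary industry."""
    totals = defaultdict(float)
    for firm in firms:
        shares = firm["activity_shares"]
        # Primary industry: the activity with the largest share of the firm's business.
        primary = max(shares, key=shares.get)
        totals[primary] += firm["rd"]
    return dict(totals)

# Hypothetical firms; every number here is invented for illustration.
before = [
    {"name": "BigCo", "rd": 50.0,
     "activity_shares": {"hardware": 0.52, "software_services": 0.48}},
    {"name": "SmallCo", "rd": 10.0,
     "activity_shares": {"hardware": 0.90, "software_services": 0.10}},
]
after = [
    {"name": "BigCo", "rd": 50.0,
     "activity_shares": {"hardware": 0.48, "software_services": 0.52}},
    {"name": "SmallCo", "rd": 10.0,
     "activity_shares": {"hardware": 0.90, "software_services": 0.10}},
]

totals_before = industry_rd_totals(before)
totals_after = industry_rd_totals(after)
```

In this toy case a four-point shift in the large firm's activity mix moves all of its R&D out of the hardware total, even though no firm changed its R&D spending.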

However, even if IBM and other computer hardware firms had shifted out of OCAM and into software and services in the NSF classifications, the data would still seem to show an absolute decline from 1990 to 1995, even if a very large share of software and service R&D in 1995 had been coming from such “transferees.” In order not to have declining R&D from 1990 to 1995 among firms classified as computer hardware producers in 1990, there would have had to be large-scale movement out of OCAM and into still other sectors, like communications equipment or scientific instruments. While not impossible, this seems unlikely.

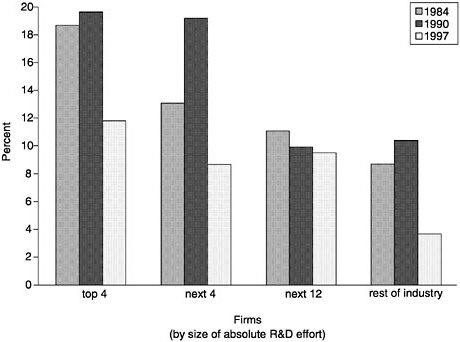

Furthermore, those companies that continued to be classified as OCAM producers clearly underwent a decline in their R&D intensity in the 1990s, compared with prior decades. It is not the case that the firms remained as research intensive as before and that there were merely fewer of them, thus reducing the aggregate investment by this sector in R&D. In fact, there were very large declines in R&D intensity for all types of OCAM producers, large and small. Figure 8 shows the extraordinary declines in R&D intensity when OCAM companies are sorted into groups according to the size of their absolute R&D effort: the 4 largest, the next 4, the next 12, and the rest of the industry. All of these groupings of firms, using the same NSF data described earlier, showed large declines in research intensity in the 1990s.

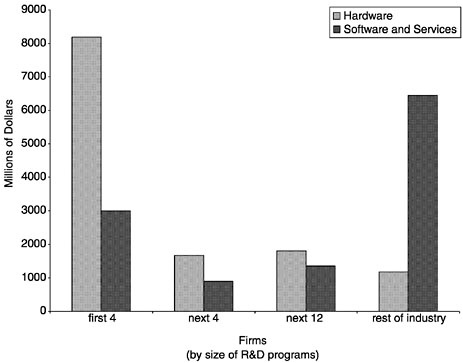

The particularly sharp declines in research intensity within the largest players are notable because the biggest players are responsible for most of the R&D in computer hardware. Figure 9 shows, for 1997, the distribution of R&D across firms, ranked by the size of their R&D programs. The situation, as can be seen, is quite different in software and services, where smaller players, in the aggregate, account for most of the overall R&D activity. A similar figure would be obtained analyzing sales for the same groupings of firms, and would tell the same story about differences between hardware, and software and services.

In short, it is not true that the decline in computer hardware industry R&D depicted in NSF data might purely reflect classification changes that reduced the number of firms in the industry, thus lowering total R&D while possibly leaving R&D intensity unchanged. The R&D intensity of those who continued to be classified as computer producers clearly declined in the 1990s, and played a role in the overall decline in R&D undertaken by the hardware-producing sector.

Still, it would be useful to have a more direct way of analyzing changes in research intensity among computer industry firms due to classification changes, versus changes within firms. To examine this question, we need data that does not suffer from the NSF data’s abrupt classification shifts.

CHANGES IN COMPUTER INDUSTRY RESEARCH INTENSITY

For this purpose, we turn to corporate financial data collected in Standard and Poor’s Compustat database. Unlike the NSF data, these statistics include only company-funded R&D by publicly traded companies, and they include foreign companies with ADR shares sold on American stock markets. However, as we have already seen, federal funding was minimal by the 1990s, and therefore the first of these differences would little affect our examination of industry R&D activity over this period. Since we have access to individual company data from this source, we can, as we cannot with the NSF data, examine the composition of some sample of computer industry firms directly, and eliminate the potential impacts of classification changes on R&D trends.

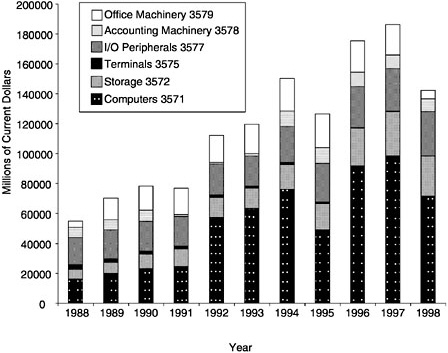

To isolate the potential impacts of classification changes, I used a 1999 edition of the Compustat database to construct a sample of 396 firms classified in OCAM (Standard Industrial Classification Code 357) over 1979–1998 when last assigned an industry (some of these firms exited the sample because they failed or were acquired by other companies, in which case their classification at the time of exit was used). I remark again that the Compustat sample includes firms no longer currently in the industry, and foreign firms, which are not included in the NSF sample, and excludes privately held firms, which are. Therefore, the number of U.S. firms tracked in the Compustat data is certainly less than the number of firms in the NSF survey, which we know exceeded 400 in recent years (see above). Of the Compustat firms, 100 were computer producers (SIC 3571), 64 computer storage makers (SIC 3572), 34 makers of computer terminals (SIC 3575), 131 producers of I/O peripherals (SIC 3577), 40 makers of accounting machinery (SIC 3578), and 27 producers of office machinery (SIC 3579). In addition, historical data was also available for 118 producers of computer networking and communications equipment, for whom Compustat created a classification (SIC 3576) not found in official government statistics for SIC 357. This latter group includes firms like Cisco, 3Com, and Adaptec, and was excluded from our analysis of SIC 357, in order to ensure greater comparability to NSF statistics. It is also important to reaffirm that the NSF data, collected by the Census, cover all U.S. firms, while Compustat data cover all publicly held firms listed on U.S. stock exchanges.

The Compustat data, in short, is a sample of firms classified in SIC 357 in 1998, if currently in business, or when last in business, if they exited the industry prior to 1998. If there were missing values for either sales or R&D in any year, that observation was dropped from that sample. Note that there are missing values for a number of Compustat firms in 1998 that significantly affect totals for that year.12 As in the NSF data, firms entering an industry push up totals; unlike in the NSF data, a firm in the industry in 1998 (or when last classified) is counted in that industry in all previous years.
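The sample rules just described can be written out as a short sketch. The record layout (keys `firm`, `year`, `last_sic`, `sales`, `rd`) and the sample values are assumptions made for illustration; they do not reflect the actual Compustat file format or actual company data.

```python
def sic357_totals(observations):
    """Yearly sales and R&D totals for firms last classified in SIC 357.

    Each observation is a dict with keys: firm, year, last_sic, sales, rd.
    A firm's last classification is applied to all of its earlier years.
    """
    totals = {}
    for obs in observations:
        sic = obs["last_sic"]
        # Keep SIC 357 firms, excluding the Compustat-only networking class (3576).
        if not sic.startswith("357") or sic == "3576":
            continue
        # Drop the firm-year observation if either sales or R&D is missing.
        if obs["sales"] is None or obs["rd"] is None:
            continue
        year_total = totals.setdefault(obs["year"], {"sales": 0.0, "rd": 0.0})
        year_total["sales"] += obs["sales"]
        year_total["rd"] += obs["rd"]
    return totals

# Hypothetical firm-year records; all values are invented.
sample = [
    {"firm": "A", "year": 1997, "last_sic": "3571", "sales": 100.0, "rd": 8.0},
    {"firm": "A", "year": 1998, "last_sic": "3571", "sales": 110.0, "rd": None},
    {"firm": "B", "year": 1997, "last_sic": "3576", "sales": 50.0, "rd": 6.0},
    {"firm": "C", "year": 1997, "last_sic": "3577", "sales": 20.0, "rd": 2.0},
    {"firm": "C", "year": 1998, "last_sic": "3577", "sales": 25.0, "rd": 3.0},
]
totals = sic357_totals(sample)
```

Firm A's missing 1998 R&D value removes its 1998 sales from the total as well, which is the kind of effect on 1998 totals noted in the text.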

Figure 10 shows the distribution of sales within SIC 357 across product segments for our sample of Compustat firms over the years 1988–1998. The surge in computer sales from 1992 to 1994 is clearly shown. Much of the choppiness in Figure 10, however, comes from firms exiting the industry (or being acquired), or new firms entering the industry.

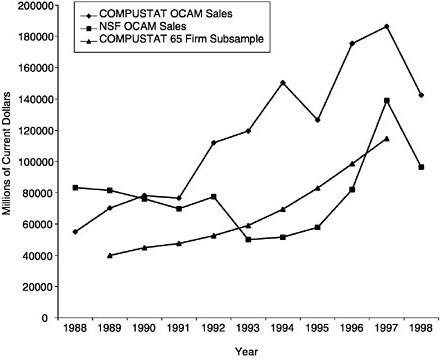

To make this point, I have constructed a subsample of the Compustat SIC 357 data showing only data for those firms with both R&D and sales data for every year over the 1979–1997 period. Out of our original sample of almost 400 companies, there were only 65 that met this test! Figure 11 compares total sales for the firms in my Compustat SIC 357 sample (which covers worldwide sales by these companies) with net domestic (i.e., excluding foreign) sales for the U.S. firms undertaking R&D classified in the NSF SIC 357 sample over these same years, and with my subset of 65 firms with continuous R&D and sales data. Both full-sample Compustat sales and NSF sales jump abruptly from year to year, while my continuous data subset of 65 firms, which accounts for a majority of the sales over this period, increases quite smoothly.
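The test for membership in the continuous subsample is simple enough to state as code. The following is a minimal sketch, assuming a hypothetical panel keyed by firm and year; the data structure and the sample values are illustrative only.

```python
def continuous_firms(panel, first_year=1979, last_year=1997):
    """Return firms reporting both sales and R&D in every year of the window.

    `panel` maps firm -> {year: (sales, rd)}. A missing year or a None value
    counts as a gap and disqualifies the firm.
    """
    years = range(first_year, last_year + 1)
    keep = []
    for firm, by_year in panel.items():
        complete = all(
            year in by_year
            and by_year[year][0] is not None
            and by_year[year][1] is not None
            for year in years
        )
        if complete:
            keep.append(firm)
    return sorted(keep)

# Hypothetical three-year panel; all values are invented.
panel = {
    "A": {1995: (10.0, 1.0), 1996: (11.0, 1.1), 1997: (12.0, 1.2)},
    "B": {1995: (5.0, 0.5), 1997: (6.0, 0.6)},             # no 1996 record
    "C": {1995: (7.0, None), 1996: (7.5, 0.7), 1997: (8.0, 0.8)},  # R&D missing in 1995
}
survivors = continuous_firms(panel, first_year=1995, last_year=1997)
```

Because entrants and exiters are excluded by construction, totals for such a subsample cannot jump because of changes in sample composition.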

My conclusion is that entry and exit of firms in both Compustat and NSF samples seem to account for an important portion of the ups and downs in sales. When downturns hit, some incumbent players exit. When the computer industry turns back up, new and different players come in and account for an important component of increased growth. Since the NSF data exclude foreign sales, they would normally be substantially less than the Compustat data (which are worldwide sales) for any given group of firms. The expected relation held, and the two series moved in a generally similar manner from 1995 on. Prior to 1995, however, the NSF data generally are nonincreasing, and even exceed the Compustat totals in the late 1980s. This suggests that the NSF data are capturing firms which either exited the computer industry, or shifted the bulk of their sales into other industries, over the 1988–1994 period, while these same firms are never included in the 1998 Compustat sample (which is based on the firm’s activity in 1998 or its last activity prior to acquisition or failure in other cases). Thus, it appears that the NSF data include substantial sales by computer hardware firms that either failed or switched their main line of business to other activities over the 1988–1994 period.

12 Perhaps the most extreme example is that of SIC 3579, office machines, where a single firm (the Japanese firm Sanyo, whose ADR shares are sold on a U.S. stock exchange and which is therefore included in the Compustat sample) accounted for better than 80 percent of the sector’s total R&D in 1997, and U.S. producer Pitney Bowes for most of the remainder. With Sanyo’s number missing for 1998, the sector’s R&D falls sharply in the data pictured in Figure 10.

Putting the above facts together, flat or declining sales by firms focused on computer hardware, and declines in the research intensity of those sales, combined to produce the declining computer hardware R&D figures in the early 1990s in the earlier figures showing the NSF data. In the Compustat data, by way of contrast, there are increasing sales over this period. Both NSF and Compustat, on the other hand, show increasing sales after 1995.

Figure 12 shows company-funded R&D, measured in 1996 dollars (using the GDP implicit price deflator), over the period 1988–1998 for both NSF and Compustat samples. Comparison of the full Compustat sample with the “continuous” 65-firm subsample strongly suggests again that entry and exit in the computer hardware business in the early 1990s must be the explanation for the zigs and zags in total company-funded R&D. As with sales, the NSF R&D data show further evidence of major classification changes in companies assigned to OCAM in the early 1990s.
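The conversion to constant 1996 dollars used in such comparisons amounts to rescaling each year's nominal figure by the ratio of the base-year price index to that year's index. A minimal sketch follows; the index values and nominal R&D figures are hypothetical placeholders, not actual GDP deflator or R&D data.

```python
def to_constant_dollars(nominal, deflator, base_year=1996):
    """Convert {year: nominal value} to base-year dollars using a price index."""
    base = deflator[base_year]
    # Real value = nominal value * (base-year index / current-year index).
    return {year: value * base / deflator[year] for year, value in nominal.items()}

# Hypothetical price index and nominal R&D series; all values are invented.
deflator = {1990: 80.0, 1996: 100.0, 1998: 104.0}
nominal_rd = {1990: 40.0, 1996: 50.0, 1998: 52.0}
real_rd = to_constant_dollars(nominal_rd, deflator)
```

In this invented example nominal R&D rises in every year, yet the series is flat once expressed in 1996 dollars.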

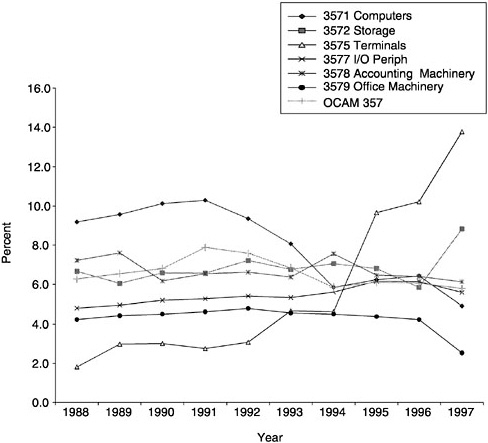

Figure 13 compares company-funded R&D intensity (R&D as a percent of sales) for firms in the NSF SIC 357 OCAM sector with the aggregate R&D intensity for the overall Compustat SIC 357 sample, as well as the 65 firms in our “continuous” Compustat sub-sample for SIC 357. The NSF OCAM R&D intensity (which takes R&D as a fraction of domestic U.S. sales only) falls sharply in the early 1990s, as do the Compustat figures for computer (SIC 3571) R&D intensity (which take worldwide corporate sales as the denominator). Exports are a significant share of U.S. computer companies’ sales, so we would expect the NSF figure to be substantially greater, as it generally is. Therefore, this figure shows three things: that the movement of NSF OCAM 357 R&D intensity over time parallels that of SIC 3571 in both Compustat samples; that the SIC 3571 R&D intensity converges to SIC 357 R&D intensity in both Compustat samples; and that SIC 357 OCAM R&D intensity, in all three data sets, is down somewhat relative to the early 1990s by the late 1990s—by a little in the Compustat data; by a lot in the NSF data.
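The denominator effect described above can be illustrated with a short sketch. It assumes, purely for illustration, that the same R&D total is divided by domestic sales in one case and by worldwide sales in the other; the function name `rd_intensity` and all numbers are hypothetical and are not drawn from either data set.

```python
def rd_intensity(rd, sales):
    """Return R&D as a percent of sales."""
    return 100.0 * rd / sales


# Hypothetical group of firms (illustrative values only).
rd_spending = 8.0      # company-funded R&D
domestic_sales = 60.0  # U.S. sales, the NSF-style denominator
foreign_sales = 40.0   # exports and other foreign sales

# NSF-style measure: R&D relative to domestic sales only.
nsf_style = rd_intensity(rd_spending, domestic_sales)

# Compustat-style measure: R&D relative to worldwide sales.
compustat_style = rd_intensity(rd_spending, domestic_sales + foreign_sales)

print(round(nsf_style, 1), round(compustat_style, 1))
```

Whenever foreign sales are positive, the domestic-sales denominator is smaller, so the NSF-style intensity comes out higher for the same R&D spending. This is why the levels of the two series are not directly comparable, even when their movements over time are.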

All the above is consistent with a story in which many highly R&D-intensive computer firms either failed, shifted into other lines of business, or were acquired by others in other lines of business in the 1990s. (Fortunately, this matches up with actual events for this decade.) All these much more R&D-intensive firms—whether they failed, merged with other computer firms, or merged with non-computer firms—are picked up in the early part of the 1990s in the NSF sample. The subset that was acquired by other computer companies remaining in the business, or that failed, is picked up in the broad Compustat sample over the entire period. The subset that was acquired by other computer companies remaining in the business is picked up in the small (65-firm) continuous Compustat sample after the period of acquisition only, while failed enterprises and new entrants in the late 1990s are omitted in the continuous subsample.

FIGURE 12 COMPUSTAT vs. NSF co-funded SIC 357 R&D in 1996 dollars.

In short, the decline in R&D intensity appears to be real, but mainly in computer systems (SIC 3571), where it plunged precipitously. The above data can be read as suggesting that the NSF survey procedures may give much greater relative weight to these exiting, high R&D computer companies, in constructing an estimate for all OCAM, than the smaller subset of firms tracked in the Compustat classification scheme.

FIGURE 13 Company funded R&D intensity in SIC 357.

Figure 14 displays R&D intensity, by subsector, for the full Compustat sample. Declines in R&D-intensity in computers appear to be offset to a significant extent by increasing R&D intensity in I/O peripherals. More interestingly, this raises the issue of whether the decline in R&D intensity in computers, and the relative stagnation of computer hardware R&D in the late 1990s, really masks the fact that the leading edge of computer technology has moved out of traditional computer companies and into new and different kinds of firms.

MIGRATION OF COMPUTER R&D INTO OTHER SECTORS

In many respects, it is obvious that much R&D that used to be done by computer firms is now being done elsewhere. The classic example is the personal computer (PC) industry. Where computer firms once did most of the R&D, design, and engineering work needed for a new model of PC, virtually all of the design is now incorporated into highly integrated, standardized semiconductor components now designed, developed, and shipped by Intel and a handful of other specialized chip firms. Today, a builder of “commodity” PCs, like Dell or Gateway, typically spends something like 1 percent of revenue on R&D, compared with the 10 percent or more that was the case for computer makers in the 1980s. The “technology” is largely in the chips and other components, not the “box” that is the PC maker’s responsibility.

FIGURE 14 COMPUSTAT OCAM R&D intensity.

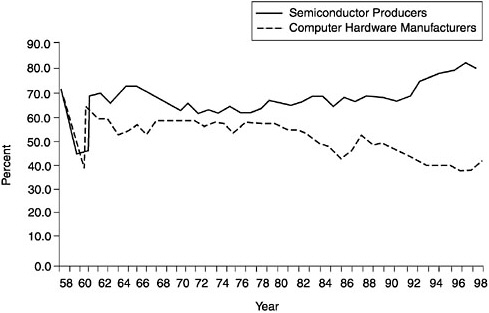

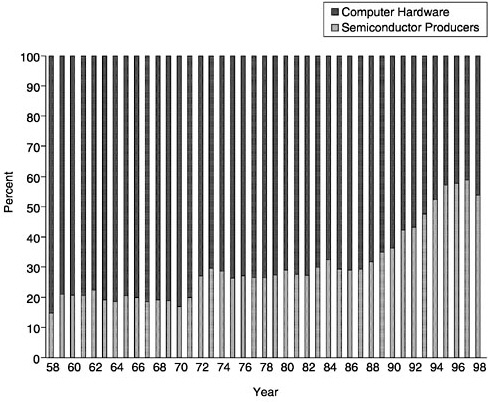

Figure 15 makes this point graphically by showing the split of value added between U.S. computer hardware manufacturers (SIC 357) and semiconductor producers (SIC 3674) over the last 40 years. The changes are striking. Semiconductors and computers accounted for roughly equal shares of value added in their sectors’ output in the early 1960s. Today, the share of value added in computer shipments is roughly half the share of value added in semiconductor shipments.13

FIGURE 15 Value added/shipments in OCAM and semiconductors.

Alternatively, Figure 16 shows the split of value added between these two sectors. In the early 1960s, computers accounted for 80 percent of the value added in the two industries. By the 1990s, computers’ share of the two industries’ value added was halved, to about 40 percent.
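The split of value added between the two sectors is simple arithmetic, sketched below. The dollar levels are hypothetical placeholders, chosen only so that the computed shares reproduce the approximate 80 percent and 40 percent figures quoted in the text; they are not the underlying Census values, and the function name `value_added_shares` is likewise an assumption.

```python
def value_added_shares(value_added):
    """Return each sector's share of combined value added (0 to 1)."""
    total = sum(value_added.values())
    return {sector: va / total for sector, va in value_added.items()}


# Hypothetical value-added levels in arbitrary units (not actual data).
early_1960s = {"computers (SIC 357)": 8.0, "semiconductors (SIC 3674)": 2.0}
late_1990s = {"computers (SIC 357)": 4.0, "semiconductors (SIC 3674)": 6.0}

shares_1960s = value_added_shares(early_1960s)
shares_1990s = value_added_shares(late_1990s)

for label, shares in (("early 1960s", shares_1960s), ("1990s", shares_1990s)):
    for sector, share in shares.items():
        print(label, sector, f"{share:.0%}")
```

Because the shares are relative, the computers' share can fall by half even while value added in both industries grows in absolute terms; the measure captures only the shift in where value is created.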

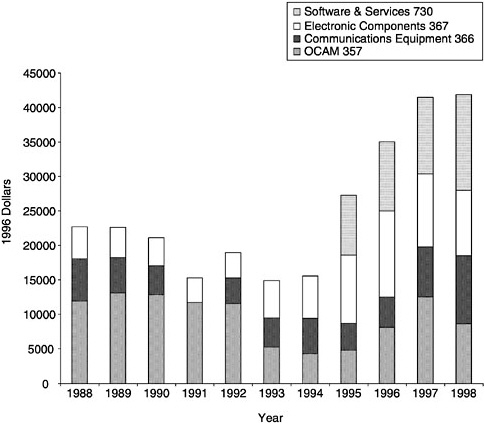

Semiconductors were not the only IT-related sector benefiting from relatively higher levels of R&D investment in the 1990s. Figure 17 shows that, in addition to semiconductors (contained in NSF statistics within SIC 367, “electronic components”), R&D in communications hardware expanded sharply, both in real terms and relative to OCAM R&D. With the rise of the Internet, the World Wide Web, and e-commerce, this is not surprising, and it is borne out by the available data.

FIGURE 16 Distribution of U.S. manufacturing value added between OCAM and semiconductors.

SUMMARY AND CONCLUSIONS

Government’s role in the funding of computer R&D has declined greatly, in both relative and absolute terms. Virtually no government R&D funds now support projects at computer hardware companies, in marked contrast to the situation just two decades ago.

The decline in support for basic research is particularly striking, and perhaps even troubling. Even more disturbing are various anecdotal reports (since statistics on basic research in industry are virtually nonexistent) suggesting that industry itself has turned even more sharply away, in relative terms, from long-term, basic, and fundamental research on computing technology. Furthermore, informed observers suggest that even the federal investment in computer research has increasingly been funneled into “targeted” research, and away from “fundamental” projects.14

FIGURE 17 Co-funded IT R&D in 1996 dollars.

Certainly, within the Department of Defense, there has been considerable pressure on DARPA to shift from more basic, fundamental projects, toward near-term efforts with expected impacts on short-term military requirements. Given DARPA’s enormous historical importance in this area, this raises important questions about whether federal funding of long-term, fundamental research on information technology is at optimal levels.

After looking at a variety of data sets, we have tentatively concluded that the apparent decline in industrial R&D intensity in computer hardware is real. It is particularly pronounced in computer systems; less so in storage and peripherals.

Relatively stagnant or even declining levels of R&D in computer systems have to some extent been offset by increasing R&D intensity in areas such as networking, communications, and peripherals, and by the shift of considerable computer architecture R&D into semiconductor producers.

The statistics collected by Census and NSF in their current form are not well suited to tracking trends in R&D. Given the growing importance of these sectors in overall output, and the widespread belief that they are associated with recent large improvements in productivity, investment of reasonable resources in tracking the U.S. investment in these technologies would seem uncontroversial. A serious review of the objectives and conceptual basis for surveys and statistics of R&D would seem useful given the apparent structural changes affecting these high-tech industries.

In short, the scale of R&D investments in computers and computer architectures has dropped in both absolute and relative terms recently, and longer-term investments in basic and fundamental research are clearly falling short by historical standards. This would not be a source of concern if we were convinced that computing technology had “matured,” and was no longer an area with a high social payoff for the U.S. economy (with substantial excess over private returns). However, given a growing economic literature suggesting an important link between information technology and the recent performance of the U.S. economy, the widening role of high-performance computing as a complement to continued technical advance in other high payoff areas, like biotechnology, and a widespread technical belief that much remains to be done and many high payoff areas remain in utilizing computers, it would be prudent for the United States, as a whole, to plant more seed corn in this particular field.

Indeed, three recent reports have called for precisely this sort of action.15 The response to these reports has been lukewarm, however, with only partial funding to date for the increases in R&D called for in these reports. Perhaps this is because the question of what is actually being spent is so muddy in the current statistics, and perhaps because the focus for a new wave of long-term R&D projects is as yet undetermined.

There clearly is a consensus that this has been a productive area for government-industry collaboration, and remains so. The history of government and private R&D investments in IT has been a highly complementary one, with each bringing unique and different strengths and focus to the enterprise. The biggest challenge is likely to be how to move beyond a vague approval of greater collaborative public/private investments in information technology, and on to concrete particulars: How many dollars are justified, in what sorts of projects and topics, and organized how?

The greatest contribution of the economics community to this debate may be to better define a framework in which to collect and analyze data on these technology investments and their payoff. The greatest contribution of the science and technology community may be to better specify what concrete research themes and areas are worthy of greatly increased support, and collaboration between public and private sectors. If we can execute both of these agendas competently, we may yet manage to extend the impact of this extraordinary set of innovations well into the twenty-first century.

REFERENCES

Congressional Budget Office. 1999. Current Investments in Innovation in the Information Technology Sector: Statistical Background. Washington, D.C.: Government Printing Office.

Flamm, K. 1987. Targeting the Computer. Washington, D.C.: The Brookings Institution.

Flamm, K. 1988. Creating the Computer. Washington, D.C.: The Brookings Institution.

National Research Council. 1999. Funding a Revolution: Government Support for Computing Research. Washington, D.C.: National Academy Press.

National Research Council. 2000. Making IT Better: Expanding Information Technology Research to Meet Society’s Needs. Washington, D.C.: National Academy Press.

National Science and Technology Council. 1999. Information Technology: Frontiers for a New Millennium. Committee on Technology, Subcommittee on Computing, Information, and Communications R&D. Washington, D.C.: Government Printing Office.

President’s Information Technology Advisory Committee (PITAC). 1999. Information Technology Research: Investing in Our Future. Washington, D.C.: Government Printing Office.