surface of a balloon; as it is blown up, what was a speck on the surface becomes large and now contains many features of specialization and efficiency. The interactions of technology and society are highly complex and around each small bit of truth that we understand is a huge dark area that we do not understand. He urged the group to be aware of these dark areas and not to base their understanding on a few narrow parameters.

The Value of Sharing Knowledge

Dr. Spencer added two comments. The first was that the day when somebody could leave a semiconductor company with some knowledge and take it to another company had probably passed. He said that the last serious case he had heard was at Intel, when—as someone humorously put it—vulture capitalists lured away some of the people who were making static memory, causing Intel to go after them. He said that one of the biggest surprises to those who began to work at SEMATECH fifteen years ago was that “everybody knew everything” and there were no secrets.

His second comment was that the rapid early growth of the semiconductor and integrated circuit industries was possible because patents did not restrict access to the core technologies. Because of the Bell System’s consent decree with the U.S. government, the patents on the transistor and related semiconductor technology were made available to everyone for a modest fee. The same was true for the integrated circuit; a patent case between Texas Instruments and Fairchild Semiconductor resulted in this technology not being locked up by patents. This precedent, he added, may be an important lesson for the biotechnology industry.

The Need for More Basic Research

Dr. Mowery added that the vertically specialized firms entering the semiconductor industry are focusing largely on development work at the commercial leading edge of technology. He questioned whether such highly focused, near-term work could be sustained without better links to more basic research. Dr. Gomory seconded this concern and expressed the hope that the workshop would further explore this and related issues, such as the powerful role of venture capitalists in enabling many developments in technology.

MICROPROCESSORS AND COMPUTERS: FIVE TRENDS

Alan Ganek

IBM

Mr. Ganek focused his talk on five trends that help to describe the present state of information technology and affect all its components.

An Optimistic View on Overcoming Limits

The first trend is the straightforward observation that progress in information technology continues at an ever-increasing pace. This might seem obvious, he said, but most technology areas improve rapidly and then reach a point of maturity where growth levels off. Even microprocessors and computers are nearing that point, although exactly where it lies is unknown.

Indeed, some in the semiconductor industry doubt whether its pace of growth can continue. Historically, about 50 percent of its improvements have come from better yield per wafer, finer lithography, and other scaling techniques that reduce feature size and improve electrical behavior. He showed evidence, however, that lithography has improved faster in the past few years than ever before.

He then turned to the transistor and an image of the gate dielectric mentioned by Dr. Spencer. He noted that the gate dielectric is now only about a dozen atomic diameters thick, and it is not clear how to reduce that thickness to less than about half its current dimension. “So there are real fundamental atomic limits you approach,” he said. “That may make you wonder, why am I so optimistic?” He answered his own question by suggesting that improvements will come from other directions, supplementing the lithography and other aspects of scaling he had mentioned. “Physicists are famous for telling us what they cannot do and then coming back and doing something about it.”

Factors That Improve Performance

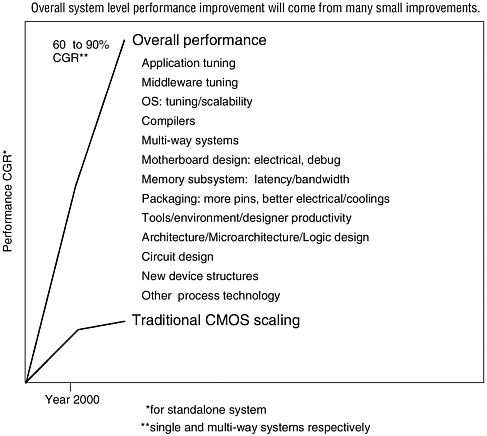

He illustrated his point with a chart where every figure represented a factor that improves performance at the same feature size (Figure 9). The challenge is that such improvements are one-time effects that must be followed by something else. Nonetheless, materials science has provided many such effects, such as introducing copper to interconnect the substrates of the chip; dielectrics that improve performance through the wires; and silicon on insulator that improves the circuitry within it. Silicon germanium is an alternative technology that does not replace CMOS but it does allow you to build mixed-signal processors at very high frequencies that can handle digital and analog circuitry together. He showed the relevance of this technology to Dr. Cerf’s comment that the communications industry’s urgent need is to make the translations from huge fiber backbones to routers and other components that can convert optical to electrical signals.

He then offered another illustration of how speed can be increased despite the physical challenges. He showed a picture of a friend holding the first experimental gigahertz processor on a wafer. Just three years ago a gigahertz was three times the speed of any commercially available processor and yet it was produced on a 300-megahertz processor line. This was done not by semiconductor power but by redesigning the data flows to triple the performance of the processor. The

FIGURE 9 System-level Performance Improvement.

increased speed, therefore, was purely a design attribute, similar to those mentioned earlier by Dr. Morgenthaler. His point was that many factors could maintain the growth of computing power.

The Processor is “Not the Whole Game”

After showing a growth line of conventional bulk CMOS as evidence that growth was starting to level off, he said he was optimistic that engineers would keep finding ways to push it forward. He could not say whether it would continue as an exponential curve or something less, but he expressed confidence in continued improvements in price performance. He stressed that from a systems perspective, processor performance alone “is not the whole game.” IBM estimates that of the compound growth rate in systems performance, about 20 percent has been due to traditional CMOS scaling. In uniprocessor systems about 60 percent of the compound growth rate has come from a stack of other factors, and for multiway systems 90 percent of the growth has come from other factors.

These factors include other process technology, circuit design, logic design, tools, environment, and packaging techniques. He said that packaging as an art had fallen out of vogue at IBM but is now being reestablished because of the leverage it provides. In addition to these factors is the whole software stack, including compilers, middleware, and application tuning, which is probably the single biggest lever in actual deployment. A poorly tuned application, he said, simply wastes processor speed. Mr. Ganek said he saw tremendous opportunities to continue the progress in ways that complement the basic CMOS drivers.

Continuing Progress in Supercomputers

He then discussed growth in the speed of supercomputers, which are inherently parallel machines, and offered a forecast of an 84 percent compounded growth rate in teraflops (trillions of floating-point operations per second). This rate would be even higher if one began with the Deep Blue chess-playing machine; great speed is possible in special-purpose machines. Deep Blue’s technology is now used in a Department of Energy 12-teraflop machine for simulating nuclear events. IBM is now collaborating with the Japanese to build a machine that simulates molecular dynamics: a very narrow-focus machine that has the potential of yielding 100 teraflops within the next year or two. The Blue Gene protein-folding system IBM is building will have 1 million processors, each running at 1 gigahertz. That may not sound fast, but each chip will have 32 processors as well as integrated communications and memory functions: “a completely radically different architectural design that will get a tremendous amount of performance.”
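To make the forecast concrete, the short Python sketch below converts a compound growth rate into a doubling time and a multi-year growth factor. It assumes the 84 percent figure is an annual rate applied uniformly from year to year; the function names are illustrative and do not come from the talk.

```python
import math


def project(value: float, annual_rate: float, years: float) -> float:
    """Value after `years` of compound growth at `annual_rate` (0.84 means 84%)."""
    return value * (1.0 + annual_rate) ** years


def doubling_time(annual_rate: float) -> float:
    """Years needed to double at a constant compound annual growth rate."""
    return math.log(2.0) / math.log(1.0 + annual_rate)


RATE = 0.84  # forecast compounded growth rate, assumed here to be annual

print(f"doubling time: {doubling_time(RATE):.2f} years")
print(f"growth over 5 years: {project(1.0, RATE, 5):.1f}x")
```

At 84 percent a year, capability doubles roughly every 1.14 years and grows about 21-fold over five years.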

Mr. Ganek mentioned the inexact but interesting scale by which Ray Kurzweil has compared the capacity of computers with that of living organisms: the Deep Blue machine was probably at the capacity of a lizard, and IBM’s ASCI White machine is at about the level of a mouse. Humans would fall into the 20- to 30-petaflop range, a range that general-purpose supercomputers might be expected to reach by about 2015. If this scale has any validity, “that means we’re going to go through the equivalent of 400 million years of evolution over the next 15 years.”
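One way to read the Kurzweil comparison is to ask what constant growth rate would carry a 12-teraflop machine into the 20- to 30-petaflop range within 15 years. The sketch below assumes that the 12-teraflop Department of Energy machine mentioned earlier is the baseline and that the 15 years run to about 2015; both are simplifying assumptions for illustration, not figures the talk derived this way.

```python
def implied_annual_rate(start: float, end: float, years: float) -> float:
    """Constant compound annual growth rate that turns `start` into `end`."""
    return (end / start) ** (1.0 / years) - 1.0


START_TFLOPS = 12.0  # assumption: the 12-teraflop machine taken as the baseline
YEARS = 15           # "over the next 15 years"

for target_pflops in (20.0, 30.0):
    # Convert petaflops to teraflops so both ends use the same unit.
    rate = implied_annual_rate(START_TFLOPS, target_pflops * 1000.0, YEARS)
    print(f"{target_pflops:.0f} petaflops in {YEARS} years: {rate:.0%} per year")
```

Under these assumptions the required rate is roughly 64 to 68 percent a year, below the 84 percent supercomputer forecast quoted earlier.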

The Growth of Memory

Mr. Ganek reemphasized that performance involves more than processors. Storage is especially important, and for the past decade its price performance has improved more rapidly than that of semiconductors. The compound annual growth rate of storage capacity has increased from 60 percent at the beginning of the decade to 100 percent at present. Many desktop computers now have disks with storage densities of 20 gigabits per square inch. Mr. Ganek said that when he first learned the term “superparamagnetic limit,” he was taught that the limit was 8 gigabits per square inch, a figure that has already been exceeded by a factor of 2.5. Scientists now believe that a density of 100 gigabits per square inch is achievable with magnetic technology, and that alternative technologies may perform even better.
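The growth rates and densities above can be combined into a rough timeline. The sketch below estimates how long a constant growth rate would take to move areal density from 20 to 100 per square inch. It assumes the quoted capacity growth rates apply directly to areal density and treats the 100 figure as gigabits per square inch, the unit of the paragraph’s other densities; the names are illustrative.

```python
import math


def years_to_reach(current: float, target: float, annual_rate: float) -> float:
    """Years of constant compound growth needed to go from `current` to `target`."""
    return math.log(target / current) / math.log(1.0 + annual_rate)


CURRENT_GBIT_PER_SQIN = 20.0   # density quoted for many desktop disks
TARGET_GBIT_PER_SQIN = 100.0   # density believed achievable magnetically (gigabits assumed)

for rate in (0.60, 1.00):      # the early-decade and current growth rates quoted
    years = years_to_reach(CURRENT_GBIT_PER_SQIN, TARGET_GBIT_PER_SQIN, rate)
    print(f"at {rate:.0%} per year: {years:.1f} years")
```

Under these assumptions the target is about 3.4 years away at 60 percent a year and about 2.3 years away at 100 percent a year.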

He showed an illustration of a disk drive only slightly larger than a quarter that was introduced with 340 megabytes of capacity and is now available with a gigabyte; in three or four years, he said, it will hold 5 or 6 gigabytes. In general, cheap storage everywhere will continue to be available. He then showed how this translates into price performance, “which is why we’re going to be using so-called electromechanical devices like storage instead of just memory for a long time to come.” He predicted that in 2005 storage would cost only about a third of a cent per megabyte. Given the time required to erase data, it will be cheaper to keep them than to delete them.
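The 2005 price prediction can be translated into everyday capacities with simple arithmetic. The sketch assumes decimal units (1 gigabyte = 1,000 megabytes); the capacities printed are arbitrary examples, with 6 gigabytes echoing the small drive described above.

```python
CENTS_PER_MB_2005 = 1.0 / 3.0  # "about a third of a cent per megabyte"
MB_PER_GB = 1000               # decimal units, as disk capacities are usually quoted


def storage_cost_dollars(gigabytes: float) -> float:
    """Cost in dollars of `gigabytes` of storage at the predicted 2005 price."""
    return gigabytes * MB_PER_GB * CENTS_PER_MB_2005 / 100.0  # cents -> dollars


for gb in (1, 6, 100):
    print(f"{gb:>4} GB: ${storage_cost_dollars(gb):,.2f}")
```

At that price a gigabyte costs about $3.33 and 6 gigabytes about $20.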

A New Window for Fiber Optics

He commented on fiber optics and the concern many people have had about the maturity of erbium-doped fiber amplifiers. Recently, wavelength division multiplexing has opened a new window for growth. He warned, however, that progress is never a continuous curve and that backbone bandwidth is determined largely by the capacity of the fiber that is deployed. “It is a step function, and the backbone you have is only as good as what is laid.” He said that the good news, based on conversations with industry experts, is that backbone capacity is growing at a tremendous rate around the country and that in two or three years it may reach the neighborhood of 10 to 20 petabits. He added that it was not apparent that the quantity or quality of content was developing at nearly that rate. “Last mile” access technologies such as cable, xDSL, and cellular, which connect local networks to the backbone, limit the full use of backbone bandwidth.

The Pervasiveness of Computing Devices

In introducing the second trend, Mr. Ganek touched on a subject raised by Dr. Cerf: the growing pervasiveness of computing devices, which will interconnect computation everywhere and change the interaction of people and objects in the digital world. Pervasive devices will become the dominant means of information access. The personal computer will continue to drive a healthy business, but it will be dwarfed by the growth of data-capable cell phones, which will pass the installed personal computer base in 2002, and by the additional growth of personal digital assistants (PDAs), smart cards, smart cars, smart pagers, and appliances. He then showed a Watchpad being developed at IBM that has 500 megahertz of processing capability, a new high-resolution display technology, a phone directory, IrDA communication channels, a calendar, a pager, and other features. He called it “elegant, always on you, always on, easy to use, and hard to lose.” In four or five years, he said, people will have 5 or 6 gigahertz of capability on their wrists with a convenient and flexible user interface. The Watchpad’s touch-screen interface is superior to the small pointing stylus that is so easily lost.

Mr. Ganek predicted more mixed modes of interaction, such as voice recognition combined with displays that present results in more useful ways. Today some applications let a user ask for airline information by voice, for example, but it is difficult to remember everything one hears. If the spoken request can be answered with a screen display, the information can be kept for subsequent interactions with other systems. The application of such technology is not limited to traditional business functions and personal data acquisition. These devices might be thought of as clients that do the job of finding information.

Such devices can also become servers. He showed a device that helps patients with Parkinson’s disease. With sensors in the brain connected by wires under the skin to a pacemaker-like unit in the chest, the device senses an oncoming tremor and generates an impulse that stimulates the brain and averts the tremor. Pacemakers, of which there were “until recently, more in the world than Palm Pilots,” can also broadcast information about vital signs. This means that people can be walking Web servers that provide critical medical information. Such devices are not just retrievers of information; they are continuous sources of real-time information.

The Potential of Software Through ‘Intelligent Networks’

A third trend is a utility-like model for value delivery based on an intelligent infrastructure. Mr. Ganek compared it to the motor analogy that Dr. Cerf introduced. Looking back to 1990 and earlier, people assumed that clients would access an enterprise through an application server on a network, one client at a time. Now, in addition to individual enterprises there are new providers that offer a range of services. The clients are network clients of an intelligent network and the enterprise they are associated with is only one of many sources of information. The network becomes a repository of intelligence across a broad spectrum of applications, such as caching, security, multicasting, network management, and transcoding, so that the application does not need to know what the device looks like.

This direction relates to the earlier discussion of software development and productivity. The productivity of individuals writing code has improved, but not at the exponential rates seen in lithography, for example. Delivering software as a network utility is the next step beyond the packages mentioned earlier, because software for electronic utilities benefits from an economy of scale. The software is provided through the network, and people accessing those utilities need to make fewer in-house changes. Small businesses might need only a browser to access the software. For larger concerns the system is more complex, but the service is still outsourced and the software is developed with a larger economy of scale.

The Formation of E-Marketplaces and Virtual Enterprises

The fourth trend also concerns software for business. He said that when he started writing software, he thought of subroutines as performing such low-level functions as computing a sine or sorting. Today, software is written from much higher-level components that are becoming synonymous with business objects. Of all the software being written, the most important relates to enterprise processes, such as business-to-consumer and business-to-business processes. Moreover, electronic commerce building blocks are emerging to support these enterprise processes.

E-marketplaces are being formed that may mediate up to 50 percent of business-to-business commerce by 2004. The marketplaces will be able to bring many buyers and many sellers together and add such functions as dynamic pricing, frictionless markets, and value-added services. It is too early to know exactly how this will happen, Mr. Ganek said, but the e-marketplace is already a tremendous force in determining how people deploy technology for business, which has a direct bearing on the economy. People have moved from using the Internet as a shopping catalog to using it as a many-to-one procurement tool. Now they are matching many suppliers with many buyers and getting commerce done much less expensively. Business transactions that once took days, weeks, or months will take hours or minutes.

The final step of development will be virtual enterprises, which will play an important role in the evolution of collaborative commerce. There are many questions about how virtual enterprises will work: will they form their relationships dynamically using discovery technology to find out who else is out there? What can they deliver? How can a business be constructed electronically by connecting these different pieces? Industry consortia are working together to find the answers.

The Electronic Business of the Future

The fifth trend is that the electronic business of the future is going to be dynamic, adaptive, continually optimized, and dependent on powerful business analytics and knowledge management. Those who started using information technology in businesses years ago saw it largely as a tool for automation: doing manual tasks faster and cheaper. Information technology is now the arena where companies compete with one another. It is no longer simply an added benefit; it is a frontier for creating new strategy and generating revenue.

He offered the high-end example of insurance risk management. How companies in this business manage their risks and invest is critical to their success. The more risk factors a company can analyze at one time, the more valuable the analysis. Risk computations turn out to be very complex, however. A typical supercomputer in 1995, running at perhaps 1 gigaflop, would take about 100 hours to solve a problem involving three risk factors. This solution would be helpful but too slow for the pace of business. In 1999 the same company might have a reasonably affordable 25-gigaflop computer that could do the same analysis in two to three hours. This still does not include all the desired parameters, but the company could look at the problem several times a day and start to make judicious choices that contribute to a competitive edge.

In 2002, when the same company might expect to have a teraflop machine, it could run the same problem in minutes. This speed could begin to change how the company functions and what actions it takes in the stock market in real time. Such computing ability will move into virtually every business. As an example, he cited IBM’s ability to do supply-chain analyses that assess trade-offs between inventory and time to market. Such analyses save the company an estimated hundreds of millions of dollars a year in its personal computer business. Because it is so hard to make a profit in personal computers, this computing ability is probably what allows IBM to stay in that business.
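A quick consistency check on the risk-analysis example is to assume that runtime scales inversely with machine speed, starting from the 1995 figure of about 100 hours at 1 gigaflop. This is an idealization (it ignores algorithmic, memory, and efficiency differences between machines), and the names below are illustrative.

```python
BASELINE_GFLOPS = 1.0   # 1995 supercomputer, "perhaps 1 gigaflop"
BASELINE_HOURS = 100.0  # time for the three-risk-factor problem


def estimated_hours(gflops: float) -> float:
    """Runtime assuming it scales inversely with machine speed (an idealization)."""
    return BASELINE_HOURS * BASELINE_GFLOPS / gflops


for label, gflops in (("1999, 25 gigaflops", 25.0), ("2002, 1 teraflop", 1000.0)):
    hours = estimated_hours(gflops)
    print(f"{label}: {hours:.1f} hours ({hours * 60:.0f} minutes)")
```

Pure inverse scaling gives about 4 hours at 25 gigaflops and about 6 minutes at a teraflop. The latter matches the “minutes” quoted for 2002, while the reported two to three hours for 1999 is faster than this idealization, so the quoted figures should not be read as strict linear scaling.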

The Promise of Deep Computing

He closed with the example of what more efficient computing is beginning to do for the pharmaceutical industry. The cost of producing new chemical entities has more than quadrupled since 1990, while the number of new entities has remained essentially flat. The number of new drugs being produced by deep computing, however, is beginning to rise, and this number is estimated to triple by the year 2010. These deep computing processes include rational drug design, genomic data usage, personalized medicine, protein engineering, and molecular assemblies. This projection is speculative, he emphasized, but it shows the extent to which a major industry is counting on radically new uses of information technology.

DISCUSSION

The Wish for Self-Learning Machines

Dr. Myers passed along a wish list from Dan Goldin, administrator of the National Aeronautics and Space Administration, who hopes to develop spacecraft that can cope with unexpected conditions by making autonomous decisions. Onboard decision making would eliminate the long delay involved in communicating with Earth for instructions. True decision making, however, would require computers that are, to some extent, self-actuating, self-learning, and self-programming. This, in turn, may imply a new form of computing architecture.

Mr. Ganek responded that a number of challenges lie ahead in designing such thinking machines. He mentioned the exercise in computer science known