7

Advancements in Conceptualizing and Analyzing Cultural Effects in Cross-National Studies of Educational Achievement¹

Gerald K. LeTendre*

[T]hose who have conducted the [International Association for the Evaluation of Educational Achievement] IEA studies have been well aware that educational systems, like other aspects of a culture, have characteristics that are unique to a given culture. In order to understand why students in a particular system of education perform as they do, one must often reach deep into the cultural and educational history of that system of education (Purves, 1987, p. 104).

The problem of “culture” has engaged researchers of cross-national trends in education and schooling for decades and continues to invigorate a lively theoretical and methodological debate today. Scholars interested in comparative studies of education can still find themselves in a quandary, as there is, to date, no single definition of culture or method of cultural analysis that all researchers agree on. Researchers in the field still debate questions such as “How can an understanding of differences in cultures help us to better understand international differences in student achievement?” “How can an analysis of culture help us to understand what are and are not possible lessons to be learned for the U.S. in terms of improving student achievement?” or “When is culture an important factor and when is it not?”

Nonetheless, there have been significant advances in how culture has been conceptualized in cross-national studies of educational achievement.

Both theoretically and methodologically, the study of educational achievement has been advanced by borrowing from cross-national work in the subfields of sociology of education, anthropology of education, cultural psychology, and qualitative studies in education. The traditional “national case study” model, commonly used in the earliest days of the IEA, in which idealized models of each nation’s education system were developed and analyzed, has given way to studies that take into account national and regional diversity, as well as studies that try to account for cross-national factors that can affect a range of nations. At both the micro- and macrosocial levels, the analysis of cultural effects has been advanced by better theory, research designs, and data sets, and by powerful software capable of analyzing very large data sets. The development of iterative, multimethod research designs has allowed researchers to overcome major epistemological problems that previously separated qualitative and quantitative researchers, so that cultural analyses can inform cross-national studies at all stages of the research.

In this chapter, I will review the advances that have been made in conceptualizing and studying culture in cross-national or comparative studies of schools and educational systems. I will show how models of cultural dynamics can be integrated with quantitative data in studies of educational achievement and cultural effects. Many researchers in the subfields of anthropology of education, sociology of education, and comparative education already routinely use mixed-method designs to account for cultural effects (see Caracelli & Green, 1993; Tashakkori & Teddlie, 1998). I will summarize the most important methodological advances in modeling cultural effects on student achievement in two large recent IEA studies, and propose new models of multimethod research design that can integrate cultural analyses and quantitative analyses in the same study. Finally, I will discuss the problems inherent in trying to create large, qualitative, public databases: the kind of databases that would further the integration of cultural analysis in studying many aspects of educational achievement.

HOW TO DEFINE “CULTURE”?

Virtually every aspect of education can be described as “culture,” depending on how the writer uses the term. In the quote cited at the beginning of this chapter, Purves treats the entire educational system as part of the national culture. Defining just what culture is has been an elusive task (see Hoffman, 1999, for an essay on the various definitions of culture used in comparative education studies). Under the broadest definition, even the most basic patterns of instructional practice are seen as the result of culture. Taking this stance, the researcher regards basic concepts such as “academic achievement” as socially constructed or “cultural” phenomena (see Goldman & McDermott, 1987; Grant & Sleeter, 1996). From this perspective, it is pointless to argue about what may or may not be a cultural effect, because culture permeates and affects all social interactions.

The idea that culture or cultural effects can be reduced to a set of variables has, as Hoffman (1999, pp. 472-474) notes, led to a dead end in comparative education. This kind of epistemological impasse has long kept qualitative and quantitative researchers from uniting in a common study of the cross-national causes and correlates of educational achievement. Invested in one mode of investigation, both quantitative and qualitative researchers find themselves bogged down in fruitless epistemological debates and miss one way to bridge qualitative and quantitative approaches to research: incorporating both methods in a larger research project designed to identify and test patterns of causality. While quantitative analysts tend to assume that a universal causal model with discrete variables can be readily identified and implemented in cross-national research, qualitative analysts tend to assume that the first problem to overcome in a cross-national study is how to model causation.

Early comparativists frequently used textual descriptions or statistical summaries of nations or national systems of education as the basis for a comparative methodology (Bereday, 1964). This approach was limited because it assumed a pervasive, ill-defined cultural effect. In searching for what Jones termed “a scientific methodology” (Jones, 1971, p. 83), comparativists employed models in which nations as a whole were treated as culturally homogeneous units, that is, the “national case study” (see Passow, 1984).² As a result, the field of comparative and international education came to be dominated largely by “area experts” who studied the educational systems of specific nations or regions. Content analyses of “comparative” studies of education published in major academic journals reveal a predominant focus on a single country (Ramirez & Meyer, 1981) and a lack of comparative research design (see also Baker, 1994).

Rust, Soumare, Pescador, and Shibuya (1999, p. 107) aptly demonstrate that over the past 20 years few studies appearing in the major comparative journals cite the major theorists of the field of comparative education, and that “Very little attention has been given to data collection and data analysis strategies.” Outside the comparative education journals, however, there has been significant debate about how to improve data collection and data analysis strategies. The basic strategy of combining cross-national achievement and survey data, widely employed in IEA studies, provoked lively debates, particularly around the Second International Mathematics Study (Baker, 1993; Bradburn, Haertel, Schwille, & Torney-Purta, 1991; Rotberg, 1990; Westbury, 1992, 1993). Yet these debates did not address the basic concept of culture.³ The authors generally accepted an implicit model of culture as a historically static set of values (or language), specific to and homogeneous within a particular nation, that could be readily modeled using discrete variables and linear equations.

The problem with attempting to measure culture as a set of discrete variables that function in the same way across nations can be illustrated by attempts to understand what constitutes effective teaching within any given nation. The creation of ever more detailed national-level data sets has reached a dead end in IEA studies. For example, in 1984, Passow (p. 477) identified “quality of teachers” as one possible “teacher variable” to consider. In the IEA classroom study (Anderson, Ryan, & Shapiro, 1989), “quality of teachers” was measured in many different ways, with sets of variables addressing specific areas (including knowledge of the field, instructional practice, and belief systems), any number of which could be construed as “cultural.” In the Third International Mathematics and Science Study (TIMSS), a host of variables measured a wide range of teacher behaviors, beliefs, and backgrounds. Table 7-1 provides a partial list of the variables related to teacher quality in the TIMSS Population 2 teacher questionnaire that many qualitative analysts would consider measures of cultural effects.⁴

Faced with such an alarming number of variables, researchers have turned to qualitative studies to help orient research and guide analysis and interpretation. Cross-cultural psychologists such as Stevenson and Stigler tried to understand how teacher quality affects student achievement by theorizing that specific beliefs about teaching and learning were “cultural” and drove more effective instructional practice (see Stevenson & Stigler, 1992; Stigler & Hiebert, 1998). Both Stevenson and Stigler argue that Japanese teachers’ emphasis on persistence rather than innate ability led them to believe they could raise the achievement of all students, which in turn motivated them to work harder to ensure that every student made academic progress.

The idea that what makes a “good” teacher (or a good classroom) depends on the culturally influenced expectations of students, parents, and the teachers themselves has been expanded by the work of anthropologists and educational researchers (see, e.g., Anderson-Levitt, 1987, 2001; Crossley & Vulliamy, 1997; Daniels & Garner, 1999; Flinn, 1992; LeTendre, 2000; Shimahara, 1998). Scholars in subfields such as anthropology of education or sociology of education who engage in cross-national work now tend to employ a model of culture as a dynamic system. Rather than create ever more numerous sets of variables, these researchers emphasize respondents’ perceptions of the social world, individual-level interactions, variation in cultural norms within nations, and sources of conflict (e.g., historical, regional, linguistic, or racial) around key concepts, roles, and institutions.

TABLE 7-1 Teacher Variables in TIMSS, Population 2

| Time on Task in Classroom | Time Outside of Classroom | Implementation |
|---|---|---|
| Time teaching textbook | Preparing/grading exams | Ability tracked or detracked |
| Time spent on topics (20 main topics) | Planning lessons | Use of calculators |
| Time on introduction of topic | Updating student records | Use of review |
| Time on review | Reading/grading student work | Use of quizzes |
| Frequency of computer use | Professional study | Small-group activities |
| Frequency of use of graphs or charts | Meetings with other teachers/students/parents | Paper-pencil exercises |
| Time on small-group activities | | Hands-on lab |
| Time on topic development | | Assign homework |
| Frequency of teacher/student interaction | | Oral recitation/drill |
| | | Ask students to explain reasoning |
| | | Type of homework assigned |

SOURCE: TIMSS Population 2 Mathematics/Science Questionnaire.

As early as 1976, IEA scholars called for increased attention to alternative ways to model cultural effects:

The most interesting, and perhaps the most useful, approach to cross-national research proceeds not in terms of existing country-wide units, but on the basis of sub-national units. This means that it may be more interesting (for comparative work) to inquire about the correlates of achievement with, say, metropolitan areas across several countries, or among the children of the poor, or among girls, each group taken together across nations, than it is to regard individual countries as the logical, or only, units of analysis. (Passow, Noah, Eckstein, & Mallea, 1976, p. 293)

Many scholars now recognize that the static, national case study approach ultimately masks more important findings regarding the range of cultural variation within national subunits; conflicting educational expectations held by religious, linguistic, or ethnic groups; and the degree to which cultural change affects the nation in question. For example, Shimahara (1998, pp. 3-4) explicitly positions his recent volume as a contribution to cross-national studies of education because it brings a contextual (i.e., cultural dynamic) perspective to bear on classroom management:

Yet the majority of international comparisons tend to be sketchy and cursory, paying scant attention to the national and cultural context of schooling. Such a problem is glaring in a broad array of writings.... Even The Handbook of Research on Teaching, presumably the most authoritative project on teaching of the American Educational Research Association, suffers from the same shortcomings. Authors refer to teaching practices in other countries without offering contextual interpretations.

In this and previous works over the last ten years, Shimahara has sought to bring anthropological theory and qualitative methods to cross-national studies of education and achievement. Culture, whether as a factor in explaining the schooling process or, more basically, as a way of identifying the boundaries of the school as an institution, has come to play an increasingly important role in IEA studies.

ADVANCES IN MODELING CULTURE

Researchers engaged in cross-national studies of educational systems have begun to use models in which culture is seen as a pervasive set of values, habits, and ideals that permeate every social institution and, in fact, construct the boundaries of acceptable or even imaginable behavior (see Douglas, 1986, for a theoretical synthesis). Unlike earlier models, however, these do not assume that culture is historically static or homogeneous within national boundaries. Researchers who use a cultural dynamic approach in comparative studies of educational achievement, for example, first document the range and variation in respondents’ definitions and knowledge of key concepts (e.g., achievement), social roles (e.g., teacher), and institutions (e.g., school). They then analyze patterns of consensus or conflict around these concepts, roles, and institutions, while comparing recorded belief statements with observed behaviors. The same process is carried out in a second country, and the patterns of consensus or conflict within each nation are then compared (see Anderson-Levitt, 2001; LeTendre, 2000; Shimahara & Sakai, 1995; Spindler, 1987).

Two recent major IEA studies, the Civic Education Study and TIMSS, both attempted to incorporate more dynamic models of culture and made significant methodological and theoretical advances over previous studies by including extensive qualitative components. The decision to combine qualitative and quantitative data collection in different research components of the same study reflects an understanding that a combined qualitative/quantitative approach can maximize understanding of educational processes cross-nationally. In the TIMSS-Repeat (TIMSS-R), an expanded video component has been retained with the explicit intent of allowing holistic analysis that incorporates a more dynamic model of culture. The intended coding procedures for the TIMSS-R video data will include both inductive and deductive components. The researchers stated that they will:

Develop a holistic interpretive framework for each country to which specific teaching codes can be linked. We refer to this as “conserving” for each country the context or meaning of a given analytic code, for example the meaning of the use of chalkboards and overhead projectors. (TIMSS-R Video Study Web site at www.lessonlab.com/timss-r/videocoding.htm)

These advances in integrating qualitative and quantitative methods in cross-national studies of academic achievement parallel experimentation by scholars in many fields. In demography, family studies, and other fields, whole journal issues have recently been devoted to qualitative methods. For example, Asay and Hennon (1999) suggest innovations in interviewing for international family research derived from qualitative educational studies. An entire issue of The Professional Geographer (Vol. 51, No. 2) is dedicated to qualitative methods, including “Use of Storytelling” and “The Utility of In-Depth Interviews.” In short, several fields are converging on a combined analytic strategy that mixes quantitative and qualitative data in order to answer distinct but related questions about a given phenomenon.

HOW CULTURAL ANALYSES IMPROVE OUR UNDERSTANDING OF ACHIEVEMENT

How can an understanding of differences in national cultural dynamics help us to better understand international differences in student achievement? How can an analysis of culture help us to understand which lessons can and cannot be drawn for the United States in terms of improving student achievement? The answer lies in the richness of detail, the “thick description,” that high-quality qualitative studies provide. Such data allow researchers to address three major problems in current social science research: how to capture daily life, how to improve interpretation, and how to model dynamic systems. More accurate data on daily life form the basis for more accurate comparisons of national cultural dynamics and improve our knowledge of the implications and problems that must be faced if programs or reforms are to be transferred from one nation to another.

Capturing Daily Life

Researchers in a variety of fields are drawn to qualitative methods as a way of more accurately documenting and portraying the social experiences of groups of interest. As Asay and Hennon (1999, p. 409) write: “Qualitative methodologies are often chosen for family research because of their ability in gaining ‘real life’ and more contextualized understanding of the phenomenon of interest.” Epistemologically, these researchers believe that an analysis of system dynamics will produce results distinct from an analysis of the causal relationships between parts of the system. Stigler and Hiebert (1999), for instance, noted that in studying videotapes of teachers in three nations, it was more important to see how each lesson formed a whole than to count the frequencies of coded categories. Simply put, qualitative analyses provide insight into how the cultural dynamic is working at the time of the study.

On another level, although surveys, structured interviews, or observation instruments can capture a myriad of codeable behaviors or characteristics (such as the desire to go to college), they largely fail to capture the assumed meanings that people bring to every social interaction. For example, in my own work, I found that all the adolescents I studied said they wanted to go on to college (LeTendre, 2000). Yet what they meant by college differed dramatically. One young U.S. adolescent boy told me: “I’d like to go to college, like an electrician’s program like my uncle went to, but if not that I’d like to be a lawyer.” This student (and many others I interviewed) saw all forms of post-high school training as “college.” Survey questions about future academic aspirations generally fail to capture the complexity, and confusion, that characterize adolescent educational decision making (see LeTendre, 1996; Okano, 1995).

The fact that an adolescent sees essentially no difference between the training needed to become a journeyman electrician and that needed to become a lawyer suggests that his or her educational trajectory will be adversely affected by a lack of knowledge of the basic educational opportunity structure (see also Gambetta, 1987; Gamoran & Mare, 1989; Hallinan, 1992; Kilgore, 1991; Lareau, 1989). Similarly, Stigler and Hiebert (1999) note that in studying teacher practice in classrooms, coding and analyzing discrete variables fails to accurately model the effect of specific actions in context. Such contextualized knowledge opens new lines of inquiry for the researcher and makes it possible to identify which features of the system are likely to be linked with areas of interest and which are not. That is, holistic analysis of the dynamic system can highlight pertinent cultural features or subsystems that can be targeted for more intensive study. Qualitative data allow researchers to document how much behaviors vary, how much disagreement arises, and what kinds of behaviors people argue about. This kind of highly detailed, descriptive data allows for a more accurate interpretation of the entire body of data we have about a given country.

Improving Interpretation

By analyzing culture as a dynamic system, researchers can interpret the results of qualitative or combined qualitative/quantitative studies more accurately, bringing them closer to how respondents themselves see things. This was made dramatically clear to me in my own work. I found that certain academically competent Japanese students were highly anxious about the upcoming high school examination, a fact that would not be predicted from either a conflict or a sponsorship model of educational selection (LeTendre, 1996). A reanalysis of field notes and interview transcripts, however, revealed the role that strong emotions played in the decision-making process and suggested a new theoretical interpretation: Teachers perceive students with high test scores as needing less counseling about high school placement, but this lack of counseling makes those students feel that their choices are less “safe” or “good” than those of lower-achieving students who receive more counseling. Without such contact with teachers, high-achieving Japanese students could not reassure themselves emotionally that their choices were sound, and this created anxiety.

Too often, highly statistical analyses of educational achievement fail to record accurately how teachers, students, parents, and administrators interpret the world around them, which prevents accurate causal modeling of the social system in question. For example, one could theorize that a culture of competition in Japan (Dore, 1976) drives high-stakes testing and the large cram-school system there (see also Rohlen, 1980; Zeng, 1996). Ethnographic studies of U.S. schooling (Eckert, 1989; Goldman & McDermott, 1987; Grant & Sleeter, 1996), however, also document a culture of competition, yet there has been little high-stakes testing or cram-school development in the United States. The expression of academic competition is shaped by patterns of relationships among key concepts, roles, and institutions, and these patterns differ between the United States and Japan.

In the United States, competition pervades all aspects of student life in schools, particularly social life, and adolescents spend considerable energy vying for social popularity or athletic supremacy. U.S. schools have distinct and separate social status hierarchies that divide the arenas of competition, such as “jocks” versus “nerds” (see Eckert, 1989). In Japan, social status hierarchies are less differentiated, and all of them are affected by academic performance (Fukuzawa & LeTendre, 2001). Even in Japanese working-class high schools, strong countercultural movements are comparatively rare (compare Kinney, 1994; Okano, 1993; Trelfa, 1994, with Grant & Sleeter, 1996; Jenkins, 1983; or MacLeod, 1987). Without understanding the cultural context of competition (the ways in which adolescents and teachers make sense of academic competition and how it affects their lives), we could not accurately model the role of academic competition in either the United States or Japan, much less conduct a systematic comparison of the effect of competition on student achievement and socialization. Nonetheless, ethnographic studies by themselves provide limited data for national policy decisions. National survey and testing data are needed if researchers wish to formulate and test hypotheses at the national level.

A combination of qualitative and quantitative data has gained wide support as a way to increase accuracy of interpretation. In summarizing the future strategy of IEA basic school subjects, Plomp (1990, p. 9) writes, “[I]ncreased attention will be paid to ways of combining quantitative and ‘narrative’ methodologies in order to provide potential for rich interpretations for the statistical data, and in this way providing decision makers which [sic] more comprehensive information.” Plomp (then IEA chairman), like researchers in other fields, believed that some form of qualitative data was needed to accurately interpret survey results.

Modeling Dynamic Systems

Attempts to model cultural effects by using an increasingly large array of variables in cross-national studies failed because the potential number of variables that can be considered cultural is so large. Identifying what a cultural variable is (as well as measuring its impact) tends to devolve into arguments about how to define culture. This strategy also has another limitation.

Defining cultural variables assumes that any given variable will have the same effect across time and place, that is, that the cultural dynamic will function in the same way over time and across regions. The prevailing understanding, however, is that cultures are systems in flux that cannot be studied in some state of equilibrium or against some initial steady state. There are no steady states or states of equilibrium for nations. The cultural dynamic is essentially a “moving target” that changes constantly and has no readily identifiable trajectory. The grand cultural theories of early anthropology (positing progressive evolution from sociocultural states of savagery through barbarism to civilization) have been criticized for their inherent racial and/or cultural prejudice and abandoned. Scholars of national educational systems and cross-national education studies must therefore try to understand the workings of a system with limited knowledge of what states it has passed through (i.e., historical context) and no knowledge of what states it is likely to go through (developmental trajectory).

Modeling culture as a dynamic system offers a way to understand the overall patterns of interaction that occur in the culture at the time it is observed. This approach shifts the analytic focus from identifying discrete, quantifiable cultural variables (and their statistical relation to other variables) to recording and documenting participants’ understandings and social interactions. It also implies that individuals are trying to make sense of their world, and that there will be significant individual variation in which kinds of things or people shape individual perceptions and behavior.

Modeling culture as a dynamic system generates sets of questions about the overall functioning of the system. In discussing the limitations of observational instruments for capturing classroom environments, Anderson and colleagues (1989, p. 299) noted that “Differences among studies appear to exist in the categories of questions that are formed (whether a priori or post hoc). Furthermore, and both as expected and as appropriate, the categories formed seemed to depend on the purpose or purposes of the study.” An analysis of cultural effects in which culture is modeled as a dynamic system is designed to ask different questions from studies that try to identify causal relationships (defined a priori) between specific variables in the system.

Integrating Qualitative Analyses

Some combination of qualitative and quantitative data is necessary if we are to understand, model, and compare national educational systems. The problem remains how best to integrate an analysis of cultural effects or qualitative studies with quantitative studies in order to improve cross-national studies of achievement. Because the basic research designs and rationales of qualitative and quantitative studies “ask very different questions of the data,” an overarching strategy of combined analysis seems most appropriate (see Tashakkori & Teddlie, 1998). Qualitative studies on their own offer ways to capture daily life, improve interpretation, and model dynamic systems, but they suffer from significant limitations (see Goldthorpe, 1997, pp. 3-17, for a critique of such methods in comparative social science research).

In the next section, I compare the relative strengths and weaknesses of two large cross-national studies of achievement, TIMSS and the IEA Civic Education Study. Both represented advances in modeling culture within large cross-national studies, yet they took very different approaches to integrating qualitative and quantitative components. This point is crucial because, as I will show in the case of TIMSS, simply conducting quantitative and qualitative studies simultaneously does not provide an overarching framework for analysis. It is this framework that allows the results of the different research components to be used in ways that increase the overall analytic strength of the study. Thus, although the Civic Education Study gathered far less culturally nuanced data (from the standpoint of a qualitative researcher), its data were better integrated into the overall study than were those of TIMSS.

An analysis of these two studies shows that (1) qualitative studies intended to improve the conceptualization and analysis of cultural effects must be open ended or flexible enough to capture essential national variation and to show how key concepts, roles, and institutions (e.g., civics or academic achievement) are perceived by different groups of actors within the nation; and (2) larger studies must have an iterative, componential design in order to integrate the analysis of qualitative and quantitative data successfully.

COMPARISON OF TIMSS AND THE IEA CIVIC EDUCATION STUDY

All IEA studies follow a rigorous research design process that includes extensive planning and review phases. TIMSS and the IEA Civic Education Study are of particular interest in that the designers explicitly tried to incorporate qualitative components into the research design in order to investigate cultural effects. In many cross-national studies, national differences or “culture” were studied using an exploratory/confirmatory combination of quantitative components. Both the IEA Computer Study and the Organization for Economic Cooperation and Development (OECD) International Adult Literacy Survey employed this methodology. TIMSS, in particular, represented a major methodological advancement over previous IEA studies by generating two large qualitative databases—the results of the video and case study components.

Both TIMSS (see Schmidt, McKnight, & Raizen, 1997; Stevenson & Nerison-Low, 2000; Stigler & Hiebert, 1998) and the IEA Civic Education Study (Torney-Purta, Schwille, & Amadeo, 1999; Torney-Purta, Lehmann, Oswald, & Schulz, 2001) generated large amounts of qualitative data in the form of national case studies, as well as massive amounts of quantitative data from surveys and assessment instruments. Both studies provide insights into how we can more effectively integrate an analysis of culture into cross-national studies of achievement. Important differences between the two studies (in the number of separate study components, the sequencing of the components, and the overall strategy for analyzing them) make these two studies an ideal methodological case study. In this section, I will compare how cultural effects were modeled and studied, contrast the research designs in terms of component models, and identify the research design strengths that improve the integration of qualitative and quantitative data.

In discussing TIMSS and the Civic Education Study, I limit my discussion to the qualitative components and their role in providing an analysis of cultural effects. Because I am most familiar with the TIMSS case study component, I devote more discussion to it than to the video study. Those interested in learning more about the case studies can consult the following: Office of Educational Research and Improvement (1998, 1999a, 1999b) and Stevenson and Nerison-Low (2000). Those interested in the video studies can consult Stigler and Hiebert (1998, 1999). In addition, links to major TIMSS documents can be found at the National Center for Education Statistics Web site.

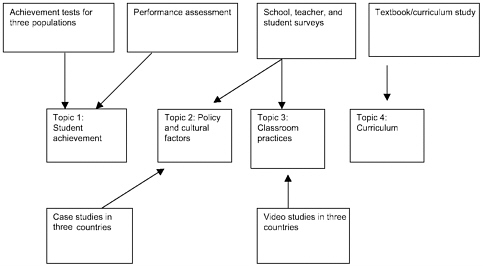

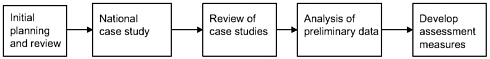

The TIMSS Case Study: Collision of Two Methodologies

The TIMSS study components (achievement, curriculum, survey, case study, and video) were all carried out simultaneously, but the emphasis given to each component was different. The most emphasis (in terms of data collection and analysis) was placed on the achievement tests, the surveys, and the curriculum analysis (see Figure 7-1). Although such a diagram necessarily simplifies the complexity of the TIMSS research design, it highlights important features of the overall design (compare with Figure 7-2). The sequential process of data collection and analysis in the IEA Civic Education Study allowed the qualitative data to have a far greater impact on the overall analysis than in TIMSS.

The overall analytic strategy for TIMSS was to identify models of causal relationships between discrete variables. Contrast this approach with the mixed-methods designs described by Brewer and Hunter (1989), Morgan (1998), or Tashakkori and Teddlie (1998). TIMSS also differed from other IEA studies in that it examined multiple topics (e.g., student achievement, instructional practices, K-12 policy issues), whereas many IEA studies typically focus on a single topic, such as computer use. Each component of TIMSS (e.g., the case study, the video study) was tailored to study a particular subject in a specialized way: questionnaires addressed instructional practice and opportunity to learn (OTL), while interviews and observations addressed overall perceptions of the educational system. This specialization of each component led to problems in coordinated analysis that I address in more detail below.

FIGURE 7-1 Schematic of TIMSS research design. See also NRC (1999, p. 3).

In addition, each component was much larger than in most studies that employ mixed methods. Within TIMSS, each component produced large amounts of data, more than many single studies conducted by the average educational researcher. More than 40 countries participated in TIMSS, with at least three nations participating in each component. Several of the components, such as the achievement tests, surveys, curriculum study, and case studies, measured multiple age ranges, a fact that further complicated the analysis needed to adequately assess the results of any one component.

FIGURE 7-2 Schematic of IEA Civic Education Study research design (mixed

This simple fact of "data overload" meant that the potential insight to be gained by comparing the databases has not yet been realized, as researchers have concentrated their energies on only one or two components. Data overload has also been a problem in using TIMSS to inform educational policy: some policy initiatives were trapped in debates informed by premature or incomplete analysis (LeTendre, Baker, Akiba, & Wiseman, 2001). As BICSE observed (National Research Council, 1999, p. 2): "However, the very richness and complexity of the study has been a source of dissonance between the research and policy communities."

The case study component of TIMSS was an ambitious attempt to integrate what were then state-of-the-art qualitative methods into a cross-national study of educational achievement. Methodologically, the case study component was influenced by the Whitings' groundbreaking six-culture study (Whiting & Whiting, 1975),5 and its rationale and methods were developed in a context distinct from that of the typical IEA study. The "Study Plan for the Case Studies" (Stevenson & Lee, n.d.) makes passing reference to the "Second IEA International Studies" but does not cite or quote extensively from either IEA studies or major figures publishing qualitative work in the field of comparative and international education (e.g., Altbach & Kelly, 1986). In an undated draft of the "Justification for the Case Studies" (p. 2), the authors note: "To our knowledge, there have been no detailed qualitative studies that compare everyday practices at home and at school that might contribute to differences in students' level of academic achievement in science and mathematics. This is the major goal of the case studies."

In retrospect, the implementation of the case study component was weakened by the lack of a preliminary synthesis of methodological issues relating to cross-national or cross-cultural studies of schooling. In both the anthropological literature and the comparative/international literature, there were numerous studies that dealt precisely with how "everyday practices at home and at school" influence student achievement, although these studies did not have the cross-national scope of TIMSS.6 This lack of a multidisciplinary integration of qualitative methods meant that the case study team itself had to work through methodological issues during the study (e.g., how to deal with analysis of data in the field) rather than focus on integrating the case study data with other TIMSS components.

A second design problem was the placement of the case studies within the overall TIMSS research design and timeline. Although attempts were made to coordinate the efforts of the TIMSS survey team and the case study team, by the time the two teams met in late August 1994, the questions and procedures for both the survey component of the main study and the case study had already been set. As a result, the quantitative (achievement tests, main study surveys, curriculum analysis) and qualitative (case studies, video studies) components were carried out largely in isolation from each other.

The original intent of the overall TIMSS design was integrative. The initial focus on explaining differences in test scores, classroom practices, and teaching standards suggested that the case studies were to follow a methodological approach similar to that used in qualitative studies of education and that they would then be analyzed and contrasted with the data from the video study and from the main survey and achievement study. This kind of integrated analysis never occurred because of time constraints, the volume of data collected, and the lack of a comprehensive analysis plan that could bridge the epistemological differences between the study components.

The main problem was time. In traditional ethnographic fieldwork, data analysis begins as soon as the fieldworker enters the field. All "data" collected (e.g., interview transcripts, observations, records of informal conversations) are understood to be affected by the researcher's biases, status in the field, and the contextual situation in which the data were generated. The analysis of data begins with outlining how researchers make meaning out of what they were told or saw, how valid these interpretations are compared to subsequent interpretations based on more experience, and how these interpretations lead to more finely developed questions about the field (see Goetz & LeCompte, 1984). Such analysis typically leads researchers to highlight conflicting points of view, to call attention to the hidden or implicit assumptions research participants are making, and to infer, for the naïve reader, the meanings participants intended when the researchers write up the study (see Wolcott, 1994). Inference and inductive thinking are incorporated as part of the analytic strategy in most branches of qualitative inquiry (see Glaser & Strauss, 1967; Hammersley & Atkinson, 1983; Lincoln & Guba, 1985).

To use such analysis to inform quantitative research designs requires early integration. The kind of analysis generated in the TIMSS case studies is most useful in the early stages of survey or instrument design, when important questions about meaning in specific linguistic or cultural contexts are being assessed. As Ercikan (1998, p. 545) noted, “In the case of international assessments, multidimensional abilities can differ from one country to another due to cultural, language, and curriculum differences. Cultural difference can influence intrinsic interest and familiarity of the content of items.” Because of the TIMSS timeline, the case study data were unavailable to the designers of the survey and test instruments, leaving researchers and reviewers to try to integrate the results of the components as “finished products.”

The IEA Civic Education Study: Mixed Methods

The IEA Civic Education Study, like TIMSS, used both quantitative and qualitative methods. However, the research design of the Civic Education Study incorporated quantitative and qualitative data in a mixed-methods approach in which experts moved back and forth between the two sets of data and the findings from the qualitative data could be used to alter the collection of quantitative data. The organizing committee for the Civic Education Study began by calling on the expertise of national experts and the IEA General Assembly to support a study of civic education. Although the study, like previous IEA studies, used an implicit model of the nation as the "case" or unit of analysis, the committee members evinced a belief that "civics" as a topic was likely to be strongly linked with national cultures and values in a way that the topics of previous IEA studies were not.

The committee proposed a series of national case studies, but ones that emphasized a cultural conflict model or a social construction of reality perspective. The most common case study format in IEA studies is the summative case study (see also Stake, 1995; Yin, 1994). Summative case studies are designed to provide the qualitative equivalent of a descriptive statistics report in a statistical study, which is apparently what some reviewers expected (or hoped) the TIMSS case studies would provide. Whereas the TIMSS case studies were clearly "ethnographic" studies (see Merriam, 1988), the Civic Education case studies were mixtures of historical and ethnographic case studies. Both studies, then, have features that place them in the ethnographic tradition, where the implicit model of culture is a dynamic or conflict model. In both studies, the case study components provided a "thick description" of culture that allowed significant questioning of how phenomena (e.g., ideals of citizenship and beliefs about learning mathematics) were culturally formed and acted out in the schools of participating nations.

The research design of the Civic Education case studies diverged significantly from that of TIMSS in that the people conducting the case studies were not required to have ethnographic or qualitative training. There was no attempt to follow a model like the Whitings' six-culture study, in which the problem of cultural translation was made explicit. Rather, following a more traditional IEA model, country representatives were chosen from a pool of qualified scholars in each nation to compile the case study. Furthermore, the Civic Education case studies involved much less fieldwork than the TIMSS case studies and more historical or document analysis.

Nonetheless, the Civic Education Study encountered problems similar to those faced in TIMSS. In the early stages, an organizing committee created overarching questions, much as the four TIMSS case study topic areas were created. However, rather than letting the country representatives then create their own set of core questions, the Civic Education committee created an initial set of 15 questions that all country representatives were required to answer. These 15 questions were revised, and a set of 18 new questions, more focused on contested or conflicting views, was created.

The creation of the case studies proceeded as an interchange among the national representatives, panels of experts in each nation, and the Civic Education organizing committee. The national expert panels played a major role in crafting each case study, although most of the work of producing the final study was done by the national representative. This created tensions between the representatives, some of whom wanted to significantly amend the 18 questions, and the organizing committee members, who wanted to keep the questions standard for all participating countries.

This dissatisfaction led to sustained dialogue about the research process and to the inclusion of ideas from national representatives in the ongoing conduct of the research, to a far greater extent than was possible in TIMSS. Whereas the TIMSS components collected data simultaneously, the Civic Education team collected and reflected on data sequentially, allowing the research process to proceed along lines more in keeping with the ideals of mixed-methods research. The exchanges between representatives and the committee allowed new questions and interpretations that "arose from the data" to guide how subsequent data would be collected and interpreted (see Glaser & Strauss, 1967).

The overall research design of the IEA Civic Education Study is summarized in Figure 7-2. This design follows the integrated process suggested by Morgan (1998), but I emphasize the iterative nature of the analysis, because simply linking qualitative and quantitative data collection sequentially does not provide the analytic power generated in the Civic Education Study. There, the analytic power of each kind of data was maximized because the next stage of research was being formed while the data were being analyzed. Major findings (and limitations) at each stage were incorporated into the beginning of the next stage, maximizing the analytic potential of the study.

IDENTIFYING RESEARCH DESIGN STRENGTHS

Over time, the IEA and other cross-national studies have shifted from summative national case studies to case studies that incorporate more historical and cultural material and that identify within-country variation, subpopulations, economic inequality, and other factors. The Civic Education case studies were more of a mixture of ethnographic and historical material than has been typical in the past, but it was the TIMSS Case Study that truly represented a methodological advance in conceptualizing and analyzing cultural effects in cross-national studies.

This point is crucial. Although the TIMSS Case Study project generated truly ethnographic case studies, their potential went untapped. The IEA Civic Education case studies were far more limited in terms of the data generated, but their placement in the overall research design allowed the more limited data to be used in a far more efficient manner. The scholars responsible for compiling the Civic Education case studies were integrated into a meta-discussion of larger research design issues in a way that the TIMSS case study (and even video study) personnel were not. This integration allowed the “strength” of qualitative studies—to generate high-quality hypotheses grounded in empirical reality—to be incorporated into the overall research process.

The fact that the Civic Education case studies were more limited in focus (addressing only civic education and concentrating on the mid-teen years) allowed them to be used more effectively and points to a simple yet daunting problem in using studies of cultural dynamics: data overload. The TIMSS case study component generated a rich database of interviews with, and observations of, students, teachers, parents, and administrators that spans the K-12 system in all three countries. But the sheer amount of data makes the database hard to use. The initial cleaning and ordering of the files took the University of Michigan team well over a year, and another six months was required to import the data into NUD*IST and provide basic case-level codes.7 Simply preparing the case study database for analysis was a major time commitment.

Integrating qualitative studies that model culture as a dynamic system with other kinds of studies requires a careful research design strategy. In studying national systems of education, regional variation, the presence of subcultures, variation in teacher practices over the course of schooling, and a host of other issues mean that achieving an integrated use of different forms of analysis requires an extended time frame.

INTEGRATING A CULTURAL ANALYSIS: AN ITERATIVE, MULTIMETHOD APPROACH

The Civic Education Study demonstrates that cultural analysis and quantitative analysis can be integrated in cross-national studies through careful research design. If research is designed to maximize the interaction of the components over the course of the project, then such a study can document the reality of day-to-day living, identify pertinent cultural factors, improve the accuracy of interpretation, and integrate macrosocial and microsocial perspectives. If, on the other hand, the research design does not allow such integration, the overall analysis may suffer from data overload, temporal disjunctures, or other factors that reduce the effectiveness of the research. Future research designs must systematically integrate the knowledge derived from one database with the knowledge from other databases in an iterative analytic process.

The results of TIMSS and the IEA Civic Education Study provide insights into how to organize such research. The use of an iterative multimethod model appears most likely to maximize the analytic capacity of individual components in multilevel, multisite approaches and is especially crucial in integrating qualitative components aimed at providing a holistic analysis of phenomena of interest. The IEA Civic Education Study employed an iterative model in which qualitative studies were used to generate ideas for further quantification, but several variations of such a model are feasible. The analytic power of the research design to identify and explain the impact of cultural effects can be maximized by paying attention to the analytic strengths of different kinds of data collected in the component studies, by controlling the temporal relationship of components to each other, by controlling the relative emphasis given to each component, and by using an overall integrated data analysis and instrument generation strategy.

Choosing Components to Maximize Analytic Strength

Research designs that incorporate mixed methods maximize the researcher's ability to compare the knowledge derived from one component with that derived from another. For example, the finding that Japanese teachers assign less homework in seventh and eighth grades than U.S. teachers do (variables in the student and teacher surveys) can be combined with interview and observation data from the case studies that reveal "no homework" policies in some Japanese elementary schools and the role of cram schools in remediation. Methodologists generally have assigned such comparisons of databases to one of two categories: triangulation or complementarity (Greene, Caracelli, & Graham, 1989, p. 259; Tashakkori & Teddlie, 1998, p. 43).

Triangulation

The term triangulation is used in several ways in qualitative studies in education, but here I mean using multiple measures to assess a specific phenomenon. For example, teacher surveys, teacher logs, structured observations, and videos of classroom practice all can be used to measure an instructional practice such as the assignment of homework. Each method will record the instructional practice in slightly different ways, but these measures can be related to one another to provide a more accurate assessment of homework assignment. Triangulation also provides researchers with the opportunity to revise their instruments and fine-tune their overall research design (see U.S. Department of Education, 1999).

Such triangulation can occur between study components, or within a component if the component is large enough. For example, in the TIMSS-R video study (which sampled about 100 schools in eight nations), the videotape data were complemented by a student questionnaire, a teacher questionnaire, samples of student work, samples of materials used in the lesson, and samples of tests given (http://www.lessonlab.com/timss-r/instruments.htm). These multiple measures of classroom practice can be used to enhance the measurement of instructional practices deemed of interest.

Triangulation is costly both in terms of time and analytic effort. In very large projects like TIMSS, future research designs would do well to identify a specific phenomenon or areas of related phenomena (e.g., how teachers achieve equity of opportunity to learn within classrooms) and design components to allow triangulation of measures around these phenomena of interest. When the study includes a wide range of grade levels, like TIMSS, it is important to take into account the fact that there may be different organizational environments and/or cultural expectations for different levels of schooling in a given nation. For example, widespread “no homework” policies at the elementary level in Japan give way to significant homework assignment in the middle school years. Triangulation of measures would need to be performed on more than one age level in multilevel studies, increasing the risk of data overload.

The TIMSS data offer the possibility of triangulation among teacher surveys, student surveys, case study observations, and the video studies at the Population 2 level for three nations. However, even at this level, extensive triangulation of measures has proved daunting. Triangulating at every level of a multigrade study like TIMSS would likely be cost-prohibitive in terms of data collection and unlikely to yield data that researchers could use in a timely manner. Rather than triangulating methods at each level, maximizing the complementarity of components in the early stages of the research would be a more effective way to identify the cultural aspects of a phenomenon (such as opportunity to learn) in different nations and/or regions, providing a path for more specific and limited triangulation of measures in subsequent components.

Complementarity

Survey studies, case studies, and other field-based observational studies can be used either in a confirmatory or exploratory way (Tashakkori & Teddlie, 1998, p. 37). However, survey research typically is used in a confirmatory manner (i.e., to test formal hypotheses), while case studies and other field-based observational studies generally are used to explore given social situations in depth. In multiage level, multinational studies like TIMSS, survey questionnaires offer the potential to generate data that can be used to test hypotheses about the impacts of belief structures on a cross-national level. The TIMSS survey data at the student, teacher, and school levels can be combined with the achievement data to allow researchers to test what factors, cross-nationally, are associated with academic achievement, although they do not allow causal modeling.

Cross-sectional survey data of the kind presented in TIMSS are less useful to the overall analysis process than longitudinal survey data, especially surveys that have been developed with input from ongoing qualitative research. In TIMSS, the case study data complement the survey data (in three nations) by providing researchers with highly detailed descriptions ideal for exploratory (i.e., hypothesis-generating) work. These case studies also provide a global description of the public educational systems in these three nations that allows researchers to see how different organizations (e.g., Japanese cram schools and public schools) or groups (e.g., teachers and parents) interact at different levels. However, if survey questions had been derived with input from analysis of the case study data, such questions likely would have provided more insight into how intranational variation in belief structures compared with international variation (for a preliminary attempt at this comparison, see LeTendre, Baker, Akiba, Goesling, & Wiseman, 2001).

To maximize the complementarity within the overall research design, however, more interaction between the exploratory components (case studies or other field studies) and the development of survey instruments is needed. One of the problems in trying to use both the TIMSS case study and survey databases is that sometimes there is good overlap (e.g., in the coverage of homework) and sometimes poor overlap (e.g., in the coverage of family background) between the two components. Initiating exploratory, qualitative components first, and overlapping the analysis of these components with the development of survey, test, or observation instruments, would maximize the overall complementarity of the components.

Finally, as Adam Gamoran pointed out in a review of this paper, a more sophisticated overarching analysis design might have improved the purely quantitative components of TIMSS. A pretest/posttest design combined with longitudinal or time-series surveys of practices and beliefs would have allowed analysts of TIMSS to test more complex causal models. In planning such large cross-national studies, the temporal relationship of key components is crucial to the overarching design.

Temporal Relationship of Components

The IEA Civic Education project provides a good model for the ideal temporal relationship of components: researchers involved in each stage of research should be integrated into the planning and initial analysis of subsequent stages (an analytic “pass the baton” metaphor, if you will). In this way, important knowledge about the problems and limitations of each component is conserved. Such temporal sequencing also increases the complementarity of the components, as key questions that arise in early stages of the research can then drive the development of research focus and instrument creation in subsequent stages.

In large projects such as TIMSS, temporal sequencing may create substantial costs if all components are given equal weight. In most multimethod research designs, not all components receive the same emphasis or carry the same analytic weight. In smaller studies with only two components (a survey and a case study), researchers may place more emphasis in data collection and analysis on one component than on the other. In cross-national studies like TIMSS, the complexity of the research design suggests that temporal sequencing and the relative emphasis on components must be manipulated as part of the overall research design.

Relative Emphasis of Components

Tashakkori and Teddlie (1998), for example, note that most multimethod research designs assign more or less emphasis to the component studies. The use of a small, “pilot” qualitative study with a subsequent “main” quantitative study is common in much social science research. A small pilot study, perhaps involving focus groups and some limited open-ended interviews, typically is used to generate a first draft of a survey. Future cross-national studies, which must address multiple levels of schooling, could benefit from a research design that systematically manipulates both the emphasis and temporal sequence of the components.

For example, a study of the quality of teacher classroom practice might be conducted using multiple components and three stages of data collection. Simultaneous observational or video studies of a limited number of classrooms could be conducted along with a study based on teacher records or journals. Analysis of these data might highlight one aspect of classroom practice (such as classroom management style) as a key factor affecting instructional quality. This material then could be used to generate a survey or an observation instrument that would be pilot tested. The pilot study would likely raise further questions or reveal other areas of interest, which then would be incorporated into the final research design, where revised quantitative instruments and a more focused qualitative study (perhaps a case study) would be used to gather the main body of data.

AN INTEGRATED ANALYSIS STRATEGY FOR COMPONENTS

Finally, in very large studies like TIMSS, more than one line of research could occur simultaneously, with links across lines increasing the analytic power of each component. Qualitative and quantitative components should be organized in iterative stages, culminating in a final database that could be collected on a larger scale.

For example, in a study of student academic achievement, video studies of classrooms like those conducted in TIMSS and TIMSS-R could be better integrated with other components by taping fewer classrooms but recording each classroom over longer periods of time. Ideally, the beginning, middle, and end of similar units (such as electricity) should be taped at the same grade level to provide information about patterns of unit flow. In addition, having teachers and students keep logs in videotaped classrooms would provide a more accurate assessment (U.S. Department of Education, 1999). The videotapes and logs would be collected in the first stage of research and used as stimuli for focus groups of teachers or parents in subsequent qualitative components designed to highlight cultural effects on teaching.8 The results of these focus group interviews, along with the original videos and/or logs, would serve as the basis for creating instruments or video strategies for the final phase that would address the patterns identified. That is, more attention might be directed at comparing veteran and novice teachers, area specialists and nonspecialists, and classes of heterogeneous and homogeneous ability if these groups or interactions appear to be especially relevant in the nations in question.

DATA RELEASE AND ANALYSIS

The timely release of data and the incorporation of a large group of secondary analysts also should be considered as part of the overall research strategy. The release of TIMSS data via the World Wide Web by the Boston College team was a major breakthrough in the dissemination of cross-national data. The high quality of the technical reports and data packaging has allowed scholars around the world to use the TIMSS survey and achievement data to engage in significant debates about effects and methods and about which areas need further study. Largely because of confidentiality issues, neither the TIMSS case study data nor the video study data have been released or analyzed in the way that the survey and achievement data have. Future studies should consider ways to disseminate qualitative data that would allow a larger pool of researchers to access the data and to link the analysis of qualitative data with the analysis of quantitative data.

Several strategies for data release are possible. First, to address the issue of maintaining subject confidentiality, transcripts of the classroom dialogue in video studies could be edited to delete identifying information and be released along with the corresponding surveys and coded observations. Although this kind of qualitative data would not provide the rich analytic possibilities of the visual data, it would allow access to the data by a larger range of scholars, increasing the possibility of new insights. Researchers who use such textual data might then seek to work with the original video data, following protocols for maintaining confidentiality that the National Center for Education Statistics already has in place.

With case study data, the problem of maintaining confidentiality is slightly different. In the TIMSS case study, even after deleting distinguishing remarks and inserting pseudonyms, reviewers familiar with the school systems studied could readily identify field sites. The power of qualitative data to capture the gestalt or cultural dynamic of a given place means that it is impossible to protect subject anonymity without substantially lessening the analytic capacity of the data. However, releasing portions of the data collected might be feasible. Logs or diaries kept by teachers could be edited to delete identifying remarks, then released. Verbatim transcripts, with identifying text deleted, also might be released. Scholars who analyzed these initial data sets then might seek to work with the original data under specified guidelines.

CONCLUSION: PROBLEMS THAT REQUIRE FURTHER ATTENTION AND EXPERIMENTATION

The major challenge in incorporating cultural analyses in studies of student achievement is that most research in qualitative sociology or cultural anthropology is exploratory: it is designed specifically to raise questions, not to test hypotheses formally derived from an existing body of theory. For some researchers trained in quantitative methods, the basic techniques of qualitative research violate what they perceive as the basic requirements of “good science.” For example, snowball sampling, in which informants lead the researcher on to other informants, is a classic technique in qualitative research, especially for studying “hidden” populations (e.g., drug users, runaways, gay teenagers in schools). Yet many quantitative researchers regard a “random sample” as the sine qua non of good research. Framing qualitative research as a type of “pilot study” does not readily bridge this gap. Doing so diminishes the qualitative research to such an extent that it is no longer worth pursuing, and quantitative researchers are likely to voice the same objections to the quality of the pilot study.

Cultural analyses should be incorporated as methodologically distinct, but integrated, components in cross-national studies of schooling so that the effects of beliefs, values, ideological conflicts, or habitual practices can be incorporated into the analysis. Patterns, both within nations and across nations, can be compared and contrasted to improve our understanding of what values, beliefs, or practices are accentuated, legitimized, or even institutionalized. Such analyses, when joined with various types of national quantitative assessments, would more clearly identify the overall similarity or dissimilarity of basic cultural patterns around schooling and would increase understanding of what kinds of reforms or innovations would or would not transfer from one nation to another.

Documenting the range of cultural variation within nation states is perhaps the most important role for cultural analysis in cross-national studies of educational achievement. A wide range of scholars (both quantitative and qualitative) have argued that the nation state is an inadequate unit of analysis because it misses crucial regional variation as well as variation among subpopulations (such as racial, ethnic, linguistic, religious, or other minority groups). Detailed description of cultural variation with regard to core beliefs would greatly inform both quantitative research and policy creation. Documenting cultural change is also crucial to understanding whether current beliefs or values will hold in the future and thus whether they will remain relevant in subsequent assessments or policy recommendations.

Large cross-national studies of educational achievement that attempt to incorporate significant qualitative components in order to analyze cultural effects on learning are a relatively new type of study. TIMSS, with its large number of components and enormous databases, was a groundbreaking study and offers future researchers important lessons. The study of mixed methods is itself relatively new in the social sciences and promises to yield substantial breakthroughs in the future. Advances in computer and software technology (such as programs that allow visual and textual data to be analyzed simultaneously) have radically changed the nature of qualitative research and likely will continue to affect possibilities for research. Within qualitative research, specific forms of research (e.g., case studies or focus group research) have seen substantial methodological debate and evolution in the past 15 years.

In summary, my assessment of the current state of research is that we, as a community of scholars, have only begun to investigate ways to collect and analyze these rich data sets and to apply them to policy formation. Further methodological improvements are certain to occur.

We are currently in a period in which many possibilities exist for combining data and creating new methods for analyzing data. Some problems, like data overload, remain, but such problems are not new: historians have dealt with them for centuries. New analytic tools promise the ability to analyze larger data sets with more consistency.

Effectively utilizing large qualitative data sets will require continued support for innovation and experimentation in analysis. Funding specifically for research on how to analyze these large data sets would increase the ability of the academic community in general to realize the potential of the data. Just as hierarchical linear model (HLM) analysis has transformed research on school effects, so too do new programs or strategies for integrating large qualitative and quantitative databases offer the potential to transform cross-national research. Such innovation will require time, money, and patience, as dead ends and temporary failures are inevitable in any scientific undertaking, but the potential advances make this area one that deserves continued support and emphasis within the broader scientific community.

NOTES

REFERENCES

Altbach, P., & Kelly, G. (Eds.). (1986). New approaches to comparative education. Chicago: University of Chicago Press.

Anderson, L., Ryan, D., & Shapiro, B. (1989). The IEA Classroom Environment Study. New York: Pergamon Press.

Anderson-Levitt, K. (1987). National culture and teaching culture. Anthropology and Education Quarterly, 18, 33-38.

Anderson-Levitt, K. (2001). Teaching culture. Cresskill, NJ: Hampton Press.

Asay, S., & Hennon, C. (1999). The challenge of conducting qualitative family research in international settings. Family and Consumer Sciences Research Journal, 27(4), 409-427.

Baker, D. (1993). Compared to Japan, the U.S. is a low achiever . . . really: New evidence and comment on Westbury. Educational Researcher, 22(3), 18-20.

Baker, D. (1994). In comparative isolation: Why comparative research has so little influence on American sociology of education. Research in Sociology of Education and Socialization, 10, 53-70.

Bereday, G. (1964). Comparative method in education. New York: Holt, Rinehart & Winston.

Boli, J., & Ramirez, F. (1992). Compulsory schooling in the Western cultural context: Essence and variation. In R. Arnove, P. Altbach, & G. Kelly (Eds.), Emergent issues in education: Comparative perspectives (pp. 15-38). Albany, NY: State University of New York Press.

Bradburn, N., Haertel, E., Schwille, J., & Torney-Purta, J. (1991). A rejoinder to “I never promised you first place.” Phi Delta Kappan, June, 774-777.

Brewer, J., & Hunter, A. (1989). Multimethod research: A synthesis of styles. Newbury Park, CA: Sage.

Caracelli, V., & Greene, J. (1993). Data analysis strategies for mixed-method evaluation designs. Educational Evaluation and Policy Analysis, 15(2), 195-207.

Crossley, M., & Vulliamy, G. (Eds.). (1997). Qualitative educational research in developing countries. New York: Garland.

Daniels, H., & Garner, P. (Eds.). (1999). World yearbook of education 1999: Inclusive education. London: Kogan Page.

Dore, R. P. (1976). The diploma disease. Berkeley: University of California Press.

Douglas, M. (1986). How institutions think. Syracuse, NY: Syracuse University Press.

Eckert, P. (1989). Jocks and burnouts: Social categories and identity in the high school. New York: Teachers College Press.

Ercikan, K. (1998). Translation effects in international assessments. International Journal of Educational Research, 29, 543-553.

Flinn, J. (1992). Transmitting traditional values in new schools: Elementary education of Pulap Atoll. Anthropology and Education Quarterly, 23, 44-58.

Fujita, M. (1989). It’s all Mother’s fault: Childcare and the socialization of working mothers in Japan. Journal of Japanese Studies, 15(1), 67-92.

Fujita, M., & Sano, T. (1988). Children in American and Japanese day-care centers: Ethnography and reflective cross-cultural interviewing. In H. Trueba & C. Delgado-Gaitan (Eds.), School and society: Learning through culture (pp. 73-97). New York: Praeger.

Fukuzawa, R., & LeTendre, G. (2001). Intense years: How Japanese adolescents balance school, family and friends. New York: RoutledgeFalmer.

Gambetta, D. (1987). Were they pushed or did they jump? Individual decision mechanisms in education. New York: Cambridge University Press.

Gamoran, A., & Mare, R. D. (1989). Secondary school tracking and educational inequality: Compensation, reinforcement, or neutrality? American Journal of Sociology, 94, 1146-1183.

Glaser, B., & Strauss, A. (1967). The discovery of grounded theory. Chicago: Aldine.

Goetz, J., & LeCompte, M. (1984). Ethnography and qualitative design in educational research. Orlando, FL: Academic Press.

Goldman, S., & McDermott, R. (1987). The culture of competition in American schools. In G. Spindler (Ed.), Education and cultural process (pp. 282-300). Prospect Heights, IL: Waveland Press.

Goldthorpe, J. (1997). Current issues in comparative macrosociology: A debate on methodological issues. Comparative Social Research, 16, 1-26.

Grant, C., & Sleeter, C. (1996). After the school bell rings. London: Falmer Press.

Greene, J., Caracelli, V., & Graham, W. (1989). Toward a conceptual framework for mixed-method evaluation designs. Educational Evaluation and Policy Analysis, 11(3), 255-274.

Hallinan, M. (1992). The organization of students for instruction in the middle school. Sociology of Education, 65(April), 114-127.

Hammersley, M., & Atkinson, P. (1983). Ethnography: Principles in practice. New York: Routledge.

Hendry, J. (1986). Becoming Japanese: The world of the pre-school child. Manchester, England: Manchester University Press.

Hoffman, D. (1999). Culture and comparative education: Toward decentering and recentering the discourse. Comparative Education Review, 43(4), 464-488.

Jenkins, R. (1983). Lads, citizens and ordinary kids: Working-class youth life-styles in Belfast. London: Routledge & Kegan Paul.

Jones, P. E. (1971). Comparative education: Purpose and method. St. Lucia: University of Queensland Press.

Kilgore, S. (1991). The organizational context of tracking in schools. American Sociological Review, 56, 189-203.

Kinney, C. (1994). From a lower-track school to a low status job. Unpublished doctoral dissertation, University of Michigan, Ann Arbor.

Lareau, A. (1989). Home advantage: Social class and parental intervention in elementary education. London: Falmer Press.

LeTendre, G. (1996). Constructed aspirations: Decision-making processes in Japanese educational selection. Sociology of Education, 69(July), 193-216.

LeTendre, G. (2000, August). Downplaying choice: Institutionalized emotional norms in U.S. middle schools. Paper presented at the American Sociological Association, Washington, DC.

LeTendre, G., Baker, D., Akiba, M., & Wiseman, A. (2001). The policy trap: National educational policy and the Third International Mathematics and Science Study. International Journal of Educational Policy Research and Practice, 2(1), 45-64.

LeTendre, G., Baker, D., Akiba, M., Goesling, B., & Wiseman, A. (2001). Teachers’ work: Institutional isomorphism and cultural variation in the U.S., Germany and Japan. Educational Researcher, 30(6), 3-16.

Lewis, C. (1995). Educating hearts and minds. New York: Cambridge University Press.

Lincoln, Y., & Guba, E. (1985). Naturalistic inquiry. Beverly Hills, CA: Sage.

MacLeod, J. (1987). Ain’t no makin’ it. Boulder, CO: Westview Press.

Merriam, S. (1988). Case study research in education. San Francisco: Jossey-Bass.

Meyer, J., Ramirez, F., & Soysal, Y. (1992). World expansion of mass education, 1870-1980. Sociology of Education, 65(2), 128-149.

Miles, M. B., & Huberman, A. M. (1994). Qualitative data analysis: An expanded sourcebook. Thousand Oaks, CA: Sage.

Morgan, D. (1998). Practical strategies for combining qualitative and quantitative methods: Applications to health research. Qualitative Health Research, 8(3), 362-376.

National Research Council. (1999). Next steps for TIMSS: Directions for secondary analysis. Board on International Comparative Studies in Education, A. Beatty, L. Paine, & F. Ramirez, Editors. Board on Testing and Assessment, Commission on Behavioral and Social Sciences and Education. Washington, DC: National Academy Press.

Office of Educational Research and Improvement. (1998). The educational system in Japan: Case study findings. Washington, DC: U.S. Department of Education.

Office of Educational Research and Improvement. (1999a). The educational system in the U.S.: Case study findings. Washington, DC: U.S. Department of Education.

Office of Educational Research and Improvement. (1999b). The educational system in Germany: Case study findings. Washington, DC: U.S. Department of Education.

Okano, K. (1993). School to work transition in Japan. Philadelphia: Multilingual Matters.

Okano, K. (1995). Rational decision making and school-based job referrals for high school students in Japan. Sociology of Education, 68(1), 31-47.

Passow, A. H. (1984). The I.E.A. National Case Study. Educational Forum, 48(4), 469-487.

Passow, A. H., Noah, H., Eckstein, M., & Mallea, J. (1976). The National Case Study: An empirical comparative study of twenty-one educational systems. New York: John Wiley & Sons.

Peak, L. (1991). Learning to go to school in Japan. Berkeley: University of California Press.