6

Effects of Accommodations on Test Performance

One objective of the workshop was to consider the extent to which scores for accommodated examinees are valid for their intended uses and comparable to scores for nonaccommodated examinees. This is a critical issue in the interpretation of results for accommodated test takers and results that include scores from accommodated and standard administrations. To accomplish this objective, researchers who have investigated the effects of accommodations on test performance were asked to discuss their findings. This chapter summarizes their presentations. The first presentation focused on investigations with NAEP. The remainder of the studies dealt with other tests, several of which included released NAEP items.

RESEARCH ON NAEP

John Mazzeo, executive director of the Educational Testing Service’s School and College Services, described research conducted on the NAEP administrations in 1996, 1998, and 2000. He noted that there were multiple purposes for the research: (1) to document the effects of the inclusion and accommodation policies on participation rates for students with special needs; (2) to examine the effects of accommodations on performance results; (3) to evaluate the effects of increased participation on the measurement of trends; and (4) to examine the impact of inclusion and accommodation on the technical quality of the results.

The multi-sample design implemented in 1996 permitted three sorts of comparisons. Comparisons of results from the S1 (original inclusion criteria, no accommodations) and S2 (new inclusion criteria, no accommodations) samples allowed study of the effects of changing the inclusion criteria. Comparisons of the S2 and S3 (new inclusion criteria, accommodations allowed) samples allowed study of the effects of providing accommodations. Comparisons of the S1 and S3 samples allowed examination of the net effect of making both types of changes.

Participation Rates for the 1996 Mathematics and Science Assessments

Mazzeo highlighted some of the major findings on participation rates based on the 1996 mathematics and science assessments of fourth and eighth graders. For students with disabilities, comparisons of S1 and S2 revealed that simply changing inclusion criteria, without offering accommodations, had little impact on rates of participation. On the other hand, comparisons of S1 and S3 showed that providing accommodations did increase the number of students who participated in the assessment at grades four and eight.

For English-language learners, comparisons of S1 and S2 suggested that the revised criteria resulted in less participation at grade four, when accommodations were not offered. Comparisons of S1 and S3 showed that offering accommodations in conjunction with the revised inclusion criteria increased participation rates in both grades. Offering accommodations (which included a Spanish language version of the mathematics assessment) had the biggest impact on English-language learners who needed to be tested in Spanish.

Several of the overall findings appear below:

- 42 to 44 percent of the students with disabilities were regularly tested by their schools with accommodations, and 19 to 37 percent were tested with accommodations in NAEP.
- 22 to 36 percent of English-language learners were regularly tested by their schools with accommodations, and 6 to 30 percent were tested with accommodations in NAEP.
- Some students with disabilities and English-language learners who did not regularly receive accommodations were given them in NAEP, but not all of those who regularly received accommodations were given accommodations in NAEP.
- Some students with disabilities were included in the assessment even though their IEPs suggested that they should have been excluded from testing.

Effects of Accommodations on Technical Characteristics of the Items

The remaining research questions required examination of test score and item-level performance. Use of the multi-sample design meant that estimation of the NAEP scale score distributions could be conducted separately for the three samples. One of the research questions was whether the results from standard and nonstandard administrations could be “fit” with the same item response theory (IRT) model.1 Two kinds of analyses were used to answer this question: differential item functioning (DIF) analyses and comparisons of IRT model fit. DIF analyses involved comparing performances of accommodated and standard test takers on each administered item, looking for differences in the way the groups performed on the item when ability level is held constant.2 Comparisons of IRT model fit were handled by examining item fit indices estimated in the S2 sample (no accommodations) and the S3 sample (accommodations allowed). Differences were found for some items.
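The chapter does not say which DIF statistic the NAEP researchers used. Purely as an illustration, the sketch below implements the Mantel-Haenszel procedure, one widely used way of comparing two groups on an item while holding ability constant (here approximated, as is common, by matching examinees on total score). The function name and input format are hypothetical.

```python
import math
from collections import defaultdict


def mantel_haenszel_dif(responses):
    """Mantel-Haenszel DIF statistics for one studied item.

    responses: iterable of (group, total_score, correct) tuples, where group is
    "reference" (standard administration) or "focal" (accommodated) and
    correct is 1 or 0. Total score serves as the matching (ability) variable.
    """
    # One 2x2 table (group x right/wrong) per total-score stratum.
    tables = defaultdict(lambda: {"ref_right": 0, "ref_wrong": 0,
                                  "foc_right": 0, "foc_wrong": 0})
    for group, total_score, correct in responses:
        prefix = "ref" if group == "reference" else "foc"
        suffix = "_right" if correct else "_wrong"
        tables[total_score][prefix + suffix] += 1

    numerator = denominator = 0.0
    for t in tables.values():
        n = sum(t.values())  # every stratum present has at least one examinee
        numerator += t["ref_right"] * t["foc_wrong"] / n
        denominator += t["ref_wrong"] * t["foc_right"] / n
    if numerator == 0 or denominator == 0:
        raise ValueError("common odds ratio is undefined for these data")

    alpha_mh = numerator / denominator       # MH common odds ratio
    mh_d_dif = -2.35 * math.log(alpha_mh)    # expressed on the ETS delta scale
    # alpha_mh > 1 (mh_d_dif < 0): the item is relatively harder for the focal group.
    return alpha_mh, mh_d_dif
```

Within each score level the procedure asks whether accommodated examinees have lower odds of answering correctly than standard examinees of similar ability; pooling across levels yields one statistic per item.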

To provide a context for judgments about the results, the incidence of DIF for standard and accommodated test takers was compared to the incidence of DIF for African-American and white examinees. In fourth grade science, 37 percent of the items exhibited DIF for accommodated versus standard test takers, suggesting that these items were more difficult for accommodated examinees. When comparing African-American and white examinees, only 10 percent of the items showed evidence of DIF. DIF was also found in twelfth grade science where 28 percent of the items appeared to be differentially difficult for accommodated test takers. In contrast, only 12 percent of the items showed DIF in comparisons of African-American and white examinees.

For the item fit indices, the researchers developed a way to collect baseline data about how much variation in fit indices would be likely to occur “naturally.” These baseline estimates were derived by randomly splitting one of the S2 samples into two equivalent half-samples. The researchers conducted the model fit analyses separately for the S1, S2, and S3 samples and also for the half-samples of the S2 sample. They used the natural variation in the statistics calculated for the two half-samples as a baseline for evaluating the differences observed when comparing the other three samples. Overall, differences occurred less often in the “real” data than in the half-samples (only one instance of misfit appeared to be significant, in eighth grade science). The IRT model appeared to fit data from the S3 sample somewhat better than data from the S2 sample.
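The half-sample baseline logic can be sketched as follows. As a simplifying assumption, the item statistic here is the proportion correct rather than the IRT item-fit indices the researchers actually used, and the fixed flagging threshold is a hypothetical stand-in for a significance test; all function names are illustrative.

```python
import random
from statistics import mean


def split_half(sample, seed=0):
    """Randomly split a sample into two (near-)equal half-samples."""
    rng = random.Random(seed)
    shuffled = list(sample)
    rng.shuffle(shuffled)
    mid = len(shuffled) // 2
    return shuffled[:mid], shuffled[mid:]


def item_p_values(sample):
    """Proportion correct per item; sample is a list of 0/1 response vectors."""
    return [mean(column) for column in zip(*sample)]


def count_flagged(stats_a, stats_b, threshold):
    """Number of items whose statistic differs by more than `threshold`."""
    return sum(1 for a, b in zip(stats_a, stats_b) if abs(a - b) > threshold)


def baseline_vs_observed(s2, s3, threshold=0.05, seed=0):
    """Compare flags between S2 half-samples (baseline) and between S2 and S3."""
    half_1, half_2 = split_half(s2, seed)
    baseline = count_flagged(item_p_values(half_1), item_p_values(half_2), threshold)
    observed = count_flagged(item_p_values(s2), item_p_values(s3), threshold)
    # observed <= baseline suggests the S2-S3 differences are within "natural" variation
    return baseline, observed
```

The same comparison, counting differences between samples against differences between equivalent half-samples, also underlies the scale-score comparisons described next.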

Effects of Accommodations on NAEP Scale Scores

Another aspect of the research was comparison of reported NAEP results under the different accommodation and inclusion conditions. Again, the researchers estimated NAEP scale score distributions separately for the S1, S2, and S3 samples as well as for the equivalent half-samples of S2. They compared average scale scores for the entire sample, various demographic groups, students with disabilities, and English-language learners as calculated in each of the samples. Comparisons of group mean scale scores as calculated in the S2 and S3 samples revealed some differences. The researchers compared the number of observed differences to the number of differences that occurred when group mean scale scores calculated for the S2 half-samples were compared. Fewer differences in group means were observed when the S2 and S3 results were compared than when the results for the S2 half-samples were compared. In science, nine differences were found for the half-samples compared to five differences in comparing statistics based on S2 with those based on S3. In mathematics, three differences were found, all at grade 12. (See Mazzeo, Carlson, Voelkl, and Lutkus, 2000, for further details about the analyses of the 1996 assessment.)

Findings from the 1998 Reading Assessment

Results from analyses of the 1996 assessment data suggested issues to investigate further in the 1998 reading assessment, which was designed to report state results as well as national results for fourth and eighth graders. As stated previously, the S1 criteria were dropped, and in each of the participating states, the sample was split into two subsamples. Roughly half of the schools were part of the S2 sample (no accommodations were permitted); the other half were part of the S3 sample (accommodations were permitted).

The researchers compared both participation rates and scale score results. Comparisons across the S2 and S3 samples revealed that in most states the participation rate was higher in S3 for students with disabilities but not for English-language learners. Mazzeo believes that this finding was not surprising given that the accommodations for English-language learners did not include a Spanish-language version, linguistic modifications of the items, or oral administration, although extended time and small group (or one-on-one) administration were allowed.

Comparisons of average scale scores for the S2 and S3 samples revealed very small differences at both grades. The researchers devised a way to examine the differences in average scale scores in combination with the change in participation rates from S2 to S3. For the fourth grade sample, this analysis revealed a negative relationship between average scores and participation rates. That is, in the vast majority of states, higher participation rates were associated with lower mean scores. In some states, the participation rate was as much as 5 percent higher for S3 than for S2, while mean scores were as much as 4 points lower for S3 than for S2. Other states had smaller changes.
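One simple way to summarize the relationship described above is to correlate, across states, the S2-to-S3 change in participation rate with the change in mean scale score. The chapter does not specify the researchers' method, so this is an illustrative sketch; the input structure is hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def inclusion_vs_score_change(states):
    """states maps a state name to {"S2": (rate, mean), "S3": (rate, mean)}.

    Returns the S3-minus-S2 changes in participation rate and mean score,
    plus their correlation across states. A negative r means that larger
    gains in participation went with larger drops in mean scores.
    """
    d_rate = [v["S3"][0] - v["S2"][0] for v in states.values()]
    d_mean = [v["S3"][1] - v["S2"][1] for v in states.values()]
    return d_rate, d_mean, pearson_r(d_rate, d_mean)
```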

Findings from the 2000 Mathematics Assessment

Analyses continued with the 2000 mathematics assessment of fourth and eighth graders. For this assessment, a design similar to that used in 1998 was implemented, but this time a Spanish bilingual booklet was offered for English-language learners who needed the accommodation. Comparisons of participation rates across the S2 and S3 samples revealed a substantial increase in inclusion. This time the increases were comparable for students with disabilities and English-language learners.

Scale scores were calculated in the S2 and S3 samples for both grades four and eight, and states were rank ordered according to their reported results for S2 and S3. Comparisons of states’ rank orderings revealed few differences.
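Agreement between two rank orderings of states is often summarized with Spearman's rank correlation. The chapter reports only that few differences were found, without naming a statistic, so the sketch below is an illustrative assumption; it assumes no tied scores, and the state labels and scores are hypothetical.

```python
def rank_states(scores):
    """Map each state to its rank (1 = highest mean score). Assumes no ties."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {state: i + 1 for i, state in enumerate(ordered)}


def spearman_rho(scores_a, scores_b):
    """Spearman rank correlation between two sets of state results."""
    ra, rb = rank_states(scores_a), rank_states(scores_b)
    n = len(ra)
    d_squared = sum((ra[s] - rb[s]) ** 2 for s in ra)
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Hypothetical example: states B and C swap places between S2 and S3.
s2 = {"A": 250, "B": 245, "C": 240, "D": 235, "E": 230}
s3 = {"A": 249, "B": 240, "C": 242, "D": 233, "E": 229}
rho = spearman_rho(s2, s3)  # 0.9
```

A value of 1 means the S2 and S3 orderings are identical; a single swap of adjacent states among five lowers it only to 0.9.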

Analyses were again conducted to examine differences in average scale scores in combination with changes in participation rates. As with the 1998 assessment, a negative relationship between average scale scores and participation rates was found when results were compared for S2 and S3 for eighth graders. In some states the difference in means between S2 and S3 samples was as much as 4 to 5 points. Mazzeo speculated that this finding signifies that increased inclusion may result in lower overall scores; additional research is needed on this point.

STUDIES AT THE UNIVERSITY OF WISCONSIN

Stephen Elliott, professor at the University of Wisconsin, summarized findings from research he and his colleagues have conducted on the effects of accommodations on test performance. All four studies compared performance of students with disabilities and students without disabilities. A key characteristic of the studies was that they relied on a design in which each student took the test under both accommodated and nonaccommodated conditions, thus serving as his or her own “control.” To facilitate this aspect of the research design, multiple equivalent test forms were used, and each subject took two forms of the test—one form under accommodated conditions and one under nonaccommodated conditions. The researchers used counterbalanced designs3 in their investigations, randomizing the order of the accommodated versus nonaccommodated conditions as well as the form that was used under the two conditions.
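The counterbalancing described above can be sketched as a random assignment that balances the four combinations of condition order and form. The function name and form labels are hypothetical, and the Wisconsin studies may have randomized differently (for example, by coin flip, as in the read-aloud study described later).

```python
import itertools
import random

CONDITIONS = ("accommodated", "nonaccommodated")


def counterbalanced_schedule(student_ids, forms=("Form A", "Form B"), seed=None):
    """Give each student two sessions: one accommodated, one not.

    The four combinations of (condition taken first) x (form used under
    accommodation) are spread as evenly as possible across students and
    then shuffled, so each student's combination is random.
    """
    rng = random.Random(seed)
    ids = list(student_ids)
    combos = list(itertools.product(CONDITIONS, forms))
    slots = (combos * (len(ids) // len(combos) + 1))[:len(ids)]
    rng.shuffle(slots)

    schedule = {}
    for sid, (first, accommodated_form) in zip(ids, slots):
        other_form = forms[1] if accommodated_form == forms[0] else forms[0]
        form_for = {"accommodated": accommodated_form,
                    "nonaccommodated": other_form}
        second = CONDITIONS[1] if first == CONDITIONS[0] else CONDITIONS[0]
        # Each entry is (condition, form) in the order the sessions are given.
        schedule[sid] = [(first, form_for[first]), (second, form_for[second])]
    return schedule
```

Balancing the combinations, rather than flipping a coin for each student, keeps practice effects and form differences from piling up in one condition.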

The researchers used “effect sizes” to summarize their findings. An effect size is a ratio in which the numerator is the difference between two means (e.g., the difference between the mean for students without disabilities and the mean for students with disabilities on a given test; or the difference between the means when students take the test with accommodations and without accommodations). The denominator of the ratio is a standard deviation, typically that of the overall population or of the “majority” or comparison group. The researchers used a commonly accepted scheme to categorize effect sizes into large (>.80), medium (.40 to .80), small (<.40), zero, and negative effects (Cohen and Cohen, 1983).
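A minimal sketch of the effect-size ratio and the classification scheme just described. The function names are hypothetical, and the handling of boundary values (exactly .40 or .80 treated as medium, exactly 0 as zero) is an assumption, since the chapter does not say.

```python
def effect_size(mean_a, mean_b, sd):
    """Difference between two means divided by a standard deviation."""
    return (mean_a - mean_b) / sd


def classify(es):
    """Categories as described in the chapter; boundary handling is assumed."""
    if es < 0:
        return "negative"
    if es == 0:
        return "zero"
    if es < 0.40:
        return "small"
    if es <= 0.80:
        return "medium"
    return "large"
```

For example, `classify(0.88)` gives "large" and `classify(0.44)` gives "medium", matching the labels attached to those values in the first Wisconsin study described in this chapter.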

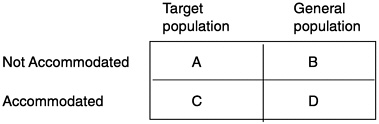

A common framework for interpreting the validity of accommodations is based on discussions by Phillips (1994) and Shepard, Taylor, and Betebenner (1998) of ways to evaluate whether scores obtained with and without accommodations have comparable meanings. As described by Shepard et al., if accommodations are working as intended, an interaction should be present between educational status (e.g., students with disabilities and students without disabilities) and accommodation condition (e.g., accommodated and not accommodated). The accommodation should improve the average score for a group of students with disabilities but should have little or no effect on the average score of a group of non-disabled students. If an accommodation improves the performance of both groups, providing it only to certain students (those with a specific disability) is considered unfair. Figure 6–1 depicts the 2×2 experimental design used to test for this interaction effect. An interaction effect would be said to exist if the mean score for examinees in group C were higher than the mean score for group A, and the mean scores for groups B and D were similar. The interaction effect was used in Elliott’s studies (and in others described in this chapter) as the criterion for judging the validity of scores from accommodated administrations.

FIGURE 6–1 Tabular Depiction of Criteria for Evaluating the Validity of Scores from an Accommodated Administrationᵃ

ᵃ An interaction effect is considered to exist if the mean score in cell C is higher than the mean in cell A and the means for cells B and D are similar.

SOURCE: Malouf (2001).
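The interaction criterion can be expressed as a comparison of the two groups' average gains from accommodation. In the sketch below, the group and condition labels are hypothetical, and the fixed tolerance stands in for the statistical test a real study would use. The code does not attempt to reproduce the cell lettering of Figure 6–1.

```python
def accommodation_gains(means):
    """means maps (group, condition) to a mean score, with group in
    {"SWD", "non-SWD"} and condition in {"standard", "accommodated"}.

    Returns each group's gain from accommodation and the difference between
    the gains (the interaction contrast).
    """
    gain_swd = means[("SWD", "accommodated")] - means[("SWD", "standard")]
    gain_non = means[("non-SWD", "accommodated")] - means[("non-SWD", "standard")]
    return gain_swd, gain_non, gain_swd - gain_non


def shows_expected_interaction(means, tolerance):
    """True if students with disabilities gain by more than `tolerance`
    while the other group's gain stays within +/- `tolerance`."""
    gain_swd, gain_non, _ = accommodation_gains(means)
    return gain_swd > tolerance and abs(gain_non) <= tolerance
```

If both groups gain substantially, the function returns False, which corresponds to the "no interaction" pattern that raises validity concerns in several of the studies below.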

Effects of Accommodation “Packages” on Mathematics and Science Performance Assessments

The first study Elliott described focused on the effects of accommodations on mathematics and science performance assessment tasks. This research was an extension of an earlier study published in 2001 (see Elliott, Kratochwill, and McKevitt, 2001), which produced very similar findings. The participants included 218 fourth graders from urban, suburban, and rural districts, 145 without disabilities and 73 with disabilities. All students with disabilities received accommodations based on teacher recommendations and/or their IEPs. Most students with disabilities received “packages” of accommodations, rather than just a single accommodation. The most frequently used accommodations for students with disabilities participating in this study were:

- Verbal encouragement of effort (60 students)
- Extra time (60 students)
- Orally read directions (60 students)
- Simplify language in directions (55 students)
- Reread subtask directions (54 students)
- Read test questions and content to student (46 students)

Students without disabilities were randomly assigned to one of three test conditions: (1) no accommodations, (2) a package of accommodations (i.e., extra time, support with understanding directions and reading words, and verbal encouragement), and (3) an individualized accommodation condition based on the IEP or teacher-recommended accommodations. The students took state-developed mathematics and science performance assessments. Teachers or research project staff administered performance tasks in four one-hour sessions over the course of several weeks.

Effect sizes were calculated by comparing students’ mean performance under accommodated and nonaccommodated conditions. Comparisons of the means for the two groups under the two accommodation conditions revealed a large effect (.88) for students with disabilities. However, a medium effect (.44) was found for students without disabilities. In addition, comparisons of individual-level performance with and without accommodations revealed medium to large effect sizes (.40 or higher) for approximately 78 percent of students with disabilities and 55 percent of students without disabilities. Effect sizes were in the small or zero range for approximately 10 percent of the students with disabilities and 32 percent of the students without disabilities. Negative effects were found for about 12 percent of the students with disabilities and 13 percent of the students without disabilities (negative effects indicate that students performed better under nonaccommodated conditions than under accommodated conditions). Together, these findings indicated that the interaction effect was not present, thus raising questions about the appropriateness of the accommodations.

Effects of Accommodations on Mathematics Test Scores

The second study Elliott discussed was an experimental analysis of the effects of accommodations on performance on mathematics items (Schulte, Elliott, and Kratochwill, 2000). The participants included 86 fourth graders, half of whom had disabilities. The students were given the Terra-Nova Multiple Assessments Practice Activities mathematics assessment and the Terra-Nova Multiple Assessments mathematics subtest (composed of multiple-choice and constructed-response items). Students without disabilities were randomly paired with students with disabilities within each school, and both students in each pair received the accommodations listed on the IEP for the student with disabilities. All students participated in a practice session to become familiar with the accommodations and test procedures.

Findings from this study indicated that both groups of students benefited from the testing accommodations; thus, again, the interaction effect was not present, leading to questions about the validity of scores when accommodations were used. Small effect sizes were found for students without disabilities; effect sizes were in the small to medium range for students with disabilities. One explanation for these findings may be that constructed-response items are more challenging for all students (i.e., they involve higher levels of reading and thinking skills), and the accommodations may have removed barriers to performance for both groups.

Effects of Extra Time on Mathematics Test Scores

Elliott presented findings from a third study (Marquart and Elliott, 2000), which focused on the effects of extra time accommodations. The research was based on 69 eighth graders who took a short form of a Terra-Nova mathematics test composed entirely of multiple-choice items. One-third of the participants were students with disabilities who had extra time listed on their IEPs; one-third were students whom teachers, using a rating form developed by researchers, had identified as “at-risk”; and one-third were students functioning at or above grade-level. Students had 20 minutes to complete the test under the standard time condition and up to double that time for the extra time condition. Again, random assignment was used to determine the order of the accommodated and not-accommodated conditions and the form used under each condition. Tests were administered during mathematics class or in a study hall, and students from all three groups were included in each testing session. Participants also responded to surveys.

Findings indicated that students with disabilities and students without disabilities did not differ significantly in the amount of change between their test scores obtained under the standard and extended time conditions. Further, no statistically significant differences were found between students without disabilities and the at-risk students in the amount of change between scores under the two conditions. These findings led the researchers to conclude that the extended time accommodation did not appear to have invalidated scores on the mathematics tasks. In addition, the survey results indicated that, under the extended time conditions, the majority of students felt more comfortable and less frustrated, were more motivated, thought they performed better, thought the test seemed easier, and preferred taking the test with that accommodation.
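The comparison described above amounts to asking whether two groups differ in their mean gain from extended time. The chapter does not name the test used; as an assumption, the sketch below computes a Welch t statistic on per-student gain scores. Function names are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def gain_scores(pairs):
    """Extended-time score minus standard-time score for each student.

    pairs: list of (standard_score, extended_score) tuples.
    """
    return [extended - standard for standard, extended in pairs]


def welch_t(gains_a, gains_b):
    """Welch's t statistic for the difference in mean gain between two groups."""
    se = sqrt(variance(gains_a) / len(gains_a) + variance(gains_b) / len(gains_b))
    return (mean(gains_a) - mean(gains_b)) / se
```

A t near zero means the groups changed by similar amounts, which is the pattern the researchers interpreted as extended time not invalidating scores. A full analysis would also need degrees of freedom and a p-value, for instance from `scipy.stats.ttest_ind` with `equal_var=False`.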

Effects of Oral Administration on Reading Test Scores

The final study Elliott described examined the effects of using read-aloud accommodations on a Terra-Nova reading test administered to eighth graders (McKevitt and Elliott, 2001). Elliott explained that oral reading of a reading test is frequently cited as an example of an invalid testing accommodation, although few studies have empirically examined this assertion. Oral administration is considered a permissible accommodation on reading tests in nine states but not in Wisconsin or Iowa, where the study was conducted.

Study participants consisted of 48 teachers and 79 students (40 students with disabilities and 39 without disabilities). For each student, the teacher identified appropriate accommodations from a list developed by the researchers. The teachers’ selections of accommodations were based on the cognitive demands of the test, the students’ IEPs, and the teachers’ knowledge about their students. Participants were randomly assigned to one of two test conditions. In Condition 1, 21 students with disabilities and 20 students without disabilities completed one part of the test with no accommodations and the other part with teacher-recommended accommodations (excluding reading test content aloud if recommended). In Condition 2, 19 students with disabilities and 19 without disabilities completed one part of the test with no accommodations and the other part with read-aloud accommodations in addition to those recommended by the teacher. The part of the test completed with and without accommodations was randomly determined by flipping a coin.

Analyses revealed that students without disabilities scored statistically significantly higher than students with disabilities on all parts of the test. Effect sizes were calculated by subtracting each student’s nonaccommodated test score from his or her accommodated test score, then dividing by the standard deviation of the nonaccommodated test scores for the entire sample. The average effect sizes associated with the use of teacher-recommended accommodations were small for students with disabilities (.25) and negative (-.05) for students without disabilities. Average effect sizes associated with the use of read-aloud plus teacher-recommended accommodations were small and nearly identical for the two groups, .22 for students with disabilities and .24 for those without disabilities. Additional post hoc analyses revealed considerable individual variability. Elliott noted, for example, that half of all students with disabilities and 38 percent of students without disabilities had at least a small effect associated with use of the accommodations. No statistically significant interaction effects were found between group and test condition. Elliott pointed out that this study adds evidence to support the popular view that oral reading of a reading test has an invalidating effect on the test scores.
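The per-student effect size just described can be sketched as below. The record layout and group labels are hypothetical, and the use of the sample (rather than population) standard deviation is an assumption, since the chapter does not specify which.

```python
from statistics import mean, stdev


def individual_effect_sizes(records):
    """Per-student effect size: (accommodated - nonaccommodated) / SD.

    The SD is that of the nonaccommodated scores for the entire sample
    (sample SD assumed). records: list of dicts with keys "group",
    "accommodated", and "nonaccommodated".
    """
    sd = stdev([r["nonaccommodated"] for r in records])
    return [(r["group"], (r["accommodated"] - r["nonaccommodated"]) / sd)
            for r in records]


def average_by_group(effects):
    """Average effect size for each group label."""
    by_group = {}
    for group, es in effects:
        by_group.setdefault(group, []).append(es)
    return {group: mean(values) for group, values in by_group.items()}
```

Because every student's difference is divided by the same whole-sample SD, the individual effects can be averaged within groups or counted by size category to describe individual variability.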

Discussion and Synthesis of Research Findings

In summarizing the key conclusions from the four studies, Elliott noted that they all showed that accommodations affect the test scores of a majority of students with disabilities and some students without disabilities, although there was considerable individual variability. He cautioned that the comparability of test scores is questionable when some students who would benefit from accommodations are allowed the accommodations and others are not.

Elliott concluded by highlighting some critical issues for researchers, policy makers, and test publishers. He urged test publishers to be clearer about the intended skills assessed by a test. He distinguished between the “target skills” that a test is intended to measure and the “access skills” that are needed to demonstrate performance on the target skills. For instance, the target skill measured by a test may be reading comprehension, while vision is an access skill needed to read the test. Thus, a visually handicapped student might need a large-print or Braille version of a test to demonstrate his or her proficiency level with respect to the target skill. Elliott believes that educators’ tasks of deciding upon appropriate accommodations could be made easier if test publishers were more explicit about the target skills being tested. He also maintained that educators need more professional development about assessment and testing accommodations.

In addition, Elliott called for more experimental research using diverse samples of students and various types of items in mathematics and language arts, although he acknowledged that conducting such research is challenging. He urged states to maintain better records of the number of students accommodated and the specific accommodations used; this will make it easier to conduct research and to evaluate trends over time.

STUDIES AT THE UNIVERSITY OF OREGON

Gerald Tindal, professor at the University of Oregon, made a presentation on various studies he has conducted. He began by summarizing the results of several surveys of teachers’ knowledge about accommodations and examinees’ perceptions about the benefits of accommodations. One survey queried teachers about permissible accommodations in their states. The results showed that the responding teachers correctly identified allowable accommodations about half the time, and special education teachers responded correctly about as often as general education teachers. Another survey examined the extent to which teachers identified appropriate accommodations for students. Results indicated that teachers tended to recommend accommodations that did not in fact assist the test taker. Tindal’s surveys also showed that examinees did not accurately identify when an accommodation helped and when it did not. Test takers almost always believed they benefited from an accommodation, but the test results did not always support this notion. Based on these survey results, Tindal concludes that (1) teachers overprescribe test alterations; (2) teachers’ knowledge of appropriate accommodations may be suspect; and (3) students overrate their ability to benefit from test alterations.

Effects of Oral Administration on Mathematics Test Scores

Tindal described several experimental studies he and his colleagues have conducted. One study investigated the effects of certain presentation and response accommodations on performance on a mathematics test (Tindal, Heath, Hollenbeck, Almond, and Harniss, 1998). Under the presentation accommodation, teachers read a mathematics test aloud (rather than students reading items to themselves); under the response accommodation, students marked answers in their test booklets (rather than on an answer sheet). Study participants consisted of 481 fourth graders—403 general education students and 78 special education students. All participated in both response conditions (marking in the test booklet and filling in the bubbles on the answer sheet), with the order of participation in the conditions counterbalanced (see footnote 3). The test takers were randomly assigned to one of the presentation conditions (standard versus read-aloud). In the read-aloud presentation condition, the teacher read the entire test aloud, including instructions, problems, and item choices.

Findings indicated that general education students scored significantly higher than special education students under all conditions. General education students who received the read-aloud accommodation scored slightly higher than those who read the test themselves, although the differences were not statistically significant. However, scores for special education students who received the read-aloud accommodation were statistically significantly higher than the scores for those who did not receive this accommodation.

The researchers concluded that these findings confirmed the presence of a significant interaction effect and suggested that the read-aloud accommodation is valid for mathematics items. They noted one caveat, however: For this study the read-aloud accommodation was group administered, which Tindal believes may have introduced cuing problems. That is, most students in a class know which students perform best on tests, and, because the tests consisted of multiple-choice items, they need only watch to see when these students mark their answers.

Tindal conducted a follow-up study in which the read-aloud accommodation was provided via video and handled in small-group sessions to overcome the cuing problems. The video was used with 2,000 students in 10 states. Findings indicated statistically significant differences between the means for special education and general education students and between the means for those who received the standard presentation and those who received the video presentation. There was also a statistically significant interaction of status by format: special education students who participated in the video presentation scored three points higher, on average, than those who participated in the standard administration, while no differences were evident between the means for general education students participating in the video presentation and those receiving the standard presentation.

Effects of Language Simplification on Mathematics Test Scores

In another study, Tindal and his colleagues examined the effects of providing a simplified-language version of a mathematics test to students with learning disabilities (Tindal, Anderson, Helwig, Miller, and Glasgow, 1999). Study participants were 48 seventh graders—two groups of general education students enrolled in lower mathematics classes (16 per group) and a third group of 16 students with learning disabilities who had IEPs in reading. Two test forms were developed, one consisting of items in their original form and one with the simplified items. The simplification process involved replacing indirect sentences with direct sentences; reducing the number of words in the problem; replacing passive voice with active voice; removing noun phrases and conditional clauses; replacing complex vocabulary with simpler, shorter words; and removing extraneous information.

Analyses revealed that the simplification had almost no effect on test scores; that is, students who were low readers but did not have an identified disability and those with disabilities performed equally well in either condition. Furthermore, the researchers found that 10 of the items were more difficult in their simplified form than in their original form. Tindal pointed out that the study had several limitations. Specifically, the sample size was small and the subjects were poor readers and students with disabilities, not English-language learners. He believes that the results are not conclusive and that the use of language simplification as an accommodation for students with learning disabilities needs further study.

Comparisons of Scores on Handwritten and Word-Processed Essays

Tindal and his colleagues also studied the accommodation of allowing students to use word processors to respond to essay questions instead of handwriting responses. One study (Hollenbeck, Tindal, Stieber, and Harniss, 1999) involved 80 middle school students who, as part of Oregon’s statewide assessment, were given three days to compose a handwritten response to a writing assignment. Each handwritten response was transcribed into a word-processed essay, and no changes were made to correct for errors. The handwritten and word-processed versions of each essay were scored during separate scoring sessions, with each response scored on six traits.

Analyses showed that for five of the six traits, the mean scores for handwritten compositions were higher than the means for the word-processed compositions, suggesting that scorers rated essays differently in the two response modes. For three of the traits (Ideas and Content, Organization, and Conventions), the differences between means were statistically significant. Tindal cautioned, however, that because the study participants were predominantly general education students, the findings may not generalize to students with disabilities.

Tindal and his colleagues have also conducted factor analyses4 to study the factor structure of word-processed and handwritten response formats (Helwig, Stieber, Tindal, Hollenbeck, Heath, and Almond, 1999). For this study, 117 eighth graders (10 of whom were special education students) handwrote compositions for the Oregon statewide assessment in February, which were transcribed to word-processed essays prior to scoring. In May the same students responded to a second writing assessment, this time preparing their responses via computer. Both sets of essays were scored on the six traits. The researchers conducted factor analyses on the sets of scores for each response format.

The factor analyses showed that when only handwritten or only word-processed papers were analyzed, a single factor was identified (all traits loaded on a single factor). When data from the two writing methods were combined, two factors emerged. One factor included the trait scores based on the word-processed response, while the other included all the trait scores based on the handwritten response. Correlations between the trait scores for the different response formats were weak, even for scores on common traits. The researchers concluded from these findings that handwritten and word-processed compositions demonstrate different skills and are judged differently by scorers.

Automated Delivery of Accommodations

Tindal closed his presentation by demonstrating his work in developing systems for computerized delivery of accommodations. His automated system presents items and answer options visually and provides options for the materials to be read aloud and/or presented in Spanish, American Sign Language, or simplified English. Tindal’s goal is to package the automated system so that the student or teacher can select the appropriate set of accommodations and alter them by type of problem. Tindal encouraged participants to access his webpage (http://brt.uoregon.edu) to learn more about his research projects.

RESEARCH ON KENTUCKY’S ASSESSMENT

Laura Hamilton, behavioral scientist with the RAND Corporation, discussed research she and Daniel Koretz have conducted on the Kentucky Instructional Results Information System (KIRIS) statewide assessment. Hamilton presented findings from a study with the state's 1997 assessment (Koretz and Hamilton, 1999), which was a follow-up to an earlier study on the 1995 assessment (Koretz, 1997; Koretz and Hamilton, 1999). The 1995 assessment consisted of tests in mathematics, reading, science, and social studies given to fourth, eighth, and eleventh graders. The 1997 assessment covered the same subject areas but shifted some of the tests to fifth and seventh graders. Results from the earlier study indicated that Kentucky had been successful in including most students with disabilities, but several issues were identified for further investigation. In particular, the 1995 study revealed questionably high rates of providing accommodations, implausibly high mean scores for groups of students with disabilities, and some indication of differential item functioning in mathematics. The study of the 1997 assessment was designed to examine the stability of the earlier findings, to extend some of the analyses, and to compare results for two types of items. The 1995 assessment consisted only of open-response items (five common items administered to all students). The 1997 assessment included multiple-choice items (16 common items) as well as open-response items (4 common items).

Inclusion and Accommodation Rates

Hamilton presented data on the percentages of students who were given certain accommodations in 1997. The majority of students with disabilities received some type of accommodation; for example, 81 percent of the fourth graders with disabilities received at least one accommodation (14 percent received one accommodation; 67 percent received more than one). The most frequent accommodations were oral presentation, paraphrasing, and dictation. Use of accommodations declined as grade level went up. By grade eight, the percentage of students with disabilities who received accommodations had declined to 69 percent and by grade eleven, to 63 percent.

Comparisons of Scores for Students with Disabilities and the General Population

Hamilton summarized the overall test results for students with disabilities. Mean scores were standardized relative to the population of non-disabled students, a process that expresses the means for students with disabilities in standard deviation units above or below the mean for students without disabilities.
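The standardization step amounts to a short calculation. The sketch below is illustrative only, not the researchers' procedure: it assumes the reference statistics are the mean and sample standard deviation of the non-disabled group, and both score lists are hypothetical.

```python
from statistics import mean, stdev


def standardized_gap(group_scores, reference_scores):
    """Express a group's mean in SD units of the reference (non-disabled) group.

    Negative values mean the group scored below the reference mean.
    Using the sample SD of the reference group is an assumption of this sketch.
    """
    ref_mean = mean(reference_scores)
    ref_sd = stdev(reference_scores)
    return (mean(group_scores) - ref_mean) / ref_sd


# Hypothetical scale scores, for illustration only
non_disabled = [40, 50, 60]        # mean 50, sample SD 10
with_disabilities = [30, 36, 42]   # mean 36
print(round(standardized_gap(with_disabilities, non_disabled), 2))  # -1.4
```

A result of -1.4 would be reported in the text's terms as a gap of 1.4 of a standard deviation; the hypothetical numbers were chosen only to match the size of the largest gap mentioned below.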

The results indicated that, overall, students with disabilities scored lower than students without disabilities in all subject areas, at every grade level, and for both item formats (multiple-choice and open-response). The gap between the groups ranged from .4 of a standard deviation for fourth graders on the open-response science items to 1.4 of a standard deviation for eleventh graders in both item formats for reading. The gap tended to increase as grade level increased. The results for 1997 were comparable to those for 1995 for middle school and high school grades but not for elementary school students. In 1995, fourth graders with disabilities performed nearly as well as those without disabilities, with differences ranging from .1 of a standard deviation lower in science to .4 of a standard deviation lower in mathematics. In 1997, means for fourth graders with disabilities ranged from .4 of a standard deviation lower than those without disabilities on the open-response science item to .7 of a standard deviation lower on the science multiple-choice items.

Comparisons of Scores for Accommodated and Nonaccommodated Students

The researchers also compared group performance for students with disabilities who received accommodations and those who did not. This comparison showed mixed results—in some cases, accommodated students performed less well than students who did not receive accommodations; in a few cases, this pattern was reversed. Hamilton highlighted two noteworthy findings.

The first finding of note involved comparisons of performance patterns from 1995 to 1997 for accommodated and nonaccommodated elementary students. In 1997, elementary students with disabilities who did not receive accommodations scored .6 to .8 of a standard deviation below their counterparts without disabilities on the open-response portions of the assessment, depending on the subject area. In 1995, the corresponding differences for elementary students with disabilities who did not receive accommodations were similar in size to those for 1997, that is, .6 to .7 of a standard deviation below students without disabilities. In contrast, the means of elementary students with disabilities who received accommodations ranged from .4 to .7 of a standard deviation below their counterparts without disabilities across the various subject areas in 1997. However, in 1995, the means for elementary students with disabilities who received accommodations ranged from .1 of a standard deviation above to .3 of a standard deviation below the mean for elementary students without disabilities, depending on the subject area.

The second finding of note related to differences in the way students with disabilities who received accommodations and those who did not performed on certain item types. Preliminary analyses revealed that elementary students with disabilities tended to score lower on multiple-choice items than on open-response items in all subjects except reading, and the lower scores appeared to be attributable to students with disabilities who received accommodations. On the other hand, eleventh graders with disabilities tended to score lower on the open-response questions, and these lower scores appeared to be largely attributable to eleventh graders with disabilities who did not receive accommodations.

Comparisons of the Effects of Types of Accommodations

The authors further investigated the effects of accommodations by examining performance for students grouped by type of accommodation and type of disability. Hamilton and Koretz judged some of the means for certain categories of accommodations to be implausible, not only because they were well above the mean for non-disabled students, but also because they differed so much from the means of other students who were given different sets of accommodations. Their analyses revealed that the higher levels of performance were associated with the use of dictation, oral presentation, and paraphrasing accommodations by students with learning disabilities. In 1995, fourth graders with learning disabilities who received dictation in combination with oral presentation and/or paraphrasing outperformed students without disabilities on the reading, science, and social studies open-response items. In contrast, in 1997 fourth graders with learning disabilities who used these specific accommodations attained means on open-response items near or below the means for fourth graders without disabilities.

Hamilton and Koretz further studied the effects of certain accommodations by developing several multiple regression5 models in which the test score was the outcome variable and the various accommodations were used as predictors.6 Regression models were run separately for the 1995 and 1997 data, and the regression coefficients were compared. Findings suggested some differences in the effects of the accommodations across years. The difference of most interest to the researchers was the regression coefficient associated with the use of dictation, which was .7 in 1995 and dropped to .4 in 1997. This finding indicated that use of dictation would be expected to raise a student's test score by about .7 of a standard deviation unit in 1995 but by only .4 of a standard deviation unit in 1997. The researchers believe that this difference raises questions about how the dictation accommodation was implemented in 1995.
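The interpretation of the dictation coefficient can be made concrete with a small least squares fit in which each accommodation enters as a 0/1 indicator and the outcome is a standardized score. This is a sketch on synthetic data built so the true coefficients are known; the two-indicator specification and the coefficient values are illustrative assumptions, not the researchers' actual model.

```python
import numpy as np


def fit_dummy_regression(scores, indicators):
    """Ordinary least squares with an intercept and 0/1 accommodation indicators.

    Returns [intercept, b_1, ..., b_k]. Each b_j is the expected change in the
    standardized score when accommodation j is used, holding the others fixed.
    """
    X = np.column_stack([np.ones(len(scores)), indicators])
    coef, *_ = np.linalg.lstsq(X, scores, rcond=None)
    return coef


# Synthetic data; columns are hypothetical indicators (dictation, oral presentation).
indicators = np.array([[0, 0], [1, 0], [0, 1], [1, 1]] * 5, dtype=float)
# Scores generated exactly as -0.8 + 0.7*dictation + 0.2*oral (no noise).
scores = -0.8 + indicators @ np.array([0.7, 0.2])

intercept, b_dictation, b_oral = fit_dummy_regression(scores, indicators)
print(round(b_dictation, 2), round(b_oral, 2))  # recovers roughly 0.7 and 0.2
```

Under this coding, a coefficient of .7 means the model predicts a score .7 of a standard deviation higher for a student who used dictation than for an otherwise identical student who did not. Comparing years, as described above, corresponds to fitting the model once on each year's data and comparing the resulting coefficients.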

Results of Item-Level Analyses

The researchers also conducted a number of item-level analyses to discern differences in the ways students with disabilities and students without disabilities responded to the items. They found that students with disabilities, whether accommodated or not, were more likely to skip items or to receive scores of zero, especially in mathematics. Also, even though students with disabilities were allowed extra time, there appeared to be a time effect—items toward the end of the test were left unanswered more often. As part of the item-level analyses, the researchers also looked at item discrimination indices.7 They compared item discrimination indices for students without disabilities, students with disabilities who took the test without accommodations, and students with disabilities who received accommodations. They found that discrimination indices were similar for all three groups on the open-response items but differed on the multiple-choice items. In particular, the more difficult multiple-choice items tended to be less discriminating for students with disabilities than for students without disabilities. Hamilton and Koretz judged these results to be worthy of further investigation.

The researchers also conducted analyses to examine differential item functioning (see footnote 2). These analyses involved two comparisons: one between students with disabilities who did not receive accommodations and students without disabilities; the other between students with disabilities who received accommodations and students without disabilities. The analyses revealed some evidence of DIF on the mathematics tests for students with disabilities who received accommodations (but not for those who were not accommodated). DIF tended to be larger on the multiple-choice items than on the open-response items. One possible explanation for this finding was that the DIF was related to the "verbal load" of the mathematics items. That is, mathematics items that required substantial reading and writing tended to be more positively affected by accommodations than those that had lighter reading or writing loads. Hamilton believes that this was probably related to the nature of the accommodations that involved paraphrasing, dictation, and other ways of reducing the linguistic demands of the items.

Discussion and Synthesis of Research Findings

Hamilton concluded by pointing out some of the limitations of the two studies. Although the two studies examined the implications of implementing accommodation policies on large-scale assessments, neither used an experimental design. Students did not serve as their own controls and were not randomly assigned to conditions as in Tindal's and Elliott's work. Hamilton called for more such work, noting that she and her colleagues have tried to launch experimental studies but have met with resistance, mostly due to a reluctance to withhold accommodations, even in a field test.

Another limitation was that there was no criterion against which to compare the performance of the different groups. This made it impossible in most cases to judge whether scores of students who used certain accommodations were more valid than those who used other accommodations or no accommodations. The researchers did not have data on why accommodations were provided to some students in some combinations and not to others; nor were they able to observe how the accommodations were implemented in practice. The data available to them did not indicate whether an accommodation was used on both the multiple-choice and open-response tests or if there were differences in the ways accommodations were implemented for the two formats.

Hamilton noted that the sample sizes for the less common disability categories (e.g., hearing or visual disabilities) were too small to support in-depth analyses. She encouraged states to incorporate and maintain better data systems to enable more refined research and more targeted studies that would address the low-prevalence disabilities.

Hamilton also called for more interviews with teachers and test administrators, like those conducted by Tindal; such studies would provide a better understanding of how educators decide which accommodations to use. Her analyses raised some interesting questions about verbal load and other kinds of reasoning processes. She speculated that cognitive analyses of test items with both non-disabled students and students with disabilities would aid in understanding the response processes that the test items elicit and the skills actually being measured.

RESEARCH ON ENGLISH-LANGUAGE LEARNERS

Jamal Abedi, faculty member at the UCLA Graduate School of Education and director of technical projects at UCLA's National Center for Research on Evaluation, Standards, and Student Testing (CRESST), summarized research findings on the effects of accommodations on the test performance of English-language learners. Abedi described a series of studies he has conducted, noting that many of them were sponsored by the National Center for Education Statistics and that the reports are available at the CRESST website (http://cresst96.cse.ucla.edu).

In Abedi’s opinion, there are four criteria to consider in connection with providing accommodations to English-language learners. He terms the first criterion effectiveness. That is, does the accommodation strategy reduce the performance gap between English-language learners and English-proficient students? The second criterion relates to validity. Here, questions focus on the extent to which the accommodation alters the construct being measured. Abedi noted that in studies with English-language learners, it is common to use the interaction effect Elliott described to judge the validity and comparability of scores obtained under accommodated and nonaccommodated conditions. A third criterion is differential impact. In this case, the focus is on whether the effectiveness of the accommodation varies according to students’ background characteristics. A final criterion is feasibility. That is, is the accommodation feasible from logistic and cost perspectives? Abedi discussed research findings within the context of these four criteria.
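The effectiveness and validity criteria can both be read from a two-by-two table of mean scores (group by test condition). The sketch below uses hypothetical cell means and group labels. It computes the score gap under each condition and the interaction contrast, that is, how much more the accommodation raises English-language learners' mean than native speakers' mean.

```python
def accommodation_summary(means):
    """Summarize a 2x2 table of mean scores.

    means: dict keyed by (group, condition) -> mean score, where group is
    "ELL" or "native" and condition is "standard" or "accommodated"
    (hypothetical labels for this sketch).
    """
    gain_ell = means[("ELL", "accommodated")] - means[("ELL", "standard")]
    gain_native = means[("native", "accommodated")] - means[("native", "standard")]
    return {
        # Effectiveness: does the gap between native speakers and ELLs shrink?
        "gap_standard": means[("native", "standard")] - means[("ELL", "standard")],
        "gap_accommodated": means[("native", "accommodated")] - means[("ELL", "accommodated")],
        # Interaction contrast: difference between the two groups' gains.
        "interaction": gain_ell - gain_native,
    }


hypothetical = {
    ("ELL", "standard"): 10, ("ELL", "accommodated"): 14,
    ("native", "standard"): 20, ("native", "accommodated"): 21,
}
print(accommodation_summary(hypothetical))
# {'gap_standard': 10, 'gap_accommodated': 7, 'interaction': 3}
```

Algebraically, the interaction contrast always equals the reduction in the gap, which is why one table can address both criteria. The contrast alone does not show whether native speakers also gained. That question is separate, and it is the source of the validity concerns raised about accommodations that raised scores for both groups.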

Studies on Linguistic Modification

Abedi first described several studies that examined the effects of using linguistic modification as an accommodation. In one study, 946 eighth graders (53 percent English-language learners and 47 percent native or "fluent" English speakers) responded to released NAEP multiple-choice and constructed-response mathematics items under accommodated and standard conditions (Abedi, Lord, Hofstetter, and Baker, 2000). Four types of accommodations were used: a linguistically simplified English version of the test, provision of a glossary, extended time (students were given an extra 25 minutes), and glossary plus extended time. One test booklet was developed for each condition, and a comparison sample of students took the test items in their original form with no accommodations. Tests were administered to intact mathematics classes, with students randomly assigned to accommodation groups. All participants responded to a background questionnaire and took a NAEP reading test. The highest group mean scores were observed under the glossary plus extended time accommodation. However, this condition resulted in higher means for native English speakers as well as English-language learners, leading to questions about the validity of scores obtained under this accommodation. Performance was lowest for English-language learners when they received a glossary but the time limit was not extended, a finding that the authors speculated may have resulted from information overload. The only accommodation that appeared to be effective (i.e., narrowed the score gap between native English speakers and English-language learners) was linguistic modification.

This study also included an in-depth examination of the relationships between test performance and background variables (e.g., country of origin, language of instruction, length of television viewing, attitudes toward mathematics). Multiple regression was used to examine the effects on mathematics performance of background variables, types of accommodation, and a series of interaction effects of background variables and accommodations (e.g., language of instruction by type of accommodation, television viewing by type of accommodation). Multiple regression models were run and results were compared for models that included the interaction effects and models that did not. The analyses revealed that including the interaction effects (background by type of accommodation) resulted in statistically significant increases in the amount of variance in mathematics performance that was explained by the model. The authors highlighted this finding as evidence of differential impact—the effects associated with different forms of accommodations may vary as a function of students’ background characteristics.
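The comparison of models with and without interaction terms can be sketched as two nested least squares fits and the resulting change in R². The example below uses one hypothetical binary background variable and one binary accommodation indicator, with synthetic scores built to contain an interaction. In practice the increase in R² would be tested for statistical significance (for example, with an F test for the change in R²); this sketch omits that step.

```python
import numpy as np


def r_squared(X, y):
    """R^2 of an OLS fit of y on the columns of X (an intercept column is added)."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    residuals = y - X1 @ coef
    return 1 - (residuals @ residuals) / np.sum((y - y.mean()) ** 2)


# Synthetic balanced design: hypothetical background (1 = instructed in English)
# and accommodation (1 = accommodated), each combination repeated 10 times.
background = np.tile([0, 0, 1, 1], 10).astype(float)
accommodation = np.tile([0, 1, 0, 1], 10).astype(float)
# Scores built so the accommodation helps more when background == 1.
score = 1.0 + 0.2 * accommodation + 0.3 * background + 0.8 * accommodation * background
# Small within-cell perturbation (zero mean in every cell) so no model fits perfectly.
score += np.tile([0.1, -0.1, -0.1, 0.1, -0.1, 0.1, 0.1, -0.1], 5)

main_effects = np.column_stack([background, accommodation])
with_interaction = np.column_stack([background, accommodation, background * accommodation])
r2_reduced = r_squared(main_effects, score)
r2_full = r_squared(with_interaction, score)
print(round(r2_reduced, 3), round(r2_full, 3))  # roughly 0.81 vs. 0.96
```

The gain in explained variance from adding the product term is the kind of evidence the authors treated as differential impact: the effect of the accommodation depends on the background variable.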

Abedi described another study that examined the performance of 1,174 eighth graders on linguistically simplified versions of mathematics word problems, including some released NAEP items (Abedi and Lord, 2001). In this study, 372 English-language learners and 802 native English speakers responded to 20 mathematics word problems; 10 problems were linguistically simplified and 10 were in their original form. Overall, native English speakers scored higher than English-language learners. English-language learners benefited more than native English speakers when given linguistically simplified items; however, both groups of students performed better with this accommodation, leading to some concerns about the validity of the results for students who receive linguistically simplified items.

The analyses also showed some evidence of differential impact in that students from low socioeconomic backgrounds benefited more from linguistic simplification than others, and students in low-level and average mathematics classes benefited more than those in high-level mathematics and algebra classes. This finding was true for both English-language learners and native English speakers. Among the linguistic features that appeared to cause problems for students were low-frequency vocabulary and passive-voice verb constructions.

Abedi described another study (Rivera and Stansfield, 2001) that examined the effects of modifying the complexity of science items. The authors compared fourth and sixth graders’ performance on the original and modified items. Scores for proficient English speakers did not increase under the linguistic simplification condition, a finding that the authors interpreted as suggesting that linguistic simplification is not a threat to validity.

Translated Tests

Abedi discussed issues associated with providing translated versions of tests. He explained that when a translated instrument is developed, the intent is to produce an assessment in a student’s native language that is the same in terms of content, questions, difficulty, and constructs as the English version of the test. Abedi finds that creating a translated version of an assessment that is comparable to an English version is difficult. There is a high risk of the two versions differing in content coverage and the constructs assessed, which raises validity concerns. Even with efforts to devise ways to equate tests (Sireci, 1997) and the development of international guidelines for test translation and adaptation (Hambleton, 1994), translated assessments are technically difficult, time-consuming, and expensive to develop (National Research Council, 1999). Additionally, some languages, such as Spanish and Chinese, have multiple dialects, which limits the appropriateness of the translated version for some student populations (Olson and Goldstein, 1997).

Abedi discussed findings from a study that compared performance on NAEP word problems in mathematics under linguistic modification and Spanish translation conditions (Abedi, Lord, and Hofstetter, 1998). Participants included 1,394 eighth graders from schools with high enrollments of Spanish speakers. Three test booklets were developed. One consisted of items in their original English form, and a second consisted of items translated into Spanish. The third booklet contained linguistically modified English items for which only linguistic structures and nontechnical vocabulary were modified. Participants also took a reading test.

Preliminary analyses showed that, overall, students scored highest on the modified English version, lower on the original English version, and lowest on the Spanish version. Examination of performance by language status revealed that native English speakers scored higher than English-language learners. In addition, modification of the language of the items contributed to improved performance on 49 percent of the items, with students generally scoring higher on items with shorter problem statements.

The authors conducted a two-factor analysis of variance, finding significant differences in mathematics performance by language status and booklet type as well as a significant interaction of status by booklet type. These results persisted even after controlling for reading proficiency. Further investigation into these findings suggested that students tended to perform best on mathematics tests that were in the same language as their mathematics instruction. That is, the Hispanic English-language learners who received their mathematics instruction in English or sheltered English scored higher on the English versions of the items (standard or linguistically modified) than their Spanish-speaking peers. In contrast, students who received their mathematics instruction in Spanish scored higher on the Spanish-language version of the items than on the modified or standard English forms of the items.

The authors also ran a series of multiple regression analyses to examine the effects of students' background variables on mathematics and reading scores. The results indicated that certain background variables, such as length of time in the United States, overall middle school grades, and number of times the student changed schools, were predictive of performance in mathematics (R² = .35) and reading (R² = .27).

Studies on Oral Administration

Abedi briefly discussed oral administration as an accommodation for English-language learners. He cited a study by Kopriva and Lowrey (1994) that surveyed students about their preferences regarding orally administered tests. Results indicated three conditions under which students preferred oral administration in their native language: if they were new to the United States; if they were not literate in their home language; and if they had little oral or literacy proficiency in English. Students tended to prefer oral administration in English if they had been instructed in English for a long period of time and had attained a level of conversational oral proficiency in English but were not yet literate enough to read the test on their own.

Studies on the Provision of English Dictionaries

Abedi summarized findings from several studies on providing commercially published English dictionaries, noting that the findings were somewhat mixed. In one study, English dictionaries were provided to urban middle school students in Minnesota as part of a reading test (Thurlow, 2001b). The results indicated that participants who rated their English proficiency at the intermediate level appeared to benefit from this accommodation, but those who rated themselves as poor readers did not. Results from another study (Abedi, Courtney, Mirocha, Leon, and Goldberg, 2001) with fourth and eighth graders indicated that use of a published dictionary was not effective and was administratively difficult. Abedi observed that published dictionaries differ widely, and different versions can produce different results. Some have entries in “plain language” that are more understandable for English-language learners or for poor readers. He cautioned that dictionaries raise a validity concern because the definitions may provide information that the test is measuring.

Abedi introduced the idea of a customized dictionary as an alternative to a published dictionary. As the name implies, a customized dictionary is tailored to the purposes of a particular test. Only words that appear in the test items are included, and definitions are written so as not to “give away” answers to test questions. Abedi described one study on the use of customized dictionaries (Abedi, Lord, Kim, and Miyoshi, 2000). This study of 422 eighth grade students compared performance on NAEP science items in three test formats: one booklet in original format, one booklet with an English glossary and Spanish translations in the margins, and one booklet with a customized English dictionary. English-language learners scored highest when they used the customized dictionary, and there was no impact on the performance of native English speakers. Abedi interpreted the findings as suggesting that the use of the customized dictionary was effective and did not alter the construct being measured.

Recommendations for Further Study

Abedi concluded his presentation by recommending several issues for further study. He believes that a common definition and valid classification criteria are needed to identify and classify English-language learners more effectively and to interpret reports of their test results.

Like Elliott, Abedi urges test designers to identify the specific language demands of their assessments so that teachers can ensure that students have the language resources to demonstrate their content-area knowledge and skills. In addition, he called for test designers to modify test questions to reduce unnecessary linguistic complexity. Because reducing the linguistic complexity of test questions helps to narrow the performance gap between English-language learners and native English speakers, he believes this should be a priority in the development of all large-scale assessment programs.

Abedi finds that the research demonstrates that student background variables, including language background, are strong predictors of performance. He encourages states and districts to collect and maintain records on background information, including length of time in the United States, type and amount of language spoken in the home, proficiency level in English and in the student’s native language, and number of years taught in both languages.

He also believes that feasibility is an important consideration. Because of the large number of English-language learners who are (or should be) assessed, providing some forms of accommodations might create logistical problems. For example, providing dictionaries or glossaries to all English-language learners, administering assessments one-on-one, or simplifying test items may exceed the capability of a school district or state. Abedi considers it imperative to perform cost-benefit analyses and to track and evaluate accommodation costs.

Finally, Abedi recommended that the effects of accommodations on the construct being measured be monitored and evaluated closely. Ideally, accommodations will reduce the language barrier for English-language learners but have no effect on native English speakers’ performance. Abedi stressed that additional research is needed to examine the effectiveness, validity, and feasibility of the accommodations for different student groups.

SUMMARY OF RESEARCH FINDINGS

To help the reader assimilate the information presented in this chapter, Tables 6–1 through 6–3 highlight the key features and findings from the studies discussed in detail by the third panel of workshop speakers. Tables 6–1 and 6–2 highlight findings for students with disabilities and English-language learners, respectively. Table 6–3 summarizes findings from research on NAEP, which focused on both groups.

TABLE 6–1 Key Features and Findings from Studies on the Effects of Accommodations on Test Performance for Students with Disabilities

| Accommodations Studied | Study | Grade Level | Subject Area | Major Findings |
|---|---|---|---|---|
| Extra time | Marquat, 2000 | 8th | Math | Differences in performance under accommodated and nonaccommodated conditions were not statistically significant. |
| Oral administration | McKevitt & Elliott, 2001 | 8th | Reading | Both students with disabilities and general education students benefited from the accommodation. |
| | Tindal, Heath, Hollenbeck, Almond, & Harniss, 1998 | 4th | Math | Students with disabilities showed statistically significant improvement under the accommodated condition; general education students did not. |
| Language simplification | Tindal, Anderson, Helwig, Miller, & Glasgow, 1999 | 7th | Math | No effects on scores for students with a reading disability or for general education students. |
| Typewritten responses to essay questions | Hollenbeck, Tindal, Stieber, & Harniss, 1999 | Middle school | Writing | Handwritten responses received higher scores than word-processed transcriptions of the same essays. |
| | Helwig, Stieber, Tindal, Hollenbeck, Heath, & Almond, 1999 | 8th | Writing | Two factors emerged, one for each response mode (handwritten vs. word-processed). |
| Multiple types, as specified on students' IEPs | Elliott, 2001 | 4th | Math and science | Students with disabilities and general education students both benefited from the accommodations. |
| | Schulte, Elliott, & Kratochwill, 2000 | 4th | Math | Students with disabilities and general education students both benefited from the accommodations. |
| | Koretz, 1997; Koretz & Hamilton, 1999 | 4th, 8th, 11th; 5th, 7th, 11th | Math, reading, science, and social studies | Students with disabilities scored lower than general education students in all areas and at all grade levels. The gap between students with disabilities and general education students increased as grade level went up. Mixed results when comparing means for students with disabilities who received accommodations and those who did not. Strongest effects observed for dictation, oral presentation, and paraphrasing. Some evidence of DIF in math for students with disabilities who received accommodations, larger for multiple-choice items than for open-response items. |

TABLE 6–2 Key Features and Findings from Studies on the Effects of Accommodations for English-Language Learners (ELL)

| Accommodations Studied | Study | Grade Level | Subject Area | Major Findings |
|---|---|---|---|---|
| Linguistic simplification | Abedi & Lord, 2001 | 8th | Math | Both ELLs and native English speakers benefited from the accommodation. Low-SES students and low-level math students benefited more than those in high-level math and algebra. |
| Linguistic simplification and Spanish translation | Abedi, Lord, & Hofstetter, 1998 | 8th | Math | Performance varied according to the language of math instruction; students who received math instruction in English scored best on the modified English version. |
| Linguistic simplification, glossary, and extra time | Abedi, Lord, Hofstetter, & Baker, 2000 | 8th | Math | Both ELLs and native English speakers benefited from the glossary and extra time. Effects of accommodations varied as a function of background characteristics. |
| Linguistic simplification and customized dictionaries | Abedi, Lord, Kim, & Miyoshi, 2000 | 8th | Science | ELLs improved with the accommodation; there was no impact on native English speakers. |

TABLE 6–3 Key Features and Findings from Studies on the Effects of Accommodations on NAEP

| Accommodations Studied | Study | Grade Level | Type of Test | Major Findings |
|---|---|---|---|---|
| Extended time; individual or small-group administration; large-print, transcription, oral reading, or signing of directions; bilingual dictionaries in math; bilingual Spanish booklet in math (2000) | Mazzeo, Carlson, Voelkl, & Lutkus, 2000 | 4th and 8th | NAEP 1996, national assessment in math and science | Some evidence that the IRT model fit the data better when accommodations were provided (S3)ᵃ than when they were not provided (S2). Some evidence of DIF for accommodated 4th and 8th graders in science; little evidence of DIF in math. Slight evidence that changes in administrative conditions had an impact on scale scores. |
| | Mazzeo, 1998 | 4th and 8th | NAEP 1998, national and state assessment in reading | Small differences in scale scores between groups when accommodations were provided (S3) and when they were not provided (S2). Higher inclusion rates were associated with lower mean scores for 4th-grade state NAEP samples. |
| | Mazzeo, 2000 | 4th and 8th | NAEP 2000, national and state assessment in math | Comparisons of states’ rankings based on S2 and S3 results showed few differences. Higher inclusion rates were associated with lower mean scores for 8th-grade state NAEP samples. |

ᵃStudents with special needs were included in the S2 sample but were not provided accommodations; in the S3 sample, students with special needs were included and provided with accommodations.
