2

Rise of Earthquake Science

Earthquakes have engaged human inquiry since ancient times, but the scientific study of earthquakes is a fairly recent endeavor. Instrumental recordings of earthquakes were not made until the last quarter of the nineteenth century, and the primary mechanism for the generation of earthquake waves—the release of accumulated strain by sudden slippage on a fault—was not widely recognized until the beginning of the twentieth century. The rise of earthquake science during the last hundred years illustrates how the field has progressed through a deep interplay among the disciplines of geology, physics, and engineering (1). This chapter presents a historical narrative of the development of the basic concepts of earthquake science that sets the stage for later parts of the report, and it concludes with some historical lessons applicable to future research.

2.1 EARLY SPECULATIONS

Ancient societies often developed religious and animistic explanations of earthquakes. Hellenic mythology attributed the phenomenon to Poseidon, the god of the sea, perhaps because of the association of seismic shaking with tsunamis, which are common in the northeastern Mediterranean (Figure 2.1). Elsewhere, earthquakes were connected with the movements of animals: a spider or catfish (Japan), a mole or elephant (India), an ox (Turkey), a hog (Mongolia), and a tortoise (Native America). The Norse attributed earthquakes to subterranean writhing of the imprisoned god Loki in his vain attempt to avoid venom dripping from a serpent’s tooth.

FIGURE 2.1 The fallen columns in Susita (Hippos), east of the Sea of Galilee, toppled by a magnitude ~7.5 earthquake on the Dead Sea transform fault in A.D. 749. SOURCE: A. Nur, And the walls came tumbling down, New Scientist, 6, 45-48, 1991. Copyright A. Nur.

Some sought secular explanations for earthquakes and their apocalyptic consequences (Box 2.1, Figure 2.2). For example, in 31 B.C. a strong earthquake devastated Judea, and the historian Josephus recorded a speech by King Herod given to raise the morale of his army in its aftermath (2): “Do not disturb yourselves at the quaking of inanimate creatures, nor do you imagine that this earthquake is a sign of another calam-

|

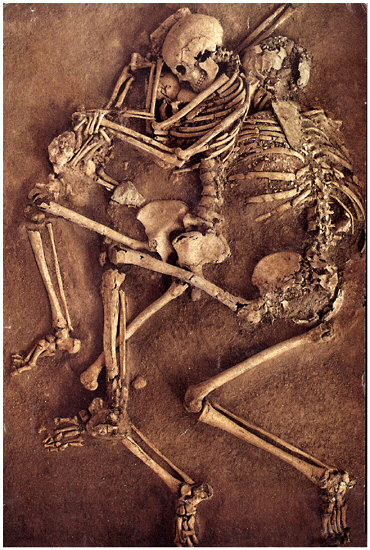

BOX 2.1 Ruins of the Ancient World The collision of the African and Eurasian plates causes powerful earthquakes in the Mediterranean and Middle East. Some historical accounts document the damage from particular events. For example, a Crusader castle overlooking the Jordan River in present-day Syria was sheared by a fault that ruptured it at dawn on May 20, 1202.1 In most cases, however, such detailed records have been lost, so that the history of seismic destruction can be inferred only from archaeological evidence. Among the most convincing is the presence of crushed skeletons, which are not easily attributable to other natural disasters or war and have been found in the ruins of many Bronze Age cities, including Knossos, Troy, Mycenae, Thebes, Midea, Jericho, and Megiddo. Recurring earthquakes may explain the repeated destruction of Troy, Jericho, and Megiddo, all built near major active faults. Excavation of the ancient city of Megiddo—Armageddon in the Biblical prophecy of the Apocalypse—reveals at least four episodes of massive destruction, as indicated by widespread debris, broken pottery, and crushed skeletons.2 Similarly, a series of devastating earthquakes could have destabilized highly centralized Bronze Age societies by damaging their centers of power and leaving them vulnerable to revolts and invasions.3 Historical accounts document such “conflicts of opportunity” in the aftermath of earthquakes in Jericho (~1300 B.C.), Sparta (464 B.C.), and Jerusalem (31 B.C.). |

ity; for such affections of the elements are according to the course of nature, nor does it import anything further to men than what mischief it does immediately of itself.”

Several centuries before Herod’s speech, Greek philosophers had developed a variety of theories about natural origins of seismic tremors based on the motion of subterranean seas (Thales), the falling of huge blocks of rock in deep caverns (Anaximenes), and the action of internal fires (Anaxagoras). Aristotle in his Meteorologica (about 340 B.C.) linked earthquakes with atmospheric events, proposing that wind in underground caverns produced fires, much as thunderstorms produced lightning. The bursting of these fires through the surrounding rock, as well as the collapse of the caverns burned by the fires, generated the earthquakes. In support of this hypothesis, Aristotle cited his observation that earthquakes tended to occur in areas with caves. He also classified earthquakes according to whether the ground motions were primarily vertical or horizontal and whether they released vapor from the ground. He noted that “places whose subsoil is poor are shaken more because of the large amount of the wind they absorb.” The correlation he observed between the intensity of the ground motions and the weakness of the rocks on which structures are built remains central to seismic hazard analysis.

FIGURE 2.2 The remains of a family (a man, a woman, and a child, whose small skull is visible next to the woman’s skull) crushed to death in A.D. 365 in the city of Kourion on the island of Cyprus when their dwelling collapsed on top of them during an earthquake. SOURCE: D. Soren, The day the world ended at Kourion, National Geographic, 30-53, July 1988; Copyright Martha Cooper.

2.2 DISCOVERY OF SEISMIC FAULTING

Aristotle’s ideas and their variants persisted well into the nineteenth century (3). In the early 1800s, geology was a new scientific discipline, and most of its practitioners believed that volcanism caused earthquakes, both of which are common in geologically active regions. A vigorous adherent to the volcanic theory was the Irish engineer Robert Mallet, who coined the term seismology in his quantitative study of the 1857 earthquake in southern Italy (4). By this time, however, evidence had been accumulating that earthquakes are incremental episodes in the building of mountain belts and other large crustal structures, a process that geologists named tectonics. Charles Lyell, in the fifth edition of his seminal book The Principles of Geology (1837), was among the first to recognize that large earthquakes sometimes accompany abrupt changes in the ground surface (5). He based this conclusion on reports of the 1819 Rann of Cutch (Kachchh) earthquake in western India, near the site of the disastrous January 26, 2001, Bhuj earthquake, and, in later editions, on the Wairarapa, New Zealand, earthquake of 1855. A protégé of Lyell’s, Charles Darwin, experienced a great earthquake while visiting Chile in 1835 during the voyage of HMS Beagle. Following the earthquake, he and Captain FitzRoy noticed that in many places the coastline had risen several meters, causing barnacles to die because of prolonged exposure to air. He also noticed marine fossils in sediments hundreds of meters above the sea and concluded that seismic uplift was the mechanism by which the mountains of the coast had risen. Darwin applied James Hutton’s principle of uniformitarianism (“the present is the key to the past”) and inferred that the mountain range had been uplifted incrementally by many earthquakes over many millennia (6).

Fault Slippage as the Geological Cause of Earthquakes

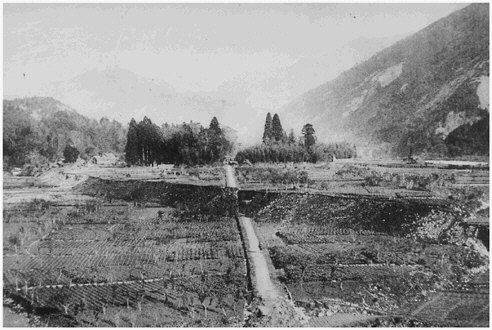

The leap from these observations to the conclusion that earthquakes result from slippage on geological faults was not a small one. The vast majority of earthquakes are not accompanied by surface faulting, and even when such ruptures were found, questions arose as to whether the ground breaking shook the Earth or the Earth shaking broke the ground. Moreover, the methodology for mapping fault displacements and understanding their relationships to geological deformations, the discipline of structural geology, had not yet been systematized. A series of field studies, by G.K. Gilbert in California (1872), A. McKay in New Zealand (1888), B. Koto in Japan (1891), and C.L. Griesbach in Baluchistan (1892), demonstrated that fault motion generates earthquakes by documenting that the surface faulting associated with each of these earthquakes was consistent with the long-term, regional tectonic deformation that geologists had mapped (Figure 2.3).

FIGURE 2.3 Photograph of the 1891 Nobi (Mino-Owari) earthquake scarp at Midori, taken by B. Koto, a professor of geology at the Imperial University of Tokyo. Based on his geological investigations, Koto concluded, “The sudden elevations, depressions, or lateral shiftings of large tracts of country that take place at the time of destructive earthquakes are usually considered as the effects rather than the cause of subterranean commotion; but in my opinion it can be confidently asserted that the sudden formation of the ‘great fault of Neo’ was the actual cause of the great earthquake.” This photograph appeared in The Great Earthquake in Japan, 1891, published by the Seismological Society of Japan, which was one of the first comprehensive scientific reports of an earthquake. The damage caused by the Nobi earthquake motivated Japan to create an Earthquake Investigation Committee, which set up the first government-sponsored research program on the causes and effects of earthquakes. SOURCE: J. Milne and W.K. Burton, The Great Earthquake in Japan, 1891, 2nd ed., Lane, Crawford & Co., Yokohama, Japan, 69 pp. + 30 plates, 1892.

Among the geological investigations of this early phase of tectonics, Gilbert’s studies in the western United States were seminal for earthquake science. From the new fault scarps of the 1872 Owens Valley earthquake, he observed that the Sierra Nevada, bounding the west side of the valley, had moved upward and away from the valley floor. This type of faulting was consistent with his theory that the Basin and Range Province between the Sierra Nevada and the Wasatch Mountains of Utah had been formed by tectonic extension (7). He also recognized the similarity of the Owens Valley break to a series of piedmont scarps along the Wasatch Front near Salt Lake City (Figure 2.4). By careful geological analysis, he documented that the Wasatch scarps were probably caused by individual fault movements during the recent geological past. This work laid the foundation for paleoseismology, the subdiscipline of geology that employs features of the geological record to deduce the fault displacement and age of individual, prehistoric earthquakes (8).

FIGURE 2.4 Aerial view, Salt Lake City, showing the scarps of active normal faults of the Wasatch Front, first recognized by G.K. Gilbert. SOURCE: Utah Geological Survey.

Geological studies were supplemented by the new techniques of geodesy, which provide precise data on crustal deformations. Geodesy grew out of two practical arts, astronomical positioning and land surveying, and became established as a field of scientific study in the mid-nineteenth century. One of the first earthquakes to be measured geodetically was the Tapanuli earthquake of May 17, 1892, in Sumatra, which happened during a triangulation survey by the Dutch Geodetic Survey. The surveyor in charge, J.J.A. Müller, discovered that the angles between the survey monuments had changed during the earthquake, and he concluded that a horizontal displacement of at least 2 meters had occurred along a structure later recognized to be a branch of the Great Sumatran fault. R.D. Oldham of the Geological Survey of India inferred that the changes in survey angles and elevations following the great Assam earthquake of June 12, 1897, were due to co-seismic tectonic movements. C.S. Middlemiss reached the same conclusion for the Kangra earthquake of April 4, 1905, also in the well-surveyed foothills of the Himalaya (9).

Mechanical Theories of Faulting

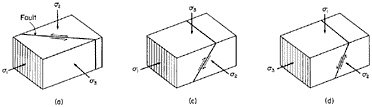

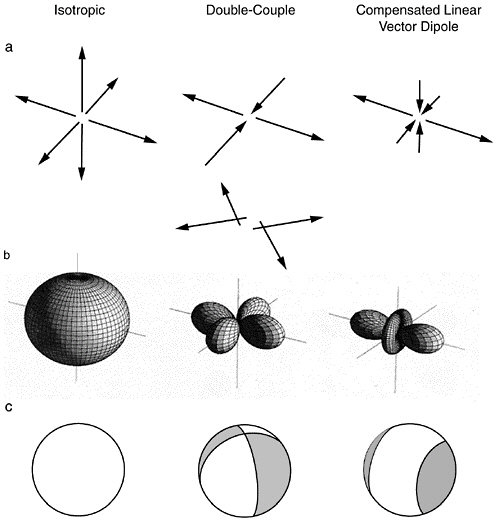

The notion that earthquakes result from fault movements linked the geophysical disciplines of seismology and geodesy directly to structural geology and tectonics, whose practitioners sought to explain the form, arrangement, and interrelationships among the rock structures in the upper part of the Earth’s crust. Although Hutton, Lyell, and the other founders of the discipline of geology had investigated the great vertical deformations required by the rise of mountain belts, the association of these deformations with large horizontal movements was not established until the latter part of the nineteenth century (10). Geological mapping showed that some horizontal movements could be accommodated by the ductile folding of sedimentary strata and plastic distortion of igneous rocks, but that much of the deformation takes place as cataclastic flow (i.e., as slippage in thin zones of failure in the brittle materials that make up the outer layers of the crust). Planes of failure on the larger geological scales are referred to as faults, classified as normal, reverse, or strike-slip according to their orientation and the direction of slip (Figure 2.5).

In 1905, E.M. Anderson (11) developed a successful theory of these faulting types, based on the premises that one of the principal compressive stresses is oriented vertically and that failure is initiated according to a rule published in 1781 by the French engineer and mathematician Charles Augustin de Coulomb. The Coulomb criterion states that slippage occurs when the shear stress on a plane reaches a critical value $\tau_c$ that depends linearly on the effective normal stress $\sigma_n^{\mathrm{eff}}$ acting across that plane:

$$\tau_c = \tau_0 + \mu\,\sigma_n^{\mathrm{eff}} \qquad (2.1)$$

where $\tau_0$ is the (zero-pressure) cohesive strength of the rock and $\mu$ is a dimensionless number called the coefficient of internal friction, which usually lies between 0.5 and 1.0. Anderson’s theory made quantitative predictions about the angles of shallow faulting that fit the observations rather well (except in regions where fault planes were controlled by strength anisotropy such as sedimentary layering). However, it could not explain the existence of large, nearly horizontal thrust sheets that formed at deeper structural levels in many mountain belts. Owing to the large lithostatic load, the total normal stress $\sigma_n$ acting on such fault planes was much greater than any plausible tectonic shear stress, so it was difficult to see how failure could happen. M.K. Hubbert and W.W. Rubey resolved this quandary in 1959 (12) by recognizing that the effective normal stress in the Coulomb criterion should be the difference between $\sigma_n$ and the fluid pressure $P_f$:

$$\sigma_n^{\mathrm{eff}} = \sigma_n - P_f \qquad (2.2)$$

They proposed that overthrust zones were overpressurized; that is, $P_f$ in these zones was substantially greater than the pressure expected for hydrostatic equilibrium and could approach lithostatic values (13). Hence, $\sigma_n^{\mathrm{eff}}$ could be much smaller than $\sigma_n$. Overpressurization may explain why some faults, such as California’s San Andreas, appear to be exceptionally weak.

FIGURE 2.5 Fault types showing principal stress axes and Coulomb angles. For a typical coefficient of friction in rocks ($\mu \approx 0.6$), the Coulomb criterion (Equation 2.1) implies that a homogeneous, isotropic material should fail under triaxial stress ($\sigma_1 > \sigma_2 > \sigma_3$) along a plane that contains the $\sigma_2$ axis and lies at an angle of about 30° to the $\sigma_1$ direction. According to Anderson’s theory, normal faults should therefore occur where the vertical stress $\sigma_V$ is the maximum principal stress $\sigma_1$, and the initial dips of these extensional faults should be steep (about 60°); reverse faults ($\sigma_V = \sigma_3$) should initiate as thrusts with shallow dips of about 30°, and strike-slip faults ($\sigma_V = \sigma_2$) should develop as vertical planes striking at about 30° to the $\sigma_1$ direction. SOURCE: Reprinted from K. Mogi, Earthquake Prediction, Academic Press, Tokyo, 355 pp., 1985, Copyright 1985 with permission from Elsevier Science.
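The arithmetic behind Equations 2.1 and 2.2 and the Andersonian angles of Figure 2.5 can be sketched in a few lines of Python. This is an illustrative sketch, not a method taken from the report: the function names are hypothetical, stresses are assumed compressive-positive (e.g., in MPa), and the angle between the failure plane and σ1 is taken as 45° − φ/2, where φ = arctan µ is the friction angle (the standard Coulomb result for a plane containing σ2).

```python
import math


def coulomb_critical_shear(tau0, mu, sigma_n, pore_pressure=0.0):
    """Critical shear stress from Eq. 2.1, using the effective normal stress of Eq. 2.2.

    Stresses are compressive-positive, in any consistent unit (e.g., MPa).
    """
    sigma_eff = sigma_n - pore_pressure  # Hubbert-Rubey effective normal stress
    return tau0 + mu * sigma_eff


def failure_angle_to_sigma1_deg(mu):
    """Angle between the Coulomb failure plane and the sigma_1 axis, in degrees."""
    friction_angle = math.degrees(math.atan(mu))
    return 45.0 - friction_angle / 2.0


def andersonian_dip_deg(mu, regime):
    """Initial fault dip predicted by Anderson's theory for a given stress regime."""
    theta = failure_angle_to_sigma1_deg(mu)
    if regime == "normal":  # sigma_1 vertical: plane lies theta away from vertical
        return 90.0 - theta
    if regime == "reverse":  # sigma_3 vertical, sigma_1 horizontal
        return theta
    if regime == "strike-slip":  # sigma_2 vertical: fault plane is vertical
        return 90.0
    raise ValueError(f"unknown regime: {regime}")


if __name__ == "__main__":
    mu = 0.6
    print(f"normal dip  ~{andersonian_dip_deg(mu, 'normal'):.1f} deg")   # about 60
    print(f"reverse dip ~{andersonian_dip_deg(mu, 'reverse'):.1f} deg")  # about 30
    # Hypothetical stresses: 100 MPa normal stress, no cohesion.
    print(coulomb_critical_shear(0.0, mu, 100.0))                       # dry fault
    print(coulomb_critical_shear(0.0, mu, 100.0, pore_pressure=90.0))   # overpressured
```

With these hypothetical numbers, raising the fluid pressure to 90 percent of the normal stress lowers the shear stress needed for slip tenfold, which is the essence of the Hubbert-Rubey argument.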

Elastic Rebound Model

When a 470-kilometer segment of the newly recognized San Andreas rift ruptured across northern California in 1906 (Box 2.2, Figures 2.6 and 2.7), both geologists and engineers jumped at the opportunity to observe first-hand the effects of a major earthquake. Three days after the earthquake, while the fires of San Francisco were still smoldering, California Governor George C. Pardee appointed a State Earthquake Investigation

|

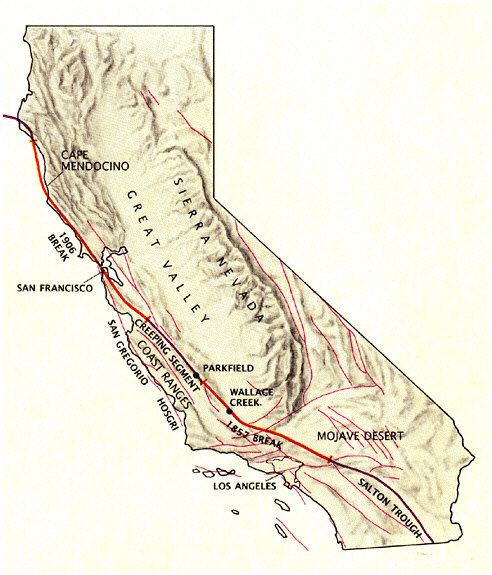

BOX 2.2 San Francisco, California, 1906 At approximately 5:12 a.m. local time on April 18, 1906, a small fracture nucleated on the San Andreas fault at a depth of about 10 kilometers beneath the Golden Gate (20). The rupture expanded outward, quickly reaching its terminal velocity of about 2.5 kilometers per second (5600 miles per hour). Its upper front broke through the ground surface at the epicenter within a few seconds, and its lower front decelerated as it spread downward into the more ductile levels of the middle crust, while the two sides continued to propagate in opposite directions along the San Andreas. Near the epicenter, the rupture displaced the opposite sides of the fault rightward by an average of about 4 meters (a right-lateral strike-slip). On the southeastern branch, the total slip diminished as the rupture traveled down the San Francisco peninsula and vanished 100 kilometers away from the epicenter. To the northwest, the fracture ripped across the neck of the Point Reyes peninsula and entered Tomales Bay, where the total slip increased to 7 meters, sending out seismic waves that damaged Santa Rosa, Fort Ross, and other towns of the northern Coast Ranges. The rupture continued up the coast to Point Arena, where it went offshore, eventually stopping near a major bend in the fault at Cape Mendocino (Figure 2.6). At least 700 people were killed, perhaps as many as 3000, and many buildings were severely damaged.1 In San Francisco, the quake ignited at least 60 separate fires, which burned unabated for three days, consuming 42,000 buildings and destroying a considerable fraction of the West Coast’s largest city. |

Commission, headed by Berkeley Professor Andrew C. Lawson, to coordinate a wide-ranging set of scientific and engineering studies (14). The first volume of the Lawson Report (1908) compiled reports by more than 20 specialists on a variety of observations: the geological setting of the San Andreas; the fault displacements inferred from field observations and geodetic measurements; reports of the arrival time, duration, and intensity of the seismic waves; seismographic recordings from around the world; and detailed surveys of the damage to structures throughout Northern California. The latter demonstrated that the destruction was closely related to building design and construction, as well as to local geology. The intensity maps of San Francisco clearly show that some of the strongest shaking occurred in the soft sediment of China Basin and in the present Marina district, two San Francisco neighborhoods that would be severely damaged in the Loma Prieta earthquake some 83 years later (15). This interdiscipli-

FIGURE 2.7 Panoramic view of the ruins of San Francisco after the April 1906 earthquake and fire, viewed from the Stanford Mansion site. SOURCE: Lester Guensey, Library of Congress, Prints and Photographs Division, [Lc-USZ62-123408 DLC].

nary synthesis is still being mined for information about the 1906 earthquake and its implications for future seismic activity (16).

Professor Henry Fielding Reid of Johns Hopkins University wrote the second volume of the Lawson Report (1910), presenting his celebrated elastic rebound hypothesis. Reid’s 1911 follow-up paper (17) summarized his theory in five propositions:

- The fracture of the rocks, which causes a tectonic earthquake, is the result of elastic strains, greater than the strength of the rock can withstand, produced by the relative displacements of neighboring portions of the earth’s crust.
- These relative displacements are not produced suddenly at the time of the fracture, but attain their maximum amounts gradually during a more or less long period of time.
- The only mass movements that occur at the time of the earthquake are the sudden elastic rebounds of the sides of the fracture towards positions of no elastic strain; and these movements extend to distances of only a few miles from the fracture.
- The earthquake vibrations originate in the surface of the fracture; the surface from which they start is at first a very small area, which may quickly become very large, but at a rate not greater than the velocity of compressional elastic waves in rock.
- The energy liberated at the time of an earthquake was, immediately before the rupture, in the form of energy of elastic strain of the rock.
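Reid’s first, second, and fifth propositions describe a cycle of slow strain accumulation followed by sudden release. A toy single-patch model, which is our illustration and not Reid’s own formulation, makes that cycle concrete: stress on a hypothetical fault patch rises at a constant loading rate and drops to a residual level whenever it reaches the strength. The function name and all parameter values are hypothetical and chosen for arithmetic convenience, not realism.

```python
def elastic_rebound_events(loading_rate, strength, residual, duration, dt=1.0):
    """Times at which a toy fault patch fails under steady tectonic loading.

    loading_rate: stress added per unit time (e.g., MPa per year)
    strength: stress at which the patch fails
    residual: stress left on the patch right after each rebound
    """
    if not residual < strength:
        raise ValueError("residual stress must be below the strength")
    stress = residual
    events = []
    for step in range(1, int(duration / dt) + 1):
        stress += loading_rate * dt  # gradual accumulation of elastic strain
        if stress >= strength:       # strain exceeds what the rock can withstand
            events.append(step * dt)
            stress = residual        # sudden elastic rebound releases the stored strain
    return events


# Hypothetical values: 0.5 MPa/yr loading, 3 MPa strength, 1 MPa residual stress.
print(elastic_rebound_events(0.5, 3.0, 1.0, duration=12.0))  # [4.0, 8.0, 12.0]
```

In this idealization the recurrence interval is simply (strength − residual) / loading_rate, here 4 years; the strictly periodic output is a property of the toy model, not a claim about real faults.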

Today all of these propositions are accepted with only minor modifications (18). Although some geologists had entertained the notion that most large earthquakes result from fault slippage since at least the latter half of the nineteenth century, Reid’s hypothesis was boldly revolutionary. The horizontal tectonic displacements he postulated, for example, had no well-established geologic basis, and they would remain mysterious until the plate-tectonic revolution of the 1960s (19).

2.3 SEISMOMETRY AND THE QUANTIFICATION OF EARTHQUAKES

In 1883, the English mining engineer John Milne suggested that “it is not unlikely that every large earthquake might with proper appliances be recorded at any point of the globe.” His vision was fulfilled six years later when Ernst von Rebeur-Paschwitz recorded seismic waves on delicate horizontal pendulums at Potsdam and Wilhelmshaven in Germany from the April 17, 1889, earthquake in Tokyo, Japan. By the turn of the century, the British Association for the Advancement of Science was sponsoring a global network of more than 40 stations, most equipped with instruments of Milne’s design (21); other deployments followed, expanding the coverage and density of seismographic recordings (22). Working with records of the great Assam earthquake of June 12, 1897, Oldham identified three basic wave types: the small primary (P or compressional) and secondary (S or shear) waves that traveled through the body of the Earth and the “large” (L) waves that propagated across its outer surface (23).

Hypocentral Locations and Earth Structure

Milne investigated the velocities of the P, S, and L waves by plotting their travel times as a function of distance for earthquakes whose location had been fixed by local observations. From curves fit to these travel times, he could then determine the distance from the observing stations to an event with an unknown epicenter, and he could fix its location from the intersection of arcs drawn at the estimated distance from three or more such stations. By applying this simple technique, he and others began to compile catalogs of instrumentally determined earthquake epicenters (24).
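Milne’s arc-intersection procedure can be sketched as a small least-squares problem. The sketch below assumes a flat map with station coordinates in kilometers (Milne worked with distances on the curved Earth) and, purely for illustration, converts an S-minus-P time to distance using constant P and S velocities; the velocity values and function names are hypothetical and are not taken from Milne’s travel-time curves.

```python
def distance_from_s_minus_p(sp_seconds, vp=6.0, vs=3.5):
    """Distance (km) to the source from an S-minus-P interval, assuming
    constant P and S velocities (km/s) along a straight path; defaults are illustrative."""
    return sp_seconds / (1.0 / vs - 1.0 / vp)


def locate_epicenter(stations, distances):
    """Least-squares point closest to the intersection of circles around the stations.

    stations: list of (x, y) positions in km on a flat map (three or more)
    distances: estimated epicentral distance (km) from each station
    Subtracting the first circle's equation from each of the others gives
    linear equations in the unknown epicenter (x, y).
    """
    (x0, y0), d0 = stations[0], distances[0]
    ata = [[0.0, 0.0], [0.0, 0.0]]  # accumulates A^T A
    atb = [0.0, 0.0]                # accumulates A^T b
    for (xi, yi), di in zip(stations[1:], distances[1:]):
        row = (2.0 * (xi - x0), 2.0 * (yi - y0))
        rhs = d0**2 - di**2 + xi**2 - x0**2 + yi**2 - y0**2
        for r in range(2):
            atb[r] += row[r] * rhs
            for c in range(2):
                ata[r][c] += row[r] * row[c]
    det = ata[0][0] * ata[1][1] - ata[0][1] * ata[1][0]
    if abs(det) < 1e-12:
        raise ValueError("stations are collinear; the location is not unique")
    # Solve the 2x2 normal equations by Cramer's rule.
    x = (atb[0] * ata[1][1] - ata[0][1] * atb[1]) / det
    y = (ata[0][0] * atb[1] - ata[1][0] * atb[0]) / det
    return x, y


if __name__ == "__main__":
    stations = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0)]
    true_epicenter = (30.0, 40.0)  # hypothetical test event
    dists = [((true_epicenter[0] - sx) ** 2 + (true_epicenter[1] - sy) ** 2) ** 0.5
             for sx, sy in stations]
    x, y = locate_epicenter(stations, dists)
    print(f"estimated epicenter: ({x:.1f}, {y:.1f}) km")  # (30.0, 40.0) km
    print(f"S-P of 10 s -> {distance_from_s_minus_p(10.0):.0f} km")  # 84 km
```

With noisy distances from more than three stations, the same least-squares step returns a compromise point rather than an exact intersection, which mirrors the uncertainty in hand-drawn arcs.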

Improved locations meant that seismologists could use the travel times of the seismic waves to develop better models of the variation of wave velocities with depth, which in turn could be used to improve the location of the earthquake’s initial radiation (hypocenter), as well as its origin time. This cycle of iterative refinement of Earth models and earthquake locations, along with advances in the distribution and quality of the seismometer networks, steadily decreased the uncertainties in both. It also led to some major discoveries. In 1906, Oldham presented the first seismological evidence that the Earth had a central core, and in 1914, Beno Gutenberg obtained a relatively precise depth (about 2900 kilometers) to the boundary between the core and the surrounding solid-rock shell, or mantle (from the German Mantel, “coat”). From regional recordings of the 1909 Croatian earthquake, the Croatian seismologist Andrija Mohorovičić discovered the sharp increase in seismic velocities now called the Mohorovičić discontinuity, or Moho, which separates the lighter, more silica-rich crust from the ultramafic (iron- and magnesium-rich) mantle.

After Milne’s death in 1913, H.H. Turner, an Oxford professor, took over the determination of earthquake hypocenters and origin times. Turner’s efforts to compile earthquake data systematically led, after the First World War, to the founding of the International Seismological Summary (ISS) (25). While preparing the ISS bulletins, Turner (1922) noticed some events with anomalous travel times, which he proposed had hypocenters much deeper than that of typical earthquakes. In 1928, Kiyoo Wadati established the reality of such “deep-focus” earthquakes as much as 700 kilometers beneath volcanic arcs such as Japan and the Marianas, and he subsequently delineated planar regions of seismicity (now called Wadati-Benioff zones) extending from the ocean trenches at the face of the arcs down to these deep events. The Danish seismologist Inge Lehmann discovered the Earth’s inner core in 1936; this “planet within a planet” has since been shown to be a solid metallic sphere two-thirds the size of the Moon at the center of the liquid iron-nickel outer core. By the time Harold Jeffreys and Keith Bullen finalized their travel-time tables in 1940, the Earth’s internal structure was known well enough to estimate the hypocenter of large earthquakes with a standard error often less than 10 kilometers and origin time with a standard error of less than 2 seconds (26).

Earthquake Magnitude and Energy

The next important step in the development of instrumental seismology was the quantification of earthquake size. Maps of seismic damage

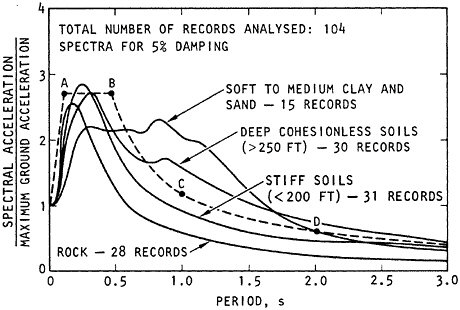

were made in Italy as early as the late eighteenth century. In the 1880s, M.S. Rossi of Italy and F. Forel of Switzerland defined standards for grading qualitative observations by integer values that increase with the amount of shaking and disruption. Versions of their “intensity scale,” as modified by G. Mercalli and others, are still used to map intensity after strong events (27), but they do not measure the intrinsic size of an earthquake, nor can they be applied to events that humans have not felt and observed (i.e., almost all earthquakes). The availability of instrumental recordings and the desire to standardize the seismological bulletins motivated seismologists to estimate the intrinsic size of earthquakes by measuring the amplitude of the seismic waves at a station and correcting them for propagation effects, such as the spreading out of wave energy and its attenuation by internal friction. Several such scales were developed, including one by Wadati in 1931, but the most popular and successful schemes were based on the standard magnitude scale that Charles Richter of Caltech published in 1935.

Richter recognized that seismographic amplitude provides a first-order measure of the radiated energy but that these data are highly variable depending on the type of seismograph, distance to the earthquake, and local site conditions. To normalize for these factors, he considered only southern California earthquakes recorded on Caltech’s standardized network of Wood-Anderson torsion seismometers (28). He defined the local magnitude scale for such events by the formula

$$M_L = \log A - \log A_0 \qquad (2.3)$$

where $A$ is the maximum amplitude of the seismic trace on the standard seismogram; $A_0$ is the amplitude at that same distance for a reference earthquake with $M_L = 0$; and all logarithms are base 10. He fixed the reference level $A_0$ by specifying a magnitude-zero earthquake as an event with an amplitude of 1 micron on a standard Wood-Anderson seismogram at a distance of 100 kilometers (29). An earthquake of magnitude 3.0 thus had an amplitude of 1 millimeter at 100 kilometers, which was about the smallest level measurable on this type of pen-written seismogram (30). Corrections for recordings made at other distances were determined empirically and incorporated into a simple graphic procedure.
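Equation 2.3 and Richter’s calibration can be checked with a short calculation. The sketch below handles only recordings at the 100-kilometer reference distance, where Richter’s definition fixes A0 at 1 micron (0.001 millimeter); at other distances it would need his empirical distance corrections, which are not reproduced here. The function name is hypothetical.

```python
import math

# Richter's reference: an ML = 0 event writes a 1-micron (0.001 mm) trace at 100 km
# on a standard Wood-Anderson seismogram.
A0_AT_100_KM_MM = 0.001


def local_magnitude_at_100_km(amplitude_mm):
    """ML from Equation 2.3 for a Wood-Anderson amplitude (mm) recorded at 100 km."""
    if amplitude_mm <= 0:
        raise ValueError("amplitude must be positive")
    return math.log10(amplitude_mm) - math.log10(A0_AT_100_KM_MM)


for amp in (0.001, 1.0, 10.0, 100.0):
    print(f"A = {amp:g} mm -> ML = {local_magnitude_at_100_km(amp):.1f}")
```

The 1-millimeter trace gives ML = 3.0, matching the text, and each tenfold increase in amplitude adds one magnitude unit.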

During the next decade, Richter and Gutenberg refined and extended the methodology to include earthquakes recorded by various instrument types and at teleseismic distances. Gutenberg published a series of papers in 1945 detailing the construction of magnitude scales based on the maximum amplitude of long-period surface waves ($M_S$), which could be applied to shallow earthquakes at any distance, and teleseismic body waves ($m_b$), which could be applied to earthquakes too deep to excite ordinary surface waves. To the extent possible, these scales were calibrated to agree with Richter’s definition of magnitude, although various discrepancies became apparent as experience accumulated (31). In 1956, Gutenberg and Richter used surface-wave magnitudes as the basis for an energy formula (with $E$ in joules):

$$\log E = 1.5\,M_S + 4.8 \qquad (2.4)$$

This relationship implies that earthquake energies vary over at least 12 orders of magnitude, a much larger range than previously supposed. It also allows comparison with a new source of seismic energy, the atomic bomb. Seismic signals were recorded by regional stations from the first Trinity test in 1945 (32) and an underwater explosion at Bikini Atoll in July of 1946, the Baker test; both generated compressional waves observed at teleseismic distances. The energy released from Baker, a Hiroshima-type device, was about $8 \times 10^{13}$ joules. Assuming a 1 percent seismic efficiency, Gutenberg and Richter calculated a body-wave magnitude of 5.1 from their revised energy formulas, which agreed reasonably well with their observed value of 5.3 (33). Seismology thus embarked on a new mission, the detection and measurement of nuclear explosions. By 1959, the reliable identification of small underground nuclear explosions had become the primary technical issue confronting the verification of a comprehensive nuclear test ban treaty, and the resulting U.S. program in nuclear explosion seismology, Project Vela Uniform, motivated important developments in earthquake science (34).
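Equation 2.4 makes the scale of these differences easy to compute. A minimal sketch (the function name is hypothetical) evaluates the formula and two ratios it implies: about 32-fold per magnitude unit, and 12 orders of magnitude in energy across an 8-unit range of surface-wave magnitude.

```python
def radiated_energy_joules(ms):
    """Seismic energy from the Gutenberg-Richter (1956) relation, Equation 2.4."""
    return 10.0 ** (1.5 * ms + 4.8)


per_unit = radiated_energy_joules(7.0) / radiated_energy_joules(6.0)
span = radiated_energy_joules(8.0) / radiated_energy_joules(0.0)
print(f"one magnitude unit: x{per_unit:.1f}")  # x31.6
print(f"M 0 to M 8: x{span:.1e}")             # x1.0e+12
```

Because the formula is logarithmic, the ratio between any two magnitudes depends only on their difference, 10 raised to 1.5 times that difference.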

Seismicity of the Earth

Observational and theoretical research in Japan, North America, and Europe during the 1930s markedly improved seismogram interpretation. Seismographic readings from an increasingly dense global network of stations were compiled and published regularly in the International Seismological Summary, an invaluable source of data for refining event locations. By 1940, the ability to locate earthquakes was sufficiently advanced to allow the systematic analysis of global seismicity. Gutenberg and Richter produced their first synthesis in 1941, based on their relocation of hypocenters and estimation of magnitudes (35). They used focal depth to formalize the nomenclature of shallow (less than 70 kilometers), intermediate (70 to 300 kilometers), and deep (greater than 300 kilometers) earthquakes; they confirmed that Wadati’s depth of 300 kilometers for the transition from intermediate focus to deep focus was a minimum in earthquake occurrence rate, and they showed a sharp cutoff in global seismicity at about 700 kilometers. Their classic treatise Seismicity of the Earth documented a number of observations about the geographic distribution of seismicity that helped to establish the plate-tectonic theory: (1) most large earthquakes occur in narrow belts that outline a set of stable blocks, the largest comprising the central and western Pacific basin; (2) nearly all intermediate and deep seismicity is associated with planar zones that dip beneath volcanic island arcs and arc-like orogenic (mountain-building) structures; and (3) seismicity in the ocean basins is concentrated near the crest of the oceanic ridges and rises.

Gutenberg and Richter also discussed a series of issues related to the size distribution and energy release of earthquakes. They found that the total number of earthquakes N greater than some magnitude M in a fixed time interval obeyed the relationship (36)

$$\log N = a - bM \qquad (2.5)$$

where $a$ and $b$ are empirical constants. Equation 2.5 is equivalent to $N = N_0\,10^{-bM}$; in this form, $N_0 = 10^{a}$ is the total number of earthquakes whose magnitude exceeds zero. This is an extrinsic parameter that depends on the temporal interval and spatial volume considered, whereas $b$ describes an exponential fall-off in seismicity with magnitude, a parameter more intrinsic to the faulting process. For a global distribution of shallow shocks, they estimated $b \approx 0.9$, so that a decrease of one unit in magnitude gives an eightfold increase in frequency. Subsequent studies have confirmed that regional seismicity typically follows these Gutenberg-Richter statistics, with $b$ values ranging from 0.5 to 2.0. Because spatial extent and energy release grow exponentially with magnitude, Gutenberg-Richter statistics imply a power-law scaling between frequency and size (37).
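Equation 2.5 can be evaluated directly. In the sketch below, the value of a is an arbitrary placeholder (it only sets the overall rate for a chosen region and time window), while b = 0.9 is Gutenberg and Richter’s global estimate; the ratio between counts at successive magnitude thresholds is 10 raised to the power b, about 8. The function and constant names are hypothetical.

```python
def gr_cumulative_count(a, b, m):
    """Number of earthquakes with magnitude >= m, from log10 N = a - b*M (Eq. 2.5)."""
    return 10.0 ** (a - b * m)


A_VALUE = 8.0  # hypothetical a value; depends on the region and time window
B_VALUE = 0.9  # Gutenberg and Richter's global estimate for shallow shocks

for m in (5.0, 6.0, 7.0):
    print(f"M >= {m:.0f}: {gr_cumulative_count(A_VALUE, B_VALUE, m):,.0f} events")

ratio = gr_cumulative_count(A_VALUE, B_VALUE, 5.0) / gr_cumulative_count(A_VALUE, B_VALUE, 6.0)
print(f"ratio per magnitude unit: {ratio:.2f}")  # 7.94
```

Changing a rescales every count by the same factor, whereas changing b alters the ratio between magnitude levels, which is why b is the more intrinsic parameter.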

Gutenberg and Richter noted that even though small earthquakes are much more common than large events, the big ones dominate the energy distribution. According to their energy formula (Equation 2.4), an increase of one magnitude unit gives a 32-fold increase in energy, whereas the number of events decreases only about eightfold; summing over all events therefore implies that the total energy released increases by about a factor of 4 per unit of magnitude. They used this type of calculation to dispel the popular notion that minor shocks can function as a “safety valve” to delay a great earthquake. They found that the total annual energy release from all earthquakes was only a fraction of the heat flow from the solid Earth, estimated a few years earlier by the British geophysicist Edward Bullard (38). This calculation was consistent with the idea that earthquakes were a form of work done by a thermodynamically inefficient heat engine operating in the Earth’s interior.
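The factor of 4 comes from combining two exponentials:

- The number of events in a unit magnitude bin falls as 10^(−bM).
- The energy per event grows as 10^(1.5M).

The energy summed over a bin therefore scales as 10^((1.5 − b)M). The sketch below checks that arithmetic. It assumes the slope of 1.5 implied by the 32-fold figure (10^1.5 ≈ 31.6) and b = 0.9, and it treats each bin by its nominal magnitude, a simplification.

```python
ENERGY_SLOPE = 1.5  # log10 E rises 1.5 per magnitude unit: about 31.6-fold
B_VALUE = 0.9       # Gutenberg-Richter b value for global shallow shocks


def relative_bin_energy(magnitude: float, b: float = B_VALUE) -> float:
    """Total energy in a unit-magnitude bin, up to a constant factor.

    Event count scales as 10**(-b*M) and energy per event as 10**(1.5*M),
    so their product scales as 10**((1.5 - b)*M).
    """
    count = 10.0 ** (-b * magnitude)
    energy_per_event = 10.0 ** (ENERGY_SLOPE * magnitude)
    return count * energy_per_event


growth = relative_bin_energy(7.0) / relative_bin_energy(6.0)
print(f"energy per event grows {10 ** ENERGY_SLOPE:.1f}-fold per unit")
print(f"summed energy grows {growth:.2f}-fold per unit")
```

As long as b stays below 1.5, the exponent 1.5 − b is positive, so each higher magnitude bin carries more total energy than the one below it. Numerous small shocks therefore cannot release the strain energy that a great earthquake does.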

Earthquakes as Dislocations

Although it was known that earthquakes usually originate from sudden movements across a fault, the actual mechanics of the rupture process remained obscure (39), and a quantitative theory of how this dislocation forms and generates elastic waves through the spontaneous action of material failure was completely lacking.

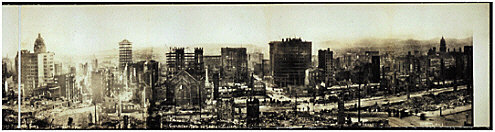

Progress toward a dynamic description of faulting began in Japan, where the high density of seismic stations allowed seismologists to recognize coherent geographic patterns in the seismic radiation. They mapped the first-arriving P-wave pulses into regions of compression (first motion up) and dilatation (first motion down), separated by nodal lines where the initial arrival was very weak (40). Stimulated by these observations, H. Nakano formulated, in 1923, the problem of deducing the orientation of the faulting from the pattern of first motions (41). He expressed the radiation from an instantaneous event in terms of a system of dipolar forces at the earthquake hypocenter. The results appeared to be ambiguous, because the observed “beachball” radiation pattern of P waves (Figure 2.8) could be explained either by a single couple of such forces or by a double couple. A 40-year controversy ensued over which of these models is physically correct. It was resolved only in the 1960s, when the theoretical result that a fault dislocation is equivalent to a double couple came to be widely understood (42).
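The ambiguity Nakano faced can be reproduced with the moment-tensor description used in Figure 2.8. In the far field, the P-wave amplitude radiated in unit direction γ is proportional to γᵀMγ. Two source models give the same result:

- A single couple has forces along the slip direction d and a lever arm along the fault normal n, so M = d nᵀ.
- A double couple adds the balancing couple, so M = d nᵀ + n dᵀ.

Both make γᵀMγ proportional to (γ·d)(γ·n), so their P-wave first motions are identical; the two models differ only in their S-wave radiation. The sketch assumes a vertical strike-slip fault with n along y and d along x, and it samples horizontal rays. The geometry and helper names are illustrative.

```python
import math

Vector = tuple[float, float, float]
Matrix = list[list[float]]


def outer(a: Vector, b: Vector) -> Matrix:
    """Outer product a b^T as a 3x3 nested list."""
    return [[a[i] * b[j] for j in range(3)] for i in range(3)]


def add(m1: Matrix, m2: Matrix) -> Matrix:
    """Element-wise sum of two 3x3 matrices."""
    return [[m1[i][j] + m2[i][j] for j in range(3)] for i in range(3)]


def p_amplitude(moment: Matrix, gamma: Vector) -> float:
    """Far-field P amplitude, up to a positive constant: gamma^T M gamma."""
    return sum(gamma[i] * moment[i][j] * gamma[j] for i in range(3) for j in range(3))


def first_motion(moment: Matrix, azimuth_deg: float, tol: float = 1e-12) -> str:
    """Polarity of the first P arrival for a horizontal ray at the given azimuth."""
    phi = math.radians(azimuth_deg)
    gamma = (math.cos(phi), math.sin(phi), 0.0)
    amp = p_amplitude(moment, gamma)
    if abs(amp) < tol:
        return "nodal"
    return "compression" if amp > 0 else "dilatation"


# Hypothetical vertical strike-slip fault: normal n along y, slip direction d along x.
n = (0.0, 1.0, 0.0)
d = (1.0, 0.0, 0.0)
single_couple = outer(d, n)                    # forces along d, separated along n
double_couple = add(outer(d, n), outer(n, d))  # second couple cancels the net torque

for az in range(0, 360, 45):
    print(az, first_motion(single_couple, az), first_motion(double_couple, az))
```

Both sources produce the same four alternating quadrants of compression and dilatation, separated by nodal directions. The double couple is also unchanged if n and d are swapped, which is why P-wave first motions alone cannot distinguish the fault plane from the auxiliary plane.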

The dislocation model also shed light on the dynamic coupling between the brittle, seismogenic layer and its ductile, aseismic substrate. Geodetic data from the 1906 earthquake had shown that the process of strain accumulation and release was concentrated near the fault. In 1961, Michael Chinnery (43) showed that the surface displacement from a uniform vertical dislocation extending down from the surface decays to half its maximum value at a horizontal distance equal to the depth of faulting. He applied this result to estimate a rupture depth of 2 to 6 kilometers for the 1906 earthquake. Later workers used Chinnery’s solution to give Reid’s elastic rebound theory a physical basis. They argued that the deformation before the 1906 earthquake was due to nearly steady slip at depth on the San Andreas fault, while the shallow part of the fault slipped enough in the earthquake itself to catch up, at least approximately, with the deeper part of the fault.
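The half-maximum rule can be checked with the standard two-dimensional solution for a long vertical strike-slip dislocation in an elastic half-space. Uniform slip s from the surface down to depth D produces a fault-parallel surface displacement u(x) = (s/π) arctan(D/x) at distance x > 0 from the fault. This displacement approaches its maximum, s/2, next to the fault. At x = D the arctangent equals π/4, giving s/4, half the maximum.

The sketch also adds the displacement from the same slip occurring below D, (s/π) arctan(x/D), a common way to represent the deep steady slip described above. The two contributions sum to a uniform s/2 on each side of the fault, which is the rigid block offset of elastic rebound. The slip and depth values are hypothetical.

```python
import math


def coseismic_displacement(x_km: float, slip_m: float, depth_km: float) -> float:
    """Fault-parallel surface displacement (m) at distance x_km > 0 from a vertical
    strike-slip fault with uniform slip from the surface down to depth_km."""
    return (slip_m / math.pi) * math.atan(depth_km / x_km)


def deep_slip_displacement(x_km: float, slip_m: float, depth_km: float) -> float:
    """Displacement (m) produced by the same amount of slip occurring below depth_km."""
    return (slip_m / math.pi) * math.atan(x_km / depth_km)


SLIP_M = 5.0    # hypothetical fault slip
DEPTH_KM = 6.0  # hypothetical depth of faulting

near_field = coseismic_displacement(1e-6, SLIP_M, DEPTH_KM)  # approaches slip/2
at_depth = coseismic_displacement(DEPTH_KM, SLIP_M, DEPTH_KM)  # equals slip/4
print(f"next to fault: {near_field:.3f} m, at x = D: {at_depth:.3f} m")

for x in (1.0, 6.0, 30.0, 200.0):
    total = coseismic_displacement(x, SLIP_M, DEPTH_KM) + deep_slip_displacement(x, SLIP_M, DEPTH_KM)
    print(f"x = {x:5.1f} km: shallow + deep slip = {total:.3f} m")
```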

2.4 PLATE TECTONICS

Alfred Wegener, a German meteorologist, first put forward his theory of continental drift in 1912 (44). He marshaled geological arguments that the continents had once been joined as a supercontinent he named Pangea. However, he imagined that they moved apart at very rapid rates, tens of meters per year (45), like buoyant, granitic ships plowing through a denser, basaltic sea of oceanic crust. Jeffreys showed in 1924 that this idea and the dynamic mechanisms Wegener proposed for causing drift (e.g., westward drag on the continents by lunar and solar tidal forces) were physically untenable, and most of the geological community discredited Wegener’s hypothesis (46). In the 1930s, however, the South African geologist A.L. du Toit assembled an impressive set of additional geologic data that supported continental drift beginning in the Mesozoic Era. The empirical case in its favor was further strengthened when E. Irving and S.K. Runcorn published their compilations of paleomagnetic pole positions in 1956. The paleomagnetic data indicated drifting rates on the order of centimeters per year, several orders of magnitude slower than Wegener had hypothesized. Within the next 10 years, the key elements of plate tectonics were put in place. The main conceptual breakthrough was the recognition that, on a global scale, the amount of new basaltic crust generated by seafloor spreading (the bilateral separation of the seafloor along the mid-ocean ridge axis) is balanced by subduction (the thrusting of basaltic crust into the mantle at the oceanic trenches).

FIGURE 2.8 Graphical representations of three basic types of seismic point sources (left to right): isotropic, double couple, and compensated linear vector dipole. (a) Principal axis coordinates of equivalent force systems. (b) Compressional wave radiation patterns. (c) Curves of intersection of nodal surfaces with the focal sphere. Each of these source types is a specialization of the seismic moment tensor M. SOURCE: Modified from B.R. Julian, A.D. Miller, and G.R. Foulger, Non-double-couple earthquakes: 1. Theory, Rev. Geophys., 36, 525-549, 1998. Copyright 1998 American Geophysical Union. Modified by permission of American Geophysical Union.

Seafloor Spreading and Transform Faults

Submarine mountain ranges, mapped in the 1870s, came into focus as a world-encircling system of extensional tectonics after the Second World War. Marine geologists Maurice Ewing and Bruce Heezen, based at Columbia University, mapped a narrow, nearly continuous “median valley” along the ridge crests in the Atlantic, Indian, and Antarctic Oceans, which they inferred to be a locus of active rifting and the source of the mid-ocean seismicity that Gutenberg and Richter had documented (47). In the early 1960s, Harry H. Hess of Princeton University and Robert S. Dietz of the Scripps Institution of Oceanography advanced the concept of seafloor spreading to account for observations of such phenomena as the paucity of deep-sea sediments and the tendency for oceanic islands to subside with time (48). In his famous 1960 “geopoetry” preprint, Hess noted that crustal creation at the Mid-Atlantic Ridge implies a more plausible mechanism for continental drift than the type originally envisaged by Wegener: “The continents do not plow through oceanic crust impelled by unknown forces; rather they ride passively on mantle material as it comes to the surface at the crest of the ridge and then moves laterally away from it.”

Two distinct predictions based on the theory of seafloor spreading were confirmed in 1966. The first involved the striped patterns of magnetic anomalies being mapped on the flanks of the mid-ocean ridges. In 1963, F. Vine and D. Matthews suggested that such anomalies record the reversals of the Earth’s magnetic field through remanent magnetization frozen into the oceanic rocks as they diverge and cool away from the ridge axis. These geomagnetic “tape recordings” were shown to be symmetric about this axis and consistent with the time scale of geomagnetic reversals worked out from lava flows on land. Moreover, the spreading speed measured from the magnetometer profiles in the Atlantic was found to be nearly constant and in agreement with the average opening rate obtained from the paleomagnetic data on continental rocks (49).

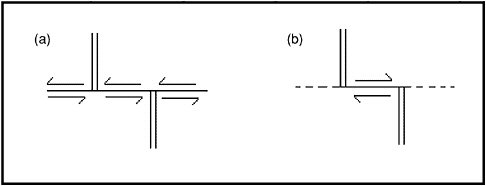

The second confirmation came from the study of earthquakes on the mid-ocean ridges. Horizontal displacements as large as several hundred kilometers had been documented for strike-slip faults on land, by H.W. Wellman for the Alpine fault in New Zealand and by M. Hill and T.W. Dibblee for the San Andreas (50). Even larger displacements, greater than 1000 kilometers, could be inferred from the offsets of magnetic anomalies observed across fracture zones in the Pacific Ocean (51). In a 1965 paper that laid out the basic ideas of the plate theory, the Canadian geophysicist J. Tuzo Wilson recognized that fracture zones are relics of faulting that is active only along the portion connecting two segments of a spreading ridge; he called these active portions transform faults (52). His model implied that the sense of motion across a transform fault would be opposite to the sense suggested by the offset of the ridge axis. The seismologist Lynn Sykes of Columbia University verified this prediction in an investigation of the focal mechanisms from transform-fault earthquakes (Figure 2.9).
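Wilson’s prediction can be reproduced with a toy kinematic model that loosely follows Figure 2.9(b). The setup is:

- An east-west fault along y = 0 joins a northern ridge segment at x = x_north to a southern segment at x = x_south.
- Each flank moves away from its own ridge at a half-spreading rate v.
- The sense of slip at any point on the fault follows from the velocities of the two sides.

The coordinates, rate, and function names are assumptions made for illustration.

```python
def apparent_offset(x_north: float, x_south: float) -> str:
    """Offset sense suggested by the ridge trace, viewed from the south side looking north."""
    return "sinistral" if x_north < x_south else "dextral"


def slip_sense(x: float, x_north: float, x_south: float, v: float = 1.0) -> str:
    """Sense of motion at position x on an east-west fault joining two ridge segments.

    The northern ridge segment extends north from (x_north, 0) and the southern one
    extends south from (x_south, 0). On each side of the fault, the plate moves east
    (+v) if it lies east of that side's ridge and west (-v) otherwise.
    """
    v_north_side = v if x > x_north else -v
    v_south_side = v if x > x_south else -v
    relative = v_north_side - v_south_side  # north side relative to south side; +x is east
    if relative == 0:
        return "no relative motion (inactive fracture zone)"
    # Looking north across the fault, east is to the right, so eastward motion is dextral.
    return "dextral" if relative > 0 else "sinistral"


X_NORTH, X_SOUTH = 0.0, 100.0  # hypothetical: northern segment offset 100 km to the west
print("apparent offset:", apparent_offset(X_NORTH, X_SOUTH))
for x in (-50.0, 50.0, 150.0):
    print(f"x = {x:6.1f} km:", slip_sense(x, X_NORTH, X_SOUTH))
```

In both this geometry and its mirror image, the active segment between the ridge tips slips opposite to the apparent offset. The extensions beyond the ridge tips show no relative motion, matching the aseismic fracture zones in Figure 2.9(b).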

FIGURE 2.9 Two interpretations of two ridge segments offset by a fault. (a) In the pre-plate-tectonic interpretation, the two ridge segments (double lines) would have been offset in a sinistral (left-lateral) sense along the fault (solid line). Earthquakes should occur along the entire fault line. (b) According to the plate-tectonic theory, the two ridge segments were never one continuous feature. Spreading of the seafloor away from the ridges causes dextral (right-lateral) motions only along the section of the fault between the two ridge segments (the transform fault). The extensions of the faults beyond the ridge segments, the fracture zones (dashed lines), are aseismic. Earthquake observations conclusively demonstrated the validity of interpretation (b) for the mid-ocean ridges. SOURCE: Modified from L. Sykes, Mechanism of earthquakes and nature of faulting on mid-ocean ridges, J. Geophys. Res., 72, 2131-2153, 1967. Copyright 1967 American Geophysical Union. Reproduced by permission of American Geophysical Union.

Sykes’s study was facilitated by the rapidly accumulating collection of seismograms, readily available on photomicrofiche, from the new World Wide Standardized Seismographic Network (WWSSN) set up under Project Vela Uniform. These high-quality seismometers had good timing systems, fairly broad bandwidth, and a nearly uniform response to ground motions. They were installed and permanently staffed around the world at recording sites with relatively low background noise levels (53). The high density of stations allowed smaller events to be located precisely and their focal mechanisms to be determined more rapidly and accurately than ever before. Among the results were much more accurate maps of global seismicity, which clearly delineated the major plate boundaries, as well as the Wadati-Benioff zones of deep seismicity (Figure 2.10).

Subduction of Oceanic Lithosphere

If the Earth’s surface area is to remain constant, then the creation of new oceanic crust at the ridge crests necessarily implies that some old crust is being recycled back into the mantle. This inference was consistent with theories of mantle convection that attributed the volcanic arcs and linear zones of compressive orogenesis to convective downwellings (54). David Griggs had discussed this idea as early as 1939, when he described “a convection cell covering the whole of the Pacific basin, comprising sinking peripheral currents localizing the circum-Pacific mountains and rising currents in the center” (55). Griggs belonged to a growing group of “mobilists” who espoused the view that the Earth’s solid mantle is actively convecting like a fluid heated from below, causing large horizontal displacements of the crust, including continental drift (56). The alternative, expanding-Earth hypothesis held that the planetary radius is increasing, perhaps owing to radioactive heating or possibly to a universal decrease in gravitational strength with time, and that seafloor spreading accommodates the associated increase in surface area (57). In that view, new oceanic crust created at the spreading centers would not have to be balanced by the sinking of old crust back into the mantle.

Because of this controversy, as well as the geologic complexity of the problem, subduction was the last piece of the plate-tectonic puzzle to fall into place (58). While the system of oceanic ridges and transform faults fit neatly together in seafloor spreading, the compressional arcs and mountain belts juxtaposed all types of active faulting, which continued to baffle geologists. Benioff had pointed out the asymmetric polarity of the island arcs, correctly proposing that the deep oceanic trenches are surface expressions of giant reverse faults (59). Robert Coats used this idea to account both for the initial formation of island arcs such as the Aleutians and for the geochemical data bearing on the development of the andesitic stratovolcanoes characteristic of these arcs (60). Benioff’s model was based on several misconceptions, however, including the assumption that intermediate- and deep-focus seismicity could be explained by extrapolating trench-type reverse faulting into the mid-mantle transition zone. In fact, the focal mechanisms of most earthquakes with hypocenters deeper than 70 kilometers do not agree with Benioff’s model of reverse faulting (61).

FIGURE 2.10 Locations of earthquake epicenters with body-wave magnitudes greater than 4.5 for the period 1960-1967, which incorporated the improved data of the WWSSN. Computer-generated epicenter maps such as this one first became available in about 1964 and were used to delineate the major plate boundaries and the descending slabs of cold oceanic lithosphere. Dots are epicenters reported by the U.S. Coast and Geodetic Survey. SOURCE: B.L. Isacks, J. Oliver, and L.R. Sykes, Seismology and the new global tectonics, J. Geophys. Res., 73, 5855-5899, 1968. Copyright 1968 American Geophysical Union. Reproduced by permission of American Geophysical Union.

The definitive evidence for “thrust tectonics” finally arrived in the form of the great 1964 Alaska earthquake (Box 2.3). The enormous energy released in this event (~3 × 10¹⁸ joules) set the Earth ringing like a bell and allowed precise studies of the terrestrial free oscillations, the longest of which has a period of about 54 minutes (62). A permanent strain of 10⁻⁸ was recorded by the Benioff strainmeter on Oahu, more than 4000 kilometers away, consistent with a fault-dislocation model of the earthquake (63). However, the high-amplitude waves drove most of the pendulum seismometers offscale (64). Moreover, field geologists could not find the fault; all ground breaks were ascribable to secondary effects. What they did observe was a systematic pattern of large vertical motions: uplifts as high as 12 meters and depressions as deep as 2.3 meters. These could easily be mapped along the rugged coastlines by observing the displacement of beaches and the stranded colonies of sessile marine organisms such as barnacles (just as Darwin had done for the 1835 Chile earthquake). By combining this pattern with the seismological and geodetic data, they inferred that the rupture represented the slippage of the Pacific Ocean crust beneath the continental margin of southern Alaska along a huge thrust fault. Geologist George Plafker concluded that “arc structures are sites of down-welling mantle convection currents and that planar seismic zones dipping beneath them mark the zone of shearing produced by downward-moving material thrust against a less mobile block of the crust and upper mantle” (65). By connecting the Alaska megathrust with the more steeply inclined plane of deeper seismicity under the Aleutian Arc, Plafker articulated one of the central tenets of plate tectonics.
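Box 2.3 sizes this event from a rupture plane of about 800 by 200 kilometers and an average offset of more than 10 meters. The sketch below turns those numbers into two quantities:

- Seismic moment, assuming the standard relation M₀ = μAD with an assumed, typical crustal rigidity μ = 3 × 10¹⁰ Pa.
- Moment magnitude, assuming the IASPEI convention Mw = (2/3)(log₁₀ M₀ − 9.1) with M₀ in newton-meters.

Neither formula is written out in this section, so both are stated assumptions.

```python
import math

RIGIDITY_PA = 3.0e10  # assumed shear modulus of crustal rock


def seismic_moment(length_km: float, width_km: float, slip_m: float,
                   rigidity_pa: float = RIGIDITY_PA) -> float:
    """Seismic moment M0 = mu * A * D, in newton-meters."""
    area_m2 = (length_km * 1e3) * (width_km * 1e3)
    return rigidity_pa * area_m2 * slip_m


def moment_magnitude(m0_newton_m: float) -> float:
    """Moment magnitude Mw = (2/3) * (log10 M0 - 9.1), with M0 in N*m."""
    return (2.0 / 3.0) * (math.log10(m0_newton_m) - 9.1)


# Box 2.3 dimensions; 10 m is a lower bound on the average offset.
potency_km3 = 800.0 * 200.0 * (10.0 / 1e3)  # area (km^2) times slip (km)
m0 = seismic_moment(800.0, 200.0, 10.0)
print(f"area x slip: {potency_km3:.0f} km^3")
print(f"M0 = {m0:.2e} N*m, Mw = {moment_magnitude(m0):.2f}")
```

Because the offset averaged more than 10 meters, the result (Mw a little above 9.0) is a lower bound. It is close to the Mw 9.2 commonly cited for the 1964 earthquake.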

Plafker’s conclusions were bolstered by more accurate sets of focal mechanisms that William Stauder and his colleagues at St. Louis University derived (66). Dan McKenzie and Robert Parker took the next major step toward completion of the plate theory in 1967, when they showed that slip vectors from Stauder’s mechanisms of Alaskan earthquakes could be combined with the azimuth of the San Andreas fault to compute a consistent pole of instantaneous rotation for the Pacific and North American plates (67). At the same time, Jason Morgan’s analysis of seafloor spreading rates and transform-fault azimuths demonstrated the global consistency of plate kinematics (68).
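A pole of instantaneous rotation captures the fact that rigid plate motion on a sphere is a rotation. The velocity of a point at position r is v = ω × r, where ω points along the axis through the pole. Relative motion at every point is therefore tangent to a small circle about the pole, which is why slip vectors and transform-fault azimuths can jointly constrain the pole’s location. The sketch below computes the local speed and azimuth of motion for a given pole. The function names, the spherical Earth radius, and the pole used in the check are assumptions, not McKenzie and Parker’s values.

```python
import math

EARTH_RADIUS_M = 6.371e6  # assumed mean radius of a spherical Earth


def unit_vector(lat_deg: float, lon_deg: float) -> tuple:
    """Cartesian unit vector for a point given in geographic degrees."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def plate_velocity(site_lat, site_lon, pole_lat, pole_lon, rate_deg_per_myr):
    """Speed (mm/yr) and azimuth (degrees clockwise from north) at a site for
    rigid rotation about an Euler pole, using v = omega x r."""
    omega_rad_per_yr = math.radians(rate_deg_per_myr) / 1e6
    omega = tuple(omega_rad_per_yr * c for c in unit_vector(pole_lat, pole_lon))
    r = tuple(EARTH_RADIUS_M * c for c in unit_vector(site_lat, site_lon))
    v = cross(omega, r)  # meters per year, Cartesian components
    lat, lon = math.radians(site_lat), math.radians(site_lon)
    east = (-math.sin(lon), math.cos(lon), 0.0)
    north = (-math.sin(lat) * math.cos(lon), -math.sin(lat) * math.sin(lon), math.cos(lat))
    v_e, v_n = dot(v, east), dot(v, north)
    speed_mm_per_yr = math.hypot(v_e, v_n) * 1e3
    azimuth = math.degrees(math.atan2(v_e, v_n)) % 360.0
    return speed_mm_per_yr, azimuth


# Check case: a pole at the geographic north pole moves an equatorial site due east.
speed, azimuth = plate_velocity(0.0, 0.0, 90.0, 0.0, 1.0)
print(f"{speed:.1f} mm/yr toward azimuth {azimuth:.1f} degrees")
```

Speed grows with the sine of the angular distance from the pole, so sites near the pole barely move while sites 90 degrees away move fastest.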

Clarity came with the realization that the plate is a cold mechanical boundary layer that can act as a stress guide, capable of transmitting forces for thousands of kilometers from one boundary to another (69). The essential elements of the subduction process were brought together in a 1968 paper by seismologists Brian Isacks, Jack Oliver, and Lynn Sykes (70). In addition to obtaining improved data on earthquake locations and focal mechanisms, they delineated a dipping slab of mantle material with distinctively high seismic velocity and low attenuation, which coincided with the Wadati-Benioff planes of deep seismicity (71). They found that they could account for their results, as well as most of the other data on plate tectonics, in terms of three mechanical layers, which J. Barrell and R.A. Daly had postulated earlier in the century to explain the vertical motions associated with isostatic compensation. A cold, strong lithosphere was generated by seafloor spreading at the ridge axis and subsequent conductive cooling of the oceanic crust and upper mantle, attaining a thickness of about 100 kilometers. It slid over and eventually subducted back into a hot, weak asthenosphere. Earthquakes of the Wadati-Benioff zones were generated primarily by stresses internal to the descending slab of oceanic lithosphere when it encountered a stronger, interior mesosphere at a depth of about 700 kilometers.
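Growth of the lithosphere by conductive cooling is often sketched with the half-space cooling model, which this report does not spell out. Temperature at depth z beneath seafloor of age t is T = T_s + (T_m − T_s) erf(z / (2√(κt))), so any chosen isotherm deepens in proportion to √(κt). The snippet below makes two conventional but assumed choices:

- A thermal diffusivity κ = 10⁻⁶ m²/s.
- The base of the lithosphere sits where T reaches 90 percent of the mantle temperature contrast.

```python
import math

KAPPA_M2_PER_S = 1.0e-6     # assumed thermal diffusivity of mantle rock
SECONDS_PER_MYR = 3.15576e13
ISOTHERM_FRACTION = 0.9     # assumed: base where (T - Ts)/(Tm - Ts) = 0.9


def inverse_erf(y: float, lo: float = 0.0, hi: float = 10.0) -> float:
    """Solve erf(x) = y for 0 <= y < 1 by bisection."""
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if math.erf(mid) < y:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def lithosphere_thickness_km(age_myr: float) -> float:
    """Depth of the chosen isotherm for half-space cooling of seafloor of the given age."""
    eta = inverse_erf(ISOTHERM_FRACTION)  # about 1.16
    diffusion_length_m = 2.0 * math.sqrt(KAPPA_M2_PER_S * age_myr * SECONDS_PER_MYR)
    return eta * diffusion_length_m / 1e3


for age in (10, 40, 80):
    print(f"{age:3d} Myr: {lithosphere_thickness_km(age):6.1f} km")
```

The half-space model thickens without limit. Observations of old seafloor suggest that the lithosphere levels off near the thickness of roughly 100 kilometers quoted above, which is why plate models with a fixed base are also used.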

Deformation of the Continents

Plate tectonics was astounding in its simplicity and the economy with which it explained so many previously disparate geological observations. In the late 1960s and 1970s, geological data were reappraised in the light of the “new global tectonics,” leading to some important extensions of the basic plate theory. However, a major problem was the obvious contrast in mechanical behavior of the oceanic and continental lithospheres. Geophysical surveys in the ocean basins revealed much narrower plate boundaries than observed on land. The volcanic rifts of active crust formation along the mid-ocean ridges were found to be only a few kilometers wide, for example, whereas volcanic activity in continental rifts could be mapped over tens to hundreds of kilometers. Similar differences were observed for transform faults; in the oceans, the active slip is confined to very narrow zones, in marked contrast to the broad belts of continental strike-slip tectonics, which often involve many distributed, interdependent fault systems. For example, only about two-thirds of the relative motion between the Pacific and North American plates turned out to be accommodated along the infamous San Andreas fault; the remainder is taken up on subsidiary faults and by oblique extension in the Basin and Range Province (see Section 3.2).

In 1970, Tanya Atwater (72) explained the geological evolution of western North America over the last 30 million years as the consequence of the North American plate overriding an extension of the East Pacific Rise along a subduction zone paralleling the West Coast. Her synthesis accounts for seemingly disparate events (e.g., andesitic volcanism in northern California, strike-slip faulting along the San Andreas, compressional tectonics in the Transverse Ranges, rifting in the Gulf of California) and was grounded in the kinematical principles of plate tectonics (73). Her paper did much to convince geologists that the new theory was a useful framework for understanding the complexities of continental tectonics.

BOX 2.3 Prince William Sound, Alaska, 1964

The earthquake nucleated beneath Prince William Sound at about 5:36 p.m. on Good Friday, March 27, 1964. As the rupture spread outward, its progress to the north and east was stopped at the tectonic transition beneath the Chugach Mountains, behind the port of Valdez, Alaska. To the southwest, however, it continued unimpeded at 3 kilometers per second down the Alaska coastline, paralleling the axis of the Aleutian Trench for more than 700 kilometers, to beyond Kodiak Island. The district geologist of Valdez, Ralph G. Migliaccio, filed the following report:¹

Within seconds of the initial tremors, it was apparent to eyewitnesses that something violent was occurring in the area of the Valdez waterfront … Men, women, and children were seen staggering around the dock, looking for something to hold onto. None had time to escape, since the failure was so sudden and violent. Some 300 feet of dock disappeared. Almost immediately a large wave rose up, smashing everything in its path…. Several people stated the wave was 30 to 40 feet high, or more…. This wave crossed the waterfront and, in some areas reached beyond McKinley Street…. Approximately 10 minutes after the initial wave receded, a second wave or surge crossed the waterfront carrying large amounts of wreckage, etc…. There followed a lull of approximately 5 or 6 hours during which time search parties were able to search the waterfront area for possible survivors. There were none.

The height of the tsunami measured 9.1 meters at Valdez, but 24.2 meters at Blackstone Bay on the outer coast of the Kodiak Island group and 27.4 meters at Chenega on the Kenai Peninsula. The city of Anchorage, 100 kilometers west of the epicenter, was shielded from the big tsunami. It nonetheless experienced considerable damage, especially in the low-lying regions of unconsolidated sediment that became liquefied by the shaking. Robert B. Atwood, editor of the Anchorage Daily Times, who lived in the Turnagain Heights residential section, described his experiences during the landslide:

I had just started to practice playing the trumpet when the earthquake occurred. In a few short moments it was obvious that this earthquake was no minor one…. I headed for the door … Tall trees were falling in our yard. I moved to a spot where I thought it would be safe, but, as I moved, I saw cracks appear in the earth. Pieces of the ground in jigsaw-puzzle shapes moved up and down, tilted at all angles. I tried to move away, but more appeared in every direction…. Table-top pieces of earth moved upward, standing like toadstools with great overhangs, some were turned at crazy angles. A chasm opened beneath me. I tumbled down … Then my neighbor’s house collapsed and slid into the chasm. For a time it threatened to come down on top of me, but the earth was still moving, and the chasm opened to receive the house.

Migliaccio and Atwood had witnessed the second largest earthquake of the twentieth century. The plane of the rupture inferred from the dimensions of the aftershock zone was the size of Iowa (800 kilometers by 200 kilometers), and geodetic data showed that the offset along the fault averaged more than 10 meters. The product of these three numbers, which is proportional to a measure of earthquake size called the seismic moment (Equation 2.6), was thus roughly 2000 cubic kilometers, about 100 times greater than for the 1906 San Francisco earthquake. Among instrumentally recorded earthquakes, only the Chilean earthquake of 1960, which occurred in a similar tectonic setting, was bigger (by a factor of about 3). Both of these great earthquakes engendered tsunamis of large amplitude that propagated across the Pacific Ocean basin and caused damage and death thousands of kilometers from their source. Along the Oregon-California coast, 16 people were killed by the Alaska tsunami. In Crescent City, California, a series of large tsunamis inundated the harbor, beginning about four and a half hours after the earthquake, with the third and fourth waves causing the most damage. After the first two had struck, seven people returned to a seaside tavern to recover their valuables. Since the ocean seemed to have returned to normal, they remained to have a drink and were caught by the third wave, which killed five of them.²

Convergent plate boundaries in the oceans were observed to be broader than the other boundary types, with the zone of geologic activity on the surface encompassing the trench itself, the deformed sediments and basement rocks of the forearc sequence, the volcanic arc that overlies the subducting slab, and sometimes an extending back-arc basin (74). Nevertheless, the few-hundred-kilometer widths of the ocean-ocean convergence zones did not compare with the extensive orogenic terrains that mark major continental collisions. The controlling factors were recognized to be the density and strength of the silica-rich continental crust, which are significantly lower than those of the more iron- and magnesium-rich oceanic crust and upper mantle (75). When caught between two converging plates, the weak, buoyant continental crust resists subduction and piles up into arcuate mountain belts and thickened plateaus that erode into distinctive sequences of sedimentary rock. This distributed deformation also causes metamorphism and melting of the crust, generating siliceous magmas that intrude the crust’s upper layers to form large granitic batholiths. In some instances, the redistribution of buoyancy-related stresses can lead to a reversal in the direction of subduction.

W. Hamilton used these consequences of plate tectonics to explain modern examples of mountain building, and J. Dewey and J. Bird used them to account for the geologic structures observed in ancient mountain belts (76).

Much of the early work on convergent plate boundaries interpreted mountain building in terms of two-dimensional models that consider deformations only in the vertical planes perpendicular to the strikes of the convergent zones. During a protracted continent-continent collision, however, crustal material is eventually squeezed sideways out of the collision zone along lateral systems of strike-slip faults. The best modern example is the Tethyan orogenic belt, which extends for 10,000 kilometers across the southern margin of Eurasia. At the eastern end of this belt, the convergence of the Indian subcontinent with Asia has uplifted the Himalaya, raised the great plateau of Tibet, re-elevated the Tien Shan Mountains to heights in excess of 5 kilometers, and caused deformations up to 2000 kilometers north of the Himalayan front. Earthquakes within these continental deformation zones have been frequent and dangerous.

In a series of studies, P. Molnar and P. Tapponnier explained the orientation of the major faults in southern Asia, their displacements, and the timing of key tectonic events as a consequence of the collision of the Indian continent with Asia (77). They investigated the active faulting in central Asia using photographs from the Earth Resources Technology Satellite, magnetic lineations on the ocean floor, and teleseismically determined focal mechanisms of recent earthquakes. By combining these remote-sensing observations with the plate-tectonic information, they demonstrated that strike-slip faulting has played a dominant role in the mature phase of the Himalayan collision (78).

The more diffuse nature of continental seismicity and deformation was consistent with the notion that the continental lithosphere is somehow weaker than the oceanic lithosphere, but a detailed picture required a better understanding of the mechanical properties of rocks. When subjected to differential compression at moderate temperatures and pressures, most rocks fail by brittle fracture according to the Coulomb criterion (Equation 2.1). Extensive laboratory experiments on carbonates and silicates showed that for all modes of brittle failure, the coefficient of friction µ usually lies in the range 0.6 to 0.8, with only a weak dependence on the rock type, pressure, temperature, and properties of the fault surface. This behavior has come to be known as Byerlee’s law (79), and it implies that the frictional strength of continental and oceanic lithospheres should be about the same, at least at shallow depths.
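Byerlee’s compilation is often summarized as a two-part linear law relating the shear stress τ needed to slide on a preexisting fault to the normal stress σn:

- τ = 0.85σn for σn up to about 200 MPa.
- τ = 50 MPa + 0.6σn at higher normal stress.

The sketch below encodes that commonly quoted form; the coefficients come from Byerlee’s 1978 summary, not from this report. It evaluates the law at a few depths under two simplifying assumptions: σn equals the lithostatic load ρgz with ρ = 2700 kg/m³, and pore pressure is ignored.

```python
CRUST_DENSITY = 2700.0  # kg/m^3, assumed
G = 9.81                # m/s^2


def byerlee_shear_strength_mpa(normal_stress_mpa: float) -> float:
    """Frictional shear strength (MPa) from the two-part form of Byerlee's law."""
    if normal_stress_mpa < 0:
        raise ValueError("normal stress must be non-negative (compression positive)")
    if normal_stress_mpa <= 200.0:
        return 0.85 * normal_stress_mpa
    return 50.0 + 0.6 * normal_stress_mpa


def lithostatic_stress_mpa(depth_km: float) -> float:
    """Overburden rho*g*z in MPa, with no pore-pressure correction."""
    return CRUST_DENSITY * G * depth_km * 1e3 / 1e6


for z in (2.0, 5.0, 10.0, 15.0):
    sn = lithostatic_stress_mpa(z)
    tau = byerlee_shear_strength_mpa(sn)
    print(f"z = {z:4.1f} km: sigma_n = {sn:6.1f} MPa, tau = {tau:6.1f} MPa, tau/sigma_n = {tau / sn:.2f}")
```

The effective friction τ/σn stays between about 0.6 and 0.85 at every depth. Nothing in the law refers to rock type, which is the basis for expecting similar shallow frictional strength in continental and oceanic lithosphere.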

When the temperature and pressure are high enough, however, rocks deform by ductile flow rather than brittle failure, and the onset of this ductility depends on composition. Investigations of ductile flow began in 1911 with Theodore von Kármán’s triaxial tests on jacketed samples of marble.

It was found that the strength of ductile rocks decreases rapidly with increasing temperature and that their rheology approaches that of a viscous fluid. The brittle-ductile transition thus explained the plate-like behavior of the oceanic lithosphere and the fluid-like behavior of its subjacent, convecting mantle. Rock mechanics experiments further revealed that ductility sets in at lower temperatures in quartz-rich rocks than in olivine-rich rocks, typically at midcrustal depths in the continents. The ductile behavior of the lower continental crust inferred from laboratory data, which was consistent with the lack of earthquakes at these depths, thus explained the less plate-like behavior of the continents (80).
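The rapid loss of ductile strength with temperature is usually described with a power-law creep relation, ε̇ = A σⁿ exp(−Q/RT), which this report does not state explicitly. Solving for the stress needed to sustain a given strain rate gives σ = (ε̇/A)^(1/n) exp(Q/(nRT)), so strength falls exponentially as temperature rises. The sketch below shows the size of that effect using placeholder parameters: A, n, Q, and the strain rate are hypothetical round numbers, not a laboratory fit for any rock.

```python
import math

R_GAS = 8.314  # J/(mol K)

# Placeholder creep parameters: hypothetical round numbers, not fitted to any rock.
A_CREEP = 1.0e-8        # MPa^-n s^-1
N_EXPONENT = 3.0
Q_ACTIVATION = 150.0e3  # J/mol
STRAIN_RATE = 1.0e-14   # s^-1, an assumed geologic strain rate


def creep_strength_mpa(temp_k: float, strain_rate: float = STRAIN_RATE) -> float:
    """Differential stress (MPa) needed to sustain power-law creep at the given strain rate."""
    return (strain_rate / A_CREEP) ** (1.0 / N_EXPONENT) * math.exp(
        Q_ACTIVATION / (N_EXPONENT * R_GAS * temp_k)
    )


for temp_c in (300, 400, 500, 600):
    print(f"{temp_c} C: {creep_strength_mpa(temp_c + 273.15):8.1f} MPa")
```

A temperature-independent frictional strength (such as Byerlee’s law) can be combined with a creep law of this kind by taking the weaker of the two at each depth. The result is the familiar lithospheric strength envelope, whose peak marks the brittle-ductile transition.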

2.5 EARTHQUAKE MECHANICS

Gilbert and Reid recognized the distinction between fracture strength and frictional strength (81), and they portrayed earthquakes as frictional instabilities on two-dimensional faults in a three-dimensional elastic crust, driven to failure by slowly accumulating tectonic stresses—a view entirely consistent with plate tectonics. Although earthquakes surely involve some nonelastic, volumetric effects such as fluid flow, cracking of new rock, and the expansion of gouge zones, Gilbert and Reid’s idealization still forms the conceptual framework for much of earthquake science, both basic and applied. Nevertheless, because the friction mechanism was not obviously compatible with deep earthquakes, as described below, their view that earthquakes are frictional instabilities on faults had, by the time Wilson wrote his 1965 paper on plate tectonics, been considered and rejected by some scientists.

The Instability Problem

Deep-focus earthquakes presented a major puzzle. Seismologists had found that the deepest events, 600 to 700 kilometers below the surface, are shear failures just like shallow-focus earthquakes. They also found that the decrease in apparent shear stress during these events is on the order of 10 megapascals, about the same size as the stress drops estimated for shallow shocks. According to a Coulomb criterion (Equation 2.1), the shear stress needed to induce frictional failure on a fault should be comparable to the lithostatic pressure, which exceeds 20,000 megapascals in zones of deep seismicity. Shear stresses of this magnitude are impossibly high. Moreover, if the stress drop approximates the absolute stress, as most seismologists believe, such stresses would conflict with the observed stress drops of about 10 megapascals (82).

Furthermore, if earthquakes result from a frictional instability, the motion across a fault must at some point be accelerated by a drop in the frictional resistance. A spontaneous rupture like an earthquake thus requires some type of strain weakening, but the rock deformations observed in the laboratory at high pressure and temperature tended to display strain hardening during ductile creep. In their classic 1960 treatise Rock Deformation, D. Griggs and J. Handin (83) concluded that the old theory of earthquakes’ originating by ordinary fracture with sudden loss of cohesion was invalid for deep earthquakes. They did note, however, that extremely high fluid pressures at depth could make the mechanism they presumed to hold for shallow events viable for deep events as well.

A renewed impetus was given to the frictional explanation in 1966, when W.F. Brace and Byerlee demonstrated that the well-known engineering phenomenon of stick-slip also occurs in geologic materials (84). Experimenting on samples with preexisting fault surfaces, they observed that the stress drops in the laboratory slip events were only a small fraction of the total stress. This implies that the stress drops during crustal earthquakes could be much smaller than the rock strength, eliminating the major seismological discrepancy. Subsequent experiments at the Massachusetts Institute of Technology found a transition from stick-slip behavior to ductile creep at about 350°C (85). Stick-slip instabilities thus matched the properties of earthquakes in the upper continental crust, which were usually confined above this brittle-ductile transition, although this could not explain the deeper shocks in subduction zones. In addition, Brace and Byerlee’s work focused theoretical attention on how frictional instabilities depend on the elastic properties of the testing machine or fault system (86).
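A classic idealization of the stick-slip cycle is a block dragged by a spring, with friction that drops abruptly from a static value μs to a dynamic value μd once sliding starts. If slip is fast compared with loading, the block overshoots the new equilibrium and stops with a stress drop of 2(μs − μd)σn. This can be a small fraction of the peak stress μsσn when the two coefficients are close. The sketch below integrates that model numerically for a single slip event. The friction coefficients, normal stress, unit mass and stiffness, and the assumption of undamped inertial motion are all hypothetical choices.

```python
def stick_slip_stress_drop(mu_s: float, mu_d: float, sigma_n: float,
                           stiffness: float = 1.0, mass: float = 1.0,
                           dt: float = 1e-4) -> float:
    """Stress drop in one slip event of an undamped spring-block slider.

    Sliding starts when the spring stress reaches mu_s * sigma_n. While sliding,
    friction stays at mu_d * sigma_n and the load point is held fixed (slip is
    assumed fast compared with loading). Sliding stops when the block velocity
    returns to zero. Units are arbitrary but must be consistent.
    """
    if not 0.0 <= mu_d < mu_s:
        raise ValueError("expected 0 <= mu_d < mu_s")
    tau_start = mu_s * sigma_n
    friction = mu_d * sigma_n
    slip, velocity = 0.0, 0.0
    while True:
        spring_stress = tau_start - stiffness * slip
        velocity += (spring_stress - friction) / mass * dt  # semi-implicit Euler step
        if velocity <= 0.0:
            break  # the block has come to rest: the slip event is over
        slip += velocity * dt
    return stiffness * slip  # drop in spring (shear) stress during the event


drop = stick_slip_stress_drop(mu_s=0.70, mu_d=0.65, sigma_n=100.0)
peak = 0.70 * 100.0
print(f"stress drop {drop:.2f} of peak stress {peak:.0f} ({drop / peak:.0%})")
print(f"overshoot estimate 2*(mu_s - mu_d)*sigma_n = {2 * (0.70 - 0.65) * 100.0:.2f}")
```

In this idealized model the stress drop does not depend on the spring stiffness, which changes only the amount of slip. Making instability itself depend on stiffness requires the rate- and slip-dependent friction behavior described in the following paragraphs.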

During the next decade, the servo-controlled testing machine was developed, in which the load levels and strain rates were precisely regulated, so that the postfailure part of the load-deformation curve in brittle materials could be followed without the stick-slip instabilities encountered with less stiff machines (87). Several new aspects of rock friction were investigated, including memory effects and dilatancy (88). The subsequent development of high-precision double-direct-shear and rotary-shear devices (89) allowed detailed measurements of friction for a wide range of materials under variable sliding conditions. This work documented three interrelated phenomena:

- Static friction µs depends on the history of sliding and increases logarithmically with the time two surfaces are held in stationary contact (90).
- Under steady-state sliding, the dynamic friction µd depends logarithmically on the slip rate V, with a coefficient that can be either positive (velocity strengthening) or negative (velocity weakening) (91).
- When a slipping interface is subjected to a sudden change in the loading velocity, the frictional properties evolve to new values over a characteristic slipping distance Dc, measured in microns and interpreted as the slip necessary to renew the microscopic contacts between the two rough surfaces (92).

Between 1979 and 1983, J.H. Dieterich and A.L. Ruina (93) integrated these experimental results into a unified constitutive theory in which the slip rate V appears explicitly in the friction equation and the frictional strength evolves with a characteristic time set by the mean lifetime Dc/V of the surface contacts. Tse and Rice (94) interpreted the transition from stick-slip to creep near 350°C as a transition from rate weakening to rate strengthening in the crust, and they showed that this depth dependence allows models of earthquake sequences on a crustal strike-slip fault to reproduce primary features inferred for natural events, such as the depth range of seismic slip and rapid afterslip below it.
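A velocity-step test shows how such a theory reproduces the three laboratory phenomena listed above. The sketch assumes the widely used form µ = µ0 + a ln(V/V*) + b ln(V*θ/Dc) with the "aging" evolution law dθ/dt = 1 − Vθ/Dc; the state variable θ, the constants a and b, and the reference velocity V* are not defined in this report and are introduced here for illustration, with hypothetical values. Other evolution laws (for example, Ruina's slip law) give somewhat different transients.

```python
import math

def rate_state_mu(v, theta, mu0, a, b, v_ref, d_c):
    """Rate-and-state friction: mu0 + a ln(V/V*) + b ln(V* theta / Dc)."""
    return mu0 + a * math.log(v / v_ref) + b * math.log(v_ref * theta / d_c)

def velocity_step(mu0, a, b, d_c, v0, v1, slips):
    """Friction vs. slip after a sudden jump in sliding rate from v0 to v1.

    Starts at steady state for v0 (theta = d_c / v0). With V held at v1, the
    aging law integrates in closed form over slip:
    theta = d_c/v1 + (d_c/v0 - d_c/v1) * exp(-slip / d_c).
    """
    mus = []
    for slip in slips:
        theta = d_c / v1 + (d_c / v0 - d_c / v1) * math.exp(-slip / d_c)
        mus.append(rate_state_mu(v1, theta, mu0, a, b, v0, d_c))
    return mus

d_c = 10e-6                                    # 10 microns (hypothetical)
slips = [k * d_c / 2 for k in range(41)]       # 0 to 20 Dc of slip
mus = velocity_step(mu0=0.6, a=0.010, b=0.015, d_c=d_c,
                    v0=1e-6, v1=1e-5, slips=slips)
print(f"direct effect: {mus[0]:.4f}, new steady state: {mus[-1]:.4f}")
```

Friction first jumps by a ln(V1/V0) (the direct effect), then decays over a few Dc to a new steady state shifted by (a − b) ln(V1/V0). With a − b < 0, as chosen here, the surface is velocity weakening and can fail unstably; a − b > 0 gives the stable, creeping behavior that Tse and Rice assigned to greater depths.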

Scaling Relations

According to the dislocation model of earthquakes, slip on a small planar fault is equivalent to a double-couple force system, where the total moment M0 of each couple is proportional to the product of the fault’s area A and its average slip u:

$$M_0 = G\,A\,u \qquad (2.6)$$

The constant of proportionality G is the elastic shear modulus, a measure of the resistance to shear deformation of the rock mass containing the fault, which can be estimated from the shear-wave velocity. For wavelengths that are long compared with the dimensions of the dislocation, the amplitude of the radiation increases in proportion to M0, so that this static seismic moment can be measured directly from seismograms. K. Aki made the first determination of seismic moment from the long-period surface waves of the 1964 Niigata earthquake (95). Many subsequent studies have demonstrated a consistent relationship between seismic moment and the various magnitude scales developed from the Richter standard; the results can be expressed as a general moment magnitude MW of the form

$$M_W = \tfrac{2}{3}\log_{10} M_0 - 6.03, \qquad M_0 \text{ in N·m} \qquad (2.7)$$

Equation 2.7 defines a unified magnitude scale (96) based on a physical measure of earthquake size. Calculating magnitude from seismic moment avoids the saturation effects of other magnitude estimates, and this procedure became the seismological standard for determining earthquake size. The 1960 Chile earthquake had the largest moment of any known seismic event, 2 × 10²³ newton-meters, corresponding to MW = 9.5 (Table 2.1).
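The chain from fault parameters to moment (M0 = GAu) to moment magnitude can be written in a few lines. This sketch assumes the constant −6.03 for M0 in newton-meters, which is the Hanks-Kanamori form MW = (2/3) log10 M0 − 10.7 with M0 in dyne-centimeters converted to SI units; catalogs that use slightly different constants can differ by about 0.1 magnitude unit.

```python
import math

def seismic_moment(shear_modulus_pa, area_m2, avg_slip_m):
    """M0 = G * A * u, in newton-meters."""
    return shear_modulus_pa * area_m2 * avg_slip_m

def moment_magnitude(m0_nm):
    """MW = (2/3) log10(M0) - 6.03, with M0 in N-m (assumed constant)."""
    return (2.0 / 3.0) * math.log10(m0_nm) - 6.03

# Seismic moments taken from Table 2.1
for name, m0 in [("Chile 1960", 2.0e23), ("Niigata 1964", 3.0e20),
                 ("Landers 1992", 1.1e20)]:
    print(f"{name}: M0 = {m0:.1e} N-m -> MW = {moment_magnitude(m0):.1f}")
```

Because MW grows as (2/3) log10 M0, one magnitude unit corresponds to a factor of 10^1.5, or about 32, in seismic moment.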

TABLE 2.1 Size Measures of Some Important Earthquakes

|

Date |

Location |

MS |

MW |

M0 (1018 N-m) |

|

April 18, 1906 |

San Francisco |

8.25 |

8.0 |

1,000 |

|

Sept. 1, 1923 |

Kanto, Japan |

8.2 |

7.9 |

850 |

|

Nov. 4, 1952 |

Kamchatka |

8.25 |

9.0 |

35,000 |

|

March 9, 1957 |

Aleutian Islands |

8.25 |

9.1 |

58,500 |

|

May 22, 1960 |

Chile |

8.3 |

9.5 |

200,000 |

|

March 25, 1964 |

Alaska |

8.4 |

9.2 |

82,000 |

|

June 16, 1964 |

Niigata, Japan |

7.5 |

7.6 |

300 |

|

Feb. 4, 1965 |

Aleutian Islands |

7.75 |

8.7 |

12,500 |

|

May 31, 1970 |

Peru |

7.4 |

8.0 |

1,000 |

|

Feb. 4, 1975 |

Haicheng, China |

7.4 |

6.9 |

31 |

|

July 28, 1976 |

Tangshan, China |

7.9 |

7.6 |

280 |

|

Aug. 19, 1977 |

Sumba |

7.9 |

8.3 |

3,590 |

|

Oct. 28, 1983 |

Borah Peak |

7.3 |

6.9 |

31 |

|

Sept. 19, 1985 |

Mexico |

8.1 |

8.0 |

1,100 |

|

Oct. 18, 1989 |

Loma Prieta |

7.1 |

6.9 |

27 |

|

June 28, 1992 |

Landers |

7.5 |

7.3 |

110 |

|

Jan. 17, 1994 |

Northridge |

6.6 |

6.7 |

12 |

|

June 9, 1994 |

Bolvia |

7.0a |

8.2 |

2,630 |

|

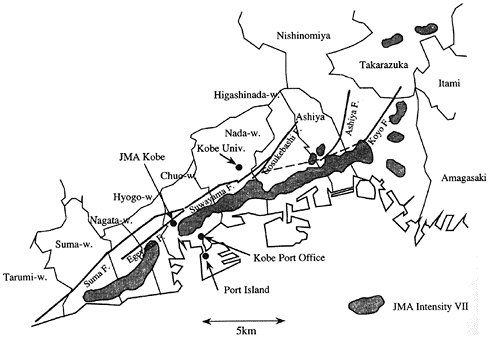

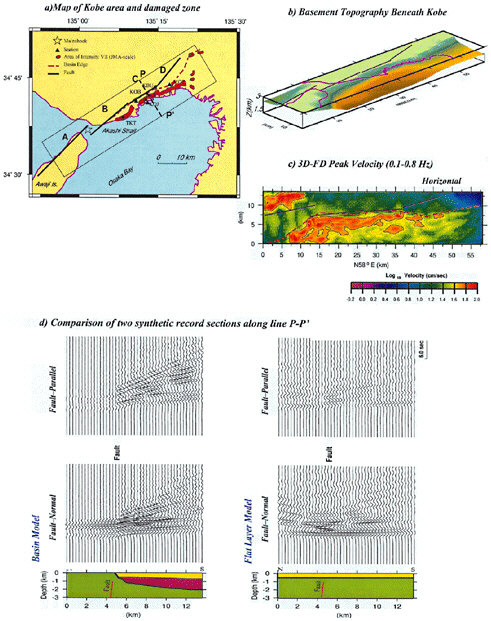

Jan. 16, 1995 |

Hyogo-ken Nanbu, Japan |

6.8 |

6.9 |

24 |

|

Aug. 17, 1999 |

Izmit, Turkey |

7.8 |

7.4 |

242 |

|

Sept. 20, 1999 |

Chi-Chi, Taiwan |

7.7 |

7.6 |

340 |

|

Oct. 16, 1999 |

Hector Mine |

7.4 |

7.1 |

60 |

|

Jan. 13, 2001 |

El Salvador |

7.8 |

7.7 |

460 |

|

Jan. 26, 2001 |

Bhuj, India |

8.0 |

7.6 |

340 |

|

NOTE: All events are shallow except Bolivia, which had a focal depth of 657 km. Moment magnitude MW computed from seismic moment M0 via Equation 2.7. aBody-wave magnitude. SOURCES: U.S. Geological Survey and Harvard University. |

||||

Unless otherwise noted, all magnitudes given throughout the remainder of this report are moment magnitudes.

Beginning in the 1950s, arrays of temporary seismic stations were deployed to study the aftershocks of large earthquakes. Aftershocks are caused by subsidiary faulting from stress concentrations produced by the main shock, owing to inhomogeneities in fault slippage and heterogeneities in the properties of the nearby rocks. Omori’s work on the 1891 Nobi earthquake had demonstrated that the frequency of aftershocks decayed inversely with the time following the main shock (97). In its modern form, “Omori’s law” states that the aftershock frequency obeys a power law of the form

$$n(t) = A\,(t + c)^{-p} \qquad (2.8)$$

where t is the time following the main shock and A, c, and p are parameters of the aftershock sequence (here A is a productivity constant, not the rupture area of Equation 2.6). Aftershock surveys confirmed that p is near unity (usually slightly greater) for most sequences. They also showed that the aftershock zone approximated the area of faulting inferred from geologic and geodetic measurements (98).
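Integrating Equation 2.8 gives the expected number of aftershocks in a time window, which is how the law is typically used in practice. A minimal sketch with hypothetical parameter values (A = 100 events per day, c = 0.05 day, p = 1.1), chosen only for illustration:

```python
import math

def omori_rate(t, amplitude, c, p):
    """Aftershock rate n(t) = A (t + c)^(-p) from Equation 2.8."""
    return amplitude * (t + c) ** (-p)

def omori_count(t1, t2, amplitude, c, p):
    """Expected number of aftershocks between t1 and t2 (integral of n(t) dt)."""
    if abs(p - 1.0) < 1e-12:
        return amplitude * math.log((t2 + c) / (t1 + c))
    return amplitude * ((t2 + c) ** (1.0 - p) - (t1 + c) ** (1.0 - p)) / (1.0 - p)

A, c, p = 100.0, 0.05, 1.1   # hypothetical sequence parameters (t in days)
for t1, t2 in [(0, 1), (1, 10), (10, 100)]:
    print(f"days {t1:>3}-{t2:<3}: {omori_count(t1, t2, A, c, p):6.1f} aftershocks")
```

With p near 1, each successive decade of elapsed time contributes a comparable number of events, which is why aftershock sequences can remain detectable for years.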

With independent estimates of the rupture area A from aftershock, geologic, or geodetic data, Equation 2.6 can be solved for the average fault displacement u. Aki obtained a value of about 4 meters for the 1964 Niigata earthquake by this method, consistent with echo-sounding surveys of the submarine fault scarp. A second method derived fault dimensions from the "corner frequency" of the seismic radiation spectrum, an observable value inversely proportional to the rupture duration (99). Corner frequencies were easily measurable from regional and teleseismic data and could be converted to fault lengths by assuming an average rupture velocity (100). Using this procedure, seismologists estimated the source dimensions for a much larger set of events, paving the way for global studies of the stress changes during earthquakes.
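Solving Equation 2.6 for the average slip takes one division once the shear modulus is known; since the text notes that G can be estimated from the shear-wave velocity, the sketch also uses the standard relation G = ρβ². The density, shear-wave velocity, moment, and 50 km × 20 km rupture below are hypothetical values, not Aki's Niigata data.

```python
def shear_modulus_from_vs(density_kg_m3, vs_m_s):
    """Shear modulus G = rho * beta^2 (Pa) from density and shear-wave velocity."""
    return density_kg_m3 * vs_m_s ** 2

def average_slip(m0_nm, area_m2, shear_modulus_pa):
    """Invert Equation 2.6 for the average slip u = M0 / (G * A), in meters."""
    return m0_nm / (shear_modulus_pa * area_m2)

G = shear_modulus_from_vs(2700.0, 3500.0)   # hypothetical crustal rock
area = 50e3 * 20e3                          # hypothetical 50 km x 20 km rupture
u = average_slip(1.2e20, area, G)
print(f"G ~ {G:.2e} Pa, average slip ~ {u:.1f} m")
```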

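For an equidimensional rupture surface, the ratio M0/r³ of the seismic moment to the cube of the source radius r is proportional to the static stress drop Δσs, so events with the same stress drop fall along lines of slope 3 on a log-log plot of moment against source dimension (Figure 2.11).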

Seismic Source Studies

Seismic moment measures the static difference between initial and final states of a fault, not what happens during the rupture. To investigate the dynamics of rupture process, seismologists had to tackle the difficult problem of determining the space-time distribution of faulting during an earthquake from its radiated seismic energy. In the 1960s, a simple kinematic dislocation model with uniform slip and rupture speed was devel-

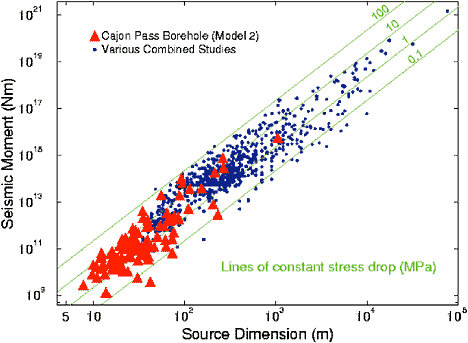

FIGURE 2.11 Seismic moment as a function of source dimension. When plotted on a log-log scale, the diagonal lines (slope = 3) indicate M0 ~ r3 and denote constant stress drop. Note that measurements of the static stress drop vary as the cube of the corner frequency, a sensitivity that contributes to substantial scatter in ?s for individual events. The value cited is based on a numerical study of rupture dynamics (R. Madariaga, Dynamics of an expanding circular fault, Bull. Seis. Soc. Am., 66, 639-666, 1976) and applies to shear waves radiated by a circular, cohesionless fault that stops suddenly around a circular periphery. Using a particular estimate for the source radius in terms of the corner frequency fc yields ?s ˜ 47M0(fc/ß)3. SOURCE: R.E. Abercrombie, Earthquake source scaling relationships from –1 to 5 ML using seismograms recorded at 2.5-km depth, J. Geophys. Res.,100, 24,015-24,036, 1995. Copyright 1995 American Geophysical Union. Reproduced by permission of American Geophysical Union.

oped by N. Haskell to understand the energy radiation from an earthquake and the spectral structure of a seismic source (104). Haskell’s model predicted that the frequency spectrum of an earthquake source is flat at low frequency and falls off as ?–2 at high frequency, where ? is the angular frequency. This simple model (generally called the omega-squared model) was extended to accommodate the much more complex kinematics of real seismic faulting, described stochastically (105), and it was found

to approximate the spectral observations rather well, especially for small earthquakes.
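The omega-squared spectral shape and the corner-frequency estimate of stress drop quoted with Figure 2.11 can be sketched numerically. This assumes the common parameterization Ω(f) = Ω0 / [1 + (f/fc)²], one of several forms with a flat low-frequency level and an f⁻² falloff, and takes the coefficient 47 directly from the figure caption; the event values are hypothetical.

```python
import math

def omega_squared_spectrum(f, omega0, fc):
    """Source displacement spectrum: flat below fc, falling as f^-2 above it."""
    return omega0 / (1.0 + (f / fc) ** 2)

def static_stress_drop(m0_nm, fc_hz, beta_m_s):
    """Stress drop (Pa) from the Figure 2.11 caption: 47 * M0 * (fc / beta)^3."""
    return 47.0 * m0_nm * (fc_hz / beta_m_s) ** 3

# Hypothetical small event: M0 = 1e13 N-m, fc = 10 Hz, beta = 3.5 km/s
sd = static_stress_drop(1.0e13, 10.0, 3500.0)
print(f"stress drop ~ {sd / 1e6:.1f} MPa")

# High-frequency slope of the spectrum on a log-log plot (should approach -2)
fc = 10.0
slope = (math.log10(omega_squared_spectrum(1000 * fc, 1.0, fc))
         - math.log10(omega_squared_spectrum(100 * fc, 1.0, fc)))
print(f"log-log slope above fc ~ {slope:.3f}")
```

Because the estimate scales as fc³, a factor-of-2 error in the measured corner frequency changes the inferred stress drop by a factor of 8, the sensitivity the caption cites as a source of scatter.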

The orientation of an elementary dislocation depends on two directions, the normal to the fault plane and the slip direction within this plane, so the double couple for a dislocation source is described by a three-dimensional, second-order moment tensor M proportional to M0 (106). By 1970, it was recognized that the seismic moment tensor can be generalized to include an ideal (spherically symmetrical) explosion and another type of seismic source called a compensated linear vector dipole (CLVD). A CLVD mechanism was invoked as a plausible model for seismic sources with cylindrical symmetry, such as magma-injection events, ring-dike faulting, and some intermediate- and deep-focus events (Figure 2.8) (107).
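The double-couple tensor can be built directly from the two directions. This sketch uses the textbook form Mij = M0(ni sj + nj si) for a unit fault normal n and a unit slip vector s perpendicular to it (sign and coordinate conventions vary between references), with a hypothetical fault geometry. It checks the properties that distinguish a pure double couple: zero trace (no volume change, unlike the explosion term) and eigenvalues +M0, −M0, and 0 along the tension, pressure, and null axes.

```python
def double_couple(m0, normal, slip):
    """Moment tensor M_ij = M0 (n_i s_j + n_j s_i); n and s are perpendicular unit vectors."""
    return [[m0 * (normal[i] * slip[j] + normal[j] * slip[i]) for j in range(3)]
            for i in range(3)]

def mat_vec(tensor, vec):
    """3x3 matrix-vector product using nested lists."""
    return [sum(tensor[i][j] * vec[j] for j in range(3)) for i in range(3)]

m0 = 1.0
n = (0.0, 0.0, 1.0)   # hypothetical fault normal
s = (1.0, 0.0, 0.0)   # hypothetical slip direction in the fault plane
M = double_couple(m0, n, s)

trace = sum(M[i][i] for i in range(3))             # isotropic part: zero
t_axis = [n[i] + s[i] for i in range(3)]           # tension axis, eigenvalue +M0
p_axis = [n[i] - s[i] for i in range(3)]           # pressure axis, eigenvalue -M0
b_axis = [n[1] * s[2] - n[2] * s[1],               # null axis n x s, eigenvalue 0
          n[2] * s[0] - n[0] * s[2],
          n[0] * s[1] - n[1] * s[0]]
print(trace, mat_vec(M, t_axis), mat_vec(M, p_axis), mat_vec(M, b_axis))
```

A general moment tensor adds a nonzero trace (the explosive part) and a CLVD part whose eigenvalues are not of the form (+M0, −M0, 0).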

The Stress Paradox

Plate tectonics accounted for the orientation of the stress field on simple plate boundaries, which could be classified according to Anderson’s three principal types of faulting: divergent boundaries (normal faults), transform boundaries (strike-slip faults), and convergent boundaries (reverse faults). The stress orientations mapped on plate interiors using a variety of indicators—wellbore breakouts, volcanic alignments, and earthquake focal mechanisms—were generally found to be coherent over distances of 400 to 4000 kilometers and to match the predictions of intraplate stress from dynamic models of plate motions (108). This behavior implies that the spatial localization of intraplate seismicity primarily reflects the concentration of strain in zones of crustal weakness (109). Explaining the orientation of crustal stresses was a major success for the new field of geodynamics.

About 1970, a major debate erupted over the magnitude of the stress responsible for crustal earthquakes. Byerlee's law implies that the shear stress required to initiate frictional slip should be at least 100 megapascals, an order of magnitude greater than most seismic stress drops (110). The stresses measured during deep drilling generally agree with these predictions. If the average stresses were this large, however, the heat generated by earthquakes along major plate boundaries would greatly exceed the radiated seismic energy, and the heat flowing out of the crust along active fault zones should be very high. Attempts to measure a heat flow anomaly on the San Andreas fault found no evidence of such a peak (111). The puzzle of fault stress levels was further complicated as data became available in the middle to late 1980s on principal stress orientations in the crust near the San Andreas (112); the maximum stress direction was found to be steeply inclined to the fault trace and to resolve more stress onto faults at angles to the trace of the San Andreas fault than onto the San Andreas fault itself. These results, as well as data on subduction interfaces and oceanic transform faults, suggest that most plate-bounding faults operate at low overall driving stress, on the order of 20 megapascals or less. Various explanations have been put forward (113), including intrinsically weak materials in the fault zones, high fluid pore pressures, and dynamical processes that lower frictional resistance, such as wave-generated decreases in normal stress during rupture, but the stress paradox remains a major unsolved problem.
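The two sides of the paradox can be put in numbers. The sketch uses Byerlee's law in its usual two-segment form (τ = 0.85σn below 200 megapascals, τ = 50 MPa + 0.6σn above) and the fact that frictional heat generated per unit fault area equals shear stress times slip rate. The rock and water densities, the 10-kilometer depth, hydrostatic pore pressure, the 35 mm/yr slip rate, and the simplification that normal stress equals the overburden are all hypothetical choices for illustration.

```python
SECONDS_PER_YEAR = 3.15576e7

def byerlee_shear_strength(sigma_n_eff_mpa):
    """Byerlee's law: frictional shear strength (MPa) vs. effective normal stress (MPa)."""
    if sigma_n_eff_mpa < 200.0:
        return 0.85 * sigma_n_eff_mpa
    return 50.0 + 0.6 * sigma_n_eff_mpa

def effective_normal_stress(depth_m, rho_rock=2700.0, rho_water=1000.0, g=9.81):
    """Overburden minus hydrostatic pore pressure (MPa); assumes sigma_n ~ overburden."""
    return (rho_rock - rho_water) * g * depth_m / 1.0e6

def frictional_heat_flux(tau_mpa, slip_rate_m_per_yr):
    """Heat generated per unit fault area (W/m^2) = shear stress x long-term slip rate."""
    return tau_mpa * 1.0e6 * slip_rate_m_per_yr / SECONDS_PER_YEAR

sigma = effective_normal_stress(10_000.0)
tau = byerlee_shear_strength(sigma)
q = frictional_heat_flux(tau, 0.035)
print(f"sigma_n_eff ~ {sigma:.0f} MPa, Byerlee tau ~ {tau:.0f} MPa")
print(f"frictional heat generated ~ {q * 1e3:.0f} mW/m^2 of fault")
print(f"same slip rate at 20 MPa ~ {frictional_heat_flux(20.0, 0.035) * 1e3:.0f} mW/m^2")
```

Under these assumptions a Byerlee-strength fault would generate several times more heat than one slipping at the 20-megapascal level suggested by the stress-orientation data; how much of that heat would reach the surface depends on thermal models not sketched here.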

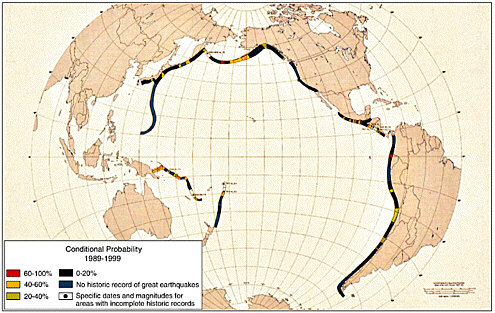

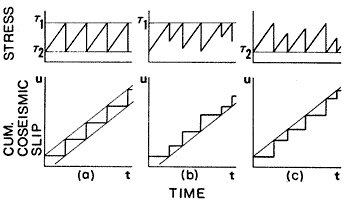

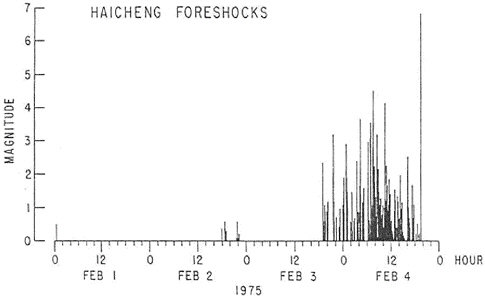

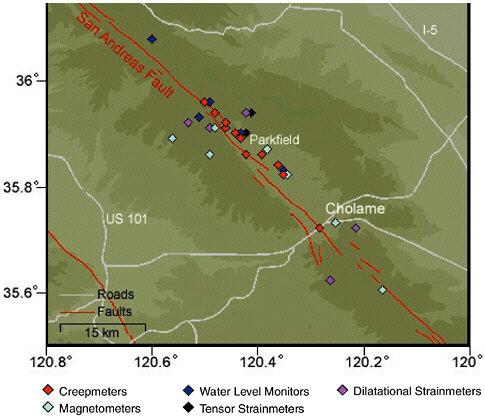

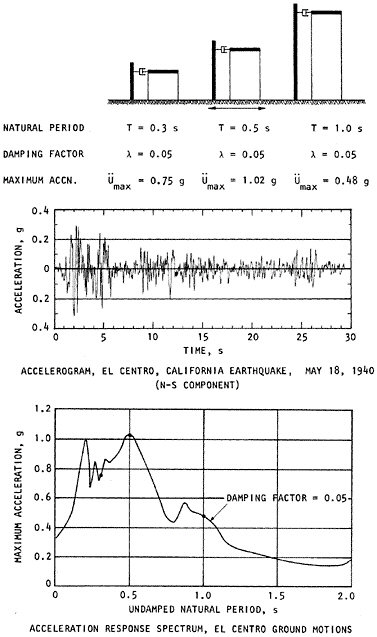

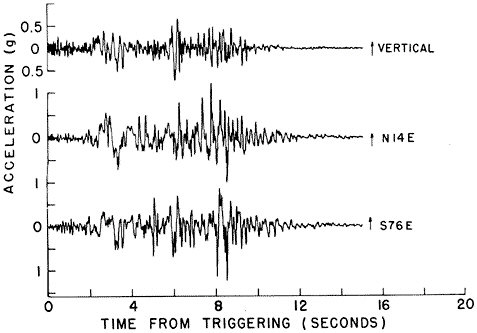

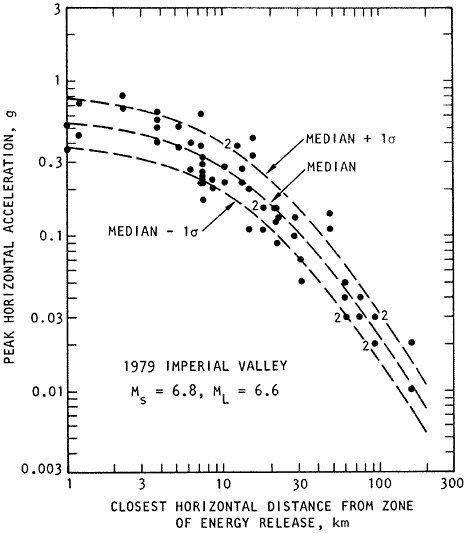

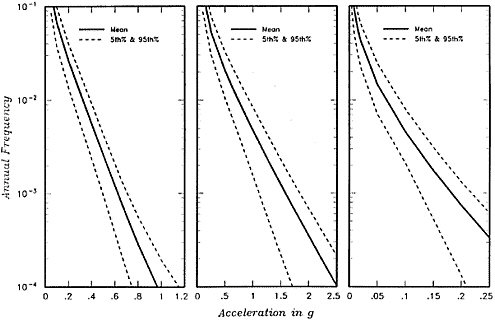

2.6 EARTHQUAKE PREDICTION