PANEL MEMBERS

Tony Scott, General Motors Corporation, Chair

Albert M. Erisman, Institute for Business, Technology, and Ethics, Vice Chair

Michael Angelo, Compaq Computer Corporation

Bishnu S. Atal, AT&T Laboratories-Research

Matt Bishop, University of California, Davis

Linda Branagan, Secondlook Consulting

Jack Brassil, Hewlett-Packard Laboratories

Aninda DasGupta, Philips Consumer Electronics

Susan T. Dumais, Microsoft Research

John R. Gilbert, Xerox Palo Alto Research Center

Roscoe C. Giles, Boston University

Sallie Keller-McNulty, Los Alamos National Laboratory

Stephen T. Kent, BBN Technologies

Jon R. Kettenring, Telcordia Technologies

Lawrence O’Gorman, Avaya Labs

David R. Oran, Cisco Systems

Craig Partridge, BBN Technologies

Debra J. Richardson, University of California, Irvine

William Smith, Sun Microsystems

Don X. Sun, Bell Laboratories/Lucent Technologies

Daniel A. Updegrove, University of Texas, Austin

Stephen A. Vavasis, Cornell University

Paul H. von Autenried, Bristol-Myers Squibb

Mary Ellen Zurko, IBM Software Group

Submitted for the panel by its Chair, Tony Scott, and its Vice Chair, Albert M. Erisman, this assessment of the fiscal year 2002 activities of the Information Technology Laboratory is based on a site visit by the panel on February 26-27, 2002, in Gaithersburg, Md., and documents provided by the laboratory.1

LABORATORY-LEVEL REVIEW

Technical Merit

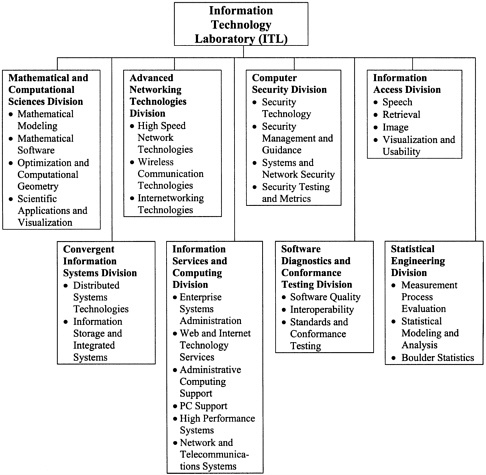

The mission of the Information Technology Laboratory (ITL) is to strengthen the U.S. economy and improve the quality of life by working with industry to develop and apply technology, measurements, and standards. This mission is very broad, and the programs not only encompass technical and standards-related activities but also provide internal consulting services in mathematical and statistical techniques and computing support throughout NIST.2 To carry out this mission, the laboratory is organized in eight divisions (see Figure 8.1): Mathematical and Computational Sciences, Advanced Networking Technologies, Computer Security, Information Access, Convergent Information Systems, Information Services and Computing, Software Diagnostics and Conformance Testing, and Statistical Engineering. The activities of these units are commented on at length in the divisional reviews in this chapter. Below, some highlights and overarching issues are discussed.

The technical merit of the work in ITL remains strong. As part of its on-site reviews, the panel had the opportunity to visit each of the divisions for a variety of presentations and reviews related to the projects currently under way. While it is not possible to review every project in the greatest detail, the panel has been consistently impressed with the technical quality of the work undertaken. The panel also particularly applauds ITL staff’s willingness to take on difficult technical challenges while demonstrating an appropriate awareness of the context in which NIST results will be used and the importance of providing data and products that are not just correct and useful but also timely. Many examples of programs with especially strong technical merit are highlighted in the divisional reviews.

The panel is very pleased to see the progress that has occurred in strategic planning in ITL. A significant development over the past year has been the emergence and acceptance of a framework under which the laboratory activities operate. The framework includes the ITL Research Blueprint and the ITL Program/Project Selection Process and Criteria. The panel observed that these descriptions and tools appear to be well institutionalized within each of the divisions and seem to be having a positive initial impact on improving the direction and efficacy of laboratory projects and programs. These frameworks were widely used in the presentations made to the panel, and the panel noted the emergence of a common “vocabulary” with respect to planning and strategy. Increased collaborations between divisions were also observed. The panel also continues to see progress in the divisions on rational, well-justified decisions about what projects to start and conclude and when to do so.

Program Relevance and Effectiveness

ITL has a very broad range of customers, from industry and government and from within NIST, and the panel found that the laboratory serves all of these groups with distinction. In addition to the panel’s expert opinion, many quantitative measures confirm the relevance and effectiveness of ITL’s programs. One is the level of interaction between laboratory staff and their customers, which continues to rise. Attendance is up at ITL-led and -sponsored seminars, workshops, and meetings; staff participation in standards organizations and consortia is strong; and laboratory staff have robust relationships with researchers and users from companies, governmental agencies, and universities.

FIGURE 8.1 Organizational structure of the Information Technology Laboratory. Listed under each division are the division’s groups.

Another visible measure of the quality and relevance of ITL’s work is the number of awards that laboratory staff receive from NIST, the Department of Commerce, and external sources. Examples include a Department of Commerce Gold Medal and an RSA Public Policy Award for the work on the Advanced Encryption Standard, an R&D 100 Award for the development of the Braille Reader, a series of awards from the National Committee for Information Technology Standards for leadership in standards-related activities such as the work on standards for geographic information systems, and the election of a staff member as a fellow of the American Society for Quality because of his work on applying statistics to measurement sciences. These honors, spread across the various divisions, recognize outstanding technical and program achievement at numerous levels.

ITL’s interactions with and impact on industrial customers continue to improve each year, and the panel applauds the laboratory’s ability to produce and disseminate results of value to a broad audience. ITL primarily serves two kinds of industrial customers: computer companies (i.e., makers of hardware and software) and the users of their products (which include companies from all sectors, government, and, to some extent, the public). The divisional reviews later in this chapter contain many examples of how ITL makes a difference. Notable cases include the Advanced Networking Technologies Division’s success at raising the visibility of co-interference problems between IEEE 802.11 and Bluetooth wireless networks and NIST’s technical contributions to evaluating possible solutions; the Convergent Information Systems Division’s development of an application that can preview how compressed video appears on different displays, thus allowing producers to make decisions about the amount of compression in light of the equipment likely to be used by the target audience; and the Software Diagnostics and Conformance Testing Division’s facilitation of the development of an open standard and needed conformance tests for extensible markup language (XML). In addition to serving all of these customers, ITL projects also have been known to have an impact worldwide. For example, standards developed with NIST’s help and leadership in the security, multimedia, and biometrics areas are all used throughout the relevant international technical communities.

In last year’s assessment report,3 the panel expressed concerns about industry trends in standards development that would affect ITL’s ability to effectively and openly help industry adopt the most appropriate standards for emerging technologies. The growing use of consortia and other private groups in standards development processes places a burden on ITL, which has to strike a balance between its obligation to support and encourage open processes and its need to be involved as early as possible in standards-setting activities so as to maximize the impact of ITL’s experience and tools. In some cases, a delicate trade-off must be made between participating in a timely way in organizations that will set standards for the industry and avoiding endorsement of standards set by exclusive groups. ITL’s role as a neutral third party and its reputation as an unbiased provider of technical data and tools have produced significant impact in many areas and should not be squandered by association with organizations that unreasonably restrict membership. The panel continues to urge ITL to establish a policy to help divisions decide when participation in closed consortia is appropriate and to consider how NIST can encourage industry to utilize open, or at least inclusionary, approaches to standards development.

Given that consortia, in some form or another, are here to stay and that in some cases it will be vital for NIST to participate in these consortia, the panel supports the efforts recently made by ITL and NIST to work on the internal legal roadblocks to participation, but it suggests that this work could be supplemented by efforts to educate external groups, such as consortia members and lawyers, on ways to facilitate NIST’s timely participation and technical input. This is a customer outreach effort as well as a legal issue.

One customer that relies significantly on ITL’s products and expertise is the federal government, which often uses NIST standards and evaluation tools to guide its purchase and use of information technology (IT) products, particularly in the computer security area. An example is the Computer Security Division’s Cryptographic Module Validation Program (CMVP), which has enabled purchasers, including the U.S. government, to be sure that the security attributes of the products that they buy are as advertised and appropriate. In the Information Access Division, the new Common Industry Format (CIF) standard provides a foundation for exchanging information on the relative usability of products and is already being used for procurement decisions by several large enterprises. Another key ITL activity relevant to the federal government is the work on fingerprint and face recognition. NIST standards and data have played a key role in the development of automated fingerprint identification systems. Also, since the attacks of September 11, 2001, there has been significant pressure to increase the reliability of biometric recognition technologies, especially face recognition. ITL’s existing, long-term programs and expertise in face, fingerprint, and gait biometrics will provide test data that will help drive system development and help government evaluation of systems capabilities.

Programs such as the work on biometrics, especially face recognition, highlight a question relevant to many information technology activities: that is, in what context will technological advances be used? Information technology is often an enabling technology that will produce new capabilities with expected and unexpected benefits and costs.4 The panel acknowledges that ITL’s primary focus is on technical questions and technical quality, but it emphasizes that for the laboratory’s work to be responsible and for the results to be taken seriously in the relevant communities, recognition of the context in which new technologies will be applied is very important. This context has two elements: the deployment of the technology and the social implications of the technology. In the first area, the deployment questions relate to the functionality of the systems in which new technical capabilities will be used. A testbed is not necessarily meant to determine the “best” technology but rather the one that works well enough to meet the needs for which it is being developed. Often, the process of considering the possible applications of a technology results in a broader appreciation of the potential benefits. For example, appropriate security is actually an enabler that allows e-business, the globalization of work, collaboration across geography, and so on.

Understanding the ultimate goals for new technologies relates to the social implications questions. For example, security has serious implications for privacy. The panel emphasizes that in many of the ongoing programs—such as the work on the potential use of face recognition technologies as security systems in public places—ITL staff made long and arduous efforts to comply with existing privacy legislation. However, when describing the NIST results to public groups (such as the panel), staff should also be sure to take the time to acknowledge the privacy questions and describe potential future issues, as well as discussing the capabilities and benefits of the technological advancements.

Following are two examples of areas in which the panel believes that the potential societal issues or the actual context in which technologies would be used were not being fully considered. The first example is the suggestion that a commercial application for face recognition could be that of having an automated teller machine (ATM) recognize a user with Hispanic features and automatically switch to using Spanish. As many people of Hispanic (or Swedish or Asian) appearance are not in fact speakers of the “native” language implied by their looks, this is a naïve (and perhaps inappropriate) example of the technology’s potential. The second example is in the area of pervasive computing, where NIST’s work on “smart” meeting facilities was demonstrated for the panel. Recording meetings for search and archiving can offer significant benefits in some contexts, but it can also inhibit certain types of discussions. For example, the effectiveness of brainstorming sessions or examinations of “what if” scenarios might be significantly limited if the participants thought the discussion might later be taken out of context and broadcast.

In addition to strong relationships with customers in industry and in the federal government, ITL places significant emphasis on effectively serving its customers within NIST. The panel commends the focus in both the Mathematical and Computational Sciences Division and the Statistical Engineering Division on building robust collaborations with scientists and engineers throughout ITL and the other NIST laboratories. One example is the work of the Mathematical and Computational Sciences Division on the mathematical modeling of solidification with staff from the Materials Science and Engineering Laboratory; another is the Statistical Engineering Division’s development of a method to combine data from diverse building materials studies for the Building and Fire Research Laboratory.

A primary current responsibility of ITL is that of IT support for all of NIST. The relevant activities—which include the support and maintenance of campus networking, personal computers (PCs), administrative applications (such as accounting software), and telephones—are performed by the Information Services and Computing Division. These service programs were unified in this division in December 2000, and the panel is very pleased at the significant progress observed in the past 2 years. The quality and effectiveness of the support functions have improved and so has the overall planning and strategic approach to providing the relevant services. A “NIST IT Architecture” has been developed, and it should help provide context and scope for each of the subarchitectures and various support functions at NIST. Other recent accomplishments include the formation and centralization of a NIST-wide help desk and increased standardization around core processes such as PC procurement. Issues do still exist, however, including a lack of ability for this division’s staff to enforce or even check compliance with centralized IT standards or policies. For example, many units at NIST do their own systems administration, which could result in uneven implementation of appropriate security applications.

The key issue for IT services at NIST in the next year will be an organizational transition. In February 2002, NIST management announced that the support functions currently housed in ITL will be moved out of the laboratory into a separate unit, headed by a chief information officer (CIO) who will report directly to the NIST director. Since a significant problem for the current unit is the difficulty in getting the NIST laboratories to embrace consistent, institutionwide standards for IT systems, raising the services unit to a level equivalent with the laboratories may provide needed visibility for the issue. Another factor that may help is the emphasis by the current director of this new unit (the acting CIO) on demonstrating to the other NIST laboratories how IT services can facilitate their research and how standardizing basic applications can save time and money. Achieving acceptance of this new unit and centralized IT support across NIST will be a serious leadership challenge, as this approach will be a cultural shift for NIST. The panel encourages benchmarking with organizations such as Agilent Technologies that have successfully made such a transition.

Making the IT services component of NIST a separate unit rather than a division of ITL may bring it closer to other laboratories; however, it is important that this unit maintain close ties with ITL programs. For example, some of the work being done in the Computer Security Division can and should be applied to the security of the NIST system. Work on technologies for meetings can be tested and effectively used throughout NIST. Applying the development work of ITL’s research divisions to NIST as a whole will require the continued tracking in the services unit of relevant ongoing projects and the recognition in ITL of the potential for using NIST as a whole as a testbed.

ITL has done a remarkable job of becoming more customer-oriented over the past several years. The panel applauds the laboratory’s efforts in outreach and notes that the progress reflects improvement in a whole range of areas, from gathering wider and more useful input to help with project selection to increased dissemination and planning for how customers will utilize NIST results and products. ITL has supported this increased focus on its customers by measuring outputs and outcomes that provide data on how the laboratory is doing in this area. (One example is that of tracking the number of times ITL-developed standards and technology are adopted by government and industry.)

Now that ITL is serving its customers so well, the panel wants to suggest that some attention could also be paid to strengthening the laboratory’s reputation and stature with its colleagues in relevant research communities. Customers are uniquely positioned to assess the timeliness of and need for ITL results, but ITL’s peers can and should assess the technical excellence of the laboratory’s work. A variety of reasons support having input from both groups, that is, having a balanced scorecard for the laboratory’s portfolio. One reason is that sometimes customer satisfaction is not the right metric, since NIST can, and in some cases should, hold companies to higher standards than the companies might wish. Another reason is that elevating the stature of ITL researchers in their peer communities can raise NIST’s credibility with its customers. Therefore, in the future the panel hopes to see increased emphasis on ITL’s visibility within relevant research communities.

Like ITL’s successful efforts to improve customer relationships, increased visibility can be driven by the use of appropriate metrics. It is not entirely clear what outputs or events will effectively measure ITL’s work in this area. Possibilities include but are not limited to the number of times that staff are named as nationally recognized fellows of professional organizations (such as IEEE, the Association for Computing Machinery [ACM], the American Physical Society, and the American Society for Quality), the number of times ITL staff are featured speakers at high-profile conferences, and the number of staff publications in top-tier peer-reviewed IT journals. The metrics will obviously depend on the field in which ITL’s research is occurring. The panel acknowledges that it is often inappropriate to compare NIST researchers directly with people working in industry research units or at universities, because ITL’s role of producing test methods, test data, standards, and so on is different from industrial or academic activities and is often unique. However, ITL’s peers at these other institutions are still in a position to recognize and evaluate the technical merit and quality of the NIST programs. The panel is not suggesting that recognition by external peer communities should replace responsiveness to customer needs as a primary focus, but it is instead suggesting that ITL perform the difficult balancing act of putting more emphasis on publication and interaction in the relevant research community without losing its focus on its customers.

Laboratory Resources

Funding sources for the Information Technology Laboratory are shown in Table 8.1. As of January 2002, staffing for the laboratory included 389 full-time permanent positions, of which 319 were for technical professionals. There were also 105 nonpermanent or supplemental personnel, such as postdoctoral research associates and temporary or part-time workers.

The panel’s primary concern in the area of human resources is the April 2002 retirement of the current director of ITL. The panel has observed and laboratory staff have explicitly stated that morale is at an all-time high in ITL, due in large part to the director’s leadership style and direction. A great deal of concern has surfaced among the staff over the process for filling the director’s slot, how long it will take, and what the caliber and style of the next director will be. The panel recommends that NIST leadership focus on providing clear communication to staff about the selection criteria and frequent updates as to the progress of the search and hiring process. Sharing relevant information will certainly help the transition proceed more smoothly.

TABLE 8.1 Sources of Funding for the Information Technology Laboratory (in millions of dollars), FY 1999 to FY 2002

| Source of Funding | Fiscal Year 1999 (actual) | Fiscal Year 2000 (actual) | Fiscal Year 2001 (actual) | Fiscal Year 2002 (estimated) |
| --- | --- | --- | --- | --- |
| NIST-STRS, excluding Competence | 31.6 | 31.9 | 44.4 | 38.8 |
| Competence | 1.5 | 1.6 | 1.1 | 1.3 |
| STRS-Supercomputing | 12.1 | 12.0 | 11.9 | 10.0 |
| ATP | 1.8 | 2.4 | 2.3 | 2.0 |
| Measurement Services (SRM production) | 0.0 | 0.0 | 0.1 | 0.5 |
| OA/NFG/CRADA | 8.4 | 9.9 | 12.2 | 14.6 |
| Other Reimbursable | 0.5 | 1.6 | 1.0 | 0.3 |
| Agency Overhead | 14.4 | 16.4 | 18.4 | 28.2 |
| Total | 70.3 | 75.8 | 91.4 | 95.7 |
| Full-time permanent staff (total)ᵃ | 381 | 381 | 368ᵃ | 389 |

NOTE: Funding for the NIST Measurement and Standards Laboratories comes from a variety of sources. The laboratories receive appropriations from Congress, known as Scientific and Technical Research and Services (STRS) funding. Competence funding also comes from NIST’s congressional appropriations but is allocated by the NIST director’s office in multiyear grants for projects that advance NIST’s capabilities in new and emerging areas of measurement science. Advanced Technology Program (ATP) funding reflects support from NIST’s ATP for work done at the NIST laboratories in collaboration with or in support of ATP projects. Funding to support production of Standard Reference Materials (SRMs) is tied to the use of such products and is classified as “Measurement Services.” NIST laboratories also receive funding through grants or contracts from other [government] agencies (OA), from nonfederal government (NFG) agencies, and from industry in the form of cooperative research and development agreements (CRADAs). All other laboratory funding, including that for Calibration Services, is grouped under “Other Reimbursable.”

ᵃ The number of full-time permanent staff is as of January of that fiscal year, except in FY 2001, when it is as of March (due to a reorganization of ITL that year).

One facilities issue highlighted in last year’s assessment report was the location of five divisions in NIST North. The existence and use of NIST North is a perennial issue. The panel recognizes that the quality of the space in NIST North is significantly better than what would be available on campus; however, access to these improved facilities does not compensate for the distance from the rest of the campus for two of the ITL divisions: the Mathematical and Computational Sciences and the Statistical Engineering Divisions. The distance inhibits informal interactions of the staff of these two divisions with their collaborators in the other laboratories on the main campus. Thus, ITL management has submitted the space requirements of these divisions to NIST management, which will be making revised space allocation decisions related to the new Advanced Measurement Laboratory (AML), due to be completed in 2004. The panel encourages NIST management to make a serious effort to move these two divisions back to the main campus.5 However, 2004 is still several years away. In the meantime, the panel continues to note that a mix of systems taking into account technological and social factors could help compensate for the distance. Tools such as videoconferencing, Web collaboration packages, and Web broadcasting can support nonphysical interactions, but regular, scheduled (and subsidized) opportunities for face-to-face meetings are necessary to make these technical solutions most effective. These approaches are applicable to the NIST North/main campus gap, as well as to the Gaithersburg/Boulder divide.

A second facilities issue raised in the 2001 assessment report was the poor network connectivity of NIST to the outside world. The panel was very pleased to learn that since the last review, NIST has joined the Internet 2 project, thus dramatically improving the connectivity and placing NIST on a par with the major universities and industrial research organizations that participate in this project. The next step will be educating researchers in the other laboratories at NIST about how to take full advantage of this new capability.

The panel met with staff in “skip-level” meetings (sessions in which management personnel were not present). The key message from these meetings was that in the past few years, under the current management of the laboratory, ITL has become an especially enjoyable place to work, noted for such attributes as respect for the individual, stability, an appropriate level of flexibility, and focus on visible results. The panel also observed this high level of morale in visits to individual divisions. Turnover in ITL was approximately 9 percent this year, down slightly from last year. Although turnover has decreased in industry in the past year and is now about comparable to that in ITL, over the last several years ITL has had a remarkably low comparative turnover rate for an IT organization. The panel applauds laboratory and division management for creating such a positive work environment.

Some issues were brought up in the skip-level meetings. The panel cannot judge if these concerns are broad-based or isolated but does note that perhaps laboratory management should be aware of them. For example, ITL staff said that while relationships with the other NIST laboratories had improved, they still felt that ITL did not have the same status or prestige that other laboratories enjoy. The panel notes that continued interaction with staff in other laboratories, internal and external recognition of staff, and cross-laboratory projects will help ameliorate imbalances or perceptions of “second-class” status. The shift of IT support services to a separate unit also might help emphasize to the rest of NIST that the core mission of ITL is the same as that of the rest of the laboratories. Other concerns expressed by staff included perceived inconsistencies in performance measurement and some related frustrations about apparently unequal burdens of work owing to the difficult process for firing poor performers in the federal system. Such perceptions, if they indeed exist on a broader scale in ITL, would not be unique to ITL, NIST, government agencies, or even businesses in general.

Laboratory Responsiveness

The panel found that, in general, ITL has been very responsive to its prior recommendations and observations. The panel’s comments appear to be taken very seriously, and the suggestions made in the assessment reports are often acted on, especially as related to the redirection and conclusion of projects. When advice is not taken, ITL usually provides a good rationale for why a given action has not occurred. Examples of positive responses to suggestions made in last year’s report include the improved strategic planning observed in the Mathematical and Computational Sciences Division, the redirection of the work on distributed detection in sensor networks in the Advanced Networking Technologies Division, the transfer of the latent fingerprint workstation to a Federal Bureau of Investigation (FBI) contractor in the Information Access Division, and the work on connecting NIST to Internet 2 in the Information Services and Computing Division. More discussion of responsiveness and of areas needing continued attention is presented in the divisional reviews below.

In some areas, the issues raised by the panel are long-term questions or areas in which changes are not entirely within ITL’s power. In these cases, the panel looks to see if serious effort has been made. Usually the panel observes some progress and plans to follow up on the issues in future assessments. The location of the Mathematical and Computational Sciences Division and the Statistical Engineering Division in NIST North is one such issue, and while the panel is glad to learn that their relocation in conjunction with the occupation of the Advanced Measurement Laboratory (AML) is being considered, the panel will be watching to see whether this occurs and how ITL handles the time prior to the AML’s completion. Another such issue is the growing use by industry of consortia and other private groups to set industry standards. The panel recognizes that this trend cannot be controlled by ITL, but it would like to see further consideration of internal policies on use of closed consortia and of ways to encourage open standards development.

MAJOR OBSERVATIONS

The panel presents the following major observations:

- The panel is impressed with the progress that has occurred in strategic planning in the Information Technology Laboratory (ITL), particularly in the emergence and acceptance of a framework under which laboratory activities operate. The framework includes an ITL Research Blueprint and ITL Program/Project Selection Process and Criteria.

- ITL has done a remarkable job of becoming more customer-oriented over the past several years. The panel applauds the laboratory’s efforts in outreach and notes that the progress reflects improvement in a whole range of areas, from gathering wider and more useful input to help with project selection to increased dissemination and planning for how customers will utilize NIST results and products.

- The strong customer relationships now need to be balanced by robust visibility and recognition in ITL’s external peer communities. Publications in top-tier journals, presentations at high-profile conferences, and awards from ITL’s peers will help confirm the technical merit of the work done at NIST and will add to the laboratory’s credibility with its customers.

- Conveying awareness of the social issues related to ITL’s technical work in areas such as biometrics is an important element of the credible presentation of ITL results to diverse audiences. In certain areas, considering the technical and social context of how the work will be used may help focus the research on the most appropriate questions.

- The shift of the information technology (IT) support functions to a new unit reporting directly to the NIST director is an opportunity and a challenge for NIST leadership. If this new unit can convince the NIST laboratories to embrace consistent, institutionwide standards for IT systems, it will be an important step and a major cultural shift at NIST. Appropriate emphasis is being placed on demonstrating how IT services can facilitate research and how standardizing basic applications can save time and money.

- The retirement of the current director of ITL is clearly a source of concern within the laboratory. The panel recommends that NIST leadership focus on communicating clearly with staff about the selection criteria for the director’s replacement and that it supply staff with frequent updates on the progress of the search and hiring process. Sharing of relevant information will certainly help the transition proceed more smoothly.

DIVISIONAL REVIEWS

Mathematical and Computational Sciences Division

Technical Merit

The mission of the Mathematical and Computational Sciences Division is to provide technical leadership within NIST in modern analytical and computational methods for solving scientific problems of interest to U.S. industry. To accomplish this mission, the division seeks to ensure that sound mathematical and computational methods are applied to NIST problems, and it also seeks to improve the environment for computational science at large. Overall, the panel is very impressed with the quality of the division’s work and the strength of its collaborations with other divisions and laboratories at NIST. The division is on track in its execution of a large, ambitious project—the Digital Library of Mathematical Functions (DLMF)—and it is becoming deeply involved in a strategic NIST-wide project on quantum computing. The panel also observes that the division’s strategic planning process is strong and that it is improving.

The Mathematical and Computational Sciences Division is organized in four groups: Mathematical Modeling, Mathematical Software, Optimization and Computational Geometry, and Scientific Applications and Visualization. Projects across these groups have common themes, such as better mathematical models, better solvers, application of parallelism, and the development of reference implementations and data sets. The projects are mostly collaborative, with collaborators chosen from other NIST laboratories and from external organizations. The division’s overall portfolio is a balanced mixture of short-term and long-term projects and of projects with small and large numbers of staff. Last year’s assessment report raised some questions about the division’s project selection process and strategic planning, and this year the panel was impressed to see that significant progress had been made in this area. The division has a number of ongoing planning activities at division, laboratory, and NIST-wide levels, and it now has a good mixture of bottom-up and strategically generated projects. The triennial update of the division’s strategic plan is scheduled for later in 2002, and the panel looks forward to reviewing the revised plan in next year’s assessment.

The work on the Digital Library of Mathematical Functions is a good example of the focus on reference materials that makes the division’s products so useful to a broad array of customers. The goal of this ambitious project is to provide a Web replacement for the classic National Bureau of Standards publication Handbook of Mathematical Functions by Abramowitz and Stegun.6 The DLMF will be extremely important to scientists and engineers who need access to the latest tools and algorithms related to special mathematical functions, and this work is precisely in line with the division and NIST missions. The division has formulated a good strategy for developing the DLMF; it is using outside editors, attracting external funding (the division was awarded a competitive grant from the National Science Foundation for this project), attracting internal NIST funding, and developing a single writing style to be used in all chapters. Each chapter includes mathematical properties, methods of computation, and graphs, among other useful information. In the past year, progress on DLMF has continued at a good pace; drafts are now available for much of the work, and validation of the information is taking place. The current schedule calls for formal public release of the completed library in 2003, at which time the panel expects the DLMF Web site to become one of the most popular mathematical Web sites in the world. In the upcoming year, the critical challenges for this project are not primarily technical, but relate more to program management, as the impending deadlines require a significant amount of editorial and production work. The panel hopes that NIST will allow staffing levels to remain sufficient to ensure a high-quality final product.

An example of the division’s effective work in mathematical modeling is the work on Object Oriented Micromagnetic Framework (OOMMF). The goal of this project is to provide a platform for two- and three-dimensional modeling of magnetic phenomena associated with magnetic storage media. (The two-dimensional code is complete, but work is still under way on the three-dimensional version.) The code is written to be highly configurable; it uses Tcl/Tk to provide easy scripting capabilities and cross-platform graphical user interfaces, and the solvers are open-source C++. In 2001, 11 papers were published using results generated by OOMMF.

An impressive new project is the work on quantum information processing. This “hot” area is attracting a great deal of attention from the research community, and the panel believes that NIST is well positioned to have an impact here, owing to NIST’s Nobel Prize-winning physicists, who have expertise relevant to quantum computing, and to the strength of the Mathematical and Computational Sciences Division both in continuous modeling and simulation and in discrete algorithms.

The work on mathematical modeling of solidification is an excellent example of how the applied mathematicians in the Mathematical and Computational Sciences Division can effectively leverage their expertise to produce significant impact from their consulting and collaborative roles. In this project, one division staff member has teamed up with about six dozen people from the NIST MSEL and a large group of university colleagues to model electrodeposition in support of experiments in this area, to model interfacial instabilities during the cooperative growth of monotectic materials, and to develop models of solid-state order-disorder transitions. The staff member from the Mathematical and Computational Sciences Division was elected a fellow of the American Physical Society for his work in the area. Another example of a successful collaborative project is the work on machining process metrology, in which a researcher from this division is working with 10 people from three other NIST laboratories (MSEL, MEL, and PL).

The Scientific Applications and Visualization Group joined the Mathematical and Computational Sciences Division last year, relocating from another division within ITL. This group has a strong positive impact through its collaborations with NIST scientists who require state-of-the-art algorithms and architectures to get the performance they need from their scientific codes. As the sheer volume of data in scientific computations increases, visualization techniques become increasingly essential in order for results to be effectively utilized and interpreted. This group has an excellent combination of a wide range of technical skills and a strong collaborative style, as demonstrated particularly by the cluster of projects around concrete modeling. The group also has a strong role in creating standards; for example, a few years ago it facilitated the development of an Interoperable Message Passing Interface (IMPI) standard, and this standard is now having an impact on commercial software.

Program Relevance and Effectiveness

The Mathematical and Computational Sciences Division staff is well connected, well published, and influential on organizing committees and editorial boards. Their work is well regarded by customers both inside and outside NIST. All of the division’s projects have an excellent record of dissemination of results, through a variety of mechanisms such as software, publications, Web services and documentation, conference talks, and workshops. A key factor in the success and impact of the division is the high quality of the staff. Overall, the technical excellence of the division’s staff is demonstrated in a variety of ways. Personnel receive numerous internal and external awards (including two elections to professional society fellowships in 2001); the division continues to produce a significant number of refereed publications and invited talks; staff serve as editors for many journals (including ACM Transactions on Mathematical Software, for which the division chief is editor-in-chief); and division personnel fill many senior leadership positions in professional societies and working groups and on conference organizing committees.

Last year, the panel cited the Java Numerics project as an excellent example of work with impact and vision and as a good use of NIST’s scientific leadership role. Clearly NIST agrees, as the leaders of the project were awarded the NIST Bronze Medal in 2001. In 2002, this project continues to produce important results. Another impressive project that supports NIST’s core mission is the work on Sparse Basic Linear Algebra Subprograms (BLAS). As with Java Numerics, this work on mathematical software standards is able to impact the commercial landscape owing to the high quality and reputation of the division’s scientists.

Last year’s assessment report discussed the importance—as an element of maintaining the reputation of NIST scientists—of supplementing the many articles coauthored by division staff and appearing in the journals of their collaborators’ fields with publication in their own disciplinary journals. The division’s FY 2001 annual report7 lists the publications that appeared or were accepted in refereed journals this past year; about 12 are in journals in mathematics, scientific computation, or visualization, another 20 or so are in journals in other disciplines, and the last handful are harder to categorize. This represents a balance between the division’s mission of consulting and collaboration and the need for its staff to be recognized as leaders in the mathematical and computational research communities. The former requirement should not be allowed to overshadow the latter one, and the panel encourages the division to maintain and in some cases to raise its visibility at premier mathematical and computational conferences in the areas of division expertise. For example, several projects in the division have a significant component involving computational geometry. The division therefore could consider raising its profile at the annual ACM Symposium on Computational Geometry. Familiarity with the journals and activities in these fields is an important factor in identifying opportunities, and the panel was pleased to learn that the availability of online journals is easing somewhat the problems noted last year with maintaining the NIST library’s journal collections.

Since the terrorist attacks in September 2001, a variety of programs at NIST have been affected by the federal government’s changing priorities. In the Mathematical and Computational Sciences Division, some research projects will probably achieve a higher profile because of their relevance for homeland security. Examples include the projects on image processing and on laser radar (LADAR) systems, both of which will help protect the safety of first responders to terrorist events or other disasters. The division and ITL are participating in NIST-wide planning for activities connected to homeland security and counterterrorism. Indirect effects, such as increased budget uncertainty at various government agencies with which the division works, will also have an impact on the division.

TABLE 8.2 Sources of Funding for the Mathematical and Computational Sciences Division (in millions of dollars), FY 1999 to FY 2002

| Source of Funding | Fiscal Year 1999 (actual) | Fiscal Year 2000 (actual) | Fiscal Year 2001 (actual)a | Fiscal Year 2002 (estimated) |
| --- | --- | --- | --- | --- |
| NIST-STRS, excluding Competence | 3.3 | 3.6 | 4.1 | 7.2 |
| Competence | 0.2 | 0.2 | 0.1 | 0.1 |
| STRS—supercomputing | 0.7 | 0.6 | 3.4 | 0.6 |
| ATP | 0.1 | 0.1 | 0.5 | 0.5 |
| OA/NFG/CRADA | 0.4 | 0.7 | 0.9 | 1.9 |
| Other Reimbursable | 0.0 | 0.1 | 0.0 | 0.0 |
| Total | 4.7 | 5.3 | 9.0 | 10.3 |
| Full-time permanent staff (total)b | 30 | 27 | 39b | 39 |

NOTE: Sources of funding are as described in the note accompanying Table 8.1.

a The difference between the FY 2000 and FY 2001 funding and staff levels reflects the reorganization of ITL, in which the Scientific Applications and Visualization Group was moved out of the Convergent Information Systems Division and into this division.

b The number of full-time permanent staff is as of January of that fiscal year, except in FY 2001, when it is as of March.
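As a quick arithmetic check on Table 8.2, the following sketch sums the six funding sources for each fiscal year and compares the result with the reported totals. The figures are copied from the table; the dictionary layout and the function name `column_sums` are illustrative assumptions, not part of any NIST tooling.

```python
# Funding by source, in millions of dollars, for FY 1999 to FY 2002,
# transcribed from Table 8.2. Row labels are lightly simplified.
FUNDING = {
    "NIST-STRS, excluding Competence": [3.3, 3.6, 4.1, 7.2],
    "Competence": [0.2, 0.2, 0.1, 0.1],
    "STRS-supercomputing": [0.7, 0.6, 3.4, 0.6],
    "ATP": [0.1, 0.1, 0.5, 0.5],
    "OA/NFG/CRADA": [0.4, 0.7, 0.9, 1.9],
    "Other Reimbursable": [0.0, 0.1, 0.0, 0.0],
}
REPORTED_TOTAL = [4.7, 5.3, 9.0, 10.3]


def column_sums(rows):
    """Sum each fiscal-year column, rounded to the table's one-decimal precision."""
    n_years = len(REPORTED_TOTAL)
    return [round(sum(values[i] for values in rows.values()), 1)
            for i in range(n_years)]


if __name__ == "__main__":
    for year, computed, reported in zip(range(1999, 2003),
                                        column_sums(FUNDING),
                                        REPORTED_TOTAL):
        status = "matches" if computed == reported else "differs from"
        print(f"FY {year}: computed {computed} {status} reported {reported}")
```

Because the table entries are already rounded to one decimal place, a total could in principle differ from the column sum by a tenth; for the figures above the sums agree with the reported totals in all four years.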

Division Resources

Funding sources for the Mathematical and Computational Sciences Division are shown in Table 8.2. As of January 2002, staffing for the division included 39 full-time permanent positions, of which 36 were for technical professionals. There were also 15 nonpermanent or supplemental personnel, such as postdoctoral research associates and temporary or part-time workers.

Resources in the division are tight, and the panel is concerned about several effects, related mainly to the constraints on the number of permanent staff, which has been basically constant for several years (the rise in Table 8.2 is due to an organizational change in which a new group was added). In this environment, the division has not been able to hire permanent staff to address continuing and emerging needs in computational science at NIST. One such area is quantum computing, where the division cannot recruit new permanent staff and is instead making good use of outside consultants and collaborators and a new postdoctoral research associate in this area. Traditional areas that are also short-staffed include numerical analysis, mathematical software, and optimization. The panel is particularly concerned about the situation in the Mathematical Software Group, where staffing constraints have limited the group’s ability to explore new projects and forced several existing projects into “maintenance mode.” While the ongoing work in the group is all meritorious, the panel believes that the group is being stretched dangerously thin.

One factor affecting the division’s ability to hire new staff is the virtual lack of attrition recently among permanent staff. This lack of turnover reflects the high morale observed in the division. The panel found that individual researchers are enthusiastic about their work and their management. Staff praised laboratory management’s fostering of good communications both horizontally and vertically; the panel notes that this is quite a change from 3 years ago, when morale problems were severe. Indeed, many positive comments were made about the entire laboratory and division management chain during the panel’s meetings with individual staff members. Taking the rarity of new hires to permanent staff as a given, management has made effective use of affiliated faculty at area universities and of postdoctoral research associates. Unfortunately, space in this division is tight, limiting the possible number of summer visitors.

Given the many demands on personnel’s time in the current environment, the division must make careful decisions about when and how to develop new revisions of software packages. In many current software projects, the developers are working on a new release for an already successful package. The panel believes that the strategic goals of the division should be taken into account when deciding the priorities with respect to which packages receive new releases. In particular, the panel encourages the division to develop a set of criteria on which to base decisions concerning the time at which work on a second release should begin and what the goals of the release should be. Such criteria should emphasize how the second release would promote the overall mission and goals of the division. In some cases, the decision may be that the most strategic use of resources would be to cease further development on one package and move to a new project.

Another area in which the division needs to be careful about effective deployment of staff time is the long-term maintenance of Web resources such as the Guide to Available Mathematical Software (GAMS), Matrix Market, and the Template Numerical Toolkit (TNT). The division has a huge Web presence (which the panel applauds), and this presence is bound to increase with the release of DLMF and other projects. Many traditional NIST activities produce standard reference data or materials, which are then distributed to customers by NIST’s Office of Measurement Services. This static model is not a good fit to the Mathematical and Computational Sciences Division’s standard information resources, but the division would certainly benefit from a new approach that allowed the research groups to be relieved of some of the more mechanical tasks required to maintain these resources on the Web. A solution to this problem is not obvious, because proper, long-term maintenance of resources such as DLMF requires the attention of a mathematician (not just a Webmaster), yet this mathematician’s time might be better spent on new projects. Indeed, finding a balance between providing useful and up-to-date technical information on the Web and having time to develop new research activities is an issue for most scientific and research organizations that post technical Web pages, and NIST can provide leadership in this area. As a first step, the panel suggests that the division think about the factors that might go into deciding at what level to carry out long-term maintenance of Web pages and that it develop a policy governing these decisions.

As has been noted in many past reports, the housing of most of the division at NIST North makes informal interaction between division staff and personnel on the main campus difficult. This is a significant disadvantage for the division, as collaborative efforts with other NIST laboratories are a primary focus. Once a collaboration has begun, the physical distance is only an inconvenience, but many new collaborations, especially those in new areas, originate from casual contacts that are not currently available to the staff at NIST North. Increasing the difficulty of discovering new areas for cooperative work may have negative long-term impacts on the vitality of the division’s project mix. A related concern is that the division’s new group, Scientific Applications and Visualization, is currently located on the main campus, not in NIST North, and the panel did not observe much interaction between this group and the rest of the division, probably because of the physical separation. Another locational issue is the relationship between the division’s Gaithersburg personnel (which include division management) and its small group at NIST Boulder. Some of the Boulder staff have expressed a desire for closer connections with the rest of the division. Division management should consider mechanisms to increase Boulder-Gaithersburg interactions, such as a small dedicated travel fund for staff travel between the two sites. It would also be useful to have some Boulder staff travel to Gaithersburg for the annual assessment of the division.

Advanced Networking Technologies Division

Technical Merit

The mission of the Advanced Networking Technologies Division is to provide the networking industry with the best in test and measurement technology. This mission statement is appropriate, and it accurately reflects the NIST and laboratory missions within the context of technologies relevant to this division’s work. The division focuses on using test and measurement technologies to improve the quality of networking specifications and standards and to improve the quality of networking products based on public specifications. In emerging technology areas, the division also performs modeling and simulation work to help ensure that specifications produced by industry and standardization organizations are complete, unambiguous, and precise. The panel finds that the division’s activities over the past year are definitely relevant and effective and that the programs encompass several of the currently important areas in networking research.

The work of the Advanced Networking Technologies Division is of consistently high quality, and the panel is pleased to see that the incremental improvements observed over the past 3 years are continuing. The organization of ongoing programs around coherent research themes has produced good synergy and allowed more communication and collaboration among the research groups. The themes also provide continuity as projects are completed and new activities initiated.

The Advanced Networking Technologies Division consists of three groups: High Speed Network Technologies, Wireless Communication Technologies, and Internetworking Technologies. Currently, the division’s work is organized in six projects: Networking for Pervasive Computing, Wireless Ad Hoc Networks, Agile Switching, Internet Telephony, Internet Infrastructure Protection, and Quantum Information Networks. These projects are generally well focused on achieving specific and valuable goals and are well directed in support of the NIST mission. The panel is particularly pleased by the balance among the projects: half are driven purely by needs of relevant external communities, and half are projects coordinated across ITL and other NIST laboratories. A good mix of time scales also exists, as two projects are aimed at having short-term impacts, three at intermediate-term effects, and one at long-term goals. Below, the panel describes some of the highlights and issues observed in its assessment of the division’s activities.

A great many activities are under way in the Networking for Pervasive Computing area. Two of these activities are aimed at supporting the development of networking standards for relevant devices. The first focuses on issues surrounding how to craft the various ubiquitous wireless standards (e.g., IEEE 802.15 and IEEE 802.11) so that they do not conflict within the unlicensed 2.4-GHz band. The original designers of the relevant standards all assumed that simply by complying with Federal Communications Commission (FCC) regulations for operation in this band, their technology would not conflict with the operation of other radio technologies sharing the spectrum. However, it has now become clear, largely through work done in the Advanced Networking Technologies Division, that successful coexistence will almost certainly require more than compliance with FCC regulations, and NIST has taken an important leadership role on questions related to reconciling the standards. Division staff have extended their earlier work on formal modeling of Bluetooth and simulation of the interactions between it and IEEE 802.11, and they are now developing tools to assess the effectiveness of various methods of coexistence, including synchronized receivers and combined radios. These results are valuable, and the panel is particularly impressed with how aggressively and effectively the division tackled the problem. The timely information coming out of NIST will allow the IEEE (Institute of Electrical and Electronics Engineers) groups to incorporate the division’s solutions into the standards. The second effort in the area of networking standards for pervasive computing devices focuses on the analysis of the resource discovery protocols being developed for ubiquitous computing systems. Current efforts include work on modeling service descriptions for Jini and Universal Plug and Play (UPnP). The panel continues to be impressed with this activity and suggests extending the work to the Internet Engineering Task Force (IETF) Service Location Protocol.
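To give a rough sense of why compliance with band regulations alone does not guarantee coexistence in the 2.4-GHz band, the sketch below counts how many Bluetooth hop channels overlap a single IEEE 802.11b channel. It is not the division’s model. It assumes the commonly cited channel plans (79 Bluetooth hop channels of 1 MHz centered at 2402 to 2480 MHz, and a 22-MHz-wide 802.11b channel centered at 2407 + 5n MHz) and uniform random hopping, and it ignores transmit power, timing, and any adaptive hopping. All function names are hypothetical.

```python
# Assumed channel plans (simplified): Bluetooth uses 79 hop channels,
# 1 MHz wide, centered at 2402..2480 MHz; an 802.11b channel is taken
# to occupy 22 MHz around a center of 2407 + 5 * n MHz.
BT_CENTERS_MHZ = [2402 + k for k in range(79)]
BT_HALF_WIDTH_MHZ = 0.5
WLAN_HALF_WIDTH_MHZ = 11.0


def wlan_center_mhz(channel):
    """Center frequency of 802.11b channel 1..13 under the assumed plan."""
    if not 1 <= channel <= 13:
        raise ValueError("channel must be between 1 and 13")
    return 2407 + 5 * channel


def overlapping_bt_channels(wlan_channel):
    """Bluetooth channel centers whose 1-MHz slot overlaps the WLAN channel."""
    center = wlan_center_mhz(wlan_channel)
    low = center - WLAN_HALF_WIDTH_MHZ
    high = center + WLAN_HALF_WIDTH_MHZ
    return [c for c in BT_CENTERS_MHZ
            if c + BT_HALF_WIDTH_MHZ > low and c - BT_HALF_WIDTH_MHZ < high]


def hop_overlap_fraction(wlan_channel):
    """Fraction of uniformly random hops that land inside the WLAN channel."""
    return len(overlapping_bt_channels(wlan_channel)) / len(BT_CENTERS_MHZ)


if __name__ == "__main__":
    for ch in (1, 6, 11):
        print(f"802.11b channel {ch}: "
              f"{hop_overlap_fraction(ch):.1%} of Bluetooth hops overlap")
```

Under these assumptions, more than a quarter of Bluetooth hops fall inside an active 802.11b channel even though both radios operate within the rules for the band, which is the kind of interaction that more detailed simulation is needed to quantify.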

The Wireless Ad Hoc Networks project was formed this year by combining the division’s work on technologies and standards for mobile ad hoc networks (MANETs) and for smart sensor networks. The work on MANETs encompasses both analysis and simulation. While developing the simulations, division staff evaluated the effectiveness of two popular simulation environments, OPNET and NS. Thus, one valuable outcome of the project is a forthcoming report comparing the usefulness of these two environments for simulating MANETs; this information should help drive the future evolution of these simulation tools. The division’s research on MANET routing criteria focuses on Kinetic Spanning Trees and clustering structures; this new activity has considerable promise and is well aligned with current work in this field outside NIST. In the smart sensors area, the work on distributed detection in sensor networks has been redirected into investigating networking protocols and distributed algorithms for support of sensor networks, as suggested by the panel in last year’s assessment.

In the Agile Switching project, the division has completed its project on modeling, evaluation, and research of lightwave networks (MERLiN). This year’s focus is on extending the work to multilayer (multiprotocol label switching [MPLS] and optical) restoration and recovery. As part of this work, division staff developed a modeling tool called GHOST, which promises to have wide utility in research and in industry for analyzing approaches to restoration and recovery. In addition to developing this tool, staff are also using GHOST to investigate various aspects of restoration, particularly those involving large-scale failures and multiple simultaneous failures. A new facet of this project is the work on extending GHOST to allow the investigation of the interaction between failure/recovery and quality of service-based traffic engineering; this effort promises to produce valuable results by next year’s assessment. The panel notes that integrating and aligning the new project on game theoretic approaches to analyzing failure and restoration with the work on extending GHOST would benefit both projects.

The past year has seen a great deal of progress in the project on Internet Telephony (voice over Internet Protocol [IP]). The staff spent the first year of this project (2000) learning the technology and building an interesting set of diagnostic and testing tools for session initiation protocol (SIP)-based call signaling. The development of such an interoperability test tool is valuable to the community, and the Web-enabled SIP load generation and trace capture elements of this tool are already demonstrating their utility by helping implementers tease out subtle interoperability problems. Now that the basic pieces of this project have achieved critical mass, the panel suggests that it would be advantageous to expand the effort to include the associated protocol machinery that surrounds basic call signaling (such as telephony routing over IP, telephone number mapping, and call routing). Addressing questions related to the other elements around SIP-based call signaling would significantly enhance NIST’s contributions in this area.
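For readers unfamiliar with SIP call signaling, the sketch below shows the general shape of the text messages that a load generator emits and a trace-capture tool parses. It is not the NIST tool. The header set follows the layout later standardized in RFC 3261, the addresses are placeholders, and the function names `build_invite` and `parse_message` are hypothetical.

```python
import uuid


def build_invite(caller, callee, local_host, cseq=1):
    """Build a minimal, body-less SIP INVITE request as a text message."""
    branch = "z9hG4bK" + uuid.uuid4().hex[:16]  # branch prefix required by RFC 3261
    lines = [
        f"INVITE sip:{callee} SIP/2.0",
        f"Via: SIP/2.0/UDP {local_host};branch={branch}",
        "Max-Forwards: 70",
        f"To: <sip:{callee}>",
        f"From: <sip:{caller}>;tag={uuid.uuid4().hex[:8]}",
        f"Call-ID: {uuid.uuid4().hex}@{local_host}",
        f"CSeq: {cseq} INVITE",
        f"Contact: <sip:{caller}>",
        "Content-Length: 0",
    ]
    # SIP uses CRLF line endings and a blank line to end the header section.
    return "\r\n".join(lines) + "\r\n\r\n"


def parse_message(raw):
    """Split a SIP request into its method, request URI, and header dictionary."""
    head = raw.split("\r\n\r\n", 1)[0]
    start_line, *header_lines = head.split("\r\n")
    method, uri, _version = start_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip()] = value.strip()
    return method, uri, headers


if __name__ == "__main__":
    message = build_invite("alice@example.com", "bob@example.com",
                           "client.example.com")
    print(message)
    print(parse_message(message)[0])
```

An interoperability test of the kind described above would send many such requests with controlled variations and compare the captured responses against the specification; the subtle problems tend to appear in exactly these header details.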

In early 2001, the Advanced Networking Technologies Division completed its valuable work on developing reference implementations for Internet Protocol Security (IPsec). Now the emphasis has shifted to Internet infrastructure protection, in particular to the protection of the Domain Name System (DNS) via DNSsec. This important project is a collaborative effort with the Computer Security Division, and the panel believes that the focus on DNSsec is appropriate. While DNSsec has not enjoyed wide adoption to date, recent events have raised the general awareness about the need to protect shared Internet services such as the DNS, and hence the impact of NIST’s work in this area should be higher in the future. The division has also begun a new project on evaluating the performance and scalability of IPsec key management protocols. This timely work should help inform the IETF’s ongoing effort to select a successor to the Internet Key Exchange (IKE) key management protocol. The panel also urges the division to be alert to potential new issues in high-performance IPsec extensions that are expected to arise in the next year or two.

As part of the NIST-wide initiative in quantum computing, the Advanced Networking Technologies Division is working with the Computer Security Division on protocols and prototypes for quantum cryptography. The Advanced Networking Technologies Division’s contribution, known as the Quantum Information Networks project, is in the area of key management protocols for quantum key distribution. The panel is pleased to see that this project has both a protocol design and a prototyping element using a real quantum channel. This project is associated with the IPsec key management protocols work mentioned above, and the panel expects that good synergy should be achievable across the two areas. While the practical impact of the Quantum Information Networks work is too far in the future to predict, having a few such long-term projects provides a good balance to the division’s overall research program. In addition, the division is able to contribute to a NIST-wide program, thus keeping researchers and management engaged in NIST’s overall mission.

In summary, the panel is very pleased with the division’s ability to sunset activities either because the stated goals have been accomplished or because technical innovations require a shift in focus. Programs concluded this past year include the development of reference implementations for IPsec, the MERLiN project, the broadband wireless work on IEEE 802.16, the 3G cellular work, and the active network project, which has been shifted to the technology transfer stage. The division has also demonstrated impressive agility and the ability to jump into an area early and to select work with significant potential impact. Between the 2001 and 2002 assessments, the division used project mergers and conclusion to move from nine projects to six (one of which is entirely new). This consolidation helps highlight synergies between activities and helps reduce the number of projects with just a few staff members working on them. The panel is also impressed with the closer collaborations that have developed between staff working on different projects; specific examples of effective cooperative pairings include that of optical restoration modeling and MPLS and that of sensor networks and MANET. Utilization of the expertise and results available in other groups within the division is a constructive way to leverage a project’s resources and maximize NIST’s impact.

Program Relevance and Effectiveness

Staff of the Advanced Networking Technologies Division continue to be active in a variety of industry organizations, including the IETF, the IEEE, and the International Telecommunications Union. NIST personnel are well respected by the staff of these standards organizations and by the communities they serve. The value of the division’s standards-related efforts is realized in several ways. Most often, technical work done at NIST, such as modeling and analysis or development of testing tools and evaluation criteria, provides a greater understanding of the implications of proposed standards or supplies solutions to problems that could arise in standards development. NIST’s familiarity with the networking community and its reputation for an unbiased technical approach are also useful in determining what issues have inspired the standards effort and in defining the technical space on which the standards bodies should be focusing. One example of recent impact is the division’s notable success at raising the visibility of co-interference problems between IEEE 802.11 and Bluetooth wireless networks. Current efforts with the potential for significant future impact include the work on SIP interoperability testing, which is likely to help tighten the specifications and arbitrate interoperability disputes, and the work on understanding the dynamics of resource discovery protocols, which may help improve existing solutions such as UPnP and Jini, while potentially showing the benefits of more general and standards-based approaches such as Service Location Protocol.

In the 2001 assessment, the panel discussed at length the growing industry practice of developing standards in consortia or other private groupings rather than through the traditional “open” approach of mainly utilizing professional organizations. The situation has not changed since last year, and the issue remains important. The panel, and the division, recognize that the “closed” system is somewhat antithetical to the NIST and governmental philosophy of supporting all U.S. companies and the public in an open manner. However, to carry out the NIST mission of strengthening the U.S. economy, the division must be able to impact the standards that will be used in the networking community no matter how they are developed. Therefore, NIST should develop a policy on this issue, together with criteria for deciding when and how to participate in these consortia. Some of the closed standards groups are actually very inclusive, with minimal burdens placed on participants; others may be designed to exclude potential competitors and should not be endorsed by NIST. NIST should also consider whether it could develop a strategy for encouraging the IT community to continue to utilize open, or at least quasi-open, models of standards development.

The Advanced Networking Technologies Division assumes a leadership role in the networking communities, in part by virtue of the standards activities described above. However, it is important for the staff to build awareness of NIST’s expertise and to maintain its reputation in other ways. The division does publish in journals and conference proceedings, and its personnel attend a variety of meetings. These activities are highly appropriate, but the panel suggests that a larger presence in the more prestigious publications and conferences of the networking field might be appropriate. Stronger and more visible participation in the top tier in these areas would provide the widest dissemination and enable the greatest impact for NIST results. It would also allow the division to burnish its reputation, develop the reputations and visibility of its most respected staff, and position itself as a key element of the networking community.

Division Resources

Funding sources for the Advanced Networking Technologies Division are shown in Table 8.3. As of January 2002, staffing for the division included 24 full-time permanent positions, of which 20 were for technical professionals. There were also 10 nonpermanent or supplemental personnel, such as postdoctoral research associates and temporary or part-time workers.

The primary issue for the Advanced Networking Technologies Division is its limited number of full-time permanent staff. The division performs relevant and effective work, in part because of a large cadre of guest researchers (20 people as of February 2002). This heavy reliance on visitors means that the division depends on temporary employees to support the mission-critical projects, and the potential exists for unexpected delays or the premature termination of an important effort when a guest researcher leaves NIST. The panel believes that these risks are currently outweighed by the benefits provided by the added manpower and the relationships built with other institutions, but the division should continue to be careful about maintaining an appropriate balance between permanent and temporary staff. Similarly, caution should be exercised about the balance between internal and external funds.

The panel was pleased to observe that morale within the division is quite good and that the staff is enthusiastic about its work. This year’s transition in leadership (a new division chief) was accomplished very smoothly, with no disruption in focus or loss of momentum. Division and laboratory management should fill the position of High Speed Network Technologies Group leader soon to allow the acting group leader to focus his attention on his new responsibilities as division chief.

TABLE 8.3 Sources of Funding for the Advanced Networking Technologies Division (in millions of dollars), FY 1999 to FY 2002

| Source of Funding | Fiscal Year 1999 (actual) | Fiscal Year 2000 (actual) | Fiscal Year 2001 (actual) | Fiscal Year 2002 (estimated) |
|---|---|---|---|---|
| NIST-STRS, excluding Competence | 4.9 | 4.0 | 4.1 | 4.6 |
| Competence | 0.2 | 0.3 | 0.2 | 0.2 |
| ATP | 0.3 | 0.5 | 0.3 | 0.2 |
| OA/NFG/CRADA | 1.2 | 1.7 | 1.5 | 2.2 |
| Total | 6.6 | 6.5 | 6.1 | 7.2 |
| Full-time permanent staff (total)^a | 30 | 27 | 21^a | 24 |

NOTE: Sources of funding are as described in the note accompanying Table 8.1.

^a The number of full-time permanent staff is as of January of that fiscal year, except in FY 2001, when it is as of March.

Computer Security Division

Technical Merit

The mission of the Computer Security Division is to improve information systems security by:

- Raising awareness of information technology risks, vulnerabilities, and protection requirements, particularly for new and emerging technologies;

- Researching, studying, and advising agencies of IT vulnerabilities and devising techniques for the cost-effective security and privacy of sensitive federal systems;

- Developing standards, metrics, tests, and validation programs to promote, measure, and validate security in systems and services, to educate consumers, and to establish minimum security requirements for federal systems; and

- Developing guidance to increase secure IT planning, implementation, management, and operation.

The division’s programs directly support this mission and are consistent with the mission of the Information Technology Laboratory and of NIST. Privacy and security are essential to protecting electronic commerce, critical infrastructure, personal privacy, and private and public assets, so this work makes important contributions to strengthening the U.S. economy and promoting the public welfare.

The programs under way in the Computer Security Division are highly appropriate, and the division’s work has great technical merit. After a reorganization, the division is now composed of four groups: Security Technology, Systems and Network Security, Security Management and Guidance, and Security Testing and Metrics (the last two groups are new).

The Advanced Encryption Standard (AES) continues to be the focus of the Security Technology Group. In August 2001, NIST hosted a second workshop to continue to facilitate the analysis and development of new modes of operation for AES.8 NIST staff are also developing test and validation suites for the applications of AES. The open design of the AES, and the competition used to select it, have greatly enhanced the reputation of the division and are a model for future standards work.

The Systems and Network Security Group is working in a broad range of areas, including emerging technologies, reference data and implementations, and security guidance. One project is aimed at providing the technical support necessary to create a ubiquitous smart card infrastructure in the United States. Specific NIST efforts include the development of automated test suites and a testbed for the Government Smart Card Program, as well as the development of architectural models and security testing criteria. This work is appropriate because it will enable the development of consistent test methodologies for smart cards and will also reduce their cost and encourage their use in many areas. Another ongoing activity in this group is the ICAT Metabase, which is a searchable index of computer vulnerabilities that links users to a variety of publicly available vulnerability databases and patch sites. By integrating ICAT with other standard lexicons, such as the Common Vulnerabilities Enumeration, division staff have made this resource invaluable to industry as well as to researchers and users of computer systems.

Another activity in the Systems and Network Security Group is the work related to security on mobile devices, such as personal digital assistants (PDAs). The goal of this work is to develop new security mechanisms for wireless mobile devices so that the devices can be used as smart cards or computation devices to validate information about the possessor. While this objective is clearly appropriate for this division, the panel is concerned about the direction of the project and is not convinced that the work will prove fruitful. Securing hand-held PDAs is important, indeed critical, when the owner of the PDA is trying to protect information on the PDA. However, if the owner is untrusted, and a third party places information on a PDA that is to be used later as a security token (for whatever purpose), an untrusted party then has access to that information and can read, alter, and/or delete it. More specifically, securing the information would require that the PDA be a reference monitor, which it is not. The division should recast the work with this observation in mind.

A relatively new effort in the Systems and Network Security Group is a project on defending public telephone networks, including analysis of Signaling System 7 (SS7) vulnerabilities. The scope of this project is not clear to the panel. It might address basic communication security, application-specific security requirements for SS7, or both. The former area can be addressed via underlying communication protocol security mechanisms, such as the use of IPsec when SS7 is carried over IP. Concerns in the latter area are intrinsic to SS7 and have been studied by telephone companies in the past. For example, in the early 1990s, GTE Labs developed an SS7 “firewall” to protect central office switches. Before embarking on this project, division staff should be familiar with previous work in this area, not all of which has necessarily been widely published. Thus, the panel recommends that division staff contact the research groups that have worked on SS7 security and countermeasures, such as the Verizon Technology Organization (which houses the former GTE Labs), Telcordia (formerly Bellcore), and the National Communications System technical staff.

Work in the new Security Management and Guidance Group is entirely appropriate, and the group’s goal of advising and assisting government agencies is laudable. The panel felt that, on the whole, the programs under way to implement these goals are very suitable for the division and have high technical merit. A primary focus is the Computer Security Resource Center, a valuable Web site that provides information about computer security for the public.9 This Web site is accessed by a wide range of organizations, including federal agencies, businesses, and schools, and exemplifies how the division can effectively make information available and accessible to a broad audience. The maintenance of this site is consistent with the division’s mission, as is the work on outreach to both federal agencies and businesses. These activities serve both to educate the public about computer security and to provide resources that the public can use.

One of the key programs in the new Security Testing and Metrics Group is the National Information Assurance Partnership (NIAP) program, which focuses on developing Common Criteria protection profiles and investigating issues related to the use of these profiles in developing security requirements for the federal government. The goal of this work is to enable quicker, more effective security evaluations and to standardize baseline security requirements for particular environments and products. In the past year, six Common Criteria testing laboratories have been accredited, and work is proceeding on the development of new protection profiles (such as for financial institutions). Fourteen nations have now signed mutual recognition testing agreements (recognizing the Common Criteria and the Common Criteria testing laboratories).

Another cornerstone of the Security Testing and Metrics Group is the work on cryptographic security testing and cryptographic module validation. A variety of efforts contribute to these projects, including work on public-key infrastructure (PKI) standards, components, and committees. One goal of this work is to enable businesses, citizens, and organizations to interact with the government over the Internet. Originally, the plan was to develop a single portal for this “e-government” program, but, unfortunately, the lack of funding in 2002 for integrating authentication into this project seriously weakened its viability.