4

Autonomous Behavior Technologies

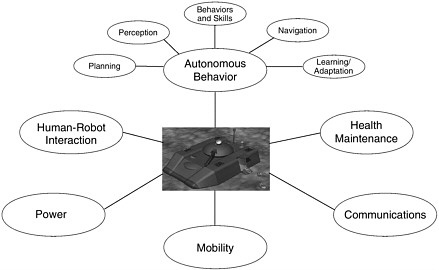

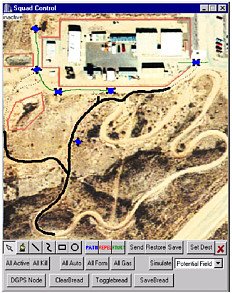

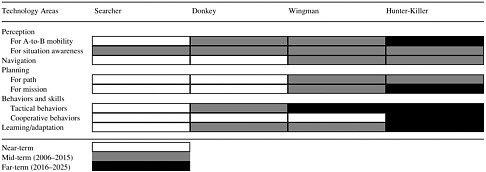

An unmanned ground vehicle (UGV) encompasses the broad technology areas depicted in Figure 4-1. The next two chapters review and evaluate the state of the art in each of these UGV technology areas. This chapter evaluates technologies needed for the autonomous behavior subsystems that are unique to unmanned systems: perception, navigation, planning, behaviors and skills, and learning/adaptation.

As part of the evaluation of each technology area, the committee estimated technology readiness levels (TRLs) relative to the development of specific UGV systems. Table 4-1 summarizes the basic criteria for TRL estimates.

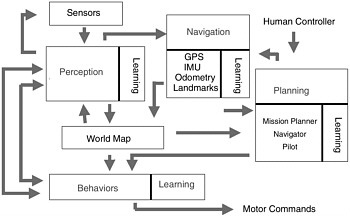

Technology areas responsible for autonomous behavior are depicted in Figure 4-2. It is important to note that these technologies are software-based, except for sensors (needed for A-B mobility and situation awareness). The figure illustrates how the software subsystems depend upon each other and are linked together to provide “intelligence” for a UGV.

The Perception subsystem takes data from sensors and develops a representation of the world around the UGV, called a world map, sufficient for taking those actions necessary for the UGV to achieve its goals. It consists of a set of software modules that carry out lower-level image-processing functions to segment features in the scene using geometry, color, or other properties up to higher-level reasoning about the classification of objects in the scene. The Perception subsystem can control sensor parameters to optimize perception performance and can receive requests from the planner or from the behaviors and skills subsystem to focus on particular regions or aspects of the scene.

The Navigation subsystem keeps track of the UGV’s current position and pose (roll, pitch, yaw) in absolute coordinates. It also provides the means to convert vehicle-centered sensor readings into an absolute frame of reference. It will generally use a variety of independent means, such as an IMU (inertial measurement unit), GPS (global positioning system), and odometry, with the estimates from all of them combined by a Kalman filter or a similar estimator. It may make use of visual landmarks if they can be provided by the Perception subsystem.
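The combination of odometry and GPS estimates described above can be illustrated with a minimal one-dimensional Kalman filter. This is only a sketch under stated assumptions: the names `Estimate`, `predict`, and `update` and all numeric values are hypothetical and do not come from any system discussed in this report, and a fielded navigation subsystem would estimate full three-dimensional position and attitude rather than a single coordinate.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    mean: float      # estimated position along the route (m)
    variance: float  # uncertainty of that estimate (m^2)


def predict(est: Estimate, odom_delta: float, odom_var: float) -> Estimate:
    """Dead-reckoning step: move the mean by the odometry increment
    and grow the variance, because odometry error accumulates."""
    return Estimate(est.mean + odom_delta, est.variance + odom_var)


def update(est: Estimate, measurement: float, meas_var: float) -> Estimate:
    """Correction step: blend in an absolute fix (for example, GPS).
    The gain weights the fix by how uncertain the prediction is."""
    gain = est.variance / (est.variance + meas_var)
    mean = est.mean + gain * (measurement - est.mean)
    variance = (1.0 - gain) * est.variance
    return Estimate(mean, variance)


if __name__ == "__main__":
    # Illustrative values only (assumptions, not measured data).
    est = Estimate(mean=0.0, variance=1.0)
    est = predict(est, odom_delta=1.0, odom_var=0.5)
    est = update(est, measurement=1.2, meas_var=1.5)
    print(est)
```

The point of the sketch is that each source is weighted by its uncertainty, so a noisy GPS fix nudges the dead-reckoned estimate rather than replacing it.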

The Planning subsystem is a hierarchy of modules: the Mission Planner decides B is the destination; the Navigator does global A to B path planning based on an a priori map and other data; the Pilot does moment-to-moment trajectory planning. Using information from the Navigation subsystem and the world model, the planner can also plan sensor and sensor data-processing activities. For example, it can cue certain sensors to point in a particular direction or activate a specific feature detection algorithm.

Software for Behaviors and Skills combines inputs from Perception, Navigation, and Planning and translates them into motor commands for the UGV to move and accomplish work. This also includes software necessary for the robot to accomplish specific mission-functions, including those based on tactics, techniques, and procedures used in military operations.

Learning/Adaptation software is used to improve performance through experience. It offers a way for a system to become robust over time (i.e., to be able to handle variability not initially anticipated by the system’s programmers). Learning is not implemented as a separate subsystem but is incorporated as part of Perception, Navigation, Planning, and Behaviors.

PERCEPTION

The perception technologies discussed in this section include the sensors, computers, and software modules essential for the fundamental UGV capabilities of A to B mobility and situation awareness. The section describes the current state of the art, estimates the levels of technology readiness, identifies capability gaps, and recommends areas of research and development needed. Additional details relating to perception for autonomous mobility are contained in Appendix C.

FIGURE 4-1 Areas of technology needed for UGVs.

A UGV’s ability to perceive its surroundings is critical to the achievement of autonomous mobility. The environment is too dynamic and map data too inaccurate to rely solely on a single navigation means, such as the global positioning system (GPS). The vehicle must be able to use data from onboard sensors to plan and follow a path through its environment, detecting and avoiding obstacles as required.

The goal of perception technology is to relate features in the sensor data to those features of the real world that are sufficient, both for the moment-to-moment control of the vehicle and for planning and replanning. Humans are so good at perception, the brain does it so effortlessly, that we tend to underestimate its difficulty. It is difficult, both because the perception process is not well understood and because the algorithms that have been shown to be useful in perception are computationally demanding.

Technical Objectives and Challenges

The actions required by a UGV to move from A to B take place in a perceptually complex environment. An FCS UGV is likely to operate in any weather (rain, fog, snow), during day or night, in the presence of dust or other battlefield obscurants, and in conjunction with friendly forces opposed by an enemy. Perception system tasks are summarized in Table 4-2.

The UGV must be able to avoid positive obstacles such as rocks or trees (or indoor obstacles like furniture) and negative obstacles such as ditches. Water obstacles present special challenges; the UGV must avoid deep mud or swampy regions, where it could be immobilized, and must traverse slopes in a stable manner so that it will not turn over. The move from A to B can take place in different terrains and vegetation backgrounds (e.g., desert with rocks and cactus, woodland with varying canopy densities, scrub grassland, on a paved road with sharply defined edges, in an urban area), with different kinds and sizes of obstacles to avoid (rocks in the open, fallen trees masked by grass, collapsed masonry in a street), and in the presence of other features that have tactical significance (e.g., clumps of grass or bushes, tree lines, or ridge crests that could provide cover).

Each of these environments imposes its own set of demands on the perception system, modified additionally by such factors as level of illumination, visibility, and surrounding activity. In addition to obstacles, it must detect such features as a road edge if the path is along a road, or features indicating a more easily traversed local trajectory if it is operating off-road. The perception system must be able to detect, classify, and locate a variety of natural and manmade features to confirm or refine the UGV’s internal estimate of its location (recognize landmarks); to validate assumptions made by the global path planner prior to initiation of the

TABLE 4-1 Criteria for Technology Readiness Levels

| TRL Number | Description |
|---|---|
| 1. Basic principles observed and reported | Lowest level of technology readiness. Scientific research begins to be translated into applied research and development. Examples might include paper studies of a technology’s basic properties. |
| 2. Technology concept and/or application formulated | Invention begins. Once basic principles are observed, practical applications can be invented. The application is speculative and there is no proof or detailed analysis to support the assumption. Examples are still limited to paper studies. |
| 3. Analytical and experimental critical function and/or characteristic proof of concept | Active research and development is initiated. This includes analytical studies and laboratory studies to physically validate analytical predictions of separate elements of the technology. Examples include components that are not yet integrated or representative. |
| 4. Component and/or breadboard validation in laboratory environment | Basic technology components are integrated to establish that the pieces will work together. This is relatively “low-fidelity” compared to the eventual system. Examples include integration of ad hoc hardware in a laboratory. |
| 5. Component and/or breadboard validation in relevant environment | Fidelity of breadboard technology increases significantly. The basic technological components are integrated with reasonably realistic supporting elements so that the technology can be tested in a simulated environment. Examples include “high-fidelity” laboratory integration of components. |
| 6. System/subsystem model or prototype demonstration in a relevant environment | Representative model or prototype system, which is well beyond the breadboard tested for TRL 5, is tested in a relevant environment. Represents a major step up in a technology’s demonstrated readiness. Examples include testing a prototype in a high-fidelity laboratory environment or in a simulated operational environment. |
| 7. System prototype demonstration in an operational environment | Prototype near or at planned operational system. Represents a major step up from TRL 6, requiring the demonstration of an actual system prototype in an operational environment, such as in an aircraft, vehicle, or space. Examples include testing the prototype in a test-bed aircraft. |
| 8. Actual system completed and “flight qualified” through test and demonstration | Technology has been proven to work in its final form and under expected conditions. In almost all cases, this TRL represents the end of true system development. Examples include developmental test and evaluation of the system in its intended weapon system to determine if it meets design specifications. |
| 9. Actual system “flight proven” through successful mission operations | Actual application of the technology in its final form and under mission conditions, such as those encountered in operational test and evaluation. In almost all cases this is the end of the last “bug-fixing” aspects of true system development. Examples include using the system under operational mission conditions. |

FIGURE 4-2 Autonomous behavior subsystems. Courtesy of Clint Kelley, SAIC.

TABLE 4-2 Perception System Tasks

| On-Road | Off-Road |
|---|---|
| Find and follow the road | Follow a planned path subject to tactical constraints. |
| Detect and avoid obstacles | Find mobility corridors that enable the planned path or that support replanning. |
| Detect and track other vehicles | Detect and avoid obstacles. |
| Detect and identify landmarks | Identify features that provide cover, concealment, vantage points or as required by tactical behaviors. |
| | Detect and identify landmarks. |
| | Detect, identify, and track other vehicles in formation. |
| | Detect, identify, and track dismounted infantry in force. |

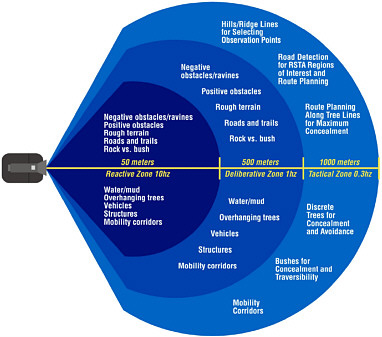

traverse (e.g., whether the region of the planned path is traversable); to gather information essential for path replanning (e.g., identify potential mobility corridors) and for use by tactical behaviors1 (e.g., “when you reach B, find and move to a suitable site for an observation post” or “move to cover”). The perception horizon begins at the front bumper and extends out to about 1,000 meters. Figure 4-3 illustrates the different demands that might be placed on a UGV perception system.

Specific objectives for A-to-B mobility are derived from the required vehicle speed and the characteristics of the assumed operating environment (e.g., obstacle density, visibility, illumination [day/night], and weather [affects visibility and illumination but may also alter feature appearance]). Table C-1 in Appendix C summarizes the full scope of environments, obstacles, and other perceptual challenges to autonomous mobility.

State of the Art

In the 18 years since the beginning of the Defense Advanced Research Projects Agency (DARPA) Autonomous Land Vehicle (ALV) program, there has been significant progress in the canonical areas of perception for UGVs: road-following, obstacle detection and avoidance (both on-road and off), and terrain classification and traversability analysis for off-road mobility. There has not been comparable progress at the system level in attaining the ability to go from A to B (on-road and off) with minimal intervention by a human operator. There are significant gaps in road-following capability and performance characterization particularly for the urban environment, for unstructured roads, and under all-weather conditions. Driving performance more broadly, even on structured roads, is well below that of a human operator. There is little evidence that perception technology is capable of supporting cross-country traverses of tactical significance, at tactical speeds, in unknown terrain, and in all weather, at night, or in the presence of obscurants. Essentially no perception capability exists (excluding limited UGV RSTA [reconnaissance, surveillance, and target acquisition] demonstrations) beyond 60 meters to 80 meters. Ability to detect tactical features or to carry out situation assessment in the region 100 meters to 1,000 meters is nonexistent as a practical matter.

The state of the art is based primarily on the DOD and Army Demo III project, the DARPA PerceptOR (Perception Off-Road) project, and research supported by the U.S. Department of Transportation, Intelligent Transportation Systems program. The foundation for much of the current research was provided by the DARPA ALV project, 1984–89, and the DARPA/Army/OSD Demo II project, 1992–98. Perception capabilities demonstrated by these and other projects are described in Appendix C and Appendix D.

On-Road

Army mission profiles show that a significant percentage of movement (70 percent to 85 percent) is planned for primary or secondary roads. Future robotic systems will presumably have similar mission profiles with significant on-road components. In all on-road environments the perception system must at a minimum detect and track a lane to provide an input for lateral or lane-steering control (road-following); detect and track other vehicles either in the lane or oncoming to control speed or lateral position; and detect static obstacles in time to stop or avoid them.2 In the urban environment, in particular, a vehicle must also navigate intersections, detect pedestrians, and detect and recognize traffic signals and signage.

On-road mobility has been demonstrated in three environments: (1) open-road: highways and freeways; (2) urban “stop and go”; and (3) following dirt roads, jeep tracks, paths and trails in less structured environments from rural to undeveloped terrain. Unstructured roads pose a challenge because the appearance of the road is likely to be highly variable, generally with no markings, and edges may not be distinct.

FIGURE 4-3 Perception zones for cross-country mobility. Courtesy of Benny Gothard, SAIC.

Perception systems that detect lanes and warn a driver of lane departures on structured, open roads are at the product stage. About 500,000 miles of lane detection and tracking operation has been demonstrated on highways and freeways. Lanes can be tracked at human levels of driving speed (e.g., 65 mph) or better under a range of visibility conditions (day, night, rain) and for a variety of structured roads (see Jochem, 2001). None of the systems can match the performance of an alert human driver using context and experience in addition to perception. Most systems are advisory and do not control the vehicle, although the capability exists to do so.

On-road mobility in an urban environment is very difficult. Road-following, intersection detection, and traffic avoidance cannot be done in any realistic situation. Signs and traffic signals can be segmented and read only if they conform to rigidly defined specifications and if they occupy a sufficiently large portion of the image. Pedestrian detection remains a problem. A high probability of detection is accompanied by a high rate of false positives. Because of the complexity of the urban environment, approaches must be data-driven, rather than model-driven. A variety of specialized classifiers or feature detectors are required to provide accurate and rapid feature detection and classification. Running all of these continuously requires considerable computing power. Research is required on controller strategies to determine which should be active at any time. Active camera control (active vision) is required for the urban environment because of the simultaneous need for wide fields of view and high resolution. Little research has been done on the use of active vision in an urban environment. (See Appendix C.)

Autonomous mobility on less structured roads has not received much emphasis despite its potential military importance. There is no experience comparable to the 500,000 miles or more of lane detection and tracking operation on highways and freeways; limitations are not as well understood and systems are not as robust. The limited experiments suggest UGVs can operate day and night at about human levels of driving speed (e.g., 10 mph to 40 mph) only on unstructured roads (secondary roads, dirt roads, jeep tracks, trails) where the road is dry, relatively flat with gentle slopes, no sharp curves and no water crossings or standing water. The road must be differentiable from background, using readily computed features. Current approaches may lose the road on sharp curves or classify steep slopes as obstacles.

Difficulty will be encountered when the “road” is defined more by texture and context. Performance on unstructured roads can be significantly affected by weather to the extent it reduces the saliency of the perceptual cues, and is likely to vary from road to road. Standing water can be detected but depth cannot be estimated. Mud can be detected in some cases, but not in others. Crown height can be measured but generally is not used. Texture could be used to provide warning of upcoming rough road segments, but computational issues remain.

On-road obstacle detection (other vehicles and static objects) is much less developed than lane tracking. Capability has been demonstrated using both active (LADAR, radar) and passive (stereo-video) sensors. For example, static objects 14 cm tall were detected at distances of 100 meters using stereo-video in daylight (Williamson, 1998). Vehicles were detected at up to 180 meters using radar (Langer and Thorpe, 1997). A very narrow sensor field of view is required to detect obstacles in time to stop or avoid them at road speeds, and sensors and processing must be actively controlled. Most demonstrations of obstacle detection were staged under conditions much less demanding than real-world operations, and comprehensive performance evaluation has not been done. Capabilities developed for off-road obstacle detection are applicable to the on-road problem but have been demonstrated only at low speed. On-road obstacle detection has not generally been integrated with lane-tracking behavior for vehicle control.
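The link between road speed and a narrow field of view can be made concrete with a back-of-the-envelope sketch. Everything numeric here is an assumption for illustration: the reaction time, deceleration, pixel count on target, and sensor width are hypothetical, and the small-angle, flat-road geometry ignores curves, dips, and sensor noise.

```python
import math


def stopping_distance_m(speed_kmh: float, reaction_s: float,
                        decel_ms2: float) -> float:
    """Distance covered during the reaction delay plus braking distance."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * reaction_s + v * v / (2.0 * decel_ms2)


def required_ifov_rad(obstacle_height_m: float, range_m: float,
                      pixels_on_target: float) -> float:
    """Largest per-pixel angle that still places the requested number of
    pixels on an obstacle of the given height (small-angle approximation)."""
    return obstacle_height_m / (range_m * pixels_on_target)


def field_of_view_deg(ifov_rad: float, pixels_across: int) -> float:
    """Total field of view of a sensor with that per-pixel angle."""
    return math.degrees(ifov_rad * pixels_across)


if __name__ == "__main__":
    # Assumed values: 100 km/h, 0.5 s reaction, 5 m/s^2 braking,
    # a 14 cm obstacle, 10 pixels on target, a 640-pixel-wide sensor.
    d = stopping_distance_m(100.0, 0.5, 5.0)
    ifov = required_ifov_rad(0.14, d, 10.0)
    print(f"look-ahead {d:.0f} m, field of view {field_of_view_deg(ifov, 640):.1f} deg")
```

Under these assumptions the sensor can cover only a few degrees at the required resolution, which is why the text pairs the narrow field of view with active control of where the sensor points.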

Road-following assumes that the vehicle is on the road. A special case is detecting a road, particularly in a cross-country traverse, where part of the planned path may include a road segment. The level of performance on this task is essentially unknown.

Road-following capability can support leader-follower operation in militarily significant settings where the time interval between the preceder (route-proofing) vehicle and the follower is sufficiently short so that changes to the path are unlikely. Autonomous, unaccompanied driving behavior, particularly in traffic or in urban terrain with minimum operator intervention, is well beyond the state of the art. Consider the performance of the human driver relative to today’s UGVs. The current road safety statistics for the United States reveal that the mean time between injury-causing crashes is tens of thousands of vehicle hours. By contrast, it would be a major challenge for a UGV to get through 0.1 hours of unassisted driving in moderate to heavy traffic, and it is doubtful that that could be accomplished consistently in a statistically valid series of experiments. Despite impressive demonstrations, today’s automated systems remain many orders of magnitude below human driving performance under a realistic range of challenging driving conditions.
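A rough calculation shows the size of this gap. As assumptions for illustration only, take $10^{4}$ vehicle hours as the low end of “tens of thousands” for the human driver and 0.1 hours as an optimistic figure for the UGV:

$$
\log_{10}\!\left(\frac{T_{\text{human}}}{T_{\text{UGV}}}\right) = \log_{10}\!\left(\frac{10^{4}\ \text{h}}{10^{-1}\ \text{h}}\right) = 5
$$

The two figures are not identical measures (time between injury-causing crashes versus time of unassisted driving), so the result is indicative only, but it suggests a gap of roughly five orders of magnitude.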

Insufficient attention has been given to on-road driving behavior in view of Army mission profiles, which call for vehicles to operate mostly on-road. Essentially no research has been done on the additional skills beyond road-following and obstacle avoidance required to enable driving behavior more generally.

Off-Road

Autonomous off-road navigation requires that the vehicle characterize the terrain as necessary to plan a safe path through it and detect and identify features that are required by tactical behaviors. Characterization of the terrain includes describing three-dimensional terrain geometry, terrain cover, and detecting and classifying features that may be obstacles including rough or muddy terrain, steep slopes, and standing water, as well as such features as rocks, trees, and ditches.

No quantitative standards, metrics, or procedures exist for assessing off-road UGV performance. It is difficult to know if progress is being made in off-road navigation and where deficiencies may exist. Unlike road-following, speed as a metric to gauge progress in off-road mobility is incomplete and may be misleading. No meaningful comparisons can be made without knowing the environmental conditions, the details of the terrain, and in particular, how much reliance was placed on prior knowledge to achieve demonstrated performance.

Published results and informal communications provide no evidence that UGVs can drive off-road at speeds equal to those of manned vehicles. Although UGV speeds up to 35 km/h have been reported, the higher speeds have generally been achieved in known benign terrain, and under conditions that challenged neither the perception system nor the planner. During the ALV and Demo II experiments in similar benign terrain, manned HMMWVs (high-mobility multi-purpose wheeled vehicles) were driven up to 60 km/h. In more challenging terrain the top speeds for all vehicles would be lower but the differential likely greater.

The ability to do all-weather or night operations or operations in the presence of battlefield obscurants has not been adequately demonstrated. In principle LADAR-based (laser detection and ranging) perception should be relatively indifferent to illumination and should operate essentially the same in daylight or at night. FLIR (forward-looking infrared) also provides good nighttime performance. LADAR does not function well in the presence of obscurants. Radar or FLIR has potential depending on the specifics of the obscurant. There has not been UGV system-level testing in bad weather or with obscurants, although experiments have been carried out with individual sensors. Much more research and system-level testing under realistic field conditions are required to characterize performance.

The heavy, almost exclusive dependence of Demo III on LADAR may be in conflict with tactical needs. Strategies to automatically manage the use of active sensors must be developed. Depending on the tactical situation, it may be appropriate to use them extensively, only intermittently, or not at all.

RGB (red, green, blue, including near IR) video provides a good daytime baseline capability for macro terrain classification: green vegetation, dry vegetation, soil/rocks, and sky. Material properties can now be used with geometry to more accurately classify features as obstacles. This capability is not yet fully exploited. More detailed levels of classification during the day require multiband cameras (or a standard camera with filters), use of texture and other local features, and more sophisticated classifiers. Detailed characterization of experimental sites (ground truth) is required for progress. More research is required on FLIR and other means for detailed classification at night. Simple counts of LADAR range hits give a measure of vegetation density, once vegetation has been classified, and provide an indication of whether the vehicle can push through. Reliable detection of water remains a problem. Different approaches have been tried with varying degrees of success. Fusion may provide more reliable and consistent results.
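One simple way to turn counts of LADAR range hits into a vegetation density measure is sketched below. It is an assumption-laden illustration, not the method used in any program described here: the pass-through ratio, the function names, and the push-through threshold are all hypothetical.

```python
def vegetation_density(hits: int, pass_throughs: int) -> float:
    """Fraction of LADAR pulses entering a map cell that returned from
    inside it. Pulses that continued through the cell count as
    pass-throughs. Only meaningful for cells already classified as
    vegetation."""
    total = hits + pass_throughs
    if total == 0:
        raise ValueError("no LADAR pulses entered this cell")
    return hits / total


def can_push_through(density: float, max_density: float = 0.3) -> bool:
    """Hypothetical rule: treat sparse vegetation as drivable.
    The 0.3 threshold is an illustrative assumption that would have to
    be calibrated per vehicle and vegetation type."""
    return density <= max_density


if __name__ == "__main__":
    sparse = vegetation_density(hits=3, pass_throughs=17)
    dense = vegetation_density(hits=18, pass_throughs=2)
    print(can_push_through(sparse), can_push_through(dense))
```

The ratio depends on range and beam geometry as well as on the vegetation, which is one reason ground truth for experimental sites matters when setting any such threshold.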

Positive obstacles that subtend 10 or more pixels, that are not masked by vegetation or obscured for other reasons, and are on relatively level ground, can be reliably detected by stereo at speeds no greater than about 20 km/h, depending on the environment. LADAR probably requires 5 pixels and can reliably detect obstacles at somewhat higher speeds (e.g., 30 km/h). LADAR, stereo-color, and stereo-FLIR all work well for obstacle detection. Day and night performance should be essentially equivalent, but more testing is required. Again, less is known about performance under bad weather or obscurants.

Little work has been done to explicitly measure the size of obstacles. This bears on the selection of a strategy by the Planner. No proven approach has been demonstrated for the detection of occluded obstacles. LADAR works for short ranges in low-density grass. There have been some promising experiments with fast algorithms for vegetation removal, which could extend LADAR detection range. Some experiments have been done with FOLPEN (foliage penetration) radar but the results are inconclusive. Radar works well on some classes of thin obstacles (e.g., wire fences). LADAR can also detect wire fences. Stereo and LADAR can detect other classes of thin obstacles (e.g., thin poles or trees). Radar may not detect nonmetallic objects, depending on moisture content. Much more research is required to characterize performance.

Detection of negative obstacles continues to be limited by geometry. While performance has improved because of gains in sensor technology (i.e., 10 pixels can be placed on the far edge at greater distances), sensor height establishes an upper bound on performance, and negative obstacles (depressions less than a meter wide) cannot be seen beyond about 20 meters. With the desire to reduce vehicle height to improve survivability the problem will become more difficult.
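The geometric limit can be sketched with flat-ground trigonometry. This is an illustrative model under stated assumptions: the ground is level, the ditch is seen as the angle between the rays to its near and far edges, and the sensor height, ditch width, and per-pixel angle (IFOV) used below are hypothetical values, not measurements from any program.

```python
import math


def ditch_angle_rad(sensor_height_m: float, range_m: float,
                    ditch_width_m: float) -> float:
    """Angle between the ray to the near edge and the ray to the far
    edge of a ditch on flat ground, seen from a sensor at a given height."""
    near = math.atan2(sensor_height_m, range_m)
    far = math.atan2(sensor_height_m, range_m + ditch_width_m)
    return near - far


def pixels_on_ditch(sensor_height_m: float, range_m: float,
                    ditch_width_m: float, ifov_rad: float) -> float:
    """Number of pixels the ditch spans vertically in the image."""
    return ditch_angle_rad(sensor_height_m, range_m, ditch_width_m) / ifov_rad


def max_detection_range_m(sensor_height_m: float, ditch_width_m: float,
                          ifov_rad: float, min_pixels: float = 10.0,
                          step_m: float = 0.1, limit_m: float = 200.0) -> float:
    """Walk outward until the ditch spans fewer than min_pixels.
    The subtended angle shrinks monotonically with range, so the first
    failure marks the limit. Returns 0.0 if never detectable."""
    best = 0.0
    range_m = step_m
    while range_m <= limit_m and pixels_on_ditch(
            sensor_height_m, range_m, ditch_width_m, ifov_rad) >= min_pixels:
        best = range_m
        range_m += step_m
    return best


if __name__ == "__main__":
    # Assumed values: 1 m wide ditch, 0.5 mrad IFOV, two sensor heights.
    for height in (2.0, 1.0):
        print(height, round(max_detection_range_m(height, 1.0, 0.0005), 1))
```

Because the subtended angle falls off roughly with the square of range, halving the sensor height shortens the detection range noticeably, which is the trade-off against lower vehicle profiles noted above.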

Little work has been done on detecting tactical features at ranges of interest. Tree lines and overhangs have been reliably detected but only at ranges less than 100 meters. Essentially no capability exists for feature detection or situation assessment for ranges from about 100 meters out to 1,000 meters.

Cross-country capability is very immature and limited. Demonstrations have been carried out in known, relatively benign environments; have seemingly been designed to highlight perception and other system strengths and potential military benefits; and have consequently done much less to advance the state of the art. Such demonstrations may potentially mislead observers as to the maturity of the state of the art.

Improvements in individual sensor capability, sensor data fusion, and in active vision are required to achieve autonomous A-to-B mobility. Improvements in LADAR range, frame rate, and instantaneous field of view (IFOV) are necessary and improvements in video resolution and dynamic range are desirable. Multi- or hyperspectral sensors could substantially improve the ability to do rapid terrain classification in daylight. Multiband thermal FLIR could potentially allow terrain classification at night. However, the conditions under which the UGVs must operate are so diverse that no single sensor modality will be adequate. Different operating conditions (missions, terrains, weather, day/night, obscurants) will pose different problems for each sensor modality, and complementary sensor systems with different vulnerabilities will be needed to provide system robustness through data fusion.

Much work will be required to translate existing research on sensor fusion into a capability for UGVs. Active vision must also be emphasized to address the trade-off between IFOV and required field of regard. Again, research exists but useful applications lag. Use of active vision could provide earlier obstacle detection and reduce the likelihood of the vehicle becoming trapped in a cul-de-sac. The development of appropriate algorithms for data fusion and active vision and their integration into the UGV perception system should be a high priority.
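As one hedged example of what a data fusion algorithm might look like, the sketch below combines per-sensor obstacle probabilities for a single map cell by adding log-odds. It assumes the sensors’ errors are independent given the true state of the cell, which complementary modalities only approximate; the function names and probabilities are hypothetical.

```python
import math


def log_odds(p: float) -> float:
    """Convert a probability strictly between 0 and 1 to log-odds."""
    return math.log(p / (1.0 - p))


def probability(l: float) -> float:
    """Convert log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-l))


def fuse_obstacle_probabilities(sensor_probs: list[float],
                                prior: float = 0.5) -> float:
    """Fuse independent per-sensor estimates that a cell holds an obstacle.
    Each sensor contributes its evidence relative to the shared prior."""
    prior_l = log_odds(prior)
    total = prior_l + sum(log_odds(p) - prior_l for p in sensor_probs)
    return probability(total)


if __name__ == "__main__":
    # Assumed readings, e.g. from LADAR and stereo video on the same cell.
    print(fuse_obstacle_probabilities([0.8, 0.8]))  # agreement strengthens belief
    print(fuse_obstacle_probabilities([0.8, 0.2]))  # conflict cancels out
```

The design choice worth noting is that a sensor degraded by the current conditions can report a probability near the prior and thereby contribute almost nothing, which is one way complementary modalities with different vulnerabilities can add robustness.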

Technology Readiness

TABLE 4-3 Technology Readiness Criteria Used for Perception Technologies

Except for the teleoperated Searcher UGV, the example systems defined in Chapter 2 presuppose a number of firm requirements for perception. The most fundamental are those to move autonomously from A to B either on roads or cross-country. Three maximum speeds were specified: 40 km/h, 100 km/h, and 120 km/h. Movement was to take place under day, night, or limited visibility conditions. Table 4-3 refines the TRL criteria used to estimate technology readiness for perception technologies. Tables 4-4 through 4-8 then provide TRL estimates as associated with particular sensor technologies for mobility, detection, and situation awareness. “Speed” in the tables corresponds with the example UGV systems as follows: 40 km/h (Donkey), 100 km/h (Wingman), and 120 km/h (Hunter-Killer). (No perception capabilities are required by the Searcher example.) The estimates are highly aggregated judgments of performance across a variety of situations:

- On-road: Includes performance on structured and unstructured roads, from those that are designed to standards and well marked to barely perceptible dirt tracks. Structured roads have known, constant geometries (e.g., lane width, radius of curvature) and clear lane and boundary markings. Unstructured roads may be of variable geometry, have abrupt changes in curvature, and may be difficult to distinguish from background (may be paved or unpaved). Environments range from open road to urban stop-and-go. Performance includes lane-following and speed adjustment to avoid vehicles in lane (moving obstacles). In an urban environment performance may also require intersection detection and navigation and traffic signal and signage recognition and understanding. Obstacle avoidance requires detection of stopped vehicles, pedestrians, and static objects. In a combat environment obstacles may include bomb craters, masonry piles, or other debris. Obstacle detection on unstructured roads, in particular, may be more difficult because curves or dips may limit opportunity to look far ahead.

TABLE 4-4 TRL Estimates for Example UGV Applications: On-Road/Structured Roads

| Speed (km/h) | Day | Night and Limited Visibility<sup>a</sup> |
|---|---|---|
| Lane-Following and Speed Adjustment (Collision Avoidance) | | |
| 40 | TRL 4/<sup>b</sup>TRL 4 SV or MV+R | TRL 3/<sup>c</sup>TRL 3 SFLIR or MFLIR+R |
| 100 | TRL 4/TRL 3 SV or MV+R | TRL 3/TRL 2 SFLIR or MFLIR+R |
| 120<sup>d</sup> | TRL 3/TRL 2 SV or MV+R | TRL 3/TRL 2 SFLIR or MFLIR+R |
| Obstacle Avoidance | | |
| 40 | TRL 3/<sup>e</sup>TRL 3 SV or LADAR | TRL 3/<sup>f</sup>TRL 3 SFLIR, LADAR, radar |
| 100<sup>g</sup> | TRL 3/TRL 2 SV or LADAR | TRL 3/TRL 2 SFLIR, LADAR, radar |
| 120<sup>h</sup> | TRL 2/TRL 1 | TRL 2/TRL 1 |

<sup>a</sup>Includes rain, snow, fog, and manmade obscurants.

<sup>b</sup>Demonstrated lane-following and speed adjustment.

<sup>c</sup>Demonstrated lane-following only, but speed adjustment with radar demonstrated in daylight should work equally well.

<sup>d</sup>Architecture must be optimized for real-time performance. The assumption for urban environments is that the vehicle will maneuver similarly to rescue vehicles or police in pursuit (i.e., as fast as circumstances permit but no faster than 120 km/h).

<sup>e</sup>Obstacle avoidance integrated with road-following.

<sup>f</sup>Will require data fusion (e.g., multiple IR bands, FLIR with radar).

<sup>g</sup>At about this speed or greater, active vision required.

<sup>h</sup>No obstacle detection capability demonstrated at 100 or 120 km/h.

Note: TRL = technology readiness level; SV = stereo video; MV+R = monocular video plus radar; SFLIR = stereo forward looking infrared; MFLIR+R = monocular forward looking infrared plus radar; LADAR = laser detection and ranging.

-

Off-road: Terrain types are highly variable (e.g., desert, mountains, swampy terrain, forests, tall-grass-covered plains) and have positive and negative obstacles (e.g., ditches, gullies), some of which will be visible and others hidden in cover. Performance requires segmenting the terrain into traversable and nontraversable regions using geometry (i.e., size of features) and assessment of material properties (rock, soil, vegetation), including assessment of terrain roughness, fordable water, and trafficability of steep slopes or muddy or swampy regions.

-

Detection of tactical features: Requires identifying natural and manmade features that could provide cover or concealment (e.g., tree lines or ridge crests, large rocks, buildings) or support mission packages (e.g., select a site for an observation post). Region: 100 meters to 1,000 meters.

TABLE 4-5 TRL Estimates for Example UGV Applications: On-Road/Unstructured Roads

| Speed (km/h) | Day | Night and Limited Visibility |
|---|---|---|
| Lane-Following and Speed Adjustment | | |
| 40 | TRL 3 [a]; SV or MV+R | TRL 3; SFLIR or MFLIR+R |
| 100 [b] | TRL 3 | TRL 3 |
| 120 | TRL 3 | TRL 3 |
| Obstacle Avoidance | | |
| 40 | TRL 3 [c] | TRL 3 |
| 100 | TRL 3 | TRL 2 |
| 120 | TRL 2 | TRL 2 |

[a] Need color or texture segmentation to cover all likely situations. ALVIN, RALPH, and Robin demonstrated road-following during day. Robin at night with FLIR. Not integrated with speed adjustment (e.g., radar) for unstructured roads nor with obstacle avoidance.

[b] Require active vision for lane-following at higher speeds due to possibility of abrupt curves.

[c] Obstacle avoidance demonstrated at 40 km/h but not integrated with road-following or speed adjustment on unstructured roads.

Note: TRL = technology readiness level; SV = stereo video; MV+R = monocular video plus radar; SFLIR = stereo forward looking infrared; MFLIR+R = monocular forward looking infrared plus radar.

TABLE 4-6 TRL Estimates for Example UGV Applications: Off-Road/Cross-Country Mobility

| Speed (km/h) | Day | Night and Low Visibility |
|---|---|---|
| Terrain Classification | | |
| 40 | TRL 4; color video, multiband | TRL 2; multiband FLIR |
| 100 [a] | TRL 2 | TRL 2 |
| 120 | TRL 1 | TRL 1 |
| Obstacle Avoidance [b] | | |
| 40 | TRL 5; LADAR, SV, FOLPEN | TRL 3; LADAR, SFLIR, FOLPEN |
| 100 [c] | TRL 3 | TRL 3 |
| 120 | TRL 1 | TRL 1 |

[a] Requires macro-texture analysis, terrain reasoning to predict terrain roughness.

[b] Uses geometry alone or applies geometric criteria to objects that pass through material classification sieve.

[c] Requires active vision.

Note: TRL = technology readiness level; LADAR = laser detection and ranging; SV = stereo video; FOLPEN = foliage penetration; SFLIR = stereo forward looking infrared.

TABLE 4-7 TRL Estimates for Example UGV Applications: Detection of Tactical Features

| Example [a] | Day | Night |
|---|---|---|
| Donkey | TRL 4 [b] | TRL 3 |
| Wingman | TRL 3 | TRL 3 |
| Hunter-Killer | TRL 2 | TRL 2 |

[a] Donkey: cover and concealment (natural and manmade); Wingman: cover and concealment; Hunter-Killer: cover and concealment, select observation post (OP), select ambush site and kill zone.

[b] Very limited, tree-lines and overhangs.

Note: TRL = technology readiness level.

TABLE 4-8 TRL Estimates for Example UGV Applications: Situation Assessment

| Example [a] | Day | Night |
|---|---|---|
| Donkey | TRL 2 | TRL 2 |
| Wingman | TRL 2 | TRL 2 |
| Hunter-Killer | TRL 1 | TRL 1 |

[a] Donkey: detect, track, and avoid other vehicles or people; Wingman: track manned “leader” vehicle, detect, track, and avoid other vehicles or people, distinguish among friendly and enemy combat vehicles, and detect unanticipated movement or activities; Hunter-Killer: detect, track, and avoid other vehicles or people, discriminate among friendly and enemy vehicles, detect unanticipated movement or activities, and detect potential human attackers in close proximity.

Note: The assumption is that the focus is on a region extending from 100 meters to 1,000 meters. RSTA is assumed to start at 1,000 meters. TRL = technology readiness level.

-

Situation assessment: Requires identifying and locating friendly and enemy vehicles and dismounted personnel in a region extending from 100 meters to 1,000 meters.

In addition to the task-specific variables above, perception performance will be affected by weather, levels of illumination, and natural and manmade obscurants that affect visibility.

Salient Uncertainties

The success in detecting and tracking vehicles for traffic avoidance argues for the eventual success of on-road perception-based leader-follower operation.3 Limited success in detecting pedestrians suggests that off-road leader-follower, where the vehicle follows dismounted infantry, is also a long-term potential.

To be useful for any mission UGVs must be able to go from A to B with minimal intervention by a human operator; however, there are no quantitative standards, metrics, or procedures for evaluating UGV performance. There is uncertainty as to how much progress has been made and where deficiencies exist. For example: Is DEMO III performance improved over DEMO II? If so, by how much? For what capabilities and under what conditions? Because there is little statistically valid test data, particularly in environments designed to stress and break the system (e.g., unknown terrain, urban environments, night, and bad weather), there is considerable uncertainty as to how systems might perform in these environments. Similarly, there is no systematic process for benchmarking algorithms in a systems context and corresponding uncertainty as to where improvements are required.

The foregoing provides the basis for the answer to Task Statement Question 4.a in Box 4-1.

BOX 4-1 Task Statement Question 4.a Perception Component of “Intelligent” Perception and Control

Question: What are the salient uncertainties in the “intelligent” perception and control components of the UGV technology program, and are the uncertainties technical, schedule related, or bound by resource limitations as a result of the technical nature of the task, to the extent it is possible to enunciate them?

Answer: The greatest uncertainties are in describing UGV performance and in determining the effect of perception (and other subsystems) on UGV system performance. No metrics have been developed and no statistically significant data have been collected in unknown environments under stressing conditions. There are no procedures for benchmarking algorithms and hence considerable uncertainty as to whether the algorithms are best-of-breed. In the absence of metrics and data there is little basis for system optimization and a corresponding uncertainty about performance losses due to system integration issues. There is no systematic way to determine where improvements are required and in what order. The uncertainties exist because of a lack of resources in the Army’s program.

Recommended Research

As a high priority, the Army should develop predictive performance models and other tools for UGV autonomous behavior architecture system engineering and performance optimization. This work includes:

- Statistically valid data collection in unknown environments under stressing conditions leading to the development of predictive performance models, and

- Development of performance metrics and algorithm benchmarking.

An equally high priority should go to development and integration of real-time algorithms for data fusion and active vision. Other important areas include development and integration of real-time algorithms for terrain classification using texture analysis and multispectral data and development and integration of algorithms for sensor management, particularly active sensors.

NAVIGATION

Navigation for UGVs is a large problem domain that includes such elements as current location (both absolute and relative); directions to desired location(s) such as final destination or intermediate waypoints; aiding in situational awareness (SA), including providing the location of friendly forces and targets over a large region; the mapping of immediate surroundings; how to navigate about the immediate surroundings and to the next waypoint or final destination; and the detection of nearby hazards to mobility. Navigation overlaps and has interrelationships with several other key areas of this study, including perception, path planning, behaviors, human–machine interface, and communications. One of the major goals of the navigation module is to aid in providing enough information to allow near-autonomous mobility for the UGV.

State of the Art

Currently GPS/INS is often used for airborne and ground vehicles to determine current location and to provide directions to desired locations. GPS/INS is a proven technology that is currently used in many applications. For GPS/INS, the inertial navigation system (INS) provides accurate relative navigation with the normal drift of the INS corrected by the absolute position obtained by GPS. With selective availability turned off GPS provides accuracy of 10 to 20 meters. This accuracy is dependent upon the geometry of the satellites used to determine the position. Horizontal position accuracy is usually better than vertical position accuracy. Horizontal errors of only 3 to 5 meters are common. Accuracy of 1 meter or less can be obtained using differential GPS (DGPS).

One relative navigation technique for a communication network is to determine the relative position of each member of the network by ranging on the network communication signals. By ranging on all or most of the communications signals of a network the topology of the members of the network can be determined. To pin down the absolute location of this topology requires that the absolute location of some of the members of the network be determined by some other method.

To provide situation awareness and information about geographical surroundings, the UGV’s current position can be tied to geographical information system (GIS) databases, such as detailed terrain maps and current situation maps. These databases can be stored on board the UGV or very recent databases can be downloaded by means of communication links. Current position information integrated with GIS databases has been used in many commercial products. Other relevant SA information including non-line-of-sight (NLOS) and beyond-line-of-sight (BLOS) targets can be provided to the UGV from other team members by the communication network. Onboard sensors (i.e., perception) can also be used to detect and locate potential line-of-sight (LOS) targets and nearby friendly units.
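The way an absolute GPS position bounds INS drift can be illustrated with a one-dimensional sketch. The scalar Kalman-style filter below is an illustration only, not the design of any fielded GPS/INS unit; the function name `fuse_gps_ins`, the noise variances, and the simulated velocity bias are assumptions chosen for the example.

```python
import random

def fuse_gps_ins(ins_velocities, gps_fixes, dt=1.0,
                 process_var=0.5, gps_var=25.0):
    """Fuse dead-reckoned INS velocity with intermittent GPS fixes (1-D).

    ins_velocities: measured velocity per step (m/s), possibly biased.
    gps_fixes: GPS position per step (m), or None when GPS is unavailable.
    Returns the list of position estimates, one per step.
    """
    x, p = 0.0, 1.0              # position estimate and its variance
    estimates = []
    for v, z in zip(ins_velocities, gps_fixes):
        # Predict: integrate INS velocity; uncertainty grows (drift).
        x += v * dt
        p += process_var
        # Update: an absolute GPS fix pulls the estimate back.
        if z is not None:
            k = p / (p + gps_var)    # Kalman gain
            x += k * (z - x)
            p *= (1.0 - k)
        estimates.append(x)
    return estimates

if __name__ == "__main__":
    random.seed(0)
    steps, true_v, bias = 200, 2.0, 0.2      # hypothetical values
    truth = [true_v * (i + 1) for i in range(steps)]
    ins = [true_v + bias] * steps            # INS with a constant velocity bias
    gps = [t + random.gauss(0.0, 5.0) for t in truth]
    ins_only = fuse_gps_ins(ins, [None] * steps)
    fused = fuse_gps_ins(ins, gps)
    print("INS-only final error (m):", round(abs(ins_only[-1] - truth[-1]), 1))
    print("GPS/INS final error (m):", round(abs(fused[-1] - truth[-1]), 1))
```

Without fixes the biased velocity integrates into an error that grows without bound; with fixes the error stays bounded at a level set by the assumed noise variances.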

To illustrate the current state and future needs of UGV navigation, the navigation aspects of each of the four example military applications from Chapter 2 are described separately in the following paragraphs. The first application, the Searcher, is a teleoperated UGV used to search urban environments (e.g., buildings) or tunnels. Because this UGV is teleoperated, the range from the operator to the UGV is likely to be less than 1 km, and the Searcher may even be within sight of the operator. Therefore, all navigation decisions can be made by the operator, and there is little need for any sophisticated navigation sensors onboard the Searcher. Teleoperation is currently being used successfully in several military robotic programs, including Matilda and the Standardized Robotic System (SRS).

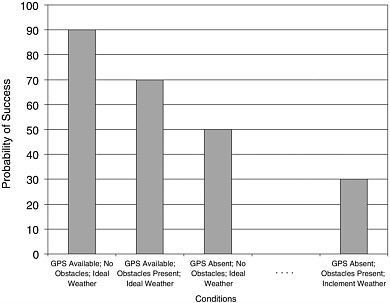

Another UGV example application is the Donkey, an unmanned small unit logistics server. The Donkey is envisioned as being in the semiautonomous preceder/follower UGV class. The Donkey will follow electronic paths (electronic “bread crumbs”) through urban or rural terrain from a start point to a release point. Navigation along this electronic path (e.g., GPS waypoints, radio frequency tags, or defined points on an electronic map) is critical for successful performance of the Donkey. If the path were defined as GPS waypoints, latitude/longitude points, or other absolute position points then the Donkey would probably use GPS/INS (or another beacon navigation system integrated with a relative navigation system) as its main navigation system.
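Following an electronic path of absolute position points can be sketched as a simple waypoint sequencer. The example below assumes a local planar frame in meters and a hypothetical acceptance radius; the names `advance_waypoint` and `heading_to` are illustrative and do not come from any Army program.

```python
import math

def advance_waypoint(position, waypoints, index, accept_radius_m=5.0):
    """Return the index of the next waypoint not yet reached.

    A waypoint counts as reached when the vehicle is within
    accept_radius_m of it; several may be passed in one call.
    """
    while index < len(waypoints):
        wx, wy = waypoints[index]
        if math.hypot(wx - position[0], wy - position[1]) > accept_radius_m:
            break
        index += 1
    return index

def heading_to(position, target):
    """Heading to target in degrees, counterclockwise from the +x (east) axis."""
    return math.degrees(math.atan2(target[1] - position[1],
                                   target[0] - position[0]))

if __name__ == "__main__":
    crumbs = [(0.0, 0.0), (50.0, 0.0), (50.0, 50.0)]   # hypothetical path
    here = (2.0, 1.0)
    idx = advance_waypoint(here, crumbs, 0)
    print("steer toward waypoint", idx, "heading", heading_to(here, crumbs[idx]))
```

The acceptance radius would in practice have to be at least as large as the expected navigation error; otherwise the vehicle could circle a waypoint it can never "reach."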

To move along the electronic path various techniques utilizing onboard sensors combined with navigation equipment will allow the Donkey to detect immediate hazards and to navigate around these hazards while still progressing along the path (see sections titled “Perception” and “Path Planning”). Navigation techniques for the Donkey must also consider threat capabilities. Since all navigation techniques have some vulnerabilities, multiple navigation techniques should be used in conjunction to reduce these vulnerabilities. For the Donkey, environmental conditions along the path may have changed since the path was defined. The Donkey may have to operate in areas of GPS denial (or denial of other navigation beacons), either intentional (jamming) or environmental/unintentional (urban canyon, indoors, heavy foliage). Also, communication networks may be jammed. Thus navigation may have to be performed without any outside aiding (at least for some period of time).

There is much current work being done to alleviate some of the vulnerabilities of GPS to jamming (including development of both new signals and frequencies); however, it must be assumed that GPS will always have some vulnerabilities. For some current applications the combination of GPS and INS is used to resolve this problem. If GPS were denied, navigation could be performed by “riding” the INS until GPS is restored. If the Donkey could recognize its environment (perception), it may be able to determine its position based upon comparison of external sensor data with onboard maps, utilizing its last known position. The Donkey must also be able to detect when its navigation solution is in error and exhibit the appropriate behavior when this occurs. For GPS, receiver autonomous integrity monitoring (RAIM) is one technique used to verify the validity of individual satellite signals. RAIM has requirements dictating how quickly errors must be detected and what probability of missed errors or false positives is allowable.
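The two behaviors described above, riding the INS through a GPS outage and rejecting a navigation input that appears to be in error, can be sketched together. The check below is not RAIM, which operates on redundant satellite measurements inside the receiver; it is a simpler consistency gate between a GPS fix and the INS-predicted position. The names `fix_is_consistent` and `navigate`, and the 3-sigma gate, are assumptions made for illustration.

```python
import math

def fix_is_consistent(predicted, fix, sigma_m, gate=3.0):
    """True if the GPS fix lies within gate*sigma_m of the INS prediction."""
    return math.hypot(fix[0] - predicted[0],
                      fix[1] - predicted[1]) <= gate * sigma_m

def navigate(start, ins_deltas, gps_fixes, sigma_m=10.0):
    """Step through INS displacement increments and optional GPS fixes.

    Returns a list of (position, status) where status is
    "gps" (fix accepted), "coasting" (no fix, INS only), or
    "rejected" (fix failed the consistency gate, INS only).
    """
    pos = start
    log = []
    for (dx, dy), fix in zip(ins_deltas, gps_fixes):
        pos = (pos[0] + dx, pos[1] + dy)      # ride the INS
        if fix is None:
            status = "coasting"
        elif fix_is_consistent(pos, fix, sigma_m):
            pos, status = fix, "gps"
        else:
            status = "rejected"
        log.append((pos, status))
    return log

if __name__ == "__main__":
    deltas = [(1.0, 0.0)] * 4
    fixes = [(1.0, 0.0), None, (500.0, 0.0), (4.0, 0.5)]   # third fix is spoofed
    for pos, status in navigate((0.0, 0.0), deltas, fixes):
        print(pos, status)
```

A fixed gate is a simplification: the longer the vehicle coasts, the larger the INS drift, so a practical gate would widen with time since the last accepted fix.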

The third UGV example application is the Wingman, a platform-centric autonomous ground vehicle. The navigation requirements of the Wingman include the ability to navigate to designated areas without any path information supplied (drive from point A to point B) and to operate at predefined standoff positions relative to the section leader. Thus, the Wingman will have to determine its absolute position and its position relative to the section leader, and to navigate with little supervision. In some instances human interaction from the section leader may aid the Wingman in determining its navigation position. Again navigation is critical for the successful performance of this UGV. The Wingman will probably use GPS/INS (or another beacon navigation system supplemented by INS) as its main navigation system. If high-accuracy positions were needed (errors of less than 10 meters), DGPS might also be required. The Wingman’s relative position compared to the section leader can be determined by communication between the section leader and the Wingman in which each tells the other its absolute position. It may be possible for the Wingman to range off of communications signals from the section leader to aid in determining its relative position compared to the section leader. Theoretically, near-autonomous mobility (point A to point B) can be obtained by various techniques utilizing onboard sensors combined with navigation equipment to allow the UGV to detect immediate hazards and to navigate around these hazards while still progressing towards the desired location (see sections on “Perception” and “Path Planning”). Tests to date have shown that all techniques have drawbacks and near-autonomous mobility has yet to be achieved. One technique includes vision detectors utilizing pattern recognition and neural networks to identify hazards (UGV perception of external environment). Utilization of detailed terrain maps combined with GPS/INS has also been attempted, but GPS/INS position errors along with the need to update terrain maps as rapidly fluctuating conditions warrant have been stumbling points. The vulnerabilities mentioned in the above section on the Donkey also apply to the Wingman. Because the Wingman may also have automatic, lethal, direct fire capabilities, it is even more imperative for this UGV to determine its absolute position, the relative positions of all friendly/innocent assets, and detect when its navigation position is in error.
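When the Wingman and the section leader exchange absolute positions, the relative position reduces to a range and bearing between two latitude/longitude pairs. The sketch below uses the standard haversine and initial-bearing formulas on a spherical Earth; the function name and the mean Earth radius constant are assumptions of the example, and the result inherits the position error of both GPS solutions.

```python
import math

EARTH_RADIUS_M = 6371000.0   # mean radius; spherical-Earth assumption

def range_and_bearing(lat1, lon1, lat2, lon2):
    """Great-circle range (m) and initial bearing (deg clockwise from north)
    from point 1 (e.g., Wingman) to point 2 (e.g., section leader).
    Inputs are in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    # Haversine formula for the central angle.
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    rng = 2 * EARTH_RADIUS_M * math.atan2(math.sqrt(a), math.sqrt(1 - a))
    # Initial bearing along the great circle.
    y = math.sin(dlam) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlam))
    brg = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return rng, brg

if __name__ == "__main__":
    # Hypothetical positions roughly 111 m apart, leader due north.
    print(range_and_bearing(35.0, -117.0, 35.001, -117.0))
```

With two independent fixes each in error by several meters, the relative error can be comparable to a short standoff distance, which is one reason the text suggests ranging on communications signals as a supplement.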

The final UGV example application is the Hunter-Killer unit, a group of network-centric autonomous vehicles. These UGVs will be tied together through a local wireless network. Autonomy from human inputs will be greater for the Hunter-Killer than for the Wingman. The navigational criticality and capabilities for the Hunter-Killer will be very similar to those of the Wingman discussed above. The Hunter-Killer will also probably use GPS/INS (or another beacon navigation system combined with a relative navigation system) as its primary navigation system. Because of the communications network inherent in the Hunter-Killer, relative navigation/geolocation of individual units can be performed by ranging on these communications signals. This will help to overcome the vulnerability of GPS/INS, on which the UGV depends for navigation/geolocation, because in areas of GPS denial (e.g., urban environments), the ultra wide band (UWB) network signal may remain viable. Individual units may be able to send paths to other units to aid the other units in navigating from point to point. The vulnerabilities mentioned in the above section on the Donkey also apply to the Hunter-Killer. Like the Wingman, the Hunter-Killer will also have automatic, lethal, direct fire capabilities. Therefore it is imperative for this UGV to determine its absolute position, the relative positions of all friendly/innocent assets, and detect when its navigation position is in error.
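Geolocation by ranging on network signals can be illustrated in two dimensions. The sketch assumes three network members whose absolute positions are already known by some other method and noise-free range measurements to each; the function name `locate_by_ranging` is hypothetical, and a practical system would use more members and a least-squares solution to absorb ranging error.

```python
import math

def locate_by_ranging(anchors, ranges):
    """2-D position from ranges to three members with known positions.

    anchors: [(x0, y0), (x1, y1), (x2, y2)] in meters.
    ranges:  [r0, r1, r2] measured distances to each anchor (m).
    """
    (x0, y0), (x1, y1), (x2, y2) = anchors
    r0, r1, r2 = ranges
    # Subtracting the first range equation from the other two cancels
    # the quadratic terms, leaving a 2x2 linear system in (x, y).
    a11, a12 = 2 * (x1 - x0), 2 * (y1 - y0)
    a21, a22 = 2 * (x2 - x0), 2 * (y2 - y0)
    b1 = r0**2 - r1**2 + x1**2 + y1**2 - x0**2 - y0**2
    b2 = r0**2 - r2**2 + x2**2 + y2**2 - x0**2 - y0**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-9:
        raise ValueError("anchors are collinear; position is ambiguous")
    return ((b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det)

if __name__ == "__main__":
    anchors = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0)]   # hypothetical units
    truth = (30.0, 40.0)
    ranges = [math.hypot(truth[0] - ax, truth[1] - ay) for ax, ay in anchors]
    print(locate_by_ranging(anchors, ranges))
```

The collinearity check reflects the same geometric sensitivity noted earlier for GPS satellites: poor geometry among the ranging sources degrades or destroys the position solution.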

For all example applications UGV navigation will be highly dependent upon the level of autonomy required of the UGV (see section on “Perception”). The final navigation solution (for all but the teleoperated Searcher) will probably involve an integration of onboard sensor information (sensor fusion), GPS/INS (or another beacon absolute navigation system integrated with a relative navigation system), navigation integrated with communications signals, the sharing of navigation information by all relevant assets (satellites, pseudolites, UAVs, other UGVs), and some operator oversight. Navigation requirements will thus impact (and be impacted by) perception, path planning, behavior, and communication requirements.

Technology Readiness Estimates

GPS/INS is a proven navigation technology (TRL 9). Other mature forms of navigation include dead reckoning (e.g., INS) and other beacon techniques (e.g., LORAN [long-range navigation]). Relative navigation on communications signals is also mature but requirements on bandwidth and utilization of timing sources complicate the problem. Teleoperation has been shown to be viable (TRL 6) in various programs including Matilda and the SRS. The ability of various communications signals (e.g., UWB) to be viable for relative navigation when GPS is denied needs to be investigated; some work is being done in this area, and the method is probably at a TRL 4 or 5.

Perception and path-planning technologies are closely related to navigation technologies, and the fusion of navigation, perception, path planning, and communications is the key to autonomous A-to-B mobility. This fusion is also the most technically challenging area and the least technically mature. Assigning a TRL value to this fusion of navigation, perception, path planning, and communications is difficult, but a reasonable guess is TRL 1 or 2.

Salient Uncertainties

The major technology gap for beacon absolute navigation (e.g., GPS/INS) is the threat of denial of the signal. For GPS much current work is ongoing to improve GPS anti-jamming characteristics, corrections for multipath problems in urban environments, and other GPS signal-tracking improvements. Combining GPS (or any other beacon navigation) with relative navigation using communications signals will help in areas where GPS is sometimes precluded; this combination of GPS and geolocation on communications signals needs to be developed. Further integration between navigation and communications will help to create more robust positioning solutions. Perception can also be used to aid in navigation; for example, the angles to various landmarks whose positions are known a priori can be used to determine the current position. The integration of navigation and perception is another technology gap that needs to be filled. UGVs must be able to detect when they are lost and then exhibit the appropriate behavior. For GPS, RAIM helps to meet this requirement, but for other navigation systems this ability to detect navigation errors will have to be developed. To reach near or full autonomy, UGV navigation will require the integration of perception, path planning, communications, and various navigation techniques. This integration of multiple systems is the largest technology gap in autonomous navigation.
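Position fixing from landmark angles can be sketched for the simplest case of two landmarks. The example assumes the vehicle knows its absolute heading (e.g., from the INS), so that the measured angles are absolute bearings; the function name `fix_from_bearings` and the planar frame are assumptions of the illustration.

```python
import math

def fix_from_bearings(lm1, bearing1, lm2, bearing2):
    """Locate the vehicle from absolute bearings to two known landmarks.

    lm1, lm2: (x, y) landmark positions known a priori (m).
    bearing1, bearing2: direction from the vehicle to each landmark,
    in radians counterclockwise from the +x axis of the absolute frame.
    """
    u1 = (math.cos(bearing1), math.sin(bearing1))
    u2 = (math.cos(bearing2), math.sin(bearing2))
    # The vehicle P satisfies P + t1*u1 = lm1 and P + t2*u2 = lm2, so
    # t1*u1 - t2*u2 = lm1 - lm2, a 2x2 linear system in (t1, t2).
    dx, dy = lm1[0] - lm2[0], lm1[1] - lm2[1]
    det = u1[1] * u2[0] - u1[0] * u2[1]
    if abs(det) < 1e-9:
        raise ValueError("bearings are parallel; position is not observable")
    t1 = (dy * u2[0] - dx * u2[1]) / det
    return (lm1[0] - t1 * u1[0], lm1[1] - t1 * u1[1])

if __name__ == "__main__":
    truth = (3.0, 4.0)                       # hypothetical vehicle position
    lm_a, lm_b = (10.0, 10.0), (-5.0, 8.0)   # hypothetical surveyed landmarks
    b_a = math.atan2(lm_a[1] - truth[1], lm_a[0] - truth[0])
    b_b = math.atan2(lm_b[1] - truth[1], lm_b[0] - truth[0])
    print(fix_from_bearings(lm_a, b_a, lm_b, b_b))
```

The sketch presumes that perception has already recognized each landmark and matched it to its map entry, which is the harder part of the integration gap identified above.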

One of the greatest risks for UGV navigation lies in the interrelationships among navigation, perception, communications, path planning, man–machine interface, behavior, and the level of autonomy of the UGV. Decreased performance in any one of these areas implies greater emphasis on one or all other areas. For example, an inability of perception to delineate obstacles means that navigation may have to rely more upon GPS/INS and maps or upon the man–machine interface (teleoperation or semiautonomy), either of which implies a greater reliance upon communications. The interrelationship between these disparate areas must be recognized and planned from the start of the program.

Because no one navigation solution meets all conditions, several navigation techniques must be included in any UGV design. Probably both an absolute and a relative navigation technique will be necessary. The selection of which navigation techniques to utilize will be requirements driven. The four military applications presented in Chapter 2 are a good start toward defining the problem. Requirements that have to be pinned down include:

- Do we expect to be in an area of GPS denial?

- Do we expect operators to always be able to communicate to UGVs?

- Is the operator–UGV communication real-time?

- Is relative position to other assets as important as absolute position?

- Is relative position “good enough” for most assets?

- How long can the UGV be expected to operate without an updated absolute navigation position?

- What are the mission goals?

- What is the expected behavior of the UGV when it is lost?

- What navigation failure rate or position error is tolerable?

Note that these requirements can be modified as the program progresses but a first cut at these requirements is necessary to bound the navigation problem. If initial requirements are not defined, program risk grows greatly. The possible military applications presented in previous sections are a good first step in defining initial requirements.

Full autonomous navigation for all conditions is probably not feasible, especially in the near future. Therefore, requirements for the man–machine interface for operator aiding need to be included in all future programs. How the UGV recognizes that it is lost and what its behavior should be once it knows it is lost need to be defined. The FCS program should define semiautonomous navigation capabilities at different levels of operator control. As navigation improves, operator control can be lessened, with a viable product produced at each stage of development. Note that the progression of the possible military applications from the Searcher through to the Hunter-Killer is an evolution from no autonomous navigation through semiautonomous navigation all the way to full autonomous navigation.

The foregoing provides the basis for the answer to Task Statement Question 4.a as it pertains to the navigation component of “intelligent” perception and control. See Box 4-2.

BOX 4-2 Task Statement Question 4.a Navigation Component of “Intelligent” Perception and Control

Question: What are the salient uncertainties in the “intelligent” perception and control components of the UGV technology program, and are the uncertainties technical, schedule related, or bound by resource limitations as a result of the technical nature of the task to the extent it is possible to enunciate them?

Answer: Further integration between navigation and communication will help to create more robust positioning solutions. UGVs must be able to detect when they are lost and then react appropriately. The ability to detect navigation errors will have to be developed. To reach near or full autonomy, UGV navigation will require the integration of perception, path planning, communication, and various navigation techniques. This integration of multiple systems is the largest technology gap in autonomous navigation. These uncertainties are bound by resource limitations and result from the technical nature of the task.

Recommended Areas of R&D

Currently there is much ongoing research to improve GPS anti-jamming characteristics, corrections for multipath problems in urban environments, and other GPS signal-tracking improvements. UGV navigation should be able to “ride the coat-tails” of these efforts to obtain the best GPS navigation solutions. Research and development should be done in the following areas to yield the desired autonomous navigation solutions for all possible environmental conditions:

- Relative navigation utilizing communications signals (especially ultra-wide band signals due to their ubiquitous characteristics) integrated with GPS.

- Improved integration of GPS (or any absolute navigation position) with accurate digitized maps to aid in point-to-point mobility and perception.

- Integration of perception with accurate maps to allow the UGV to determine its position by comparing sensor input with map information.

- Integration of an absolute navigation system (e.g., GPS) with sensor information (perception) and map information to determine a more accurate position.

- Utilization or development of improved active beacons that are viable in urban, heavy-foliage, or jammed environments (e.g., pseudolites, beacon signals for indoor navigation).

- Development of error detection techniques for any navigation system chosen for UGV navigation.

- Development of UGV behavior for recovering position when the UGV detects that it is lost.

- Integration of absolute navigation techniques (e.g., GPS, LORAN), relative navigation techniques (INS, dead-reckoning, relative position estimates based upon ranging on communication signals), position estimates based upon perception, information received from other assets (including UAVs, pseudolites), and path-planning information. This is a large research area and probably the most important research area for UGV navigation. The absolute and relative navigation techniques chosen are interrelated with other system requirements, including perception, communications, power, stealth (i.e., is the UGV entirely passive), available computer power, and path planning. Various combinations of these navigation techniques and other position estimation methods should be integrated and evaluated. Note that the interrelationships of navigation, path planning, perception, and communication must be evaluated at a system level.

Impact on Logistics

The use of any beacon navigation system will require the installation of the navigation beacons. Even for GPS it may be necessary to set up pseudolites or utilize airborne pseudolites.

The electronic path will have to be determined and disseminated before a Donkey UGV can be utilized. Maps may have to be generated and disseminated to aid in navigation for the Donkey, Wingman, and Hunter-Killer. These maps will have to be as recent as possible and may have to contain much SA information.

Communications to support navigation inputs will have to be set up for the Hunter-Killer and possibly for the Wingman. These same resources may also be needed to support communications with other assets in the area of operations.

PLANNING

This section defines the scope of the planning technology area. It describes the mid- and far-term state of the art, and identifies the impact, if any, on Army operations or logistics. Planning for Army UGV systems encompasses software for path planning, which interacts with both perception and navigation, and mission planning.

Path Planning

Path planning is the process of generating a motion trajectory from a specified starting position to a goal position while avoiding obstacles in the environment. The input to a path-planning algorithm is a geometric map of the environment (and possibly the material composition of the environment in more advanced path planners), where positive and negative obstacles have been identified, and the starting and goal points are given. The output of the path-planning algorithm is a set of waypoints that specify the trajectory the vehicle must follow. For a completely known environment, path planning need only be performed once before the motion begins. However, the environment is often only partially known, and as obstacles are discovered they must be entered into the map and the path must be replanned.
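The plan, discover, and replan cycle described above can be sketched on a small occupancy grid. The example assumes a 4-connected grid, a sensor that sees only the four adjacent cells, and breadth-first search as the planner; the names `plan` and `drive` are hypothetical, and fielded planners use richer maps and cost functions.

```python
from collections import deque

NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))

def plan(known, start, goal):
    """Breadth-first shortest path on the known map; returns cells or None."""
    rows, cols = len(known), len(known[0])
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        for dr, dc in NEIGHBORS:
            nxt = (cell[0] + dr, cell[1] + dc)
            if (0 <= nxt[0] < rows and 0 <= nxt[1] < cols
                    and not known[nxt[0]][nxt[1]] and nxt not in parent):
                parent[nxt] = cell
                queue.append(nxt)
    return None

def drive(true_grid, start, goal):
    """Follow the plan, entering discovered obstacles into the map and
    replanning whenever the current path is found to be blocked.
    Returns (cells travelled or None if the goal is unreachable, replans)."""
    rows, cols = len(true_grid), len(true_grid[0])
    known = [[0] * cols for _ in range(rows)]   # start with an empty map
    pos, replans, travelled = start, 0, [start]
    path = plan(known, pos, goal)
    while path is not None and pos != goal:
        for dr, dc in NEIGHBORS:                # sense adjacent cells
            r, c = pos[0] + dr, pos[1] + dc
            if 0 <= r < rows and 0 <= c < cols and true_grid[r][c]:
                known[r][c] = 1
        if any(known[r][c] for r, c in path):   # path now blocked: replan
            path = plan(known, pos, goal)
            replans += 1
            continue
        pos = path[path.index(pos) + 1]         # advance one cell
        travelled.append(pos)
    return (travelled if pos == goal else None), replans

if __name__ == "__main__":
    world = [[0, 0, 0],
             [1, 1, 0],
             [0, 0, 0]]
    print(drive(world, (0, 0), (2, 0)))
```

Because the vehicle starts with an empty map it initially plans straight through the wall, and the number of replans grows with how much of the environment was unknown at the start.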

State of the Art

Academic. Research in planning robotic motion dates back to the late 1960s, when computer-controlled robots were first being developed. Most advances were made in the 1980s and 1990s as computers became readily available and inexpensive. An excellent reference that summarizes many of these algorithms is Latombe (1991).

The notion of configuration space is commonly used to represent obstacles in the robot’s environment (Lozano-Perez, 1983). Configuration space for a mobile robotic vehicle is typically a two- or three-dimensional space representing the x and y position and possibly the orientation in the x–y plane. The vehicle and obstacles are simplified in shape to polygons, and the vehicle itself is further simplified to a point by “growing” the obstacles by the vehicle silhouette.
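Growing obstacles so that the vehicle can be treated as a point is easy to illustrate on an occupancy grid. The sketch below assumes a circular vehicle footprint whose radius is expressed in grid cells, a simplification of the polygonal silhouette described above; the function name `grow_obstacles` is hypothetical.

```python
def grow_obstacles(grid, radius_cells):
    """Return a copy of grid with every obstacle cell expanded by a disc
    of radius_cells, so a point robot planned on the result keeps the
    real vehicle clear of the original obstacles."""
    rows, cols = len(grid), len(grid[0])
    grown = [row[:] for row in grid]
    r2 = radius_cells * radius_cells
    for r in range(rows):
        for c in range(cols):
            if not grid[r][c]:
                continue
            for dr in range(-radius_cells, radius_cells + 1):
                for dc in range(-radius_cells, radius_cells + 1):
                    nr, nc = r + dr, c + dc
                    if (dr * dr + dc * dc <= r2
                            and 0 <= nr < rows and 0 <= nc < cols):
                        grown[nr][nc] = 1
    return grown

if __name__ == "__main__":
    grid = [[0] * 5 for _ in range(5)]
    grid[2][2] = 1                      # single obstacle in the center
    for row in grow_obstacles(grid, 1):
        print(row)
```

A disc footprint removes the need for an orientation dimension in configuration space, at the price of being conservative for long, narrow vehicles.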

As described in Latombe (1991), there are three computational approaches to path (or motion) planning. The first is a roadmap approach where regions of free space are linked together by a network of one-dimensional curves. Once a roadmap is constructed, path planning is reduced to searching for roads that connect the initial starting point to an ending point.

The second approach involves decomposing the free space into nonoverlapping regions called cells. This cell decomposition may be exact or approximated by a prespecified geometric shape, typically a square. A connectivity graph represents the adjacency relation among the cells. The graph is searched to determine a sequence of cells that link the starting position to the goal position.

The third approach is a potential field method where the direction and speed of motion are specified by the gradient of a potential field function that is minimized when the vehicle reaches the goal point. Obstacles are avoided by adding in repulsive terms that move the vehicle away from the obstacles. The potential fields algorithm is computationally efficient and easy to implement in real time. As obstacles are discovered, repulsive vectors are easily added. One disadvantage of a potential field algorithm is that a local minimum can occur, so that the resultant gradient vector is zero before the vehicle reaches the goal. Another disadvantage is that potential fields may also cause instability (i.e., oscillatory motion) at higher speeds (Koren and Borenstein, 1991). In these cases the first two approaches must be used to drive the vehicle away from the local minimum.
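Both the simplicity of the potential field method and its local-minimum weakness can be seen in a short sketch. The example assumes a quadratic attractive potential, a commonly used inverse-distance repulsive potential with a finite influence radius, and fixed-length steps along the force direction; the gains and the function names are illustrative assumptions.

```python
import math

def field_force(pos, goal, obstacles, k_att=1.0, k_rep=100.0, influence=3.0):
    """Negative gradient of the attractive plus repulsive potential at pos."""
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0.0 < d < influence:          # repulsion acts only nearby
            mag = k_rep * (1.0 / d - 1.0 / influence) / (d * d)
            fx += mag * dx / d
            fy += mag * dy / d
    return fx, fy

def descend(start, goal, obstacles, step=0.1, tol=0.2, max_iters=500):
    """Take fixed-length steps along the force; returns (position, reached)."""
    pos = start
    for _ in range(max_iters):
        if math.hypot(goal[0] - pos[0], goal[1] - pos[1]) <= tol:
            return pos, True
        fx, fy = field_force(pos, goal, obstacles)
        norm = math.hypot(fx, fy)
        if norm < 1e-9:                  # zero gradient: local minimum
            break
        pos = (pos[0] + step * fx / norm, pos[1] + step * fy / norm)
    return pos, False

if __name__ == "__main__":
    goal = (10.0, 0.0)
    print("open terrain:", descend((0.0, 0.0), goal, []))
    # An obstacle directly on the line to the goal traps the vehicle.
    print("blocked line:", descend((0.0, 0.0), goal, [(5.0, 0.0)]))
```

In the second case the vehicle stalls short of the obstacle, near the point where attraction and repulsion cancel, and dithers there; this is the situation in which a roadmap or cell-decomposition planner has to take over.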

From the systems perspective, path-planning tools depend to a great extent on the quality of the perception and map-building tools. Recent perception advances in the area of simultaneous localization and mapping (SLAM) (Thrun et al., 1998; Choset and Nagatani, 2001; and Dissanayake et al., 2001) will greatly benefit path planning. This technology is potentially very useful for the Army’s UGV program, as it allows a vehicle to estimate where it is located as well as build a map of the environment. DARPA has funded much of the work found in Thrun et al. (1998) through its Tactical Mobile Robotics program.

Commercial. Many commercial robot simulation packages, such as Simstation and RoboCad, contain general six-degrees-of-freedom path planners for industrial robot manipulators. They are used to plan the free-space motion of the manipulator in a cluttered factory environment. Some commercial mobile robotic vehicles, such as those from iRobot Corporation, contain a simple two-dimensional path planner that is specific to the vehicle. There are no general-purpose path planners for UGVs on the commercial market.

Current Army Capabilities. The Demo III experimental unmanned vehicle (XUV) contains a sophisticated path planner that combines world modeling, optimization, and computational searching algorithms. Planning is performed at several levels based on the time horizon (e.g., 500-ms, 5-s, 1-min, and 10-min plans) and the spatial resolution (0.4-meter, 4-meter, and 30-meter grid spacings). Path segments are weighted based on path length, offset from reference path, detected obstacles, terrain slope, and so on. The segments are stored in a graph, and Dijkstra’s algorithm is used to search the graph for the optimal solution. In addition to shortest-path-length plans, the planner can also compute road-only paths and low-visibility paths that track tree lines. Updating the vehicle path is currently performed at 4 Hz using a 300-MHz Motorola G3 processor.
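The search over weighted path segments can be sketched as follows. This is not the Demo III planner; it is a generic Dijkstra search, and the cost function `segment_cost`, its weights, and the four-node graph are hypothetical values chosen only to show how a terrain or obstacle penalty can outweigh path length.

```python
import heapq


def dijkstra(graph, start, goal):
    """graph maps node -> list of (neighbor, cost). Returns (total_cost, path)."""
    best = {start: 0.0}
    parent = {start: None}
    heap = [(0.0, start)]
    while heap:
        cost, node = heapq.heappop(heap)
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return cost, path[::-1]
        if cost > best[node]:
            continue  # stale queue entry; a cheaper route was already found
        for nxt, w in graph.get(node, []):
            new_cost = cost + w
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                parent[nxt] = node
                heapq.heappush(heap, (new_cost, nxt))
    return float("inf"), None


def segment_cost(length_m, slope_deg, obstacle, w_len=1.0, w_slope=0.5, w_obs=10.0):
    """Hypothetical weighted sum of segment length, terrain slope, and obstacle penalty."""
    return w_len * length_m + w_slope * slope_deg + w_obs * obstacle


graph = {
    "A": [("B", segment_cost(4, 0, 0)), ("C", segment_cost(4, 10, 0))],
    "B": [("D", segment_cost(4, 0, 1))],  # flat, but a detected obstacle lies on it
    "C": [("D", segment_cost(4, 0, 0))],
    "D": [],
}
cost, path = dijkstra(graph, "A", "D")
```

Both routes have the same length, but with these weights the obstacle penalty on segment B to D makes the sloped route through C the cheaper one.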

The success of path planning generally depends on reliable sensor measurements. There are path planners that take uncertainty in sensor measurements into account, but even these planners perform poorly if the sensor measurements are outside assumed statistical limits (Thrun et al., 1998; Choset and Nagatani, 2001; Dissanayake et al., 2001). Although specific details were not given at Demo III, the National Institute of Standards and Technology (NIST) developers of the path planner appear to use a recursive estimator such as a Kalman or Information Filter to improve estimates of the vehicle’s current location and its surrounding obstacles. The new LADAR system on the Demo III XUV vehicle is certainly a big improvement over previous systems using stereo-video alone. The LADAR system is able to detect obstacles regardless of their contrast in the environment. The inertial navigation unit in the Demo III XUV greatly aids in localization of the vehicle in the environment.
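The kind of recursive estimator mentioned above can be illustrated with a scalar Kalman filter. The sketch below is an assumption-laden example, not the NIST implementation: it tracks a one-dimensional position, treats an odometry displacement `u` as the motion input, and uses illustrative noise variances `q` and `r`.

```python
def kalman_step(x, p, u, z, q=0.1, r=1.0):
    """One predict/update cycle for a scalar position estimate.

    x, p: prior mean and variance; u: odometry displacement;
    z: position measurement; q, r: process and measurement noise variances.
    """
    # Predict: move by the odometry reading and inflate the uncertainty.
    x_pred = x + u
    p_pred = p + q
    # Update: blend prediction and measurement according to their variances.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new


# Three one-meter moves, each followed by a noisy position fix.
x, p = 0.0, 1.0
for u, z in [(1.0, 1.2), (1.0, 1.9), (1.0, 3.1)]:
    x, p = kalman_step(x, p, u, z)
```

The variance `p` shrinks with each measurement, which is the sense in which the estimator improves the location estimate; a measurement far outside the assumed noise level would still pull the estimate off, as the paragraph above cautions.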

Technology Readiness

Path planning for an individual UGV is relatively mature, but mission planning and multiple UGV and UAV planning are relatively immature. Path-planning technology is highly dependent upon both Perception and Navigation technologies for success. As demonstrated in Demo III, the state of the art in path planning for an individual UGV, such as the Donkey and Wingman examples, is estimated at TRL 5, because of limited testing in relevant environments.

Path planning is currently at TRL 3 for multiple UGVs and at TRL 1 for combined teams of UGVs and UAVs. Under DARPA’s Tactical Mobile Robotics program, path planning for multiple UGVs was demonstrated in Feddema et al. (1999) for six “bread-box-sized” robotic vehicles; in the DARPA PerceptOR program, path planning for a combined UAV and UGV has only recently been simulated.

When planning the path of multiple unmanned systems, such as would be the case for the Hunter-Killer example, the communications bandwidth between vehicles is a very important factor. The less communications bandwidth there is, the less coordination is possible between vehicles. Available communications bandwidth also depends on the mission, with more covert missions having less bandwidth. Trade studies need to be performed to determine how much bandwidth is available for each mission. Once this is determined, it is possible to develop the appropriate path planners for multiple coordinated vehicles. The budget required to bring path planning for multiple UGVs and UAVs up to TRL 6 is substantially greater than that for the mission-planning aids, and the time horizon could be 10 to 15 years.

Salient Uncertainties

Algorithm development for multiple vehicle path planners is a relatively low-risk but time-consuming effort. Path planning is a software technology that will most likely be upgraded as perception sensors and mobility platforms are upgraded. As with any software in critical systems, the software must go through a stringent, structured design review, and all branches of the code must be thoroughly tested and validated before being installed. Most planning efforts for robots are in the area of path planning. The major gaps with which the Army should be concerned are in the mission-planning area as discussed below.

Recommended Areas of Research

The following are recommended areas of research and development:

- The trade-off in computational space and time complexity for multiresolution map generation needs to be further evaluated.

- Planning for sensor acquisition based on mapping and localization requirements should be evaluated.

- A hierarchy of path-planning algorithms should be employed. The fastest, most efficient algorithms should be used whenever possible. If these algorithms fail, more sophisticated algorithms should be used. This should provide for graceful degradation of performance (e.g., loss of speed).

- Most UGV path planning to date has used only geometric and kinematic reasoning. An important next step is to include models of vehicle dynamics, terrain compliance, and dynamics of vehicle and terrain interaction into future planners. Dynamic programming techniques have been successfully applied to trajectory planning of rockets (Dohrmann et al., 1996), and they may also be successfully applied here.

- The weights used to determine the optimal path are heuristically defined by the developer. Much experimentation is necessary to determine the appropriate weights for all variations in terrain and weather.

- Simultaneous planning for multiple UGVs and UAVs is still in its infancy. Although not demonstrated for the committee, the Demo III software was capable of controlling up to four UGVs simultaneously. The planning for these vehicles was performed independently with the operator specifying phase transition points to coordinate the vehicles at key locations. In the future a more advanced planner will be needed to control the positions of tens of UGVs and UAVs without having to plan each path individually. This capability may not be needed for initial deployment of UGVs, but it will certainly be needed to meet FCS objectives.
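The recommended hierarchy of path-planning algorithms, in which a fast planner is tried first and a more sophisticated one is used only on failure, can be sketched as follows. The two planner functions are hypothetical stand-ins, not real algorithms; only the fallback logic in `plan_with_fallback` is the point of the example.

```python
def plan_with_fallback(planners, start, goal):
    """Try planners in order, fastest first. Returns (name, path) from the
    first planner that succeeds, or (None, None) if every planner fails."""
    for name, planner in planners:
        path = planner(start, goal)
        if path is not None:
            return name, path
    return None, None


def fast_planner(start, goal):
    # Stand-in for a cheap planner that fails on this query.
    return None


def thorough_planner(start, goal):
    # Stand-in for a slower planner that returns a longer, two-leg route.
    return [start, (start[0], goal[1]), goal]


name, path = plan_with_fallback(
    [("fast", fast_planner), ("thorough", thorough_planner)], (0, 0), (3, 4)
)
```

Reporting which planner produced the path lets the rest of the system degrade gracefully, for example by reducing speed when the slower planner is in use.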

Mission Planning

This section defines the scope of autonomous mission-planning technology. It describes the state of the art and estimates the levels of technology readiness.

Definition

From a military perspective, autonomous mission planning goes well beyond path planning. It is the ability of the autonomous UGV to determine its best course of military action, considering synergistically the mission being supported by the UGV; enemy situation and capabilities; terrain, features, obstacles, and weather conditions; the UGV’s own and friendly force situation and vulnerabilities; noncombatant information; time available; knowledge of military operations and procedures; and unique needs of the integrated mission package. All but the last few items directly support the development of a warfighter’s situational awareness.

The military knowledge base includes tactics, techniques, and procedures as defined in tactical fighting documents and standard operating procedures; and information from unit operations orders, including friendly force structure, detailed mission execution instructions, control graphics, enemy information, logistics (e.g., when and where to refuel), priority for supporting fires, and special instructions. The special instructions can include rules of engagement, communications protocols, and information needed about the enemy.

With enhanced military situational awareness (SA), understanding of its mission, and knowledge of military operations, the UGV can execute its specific mission tasks. To support this execution the UGV will identify individual and unit maneuver needs, covered and concealed routes and battle positions, tactically significant observation and firing positions for both itself and the enemy, and what and when to report and engage.

Assuming the appropriate information is available, the critical technologies needed to provide the above capabilities are software; highly efficient processing capability; and rapid-access, high-capacity, low-power storage devices for real-time cognitive processes.

State of the Art

Mission-planning capabilities for robots are very immature. Most research in the perception and planning areas is focused on path planning, with little to no effort in mission planning. Little work is being done to develop the cognitive algorithms and knowledge bases needed to support autonomous mission planning (Meyrowitz and Schultz, 2002; U.S. Army, 2001). Most mission-planning technology efforts are in the area of developing mission-planning aids for humans using command and control systems. The only autonomous mission planning appears to be in modeling and simulation technology development efforts in support of developing more realistic decision-making capabilities within simulations (Toth, 2002).

Much work has begun in the area of cognitive modeling. Cognitive models based on neural networks, Bayesian networks, case-based reasoning, and other techniques are being considered for supporting the decision-making capabilities of synthetic entities. These modeling and simulation efforts could be leveraged by robotic development programs. The Army and Navy have recently begun to exploit this opportunity (U.S. Army, 2002; Toth, 2002).

Needs of Example UGV Systems

The mission-planning needs of the four notional applications vary significantly. The basic Searcher and Donkey will not require mission-planning capabilities. The human lead will plan most of the mission for the Wingman; however, the Wingman will need some mission-planning capability to react to changes in mission while on the move. The Hunter-Killer team will require very complex autonomous mission-planning capabilities. More advanced versions of the first three applications would require mission-planning capabilities of varying levels of complexity.

Execution of the missions will vary. The teleoperator will execute most of the Searcher’s mission. The Donkey mostly will follow its leader’s execution of the mission. The Wingman and Hunter-Killer team execute most of their missions on their own. Various aspects of the example missions will be executed by specific software developed for tactical behaviors and cooperative behaviors as discussed in the section on Behaviors and Skills.

Technology Readiness Estimate

While the mission planning in Demo III was very good (possibly TRL 5), it was very limited in the number and scope of RSTA mission functions attempted. Much more complete mission- and path-planning algorithms will be required for such missions as counter-sniper, indirect fire, physical security, logistics delivery, explosive ordnance disposal, and military operations in urban terrain (MOUT). Mission-planning aids for human command and control of each of these missions could be brought up to TRL 6 in a few years with moderate funding.

Autonomous mission-planning technologies that would be needed for Wingman and Hunter-Killer systems are at TRL 3. TRL 6 may not be achieved for another 10 years.

Salient Uncertainties

The largest capability gap is in mission planning that is specific to Army doctrine. This is a chicken-and-egg problem in that the use of robot vehicles is not yet defined in Army doctrine, and therefore the doctrine will also have to be developed. Computer scientists and soldiers need to work together to understand the capabilities of UGVs and the needs of the soldier. The soldiers will write the doctrine, while the computer scientists will develop the mission-planning tools.

Automated mission planning is being addressed in the modeling and simulation community, but capabilities are still very immature. Advances are being made in software; highly efficient processing capability; rapid-access, high-capacity, low-power storage devices for real-time cognitive processes; local or distributed high-fidelity knowledge bases; high-bandwidth mobile communications networks; perception technologies; mobility systems for complex terrain; and natural language and gesture recognition technologies that understand military language and gestures. However, these advances are not being integrated into a mission-planning technology for robot systems.

Feasibility and Risks