6

Investigating the Influence of the National Science Education Standards on Student Achievement

Charles W. Anderson

Michigan State University

The Committee on Understanding the Influence of Standards in Science, Mathematics, and Technology Education has identified two overarching questions: How has the system responded to the introduction of nationally developed mathematics, science, and technology standards? and What are the consequences for student learning? (National Research Council, 2002, p. 4). This paper focuses on the second of those questions. In elaborating on the question about the effects of standards on student learning, the National Research Council (NRC) poses two more specific questions (p. 114). The first focuses on the general effects of the standards on student learning: Among students who have been exposed to standards-based practice, how have student learning and achievement changed? The second question focuses on possible differential effects of the standards on students of different social classes, races, cultures, or genders: Who has been affected and how?

This paper responds to those questions in several ways. First, I consider a skeptical alternative: What if the standards are largely irrelevant to the problem of improving student achievement? What if student achievement depends mostly on other factors? What other factors should we consider, and how might we consider their influence? I next consider problems of defining practices that are “influenced by” or “aligned with” standards and suggest refinements in the questions posed above. Next, I review the evidence that is available with respect to the modified questions, considering both research identified in the literature search for this project and a sampling of other relevant research. Finally, I consider the future. What kinds of evidence about the influence of standards on student achievement can we reasonably and ethically collect? How can we appropriately use that evidence to guide policy and practice?

A SKEPTICAL ALTERNATIVE: DO STANDARDS REALLY MATTER?

Biddle (1997) argues that we already know what the most important problems facing our schools are, and they have nothing to do with standards.

If many, many schools in America are poorly funded and must contend with high levels of child poverty, then their problems stem not from confusion or lack of will on the part of educators but from the lack of badly needed resources. If they are told that they must meet higher standards, or—worse—if they are chastised because they cannot do so, then they will have been punished for events beyond their control. Thus arguments about higher standards are not just nonsensical; if adopted, the programs they advocate can lead to lower morale and reduced effectiveness among the many educators in the U.S. who must cope with poor school funding and extensive child poverty. (pp. 12-13)

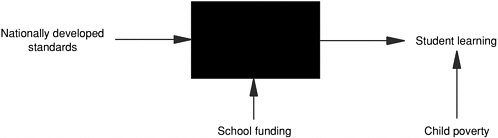

Thus Biddle questions the fundamental premise on which this project is based—that the standards have an influence on student achievement. If you want to know what influences student achievement, says Biddle, don’t follow the standards, follow the money. Improving achievement is about making resources available to children and to their teachers, not about setting standards. The contrasting figures below illustrate Biddle’s argument. Figure 6-1 comes from our inquiry framework.

The NRC points out the inadequacies of this model for investigating the influence of standards and proposes an alternative that opens up the black box, suggesting curriculum, professional development, and assessment as channels of influence that shape teaching practice, which in turn influences student learning. Biddle proposes that if we are really interested in improving student learning, we should not waste our time opening up the black box. Instead we need to look outside the black box to find the factors that really influence student learning: school funding and child poverty. Figure 6-2 illustrates Biddle’s alternative model; Biddle claims that the influence of standards is insignificant in comparison with the variables he has identified.

FIGURE 6-1 The black box.

SOURCE: NRC (2002, p. 12).

FIGURE 6-2 Biddle’s alternative model.

Biddle backs up his argument with analyses of data from the Second International Mathematics Study and Third International Mathematics and Science Study showing that (1) the United States has greater disparities in school funding and higher levels of child poverty than other developed countries participating in the study, and (2) these differences are strongly correlated with the differences in achievement among school districts and among states. Biddle’s arguments can be questioned on a variety of conceptual and methodological grounds, but we could hardly question his basic premise. Factors such as school funding and child poverty do affect student learning, and they will continue to do so whether we have national standards or not.

Thus Biddle’s argument poses a methodological challenge with important policy implications. Methodologically, we have to recognize the difficulty of answering the questions posed at the beginning of this chapter. In a complex system where student learning is affected by many factors, how can we separate the influence of standards from the influence of other factors? This question is important because of its policy implications: Is our focus on standards a distraction from the issues that really matter? If our goal is to improve student learning, should we devote our attention and resources to developing and implementing standards, or would our students benefit more from other emphases?

ALIGNMENT BETWEEN STANDARDS AND TEACHING PRACTICES

We wish to investigate how the standards influence student achievement, but how do we define “influence”? What if the standards influence teachers to teach in ways that are inconsistent with the standards? We cannot investigate the influence of standards on student learning without defining what it means for teaching practices to be “influenced by” or “aligned with” standards. A careful look at the standards themselves and at the complexity of the channels of influence through which they can reach students shows the difficulty of this problem. The standards themselves are demanding and complex. Our inquiry framework quotes Thompson, Spillane, and Cohen (1994) on the challenges that the standards pose for teachers:

…to teach in a manner consistent with the new vision, a teacher would not only have to be extraordinarily knowledgeable, but would also need to have a certain sort of motivation or will: The disposition to engage daily in a persistent, directed search for the combination of tasks, materials, questions, and responses that will enable her students to learn each new idea. In other words, she must be results-oriented, focused intently on what her students are actually learning rather than simply on her own routines for “covering” the curriculum. (NRC, 2002, p. 27)

As the other papers in this publication attest, this hardly describes the typical current practice of most teachers who consider themselves to be responding to the standards. The standards describe a vision of teaching and learning that few current teachers could enact without new resources and long-term support involving all three channels of influence. Teachers would require long-term professional development to develop knowledge and motivation, new curricula with different tasks and materials, and new assessment systems with different kinds of questions and responses.

Other people have other ideas about what is essential about the standards, of course, but almost all of those ideas implicitly require substantial investments in standards-based curricula or professional development. For example, Supovitz and Turner (2000) found that teachers’ self-reports of inquiry teaching practices and investigative classroom cultures depended on the quantity of professional development in Local Systemic Change projects, with the best results for teachers who had spent 80 hours or more in focused professional development.

Thus the nature of the standards has important methodological implications. We are unlikely to be able to separate the influence of the standards from the influence of increased school funding (see Figure 6-2 above) because implementing the standards requires increased school funding. The National Science Education Standards (NSES) call for more expensive forms of curriculum, assessment, and professional development—they are recommendations for investment in our school science programs. While we cannot separate the influence of standards from investments in schools, we can ask what the payoff is for investments in standards-based practice. When schools invest resources in standards-based practice, what is the evidence about the effects of that investment on student learning?

In addition to asking whether investment in standards-based practices is generally worthwhile, we could also ask about the value of particular practices advocated by the standards. In 240 pages of guidelines for teaching, content, professional development, assessment, programs, and systems, the NSES undoubtedly contain some ideas that are of more value than others. Which standards are really important for student learning? What evidence do we have for the value of particular practices or content emphases?

TABLE 6-1 Expanded Research Questions

| | Standards as Investment: Effects of investment in standards-based practice | Standards as Guidelines: Benefits of specific teaching practices endorsed by standards |
|---|---|---|
| Benefits of standards for all students | What evidence do we have that investments in standards-based curricula and professional development produce benefits for student learning? | What evidence do we have about the influence of particular teaching practices endorsed by the standards on student learning? |
| Benefits of standards for specific classes of students | What evidence do we have that investments in standards-based curricula and professional development reduce the “achievement gap” between more and less advantaged students? | What evidence do we have about the influence of particular teaching practices endorsed by the standards on the achievement of less advantaged students? |

To this point I have focused on the effects of the standards on student learning in general—the first of the questions at the beginning of the chapter. The second question focuses on possible differential effects of the standards on students of different social classes, races, cultures, or genders: Who has been affected and how? As with questions about the effects of standards on students in general, we can ask questions about both the general value of investment in standards-based teaching and about the efficacy of specific practices advocated by the standards (see Table 6-1). There are currently large gaps between the science achievement of European and Asian American students on the one hand and Hispanic and African American students on the other. Does investment in standards-based teaching practices affect these “achievement gaps”? Are there specific practices advocated by the standards that affect the size of these gaps?

Thus the difficulties of defining “influence” or “alignment” between standards and teaching practices lead us to expand the original two research questions to four. Two of the questions focus on the standards as a call for investment of resources in recommended curricula, professional development, teaching, and assessment practices. These questions ask for evidence about whether the investments made so far are paying off in terms of student learning. The other two questions focus on the standards as guidelines advocating many different specific practices. These questions ask for evidence about how those specific practices affect student learning.

EFFECTS OF INVESTMENTS IN STANDARDS-BASED PRACTICES

Given the caveats above, I attempt in this section to review a sample of studies that relate investments in standards-based practice to student learning or achievement. I looked for papers that met the following criteria:

- They included some evidence for investment of resources in standards-based curriculum, assessment, or professional development.
- They included some evidence about the nature or amount of student learning.
- The evidence supported some argument connecting the investment with the learning.

None of the studies reviewed below met all three criteria well. In all cases, the evidence is incomplete and subject to reasonable alternative interpretations. As I discuss each group of papers, I will try to describe the evidence they provided concerning investments in standards-based practice and student achievement and the limitations of the studies. I first present evidence concerning the general benefits of investment in standards-based practices for all students, followed by studies that looked for evidence of specific benefits for traditionally underserved students.

General Effects of Investments in Standards-Based Practices

One way to assess the general influence of standards on student achievement is to look for trends in national achievement data in the years after the introduction of the standards. We can expect these trends to be slow to develop. There will inevitably be a substantial lag time as the standards work their way through the different channels of influence (NRC, 2002, p. 114) to affect teaching practice and ultimately student achievement. There is an additional lag time between the collection of data and publication of the analyses. Therefore, in this section I will consider data about mathematics achievement, since the NCTM standards are similar in intent to the NSES but were introduced earlier, as well as data about science achievement.

During the 13 years since the introduction of the NCTM standards, there have been two major efforts at data collection on mathematics and science achievement using representative national samples of students that might detect effects of the introduction of standards. The National Assessment of Educational Progress (NAEP) collected data on student mathematics and science achievement at regular intervals between 1990 and 2000. There was also a series of international studies of mathematics and science achievement, including the Second International Mathematics Study (SIMS), the Third International Mathematics and Science Study (TIMSS), and the repeat of the TIMSS study (TIMSS-R). Since these studies collected data at regular intervals, it is possible to look for evidence of progress on a national scale.

Perhaps the most encouraging evidence comes from NAEP data collected since the introduction of the mathematics and science standards (Blank and Langesen, 2001). For example, the proportion of eighth-grade students achieving proficiency on the mathematics exam increased from 15 percent in 1990, before the NCTM standards could have had a substantial impact, to 26 percent in 2000. Similar gains were recorded at the fourth- and twelfth-grade levels. Science achievement showed much more modest gains during the shorter period since the introduction of the NSES: a 3 percent improvement in eighth-grade proficiency levels between 1996 and 2000. Although these changes are encouraging, they could be due to many factors other than the influence of the NSES. For example, the 1990s were a time of unprecedented national prosperity and (disappointingly modest) decreases in child poverty and increases in school funding. Furthermore, we have no data allowing us to assess how the standards might have influenced the teaching practices experienced by the students in the sample.
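The mathematics figures quoted above can be read in two ways, and the distinction matters when gains of different sizes are compared. The following is a minimal sketch that uses only the two proficiency percentages given in the text to separate the percentage-point change from the relative change. The function name `describe_change` is illustrative and is not part of any NAEP tool.

```python
def describe_change(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (percentage-point change, relative change in percent)."""
    point_change = after_pct - before_pct
    relative_change = 100.0 * point_change / before_pct
    return point_change, relative_change


# Eighth-grade mathematics proficiency, 1990 vs. 2000 (figures quoted in the text).
points, relative = describe_change(15.0, 26.0)
print(f"{points:.0f} percentage points, {relative:.1f}% relative increase")
```

The text does not say whether the reported 3 percent science gain is a percentage-point change or a relative change, so the sketch is not applied to it.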

The TIMSS (1995) and TIMSS-R (1999) studies also could be used for longitudinal comparisons, this time of the ranking of the United States with respect to other countries in the world. In contrast with the NAEP findings, the TIMSS results showed little or no change in the ranking of the United States in either mathematics or science. In science the ranking of the United States actually slipped slightly between 1995 and 1999 (Schmidt, 2001). Thus there is no evidence that the introduction of standards has helped the United States to gain on other countries with respect to student achievement.

Additional evidence comes from evaluations of systemic change projects. During the decade of the 1990s, the National Science Foundation made a substantial investment in systemic change projects, including Statewide, Urban, and Rural Systemic Initiatives and Local Systemic Change projects (SSIs, USIs, RSIs, and LSCs). In general these projects sought to enact standards-based teaching through coordinated efforts affecting all three channels of influence: curriculum, professional development, and assessment.

The most methodologically sound evidence concerning the impact of the systemic initiatives on student achievement comes from the Urban Systemic Initiatives. Kim et al. (2001) synthesized evaluation reports from 22 USIs. In eighth-grade mathematics, 15 out of the 16 programs reporting achievement data found improvement from a previous year to the final year of the project in student achievement. These comparisons were based on a variety of different achievement tests. In science, 14 of 15 sites showed improvements. These improvements could, of course, have been due to many factors other than the influence of the science and mathematics standards, including teachers “teaching to the test,” the influx of new resources into the systems from the USIs and other sources, and decreases in child poverty associated with the prosperity of the 1990s.
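One way to see what the site counts above do and do not show is a simple sign test. The sketch below assumes, purely for illustration, that each site would be equally likely to show a gain or a loss if nothing systematic were happening, and that sites are independent of one another. Neither assumption comes from Kim et al. (2001), and the helper `prob_at_least` is hypothetical.

```python
from math import comb


def prob_at_least(successes: int, trials: int, p: float = 0.5) -> float:
    """Probability of at least `successes` gains among `trials` independent sites."""
    return sum(
        comb(trials, k) * p**k * (1 - p) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Site counts quoted in the text: 15 of 16 (mathematics), 14 of 15 (science).
print(f"mathematics: {prob_at_least(15, 16):.5f}")
print(f"science:     {prob_at_least(14, 15):.5f}")
```

Under these assumptions both probabilities fall well below 0.001, so chance alone is an unlikely explanation for the pattern. The test says nothing about why scores rose, which is why the alternative explanations listed above remain open.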

Banilower (2000) reported on the data available from the evaluations of the LSC projects. As examining student data was not a requirement of the evaluation, few projects had examined their impact on student achievement. Thus, data were available only from nine of 68 projects. Eight of the nine projects reported a positive relationship between participation in the LSC and student achievement, though only half of these constructed a convincing case that the impact could be attributed to the LSC. The remaining studies were flawed by a lack of control groups, failure to account for initial differences between control and experimental groups, or selection bias in the choice of participating schools or students. Given the small number of compelling studies, the data are insufficient to support claims about the impacts of the LSCs in general.

Two LSC projects reported data of some interest. Klentschy, Garrison, and Maia-Amaral (1999) report on achievement data from the Valle Imperial Project in Science (VIPS), which provided teachers in California’s Imperial Valley with professional development and inquiry-based instructional units in science. Fourth- and sixth-grade students’ scores on the science section of the Stanford Achievement Test were positively correlated with the number of years that they had participated in the VIPS program.

Briars and Resnick (2000) report on an ambitious effort to implement standards-based reform in the Pittsburgh schools. The effort included changes in all three channels of influence: the adoption of an NSF-supported elementary mathematics curriculum (Everyday Mathematics), professional development supported by an LSC grant, and an assessment system using tests developed by the New Standards program. There were substantial increases in fourth-grade students’ achievement in mathematics skills, conceptual understanding, and problem-solving. These increases occurred during the year that the cohort of students who had been using Everyday Mathematics reached the fourth grade, and they occurred primarily in strong implementation schools.

Two reports from Rural Systemic Initiatives were available. Barnhardt, Kawagley, and Hill (2000) report that eighth-grade students in schools participating in the Alaska Rural Systemic Initiative scored significantly higher than students in non-participating schools on the CAT-5 mathematics achievement test. Llamas (1999a, 1997b) reports marginal improvements in test scores for students participating in the UCAN (Utah, Colorado, Arizona, New Mexico) Rural Systemic Initiative.

Laguarda et al. (1998) attempted to assess the impact of Statewide Systemic Initiatives on student achievement. They found seven SSIs for which some student achievement data were available. In general, these data showed small advantages for students whose teachers were participating in SSI-sponsored programs. However, the authors caution that “there are serious limitations to the data that underlie these findings, even in the best cases: (1) the quantity of data is extremely limited, both within and across states; (2) the data within states are contradictory in some cases; and (3) the effect sizes are small” (Laguarda, Goldstein, Adelman, and Zucker, 1998, p. iv).

Cohen and Hill (2000) investigated the mathematics reform efforts in California (before they were derailed in 1995-96). They found evidence that teachers’ classroom practices and student achievement in mathematics were affected by all three channels in the framework. The overall picture was complex, but in general, student achievement on the California Learning Assessment System (CLAS) mathematics tests was higher when (1) teachers used materials aligned with the California mathematics framework, (2) teachers participated in professional development programs aligned with the framework, (3) teachers were knowledgeable participants in the CLAS system, and (4) teachers reported that they engaged in teaching practices consistent with the framework.

In summary, the data are consistent with the claim that the science and mathematics standards are having a modest positive influence on student achievement, but many alternative interpretations of the data are possible. In general, effect sizes are small and the evidence for a causal connection between the standards and the measured changes in student achievement is weak.

Possible Differential Effects on Diverse Students

The second research question concerns differential effects on groups of students: What evidence do we have that investments in standards-based curricula and professional development reduce the “achievement gap” between more and less advantaged students? The studies discussed in this section compared achievement of European American students with either African American or Hispanic students. These comparisons confound the effects of race, culture, and social class, so these data cannot be used, for example, to differentiate between effects of child poverty and effects of racial prejudice.

Blank and Langesen (2001) report data on achievement of different ethnic groups from the NAEP. The differences in achievement levels remain disturbingly high. For example, 77 percent of European American students, 32 percent of African American students, and 40 percent of Hispanic students scored at the basic level or above in the 2000 eighth-grade mathematics test. There was an 11 percent reduction in the achievement gap for Hispanic students since 1990. The reduction was 2 percent for African American students.

Kim et al. (2001, pp. 20-23) compared achievement of minority and European American students in science and mathematics. At 14 urban sites, the investigators compared the achievement scores of European Americans and the largest ethnic group over two successive years. In five predominantly Hispanic sites there was a reduction in the average achievement gap of 8 percent in mathematics and 5.6 percent in science. In nine predominantly African American sites there was an increase in the achievement gap of 1 percent in math and 0.3 percent in science.

In summary, the meager evidence in the studies reviewed does not indicate that investment in standards-based practices affects the achievement gap between middle class European Americans and other students. Nationally, the achievement gap between Hispanic and European American students seems to be shrinking, but the data are not strong enough to support the claim that this is due to standards-influenced teaching. It is equally likely to be due to other causes, such as the successful assimilation of Hispanic immigrants into the American economy and culture (Ogbu, 1982). The achievement gap between European Americans and African Americans is largely unchanged.

Summary

Overall, the studies reviewed provide weak support for a conclusion that investment in standards-based practices improves student achievement in both mathematics and science. These studies provide no support for the opposite conclusion—that the standards have had negative effects on student achievement. This is an important finding to note, since there are those (e.g., Loveless, 1998) who claim that the evidence shows that “constructivist” standards impede student learning. However, the associations are generally weak, and the studies are generally poorly controlled. The reporting of achievement results is spotty and selective; in many cases the authors had personal interests in reporting positive results. Even in the most carefully controlled studies, the influence of standards is confounded with many other influences on teaching practice and student achievement. The meager evidence in the studies reviewed for this paper does not support a claim that investment in standards-based practices reduces (or increases) the achievement gap between European American and Hispanic or African American students.

It would be nice to know whether investments in standards-based practices have been cost-effective: How does the value added from these investments compare with what we might have gotten from investing the same resources in other improvements? Could it be, for example, that we could have improved student achievement more by using more of our resources to reduce child poverty? These questions, which call for comparisons between what we actually did and the road not taken, are not ones for which we are likely to find data-based answers.

EFFECTS OF SPECIFIC PRACTICES ADVOCATED BY THE STANDARDS

The studies discussed above did not report data on actual classroom teaching practices, so we cannot know, for example, whether the teachers were actually doing what the standards advocate, or how the teachers’ practices were affecting student achievement. In this section I look at the evidence concerning the relationship between teaching practices endorsed by the standards and student learning. In particular, I review studies that address these questions: What evidence do we have about the influence of particular teaching practices endorsed by the standards on student learning? What evidence do we have about the influence of particular teaching practices endorsed by the standards on the achievement of less advantaged students?

I looked for studies that met the following criteria:

- They included some evidence about the presence or absence of teaching practices endorsed by the standards in science or mathematics classrooms.
- They included some evidence about the nature or amount of student learning.
- The evidence supported some argument connecting the teaching practices with the learning.

General Effects of Teaching Practices Endorsed by the Standards

In addition to data on students’ science and mathematics achievement, the TIMSS and TIMSS-R data also include extensive information about the teaching practices and professional development of the teachers of the students in the study. This makes it possible to look for associations between teaching practices, curricula, or professional development and student achievement. One study that attempted to do this carefully was conducted by Schmidt et al. (2001). They found that achievement in specific mathematics topics was related to the amount of instructional time spent on those topics. For some topics, there was also a positive relationship with teaching practices that could be viewed as moving beyond routine procedures to demand more complex performances from students, including (1) explaining the reasoning behind an idea; (2) representing and analyzing relationships using tables, graphs, and charts; and (3) working on problems to which there was no immediately obvious method of solution.

To my knowledge, there have been no published reports of similar inquiries in science, nor of investigations into connections between student achievement and the many other variables documenting teaching practices in these rich data sets.

Lee, Smith, and Croninger (1995) report on another study looking at relationships between instructional practices and national data sets on student achievement. Lee et al. analyzed data from the 1992 National Education Longitudinal Study, finding positive correlations between student achievement in both mathematics and science and four types of practices consistent with the national standards:

- a common curriculum for all students
- academic press, or expectations that all students will devote substantial effort to meeting high standards
- authentic instruction emphasizing sustained, disciplined, critical thought in topics relevant beyond school
- teachers’ collective responsibility for student achievement.

Von Secker and Lissitz (1999) report on analyses of data on science achievement from the 1990 High School Effectiveness Study. Although these data predated the NSES, Von Secker and Lissitz found a positive correlation between tenth-grade student achievement (as measured by science tests constructed by the Educational Testing Service) and laboratory-centered instruction. Variables measuring teacher-centered instruction were negatively correlated with student achievement.

One of the Statewide Systemic Initiatives (Ohio’s Project Discovery) went beyond attempts to measure the general impact of the project. Scantlebury, Boone, Kahle, and Fraser (2001) report on the results of a questionnaire administered to 3,249 middle-school students in 191 classes over a three-year period. The questionnaires were designed to measure the students’ attitudes toward science and their perceptions of the degree to which their teachers used standards-based teaching practices, including problem-solving, inquiry activities, and cooperative group work. Student achievement (as measured by performance on a test consisting partly of publicly released NAEP items) and student attitudes toward science were positively correlated with the questionnaire’s measure of standards-based teaching practices. Interestingly, in light of Biddle’s arguments discussed above, the correlations between student achievement and the questionnaire’s measures of home support and peer environment were not significant (though there were positive correlations between the home support and peer environment measures and student attitudes toward science).

Klein, Hamilton, McCaffrey, Stecher, Robyn, and Burroughs (2000) reported on the first-year results of the Mosaic study, which looked for relationships between student achievement measures and teachers’ responses to questionnaires concerning their teaching practices. They used the questionnaire data to construct two composite variables. A Reform Practices measure included variables such as open-ended questions, real-world problems, cooperative learning groups, and student portfolios. A Traditional Practices measure included variables such as lectures, answering questions from textbooks or worksheets, and short-answer tests. Pooling data from six SSI sites, they found statistically significant but weak (about 0.1 SD effect size) positive associations between teachers’ reporting of reform practices and student achievement on both open-ended and multiple-choice tests. Teachers’ reports of traditional practices were not correlated with student achievement.
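To give a sense of scale for an association of about 0.1 SD, the sketch below converts a standardized difference into a percentile shift. It assumes normally distributed scores, an assumption made here only for illustration and not one attributed to the Mosaic study. The function name `percentile_after_shift` is likewise illustrative.

```python
from statistics import NormalDist


def percentile_after_shift(effect_size_sd: float, start_percentile: float = 50.0) -> float:
    """Percentile reached when a score moves up by `effect_size_sd` standard deviations."""
    standard_normal = NormalDist()  # mean 0, standard deviation 1
    start_z = standard_normal.inv_cdf(start_percentile / 100.0)
    return 100.0 * standard_normal.cdf(start_z + effect_size_sd)


# About 0.1 SD, the size of the association reported in the text.
print(f"{percentile_after_shift(0.1):.1f}")
```

Under these assumptions, a difference of this size corresponds to the gap between the 50th and roughly the 54th percentile, which fits the description of the association as statistically significant but weak.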

Project 2061 conducted analyses of middle-school mathematics and science teaching materials for the purpose of assessing their likely effectiveness in promoting learning of AAAS Benchmarks for Science Literacy (1993) in science or the NCTM curriculum standards in mathematics. The highest-rated materials were the Connected Mathematics Program (Ridgway, Zawojewski, Hoover, and Lambdin, 2002) in mathematics and an experimental unit teaching kinetic molecular theory entitled Matter and Molecules (Berkheimer, Anderson, and Blakeslee, 1988; Berkheimer, Anderson, Lee, and Blakeslee, 1988). It happens that careful evaluation studies were done on both of these programs.

- Students in the Connected Mathematics Program (CMP) equaled the performance of students in a control group on tests of computational ability at the sixth- and seventh-grade levels while outperforming control students on tests of mathematical reasoning. At the eighth-grade level, the CMP students were superior on both tests. The advantage of CMP students over control students increased with the number of years that they had been in the program (Ridgway et al., 2002).

- Teachers using the Matter and Molecules curriculum were able to increase their students’ understanding of physical changes in matter and of molecular explanations for those changes. The percentage of urban sixth-grade students understanding key concepts approximately doubled (from 25 percent to 49 percent) when performance of students using Matter and Molecules was compared with the performance of students taught by the same teachers using a commercial unit that taught the same concepts the year before (Lee, Eichinger, Anderson, Berkheimer, and Blakeslee, 1993).

Possible Differential Effects on Diverse Students

None of the studies reviewed for this report specifically investigated the effects of teaching practices endorsed by the standards on the achievement gap between European American and Hispanic or African American students. One study did look at teaching practices associated with achievement by African American students. Kahle, Meece, and Scantlebury (2000) found that standards-based teaching practices (as measured by a student questionnaire including items representing problem-solving and inquiry activities and cooperative group work) were positively correlated with student achievement for urban African American students. This correlation held after statistical adjustments for differences attributable to student sex, attitudes toward science, and perceptions of peer support for science learning.

Summary

Overall, the studies reviewed in this section provide weak support for a conclusion that teaching practices consistent with the standards improve student achievement in both mathematics and science. These studies provide no support for the opposite conclusion—that the practices endorsed by the standards are inferior to traditional practices. The meager evidence in the studies reviewed for this paper does not support a claim that practices consistent with the standards reduce (or increase) the achievement gap between European American and Hispanic or African American students. However, the size of the reported effects is small, and the methodological limitations of the studies mean that many other interpretations of the data are defensible.

CASE STUDIES AND DESIGN EXPERIMENTS

The studies reviewed for this paper relied on statistical methods to look for relationships among composite variables. Student achievement was measured by tests that addressed many specific content standards. Teaching practice was characterized in terms of variables that combined elements of several different teaching standards. Investment in standards-based practices was characterized by general measures of participation in complex programs that combined curriculum reform, assessment, and professional development.

While such composite variables are necessary if we wish to pool the experiences of thousands of individual teachers and students to answer broad questions about the influence of standards, it is not at all clear that we know much about what they mean. We are, in effect, looking at relationships between one variable that combines apples and oranges and another variable that combines pumpkins and bananas. The results may be useful for politicians who need a simple “bottom line,” but the implications for policy or practice are inevitably muddy.

To guide practice, we need analyses that are more specific than the standards, rather than less specific. Teachers need to know more than what kinds of practices are generally appropriate; they must decide what particular practices are appropriate for particular occasions. A fourth-grade teacher who is teaching about light and vision, for example, must decide what to explain to students and how; what hands-on (or eyes-on) experiences to engage students in; what questions to ask and when; and so forth. For all their length and complexity, the NSES provide little help with such questions.

There is, however, a large literature reporting case studies and design experiments that addresses just such questions. These studies, generally focusing on a single classroom or a small number of classrooms, investigate the kinds of specific questions that our fourth-grade teacher needs to answer. They look at relationships between specific teaching practices and students’ learning of specific content. While a general review of these studies is beyond the scope of this paper, I wish to note that they exist and to discuss some of their implications for policy and practice, including the following:

- This research can help us to develop better standards.

- This research can help us design systems and practices to enact standards-based teaching.

- This research can help us to understand the origins of the “achievement gap” and the kinds of practices that might help us to close it.

Improving the Standards

The case study research provides us with a great deal of information that is relevant to the design of the content and teaching standards. For example, research on the conceptions of students of different ages and cultures provides information about the appropriateness of standards for particular levels in the curriculum and about developmental pathways. Project 2061 made an organized attempt to use this research in developing the Benchmarks for Science Literacy; the research that they used is reviewed in Chapter 15 of Benchmarks (AAAS, 1993, pp. 327-78). Design experiments like those reviewed below also provide information about the effectiveness of specific teaching strategies for specific purposes. A careful review of those specific results can help us to improve general recommendations for teaching like those in the NSES.

Enacting the Standards

Case studies and design experiments are also essential for developing the base of specific knowledge necessary to enact the standards in classrooms. For example, Lehrer and Schauble (2002) have edited a book of reports by elementary teachers who have inquired into their students’ classroom inquiry, investigating how children transform their experiences into data, develop techniques for representing and displaying their data, and search for patterns and explanations in their data, and how teachers can work with children to improve their knowledge and practice. These reports and others like them thus contain a wealth of information that is essential for the design process in which advocacy of “science as inquiry” in the standards is enacted in specific classrooms.

Understanding the Achievement Gap

Finally, the case study research can help us to understand why the practices encouraged by the standards are not likely to reduce the achievement gap between students of different races, cultures, or social classes. An extensive case literature documents the ways in which children’s learning is influenced by language, culture, identity, and motivation. These issues are addressed only peripherally in the NSES, but they are centrally important for the teaching of many students (e.g., Lee, 2001; Lynch, 2001; Warren, Ballenger, Ogonowski, Rosebery, and Hudicourt-Barnes, 2001; Rodriguez, 1997). This literature also reports on a limited number of design experiments in which teaching that explicitly addressed these issues and built on the cultural and intellectual resources of disadvantaged children produced substantial benefits for their learning (e.g., Rosebery, Warren, Ballenger, and Ogonowski, 2002). There is still a lot we do not know about reducing the achievement gap, but this literature points us in promising directions.

CONCLUSION

So when all is said and done, what can we conclude about the questions at the beginning of this chapter? Mostly, we can conclude that the evidence is inconclusive. The evidence that is available generally shows that investment in standards-based practices or the presence of standards-based teaching practices has a modest positive impact on student learning, but little or no effect on the “achievement gap” between European American and Hispanic or African American students.

It would be nice to have definitive, data-based answers to these questions. Unfortunately, that will never happen. As our inquiry framework (NRC, 2002) attests, the standards lay out an expensive, long-term program for systemic change in our schools. We have just begun the design work in curriculum, professional development, and assessment that will be necessary to enact teaching practices consistent with the standards, so the data reported in this paper are preliminary at best. By the time more definitive data are available, it will be too late to go back. This is true for most complex innovations, significant or trivial. For example, our national decision to invest in interstate highways (as opposed to, say, a system of high-speed rail links) has obviously had enormous consequences for our society, but we will never know what might have happened if we had decided differently. Like the interstate highways, the standards are here to stay. In assessing their impact we will inevitably have to make do with inferences from inconclusive data.

In assessing the impact of the NSES, we must remember that they cannot be enacted without increases in funding for school science programs. It is hard to imagine how teaching consistent with the NSES could take place in schools where most teachers are uncertified, where classes are excessively large, where laboratory facilities or Internet access are not available, or where professional development programs are inadequate, yet those conditions are common in schools today. As Biddle points out, standards can never be a substitute for the material, human, and social resources that all children need to grow and prosper in our society. Our schools and our children need more resources, especially children of poverty and their schools. At best, standards can provide us with guidance about how to use resources wisely.

We must also remember that for all their length and complexity, the NSES provide only rough guidance for the complicated process of school reform. The studies reviewed here address general questions about the large-scale influence of the standards. The standards must exert their influence, though, through millions of individual decisions about curriculum materials, professional development programs, classroom and large-scale assessments, and classroom teaching practices. Those decisions can be guided not only by the standards, but also by the extensive case literature that investigates the effects of particular teaching practices on students and their learning.

Of the studies reviewed in this chapter, those that were conceptually and methodologically most convincing tended to look at relatively close connections in the inquiry framework (NRC, 2002, p. 114). Thus there were convincing studies of relationships between teaching practices and student learning, including both small-scale case studies and larger-scale studies such as those using TIMSS data and the studies by Scantlebury et al. (2001) and Klein et al. (2000). There are also studies that showed interesting relationships between measures of student learning and teachers’ participation in professional development or use of curriculum materials. The longer the chains of inference and causation, though, the less certain the results. My feeling is that we will probably learn more from studies that investigate relationships between proximate variables in the inquiry model (e.g., between teaching practices and student learning or between professional development and teaching practices). We still have a lot to learn, and studies of these relationships will help us become wiser in both policy and practice.