2

IDENTIFYING AND EVALUATING THE LITERATURE

This chapter presents the methods used in identifying and evaluating the epidemiologic literature that form the basis of the committee’s conclusions. It includes a description of basic epidemiologic study designs (such as cohort and case-control) and methodologic issues considered by the committee as it weighed the evidence for or against an association between exposure to insecticides or solvents and a health outcome. The chapter also includes a section on the nature and value of the experimental evidence of toxicity, which is discussed more fully in Chapters 3 and 4.

IDENTIFYING THE LITERATURE

As the committee began its task, the first step was to identify the literature that it would review. Searches were conducted by using the names and synonyms of the relevant insecticides and solvents identified for study (Chapter 1), their Chemical Abstracts Service registry numbers, and numerous occupations known to entail exposure to insecticides and solvents (such as pesticide applicators, painters, and dry cleaners). Background documents and reviews of experimental evidence were also retrieved and examined.

The literature search resulted in the retrieval of about 30,000 titles (Appendix C). As the titles and abstracts were reviewed, it became apparent that many of the studies were not relevant to the committee’s task. The committee therefore developed inclusion criteria for the studies to be reviewed; for example, there had to be an examination of the agents under consideration, the design of a study had to be appropriate to the committee’s task of weighing evidence, the study had to be an original study rather than a review or metaanalysis, and the results of the study had to demonstrate persistent rather than short-term effects. The criteria enabled the committee to narrow the 30,000 titles and abstracts to about 3000 peer-reviewed studies that it would review. The studies retained were primarily occupational studies of workers exposed chronically to insecticides and solvents, including studies of Gulf War veterans that specifically examined exposure to insecticides and solvents. Examples of those excluded from review were studies that focused solely on the efficacy of insecticide use in mitigating the effects of insect infestation or that examined pesticide ingestion and suicide. Similarly, studies of occupations with exposure to multiple agents (for example, farmers, agricultural workers) that did not address specific agents were excluded, as were studies of short-term outcomes.

In addition to the above exclusions, it should be noted that animal studies had a limited role in the committee’s assessment of association between the putative agent and health outcome. Animal data were used for making assessments of biologic plausibility in support of the human epidemiologic data rather than as part of the weight-of-evidence to determine the likelihood that an exposure to a specific agent might cause a long-term outcome.

The committee did not collect original data or perform any secondary data analysis. It did, however, calculate confidence intervals, when a study did not provide them, on the basis of the number of subjects, the relative risk or odds ratio, or the p value. Confidence intervals calculated by the committee are identified as such in the health-outcome chapters (Chapters 5–9).

DRAWING CONCLUSIONS ABOUT THE LITERATURE

The committee adopted a policy of using only published, peer-reviewed literature to draw its conclusions. Although the process of peer review by fellow professionals enhances the likelihood that a study has reached valid conclusions, it does not guarantee it. Accordingly, committee members read each study and considered its relevance and quality. The committee classified the evidence of an association between exposure to a specific agent and a specific health outcome into one of five categories. The categories closely resemble those used by several Institute of Medicine (IOM) committees that have evaluated vaccine safety (IOM, 1991, 1994a), herbicides used in Vietnam (IOM, 1994b, 1996, 1999), and indoor pollutants related to asthma (IOM, 2000). Although the first three categories imply a statistical association, the committee’s conclusions are based on the strength and coherence of the findings in the available studies. The conclusions (Chapters 5–9) represent the committee’s collective judgment.

The committee endeavored to express its judgment as clearly and precisely as the available data allowed, and it used the established categories of association from previous IOM studies because they have gained wide acceptance over more than a decade by Congress, government agencies, researchers, and veterans groups. However, inasmuch as each committee member relied on his or her training, expertise, and judgment, the committee’s conclusions have both quantitative and qualitative aspects. In some cases, committee members were unable to agree on the strength of evidence of an association under review; in such instances, if a consensus conclusion could not be reached, the committee agreed to present both points of view in the text.

The five categories describe different levels of association and sound a recurring theme: the validity of an association is likely to vary with the extent to which the authors reduced common sources of error in making inferences—chance variation, bias, and confounding. Accordingly, the criteria for each category express a degree of confidence based on the extent to which sources of error were reduced.

Sufficient Evidence of a Causal Relationship

Evidence from available studies is sufficient to conclude that a causal relationship exists between exposure to a specific agent and a specific health outcome in humans, and the evidence is supported by experimental data. The evidence fulfills the guidelines for

sufficient evidence of an association (below) and satisfies several of the guidelines used to assess causality: strength of association, dose-response relationship, consistency of association, biologic plausibility, and a temporal relationship.

Sufficient Evidence of an Association

Evidence from available studies is sufficient to conclude that there is a positive association. A consistent positive association has been observed between exposure to a specific agent and a specific health outcome in human studies in which chance1 and bias, including confounding, could be ruled out with reasonable confidence. For example, several high-quality studies report consistent positive associations, and the studies are sufficiently free of bias, including adequate control for confounding.

Limited/Suggestive Evidence of an Association

Evidence from available studies suggests an association between exposure to a specific agent and a specific health outcome in human studies, but the body of evidence is limited by the inability to rule out chance and bias, including confounding, with confidence. For example, at least one high-quality2 study reports a positive association that is sufficiently free of bias, including adequate control for confounding. Other corroborating studies provide support for the association, but they were not sufficiently free of bias, including confounding. Alternatively, several studies of less quality show consistent positive associations, and the results are probably not3 due to bias, including confounding.

Inadequate/Insufficient Evidence to Determine Whether an Association Exists

Evidence from available studies is of insufficient quantity, quality, or consistency to permit a conclusion regarding the existence of an association between exposure to a specific agent and a specific health outcome in humans.

Limited/Suggestive Evidence of No Association

Evidence from well-conducted studies is consistent in not showing a positive association between exposure to a specific agent and a specific health outcome after exposure of any

magnitude. A conclusion of no association is inevitably limited to the conditions, magnitudes of exposure, and length of observation in the available studies. The possibility of a very small increase in risk after exposure studied cannot be excluded.

As the committee began its evaluation, neither the existence nor the absence of an association was presumed. Rather, the committee weighed the strengths and weaknesses of the available evidence to reach conclusions in a common format related to the above categories. It should be noted that although causation and association are often used interchangeably, the committee distinguishes between “sufficient evidence of a causal relationship” and “sufficient evidence of an association.” An association can indicate an increase in risk without exposure to the putative agent being the sole or even primary cause.

Epidemiologic studies can establish statistical associations between exposure to specific agents and health effects, and associations are generally estimated by using relative risks or odds ratios. To conclude that an association exists, it is necessary for exposure to an agent to occur with the health outcome more frequently than expected by chance alone. Furthermore, it is almost always necessary to find that the effect occurs consistently in several studies. Epidemiologists seldom consider a single study sufficient to establish an association; rather, it is desirable to replicate the findings in other studies to draw conclusions about the association. Results from separate studies are sometimes conflicting. It is sometimes possible to attribute discordant study results to such characteristics as the soundness of study design, the quality of execution, and the influence of different forms of bias. Studies that result in a statistically precise measure of association suggest that the observed result was unlikely to be due to chance. When the measure of association does not show a statistically precise effect, it is important to consider the size of the sample and whether the study had the power to detect an actual effect.

Study designs differ in their ability to provide valid estimates of an association (Ellwood, 1998). Randomized controlled trials yield the most robust type of evidence, cohort or case-control studies are more susceptible to bias. Cross-sectional studies generally provide a lower level of evidence than cohort and case-control studies. Determining whether a given statistical association rises to the level of causation requires inference (Hill, 1965). To assess explanations other than causality, one must bring together evidence from different studies and apply well-established criteria (which have been refined over more than a century) (Evans, 1976; Hill, 1965; Susser, 1973, 1977, 1988, 1991; Wegman et al., 1997).

By examining numerous epidemiologic studies, the committee addressed the question, “Does the available evidence support a causal relationship or an association between exposure to a specific agent and a health outcome?” An association between a specific agent and a specific health outcome does not mean that exposure to the agent invariably results in the health outcome or that all cases of the health outcome result from exposure to the agent. Such complete correspondence between agent and disease is the exception in large populations (IOM, 1994b).

The committee evaluated the data and based its conclusions on the strength and coherence of the data in the selected studies. The conclusions expressed in Chapters 5–9 represent the committee’s collective judgment. Occasionally, some committee members believed strongly that the literature supported, for example, a conclusion of “limited/suggested evidence of an association” while others concluded that the literature constituted “inadequate/insufficient evidence to determine whether an association exists.” If

a consensus conclusion could not be reached, both points of view are presented and discussed in the chapter, and the committee notes that further research is needed to resolve uncertainty. Each committee member presented and discussed conclusions with the entire committee.

EVALUATING THE LITERATURE

Epidemiology concerns itself with the study of the determinants, frequency, and distribution of disease in human populations. A focus on populations distinguishes epidemiology from other medical disciplines that focus on the individual. Epidemiologic studies examine the relationship between exposures to agents of interest in a studied population and the development of health outcomes, so they can be used to generate hypotheses for study or to test hypotheses posed by investigators. The following subsection describes the different types of epidemiologic studies and discusses the strengths and weaknesses of each.

Epidemiologic Study Designs

Ecologic Studies

In ecologic studies exposure to specific agents and disease are measured in populations as a whole. The data are presented as averages or rates within populations, and multiple populations are examined. For example, the exposure measurement may be the number of acres treated with insecticides or the per capita use of a particular agent. Morbidity or mortality from a specific disease is then mapped to the averages; each point represents a defined population with a specific biologic or chemical exposure and disease incidence. The correlation between the two variables among populations yields information for determining whether there is an association between exposure to the agent under consideration and outcome. Ecologic studies are not suitably designed to estimate risks for individuals. Indeed, associations found at the population level might not reflect associations at the individual level. Furthermore, the use of exposure measures based on per capita use tend to result in underestimation of any association, given that these variables are only proxies for what one would actually like to measure. But ecologic studies are useful for generating hypotheses and identifying agents that require further study. The most important limitation of ecologic studies is the lack of information at the individual level on other variables (confounding variables) that may explain an observed association between agent and disease.

Cross-Sectional Studies

A cross-sectional study provides a snapshot of a specific population at one point or over a short period in time. Exposure to the putative agent and disease are usually measured simultaneously. Information may be collected on numerous health conditions and current or past exposures to various agents. Disease or symptom prevalence between groups exposed or unexposed to a specific agent can be compared, or, conversely, exposure prevalence among groups with and without the disease can be examined. Although useful for generating hypotheses, cross-sectional studies are less appropriate for determining cause-effect

relationships, because disease information and exposure information are collected simultaneously (Monson, 1990), and it may be difficult or impossible to determine the sequence of exposure to the putative agent and symptoms or disease. Such studies are most appropriate for examining the relation of biologic or chemical exposure to characteristics that do not change (such as blood group and race) or biologic or chemical exposure in situations for which current exposure is an adequate proxy of past exposure. A distinguishing feature of a cross-sectional study is that subjects are included without investigator knowledge of their exposure or disease status.

Cohort Studies

In a cohort study, a group of people who are free of the outcome of interest at a particular time are identified and followed for the occurrence of the outcome. At the beginning of followup, information is collected on exposure to a variety of agents; and the frequency of the outcome of interest during followup in those with and without a particular exposure of interest is compared. In each exposure group, the proportion of subjects who develop the outcome is computed (the risk) and the ratio of the risks (the relative risk, RR) in the two comparison groups is computed. Some cohorts (such as occupational groups) are identified on the basis of their exposure profile; in this case, a comparison group of presumably unexposed subjects can be compiled from other sources (such as national morbidity or mortality statistics). In this context, the measure of comparison is the standardized incidence ratio or the standardized mortality ratio, which takes into account differences in the age or sex distribution between the exposed cohort and the comparison group.

Cohort studies may be prospective or retrospective (historical), depending on whether the onset of disease or symptoms has occurred before (retrospective) or after (prospective) the initiation of the study. In a prospective cohort study, the exposure of interest may be present at the time of study initiation, but the outcome is not. In a retrospective cohort study, investigators begin their observation of the study subjects at a point in the past at which all subjects were free of the outcome of interest and recreate the followup to the present. The weakness of such study designs is their inability to measure multiple exposures. Retrospective cohort studies often focus on mortality rather than incidence because of the relative ease of determining the vital status of subjects in the past and the availability of death certificates to determine the cause of death.

The advantages of cohort studies are best demonstrated in circumstances involving rare exposure—because it can be targeted in the population identification (for example, in an occupational cohort)—or multiple outcomes. The strengths of cohort studies, particularly prospective cohort studies, include the ability to demonstrate a temporal sequence between the agent of interest and outcome and the minimization of selection bias at entry, inasmuch as all participants are presumed to be free of disease at baseline. A potential limitation of cohort studies, however, is the loss of study subjects during long periods of followup, which might result in selection bias if those lost to followup have a different exposure-outcome association from those who remain in the study.

Case-Control Studies

In contrast with how subjects are gathered for cohort studies, individuals are recruited into case-control studies on the basis of disease status. Subjects with the disease of

interest are included as cases, and a comparison group, free of the outcome of interest, is selected as controls. A history of exposure to various agents among cases and controls is usually determined through standardized interviews of the participants or through proxies in the case of decedents or people unable to respond for themselves (such as those with cognitive impairment). Cases and controls may be matched with regard to such characteristics as age, sex, ethnicity, and socioeconomic status to balance the distribution of these variables in the two groups. The groups are then compared with respect to whether they have a history of exposure to the agent or characteristic of interest (Hennekens and Buring, 1987). The odds of exposure to the agent among the cases are compared with the odds of exposure to the agent among controls, and an odds ratio (OR) is computed. The case-control study is subject to a variety of forms of bias because disease has already occurred in one group. The biases and strategies to reduce them are discussed later in this chapter.

The case-control study is most useful for studying diseases with a low frequency in the population, in that it is often possible to recruit sufficient cases from a variety of sources. The challenge is the selection of a control group of people who would have been eligible for inclusion as cases if they had developed the disease (that is, those at risk for the outcome). Case-control studies are also useful for studying multiple exposure variables or determinants because the investigators can design data-collection methods (usually questionnaires) to obtain information on different aspects of exposure of the cases and controls. The cases may respond to questions about past biologic or chemical exposures differently from controls because the cases have already developed the disease. For example, they may overreport being exposed to specific agents in an attempt to “explain” their disease or might underreport such exposures. In either case, there is a potential for recall bias in which the tendency to report exposure to specific agents incorrectly is different for cases and controls. Finally, because a case-control study is conducted after a disease has occurred, special care has to be taken in assessing exposure to ensure that only exposures to the agent under consideration that occurred before onset of the disease are counted as being relevant to the question of etiology.

If living cases are not available, some case-control studies, called mortality odds ratio studies, use death certificates to determine both disease status and exposure. A person’s “usual” or “last occupation” is often recorded on a death certificate and can be used to infer exposure. However, this information is often inaccurate or incomplete.

A more sophisticated variation on the classical case-control study is used increasingly—the nested case-control study. This form involves a sampling strategy whereby a case-control sampling takes place within an assembled cohort. In a nested case-control study, a cohort is identified and followed for the occurrence of the outcome of interest. Whenever a case of the outcome of interest is identified, a sample of the cohort who have not developed the outcome by that time are selected as controls. Information on exposure is then collected from both the cases and the selected controls. In some nested case-control studies, the cohort is assembled in such a way that information on exposure is collected on all subjects at baseline before disease occurrence (for example, blood samples are taken and stored). The advantage of the nested case-control design is that the most appropriate control group is chosen from members of the same cohort who have not developed the outcome at the time that they are chosen. In addition, exposures to the agent

of interest need to be analyzed only for the cases and the selected controls rather than for the entire cohort, and this saves time and money.

Experimental Studies

Experimental studies in humans are the most reliable means of establishing causal associations between exposure to an agent of interest and human health outcomes. Key features of experimental studies are their prospective design, their use of a control group, and their random allocation of exposure to the agent under study. The randomized controlled trial is considered the most informative type of epidemiologic research design for the study of medications, surgical practices, biologic products, vaccines, and preventive interventions. The main drawbacks of randomized controlled trials are their expense, the time needed for completion, and the common practice of systematically excluding many groups of individuals, which limits the conclusions to a relatively small and homogeneous subgroup. Randomized trials are virtually non-existent as a means of determining the health risks of insecticides and solvents.

Measures of Association

The relationships that are examined in each type of epidemiologic study are quantified with statistical measures of association. In cohort studies, the measure of association between exposure to the agent of interest and outcome is the relative risk (RR), computed by dividing the risk or rate of developing the disease or condition over the followup period in the exposed group by the risk or rate in the unexposed group. An relative risk greater than 1 suggests that exposed subjects are more likely to develop the outcome than unexposed subjects, that is, it suggests a positive association between exposure to the putative agent and the disease. Conversely, a relative risk of less than 1 indicates that the agent might protect against the occurrence of the disease. A relative risk close to 1 indicates that there is little appreciable difference in risk (rates) and that there is little evidence of an association between the agent and the outcome.

In occupational cohort mortality studies, risk estimates are often standardized for comparison to general population mortality rates (by age, sex, race, time, and cause) because it can be difficult to identify a suitable control group of unexposed workers. The observed number of deaths among workers (from a specific cause, such as lung cancer) is compared with the expected number of deaths in an identified population, such as the general US population, accounting for age, sex, and calendar year. The ratio of observed to expected deaths produces a standardized mortality ratio (SMR). The SMR is usually a good estimator of relative risk; an SMR greater than 1 generally suggests an increased risk of dying in the exposed group. Less common, but identical in calculation, is a standardized incidence ratio (SIR). Incidence is a measure of new cases of a disease; mortality is the number of reported deaths. SIRs are calculated less often than SMRs, because disease incidence, as an end point, is often more difficult than death for investigators to identify and follow up. For disease mortality, death certificates are often used; they are easier to obtain than registry data that would indicate the incidence of a disease.

A proportionate mortality ratio (PMR) study relates the proportions of deaths from a specific cause in a specified time period between exposed and nonexposed subjects. That makes it possible to determine whether there is an excess or deficit of deaths from that cause

in the exposed population. PMR studies can be misleading because their study design assumes that deaths from other causes are unrelated to the exposure of interest. The problem can be mitigated by selecting control causes of death on the basis of an assumed lack of association with exposure. The lack of direct measures at the individual level is an additional limitation of this study design. Unlike an SMR study that requires knowledge of the specific age, sex, race, or time within the exposed population at each stratum, a PMR study requires knowledge only of the proportion of cause-specific deaths in each stratum. Other complications involve the assignment of exposure to deceased subjects and a lack of temporal sequence.

In case-control studies, in contrast with cohort studies, it is not possible to estimate the risk of disease in each of the exposure groups because the number of subjects with the disease (the cases) is selected by the investigator. What can be computed, however, are the odds of exposure to the agent of interest in the cases and the odds of exposure to the agent in the controls. The ratio between those two quantities is an odds ratio (OR) that can be interpreted in much the same way as the relative risk. An odds ratio of 1 indicates no evidence of an association, odds ratio greater than 1 indicates the possibility of an increased risk, and an odds ratio less than 1 suggests a protective association. It can be shown mathematically that the odds ratio based on the odds of exposure to the putative agent in the cases and in the controls is identical with an odds ratio computed as the ratio of the odds of disease in the exposed group to the odds of exposure in the nondiseased group; this further justifies the use as an approximation to relative risk. The approximation is best when the prevalence of disease in the population is relatively low—precisely the situation in which a case-control study is used.

Ecologic studies use a different measure of association between exposure to the putative agent and disease. These studies map exposure averages to outcome rates by each population included. The measure of association, known as the correlation coefficient (r), estimates the degree to which the exposure to the agent of concern is related to outcome in a linear fashion. An r value can range from −1 to +1 and indicate both the strength and the direction of the association. A positive value close to 1 indicates a strong relation of outcome to exposure; a negative value close to −1 signifies a strong inverse relation. Values close to 0 indicate little or no relation between exposure and outcome. A key assumption is that the relation is linear. If the true relation between exposure and outcome is not linear but can be described by another curve (for example quadratic), the correlation coefficient will not be the most appropriate measure of the association. Graphic assessment of the relation is helpful in this regard.

Assessing the Validity of Findings

As described above, the goal of observational epidemiologic studies is to examine associations between exposures to particular agents and health outcomes in a population. However, the variability of individual experiences and the complexities involved in conducting studies in human populations, as opposed to the controlled laboratory setting, make it important to consider whether explanations other than causality might account for an observed association.

Assessing the Effect of Chance

Statistical analysis provides a means to assess the degree to which an observed measure of association (such as RR, OR, SMR, SIR, and PMR) derived from a study reflects a true association. Using statistical analysis, one can assess the probability (sometimes called the p value) that an association as large or larger than the one actually observed could have been observed even if no true association exists, that is, could have arisen by chance. The magnitude of the p value is used as an aid to researchers in interpreting the results of a study. Typically, a p value of less than 0.05 is taken to be indicative that such a result would be “unlikely” if no true association existed and consequently provides evidence of a real association. A relative risk close to 1 indicates that there is little appreciable difference in risk (rates) and that there is little evidence of an association between the exposure and the outcome. In statistical terminology, a result is said to be “statistically significant” if the p value is smaller than 0.05. Lower (more stringent) p values may be used when multiple comparisons are being made. It is important to note that this preset value is arbitrary and is influenced not only by the size of the association but also by the size of the study sample. For example, if the sample is very large, even associations that are very small may be found to be “statistically significant.” In contrast, a large association observed in a study with very few subjects might not be “statistically significant,” primarily because of the sample size. Thus, in interpreting the results of statistical tests, it is critical to take into account not only the magnitude of the observed effect but also the size of the study sample. As a result, the committee decided not to rely on p values when evaluating the role of chance and did not identify studies as being “statistically significant.” Instead, the committee focused on confidence intervals (CIs) as a more appropriate measure for assessing the association of interest.

A CI is the most likely range of values of the association in question and is based on the observed value of the association, its estimated variability if the study were to be repeated many times, and a specified “level of confidence.” The confidence attached to the interval is actually in terms of the approach that is used rather than in the results themselves. Typically 95% CIs are presented. Thus, one interprets a 95% CI to mean that if the study were replicated 100 times (that is, if 100 samples were chosen from the same population, an association were measured, and a CI were constructed), 95 of the 100 CIs would contain the true value of the association. The width of the CI is influenced by the variability in the study data and by the sample size. Greater variability will increase the range, and increasing the sample size will result in a smaller range. CIs are the most appropriate way of presenting the results of epidemiologic studies because both the magnitude of the association and an assessment of the variability of the findings are provided.

Assessing the Effect of Bias

Various types of bias that are inherent in such studies may compromise the validity of epidemiologic study results. The biases may arise as a result of the choice of study subjects or of the way information on exposure or outcome is assessed. It is important that issues of bias be carefully examined in any review of evidence. In evaluating published studies, the committee considered the likelihood of bias and the possible magnitude and direction of effect of bias on the results.

Bias is a systematic error in the estimation of association between an exposure and an outcome that can result in deviation of the observed value from the true value. Bias can

produce an underestimation or an overestimation of the magnitude of an association. Epidemiologic studies are subject to a variety of biases, and the primary challenge is to design a study as free of bias as possible. Bias may occur even if the utmost care is taken in designing a study. Researchers aware of the potential for bias can take steps to control it in the statistical analysis or interpretation of observed results. There are three main types of bias in epidemiologic research: selection bias, information (or misclassification) bias, and bias due to confounding.

Selection Bias

Selection bias can occur in the recruitment of study subjects to a cohort. For example, in a retrospective cohort study, when the exposed and unexposed groups are selected differentially on outcome, the assembled cohort can differ from the target population with respect to the association between exposure to the agent under study and disease outcome. In cohort studies of industrial populations, selection bias can operate at the time of entry or during followup. Furthermore, comparison of rates in the general population with rates in occupationally exposed cohorts may be subject to what is called the healthy-worker effect. This arises when a population that is healthy enough to be employed experiences lower mortality than the general population, which comprises healthy and unhealthy people. The healthy-worker effect can result in an underestimation of the strength of an association between exposure to an agent and some effect or outcome by failing to compare populations with similar levels of health. To balance the influence of the healthy-worker effect, some investigators divide worker populations into groups based on their levels of exposure to the agent being studied. Comparisons are then made within the cohort, thereby minimizing the healthy-worker effect.

Selection bias is of particular concern in case-control studies because the selection of study subjects is based on disease status. If exposed people were more likely to agree to participate than unexposed people, the study would produce an overestimation of the association in question—unless such selection is also present in the controls. In case-control studies, strategies to reduce the effect of selection bias generally concern the choice of control group.

Selection bias can also influence the results of a study through the pattern of missing data. If data are missing (because of a lack of response by subjects or because of errors in coding) in a way that is related to both the exposure of interest and the outcome, selection bias can occur.

Information Bias

Information bias (also known as misclassification bias) can occur when there are errors in data-collection methods, such as imprecise measurement of exposure or outcome. This is of concern, for example, when death certificates are used as a source of information on causes of death that are not verified. Inaccurate coding can create unknown error. The error may be uniform across the entire study population, in which case it may tend to reduce the apparent magnitude of associations (“bias toward the null”), or it may show up differently between study groups. Information bias in relation to the outcome can result from incorrect assignment of study subjects with respect to the outcome variable; a case may be misclassified as a control, or a member of a cohort during followup who develops disease will not be diagnosed, or vice versa. Such errors in classification of disease status are of

particular concern if the error in classification is also related to the exposure status of the subject. For instance, if smokers are more likely to be diagnosed with emphysema than are nonsmokers with the same symptoms, the observed association between smoking and emphysema will be overestimated. However, misclassification of disease that is not related to exposure generally results in an underestimation of effect.

A number of factors may contribute to information bias in relation to exposure to the putative agent, including poorly designed questionnaires to determine actual exposures, differential recall of exposure between cases and controls, and differences in the interview process. Recall bias is of special concern in case-control studies: a differential likelihood of exposure reporting between study groups can be related to disease status rather than to the actual biologic or chemical exposure. The assumption is that cases would be more likely to report exposure to specific agents and to report it more fully. For example, a person with a chronic disease may be more likely than a healthy participant to report exposure to agents when queried in an attempt to determine the cause of the condition. Interviewer bias occurs, for example, when a questionnaire administrator is aware of a subject’s disease status and inadvertently or intentionally solicits a particular response because of that knowledge.

Confounding

Confounding can occur when a variable related to the exposure and a risk factor for the outcome accounts for all or part of an observed exposure-outcome association. For example, if exposure to a given solvent appears to be associated with the development of lung cancer, cigarette smoking may confound this association if study subjects exposed to the solvent are more likely to have smoked than those not exposed. In this case, the observed relationship between exposure to solvents and lung cancer is said to be “confounded by” or “explained by” smoking. The effect of confounding can be minimized through study design (restriction or matching) or taken into account in the statistical analysis. In this example, restriction of the study subjects to nonsmokers would eliminate the possible confounding effect of smoking, although such a strategy may limit the generalizability of the findings. An alternative approach, most typically used in case-control studies, is to match each case to a control that has the same value of the confounding variable. For example, if age was suspected of confounding the relationship between exposure to insecticides and Parkinson’s disease, investigators planning a case-control study might choose to match each case of Parkinson’s disease to a control of the same age or within the same age range. Statistical techniques also are often used to reduce bias from known confounding factors.

Unmeasured Confounding in Cohort Studies. In cohort studies, it is common for mortality or incidence rates to be compared to rates in the general population and because of logistic difficulties there are often no measurements of confounding factors, such as smoking, available within the cohort. Comparisons of rates could be biased if there are large discrepancies in the prevalence of the risk factors in the cohort and in the general population. Methods have been developed (for example, Axelson, 1978) to estimate whether a confounding factor can explain the entire relative risk that has been observed in the cohort.

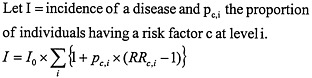

The general calculation is as follows: assume that there is no exposure effect in the cohort (i.e., exposure to solvents is not associated with the incidence of lung cancer). Let I represent the incidence rate in the cohort of the disease under study, let I0 represent the

incidence in the general population, let pc represent the proportion of subjects in the cohort having the risk factor “c,” and RRc is the relative risk of the confounder.

Under the assumptions of no association with exposure and no synergy (the relative risks of the confounder are independent of exposure), the total incidence in the cohort is:

(1)

The confounding relative risk (i.e., the relative risk due solely to the confounder that may be attributed mistakenly as being due to the exposure) is then the incidence in the cohort (I) divided by the incidence in the general population (Ig) (i.e., RR=I/Ig). A fundamental assumption is that only the confounder of interest increases the incidence rate above baseline (I0).

This relationship can be generalized for risk factors that have many levels (e.g., moderate and heavy smokers), as follows:

(2)

For the purposes of illustration, let us take the example of lung cancer and smoking. In a cohort of workers exposed occupationally to solvents, assume that the observed standardized incidence rate (SIR) for lung cancer is two, but the prevalence of smoking in the cohort was not taken into account in the calculation. The question is: could this association be due to a higher rate of smoking among workers in the plant? No definitive answer can be given, however, equation (2) can be used with some realistic values of the proportion of workers smoking at varying intensities and the relative risk of developing lung cancer to obtain a series of expected relative risks under the assumption that there is no effect of exposure.

For example, assume that there is a cohort of subjects exposed to organic solvents and the end point is incidence from lung cancer. Assume further that:

-

There is no association between lung cancer and exposure to organic solvents and only smoking increases the baseline incidence rate.

-

The relative risks for smoking are assumed to be:

-

RRc for moderate smokers=10

-

RRc for heavy smokers=20

-

Table E.1 (Appendix E) shows detailed results under certain assumptions of the prevalence of smoking in the general population and in the occupational cohort. Some of the scenarios are rather implausible, for example, assuming that 70% of the population are heavy smokers. In cases where the prevalence of smoking in the general population exceeds that of the cohort, the expected relative risks are less than unity, meaning that an observed excess relative risk in the cohort would be underestimated because of confounding by smoking. Expected relative risks greater than unity, as would occur when there are more and heavier smokers in the occupational cohort, are interpreted as meaning that some of an observed effect could be explained by smoking. In the instance where all cohort members smoke heavily, the maximum relative risk that could be observed is 3.1. In general, the table shows

that one requires extreme differential smoking patterns to explain observed relative risks above 1.5.

Exposure Assessment

Exposure assessment is important when considering the validity of findings because many epidemiologic studies use crude measurements in determining actual exposure experience. Working in a particular occupation, for example, is often used as a surrogate for exposure to a specific agent. Results of community-based air or water sampling also are often used as indicators of individual exposure to specific agents. For many biologic or chemical exposures, a personal history is a critical part of the assessment, but it is difficult to obtain retrospectively and can be obtained prospectively only with extraordinary effort (Rothman, 1993). The major difficulty that epidemiologists face is that there usually are at best limited records of area samples and only rarely any individual-specific exposure information. Consequently, the estimation of past exposure relies on indirect methods. The major result of this type of industrial hygiene investigation is that the estimates will be misclassified, which, if it is independent of outcome, will lead to attenuated estimates of relative risk. For current exposures, which are relevant for cross-sectional studies of prevalence of disease, making precise measurements is expensive but usually feasible.

Although we describe here the difficulty of assessing occupational exposures to organic solvents, the discussion pertains equally to many occupational exposures, including exposure to insecticides. Because exposure estimation is complex, we cover here only some of the major points that influence the interpretation of results of epidemiologic studies4. The discussion highlights many of the challenges that the committee members faced as they assessed a study’s findings and drew conclusions about the strength of a reported association between a specific agent and a health outcome.

The methods used to assess occupational exposures to organic solvents depend on whether the interest is in current or past solvent exposure. In studies of cancer and other chronic diseases, it is usually assumed that exposures to substances in the distant past—for example, 10–20 years before the onset of disease—are the etiologically relevant ones. That is not always the case; for example, increasing time since last exposure to carbon disulfide appears to lower the risk of ischemic heart disease associated with it (Sweetnam et al., 1987). In cross-sectional studies, the interest is often in more recent or current exposures, although historical exposures might also be of interest in these studies.

Numerous methods are available for measuring current exposure, and these vary in complexity with the setting, the agent, and other factors. The selection of a method usually involves considerations of feasibility and of the balance between accuracy (validity and reliability) and cost. In increasing order of complexity and cost are methods that qualitatively provide estimates of magnitudes of exposure (for example, industrial hygienists familiar with the workplace use rank-ordered scales to attribute level of exposure on the basis of job title or other information related to tasks), methods that measure ambient concentrations in a plant or in specific areas, and methods that measure personal exposures (including biologic monitoring). The quantitative measurements can be coupled with other biologic values, such as breathing rates, and the use of toxicokinetics can be of value in

estimating a dose to a target tissue, such as solvent concentration in the liver. In the case of solvents, one must remember that inhalation may be supplemented by other routes of exposure, such as dermal uptake and ingestion. Metabolites formed when the body attempts to detoxify itself might be more toxic than exposure to the solvent itself. There is usually considerable individual variability, so multiple measurements on a representative sample of workers, allowing for changes in frequency and intensity of exposure, need to be taken to arrive at accurate estimates. Biologic markers of exposure (for example, in blood, urine, or hair samples) can be useful for some solvents, but they might not accurately reflect exposure to solvents that are rapidly absorbed and excreted (Rosenberg et al., 1997).

Estimating historical exposures is very difficult. One must distinguish between different study designs; the main ones are retrospective cohort studies and population-based case-control studies. In cohort studies for which no exposure assessments have been conducted, the comparison is between rates of disease among workers in a plant, for example, and general population rates. Unless exposures are relatively homogeneous throughout the cohort, this method will provide inaccurate results. Investigating mortality patterns by department or job title can augment this study design, but again exposure measures can be highly misclassified if there is heterogeneity of exposure. Another type of cohort study is based on census records; one uses the census to identify individuals in different occupations at a given time and then follows them to determine mortality or morbidity rates. This study design can entail serious misclassification related to exposure heterogeneity and changes in jobs (and types of exposures) through the followup period. In a mortality odds-ratio study, a type of case-control study, death certificates are used to identify cases and controls, with the occupation recorded on the death certificate used as a surrogate of exposure. The occupation may be the last occupation or the “usual” occupation of a subject. Again, this can be highly inaccurate for the reasons cited above, and it can be especially misleading if workers change jobs as a result of illness.

For cohort studies in which investigators have access to the workplace facilities, a number of methods are available (Dosemeci et al., 1990; Stewart et al., 1986). When no actual measurements are available, the “gold standard” is as follows:

-

An industrial-hygiene “walk-through” survey to enumerate current jobs and departments; to describe tasks, working conditions, processes, machines, and tools; and to catalog relevant chemical and physical agents.

-

A detailed campaign of measurement of relevant biologic and chemical exposures.

-

Characterization of historical tasks and chemicals used in departments and in jobs on the basis of estimates of material use, plant production records, hygiene controls and personal protective equipment, job and task description sheets, job and task safety analyses, process descriptions, engineering data on agents used and produced in the various industrial processes, and consultations with company engineers and other knowledgeable employees.

During the walk-through survey, additional information regarding exposure to each agent can be noted, including frequency of exposure, a subjective judgment of intensity of exposure, personal protective equipment used, and environmental controls used (such as exhaust fans). For departments no longer in operation, engineers and other knowledgeable company personnel can be interviewed and information similar to that obtained in the walk-through survey can be recorded.

Because details of each subject’s actual job tasks would generally not be known, although descriptions of departments and jobs by calendar year would usually be available from company records, the attribution of exposure would be based on an assessment of these data by experts—usually engineers, industrial hygienists, and chemists. A job-exposure matrix, which is essentially a set of rules that transforms a job to a set of indexes of exposure to specific agents, can be developed. The rows can represent jobs, and the columns exposures. Each cell of the matrix can contain an exposure index that is assigned in advance by the team; these indices can include estimates of the probability that exposure occurred, and average frequencies and intensities of exposure. Depending on the data, the latter can be coded on quantitative or qualitative scales. From this, one can assign to each worker various indexes of exposure to the agents under consideration. Standard cohort analyses (Breslow and Day, 1987) can then be used to estimate relative risk.

The above description represents the gold standard by which exposures to various agents are attributed in retrospective cohort studies. Prospective cohort studies can use the same approach but accuracy can be improved if a detailed measurement program is included. Such studies are rare and expensive.

In population-based case-control studies, a variety of methods have been used. The gold standard is now considered to be the method developed by Siemiatycki and his collaborators in Montreal (Gérin et al., 1985; Siemiatycki, 1991), as improved by Stewart and Stewart (1994a,b). When experienced chemical coders and interviewers are available, this procedure is thought to be superior to others (Bouyer and Hemon, 1993; Stewart and Stewart, 1994a,b), including the use of job titles and exposure information reported directly by subjects (Blair and Zahm, 1993; Bond et al., 1988; Joffe, 1992) and job-exposure matrices (Coggon et al., 1984; Dewar et al., 1991; Dosemeci et al., 1990; Hinds et al., 1985; Kromhout et al., 1992; Magnani et al., 1987; Olsen, 1988; Siemiatycki et al., 1989). Briefly, the method consists of using a structured interview to obtain details of every job that each subject held. The list is provided to a team of experienced chemists, industrial hygienists, and other experts who are familiar with the industries and jobs under consideration from their own personal experience and from in-depth reviews of the hygiene and industrial-process literature. The team then attributes exposure to a specified list of chemicals using rank-ordered scales for frequency, intensity, and reliability of their assessment. The scales can then be used in standard case-control analyses (Breslow and Day, 1980) to obtain estimates of relative risk by exposure, usually adjusted for other factors that are associated with risk.

Thus, numerous methods can be used to assess exposures in occupational settings. Epidemiologists must determine the reliability and accuracy of the methods as they interpret a study’s results. Similarly, the committee members had to determine the validity of the methods as they weighed the evidence and drew conclusions about the literature.

Health Outcome Assessment

In addition to assessing the available information on the agent in question, the committee paid careful attention to the measurement of health outcomes. As is the case for exposure assessment, detailed and accurate information on the particular health outcome being examined leads to increased precision and increases the probability of detecting a true association between agent and a health outcome. In general, the disease or other health outcome should be defined as clearly as possible by using standard criteria and should be

verified. Misclassification of a disease outcome can result in biased findings in an observational study, either in the inability to find an association that exists or in the reporting of a spurious association.

Information on a health outcome can be obtained in many ways in observational studies, including direct questioning of subjects about symptoms before diagnosis, clinical examination of subjects, medical-record review, and access to vital-statistics registries to determine diagnoses or causes of death of study subjects (Rothman and Greenland, 1998). There are a number of issues related to the use of each of those methods, including the reliability of self-reported symptoms, the accuracy of medical records, and variability in the degree to which death certificates correctly specify causes of death. Some studies include independent verification of diagnosis through pathologic confirmation. That reduces the probability of misclassification of outcome; but pathologic confirmation usually is not an option, and the use of death certificates continues to be the most common method of ascertaining cause of death. Diagnostic criteria and uniformity of disease coding have improved, and research has shown that cancer is coded accurately close to 82% of the time (Hoel et al., 1993). However, for cancers with low fatality rates, cancer-registry data should be used because of the potential for bias due to differential access to treatment. Improvements in reporting of death due to some specific cancers have led to apparent increases in the rates of cause-specific deaths that are probably artifacts of improved methods of diagnosis and reporting. But for other diseases—such as cardiovascular, respiratory, and neurologic disorders—death certificates generally are poorly coded.

Latency Period and Fatality Rate

Observational epidemiologic studies of diseases with long latency or high fatality rates, such as cancer, present a unique combination of challenges because of the difficulty of identifying all cases. The period between first exposure to the putative agent and disease onset is known as the latency period, during which cohort members may be lost to followup. In most instances, the latency period for cancer is believed to be long—5–20 years or longer—and probably depends not only on the type of disease but also on the concentration, duration, and intensity of the exposure and other factors, such as genetic predisposition. Few prospective studies are conducted on cancer outcomes, because of the long period of time before disease onset and the resulting costs. Instead, retrospective studies (either retrospective cohort studies or case-control studies) are conducted to examine the relationship between an agent that occurred in the past and the development of cancer.

Health outcomes, such as certain cancers, that have relatively high case-fatality rates reduce the number of living subjects available for inclusion in a study. Many studies therefore rely on mortality data rather than incidence data. However, the use of mortality data for health outcomes with lower fatality rates must be evaluated differently from those that still have relatively high mortality rates. The fact that exposed populations may have different access to medical care and consequently different survival rates may also bias the study results. In particular, there might be differences in early identification and treatment between exposed and nonexposed members of the population being studied.

THE NATURE AND VALUE OF EXPERIMENTAL EVIDENCE

Scientific evidence regarding the potential for chemical substances to cause adverse health effects comes from studies in human populations (epidemiologic investigations) and from various types of laboratory (experimental) studies. The adverse health effects related to exposure to insecticides and solvents used during the Gulf War have been investigated both epidemiologically and experimentally in animals. Given its charge, the committee used primarily epidemiologic evidence to draw its conclusions of association. Toxicologic information provided additional information on plausibility and mechanism of toxicity, especially when making a conclusion of causality.

For most chemical substances, including the subjects of this report, far more data on the adverse health consequences of exposure can be found in the experimental literature than in the epidemiologic literature. The most telling and useful experimental data come from studies in experimental animals—typically rodents but also other species. In addition, much information is acquired from studies in isolated cells and other biologic systems (in vitro studies).

The use of experimental animals to study the adverse health effects of chemical substances began in the 1920s and evolved slowly until the 1960s, when the science of toxicology began to assume many of its modern attributes. In the last 30–40 years, experimental toxicology has provided a major source of information on the toxic hazards associated with exposures to industrial products and byproducts in air, water, food, soils, consumer products, and the workplace. This evolution in the role of experimental evidence came about, in part, because of increases in scientific understanding of how laboratory animals and other biologic systems could be used to provide reliable toxicity information.

In the early years of the discipline, studies tended to be relatively crude and were often limited to investigations of the consequences of a single large dose of a chemical (acute exposure), usually with the goal of defining lethal doses. Gradually, toxicologists added studies involving repeated daily doses; the earliest typically involved 90 days of exposure in rats and mice (a subchronic exposure, still a valuable source of data). In the late 1940s, some toxicologists developed and implemented chronic protocols, which were needed to detect slowly developing carcinogenic effects. Continued research provided the means to develop the specialized protocols used to study the effects of chemical exposure on the reproductive system and on the developing fetus. In more recent years, protocols related to the investigation of adverse neurologic and immunologic effects have been added to the list of reliable toxicologic methods. Much attention is now focused on studies of the behavior of chemicals in the body (toxicokinetic studies) and other types of investigations, designed to provide detailed understanding of the specific molecular and cellular events underlying the production of specific toxicity results. It is thought that mechanistic understanding will make the use of experimental data easier and more reliable for predicting health outcomes in humans.

Toxicologic studies have several important advantages over epidemiologic studies. Animal studies can provide data on toxic effects before the introduction of a chemical into commerce, and results from those studies can be used to establish limits on human exposure to avoid toxicity risks. Indeed, several federal laws require such premarket testing, with regulatory agency review to ensure the adequacy of the data and to establish safety limits. Epidemiologic studies can provide data only after human exposure has occurred. For

diseases with long latency periods, such as cancer, meaningful results can be obtained only many years, or even decades, after human exposures began. In contrast, carcinogens can be identified in 2-year rodent studies.

It is possible to conduct well-controlled multidose experiments in animals so that quantitatively accurate dose-risk relationships can be obtained and causality firmly established. Two goals that are extremely difficult to achieve with epidemiologic studies. It is also possible to investigate a much wider range of adverse health effects in animals: at the end of the dosing period, for example, it is possible to undertake a complete pathologic examination of every organ and tissue of an animal’s body. Such an examination cannot be undertaken in humans.

It is also possible to study the toxicity of any specific chemical in experimental animals. Epidemiologists are rarely able to identify human populations exposed to a single chemical of interest, so their work is often limited to groups of chemicals, sometimes poorly defined. That problem is prominent in the committee’s reviews of epidemiologic studies of insecticides and solvents.

Animal studies, however, are also limited in several ways. It is generally not possible, for example, to evaluate subjective symptoms (such as headaches, joint aches, dizziness, and feelings of dejection) that are commonly reported by veterans of the Gulf War. (Even current clinical science is challenged when asked to study such phenomena; see Chapter 7.)

The most important limitation of animal studies is related to the simple and obvious fact that rodents, dogs, and even nonhuman primates are not human beings. It is well documented that some responses to toxic chemicals are similar among several species of animals, but it is equally well documented that others vary among species. The general similarity in biologic structures and functions in all mammalian species, including humans, tends to support the use of data from animal models to predict, with reasonably high certainty, adverse effects in humans. But prediction that an adverse effect observed in animals will also occur in sufficiently exposed humans is far more certain than prediction of the magnitude of the dose necessary to produce that effect in humans. That is, extrapolation of qualitative findings between species is more certain than extrapolation of quantitative findings.

A fairly large body of evidence supports the use of animal toxicity results to predict effects in humans. But there are still substantial uncertainties regarding the interpretation and predictive value of animal data. It is well documented that substances that have been identified through epidemiologic studies as human carcinogens (benzene, vinyl chloride, and many others) are also carcinogenic in rodents, but there is not always a good correspondence between the types of cancer observed in humans and those seen in rodents. It might be justified to conclude that animal carcinogens are highly likely to be human carcinogens, but it would be difficult to justify prediction of the type of human cancer expected. That is because animals lack the specificity needed for this study. While it might be adequate from the standpoint of a regulator to conclude that an agent can be classified as a carcinogen, this review requires linkage of that agent to a particular cancer site. But known human carcinogens may produce tumors in the same or in different sites in animals. For example, vinyl chloride, which is known to cause angiosarcoma of the liver in humans, causes zymbal gland tumors in male and female rats (along with a number of other cancer types including angiosarcoma). Humans do not have zymbal glands (NTP, 2002). Other uncertainties restrict

our ability to draw inferences for human health from animal data. Where the available evidence supports the drawing of such inferences, however, failure to do so could lead to a mischaracterization of the human health effects of chemical exposures.

Notwithstanding the limitations in the predictive value of animal data, regulatory agencies, for the reasons described above, rely heavily on such data in assessing risks to humans and in establishing regulatory standards. There is, however, an element of a policy of caution in the regulatory uses of animal data, particularly when the scientific basis of extrapolation of specific types of animal results to humans is not well established. In the absence of adequate or convincing human evidence, regulatory officials act on the basis of animal findings to avoid neglecting a potential risk to humans. Most laws enforced by regulatory agencies permit the agencies wide latitude in the choice of data used to prevent future disease or injury. In the present case, however, the goal is not prevention of risk, but rather the use of the best available data to categorize evidence for a relationship between a chemical exposure and the occurrence of an adverse health outcome in humans. Here, precautionary policies have no substantial role (at least not the same way that they have in regulation). Therefore, studies in human populations played the dominant role for the committee in identifying the relevant associations. Experimental evidence may or may not provide support for epidemiologic conclusions.

Many of the chemicals that are discussed in this report have been subjected to extensive experimental toxicity studies, and all have been the subject of some level of study. A complete summary of all the experimental data available on all the solvents and pesticides or insecticides under review would fill volumes. Given the small role of such studies in this report in the categorization of evidence, such a detailed review would serve no purpose. Instead, the report provides only a broad picture of the most important experimental-toxicity data available in reliable secondary sources (Chapters 3 and 4). Relevant experimental data are discussed, however, in those chapters reaching conclusions of “sufficient evidence of a causal association” to support that categorization.

REFERENCES

Axelson O. 1978. Aspects on confounding in occupational health epidemiology [letter]. Scandinavian Journal of Work, Environment and Health 4(1):98–102.

Blair A, Zahm SH. 1993. Patterns of pesticide use among farmers: Implications for epidemiologic research. Epidemiology 4(1):55–62.

Bond GG, Bodner KM, Sobel W, Shellenberger RJ, Flores GH. 1988. Validation of work histories obtained from interviews. American Journal of Epidemiology 128(2):343–351.

Bouyer J, Hemon D. 1993. Studying performance of a job exposure matrix. International Journal of Epidemiology 22(Suppl 2):S65–S71.

Breslow NE, Day NE. 1980. Statistical Methods in Cancer Research, Vol 1: The Analysis of Case-Control Studies. Lyon, France: IARC Scientific Publications (32)5–338.

Breslow NE, Day NE. 1987. Statistical Methods in Cancer Research, Vol 2: The Design and Analysis of Cohort Studies. Lyon, France: IARC Scientific Publications (82):1–406.

Coggon D, Pannett B, Acheson ED. 1984. Use of a job-exposure matrix in an occupational analysis of lung and bladder cancers on the basis of death certificates. Journal of the National Cancer Institute 72(1):61–65.

Dewar R, Siemiatycki J, Gérin M. 1991. Loss of statistical power associated with the use of a job-exposure matrix in occupational case-control studies. Applied Occupational and Environmental Hygiene 6(6):508–515.

Dosemeci M, Stewart PA, Blair A. 1990. Three proposals for retrospective, semiquantitative exposure assessments and their comparison with the other assessment methods. Applied Occupational and Environmental Hygiene 5(1):52–59.

Ellwood JM. 1998. Critical Appraisal of Epidemiological Studies and Clinical Trials. 2nd ed. Oxford: Oxford University Press.

Evans AS. 1976. Causation and disease: The Henle-Koch postulates revisited. Yale Journal of Biology and Medicine 49(2):175–195.

Gérin M, Siemiatycki J, Kemper H, Begin D. 1985. Obtaining occupational exposure histories in epidemiologic case-control studies. Journal of Occupational Medicine 27(6):420–426.

Hennekens CH, During JE. 1987. Epidemiology in Medicine. Boston: Little, Brown, and Company.

Hill AB. 1965. The environment and disease: Association or causation? Proceedings of the Royal Society of Medicine 58:295–300.

Hinds MW, Kolonel LN, Lee J. 1985. Application of a job-exposure matrix to a case-control study of lung cancer. Journal of the National Cancer Institute 75(2):193–197.

Hoel DG, Ron E, Carter R, Mabuchi K. 1993. Influence of death certificate errors on cancer mortality trends. Journal of the National Cancer Institute 85(13):1063–1068.

IOM (Institute of Medicine). 1991. Adverse Effects of Pertussis and Rubella Vaccines. Washington, DC: National Academy Press.

IOM. 1994a. Adverse Events Associated with Childhood Vaccines: Evidence Bearing on Causality. Washington, DC: National Academy Press.

IOM. 1994b. Veterans and Agent Orange: Health Effects of Herbicides Used in Vietnam. Washington, DC: National Academy Press.

IOM. 1996. Veterans and Agent Orange: Update 1996. Washington, DC: National Academy Press.

IOM. 1999. Veterans and Agent Orange: Update 1998. Washington, DC: National Academy Press.

IOM. 2000. Clearing the Air: Asthma and Indoor Air Exposures. Washington, DC: National Academy Press.

Joffe M. 1992. Validity of exposure data derived from a structured questionnaire. American Journal of Epidemiology 135(5):564–570.

Kromhout H, Heedrik D, Dalerup LM, Kromhout D. 1992. Performance of two general job-exposure matrices in a study of lung cancer morbidity in the Zutphen cohort. American Journal of Epidemiology 136(6):698–711.

Magnani C, Coggon D, Osmond C, Acheson ED. 1987. Occupation and five cancers: A case-control study using death-certificates. British Journal of Industrial Medicine 44(11):769–776.

Monson RR. 1990. Occupational Epidemiology. 2nd ed. Boca Raton, FL: CRC Press, Inc.

NRC (National Research Council). 1991. Human Exposure Assessment for Airborne Pollutants: Advances and Opportunities. Washington, DC: National Academy Press.

NTP (National Toxicology Program). 2002. 10th Report of Carcinogens. Research Triangle Park, NC: NTP.

Olsen J. 1988. Limitations in the use of job exposure matrices. Scandinavian Journal of Social Medicine 16(4):205–208.

Rosenberg J, Katz EA, Cone JE. 1997. Solvents. In: LaDou, J ed. Occupational and Environmental Medicine. 2nd ed. Stamford, Connecticut: Appleton and Lange. Pp. 483–513.

Rothman KJ. 1993. Methodologic frontiers in environmental epidemiology. Environmental Health Perspectives 101(Suppl 4):19–21.

Rothman KJ, Greenland S, eds. 1998. Modern Epidemiology, 2nd ed. Philadelphia: Lippincott-Raven.

Siemiatycki J, Dewar R, Richardson L. 1989. Costs and statistical power associated with five methods of collecting occupation information for population-based case-control studies. American Journal of Epidemiology 130(6):1236–1246.

Siemiatycki J. 1991. Risk Factors for Cancer in the Workplace. Boca Raton, FL: CRC Press.

Stewart PA, Stewart WF. 1994a. Occupational case-control studies: I. Collecting information on work histories and work-related exposures. American Journal of Industrial Medicine 26(3):297–312.

Stewart PA, Stewart WF. 1994b. Occupational case-control studies: II. Recommendations for exposure assessment. American Journal of Industrial Medicine 26(3):313–326.

Stewart PA, Blair A, Cubit DA, Bales RE, Kaplan SA, Ward J, Gaffey W, O’Berg MT, Walrath J. 1986. Estimating historical exposures to formaldehyde in a retrospective mortality study. Applied Industrial Hygiene 1(1):34–41.

Susser M. 1973. Causal Thinking in the Health Sciences: Concepts and Strategies of Epidemiology. New York: Oxford University Press.

Susser M. 1977. Judgement and causal inference: Criteria in epidemiologic studies. American Journal of Epidemiology 105(1):1–15.

Susser M. 1988. Falsification, verification, and causal inference in epidemiology: Reconsideration in the light of Sir Karl Popper’s philosophy. In: Rothman KJ, ed. Causal Inference. Chestnut Hill, MA: Epidemiology Resources. Pp. 33–58.

Susser M. 1991. What is a cause and how do we know one? A grammar for pragmatic epidemiology. American Journal of Epidemiology 133(7):635–648.

Sweetnam PW, Taylor SW, Elwood PC. 1987. Exposure to carbon disulphide and ischaemic heart disease in a viscose rayon factory. British Journal of Industrial Medicine 44(4):220–227.

Wegman DH, Woods NF, Bailar JC. 1997. Invited commentary: How would we know a Gulf War syndrome if we saw one? American Journal of Epidemiology 146(9):704–711.