we hope to do as part of this workshop is to learn from those other disciplines how we can better do the testing of the instruments that we are creating.

We have considerable documentation challenges to face, and I believe a lot of them are going to require behavior modification as well as new tools. And some would say more management as well; since Chet’s not here, I felt more comfortable in saying that one.

I think that it’s important for those of you involved in the education of survey methodologists [to know] that we need to increase the education of both our current staff and our potential new staff in areas related to the importance of documentation, the approaches to documentation, as well as to all issues related to testing of instruments. It’s important, I believe, for the industry as a whole to make an investment in standards and in tools. I don’t think we should be off doing this on our own; we need to be doing this as an industry. I think that the burden of documentation and testing can be reduced through industry-wide standards, and I hope that we can begin the process of setting those standards as part of this workshop.

So [those are] my opening remarks, and Chet apologizes for not being able to be here, but there was the minor issue of the budget for the U.S. Census Bureau, so I guess we can forgive him. If there are any questions about the overall focus, we could entertain those, or I could move right into the talk to provide the setup for the problem. [No questions were raised.]

WHAT MAKES THE CAI TESTING AND DOCUMENTATION PROBLEMS SO HARD TO SOLVE?

Pat Doyle

DOYLE: So, why is it that we now have a documentation and testing problem that we find so hard to solve? Even though we’ve actually been in the area of automation of surveys since the mid-1980s, we didn’t really face these problems until much more recently.

I’m sure most of you know by now what we mean by computer-assisted personal interviewing, but let’s try to get everyone on the same basic page on what we’re really talking about. Basically we have a set of predetermined questions. The phrasing and the sequence have already been set up, and they follow explicit rules that are conditioned on the circumstances of the respondent.

The question text and the rules are implemented in an interactive transaction-oriented computer system. And the system allows for pre-

scribed flows as well as unprescribed flows, i.e., you can back up and go pretty much anywhere you want.

And that ability to back up and move forward and back up creates an infinite number of unique instruments for any given instrument that we can field. Obviously we don’t implement any more than we have respondents to talk to, but we have the capacity to have huge numbers of different variations on the instrument. And the important thing is that all of these have to work perfectly, because we don’t know until we get into the field which unique path has to be functioning for a given observation.

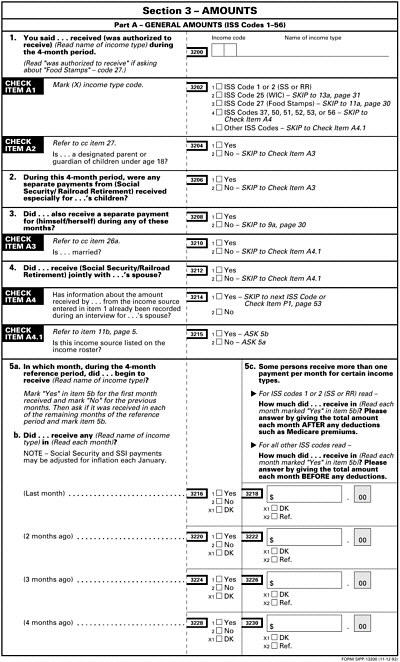

The Census Bureau is known for doing complex surveys. We have always done them. We did challenging surveys in paper; we’ve done challenging surveys in computer-assisted telephone interviewing (CATI). But, boy, now that we’re in computer-assisted personal interviewing we really have some complex instruments, one of which is the Survey of Income and Program Participation (SIPP). [For illustrative purposes, a one-page excerpt from a pen-and-paper version of the SIPP instrument is shown in Figure II-1.]

SIPP is a longitudinal survey, which means that we go back to the same set of respondents repeatedly. It’s nine rounds of interviewing for each sample that we have in the survey. We do three interviews a year; we go back every four months to the same people and also talk to whomever else they live with. We spread the interviewing out over time to equalize the field work load, so every month we’re doing about one quarter of the sample.3 We have an instrument that has about 13,000 different items in it that we’ve put out in the field, that we’ve automated. It’s personal interviewing—carry the laptop to the house, or be at home with the laptop making telephone calls. The instrument is completely coded on the laptop, and there [are] some separate systems that control when the instrument comes in and when the case is set up and so forth.

The information that we collect is monthly, so for every month of the four-month reference period we collect information. We have data that we collect every wave, which is what we call “core information.” We have that for income—well over 50 different income sources. Extensive demographic characteristics. A lot of work history and activities, so we

can get a good measure of earnings. We also have questions that vary from one round of interviewing to the next, so in one interview we might ask the respondents about their asset holdings and in another we might ask them about their fertility or their marital history. Those are called “topical modules,” so they vary from one round of interviewing to the next.

One of the things that helps complicate our instrument development is that when we’re doing a longitudinal survey we don’t like to look dumb. We like to have our [field representatives (FRs)] look like they remember they were here four months ago and be able to say, “Oh, the last time you told us you were doing x; are you still doing x?” That’s called “dependent interviewing.” It’s used in some cases to reduce some spurious error that might occur because a respondent might reply slightly differently now than they did last time—a lot of characteristics like industry or occupation where we can get a slightly different answer if they use a slightly different set of words even though nothing else changed. It’s also used to control for—smooth out—what we call the “seam bias.” For those of you who don’t know: remember I said we have four months in each round of interviewing, so you can get a transition on and off a program from month one to two, and then two to three and three to four. And then you have another round of interviewing, so you get month five from the next wave. Well, so now you have transitions one to two, two to three, three to four, four to five—you’ll notice how I said, “four to five”— five to six, six to seven. What happens is most of the transitions tend to be reported at the juncture between the rounds of interviewing, instead of evenly pretty much throughout the period—that’s what’s called the “seam bias.”

Another survey where we’re in the process of instituting an extremely complex instrument is the Consumer Expenditure Survey (CES). This survey is used as a component of determining the Consumer Price Index (CPI). We have four quarterly visits, plus a bounding interview, to households. Now these are addresses that are followed, so they go back to the same place every time—they don’t always talk to the same set of people. This particular instrument only has about 200,000 separate items that it has in its store of things to ask. It takes an hour and a half to two hours to administer. If you can imagine you have all your expenditures, we’re trying to get a complete record of all of those items and how much you spent on them. It basically covers all sales and excise taxes for all items purchased for basically the unit we’re interviewing and for others, and it’s all classified by type of expenditure. So you can see that it’s an extremely complicated instrument.

For those of you who are not familiar with the way we do these surveys, I’d like to give you a couple of illustrations as to why we have

a complex problem. Let’s consider a question about the new [State] Child’s Health Insurance Program (SCHIP).4 This was instituted a few years ago in response to the concern about lower-income children not having adequate health insurance coverage. So, you might think to yourself, “Let me figure out who’s covered by this program.” So you have a simple question—“Is … covered under the new child’s health insurance program, in a certain period of time?” Simple, basic question. You might think to yourself, “So what’s so hard about putting that into a computer and bringing it up on the screen?” Well, if that’s all we did, that would be fairly simple. And when we were in paper our questions usually weren’t a whole lot more complicated than that. We left it to the field representative to do a lot of the tailoring of the questions to the circumstances of the respondent. So the FR would know to fill in “…” with either “you” or the person’s name—they’d be trained to do this, they’d have the responsibility. The respondent would be trained in how to fill in the reference period, so they would know that if they were in SIPP Rotation x, that they would fill in the four months that were appropriate for that interviewer. So the instrument just had it loosey-goosey there, so to speak.

Well, now that we’ve got it automated, we don’t leave it like that anymore. We now take the opportunity to make this question look different for virtually every respondent. So, one thing we do is to first figure out whether we’re about to talk to a small child—well, we don’t talk to small children directly, we talk to their parents. So we have to have a version of this question for the parent, and that question might be, “Are any of your children covered under this program?” Or it might be a separate question for every child, where we maintain a roster of children and the computer will cycle through the question, explicitly naming each child. We might condition the phrasing of this question on whether they’ve had any other kind of insurance—“you’ve just told us that you have insurance type x; now, do you also have this type of insurance?” As I mentioned earlier, we want to look smart—we want our FRs to look smart, to acknowledge that [the respondent] told us they were covered by this last time. So we would phrase the question, “Last time you told us Sally was covered under CHIP. Is Sally still covered under CHIP?” As I noted, we want to get the reference period right, and if it floats like it does for some surveys the computer is going to predetermine what it is.

Another thing that we like to do which we couldn’t do under paper is [that]—for these programs that have different names depending on the locality, which is true for most of the welfare programs now—we want to pre-load all those names, and check the address of the respondent, and instead of saying “CHIP” we might use the explicit name for the program as it exists at that particular location. We might also want to condition this question on their income characteristics, because CHIP is also a program for low-income children. So maybe we wouldn’t want to ask the question of really rich households, or maybe we want to ask it a different way. We also have situations where we accept a proxy response, so we would want to phrase our question a little differently depending on whether or not we’re talking to the respondent or talking to the proxy. And then of course there’s always the need to have some facility for the FR to answer a respondent’s question: “[What] is this program you’re asking about?” So we need to have that sitting around.

So there you are. Now we’ve taken one simple little one-liner question and created probably three pages of computer code of some kind or another to carry out all the variations on a theme that one could possibly consider one might want to have in the field. The interesting thing is that what you’re trying to do is condition the question on the characteristics of the respondent before you know what the characteristics of the respondent are. So you’re back here imagining all possible things that could happen instead of being able to just precisely target it to what you’re going to get in the field—you have to anticipate it all.

Let’s look at one more example. So we have a fairly simple question— we want to know what their income was from a particular source; in this case it’s an asset-type source. Again, in the original paper version of this, we have a fairly simple question; we have the ellipses where we would fill in the respondent’s name, or “you”, or “he”, depending on the circumstances—again, up to the FR to decide, a lot of freedom there on their part. We’d probably in this case have this set up with a little list of income sources that they’ve compiled—the respondent has gone through and said four or five different things, so now they’ve got a little list. And they’ll cycle through this question—and they have instructions to tell them how to cycle through the question—going through each of the four or five income sources that they’ve got. Now we go to automated— we no longer have the simple one-liner. We would vary the question depending on what type of account they have. We would—particularly in the case of SIPP—when we ask for amounts, we ask the question differently depending on whether or not it’s reported jointly. And, in the newer version, dependent on who it’s jointly with. So, if you have account joint with your spouse we’ll ask the amount question one way; if you have an account jointly with a minor child we’ll ask the question a

second way; if you have an account jointly with somebody else we’ll ask it a third way; and if you have a combination of these, well, Lord knows what you’re going to get. And, of course, if you just have a single-held account we would just ask you what the amount is.

Now we also use dependent interviewing in these circumstances. In this case, what we’re looking at are situations where we don’t initially report back what they told us last time—we wait and let them tell us something. If they don’t know an answer, if they don’t remember, then we do a check to ask them a slightly different version of the question: “Was it bigger than x?” Or, “was it between x and y?” And if they still don’t know then we’ll say, “The last time we were here you told it was about $100. Is that right? Is that about right?” So that will trigger a memory, hopefully, that they can use.

So there we have our infinite variations on this particular theme. And this is—remember—one of 13,000 different items in the SIPP survey.

So basically what we’re doing is trying to optimize the question. We want it to be clear to the respondent what it is we’re trying to ask. For example, if you’re talking about income from a job you probably want to call it “earnings” instead of “income,” just because that’s what we’re used to using in that context. We want to find the easiest way for the respondent to answer. One of the things we’re experimenting with in SIPP now is to ask the respondent whether it would be easier for them to report monthly, weekly, biweekly, annually—whatever way they like, and then depending on which of those they choose they get a slightly different set of questions in order to answer the amount. We’d like to create a friendly rapport between the field representative—which is an interviewer, by the way; this is Census Bureau-speak for an interviewer, my apologies—we want that interaction to be friendly, so we don’t want to force the respondent to answer a question in a way he doesn’t want to. In this case, we wouldn’t force them to report monthly if they really didn’t want to. If they want to report annually, well, we can divide by 12—no problem, we’re in a computing environment, right? The computer can do it. And we also want to give them lots of opportunities to report things in alternative ways.

OK, we’re still not done with the level of complexity that we can introduce into this instrument. We now have all different types of question structures, answer structures. We can have a simple “yes”/“no”, and with a simple “yes”/“no” we can allow them to have a “don’t know/refuse” or not to have a “don’t know/refuse”. We can have a “don’t know/refuse” be visible on the screen or invisible on the screen. Lots of options, just for a “yes”/“no” answer. We can have a single question that has several different answers—if we ask them how long they’ve been involved in an activity, we might let them fill in days, months, or years. So we would

have three different answer opportunities. We can put several questions on a screen or one question on a screen. We can have a different style of answer category if we expect the response to be continuous—like, “give me your income”—versus discrete—“do you have white hair or purple hair?” We also have to deal with a numeric/alphanumeric mix—whatever we do, we allow “don’t know/refuse”, and that comes in as “D” and “R” sitting in a field with amounts like “$100” and “$150”. And of course we can never anticipate every possible category, so we always have an “Other (specify)” with our other categorical responses.

BANKS: I don’t mean to interrupt, but “don’t know/refuse” get conflated—they’re not separate responses?

DOYLE: That’s correct. You can design it pretty much any way you want to, but it’s sitting …

BANKS: So implicitly someone who just says they “don’t know” is the same as someone who …

DOYLE: Oh, no, no—they’re separate. I misunderstood your question. You get a “D” or an “R”—my point [is that] it’s the mix of alphanumeric and numeric that causes some data processing procedures to get a little unhappy.

Sometimes we want to change our questions slightly depending on the way people answer, so we have these little pop-up things—I don’t know what you call these things in a cartoon, but they just pop up. And I mentioned the “other (specify)” and “don’t know/refuse” options.

OK—I’m still not done yet. Let’s talk about what causes the infinite variety of these questionnaires. At any point in an instrument, the Census Bureau—and I know not everyone implements it quite this way—but at any point in an instrument our field representatives can back up and change an answer that was previously made. There are no blocks in there; there is no place that stops them. I know some instruments will limit how far back you can go, but we can go back. We can go back to the prior question, change the answer, and come back to the current one. We can go back five or six different questions, change that answer, come back to the current one. We can go back 10 or 20 questions, change that, go down a slightly different path, and then come back to where we started. Or we can go way back, down a different path, and never get back to where we started. So, we have to allow for that kind of unanticipated flow through an instrument.

PARTICIPANT: When you go back, is it just as though you had never gone down that path? So if you go back to a question that has a branching characteristic, and you’ve made response to that, do you in effect discard all the former material that had been collected so [that] it’s as though you never went down that path? Or do you [use] some of that …

DOYLE: It partly depends on the implementation, but it also depends on the system. CASES, anyway, doesn’t erase it automatically;5 theoretically, it’s eventually supposed to erase it if you don’t go back down that path again. But it’s still sitting there waiting for you because it doesn’t know quite yet whether you want to change. So if you don’t change the path the answers are still there; you don’t have to re-enter them to keep on the same path. But you shouldn’t have to go back and discard them when you change it, but we’ve had some issues with the CASES software. Now I don’t have experience with Blaise.6 Ed, you’re here; can you talk a little bit about your experience with Blaise and the backing up. Does it reset the on-path/off-path sort of stuff?

DYER: How much time do you have? [laughter] We’ve run into a lot of problems with setting outcome codes, for example, just recently where if you set it as you go through the normal path it’s there for you but if you back up it doesn’t erase them unless you tell it to do so. You can arrange it to have those reset; you can initialize those variables. Now there’s an issue about taking it all around. [But more than that] I would have to hear from the experts.

PIERZCHALA: [In Blaise,] if you back up and there are off-route data when you close the interview, the off-route data are wiped out at the time you close the interview. But they’re available while you’re still in that session; they’re there, so if you come back to that path and discover that you should have been there all along they are still available and you don’t have to re-enter them.

PARTICIPANT: That’s probably the optimal solution.

DYER: … unless you happen to design that thing with auxiliary variables and let that off-path get reset, so it’s really based on what your intent is. You can do any number of things.

PIERZCHALA: Well, that’s what I’m saying—you can program any number of things, and you have to be careful what you’re going to program in the first place. And my experience is that a lot of people who are complicated in what they program get mixed up backwards.

DOYLE: Yes, Tom?

PIAZZA: Just to clarify what CASES would do in that situation: basically, it leaves the data there until you go through a cleaning process, and then it will wipe it out. But often [the] Census Bureau doesn’t clean their instruments anyway, so sometimes you have the strangest things because you don’t go through that step.

DOYLE: OK, so does everyone believe that this backing-up feature is complicating our instruments? The other thing we need to be able to do is to successfully—abruptly— end an interview in the middle of talking to a respondent. I mean, things happen, and they stop talking, and you say, “OK, fine, thank you,” and go on to the next person. [This] needs to be able to happen without destroying the instrument. We also need to be able to do this for a whole group of respondents in our case. And once we’ve done that we have to start all over again in the middle. Yes?

ROBINSON: And when you abruptly end, what happens to the data that was entered so far?

DOYLE: I need an expert to answer that question. Adrienne?

ONETO: The data that was entered so far is saved. But I just want to make a comment, though. A lot of what you’ve described so far really is our choice of how we let the automation. I mean, we could have still continued to use our interviewers to determine universes and to determine skip patterns, and to decide what is the most appropriate fill for a question. I think there was this insistence among all of us that once we went to automation we were going to use automation to replace this very complicated cognitive process that all interviewers go through when they conduct the interview. We always had multiple question types, we always had very difficult question puts—I mean, a lot of what you described always existed with our paper instruments. It’s how we chose to implement the automation, and I think that’s important. We could go back to the sort of paper approach and let the field reps determine the universe and the skip and the flow and the …

DOYLE: Except for the cognitive people of the world, who have now got a gold mine for ways that they can predetermine how to appropriately phrase the question so that the FR isn’t using a little bit too much imagination in determining how to phrase it. So it’s good and bad, but you’re right. A lot of this is not because we automated; it’s because we chose to do automation in a certain way. And we’re not alone. Most people are choosing to do automation in this way.

PIAZZA: Let me make a comment on that. Although it’s true that we could leave a lot of fills to the interviewers, when you’re talking about flow it’s a little trickier. Remember, the interviewer can’t just flip the pages of the questionnaire.

ONETO: Right.

PIAZZA: So the flow really has to be built into the instrument. And that, in a sense, was part of the attraction of the computer-assisted interviewing in the first place—that somehow we would eliminate these errors in flow.

ONETO: Yes, that’s true. Except that we could still do a traditional check item, where they’re asked a lot of things and that decides what happens next. But we don’t do that. We collect information that’s already been provided and—behind the scenes—we do the check item and the flow and the skip. That’s what I meant.

PARTICIPANT: Do you ever get any pushback from some of the FRs, especially perhaps those who are more experienced or maybe those with no experience, that they feel they can determine the flow or some of the questions better than the computer can, or that they lack the flexibility that they would get with a paper instrument where they could just flip the pages? Does that ever happen?

ONETO: I think it’s quite the opposite. Interestingly enough, at the Census Bureau, at the nascent states of automation they resisted it. But now the interviewers who are trained with automation do not want to go back to paper, they don’t want the burden of skips and universes and everything else. They like the fact that the automated instrument does it for you.

DOYLE: Yes, basically, that’s been our experience. Now, they do have different attitudes about what works best in the field than we do at headquarters. So we use them in the instrument development process— it’s not quite a formal usability test, but they can sort of tell whether this thing is flowing well or not. And we’ll go back and modify the behind-the-scenes to allow it to flow better and be more comfortable for them. But they’re not asking us to do that control themselves.

COHEN: Could you define “universe”, “fill”, and “skip”, for those of us …

DOYLE: Sure. A “fill”—if you remember back to the question we had before, “What was …’s interest income in reference period, from [some] source?” “…” is a fill. In other words, there would be an instruction behind the scenes to fill in either “you” or the person’s name or “he” or “she”, depending on what the instrument knows about the respondent at that time. That would be a fill; it’s like a piece of code that basically does a calculation based on a characteristic and plugs in a name or something. Similarly, reference period could be handled as a fill, so that instead of seeing a parenthetical remark describing a generic reference period, when this question goes up on the screen it would say “April, May, June, July,” or it would say, “since April 1”. In other words the computer has changed the appearance or the wording on-screen so that it meets the need of this particular case. That’s the fill.

The skip is … Let’s say that just before this interest income amount question we had a question that said, “Do you have an interest-bearing account?” And if they say no we might have some logic that says, “OK, don’t ask the next amount question—skip it and go on to the next.” That’s a skip.

Then the amount question itself now has a universe of people who have said, “Yes, I have an account,” so therefore they’re going to get to answer the amount question. So that’s basically the universe; the skip and the universe go together. The skip patterns tell you how to go forward through the instrument, and the universe tells you who was allowed to answer this question.

OK. So, we’re still not done yet in terms of describing how we can make this thing complicated. We don’t always literally record the output the way it was input. So when the FR types in a number we may or may not store that number in our database. Now, sometimes we don’t store it because there’s a bug in the instrument. Sometimes we don’t store it because we didn’t intend to store it; we might store a recode of it, or we might take two or three questions that are really getting at the same thing and only store one output. We have the freedom to do this if we want. As we said before, we can have very mixed-format output fields that kind of drive computer programs nuts. But we have to make sure that they’re correctly placed on the files. We also have inputs to the instrument. Whenever we go out we don’t just start with a blank slate; we have a little file coming into our instrument that’s telling us where the address is. If this is the second time we’ve interviewed them, it’s feeding back data from the prior interview. So we’ve got this input file we’ve got to worry about, getting it right and getting the interaction between it and the instrument to work right.

We also have the infamous systems. The instrument doesn’t operate in a vacuum; it operates within the context of some other systems that keep track of what cases should be interviewed, which cases are assigned to which field representative and where the cases are; whether they’ve been spawned or not and, if they are, which interviewer gets sent off to them. And then of course there’s the all-important system that captures the data after the interview’s complete and sends it back to some database somewhere, housed in somebody’s computer somewhere. It also is the thing that keeps track of when you’ve abruptly stopped an interview and have to restart it; that’s what’s controlling that activity.

On top of all that we do what automation was sort of built up to do— we do online edits. In other words, while we’re asking these questions, if the respondent gives us an answer that we don’t think is right, we can check it and we can ask them again. “Are you sure you meant to tell me that?” Now, we don’t do that very often because it doesn’t exactly

go toward friendly interactions with the respondent. But we do it a lot when we’re looking for FR keying errors, for example. If we expected a number in the range 1 to 99, and they key in something like 10,000, we might come back with an FR message screen asking whether they can go back and doublecheck that, whether they keyed that in correctly. So we do a lot of that sort of range-checking on the dates; if they say they got married before they were born, we might want to clarify which of those dates was the correct one.

So why is this now a problem when it wasn’t a problem before? When we were doing instruments in paper we were using a medium that constrained our options to those that humans are capable of understanding, capable of documenting, and capable of testing. When we initially did computer-assisted interviewing we were doing it in a telephone environment, often in multi-mode surveys. And the computer-assisted telephone interview (CATI) questionnaire would often track the paper questionnaire. So we were implicitly using the “KISS” [“Keep It Simple, Stupid”] method of instrument design. We still had a lot of responsibility out there for the field rep to do the fills; the flows were not very complicated. We could easily tell what all the possible paths were. We could print out the instrument. We had documentation, and so forth. But when we got to computer-assisted personal interviewing and we weren’t doing multi-mode surveys, boy, we went to town!

And, as Adrienne [Oneto] said, it’s not that automation makes it worse; it’s that we are allowed to make it worse because we are automated and have no constraints.

So, I believe the solution is that the industry needs to restrain itself. But, also being an instrument designer, I know that it’s absolutely impossible. When I sit down to design my set of questions that I want to go into the field with, I’m as bad as anybody else. “No, I’d like it this way if it’s this set of circumstances, and this way if it’s that set of circumstances.” And I can’t restrain myself any better than anybody else can.

I was going to talk a little about the documentation problem and then about the testing problem separately. Yes?

BANKS: It seems that there are sort of two extreme positions that one could take. One is that you we want to presume, to grow, to plan [for] all possible contingencies and plan for that whole tree of possible questions. And the other approach is to basically allow the FR to have a conversation with the respondent and then to fill out the form in an intelligent fashion based on what the respondent said. Is there any experiment that has been done that compares the yield or the accuracy of results under those two kinds of positions?

DOYLE: Would you like to answer that question?

PARTICIPANT: Well, this builds on work that I’ve done on the conversational literature. Not exactly conversations, but there have been experiments where interviewers can override, where they don’t exactly follow the skip patterns—it doesn’t allow full improvisation, but it does relax the script. And in some cases that does dramatically improve accuracy. Most of that comes from clarifying concepts, from clarifying what’s being asked in various parts of the script. That’s not exactly what you ask because there’s not much relaxation of the skip patterns.

GROVES: On the other hand, there’s a paradigm in measurement that asserts that the sensible thing is to standardize—rightly or wrongly— because when you get the data back then the completion of the stimulus accrues to the measurement.

DOYLE: Mark, you had a point?

PIERZCHALA: Well, in all the papers I’ve read about accuracy, the one place where computer-assisted interviewing has really improved things is keeping people on the correct path. You’re not asking questions that you should not ask; you are asking the questions you should ask. On paper we have both those sorts of errors. You get data on paths they shouldn’t have gone down and no data on paths where they should. So we want to keep the skips in there. But there’s this whole different aspect—do you really need to carry the fills out to the nth degree? And can’t the field representative—with their native intelligence—provide the fills themselves?

PARTICIPANT: The issue of restraint is an interesting one, and I’m just asking whether it comes back to negotiating a dialogue between the people responsible for system engineering and testing. It involves saying, “Look, we want certain standards; this is how the surveys are all going to be organized.” And then on the other hand you have the people who do the survey design and—with their set of issues in mind—push the envelope against that. Is that the way it is, and if so how do you negotiate that? Is that negotiated in advance, or each time it comes up?

DOYLE: It’s unique to the circumstance. Lots of times either the budget or the timeline will drive what you can do. So, if you’ve got your survey designers really making this thing super-precise, and it’s getting really complicated, and then all of a sudden the office sees it and says, “Oh, my goodness, there’s no way I’m going to be able to author this in the two weeks I’ve got left,” then we go back and say, “No, no, no, no, no—we can’t do this.” So there’s some back and forth, and there’s some middle-layer people who do the negotiation between the designers and the authors. Lots of people design these staffs differently, but if you think of three different functions there, that’s typically what goes on.

But there are other ways we can restrain ourselves without getting away from the precision, and that’s just to simply have standards for

straightforward things. Like, for instance, “his” and “her” fills—there’s no need for anybody to ever have to program a “his” and “her” fill, after it’s been programmed one time. There’s just no need to do that. If everybody agreed that this is how we do a “his” and “her” fill, then there’s a little function out there and you just have to plop it in. So that’s a level of restraint that’s different from how complex can we make the question. [Would] I give up my freedom to do a “his” and “her” fill the way I want to do it in favor of the way the majority of people want to do it? Which is a different kind of restraint, you see.

PARTICIPANT: This may be something you glossed over in describing the complexity in case management: What kind of flexibility is given to the interviewers in terms of the outcome of the case? What happens with it, and with the Census Bureau’s philosophy of all behind-the-scenes, all automated? Do other organizations think of the interview the same, what the outcome is? At the end when you’re done? That carries a lot into the development process and the testing.

DOYLE: The whole systems side is something I haven’t dwelled on, and I sort of view the setting of that—maybe I’m wrong, but I kind of put that final outcome code setting more on the systems end.

For those of you who don’t know: when the instrument is on the computer, there are interim stages—for instance, you’ve opened the instrument, you’ve tried to conduct the interview, you weren’t successful. You make some sort of interim record that you made this call. Maybe you’ll try again later. You come back later; maybe you got half the interview done. You come back eventually, you get the whole interview. And you make a record. And, at the end, that record of what finally happened is the final outcome code. And that’s what Bill’s talking about. And there [are] some choices that can be made, as to whether you try to keep track of every one of those stages or whether to keep track of the final stage. And the Census Bureau has tended to only keep track of the final stage. But we’ve now decided that that’s not in our interest; we want to know more about what’s going on [in the] attempt to get that interview, so we want to go back—and increase our complexity—to retain all the various different interim codes that came along during the process. And some of the information comes from having opened the instrument; some of it comes from case management, where you don’t even open the instrument. You drive by and discover that you can’t get into the house; you don’t open the instrument, and you have a record in your case management system that says that I tried to interview but I couldn’t.

PARTICIPANT: Just another comment, with regard to [computer-assisted telephone instruments]—they tended to be simple, but then again the automated call scheduling system was extremely complicated.

So you have a very difficult testing problem, to get those to work correctly. And if you’re going to change your algorithm …

DOYLE: And is that still true?

PARTICIPANT: Yes.

DOYLE: So, you can tell my bias is more towards the guts of this thing. And less toward the outer pieces that make this operation work— both of which are important. Mark?

PIERZCHALA: But getting back to the guts of the thing, there’s one thing you haven’t mentioned with respect to this, and that’s software in two or three languages.

DOYLE: That’s true, that’s true.

PIERZCHALA: The pattern of the way you fill with French and English are different. The grammatical structures of the sentences are done differently. And there [are] instruments out there in the world where you don’t know what languages are going to be done.

DOYLE: You’re absolutely right. I completely omitted that. So add yet another dimension to this entire problem.

I don’t think we’re ever going to take any dimensions away; we’re just going to add them.

So, how are we doing on time?

CORK: It’s about five minutes until ten, so you’ve got about thirty-five minutes left.

DOYLE: So I want to do documentation, and then I want to do testing. So I’ll do half-and-half.

So why do we have a documentation problem? First of all, when I talk about documentation now, I’m just talking about documenting the questions that were asked in the field. I’m not talking about documenting files; I’m not talking about study-level quality profiles. I’m just talking about documenting the questions used out in the field.

The main reason we have a problem is that we no longer have the free good of a self-documenting instrument. And we have limited resources to manually develop a substitute for that missing instrument. As you might expect, an organization whose primary purpose is collecting data is going to put [higher] priority on collecting more data and collecting it better than they are on documenting what they collected.

We have some attempts now at automating tools to do documentation. They’re still fairly new; I think we need to continue pushing in that area. Even when we do succeed in documenting an instrument, the thing is so big and so complicated that you can’t get your brain around it. One of the things that we’ve been most weak on in our documentation attempts so far is to provide the context for each question.

POORE: Why do you want documentation? [laughter] If you’re not going to be able to get your brain around it, and if it’s so hard to do, why do you even want to do it?

SCHECHTER: That’s why we like it. [laughter]

DOYLE: Yes, that’s right; partly it’s for Susan [Schechter and the Office of Management and Budget (OMB) review]. In order to get our questionnaires approved to go in the field, the people who approve them have to be able to know what’s in them. So that’s one reason.

POORE: But if they can’t get their brain around it, then they can’t go out in the field?

DOYLE: No, they do, it’s just … you can’t do it in its entirety. What you do is to break it into pieces. And so this individual will sort of know this piece at some level, and this individual will know that piece at some level. And what you can’t do is to get the whole thing and—more importantly—you can’t get all the nuances. Now, when Susan approves an instrument, she hasn’t seen all the nuances. She doesn’t see all the fills. She’d never get anything cleared. We’d never be able to do another survey, if she had to sit and look at every possible variation on a theme.

POORE: In that circumstance, how are you able to ascertain that the document does the job it’s intended to do?

DOYLE: It’s difficult. One of the reasons we have a couple of attempts to do automated instrument documentation is so that we know what we’re getting as a documentation of the instrument as opposed to what we intended to collect. We have other tools that can document what we intend to collect.

POORE: Is that a subjective judgment, or is it …

DOYLE: What do you mean? Is it my subjective judgment that …

POORE: That someone decides that document does what it’s intended to do?

DOYLE: No, usually it’s, “I’m out of money; it’s the best I can do.” Seriously. We’ve run out of money, we’ve run out of time. This is what we’ve got; sorry, that’s it. It’s the best we can do. We know it has problems; maybe if we had more time and money we could fix it.

GROVES: This seems to be a fair question, and it could be revisited. I believe you’ve implicitly put the word “paper” in front of “documentation”. If the intent is to get to the other person information about what the instrument is, does it necessarily have to be on paper?

DOYLE: No, nor do I think it has to be on paper. I didn’t mean to give that impression. In fact, I think it’s impossible to do on paper. What we need is paper providing some amount of information but we can’t rely on paper exclusively because it’s just too complicated to represent that way.

BANKS: I’m really sympathetic to the point of view that was just raised. It seems to me that if I were trying to get approval for a new instrument of this kind, I might want to know whether 80 percent of the respondents fall into a set of about 10 pathways that are truly understood and the remaining 20 percent are in some incredibly complicated, chaotic situation that’s going to be so individually variable that it’s going to be very hard to map. I guess I would feel more comfortable if I knew that some large proportion of the respondents were simple and well-captured and the real complexity only applied to a small percentage.

DOYLE: That’s generally not the criterion that’s used to clear an instrument. The criterion is largely burden—how many questions are we administering these people, how many minutes are we going to be in their home, bothering them to collect this data? And is any of this so redundant with some other collection that we couldn’t save some burden by …

BANKS: But I think this suggestion goes directly to that, because if the 10 pathways that 80 percent of people employ in fact would cause little burden, then that’s helpful to know.

DOYLE: But typically it goes the other way; the most complex paths give you the biggest break on burden, because you’ve tailored it so specifically that you’re only really asking the question when you need to.

MARKOSIAN: So is there a concept of an acceptance test for an instrument, and in particular is there a component of the acceptance test that sets general criteria that need to be passed? Is it institutionalized? Is that kind of written down anywhere?

DOYLE: I would say no.

MARKOSIAN: For example, complexity in terms of actual paths through the instrument.

DOYLE: No, no. First of all, we haven’t been able to measure that.

MARKOSIAN: Well, I don’t mean just that one issue. Surely, before you sign off on an instrument you have some criteria in mind about what that instrument needs to do.

PARTICIPANT: I think that acceptance testing is one of the most difficult challenges of automated surveys in general. It’s very iterative, it always takes a lot more time than anybody ever thinks it should. And I think you’re going to go into some detail on testing. I mean, it’s multi-stage—you do a first clean-up, you do a cosmetic. Then you get into the logic and you just go deeper and deeper into the instrument to make sure it’s field-worthy.

DOYLE: And there’s different types of acceptance. There’s acceptance in terms of, “OK, I’m asking for the information I want to get.” That’s what the analyst is looking for—“Does it ask for what I want?” Then there’s acceptance from the cognitive people—“Is this question be-

ing phrased in a way that the respondent can understand it?” Then there’s acceptance from the operational side—“Is this thing going to break when my FRs are in the field, or not?” “Is it going to go down the right path, or blow up if I back up too far?” There’s acceptance from the clearance process—“What is the burden, and how long is it going to take?” So there’s a whole different set of criteria that’s used to judge whether an instrument is ready to go and doing what it’s supposed to do. And then there’s also—“Can we afford it? Is this thing going to be able to be done within the amount of money we have for the field budget?”

MCCABE: “Complexity” as a dimension, or perhaps as a criterion— is that just a general concept or is there a specific mathematical definition?

DOYLE: No—it’s just a sort of vague nation that this is complicated, and this is simple; you can recognize those two but not really tell what’s between them. Bill, there’s a person behind you whose name I don’t know …

PARTICIPANT: The slide [suggests] that the documentation occurs after the instrument has been completed, but in fact it’s more of a specification problem. Because if you were able to write proper specifications during the development and before the development then your documentation would be less of a problem; it would come from the specs.

DOYLE: That’s right, and in recent times we’ve moved toward using database management systems to develop the specifications, to automatically feed the software that runs the instruments. What we’re finding is that it’s very difficult for that to flow naturally. At that stage, people will get on the phone and say, “Oh, this isn’t quite right, can you fix this?” And all of a sudden we’ve got a disconnect. We’ve got our specs maybe a week, two weeks, a month behind where we are developing the instrument. Maybe we have the staff to go back and put them together, but probably not; they’re probably testing this thing, working really hard to get it into the field, and once it’s in the field they’re off to the next survey. That’s where the problem is. But you’re right; we can do better in terms of having a good set of specifications, and we are. Every time we do a new survey we’re better than we were the last time we did it. But I’m pretty convinced we’re never going to be able to perfectly document an instrument from a spec, unless we change our behavior.

That was the “continuous refinements are hard to track” part, and that’s what causes the instrument and the spec to get out of synch. The other thing that could make our problem a lot easier is back to the old “his”/“her” fill—if we had industry-wide standards, and everybody did some of these things exactly the same way, we wouldn’t have to document them every time we did them differently or had the fifteenth variation on the same fill. So if we had more conventions and standards

I think this problem would become more solvable.

Well, what are we doing now? Everybody produces basically what we call “screen shots”—a list of questions that are asked in the survey. Typically it’s stylized in the sense that it doesn’t tell you every possible fill. It tends to be written in a way that you can read it, rather than TEMP1, TEMP2, TEMP3, 5, 10—that’s the way some of these things are coded. But it doesn’t typically give you the flow of the instrument. Sometimes we do that, but a lot of times we don’t. Flowcharts for instruments are pretty rare because we haven’t yet really been able to generate them automatically; I mean, TADEQ has some approach to this that’s under development.7 We’ve had manual ones done but they’re very resource-intensive to produce.

We have—as I mentioned—TADEQ; we also have IDOC.8 Both of these are attempts to automate the instrument documentation process— to read the instrument software and produce information about that instrument. They take very different approaches. The IDOC notion was to basically capture all the little pieces of the instrument and put them into HTML documents, put it up in Netscape and let you use all the browsers and what-not to go through and follow the instrument. And if you wanted to know what a fill was you could go click on it and go back and find what it was. And if you wanted to find out what a flow was … TADEQ is more focused on analyzing an instrument—telling you how many paths you have, telling you that a path is successful, and so forth. They’re both really good starts at this problem, but we’re not quite there yet.

I just mentioned the instrument development tools—we use these database management systems to do our spec-writing in the hopes that that will automate the instrument production and that we’ll have better specs in the end. Yes?

COHEN: Could you use either IDOC or TADEQ to estimate the respondent burden?

DOYLE: No. No.

COHEN: So the only way to do that is to go out in the field and give it to 200 people and see how long …

DOYLE: Well, and you can see how long it takes a couple of different ways. Now, with automation, we can put in what are called “timers”— you can literally see when the instrument is turned on and turned off and how long the time is. But that’s a little misleading because the FR

may not bother to turn it off when they leave. I mean, we have some timers of 36 hours that are clearly outliers.

MANNERS: I just wanted to say that one of the user requests that will be built into TADEQ is to allow the researcher to put in some times or weights to be built into the questions, so that those can be added up along a path.

DOYLE: Oh, OK.

At the Census Bureau we’ve produced some documentation guidelines for the demographic surveys. Basically, it’s the mantra of “document early, update often.” This is the behavior modification that I’d like to instill in all people who work on surveys. It affects all areas from analysts to methodologists, operational folks to authors, everybody—we need to get on this bandwagon or I don’t think we can solve the problem.

We have some standards; we have them internally at the Census Bureau for the actual implementation of our instruments. We also have, in the documentation arena, the Data Documentation Initiative, which is producing standards for data documentation which includes standards for formatting instrument documentation.9 That’s going to help us a lot once we move toward really getting our instruments documented, having them in a format that can be shared in a machine-readable capacity across all the archives, all the data libraries, all the users, and so forth.

We’ve had three different attempts to produce recommendations for documentation. In the mid-1990s, the Association of Public Data Users (APDU) developed recommendations for the documentation of instruments. Then we had the TADEQ User Group in Europe produce some recommendations. Then there was a meeting at the FedCASIC conference—not this past year, but the one before that—where we talked again.10 This time we brought in not only the experts in producing it but also a number of users; we brought in representation from OMB who does the clearance, our agencies who sponsor our surveys. In your booklet, I think it’s page 24—there’s Table 3 of an article in Of Significance….11 That article summarizes the recommendations from all three of these groups, and Table 3 has kind of got the snapshot picture of all those recommendations. I won’t go through them now because I want to

|

9 |

The Data Documentation Initiative is intended to foster an international standard for metadata (essentially, data about data) on datasets in the social and behavioral sciences. Additional information on the initiative can be found at http://www.icpsr.umich.edu/DDI. |

|

10 |

CASIC stands for “Computer-Assisted Survey Information Collection,” and is a general term used to describe the community of organizations and agencies who conduct survey research using computerized instruments. An annual conference of the federal agencies involved in this work—typically hosted by the Bureau of Labor Statistics (BLS)—is known as the FedCASIC conference series. |

|

11 |

Of Significance… is the journal of the Association of Public Data Users. Specifically, the table Doyle refers to is in Doyle (2001). |

move onto the testing thing. But it’s pretty illustrative. It basically talks about these issues of whether we ought to have standards for “his”/“her” fills, the more straightforward fills. It talks about all the components of documenting an item.

PARTICIPANT: It’s actually on page 115 of the book.

DOYLE: Oh, OK—page 115 of the book, page 23 of the actual paper.

The testing problem … We need to test all of the systems—as Bill reminded me, they are quite complex, and I keep forgetting about them because they’re not quite part of what I do. Which is another part of the problem, by the way. We have all these players in the process and none of them can quite understand why the other players need so [many] resources to do their job. “But I know why I need resources to do my part!”—so therefore I should have all the resources, and then we’ll squeeze the authoring down a little bit, and—oh, well—we’ll squeeze the testing down a little bit so we can do some of this other stuff.

And every one of us has the same problem; we don’t do the other job, so we don’t understand the other job well enough to appreciate their problem, their needs, or what I can do to make their life easier.

We need to test the input—the stuff that’s feeding into the instrument to drive it. We need to test the instrument output—that was something that, in the early days of automation, people just didn’t sort of think about. They just figured that if I’m typing it in, it’ll be in there. And then sometime later they’d get a data file back and go, “Whoops—I missed a few variables here and there.” So we learned our lesson the hard way.

We need to test the flow—all those paths, all the backing up and the going forward, and all the prescribed flows, all need to be tested.

Another thing that drives us nuts is grammar. When we put these fills in, we’ll have a fill for “his”/“her” and a fill for “was”/“were”, and all these other things, and you have to make sure that they all get triggered appropriately, together. And every now and then you’ll be testing along and the question will read that “he were” or “we was” something-or-other and you just have to look for it, because you can’t find it any other way. Now, maybe some of these [grammar] checkers—if they could be processed on every variant of the instruments, maybe that would work for us, I don’t know. But it’s a real problem, and it’s one of those things that will really trip up an FR. If they’re just reading what’s on there and all of a sudden the grammar is bad that’s a really big deal to them; they’re going to see that a lot quicker than they’re going to see a fundamental flaw in the logic.

We have to test the content—are we collecting what we set out to collect? Do we have it phrased the way we intend it to? That’s another aspect of the fills: whether or not we have them coming in appropriately.

Two things that are more important now than they used to be—back when we were doing character-based instruments in DOS, these weren’t quite a big issue. But now, in the Windows environment, the layout of the screen becomes important, and usability issues become very important. So we’ve added to our burden—finding some time for usability testing to test out the screen design and make sure that the choice of colors and how we do our buttons and everything is going to work well in the field.

So who do we have available to do testing? Well, we have the people who developed the systems get people to test the systems. The people who program the instrument—whom we typically call “authors”—have some level of testing that they do themselves. We have the survey managers, which I use generically as this group of people who are kind of running the trains, making sure that the instrument is properly developed. We have the analysts, the ones who asked for particular content to be collected—they need to see if it’s being collected. We have the survey methodologists, the cognitive researchers, who are trying to figure out how to phrase the questions—they need to look to make sure that things are getting properly phrased. We often use our field staff for testing our instruments—once we have an instrument that’s pretty much working, they’re excellent sources for finding things like the grammatical problems. “Oh, I remember interviewing this nice lady and she had this characteristic but that’s not really on this screen, so we really ought to fix it”—really good input. And, then, anybody else we can find … literally. And the better we document the instrument, the better we can use people off the street to test it. If we can’t document the instrument, then we’re stuck testing it with the same people who are trying to get it produced and get it in the field. Which is where we kind of get a resource problem.

What tools do we have available to do our testing? We can review the code, literally, just read it from top-to-bottom, which is fairly easy to do in CASES. It’s not quite as easy to do in Blaise, because Blaise has it split up into different components and it’s a little harder to read in a linear fashion.

We can break the instrument into smaller pieces and test it a little bit at a time, so you can get your hands around it—I can just test my labor force questions independent of all this other stuff.

The way we do our testing primarily is that we manually simulate cases. We sit there and we bang away at the instrument, and we just invent these cases, and we just work through the systems and the instrument. And just try to anticipate everything that’s possible.

We have tools to replay the instrument, so that if we’ve executed it one time on a particular case and then we want to go back and see how

that worked in the field we can do that. We have tools to analyze the instrument that are the same as those used to document the instrument. We have, at the Census Bureau, a “Tester’s Menu” so we can basically post these test instruments and have one of those menu options for processing the output so you can more easily check the output.12 We have tracking systems that keep track of where the code is and what version of the code you’ve got, and that sort of thing.

We also have a testing checklist that I thought I would summarize a little bit for you. Basically, a manager can sit down and make sure that everything has been taken care of; they can make sure that the input files have been tested. They can make sure that they’ve tested all the substantive paths, with and without back-ups and with and without making changes to the instrument as you go along. We have instructions to make sure you’ve checked the minor substantive paths—again, with and without back-ups. [We’re] also looking for questions that end abruptly or questions that have no people to ask them of because there’s no path that gets you there.

Testing the fills—very big deal. Also we need to test the behind-the-scene edits; we need to test whether or not interrupting the instrument works. The outcome codes that Bill was talking about—we need to make sure they’re functioning. We need to make sure that the systems and the instrument are properly talking to each other. We need to check the spelling and grammar. We need to make sure that the fields are being entered properly, and—as I talked about before—whether they’re showing up correctly in the instrument output.

So, with all that, what do we need? Right now, we can’t really automate our scenarios. I had an understanding that [the Research Triangle Institute (RTI)] might be somewhere on the verge of having something like that but I have not actually seen it. Our experience is that when you are in development mode the instrument is not stable enough to be able to generate the scenarios to just re-run this thing over and over again.

We can’t really identify all the possible paths. If we can’t identify them, how are we going to test them? There’s basically the problem that the volume is too high—we just simply can’t get to testing all paths. All the infinite possibilities, with the back-ups and a lot more possibilities for movement around the screen—right now, it’s just a very time-consuming linear process. We do A, then we do B, then we go back and re-do A, and then we do B, and then we do C, and then we go back and do A, then B, then C, and then we do D, and A, and B, and C, and D—it’s

about the only way we can get it done.

And basically we need to re-test the entire system after every change. Because, sure enough, if we don’t, we’ll introduce a bug that we didn’t think we did, and it’ll destroy a path—somewhere out there in the instrument where we didn’t look—and we won’t find out about it until after we’ve collected the data.

Our systems testing is basically distinct from our instrument testing, which is part of another linear process. We have to be pretty much finished with the instrument well in advance of fielding so that we can do an intensive amount of systems testing. Ideally, our systems people want our instruments done two months—completely certified, finished—two months in advance of fielding. I don’t think we’ve ever quite succeeded, but that’s the goal—that’s so that they can feel comfortable that they’ve got the instrument fully functioning.

I’ve talked about the fact that you don’t always have on your output file what you put in. That’s another thing that makes the testing difficult. I’ve also mentioned that in Blaise it’s more difficult to read the code from top to bottom. It’s also difficult in the case Mark [Pierzchala] mentioned, when you have foreign languages—depending on how the translations are embedded or not embedded in the code. If you’re doing a translation as you’re trying to read along the code, and you only know one of those languages, it’s going to get really complicated to read the code. Of course, if you know two languages, it’s still probably going to be hard to read the code.

The systems are not really self-documenting, and that’s what really restrains us in terms of resources to test.

OK, more deficiencies … I wonder how many more I’ve got? Particularly in the case of [graphical user interfaces (GUI)], we need to test on all platforms. Once we’ve tested on our machines at the office, that doesn’t necessarily mean it’s going to work like we think it is when we get it on a laptop in the field. So we’ve got to test on all those. We have to look at performance on several platforms as well.

We never have enough time to test. We never have enough time to document. We never have enough time to do what we want to do. But particularly the testing gets scrunched at the end. Partly that’s because we’re constantly refining it—right up until we go into the field, we’ll get a phone call that we really need to change this question. It’s critical; we just passed this law yesterday that says we have to do x and you really have to change this question. We’re just about to run with the instrument into the field, and that’s a really dangerous situation to be in. It’s critical to update the substance but—if you do it on the fly—you run the risk that you break something else and create a problem. And

because the instrument is continuously refined you can’t get a fixed set of test decks that you can use repeatedly.

I’m about worn out—did I run out of time?

CORK: We’re right on time, so if there [are] one or two questions we could take them now and then go into a short break before getting into a computer science flavor. If there are any questions?

DIPPO: I notice that you’re focusing on documenting the “what” and not addressing the “why.” For instance, in the [Current Population Survey (CPS)], we have the response category be two columns—we did it specifically so that these were the at-the-job and past-the-job entries. And then when we went to go from Micro-CATI to CASES and we wanted to do something, if there wasn’t a documentation of why we did something and why it should be included in the next version, we wanted to have a specific reason.13

DOYLE: I absolutely agree with you. There’s also not sufficient documentation of the results of the pre-testing that we do, to figure out that you really did want to do it for that reason. But, today, I’m only focusing—I take my documentation problems a little bit at a time. For this group, what we’re trying to do is to get them to figure out a way just to document what we did do and we’ll get another group in here to talk about documenting why we do it.

What we’re trying to do in the context of routine quality profiles for our surveys is to start incorporating documentation like that, which says, “We tested these questions, we made these decisions. And this is the critical reason why we’ve done x, y, and z.” It’s part of the quality of the data itself, as opposed to the mechanical or computing problem of documenting this piece of software that we’ve built up.

BANKS: This is probably a bad notion, but could one think about doing instrument development and documentation on the fly? You start off with a simple instrument that you think does a good job of capturing most of what you care about, you go out and use it—and one of your respondents says, “Well, I guess I have that sort of income; I’m going to receive a trust after 30, and the trust money gets compounded with interest. Does that count or not?” And nobody has a clue. So what the FR could do is to write down the narrative account of what that issue is, feed it back to the top of the system, and then you make a judgment call as to whether that’s what you mean by “interest income” or not.

DOYLE: What do you think, Susan, can we do that?

SCHECHTER: Well, I would say this. [For] the review process OMB wants to see as many of those scenarios as possible, from those reviews of the instrument, since all of that influences burden. So we’re interested in those observations from the field. But I don’t know how that would solve the documentation problem; it’s more of an enhancement to the process.

BANKS: I think it’s a part of the documentation; on top of the original, you have an “excuse list” explaining why a change got made.

GROVES: A completely different question: on your slides on testing, you had a role for methods researchers and for analysts. Is that a role that goes beyond just checking that the specs they delivered are being executed?

DOYLE: That’s correct; there is a role beyond that.

GROVES: What is it? Are you letting them re-design in the middle of the go?

DOYLE: In the course of developing, our experience has been that we start out and say, “Do this question.” And we implement the question. And then we start to test it and find that, “Oooooo, this isn’t quite working like I thought it would.” So let’s go back and change the specification so it works slightly differently. It also may happen that we’re implementing the automated instrument at the same time that we’re doing cognitive testing, so that while we’re doing cognitive testing and find that these words don’t mean exactly what we thought they would, let’s change them to these other words. And you have to go back and embed it in there. So some of it is an overlap, to try to reduce the overall length of time it takes to do all of this work.

MANNERS: Just a comment; I think it’s important, to follow up on Cathy’s question, to build in your documentation plans while you’re planning and building the instrument, and to build in links to external databases that give rationale and reasons for items.

DOYLE: Absolutely. And one of the benefits that we derive from using the database management systems to develop specifications is that they are bigger than just the specs for the instrument—they have instructions for post-collection processing, so you can tie in that work. And eventually they’re going to be able to accept back in statistics from the fielding, so you get all your frequency counts. There [are] also ways to link back to other documents that provide the research for, say, the cognitive testing for question x. It’s more than just solving the instrument documentation problem.

PARTICIPANT: I think that one thing we seem to be increasingly coming to [is that] at the same time that we’re trying to get better at enforcing having all the specs, and having better specification, I think it