4

Measure for Measure

The committee was charged with assessing the Environmental Protection Agency (EPA) Science To Achieve Results (STAR) program’s scientific merit, its demonstrated or potential impact on the agency’s policies or decisions, and other program benefits that are relevant to the agency’s mission. Assessment implies measurement. EPA, the Office of Management and Budget, and Congress are intensely focused on using metrics as a means of gauging the value of federal research programs. As a result, officials in EPA’s Office of Research and Development (ORD) urged the committee to develop and use metrics in its evaluation of the STAR program.

Because of the breadth and many dimensions of the committee’s task, the committee considered a broad range of metrics in its evaluation of the STAR program. Clearly a one-size metric will not fit all aspects of the program. This chapter provides a foundation for metrics, addressing what they are, how they are used in evaluation, and some considerations that should be given to their selection and use. The motivation for the emphasis on metrics, stemming from the Government Performance and Results Act (GPRA), is discussed. Finally, bibliometric analysis, a common form of metric, is discussed in relation to the STAR program. Appendix C contains examples of metrics used by federal agencies, academe, and state governments in evaluating their research programs.

WHAT ARE METRICS?

Geisler (2000) defines metrics for the evaluation of a research program as “a system of measurement that includes, (1) the item or object that is being measured; (2) units to be measured, also referred to as ‘standard units’; and (3) value of a unit as compared to other units of reference.” Geisler (2000) goes on to clarify this definition as follows:

A refinement of the definition of metric extends it to include: (a) the item measured (what we are measuring), (b) units of measurement (how we measure), and (c) the inherent value associated with the metric (why we measure, or what we intend to achieve by this measurement). So, for instance, the metric peer review includes the item measured (scientific outcomes), the unit of measurement (subjective assessment), and inherent value (performance and productivity of scientists, engineers, and S&T units).

Types of Metrics

Metrics may be classified as quantitative, semiquantitative, and qualitative. For the purpose of this report, the committee characterizes metrics as quantitative or qualitative, grouping the semiquantitative measures with the qualitative.

Quantitative measures, such as the number of peer-reviewed publications resulting from a grant, have the desirable attributes of public availability and reproducibility. A drawback to quantitative metrics is that they tend to be reductive or one-dimensional, measuring a single quantity. As a result, quantitative metrics, although outwardly simpler to use, are not necessarily more informative than qualitative metrics. Quantitative metrics tend to be more useful at lower levels of evaluation, when information tends to be more discrete, such as a review of a specific grant or center, but become less useful as one evaluates higher levels of integration, such as a review of the entire STAR program.

Qualitative metrics have the advantage of being multidimensional, that is, of comprising an intricate and complex set of measures. Therefore, qualitative metrics are more useful for evaluating higher levels of integration, such as an entire research program. Qualitative metrics can have numerical components; for instance, in reviewing grants, a scoring system of 1 to 5 is commonly used, in which the numbers represent such labels as “excellent” and “very good.” Such scores do not have many of the characteristics of normal quantitative evaluations; for example, a 4 is clearly better than a 2, but it is not necessarily twice as good.
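The ordinal character of such review scores can be illustrated with a short sketch. It assumes that 5 is the best score, and the labels for scores 1 to 3 and the sample panel scores are hypothetical (the text names only “excellent” and “very good”). The point is that order-based summaries, such as the median, are meaningful on such a scale, whereas ratios are not.

```python
from statistics import median_low

# Hypothetical label mapping; the source names only "excellent" and "very good".
LABELS = {5: "excellent", 4: "very good", 3: "good", 2: "fair", 1: "poor"}


def summarize(scores):
    """Summarize ordinal review scores using order-based statistics only."""
    for s in scores:
        if s not in LABELS:
            raise ValueError(f"score {s} is outside the 1-5 scale")
    # median_low always returns a value that is actually on the scale.
    mid = median_low(scores)
    return {
        "median_score": mid,
        "median_label": LABELS[mid],
        "best": max(scores),
        "worst": min(scores),
    }


def is_better(a, b):
    """Order comparisons are valid on an ordinal scale; ratios such as a / b are not."""
    return a > b


panel_scores = [4, 2, 5, 4, 3]  # made-up scores from five reviewers
summary = summarize(panel_scores)
print(summary)
print(is_better(4, 2))  # a 4 outranks a 2, but is not "twice as good"
```

The sketch deliberately avoids means and ratios, which would treat the distance between adjacent labels as equal.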

Process and Product Metrics

Metrics may also be classified on the basis of various components of a research program that they are used to evaluate. For instance, Cozzens (2002) defines four types of metrics: input, or money allocated and spent; throughput, or project activities; output, or publications, people, and products; and outcome, or user satisfaction.

The committee chose to use the terms process metrics and product metrics for evaluating the STAR program. Process metrics are used to evaluate the operation or procedures of the STAR program, such as peer review. Product metrics describe the outputs from the program, such as the number of reports, or the influence or effect of the program. In a crude way, process metrics represent internal program assessments and product metrics represent external program assessments.

The emphasis on product metrics is understandable, inasmuch as it is important that programs focus on what they are accomplishing, but process metrics are also important, particularly in warning of possible problems in a research program. A substantial period of time may pass before research programs generate enough products to support a comprehensive review. If such a review indicates serious problems in research results, the processes that led to the problems probably occurred many years earlier. Research managers consider good processes (such as a good peer-review process) to be necessary but not sufficient for ensuring good products.

Attributes of Metrics

It is important that metrics developed for evaluating a program fit together to provide a clear, accurate, comprehensive, and coherent picture. The committee considers some important attributes of metrics to be the following:

- Meaningful. The metrics should be related to topics that the intended audience cares about. The first step in any evaluation should be to identify the audience for which it is being conducted.

- Simple. The metrics and their measurements should be expressed in as simple and concise terms as possible so that the audience clearly understands what is being measured and what the results of the measurement are.

- Integrated. The metrics should fit together to provide a coherent picture of the program being evaluated. The focus should be on performance goals and baseline statements in a way that provides a comprehensive recognition of accomplishments and the identification of information gaps.

- Aligned. The metrics should be solidly aligned and accurately reflect the overall program and agency goals.

- Outcome-oriented. Although some metrics focus on process, the best focus on desired environmental benefits, not only on the completion of tasks.

- Consistent. If multiple programs are being evaluated or the same program is being evaluated over time, the same metrics should be used for the different programs and the different times.

- Cost-effective. Program evaluations can be expensive in the resources required to support them and in the disruption that they can cause to the program being evaluated. The benefits of the information that the metrics provide should be commensurate with the costs required to collect it. It is most cost-effective to use information that is being (or should be) collected to support continuing effective program management.

- Appropriately timed and timely. Some metrics require information to be collected on a continuing basis; others require information to be collected annually or even less frequently. For an evaluation to be accurate, the information has to be up to date. In some cases, that can influence when the evaluation is undertaken to ensure that it is based on current information.

- Accurate. The metrics should promote the collection of information that accurately measures how the program is doing. Inaccurate metrics can seriously skew a program’s performance, inducing it to emphasize accomplishments that show up well according to the metric but that are irrelevant to and perhaps even inconsistent with the program’s goals.

USE OF METRICS IN EVALUATION

The selection of the appropriate metrics necessary to evaluate a research project or program is constrained by the nature of the evaluation, including the purpose of the evaluation (for example, the intended audience and why the evaluation is being prepared), the type of research activity being evaluated, and the nature of the results that are of interest.

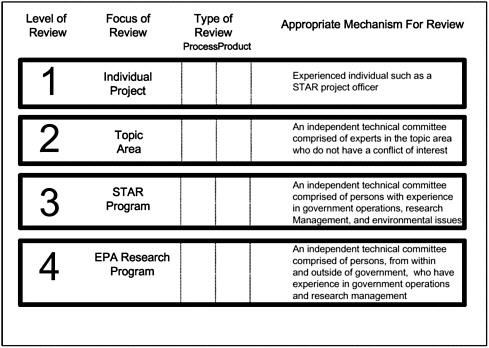

A research program like STAR is multidimensional and therefore should be evaluated on several levels, each level requiring its own set of metrics. The committee considers that a reasonable and logical approach to addressing such complexity in the STAR program encompasses four levels of review (see Figure 4-1). Level 1 focuses on the individual STAR projects and the higher levels of review are more integrative, focusing on research topics (level 2), the overall STAR program (level 3), and the EPA research program (level 4). For each level, two potential types of review may occur: process review and product review. Each type of review draws on metrics pertaining to the level of review. At each level of review, independent experts with appropriate scientific, management, and policy backgrounds would conduct the reviews.

Level 1. The first level of review pertains to an individual research project. Typically, the project officer is responsible for carrying out the process review at this level, which usually includes such issues as ensuring that the work is being conducted on schedule by the appropriate investigators, that annual and other reports are being submitted as required, and that other federal administrative requirements are being satisfied.

A product review is also sometimes carried out for individual research projects. Such a product, or substantive, review is the norm for research being conducted under a contract or cooperative agreement. It is less common for research conducted under a grant. If the project officer has sufficient technical expertise, he or she may conduct both the product review and the process review. However, some organizations bring in an outside expert or even, if the project is unusually large and complex, a team of experts representing the diverse disciplines the project is supposed to be incorporating. At level 1, it is reasonable to conduct both process and product reviews.

At this level, process reviews should be undertaken by people who are familiar with the administrative requirements and the commitments made in the research agreement. Product reviews should be conducted by people who are experienced in conducting research pertaining to the topic and have a knowledge of good research methods.

FIGURE 4-1 Levels of review.

Level 2. The second level of review pertains to a topic, or a group of projects addressing the same general subject (such as particulate matter or ecologic indicators). This is often the most efficient level for conducting product reviews focusing on the substance of the research. Such reviews are typically conducted by an external review committee comprising experts in the subject being reviewed. The experts should have a good overview of the topic and of the scientific research being conducted in the topic. The experts are in a position to judge the quality of the research being conducted, the success that EPA has had in identifying high-priority research that will fill important gaps in the scientific community’s understanding of the topic, and whether the research being sponsored duplicates work that is being or has been done elsewhere.

Such reviews are most productively undertaken when there is a sufficient body of research results to provide a basis for drawing informed conclusions on the issues being addressed. Because of their cost and the disruption that they can cause, they should be undertaken infrequently. These reviews often also consider process issues, but process issues are more efficiently addressed at levels 3 and 4.

Level 3. The third level of review focuses on the operation and management of the research program and its products. It addresses such issues as how effectively the program communicates research opportunities, how objectively it evaluates proposals, how efficiently it awards grants, and how carefully it monitors projects.

The third level of review is often conducted by a panel of experts, including people who have experience in managing research programs, who are familiar with other research programs that complement or compete with the one being reviewed, who are familiar with the institution where the research program is conducted, and, in the case of government research programs, who are familiar with government operating and administrative procedures.

Level 4. The fourth level of review is often undertaken by an expert committee that comprises people with the same characteristics and experience as those in the level 3 review. This committee includes individuals from both within and outside of government. The focus of the fourth level of review, however, is broader. It is concerned less with how the program operates internally than how it is related to the broader institution and how effective it is in responding to the information needs of the organization and other potential users of the research results. The fourth level addresses such issues as whether the research organization has properly identified its clients, how well the research planning process and the definition of specific research topics include the perspectives of potential users, how effective the research organization is in keeping potential users “plugged in” to the research as it progresses, and how well it disseminates research results to potential users. If the institution sponsors multiple research efforts, as is the case with EPA and its various research centers and laboratories, the fourth level of review also addresses how well and efficiently the efforts are coordinated.

There can be other levels of review. For instance, expert committees are sometimes established to review all the information pertaining to a topic of particular interest. Such committees do not focus on the work conducted or sponsored by one institution but rather review information that has been collected in government, in the academic and nonprofit communities, by private businesses, and in foreign countries. Often, the charge given to an expert committee overlaps two or more levels of review. However, asking a committee established at one level to conduct a review that is more appropriate for another level is likely to result in inefficiencies and may not provide the degree of insight desired.

The present committee’s own evaluation focused on the overall STAR program—that is, level 3—considering the operation and management of the STAR research program. But the committee also considered the STAR program in relation to ORD and to EPA as a whole (level 4). (The committee’s evaluation of the STAR program is addressed in Chapter 5.)

Caveats Regarding the Use of Metrics

The committee urges caution with respect to the use of metrics in evaluating research programs, because there is an inherent danger of measuring too much and too often. As Geisler (2000) states, “metrics selected should be able to measure what the evaluators wish to be measured. They should be intimately linked to the objectives and motives of the evaluators ... If this criterion is not met, the metrics become a set of irrelevant and useless quantities.” Many others have issued cautions regarding the use and application of metrics. For instance, the National Research Council Committee on Science, Engineering, and Public Policy (COSEPUP) sounded the following warning (NRC 1999):

It is important to choose measures well and use them efficiently to minimize non-productive efforts. The metrics used also will change the behavior of the people being measured. For example, in basic research if you measure relatively unimportant indicators, such as the number of publications per researcher instead of the quality of those publications, you will foster activities that may not be very productive or useful to the organization. A successful performance assessment program will both encourage positive behavior and discourage negative behavior.

BIBLIOMETRIC ANALYSIS

One evaluation tool that has gained much currency is bibliometric analysis. The committee commissioned such an analysis for a small subset of STAR-funded research to help to assess the utility of this technique in evaluating the STAR program (IISCO, Atlanta, GA, unpublished material, 2002).

Bibliometric analysis is based on the premise that a researcher’s work has value when it is judged by peers to have merit (NRC 1999), and it is commonly used in research evaluations because it seeks to measure research productivity by quantifying publication outputs and citations. The popularity of bibliometrics stems from its quantitative nature; it lends itself readily to “ranking.” It can be used in the identification of productive people, institutions, and even countries and in the charting of trends in research (for example, endocrine disruptors is a term that has recently come into fashion). Bibliometric analysis is relatively inexpensive (Geisler 2000).

Bibliometrics has a number of limitations. When a given author’s publications are counted, the extent to which specific publications are derived from a particular research project is rarely clear. Furthermore, some disciplines publish more than others, and some researchers publish more than others.

It is also important to consider the quality of the journal within each discipline in which an article is published. Articles accepted by a prestigious journal can have more influence and should be more highly valued in a bibliometric analysis than articles published in less prestigious journals.

Some materials are covered less fully than others in bibliometrics. Published articles are only one measure of the output of scientific activity, and bibliometrics does not cover, for example, electronic communications, chapters in books, and abstracts.

Additional limitations include the current inability to screen text. Word references are typically based on key words; citations of multiauthor articles tend to be truncated to two or three authors; and in highly collaborative, cross-disciplinary applied research (such as that sponsored by the STAR program), results are published in diverse journals—for instance, research addressing environmental causes of childhood asthma can appear in journals dealing with buildings, general medicine, toxicology, epidemiology, molecular biology, agriculture, or social science (Geisler 2000). Thus the particular abstracts included in the analysis and the key words used to search the abstracts can have an important influence on the publication count. In the analysis undertaken for the committee (discussed below), some of the articles published by some of the researchers did not appear; the journals in which the articles were published were not included in the collections of abstracts that were searched, or the abstracts did not include the specific key words that were used for the search.

Cross-disciplinary comparisons of counts of publications or citations can also be misleading because researchers in some disciplines tend to publish journal articles more frequently than those in others. If a discipline supports a number of journals, essentially the same article can be repeated by someone who knows how to play the publication game.

The citation-analysis component of bibliometric analysis can avoid some of the problems just noted; for instance, an article published in a prestigious journal is likely to be cited more frequently than one published in an obscure journal. However, citation analyses have their own limitations. One is that they can be biased by an inordinate amount of self-citation and citations by “friends” (Geisler 2000). A high rate of citation does not necessarily provide a measure of quality. For example, an article that contains a serious error or is otherwise controversial may be cited frequently by researchers eager to demonstrate its failings.
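The self-citation bias described above can be made concrete with a small sketch. It is an illustration only, not the method used in the analysis commissioned by the committee: the `Paper` type, the author names, and the rule that any shared author marks a self-citation are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Paper:
    """Hypothetical record for a publication; not taken from any citation database."""
    title: str
    authors: frozenset


def count_citations(target, citing_papers):
    """Return (total, independent) citation counts for `target`.

    Assumption: a citation is a self-citation when the citing paper
    shares at least one author with the cited paper.
    """
    total = len(citing_papers)
    independent = sum(
        1 for paper in citing_papers if not (paper.authors & target.authors)
    )
    return total, independent


target = Paper("Hypothetical STAR-funded article", frozenset({"Lee", "Ortiz"}))
citing = [
    Paper("Follow-up by the same group", frozenset({"Lee", "Chan"})),
    Paper("Independent replication", frozenset({"Novak"})),
    Paper("Critical comment", frozenset({"Silva", "Baker"})),
]

total, independent = count_citations(target, citing)
print(total, independent)
```

A count of this kind removes only self-citations. It cannot detect citations by “friends,” and it still counts a critical comment as a citation, so it does not address the point that a high citation rate is not a measure of quality.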

Cross-disciplinary comparisons can pose a problem in citation analyses. Disciplines have their own citation traditions. In some fields, such as internal medicine, the senior collaborator is the last author listed and therefore often does not appear in citations that list only the first three authors. In other fields, the primary writer is the first author and the senior collaborator the second author. In mathematics, it is traditional to list authors alphabetically. Interpreting a bibliometric or citation analysis properly requires knowledge of the traditions of a particular research field.
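The effect of authorship conventions on truncated citations can be sketched as follows. The convention names, the function names, and the author list are hypothetical; the three conventions correspond to the examples given in the text (senior collaborator last, senior collaborator second, and alphabetical order).

```python
def senior_author(authors, convention):
    """Return the senior collaborator implied by a field's convention, or None."""
    if convention == "senior_last":
        return authors[-1]
    if convention == "senior_second":
        return authors[1] if len(authors) > 1 else authors[0]
    if convention == "alphabetical":
        # Alphabetical order carries no information about seniority.
        return None
    raise ValueError(f"unknown convention: {convention}")


def senior_is_credited(authors, convention, limit=3):
    """Check whether the senior author survives truncation to `limit` names."""
    senior = senior_author(authors, convention)
    return senior is not None and senior in authors[:limit]


authors = ["Adams", "Brown", "Clark", "Davis", "Evans"]  # made-up names
print(senior_is_credited(authors, "senior_last"))    # False: "Evans" is cut off
print(senior_is_credited(authors, "senior_second"))  # True: "Brown" is retained
print(senior_is_credited(authors, "alphabetical"))   # False: seniority is unknown
```

The same five-author article therefore credits or omits its senior collaborator depending only on the field's convention, which is why a citation count cannot be interpreted without knowing that convention.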

Finally, an article on a topic that many researchers are working on may be widely cited, whereas an article on a new topic or filling an information gap that is being ignored by other researchers may not be, even though the latter may constitute a more important contribution to the state of the science.

Geisler (2000) states that the “consensus among the critics is that the metric has some merit, but its value as a ‘stand-alone’ metric is doubtful.” The committee agrees with that assessment and recommends the use of bibliometric analysis only to support expert reviews; review by a group knowledgeable about a specific research topic will assist in placing the results of bibliometric analysis in the context of the current state of scientific knowledge.

A bibliometric analysis commissioned by the committee analyzed results of grants awarded in response to two requests for applications: 10 ecologic-indicators grants and eight endocrine-disruptor grants funded by STAR in 1996. The assessment indicated that the number of publications by STAR grantees was comparable with that by other researchers in the fields and that the grantees were producing high-quality work. A citation analysis (see Table 4-1) indicated that the rate of citations of STAR-funded research was similar to that of other research in the field.

TABLE 4-1 Mean Citations for STAR-Funded Research and Other Research in the Fields of Ecologic Indicators and Endocrine Disruptors^a

| Year^b | Ecologic Indicators: Other Research | Ecologic Indicators: STAR Research | Endocrine Disruptors: Other Research | Endocrine Disruptors: STAR Research |
|---|---|---|---|---|
| 1997 | 10.3 | 10.5 | 13.2 | 37.8 |
| 1998 | 8.6 | 7 | 5.9 | 2.6 |
| 1999 | 5.3 | 7.7 | 7.8 | 6.9 |
| 2000 | 2.7 | 2.8 | 3.3 | 2.9 |
| 2001 | 1.1 | 0.7 | 1.2 | 0.4 |

^a Identification of STAR-funded research publications is based on investigators’ judgment.
^b Papers published earlier can accrue more citations than those published more recently.
Source: IISCO, Atlanta, GA, unpublished material, 2002.

Overall, the committee concluded that it is essential for bibliometric analysis to be done in conjunction with expert review to assess its quality and relevance, inasmuch as the method does have inherent limitations. That conclusion supports a similar recommendation made by COSEPUP (NRC 1999). The committee also believes that the STAR program is too young for bibliometric analyses to be an effective metric for research funded beyond the initial years of the program.
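The year-by-year comparison in Table 4-1 can be reproduced with a few lines of arithmetic. The sketch below copies the mean citation values from the table; the function names are hypothetical. Because the table reports only means, with no paper counts or variances, the sketch compares values within each year and makes no claim about statistical significance.

```python
# Mean citations per year from Table 4-1: year -> (other research, STAR research)
ECOLOGIC = {
    1997: (10.3, 10.5),
    1998: (8.6, 7.0),
    1999: (5.3, 7.7),
    2000: (2.7, 2.8),
    2001: (1.1, 0.7),
}
ENDOCRINE = {
    1997: (13.2, 37.8),
    1998: (5.9, 2.6),
    1999: (7.8, 6.9),
    2000: (3.3, 2.9),
    2001: (1.2, 0.4),
}


def star_to_other_ratios(table):
    """Ratio of STAR to other mean citations, computed within each year."""
    return {year: round(star / other, 2) for year, (other, star) in table.items()}


def years_star_ahead(table):
    """Years in which the STAR mean exceeds the mean for other research."""
    return [year for year, (other, star) in table.items() if star > other]


for name, table in (("Ecologic indicators", ECOLOGIC), ("Endocrine disruptors", ENDOCRINE)):
    print(name, star_to_other_ratios(table), years_star_ahead(table))
```

Comparing within a year matters because, as the table's second footnote states, earlier papers have had more time to accrue citations; pooling years would mix papers of different ages.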

GOVERNMENT PERFORMANCE AND RESULTS ACT

Much of the recent focus on the use of metrics to evaluate research programs stems from the Government Performance and Results Act (GPRA) of 1993. GPRA is intended to focus agency and oversight attention on the outcomes of government activities, so as to ensure the accountability of federal agencies. To that end, it requires each agency to produce three documents: a strategic plan that establishes long-term goals and objectives for a 5-year period, an annual performance plan that translates the goals of the strategic plan into annual targets, and an annual performance report that demonstrates whether an agency’s targets have been met. Federal research agencies have developed various planning processes in response to GPRA.

Although GPRA offers agencies the opportunity to communicate to policy-makers and the public the rationale for and results of their research programs, it has created substantial challenges for many research agencies (GAO 1997; NRC 1999). Results of research activities are unpredictable and long-term; this places limitations on the use of roadmaps or milestones of progress. Annual performance measures tend to focus on less important findings rather than major scientific or technologic discoveries or advances (Cozzens 2000). Research agencies face other challenges with respect to the political environment surrounding GPRA. The Office of Management and Budget (OMB) wants GPRA to provide it with measures of good management at agencies. However, for research agencies, focusing on good management, although important, does not necessarily produce research results for the public (Cozzens 2000).

In 1999, Congress asked COSEPUP to provide guidance on how to evaluate federal research programs relative to GPRA. The request was in response to the difficulties that federal research agencies were having in linking results with annual investments in research.

COSEPUP concluded that federal research programs can be usefully evaluated regularly in accordance with the spirit and intent of GPRA. However, useful outcomes of basic research cannot be measured directly on an annual basis. The COSEPUP report cautioned that evaluation methods must be chosen to match the character of research and its objectives (NRC 1999). The report concludes that quality, relevance, and leadership are useful measures of the outcome of research (particularly basic research). Federal agencies should use expert review to assess the quality of the research they support, its relevance to their missions, and its leadership (NRC 1999).

In February 2002, OMB proposed preliminary investment criteria that could be used for evaluating federal basic-research programs. The criteria—quality, relevance, and leadership—were a combination of criteria suggested by COSEPUP and by the Army Research Laboratory (ARL); ARL had selected quality, relevance, and productivity as relevant metrics for evaluating programs of basic and applied research (OMB 2002).

The importance of the OMB criteria was emphasized in a May 2002 memorandum from John H. Marburger, director of the Office of Science and Technology Policy, and Mitchell Daniels, director of OMB. The memorandum included a slightly revised set of evaluation criteria and directed agencies to use the new R&D investment criteria—covering quality, relevance, and performance—in their FY 2004 R&D budget requests (see Table 4-2).

TABLE 4-2 Office of Management and Budget Research and Development Investment Criteria

| Criterion | Requirement |
|---|---|
| Quality | R&D programs must justify how funds will be allocated to ensure quality R&D. Programs allocating funds through means other than a competitive, merit-based process must justify the exceptions and document how quality is maintained. |
| Relevance | R&D programs must be able to articulate why this investment is important, relevant, and appropriate. Programs must have well-conceived plans that identify program goals and priorities and identify linkages to national and “customer” needs. |
| Performance | R&D programs must have plans and management processes in place to monitor and document how well this investment is performing. Program managers must define appropriate outcome measures and milestones that can be used to track progress toward goals and assess whether funding should be enhanced and redirected. |

The criteria are intended to apply to all types of R&D, including applied and basic research. However, the memo notes that the administration is aware that predicting and assessing the outcomes of basic research is never easy. The extent to which the criteria are to take the place of GPRA is not clear. As the memo states, “these criteria are intended to be consistent with budget-performance integration efforts. OMB encourages agencies to make the processes they use to satisfy GPRA consistent with the goals and metrics they use to satisfy these R&D criteria. Satisfying the R&D performance criteria for a given program will serve to set and evaluate R&D performance goals for the purposes of GPRA” (OSTP/OMB 2002).

Table 4-3 compares the OMB criteria of quality, relevance, and performance with the recommendations of the COSEPUP report, EPA’s Office of Research and Development strategic goals (Chapter 2), and the STAR program goals (Chapter 2). From Table 4-3, it is evident that OMB’s R&D criteria are not separate from those of COSEPUP, ORD, or STAR, but rather comprise many of these other criteria or goals. Examining the OMB criteria in this context underscores the fact that the criteria encompass the objectives of EPA’s mission and fall within the research criteria and goals established by COSEPUP and STAR.

TABLE 4-3 Comparison of Research Criteria and Goals

| OMB^a | COSEPUP^b | 2001 ORD Strategic Plan^c | 1995 STAR Goals^d |
|---|---|---|---|
| Quality | Quality | | Achieve excellence in research |
| | World leadership | Science leadership | |
| Relevance | Relevance | Support agency’s mission | Focus on highest-priority mission-related needs |
| | Progress toward practical outcomes | Integrate science and technology to solve problems | Integrate and communicate results |
| | | Anticipate future issues | |
| Performance | | High performance | High accountability and integrity |
| | | | Partnerships and leveraging |
| | Develop and maintain human resources | | Develop next generation of environmental scientists |

^a OMB 2002. ^b NRC 1999. ^c EPA 2001. ^d P. Preuss, presentation to the National Research Council committee, March 18, 2002.

To understand how metrics can be used to evaluate programs effectively, the committee reviewed metrics used by other organizations in federal and state governments and academe. Chapter 3 identified some of the efforts of federal agencies, and additional efforts by other federal agencies and state governments and academe are summarized in Appendix C.

Review of the metrics discussed in Appendix C indicated that federal research programs tend to focus more on the collection of product metrics than on process metrics. In federal research programs, there tends to be a presumption that peer review is necessary to ensure a successful program, but relatively little discussion of who is responsible for conducting it. The Air Force, however, uses a highly quantitative evaluation process, with the reviews being conducted by the Air Force Scientific Advisory Board.

Evaluations at the state level are driven principally by economic considerations. There tends to be little targeting of specific research topics except in broad terms, such as nanotechnology. Many of the evaluations are based on surveys of participating institutions and on data routinely collected at the state level, such as numbers of students enrolled in institutions of higher learning. The Experimental Program to Stimulate Competitive Research (EPSCOR) of the National Science Foundation produces a level of standardization that allows comparison of R&D efforts across states and across time. The standardization provides consistency, an important attribute of metrics.

In the committee’s view, none of the evaluation programs has identified the “silver bullet” that will provide an unambiguous measure of the quality of a research program, and many of the organizations continue to wrestle with the problem of how to evaluate their research programs effectively without imposing undue costs or disruptions.

In Chapter 5, the committee uses OMB’s R&D criteria to evaluate the quality, relevance, and performance of the STAR program, as these criteria are to be used by government agencies in assessing their research programs for the FY 2004 budget (OSTP/OMB 2002). In its evaluation, the committee reviewed a large number of potential metrics used by EPA and other organizations and selected a small set to evaluate the STAR program. The metrics are classified as process or product metrics. Because the committee conducted a process-oriented review (level 3), the metrics used in the evaluation tended to be more qualitative than quantitative.

CONCLUSIONS

• On the basis of its review of numerous metrics being used to gauge research programs in and outside EPA, the committee concludes that there are no “silver bullets” when it comes to metrics. The committee concludes that expert review undertaken by a group of persons with appropriate expertise is the best method of evaluating the STAR research program. The types of experts needed will depend on the level of review being conducted. The use of expert review is supported by recommendations made by COSEPUP and the practices of federal research agencies.

• Expert review panels should be used for evaluating the processes and products of the STAR program. Both process and product reviews are important but should be conducted at the appropriate program levels. A good process is generally necessary but not sufficient to ensure a good product. Thus, product reviews are necessary to ensure that a program is producing high-quality results.

• The committee recommends that the STAR program consider establishing a structured schedule of expert reviews that has four levels: level 1, individual research projects; level 2, topics or groups of research projects; level 3, the entire STAR program; and level 4, the question of how the STAR program is related to the broader institutions of ORD and EPA. Each level should have its own set of metrics. As reviews move to higher levels of program evaluation (from level 1 to level 4), integration becomes more important, and metrics become more qualitative than quantitative.

• The expert reviews should use qualitative and quantitative metrics to support their evaluations. Both types of metrics are valuable in assessing research projects and programs. Quantitative metrics, although outwardly simpler to use, are not necessarily more informative than qualitative metrics. In fact, a numerical veneer can often be more difficult to interpret and less transparent in that it may hide unsuspected idiosyncrasies, such as incomplete reporting, different academic practices and evaluations, and interpretations that vary over time. Metrics that do not clearly reflect a program’s purposes and goals can seriously skew its performance. There is truth in the adage that “what you measure is what you get.”

• Bibliometric analysis is important for program evaluation, but it must be conducted in conjunction with expert review; expert review will assist in placing the results of bibliometric analysis in the context of the current state of scientific knowledge.

REFERENCES

Cozzens, S. 2000. Higher education research assessment in the United States. Part 2 County Case-Study B in Valuing University Research: International Experiences in Monitoring and Evaluating Research Output and Outcomes, T. Turpin, S. Garrett-Jones, D. Aylward, G. Speak, R. Iredale, and S. Cozzens, eds. The Centre for Research Policy, University of Wollongong, Canberra [Online]. Available: http://www.dest.gov.au/archive/highered/respubs/value/susan cozintro.htm [accessed Jan. 22, 2003].

Cozzens, S.E. 2002. The Craft of Research Evaluation. Presentation at the Second Meeting on Review EPA’s Research Grants Program, April 25, 2002, Washington, DC.

EPA (U.S. Environmental Protection Agency). 2001. ORD Strategic Plan. EPA/600/R/01/003. Office of Research and Development, U.S. Environmental Protection Agency, Washington, DC. [Online]. Available: http://www.epa.gov/ospinter/strtplan/documents/final.pdf [accessed Jan. 22, 2003].

GAO (U.S. General Accounting Office). 1997. The Government Performance and Results Act: 1997 Governmentwide Implementation Will be Uneven. GAO/GGD-97-109. Washington, DC: U.S. General Accounting Office.

Geisler, E. 2000. The Metrics of Science and Technology. Westport, CT: Quorum Books.

NRC (National Research Council). 1999. Evaluating Federal Research Programs: Research and the Government Performance and Results Act. Washington, DC: National Academy Press.

OMB (Office of Management and Budget). 2002. OMB Preliminary Investment Criteria for Basic Research. OMB Discussion Draft, February 2002.

OSTP/OMB (Office of Science and Technology Policy/Office of Management and Budget). 2002. FY 2004 Interagency Research and Development Priorities. Memorandum for the Heads of Executive Departments and Agencies, from John Marburger, Director, Office of Science and Technology Policy, and Mitchell Daniels, Director, Office of Management and Budget, The White House, Washington, DC. May 30, 2002.

Preuss, P.W. 2002. National Center for Environmental Research, History, Goals, and Operation of the STAR Program. Presentation at the First Meeting on Review EPA’s Research Grants Program, March 18, 2002, Washington, DC.