3

Competitive Grant Programs in Other Federal Agencies

To assess the progress of the Environmental Protection Agency (EPA) Science To Achieve Results (STAR) program in establishing a competitive grants program, the committee examined the procedures put into place by other federal agencies. The committee was tasked specifically with addressing the STAR program in relation to other research grants programs. The agencies selected for review were ones that have partnered with STAR in supporting joint research. They represent a broad spectrum of basic and applied research agencies (see Box 3-1).

The National Science Foundation (NSF) and National Institutes of Health (NIH) are often thought of as organizations whose principal mission is to support basic research and the progress of science itself. However, other agencies that have defined service missions related to national security and public welfare also have a long history of supporting fundamental and exploratory research, which informs their missions and is supported by academic expertise. All those agencies have established competitive, peer-review processes to evaluate and select individual investigator and multi-investigator projects. Depending on the mission and the expected effects of the research, the agencies have differing approaches to reviewing their projects and programs and disseminating the resulting information.

This chapter describes the program-management procedures of the agencies listed in Box 3-1. It addresses the following research-management activities: planning, opportunity communication, proposal review process, agency involvement during implementation, project and program evaluation, and dissemination of results. It is important to note that those research activities often overlap and have strong feedback mechanisms as they are carried out by agency program managers. Planning affects project review criteria, project and program evaluation feeds into planning, and so on. Table 3-1 summarizes the procedural aspects of the various research programs that are reviewed here in comparison with that of the STAR program. The committee did not address the issue of cost comparisons to operate the various federal research programs, as this was not part of its charge. Information in this chapter comes from individual discussions with and presentations by agency personnel and from publicly available documentation from the agencies.

BOX 3-1 Federal Agencies and Their Research Grants

TABLE 3-1 Summary of Extramural Grants Programs

| Program | Planning | Communication | Proposal Review | Implementation | Evaluation | Dissemination |
| --- | --- | --- | --- | --- | --- | --- |
| EPA STAR | Planning process is variable and highly dependent on specific program; programs are conducted within context of multiyear plans and budget plans that are revised at least annually but generally not reviewed externally. | RFA is published in Federal Register; program offices post the RFA on Web site and announce at professional meetings; in some cases, program officer sends information via NCER listserv to interested parties. | Peer-review process is conducted by NCER’s peer-review division, which handles review of proposals. | POs do not have much ability to influence implementation; there is some opportunity for coordination and integration with researchers; however, this varies from PO to PO. | Evaluations have been done on a program-specific basis and have been conducted by EPA’s SAB, BOSC, and GAO. | Methods of dissemination include “synthesis” reports, “state of the science” reports, and annual progress meetings. |
| NSF | Strategic outcome goals (people, ideas, and tools) set context for investment priorities; priorities are defined by community pressure, disciplinary consensus agendas, professional meeting agendas, review panels and advisory committees, staff input, director’s office and White House guidance. | RFAs are broadcast through Federal Register and NSF Web site. Competitions for center grants and those associated with interagency partnerships are announced through RFAs. Most proposals to NSF are unsolicited and investigator-initiated. | Volunteers participate in NSF review process through combination of panel review, letter review, and site visits as appropriate; POs play important role in process. | Grant mechanism provides for arm’s-length relationship during course of relationships among investigator, institution, and NSF; PO may choose to visit investigator during course of grant. | Projects are evaluated by publications in refereed journals, presentations at professional meetings, involvement of students, advisory committees, and committees of visitors. | Results are disseminated through journals, professional meetings, and regular meetings of grantees and contractors. |
| NIEHS | Annually, NIEHS divisions undertake planning process to identify areas of emphasis for coming fiscal year; areas of emphasis may be reflected in changes to general program announcement that NIEHS releases; the institute may also decide to release specific RFA for one-time competition in well-defined scientific field. | Program announcements and RFAs are released through standard federal publications and on institute’s Web site. Majority of 40,000 proposals received by NIH are unsolicited. | Unsolicited proposals and responses to general program announcements are reviewed through CSR. Proposals that respond to RFAs are reviewed within specific institute. | Grant mechanisms provide for arm’s-length relationship between investigator and institute; institutes use cooperative agreements and contracts that provide institute staff with considerably more influence; institute staff may choose to visit investigator during course of project with mutual benefit. | Projects are evaluated by publications in refereed journals, presentations at professional meetings, involvement of students, institute’s National Advisory Council, and its Board of Scientific Counselors. | Results are disseminated through journals and professional meetings; NIH planning meetings also bring together researchers to report on their work and to provide interactions that may result in future proposals. |
| DOE BER | Factors considered in planning: national need, DOE mission need, opportunities consistent with DOE capabilities and mission, critical scientific gaps, and congressional mandate; mechanisms used to define priorities: FACA advisory committee, commissioned scientific assessments, scientific workshops, program review findings, and recommendations and staff interactions with scientific community. | Continuing program announcement for entire office of science is published once a year through Federal Register and SC Web site; BER-specific solicitations are publicized in trade and professional journals and in Federal Register and SC Web site. | Mechanisms for peer review: individual mail reviews, ad hoc committees, or standing committees as appropriate or required by legislation; each application is reviewed by at least three qualified reviewers and conflict-of-interest issues are overseen by program manager. | Grant mechanism provides for arm’s-length relationship between official and investigator; DOE program manager visits investigators at both universities and national laboratories on regular basis; BER programs also bring university and laboratory investigators together at regular meetings. | BER conducts retrospective reviews of its research in both grant programs and laboratory research; these reviews are conducted by its FACA committees and in some cases the JASON organization and NRC boards and committees. | Publication and meeting presentations are significant measures of individual projects. Participation by principal investigators in international or interagency coordination committees is also a measure of project results. |
| NRI | Opportunities are identified through proposal submissions of research communities and response and internal discussions of NRI peer-review panels; planning process includes staff participation in interagency coordination committees, regular staff meetings with commodity groups and agricultural coalition organizations, and congressional input through NRI budget process. | NRI program description is made available in printed form, on the Internet, in the Federal Register, and at scientific meetings and conferences. | Proposals are assigned to three panelists and four to six ad hoc reviewers; panel meeting is held to discuss and rank proposals from 1 to 6; awards are based on panel’s ranking; NRI staff finalize budget but do not adjust individual project budgets. | Although initial terms and conditions govern performance of grant, there is no official mechanism for NRI program managers to direct principal investigators during term of grant. | NRC has provided reviews of NRI in 1994 and 2000; initiative also evaluates itself against CREES’s GPRA outcomes by providing appropriate descriptions of project impacts on those outcomes; NRI Board of Directors provides oversight of NRI policy retrospectively and prospectively. | Dissemination activities include publication in refereed journals, presentations, scientific conferences, graduate students, NRI staff presentations of results at meetings with various USDA stakeholders, and preparation and distribution of one-page summaries of particular results. |
| NASA | The following contribute to NASA strategic plan: research divisions, standing committees of NASA Advisory Council that undertake program reviews and planning activities that affect divisions’ plans, congressional direction through budget process, and studies of NRC Space Studies Board and Aeronautics and Space Engineering Board. | NASA research announcements are communicated on NASA Web site, in Commerce Business Daily, and Federal Register; community involvement is part of communication effort. | Letter and panel reviews are used; also, NASA uses support-service contractor to carry out logistics of peer review; contractor maintains proposal and reviewer databases; program manager suggests reviewers and works with contractor to ensure conformance with conflict-of-interest rules. | Program managers’ interaction with grantees is similar to that in other agencies; however, program managers often take more active role by organizing formal and informal meetings, including professional-society meetings. | NAC conducts annual reviews of research divisions to assess their performance against their GPRA measures; NAC standing committees do retrospective reviews of specific elements of divisions; NASA also establishes external ad hoc review committees; NRC has reviewed specific NASA programs (for example, through Space Studies Board and Aeronautics and Space Engineering Board). | NASA has statutory mandate and receives specific funding for publicizing its mission and its results; results are disseminated through journals and professional meetings and to public media. |
| NOAA | Each NOAA unit contributes to strategic plan; OAR receives considerable input from major research universities, environmental managers, and general public via Sea Grant network in each coastal state; input is coordinated by three OAR Assistant Deputies for agency cross-cutting and interdisciplinary research activities. | Research opportunities are communicated in Federal Register, Commerce Business Daily, direct mailings, and NOAA, the Joint Institutes Program, and Sea Grant Web sites. | Letter reviews and panel reviews are used; reviewers are asked to evaluate proposals on basis of scientific merit, whether proposed work addresses Agency’s mission, and mission of division; additional considerations include cost, opportunities for outreach activities, qualifications of investigators and key scientific personnel, and institutional … | Strong incentives exist for interaction between program managers to visit grantees, stay abreast of program issues, and ensure prompt input into the research results; Sea Grant program also provides opportunities for interaction between researchers at different … | Joint Institute Program, Arctic Research Program, and Office of Global Change Program are all evaluated via regular peer-review visits. | Research is disseminated through workshops, scientific meetings, and journal articles; because Sea Grant College Program’s mission includes research, outreach, and educational components, it has developed criteria and benchmarks for “connect … |

THE NATIONAL SCIENCE FOUNDATION

NSF is the principal federal agency with a mission of promoting the progress of science in support of the national welfare and defense (Firth 2002). It is structured to reflect the scientific and engineering disciplines. Its FY 2002 budget was about $4.5 billion, of which about $825 million supported the Working Group on Environmental Research and Education (ERE 2003).

Planning

With respect to priority-setting and planning, NSF has three strategic outcome goals (Firth 2002):

- People. Developing a diverse, internationally competitive and globally engaged workforce of scientists, engineers, and well-prepared citizens.

- Ideas. Enabling “discovery across the frontier of science and engineering connected to learning, innovation, and service to society.”

- Tools. Providing broadly accessible, state-of-the-art and shared research and education tools.

Those goals set the context for research priorities that are defined in various ways through community pressure, disciplinary consensus agendas, professional-meeting agendas, review panels and advisory committees, staff input, and Director’s Office and White House guidance (for example, in interagency budget initiatives).

Opportunity Communication

Requests for applications (RFAs) are used and broadcast through the Federal Register and the NSF Web site. Competitions for center grants are also announced through RFAs, as are competitions associated with interagency partnerships. However, most proposals to NSF are still unsolicited and investigator-initiated. As priorities change in the planning process, new competitions may be initiated, new emphases may be established, and other emphases may be terminated.

Proposal Review

NSF reviews about 30,000 proposals per year and makes 10,000 new awards to 2,000 colleges, universities, elementary and secondary schools, nonprofit institutions, and small businesses. Around 50,000 scientists and engineers participate as volunteers in the NSF review process through a combination of panel reviews, letter reviews, and site visits. Expenses are covered, and a small honorarium is provided, but reviewers are not compensated for their time.

The role of the program officer (PO) is important in this process. The POs are subject-matter experts and know the communities from which reviewers should be chosen to provide an effective and fair review. POs oversee the NSF conflict-of-interest and confidentiality policy, but how well the process operates depends ultimately on the honesty of the reviewers.

Implementation

The NSF grant mechanism provides for an arm’s-length relationship among the investigator, the institution, and NSF. The PO may choose to visit the investigator during the course of the grant, and such interactions may be mutually beneficial to the PO and the researcher. The investigator gains the PO’s broader perspective on progress in the research community; the PO gains additional and prompt information that may affect future research-program directions.

Evaluation

Individual projects are evaluated on the basis of publications in refereed journals, presentations at professional meetings, and participation of students in the research. The success of a program or division in supporting a particular field of research or in developing a new multidisciplinary research field may be evaluated by advisory committees or by a more focused committee of visitors. Such committees assess not only the progress of the projects and the overall program but also the success of the POs in reviewer selection and process efficiency.

Dissemination of Results

In general, results of NSF-supported research are disseminated through the normal mechanisms of the research community: journals and professional meetings. However, for interdisciplinary and interagency programs, regular meetings of grantees and contractors may also be called by project officers to ensure effective communication and the development of useful networks and collaborations.

NATIONAL INSTITUTE OF ENVIRONMENTAL HEALTH SCIENCES IN NIH

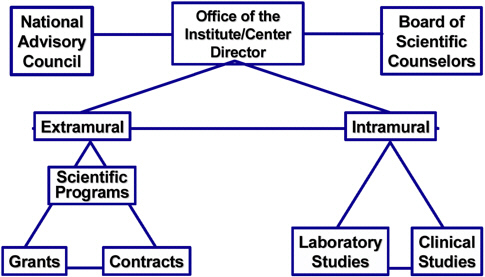

The National Institute of Environmental Health Sciences (NIEHS) is one of 20 institutes and seven centers of NIH. Each institute and center is organized similarly, as indicated in Figure 3-1. Each institute supports intramural and extramural research. Across the institutes in 2001, nearly 90% of the $18.6 billion budget supported extramural research. The institutes use a variety of procurement mechanisms: grants, cooperative agreements, and contracts.

NIEHS’s mission is to reduce the burden of human illness and disease from environmental causes. It examines the character and interrelated effects of environmental exposures, individual susceptibility, age, and length of exposure. NIEHS supports research that ranges from fundamental research in molecular toxicology to the study of disease effects and includes prevention and economic consequences (Thompson 2002). NIEHS’s FY 2001 budget was about $1.47 billion.

NIEHS has collaborated with STAR since 1995 in research on endocrine disruptors and complex chemical mixtures. NIEHS and EPA are cosponsors of the 12 centers for children’s environmental health.

Planning

NIEHS has identified several topics of interest for long-term investment:

-

Genomic imprinting and environmental disease susceptibility.

-

Fetal origins of adult diseases.

-

Oxidative stress and dietary modulation.

-

Human health effects of complex mixtures.

-

Environmental factors in diseases of concern in women and men.

-

Ethical, legal, and social implications of human genetics and genomics research.

FIGURE 3-1 A typical NIH institute or center. Source: C. Thompson, NIEHS, presentation to National Research Council committee, April 25, 2002.

Each year, individual NIEHS divisions undertake a planning process to identify emphases for the coming fiscal year. The planning process takes into account progress in the scientific community, the investments of other agencies, transinstitute activities, congressional directives, and the views of program staff. Changes in emphases may be reflected in changes in the general NIEHS program announcement. The institute may also decide to release a specific RFA for a one-time competition in a well-defined scientific field.

Opportunity Communication

Program announcements and RFAs are released through standard federal publications and on the institute’s Web site. This is similar to mechanisms used by NSF. Competing grant applications are received for three review cycles per year.

Proposal Review

The vast majority of the 40,000 proposals received by NIH are unsolicited and reflect the judgment of individual investigators as to their interests and the best opportunities for outstanding science. Overall, 25-30% of the proposals are funded.
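To put the proposal volumes quoted in this chapter on a common footing, the short Python sketch below works through the arithmetic. It is an illustration only: the figures are the approximate ones stated in the text, the variable and function names are invented for this example, and the NSF ratio assumes that the 30,000 proposals and 10,000 new awards refer to comparable annual cohorts, which the text does not confirm.

```python
# Approximate figures as quoted in this chapter; names are illustrative only.
NSF_PROPOSALS = 30_000            # proposals reviewed per year
NSF_AWARDS = 10_000               # new awards per year
NIH_PROPOSALS = 40_000            # proposals received by NIH
NIH_FUNDED_SHARE = (0.25, 0.30)   # "25-30% of the proposals are funded"


def success_rate(awards: int, proposals: int) -> float:
    """Fraction of proposals that result in an award."""
    return awards / proposals


# Assumption: awards and proposals are drawn from the same annual cohort.
nsf_rate = success_rate(NSF_AWARDS, NSF_PROPOSALS)

# Convert the NIH funded share into an implied range of award counts.
nih_awards_low, nih_awards_high = (
    round(NIH_PROPOSALS * share) for share in NIH_FUNDED_SHARE
)

print(f"NSF: about {nsf_rate:.0%} of proposals funded")
print(f"NIH: roughly {nih_awards_low:,} to {nih_awards_high:,} awards")
```

Under these assumptions the two agencies fund a broadly similar share of the proposals they receive, roughly a quarter to a third.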

Two review processes are carried out by the institutes. Unsolicited proposals and responses to the general program announcements are reviewed through the Center for Scientific Review (CSR). Proposals that respond to RFAs are reviewed within the specific institute.

The two processes use equivalent criteria for scientific merit, including the following:

- Significance. Does the study address an important problem? How will scientific knowledge be advanced?

- Approach. Are design and methods well developed and appropriate? Are problems addressed?

- Innovation. Are there novel concepts or approaches? Are the aims original and innovative?

- Investigator. Is the investigator appropriately trained?

- Environment. Does the scientific environment contribute to the probability of success? Are there unique features of the scientific environment?

CSR refers unsolicited proposals and responses to general program announcements to a scientific review group (SRG). SRGs are defined by specific guidelines often related to scientific disciplines or fields. The SRG provides the initial scientific review and makes recommendations for the appropriate level of support and duration of the award. It provides priority scores and percentiles for the upper half of the proposals, leaves the lower half unscored, and recommends others for deferral. CSR then refers each of the proposals to a specific institute on the basis of the institute’s mission and stated interests. The institute’s national advisory council (NAC) assesses the quality of the SRG review, makes recommendations to the institute staff on funding, evaluates program priorities and relevance to the institute’s mission, and advises on policy. The NAC may concur with the SRGs, modify the rankings, or defer proposals for re-review. The institute director and staff then determine the specific awards to be funded on the basis of scientific merit, contribution to program balance, and availability of funds.
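The scoring step described above, in which the upper half of the proposals receives scores and percentiles and the lower half is left unscored, can be sketched in a few lines of Python. This is a hypothetical illustration, not the procedure CSR actually uses: the function name `triage`, the convention that a lower preliminary score indicates a stronger proposal, and the percentile formula are all assumptions made for the example, and deferral is not modeled.

```python
from typing import Dict, Optional


def triage(prelim_scores: Dict[str, float]) -> Dict[str, Optional[float]]:
    """Give a percentile to each proposal in the upper half; None otherwise.

    Assumptions (not from the source): a lower preliminary score means a
    stronger proposal, and the percentile is rank position divided by the
    total number of proposals, times 100.
    """
    # Order proposal IDs from strongest to weakest.
    ranked = sorted(prelim_scores, key=prelim_scores.get)
    n = len(ranked)
    cutoff = (n + 1) // 2  # size of the upper half, rounding up when n is odd

    result: Dict[str, Optional[float]] = {}
    for position, proposal_id in enumerate(ranked, start=1):
        if position <= cutoff:
            result[proposal_id] = 100.0 * position / n  # scored, with percentile
        else:
            result[proposal_id] = None  # lower half is left unscored
    return result


outcome = triage({"A": 1.5, "B": 3.0, "C": 2.0, "D": 4.5})
```

With the four hypothetical proposals shown, A and C fall in the upper half and receive percentiles, while B and D are left unscored.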

Proposals that respond to the RFAs from specific institutes are reviewed by institute review groups (IRGs) convened by the particular institute. IRGs operate in a manner similar to that of the SRGs. However, the institute staff have substantial involvement in the choice of the reviewers and the amount of funds set aside for a particular number of awards. The recommendations of the IRGs are then reviewed by the institutes’ scientific advisory council.

Implementation

The various NIH grant mechanisms provide for an arm’s-length relationship among the investigator, his or her institution, and the institutes, similar to that in NSF. However, in contrast with NSF, the institutes use cooperative agreements and contracts for scientific services provided by user facilities or database operations. Cooperative agreements and contracts provide institute staff with considerably more influence in the conduct of research. In all cases, institute staff may choose to visit an investigator during the course of a project.

Evaluation

Individual projects are evaluated on the basis of publications in refereed journals, presentations at professional meetings, and whether students participate in the research. Projects are more highly valued if students participate. The success of an institute or division in supporting its mission or in developing a new scientific field is evaluated by the institute’s national advisory council or its board of scientific counselors.

Dissemination of Results

Like NSF-supported research, NIH-supported research is disseminated through the normal mechanisms of the research community: journals and professional meetings. However, NIH planning meetings also bring together researchers and program officers to report on their work and to provide interactions that may result in future proposals. Program officers often are involved in interagency planning efforts that benefit from the newest research and development results.

DEPARTMENT OF ENERGY OFFICE OF BIOLOGICAL AND ENVIRONMENTAL RESEARCH

The Office of Biological and Environmental Research (BER) is a division of the Office of Science (SC) in the Department of Energy (DOE). BER supports basic and applied scientific research at universities and the DOE national laboratories. BER’s mission arises from DOE’s goal to understand and mitigate the environmental consequences of its energy and national-security missions. BER also carries out DOE’s mission to develop and extend the frontiers of nuclear medicine (Elwood 2002).

BER has substantial activities that are important elements of interagency programs, such as those in long-term climate science, the human genome program, and proteomics. BER supports the operation of unique facilities at DOE national laboratories, such as high-field magnetic resonance and beamlines at x-ray synchrotron sources. Those facilities are available to university researchers supported by DOE and other agencies; access to them is determined by independent peer-review committees commissioned by the facilities and the DOE programs.

BER uses general program announcements for unsolicited proposals and for program-specific RFAs. It averages 11 such solicitations per year; these do not include joint solicitations with other agencies. The BER program budget was about $554 million in FY 2002; its university program was less than half of that amount. Hence, the peer-review process involves far fewer proposals than that of NSF or NIH, by at least a factor of 10.

Planning

BER considers a number of factors in initiating and carrying out its programs (Elwood 2002):

- Filling a national need: DOE is one of the principal federal science agencies.

- Filling a DOE mission need: DOE’s national-security and energy missions are driven by science and technology.

- Filling opportunities consistent with DOE capabilities and mission.

- Filling critical scientific gaps that take advantage of DOE’s physical infrastructure.

- Filling a congressional mandate.

BER uses a number of mechanisms to define program priorities: its Federal Advisory Committee Act (FACA) advisory committee, commissioned scientific assessments, scientific workshops, program-review findings, and recommendations from and staff interactions with the scientific community.

Opportunity Communication

The continuing-program announcement for the entire SC is published once a year through the Federal Register and the SC Web site. BER-specific solicitations are publicized in trade and professional journals, in the Federal Register, and on the SC Web site. Those announcements encompass both grants and cooperative agreements. They may involve a preproposal phase. University-laboratory collaboration may be encouraged; the preproposal enables the program manager to suggest useful collaborations.

Proposal Review

BER carries out peer review using a number of mechanisms: individual mail reviews, ad hoc committees, and standing committees as appropriate or required by legislation. Each application is reviewed by at least three qualified reviewers, and conflict-of-interest issues are overseen by the program manager.

BER uses the following criteria:

- Scientific or technical merit or the educational benefits of the project.

- Appropriateness of methods or approach.

- Competence of applicants and adequacy of proposed resources.

- Reasonableness and appropriateness of proposed budget.

- Other factors established in the solicitation, including program policy factors, such as program balance.

The BER program managers are responsible for determining which proposals are relevant to the agency’s mission, but the program managers may ask reviewers for their recommendations. If the solicitation is expected to generate large numbers of applications, preproposals are encouraged; this step does not preclude submission of a full proposal or the normal merit-review process. Preproposals are reviewed by program managers and, if appropriate, by a panel of experts knowledgeable in the subject and aware of the program mission.

Implementation

The grant mechanism keeps the BER program official at arm’s length during execution of the grant. But as in NSF, the DOE program manager visits investigators at universities and the national laboratories regularly to stay informed about progress and to support appropriate interactions among the investigators. BER programs also bring university and laboratory investigators together at regular meetings, which support implementation, evaluation, and planning efforts.

Evaluation

BER carries out retrospective reviews of its research in the grant programs and in its laboratory research. The information gained from the reviews is used to guide future program decisions as to new opportunities, program and project continuation, and balance and direction. The reviews are carried out by the FACA advisory committee, but also include the use of the JASON organization and the National Research Council’s boards and committees. As in NIEHS, BER program officers participate in interagency planning efforts that benefit from early use of current R&D results.

Dissemination of Results

Results are disseminated primarily through publications and meeting presentations. BER-sponsored investigator meetings also serve as mechanisms for dissemination of results.

U.S. DEPARTMENT OF AGRICULTURE NATIONAL RESEARCH INITIATIVE COMPETITIVE GRANTS PROGRAM

The U.S. Department of Agriculture (USDA) National Research Initiative (NRI) Competitive Grants Program was established in 1991 as an element of the Cooperative State Research, Education and Extension Service (CREES). The purpose of NRI is to support high-priority fundamental and mission-linked research of importance in the biologic, environmental, physical, and social sciences relevant to agriculture, food, and the environment. CREES reports to the USDA under secretary for research, education, and economics. The under secretary chairs the NRI Board of Directors, which oversees NRI policy (Johnson 2002).

In 2002, NRI’s budget was about $120 million. Virtually all the NRI budget is devoted to grants. The grants support individual research projects at large and small colleges and universities and federal and private laboratories. NRI also funds conferences, a postdoctoral fellowship program, and a young investigator program, and it provides equipment grants. NRI is responsible for the Experimental Program to Stimulate Competitive Research (EPSCOR) program in USDA.

NRI publishes an annual program description that identifies current research opportunities in eight major topics:

- Natural resources and the environment.

- Nutrition, food safety, and health.

- Animals.

- Biology and management of pest and beneficial organisms.

- Plants.

- Markets, trade, and development.

- Enhancing value and use of agricultural and forest products.

- Agricultural systems research.

The program description also identifies strategic issues that crosscut the research topics and reflect research opportunities as they arise on the basis of the needs of the department, interagency opportunities, and developments in the scientific community. In 2002, two strategic issues were identified: agricultural security and safety through functional genomics, and new and re-emerging disease and pest threats. Although the program description provides guidance as to interests of the department and format for proposals, the proposals are considered to be unsolicited from a procurement perspective. NRI on occasion publishes directed program announcements for specific proposals.

Planning

In large measure, the annual program description summarizes the results of the annual planning efforts of the NRI chief scientist and scientific staff.

As in other agencies, scientific opportunities are identified through the proposal submissions of the research communities and the response and internal discussions of the NRI peer-review panels. Staff participation in interagency coordination committees also sets the planning horizon for the program. The staff meet regularly with commodity groups and agricultural coalition organizations that are important stakeholders of the USDA. Congress also provides input through the NRI budget process.

Opportunity Communication

The NRI program description is made available in printed form and on the Internet. It is also published in the Federal Register and made available at scientific meetings and conferences. Each year, NRI conducts “grantsmanship” workshops to familiarize applicants and administrators with NRI’s philosophy, directives, and procedures. NRI staff have specifically presented at workshops for EPSCOR, historically black colleges and universities, and Hispanic-serving institutions.

Proposal Review

NRI receives about 3,000 proposals per year. The proposals are reviewed by panels (28 panels in FY 2000). More than 9,000 scientists contribute to the annual NRI review process. The NRI staff identify the panel members by using criteria that include relevant scientific knowledge, educational background, experience, and professional stature. Other considerations involve balancing diversity in geographic region, type of institution, rank, gender, and minority-group status. NRI staff members also ensure that conflict-of-interest policies are enforced.

At a panel meeting, each project is reported on by three panelists. The primary reviewer provides an overview and summary of the proposal’s strengths and weaknesses. A secondary reviewer provides additional comments, and a reader summarizes a set of four to six ad hoc reviews that are provided by other members of the panel or from scientists outside the panel. Each project is ranked in one of six categories, the lowest of which is “do not fund.” For each project, the panel provides a summary of positive and negative aspects and a synthesis of the discussion in the larger context of panel considerations. On the final day of the panel meeting, the projects are re-ranked by revisiting the categories and providing a numerical rank order for the top 25%. Awards are based on the panel’s ranking. The NRI staff finalize the budgets but do not adjust individual project budgets. Each project investigator receives the reviews, the panel summary, and the relative ranking of the project.

Implementation

As in other grant programs, initial terms and conditions govern the performance of the grant. There is no official mechanism for NRI program managers to direct principal investigators during the term of the grant.

Evaluation

The National Research Council reviewed NRI in 1994 and 2000 (NRC 1994; NRC 2000). NRI also evaluates itself against CSREES’s Government Performance and Results Act (GPRA) outcomes by providing appropriate descriptions of project impacts on those outcomes. The NRI Board of Directors provides oversight of NRI policy retrospectively and prospectively.

Dissemination of Results

As in other programs, publication in refereed journals, presentations at scientific conferences, and the training of graduate students are major mechanisms for dissemination of results. The NRI staff present results at meetings with various USDA stakeholders that convey the impact of NRI research. NRI also uses the extension resources of CSREES to prepare and distribute one-page summaries of particular NRI results. The NRI Board of Directors, which is composed of the under secretary and four administrators in the Research, Education, and Economics directorate, also serves as a forum for information and exchange of NRI results.

NATIONAL AERONAUTICS AND SPACE ADMINISTRATION

The National Aeronautics and Space Administration (NASA) is one of the largest federal science and technology agencies. It accomplishes most of its mission through its major laboratories and contracts with private industry. However, it provides substantial support for university researchers through contracts, cooperative agreements, and grants, which are awarded through a merit-based peer-review process that includes the participation of the scientific community (B. Bennett, NASA, personal commun., June 2002).

Three NASA divisions support peer-reviewed research: Space Science, Earth Science, and Biological and Physical Science Research. The FY 2002 budget for those three divisions was about $5.81 billion. The divisions use similar peer-review processes that conform with overall NASA guidance. Each uses the NASA research announcement; an announcement may identify a division’s broad topics of interest as guidance for unsolicited proposals or may solicit proposals in specific research topics. The NASA Advisory Council (NAC), a FACA committee, has established standing committees for each of the divisions with a strong focus on scientific direction. The chairs of the National Research Council’s Space Studies Board (SSB) and Aeronautics and Space Engineering Board (ASEB) are ex officio members of the Advisory Council (B. Bennett, NASA, personal commun., June 2002).

Planning

Each of NASA’s research divisions contributes to the NASA strategic plan. With respect to identifying research and setting its priorities, the development of NASA research announcements is an opportunity for interaction with and feedback from the scientific community. The standing committees of the NAC also undertake program reviews and planning activities that affect the divisions’ plans. Congressional direction through the budget process is incorporated into plans. The studies of the Research Council’s SSB and the ASEB are often used in research planning (NASA 2001).

Opportunity Communication

NASA research announcements are broadly communicated on the NASA Web site and in print through the Commerce Business Daily and the Federal Register. The involvement of the community in the development of the announcement is also part of NASA’s communication effort (NASA 2001).

Proposal Review

NASA uses both letter and panel reviews, depending on the decision of the program manager. NASA has contracted with a support-service contractor to handle the logistics of the peer-review process. All divisions that use peer review work with the same contractor to provide consistency of interaction with proposers and reviewers. The contractor maintains proposal and reviewer databases. The program manager suggests reviewers and works with the contractor to ensure conformance with conflict-of-interest rules.

The principal elements considered in evaluation are intrinsic merit, relevance to NASA’s objectives, and cost. The evaluation of intrinsic merit takes into account overall scientific or technical merit, qualifications of the investigator and other key personnel, institutional resources, experience critical to objectives, plans for education and outreach, and overall standing among other proposals. The intrinsic-merit review does not generally depend on cost or programmatic relevance, but reviewers may be asked for comments on cost and relevance.

Proposals for science that depend on space transport will be evaluated for engineering and management by a panel that includes government and contractor reviewers. This review judges proposals on the feasibility and complexity of accomplishing project goals; it also provides an estimate of the total cost of flight-hardware development.

The NASA program manager has considerable latitude in determining how a project will be evaluated and the funding that will be made available for a project. For complex projects, the program manager may not choose the procurement mechanism (grant, cooperative agreement, or contract) until the review process is completed (NASA 1999).

Implementation

A NASA program manager’s relationship with grantees is subject to the same constraints as in other science agencies. However, the connection between grant-supported research and the missions of NASA divisions results in considerable interaction through formal and informal meetings, including professional-society meetings. The program manager often takes an active role in organizing those meetings (NASA 1999).

Evaluation

The full NAC reviews the research divisions annually to assess their performance against their GPRA measures. The NAC standing committees do retrospective reviews of specific elements of the divisions. NASA also establishes external ad hoc review committees. For example, the Biological and Physical Research Division has established the Research Maximization and Prioritization Task Force to review and assist in planning for research that will use the International Space Station. The National Research Council has also reviewed specific NASA programs; the SSB and the ASEB have standing committees that often review program activities (NASA 1999).

Dissemination of Results

Of all the federal science and technology agencies, NASA is the only one that has a statutory mandate and receives specific funding for publicizing its mission and its results to both the technical community and the general public. Thus, although NASA-supported science is disseminated through journals and professional meetings, it may also be chosen for broader distribution to the public media. That not only benefits the research and NASA but also enhances the likelihood of a broader research impact (NASA 1999).

NATIONAL OCEANIC AND ATMOSPHERIC ADMINISTRATION

The mission of the National Oceanic and Atmospheric Administration (NOAA) is to describe and predict changes in the earth’s environment and to conserve and manage wisely the nation’s coastal and marine resources to ensure sustainable economic opportunities (NOAA, unpublished material, 1998). The largest divisions of NOAA are the National Weather Service (NWS), the National Marine Fisheries Service (NMFS), the Coastal Ocean Service (COS), and the Office of Ocean and Atmospheric Research (OAR).

The agency accomplishes its mission through in-house and extramural activities. Although some extramural research funds are managed by NMFS, COS, and NWS, most of the in-house and extramural research efforts are housed in the OAR. In-house research, programmatically divided into oceans, weather, climate, and atmosphere at OAR, is carried out at 12 laboratories. The extramural programs include the National Sea Grant College Program,1 the National Undersea Research Program, the Joint Institute Program, the Arctic Research Program, and the Office of Global Change Program. OAR’s FY 2002 budget was about $340 million.

Planning

Each of NOAA’s units contributes to the agency’s strategic plan. OAR receives considerable input from the nation’s major research universities, environmental managers, and the general public. Much of this input is coordinated by the National Sea Grant College Program network in the coastal states, by the National Undersea Research Program, and by the joint institutes. Input is coordinated by three OAR Assistant Deputies for agency cross-cutting and interdisciplinary research activities.

Opportunity Communication

All research opportunities are broadly communicated in the Federal Register and the Commerce Business Daily, through direct mailings, and on the NOAA, joint institute, and Sea Grant Web sites.

Proposal Review

NOAA uses letter reviews and panel reviews for the various funding programs. The 31 Sea Grant College programs receive and process more proposals than any other NOAA division. All programs have uniform guidelines and oversight for peer reviewers, although individual programs maintain their own databases.

Reviewers are asked to evaluate proposals on the basis of scientific merit and whether the proposed work addresses the mission of NOAA and in particular the mission of the division. Cost, opportunities for outreach activities, qualifications of investigators and key scientific personnel, and institutional research infrastructure support are also considered.

Implementation

The extramural grant programs ensure an arm’s-length relationship between researchers and program officers. However, as in other mission agencies, there are strong incentives for visits and exchange to stay abreast of program issues and to ensure prompt input into the research results. The regional character of the National Sea Grant College Program also provides opportunities for regular interaction between researchers at different institutions.

Evaluation

The Joint Institute Program, the Arctic Research Program, and the Office of Global Change Program are all evaluated through regular peer-review visits. Detailed evaluation criteria and performance benchmarks have been developed for the National Sea Grant College Program. The criteria include the following components: effective program planning; organizing and managing for success with meritorious project selections, recruiting of the best talent available, and meritorious institutional program components; and producing significant results. Program management must ensure that consistent production of significant results will have widespread economic or social benefit, contribute to science and engineering, and address the high-priority needs of the state and the nation.

Dissemination of Results

As in other agencies, NOAA research is disseminated through workshops, scientific meetings, and journal articles. Because the National Sea Grant College Program’s mission includes research, outreach, and educational components, it has developed criteria and benchmarks for “connecting with the user.”

CONCLUSIONS

• The agencies described above have research-management processes to plan, solicit, select, and evaluate extramural research activities. All use a competitive peer-review process that draws expert reviewers from the scientific community, and they have processes for screening the reviewers for expertise and conflicts of interest.

• All agencies can use a number of procurement mechanisms, although grants are the principal mechanism for researchers based in universities. Grants require a hands-off relationship between researchers and agency staff; however, all the agencies use some form of meeting or conference to assess progress during the grant period. The meetings can also have other functions: they permit interaction among scientists and between scientists and a broad array of agency staff, resulting in prompt research impact related to agency missions. In addition, the meetings support research collaboration and planning efforts for future research directions and solicitations.

• The agencies have all been required to address GPRA requirements for strategic planning and the development of metrics. They recognize that it is difficult to identify quantitative outcome metrics on problem-driven research even when mission-related, disciplinary topics can be identified.

• In establishing and managing extramural research, the agencies deal with the same issues, and many face the same kind of mission imperatives that underlie the STAR program. However, most of the agencies described here administer much larger budgets than the STAR program. For example, the NIEHS budget is about 15 times the STAR budget; NSF’s environmental programs are nearly 9 times larger.

• EPA appears to have benefited from the STAR program’s collaborative efforts with other agencies. First, the joint programs have resulted in more EPA-relevant research than STAR would have been able to fund alone, with the joint research mutually benefiting both EPA and the partnering agency. Second, STAR has been able to accelerate the development of its management processes by learning from its partners. For example, like NIEHS, it has established a separate organization to conduct peer review. At the same time, the well-developed EPA Office of Research and Development planning process and STAR’s strong involvement with it provide the STAR program with greater integration and relevance to the EPA mission, at least at the process level, than is apparent in some of the other agencies described here.

• In general, the committee finds that STAR processes compare favorably with those of its peer agencies, particularly given the relative youth of STAR. In addition, the STAR program’s relevancy review process is more rigorous than that of other agencies.

REFERENCES

Elwood, J. 2002. Department of Energy’s Biological and Environmental Research Grants Program. Presentation at the Second Meeting on the Review of EPA’s Research Grants Program, April 25, 2002, Washington, DC.

ERE (Environmental Research and Education). 2003. What is ERE. Environmental Research and Education, The National Science Foundation, Arlington, VA [Online]. Available: http://www.nsf.gov/geo/ere/ereweb/what.cfm [accessed Jan. 10, 2003].

Firth, P. 2002. National Science Foundation Research Grants Program. Presentation at the Second Meeting on the Review of EPA’s Research Grants Program, April 25, 2002, Washington, DC.

Johnson, P. 2002. United States Department of Agriculture, National Research Initiative. Presentation at the Second Meeting on the Review of EPA’s Research Grants Program, April 25, 2002, Washington, DC.

NASA (National Aeronautics and Space Administration). 1999. Office Work Instruction. Research Solicitation, Evaluation, and Selection. National Aeronautics and Space Administration. [Online]. Available: http://hqiso9000.hq.nasa.gov [accessed July 2, 2002].

NASA (National Aeronautics and Space Administration). 2001. Office Work Instruction. NASA Research Announcement (NRA) for R&D Investigations. National Aeronautics and Space Administration. [Online]. Available: http://hqiso9000.hq.nasa.gov [accessed July 2, 2002].

NRC (National Research Council). 1994. Investing in the National Research Initiative: An Update of the Competitive Grants Program in the U.S. Department of Agriculture. Washington, DC: National Academy Press.

NRC (National Research Council). 2000. National Research Initiative: A Vital Competitive Grants Program in Food, Fiber, and Natural-Resources Research. Washington, DC: National Academy Press.

Thompson, C. 2002. NIEHS Research Grants Program. Presentation at the Second Meeting on the Review of EPA’s Research Grants Program, April 25, 2002, Washington, DC.