5

Human Factors Considerations for Automatic Identification System Interface Design

From the perspective of the human operator of automatic identification systems (AIS), the “interface” is defined as the display and control mechanisms that enable the exchange of information between the person and the AIS. The interface includes not only the display of information, such as cathode ray tube graphics and auditory warnings, but also data entry and control elements, such as a keyboard or switches.

Developing an effective AIS interface requires a systematic process that considers the capabilities of the users and the demands of the operational environment. Although several researchers have investigated mariner collision avoidance and navigation strategies and information needs, no one has systematically evaluated how AIS can support these and other information needs (Hutchins 1990; Laxar and Olsen 1978; Lee and Sanquist 1993; Lee and Sanquist 2000; Schuffel et al. 1989). To date, neither the design of AIS controls nor the information needs of the mariner and the method of displaying that information have been defined and evaluated sufficiently well. Thus, a focus on human factors considerations for AIS interfaces is needed.

Once a system has been designed, manufactured, and put in service, it must be maintained. The goal of human factors in maintenance, as in design, is to enhance safe, effective, and efficient human performance in the system. In recent years it has become apparent that human factors methodology has as much to contribute to maintenance as it does to design. In the aviation and process control industries, for example, structured human factors methods (e.g., Maintenance Error Decision Aid) are being applied to maintenance with some success (Johnson and Prabhu 1996; Maurino et al. 1998; Reason and Maddox 1998). According to the National Aeronautics and Space Administration (NASA 2002), four primary activities are undertaken in maintenance human factors: (a) human factors task/risk analysis, (b) procedural improvements, (c) maintenance resource management skills and training, and (d) use of advanced displays (to clarify procedures and to make information resources more accessible without task interruption). Because of the changing nature of the workplace and required tasks, especially given the increasing use of automation in maintenance, workers in these jobs must acquire new skills for tasks that will not necessarily reduce their workloads. In addition, issues of software version control and data maintenance (e.g., updated chart information, updated cargo information) may require special procedures and training as well as more specialized personnel. As will be seen in the discussion below, many of these types of activities are relevant to AIS shipboard displays. Although maintenance issues are important and merit consideration and comprehensive evaluation before implementation of a specific AIS, these system-level (not display-specific) issues were beyond the scope of this report.

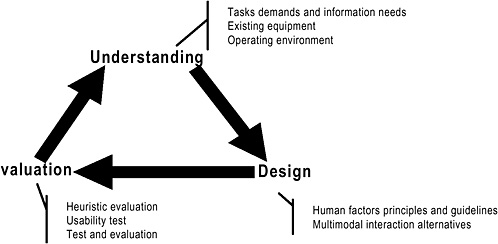

Some of the key human factors considerations important in interface design are outlined in this chapter. A description of the human factors design process is given first. How the three stages of understand–design–evaluate might be applied to the design of AIS interfaces is then discussed. A number of human factors guidelines that can assist in the design of current and future AIS interfaces are also provided.

CORE ELEMENTS OF THE HUMAN FACTORS DESIGN PROCESS

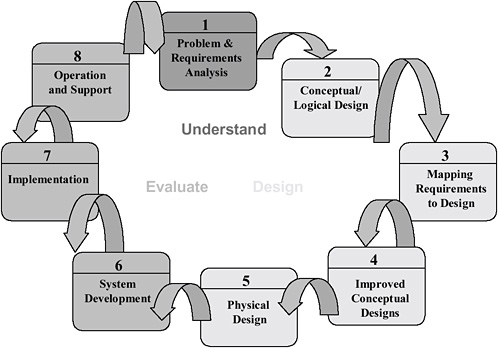

Human factors design activities are an integral element of the overall systems analysis and design process described in Chapter 4. The focus of human factors design is on the interaction between the design and the human. Thus, human factors design processes can be simplified into three major phases: understand the user and the demands of the operational environment, design a system on the basis of human factors engineering principles and data, and evaluate the system to ensure that the system meets the needs of the user (Woods et al. 1996) (see Figure 5-1). These steps are mapped to the systems analysis and design framework outlined in Chapter 4 and shown in Figure 5-2. To begin with, a task or work analysis can be used to provide the initial data to understand the user and the demands of the operational environment (Kirwan and Ainsworth 1992; Vicente 1999). This understanding and the requirements that result are combined with human factors engineering guidelines and principles to create initial design concepts. As shown in Figure 5-1, design often begins by building on findings from the evaluation of an existing system rather than by starting with a blank slate. This is true also for AIS. AIS design will occur in the context of previously developed navigation, communication, and planning aids. After these initial concepts are developed, designers conduct heuristic evaluations and usability tests with low-fidelity mock-ups or prototypes (Carroll 1995). Usability evaluations in realistic operational contexts are particularly useful because they often help designers better understand the users and their needs.

AIS deployment may result in mariners using the technology in ways that were not anticipated during the initial design. For this reason, analysis of how mariners interact with prototypes is critical to a better understanding of system requirements. This enhanced understanding can then be used to refine design concepts. When the system design becomes more defined, it may be placed in an operational environment for comprehensive testing and evaluation. This final evaluation can be considered to be the final step of system development. It can also be considered as the first step in developing a better understanding of the user for the next version of the prototype or product. For this reason, it is important to consider AIS design and the associated standards and certification development as a continuous process that evolves as more is learned about how mariners use AIS and how AIS affects the maritime industry. This continuous cycle is reflected in the link between evaluation, which ends one iteration, and understanding, which begins the next iteration of the design cycle. Some of the more critical elements of each of these three phases are described in the remainder of this chapter.

The most obvious focus of the design process is the physical display and controls that make up the operator interface. However, with complex technologies such as AIS, training and documentation also represent important elements of design. Ignoring documentation (manuals, instruction cards, help systems) and training can lead to errors, poor acceptance, and ineffective use of the system.

UNDERSTANDING THE NEEDS OF THE OPERATOR

New technology can change demands on the bridge crew dramatically. If they are properly developed, technological advancements should make operators more efficient and safe. Under proper conditions, workload declines and performance improves with the introduction of new navigation technology, even when the number of crew members declines (Schuffel et al. 1989). Other studies, however, have shown significant performance declines with the introduction of new technology, particularly under medium- and high-stress conditions (Grabowski and Sanborn 2001). Studies in other domains suggest that poorly designed automation may reduce workload under routine conditions but can actually increase workload during stressful operations (Wiener 1989; Woods 1991). One possible explanation for these apparently contradictory findings has been suggested by Lee and Sanquist (2000), who point out that the evaluation of modern technologies often addresses only routine performance and does not consider more stressful and nonnormal conditions where new technology can actually impair performance. (A fuller discussion of automation-related issues and their potential impact on operator performance is given in the section “Human/Automation Performance Issues.”)

In addition, new technology can introduce new cognitive demands, such as the need to monitor more ships during collision avoidance, to form mental models of the new technology, and to perform complex mental scaling and transformations to bridge the gap between the data presented and the information needed by the operator. Although problems abound, properly implemented technology (such as AIS) promises to enhance ship safety as it eliminates time-intensive, repetitive, and error-prone tasks. Realizing the promise of new maritime technology requires a clear understanding of the needs of the operator.

Historical data concerning shipping mishaps indicate that many navigation errors result from misinterpretations or misunderstandings of the signals provided by technological aids (NTSB 1990). Moreover, Perrow (1984) notes that poor judgment in the use of radar contributes to many maritime accidents. In some situations the mariner may receive so many targets and warnings that it is impossible to evaluate them all, and the display may then be ignored. In addition, production pressures could lead mariners to use the devices to reduce safety margins and operate their vessels more aggressively. The demands and pressures that new technology can place on mariners can induce unanticipated errors. These findings suggest that poorly designed and improperly used technology may jeopardize ship safety. In addition, AIS may eliminate many tasks, make complex tasks appear easy at a superficial level, and lead to less emphasis on training and design. AIS may also introduce new phenomena that affect mariner decision making, such as automation bias and overreliance on a single source of information to guide collision avoidance and navigation. In this situation, if the display fails to contain the information necessary to specify operator actions, errors will result (Rasmussen 1986; Vicente and Rasmussen 1992). This is particularly problematic with AIS because it may provide information on only a subset of the vessels the operator must consider in navigating a safe course. Thus, it is clearly important to understand the cognitive tasks involved with AIS to guide design and training.

As a demonstration of this process, the committee conducted a preliminary task analysis using observations of a towing vessel representative of those that operate on the upper Mississippi River and its tributaries. This type of inland towing operation involves transiting locks and making relatively long voyages. By contrast, fleeting vessels operate in a relatively small area of the river, and vessels operating on the lower Mississippi rarely encounter locks but tend to carry much larger cargoes and are likely to interact with deep-draft vessels. The towing vessel observed was also a technological leader that already used electronic charts. Although many towing companies have adopted electronic charts, many smaller companies have not. The towing industry includes many types of vessels and operations, which may lead to different applications of AIS, particularly compared with the application of AIS for deep-draft vessels. To understand the nature of these differences, preliminary observations and a task analysis were conducted. Similar analyses should be performed for other classes of vessels as well.

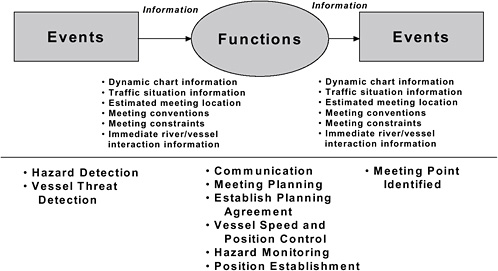

A simple way to organize observations of navigation and communication activity is according to information, functions, and events (see Figure 5-3). A complementary approach would be to address the underlying constraints of the work domain on behavior (Vicente 1999). Both approaches would be useful in a comprehensive analysis of how AIS could support mariners. Information refers to categories of information that the pilots and captains use to guide their actions. In some cases, such as “lock ticket,” the information is contained on a piece of paper, but the information could be in the form of radio communications that update this information, so “lock ticket” represents more than the physical piece of paper. For each information

FIGURE 5-3 Towing vessel meeting example.

category, Table 5-1 shows the different activities and their descriptions, including a source for the activity, such as radio communication or visual observation. Functions are the information transformation processes that achieve system goals. These capture what people and technology do in the pilothouse. As shown in Figure 5-3, each function takes information as an input and generates it as an output. The functions are triggered by events and they also initiate events. Events are the triggers that initiate functions and the state changes that are a consequence of the information transformation and activities associated with a function. Table 5-1 shows a sample of representative information, functions, and events.

TABLE 5-1 Representative Information, Functions, and Events

| Activity | Description |
|---|---|
| **Type of Activity: Information** | |
| Event log | Documents progress along the river, anomalies, and crew changes. This information is stored and communicated using a computer, note pad, and formal paper log. |
| Lock ticket | Data needed to coordinate lock passage (e.g., 600- versus 1,200-ft locks): tow configuration, length, draft, cargo, and barge numbers and types. This information is stored and communicated using a paper ticket and note pad; changes are communicated by radio. |
| Vessel/tow configuration | Information that affects safe passage through channels, locks, and bends. This information includes draft readings, leaks and water in barges, and tow length (from visual inspection and notes). |
| Equipment calibration | Depth estimated by a second vessel, physical state of the depth gauge, and confirmation from vessel/river interaction. This information is communicated by radio, visual observation, and vessel response. |
| Lock waiting location | Array of vessels stopped along the bank before a lock. This information is communicated by radio. |
| Informal chart data | Addresses lack of detail in charts: fleeting areas, location of private docks, steepness of bank, type of bank and bottom, eddies. Critical for picking an appropriate place to stop and identifying upcoming river hazards (visual confirmation, local knowledge, general river knowledge, radio communication, e-mail). |
| Dynamic chart information | Addresses changing features of the river: river height, sandbars, current, lock status, obstacles, and hazards. This information is stored and communicated using daily USCG updates on the radio, e-mail, and radio communication with other boats. |
| Traffic situation | Number, distance, and distribution of approaching boats. This information is communicated and tracked using radio and visual and radar targets. |
| Estimated meeting location | Point where vessels are likely to pass on the basis of estimated speed and distance. This information is communicated using radio and a chart. |
| Meeting conventions | Southbound vessel has right-of-way; Ohio River convention is for the southbound vessel to take the outside of a curve. Subsequent vessels of a sequence follow the first. The opposite applies on the lower Mississippi. This information is communicated by radio. |
| Meeting constraints | Space available for passage, intended track, mechanical problems of vessel. This information is stored and communicated using the charts and radio. |
| Immediate river/vessel interaction | Depth of water below barges, response of tow to control input. This information is judged from the actual versus the expected rate of turn, behavior of lead barges, cavitation, speed/rpm relationship, and current. It is communicated through visual cues, haptic cues (vibration), and auditory cues. |
| Lock coordination | Configure tow and loading to match lock capacity. Share tow information and any changes with the lock manager to establish lock type and order. |
| **Type of Activity: Functions** | |
| Meeting planning | Broadcast position and intention to identify relevant forward vessels (northbound responsibility). Use estimated speed, distance, and location of hazards to establish a meeting location. |
| Establishing passing agreement | Agreeing where and how the vessels will pass (port-to-port or starboard-to-starboard). |
| Fleeting area and service coordination | Plan for support services, such as maintenance personnel boarding and fleet boats. |
| Speed and position control | Moment-to-moment control of the vessel course through the water. |
| Identify waiting location | Determine availability and suitability of places where the tow can be temporarily stopped against the riverbank. |
| Stopped at riverbank | Stopped, waiting for fog to lift or for turn through lock. |
| Hazard monitoring and detection | Scanning the river to identify hazards, which include other vessels, upcoming turns, sandbars, and recreational boaters. |
| **Type of Activity: Events** | |
| Hazard detected | Detection that a hazard is present. |
| Upcoming vessels detected | Realizing that other vessels are in the vicinity. |
| Meeting point identified | Determining the location at which vessels will meet. |
| Passing agreement made | Agreement between vessels on where and how they will meet. |
| Lock delay identified | |
| Change in river height | Changes in water depth. |
| Lock approached | |
| Lock passed | Vessel has gone through the lock. |

An example of the relationships between functions, events, and information can be seen in the towing vessel meeting diagram shown in Figure 5-3. In this example, a towing vessel meeting another vessel experiences at least two events: hazard detection (e.g., fixed-object hazards) and vessel threat detection. The two events result in functions being performed aboard the towing vessel: communication, meeting planning, establishment of a passing agreement, vessel speed and position control, hazard monitoring, and position establishment. Those functions result in another event: identification of a meeting point. Figure 5-3 also shows that the information being used for the functions includes dynamic chart information, traffic situation information, estimated meeting locations, meeting conventions, meeting constraints, and immediate river/vessel interaction information, among other items. This simple example provides an idea of the relationships between information, functions, and events in a towing vessel meeting situation. Note that the relationship between events and functions is sequential: events trigger functions, and functions in turn generate new events. Note also that information is a critical input to functions; information is needed for functions to occur.
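The sequential relationship described above (events trigger functions, functions consume and produce information, and completed functions generate new events) can be sketched in a few lines of code. The following Python is a minimal illustration, not a model used by the committee: the `Function` class and `propagate` helper are hypothetical, and the assignment of information inputs and outputs to each function is an assumption loosely based on the towing vessel meeting example.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class Function:
    """Hypothetical model of one pilothouse function from Table 5-1."""
    name: str
    triggered_by: set[str]                           # events that start this function
    inputs: set[str]                                 # information the function needs
    outputs: set[str] = field(default_factory=set)   # information it generates
    produces_event: str | None = None                # event that follows completion


def propagate(start_event, functions, available_info):
    """Follow an event through the functions it triggers.

    Returns an ordered trace of (event, function, status) tuples. A function whose
    information inputs are not all available is recorded as blocked, which models a
    breakdown in the information flow.
    """
    info = set(available_info)
    queue = deque([start_event])
    seen = set()
    trace = []
    while queue:
        event = queue.popleft()
        if event in seen:
            continue  # guard against cycles of events and functions
        seen.add(event)
        for f in functions:
            if event not in f.triggered_by:
                continue
            missing = f.inputs - info
            if missing:
                trace.append((event, f.name, "blocked: " + ", ".join(sorted(missing))))
                continue
            info |= f.outputs  # outputs become available to later functions
            trace.append((event, f.name, "done"))
            if f.produces_event is not None:
                queue.append(f.produces_event)
    return trace


# Assumed input/output assignments for two functions in the meeting example.
functions = [
    Function("Meeting planning",
             triggered_by={"Upcoming vessels detected"},
             inputs={"Traffic situation", "Dynamic chart information"},
             outputs={"Estimated meeting location"},
             produces_event="Meeting point identified"),
    Function("Establishing passing agreement",
             triggered_by={"Meeting point identified"},
             inputs={"Estimated meeting location", "Meeting conventions", "Meeting constraints"},
             produces_event="Passing agreement made"),
]

trace = propagate("Upcoming vessels detected", functions,
                  {"Traffic situation", "Dynamic chart information",
                   "Meeting conventions", "Meeting constraints"})
for step in trace:
    print(step)
```

If the meeting constraints are unavailable, the trace ends with the passing agreement blocked, which is the kind of information-flow breakdown a task analysis is meant to expose.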

The relationships between information, functions, and events can be modeled in a variety of ways: for instance, by using data flow diagrams (Hoffer et al. 2002), the Unified Modeling Language for object-oriented software and hardware (Kobryn 1999), data modeling (DATE 2002), transition matrices, and input/output matrices. Computer-aided software engineering tools, as described earlier, are electronic repositories for each of these different types of models that can be used for analysis, design, and development activities. Each of these models focuses on how information is used to carry out activities that respond to, execute, or anticipate events in a domain. The patterns evidenced by different events, information, and functions in a domain provide important clues as to appropriate technology design and development strategies to assist human operators.
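An input/output matrix of the kind mentioned above can be represented as a function-by-information table in which each cell marks whether a category of information is an input ("I"), an output ("O"), or unused by that function. The Python sketch below is illustrative only: the function and information names come from Table 5-1, but the specific I/O assignments and the helper names are assumptions. It shows two simple analyses: listing information that some function needs but no modeled function produces (and that must therefore come from data entry, sensors, or radio), and deriving the function-to-function links implied by shared information.

```python
FUNCTIONS = ["Meeting planning", "Establishing passing agreement", "Speed and position control"]
INFORMATION = ["Traffic situation", "Estimated meeting location", "Meeting constraints",
               "Meeting conventions", "Immediate river/vessel interaction"]

# Hypothetical sparse input/output matrix: "I" = input, "O" = output; absent = unused.
IO = {
    "Meeting planning": {"Traffic situation": "I", "Meeting conventions": "I",
                         "Estimated meeting location": "O"},
    "Establishing passing agreement": {"Estimated meeting location": "I",
                                       "Meeting constraints": "I", "Meeting conventions": "I"},
    "Speed and position control": {"Immediate river/vessel interaction": "I"},
}


def as_matrix(io, functions, information):
    """Expand the sparse dictionary into a full function-by-information matrix."""
    return [[io.get(f, {}).get(i, "") for i in information] for f in functions]


def external_inputs(io):
    """Information consumed by some function but produced by none of the modeled functions."""
    inputs = {i for row in io.values() for i, role in row.items() if role == "I"}
    outputs = {i for row in io.values() for i, role in row.items() if role == "O"}
    return sorted(inputs - outputs)


def function_links(io):
    """(producer, consumer, information) triples implied by shared information."""
    links = []
    for producer, row in io.items():
        for info, role in row.items():
            if role != "O":
                continue
            for consumer, other in io.items():
                if consumer != producer and other.get(info) == "I":
                    links.append((producer, consumer, info))
    return links


for name, row in zip(FUNCTIONS, as_matrix(IO, FUNCTIONS, INFORMATION)):
    print(f"{name:32s}", row)
print("Needs external source:", external_inputs(IO))
print("Function links:", function_links(IO))
```

In this toy matrix only the estimated meeting location is generated within the modeled functions; every other input is a candidate for data entry or communication that an AIS design would need to support or automate.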

The variety of functions that AIS might support and the variety of information sources demonstrate the challenge of integrating AIS into the mariner’s decision-making process. The variety of information, events, and functions also demonstrates the vessel- and operation-specific nature of AIS display design. While some of the elements of navigation, communication, and planning tasks remain constant across different types of vessels and operating environments, others change.

Thus, systematic analysis of the information, functions, and events that describe mariner activities is needed to derive AIS display and control requirements. One result of this analysis might be a transition matrix that identifies potential challenges that could interfere with transitions between functions. Such a matrix can also identify interface strategies that could help AIS support these transitions (e.g., separate alarms versus integration with other displays). Another result could be an input/output matrix that describes the information flow between functions and whether the information is an input or an output of each function, as well as the data entry and data flow requirements. Unneeded data entry should be avoided, and data output should be organized to avoid overwhelming the operator. An input/output matrix can help identify how AIS outputs can be integrated, combined, and formatted to support the functions with minimal data entry and cognitive transformations. An input/output analysis can also result in specific strategies for supporting efficient manipulation and use of the information and can identify potential breakdowns in information flows and functions. For instance, the initial observation of towing operations identified several considerations for AIS implementation in the inland towing industry:

- Combining a radar overlay may clutter the electronic chart and require substantial adjustments to avoid inconsistencies in electronic chart orientation. AIS information can further complicate these tasks if it is not carefully integrated.
- Geographic constraints make meeting point coordination an important task for inland towing.
- The variable speed and intentions of other vessels make meeting location estimation difficult for towing vessels. Any AIS implementation should consider how to address this challenge.
- The preview of some electronic charts, as commonly used by inland operators, is only 3 miles, which may make transit planning difficult. AIS displays should consider the planning horizon of the mariner.
- Radio communication is currently imperfect because of intentional silence, failure to hear over ambient noise, and vessels monitoring different channels. These gaps present important opportunities for AIS to improve the mariner’s knowledge of his or her domain.
- Data must be entered in the time available (e.g., picking up a radio is easier than navigating a menu system). AIS may be most effective as a supplement to radio communication rather than as a replacement.
- Many vessels have computers on the bridge, which can pose a distraction. AIS could exacerbate this risk.
- Updating vessel status and configuration data could be a substantial task with a potentially high risk of error. For example, towing vessel length and beam change as barges are picked up and delivered. Some of this data entry could be eliminated if the data were linked to the lock ticket.
- Information that is static for deep-draft vessels may be dynamic for towing vessels (e.g., adding barges changes “vessel” length).

These preliminary observations do not represent a comprehensive set of considerations for AIS implementation but instead demonstrate why a task analysis can be helpful in identifying display requirements for AIS.
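The observation that tow length and beam change as barges are added or removed, and that these data could be linked to the lock ticket, can be illustrated with a small calculation. The Python sketch below is hypothetical: it assumes rectangular tows of identical barges of 195 by 35 ft (a common jumbo hopper barge size), a towboat of assumed dimensions, and a lock chamber 110 ft wide; real values would come from the tow configuration recorded on the lock ticket, and the class and function names are invented for illustration.

```python
from dataclasses import dataclass

# Illustrative assumptions only; real values would come from the lock ticket.
BARGE_LENGTH_FT = 195
BARGE_BEAM_FT = 35


@dataclass
class Tow:
    """Hypothetical rectangular tow: identical barges pushed ahead of a towboat."""
    barges_long: int          # barges in each string, end to end
    barges_wide: int          # number of side-by-side strings
    towboat_length_ft: float
    towboat_beam_ft: float

    def length_ft(self):
        # Overall length: barge strings plus the towboat astern of them.
        return self.barges_long * BARGE_LENGTH_FT + self.towboat_length_ft

    def beam_ft(self):
        # Overall beam: the wider of the barge array and the towboat.
        return max(self.barges_wide * BARGE_BEAM_FT, self.towboat_beam_ft)


def fits_single_lockage(tow, chamber_length_ft, chamber_width_ft):
    """True if the whole tow fits the lock chamber without being broken up."""
    return tow.length_ft() <= chamber_length_ft and tow.beam_ft() <= chamber_width_ft


# A 15-barge tow (5 long by 3 wide) with an assumed 150 x 45 ft towboat.
tow = Tow(barges_long=5, barges_wide=3, towboat_length_ft=150, towboat_beam_ft=45)
longer = Tow(barges_long=6, barges_wide=3, towboat_length_ft=150, towboat_beam_ft=45)

for label, t in (("15 barges", tow), ("18 barges", longer)):
    print(label, t.length_ft(), "ft x", t.beam_ft(), "ft;",
          "1,200-ft lock:", fits_single_lockage(t, 1200, 110),
          "600-ft lock:", fits_single_lockage(t, 600, 110))
```

With these assumed dimensions, the 15-barge tow is 1,125 ft overall and fits a 1,200-ft chamber but not a 600-ft one; adding a sixth barge to each string makes it too long even for the larger lock. Deriving these values from the tow configuration, rather than having the mariner re-enter them, is one way to reduce the data entry burden noted above.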

Integration of AIS with Other Bridge Systems

A strong tendency in technology development in the maritime industry is to create stand-alone systems that require the mariner to integrate the information from each system to make decisions. One strategy for AIS development is to treat AIS as a separate system that is independent of the automatic radar plotting aids (ARPA), electronic chart, radio, and other communication and navigation equipment. A stand-alone AIS simplifies the AIS design process but may place a substantial burden on the mariner and severely undermine the utility of the AIS. The mariner may be forced to mentally integrate the information, a process that can be effortful and subject to error. In addition, the mariner may need to resolve differences in data about the same object (a target vessel, for example) presented by different instruments or displays. Only people can make these judgments, so the information should be configured in a way that supports them.
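Resolving differences between instruments can be partly supported by the system itself. One common technique is to associate each AIS report with the nearest radar (ARPA) track inside a distance gate and then flag pairs whose course or speed disagree. The Python sketch below uses a simple greedy nearest-neighbour association in a local east/north frame; the gate size, discrepancy thresholds, and track identifiers are assumptions for illustration, and a fielded system would need more robust tracking.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """A target report in a local frame centred on own ship (hypothetical format)."""
    ident: str
    x_nm: float        # east offset from own ship, nautical miles
    y_nm: float        # north offset from own ship, nautical miles
    course_deg: float  # true course, degrees
    speed_kn: float


def angle_diff(a, b):
    """Smallest absolute difference between two bearings, in degrees."""
    d = abs(a - b) % 360
    return min(d, 360 - d)


def correlate(ais, radar, gate_nm=0.2, max_course_diff=15.0, max_speed_diff=2.0):
    """Greedy nearest-neighbour association of AIS reports with radar tracks.

    Returns (pairs, discrepancies, radar_only). Discrepancies are associated pairs
    whose course or speed disagree beyond the (assumed) thresholds; radar_only lists
    radar tracks with no AIS report, e.g. vessels not transmitting AIS.
    """
    unused = {r.ident: r for r in radar}
    pairs, discrepancies = [], []
    for a in ais:
        best, best_d = None, gate_nm
        for r in unused.values():
            d = math.hypot(a.x_nm - r.x_nm, a.y_nm - r.y_nm)
            if d <= best_d:
                best, best_d = r, d
        if best is None:
            continue  # AIS target with no radar track inside the gate
        del unused[best.ident]
        pairs.append((a.ident, best.ident))
        if (angle_diff(a.course_deg, best.course_deg) > max_course_diff
                or abs(a.speed_kn - best.speed_kn) > max_speed_diff):
            discrepancies.append((a.ident, best.ident))
    return pairs, discrepancies, sorted(unused)


ais = [Track("AIS-A", 1.0, 2.0, 180, 6.0), Track("AIS-B", -0.5, 3.0, 90, 8.0)]
radar = [Track("R1", 1.05, 2.02, 182, 6.3), Track("R2", -0.45, 3.1, 120, 8.0),
         Track("R3", 0.0, 4.0, 0, 5.0)]

pairs, discrepancies, radar_only = correlate(ais, radar)
print("Associated:", pairs)
print("Conflicting data:", discrepancies)
print("Radar only:", radar_only)
```

The unmatched radar track (`R3`) is a reminder that AIS may describe only a subset of nearby vessels, while the flagged pair shows where the display could draw the mariner’s attention to conflicting data rather than leaving the reconciliation to the mariner.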

Another strategy is to integrate AIS information into the displays and controls of existing bridge equipment. The design must consider the type of judgments the mariner must make and the type of constraints under which the mariner must operate; the display needs to support these judgments and make the constraints visible. The specific integration of information and controls depends on a deep understanding of the demands and constraints of the maritime environment and the capabilities of various sensor and data sources. The development of an integrated system faces technological and display design challenges but could dramatically enhance the benefits of the AIS.

Frequently, new systems are developed without careful consideration of how they integrate with current capabilities. For example, navigation aids are installed on ships (Lee and Sanquist 2000), functions are added to flight management systems (Sarter and Woods 1995), and medical device displays are combined (Cook et al. 1990a), often without careful attention to how the information from these systems should be integrated. The general concept of functional integration has significant potential for enhancing human–system performance. Functional integration involves analysis of the information required by each function and the information produced by each function. The information inputs and outputs of the various system functions define links between functions that can either be identified and supported by designers or discovered and accommodated by operators. Operators who are forced to “finish the design” (i.e., to provide necessary links between functions) may experience increased cognitive load, frustration, and dissatisfaction with the system. Without careful consideration, AIS may be poorly integrated and may subject users to the task of finishing the design, which is laborious and prone to error.

As an example, problems can exist with personal pilot units that have the “attitude display” of own ship when only one antenna is used or there is no connection to the ship’s source of true heading (from gyro or Differential Global Positioning System). In this circumstance, own ship’s attitude transmitted to other ships will be in the direction of movement of the ship’s center of gravity, not its true compass heading. This mistaken display of heading gets worse as ship speed slows or cross-channel winds or currents affect own ship’s movement so that it has to “crab” its way down the channel. While those on the bridge of the ship will probably be aware of this, mariners on bridges of other ships receiving this display of attitude will get faulty information for such a target vessel. This is particularly problematic because some ships will have gyro heading information and will broadcast the actual ship orientation, and other ships that do not have gyros will broadcast heading information that does not match their actual heading.
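The size of this error follows from simple vector addition. The Python sketch below assumes that a unit without a heading input reports its course over ground (COG) in place of heading, and it models only a cross-channel current (set and drift); leeway from wind is ignored, and the function names are hypothetical.

```python
import math


def course_over_ground(heading_deg, speed_through_water_kn, set_deg, drift_kn):
    """Combine the ship's water-track vector with a current vector.

    Bearings are true degrees clockwise from north; set is the direction toward
    which the current flows. Returns (course over ground in degrees, speed over
    ground in knots).
    """
    h, s = math.radians(heading_deg), math.radians(set_deg)
    east = speed_through_water_kn * math.sin(h) + drift_kn * math.sin(s)
    north = speed_through_water_kn * math.cos(h) + drift_kn * math.cos(s)
    cog = math.degrees(math.atan2(east, north)) % 360
    return cog, math.hypot(east, north)


def heading_error(heading_deg, cog_deg):
    """Signed difference (COG minus true heading), wrapped to [-180, 180)."""
    return (cog_deg - heading_deg + 180) % 360 - 180


# Own ship heads 000 true; a 1-kn current sets toward 090 (across the channel).
for stw in (10, 4, 2):
    cog, sog = course_over_ground(0, stw, 90, 1)
    print(f"{stw:>2} kn through the water: reported 'heading' {cog:5.1f} deg, "
          f"error {heading_error(0, cog):+5.1f} deg")
```

With these assumptions, the reported direction of movement differs from the true heading by about 6 degrees at 10 kn, 14 degrees at 4 kn, and 27 degrees at 2 kn, which is why the discrepancy grows as the ship slows.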

Graphics are frequently used to document information flows and identify how systems can be integrated. However, graphics of complex systems are often incomprehensible and at best provide only a qualitative description of the system. Typically, each function is represented by an ellipse labeled with the function name. Information flows are usually designated with arrows. These graphics provide a visual representation that can sometimes promote intuitive insight; however, as they become more complex, they grow harder to draw effectively and to comprehend. Alternatively, an information flow may be represented as a matrix in which information flows between functions are summarized as functions arrayed against functions. In each instance where information flows from one function to another, the cell in the matrix contains a “1,” otherwise it contains a “0.” A benefit of the matrix approach is that it easily scales to accommodate increasingly complex systems, in contrast to graphical representations, which can quickly become unmanageable. A matrix representation makes possible a range of mathematical analyses that can reveal relationships that may not be obvious in graphic representation. This representation makes it possible to apply well-established graph theory techniques developed to study engineering systems and social networks (Borgatti et al. 1992; Luce and Perry 1949; Wasserman and Faust 1994) and optimize engineering design (Kusiak 1999; Lee and Sanquist 1996). The complex set of navigation, planning, and communication tasks that AIS may support makes it important to consider systematic approaches to integrate AIS with existing bridge technology.
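A minimal sketch of the matrix approach follows, in Python. The four functions are taken from Table 5-1, but the 0/1 flows between them are assumed for illustration. Row sums and column sums give each function’s outgoing and incoming information links, and Warshall’s algorithm computes the transitive closure, showing which functions can be affected, directly or indirectly, by the output of another; a nonzero diagonal entry in the closure would indicate a feedback loop.

```python
FUNCS = ["Hazard monitoring", "Meeting planning",
         "Establishing passing agreement", "Speed and position control"]

# Hypothetical information flows: FLOW[i][j] == 1 if function i sends information to j.
FLOW = [
    [0, 1, 0, 1],  # hazard monitoring -> meeting planning, speed and position control
    [0, 0, 1, 0],  # meeting planning -> establishing passing agreement
    [0, 0, 0, 1],  # establishing passing agreement -> speed and position control
    [0, 0, 0, 0],  # speed and position control (no outgoing flows in this toy model)
]


def transitive_closure(m):
    """Warshall's algorithm: r[i][j] == 1 if information can reach j from i by any path."""
    n = len(m)
    r = [row[:] for row in m]
    for k in range(n):
        for i in range(n):
            if r[i][k]:
                for j in range(n):
                    if r[k][j]:
                        r[i][j] = 1
    return r


out_degree = [sum(row) for row in FLOW]        # links leaving each function
in_degree = [sum(col) for col in zip(*FLOW)]   # links entering each function
closure = transitive_closure(FLOW)
cycles = [FUNCS[i] for i in range(len(FUNCS)) if closure[i][i]]

for name, row, o, i in zip(FUNCS, closure, out_degree, in_degree):
    print(f"{name:32s} out={o} in={i} reaches={row}")
print("Functions in feedback loops:", cycles)
```

Because the analysis works on the matrix rather than a drawing, it scales to the dozens of functions a full bridge analysis would involve.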

Translating Data into Information

New technology has the potential to overload people with data. AIS is no exception. To avoid this danger AIS design must carefully evaluate the tasks and decisions AIS is to support and then integrate, transform, and present data in a form that is most cognitively consistent with the tasks it is meant to support. If the AIS design does not address these considerations, the mariner will need to mentally integrate and transform the data. This burden may lead to errors and misunderstandings. For example, the minimum keyboard and display (MKD) forces mariners to translate numeric data concerning the latitude and longitude of surrounding vessels into a visual representation and assessment of collision hazard.

Mariners have developed successful strategies that rely heavily on representing the data in a format that makes perceptual judgments of collision potential possible (Hutchins 1990; Hutchins 1995b). For example, relative motion vectors of ships on a collision course point toward the mariner’s ship.
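The transformation the MKD leaves to the mariner can be made concrete. A standard way to turn two reported positions and velocities into a collision assessment is to compute the closest point of approach (CPA) and the time to CPA (TCPA) from the relative position and relative velocity; a CPA near zero corresponds to a relative motion vector pointing at own ship. The Python sketch below assumes constant course and speed and uses a flat-earth approximation that is reasonable only over short ranges; the function names and example positions are hypothetical.

```python
import math

NM_PER_DEG_LAT = 60.0  # one minute of latitude is approximately one nautical mile


def to_local_nm(lat, lon, ref_lat, ref_lon):
    """Flat-earth (equirectangular) projection to east/north offsets in nautical miles.

    Adequate only over the short ranges relevant to collision avoidance.
    """
    north = (lat - ref_lat) * NM_PER_DEG_LAT
    east = (lon - ref_lon) * NM_PER_DEG_LAT * math.cos(math.radians(ref_lat))
    return east, north


def velocity(course_deg, speed_kn):
    """East/north velocity components in knots from true course and speed."""
    c = math.radians(course_deg)
    return speed_kn * math.sin(c), speed_kn * math.cos(c)


def cpa_tcpa(own_pos, own_vel, tgt_pos, tgt_vel):
    """Closest point of approach (nm) and time to it (hours), assuming constant velocities."""
    rx, ry = tgt_pos[0] - own_pos[0], tgt_pos[1] - own_pos[1]  # relative position
    vx, vy = tgt_vel[0] - own_vel[0], tgt_vel[1] - own_vel[1]  # relative velocity
    v2 = vx * vx + vy * vy
    # Time that minimizes the separation; 0 if there is no relative motion or it is past.
    t = 0.0 if v2 == 0 else max(0.0, -(rx * vx + ry * vy) / v2)
    return math.hypot(rx + vx * t, ry + vy * t), t


# Own ship at 45N 90W heading 000 at 10 kn; a target 10 nm ahead heading 180 at 10 kn.
own = to_local_nm(45.0, -90.0, 45.0, -90.0)
tgt = to_local_nm(45.0 + 10 / 60, -90.0, 45.0, -90.0)
cpa, tcpa = cpa_tcpa(own, velocity(0, 10), tgt, velocity(180, 10))
print(f"Head-on: CPA {cpa:.2f} nm in {tcpa * 60:.0f} min")

# The same target offset 1 nm to the east passes clear.
tgt2 = to_local_nm(45.0 + 10 / 60, -90.0 + 1 / (60 * math.cos(math.radians(45.0))), 45.0, -90.0)
cpa2, tcpa2 = cpa_tcpa(own, velocity(0, 10), tgt2, velocity(180, 10))
print(f"Offset: CPA {cpa2:.2f} nm in {tcpa2 * 60:.0f} min")
```

A display that plots the relative motion vector, or shows CPA and TCPA directly, spares the mariner this mental arithmetic.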

Also, a well-designed AIS display should provide attentional guidance to mariners by, for example, highlighting changes and events (such as changes in the status or behavior of a nearby vessel), which may otherwise be missed as they appear in the context of a dynamic data-rich display. Data need to be put in context and presented in reference to related data to transform them into meaningful information. For example, simulations of possible avoidance maneuvers on the screen are useful only if they can be viewed in the context of a map depicting surrounding land masses or water depths. Finally, not all AIS information may be required at all times. Depending on task and task context, the information that is presented may change automatically, or, in a more human-centered design, mariners should be enabled to tailor the information content and display to their changing needs (Guerlain and Bullemer 1996).
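Highlighting changes requires the display to compare successive reports for each target. The Python sketch below is a hypothetical illustration: the thresholds for what counts as a notable course or speed change are placeholders that would need to be set through task analysis and testing with mariners, and the target identifiers and status strings are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TargetState:
    """Hypothetical snapshot of one target's reported state."""
    course_deg: float
    speed_kn: float
    nav_status: str


# Placeholder thresholds; real values would come from task analysis and user testing.
COURSE_CHANGE_DEG = 10.0
SPEED_CHANGE_KN = 2.0


def angle_diff(a, b):
    """Smallest absolute difference between two bearings, in degrees."""
    d = abs(a - b) % 360
    return min(d, 360 - d)


def changes_to_highlight(previous, current):
    """Compare two snapshots {target_id: TargetState} and list changes worth highlighting."""
    alerts = []
    for tid, now in current.items():
        before = previous.get(tid)
        if before is None:
            alerts.append((tid, "new target"))
            continue
        if angle_diff(before.course_deg, now.course_deg) >= COURSE_CHANGE_DEG:
            alerts.append((tid, "course change"))
        if abs(now.speed_kn - before.speed_kn) >= SPEED_CHANGE_KN:
            alerts.append((tid, "speed change"))
        if now.nav_status != before.nav_status:
            alerts.append((tid, "status change"))
    for tid in sorted(previous.keys() - current.keys()):
        alerts.append((tid, "target lost"))
    return alerts


previous = {"A": TargetState(180, 6.0, "under way"), "B": TargetState(90, 8.0, "under way")}
current = {"A": TargetState(200, 6.0, "under way"), "C": TargetState(0, 5.0, "under way")}
alerts = changes_to_highlight(previous, current)
print(alerts)
```

Keeping the comparison separate from the presentation leaves room for the mariner-tailored displays described above, for example letting the operator suppress some alert types in congested waters.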

Operational Differences and Implications for AIS Interface Design

AIS may be used in a range of operational environments and vessel types. The ideal AIS interface for an oceangoing tanker may be quite different from an interface for a towing vessel on an inland waterway. The operational differences have implications for the way in which AIS may be integrated with other bridge systems and how data are combined into useful information. For example, AIS for a towing vessel on inland waterways that have numerous locks should consider the information that needs to be transferred and the vessel coordination associated with locks. AIS for these vessels must also consider the demands of coordinating passing situations in narrow channels. To maximize the utility of AIS, the human interface must be designed for the particular demands of each operational environment. A useful AIS interface for oceangoing vessels may not be the same as a useful interface for inland towing vessels.

HUMAN/AUTOMATION PERFORMANCE ISSUES

Automation such as AIS has tremendous potential to extend human performance and improve safety. However, recent disasters indicate that it is not uniformly beneficial. In one case, pilots failed to intervene and take manual control even as the autopilot crashed the Airbus A320 they were flying (Sparaco 1995). In another instance, an automated navigation system malfunctioned and the crew failed to intervene, allowing the Royal Majesty cruise ship to drift off course for 24 hours before it ran aground (Lee and Sanquist 2000; NTSB 1997). On the other hand, people are not always willing to rely on automation when appropriate. Operators rejected automated controllers in paper mills, which undermined the potential benefits of automation (Zuboff 1988). As information technology becomes more prevalent, poor partnerships between people and automation will become increasingly costly and catastrophic (Lee and See in press). For this reason it is important to consider the problems with automation that might also plague AIS if it is not implemented with proper concern for supporting the mariner.

Such flawed partnerships between automation and people can be described in terms of misuse and disuse (Parasuraman and Riley 1997). Misuse refers to failures that occur when people inadvertently violate critical assumptions and rely on automation inappropriately. Disuse refers to failures that occur when people reject the capabilities of automation. Misuse and disuse are two forms of inappropriate reliance on automation that can compromise safety and profitability. Understanding how to mitigate them is a critically important problem for AIS development. When AIS is first introduced, it is likely to suffer from disuse because mariners may be hesitant to trust it. After several years, mariners may come to depend on AIS, and it may then suffer from misuse as mariners trust it too much and become complacent about potential failures.

Several more specific problems that underlie the general problems of misuse and disuse have been identified (Bainbridge 1983; Lee and Sanquist 1994; Norman 1990; Sarter and Woods 1994; Wickens et al. 1997; Wiener and Curry 1980). The following specific problems with automation seem relevant to AIS development and implementation:

-

Trust calibration,

-

Configuration errors,

-

Workload,

-

Skill loss and training, and

-

Disrupted human interactions.

The following sections briefly describe each of these issues, its connection to AIS, and its implications for interface design and training.

Trust Calibration

Trust has emerged as a particularly important factor in understanding how people manage automation (Lee and Moray 1992; Lee and Moray 1994). Just as trust guides delegation and monitoring of human subordinates, trust also guides delegation and monitoring of tasks performed by an automated system (Sheridan 1975). Trust has been defined as the attitude that an agent will help achieve an individual’s goals in a situation characterized by uncertainty and vulnerability (Lee and See in press).

Trust in automation is not always well calibrated: sometimes it is too low (distrust), sometimes too high (overtrust) (Lee and See in press; Parasuraman and Riley 1997). Distrust is a type of mistrust: the person fails to trust the automation as much as is appropriate. For example, in some circumstances people prefer manual control to automatic control, even when both are performing equally well (Liu et al. 1993). A similar effect is seen with automation such as AIS that enhances perception. People are biased to rely more on themselves than on automation (Dzindolet et al. 2002). The consequence of distrust is not necessarily severe, but it may lead to inefficiency. In the case of AIS, distrust may lead mariners to ignore the AIS and therefore fail to benefit from the technology.

In contrast to distrust, overtrust of automation, also referred to as complacency, occurs when people trust the automation more than is appropriate. It can have severe negative consequences if the automation is imperfect (Molloy and Parasuraman 1996; Parasuraman et al. 1993). When people perceive a device to be perfectly reliable, there is a natural tendency to cease monitoring it (Bainbridge 1983; Moray 2003). As AIS becomes commonplace and mariners see it work flawlessly for long periods, they may develop a degree of complacency and fail to monitor it carefully.

Configuration Errors

Another problem with automation, and one that is likely to plague AIS use, is configuration errors. Configuration errors occur when data are entered or modes are selected that cause the system to behave inappropriately. The mariner may incorrectly “set up” the automation. As an example, nurses sometimes make errors when they program systems that allow patients to administer periodic doses of painkillers intravenously. If the nurses enter the wrong drug concentration, the system will faithfully do what it was told to do and give the patient an overdose (Lin et al. 2001). With AIS a similar problem could occur if the mariner entered the wrong length for the vessel. The AIS might then indicate a meeting point as being safe even though it is not. To combat this problem, data entry should be minimized, and the entered data should be displayed graphically so that typographical errors are easy to identify.
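Entry errors such as a wrong vessel length become easier to catch if each manual entry is checked and any problems are reported in plain language. The sketch below assumes the AIS convention of reporting ship size as distances from the position antenna to the bow (A), stern (B), port side (C), and starboard side (D), with A and B limited to 511 m and C and D to 63 m. The `registered_length_m` reference value and the 10 percent tolerance are hypothetical illustrations, not requirements.

```python
from dataclasses import dataclass

# Field limits (metres) for the AIS reference-point dimensions; the top
# value of each field stands for "that value or more".
MAX_A_B = 511
MAX_C_D = 63


@dataclass
class Dimensions:
    a: int  # antenna to bow
    b: int  # antenna to stern
    c: int  # antenna to port side
    d: int  # antenna to starboard side


def check_dimensions(dim: Dimensions, registered_length_m: float,
                     tolerance: float = 0.1) -> list[str]:
    """Return plain-language warnings about a manual dimension entry.

    registered_length_m and tolerance are hypothetical inputs: a reference
    length already known to the unit and an allowed fractional mismatch.
    """
    warnings = []
    fields = (("bow", dim.a, MAX_A_B), ("stern", dim.b, MAX_A_B),
              ("port side", dim.c, MAX_C_D), ("starboard side", dim.d, MAX_C_D))
    for name, value, limit in fields:
        if not 0 <= value <= limit:
            warnings.append(f"Distance to {name} ({value} m) is outside 0-{limit} m.")
    length = dim.a + dim.b  # overall length implied by the entry
    if registered_length_m > 0 and abs(length - registered_length_m) > tolerance * registered_length_m:
        warnings.append(
            f"Entered length {length} m differs from registered length "
            f"{registered_length_m:g} m; check for a typing error."
        )
    return warnings
```

A check of this kind complements the graphical echo recommended above rather than replacing it. A ship outline drawn from A, B, C, and D would make a transposed or truncated value visible at a glance.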

Workload

Automation is often introduced with the goal of reducing operator workload. However, automation sometimes has the effect of reducing workload during already low-workload periods and increasing it during high-workload periods. In this way, clumsy automation can make easy tasks easier and hard tasks harder. For example, a flight management system tends to make the low-workload phases of flight (such as straight and level flight or a routine climb) easier, but it tends to make high-workload phases (such as maneuvers in preparation for landing) more difficult; pilots have to share their time between landing procedures, communication, and programming the flight management system (Cook et al. 1990b; Woods et al. 1991). AIS may be prone to the same problems of clumsy automation, particularly if there is a high level of text messages during the already high-workload periods associated with transiting restricted waters or coming into a port. Clumsy automation can be avoided by minimizing data entry and configuration requirements and reducing the number of adjustments the mariner might need to make in high-workload periods.
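For incoming text messages, reducing demands during high-workload periods could take the form of a simple deferral policy. The sketch below is hypothetical. It assumes that the receiving software can flag safety-related messages and that some external signal (for example, the officer's own selection) indicates a high-workload period. Safety-related messages are always presented at once. Routine messages are held and released in arrival order when the busy period ends.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class TextMessage:
    sender: str
    text: str
    safety_related: bool  # hypothetical flag set by the receiving software


class MessagePresenter:
    """Hypothetical policy: hold routine text messages during busy periods."""

    def __init__(self) -> None:
        self.high_workload = False
        self._deferred: deque = deque()  # routine messages awaiting a quieter moment

    def receive(self, msg: TextMessage) -> list[TextMessage]:
        """Return the messages to present to the mariner right now."""
        if self.high_workload and not msg.safety_related:
            self._deferred.append(msg)
            return []
        return [msg]

    def set_workload(self, high: bool) -> list[TextMessage]:
        """Update the workload state; when it drops, release held messages in order."""
        self.high_workload = high
        if high:
            return []
        released = list(self._deferred)
        self._deferred.clear()
        return released
```

The design keeps the mariner in control of when the busy period ends, so that the policy does not itself add a configuration task at a high-tempo moment.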

Skill Loss and Training

Errors can occur when people lack the training to understand the automation. As increasingly sophisticated automation eliminates many physical tasks, complex tasks may appear to become easy, leading to less emphasis on training. Misunderstanding of new radar and collision avoidance systems has contributed to accidents (NTSB 1990). One contributor to these accidents is training and certification that fail to reflect the demands of the automation. An analysis of the examination used by the U.S. Coast Guard (USCG) to certify radar operators indicated that 75 percent of the items assess skills that have been automated and are not required by the new technology (Lee and Sanquist 2000). Paradoxically, the new technology makes it possible to monitor a greater number of ships, which increases the need for interpretive skills such as understanding the rules of the road and the automation. These are the very skills that are underrepresented on the test. Furthermore, such knowledge and skills may degrade because they are used only in rare but critical situations (Lee and Sanquist 2000). Training programs and certification that consider the initial and long-term requirements of AIS can help combat potential skill loss and the additional demands that AIS may place on mariners.

Disrupted Human Interactions

Crew interactions and teamwork are critical for effective navigation. In the aviation domain many problems have been traced to poor interactions between crew members (Helmreich and Foushee 1993). In many circumstances, subtle communications conveyed by actions or voice inflection carry valuable information, and automation may sometimes eliminate these information channels. A digital datalink system that shares several similarities with AIS is being developed. Datalink is intended to replace air-to-ground radio communications with typed digital messages that appear on a display panel. This would eliminate the informal information currently conveyed by voice inflection (Kerns 1991). Controllers may no longer be able to detect stress and confusion in a pilot’s voice, nor will pilots be able to hear the sense of urgency in the tone of a controller’s voice commanding immediate compliance (Wickens et al. 1997). In addition, the physical interaction between crew members and the equipment can enhance communication and error recovery. In maritime navigation the way the position is plotted can indicate its accuracy (Hutchins 1995a). This indication may be lost with AIS, where every target is plotted with apparently the same accuracy. The physical movements of team members, such as reaching for a switch, can communicate intentions. Automation can eliminate such communication by channeling multiple functions and activities through a single panel (Segal 1994). To counteract these potential problems, AIS design might usefully consider the joint information needs of the interacting crew in addition to the needs of each individual crew member.

SKILL REQUIREMENTS

The successful introduction of AIS depends on understanding mariners’ capabilities and training requirements. While AIS has certain unique features and operating functions, it is one of many tools intended to assist the mariner in accomplishing a myriad of ship operational tasks. As such, it should be treated as part of the total bridge navigational system when deciding how best to provide operator training. Operator training is a complex subject in itself, and the committee has not fully investigated it, but its importance is clear.

Most of the deep-water mariners who will be using AIS will not be subject to U.S. flag jurisdiction. Thus, their capabilities and training will be governed by international agreements or by the national regulations of the vessel’s flag state. In contrast, as previously noted, the majority of the U.S. inland mariners who will be expected to use AIS are employed aboard U.S.-flag commercial towing vessels, coastal traffic and tug/barges, passenger vessels, ferryboats, and offshore supply vessels, which are subject to USCG regulations. The capabilities and training needs of all of these mariners are as varied as the vessels they operate. However, because these mariners share the same waterways, certain basic principles for AIS training may be common to all of them and worth considering.

For example, a number of chart display units are in active use in U.S. ports and waterways, and the experience of the mariners who use these systems is a reasonable gauge of the level of training needed to operate AIS units. Electronic chart systems are in general use on a number of vessel types. AIS-like units have been in use in New Orleans, Tampa, and San Francisco, and AIS is being used on the St. Lawrence Seaway as well.

Because commercial towing vessels and other inland waterway vessels are equipped with modern radar, VHF/FM radios, magnetic compasses, and rate-of-turn indicators, current operators must receive adequate training with these systems. Many vessels are also fitted with one or more of the following: GPS, chart plotters, or electronic chart systems (ECS). As more mariners use this equipment in day-to-day operations, the addition of AIS may not impose a large additional training burden. This holds provided that the display is appropriate and the operator is already skilled with existing systems. The particular training requirements will depend on the functionality of the AIS display.

Under present USCG regulations, masters and mates of towing vessels over 26 feet are required to attend radar training as a condition of licensing. Refresher training is required on a 5-year cycle. Because of this requirement, the addition of AIS training might be considered as an adjunct to the radar endorsement. In the past, certification examinations have not always changed to reflect the introduction of new technology (Lee and Sanquist 2000). It would be useful to review all vessel operator training and certification requirements to see how the introduction of AIS might be used to modify present standards rather than introduce new ones.

There will undoubtedly be a phase-in period for AIS carriage requirements, and this period could also be used to phase in any new training requirements. Any AIS-specific training could be synchronized with the mariner’s normal radar training cycle. For example, mariners could satisfy AIS training requirements concurrently with the first renewal of a radar certificate following the implementation of the carriage requirements. This approach would allow mariners to better meet carriage requirement deadlines and ease the burden on examination centers. The committee believes that training will always be an important factor in the successful introduction of AIS displays. It also believes that a training program will be most useful when it is integrated with a regular, comprehensive operator training program.

DESIGNING THE AIS INTERFACE USING HUMAN FACTORS PRINCIPLES

While it will ultimately be important to tailor the AIS interface design to its intended uses, users, and context, certain general principles should be applied to its design and evaluation. For example, Smith and Mosier (1986) offer 944 such guidelines, Brown (1988) discusses 302 guidelines, and Mayhew (1992) includes 288 guidelines. Probably the most widely recognized usability heuristics are those based on a factor analysis of 249 usability problems by Nielsen and Levy (1994), which resulted in a concise list of 10 heuristics. This large body of guidelines can help direct AIS interface design, but some guidelines are more critical for AIS design than others. The following sections identify human factors design principles that appear to be particularly critical for AIS design. They also discuss recent trends in information representation that may complement the traditional reliance on visual displays.

Human Factors Considerations for AIS Interface Design

The following paragraphs briefly discuss some of the best-known and most widely accepted heuristics and design principles (from Nielsen and Levy 1994; Shneiderman 1998; Wickens et al. 1997) that should, at a minimum, be applied in evaluating proposed AIS shipboard interfaces. The shipboard environment presents several critical considerations that differentiate it from the typical desktop and control room settings in which these guidelines are usually applied. Shipboard displays must accommodate the full range of illumination so that they can be operated by day and by night. At night, they must remain visible without being excessively bright. Shipboard interfaces should also be operable in heavy seas, wet conditions, and high-vibration situations. The physical layout of the bridge should also influence design. The shipboard operator is not likely to be monitoring displays constantly, and the placement of displays relative to other navigation and communication aids may influence their utility. Beyond these general considerations, the following 13 specific interface principles should be taken into account in AIS design:

-

Ensure visibility of system status and behavior: The system should always keep the user informed about its status and activities through timely and effective feedback. This heuristic is essential to a user-centered design paradigm and vital for effective coordination and cooperation in a joint human–machine system. Yet it is one of the most frequently violated principles. Many current interfaces focus on the presentation of status information but fail to highlight changes and events. They are often characterized by data availability rather than system observability, in which the machine plays an active role in supporting attention allocation and information integration. In the context of AIS, for example, it has been proposed that some targets will drop out of the display in the event of system overload. It will be important to provide clear indications of such changes in display mode.

-

Create a match between the system and the real world: The system should speak the user’s language. It should follow conventions in the particular domain and use words, phrases, and concepts that are familiar to the mariner rather than system- or engineering-oriented terms. This will reduce training time because it avoids the need for mariners to adapt to the system, and it will help avoid errors (e.g., formatting errors in data entry) and misunderstandings between the system and the mariner. This also means that menu options and error messages should use terms that are meaningful to the mariner rather than terms that are familiar to the software developers. This requires a high degree of familiarity with the tasks and existing navigation tools of the mariner. The developers should identify and use the measurement units of the mariner.

-

Support user control and freedom: Users sometimes choose system functions by mistake. In those cases, they need a clearly marked “emergency exit” to leave the unwanted state without having to go through a lengthy dialogue. For example, the system should support the easy reversal of actions through “undo” and “redo” functions. This will be especially important in high-tempo operations. More generally, adhering to this design principle helps ensure that the mariner’s attention is focused on the task at hand rather than on operating the system. In other words, attention should be focused on vessel navigation, not on how to use or interact with the device being used to send or receive information. Such inappropriate attentional fixations have been observed in other domains, where they have contributed to incidents and accidents. The need to support user control and freedom also concerns the changing conditions the mariner faces and the need to allow the mariner to adjust the features of the AIS to accommodate these conditions. One simple example is the need to adjust the display to reflect the change in lighting from day to night.

-

Ensure consistency: This principle calls for the use of identical terminology in menus and help screens and for the consistent use of colors and display layout. Users should not have to wonder whether different words, situations, or actions mean the same thing, or where they can find information or controls. Instead, designers should attempt to capitalize on user expectations that are derived from learned patterns. Compliance with this heuristic requires consideration not only of each individual display but also of the environment in which it will be used. Users learn certain color-coding schemes or symbols, and they are likely to transfer their interpretation of information from known to new displays. As mentioned in Chapter 4, one problem with proposed AIS designs that has been identified already is that symbology requirements have not yet been fully harmonized across different electronic navigation platforms. Using accepted approaches to symbol development could make AIS symbols more visible and interpretable (ISO 1984).

-

Support error prevention, detection, and recovery: For many years, the emphasis has been on preventing errors through training and design. More recently, it has been acknowledged that errors will continue to occur despite the best intentions. It is therefore critical to support operators in detecting when an error has been made, why it occurred, and how it can be corrected. Systems can support these three stages of error management by various means, such as (a) expressing error messages in plain language (no codes); (b) clearly indicating the nature of, and reasons for, the problem; and (c) suggesting promising solutions to the problem.

-

Require recognition rather than recall: The designer should place explicit visible reminders or statements of rules and actions in the environment so that they are available at the appropriate time and place. This principle helps reduce the need for memorization and is essentially a rephrasing of Norman’s (1988) call for “putting knowledge in the world rather than the head” of the user. One example where this principle applies in the context of AIS is the need for officers to manually enter information on the navigational status of the ship. In the absence of external reminders, this requirement can easily be forgotten by an officer who faces a wide range of competing attentional demands.

-

Support flexibility and efficiency of use: Flexibility and efficiency of use can be supported by enabling experienced users to employ shortcuts or accelerators (which may be invisible to the novice user), such as hidden commands, special keys, or abbreviations. While this principle suggests that the user should be allowed to tailor the interface for frequent actions, it does not apply in all contexts. Support for tailoring can create difficulties in collaborative environments (i.e., environments where several operators use the same piece of equipment, such as a ship’s bridge). In such settings it can lead to misunderstandings and confusion, so it should be used sparingly. Thus, an analysis of the appropriate level (or levels) of AIS display flexibility and efficiency may be warranted.

-

Avoid serial access to highly related data: In many cases, operators need to access and integrate related data to form an overall assessment of a problem or situation. It is desirable to avoid requiring that these data be accessed serially, because serial access imposes considerable memory demands on the operator. This principle calls for the integration of AIS information with existing related information on the bridge [such as the electronic chart display and information system (ECDIS) display].

-

Apply the proximity compatibility principle: If two or more sources of information must be mentally integrated to complete a task, they should be presented in close display proximity. In contrast, if one piece of information should be the subject of focused attention, it should be clearly separated from other sources of information. Proximity can be created spatially, by configuring data in a common pattern, or by using similar colors for the related elements. This principle is related to the heuristic of minimizing information access costs. Frequently accessed sources of information should be positioned where the cost of traveling between them is minimal. In other words, the user should not be required to navigate through lengthy menus to find information.

-

Avoid new interface management tasks at high-tempo, high-criticality times: A common problem with many automated systems is that they require operators to enter or access data at times when they are already very busy. This has been referred to as “clumsy automation”—automation that helps the least or gets in the way when support is needed the most. In the context of AIS, for example, it can be problematic to expect the officer of the watch to update information on the navigational status of the vessel when the change in status also requires the execution of other actions more directly related to safety.

-

Support predictive aiding: Many tasks require the anticipation of future states and events. A predictive display can help the user make such predictions and reduce the cognitive load of performing this task unaided. Humans have difficulty combining complex relationships of dynamic systems to predict future events, particularly when there are delays in feedback (Brehmer and Allard 1991). For this reason, AIS should support mariners in simulating, and thus evaluating, possible evasive maneuvers by visualizing them as part of a graphic AIS display or as part of an integrated AIS-ARPA/ECS/ECDIS/radar interface.

-

Create representations consistent with the decision to be supported: Operator decision making often depends on the visual representation of information. In particular, graphical integration of data makes it possible for people to see complex relationships that might otherwise be overlooked (Vicente 1992). Relative and absolute motion vectors illustrate the power of representation. Relative motion vectors make potential collisions obvious, whereas absolute vectors show the same information but make collisions more difficult to detect. A display is useful for decision making only if the operator understands the format of the information it presents and how that information should be interpreted. A text display of position and motion vector data would make collision detection extremely difficult. However, some graphical representations, such as misaligned maps, can also be misleading and induce errors (Rossano and Warren 1989). Graphical displays that show position information with a precision exceeding the resolution of the underlying data can easily be misinterpreted. Thus, the resolution of the display should match the precision of the underlying data. The MKD demonstrates a mismatch between display representation and the decision to be supported. Anecdotal information from active mariners who have used MKDs suggests that making hazard assessments and collision avoidance decisions from the MKD’s digital readouts of latitude and longitude is much more difficult than using an appropriate graphic display of the same data.

-

Consider the principle of multiple resources: The proposed introduction of new systems and interfaces to highly complex and dynamic environments, such as the modern ship bridge, has raised concerns about possible data overload. One promising approach to facilitate the processing of large amounts of data is to distribute information across multiple modalities (such as vision, hearing, and touch) rather than rely increasingly and almost exclusively on presentation of visual information. This principle is discussed in more detail in the following section.
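The visibility principle above notes that targets may drop out of the display during system overload. The sketch below shows, under stated assumptions, how such a change could be surfaced to the mariner instead of being applied silently. It assumes that targets are keyed by MMSI and that the display has a single range setting. The `Target` type, the message wording, and the classification rule are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    mmsi: int        # Maritime Mobile Service Identity of the target
    range_nm: float  # range from own ship at the last update


def display_change_notices(previous: list[Target], current: list[Target],
                           display_range_nm: float) -> list[str]:
    """Describe targets that were shown at the last update but are gone now.

    Hypothetical rule: a target last seen at or beyond the display range is
    assumed to have left it; any other disappearance is flagged as a
    possible overload drop-out so the mariner is not left guessing.
    """
    still_shown = {t.mmsi for t in current}
    notices = []
    for t in previous:
        if t.mmsi in still_shown:
            continue
        if t.range_nm >= display_range_nm:
            notices.append(f"Target {t.mmsi} has left the display range.")
        else:
            notices.append(
                f"Target {t.mmsi} (last at {t.range_nm:.1f} NM) is no longer "
                "shown; the display may be limiting the number of targets."
            )
    return notices
```

A target can also vanish because it stopped transmitting, which is why the second notice says only that the display *may* be limiting targets.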
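The navigational-status example appears under both the recognition principle and the high-tempo principle above. It suggests putting a reminder in the world instead of relying on the officer's memory. The sketch below compares the manually entered status with speed over ground and proposes an update without forcing one. The status codes are a subset of those defined for AIS (0 under way using engine, 1 at anchor, 5 moored, 8 under way sailing). The speed thresholds are hypothetical.

```python
from typing import Optional

# Subset of AIS navigational status codes (ITU-R M.1371).
UNDER_WAY_USING_ENGINE = 0
AT_ANCHOR = 1
MOORED = 5
UNDER_WAY_SAILING = 8

STATIONARY = {AT_ANCHOR, MOORED}
UNDER_WAY = {UNDER_WAY_USING_ENGINE, UNDER_WAY_SAILING}


def status_reminder(nav_status: int, sog_knots: float,
                    moving_threshold_kn: float = 2.0,
                    stopped_threshold_kn: float = 0.5) -> Optional[str]:
    """Return a reminder if the entered status looks inconsistent with speed.

    Thresholds are hypothetical. The function only proposes an update; the
    officer decides whether the status actually needs to change.
    """
    if nav_status in STATIONARY and sog_knots > moving_threshold_kn:
        return ("Vessel is making way but AIS status is 'at anchor' or "
                "'moored'. Update navigational status?")
    if nav_status in UNDER_WAY and sog_knots < stopped_threshold_kn:
        return ("Vessel appears stopped but AIS status is 'under way'. "
                "Update navigational status?")
    return None
```

A vessel can legitimately be under way while not making way. A practical version would therefore need a persistence time before prompting, so that the reminder does not itself become a source of clumsy automation.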
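For latitude readouts, the guidance that display resolution should match data precision can be made concrete. Because one minute of latitude is approximately one nautical mile (1852 m), each decimal place of minutes corresponds to a roughly fixed ground distance. The sketch below chooses the finest display step that is still no smaller than a stated position uncertainty. The uncertainty is an assumed input, since AIS itself reports only a coarse accuracy flag (better or worse than 10 m).

```python
# One minute of latitude is approximately one nautical mile (1852 m).
METRES_PER_MINUTE = 1852.0


def minute_decimals(uncertainty_m: float, max_decimals: int = 4) -> int:
    """Number of decimal places of arc-minutes to display.

    Picks the finest display step that is still no smaller than the stated
    uncertainty, so the readout never implies more precision than the data.
    """
    decimals = 0
    while (decimals < max_decimals
           and METRES_PER_MINUTE / 10 ** (decimals + 1) >= uncertainty_m):
        decimals += 1
    return decimals


def format_latitude(lat_deg: float, uncertainty_m: float) -> str:
    """Format a latitude as degrees and decimal minutes at a matched precision."""
    hemisphere = "N" if lat_deg >= 0 else "S"
    lat = abs(lat_deg)
    degrees = int(lat)
    n = minute_decimals(uncertainty_m)
    minutes = round((lat - degrees) * 60, n)
    if minutes >= 60:  # rounding can carry into the next whole degree
        degrees, minutes = degrees + 1, 0.0
    width = 3 + n if n else 2  # two integer digits, plus point and decimals
    return f"{degrees:02d}°{minutes:0{width}.{n}f}'{hemisphere}"
```

With a 10 m uncertainty, the sketch shows hundredths of a minute (a step of about 18.5 m) rather than thousandths (about 1.9 m). Longitude would need separate treatment, because a minute of longitude spans 1852 m multiplied by the cosine of the latitude.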

Multimodal Shipboard AIS Displays

The introduction of computerized systems to a variety of domains has increased the potential for collecting, transmitting, and transforming large amounts of data. However, the ability of human operators to digest and interpret those data has not kept pace. Practitioners are bombarded with data, but they are not supported effectively in accessing, integrating, and interpreting those data. The result is data overload. One of the main reasons for observed problems with data overload is the increasing, almost exclusive, reliance on visual information presentation in interface design. The same tendency can be observed in the development of proposed AIS displays. Presenting information exclusively on a dedicated visual display or integrated with existing visual interfaces may create difficulties for the mariner, whose current tasks already impose considerable visual attentional demands.

Multimodal information presentation—the presentation of information via various sensory channels such as vision, hearing, and touch—is one means of avoiding resource competition and the resulting performance breakdowns. The distribution of information across sensory channels is a means of enhancing the bandwidth of information transfer (Sklar and Sarter 1999). It takes into consideration the benefits and limitations of the various modalities. For example, visual representation seems most appropriate for conveying large amounts of complex detailed information, especially in the spatial domain. A related advantage of visual displays is their potential for permanent presentation, which affords delayed and prolonged attending. Sound, in contrast, is transient and omnidirectional, thus allowing information to be picked up without requiring a certain user location or orientation. Since people cannot “close their ears,” auditory presentation is well suited for time-dependent information and for alerting functions, especially since urgency mappings and prioritization are relatively easy to incorporate in this channel (Hellier et al. 1993).

Auditory alerts and warnings are the most commonly developed auditory displays, but recent research suggests that sound can be used in other ways. Sonification differs from traditional auditory warnings in that it can convey a rich array of continuous dynamic information. Examples include the static of a Geiger counter, the beep of a pulse oximeter, or the click of a rate-of-turn indicator. Several recent applications of sonification demonstrate its potential. It has reduced error recovery times when tied to standard user interface elements (Brewster 1998; Brewster and Crease 1999). Sonification has also aided in understanding how derivation, transformation, and interpolation affect the uncertainty of data in visualization (Pang et al. 1997). More generally, sonification has shown great potential in domains as diverse as remote collaboration, engineering analyses, scientific data interpretation, and aircraft cockpits (Barrass and Kramer 1999). These applications show that sonification can convey the subtle changes in complex time-varying data that are needed to promote better coordination between people and automation. Because sound does not require the focused attention that a visual display does, it may help operators monitor complex situations. Just as with visual displays, combining sounds generates a gestalt from the interaction of the components (Brewster 1997). These findings support a theoretical argument that sonification can be a useful complement to visual displays.
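The Geiger counter and rate-of-turn examples suggest a parameter-mapping form of sonification, in which continuous data drive properties of a sound. The sketch below is a hypothetical mapping, not a tested design. CPA distance controls pitch (a closer CPA gives a higher pitch), and TCPA controls the interval between beeps (a sooner CPA gives faster beeps). All ranges are illustrative assumptions, the caller is assumed to sonify only approaching targets, and audio output itself is omitted.

```python
def cpa_to_pitch_hz(cpa_nm: float, low_hz: float = 220.0,
                    high_hz: float = 880.0, max_cpa_nm: float = 2.0) -> float:
    """Map CPA distance to tone frequency: the closer the CPA, the higher the pitch.

    Uses an exponential (log-frequency) mapping so that equal changes in CPA
    give roughly equal musical intervals. All parameters are hypothetical.
    """
    fraction = min(max(cpa_nm / max_cpa_nm, 0.0), 1.0)  # 0 = collision, 1 = clear
    return high_hz * (low_hz / high_hz) ** fraction


def tcpa_to_beep_interval_s(tcpa_min: float, fastest_s: float = 0.2,
                            slowest_s: float = 2.0,
                            horizon_min: float = 30.0) -> float:
    """Map time to CPA to the pause between beeps, like a Geiger counter's click rate."""
    fraction = min(max(tcpa_min / horizon_min, 0.0), 1.0)
    return fastest_s + (slowest_s - fastest_s) * fraction
```

With these assumed values, 2 NM of CPA spans two octaves (880 Hz down to 220 Hz), and a CPA of 1 NM sounds at 440 Hz. Judging intervals on a log-frequency scale like this tends to be easier by ear than judging a linear frequency scale.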

Another sensory channel that is still underutilized is the haptic sense. The sense of touch shares a number of properties with the auditory channel. Most important, cues presented via these two modalities are transient in nature and difficult to miss, and thus are well suited for alerting purposes. The advantage of tactile cues over auditory feedback is their lower level of intrusiveness, which helps avoid unnecessary distractions. Also, like vision and hearing, touch allows for the concurrent presentation and extraction of several dimensions, such as frequency and amplitude in the case of vibrotactile cues. The distribution of information across sensory channels is not only a means of enhancing the bandwidth of information transfer; it can support the following additional functions:

-

Redundancy, where several modalities are used for processing the same information. Given the independence of error sources in different modalities, redundancy in human–computer interaction can support error detection and reduce the need for confirmation of one’s intention, especially in the context of safety-critical actions. For example, the AIS could have a redundant auditory alert for important warnings that are displayed on the screen.

-

Complementarity, where several modalities are used for processing different chunks of information that need to be merged. It has been suggested that such a complementary or synergistic use of modalities is in line with users’ natural organization of multimodal interaction.

-

Substitution, where one modality that has become temporarily or permanently unavailable is replaced by some other channel. This may become necessary in case of technical failures or changes in the environment (e.g., high ambient noise level). For example, the AIS could read text messages to the mariner, making it possible for the mariner to keep watching the surrounding vessels rather than reading messages on the display.

In summary, the design of a multimodal AIS interface may be a means of avoiding problems related to data overload. It may allow a reduction in competition among attentional resources and thus support effective attention allocation. For example, a graphic representation of the traffic situation can be combined with speech output or other AIS-specific auditory and tactile alerts that capture the officer’s attention in potential traffic conflicts or other critical events that may be missed because visual or auditory attention is focused on other tasks. In addition to creating multisensory system output, it will be desirable to consider different modalities for providing input to AIS. For example, in some circumstances, the use of a keyboard for AIS data entry may not be possible or desirable. In those cases, voice input or a touch screen could serve as alternatives.

Thus, the benefits and limitations of the combined use of input and output modalities should be explored, as well as the need for the adaptive use of modalities. An adaptive approach to the design of multimodal interfaces may be appropriate for various reasons. Factors that vary over time and that may require a shift in modality usage include the abilities and preferences of individual mariners, environmental conditions, task requirements and combinations, and degraded operations that may make certain channels unusable. For example, responsiveness to different modalities appears to shift from the visual to the auditory channel when subjects are in a state of aversive arousal (Johnson and Shapiro 1989). Modality expectations and the modality shifting effect also play a role.

The feasibility of multimodal interfaces also needs to be carefully evaluated. If a person expects information to be presented via a certain channel, on the basis of either established conventions or frequency of use, then the response to the signal will be slower if it appears in an unexpected channel. If people have just responded to a cue in one modality, they tend to be slower to respond to a subsequent cue in a different modality (Spence and Driver 1997). Environmental conditions also affect the feasibility or effectiveness of using a certain modality. For example, high levels of ambient noise may make it impossible for the mariner to use the auditory channel and thus require a switch to a different modality that would otherwise be less desirable.
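The redundancy, substitution, and adaptive-modality ideas discussed above can be illustrated with a small decision rule. This is a hypothetical sketch, not a proposed AIS specification: the names `Alert`, `Context`, and `select_modalities`, the 85 dB noise limit, and the rule that critical alerts always add a tactile cue are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Modality(Enum):
    VISUAL = "visual"
    AUDITORY = "auditory"
    TACTILE = "tactile"


@dataclass
class Context:
    # Hypothetical bridge conditions; a real system would obtain these from
    # sensors or operator settings.
    ambient_noise_db: float
    eyes_on_traffic: bool  # mariner is visually monitoring surrounding vessels


@dataclass
class Alert:
    text: str
    critical: bool


# Placeholder threshold chosen for illustration, not a validated value.
NOISE_LIMIT_DB = 85.0


def select_modalities(alert: Alert, ctx: Context) -> list[Modality]:
    """Return the output channels for an alert (illustrative rules only)."""
    auditory_ok = ctx.ambient_noise_db < NOISE_LIMIT_DB
    channels = [Modality.VISUAL]  # the display always shows the alert
    if ctx.eyes_on_traffic or alert.critical:
        # Visual attention is elsewhere or the event is safety-critical:
        # add a non-visual channel, substituting touch when noise masks sound.
        channels.append(Modality.AUDITORY if auditory_ok else Modality.TACTILE)
    if alert.critical and Modality.TACTILE not in channels:
        # Redundancy: critical alerts always include a tactile cue as well.
        channels.append(Modality.TACTILE)
    return channels


noisy_bridge = Context(ambient_noise_db=95.0, eyes_on_traffic=True)
print(select_modalities(Alert("Possible collision risk", critical=True), noisy_bridge))
```

In a noisy wheelhouse the sketch drops the auditory channel and falls back on a tactile cue, which mirrors the substitution function; in quiet conditions a critical alert is presented on all three channels, which mirrors redundancy. Whether such rules would help or hinder mariners, given modality expectation and shifting effects, is exactly what would need to be tested.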

Human factors design principles may help define useful AIS displays and controls; however, no research has addressed specific design parameters for AIS. Likewise, multimodal display alternatives seem promising, but research is needed to verify their effectiveness in conveying AIS information.

EVALUATION

Heuristic Evaluation of AIS Interface

Heuristic evaluation, first proposed by Nielsen and Molich (1990), is a low-cost usability testing method for the initial evaluation of human–machine interfaces. The goal of heuristic evaluation is to identify problems in the early stages of design of a system or interface so that they can be attended to as part of an iterative design process. Heuristic evaluation involves having a small set of evaluators examine the interface and judge its compliance with recognized usability principles (the “heuristics”). Each evaluator first inspects the interface independently. Once all evaluations have been completed, the evaluators communicate and aggregate their findings. Heuristic evaluation does not provide a systematic way to generate fixes to the observed problems. However, because heuristic evaluation aims at explaining each observed usability problem with reference to established usability principles, many usability problems have fairly obvious fixes as soon as they have been identified.
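The aggregation step can be sketched as merging independently produced problem lists. The sketch assumes each evaluator reports problems as short identifiers paired with the heuristic each one violates; the function name `aggregate_findings`, the problem identifiers, and the heuristic labels are hypothetical placeholders, not findings from an actual AIS evaluation.

```python
from collections import defaultdict


def aggregate_findings(reports: dict[str, list[tuple[str, str]]]) -> dict[str, dict]:
    """Merge independent evaluator reports into a single problem list.

    reports maps evaluator name -> list of (problem_id, violated_heuristic).
    Returns problem_id -> {"evaluators": set of names, "heuristics": set of labels}.
    """
    merged: dict[str, dict] = defaultdict(lambda: {"evaluators": set(), "heuristics": set()})
    for evaluator, findings in reports.items():
        for problem_id, heuristic in findings:
            merged[problem_id]["evaluators"].add(evaluator)
            merged[problem_id]["heuristics"].add(heuristic)
    return dict(merged)


# Hypothetical reports from three evaluators who inspected the interface separately.
reports = {
    "A": [("unlabeled-target-icon", "visibility of system status"),
          ("modal-alarm-dialog", "user control and freedom")],
    "B": [("unlabeled-target-icon", "recognition rather than recall")],
    "C": [("inconsistent-units", "consistency and standards")],
}

merged = aggregate_findings(reports)
for problem, info in sorted(merged.items()):
    print(problem, len(info["evaluators"]), sorted(info["heuristics"]))
```

Keeping the violated heuristics attached to each merged problem preserves the explanation that, as noted above, often points directly to a fix.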

A typical human–computer interface expert will identify only about a third of the problems with a particular interface using this technique. Another expert, working independently, will tend to discover a different set of problems. For this reason, it is important that two to four experts evaluate the system independently. Heuristic evaluation tends to catch common interface design errors but may neglect more severe problems associated with system functionality. For this reason, usability tests are needed to evaluate whether the system is actually useful.
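The benefit of adding evaluators can be made concrete under a simplifying assumption that the text does not state: every evaluator independently finds any given problem with the same probability λ (about 1/3 here). A problem is then missed only if all n evaluators miss it, so the expected share of problems found is 1 − (1 − λ)^n. Real evaluators differ in skill and overlap in what they find, so these figures are illustrative rather than predictive.

```python
def expected_coverage(detection_rate: float, evaluators: int) -> float:
    """Expected fraction of problems found by at least one evaluator.

    Assumes every evaluator independently detects each problem with the
    same probability (a simplification; real detection rates vary by
    evaluator and by problem).
    """
    if not 0.0 <= detection_rate <= 1.0:
        raise ValueError("detection_rate must be between 0 and 1")
    if evaluators < 0:
        raise ValueError("evaluators must be non-negative")
    # A problem is missed only if all evaluators miss it.
    return 1.0 - (1.0 - detection_rate) ** evaluators


for n in range(1, 5):
    print(f"{n} evaluator(s): {expected_coverage(1 / 3, n):.0%}")
```

Under this model one evaluator finds about 33% of the problems, two about 56%, three about 70%, and four about 80%, which is consistent with the recommendation to use several independent evaluators.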

Heuristic evaluation relies on design principles that tend to be formulated in a context-independent manner. Thus, while it is important to ensure that a new system interface meets those general guidelines and common practices for human–computer interaction, some problems cannot be uncovered without examining device use in context (Woods et al. 1994). As suggested by Norman (1991, 1): “Clumsiness is not really in the technology; clumsiness arises in how the technology is used relative to the context of demands and resources and agents and other tools.” Thus, it is critical to understand that heuristic evaluation is a necessary but not a sufficient first step in the evaluation of any new system.

Usability Tests and Controlled Experiments

Although heuristic evaluations can identify many human interface design problems, testing and experimentation are required to understand how people actually use the system. This is particularly true with AIS because it has the potential to substantially change the operators’ tasks in ways that cannot be predicted. In addition, relatively little research has addressed AIS interface design. Usability testing has become a standard part of the design process for many major software companies, and the safety-critical nature of AIS makes it important for usability testing to be a part of AIS design.

Operational Test and Evaluation

Usability testing typically involves relatively few people using relatively few functions in a controlled environment. These limits mean that important design flaws may go unnoticed until the system is deployed on actual ships. For this reason, operational test and evaluation is a critical element of the design and evaluation process. Operational test and evaluation places the AIS interface in an actual operational environment to assess how it supports the operator in the full range of conditions that might be encountered. The committee did not identify many examples of operational test programs for AIS interfaces; thus, such operational test and evaluation programs are needed.

ENSURING GOOD INTERFACE DESIGN: DESIGN, PROCESS, AND PERFORMANCE STANDARDS

Good interface design can be guided by three general types of standards: design, process, and performance. Design standards specify the range or value of design parameters. These might take the form of very specific guidance concerning the color and size of display elements or more general guidelines, such as the 13 human factors design principles described above. Although design standards are attractive because they can specify equipment precisely, they can also be vague and conflicting, which could lead to poor designs (Woods 1992). Adherence to design standards does not guarantee a good design.

Process standards define the required design and evaluation process but do not define any of the features or characteristics of the device. For AIS interface design, process standards might mandate a process that begins with a task or work domain analysis, involves the application of human factors guidelines during design, and culminates in an operational evaluation.

Performance standards define the required efficiency of the human–AIS interface and do not specify the interface features or the design process. Performance standards require a comprehensive test and evaluation process that evaluates how AIS supports the operator in a variety of situations. Performance standards can be complex and costly to administer and may not guarantee a good design because it is impossible to test all possible use scenarios. No one type of standard will guarantee an acceptable AIS interface. A combination of design, process, and performance standards may be necessary to promote effective AIS displays and controls.
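Why exhaustive scenario testing is impractical can be illustrated with simple counting. The factors and levels below are hypothetical placeholders chosen only for illustration, not a proposed AIS test matrix; the number of distinct test conditions is the product of the number of levels of each factor.

```python
from itertools import product
from math import prod

# Hypothetical scenario factors for an AIS performance test (illustrative only).
factors = {
    "visibility": ["clear", "haze", "fog", "night"],
    "traffic_density": ["light", "moderate", "heavy"],
    "waterway": ["open sea", "coastal", "harbor approach", "river"],
    "ambient_noise": ["low", "high"],
    "ais_data_quality": ["complete", "missing fields", "erroneous position"],
    "crew_workload": ["low", "high"],
}

# The number of distinct combinations is the product of the level counts.
n_scenarios = prod(len(levels) for levels in factors.values())
print(f"{n_scenarios} combinations")

# Enumerating every combination confirms the count; each is a separate test condition.
scenarios = list(product(*factors.values()))
assert len(scenarios) == n_scenarios
```

Six coarse factors with two to four levels each already yield 576 conditions, before accounting for differences among operators or the order in which events unfold, which is consistent with the conclusion that performance testing alone cannot guarantee a good design.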

SUMMARY

Human factors considerations of AIS span a broad range that includes standards development, operational testing, training and certification, and research and development. The rapid pace of navigation technology development and the limits of traditional design standards make it likely that process and performance standards could be useful mechanisms to address the human factors considerations of AIS display development. Performance standards require operational test and evaluation. These evaluations provide useful information that can help refine process and design standards. Too frequently, system design focuses on the physical system and its operation and fails to consider training and certification programs as part of the overall system design. Training and certification can have an important effect on overall system performance and should be considered with the same care as the development of display icons and color schemes.

Shipboard navigation and communication technology is changing quickly. In addition, there are many different operating environments, each with unique requirements for the AIS interface. More important, AIS may be used in a variety of novel ways that cannot be anticipated until mariners start using it. For these reasons, it is critical to remain flexible and not to mandate a single interface standard. At the same time, the success of AIS depends on developing interfaces that are compatible with the capabilities of the operator and the demands of the operator’s tasks. These factors all argue for an ongoing cycle of understanding the user, designing, and evaluating that continues after the initial deployment of AIS. A combination of design standards, process standards, and performance standards is needed to ensure adequate interface design without interfering with the ability of designers to create effective AIS interfaces in the context of rapidly changing technology. These standards should evolve as the mariners’ use of AIS changes over time. Currently, the effect of AIS on the mariner is not well understood. General guidelines, such as the 13 heuristics described above, can help guide design, but research into the following issues is needed:

-

Design, process, and performance standards for the human factors considerations of AIS;

-

The potential benefits of multimodal interfaces to support mariners’ attention management;

-

How technology development and trends in other fields, such as aviation, might influence AIS design; and

-

How interface design can help address the trade-off between information requirements and the associated cost of complex shipboard displays of AIS information.

REFERENCES

Abbreviations

DATE Design, Automation, and Test in Europe

ISO International Organization for Standardization

NASA National Aeronautics and Space Administration

NTSB National Transportation Safety Board

Bainbridge, L. 1983. Ironies of Automation. Automatica, Vol. 19, No. 6, pp. 775–779.

Barrass, S., and G. Kramer. 1999. Using Sonification. Multimedia Systems, Vol. 7, No. 1, pp. 23–31.

Borgatti, S. P., M. G. Everett, and L. C. Freeman. 1992. UCINET IV Version 1.00. Analytic Technologies, Columbia.

Brehmer, B., and R. Allard. 1991. Dynamic Decision Making: The Effects of Task Complexity and Feedback Delay. In Distributed Decision Making: Cognitive Models for Cooperative Work (J. Rasmussen, B. Brehmer, and J. Leplat, eds.), John Wiley and Sons, New York, pp. 319–334.

Brewster, S. A. 1997. Using Non-Speech Sound to Overcome Information Overload. Displays, Vol. 17, Nos. 3–4, pp. 179–189.

Brewster, S. A. 1998. The Design of Sonically-Enhanced Widgets. Interacting with Computers, Vol. 11, No. 2, pp. 211–235.

Brewster, S. A., and M. G. Crease. 1999. Correcting Menu Usability Problems with Sound. Behaviour and Information Technology, Vol. 18, No. 3, pp. 165–177.

Brown, C. 1988. Human–Computer Interface Design Guidelines. Ablex Publishing, Norwood, N.J.

Carroll, J. M., ed. 1995. Scenario-Based Design: Envisioning Work and Technology in System Development. John Wiley and Sons, New York.

Cook, R. I., D. D. Woods, and M. B. Howie. 1990a. The Natural History of Introducing New Information Technology into a High-Risk Environment. Presented at the Human Factors Society 34th Annual Meeting, Orlando, Fla.

Cook, R. I., D. D. Woods, E. McColligan, and M. B. Howie. 1990b. Cognitive Consequences of “Clumsy” Automation on High Workload, High Consequence Human Performance. Presented at the Space Operations, Applications and Research Symposium, NASA Johnson Space Center.

DATE. 2002. 2002 Design, Automation, and Test in Europe Conference and Exposition. www.computer.org/cspress/CATALOG/pr01471.htm.

Dzindolet, M. T., L. G. Pierce, H. P. Beck, and L. A. Dawe. 2002. The Perceived Utility of Human and Automated Aids in a Visual Detection Task. Human Factors, Vol. 44, No. 1, pp. 79–94.

Grabowski, M. R., and S. D. Sanborn. 2001. Evaluation of Embedded Intelligent Real-Time Systems. Decision Sciences, Vol. 32, No. 1, pp. 95–123.

Guerlain, S., and P. Bullemer. 1996. User-Initiated Notification: A Concept for Aiding the Monitoring Activities of Process Control Operators. Proc., 1996 Annual Meeting, Human Factors and Ergonomics Society, Santa Monica, Calif.

Hellier, E. J., J. Edworthy, and I. Dennis. 1993. Improving Auditory Warning Design: Quantifying and Predicting the Effects of Different Warning Parameters on Perceived Urgency. Human Factors, Vol. 35, No. 4, pp. 693–706.

Helmreich, R. L., and H. C. Foushee. 1993. Why Crew Resource Management? Empirical and Theoretical Bases of Human Factors Training in Aviation. In Cockpit Resource Management (E. L. Wiener, B. G. Kanki, and R. L. Helmreich, eds.), Academic Press, San Diego, Calif., pp. 3–45.