Are All Patent Examiners Equal? Examiners, Patent Characteristics, and Litigation Outcomes1

Iain M. Cockburn

Boston University and NBER

Samuel Kortum

University of Minnesota and NBER

Scott Stern

Northwestern University, Brookings Institution, and NBER

ABSTRACT

We conducted an empirical investigation, both qualitative and quantitative, on the role of patent examiner characteristics in the allocation of intellectual property rights. Building on insights gained from interviewing administrators and patent examiners at the U.S. Patent and Trademark Office (USPTO), we collected and analyzed a novel data set of patent examiners and patent litigation outcomes. This data set is based on 182 patents for which the Court of Appeals for the Federal Circuit (CAFC) ruled on validity between 1997 and 2000. For each patent, we identified a USPTO primary examiner and collected historical statistics derived from the examiner’s entire patent examination history. These data were used to conduct an exploratory investigation of the connection between the patent examination process and the strength of ensuing patent rights. Our main findings are as follows: (i) Patent examiners and the patent examination process are not homogeneous. There is substantial variation in observable characteristics of patent examiners, such as their tenure at the USPTO, the number of patents they have examined, and the degree to which the patents that they examine are later cited by other patents. (ii) There is no evidence in our data set that examiner experience or workload at the time a patent is issued affects the probability that the CAFC will find a patent invalid. (iii) Examiners whose patents tend to be more frequently cited tend to have a higher probability of a CAFC invalidity ruling. Although we interpret these results cautiously, our findings suggest that all patent examiners are not equal and that one of the roles of the CAFC is to limit the impact of discretion and specialization on the part of patent examiners.

INTRODUCTION

Recent years have seen a worldwide surge in interest in intellectual property rights, particularly patents, in academia, in policy circles, and in the business community. This heightened level of interest has produced a substantial body of research in economics ranging from analyses of decisions to use patents rather than alternative means of protecting intellectual property (Cohen et al., 2000) to studies of the ways in which patents are used and enforced once granted (see, for example, Hall and Ziedonis, 2001; Lanjouw and Schankerman, 2001; Lanjouw and Lerner, 2001). However, little systematic attention has been paid to the process of how patent rights are created.

Indeed, only recently have researchers begun to develop a systematic understanding of the differences in intellectual property regimes across countries and over time (Lerner, 2002). Moreover, except for some preliminary aggregate statistics (Griliches, 1984; 1990), there are no published studies of the empirical determinants of patent examiner productivity, or of linkages between characteristics of patent examiners and the subsequent performance of the patent rights that they issue.2 This chapter offers a preliminary evaluation of the role that some aspects of the examination process may have in determining the allocation of patent rights, in particular the consequences of specialization of examiners in specific technologies and their exercise of discretion in examining patent applications.

Filling in this gap in our knowledge may yield a number of benefits. First, and perhaps most importantly, it is difficult to assess the likely impact of changes in the funding or operation of the U.S. Patent and Trademark Office (USPTO) without some understanding of the “USPTO production function.” For example, at various points in the past there have been shifts in the resources available to the USPTO as well as in the incentives and objectives provided to examiners, recently focused on reducing the time taken between initial filing of a patent application and final issuance. At the same time, court rulings and revisions in USPTO practice have broadened intellectual property protection into new areas, such as genomics and business methods, where the novelty and obviousness of inventions and the scope of awarded claims may be difficult to assess. These developments raise several important policy concerns. How do the structure and process of patent examination impact the allocation of intellectual property rights? How might changes in the structure and process of examination, from the provision of new incentives to the establishment of new examination procedures, impact patent application and litigation outcomes?

Our analysis has both qualitative and quantitative components. In the first part of the chapter, we review our qualitative investigation, in which we developed an informal understanding of the process of patent examination and investigated potential areas for differences among patent examiners to impact policy-relevant measures of the performance of the patent system. The key insight from our qualitative analysis is that “there may be as many patent offices as patent examiners.” On the basis of this insight, we hypothesize that there may be substantial—and quantifiable—heterogeneity among examiners and that this heterogeneity may affect the outcome of the examination process. In the remainder of the chapter we develop some exploratory tests of this hypothesis.

To perform our quantitative analysis we constructed a novel data set linking USPTO “front page” information for issued patents with data based on the U.S. Court of Appeals for the Federal Circuit (CAFC) record between 1997 and 2000. We considered a sample of 182 patents: those on which the CAFC issued a ruling on validity during this period. For each patent, we identified the primary and secondary examiners associated with the patent and collected the complete set of patents issued by that examiner during his or her tenure at the USPTO. We then constructed measures based on this examiner-specific patent collection, including the examiner’s experience with examination, workload, and measures based on the citation patterns associated with issued patents.
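The construction of examiner-level measures described above can be sketched in a few lines. This is only an illustration under stated assumptions: the record layout, the sample values, and the function name `examiner_measures` are hypothetical, and the choice to measure history up to the focal patent's issue date is ours, not a description of the procedure actually used for the data set.

```python
from datetime import date
from statistics import mean

# Hypothetical patent records: (patent_id, examiner, issue_date, citations_received)
PATENTS = [
    ("P1", "smith", date(1990, 5, 1), 4),
    ("P2", "smith", date(1992, 3, 10), 10),
    ("P3", "smith", date(1992, 9, 2), 1),
    ("P4", "jones", date(1991, 7, 15), 7),
    ("P5", "jones", date(1992, 1, 20), 3),
]


def examiner_measures(patents, examiner, as_of):
    """Summarize one examiner's issued patents up to and including `as_of`."""
    history = [p for p in patents if p[1] == examiner and p[2] <= as_of]
    if not history:
        return None
    first_issue = min(p[2] for p in history)
    return {
        # Experience: number of patents issued so far.
        "experience_patents": len(history),
        # Experience: years since the examiner's first issued patent.
        "tenure_years": (as_of - first_issue).days / 365.25,
        # Workload proxy: patents issued so far in the same calendar year as `as_of`.
        "workload_same_year": sum(1 for p in history if p[2].year == as_of.year),
        # Citation measure: average citations received per issued patent.
        "mean_citations": mean(p[3] for p in history),
    }


if __name__ == "__main__":
    print(examiner_measures(PATENTS, "smith", date(1992, 9, 2)))
```

Measuring tenure from the first issued patent is a proxy: it understates time at the USPTO by the period spent before the examiner's first allowance.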

Our sample of “CAFC-tested” patents comes with several limitations. First, it is fairly small, giving us relatively little statistical power for testing some of our hypotheses, such as the effect of examiner experience on subsequent judgments of validity. Second, the sample excluded all patent litigation that had been settled before appeal or had not been appealed from the District Court level. It is quite possible that many of the more clear-cut validity questions were resolved below the CAFC level, so that the cases reaching the CAFC are not representative of litigated patents in general. With these caveats in mind, however, our data set does offer a valuable first look at the characteristics of examiners associated with those patents receiving a high level of judicial scrutiny. We hope that follow-up research will be undertaken to examine whether our findings are confirmed using broader samples of court-tested patents.

We present our key findings in several steps. First, we show that patent examiners differ on a number of observable characteristics, including their overall experience at the USPTO (both in terms of years as well as total number of issued patents), their degree of technological specialization, their propensity to cite their own patents, and their propensity to issue patents that are highly cited. Indeed, a significant portion of the overall variance among patents in measures such as the number and pattern of citations received, the number and pattern of citations made, and the approval time can be explained by the identity of the examiner—in the language of econometrics, “examiner fixed effects.” These examiner effects are significant even after controlling for the patent’s technology field and its cohort (i.e., the year the patent was issued).
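To make the idea of “examiner fixed effects” concrete, the sketch below computes the share of variance in a patent characteristic that is attributable to examiner identity after removing technology-field and cohort means. The data and the names `group_means` and `examiner_share` are hypothetical. The two-step demeaning is a simplification that is appropriate only when each examiner works within a single field-cohort cell; a regression with examiner indicators alongside field and cohort controls would be needed otherwise, and no degrees-of-freedom adjustment is made here.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical rows: (examiner, tech_field, issue_year, citations_received)
ROWS = [
    ("a", "mech", 1990, 2), ("a", "mech", 1990, 4),
    ("b", "mech", 1990, 8), ("b", "mech", 1990, 10),
    ("c", "bio", 1991, 20), ("c", "bio", 1991, 22),
    ("d", "bio", 1991, 30), ("d", "bio", 1991, 32),
]


def group_means(keys, values):
    """Return the mean of `values` within each distinct key."""
    buckets = defaultdict(list)
    for key, value in zip(keys, values):
        buckets[key].append(value)
    return {key: mean(vals) for key, vals in buckets.items()}


def examiner_share(rows):
    """Share of within-cell variance explained by examiner means."""
    y = [r[3] for r in rows]
    cells = [(r[1], r[2]) for r in rows]
    examiners = [r[0] for r in rows]

    # Step 1: remove field-by-cohort means (the controls).
    cell_mean = group_means(cells, y)
    resid = [v - cell_mean[c] for v, c in zip(y, cells)]

    # Step 2: compare residual variation around examiner means with the total.
    ex_mean = group_means(examiners, resid)
    total = sum(e * e for e in resid)
    within = sum((e - ex_mean[x]) ** 2 for e, x in zip(resid, examiners))
    return 1 - within / total if total else 0.0


if __name__ == "__main__":
    print(round(examiner_share(ROWS), 3))
```

In this toy sample most of the residual variation lies between examiners rather than within them, which is the pattern the text describes as significant examiner effects.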

We then turn to an examination of whether observable characteristics of our sample of CAFC-tested patents, such as their citation rate or approval time, can be tied to observable characteristics of examiners, such as their experience or the rate at which “their” patents receive citations. Here we find intriguing evidence for the impact of examiners. For example, there is a significant positive relationship between the citations received by a subsequently litigated patent and the “propensity” of its examiner to issue patents that attract a large number of citations. We then tie these relationships to patent validity rulings. Our econometric results provide evidence of a linkage between the patent examination procedures and litigation outcomes. Although the outcome of a test of validity by the CAFC is unrelated to the number of citations received by that particular patent, validity is related to the portion of the citation rate explained by the examiner’s idiosyncratic propensity to issue patents that receive a high level of citations. This examiner-specific citation rate may reflect a number of aspects of the patent examination process, and it may therefore be difficult to attach an unambiguous interpretation to this measure. On the one hand, examiner-specific differences in the propensity of “their” patents to receive future citations may capture differences in the “generosity” of examiners in allowing claims. On the other hand, this variable may capture the impact of examiner specialization, as a consequence of an examiner concentrating on an especially “hot” technology area where patents attract large numbers of future citations. Nonetheless, our empirical findings suggest that USPTO patent examination procedures do allow for significant differences across examiners in the nature and scope of patent rights that are granted. This finding points to an important role for litigation and judicial review in checking the impact of discretion and specialization in the patent examination process.
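The distinction between a patent's own citation count and the part associated with its examiner can be illustrated with a simple split. The sketch below is an assumption-laden stand-in, not the econometric specification used in this chapter: it measures an examiner's “propensity” as the average citations received by that examiner's other patents (a leave-one-out mean, so that the focal patent does not enter its own examiner average) and treats the remainder as the patent-specific part. The data and the name `split_citations` are hypothetical.

```python
from collections import defaultdict

# Hypothetical rows: (patent_id, examiner, citations_received)
ROWS = [
    ("P1", "a", 10), ("P2", "a", 6), ("P3", "a", 2),
    ("P4", "b", 1), ("P5", "b", 3),
]


def split_citations(rows):
    """Map patent_id -> (examiner propensity, patent-specific remainder)."""
    totals = defaultdict(int)
    counts = defaultdict(int)
    for _, examiner, cites in rows:
        totals[examiner] += cites
        counts[examiner] += 1

    result = {}
    for patent_id, examiner, cites in rows:
        if counts[examiner] < 2:
            continue  # no other patents from which to estimate a propensity
        # Mean citations of the examiner's other patents.
        propensity = (totals[examiner] - cites) / (counts[examiner] - 1)
        result[patent_id] = (propensity, cites - propensity)
    return result


if __name__ == "__main__":
    for patent_id, parts in split_citations(ROWS).items():
        print(patent_id, parts)
```

A fuller treatment would also adjust for technology field and cohort before averaging, since raw citation counts differ systematically across both.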

The remainder of the chapter is organized as follows. In the next section, we review our qualitative data gathering and the evidence that motivates our key testable hypotheses, which we state in the third section. The fourth section describes the novel data set we have constructed, and the fifth section reviews the results. A final section discusses our findings and identifies areas for future empirical research.

THE PATENT EXAMINATION PROCESS

Methodology

This section reviews the initial stage of our research, a qualitative investigative phase in which we sought to understand the process of patent examination and the potential role of patent examiner characteristics in that process. This type of investigation is precisely what has been lacking from much academic and policy discussion of the impact of patent office practices, procedures, and personnel on the performance of the intellectual property rights system. Although practitioners and USPTO personnel are intimately acquainted with these procedures, there has been little attempt to identify which aspects of the examination process can be linked through rigorous empirical analysis to the key policy challenges facing USPTO.

Overall, our qualitative research phase included interviews with approximately 20 current or former patent examiners and an equal number of patent attorneys with considerable experience in patent prosecution. This phase involved three distinct stages. First, we informally interviewed former patent examiners and patent attorneys outside the USPTO to develop a basic grounding in the process and procedures of the USPTO and to evaluate some of our initial hypotheses on the impact of patent examiner characteristics and USPTO practice on the allocation of intellectual property rights. In the second stage, we developed a proposal based on this working knowledge to undertake systematic interviews within the USPTO, and with the assistance of the National Academies’ STEP Board, we met with senior USPTO managers to discuss administering a survey linking detailed information about examiner history with information that could be gleaned from patent statistics about differences among patent examiners. We were unable to obtain approval to distribute a systematic survey of our own design to a broad cross section of current and former examiners, but USPTO management generously allowed us to conduct informal interviews and question-and-answer sessions during several visits with a small number of examiners, mostly those in a supervisory role. These conversations were very helpful in developing more subtle, precise, and econometrically testable hypotheses. In the third stage of qualitative research, we confirmed the viability of our hypotheses with individuals external to the USPTO.

The Examination Process

Here we describe the patent examination process in general terms, focusing on the aspects for which we identified potential sources of heterogeneity in examination practice. The USPTO is one of the earliest and among the most visible agencies of the federal government, receiving more certified mail per day than any other single organization in the world. Located in a single campus of connected buildings, the USPTO is staffed by over 3,000 patent examiners and has more than 6,000 total full-time equivalent employees. In recent years the examiner corps has been responsible for over 160,000 patent approvals per year. The federal government raises nearly $1 billion in revenue per year from the fees and other revenue streams associated with the USPTO.

The work flow and procedures associated with patent approval are quite systematic and well-determined.3 After arriving at a central receiving office, and passing basic checks to qualify for a filing date, patent applications are sorted by a specialized classification branch4 that allocates them to one of 235 “Art Units,” each a group of examiners who examine closely related technology and constitute an administrative unit. Within the Art Unit, a “Supervisory Patent Examiner” (a senior examiner with administrative responsibilities) looks at the technology claimed in the application and assigns it to a specific examiner. Once the patent is allocated to a given examiner, that examiner will, in most cases, have continuing responsibility for examination of the case until it is disposed of through rejection, allowance, or discontinuation. The examination process therefore typically involves an interaction between a single examiner and the attorneys of the inventor or assignee. Although the stages associated with this process are relatively structured (and exhaustively documented in the Manual of Patent Examining Procedure), they leave substantial discretion to the examiner in how to deal with a particular application.

The examination of an application begins with a review of legal formalities and requirements and an analysis of the claims to determine what the claimed invention actually is. The examiner also reads the description of the invention (part of the “specification”) to ensure that disclosure requirements are met. The next step is a search of prior art to determine whether the claimed invention is anticipated by prior patents or nonpatent references and whether the claimed invention is obvious in view of the prior art. There is considerable scope for heterogeneity in this search procedure. The prior art search typically begins with a review of existing U.S. patents in relevant technology classes and subclasses, either through computerized tools or by hand examination of hard copy stacks of issued patents, and may then proceed to a word search of foreign patent documents, scientific and technical journals, or other databases and indexes. USPTO’s Scientific and Technical Information Center maintains extensive collections of reference materials. Word searches typically require significant skill and time to conduct effectively.

The applicant may also include significant amounts of material documenting prior art with the application. The extent to which examiners review this nonpatent material may be a function of the nature of the technology, the maturity of the field, and the ease with which it can be searched. For example, in science-intensive fields like biotechnology where much of the relevant prior art is in the form of research articles published in the scientific literature and indexed by services such as Medline, examiners may rely extensively on nonpatent materials. In very young technologies, or in areas where the USPTO has just begun to grant patents, there may be very limited patent prior art. In more mature technologies examiners may have only a moderate interest in nonpatent materials and a limited ability to easily or effectively search them. Although the scope of patent examination prior art searches has been criticized, our interviews of USPTO personnel suggest that senior USPTO management are keenly aware of external critiques of the examination process and that a variety of initiatives have been set in motion to address some of these limitations.

Once relevant prior art has been identified, the examiner obtains and reads relevant documents. Again, different examiners and different Art Units may use substantially different examination technologies. For example, although many of the mechanical Art Units have historically relied on the “shoes” (the storage bins for hard copy patent documents), and may search for prior art primarily by viewing drawings, a typical search in the life sciences can involve detailed algorithmic searches by computer to evaluate long genetic sequences and review of tens or hundreds of research articles and other references. Some examiners may develop and keep close to hand their own specialized collections of prior art to facilitate searching. Indeed, patent examiners identify and frequently refer to “favorite” examples of prior art that usefully describe (“teach”) the technology area and the bounds of prior art in a way that facilitates the examination of a wide range of subsequent applications.5

After the specification is reviewed to ensure that it provides an adequate “enabling disclosure” and an appropriate wording of claims, the initial examination is complete. The examiner then arrives at a determination of whether or not the claimed invention is patentable and composes a “first action” letter to the applicant (or, normally, the applicant’s attorney) that accepts (“allows”), or rejects, the claims. Some applications may be allowed in their entirety upon first examination. More commonly, some or all of the claims are rejected as being anticipated by the prior art, obvious, not adequately enabled, or lacking in utility, and the examiner will write a detailed analysis of the basis for rejection. The applicant then has a fixed length of time to respond by amending the claims and/or supplying additional evidence or argument. After receiving and evaluating this response, the examiner can then “allow” the application if it is satisfactory (the most common stage in the process at which an application proceeds on to final issuance of a patent), negotiate minor changes with the attorney, or write a “second action” letter, which maintains some or all of the initial rejections. In this letter the examiner is encouraged to point out what might be done to overcome these rejections. Although at this stage the applicant’s ability to further amend the application is formally somewhat restricted, in effect, additional rounds of negotiation between the examiner and applicant may ensue. The applicant also has the opportunity to appeal decisions for re-examination or evaluation within an internal USPTO administrative proceeding. However, such actions are quite rare; most applications are allowed (or not) on the second or third action letter.

USPTO operates various internal systems to ensure “quality control” through auditing, reviewing, and checking examiners’ work. This includes the collection and analysis of detailed statistics about various measures of examiner work product flow. For example, Supervisory Patent Examiners, as well as their supervisors, routinely evaluate data relating to the distribution of times to action and the number of actions required before “disposal” of an application through allowance, abandonment, or appeal. These measurements are one of the many tools that USPTO uses to refine the internal management of the examination process.

It is also useful to note that examiners are allocated fixed amounts of time for completing the initial examination of the application and for disposal of the application. However, examiners are free to average these time allotments over their caseload. Moreover, there are differences in these time allocations across technology groups, and there also have been changes over time. Although we do not explore this variation in the current study, exploiting these changes in USPTO practice across technology groups and over time could give some leverage for understanding the relationship between time constraints and patent quality.

Examiner Training and Specialization

Variation among examiners in their conduct of the examination process may arise from several sources. We focus here on two possibilities suggested by our interviews. First, at a given point in time, or for a particular patent cohort, examiners necessarily vary substantially in their experience. Experience may affect the quality of patent examination, and this has been a source of concern in recent years as the rate of hiring into the USPTO has increased, particularly into art areas with little in-house expertise. On the other hand, our qualitative research greatly emphasized the role of the systematic apprenticeship process within the USPTO, which is likely to reduce errors made by junior examiners. For the first several years of their career, examiners are denoted as Secondary Examiners and their work is routinely reviewed by a more senior Primary Examiner. Over time, the Secondary Examiner takes greater control over his/her caseload and the Primary Examiner focuses on teaching more subtle lessons about the practice of dealing with applicants and their attorneys and instilling the delicate “not too much, not too little” balance that the USPTO is trying to achieve in the patent examination process.

Second, as alluded to above, Art Units may vary substantially in their organization and functioning. In the most traditional group structure, the allocation of work promotes a maximal amount of specialization by individual examiners. For example, in many of the mechanical Art Units, an individual examiner may be responsible for nearly all of the applications within specific patent classes or subclasses. In other Art Units, however, the approach is more team-oriented. In these groups, there is less technological specialization (multiple subclasses are shared by multiple examiners) and there is likely a higher degree of discussion and knowledge sharing among examiners. In the more specialized organization, there are far fewer checks and balances on the practices of a given examiner. When the examiner has all of the relevant technological information, the cost for an auditor to effectively review his/her work becomes very high. By contrast, in less specialized environments, there are likely to be greater opportunities for monitoring, although, obviously, decreased specialization may reduce examiners’ level of expertise in any specific area.

In part because of specialization, primary examiners maintain substantial discretion in their approach to individual applications. Our qualitative interviews suggest that this latitude may result in variation among examiners in how they balance multiple USPTO objectives. Consider the impact of the Clinton administration program (headed up by Vice President Gore) to establish the USPTO as a “Performance-Based Organization” (National Partnership for Reinventing Government, 2000).6 Among other goals, this initiative encouraged examiners to treat applicants as customers and to cooperate with applicants’ attorneys to define and allow (legitimate) claims. Although not changing the formal standards for claims assessment, this program encouraged examiners to use their discretion to increase the applicants’ ability to receive at least some protection for inventions. In our qualitative interviews, there were significant differences among examiners in how they claimed to respond to this new “customer” orientation. Although some acknowledged that it changed their approach to interactions with applicants’ attorneys, others claimed that it had “made no difference” for the day-to-day “balancing act” associated with allowing claims. This heterogeneous response to a single well-defined change in USPTO policy supports our hypothesis that examiners may vary in their approach to the examination process.

Qualitative Findings

Our qualitative investigation of the patent examination process both generated a number of insights central to our hypothesis development and raised some flags about potential hazards for empirical research in this area.

The first key finding from the qualitative evaluation of patent examination can be summarized in the phrase of one of our informants: “There may be as many patent offices as there are patent examiners.” In other words, although the examination process is relatively structured, and USPTO devotes considerable resources to quality control, substantial discretion is provided to examiners in how they deal with applications, and the extent to which they exercise this discretion can potentially vary substantially across examiners. Several features contribute to this potential for heterogeneity, including the formal emphasis on specialization, variation among Art Units and individual examiners in their approach to searching prior art, the fact that much learning is through an apprenticeship system with only a small number of mentors, and the existence of differences across groups and examiners in the time allocated to specific tasks and examination procedures.

This heterogeneity might manifest itself in several ways. First, there may be substantial variation across examiners in the breadth of patent grants—some examiners may have a propensity to systematically allow a more restrictive or more expansive set of claims. One potential consequence of this use of discretion may be that patents issued by examiners who tend to allow broader claims will impinge on a greater number of follow-on inventions and therefore receive more citations over time. Although prior research has emphasized the degree to which the number of citations received by a patent is an indicator of its underlying inventive significance, it is important to recognize that a given patent’s propensity to receive future citations may also be related to the “generosity” of the examiner in allowing a broad patent, relative to an average examiner’s practice.

Second, examiners differ as a result of specialization. Perhaps the key consequence of the organizational structure of the USPTO is the existence of only a handful of examiners within a narrowly defined technological field at a point in time. Specialization confers several benefits, most notably the development of “deep” human capital in established technology areas. At the same time, specialization can bring its own challenges. By construction, specialization raises the costs of monitoring, because it is difficult to disentangle whether the “practice” of a given examiner reflects the nature of the art under his or her purview or reflects idiosyncratic aspects of that examiner that are independent of the art. For example, examiners may vary in their observed propensity for self-citation. (Self-citation is the practice by which examiners tend to include citations to “their” patents, i.e., patents for which they were the examiner.) A high degree of self-citation may reflect an examiner’s reluctance to search beyond a narrow set of prior art with which he or she is already familiar. But it may equally be driven by the technology area in which the examiner works. Our interviews suggest that a high degree of self-citation is particularly likely for examiners working in technology areas that are highly compartmentalized, with little communication across examiners, and that are highly reliant on hard copy technologies for the prior art search process.

Another impact of specialization may be to reduce the sensitivity of the USPTO to new technology areas. Before the establishment and development of norms for new Art Units, patent applications in a new technology area may be “shoehorned” into existing Art Units. As a result, in the earliest stages of a new technology (a time when the standards of patentability are being established), the examination process depends heavily on the idiosyncratic knowledge base of a small group of examiners with limited expertise in the new technology area. Although the establishment of new Art Units and the development of new standards can address such problems over time, relying on highly specialized examiners in the earliest stages of a new technology area may slow the rate at which USPTO can establish and implement such norms and procedures.

Third, examiners may vary substantially in their effective average “approval time,” the length of time between initial application and the date at which the patent issues. Although a large fraction of the lag between application and approval will, of course, be driven by external forces—the speed at which applicants respond to office actions, for example—differences across Art Units and across examiners in their workload and the type of applications they receive will likely lead to differences in average approval time. It is an interesting question whether this involves a trade-off with other dimensions of quality, specifically the ability to withstand judicial scrutiny.

At the same time that this qualitative analysis formed the basis for our hypotheses concerning how examiners might influence the allocation of patent rights, it also suggested several limitations to any empirical work and some challenges that must be overcome before drawing policy conclusions from it. First, and perhaps most importantly, the analysis highlighted the importance of taking account of variation across technologies and patent cohorts in any empirical analysis. Our investigation suggests that there are large differences across Art Units in examination practice, and these technology effects must be controlled for. In addition, examination practice, resources, and management processes have changed over time, so it is also necessary to control in a detailed way for the cohort in which a particular patent was granted.

Second, we were prompted to be cognizant of how noisy the underlying data generation process is likely to be. Much of the variation in any observable patent characteristic is likely to reflect the nature of the invention, the behavior of the applicant, and other unobserved factors. Our guarded interpretation of the econometric results presented below reflects our recognition that we are investigating rather subtle relationships, in which the impact of examiner effects may be difficult to evaluate in light of the overall noisiness of the data-generating process.

Finally, our qualitative analysis clearly indicated that our econometric analysis should recognize and incorporate the fact that the USPTO has multiple objectives and that there is no single “silver bullet” measure of performance, particularly among easily available statistics. Although, all else being equal, shorter approval times are socially beneficial (particularly in the era when disclosure did not occur until the patent was issued), speed is not a virtue in and of itself; achieving shorter approval times may require trade-offs with other objectives, such as enforceability. With these caveats in mind, we now turn to a fuller development of testable (though exploratory) hypotheses associated with examiner characteristics.

HYPOTHESIS DEVELOPMENT

Our empirical analysis is organized around two sets of hypotheses, those reflecting the relationship between patent characteristics and examiner characteristics and those reflecting the relationship of patent litigation outcomes to patent and/or examiner characteristics.

The Impact of Examiners on Patent Characteristics

One of the key insights from our qualitative analysis is the potential for heterogeneity across examiners in their discretion and specialization to affect observable outcomes of the examination process. First, as a result of their exercise of discretion, examiners may differ in the average scope of the claims in patents issued under their review. Inventors who receive patent rights with substantial scope will, on average, have been allowed more valuable rights. Identifying the impact of examiner “generosity” is subtle. Patents with broader claims are more likely to constrain the claims granted to future inventors. As a result, beyond their innate inventive importance, patents with broader allowed claims will tend to be more highly cited. Conversely, if all examiners use discretion similarly, and all receive applications with a similar distribution of inventive importance, then the average level of citation should not vary across examiners.7

However, examiner specialization may result in differences across examiners in terms of the distribution of inventive performance under their review. For example, some examiners may work in particularly “hot” technology areas where there is a rapid rate of progress; as a result, “their” patents receive large numbers of citations simply because of the larger size of the future “risk set,” i.e., the number of patents that could potentially cite that examiner’s patents, regardless of their breadth. Thus specialization of examiners may result in variation across examiners in the “average” number of citations received by patents issued by each examiner. Moreover, although the effects of specialization can be conditioned by statistical controls for technology area, it is possible that this specialization effect operates in a more nuanced way or at a level of detail that is not easy for us to control for.

Of course, a number of additional factors determine the number of citations received by a patent or even the average level of citations received by patents associated with a patent examiner, including the particular type of technology and the amount of time that has passed since the application. However, after controlling for technology and cohort effects, variation in the exercise of discretion and specialization may still lead to different citation levels, yielding our first hypothesis:8

H1: Even after controlling for broad technology class, patent examiners will vary in terms of the average level of citations received by the patents they examine.

In addition to this variation among examiners in their discretion and specialization, there is likely to be variation among examiners in their ability to use search technologies that identify the broadest range of possible prior art. Furthermore, differences in the organization of different Art Units will likely result in different levels of communication and monitoring among examiners and between examiners and their supervisors. As discussed above, one of the consequences of this heterogeneity among examiners is that some examiners may tend toward a more autarkic approach to examination, principally relying on their past experience examining in a particular technological field, whereas others will draw on a wider range of resources. This discussion motivates our second hypothesis:

H2: Even after controlling for technology area, examiners will vary in their level of self-citation. Self-citation should be decreasing with the adoption of more advanced prior art search procedures and increasing with the technological specialization of the examiner.

Finally, examiners will vary in the workload they are given and in the allocations of time for particular tasks associated with the examination process. As several examiners related to us, however, this variation may be in place to allow examiners to more effectively achieve other objectives of the examination process, such as precision or effective communication with the patent bar community in their technological specialty. Thus we offer a third hypothesis about the role of the approval time:

H3: Examiners will vary in their average approval time, above and beyond what can be attributed to the technology of the patents examined. Slower approval will be positively correlated with other dimensions of performance.

The Impact of Examiners on Patent Litigation Outcomes

Ultimately, we are interested in tying examiner characteristics to more objective measures of the performance of the examination process. We organize this portion of the analysis around patent litigation outcomes. Specifically, we are interested in the possibility that the type of heterogeneity implicit in hypotheses H1, H2, and H3 (as well as other examiner characteristics) will manifest itself in imperfections in the scope of patent rights that are allowed by examiners. As a preliminary foray into this area, we focus on findings of invalidity by the CAFC.9 Although the CAFC is not the “ideal” setting in which to study validity (because “obvious” invalidity cases are resolved through settlement or at the District Court level), CAFC decisions do provide a useful exploratory window into how examiner characteristics vary (and matter for litigation outcomes) for patents receiving a very high level of judicial scrutiny. Furthermore, by focusing on invalidity, we develop hypotheses relating to the role that heterogeneity among examiners might play in leading to the “excess” allocation of patent rights (as adjudicated by the CAFC); however, in future work, we hope to explore the converse possibility that this same heterogeneity may also occasionally manifest itself as underprovision.

Perhaps the most obvious potential source of variation among examiners is their overall level of examination experience. In recent years, various commentators have hypothesized that the rapid growth in patent applications and the concomitant rise in the number of examiners have reduced the experience of the average examiner, particularly in technology areas such as business methods, which have only recently begun to receive patent rights. Implicit in this argument is the proposition that less experienced examiners are more likely to inappropriately allow patent rights that should not be granted. Although it is likely true that experience is helpful in the examination process, the procedures of the USPTO explicitly recognize the value of experience through practices such as the division of responsibilities between primary and secondary examiners and the strong culture of internal promotion. There may therefore be competing effects that mitigate the impact of experience on litigation outcomes. However, to be precise about the specific theory that has been put forth, we offer a testable hypothesis about the impact of examiner experience:

H4: The probability of a litigated patent being ruled valid will be increasing with the experience of the examiner.

In addition, hypotheses H1, H2, and H3 offer at least three potential sources of heterogeneity that may be associated with excess allocation of patent rights and therefore with invalidity findings. First, hypothesis H1 states that examiners may vary in the degree to which they exercise discretion and the extent to which they are specialized within technology areas and that this variation should be associated with variation in the level of citations received by their patents. Claims allowed by examiners whose exercise of discretion results in allowance of relatively broad claims or who are specialized in “hot” technology areas undergoing rapid changes (either in terms of the technology or in the underlying norms of patentability) may be more likely to be found invalid by the CAFC. As a result, the probability of validity should be declining with examiners’ average level of citations received. Similarly, to the extent that it may be easier to overturn the validity of patents based on less thorough searches of the prior art, the probability of a ruling of validity may be declining with the self-citation of the examiner. Finally, if there is a trade-off between the speed of approval and the quality of the examination, then the probability of validity will likely be increasing with the approval time of the examiner. This discussion motivates the following hypothesis:

H5: The probability of a litigated patent being ruled valid will be declining with the examiner’s average citations received per patent, declining with the self-citation rate of the examiner, but increasing with the examiner’s average approval time.

It is important to recognize that the relationship between validity and average citations per examiner is subtle, and difficult to interpret, because it measures the combined impact of discretion and specialization (i.e., the two distinct forces leading the average citations per patent to vary among examiners). In our empirical work, we therefore explicitly compare how the relationship between validity and average citations per examiner changes when we include detailed technology class controls. To the extent that including controls for each technology class does not reduce the impact of average citations per examiner, this suggests that the impact of specialization and/or discretion occurs at a relatively fine-grained level within the USPTO. In addition, we emphasize a more general point: Regardless of whether discretion or specialization is the driver of variation among examiners, the judicial system may provide a “check” on patent examination patterns that result from the organization of the USPTO.10

Finally, the richness of patent data allows us to explore the impact of examiner heterogeneity more precisely. When determining validity, the CAFC will, of course, only consider the merits of the patent under review rather than the historical record of a particular examiner. To determine the impact of examiner characteristics on the probability of validity, only that portion of the citations received by the particular patent that are due to the examiner’s overall patterns are relevant. If hypothesis H1 is true, i.e., the number of citations received by the litigated patent is a function of the examiner’s average number of citations per patent, then this relationship allows us to estimate this portion econometrically.11 This reasoning motivates the following hypothesis:

H6: The probability of validity should be declining with the predicted number of citations received by a patent, where the prediction is based on the examiner-specific citation rate.
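The logic behind H6 can be sketched as a two-stage calculation. The Python fragment below is only an illustration under strong simplifying assumptions and is not the estimator used in this chapter: it has a single regressor, no technology, cohort, or assignee controls, a linear probability model in the second stage, and a handful of made-up observations. The names `ols_fit`, `examiner_rate`, `patent_cites`, and `valid` are hypothetical.

```python
def ols_fit(x, y):
    """Return (intercept, slope) of a one-regressor least-squares fit."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Made-up toy data, for illustration only (not drawn from the chapter's sample).
examiner_rate = [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]  # examiner's average cites per patent
patent_cites = [5, 9, 10, 18, 19, 27]              # citations received by the litigated patent
valid = [1, 1, 1, 0, 1, 0]                         # 1 if validity upheld, 0 otherwise

# Stage 1: predict the litigated patent's citations from the examiner-specific rate.
a1, b1 = ols_fit(examiner_rate, patent_cites)
predicted_cites = [a1 + b1 * r for r in examiner_rate]

# Stage 2: relate validity only to the examiner-driven (predicted) part of citations.
a2, b2 = ols_fit(predicted_cites, valid)

print(f"stage 1 slope: {b1:.3f}")
print(f"stage 2 slope: {b2:.3f}")
```

A negative second-stage slope is the sign that H6 predicts. Standard errors taken from a manual two-step procedure like this one are not valid without correction, so the sketch conveys the structure of the argument rather than a basis for inference.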

Together, these hypotheses provide several potential observable consequences of examiner heterogeneity. Consider, for example, the perennial policy issue of patent “disposal” times. By linking approval time to other outcomes (such as validity rulings), these hypotheses offer insight into the trade-offs that may be associated with speeding up the examination process. To empirically test these propositions, we must tie these hypotheses to a specific set of data, which we now describe.

THE DATA

Data for this study were derived from the USPTO’s public access patent databases and from the Lexis-Nexis database of decisions of the CAFC.

We began by searching for CAFC decisions in cases where the validity of a patent was contested. In the years 1997-2000, there were 216 such cases, of which 34 were excluded from further consideration because they involved plant patents or re-examined patents or because other complicating factors were present. For each of the remaining 182 “CAFC-tested” patents, we determined whether the CAFC found the patent to be valid or invalid and on what grounds: novelty, subject matter, obviousness, procedural errors, etc. Note that in many instances the CAFC found the patent invalid for more than one reason. In just over 50 percent of these 182 cases, the patent was found to be invalid. Of these, the CAFC found problems with novelty (Section 102) in 37 percent of cases, with obviousness (Section 103) in 47 percent of cases, and with the specification of the patent (Section 112) in 15 percent of cases. Although examining CAFC-tested cases does bias the sample away from “obvious” validity issues [i.e., cases with little uncertainty about outcome are likely settled before trial at the District Court level or are less likely to be appealed to the CAFC (Waldfogel, 1995)], this sampling does allow us to assess the characteristics of examiners associated with cases receiving a high level of judicial scrutiny.12

Having obtained this list of CAFC-tested patents, we then used it to construct a sample of “CAFC-tested” examiners.13 To do so we identified the 196 individuals listed as either the primary or secondary examiner for each of the 182 CAFC-tested patents.14

For each CAFC-tested examiner, we searched for all patents granted in the period 1976-2000 on which the individual was listed as a primary or secondary examiner. This search was conducted using a fairly generous “wild card” procedure to allow for typographical errors in the source data and variations in the spelling or formatting of names. Results were then carefully screened by hand to ensure that individuals were correctly identified. For example, our procedure would recognize “Merrill, Stephen A.,” “Merril, Stephen,” “Merrill, S.A.,” and “Merrill, Steve” as being the same person, but would exclude “Meril, S.” or “Merrill, Stavros A.” If anything, this process erred on the side of caution, so that we may be slightly undercounting examiners’ output. The initial search returned just over 316,000 candidate patents, from which we excluded about 6 percent misidentified patents to arrive at a base data set of 298,441 patents attributable to the 196 CAFC-tested examiners.15
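The chapter does not spell out the exact “wild card” rule, and the final screening was done by hand. Purely as an illustration, the Python sketch below applies one hypothetical rule that happens to reproduce the examples given above: accept a candidate if its surname is within one character edit of the reference surname and its first given name is either a matching initial or shares the first three letters of the reference given name. The functions `edit_distance`, `parse_name`, and `is_candidate`, and the thresholds they use, are assumptions and not the authors' procedure.

```python
import re


def edit_distance(a, b):
    """Levenshtein distance via the standard dynamic-programming recurrence."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def parse_name(raw):
    """Split 'Surname, Given M.' into a lowercase surname and first given token."""
    surname, _, given = raw.partition(",")
    tokens = re.findall(r"[a-z]+", given.lower())
    return surname.strip().lower(), (tokens[0] if tokens else "")


def is_candidate(raw, ref_surname="merrill", ref_first="stephen"):
    """Hypothetical matching rule; real matches were also screened by hand."""
    surname, first = parse_name(raw)
    if edit_distance(surname, ref_surname) > 1:
        return False  # surname differs by more than one typo
    if not first:
        return False  # no given name or initial to compare
    if len(first) == 1:
        return first == ref_first[0]  # initial only
    return first[:3] == ref_first[:3]  # e.g. "Steve" vs "Stephen"


for name in ["Merrill, Stephen A.", "Merril, Stephen", "Merrill, S.A.",
             "Merrill, Steve", "Meril, S.", "Merrill, Stavros A."]:
    print(name, is_candidate(name))
```

A mechanical rule of this kind only generates candidates. As the text notes, roughly 6 percent of the initial hits were discarded after manual review.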

Using the data set of 298,441 patents we constructed complete histories of each CAFC-tested examiner’s patent output during the sample period, as well as various measures of their productivity, experience with examination, workload, and examining practice. Each of these patents was matched to the NBER Patent Citation Data File (Hall, Jaffe, and Trajtenberg, 2001) to obtain data on each patent’s technology classes, citations made, and citations received, as well as variables computed from these data, which measure the breadth of citations.16

In the empirical analysis that follows, we focus on the primary examiner for each of the 182 CAFC-tested patents. Because the same primary examiner may show up several times in the sample, we actually have 136 CAFC-tested primary examiners. In computing statistics across examiners, we weight examiner characteristics by the number of times that each examiner shows up in our data. Table 1 gives variable definitions, and Table 2A presents descriptive statistics for our linked data sets on CAFC-tested patents, CAFC-tested primary examiners, and patent histories of these examiners.
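The weighting described above amounts to averaging over litigated patents rather than over distinct examiners. A minimal Python sketch, using made-up numbers and the hypothetical helper `appearance_weighted_mean`, shows how the two averages can differ:

```python
def appearance_weighted_mean(values, appearances):
    """Mean of examiner-level values, weighted by how often each examiner appears."""
    total = sum(appearances)
    return sum(v * w for v, w in zip(values, appearances)) / total


# Made-up example: two examiners, one of whom examined three litigated patents.
cites_per_patent = [4.0, 8.0]
appearances = [3, 1]

weighted = appearance_weighted_mean(cites_per_patent, appearances)
unweighted = sum(cites_per_patent) / len(cites_per_patent)
print(weighted, unweighted)
```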

Again, we stress that the set of 182 CAFC-tested patents is a highly selective sample; these patents are not at all representative of the population of all granted patents. Table 2B compares mean values of some key variables for the 182 CAFC-tested patents with those typical of a utility patent applied for in the 1980s.17 On average, the CAFC-tested patents contain more claims, make more citations, receive more citations, and take longer to issue. This is not surprising, because litigants who pursue CAFC review likely perceive a high value for intellectual property over a given technology. As well, given that the litigants have not settled, these patents are likely associated with a higher level of ambiguity than an average patent (perhaps an additional reason for the longer time to approval).

15 Because we have not been able to obtain a definitive matching of examiner ID numbers with issued patents, and have had to work from published data sources, this search misses a small number of patents. We are confident, however, that missing observations are missing at random and therefore do not bias our results.

16 Jaffe, Henderson and Trajtenberg (1998) computed two measures of the breadth of citations across technology classes: “originality,” which captures the extent to which citations made by a patent are spread across technology classes, and “generality,” which captures the extent to which citations received by a patent are spread across technology classes. See Table 1 for definitions.

17 The statistics for a typical patent are based on the tables and figures in Hall, Jaffe, and Trajtenberg (2001).

TABLE 1 Variables and Definitions

| Variable | Definition |
|---|---|
| Validity | |
| Valid | Valid = 1 if patent validity upheld by CAFC; 0 else |
| CAFC Patent Characteristics | |
| Citations Received | No. of Citations to CAFC Patent from grant date through 6/2001 |
| Claims | No. of Distinct Claims for CAFC Patent |
| Approval Time | Patent Issue Date minus Patent Application Date (Days) |
| Generality | Jaffe-Henderson-Trajtenberg “Generality” index |
| Originality | Jaffe-Henderson-Trajtenberg “Originality” index |
| Primary Examiner Characteristics | |
| Experience (no. of patents) | Cumulative Patent Production by Examiner, both primary and secondary (see Figure 1) |
| Examiner Citations | Cumulative Citations to Examiner Patents (through July, 2001) |
| Examiner Cites Per Patent | EXAM CITATIONS divided by EXPERIENCE (NO. OF PATENTS) (see Figure 4) |
| Secondary Experience | Cumulative Patent Production as Secondary Examiner |
| Self-Cite | Share of All Citations to Own Prior Patents (see Figure 5) |
| Examiner Tech. Experience | Number of broad technology classes of patents on which the examiner has experience |
| Examiner Specialization | Herfindahl-type measure of distribution of examiner’s patents across broad technology classes |
| Experience (years) | Cumulative Years Observed as Issuing Examiner (both primary and secondary) |
| 3-Month Volume | Count of Issued Patents in Three Months Immediately Before Issue Date |
| Control Variables | |
| Tech Class Fixed Effects | 6 Distinct Technology Categories Based on Patent Classes (see Figure 6) |
| Technology Subclass Fixed Effects | 35 Distinct Technology Subclasses Based on Patent Subclasses (see Hall-Jaffe-Trajtenberg) |
| Cohort Fixed Effects | 20 Individual Year Dummies Based on CAFC Patent Issue Date |
| Assignee Fixed Effects | 4 Dummies for Type of Assignee (see Figure 8) |

TABLE 2A Means and Standard Deviations

| | Mean | Standard Deviation |
|---|---|---|
| Validity | | |
| Valid | 0.48 | 0.50 |
| CAFC Patent Characteristics | | |
| Citations Received | 16.74 | 21.47 |
| Claims | 20.52 | 26.05 |
| Approval Time | 804.60 | 799.80 |
| Generality | 0.41 | 0.27 |
| Originality | 0.39 | 0.28 |
| Examiner Characteristics | | |
| Experience (no. of patents) | 2180.38 | 1395.65 |
| Examiner Citations | 14201.68 | 12673.34 |
| Examiner Cites Per Patent | 6.32 | 3.49 |
| Secondary Experience | 207.05 | 137.67 |
| Self-Cite | 0.10 | 0.06 |
| Examiner Specialization | 0.75 | 0.20 |
| Experience (years) | 18.67 | 5.67 |
| 3-Month Volume | 41.52 | 35.66 |

TABLE 2B Patent Characteristics: CAFC Sample Compared to “Universe”

| | CAFC Sample (182) | Typical Patent (1980s application year) |
|---|---|---|
| Claims | 20.5 | 9-14 |
| Citations Received | 14.0 | 6-8 |
| Citations Made | 16.7 | 6-8 |
| Originality | 0.36 | 0.3-0.4 |
| Generality | 0.41 | 0.3-0.4 |
| Approval Time (years) | 2.21 | 1.76-2.05 |

Although the CAFC-tested patents are quite selective, there is little reason to believe that the CAFC-tested primary examiners are very different from the population of all examiners.18 On one hand, because of the way we have constructed our sample, the probability of an examiner being in our data set is likely proportional to the examiner’s experience (measured in terms of total patents examined) at the USPTO. Thus, relative to the set of examiners working at the USPTO on any given day, we are undersampling inexperienced examiners. As well, our sample may underrepresent variation in the degree of generosity; patents associated with the least generous examiners are less likely to be subject to an appellate validity claim. As such, our empirical design provides a lower bound on the impact of examiner experience or generosity on patent litigation outcomes. Because examiner patent histories in our data set begin in 1976, the measures of experience are slightly downward biased. About 30 percent of the examiners in our sample first appear in the data set in 1976, some fraction of whom must be assumed to have begun their careers somewhat earlier. Similarly, citations to patents granted before 1976 cannot be evaluated as self-citations (or not) because we do not have information on who the examiner was, and information based on citations received by patents granted in recent years is limited by the truncation of the data set in 2001.

RESULTS

We present our results in several steps. First, we review evidence of the existence of heterogeneity among examiners and show that an important component of the overall variation in commonly used patent statistics can be explained by examiner “fixed effects.” Having established the existence of observable examiner heterogeneity, we then examine the sensitivity of various characteristics of CAFC-tested patents to observable examiner characteristics. We then turn to a discussion of the determinants of patent validity. Consistent with our discussion in the third section, we evaluate a reduced-form model of the sensitivity of validity to examiner characteristics as well as a more nuanced instrumental variables estimation that only allows examiner characteristics to impact validity through their predicted impact on characteristics unique to the CAFC-tested patent.

The Nature of Examiner Heterogeneity

Our analysis begins with a set of figures that display the heterogeneity among examiners along four distinct dimensions: experience, the level of citations received per patent, the degree of self-citation, and the degree of technological specialization in the patents examined. Figure 1 plots Experience (number of patents) across examiners. We see that although the average examiner in our sample has a lifetime experience of over 2,000 patents, a large number are associated with over 4,000 patents, with a few outliers of over 7,000 patents. This distribution is consistent with the substantial variation we see in the examiners’ length of tenure at the USPTO. For example, nearly a third of the CAFC-tested examiners have over 24 years’ experience at the USPTO.19

FIGURE 1 Experience of examiners.

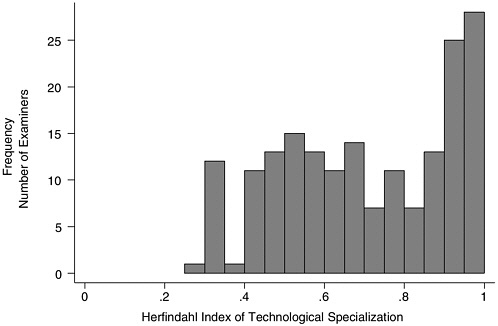

We next turn to an evaluation of the extent to which examiners specialize in particular technology classes over the course of their career. One simple way to measure this specialization is to compute the number of distinct technology classes appearing among the patents examined by a particular examiner. Using six broad technology classes, this measure (Examiner Tech. Experience) is displayed in Figure 2. We see that it is most common to have examined patents in nearly all of the six classes. Yet even if an examiner has dealt with all types of patents, he or she may still be highly specialized within a single technology category with only an occasional patent elsewhere. A more sophisticated approach to deal with this issue is to compute a Herfindahl-type index of the dispersion of an examiner’s patents over technology classes.20 This measure (Examiner Specialization) is plotted in Figure 3. Although some noise is inherent in this measure because of the nature of the technology classification system, its mean level across examiners (0.75) indicates a high average degree of specialization. As Figure 3 indicates, however, there is also considerable variation: Although the modal examiner is highly specialized, with a specialization index near 1, there are still a significant number of examiners with a much greater degree of dispersion of patents across technology classes.
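For readers unfamiliar with Herfindahl-type measures, the sketch below assumes the standard form, the sum of squared shares of an examiner's patents across technology classes, so that an examiner working in a single class scores 1 and an examiner spread evenly over six classes scores 1/6. The function name `specialization_index` and the example counts are hypothetical; the exact definition used here is the one given in the chapter's notes.

```python
from collections import Counter


def specialization_index(tech_classes):
    """Sum of squared class shares for one examiner's patents (Herfindahl-type)."""
    counts = Counter(tech_classes)
    total = sum(counts.values())
    return sum((n / total) ** 2 for n in counts.values())


# Made-up examiner: 90 patents in one broad class, 10 in another.
patents = ["Chemical"] * 90 + ["Mechanical"] * 10
print(round(specialization_index(patents), 2))
```

Under this assumed form, an examiner with occasional patents outside a main field still scores close to 1, which is the distinction the count of distinct classes cannot capture.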

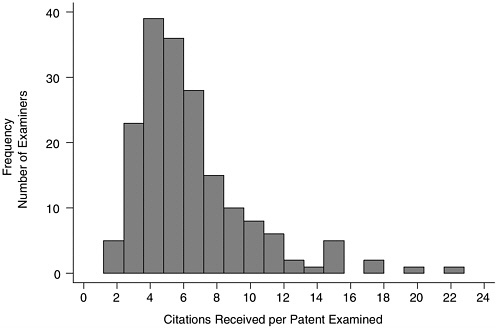

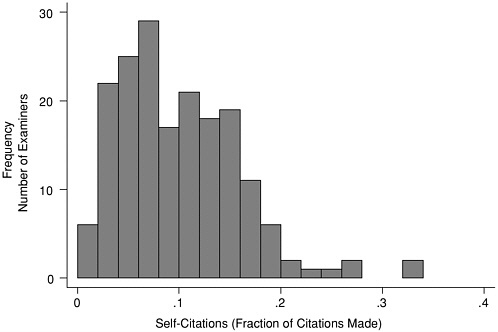

Perhaps more interestingly, there is also substantial variation among examiners in the characteristics of “their” patents. Figure 4 shows the distribution of the average number of citations received overall for all patents issued by each examiner (Examiner Cites Per Patent). The distribution is highly skewed. The coefficient of variation associated with examiner cites received per patent issued is over 0.5, and over 10 percent of examiners have citation rates more than double the average citation rate. Similarly, as shown in Figure 5, although the average self-citation rate (Self-Cite) is relatively low, particularly given the technological specialization of examiners, some examiners have self-citation rates more than three times the sample mean. Another method for understanding the importance of heterogeneity across examiners is to use analysis of variance (ANOVA) to formally test for the presence of examiner effects in several key statistics associated with the examination process. An advantage of this statistical approach is that we can condition on other variables that might explain the observed differences across examiners, such as the technological areas of the patents they examine. Recall that in H1, H2, and H3 we hypothesized that the differences across examiners were not simply a reflection of the technological area of the patents they examined.
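The coefficient of variation cited above can be checked roughly against the Examiner Cites Per Patent row of Table 2A (mean 6.32, standard deviation 3.49). The short Python calculation below is only a back-of-the-envelope check: the Table 2A statistics are weighted by the number of times each examiner appears, so they need not coincide exactly with the figure underlying the text.

```python
# Values taken from the Examiner Cites Per Patent row of Table 2A.
mean_cites = 6.32
sd_cites = 3.49

cv = sd_cites / mean_cites    # coefficient of variation = standard deviation / mean
double_mean = 2 * mean_cites  # threshold for "more than double the average"

print(f"coefficient of variation: {cv:.2f}")
print(f"twice the mean citation rate: {double_mean:.2f}")
```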

FIGURE 4 Citations received by examiners.

FIGURE 5 Self-citations by examiners.

In Table 3A, we present a simple analysis of variance (ANOVA) based on our complete sample of 298,441 patents attributed to the 196 CAFC-tested examiners. The results indicate that examiners matter: A significant share of the variance in this sample in the four variables capturing the volume and pattern of citations by and to a particular patent (Citations Made, Citations Received, Originality, and Generality) is accounted for by fixed examiner effects, with a particularly strong effect in the ANOVA of Citations Received. A similar result is obtained for the length of time between application and grant: About 8 percent of the variance in this measure can be attributed to differences among examiners. A much smaller share of variance is explained for the number of claims on each patent. These results are robust to controlling for differences across technology classes. As Table 3B shows, there are visible differences across technology classes in the fraction of variance explained by examiner effects. There appears to be much more homogeneity across examiners in examination of mechanical patents, with significantly less homogeneity in Citations Made for chemical patents, and in the approval time for electrical/electronic patents. Overall, these results confirm the intuition we developed in our qualitative investigation: There is substantial heterogeneity across examiners, even after controlling for the important technology and cohort effects.

TABLE 3A Analysis of Variance of Patent Characteristics (N = 298,441; 196 Examiner Effects; 36 Technology Subclass Effects; 24 Cohort Effects)

| Variable | Fraction of Variance Explained by Examiner Effects | F-Statistic for No Examiner Effect | F-Statistic for No Examiner Effect, Controlling for Detailed Technology Class and Cohort |
|---|---|---|---|
| Citations Made | 0.077 | 121.71 | 52.64 |
| Citations Received | 0.117 | 193.40 | 51.07 |
| Approval Time | 0.083 | 131.77 | 78.92 |
| Claims | 0.030 | 44.83 | 16.06 |
| Generality | 0.079 | 105.56 | 38.97 |
| Originality | 0.069 | 104.23 | 61.30 |
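To make the two ANOVA quantities concrete, the following Python sketch computes the share of variance explained by group (examiner) effects and the F-statistic for the null of no group effect in a plain one-way layout. It is a simplified illustration on made-up data and omits the technology subclass and cohort controls; the function `one_way_anova` and the toy examiner labels are hypothetical.

```python
def one_way_anova(groups):
    """Return (share of variance explained by groups, F-statistic) for a one-way layout."""
    all_values = [v for values in groups.values() for v in values]
    n = len(all_values)  # total observations
    k = len(groups)      # number of groups (examiners)
    grand_mean = sum(all_values) / n

    # Between-group sum of squares: group size times squared deviation of the group mean.
    ss_between = sum(
        len(values) * (sum(values) / len(values) - grand_mean) ** 2
        for values in groups.values()
    )
    ss_total = sum((v - grand_mean) ** 2 for v in all_values)
    ss_within = ss_total - ss_between

    share = ss_between / ss_total
    f_stat = (ss_between / (k - 1)) / (ss_within / (n - k))
    return share, f_stat


# Made-up citation counts for patents handled by three hypothetical examiners.
toy = {"A": [2, 4, 6], "B": [8, 10, 12], "C": [5, 7, 9]}
share, f_stat = one_way_anova(toy)
print(f"share explained: {share:.3f}, F = {f_stat:.2f}")
```

With very large samples, even a modest share of variance explained by group effects produces a large F-statistic, which is why the two columns convey different information.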

TABLE 3B Analysis of Variance of Patent Characteristics by Technology Class (Fraction of Variance Explained by Examiner Effects)

| Variable | Chemical | ICT | Drug/Med | Electronic | Mechanical | Other |
|---|---|---|---|---|---|---|
| Citations Made | 0.123 | 0.054 | 0.104 | 0.078 | 0.054 | 0.059 |
| Citations Received | 0.058 | 0.099 | 0.110 | 0.066 | 0.076 | 0.072 |
| Approval Time | 0.098 | 0.083 | 0.074 | 0.116 | 0.053 | 0.053 |
| Claims | 0.033 | 0.027 | 0.028 | 0.022 | 0.031 | 0.037 |
| Generality | 0.084 | 0.112 | 0.078 | 0.086 | 0.055 | 0.081 |
| Originality | 0.087 | 0.964 | 0.044 | 0.063 | 0.069 | 0.082 |

The above analysis suggests that examiners vary, particularly in terms of the rate at which their patents tend to receive citations. But how does this variation, which we have suggested as a proxy for examiner discretion and/or specialization, affect our set of CAFC-tested patents? Table 4 presents regressions relating Citations Received by the CAFC-tested patents to a set of examiner characteristics and, in particular, Examiner Cites Per Patent. One result is particularly striking: There is a very strong relationship between Examiner Cites Per Patent and Citations Received by CAFC-tested patents. The effect is slightly reduced, but still quite significant, after conditioning on the patent’s detailed technology subclass, cohort, and assignee type. In each of the specifications in Table 4, increasing Examiner Cites Per Patent by one citation (less than one-third of a standard deviation) increases the predicted number of citations of the CAFC-tested patent by more than one (recall that CAFC-tested patents have much higher overall citation rates). Other observable examiner characteristics have a less clear relationship with Citations Received. The overall level of self-citation, experience (both in terms of years as well as the total level of issued patents), and a measure of near-term work flow (3-Month Volume) are all insignificant in their impact on Citations Received.

TABLE 4 Citations-Received Equation

Many factors may affect how many citations a patent receives. Citations received are frequently thought to reflect the technological significance of the claimed invention. Pioneering inventions with broad claims and no closely related prior art will tend to be cited frequently as follow-on inventors improve on the original invention. Citations may also reflect the quality or scope of the disclosure accompanying the claims. We cannot directly measure either of these factors here. Nonetheless, these results do indicate that a significant fraction of the variation in citations received by any particular patent is driven by a single aspect of examiner heterogeneity, the average propensity of “their” patents to attract citations. This is true even after controlling for other important attributes of the patent such as the technology class, the year when it was approved, and the type of assignee.

The Impact of Examiner and Patent Characteristics on Litigation Outcomes

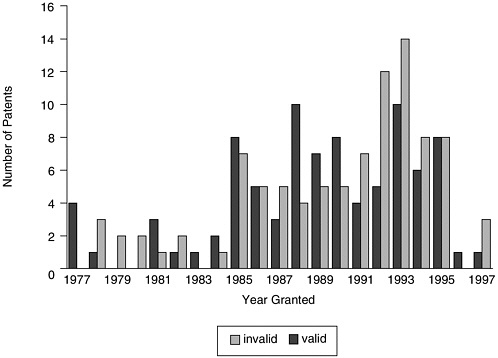

We now turn to the final part of our analysis—linking examiner characteristics to litigation outcomes. Although the overall probability of validity being upheld is approximately 50 percent, there is substantial variation in this percentage across technological area, year of patent approval, and even the type of assignee (see Figures 6-8). For example, although pharmaceutical and medical patents are more likely than not to be upheld, a substantial majority of computers and communications equipment patents are overturned. As well, the age of a patent seems to be an important predictor of validity—pre-1990 approvals are much more likely to be upheld by the CAFC than post-1990 approvals. As we emphasized in the second section of this chapter, these findings suggest the importance of controlling for detailed technology classes and cohorts in our analysis as we seek to evaluate the sensitivity of validity findings to examiner characteristics.

We begin our analysis in Tables 5 and 6, which compare the means of examiner characteristics and patent characteristics, conditional on whether the CAFC ruled the patent valid. Several issues stand out. First, the conditional means associated with most of the patent characteristics are roughly the same. It is useful to note that there is less than a 10 percent difference in the level of Citations Received between the two groups. The only striking difference is in Approval Time, where the time taken to approve invalid patents is significantly longer than the time taken to approve those that were found to be valid. Although it is hard to establish a “negative” result in the context of our highly selected CAFC-tested sample of patents,21 this finding does offer evidence against a simplistic relationship between approval times and validity rulings. Turning to the mean examiner characteristics by validity (Table 6), the striking differences are in terms of Examiner Cites Per Patent and 3-Month Volume. There is no significant difference in the means according to experience level; if anything, invalid patents are associated with examiners with higher mean levels of experience, both in terms of volume and tenure. This stands in useful contrast to the most naïve interpretation of hypothesis H4, which predicts that Experience should be positively correlated with Validity. In contrast, consistent with the suggestion in hypothesis H5, invalid patents do seem to be associated with examiners who have a higher average citation rate.

TABLE 5 Patent Characteristics: Means Conditional on CAFC Validity Ruling

| | Invalid | Valid |
|---|---|---|
| Claims | 20.73 | 20.28 |
| Citations Received | 17.38 | 16.04 |
| Originality | 0.36 | 0.41 |
| Generality | 0.41 | 0.41 |
| Approval Time | 845.51 | 760.90 |

TABLE 6 Examiner Characteristics: Means Conditional on CAFC Validity Ruling

| | Invalid | Valid |
|---|---|---|
| Experience (no. of patents) | 2276.40 | 2077.81 |
| Experience (years) | 18.82 | 18.51 |
| Examiner Cites Per Patent | 6.89 | 5.72 |
| Self-Cite | 0.10 | 0.10 |
| 3-Month Volume | 45.56 | 37.19 |
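The relative gaps behind the comparisons of conditional means in Tables 5 and 6 can be checked with a few lines of arithmetic. The sketch below is only illustrative: the numbers are copied from the two tables, while the name `pct_gap` and the choice of the valid-group mean as the base are assumptions made here, not definitions taken from the chapter.

```python
# Conditional means (invalid, valid) copied from Tables 5 and 6.
MEANS = {
    "Claims": (20.73, 20.28),
    "Citations Received": (17.38, 16.04),
    "Originality": (0.36, 0.41),
    "Generality": (0.41, 0.41),
    "Approval Time": (845.51, 760.90),
    "Experience (no. of patents)": (2276.40, 2077.81),
    "Experience (years)": (18.82, 18.51),
    "Examiner Cites Per Patent": (6.89, 5.72),
    "Self-Cite": (0.10, 0.10),
    "3-Month Volume": (45.56, 37.19),
}


def pct_gap(invalid, valid):
    """Percent by which the invalid-group mean exceeds the valid-group mean.

    Using the valid-group mean as the base is an assumption of this sketch.
    """
    return 100.0 * (invalid - valid) / valid


gaps = {name: pct_gap(*pair) for name, pair in MEANS.items()}

# List the characteristics from the largest to the smallest absolute gap.
for name, gap in sorted(gaps.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:28s} {gap:+6.1f}%")
```

These are raw differences in means only; they carry no information about statistical significance, which depends on the within-group dispersion.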

Of course, these conditional means ignore the important differences across technologies (Figure 6), cohorts (Figure 7), or assignees (Figure 8) and the potential for correlation among the examiner characteristics themselves. We therefore turn to a more systematic set of regression analyses in Table 7. The dependent variable in the regressions takes the value 1 if the CAFC-tested patent is ruled valid, 0 otherwise.22 The first two columns of Table 7 provide a test for hypothesis H4, the sensitivity of the probability of a validity finding to the experience of the examiner. Whether or not detailed controls are included, there is no significant relationship between any measure of experience and the probability of a ruling of validity. Indeed, we have experimented with a wide variety of specifications relating to these experience measures and there is no systematic relationship between validity and these measures in these data. Once again, these “negative” results must be interpreted with caution given the special nature of the sample; they might suggest that a mechanical relationship between examiner experience and the outcomes of validity rulings, if it exists, may be more subtle than some would argue.

FIGURE 6 CAFC patents by technology.

FIGURE 7 CAFC patents by year issued.

22 Both Tables 7 and 8 employ a linear probability model, estimated by either OLS or IV. The coefficients are therefore easily interpretable and comparable with each other, and we avoid the technical subtleties associated with implementing an instrumental variables probit in the context of a small sample. We experimented with a probit model for the reduced-form OLS results, and the results remain quantitatively and statistically significant.

FIGURE 8 CAFC patents by assignee type.

In the last two columns of Table 7, we turn to hypothesis H5, the sensitivity of a validity ruling to other examiner characteristics. The only significant relationship is with Examiner Cites Per Patent, which has a significant and large negative coefficient. Moreover, this coefficient increases in absolute value when detailed technology and cohort controls are included. According to (7-4), increasing Examiner Cites Per Patent by one standard deviation (3.49) is predicted to reduce the probability of validity by over 14 percentage points, from a mean of 48 percent. In other words, the probability of validity is strongly associated with the average rate at which that examiner’s patents have received citations. As we have emphasized in our hypothesis development, the interpretation of this result is subtle. On one hand, the results provide evidence that even high-level litigation outcomes are related to examiner characteristics that result from two key features of the organization of the USPTO: specialization of examiners in narrow technology areas and exercise of discretion by examiners in allowing claims. Moreover, when we control for specialization, the results become stronger, providing a hint that variation among examiners in their “generosity” (a phenomenon we observed in our qualitative interviews) may be important for understanding the allocation of intellectual property rights that result from patent examination procedures.
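As a back-of-the-envelope reading of this magnitude: in a linear probability model, the predicted change in probability is the coefficient multiplied by the change in the regressor. The symbol $\hat{\beta}$ and the back-solved bound below are illustrative, inferred from the figures quoted in the text (a standard deviation of 3.49, a decline of over 14 percentage points, and a mean of 48 percent) rather than read from Table 7.

$$
\Delta \Pr(\text{Valid}) = \hat{\beta}\,\sigma, \qquad \sigma = 3.49, \qquad \Delta \Pr(\text{Valid}) < -0.14
\;\;\Longrightarrow\;\;
\hat{\beta} < \frac{-0.14}{3.49} \approx -0.040 .
$$

Under these figures, a one-standard-deviation increase in Examiner Cites Per Patent moves the predicted probability of validity from about $0.48$ to below $0.48 - 0.14 = 0.34$.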

TABLE 7 Reduced-Form OLS Validity Equation

This finding motivates our final set of regressions, using the instrumental variables procedure, in Table 8. As discussed in the third section of this chapter, we investigate the mechanism by which Examiner Cites Per Patent might affect patent validity rulings by restricting its impact to the citation rate of the litigated patent. In other words, we impose the exclusion restriction that, but for its impact on Citations Received, Examiner Cites Per Patent is exogenous to the validity decision. The results of this IV analysis are striking. On the one hand, the OLS relationship between validity and Citations Received is insignificant (8-1). However, the coefficient on Citations Received in the instrumental variables equations is significant, large, and negative. Although validity is unrelated to the total number of citations received by a patent, validity is strongly related to the portion of the citation rate explained by the examiner’s average propensity to grant patents that attract citations. Moreover, the size of this coefficient increases substantially after the inclusion of technology, cohort, and assignee effects, as well as with the inclusion of other characteristics of CAFC-tested patents. If our results were being driven by unobserved variation across examiners in the types of technologies examined, these controls would likely condition out some of this heterogeneity; the fact that our results become stronger after the inclusion of controls makes our findings even more suggestive. In other words, even relying on a test that only allows examiner effects to matter through their impact on the citation rate of the litigated patent, and controlling for differences in the timing and type of litigated technology, we find that the CAFC invalidates patent rights associated with examiners whose degree of specialization or exercise of discretion results in an unusually high level of citations received.

TABLE 8 Validity Equation

| Dependent Variable: Valid | (8-1) OLS | (8-2) IV^a | (8-3) IV^a | (8-4) IV^a |
|---|---|---|---|---|
| Patent Characteristics | | | | |
| Citations Received | −0.0007 | −0.0090 | −0.0228 | −0.0242 |
| | (0.0017) | (0.0042) | (0.0106) | (0.0111) |
| Claims | | | | 0.003 |
| | | | | (0.002) |
| Originality | | | | 0.238 |
| | | | | (0.227) |
| Generality | | | | 0.188 |
| | | | | (0.268) |
| Approval Time | | | | −0.00006 |
| | | | | (0.00009) |
| Control Variables | | | | |
| Cohort Fixed Effects | | | Sig. | Sig. |
| Technology Subclass Fixed Effects | | | Sig. | Sig. |
| Assignee Fixed Effects | | | Insig. | Insig. |
| Regression Statistics | | | | |
| Adj. R-squared | 0.000 | NA | NA | NA |
| No. of observations | 182 | 182 | 170 | 170 |

^a IV: Endogenous = Citations Received; instrumental variable = Examiner Cites Per Patent.
Note: Standard errors are in parentheses.
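The two-stage logic behind the IV columns of Table 8 can be illustrated with a small simulation. This is a minimal sketch on synthetic data, not the estimation code or data used in the chapter: every name (`examiner_rate`, `significance`, `TRUE_EFFECT`) and every parameter value is a hypothetical choice made here. The simulated world assumes that an unobserved "significance" factor raises both citations and validity, so that OLS on a binary validity outcome is pulled toward zero, while instrumenting citations with the examiner's citation propensity recovers the assumed effect.

```python
import numpy as np


def ols(X, y):
    """Least-squares coefficients for y = X @ b + error."""
    return np.linalg.lstsq(X, y, rcond=None)[0]


def two_stage_least_squares(y, x_endog, z):
    """2SLS with a constant, one endogenous regressor, and one instrument."""
    const = np.ones(len(y))
    Z = np.column_stack([const, z])
    # First stage: project the endogenous regressor on the instrument.
    x_hat = Z @ ols(Z, x_endog)
    # Second stage: regress the outcome on the fitted values.
    return ols(np.column_stack([const, x_hat]), y)


# Synthetic data; all values below are assumptions of this sketch.
rng = np.random.default_rng(0)
n = 200_000
examiner_rate = rng.normal(size=n)   # instrument (examiner citation propensity)
significance = rng.normal(size=n)    # unobserved by the econometrician
noise = rng.normal(size=n)
citations = 10 + 2 * examiner_rate + 3 * significance + noise

TRUE_EFFECT = -0.02                  # assumed effect of citations on Pr(valid)
p_valid = 0.7 + TRUE_EFFECT * citations + 0.06 * significance
valid = (rng.random(n) < p_valid).astype(float)  # binary outcome (LPM)

ols_slope = ols(np.column_stack([np.ones(n), citations]), valid)[1]
iv_slope = two_stage_least_squares(valid, citations, examiner_rate)[1]

print(f"OLS slope on citations: {ols_slope:.4f}")
print(f"IV  slope on citations: {iv_slope:.4f}")
```

The sketch reports point estimates only. Standard errors taken naively from the second-stage regression would not be valid for 2SLS, so they are deliberately omitted. The large synthetic sample is used to keep sampling noise small and says nothing about precision in a sample of 182 patents.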

DISCUSSION AND CONCLUSIONS

We have conducted an empirical investigation, both qualitative and quantitative, of the role that patent examiners play in the allocation of patent rights. In addition to interviewing administrators and patent examiners at the USPTO, we have constructed and analyzed a novel data set on patent examiners and patent litigation outcomes. Starting with a sample of patents for which the CAFC decided on validity between 1997 and 2000, we collected historical data on those who examined these patents at the USPTO. For each of these examiners, we collected data on all of the other patents that they examined during their career, allowing us to compute a number of interesting examiner characteristics. The data set obtained by matching these two sources is, of course, based on a highly selected sample, because very few patents make it to the CAFC. Nonetheless, we view the analysis of these CAFC cases as a very useful first step, largely conditioned by the ease of access to data, in exploring a number of hypotheses about the connection between the patent examination process and the issuance of patent rights. Our results are preliminary, but they suggest a number of interesting findings.

First, patent examiners and the patent examination process are not homogeneous. There is substantial variation in observable characteristics of patent examiners, such as their tenure at the USPTO, the number of patents they have examined, the average approval time per issued patent, and the degree of specialization in technology areas. There is also systematic variation in outcomes of the examination process—such as the volume and pattern of citations made and received by patents—that can be attributed to idiosyncratic differences among examiners. Most interestingly, examiners differ in the number of citations made to “their” issued patents, even after controlling for technology class, issuing cohort, and other factors.

Second, we find no evidence in our sample for the most “naïve” hypotheses about examiner characteristics and quality of examination. In particular, we find no strong statistical association between examiner experience or workload at the time a patent is issued and the probability of the CAFC finding it to be invalid if it is subsequently litigated to the appeals court level. We hesitate, however, to make any policy prescriptions based on this “negative” finding until it is confirmed in subsequent research using a larger sample.

Third, we find that “examiners matter”: Although highly structured, and carefully monitored by USPTO, patent examination is not a mechanical process. Examiners necessarily exercise discretion and are focused in very narrow technology areas, and occasionally the claims allowed under this process are overturned by subsequent judicial review. Our core finding is that the examiners whose patents are cited most often are also more likely to have their patents ruled invalid by the CAFC. Our econometric procedure distinguishes between citations received by a particular patent because of the scope of its claims or the significance of an overall technology area and citations received because of examiner-specific differences in propensity to allow patents that attract citations. It is only the second of these mechanisms that has a statistical relationship with CAFC validity rulings.

The fact that patent examination cannot be mechanistic, and that idiosyncratic aspects of examiner behavior appear to have a significant impact on the nature of the patent rights that they grant, suggests a significant role for the organization, leadership, and management of USPTO. The management literature recognizes the value of corporate culture in the form of informal rules, common values, exemplars of behavior, etc. in providing guidance on how to exercise discretion. Although idiosyncratic behavior of examiners can be controlled to some extent by formal processes such as supervision, selection of examiners, training, and incentives, the institution’s cultural norms necessarily play an important role in their exercise of discretion in awarding patent rights. Policy changes that impact the organizational structure and internal culture of the USPTO should be careful to take patent examiner behavior into account.

REFERENCES

Cohen, W., R.R. Nelson, and J.P. Walsh. (2000). “Protecting Their Intellectual Assets: Appropriability Conditions and Why U.S. Manufacturing Firms Patent (or Not).” NBER Working Paper No. 7552.