8

Patient Safety Reporting Systems and Applications

CHAPTER SUMMARY

Patient safety performance data may be used in support of many efforts aimed at improving patient safety: regulators may use the data for accountability purposes such as licensure and certification programs; public and private purchasers may use the data to offer financial or other incentives to providers; consumers may use comparative safety performance data when choosing a provider; and clinicians may use the data when making referrals. Most important, patient safety data are a critical input to the efforts of providers to redesign care processes in ways that will make care safer for all patients. Applications in all of these areas are currently hampered by inadequate patient safety data systems. Although all applications along the continuum from accountability to learning contribute to a safer health care environment, the committee believes that applications aimed at fundamental system redesign offer particular promise for achieving substantial improvements in safety and quality across the entire health care sector.

High-quality health care is, first, safe health care. Patients should be able to approach health professionals free of fear that seeking help could lead to harm. If safety is to be a core feature of health care delivery systems, clinicians, administrators, and patients will need tools, based on reliable clinical data, to build and assure a safe care environment. Health professionals must be able to identify safety problems, test solutions, and determine whether their solutions are working. Patients and their representatives need to know what risks exist and how they might be avoided. Data on clinical performance are one key building block for a safe health care delivery system.

Clinical performance data can serve a full range of purposes, from accountability (e.g., professional licensure, legal liability) to learning (e.g., the redesign of care processes, testing of hypotheses). Different purposes necessitate differences in data collection methods, analytic techniques, and interpretation of results. An ideal clinical performance reporting system should be able to function simultaneously along the entire continuum of applications, but such broad use requires careful data system design, automated systems that link directly to care delivery, and explicit data standards.

There are many legitimate applications of clinical performance data, each having its own historical underpinnings and approaches. All of these applications are intended to improve the safety of patient care. The interactions of clinicians and patients are influenced by the environment (e.g., legal liability, purchasing and regulatory policies); the education and training of health professionals (e.g., multidisciplinary training); the health literacy and expectations of individuals (e.g., patients’ understanding of chronic conditions and the importance of healthy behaviors); and the organizational arrangements or systems that support care delivery (e.g., internal reporting systems, the availability of computer-aided decision support systems). The greatest gains in patient safety will come from aligning incentives and activities in each of these four areas.

This chapter provides an overview of the many applications of clinical performance data and a discussion of their likely impact. A case study is used to illustrate some of the undesirable consequences that can come from the use of various applications if the data, performance measures, and approaches are not chosen carefully, and if too much emphasis is placed on accountability as opposed to learning applications. Finally, the importance of investing more in approaches targeted directly at fundamental system redesign is briefly discussed. Such approaches offer the greatest potential to improve patient safety but require a far more sophisticated data infrastructure than currently exists in most health care settings.

Although much of the discussion in this chapter presumes the availability of computerized clinical information systems, clinical performance data can be collected and analyzed without them. Such collection and analysis would, however, be greatly facilitated by computerized clinical information systems and the national health information infrastructure (NHII).

THE CONTINUUM OF APPLICATIONS

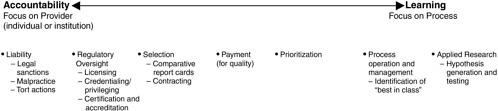

The many applications of clinical performance data are illustrated in Figure 8-1. To the left of the spectrum are applications used by public-sector legal and regulatory bodies that are intended to hold health care professionals and organizations accountable (e.g., professional and institutional licensure and legal liability). To the right of the spectrum are applications that focus on learning, both for organizations and for professionals. The feedback of performance data to clinicians for continuing education purposes falls into this category, as does the redesign of care processes by health care organizations based on analysis of data collected in near-miss and adverse event reporting systems. Falling between these two extremes are applications intended to encourage health care providers to strive for excellence by rewarding those who achieve the highest levels of performance with higher payments and greater demand for their services.

Virtually all applications of clinical performance data are intended to produce improvements in safety and quality. However, their immediate aims—accountability, incentives, and system redesign—are quite different.

Aim defines the system.
W. E. Deming (1988)

Accountability

Health care policy makers have long argued that there is an inherent imbalance in access to and understanding of health care information between health care providers and health care consumers (Arrow, 1963; Haas-Wilson, 2001; Robinson, 2001). To help redress this imbalance, health care overseers—federal, state, and county governments; health professional groups; and other patient representatives—have sought to guarantee a minimum level of health care delivery performance on behalf of the general health-consuming public. In recent years, some consumer-driven approaches have been adopted, such as state-level reporting of serious medical errors and the publication of health outcome data.

Health care overseers have carried out their accountability role through licensing programs for health care professionals and certification and accreditation programs for provider institutions and health plans. Traditionally, these oversight processes have focused on the establishment of “market entry” requirements (e.g., medical doctors must graduate from an accredited school and complete a 1-year internship) and the ongoing identification of substandard performers through such mechanisms as peer review of individual cases (quality assurance), review of patient complaints, and routine inspections of practice settings.

In recent years, many states have established adverse event reporting systems. Health care oversight officials at the state level report that such systems are a useful tool for facility oversight, providing an additional window into facility operations that might otherwise not be available (Rosenthal et al., 2001). Consumers look to government to ensure that facilities are safe and providers are competent. In one survey, nearly 75 percent of respondents said that government should require health care providers to report all serious medical errors (The Henry J. Kaiser Family Foundation and Agency for Healthcare Research and Quality, 2000). In some instances, health outcome data (e.g., hospital mortality rates, mortality rates associated with cardiac surgery) have been used for accountability purposes as well (see the discussion below).

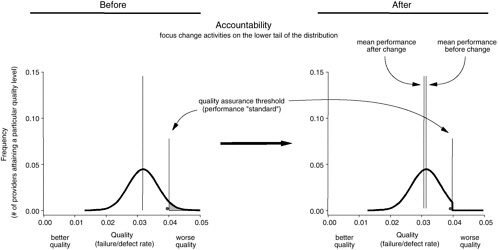

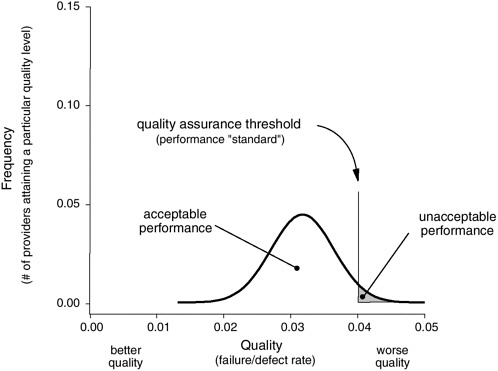

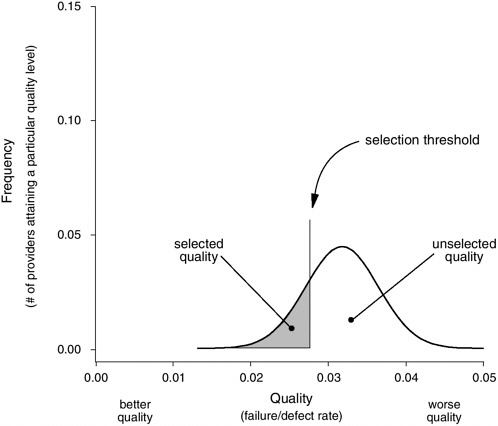

For the most part, legal liability and regulatory oversight processes focus on identifying very poor performers—the “bad apples” (see Figure 8-2) (Berwick, 1989). These processes set a minimum performance standard that is used to assess care providers. For those providers who fail to meet the minimum standard, sanctions (e.g., fines, termination of license) are levied, or reeducation programs and more stringent oversight are demanded.

Incentives

Previous Institute of Medicine (IOM) reports reveal a U.S. health care system that routinely fails to achieve the safest and highest quality of care for Americans who seek its services (Institute of Medicine, 2000, 2001). The vast majority of providers are not negligent, incompetent, or impaired, yet the services they provide are clearly inadequate, suggesting that minimum performance standards alone cannot achieve generally excellent care delivery (James, 1992).

FIGURE 8-2 Use of safety data for accountability, licensing, or legal action (focus on the lower tail).

Applications along the middle of the continuum are intended to bridge this gap by providing incentives (e.g., financial payments, public esteem or disgrace) and tools (e.g., comparative data on quality) that will motivate many providers to improve safety and quality. Selection refers to the use of comparative data by group purchasers or individuals to choose health plans and providers. In a quality-driven marketplace, purchasers (through contracting) and patients choose providers that deliver the safest and most effective care (see Figure 8-3). In theory, such selection should motivate all providers to improve so they will rank better on comparative safety and quality reports and gain more business in the future. The provision of higher payments to providers that deliver safer and more effective care works in a similar fashion by making it attractive to all providers to strive to achieve a high ranking (Berwick et al., 2003).

These types of applications require certain data and information to be “transparent,” a term denoting the situation in which those involved in health care choices at any level—including patients, health professionals, and purchasers—have sufficiently complete, understandable information about clinical performance to make wise decisions (Institute of Medicine, 2001). Choices involve not just the selection of a health plan, a hospital, or a physician but also the series of testing and treatment decisions that patients face as they work their way through a health care delivery interaction. Transparency changes the focus of accountability, shifting both control and responsibility from clinical professionals and health care overseers to patients.

FIGURE 8-3 Use of safety data for selection (focus on the upper tail).

True transparency necessitates detailed and complete performance data that are valid, reliable, and relevant to the types of decisions an individual must make. For example, a patient about to undergo bypass surgery (or the general internist acting as the patient’s representative) must first select a hospital and a surgeon. In a transparent world, all participants would have access to hospital- and surgeon-specific performance datasets including a rich set of process and outcome measures, satisfaction reports, and other information.

To date, public reporting of performance data has been limited, but experience is growing (Agency for Healthcare Research and Quality, 2001; Baumgarten, 2002; California HealthCare Foundation, 2003; Centers for Medicare and Medicaid Services, 2003; Department of Health and Human Services, 2002a, b; Dudley et al., 2002; McCormick et al., 2002; National Committee for Quality Assurance, 2002a, b). Most public reporting has focused on organizations (i.e., hospitals and nursing homes) and, to some degree, surgical interventions (Schauffler and Mordavsky, 2001). Very limited information has been reported on medical groups or physicians. Early reporting efforts focused on outcome data (e.g., mortality), while more recent comparative reports have tended to include process-of-care measures, patient perceptions of care, and accreditation status (McGlynn and Adams, 2001).

Studies suggest that public reporting of comparative performance information has had little if any impact on consumer decision making (Schauffler and Mordavsky, 2001). For example, report cards on health plans and hospitals have had a minimal effect on consumers’ selection of health plans (Gabel et al., 1998; Thompson et al., 2003), and simple, easy-to-understand mortality statistics at the level of hospitals and individual physicians do not affect patients’ choices of hospitals or physicians (Chassin et al., 1996, 2002; Mennemeyer et al., 1997; Schneider and Epstein, 1996).

Many factors likely contribute to this lack of response on the part of consumers, including a lack of awareness of the existence of performance information, limitations placed on the choice of health plans and providers by employee benefits or insurance plan design, the presentation of performance information in a manner that is overly complex and fails to capture those aspects of performance of particular interest to consumers, reports not being produced by a trusted source, and questionable validity and reliability of the measures selected and underlying data sources (Hibbard et al., 2001; Hibbard, 1998, 2003; Institute of Medicine, 2001; McGlynn and Adams, 2001; Schauffler and Mordavsky, 2001; Simon and Monroe, 2001). Finally, individual patients are accustomed to choosing specialists and hospitals mainly on the advice of their primary care provider (referring physician), rather than on the basis of performance statistics (Mennemeyer et al., 1997). To date, the use of performance data by patients’ referring physicians has not been widespread enough to influence market dynamics.

Health care delivery organizations and professionals have a great deal to gain from a balanced system of public reporting. To the extent that safer care produces fewer injuries, it can significantly reduce legal exposure, as evidenced by the malpractice experience of surgical anesthesiologists following successful profession-wide improvement efforts (Joint Commission on Accreditation of Healthcare Organizations, 1998; Chassin, 1998; Cohen et al., 1986; Cooper et al., 2002; Duncan and Cohen, 1987; Gaba, 1989; Pierce, 1996). Public reporting creates a level playing field where competitors share equal incentives to invest in better care.

The greatest benefit of public reporting may come not from influencing patient behavior but from causing health providers to set priorities and goals and motivating them to achieve those goals. Motivation operates through both explicit rewards and the public exposure of shared professional and organizational commitments. This approach recognizes that change is inherently difficult and that the health care system may need an external goad to drive internal change. Most health care organizations do express a genuine desire to deliver the best possible care to their patients, but they are beset by a host of competing demands. Explicit external expectations, implemented through public reporting balanced across institutions by independent auditing of underlying data systems, can establish shared priorities and parallel investment in the necessary data infrastructure.

Many efforts now under way are aimed at providing financial rewards to providers based on either their relative performance ranking compared with their peers or improvements in their individual performance over time (Bailit Health Purchasing, 2002a; Bailit Health Purchasing, 2002b; Kaye, 2001; Kaye and Bailit, 1999; National Health Care Purchasing Institute, 2002; White, 2002). These payment-for-performance programs focus on health plans, hospitals, clinicians, or some combination of these and utilize many different models of compensation. In addition, a limited number of programs are experimenting with the provision of financial rewards (e.g., lower premiums or copayments) to consumers who select higher-performing providers (Freudenheim, 2002; Salber and Bradley, 2001). Information is not yet available for assessing the impact of financial incentives on the behavior of consumers or providers and ultimately on the safety and quality of care.

The IOM has identified a set of 20 priority areas for the U.S. health care system, and the Department of Health and Human Services will soon be releasing the first annual National Healthcare Quality Report, focused on many of these priority areas (Agency for Healthcare Research and Quality, 2002; Institute of Medicine, 2003). These efforts are intended to stimulate actions to improve quality on the part of health care professionals and organizations. The aim is to encourage complementary and synergistic efforts at the national and community levels and on the part of many stakeholders (e.g., purchasers, regulators, providers, and consumers) to improve safety and quality in a few key areas.

System Redesign

On the far right of the continuum of applications is what might be described as learning approaches. Health care providers’ internal safety and quality improvement programs fall into this category, as do voluntary reporting programs in which providers share information on near misses and adverse events or comparative performance data. The work of many health care oversight organizations routinely extends far beyond their core public accountability responsibilities to the use of performance data and the organization’s expertise in improvement methods to help health care providers learn, change systems, and improve performance.

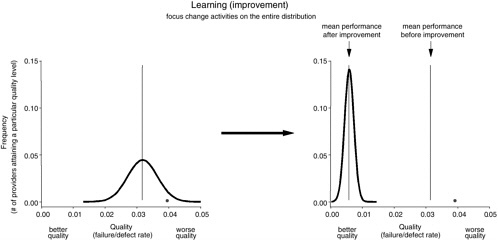

Figure 8-4 illustrates the difference between accountability and learning approaches. While accountability sets a minimum performance standard and directly addresses health care providers whose performance falls below that standard (i.e., the far right tail of the distribution), learning approaches attempt to (1) eliminate inappropriate variation (making the distribution tall and narrow) and (2) document continuous improvement (move all participants in the process to the left in the direction of better quality).

Historically, many learning approaches have relied extensively on comparative performance data, often using the same types of data included in public report cards or provided to purchasers in payment-for-quality programs. These data may not be the most appropriate for setting priorities. A substantial body of evidence has identified a very large gap in overall health care performance, implying that average care may be quite poor (Institute of Medicine, 2001; McGlynn et al., 2003). Comparisons against theoretical “best performance,” combined with assessments of readiness for change (e.g., available leadership, data systems), may be more effective in identifying improvement opportunities. For example, successful benchmarking strategies do not use average performance as a starting point. Instead, initial efforts are made to identify the “best in class,” and extensive analysis is then undertaken to understand and share the processes that achieve top-level performance (American Productivity and Quality Center, 2003). Even excellent organizations can show significant improvement through such an approach. Focusing on the average performer will likely result in a tighter distribution, while a “best in class” focus will not only tighten but dramatically shift the distribution toward higher quality levels.
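To make the contrast between tightening and shifting concrete, the sketch below models provider performance as a normal distribution of hypothetical adverse event rates per 1,000 patient-days (lower is better, as in Figure 8-4) and computes the share of providers that would fail a hypothetical minimum standard. The rates, spreads, and the threshold of 15 are illustrative assumptions, not data from any reporting system.

```python
from statistics import NormalDist

# Hypothetical minimum standard: rates above this fail accountability review.
MINIMUM_STANDARD = 15.0


def share_failing_standard(dist: NormalDist) -> float:
    """Fraction of providers whose event rate is worse than the standard."""
    return 1.0 - dist.cdf(MINIMUM_STANDARD)


# Illustrative distributions of adverse event rates (lower is better).
scenarios = {
    "baseline": NormalDist(mu=10.0, sigma=3.0),
    "tightened around the average": NormalDist(mu=10.0, sigma=1.5),
    "shifted toward best in class": NormalDist(mu=6.0, sigma=1.5),
}

for name, dist in scenarios.items():
    print(f"{name:30s} mean={dist.mean:4.1f} "
          f"failing={share_failing_standard(dist):.4f}")
```

In this toy setup, narrowing the spread alone removes most failures against the minimum standard, but only the shift lowers the typical rate that every patient experiences.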

Shifting the distribution significantly may also have the effect of exposing true “bad apple” performers (represented by the black dot in Figure 8-4), thus enhancing accountability. While rare within the overall health care system, such poor performers do exist and demand a response. They often hide within the tail of the distribution, relying upon chance events among colleagues to obscure their own consistently poor performance. Successful learning and system redesign can shift the tail of the distribution, clearly exposing such individuals for appropriate professional intervention.
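The exposure effect can be sketched with a simple z-score. Under the illustrative assumptions below, a provider with a hypothetical unchanging rate of 14 sits about 1.3 standard deviations from the peer mean in a wide baseline distribution, but more than 5 standard deviations away once peers improve and converge.

```python
from statistics import NormalDist

# Hypothetical provider whose own (poor) practice does not change.
BAD_APPLE_RATE = 14.0


def z_score(rate: float, peers: NormalDist) -> float:
    """Distance of a provider's rate from the peer mean, in standard deviations."""
    return (rate - peers.mean) / peers.stdev


before = NormalDist(mu=10.0, sigma=3.0)  # wide distribution: easy to hide in
after = NormalDist(mu=6.0, sigma=1.5)    # peers improved and converged

print(f"before redesign: z = {z_score(BAD_APPLE_RATE, before):.2f}")
print(f"after redesign:  z = {z_score(BAD_APPLE_RATE, after):.2f}")
```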

ACCOUNTABILITY VERSUS LEARNING: UNDERSTANDING THE CONTINUUM

There are clearly many important applications of patient safety data and many different users. When called upon to respond to the most egregious performance failures, legal and regulatory programs appropriately aim to bar substandard providers from practice and to provide compensation to the injured. Incentive-based approaches aim to create an environment that rewards safety and quality and, in so doing, to encourage providers to pursue system redesign.

Although the three broad categories of applications—accountability, incentives, and system redesign—have quite different immediate aims and operate independently, they are intertwined in several important ways. First, depending upon how well they are crafted, the approaches pursued by legal and regulatory bodies and by purchasers and consumers in the marketplace can have either a positive or a negative effect on the efforts of providers to create a learning environment. Second, applications in all three categories rely to a great extent on the same underlying safety data systems and will do so increasingly in the future. Finally, all three consume scarce health care resources (e.g., dollars, provider time and attention), making an appropriate balance of activities imperative. This section presents a case study involving the use of mortality reports for accountability purposes and then uses this case study to illustrate key points related to issues surrounding the use of patient safety data, including the selection of measures, the risk that the use of performance data will instill fear and provoke defensive behavior on the part of providers, and the concept of preventability. The section ends with a discussion of the implications of the range of applications for patient safety data systems.

CASE STUDY

Between 1986 and 1992, the Health Care Financing Administration (HCFA) released a series of annual reports assessing mortality outcomes across approximately 5,500 hospitals that treated Medicare patients in the United States (Health Care Financing Administration, 1987). A team of HCFA researchers and statisticians developed risk adjustment models for mortality following hospital discharge and improved and refined those tools over time. Within the reports, the highest 5 percent of hospitals in terms of risk-adjusted death rates were labeled “high mortality outliers,” while the bottom 5 percent were labeled “low mortality outliers.” The news media distributed the resulting raw and risk-adjusted mortality rates, as well as the rankings, widely. HCFA’s aims in publishing comparative mortality performance data were to assist peer review organizations in targeting their Medicare quality oversight activities, to inform health care consumers so they could make better choices about their own care, and to help health care professionals improve the quality of the care they delivered (Krakauer et al., 1992). A series of studies, including one analysis performed by the HCFA statistical team itself, evaluated the HCFA mortality reports against various gold-standard clinical measures (Green et al., 1990, 1991; Krakauer et al., 1992; Rosen and Green, 1987). Positive predictive value (Weinstein and Fineberg, 1980) for the HCFA mortality reports ranged from 25 to 64 percent. In other words, for every 12 hospitals labeled as “high mortality outliers,” at least 4 and as many as 9 were falsely identified. Among those hospitals judged to demonstrate excellent outcomes, as many as 3 in 5 were miscategorized (Green et al., 1991). In 1993, Bruce Vladek, HCFA’s administrator, halted release of the Medicare mortality reports. He judged that the reports were having little impact on care delivery performance while continuing to generate controversy and consume significant resources (Vladek, 1991).
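The false-positive figures in the case study follow directly from the definition of positive predictive value (PPV): the share of flagged hospitals that truly are outliers. The helper names below are hypothetical; the sketch simply reproduces the arithmetic for the reported 25 and 64 percent bounds.

```python
def positive_predictive_value(true_outliers: int, false_outliers: int) -> float:
    """PPV = true positives / all flagged (true positives + false positives)."""
    return true_outliers / (true_outliers + false_outliers)


def expected_false_outliers(flagged: int, ppv: float) -> float:
    """Expected number of flagged hospitals that were labeled in error."""
    return flagged * (1.0 - ppv)


for ppv in (0.25, 0.64):
    print(f"PPV {ppv:.0%}: about {expected_false_outliers(12, ppv):.1f} "
          f"of 12 flagged hospitals are false outliers")
```

A PPV of 25 percent leaves 9 of 12 flagged hospitals mislabeled; a PPV of 64 percent leaves about 4.3, which the case study states as “at least 4.”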

Over a decade has passed since HCFA (now the Centers for Medicare and Medicaid Services) produced the annual mortality reports described in this case study. The intervening years have allowed dispassionate reflection on the reasoning, methods, barriers, effects, and limitations associated with a national attempt to use clinical data to drive change in health care delivery. The Medicare mortality reports, updated by parallel consideration of similar, more recent efforts, can serve as a useful case study for understanding issues surrounding data standards for patient safety.

Selection of Measures

Outcome measures, although of keen interest to regulators, purchasers, and individuals, are particularly difficult to use for accountability purposes since they do not necessarily measure competence (Trunkey and Botney, 2001). The outcomes individuals experience are influenced by multiple factors, many of which are outside the control of the health care provider. Given the pejorative nature and potential professional and business consequences of outcome-based accountability systems, health care providers demand accurate, reliable rankings. Patients and patient representatives also require reliable comparisons. In many circumstances, current clinical data systems and risk adjustment strategies are technically incapable of meeting those reasonable expectations.

There are many potential sources of variation in measured health outcomes (see Box 8-1), and risk adjustment methods can account only for differences in known patient factors. For example, Eddy estimates that all major factors proven to explain infant mortality rates (race, maternal alcohol consumption, maternal tobacco smoke exposure, altitude, and differences in prenatal care delivery performance) account for only about 25 percent of documented variation in patient outcomes (Eddy, 2002). The remaining 75 percent of the variation is beyond the reach of risk adjustment strategies.

BOX 8-1

Geographic aggregation (e.g., variation in hospital programs and local referral patterns) can also play a defining role. A recent evaluation of hospital quality outcome measures found that most produced statistically reliable results when aggregated to the level of a metropolitan area, state, or multistate region, but only a few measures produced valid results at the level of individual hospitals (Bernard et al., 2003). The fact that an outcome measure demonstrates statistical significance overall, at an aggregated level, does not mean that it will reliably distinguish performance at a detailed individual level (Andersson et al., 1998; Hixson, 1989; Silber et al., 1995). Consequently, many hospital and physician ranking systems based on outcome measures perform poorly (Blumberg and Binns, 1989; Green et al., 1997; Greenfield et al., 1988; Jollis and Romano, 1998; Krumholz et al., 2002; Marshall et al., 2000).
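Part of the reason is sample size. The sketch below uses a simple normal-approximation (Wald) interval on crude, unadjusted rates, an assumption made for brevity; the case counts are hypothetical. The same 2-point difference in mortality that is clear at a regional scale disappears into noise at the scale of a single hospital. Overlapping intervals are used only as a rough check, not as a formal significance test.

```python
import math


def wald_interval(deaths: int, cases: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% confidence interval for a crude mortality rate."""
    rate = deaths / cases
    half_width = z * math.sqrt(rate * (1.0 - rate) / cases)
    return rate - half_width, rate + half_width


def intervals_overlap(a: tuple[float, float], b: tuple[float, float]) -> bool:
    """True if two (low, high) intervals share any values."""
    return a[0] <= b[1] and b[0] <= a[1]


# Same 6% vs. 4% mortality, at hospital scale and at regional scale.
hospital_a, hospital_b = wald_interval(6, 100), wald_interval(4, 100)
region_a, region_b = wald_interval(600, 10_000), wald_interval(400, 10_000)

print("hospitals distinguishable:", not intervals_overlap(hospital_a, hospital_b))
print("regions distinguishable:  ", not intervals_overlap(region_a, region_b))
```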

Comparisons of risk-adjusted quality outcomes, when applied for purposes of accountability, work best when they are narrowly focused (e.g., on a single clinical entity); when the underlying patient factors that affect outcomes are well understood; and when those performing the comparisons can access accurate, complete, standardized patient data at a high level of clinical detail. For example, several measurement systems for risk-adjusted mortality outcomes for open heart surgery can account for more than 60 percent of all observed variation in those outcomes (Hannan et al., 1998; O’Connor et al., 1998).

Most health care settings lack the necessary data to support accurate risk adjustment and ranking of providers. Standardized clinical data are not captured as part of the care delivery process. The HCFA mortality reports were produced from Medicare claims data, which lack important clinical detail. Accuracy is also a problem in claims data (Green and Wintfeld, 1993).

On the other hand, learning systems exhibit a high tolerance for imperfect data and an ability to use such data productively. When used for process improvement, risk adjustment removes variation arising from patient factors that are beyond the care delivery system’s control and makes the effects of process changes more clearly identifiable (i.e., it improves the signal-to-noise ratio). That aim is quite different from the accountability aim of improving predictive value. For example, a risk adjustment model that accounts for only 25 percent of outcome variability (by modeling out the contribution of known cofactors) can significantly improve a team’s ability to see structure in the data or determine more accurately whether a process change has improved outcomes. The same risk adjustment likely would not improve comparative outcome data to the point where they could reliably rank care providers for accountability.

The Cycle of Fear

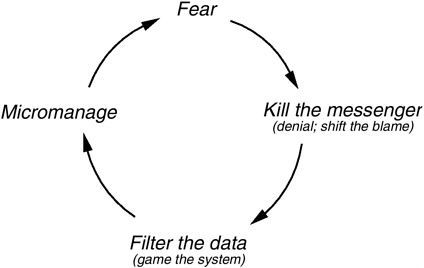

All efforts to improve safety and quality through the use of performance data run the risk of instilling fear and provoking defensive behavior on the part of providers. Scherkenbach outlines three sequential reactions in that response, labeling them the “cycle of fear” (see Figure 8-5) (Scherkenbach, 1991, p. 98).

Knowledge of predictable human responses to accountability data is a key factor in the design of an effective patient safety reporting system. It is also an important consideration in deciding how much emphasis to place on accountability versus learning applications because the former applications run a much higher risk of instilling fear than do the latter.

Reaction 1: Kill the Messenger

Accountability data inherently focus on individual health professionals or care delivery organizations. Upon being flagged as an outlier, most humans react defensively. They perceive a negative evaluation as a direct attack. In response, they raise defensive barriers that make positive communications difficult.

Under the philosophy that the best defense is a good offense, they often counterattack (shift the blame). They challenge the measurement system, analytic methods, and accuracy of the evaluation. They question the competence and motives of those conducting the assessment. Most important, they try to block access to data that could contribute to similar criticism in the future. For example, Berwick and Wald conducted a survey of hospital leaders’ reactions to the release of the HCFA mortality data in 1987. They found that all hospitals, regardless of mortality rate, shared an extremely negative view of the accuracy, usefulness, and interpretability of the data (Berwick and Wald, 1990).

FIGURE 8-5 Scherkenbach’s cycle of fear.

SOURCE: Scherkenbach, 1991.

Reaction 2: Filter the Data (Game the System)

Many of the data used in outcome analyses are generated by the individuals and institutions who are the focus of the evaluation. In such circumstances, even among conscientious, honest observers, the sentinel effect can significantly alter the data that are recorded and affect comparative outcome rankings. Some such data manipulation may be neither unconscious nor honest: when confronted by outcome measurement for accountability, it is often far easier to look good by gaming the data system than to be good by managing and improving clinical processes.

Anecdotal accounts of “filtering the data” are common in health care. For example, a hospital in the western United States was found to be a high mortality outlier for acute myocardial infarction (AMI) on an early HCFA mortality report. Upon internal review, the hospital discovered that almost all AMI patients were being coded as admissions from their community-based physician’s office, even though many had come through the hospital’s emergency department. Upon realizing that source of admission was an important element in the HCFA risk adjustment model, the hospital began coding all AMI patients as having entered the hospital through the emergency department. By the following year, the hospital had gone from being a high mortality outlier to being a low mortality outlier on the HCFA AMI mortality report without introducing any change in clinical care (James, 1988).
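The mechanism can be sketched with a simple observed-to-expected (O/E) mortality ratio, an assumption standing in for HCFA’s more elaborate risk model. The per-patient expected risks by admission source are hypothetical; the point is only that recoding an input to the risk model raises expected deaths, and therefore lowers the ratio, while observed deaths stay exactly the same.

```python
# Hypothetical expected mortality for an AMI patient, by recorded admission source.
EXPECTED_RISK = {"physician_office": 0.08, "emergency_department": 0.14}


def observed_to_expected(observed_deaths: int, admission_sources: list[str]) -> float:
    """O/E ratio; values above 1 look worse than the risk model predicts."""
    expected_deaths = sum(EXPECTED_RISK[source] for source in admission_sources)
    return observed_deaths / expected_deaths


patients = 100
deaths = 12  # identical clinical care and identical outcomes in both years

year_1 = observed_to_expected(deaths, ["physician_office"] * patients)
year_2 = observed_to_expected(deaths, ["emergency_department"] * patients)

print(f"year 1 O/E: {year_1:.2f}")  # patients coded as office admissions
print(f"year 2 O/E: {year_2:.2f}")  # same patients recoded as ED admissions
```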

The extent of systematic data manipulation in health care is not known. However, in one study 39 percent of physicians reported falsifying insurance records to obtain payment for care they believed was necessary even though it was not covered by the patient’s policy (Wynia et al., 2000). There is also evidence that voluntary injury detection systems underreport events, although this may be attributable in part to the burden of reporting (Evans et al., 1998).

Industries outside of health care have repeatedly demonstrated that a safe reporting environment is critical to robust failure detection and that robust failure detection is essential to the design of safe systems that significantly reduce failure rates (Institute of Medicine, 2000). Such experience suggests that, whenever possible, accountability for patient safety should be focused at the level of the organization rather than at the level of the individual health professionals working within it. Unfortunately, most health care is delivered outside hospitals and nursing homes by clinicians in small practice settings who lack any strong tie to an organization.

Reaction 3: Micromanage

Most health care delivery consists of complex processes and systems involving many interacting factors whose combined effects are usually summarized by performance metrics. As a result, such summary measures will exhibit a component of apparently random variation (Berwick, 1991). The use of data for accountability can lead health professionals to track and respond to minute, random fluctuations in their process data, whereas careful analysis and redesign of work processes are what is needed to improve performance. Indeed, micromanagement can lead to worse outcomes, prompting overseers to demand more rigorous inspection and oversight, which is likely to trigger another iteration of the cycle of fear.
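Berwick (1991) draws on Walter Shewhart’s distinction between common-cause (random) and special-cause variation. A Shewhart control chart is the standard tool for making that distinction, and a p-chart for an event proportion can be sketched in a few lines of Python. The monthly counts below are hypothetical, and the sketch applies only the basic 3-sigma rule; practical control-chart use usually adds further run rules.

```python
import math


def p_chart_limits(events, totals):
    """3-sigma Shewhart p-chart limits for each period.

    events[i] is the adverse event count in period i; totals[i] is the
    number of patients (or doses, procedures) at risk in that period.
    """
    p_bar = sum(events) / sum(totals)  # pooled centre line
    limits = []
    for n in totals:
        sigma = math.sqrt(p_bar * (1 - p_bar) / n)
        limits.append((max(0.0, p_bar - 3 * sigma), min(1.0, p_bar + 3 * sigma)))
    return limits


def special_cause_periods(events, totals):
    """Indices of periods whose event rate falls outside the control limits."""
    flagged = []
    for i, ((lower, upper), e, n) in enumerate(
        zip(p_chart_limits(events, totals), events, totals)
    ):
        if not lower <= e / n <= upper:
            flagged.append(i)
    return flagged


# Hypothetical monthly adverse event counts, 500 patients at risk each month.
events = [4, 6, 3, 5, 7, 4, 5, 18]
totals = [500] * len(events)
print(special_cause_periods(events, totals))
```

In this assumed series, the month-to-month swings between 3 and 7 events stay within the control limits and would not warrant individual investigation, whereas the final month signals a special cause worth examining.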

The Concept of Preventability

The most effective patient safety strategies rest upon a broad definition of preventable adverse events. As defined in this report, an adverse event is any unintended harm to a patient caused by medical management rather than by the underlying disease or condition of the patient. Some adverse events are unavoidable. Patients and their caregivers are sometimes forced to knowingly accept adverse secondary consequences to achieve a more important primary treatment goal. The concept of preventability separates care delivery errors from such recognized but unavoidable treatment consequences.

Providers seeking to improve safety generally focus on preventable adverse events. Health professionals, who often associate the term “error” with professional neglect or incompetence, or fear that others will do so, may seek to define preventability very narrowly, greatly limiting the scope and impact of patient safety improvement.

For example, a hospital team developed a data-based clinical trigger tool to identify adverse drug events (ADEs), increasing the ADE detection rate by almost two orders of magnitude. The team then analyzed and prioritized causes for the ADEs detected. The single largest category, accounting for 28 percent of events, was allergic and idiosyncratic drug reactions among patients with no previous history of reaction. Thinking at the level of individual health professionals, all members of the team initially agreed that such injuries were outside clinicians’ control and accountability and that the topic thus was not worthy of further exploration. However, the team ultimately took a systems approach, noting that there is often a range of medications available to address a particular clinical need and that those medications may pose different risks for allergic reaction. The team created computerized alerts that recommended a safer alternative if a physician ordered a medication with a high allergy risk. In addition, the team implemented immediate review of a developing ADE by a pharmacist, under the theory that early intervention might abort the event before it progressed to more serious levels. When implemented, the team’s intervention reduced serious allergic and idiosyncratic drug reactions within the hospital by more than 50 percent (Evans et al., 1994; Pestotnik et al., 1996).
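The two computerized elements of this intervention, a trigger screen that flags a possibly developing ADE for pharmacist review and an ordering alert that proposes a lower-risk alternative, can be sketched as simple rules. This is a hypothetical illustration, not the team’s system: the drug names are placeholders, and the trigger list and allergy-risk table stand in for the clinically curated knowledge bases a real implementation would require.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical configuration: placeholder drug names, invented trigger list,
# and invented allergy-risk tiers, for illustration only.
TRIGGER_ORDERS = {"antidote_X", "antihistamine_Y"}  # orders that may signal a reaction
ALTERNATIVES = {
    # ordered drug -> (allergy-risk tier, lower-risk alternative for the same need)
    "drug_A": ("high", "drug_B"),
    "drug_C": ("high", "drug_D"),
}


@dataclass
class Order:
    patient_id: str
    drug: str


def screen_for_triggers(orders):
    """Patients with an order that may signal a developing ADE.

    In the workflow described above, these would go to a pharmacist for
    immediate review.
    """
    return {o.patient_id for o in orders if o.drug in TRIGGER_ORDERS}


def allergy_alert(order: Order) -> Optional[str]:
    """Alert text proposing a safer alternative, or None if no alert applies."""
    tier, alternative = ALTERNATIVES.get(order.drug, ("low", None))
    if tier == "high" and alternative is not None:
        return f"{order.drug} carries a high allergy risk; consider {alternative}."
    return None


orders = [Order("p1", "drug_A"), Order("p2", "antidote_X"), Order("p3", "drug_B")]
print(screen_for_triggers(orders))
print(allergy_alert(orders[0]))
```

Keeping the rules in data tables rather than in code is one way to let clinical staff revise triggers and alternatives as the team learns which events the screen misses.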

Another example of a problem previously thought to be largely unpreventable (once sterile precautions had been standardized) is bloodstream infection associated with central venous lines. While they have important advantages (e.g., the ability to administer large volumes of fluid), short-term vascular catheters are associated with serious complications, particularly infections. Central venous catheters impregnated with rifampin and minocycline have been shown to reduce the incidence of catheter-related bloodstream infection (Darouiche et al., 1999).

It is also important to recognize that current beliefs concerning preventability may be quite limited. Many events presently judged to be unpreventable may prove preventable with careful investigation and creative thought. Finally, even if a class of injuries is not preventable today, a broad focus can generate and prioritize a research agenda that improves patient safety over time.

Patient safety data systems should cast a wide net, focusing on all types of adverse events, not just those that are preventable based on current understanding and current systems of care delivery. Achieving this broad focus will require careful use of the term “error,” with clear recognition of its linkage to system-level solutions and attention to its pejorative connotations for health professionals. Using the term “injuries” may even be preferable and might make it possible to avoid the type of negative behavior described by the cycle of fear.

Implications for Patient Safety Data Systems

Patient safety data systems must be able to support the full range of applications, from accountability to learning. If they are to do so, they must be carefully designed to capture all relevant data and comply with national data standards. It will also be important to establish an external auditing process to certify the integrity of patient safety data reported externally for purposes of regulation, public reporting, or payment.

Data System Design

Efficient patient safety data systems that can span a continuum of uses will require careful design. Accountability measures usually report high-order, summary data (James, 1994a, b, 2003). Process management and improvement, on the other hand, require detailed decision-level data (Berwick et al., 2003).

Patient safety data systems should be designed to capture, as part of the patient care process, the data needed for learning applications. While data systems designed for learning can supply accountability data, the opposite is not true; summary data collected for accountability usually lack sufficient detail for learning-based uses (James, 2003). In the absence of careful planning, a health care delivery organization with limited resources may find that all of its measurement resources are consumed by special data collection to comply with external reporting requirements, with none remaining for learning and system redesign (Casalino, 1999). By contrast, a carefully designed data system that captures detailed decision-level data for improvement will be able to comply with external reporting requirements through a concept known as “data reuse” (see Chapter 2).
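The asymmetry behind “data reuse” can be shown with a small Python sketch. The record fields, unit names, and the choice of harmful events per 1,000 patient-days as the summary measure are assumptions for illustration. The same decision-level records yield both the single summary rate an accountability report might request and the breakdown by care stage and drug class that process improvement needs; the summary number alone cannot be turned back into the breakdown.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class MedicationEvent:
    """One decision-level record captured during care (hypothetical schema)."""
    unit: str
    drug_class: str
    stage: str  # e.g. "ordering", "dispensing", "administration"
    harmed: bool


def accountability_summary(events, patient_days):
    """Reuse detailed records to produce one summary rate:
    harmful events per 1,000 patient-days."""
    harmful = sum(1 for e in events if e.harmed)
    return 1000 * harmful / patient_days


def learning_breakdown(events):
    """The same records, kept at decision level, show where harm arises."""
    return Counter((e.stage, e.drug_class) for e in events if e.harmed)


events = [
    MedicationEvent("ICU", "anticoagulant", "ordering", True),
    MedicationEvent("ICU", "anticoagulant", "administration", True),
    MedicationEvent("Ward 3", "opioid", "ordering", True),
    MedicationEvent("Ward 3", "opioid", "dispensing", False),
]
print(accountability_summary(events, patient_days=2000))
print(learning_breakdown(events))
```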

Learning depends on profound knowledge of key work processes. Process and outcome measures provide insight into what fails, how often and how it fails, what works, how it works, and how to make it work better. Data systems designed for learning can integrate data collection directly into work processes. Integrated data collection is usually more timely, accurate, and efficient. More important, properly designed learning data are immediately useful to front-line workers for process management, so that the burden of data collection is less likely to be perceived as an unfunded mandate (Langley et al., 1996).

Standardized Data

Patient safety data systems should adhere to national data standards. Many external applications of patient safety data, including accountability, the provision of incentives, and priority setting, make use of comparative data. Standardized data definitions are necessary to make such comparisons. Comparative data also serve learning purposes when used to identify “best in class” providers.
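Comparison across providers works only once local categories have been translated into shared ones. The sketch below maps two hospitals’ local event codes onto a common category set and expresses each category as a rate per 1,000 patient-days. All codes, categories, and volumes are hypothetical and do not come from any real terminology standard; unmapped codes are kept under an explicit “unmapped” label so that gaps in the mapping stay visible.

```python
# Hypothetical local codes at two hospitals mapped onto one shared category
# set. Neither the codes nor the categories come from a real standard.
LOCAL_TO_STANDARD = {
    "hospital_1": {"FALL-BED": "fall", "FALL-AMB": "fall", "MED-WRONG-DOSE": "medication_error"},
    "hospital_2": {"F01": "fall", "RX7": "medication_error"},
}


def standardized_rates(hospital, local_counts, patient_days):
    """Translate local codes to shared categories; return events per 1,000 patient-days."""
    mapping = LOCAL_TO_STANDARD[hospital]
    totals = {}
    for code, count in local_counts.items():
        category = mapping.get(code, "unmapped")  # surface gaps instead of dropping them
        totals[category] = totals.get(category, 0) + count
    return {category: 1000 * n / patient_days for category, n in totals.items()}


h1 = standardized_rates("hospital_1", {"FALL-BED": 6, "FALL-AMB": 4, "MED-WRONG-DOSE": 5}, 5000)
h2 = standardized_rates("hospital_2", {"F01": 9, "RX7": 3}, 3000)
print(h1)
print(h2)
```

With the shared categories in place, the two hospitals’ fall rates (2.0 and 3.0 per 1,000 patient-days in this made-up example) can be compared directly, which their raw local codes did not allow.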

Standardized data are important as well for the identification of infrequently occurring safety issues. Many patient injuries occur at such low rates that any single organization will not be able to generate sufficient data within a reasonable length of time for organizational learning to take place. Data rates will be too low to permit recognizing patterns, testing possible solutions, and developing effective preventive actions. Without data standards, moreover, it is difficult for different care delivery organizations to share injury reports, compare results, benchmark processes, and learn from one another’s experience.
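A short Poisson calculation illustrates why pooling matters for rare events. The figures are assumptions chosen for illustration: an injury occurring once per 10,000 admissions, a hospital with 5,000 admissions per year, and a network of 50 such hospitals reporting to standardized definitions.

```python
import math


def expected_events(rate_per_10000, admissions_per_year, hospitals=1, years=1):
    """Expected count of an event occurring rate_per_10000 times per 10,000 admissions."""
    return rate_per_10000 * admissions_per_year * hospitals * years / 10000


def prob_at_least(k, expected):
    """P(X >= k) for a Poisson-distributed count with mean `expected`."""
    below_k = sum(math.exp(-expected) * expected**i / math.factorial(i) for i in range(k))
    return 1 - below_k


single = expected_events(1, 5000)                # one hospital, one year
pooled = expected_events(1, 5000, hospitals=50)  # 50 hospitals, one year
print(single, pooled)
print(prob_at_least(5, single), prob_at_least(5, pooled))
```

Under these assumptions, a single hospital expects one such event every two years and has well under a 1 in 1,000 chance of seeing five in a year, while the pooled network expects about 25 a year, enough to begin looking for patterns.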

Patient Safety Data Audits

When patient safety data are used for external performance reporting, it is important that the data be audited. When performance data are used for licensure or payment purposes or to support purchaser or consumer decisions, providers have an incentive to “look their best.” Audits are necessary to assure all stakeholders that the reporting system is fair. They also provide useful feedback to providers on ways to redesign and strengthen their patient safety data systems.

A data audit conducted by an independent reviewer is intended to ascertain that (1) the data sources used by a health care organization to identify individual adverse events or derive aggregate performance measures (e.g., mammography rate) are complete and accurate, and (2) aggregate measures have been properly calculated (e.g., the denominator includes only women over the age of 50). As discussed further in Chapter 4, an audit process for adverse event reporting may involve review of a provider’s processes for case finding (e.g., individual reports, automated triggers), evaluation (i.e., conduct of root-cause analyses), and classification.
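One element of a data audit, independently recomputing a reported aggregate measure from source records, can be sketched as follows. The eligibility rule mirrors the illustrative example in the text (women over the age of 50); an actual measure specification would also define age bands, enrollment requirements, and look-back periods. The record layout and the tolerance of one percentage point are assumptions.

```python
from dataclasses import dataclass


@dataclass
class PatientRecord:
    patient_id: str
    sex: str
    age: int
    had_mammogram: bool


def recompute_mammography_rate(records):
    """Recompute numerator / denominator from source records (illustrative rule)."""
    denominator = [r for r in records if r.sex == "F" and r.age > 50]
    if not denominator:
        raise ValueError("no eligible patients in denominator")
    numerator = [r for r in denominator if r.had_mammogram]
    return len(numerator) / len(denominator)


def audit_reported_rate(records, reported_rate, tolerance=0.01):
    """Return (passes, recomputed_rate); fails if off by more than tolerance."""
    recomputed = recompute_mammography_rate(records)
    return abs(recomputed - reported_rate) <= tolerance, recomputed


records = [
    PatientRecord("a", "F", 55, True),
    PatientRecord("b", "F", 62, True),
    PatientRecord("c", "F", 71, False),
    PatientRecord("d", "F", 58, True),
    PatientRecord("e", "F", 45, True),   # under 50: must be excluded
    PatientRecord("f", "M", 60, False),  # not eligible for this measure
]
print(audit_reported_rate(records, reported_rate=0.80))
```

In this hypothetical case, the reported 80 percent rate is reproduced only if the 45-year-old patient is wrongly counted in both numerator and denominator; the independent recomputation yields 75 percent and the check fails.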

A data audit is different from a patient safety program audit. The primary aim of a data audit is to provide assurance that the numbers reported are reasonably complete, accurate, and reproducible and thus useful for shared analysis and comparison. By design, such an audit does not address how a health care organization responds to an injury or how it assigns accountability for safety performance. A program audit is much broader than a data audit and focuses on organizational elements that influence safety (e.g., whether a culture of safety exists; whether adequate attention is paid to safety issues at the level of the governing board and managerial and clinical leadership; whether adequate decision supports, such as drug–drug interaction alerts, are available to assist clinicians). The critical elements of an effective patient safety program are discussed in Chapter 9.

In light of the increased emphasis on the public reporting of performance data, it is particularly important that such data be valid and reliable. The assurance provided by an external audit is an essential element of transparency and a potent antidote to misrepresentation, cheating, and corruption. With sufficient reliable, complete, and understandable information, patients are better prepared to participate in health care decisions, and accountability becomes an inherent feature of the care delivery system. Over the long term, the role of health care oversight may narrow, focusing to a great extent on the integrity of the data system used to generate public reports.

Auditing is used extensively outside the health care sector. For example, the American financial markets are driven to a great extent by the information contained in standardized financial statements released by publicly traded companies. The Securities and Exchange Commission oversees (1) the establishment of accounting standards (i.e., generally accepted accounting principles) by the Financial Accounting Standards Board, (2) the establishment of explicit standards describing how an acceptable audit will be conducted (Generally Accepted Auditing Standards) by the Auditing Standards Board, and (3) the conduct of audits by certified auditors (certified public accountants) (Financial Accounting Standards Board, 2003).

Experience with auditing is far more limited in the health care sector. The National Committee for Quality Assurance (NCQA), a private-sector accrediting and performance reporting organization, oversees an auditing process to assure the integrity of aggregate-level performance data reported by about 460 health plans on 60 performance measures in the Health Plan Employer Data and Information Set (National Committee for Quality Assurance, 2003). The performance measurement data are used to produce comparative performance reports for health care purchasers, employers, and consumers. The data are also required as part of NCQA’s health plan accreditation program. To ensure the integrity of the data reported by health plans, NCQA has auditing criteria (National Committee for Quality Assurance, 2001) that include a program to license audit organizations and certify auditors. There are currently 11 licensed organizations and 71 certified auditors. The auditing criteria address such areas as data completeness, data integrity, thoroughness of system processes, and accuracy of medical record review.

In light of the many types of patient safety applications involving many different users, the health care sector would benefit from national data audit criteria for assessing the integrity of patient safety data systems used to generate reports in support of the full continuum of patient safety applications.

AHRQ, in collaboration with private-sector entities, should assume a lead role in the development of national audit criteria for patient safety data.

THE NEED TO INVEST MORE RESOURCES IN LEARNING APPROACHES

The committee believes that the health system has underinvested by a large margin in learning approaches. The American health care system will continue to lack sufficient capacity to deliver excellent care to all patients without fundamental change in the overall level of performance of the system as a whole.

It is imperative that all health care providers develop comprehensive patient safety systems that promote learning. Learning systems relentlessly redesign care processes in pursuit of “best in class.” They attempt to change the shape of the performance distribution by improving all parts of the process, regardless of initial standing (Berwick et al., 2003). And learning approaches are far less susceptible to barriers arising from the cycle of fear. Chapter 5 provides a discussion of comprehensive patient safety systems in health care organizations.
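The difference between trimming poor performers and shifting the whole distribution can be made concrete with hypothetical numbers. In the sketch below, ten providers have assumed complication rates; one scenario brings only providers above a 5 percent cap down to the cap, while the other improves every provider by the same 20 percent relative amount. Both the rates and the size of each improvement are assumptions, so the comparison illustrates the argument rather than measuring it.

```python
from statistics import mean

# Hypothetical complication rates (percent) for ten providers.
rates = [2.0, 2.5, 3.0, 3.0, 3.5, 3.5, 4.0, 4.5, 6.0, 8.0]


def trim_outliers(values, cap):
    """Only providers above `cap` improve, and only down to the cap."""
    return [min(v, cap) for v in values]


def shift_curve(values, relative_improvement):
    """Every provider improves by the same relative amount."""
    return [v * (1 - relative_improvement) for v in values]


print(round(mean(rates), 2))
print(round(mean(trim_outliers(rates, cap=5.0)), 2))
print(round(mean(shift_curve(rates, relative_improvement=0.20)), 2))
```

With these figures, capping the two outliers lowers the mean rate from 4.0 to 3.6 percent, while a modest across-the-board improvement lowers it to 3.2 percent.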

REFERENCES

Agency for Healthcare Research and Quality. 2001. Annual Report of the National CAHPS Benchmarking Database 2000: What Consumers Say About the Quality of Their Health Plans and Medical Care. Rockville, MD: Westat.

———. 2002. National Healthcare Quality Report. Online. Available: http://www.ahcpr.gov/qual/nhqrfact.htm [accessed August 18, 2003].

American Productivity and Quality Center. 2003. Benchmarking. Online. Available: http://www.apqc.org/portal/apqc/site/generic2?path=/site/benchmarking/free_resources.jhtml [accessed August 18, 2003].

Andersson, J., K. Carling, and S. Mattson. 1998. Random ranking of hospitals is unsound. Chance 11 (3):34–37, 39.

Arrow, K. J. 1963. Uncertainty and the welfare economics of medical care. Am Econ Rev 53 (5):941–973.

Bailit Health Purchasing. 2002a. Ensuring Quality Health Plans: A Purchaser’s Toolkit for Using Incentives. Washington, DC: National Health Care Purchasing Institute.

———. 2002b. Provider Incentive Models for Improving Quality of Care. Washington, DC: National Health Care Purchasing Institute.

Baumgarten, A. 2002. California Managed Care Review 2002. Oakland, CA: California HealthCare Foundation.

Bernard, S. L., L. A. Savitz, and E. R. Brody. 2003. Validating the AHRQ Quality Indicators: Final Report. Rockville, MD: Agency for Healthcare Research and Quality.

Berwick, D. M. 1989. Continuous improvement as an ideal in health care. N Engl J Med 320 (1):53–56.

———. 1991. Controlling variation in health care: A consultation from Walter Shewhart. Med Care 29 (12):1212–1225.

Berwick, D. M., and D. L. Wald. 1990. Hospital leaders’ opinions of the HCFA mortality data. JAMA 263 (2):247–249.

Berwick, D. M., B. James, and M. J. Coye. 2003. Connections between quality measurement and improvement. Med Care 41 (1 Supplement):I30–I38.

Blumberg, M. S., and G. S. Binns. 1989. Risk-Adjusted 30-Day Mortality of Fresh Acute Myocardial Infarctions: The Technical Report. Chicago, IL: Hospital Research and Educational Trust (American Hospital Association).

California HealthCare Foundation. 2003. New Web Site Helps Californians Choose a Quality Nursing Home. Online. Available: http://www.chcf.org/print.cfm?itemID=20148 [accessed August 19, 2003].

Casalino, L. P. 1999. The unintended consequences of measuring quality on the quality of medical care. N Engl J Med 341 (15):1147–1150.

Centers for Medicare and Medicaid Services. 2003. Dialysis Facility Compare. Online. Available: http://www.medicare.gov/Dialysis/Home.asp [accessed August 19, 2003].

Chassin, M. R. 1998. Is health care ready for six sigma quality? Milbank Q 76 (4):565–591.

———. 2002. Achieving and sustaining improved quality: Lessons from New York State and cardiac surgery. Health Aff (Millwood) 21 (4):40–51.

Chassin, M. R., E. L. Hannan, and B. A. DeBuono. 1996. Benefits and hazards of reporting medical outcomes publicly. N Engl J Med 334 (6):394–398.

Cohen, M. M., P. G. Duncan, W. D. Pope, and C. Wolkenstein. 1986. A survey of 112,000 anaesthetics at one teaching hospital (1975–1983). Can Anaesth Soc J 33:22–31.

Cooper, J. B., R. S. Newbower, C. D. Long, and B. McPeek. 2002. Preventable anesthesia mishaps: A study of human factors. Qual Saf Health Care 11(3):277–282. [Reprint of a paper that appeared in Anesthesiology, 1978, 49:399–406.]

Darouiche, R. O., I. I. Raad, S. O. Heard, J. I. Thornby, O. C. Wenker, A. Gabrielli, J. Berg, N. Khardori, H. Hanna, R. Hachem, R. L. Harris, and G. Mayhall. 1999. A comparison of two antimicrobial-impregnated central venous catheters: Catheter study group. N Engl J Med 340 (1):1–8.

Deming, W. E. 1988. System definition. Personal communication to Brent James.

Department of Health and Human Services. 2002a. HHS Launches National Nursing Home Quality Initiative: Broad Effort to Improve Quality in Nursing Homes Across the Country. Online. Available: http://www.dhhs.gov/news/press/2002pres/20021112.html [accessed August 19, 2003].

———. 2002b. Secretary Thompson Welcomes New Effort to Provide Hospital Quality of Care Information. Online. Available: http://www.dhhs.gov/news/press/2002pres/20021212.html [accessed August 19, 2002].

Dudley, R. A., D. Rittenhouse, and R. Bae. 2002. Creating a Statewide Hospital Quality Reporting System: The Quality Initiative. San Francisco, CA: Institute for Health Policy Studies.

Duncan, P. G., and M. M. Cohen. 1987. Postoperative complications: Factors of significance to anaesthetic practice. Can J Anaesth 34:2–8.

Eddy, D. 2002. Summarizing Analyses Performed on Behalf of the National Committee for Quality Assurance, While Evaluating Infant Mortality Rates as a Possible Measurement Set. Personal communication to Institute of Medicine’s Committee on Data Standards for Patient Safety.

Evans, R. S., S. L. Pestotnik, D. C. Classen, T. P. Clemmer, L. K. Weaver, J. F. Orme Jr., J. F. Lloyd, and J. P. Burke. 1998. A computer-assisted management program for antibiotics and other antiinfective agents. N Engl J Med 338 (4):232–238.

Evans, R. S., S. L. Pestotnik, D. C. Classen, S. D. Horne, S. B. Bass, and J. P. Burke. 1994. Preventing adverse drug events in hospitalized patients. Ann Pharmacother 28 (4):523–527.

Financial Accounting Standards Board. 2003. FASB Facts. Online. Available: http://www.fasb.org/facts/ [accessed August 4, 2003].

Freudenheim, M. 2002, June 26. Quality goals in incentives for hospitals. New York Times. Late Edition-Final, Section C, Page 1, Col. 5.

Gaba, D. M. 1989. Human error in anesthetic mishaps. Int Anesthesiol Clin 27 (3):137–147.

Gabel, J. R., K. A. Hunt, and K. Hurst. 1998. When employers choose health plans do NCQA accreditation and HEDIS data count? Health Care Quality. Online. Available: http://www.cmwf.org/programs/health_care/gabel_ncqa_hedis_293.asp [accessed December 15, 2003].

Green, J., and N. Wintfeld. 1993. How accurate are hospital discharge data for evaluating effectiveness of care? Med Care 31 (8):719–731.

Green, J., L. J. Passman, and N. Wintfeld. 1991. Analyzing hospital mortality: The consequences of diversity in patient mix. JAMA 265 (14):1849–1853.

Green, J., N. Wintfeld, M. Krasner, and C. Wells. 1997. In search of America’s best hospitals: The promise and reality of quality assessment. JAMA 277 (14):1152–1155.

Green, J., N. Wintfeld, P. Sharkey, and L. J. Passman. 1990. The importance of severity of illness in assessing hospital mortality. JAMA 263 (2):241–246.

Greenfield, S., H. U. Aronow, R. M. Elashoff, and D. Watanabe. 1988. Flaws in mortality data: The hazards of ignoring comorbid disease. JAMA 260 (15):2253–2255.

Haas-Wilson, D. 2001. Arrow and the information market failure in health care: The changing content and sources of health care information. J Health Polit Policy Law 26:Part 3.

Hannan, E. L., A. J. Popp, B. Tranmer, P. Fuestel, J. Waldman, and D. Shah. 1998. Relationship between provider volume and mortality for carotid endarterectomies in New York State. Stroke 29:2292–2297.

Health Care Financing Administration. 1987. Medicare Hospital Mortality Information, 1986. HCFA Pub. No. 01-002. Washington, DC: U.S. Government Printing Office.

Hibbard, J. H. 1998. Use of outcome data by purchasers and consumers: New strategies and new dilemmas. Int J Quality Health Care 10 (6):503–508.

———. 2003. Engaging health care consumers to improve the quality of care. Med Care 41 (1 Supplement):I61–I70.

Hibbard, J. H., E. Peters, P. Slovic, M. L. Finucane, and M. Tusler. 2001. Making health care quality reports easier to use. Jt Comm J Qual Improv 27 (11):591–604.

Hixson, J. S. 1989. Efficacy of Statistical Outlier Analysis for Monitoring Quality of Care. Chicago, IL: American Medical Association Center for Health Policy Research.

Institute of Medicine. 2000. To Err Is Human: Building a Safer Health System. Washington, DC: National Academy Press.

———. 2001. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academy Press.

———. 2003. Priority Areas for National Action: Transforming Health Care Quality. Washington, DC: The National Academies Press.

James, B. C. 1988. IHC Health Services. Personal communication to Institute of Medicine’s Committee on Data Standards for Patient Safety.

———. 1992. Good enough? Standards and measurement in continuous quality improvement. In: Bridging the Gap Between Theory and Practice. Chicago, IL: Hospital Research and Educational Trust (American Hospital Association). Pp. 1–24.

———. 1994a. Outcomes measurement. In: Bridging the Gap Between Theory and Practice: Exploring Outcomes Management. Chicago, IL: Hospital Research and Educational Trust (American Hospital Association). Pp. 1–37.

———. 1994b. Breaks in the outcomes measurement chain. Hosp Health Netw 68 (14):60.

———. 2003. Information system concepts for quality measurement. Med Care 41 (1 Supplement):I71–I79.

Joint Commission on Accreditation of Healthcare Organizations. 1998. Sentinel events: Approaches to error reduction and prevention. Jt Comm J Qual Improv 24 (4):175–186.

Jollis, J. G., and P. S. Romano. 1998. Pennsylvania’s focus on heart attack: Grading the scorecard. N Engl J Med 338 (14):983–987.

Kaye, N. 2001. Medicaid Managed Care: A Guide for the States. Portland, ME: National Academy for State Health Policy.

Kaye, N., and M. Bailit. 1999. Innovations in Payment Strategies to Improve Plan Performance. Portland, ME: National Academy for State Health Policy.

Krakauer, H., R. C. Bailey, K. J. Skellan, J. D. Stewart, A. J. Hartz, E. M. Kuhn, and A. A. Rimm. 1992. Evaluation of the HCFA model for the analysis of mortality following hospitalization. Health Serv Res 27 (3):317–335.

Krumholz, H. M., S. S. Rathore, J. Chen, Y. Wang, and M. J. Radford. 2002. Evaluation of a consumer-oriented Internet health care report card: The risk of quality ratings based on mortality data. JAMA 287 (10):1277–1287.

Langley, G. J., K. M. Nolan, C. L. Norman, L. P. Provost, and T. W. Nolan. 1996. The Improvement Guide: A Practical Approach to Enhancing Organizational Performance. San Francisco, CA: Jossey-Bass.

Marshall, M. N., P. G. Shekelle, S. Leatherman, and R. H. Brook. 2000. The public release of performance data: What do we expect to gain? A review of the evidence. JAMA 283 (14):1866–1874.

McCormick, D., D. U. Himmelstein, S. Woolhandler, S. M. Wolfe, and D. H. Bor. 2002. Relationship between low quality-of-care scores and HMOs’ subsequent public disclosure of quality-of-care scores. JAMA 288 (12):1484–1490.

McGlynn, E., and J. Adams. 2001. Public release of information on quality. In: R. Anderson, T. Rice, and G. Kominski, eds. Changing the U.S. Health Care System: Key Issues in Health Services Policy and Management, 2nd Edition. San Francisco, CA: Jossey-Bass, Inc. Pp. 183–202.

McGlynn, E. A., S. M. Asch, J. Adams, J. Keesey, J. Hicks, A. DeCristofaro, and E. A. Kerr. 2003. The quality of health care delivered to adults in the United States. N Engl J Med 348 (26):2635–2645.

Mennemeyer, S. T., M. A. Morrisey, and L. Z. Howard. 1997. Death and reputation: How consumers acted upon HCFA mortality information. Inquiry 34 (2):117–128.

National Committee for Quality Assurance. 2001. HEDIS Compliance Audit: Volume 5. Washington, DC: National Committee for Quality Assurance.

———. 2002a. NCQA Report Cards. Online. Available: http://hprc.ncqa.org/menu.asp [accessed May 6, 2002].

———. 2002b. The State of Health Care Quality: Industry Trends and Analysis. Washington, DC: National Committee for Quality Assurance.

———. 2003. HEDIS Technical Specifications: Volume 3. Washington, DC: National Committee for Quality Assurance.

National Health Care Purchasing Institute. 2002. Rewarding Results. Online. Available: http://www.nhcpi.net/rewardingresults/index.cfm [accessed April 22, 2002].

O’Connor, G. T., J. D. Birkmeyer, L. J. Dacey, H. B. Quinton, C. A. Marrin, N. J. Birkmeyer, J. R. Morton, B. J. Leavitt, C. T. Maloney, F. Hernandez, R. A. Clough, W. C. Nugent, E. M. Olmstead, D. C. Charlesworth, and S. K. Plume. 1998. Results of a regional study of modes of death associated with coronary artery bypass grafting. Northern New England Cardiovascular Disease Study Group. Ann Thorac Surg 66 (4):1323–1328.

Pestotnik, S. L., D. C. Classen, R. S. Evans, and J. P. Burke. 1996. Implementing antibiotic practice guidelines through computer-assisted decision support: Clinical and financial outcomes. Ann Intern Med 124 (10):884–890.

Pierce, E. C. Jr. 1996. The 34th Rovenstine lecture. 40 years behind the mask: Safety revisited. Anesthesiology 84 (4):965–975.

Robinson, J. C. 2001. The end of asymmetric information. J Health Polit Policy Law 26:Part 3.

Rosen, H. M., and B. A. Green. 1987. The HCFA excess mortality lists: A methodological critique. Hosp Health Serv Adm 32 (1):119–127.

Rosenthal, J., M. Booth, L. Flowers, and T. Riley. 2001. Current State Programs Addressing Medical Errors: An Analysis of Mandatory Reporting and Other Initiatives. Portland, ME: National Academy for State Health Policy.

Salber, P., and B. Bradley. 2001. Perspective: Adding Quality to the Health Care Purchasing Equation. Online. Available: http://www.healthaffairs.org/WebExclusives/index.dtl?year=2001 [accessed February 20, 2004].

Schauffler, H. H., and J. K. Mordavsky. 2001. Consumer reports in health care: Do they make a difference? Annu Rev Public Health 22:69–89.

Scherkenbach, W. 1991. The Deming Route to Quality and Productivity: Road Maps and Road Blocks, 11th Edition. Washington, DC: CEE Press Books, George Washington University.

Schneider, E. C., and A. M. Epstein. 1996. Influence of cardiac-surgery performance reports on referral practices and access to care. N Engl J Med 335 (4):251–256.

Silber, J. H., P. R. Rosenbaum, and R. N. Ross. 1995. Comparing the contributions of groups of predictors: Which outcomes vary with hospital rather than patient characteristics? J Am Stat Assoc 90 (429):7–18.

Simon, L. P., and A. F. Monroe. 2001. California provider group report cards: What do they tell us? Am J Med Qual 16 (2):61–70.

The Henry J. Kaiser Family Foundation and Agency for Healthcare Research and Quality. 2000. National Survey on Americans as Health Care Consumers: An Update on the Role of Quality Information. Online. Available: http://www.kff.org/content/2000/3093/ [accessed August 11, 2003].

Thompson, J. W., S. D. Pinidiya, K. W. Ryan, E. D. McKinley, S. Alston, J. E. Bost, J. B. French, and P. Simpson. 2003. Health plan quality data: The importance of public reporting. Am J Prev Med 24 (1):62–70.

Trunkey, D. D., and R. Botney. 2001. Assessing competency: A tale of two professions. J Am Coll Surg 192 (3):385–395.

Vladeck, B. C. 1991. Letter to Hospital Administrators: Accompanying Fiscal Year 1991 Medicare Mortality Information. Baltimore, MD: U.S. Department of Health and Human Services, Health Care Financing Administration.

Weinstein, M. C., and H. V. Fineberg. 1980. Clinical Decision Analysis. Philadelphia, PA: W.B. Saunders Company. Pp. 86–88.

White, R. 2002, January 14. A shift to quality by health plans. Los Angeles Times. Section C-1.

Wynia, M. K., D. S. Cummins, J. B. VanGeest, and I. B. Wilson. 2000. Physician manipulation of reimbursement rules for patients: Between a rock and a hard place. JAMA 283 (14):1858–1865.