Checking for Biases in Incident Reporting

TJERK VAN DER SCHAAF and LISETTE KANSE

Department of Technology Management

Eindhoven University of Technology

Incident reporting schemes have long been part of organizational safety-management programs, especially in sectors such as civil aviation and the chemical process industry and, more recently, in rail transport and a few health care domains, such as anaesthesiology, pharmacy, and transfusion medicine. In this paper, we define incidents as all safety-related events, including accidents (events with negative outcomes, such as damage and injury), near misses (situations in which accidents could have happened had there been no timely and effective recovery), and dangerous situations.

But do reporting schemes capture a representative sample of actual events? One of the reasons incident-reporting databases might be biased is a tendency to over- or underreport certain types of events. To address the vulnerabilities of voluntary reporting schemes in terms of the quantity and quality of incident reports, guidelines have been developed for designing and implementing such schemes. Reason (1997) lists five important factors for “engineering a reporting culture”: (1) indemnity against disciplinary proceedings; (2) confidentiality or de-identification; (3) separation of the agency that collects and analyzes the reports from the regulatory authority; (4) rapid, useful, accessible, and intelligible feedback to the reporting community; and (5) the ease of making the report. Another expert, D.A. Lucas (1991), identifies four organizational factors: (1) the nature of the information collected (e.g., descriptive only, or descriptive and causal); (2) the uses of the information in the database (e.g., feedback, statistics, and error-reduction strategies); (3) analysis aids to collect and analyze data; and (4) organization of the scheme (e.g., centralized or local, mandatory or voluntary). Lucas also stresses the importance of the organization’s model of why humans make mistakes, as part of the overall safety culture.

These are just two examples of organizational design perspectives on reporting schemes. Much less is known about the individual reporter’s perspective: (1) when and why an individual is inclined to submit a formal report of a work-related incident; and (2) which aspects of an incident an individual is able and willing to report.

The starting point for the investigation described in this paper was an observation made during a reanalysis of part (n = 50 reports) of a large database of voluntarily reported incidents at a chemical process plant in the Netherlands: we encountered very few reports of self-made errors (Kanse et al., in press). This was surprising because the plant had been highly successful in establishing a reporting culture; minor damage, dangerous situations, and large numbers of near misses (i.e., initial errors and their subsequent successful recoveries) were freely reported, at an average of two reports per day from the entire plant. The plant’s 200 employees, as well as temporary contract workers, contributed to its near-miss reporting system (NMRS), which had been operational for about seven years by the time we performed the reanalysis. The NMRS was regarded as a “safe” system, with guaranteed freedom from punishment for reporting an incident. Even more puzzling, references to self-made errors were also absent from the particular subset we were analyzing: successfully recovered (initial) errors (human failures) and other failures, which were thus completely inconsequential. The question was why plant operators did not report successful recoveries from self-made errors.

To address this question, we began by reviewing the literature on the reasons individuals fail to report incidents in general and then evaluating their relevance for our study. We then generated a taxonomy of possible reasons for nonreporting. Next, we instituted a diary study in which plant operators were asked to report their recoveries from self-made errors under strictly confidential conditions, outside of the normal NMRS used at the plant. In addition to descriptions of recovery events, we asked them to indicate whether or not (and why) they would normally have reported the event. We then categorized the reasons according to our taxonomy. The results are discussed in terms of the reporting biases we identified and possible countermeasures to improve the existing reporting system.

REASONS FOR NOT REPORTING

We began our search with the PsycINFO and Ergonomics Abstracts databases to ensure that we covered both the domain of work and organizational psychology and the domain of ergonomics, human reliability, and safety. The keywords we used were “reporting system and evaluation,” “reporting barriers,” “reporting tendencies,” “reporting behavior,” “reporting biases,” “incident report,” “near miss report,” and, in PsycINFO, simply “near miss.” We included truncated forms of the keywords (e.g., “report*” for report, reporting, and reports) and alternative spellings to maximize the scope of our search. We assessed potential relevance based on the abstracts and added further references from the reference lists of the items we had selected.

Based on our search, we concluded that, even though there is a relatively large body of literature on organizational design guidelines for setting up incident reporting schemes, very little is known about why individuals decide whether or not to report an incident. We grouped the factors influencing incident reporting into four categories:

- fear of disciplinary action (as a result of a “blame culture” in which individuals who make errors are punished) or of other people’s reactions (embarrassment)
- uselessness (the perception that management would take no notice and was not likely to do anything about the problem)
- acceptance of risk (incidents are part of the job and cannot be prevented; or a “macho” perspective of “it won’t happen to me”)
- practical reasons (submitting a report is too time consuming or difficult)

Adams and Hartwell (1977) mention the blame culture (as do Webb et al., 1989) and the more practical reasons of time and effort (see also Glendon, 1991). Beale et al. (1994) conclude that the perceived attitudes of management greatly influence reporting levels (see also Lucas, 1991, and Clarke, 1998) and that certain kinds of incidents are accepted as the norm. Similarly, Powell et al. (1971) find that many incidents are considered “part of the job” and unpreventable. This last point is supported by Cox and Cox (1991), who also stress the belief in personal immunity (“accidents won’t happen to me”; see also the “macho” culture in construction found by Glendon, 1991). O’Leary (1995) discusses several factors that might influence a flight crew’s acceptance of the organization’s safety culture, and thus its willingness to contribute to a reporting program. These factors include a lack of trust in management because of industrial disputes; legal judgments that ignore performance-reducing circumstances; pressure from society to allocate blame and punish someone for mistakes; the military culture in aviation; and the fact that pilots, justifiably or not, feel responsible or even guilty for mishaps as a result of their internal locus of control combined with their high scores on self-reliance scales. Elwell (1995) suggests that human errors are underreported in aviation because flight crew members may be too embarrassed to report their mistakes or expect to be punished (see also Adams and Hartwell, 1977, and Webb et al., 1989), and because they are less likely to report an error that has not been observed by others. Smith et al. (2001) report clear differences (and thus biases in the recording system) between recorded industrial injury events and self-reported events collected via interviews and specially developed questionnaires.

A growing number of publications in the health care domain focus on the reporting of adverse events (i.e., events with observable negative outcomes). For example, Lawton and Parker (2002) studied the likelihood that health care professionals would report adverse events and found that reporting is more common where protocols exist but are not adhered to than where no protocols exist, and more common when patients have been harmed; near misses, they found, are likely to go unreported. The suggested explanations for a reluctance or unwillingness to report are the culture of medicine, the emphasis on blame, and the threat of litigation.

Probably the most comprehensive study so far, and to our knowledge the only one in which individuals were asked to indicate their reasons for not reporting, was undertaken by Sharon Clarke (1998). She asked train drivers to indicate the likelihood that they would report a standard set of 12 realistic incidents (a mix of dangerous situations, equipment failures, and other people’s errors). The drivers were offered a predefined set of six reasons for not reporting in each case: (1) one would tell a colleague directly instead of reporting the incident; (2) this type of incident is just part of the job; (3) one would want to avoid getting someone else in trouble; (4) nothing would be done about this type of incident; (5) reporting involves too much paperwork; and (6) managers would take no notice.

How could we use the results of our search to generate a set of reasons individuals might not report recoveries from self-made errors? Using the four categories of reasons reported in the literature (fear; uselessness; acceptance of risk; practical reasons) as a starting point, we discussed the question with people from three groups of employees at the chemical plant: management, safety department, and operators. Their opinions on possible reasons for not reporting are summarized below:

- The chemical plant operators, part of a high-reliability organization (HRO) or at least something close to one (for a description of the characteristics of an HRO, see Roberts and Bea, 2001), rarely gave the reasons we found in the literature, namely accepting incidents as part of their jobs, as unavoidable, or as bound to happen.
- None of the groups said plant management systematically ignored reported risks, which would make coming forward with information useless.
- Most of the employees thought operators might be afraid or ashamed to report their own initial errors that required recovery actions.
- Employees also considered it less important to report incidents indicative of well-known risks, because these risks were already widely known among their colleagues and the incidents therefore had minimal learning potential.
- Some types of incidents might not be considered appropriate to the goals of the reporting scheme.
- Another suggestion was that if operators could “take care” of the situation themselves, reporting the incident would be superfluous.
- If there were ultimately no consequences, the incident could be considered unimportant.
- Finally, lack of time (“always busy”) could be a factor, as could other practical reasons (e.g., not being fully familiar with the system).

Based on the considerations listed above, we propose the following six possible reasons for not reporting recoveries from self-made errors: (1) afraid/ashamed; (2) no lessons to be learned from the event; (3) event not appropriate for reporting; (4) a full recovery was made, so no need to report the event; (5) no remaining consequences from the event; and (6) other factors.

THE DIARY STUDY

Methodology

Following the methods used in previous studies, we used personal diaries to collect reports of everyday errors (Reason and Lucas, 1984; Reason and Mycielska, 1982; and especially Sellen, 1994). We asked all of the employees on one of the five shifts at the chemical plant to participate in a diary study for 15 working days (five afternoon shifts, five night shifts, and five morning shifts). Twenty-one of the 24 operators filled out a small form each time they realized that they had recovered from a self-made error. The form asked them to describe the self-made error(s); describe the potential consequences; state who discovered the error(s), and how and when; describe the recovery action(s) taken; and describe any remaining actual consequences. Finally, it asked, “Would you have reported such an incident to the existing Near Miss Reporting System?” (yes/no/maybe) and “Why (especially if the answer is no)?” We did not offer any preselected reasons as options for the last question, because we wanted the operators to feel free to express themselves.

Results

During the 15 days of the diary study, the 21 operators completed forms relating to 33 recoveries from self-made errors. In only three cases did they indicate that the incident would also have been reported to the existing NMRS; for five of the remaining cases, no reason(s) were given for not reporting.

Transcribed answers to the last question in the 25 remaining cases were given to two independent coders—the authors and another human-factors expert with experience in human-error analysis. One coder identified 32 reasons; the other found 34 reasons. The coders then reached a consensus on 32 identifiable reasons. The two coders independently classified each of the 32 reasons into one

TABLE 1 Examples of Coded Transcripts

|

Code Assigned |

Example from Transcript |

|

No lessons to be learned from the event |

The unclear/confusing situation is already known. |

|

Not appropriate for reporting |

System is not meant for reporting this kind of event. |

|

Full recovery made |

I made the mistake and recovery myself. |

|

No remaining consequences |

Mistake had no consequences. |

|

Other |

Not reported at the time, too busy. |

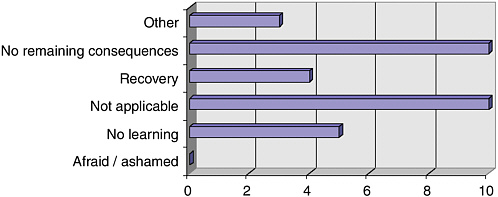

of the six categories. They agreed on 28 of the 32 reasons and easily reached consensus on reasons they had coded differently. A typical example of the statements and the resulting code are shown in Table 1. The overall results are shown in Figure 1.
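The case counts and the coders’ agreement in the Results can be checked with simple arithmetic. The Python sketch below uses only the numbers reported in the text; the function names are our own and purely illustrative. It computes simple percent agreement, which is not corrected for chance; a chance-corrected statistic such as Cohen’s kappa would require the full cross-tabulation of both coders’ classifications, which is not reported here.

```python
# Case flow and coder agreement for the diary study, using only the counts
# stated in the text. Function names are hypothetical, for illustration only.

def coded_case_count(total_forms: int, would_report: int, no_reason: int) -> int:
    """Number of not-reported cases for which a reason was given."""
    not_reported = total_forms - would_report
    return not_reported - no_reason


def percent_agreement(agreed: int, total: int) -> float:
    """Share of items both coders placed in the same category (not chance-corrected)."""
    if total <= 0:
        raise ValueError("total must be positive")
    return agreed / total


coded_cases = coded_case_count(total_forms=33, would_report=3, no_reason=5)
agreement = percent_agreement(agreed=28, total=32)

print(coded_cases)
print(f"{agreement:.1%}")
```

Run as written, the first line printed is 25 (33 forms, minus 3 that would have been reported, minus 5 without a stated reason) and the second is 87.5%.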

In addition to giving their reasons for not reporting, the operators, on average, judged the potential consequences of the diary-study incidents, had they not been recovered from, to be as serious as the consequences of incidents normally reported to the existing NMRS (Kanse et al., 2004). Potential consequences were indicated for each reported event in three categories: production/quality loss, delay, and damage; injury/health effects; and environmental effects. The severity of each type of consequence was also indicated (no, minor, considerable, or major consequences).

The remaining consequences after recovery, however, differed from those indicated for the events in the reanalyzed part of the existing NMRS database (Kanse et al., 2004). After recovery from the self-made errors reported in the diary study, a minor delay remained in three events, minor production/quality loss remained in one event, and one event involved minor repair costs. In contrast, remaining consequences occurred in a much higher percentage of the 50 NMRS events (which involved multiple, different types of failures per event): a minor delay remained in six events; there were minor health-related consequences in one event and minor environmental consequences in four events; in 14 events the hazard continued to exist for a significant time before the final correction was implemented; and 20 events involved minor repair costs. These findings suggest that a complete and successful recovery may be easier to achieve for self-made errors than for other types of failures or combinations of failures. The main differences were in repair costs and in the time during which the hazard persisted.
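A per-category comparison makes the contrast between the two samples concrete. The Python sketch below uses only the counts in the text; the category labels and function names are our own. It assumes that each diary count refers to a distinct event among the 33 diary recoveries and that any category not mentioned for a sample had zero events. Because NMRS events can carry several consequence types, the shares are per category and do not sum to a partition of events; with samples this small, the percentages are descriptive only.

```python
# Share of events with each type of remaining (post-recovery) consequence.
# Counts come from the text; zero is ASSUMED for categories not mentioned.

DIARY_N = 33  # diary recoveries from self-made errors
NMRS_N = 50   # reanalyzed NMRS events

diary = {"delay": 3, "production/quality loss": 1, "repair costs": 1,
         "health": 0, "environmental": 0, "hazard persisted": 0}
nmrs = {"delay": 6, "production/quality loss": 0, "repair costs": 20,
        "health": 1, "environmental": 4, "hazard persisted": 14}


def share(count: int, n: int) -> float:
    """Proportion of events in a sample that show a given consequence."""
    return count / n


def differences(a: dict, n_a: int, b: dict, n_b: int) -> list:
    """Return (category, share_a, share_b, share_b - share_a), largest gap first."""
    rows = []
    for cat in a:
        sa, sb = share(a[cat], n_a), share(b[cat], n_b)
        rows.append((cat, sa, sb, sb - sa))
    return sorted(rows, key=lambda r: r[3], reverse=True)


for cat, sd, sn, gap in differences(diary, DIARY_N, nmrs, NMRS_N):
    print(f"{cat:<24} diary {sd:6.1%}  NMRS {sn:6.1%}  gap {gap:+6.1%}")
```

Under these assumptions, repair costs (40.0% of NMRS events vs. 3.0% of diary events) and persisting hazards (28.0% vs. 0.0%) show the largest gaps, in line with the observation that these were the main differences.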

FIGURE 1 Distribution of 32 reasons given by 21 operators for not reporting 25 “diary incidents” to the existing NMRS.

CONCLUSIONS

In terms of the trustworthiness of its results, the diary study successfully complemented, and provided a check on, the existing near-miss database. Respondents were open and frank with the author who collected the data, sharing information they otherwise would not have shared with plant management and safety staff. They also described their reasons for not reporting clearly. The fact that two independent coders were able to apply the taxonomy of reasons, agreeing on 28 of the 32 reasons, indicates its potential usefulness in future studies.

The plant’s management and safety staff were somewhat surprised at the results shown in Figure 1. Some of them had expected that lingering fear or shame about reporting self-made errors, and/or a low level of perceived potential consequences, would be the major reasons successful recoveries were not reported. Thus, the results revealed a genuine difference between operators and management in perceived importance, as reflected in the reasons no lessons to be learned, not appropriate for the system, full recovery, and no remaining consequences. We hope that the plant will now set up a program that clearly communicates management’s sincere interest in learning about the personal and system factors that make successful recoveries possible, and that it will not adopt an attitude of “all’s well that ends well”; according to Kanse et al. (2004), that attitude is not compatible with the way an HRO should function. The fact that none of the participants mentioned being afraid or ashamed to report errors may be a very positive indicator of the plant’s safety culture.

The success of this diary study, limited as it was in time and resources, suggests that the procedure could be repeated after the implementation of a program to convince operators of the importance of reporting recoveries, especially successful recoveries. A follow-up study could measure the change in operators’ perceptions. The second study (and subsequent studies from time to time) could monitor the emergence of other, possibly new, reasons for not reporting.

REFERENCES

Adams, N.L., and N.M. Hartwell. 1977. Accident reporting systems: a basic problem area in industrial society. Journal of Occupational Psychology 50: 285–298.

Beale, D., P. Leather, and T. Cox. 1994. The Role of the Reporting of Violent Incidents in Tackling Workplace Violence. Pp. 138–151 in Proceedings of the 4th Conference on Safety and Well-Being. Leicestershire, U.K.: Loughborough University Press.

Clarke, S. 1998. Safety culture on the UK railway network. Work and Stress 12(1): 6–16.

Cox, S., and T. Cox. 1991. The structure of employee attitudes to safety: a European example. Work and Stress 5(2): 93–106.

Elwell, R.S. 1995. Self-Report Means Under-Report? Pp. 129–136 in Applications of Psychology to the Aviation System, N. McDonald, N. Johnston, and R. Fuller, eds. Aldershot, U.K.: Avebury Aviation, Ashgate Publishing Ltd.

Glendon, A.I. 1991. Accident data analysis. Journal of Health and Safety 7: 5–24.

Kanse, L., T.W. van der Schaaf, and C.G. Rutte. 2004. A failure has occurred: now what? Internal Report, Eindhoven University of Technology.

Lawton, R., and D. Parker. 2002. Barriers to incident reporting in a healthcare system. Quality and Safety in Health Care 11: 15–18.

Lucas, D.A. 1991. Organisational Aspects of Near Miss Reporting. Pp. 127–136 in Near Miss Reporting as a Safety Tool, T.W. van der Schaaf, D.A. Lucas, and A.R. Hale, eds. Oxford, U.K.: Butterworth-Heinemann Ltd.

O’Leary, M.J. 1995. Too Bad We Have to Have Confidential Reporting Programmes!: Some Observations on Safety Culture. Pp. 123–128 in Applications of Psychology to the Aviation System, N. McDonald, N. Johnston, and R. Fuller, eds. Aldershot, U.K.: Avebury Aviation, Ashgate Publishing Ltd.

Powell, P.I., M. Hale, J. Martin, and M. Simon. 1971. 2000 Accidents: A Shop Floor Study of Their Causes. Report no. 21. London: National Institute of Industrial Psychology.

Reason, J. 1997. Managing the Risk of Organisational Accidents. Hampshire, U.K.: Ashgate Publishing Ltd.

Reason, J., and D. Lucas. 1984. Using Cognitive Diaries to Investigate Naturally Occurring Memory Blocks. Pp. 53–70 in Everyday Memory, Actions and Absent-Mindedness, J.E. Harris and P.E. Morris, eds. London: Academic Press.

Reason, J., and K. Mycielska. 1982. Absent-minded?: The Psychology of Mental Lapses and Everyday Errors. Englewood Cliffs, N.J.: Prentice Hall, Inc.

Roberts, K.H., and R.G. Bea. 2001. Must accidents happen?: lessons from high-reliability organisations. Academy of Management Executive 15(3): 70–77.

Sellen, A.J. 1994. Detection of everyday errors. Applied Psychology: An International Review 43(4): 475–498.

Smith, C.S., G.S. Silverman, T.M. Heckert, M.H. Brodke, B.E. Hayes, M.K. Silverman, and L.K. Mattimore. 2001. A comprehensive method for the assessment of industrial injury events. Journal of Prevention and Intervention in the Community 22(1): 5–20.

Webb, G.R., S. Redman, C. Wilkinson, and R.W. Sanson-Fisher. 1989. Filtering effects in reporting work injuries. Accident Analysis and Prevention 21: 115–123.