Stuck on a Plateau

A Common Problem

CHRISTOPHER A. HART

Office of System Safety

Federal Aviation Administration

After declining significantly for about 30 years to a commendably low rate, the rate of fatal accidents for commercial aviation worldwide has been stubbornly constant for many years (Boeing Commercial Airplanes Group, 2003). As part of the effort to address this problem, the Federal Aviation Administration (FAA) Office of System Safety proposed the establishment of a global aviation information network (GAIN), a voluntary, privately owned and operated network of systems that collects and uses aviation safety information about flight operations, air traffic control operations, and maintenance to improve aviation safety.1 The proactive use of information has been greatly facilitated by technological advances that have improved the collection and use of information about adverse trends that may be precursors of future mishaps.

In the course of developing GAIN, the Office of System Safety noted that many other industries have also experienced a leveling off of mishap rates. These industries include other transportation industries, health care, national security, chemical manufacturing, public utilities, information infrastructure protection, and nuclear power. Not satisfied with the status quo, most of these industries are looking for ways to get their rates declining again.

Most of the industries have robust backups, redundancies, and safeguards in their systems so that most single problems, failures, actions, or inactions

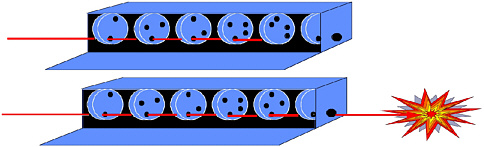

FIGURE 1 Graphic representation of potential mishaps. Source: adapted from Reason, 1990.

usually do not result in harm or damage. Several things must go wrong simultaneously, or at least serially (as “links in an accident chain”), for harm or damage to occur. That, of course, is the good news. The bad news is that the absence of a single weak point means there is no single, easily identifiable point at which to intervene.

This scenario can be represented graphically as a box containing several disks with holes spinning about a common axis (Figure 1). A light shining through the box represents a potential mishap, with each disk being a defense to the mishap. Holes in the disks represent breaches in the defenses. If all of the breaches happen to “line up,” the light emerges from the box, and a mishap occurs. This figure is adapted from the Swiss cheese analogy created by James Reason (1990) of Manchester University in the United Kingdom. In Reason’s analogy, a mishap occurs when the holes line up in a stack of cheese slices.

Each spinning disk (or slice of cheese) can be compared to a link in the chain of events leading to an accident. Each link, individually, may occur relatively frequently, without harmful results; but when the links happen to combine in just the wrong way—when the holes in the spinning wheels all happen to line up—a mishap occurs.
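The spinning-disk picture can be made concrete with a small calculation. The sketch below is only an illustration: it assumes the defenses are breached independently of one another, and the breach probabilities are hypothetical numbers chosen for the example, not figures from any industry. Under those assumptions, the chance that all the holes line up is the product of the individual breach probabilities.

```python
from math import prod


def mishap_probability(breach_probs):
    """Chance that every defense is breached at the same moment.

    Assumes the defenses fail independently of one another, which is a
    simplification made for this illustration.
    """
    return prod(breach_probs)


# Hypothetical per-opportunity breach probabilities for four defenses.
defenses = [0.10, 0.05, 0.02, 0.10]

baseline = mishap_probability(defenses)

# Halve the breach probability of one defense at a time and recompute.
improved = [
    mishap_probability(defenses[:i] + [p / 2] + defenses[i + 1:])
    for i, p in enumerate(defenses)
]

print(f"baseline: {baseline:.2e}")
for i, value in enumerate(improved):
    print(f"defense {i} improved: {value:.2e}")
```

In this simplified model each breach is fairly common on its own, yet the combined mishap is rare. Halving the breach probability of any one disk halves the overall result, whichever disk is chosen, which is one way to see why no single point of intervention stands out.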

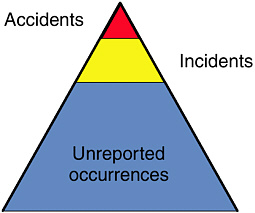

In systems with robust defenses against mishaps, the characteristic configuration of incidents and accidents can be depicted by a Heinrich pyramid (Figure 2), which shows that, for every fatal accident, there will be three to five nonfatal accidents and 10 to15 incidents; but there will also be hundreds of unreported occurrences (the exact ratios vary).

Usually, occurrences are not reported because, by themselves, they are innocuous (i.e., they do not result in mishaps). Nevertheless, unreported occurrences are the “building blocks” of mishaps; if they happen to combine with other building blocks from the “unreported occurrences” part of the pyramid, they may someday result in a mishap.

FIGURE 2 The Heinrich pyramid. Source: Heinrich, 1931.

COLLECTION AND ANALYSIS OF INFORMATION

Most industries have begun to consider the feasibility of collecting and analyzing information about precursors before they result in mishaps. Too often, the “hands-on” people on the “front lines” note, after a mishap, that they “all knew about that problem.” The challenge is to collect the information “we all know about” and do something about it before it results in a mishap.

Many industries have instituted mandatory reporting systems to collect information. They generally find, however, that there is no reasonable way to mandate the reporting of occurrences that do not rise to the level of mishaps or potential regulatory violations. Short of a mishap, the system must generally rely upon voluntary reporting, mostly from frontline workers, for information about problems. In the aviation industry, reporting about events near the top of the pyramid is generally mandatory, but reporting most events in the large part of the pyramid is generally voluntary. In most industries, including aviation, most of the information necessary for identifying precursors and addressing them is likely to be in the large part of the pyramid.

Legal Deterrents to Reporting

In the United States, four factors have discouraged frontline workers, whose voluntary reporting is most important, from coming forth with information. First, potential information providers may be concerned that company management and/or regulatory authorities will use the information for punitive or enforcement purposes. Thus, a worker might be reluctant to report a confusing process, fearing that management or the government might not agree that the process is confusing and might punish the worker. A second concern is that reporting potential problems to government regulatory agencies may result in the information becoming accessible to the public, including the media, which could be embarrassing, bad for business, or worse. A third major obstacle to the collection of information is the possibility of criminal prosecution. The fourth concern, perhaps the most significant factor in the United States, is that collected information may be used against the source in civil litigation.

Most or all of these legal issues must be addressed before the collection and sharing of potential safety data can begin in earnest. GAIN’s experience in the aviation industry could benefit other industries in overcoming these obstacles.

Analytical Tools

Once the legal issues have been addressed, most industries face an even more significant obstacle—the lack of sophisticated analytical tools that can “separate sparse quantities of gold from large quantities of gravel” (i.e., convert large quantities of data into useful information). These tools cannot solve problems automatically, but they can generally help experienced analysts accomplish several things: (1) identify potential precursors; (2) prioritize potential precursors; (3) develop solutions; and (4) determine whether the solutions are effective. Most industries need tools for analyzing both digital data and text data.

To identify and resolve concerns, most industries will have to respond in a significantly different way than they have in the past. The ordinary response to a problem in the past was a determination of human error, followed (typically) by blaming, retraining, and/or punishing the individual who made the last mistake before the mishap occurred.

As mishap rates stabilize, they become more resistant to improvement by blaming, punishing, or retraining. The fundamental question becomes why employees who are highly trained, competent, and proud of doing the right thing (e.g., airline pilots) make inadvertent and potentially life-threatening mistakes that can hurt people, often including themselves. Blaming problems on “human error” may be accurate, but it does little to prevent recurrences of the problem. Stated another way, if people trip over a step x times per thousand, how big must x be before we stop blaming people for tripping and start focusing on the step? (Should it be painted, lit up, or ramped? Should there be a warning sign?)

Most industries are coming to the conclusion that the historic focus on individuals, although still necessary, is no longer sufficient. Instead of focusing primarily on the operator (e.g., with more regulation, punishment, or training), it is time that we also focus on the system in which operations are performed. Given that human error cannot be eliminated, the challenge is how to make the system (1) less likely to result in human error and (2) more capable of withstanding human errors without catastrophic results.

Expanding our focus to include improving the system does not mean reducing the safety accountability of frontline employees. It does mean increasing the safety accountability of many other people, such as those involved in designing, building, and maintaining the system. In health care, for example, the Institute of Medicine’s Committee on Quality of Health Care in America issued a report that concluded that as many as 98,000 people die every year from medical mistakes (IOM, 2000). The report recommends that information about potentially harmful “near-miss” mistakes (i.e., mishap precursors) be systematically collected and analyzed so that analysts can learn more about their causes and develop remedies to prevent or eliminate them. The premise of this proactive approach is described as follows:

Preventing errors means designing the health care system at all levels to make it safer. Building safety into processes of care is a much more effective way to reduce errors than blaming individuals …. The focus must shift from blaming individuals for past errors to … preventing future errors by designing safety into the system…. [W]hen an error occurs, blaming an individual does little to make the system safer and prevent someone else from committing the same error.

Intense public interest in improving health care systems presents major opportunities for creating processes that could be adopted by other industries. If health care and other industries joined forces, they could avoid “reinventing the wheel,” to their mutual benefit. As industries focus more on improving the system, they will find they need to add two relatively unused concepts into the analytical mix: (1) system-wide intervention and (2) human factors.

System-Wide Interventions

Operational systems involve interrelated, tightly coupled components that work together to produce a successful result. Most problems have historically been fixed component by component, rather than system-wide. Problems must be addressed not only on a component level, but also on a system-wide basis.

Human Factors

Most industries depend heavily upon human operators to perform complex tasks appropriately to achieve a successful result. As more attention is focused on the system, we must learn more about developing systems and processes that take into account human factors. Many industries are studying human-factors issues to varying degrees, but most are still at an early stage on the learning curve.

SHARING INFORMATION

Although the collection and analysis of information can result in benefits even if the information is not shared, the benefits increase significantly if the information is shared—not only laterally, among competing members of an industry, but also between various components in the industry. Sharing makes the whole much greater than the sum of its parts because it allows an entire industry to benefit from the experience of every member. Thus, if a member of an industry experiences a problem and fixes it, every other member can benefit from that member’s experience without having to encounter the problem. Moreover, when information is shared among members of an industry, problems that appear to a single member as an isolated instance can much more quickly be identified as part of a trend.
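The point about trends can be illustrated with a minimal sketch. It assumes each member contributes a list of categorized occurrence reports; the member names, event categories, and threshold below are hypothetical and exist only for this example. An event that looks like an isolated instance to each member crosses the threshold once the reports are pooled.

```python
from collections import Counter


def flag_trends(reports_by_member, threshold):
    """Return event types whose pooled count across members meets the threshold."""
    pooled = Counter()
    for reports in reports_by_member.values():
        pooled.update(reports)
    return {event for event, count in pooled.items() if count >= threshold}


# Hypothetical occurrence reports from three members of an industry.
reports_by_member = {
    "operator_a": ["confusing_checklist", "door_seal"],
    "operator_b": ["confusing_checklist"],
    "operator_c": ["confusing_checklist", "fuel_gauge"],
}

# Each member alone sees the event only once, so nothing is flagged.
flagged_alone = {
    member: flag_trends({member: reports}, threshold=3)
    for member, reports in reports_by_member.items()
}

# Pooled across the industry, the same event stands out as a trend.
flagged_shared = flag_trends(reports_by_member, threshold=3)

print(flagged_alone)
print(flagged_shared)
```

A simple count against a fixed threshold is used here only to keep the example short; real analysis would need more careful normalization and expert review.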

The potential benefits of sharing information increase the need for more sophisticated analytical tools. Since there is little need, desire, or capability for sharing raw data in most industries, data must first be converted into useful information for sharing to be meaningful. If data are not first converted into useful information, sharing will accomplish little.2

Thus, industry, governments, and labor must work together to encourage the establishment of more programs for collecting and analyzing information and to encourage more systematic sharing of information. Governments can facilitate the collection and sharing of information by ensuring that laws, regulations, and policies do not discourage such activities and by funding research on more effective tools for analyzing large quantities of data.

BENEFITS OF COLLECTING, ANALYZING, AND SHARING INFORMATION

More systematic collection, analysis, and sharing of information is a win-win strategy for everyone involved. Private industry wins because there will be fewer mishaps. Labor wins because, instead of taking the brunt of the blame and the punishment, frontline employees become valuable sources of information about potential problems and proposed solutions to accomplish what everyone wants—fewer mishaps. Government regulators win because the better they understand problems, the smarter they can be about proposing effective, credible remedies. Improved regulations further benefit industry and labor because effective remedies mean they will get a greater “bang for their buck” when they implement the remedies. Last but not least, the public wins because there are fewer mishaps.

Immediate Economic Benefits

Whether other industries will enjoy the economic benefits that are becoming apparent in the aviation industry is still unclear. A common problem in aviation has been the difficulty encountered by airline safety professionals trying to “sell” proactive information programs to management because the cost justifications are based largely on accidents prevented. The commendably low fatal accident rate in aviation, combined with the impossibility of proving that an accident was prevented by the safety measures instituted, makes it difficult, if not impossible, to prove that a company benefits financially from proactive information sharing. The most that can be said is that there might be an accident in the next five to seven years, and that a proactive information program might prevent it. Needless to say, this is not a compelling argument for improving the “bottom line.”3

Fortunately, an unforeseen result has begun to emerge from actual experience. The first few airlines that implemented proactive information programs quickly noticed (and reported) immediate, sometimes major savings in operations and maintenance costs as a result of information from their safety programs. It is not clear whether other industries, most notably health care, will enjoy immediate savings from information programs, but conceptually the likelihood seems high.

If immediate economic benefits can be demonstrated, this could be a significant factor in the development of mishap-prevention programs, which would become immediate, and sometimes major, profit centers, instead of merely “motherhood and apple pie” good ideas with potential, statistically predictable, future economic benefits. Moreover, the public would benefit, not only from fewer mishaps, but also from lower costs.

CONCLUSION

Many industries, including most transportation industries, health care, national security, chemical manufacturing, public utilities, information infrastructure protection, and nuclear power, have experienced a leveling off of the rate of mishaps. They are now considering adopting proactive information-gathering programs to identify mishap precursors and remedy them in an effort to lower the rate of mishaps further. It is becoming apparent that there are many common reasons for the leveling off and that many common solutions can be used by most of these industries. Although one size does not fit all, these industries have an unprecedented opportunity to work together and exchange information about problems and solutions for the benefit of everyone involved.

REFERENCES

Boeing Commercial Airplanes Group. 2003. Statistical Summary of Commercial Jet Airplane Accidents, Worldwide Operations, 1959–2002. Chicago, Ill.: The Boeing Company. Available online at http://www.boeing.com/news/techissues/pdf/statsum.pdf.

Heinrich, H.W. 1931. Industrial Accident Prevention. New York: McGraw-Hill.

IOM (Institute of Medicine). 2000. To Err Is Human: Building a Safer Health System. Washington, D.C.: National Academy Press.

Reason, J. 1990. Human Error. Cambridge, U.K.: Cambridge University Press.