3

Measuring R&D in Business and Industry

The annual survey of R&D in industry is one of the most important products of the NSF’s Science Resources Statistics (SRS) division. It measures a sector that accounts for the bulk of R&D activity in the economy: 66 percent of funding for R&D by source and more than 70 percent of funds spent on R&D.

Because of the predominance of the industrial sector in R&D, the industrial R&D expenditure data are closely watched as an indicator of the health of the R&D enterprise in the United States. For example, a recent downturn in real spending on industrial R&D has given rise to some concern over the health of that enterprise.1 The decline in industrial R&D spending between 2001 and 2002, coinciding with the overall economic slowdown, was identified by NSF as the largest single-year absolute and percentage reduction in current-dollar industry R&D spending since NSF initiated the survey in 1953 (National Science Foundation, 2004). This downturn was identified as the leading cause of the downtick in the ratio of R&D spending to gross domestic product from 2000 to 2002.

This most important R&D survey is also the most problematic. No survey in the NSF portfolio of R&D expenditure surveys has been as affected by changes in the R&D environment (discussed in Chapter 1) as the Survey of Industrial Research and Development.

This survey is also the most sensitive to changes in the procedures for statistical measurement. Little is known about respondents, what they know, and how they treat this survey. We do not know who is responding in each firm, or what their position or capabilities are. Nor do we know how the data each firm reports are gathered within the firm, or their relationship to similar data reported to the Securities and Exchange Commission (SEC).2

Since its inception in 1953, the survey has experienced several focused efforts to modernize and strengthen its conceptual and technical foundation. The most ambitious modernization effort took place a little over a decade ago, in 1992, when the sample design was substantially modified to better represent the growing service sector, extend coverage to smaller firms, and collect additional information for understanding R&D outsourcing and R&D performed by foreign subsidiaries.

The 1992 redesign effort updated the operations of the survey to reflect many aspects of the changed environment and correct some of the most severe deficiencies, which had rendered the survey results quite misleading over time. At the conclusion of that redesign, however, there was still much to do to meet a growing need for data to measure the emerging realities in the conduct and measurement of R&D in the United States. Not much progress has been made since that redesign; in fact, the survey changes that have been introduced since 1992 have been almost cosmetic in their application. Minimal revisions have been implemented to maintain currency, such as the introduction of the North American Industry Classification System (NAICS) in 1997 and the more recent changes in questionnaire wording and collection procedures (see Box 3-1).

Thus, this decade-old redesign of the survey has left several issues unresolved. A major unresolved issue is the failure to implement collection of R&D data at the product or line-of-business level of detail; another is the failure to learn more about respondents and to sharpen concepts and definitions to more adequately reflect business organization for R&D; and a third is the inability to speed the production and release of the estimates to data users.

With the passage of time, the need to consider further revisions has accelerated. The R&D environment has changed even further since the early 1990s. As discussed in Chapter 1, organizational arrangements for the conduct of R&D have continued to change apace. New forms of R&D in the service sector (particularly in the biomedical fields), the expanding role of small businesses, the geographic clustering of R&D, cooperation, collaboration, alliances, “open innovation,” partnerships, globalization, and government programs to promote advanced technology demonstrations and nanotechnology have all affected the relevance and quality of the data. The list of structural and organizational changes in the business world that affect the ability to understand the extent and impact of R&D grows daily. Despite NSF staff efforts, the survey is not keeping up.

BOX 3-1

The panel concludes that it is time to implement another major redesign of this survey (Conclusion 3.1). The redesign would take a four-pronged approach:

- The redesign would begin with a reassessment of the U.S. survey against the “standard,” that is, the international definitions as promulgated through the Frascati Manual (Organisation for Economic Co-operation and Development, 2002a), thereby adding some data items. It would benchmark U.S. survey methodology against best practices in other countries, many of which appear to be producing data of better quality and with more relevance than NSF.

- In order to sharpen the focus of the survey and fix problems further identified in this report, the redesign would update the questionnaire to facilitate an understanding of new and emerging R&D issues. In particular, it would test and implement the collection of data on R&D funds from abroad, from affiliate firms, and from independent firms and other institutions for the performance of R&D in the United States. It would sharpen the question on the outsourcing of R&D to distinguish between payments to affiliated firms, to independent firms, and to other institutions abroad. It would make more extensive use of web-based collection technology after appropriate cognitive and methodological research.

- The redesign would enhance the program of data analysis and publication that would facilitate additional respondent cooperation, enhance the understanding of the industrial R&D enterprise in the United States, and provide feedback on the quality of the data to permit updating the survey methodology on an ongoing basis. The redesign would be supported by an extensive program of research, testing, and evaluation so as to resolve issues regarding the appropriate level at which to measure R&D, particularly to answer, once and for all, the question about the collectability of product or line-of-business detail.

- The redesign would revise the sample to enhance coverage of growing sectors and the collection procedures to better nurture, involve, and educate respondents and to improve relevance and timeliness.

To assist NSF in identifying these avenues for improvement and in prioritizing these tasks, this chapter addresses each of these areas.

INTERNATIONAL STANDARDS

The concepts and definitions of R&D used in the United States today are generally in keeping with international standards. This is not surprising. After all, the science of measuring R&D was pioneered in the United States, and it has proceeded ahead by virtue of an international effort marked by remarkable comity and underscored by collaborative development. This collaboration among the national experts in member countries of the Organisation for Economic Co-operation and Development (OECD) who collect and issue national R&D data has been ongoing for about five decades, under OECD auspices. The collaboration has been codified in the Frascati Manual, which was initially issued about 40 years ago, with several updates over the years, the most recent in 2002.

Nonetheless, there is increasing evidence that the United States is departing somewhat from the reporting standards used internationally. For example, the OECD publication Main Science and Technology Indicators (Organisation for Economic Co-operation and Development, 2004) provides an indication that some data are not comparable within OECD guidelines. Although not of serious consequence, these departures indicate that some catch-up work may be needed in order to conform with international standards.3

Progress in Other Countries

In spite of a long history of U.S. leadership in measuring R&D, there is evidence that other countries may have caught up with and moved ahead of the United States in the measurement of industrial R&D. To the extent that this may have happened, there are reasons for the trend. Other countries generally have more access to administrative sources of data, or they have traditions in statistical collection that allow (or prescribe) more intrusive collection of data in greater depth than is considered feasible in the United States. The experience of countries in the European Community with the development of the round of Community Innovation Surveys (CIS) is instructive in this regard. The fact that other countries have developed more information on foreign funding of domestic R&D is an indicator of how they have extended knowledge of the R&D enterprise beyond that available to U.S. policy makers.

One country that has made recent strides in the measurement of industrial R&D, which could well be emulated in many respects in the United States, is Canada. It has developed a robust R&D expenditure measurement program.

The Industrial Research and Development Survey in Canada

A survey of research and development performance in commercial enterprises (privately or publicly owned) and of industrial nonprofit organizations has been conducted in Canada since 1955. The survey has changed in frequency, in detail, and in use of administrative data over the years. Now it is an annual survey of enterprises that perform $1 million (Canadian) worth or more of R&D, complemented by the use of administrative data from the tax authority in order to eliminate the reporting burden for small R&D performers. The tax data arise because of the federal Scientific Research and Experimental Development program, which, in some form, is available to all firms that perform R&D. The data are available to the statistical office under a provision of the Statistics Act.

Depreciation is reported in place of gross capital expenditures in the business enterprise sector. Higher education (and national total) data were revised back to 1998 due to an improved methodology that corrects for double-counting of R&D funds passed between institutions. Breakdown by type of R&D (basic research, applied research, etc.) was also revised back to 1998 in the business enterprise and higher education sectors due to improved estimation procedures. Beginning with the 2000 Government Budget Appropriations or Outlays for R&D (GBAORD) data, budgets for capital expenditure, “R&D plant” in national terminology, are included. GBAORD data for earlier years relate to budgets for current costs only. The United States Technological Balance of Payments (TBP) data cover only “royalties and license fees,” which are internationally more comparable. Other transactions, notably “other private services,” have been excluded.

Not only are the tax files a source of data for R&D performing enterprises, but they also provide the list of enterprises in Canada that perform R&D. Of course, not all firms apply for the tax benefit, as some regard the administrative demand and the risk of audit as outweighing the benefits. However, these firms tend to be small performers of R&D with few staff committed to the activity. With the survey frame given by administrative records, the statistical office is able to take data directly from the appropriate tax form for about 85 percent of the population of R&D performers. For the rest, those that perform $1 million or more of R&D, a more elaborate questionnaire is mailed to the enterprise, once a contact person is identified as a result of an initial telephone contact. It is felt that this initial contact is essential to the success of the survey.
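The two-track collection described above can be pictured with a minimal sketch. It assumes only what the text states: enterprises performing $1 million (Canadian) or more of R&D receive the detailed questionnaire, and the rest are covered from tax records. The class, field, and function names are hypothetical and do not describe Statistics Canada's actual systems.

```python
from dataclasses import dataclass

# Threshold taken from the text: $1 million (Canadian) or more of R&D.
QUESTIONNAIRE_THRESHOLD_CAD = 1_000_000


@dataclass
class Enterprise:
    # Hypothetical record built from the tax-based frame.
    name: str
    rd_spending_cad: float


def collection_mode(enterprise: Enterprise) -> str:
    """Return which data source would cover this enterprise."""
    if enterprise.rd_spending_cad >= QUESTIONNAIRE_THRESHOLD_CAD:
        return "questionnaire"
    return "tax_data"


def split_frame(frame: list[Enterprise]) -> dict[str, list[str]]:
    """Group enterprise names by collection mode."""
    groups: dict[str, list[str]] = {"questionnaire": [], "tax_data": []}
    for enterprise in frame:
        groups[collection_mode(enterprise)].append(enterprise.name)
    return groups


# Illustrative frame; the firms and amounts are invented for the example.
example_frame = [
    Enterprise("Firm A", 250_000),
    Enterprise("Firm B", 1_000_000),
    Enterprise("Firm C", 40_000_000),
]
example_groups = split_frame(example_frame)
```

In this sketch the small performers never receive a form, which mirrors the stated goal of eliminating their reporting burden.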

The information collected includes the expenditure on the performance of R&D, including current and capital costs, and the full-time-equivalent number of personnel engaged in the activity. In addition to data on the performance of R&D, the survey asks about the source of funds. There are five sources: governments (federal or provincial); business, including the firm itself; higher education; private nonprofit organizations; and foreign firms and other institutions. About 20 percent of funding for industrial R&D in Canada comes from abroad, as measured in the Canadian R&D survey. In contrast, the United States does not collect this type of information in the industrial R&D survey, missing an opportunity to illuminate this important aspect of the globalization of R&D. Although data exist on foreign sources of R&D funding for other countries, there are no data on foreign funding sources of U.S. R&D performance (National Science Board, 2004).

The country of control of the R&D-performing firm in the Canadian survey is derived from administrative data, which are used to examine the different characteristics of foreign-controlled and domestic-controlled enterprises in relation to their performance of R&D. About 30 percent of the expenditures on industrial R&D in Canada are made by foreign-controlled firms. This spending emanates from within Canada; it is separately identified although counted as domestic R&D spending, in keeping with international practice.

Most R&D performers in Canada have a single head office with one geographical location for both their production and R&D units. However, there are large firms that have production units classified to different NAICS codes, and the production units may be in different provinces. For these firms, special follow-up is required to identify the geographical and industrial allocation of the R&D performed in Canada. Once this is done, the data can be presented by geographical region.

The survey is also a vehicle for questions about new or growing activities, such as R&D in software, biotechnology, new materials, and the environment. It collects data on payments and receipts for R&D and other technological services. And it supports international comparison of Canada’s R&D performance (for more details, see Statistics Canada, 2003).

ADEQUACY OF CONCEPTS AND DEFINITIONS

Mainly for purposes of historical and cross-sectional comparisons and continuity, the definitions that underlie NSF’s collection of R&D expenditure data from industry have followed a one-size-fits-all philosophy. This approach impedes understanding of the scope and nature of industrial R&D.

The division of industrial R&D activities into the standard categories of basic research, applied research, and development has characterized the industrial R&D survey since its inception. In an effort to maintain a concordance with the definitions used in federal and academic surveys, as well as with international sources, NSF defines industrial basic research as the pursuit of new scientific knowledge that does not have a specific immediate commercial objective, although it may be in fields of present or potential commercial interest; industrial applied research as investigation that may use findings of basic research toward discovering new scientific knowledge that has specific commercial objectives with respect to new products, services, processes, and methods; and industrial development as the systematic use of the knowledge and understanding gained from research or practical experience directed toward the production or significant improvement of useful products, services, processes, or methods, including the design and development of useful products, materials, devices, and systems (U.S. Census Bureau, 2004).

The reporting of data by these basic categories is only as sound as the understanding of the meaning of those categories. In an important 1993 study, Link asked research directors from three research-intensive industries about the accuracy of the categories for describing the scope of R&D that is financed by their companies. Most of the industrial firms reported that the categories described their scope, but there were disagreements among the largest firms. The disagreement had to do with the narrowness of the definition of development. The researcher’s conclusion was that the NSF definition understated the amount of development, from the perspective of the firms (Link, 1996).

This problem at the outer edge of the definition of research and development is not surprising since, with some notable exceptions like pharmaceuticals and semiconductors, most corporate investment in R&D exploits science-based inventions that are beyond the research and development phase. The development of new goods and services is often based on costs and specifications defined by marketing opportunities. Branscomb and Auerswald (2002) point out that blurred distinctions between these traditional categories complicate accurate analysis of existing data.

Ambiguous usage of these common categories leaves the door open for variation in interpretation by survey respondents, especially across different firms and industries. Jankowski (2002) points out that the service sector (finance, communications, transportation, and trade) creates value and competes by buying products and assembling them into a system or network, efficiently running or operating the system, and providing services for customers who are often members of the public. It is an open question whether the system design, system operation, and service design and delivery functions can be readily classified into the traditional components of R&D. An example suggested to the panel during its workshop would be a major retailing company that has invested in innovative supply chain management techniques. Such a company would quite possibly not recognize and report this activity as R&D. It was observed that at least one large retailer with widely recognized leadership in distribution management reports no R&D program in its 10K filings to the SEC.

The distinction between applied research and the two other components of R&D is especially problematic in the industrial sector. Applied research could well involve original research believed to have commercial applications, and it could include research that applies knowledge to the solution of practical problems (Branscomb and Auerswald, 2002). Although the share of R&D represented by applied research has been fairly steady at about 20 percent of the total since the industry data were first published in 1953, the possible blurring of the lines between basic and applied research and between applied research and development has the potential to distort analysis of the role of R&D in the generation of innovation and growth. These questions are important for focusing and assessing such federal investment programs as the Advanced Technology Program and the Small Business Innovation Research programs, in which the federal government provides project-level support of early-stage commercial technological development.

In assessing the impact of R&D on industries, these distinctions are also important. Branscomb and Auerswald observe that mature industries, such as the automotive sector, tend to invest a smaller percentage of R&D in earlier stages of technological development than do industries at an earlier stage of evolution, such as biotechnology. They make the case for this evolutionary shift in R&D investment strategy by tracing the emerging emphasis on maximizing the “yield from R&D.” This has been accomplished by reducing the amount of basic research performed in corporate laboratories, outsourcing, collaborating with universities, and developing corporate venture capital organizations that spin off from the core business.

In contrast, the overwhelming focus of U.S. industry on development rather than on pure research means that three-fourths of R&D activity is lumped into categories that are closer to the customer. These activities are less likely to be carried out in formal R&D facilities and thus less likely to be classified as R&D. Especially in the service sector, the failure to recognize these customer-driven activities as development would tend to bias the estimates of R&D expenditures downward. At the same time, there are classification issues in the development category. Today, companies classify activities that range from advanced technology development to operational system development in the same general category. There is evidence that companies are having difficulty in separating development from engineering as technology advances (McGuckin, 2004). The same issue exists for “technical service,” which has certain elements of development embedded in it. In consequence, the lumping of these activities into one aggregated “development” category tends to obscure important shifts in R&D emphasis over time.

Definitional quirks lead to other anomalies. Software development simulating product performance in lieu of product tests is reported as R&D, but other software development, such as software to predict the nature of consumer demand, is not considered R&D. Heavy investments in technical service developments in quality control and in artificial intelligence and expert systems are similarly uncounted in the R&D expenditure estimates.

The panel learned that companies may be able to provide additional useful breakouts of more detailed information on the components of R&D if the categories are carefully constructed for ease of collection. For example, one large manufacturing corporation maintains detailed records on investment in developmental functions and could report using a classification structure akin to that adopted in the Department of Defense Uniform Budget and Fiscal Accounting Classifications for RDT&E (research, development, test, and evaluation) Budget Activities.4 It is not certain whether other companies could be similarly accommodating in providing additional detail to help further define the boundaries and details of the R&D classification structure. There is a need for additional classification detail to better describe differences between the industrial sectors and to better focus on the sources of innovative activity.

The potential lack of understanding of the concepts and definitions in the Survey of Industrial Research and Development (often referred to as RD-1, the number on the survey form) is especially troubling. A Census Bureau study found that R&D professionals and support personnel have a good understanding of these concepts, even though they do not necessarily classify their own work that way (U.S. Census Bureau, 1995). However, the persons most often charged with responding to the questionnaire are in the financial or government relations offices of the companies, and their understanding is less likely to be accurate. This finding was confirmed in panel discussions with representatives of reporting companies and covered industries. Although definitions of these terms are included in the instructions transmitted with the forms, prior to the 2003 survey they were not included on the form and thus may have been overlooked. Abbreviated instructions have been included in a revised 2003 questionnaire introduced in March 2004.

NSF should conduct research into recordkeeping practices of reporting establishments by industry and size of company to determine if they can report by more specific categories that further elaborate applied research and development, such as the categories utilized by the Department of Defense (Recommendation 3.1).

ALIGNMENT OF THE SURVEY WITH TAX AND ACCOUNTING PRACTICES

R&D data have never existed in isolation. From their beginning, they were molded and shaped in a larger context. The work that went into developing the collection of information on federal R&D emanated from the concern of Vannevar Bush and others over the lagging role of the U.S. R&D enterprise. Similarly, the concern over appropriate measurement of industrial R&D arose from a concern over the impact of R&D on productivity and economic growth.

By the same token, the conceptual foundation of industrial R&D and the basis for the R&D measures have, for two decades, been closely associated with U.S. tax and accounting policies. Along with international standards and policies promulgated by the U.S. Office of Management and Budget, the tax code and accounting rules have shaped and defined R&D.

A study by Hall (2001) for the European Union finds that there are several features of federal corporate tax law that have an effect on the amount and type of R&D: expensing of R&D; the research and experimentation (R&E) tax credit; foreign source allocation rules for R&D spending; the preferential capital gains tax rate; accelerated depreciation and investment tax credits for capital equipment; and the treatment of acquisitions, especially as it relates to the valuation of intangibles.

The foremost provision of the tax code that defines R&D is the federal research and experimentation tax credit. The credit is designed to stimulate research investment by virtue of a tax credit for incremental research expenditures. The justification for the tax stimulus, as described by the National Science Board (2002), is that returns from research, especially long-term research, often are hard to capture privately, as others might benefit directly or indirectly from them. Therefore, without some policy such as a tax credit, businesses might engage in levels of research below those that would benefit a broader constituency (National Science Board, 2002:4-16). The tax credit is a feature in many developed countries, and it has been a pillar of U.S. government R&D policy since 1981.

In application, the tax credit appears to have a significant impact on corporate R&D accounting and decision making. The credit is provided for 20 percent of qualified research above a base amount, so it becomes worthwhile for a company to clearly identify activities that meet the definition of qualified research. Young companies have different, more liberal rules than older companies, so companies may adjust their corporate R&D strategies as they age. Finally, the tax credit has provisions for basic research payments paid to universities and other scientific research organizations, perhaps encouraging collaborative arrangements and outsourcing.
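The incremental structure of the credit can be illustrated with a small worked sketch. It assumes only the rule stated above, a credit of 20 percent of qualified research above a base amount. How the base amount is determined is not described here, so it is passed in as a given; the function name and the dollar figures are hypothetical.

```python
def incremental_credit(qualified_spending: float,
                       base_amount: float,
                       rate: float = 0.20) -> float:
    """Credit on qualified research spending above a base amount.

    Simplifying assumption: the base amount is supplied directly,
    and no other provisions of the tax code are modeled.
    """
    excess = max(0.0, qualified_spending - base_amount)
    return rate * excess


# Illustrative figures only: $12 million of qualified research
# against a $10 million base leaves $2 million of creditable excess.
credit_above_base = incremental_credit(12_000_000, 10_000_000)

# Spending at or below the base earns no credit in this sketch.
credit_below_base = incremental_credit(8_000_000, 10_000_000)
```

Because only the excess over the base earns the credit, whether a marginal activity counts as qualified research can change the credit directly, which is one reason firms have an incentive to identify qualifying activities carefully.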

Although the tax credit legislation does not define R&D, the Internal Revenue Service (IRS) has issued regulations that define R&E as expenditures incurred in connection with the taxpayer’s trade or business that represent research and development costs in the experimental or laboratory sense. Research expenses that qualify under the regulations are those for research undertaken to discover information that is technological in nature, for which the application is intended to be useful in the development of a new or improved business component, and that relate to new or improved function, performance, reliability, or quality. In a series of decisions, tax courts have held that this definition does not include R&D performed by financial service institutions or involving software development more generally.5

The tax code goes on to define basic research as any original investigation for the advancement of scientific knowledge not having a commercial objective. The definition includes domestic research conducted by foreign firms, but it does not include basic research conducted outside the United States by affiliates of U.S. companies.

Hall (2001) indicates that, in contrast to the tax code, the Financial Accounting Standards Board, which sets the standard for reporting to the Securities and Exchange Commission on the 10K form, defines R&D as follows:

Research—planned search or critical investigation aimed at the discovery of new knowledge with the hope that such knowledge will be useful in developing a new product or service or a new process or technique or in bringing about a significant improvement in an existing product or process.

Development—the translation of research findings or other knowledge into a plan or design for a new product or process or for a significant improvement to an existing product or process.

These tax and accounting definitions are not precisely the same as the international standards and the associated U.S. definitions of the components of R&D. A company is confronted with a multiplicity of definitions and interpretations of activities called R&D that are impacting it at various levels. An activity may be defined as R&D in the laboratory but not by the tax accountant. Neither the laboratory nor the tax accountants may fully agree with the operating definition used by the company agent who has responsibility for completing and submitting the RD-1 form to the Census Bureau.6

Research and development spending by businesses plays a significant role in valuing intangibles. Although the tax accounting standards expense R&D, there is frequent discussion over the appropriateness of treating R&D as an investment. The Financial Accounting Standards Board is currently considering proposals to capitalize acquired in-process R&D. Obviously, further moves of regulators in the direction of recognizing intangibles as assets will provide valuable information for the surveys.

In any event, full exploration of the treatment of R&D as an intangible investment requires definitional and measurement breakthroughs. For example, a recent study by Corrado et al. (2004) identified R&D as the largest source of business investment in intangibles. Their taxonomy defines conventional R&D (science and engineering research and development) as the major component of “scientific and creative property.” Other components of this subcategory of intangibles are copyright and license costs and other product development, design, and research expenses (particularly in the finance and service sectors). (The latter two subcategories are currently not included in the industrial R&D surveys.) Other intangible assets identified include “computerized information” and “economic competencies”; these are clearly not captured by the survey.

Some evidence of the impact of classification of developmental activities and differing treatment of foreign R&D was identified in the 1999 comparison of the SEC 10K reports and the NSF industry R&D estimates by Hall and Long (1999). The RD-1 instructions exclude “routine product testing” and “technical services” from the definition of R&D. Companies have difficulty in distinguishing between nonroutine and routine processes, especially in light of the 10K standard that narrowly defines modifying a product to satisfy a specific customer’s needs as a routine customer service to be excluded from R&D, even if the work is performed by R&D employees. Companies thus face two sets of definitions and reporting rules. Differing treatments of foreign R&D also affect the series. Hall and Long found that, in the aggregate, the SEC 10K data do not show a slowdown in the 1980s, while the NSF data exhibit a decline.

In summary, tax and accounting standards play an important role in determining the definitions and reporting mechanisms in firms that report on the industry R&D survey. One may speculate that the substantial scrutiny of corporate bookkeeping in recent years has given even more impetus to improvement in reporting than was the case a few years ago, when Hall and Long concluded that “substantial effort appears to have gone into getting the R&D numbers ‘right’ in the professional accounting world, by which we mean following the definitions and reporting requirements carefully and systematically” (Hall and Long, 1999:27).

THE INDUSTRIAL R&D SURVEY

Selecting an Appropriate Scope for the Industrial R&D Survey

The relevance of the industrial R&D survey is defined both by the content of the data collection and by the selection of businesses covered by the survey. This selection is called the “scope” of the survey. The scope has changed over the years to reflect the changing nature of R&D and the changing locale in which R&D is conducted in the United States, but there are still major shortfalls in the scope of the survey (Kusch and Ricciardi, 1995).

The survey has always included manufacturing and most nonagricultural industries. From its earliest years, it has excluded trade associations, railroad industries, and agricultural cooperatives. Over the years, it has also had policies on the size of companies that were in scope, for years excluding all manufacturing companies with 50 or fewer employees. For a time, manufacturing industries assumed to have little or no R&D were excluded, but uneasiness about the extent of R&D in smaller industries led to their inclusion in later years. Variable cutoffs based on number of employees were used for many years to reduce scope, were dropped, and at other times were reinstated. Single units with fewer than five employees were eliminated from scope.

Today, all for-profit companies classified in nonfarm businesses are included in the coverage of the survey. There is no size criterion, except for the exclusion of single units of companies with fewer than five employees.

| BOX 3-2 |
| --- |
| The earliest files were derived from lists of businesses reporting to the Board of Old Age and Survivors’ Insurance (BOASI), which had the benefit of having industry codes classified into Standard Industrial Classification (SIC) codes. Then, for a time, the sample for the manufacturing industries was selected from the Annual Survey of Manufactures (ASM) carried out by the Bureau of the Census. The BOASI records still constituted the frame for the nonmanufacturing industries. Lists from the Department of Defense (DoD) of the largest R&D contractors supplemented both sources. |
| In 1967, the 1963 Census Enterprise Statistics file was the frame for multiunit manufacturing companies. Single unit manufacturers were sampled from the 1963 Economic Censuses. Social Security Administration (SSA) files represented the nonmanufacturing universe. Lists of R&D contractors for the Department of Defense and the National Aeronautics and Space Administration (NASA) supplemented the selected panel. After updating to the latest economic census, the same procedure was followed for the 1971-1975 panel, with the exception that the Enterprise file was used for selected nonmanufacturing industries. The SSA files represented the remaining nonmanufacturing industries. |
| In 1976, a change in frame sources occurred that holds to this day. The Census Bureau’s Standard Statistical Establishment List (SSEL) was used. This list is updated annually and contains all nonfarm entities that the Census Bureau knows about. Although it was still not used for some of the nonmanufacturing companies, beginning in 1981, it was the prime source for all manufacturing and nonmanufacturing companies. It is still supplemented by lists from DoD and NASA, and occasionally other sources, and is now called the Business Register. |

On a practical basis, the scope is defined and limited by the sampling frame for the survey. The sampling frame has changed over time. The frame has shifted from a social security file based on the Board of Old Age and Survivors’ Insurance program, to a frame built on multiple sources, to a frame constructed using the U.S. Census Bureau’s Business Register (see Box 3-2). The Census Bureau Business Register is the foundation of the Bureau’s economic programs. This establishment database contains data from the Internal Revenue Service, the Social Security Administration, and the Bureau of Labor Statistics. It serves as a frame for selecting samples for all of the Census Bureau’s economic programs and is updated on an ongoing basis for births and deaths.

One of the most difficult issues in the industry R&D survey is distinguishing those companies that perform R&D from among the many that do not, the problem of finding a needle in a haystack (see Box 3-3). Some of these performers are known from previous surveys or other sources, many of which are contained in the “certainty” part of the sample (firms that should be included in the survey with certainty), which now totals 1,100 units. Most of the sampled companies in the industry R&D survey perform no R&D at all. In fact, the survey imposes a burden on nine firms to find just one that does reportable R&D. However, the small number of firms that are newly discovered by the survey may report significant expenditures. Multiplying their expenditures by a large sampling weight can lead to spikes in the time series of estimated totals and inflate variances. This can be especially crucial for estimates of small populations, such as state totals.
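The effect of a large sampling weight on a small-population total can be seen in a minimal sketch. The weights and dollar amounts below are hypothetical and chosen only for illustration; the estimator is a plain weighted sum, which may differ from the one actually used for the survey.

```python
def weighted_total(sample):
    """Weighted total: sum of (sampling weight * reported R&D)."""
    return sum(weight * value for weight, value in sample)

# Hypothetical state sample: (sampling weight, R&D in $ million).
# Two certainty firms (weight 1) and two noncertainty firms (weight 50).
year_1 = [(1.0, 120.0), (1.0, 80.0), (50.0, 0.0), (50.0, 0.0)]

# The next year's independently drawn noncertainty sample happens to
# include one small performer reporting $6 million.
year_2 = [(1.0, 125.0), (1.0, 82.0), (50.0, 6.0), (50.0, 0.0)]

total_1 = weighted_total(year_1)
total_2 = weighted_total(year_2)
print(total_1, total_2)
```

In this made-up case the certainty firms barely change, yet the state estimate more than doubles because one newly found performer carries a weight of 50.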

In response to the problem of severe fluctuations in the state estimates, NSF and the Census Bureau have taken several steps. One was to develop a composite estimator that takes into account research on small-area estimation. A second was to consider the use of some type of smoothing across time to try to eliminate or reduce the spikes. This option is under study and has not been implemented.
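The text does not give the form of the composite estimator. In the small-area estimation literature, a composite is commonly a weighted average of a direct survey estimate and a more stable synthetic (model-based) estimate. The sketch below assumes that generic form; the function name, the weight, and the figures are hypothetical and are not taken from the NSF/Census Bureau procedure.

```python
def composite_estimate(direct, synthetic, weight_on_direct):
    """Convex combination of a direct and a synthetic estimate.

    weight_on_direct near 1 trusts the (noisy) survey estimate;
    near 0 it leans on the smoother synthetic estimate.
    """
    if not 0.0 <= weight_on_direct <= 1.0:
        raise ValueError("weight_on_direct must lie in [0, 1]")
    return weight_on_direct * direct + (1.0 - weight_on_direct) * synthetic

# Hypothetical state: a spiky direct estimate and a synthetic estimate
# built, for example, from the state's share of national employment.
estimate = composite_estimate(direct=507.0, synthetic=260.0, weight_on_direct=0.25)
print(estimate)
```

The design trade-off is bias against variance: the synthetic component damps spikes but pulls every state toward the pattern assumed by the model.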

A third step has been to add questions to the Company Organization Survey about the performance of R&D. The Company Organization Survey is conducted annually by the Census Bureau to obtain current organization and operating information on multiestablishment firms. The results are used to maintain the Business Register. The United States Code, Title 13, authorizes this survey and provides for mandatory responses.

BOX 3-3

The Company Organization Survey is an annual survey that collects individual establishment data for multiestablishment companies. It is designed to collect ownership and operational status information for every establishment of a company for purposes of maintaining the Census Bureau’s Business Register, the source of the sample for the industry R&D survey in 2004 (Bostic, 2003). Beginning in 2003, two questions were added to the survey to determine whether any R&D is done and, if so, the dollar amount (see Box 2-1):

- “Did this company sponsor any research and development activities during 2003?”

- “Yes: Did the expenditures on these research and development activities exceed $3 million?”

It is expected that the answers to the new questions will assist NSF and the Census Bureau in selecting a better sample for the 2004 survey. The information will permit distinguishing between companies that are R&D performers and those that are not, so that more of the sample can be directed toward companies with known R&D activity.

A fourth step is to retain the top 50 performers in each state as certainties each year. Since the noncertainty sample is independently selected each year, some performers identified in the current year may not be sampled in the next year. A rotating panel sample using permanent random numbers as described below may be a useful way of controlling this last problem.

A rotating panel sample would retain the noncertainty sample units for several years consecutively. Thus, when a sample company is found to have some R&D expenditures but not enough to make it a certainty, the company would remain in the sample for a few years and continue to contribute to the estimate instead of potentially dropping out the next year. After the system is fully under way, a portion of the sample would be rotated out every year and a replacement set of units rotated in. This is most easily implemented using stratified equal probability sampling. Currently, the noncertainty universe is partitioned between large and small companies. Large companies are sampled with probability proportional to size while small companies are selected by stratified simple random sampling. Thus, rotating panels would be straightforward to implement for the small companies while a switch to stratified simple random sampling might be needed for the stratum consisting of large companies. If the measures of size now used for large companies are not strong predictors of R&D within this stratum, then converting to equal probability sampling in strata would not sacrifice efficiency. Strata could be constructed so that uniform within-stratum rates would approximate the desired rates of probability proportional to size.

A variation on the rotating panel design is to have no overlap at all between the samples for consecutive years. This could be desirable in strata in which virtually no R&D is performed. Having 100 percent rotation would increase the possibility of picking up some performers while still maintaining a probability sample, although complete rotation of the sample would considerably weaken estimates of change.

Use of permanent random numbers (PRNs) is one simple way of implementing a rotation scheme. Ohlsson (1995) and Ernst et al. (2000) describe the PRN method and its properties. A PRN could be assigned to each company on the frame by generating a uniform random number between 0 and 1. For each stratum in the design, a sampling window would be established depending on the desired sampling rate for the stratum. For example, a sampling rate of 0.1 could be achieved by selecting all companies in a stratum with 0 < PRN ≤ 0.1. By moving the window for the next time period to, say, 0.02 < PRN ≤ 0.12, an expected 20 percent of the time period 1 sample would be rotated out and an additional 20 percent rotated in. This method does lead to a random initial sample size, but with a large sample size this is a relatively minor issue. (Prior to 1998, the RD-1 survey did use a type of Poisson sampling that had a random sample size.) A variant of this method, called sequential random sampling, orders the units in a stratum by PRN and selects the first n of these. At the next time period, the first 0.2n units in the example above would be dropped and the next 0.2n in the sorted list added to the sample. Although this method will yield a fixed sample size, as long as 0.2n is an integer, the selection probabilities of units will change if the stratum population size changes due to births and deaths. The sampling window method more naturally accommodates births and deaths while maintaining a fixed sampling rate, as noted below. A variation on PRN sampling, called collocated sampling, uses equally spaced random numbers and can help reduce variation in sample sizes. This method is used in several establishment surveys conducted by the Bureau of Labor Statistics (Butani et al., 1998).

By assigning a PRN to new entrants to the universe and applying this sampling window method to an updated frame at each time period, the sample automatically updates itself for births and deaths. Thus, cross-sectional estimates can be made that properly represent the current universe. The amount of overlap in the initial sample can also be controlled to improve estimates of changes. Nonresponse will, of course, mean that the amount of overlap will not be totally under control, but it can be predicted, at least in an average sense.
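The sampling window method described above can be sketched in a few lines. This is an illustrative, single-stratum sketch under stated assumptions: the frame size, the company identifiers, the seed, and the helper names `assign_prns` and `select_window` are all hypothetical, and a production system would apply a separate window per stratum.

```python
import random

def assign_prns(company_ids, rng, prns=None):
    """Assign a permanent random number to each new company.

    Companies that already have a PRN keep it, which is what makes
    the numbers 'permanent' across survey years.
    """
    prns = dict(prns or {})
    for cid in company_ids:
        if cid not in prns:
            prns[cid] = rng.random()
    return prns

def select_window(prns, start, rate):
    """Select companies with start < PRN <= start + rate (wrapping past 1)."""
    end = start + rate
    if end <= 1.0:
        return {cid for cid, u in prns.items() if start < u <= end}
    return {cid for cid, u in prns.items() if u > start or u <= end - 1.0}

rng = random.Random(12345)                      # fixed seed for repeatability
frame = [f"C{i:05d}" for i in range(20000)]     # hypothetical stratum frame
prns = assign_prns(frame, rng)

rate = 0.10
sample_1 = select_window(prns, 0.00, rate)      # period 1: 0 < PRN <= 0.1
sample_2 = select_window(prns, 0.02, rate)      # period 2: 0.02 < PRN <= 0.12

# Births receive PRNs and fall into the same window automatically.
births = [f"N{i:04d}" for i in range(500)]
prns = assign_prns(births, rng, prns)
sample_2_updated = select_window(prns, 0.02, rate)

overlap = len(sample_1 & sample_2) / len(sample_1)
print(len(sample_1), len(sample_2), round(overlap, 3))
```

With a window shift of 0.02 on a rate of 0.1, about 80 percent of the period 1 sample is expected to carry over, and the realized sample sizes vary around 10 percent of the frame rather than being fixed.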

Supplementation with Special Lists

One concern in the industry R&D survey is that small start-ups that are engaged in research and development activities are not quickly captured by the Business Register used as the frame for the survey. An effective way of including new small companies may be to use commercial lists as a supplement to the Business Register. One example is the CorpTech list of technology companies sold by OneSource Information Services, Inc. In 2004, this file consisted of 50,199 U.S. entities and 2,395 non-U.S.-owned entities, arrayed in 14,766 units. Over 50 percent of the entities in this database were in the hard-to-identify service industries, with telecommunications and Internet and computer software companies predominating. Other potential sources of data to be explored are the Dun & Bradstreet U.S. marketing file, lists of venture capital firms (Venture One, VentureXpert, and the Securities Data Company’s Strategic Alliances database), and the Recombinant Capital file.

The use of dual frames is common practice when attempting to survey a universe that has certain segments that are of special interest and that may otherwise be difficult to locate (Groves and Lepkowski, 1985; Kott and Vogel, 1995). For example, in its Commercial Buildings Energy Consumption Survey, the Department of Energy uses several list frames of special types of buildings to ensure that the sample sizes of hospitals, schools, and large buildings are adequate (Energy Information Agency, 2001).

Finally, there is the possibility that NSF has, in its own programs, the capacity to strengthen the frame for the industrial R&D survey. The Survey of Earned Doctorates (SED) is designed to obtain data on the number and characteristics of individuals receiving research doctoral degrees from U.S. institutions. This survey collects information from over 40,000 recent graduates in science and engineering research fields, who are asked to “name the organization and geographic location where you will work or study.” This information is tabulated by name of organization, geographic location, and sector (government, private, nonprofit).

Another human resource survey, the Survey of Doctorate Recipients (SDR), is designed to provide demographic and career history information about individuals with doctoral degrees and asks questions about the place and nature of work. Specifically, the survey collects information on educational history (field of degree/study, school, year of degree, etc.), the employer’s main business, employer size, employment sector (academia, industry, government), geographic place of employment, and work activity (teaching, basic research, etc.). This information is available for about 40,000 individuals with research doctorate degrees. It is not a simple task to align the information provided by individuals with the Business Register information maintained by the Census Bureau; however, the advantage of being able to focus attention on the employers of people with educational credentials suggesting an R&D focus offers possibilities for identifying such employers and stratifying the frames for the purpose of estimating the amount of R&D in industry.

There are a number of practical problems to be solved in using one or more supplemental lists. Lists may overlap and duplicates must be handled in some way. The units on the lists may not all be the same (establishments may be mixed in with companies, for example), and some editing will be needed in advance of sampling. However, the payoff in efficiency through utilization of supplemental lists could be substantial, and the panel recommends investigating this approach (Recommendation 3.2).
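One simple way to handle overlap between a primary frame and a supplemental list is to build a matching key for each unit and record which sources contain it. The sketch below is only illustrative: the company names are invented, the `normalize` rule is a deliberately crude assumption, and real frame matching would rely on identifiers such as employer identification numbers and on clerical review.

```python
import re

def normalize(name):
    """Crude matching key: lowercase, drop punctuation and legal suffixes."""
    key = re.sub(r"[^a-z0-9 ]", "", name.lower())
    key = re.sub(r"\b(inc|corp|corporation|co|llc|ltd)\b", "", key)
    return " ".join(key.split())

def combine_frames(register, supplement):
    """Merge two lists, keeping one record per key and noting its sources."""
    combined = {}
    for source, names in (("register", register), ("supplement", supplement)):
        for name in names:
            key = normalize(name)
            record = combined.setdefault(key, {"name": name, "sources": set()})
            record["sources"].add(source)
    return combined

# Hypothetical entries on each list.
register = ["Acme Widgets, Inc.", "Delta Freight Co."]
supplement = ["ACME WIDGETS INC", "Nova Biotech LLC"]

combined = combine_frames(register, supplement)
# Units found only on the supplemental list are the additions to the frame.
new_units = sorted(k for k, r in combined.items() if r["sources"] == {"supplement"})
print(len(combined), new_units)
```

Recording the sources for each unit, rather than simply dropping duplicates, keeps the information needed to compute correct selection probabilities when a unit could have been drawn from more than one list.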

Classification Issues

The industrial R&D survey is a survey of companies, not business units. The present practice of classifying R&D activity within the companies follows the standard Census Bureau practice for allocating economic activity by companies in which R&D activity is attributed to the company’s primary industry classification. Each company is assigned a single NAICS code based on payroll. Multiunit companies are assigned a code that represents their largest activity as measured by payroll.7

This practice of assignment of industrial classification has evolved over the years. Initially, the classification code was that of the establishment having the largest number of employees. Later, when the Census Bureau used the Annual Survey of Manufactures as the sample frame, major activity for a company was based on value added, then on product shipment. Now that the Census Bureau’s Business Register is the frame, the assignment is based on payroll.

As was pointed out in a previous National Research Council (NRC) report, this means, for example, that if 51 percent of a firm’s payroll is classified as in motor vehicles and 49 percent in other products, all of the R&D activities are classified in “motor vehicles” (National Research Council, 2000:89). This lumping of R&D activity into a single code can result in overrepresentation of some major industrial activities and underrepresentation of others. The distortion is most serious in companies that are highly diversified.

In order to obtain a more accurate depiction of the industrial classification of R&D activity, it has been suggested that the data be collected by business unit within the companies. The business unit is a firm’s activities associated with a given product market. If that activity could be separately identified, reported, and aggregated, data on R&D would more sharply depict the true nature of the R&D activity, enabling a better understanding of the character of technological innovation.
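The difference between company-level classification and business unit reporting can be illustrated with the 51/49 payroll example. The payroll split follows the example in the text; the R&D amounts by unit are hypothetical, as is the function name.

```python
def assign_to_primary_industry(payroll_by_industry, rd_total):
    """Attribute all of a company's R&D to its largest-payroll industry."""
    primary = max(payroll_by_industry, key=payroll_by_industry.get)
    return {primary: rd_total}

# Payroll shares from the example; R&D by unit ($ million) is made up.
payroll = {"motor vehicles": 51.0, "other products": 49.0}
rd_by_unit = {"motor vehicles": 30.0, "other products": 70.0}

rd_total = sum(rd_by_unit.values())
company_level = assign_to_primary_industry(payroll, rd_total)

# R&D credited to the primary industry that was performed elsewhere.
misattributed = rd_total - rd_by_unit["motor vehicles"]
print(company_level, misattributed)
```

Under these assumed figures, company-level classification credits all $100 million to motor vehicles, although $70 million was performed for other products; business unit reporting would keep the two amounts separate.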

It has also been suggested that collection of data at the line-of-business level would improve understanding of the geographic focus of R&D. At present, collection of R&D data at the corporate level requests that the company submit data on R&D expenditures by state. Companies have various means of ascribing R&D activity to states. Strong anecdotal evidence suggests that companies have developed means of allocating activity by geographic area that produce data of questionable quality. If the data were collected by line of business, it might be possible to also improve the accuracy of the estimates of state R&D activity and to obtain information of substate geographical location for the first time.

For these good reasons, a 1997 NRC workshop report concluded that the industry R&D survey should be administered to business units (a firm’s activities associated with a given product market) and should collect “R&D expenditures, composition of R&D (process versus product; basic research, applied research and development), share of R&D that is self-financed, supported by government, or other contract, as well as contextual information on business unit sales, domestic and foreign, and growth history of the business unit” (National Research Council, 1997:20).

Although a strong case can be made for collection of data at the business unit level, there are also key questions to be answered: Are the data available at the appropriate level of detail, are they collectable, and is the cost of obtaining the data, both in terms of collection resources and burden on the respondents, justified by the benefit?

Issues in Identifying the Line of Business

As discussed above, since the earliest days of industrial R&D classification, the practice of determining the code of a company by its main activity means, in essence, that the entire research and development operation of a company is classified in that industry (Griliches, 1980). Early on, however, there were voices that suggested the need for more detailed coding. For example, Mansfield (1980) suggested that, for some purposes, it might have been better to use finer industrial categories, because otherwise some results for individual industry groups are difficult to interpret. The debate continues today. Recent work by the Conference Board has concluded that there is considerable heterogeneity in the cost structure and skills among business units of the same firm (McGuckin, 2004). These differences are masked in today’s R&D data.

The problem stems from the fact that the reporting unit for the industrial R&D survey is the company, defined as a business organization of one or more establishments under common ownership or control. The survey includes publicly traded and privately owned, nonfarm business firms in all sectors of the U.S. economy. For the latest year for which data have been released, company count data are shown in Table 3-1. The number of nonmanufacturing companies in the sample has exceeded the number of manufacturing companies, although the number of very large R&D performers in manufacturing is still larger than the number in nonmanufacturing.

TABLE 3-1 Company Counts, RD-1 Data, 2000 (by company and other R&D in $million)

| Sector | Total | < 0.2 | ≥ 0.2, < 1 | ≥ 1, < 10 | ≥ 10, < 100 | ≥ 100 |
| --- | --- | --- | --- | --- | --- | --- |
| Manufacturing companies | 16,917 | 9,805 | 3,777 | 2,559 | 632 | 145 |
| Nonmanufacturing companies | 17,456 | 7,781 | 5,260 | 3,443 | 896 | 76 |
| Total companies | 34,373 | 17,586 | 9,037 | 6,002 | 1,528 | 221 |

SOURCE: National Science Board (2002).

Through the years, there have been a number of attempts to disaggregate the R&D data by collecting information from companies at the line of business, enterprise, or product level.

It has been noted that global companies disaggregate their data when they file the corporate income tax form with IRS, since they are obligated to show their tax liability on income for the domestic part of the company. They also distinguish R&D in the United States and abroad when they apply for the R&D tax credit. The Bureau of Economic Analysis, in its foreign direct investment surveys, requires global companies to do the same. And at the Census Bureau, when the Company Organization Survey is sent out, the target is the domestic part of the company, and the same distinction is made when enterprise statistics data are compiled and published. Thus, there appears to be ample precedent, both in government agencies and in respondent companies, for reporting R&D separately for the domestic and foreign parts of the company.

NEW DATA APPROACHES

FTC Line of Business Program

The country went through a substantial merger wave in the late 1960s and early 1970s, which was typified by the creation of some very large diversified companies. In both the financial community and the government regulatory community, concern about the loss of useful data began to grow. In the financial community, the issue was how investors could continue to make sense out of corporate data as one large company after another got acquired by the large conglomerate buyers. The result was that the Financial Accounting Standards Board issued its Statement of Financial Accounting Standard (SFAS) 14.8

The Bureau of Economics at the FTC was interested in standard measures of industrial organization performance variables: sales, assets, profits, advertising and other marketing expense, and R&D expense. Given specific interests of the FTC—enforcement of antitrust and consumer protection laws—the bureau designed a data collection program that would get at least the four leading companies in each industry. The target of four companies per industry was designed to provide publishable industry detail while protecting confidentiality. The FTC designed the industry list to highlight industries that were large and subject to firm and industry behavior that made them attractive from the perspective of the FTC’s mandate.

The contribution of line-of-business data to understanding how companies organize and account for R&D and how R&D should be classified is exemplified in comparing the FTC results with the standard view obtained by analysis of the Census Bureau results. The purpose of the FTC effort was to disaggregate the companies’ data across relatively specifically defined industries so as to be able to compute industry aggregates that would more accurately reflect industry performance. The Line of Business Program was designed to capture conventional “industrial organization” variables—a variety of profit measures, advertising expenses, and R&D expenses. Some comparative data for 1977 for the FTC and NSF/Census Bureau data collections are shown in Table 3-2. A further treatment of the history of the program is given below.

As shown in the table, 456 companies reported a total of 3,680 manufacturing lines of business, an average of 8.1 per company. The true average could be lower than 8.1, although probably not much lower, since each reporting company was required to report even small amounts of R&D if it had such activities.

The impact of the NSF/Census Bureau procedures of assigning a whole company to a single industry and using a relatively small number of quite aggregative industry definitions, relative to the approach taken by the FTC, can be seen clearly in Table 3-3. The ratios of company-financed R&D to sales, as well as the ranks, are taken from the 1977 FTC report. The matching ratio data for NSF are taken from or derived from their published report, and the ranks were then determined.

TABLE 3-2 Comparison of Federal Trade Commission (FTC) and National Science Foundation (NSF) Data, 1977

| Source | Companies | Units | Units/Company | Industries | Units/Industry |
| --- | --- | --- | --- | --- | --- |
| FTC Line of Business | 456 | 3,680 | 8.1 | 259 | 14.2 |
| NSF/Census Bureau, manufacturing, all reporters | 13,497 | 13,497 | 1.0 | 25 | 539.9 |
| NSF/Census Bureau, manufacturing, all reporters, 259 industries | 13,497 | 13,497 | 1.0 | 259 | 52.1 |
| NSF/Census Bureau, manufacturing, ≥ $5 million in R&D, FTC Line of Business | 507 | 4,107 | 8.1 | | |

SOURCE: Long (2003).

The first line of the table, for what the pharmaceutical industry calls ethical (prescription) drugs, is striking. The industry definition is at the finest level of detail in the FTC list, being part of a 4-digit Standard Industrial Classification (SIC) (2834 pt.). In the other column, the Census Bureau determined that, even though the pharmaceutical industry (283) is shown as one of the reporting industries, some aspect of the data required the suppression of the company-financed R&D data. The number in the table is for all chemicals except industrial chemicals (283-289). Even this is at a finer level than the one used in the Griliches study, which prompted Mansfield to observe, “[f]or example, the chemical industry includes petroleum, chemical, and drug firms. Thus, when R and D intensity and other variables are regressed on firm size, a considerable part of the relationship must be due to the well-known differences among the petroleum, chemical, and drug industries” (Mansfield, 1980:455).

Data for drugs are not the only problem, however. Third in the FTC ranking is aircraft engines and parts. The closest industry in the NSF publication is aircraft and missiles. More on the impacts of this two-pronged approach of assigning a whole company to a single industry and using very aggregative industries is presented below.

TABLE 3-3 Company R&D/Sales Ratios, 1977

| Industry | Federal Trade Commission % | Federal Trade Commission Rank | National Science Foundation % | National Science Foundation Rank |
| --- | --- | --- | --- | --- |
| Ethical drugs | 10.2 | 1 | 3.6 | 8 |
| Computing equipment | 8.9 | 2 | 9.4 | 1-2 |
| Aircraft engines & parts | 8.4 | 3 | 2.9 | 10 |
| Calculating, accounting machines | 7.3 | 4 | 9.4 | 1-2 |
| Photographic supplies | 6.3 | 5 | 5.3 | 4-6 |
| Semiconductors | 6.1 | 6 | 3.0 | 9 |
| Photocopying equipment | 5.7 | 7 | 5.3 | 4-6 |
| Optical instruments | 5.5 | 8 | 5.3 | 4-6 |
| Engineering and scientific instruments | 5.0 | 9 | 5.4 | 3 |
| Telephone, telegraph, radio, TV equipment | 4.9 | 10 | 4.3 | 7 |

SOURCES: Federal Trade Commission (1985); National Science Board (2002).

Although the FTC data were shown to be quite valuable in understanding the operations of companies, opposition arose from about a third of the expected respondents, culminating in a large lawsuit, joined by about 180 companies. Arguments by the plaintiffs were that: (1) the FTC was a law enforcement agency, so they could not be sure that the data would be used only for statistical purposes; (2) the FTC did not have the legal authority to collect the data; and (3) allocation and transfer pricing practices vary across firms, so the data would be too flawed to be of use. The case went to trial, the FTC won the case, and the program was put into operation.

Data were collected for 1974-1977, but support for the program began to wane, due to business opposition and political pressure against data collection programs. The program was formally cancelled, although the FTC decided that the data already collected would be made available for research along with the microdata. The inclusion of R&D data in the report form did not in itself raise much concern during the contest over the program, because opponents apparently did not see it as particularly noteworthy.9

Product Group Data

To overcome some of the conceptual and collectability issues associated with assigning industrial classifications to detailed business units within companies, there have been several attempts to study R&D using R&D by product field. The product field data usually refer only to applied research and development—basic research is, by definition, not possible to ascribe to specific products and is excluded. In this framework, all pharmaceutical R&D would be counted as pharmaceuticals regardless of whether conducted by pharmaceutical companies or other industries. Early attempts to analyze product field data were limited by problems of reliability of published data (Griliches and Lichtenberg, 1984).

A recent study shows the differences in the industrial breakdown of R&D in the United Kingdom that are induced by collecting product group data rather than industry of origin of the spending. It indicates, for example, that total R&D in pharmaceuticals would be £2.5 billion if defined by product, versus just £743 thousand if defined by industry (Griffith et al., 2003).

IRI/CIMS Business Segment Reporting

Starting in 1993 and continuing through 1998, the Industrial Research Institute (IRI), working with the Center for Innovation Management Studies (CIMS), formerly at Lehigh University and now at North Carolina State University, collected R&D data from member companies for business segments. These business segments were for the most part the same as the industry segments that companies reported on their 10K filings with the SEC.

Each segment was classified into 1 of about 70 industries, most of which were at the 4-digit SIC level. For the years for which data were collected, the number of companies and business segments are shown in Table 3-4. In addition to data for business segments, the survey collected data on R&D labs. The program ceased the collection of new data with the 1998 reporting year.

TABLE 3-4 Industrial Research Institute and Center for Innovation Management Studies’ Business Segment Reporting

| Item | 1993 | 1994 | 1995 | 1996 | 1997 | 1998 |
| --- | --- | --- | --- | --- | --- | --- |
| Companies | 62 | 83 | 84 | 79 | 82 | 77 |
| Business segments | 142 | 181 | 157 | 142 | 138 | 131 |
| Segments/company | 2.3 | 2.2 | 1.9 | 1.8 | 1.7 | 1.7 |

SOURCE: Bean et al. (2000).

Carnegie Mellon Survey

Under the direction of Wesley Cohen, Carnegie Mellon University collected data on a large number of manufacturing R&D laboratories, after expending a substantial amount of resources on identifying the labs and key people at them (Cohen et al., 2000, 2002a). The population sampled was all of the R&D labs or units located in the United States conducting R&D in manufacturing industries as part of a manufacturing firm. The sample was randomly drawn from the eligible labs listed in the Directory of American Research and Technology or in Standard and Poor’s COMPUSTAT, stratified by 3-digit SIC industry (RR Bowker Inc., 1994).10 The survey asked R&D unit managers questions about the “focus industry” of their unit, which the authors defined as the principal industry for which the unit conducted its R&D activities. The survey sampled 3,240 labs and received 1,478 responses, yielding an unadjusted response rate of 46 percent and an adjusted response rate of 54 percent.11

Because managers answered with reference to this “focus industry,” the respondents themselves identified the industry for which the lab conducts research.

This substantial data file has now been used to support empirical work on a number of R&D issues. The data cover only one year, but the study makes available a wide variety of variables, supporting research on the public-private question, the role of patents, the impact of spillovers, and firm size and diversity effects. Additional research based on this important study has shown that the impact of university and government research on industrial R&D is much more pervasive than previously thought, suggesting that the returns to such publicly funded research are likely greater than typically thought; found that patents stimulate R&D across the entire manufacturing sector; and shown that patents contribute to greater R&D spillovers in Japan than in the United States, suggesting that patents can have an important effect on the diffusion of new technology.

Center for Economic Studies

Since Griliches’ original work, the Census Bureau has developed the Center for Economic Studies (CES) to support the kind of research he did, bringing resources from the Census Bureau and elsewhere together in one facility and facilitating access for outside researchers. CES now has data available for use from 1972 through 2000. A modest number of researchers have used the R&D data.

One major effort that used the newly available facilities of the CES has been the development of a set of master files that combine Census Bureau R&D survey reports with reports based on COMPUSTAT, with a special emphasis on R&D and related variables. Several dozen working papers that use these files have been released (Hall and Long, 1999).

The important work of the Center for Economic Studies highlights the potential of more intensive use of firm-level data linking R&D expenditures with other aspects of the behavior of the firm. This work suggests the need to collect and process R&D or innovation data at the firm level in such a manner that it can be integrated with the rich new longitudinal firm-level datasets that have been and are being constructed at the federal statistical agencies. Such microdata integration is essential for a number of reasons. First, such data integration permits micro-based research, which is essential for scientific analysis. Second, such data integration permits richer public domain aggregated statistics that can be constructed based on the integrated data. Third, such data integration permits a cross-check on potential data problems. For example, some of the new matched employer-employee data have rich information about the workforce composition and earnings associated with different types of workers. The latter information might be quite useful for checking against the survey responses on the number of employees devoted to R&D activities.
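The cross-check described in the third point can be sketched in a few lines. This is only an illustration under stated assumptions: the function name, the two input dictionaries (firm identifier mapped to an R&D headcount), and the 25 percent tolerance are all hypothetical and do not come from any Census Bureau or NSF procedure.

```python
def flag_rd_headcount_gaps(survey_counts, linked_counts, tolerance=0.25):
    """Flag firms whose survey-reported R&D headcount differs from a
    benchmark derived from linked employer-employee data.

    survey_counts : dict of firm id -> R&D employees reported on the survey
    linked_counts : dict of firm id -> benchmark count from linked data
    tolerance     : largest acceptable relative gap (hypothetical value)
    """
    flagged = []
    for firm_id, reported in survey_counts.items():
        benchmark = linked_counts.get(firm_id)
        if benchmark is None or benchmark == 0:
            # No usable linked record, so there is nothing to compare against.
            continue
        relative_gap = abs(reported - benchmark) / benchmark
        if relative_gap > tolerance:
            flagged.append(firm_id)
    return sorted(flagged)


# Made-up example values for three firms.
survey = {"A": 100, "B": 40, "C": 10}
linked = {"A": 105, "B": 80}
flagged_firms = flag_rd_headcount_gaps(survey, linked)
```

In this made-up example only firm B would be flagged: firm A is within tolerance and firm C has no linked record. A flag is a prompt for follow-up with the respondent, not evidence that either source is wrong.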

Can R&D Line-of-Business Data Be Collected?

It is intuitive that the business unit is the appropriate unit of collection, if it is possible to collect data at the business unit level. Many of the data on R&D activity in multiunit companies are available only at the divisional or business unit level (Hill et al., 1982). For example, to complete the RD-1 form at the company level of aggregation, as requested in the industrial R&D survey program, someone at the corporate level has to gather and collate data from business units. The panel was able to discuss this process of aggregation with one major company in which this process is formal, structured, and embedded. However, many firms do not have standardized and centralized procedures for completing these data requests.

In order to answer the central question of whether line-of-business data could be collected in the industrial R&D survey, the Special Studies Branch of the Manufacturing and Construction Division of the Census Bureau conducted a study based on a survey of 45 companies that had filed RD-1 forms for 1997 (U.S. Census Bureau, 2000). The study concluded that reporting on the RD-1 form at the line-of-business level would be inappropriate because: (1) companies use different terms to refer to lines of business; (2) companies are not able to assign the lines of business they have in industry terms; and (3) companies could not provide detail on basic research, applied research, and development.

While this study was illustrative, it was far from conclusive. Indeed, 43 of the 45 companies stated that they could report R&D at a subcompany level. The problem was a lack of uniformity as to the reportable subcompany unit. Some could report on “groups,” others on “sectors,” still others on “product lines.” These various nomenclatures may have reflected differing organizational structures, but they could also have simply reflected naming conventions.

A critique of this survey identified problems with the analysis of nonresponse and a failure to use collateral data from the Census Bureau and elsewhere (Long, 2003). Additional study of the collectability of line-of-business data is warranted.

Revisiting Previous NRC Recommendations

This is not the first time a National Research Council study group has looked at the issue of line-of-business reporting and recommended action to NSF. After examining the appropriate unit of measure for the R&D survey, the Committee to Assess the Portfolio of the Division of Science Resources Studies of the NSF concluded that NSF should “examine the costs and benefits of administering the Survey of Industrial Research and Development at the line of business level” (National Research Council, 2000:8). We reemphasize that recommendation, and we conclude that appropriate assignment of industrial classification to industrial R&D activity requires additional breakdowns of data at the business unit level (Recommendation 3.3).

Since the publication of the previous NRC recommendation, NSF and the Census Bureau have taken the significant step of collecting R&D data in the Company Organization Survey. This survey collects data at the establishment level, and provides, for the first time, a platform for assessing the feasibility of collection and the attendant cost and benefit issues associated with line-of-business reporting. We urge NSF and the Census Bureau to evaluate the results of the initial collection of R&D data in the Company Organization Survey to determine the long-term feasibility of collecting these data (Recommendation 3.4).

NSF and the Census Bureau should test the ability to collect some disaggregated data by more detailed NAICS codes. The test could take a top-down approach, as tested by the FTC, or a bottom-up approach, as utilized in the Yale and Carnegie Mellon surveys. In either case, the ability of reporters at the central office or in decentralized operating units to respond to the inquiry is the key to collecting valid line-of-business data. We recommend that the record-keeping practice surveys be used to assess the feasibility of respondents providing this additional detail and the burden it would actually impose on reporters. With this information in hand, NSF and its advisory committee (recommended in Chapter 8) should decide whether the collection of reliable R&D line-of-business data is feasible and, if so, whether for all or a subset of reporters, and at what frequency (Recommendation 3.5). If possible, this investigation should be undertaken jointly with representatives of industry, whose cooperation will be essential to the success of the collection of these additional data.

MEASURING THE GEOGRAPHIC DISPERSION AND IMPACT OF R&D

Interest in the geographical dispersion and impact of industry R&D expenditures is keen on the part of national and local decision makers, as well as those who seek to understand the relationship between investment and outcomes. The NSF documents that support the Office of Management and Budget (OMB) approval of the industrial R&D survey claim that state statistics are among the most important and most frequently requested statistics produced from this survey. Requests for these statistics come from agencies, both public and private, in states where a great deal of industry R&D is performed and from states that are trying to spur new R&D performance.

In view of this interest, and attentive to the fact that much of the interest comes from the U.S. Congress, the development of estimates of R&D expenditures by state has been a key objective of the industry survey program for some time. It is therefore unfortunate that the estimates now produced are not very good and that, given the current survey design and implementation, they could not be expected to be.

The basic problem of state estimation for the R&D survey has two sources. First, R&D is a rare event among the population of all companies. In the survey it is reflected in the reports of only about 3,400 companies in recent survey rounds. These reports cover most but not all of the largest companies, as well as about 10 percent of the 35,000 firms that conduct some R&D in a given year. Estimates that are derived from these responses may be adequate for the United States as a whole, but they could be entirely inadequate at the state level if key R&D performers in a given state did not report. Second, some reporters may not report each year, generating estimates that indicate false growth or decline in activity.

State-level reliability requirements are taken into consideration during sample selection, but R&D estimates for states are highly volatile because of the “rare event” nature of the measured variables. The year-to-year volatility is compounded by the fact that the Census Bureau selects independent samples each year for all but the certainty strata.

The response of survey managers to this problem has been threefold.

- Enlarge the sample. Beginning in 2002, the sample was increased by 6,000, largely to provide more sample units for each state.

- Change the sample selection process. Also in 2002, the Census Bureau began to use the last 4 years of reported R&D survey data to assign the probability of selection. Companies that reported at least once in the 4-year period were divided into three strata: all cases were taken when reported R&D exceeded $3 million; probability-proportionate-to-size methods, constrained by industry and state, were used for those reporting up to $3 million; and simple random sampling was used when no R&D was previously reported. In addition, state information from the Business Register was used for the first time to assign probabilities of selection to the 1.8 million companies for which R&D status was unknown. The top 50 firms in each state based on payroll are now selected with certainty for the sample.

-

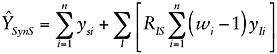

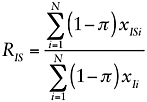

Improve the estimation process. The Census Bureau uses a small-area estimation procedure with the goal of reducing the mean square error in which the estimate is originally a weighted value. The Census Bureau considered mean square error as a measure of some of the bias. To overcome this effect, the Census Bureau created a model that seeks to minimize fluctuations from year to year (see Box 3-4). The model, which does not affect variance at national level, is applied to the final data in order to smooth out the estimates. While the smoothing reduces the variability, it makes it harder for the states to analyze the meaning of the data.
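The stratification rule in the second item above, together with the top-50-by-payroll certainty rule, can be sketched as follows. This is a minimal illustration, not the Census Bureau's implementation: the function names, the record layout, and the idea of summarizing 4 years of reports as a single dollar figure are assumptions made here for clarity.

```python
from typing import Optional

CERTAINTY_THRESHOLD = 3_000_000  # the $3 million cutoff cited in the text


def assign_stratum(reported_rd: Optional[float]) -> str:
    """Classify a company from its R&D reported over the prior 4 survey years.

    reported_rd is a hypothetical single summary figure in dollars; None means
    the company's R&D status is unknown (the Business Register group).
    """
    if reported_rd is None:
        return "unknown_status"
    if reported_rd > CERTAINTY_THRESHOLD:
        return "certainty"  # all cases taken
    if reported_rd > 0:
        return "pps"        # probability proportionate to size
    return "srs"            # simple random sampling, no R&D previously reported


def top_payroll_firms(firms, n=50):
    """Return the ids of the n largest firms by payroll within each state."""
    by_state = {}
    for firm in firms:
        by_state.setdefault(firm["state"], []).append(firm)
    selected = set()
    for state_firms in by_state.values():
        ranked = sorted(state_firms, key=lambda f: f["payroll"], reverse=True)
        selected.update(f["id"] for f in ranked[:n])
    return selected
```

A company reporting exactly $3 million falls in the probability-proportionate-to-size stratum, since the text reserves certainty selection for amounts that exceed the cutoff.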

The panel commends the National Science Foundation and the Census Bureau for developing this composite estimator, which takes into account research on small-area estimation. However, we recommend that additional simulations be conducted to assess the bias, variance, and mean square error of these new state estimates (Recommendation 3.6). In addition, future research could profitably explore alternative estimators for handling outliers, drawing on the literature on finite population estimation (see, for example, Cochran, 1977; Kish, 1965).

BOX 3-4

where

$y_{Si}$ = reported R&D in state $S$ of the $i$th company

$y_{Ii}$ = reported R&D in industry $I$ of the $i$th company

$w_i$ = weight of the $i$th company

$x_{ISi}$ = payroll in industry $I$ and state $S$ of the $i$th company

$x_{Ii}$ = payroll in industry $I$ of the $i$th company

SOURCE: Formula provided by staff of the National Science Foundation.
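The formula in Box 3-4 is not reproduced in this text, so the sketch below should not be read as the Census Bureau's estimator. It illustrates one generic form of composite estimator that uses the quantities defined in the box: a direct weighted state estimate is blended with an estimate that allocates each company's industry R&D to the state in proportion to payroll. The mixing weight `lam`, the function names, and the record layout are all assumptions introduced here.

```python
def direct_estimate(companies, state):
    """Weighted sum of R&D reported directly for the state: sum of w_i * y_Si."""
    return sum(c["w"] * c["y_state"].get(state, 0.0) for c in companies)


def payroll_allocated_estimate(companies, state):
    """Allocate each company's industry R&D to the state by payroll share:
    sum over companies and industries of w_i * y_Ii * (x_ISi / x_Ii)."""
    total = 0.0
    for c in companies:
        for industry, y_ind in c["y_industry"].items():
            x_ind = c["x_industry"].get(industry, 0.0)
            if x_ind <= 0:
                continue  # no payroll base for this industry, cannot allocate
            x_ind_state = c["x_industry_state"].get((industry, state), 0.0)
            total += c["w"] * y_ind * x_ind_state / x_ind
    return total


def composite_estimate(companies, state, lam=0.5):
    """Blend the two estimates; lam is a hypothetical mixing weight in [0, 1]."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be between 0 and 1")
    direct = direct_estimate(companies, state)
    allocated = payroll_allocated_estimate(companies, state)
    return lam * direct + (1.0 - lam) * allocated


# One made-up company: weight 2, $10 of R&D reported in Ohio, $20 of R&D in
# chemicals, and a quarter of its chemicals payroll located in Ohio.
example = [{
    "w": 2.0,
    "y_state": {"OH": 10.0},
    "y_industry": {"chem": 20.0},
    "x_industry": {"chem": 100.0},
    "x_industry_state": {("chem", "OH"): 25.0},
}]
ohio_estimate = composite_estimate(example, "OH", lam=0.5)
```

Setting `lam` closer to 1 keeps the estimate near the direct survey value, while a smaller `lam` leans on the payroll-based allocation, which tends to be smoother from year to year but can mask real change. That trade-off is the one the recommended simulations of bias, variance, and mean square error would quantify.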

SURVEY DESIGN ISSUES

Today’s R&D survey is, in reality, two surveys in one. One survey, which has existed from the earliest days of the data collection, is a survey of the companies thought to have the largest R&D expenditures. These companies can be characterized in statistical methodology terms as a “certainty stratum.”

The very first survey defined the certainty stratum as all companies within scope having 1,000 or more employees. Over the years, the criteria changed. After 1994, the size criterion based on number of employees was dropped. In 1996, the criteria were total R&D expenditures of $1 million or more based on the previous year’s survey or on predetermined sampling

TABLE 3-5 Sample Size and Response Rates, 1990-2002, Industry R&D Survey

| Year | Sample Size | Manufacturers | Nonmanufacturers | Response Rate (%) |
|---|---|---|---|---|
| 1990 | 1,696 | about 1,400 | about 300 | 79.3 |
| 1991 | 1,648 | about 1,400 | about 300 | 85.2 |
| 1992 | 23,376 | 11,818 | 11,558 | 84.0 |
| 1993 | 23,923 | 15,018 | 8,905 | 81.8 |
| 1994 | 23,543 | 2,939 | 10,604 | 84.8 |
| 1995 | 23,809 | 7,595 | 16,214 | 85.2 |
| 1996 | 24,964 | 4,776 | 20,188 | 83.9 |
| 1997 | 23,327 | 4,655 | 18,672 | 84.7 |
| 1998 | 24,809 | 4,836 | 19,973 | 82.7 |
| 1999 | 24,231 | 4,933 | 19,498 | 83.2 |
| 2000 | 24,844 | 4,808 | 20,036 | 81.1 |
| 2001 | 24,809 | 4,505 | 20,604 | 83.2 |
| 2002 | 30,999 | 10,920 | 20,079 | 80.9 |