4

Infrastructure Management Performance Measures

If you can’t measure it, you can’t manage it.

—Peter Drucker

Everything that can be counted does not necessarily count; everything that counts cannot necessarily be counted.

—Albert Einstein

INTRODUCTION

Industry and management gurus have long espoused the importance of performance measures to achieving management objectives. Like the instruments in an airplane cockpit, measures are needed for managers to know the effect of decisions on the direction and rate of change in performance. However, it is also important that the performance measures be based on criteria that correspond to the desired outcomes. In management, as in physical science, it is often difficult and not always possible to obtain objective measures of key outcomes.

Since enactment of the Government Performance and Results Act of 1993, performance measures have played an increasingly important role in the management of federal agencies. They are used by agencies and by the Office of Management and Budget to gauge the progress an agency is making in achieving its annual goals; however, they can also be used internally as a management tool to improve procedures and thus increase long-term performance.

This chapter is in four parts: (1) a discussion of performance measures and related models that have been employed by other organizations to assess their facilities and infrastructure (F&I) performance and to guide efforts to improve management policies and procedures; (2) a critique of the metrics that DOE currently uses to manage its F&I; (3) a proposal for an integrated management approach for DOE with an illustration of how it might be applied; and (4) a brief discussion of a decision system to determine whether to sustain, recapitalize, or dispose of a facility.

BEST PRACTICES

The NRC report Stewardship of Federal Facilities: A Proactive Strategy for Managing the Nation’s Public Assets (NRC, 1998) argues that there is a direct relationship between the condition of a facility and its ability to serve a mission or to continue to serve as the mission changes. The report noted that:

Performance measures are critical elements of a comprehensive management system for facility maintenance and repair. Determining how well the maintenance function is being performed or how effectively maintenance funds are spent requires well-defined measures. Although it may appear that mission readiness and cost alone are insufficient to judge the performance of the maintenance and repair function, if the measures are broad enough, they will capture all relevant aspects of a facility’s condition. (NRC, 1998, p. 71)

In the course of the current study, the committee reviewed several processes and performance assessment models from private and public organizations that were considered to be among the best practices for facilities and infrastructure management. Some of these models specifically address the performance of facilities, while others address broader facilities management issues and process improvement. Although no single entity presented a model that was completely applicable to DOE, components of these models appear appropriate for consideration in an integrated management approach for DOE.

Strategic Assessment Model

The Association of Higher Education Facilities Officers (APPA) developed and maintains an assessment model called the Strategic Assessment Model (SAM) (APPA, 2001). It is an evaluation and management tool that enables facilities professionals to track organizational performance along an array of key performance indicators or metrics. SAM was used for an APPA-sponsored survey that collected data from 165 educational institutions (including K-12 schools, community colleges, and institutions of higher education) as a benchmark for comparing facilities management performance. It enables the facilities professional to assess an organization’s performance, the effectiveness of its primary processes, the readiness of employees to embrace the challenges of the future, and the organization’s ability to satisfy its clients. Facilities managers can use the model for self-improvement and benchmarking and to support a program of continuous process improvement.

SAM is derived from two widely accepted systems for continuous process improvement: the Malcolm Baldrige National Quality Award and the “balanced scorecard” (BSC). It incorporates the best of both systems and provides a consistent vocabulary for continuous improvement of facilities management. As a process improvement tool, SAM addresses the following three basic questions: (1) Where have you been (the past)? (2) Where are you going (the future)? and (3) What do you need to get there (the present)?

The Malcolm Baldrige National Quality Program

The Malcolm Baldrige National Quality Program¹ is generally recognized as a premier continuous improvement program in the United States. It was established over a decade ago to stimulate businesses and industry to focus on quality and continuous improvement. Its primary objective is to help enterprises meet the demanding challenges of an ever-changing and competitive environment.

The Malcolm Baldrige Criteria for Performance Excellence are reviewed, updated, and refined annually to ensure that the program emphasizes the factors that will make the greatest difference in improving an organization and achieving performance excellence. The criteria (NIST, 2004) are the basis for organizational self-assessment, with the core values and concepts of the program embodied in 7 main categories and 19 subcategories. The seven main categories are:

- Leadership
- Strategic Planning
- Customer and Market
- Innovation and Analysis
- Human Resource Focus
- Process/Internal Management
- Business Results

The Balanced Scorecard

The second paradigm for improvement is the “balanced scorecard” (BSC). BSC is a concept developed by Robert S. Kaplan, professor at the Harvard Business School, and David P. Norton, president of Renaissance Solutions, Inc. (Kaplan and Norton, 1996). Developed as a tool for organizational leaders to mobilize their staff for achieving organizational goals effectively, BSC addresses performance metrics from four perspectives: (1) financial, (2) the organization’s internal processes, (3) innovation and learning, and (4) customer service. The BSC approach provides a holistic view of current operating performance, as well as the drivers for future performance. While the concept primarily addresses the needs of business and industry, its success and acceptance have also been growing rapidly in the public sector.

¹ The U.S. Department of Commerce is responsible for the Malcolm Baldrige National Quality Program and annual award. The National Institute of Standards and Technology (NIST) manages the program. Additional information regarding the program can be obtained online at http://www.nist.gov.

The Strategic Assessment Model uses elements of the balanced scorecard approach to integrate financial and nonfinancial measures, both to show a clear linkage between facilities and organizational goals and strategies and to assess successful facilities management across organizations. Under this concept, goals become the road map to the desired outcomes. BSC can be used as a management tool for communicating goals and strategies throughout an organization, as well as for communicating the progress and status of the strategies for accomplishing the goals.

SAM combines quantitative performance indicators with the qualitative criteria for determining the levels of performance of an organization in each of the BSC perspectives. Key performance indicators have been defined for each of the four BSC perspectives listed below:

- The financial perspective reflects the organization’s stewardship responsibility for capital and financial resources associated with the operation and preservation of physical assets. Financial performance indicators are tracked to ensure that services are delivered in an efficient, cost-effective manner. The financial perspective is linked to the other perspectives through the relationships between costs and results in achieving the other scorecard objectives. The primary facilities management competencies include:
  - operations and maintenance,
  - energy and utilities,
  - planning, design, and construction, and
  - administrative and support functions.

- The internal process perspective addresses the key aspects of the organization’s processes for the delivery of internal services. These processes may include handling of work orders, procurement, billing, and relationships with suppliers. Measures should indicate that the processes for services are efficient, systematic, and focused on customer needs. There is an emphasis on identifying internal opportunities for improvements and measuring results.

- The innovation and learning perspective addresses key practices directed to creating a high-performance workplace and a learning organization. In a learning organization, people at all levels, individually and collectively, are continually increasing their knowledge and capacity to produce the best practices and best possible results. This perspective considers how the organization’s culture, work environment, employee support climate, and systems enable and encourage employees to contribute effectively. There is an emphasis on measuring results relating to employee well-being, satisfaction, development, motivation, work systems performance, and effectiveness.

- The customer service perspective addresses how the organization determines the requirements, expectations, and preferences of customers to ensure the relevance of its services and to develop new services.

An objective of DOE’s Real Property Asset Management order (RPAM) is to increase accountability and improve facilities management. In pursuit of this objective, DOE needs to be aware of the varied interests of its stakeholders and satisfy their unique requirements. Taxpayers are interested in ensuring that health, safety, and national security are protected and that resources are efficiently and effectively utilized. Facility users want cost-effective services that are of high quality and sufficient to meet their own mission requirements. Employees have certain expectations of their supervisors and have personal needs in the workplace environment. A scorecard approach could help DOE managers balance these interests.
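A balanced scorecard of this kind can be represented as a small data structure: indicators grouped under the four perspectives, each with a target. The sketch below is illustrative only. The indicator names, values, and targets are hypothetical, and reporting the share of indicators on target in each perspective is one simple summary among many, not a method prescribed by APPA, the Navy, or RPAM.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# The four BSC perspectives used by SAM.
PERSPECTIVES = ("financial", "internal process", "innovation and learning", "customer service")


@dataclass
class Indicator:
    """A single key performance indicator with a target (hypothetical values)."""
    name: str
    value: float
    target: float
    higher_is_better: bool = True

    def on_target(self) -> bool:
        # Compare with the target in whichever direction counts as "better".
        if self.higher_is_better:
            return self.value >= self.target
        return self.value <= self.target


@dataclass
class Scorecard:
    """Indicators grouped by perspective."""
    indicators: dict[str, list[Indicator]] = field(
        default_factory=lambda: {p: [] for p in PERSPECTIVES}
    )

    def add(self, perspective: str, indicator: Indicator) -> None:
        if perspective not in self.indicators:
            raise ValueError(f"unknown perspective: {perspective!r}")
        self.indicators[perspective].append(indicator)

    def summary(self) -> dict[str, float | None]:
        # Share of indicators on target per perspective; None marks a
        # perspective that has no indicators yet (not yet populated).
        return {
            p: (sum(i.on_target() for i in items) / len(items)) if items else None
            for p, items in self.indicators.items()
        }


# Usage with hypothetical indicators.
card = Scorecard()
card.add("customer service", Indicator("percent of customers satisfied", 82.0, 85.0))
card.add("internal process", Indicator("work orders closed on schedule (%)", 91.0, 90.0))
card.add("financial", Indicator("maintenance cost per sq ft ($)", 2.10, 2.50, higher_is_better=False))
print(card.summary())
```

An empty perspective is reported as `None` rather than zero, so a scorecard that is "not yet fully populated" is distinguishable from one that is failing.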

Navy Shore Installation Management Balanced Scorecard

The Navy has developed a system for shore installation management (SIM) based on a balanced scorecard approach. The Navy’s SIM FY2003 Stockholders Report (Navy, 2003) notes that:

The balanced scorecard is particularly applicable for SIM because it is a management system (not only a measurement system) that enables organizations to clarify their vision and strategy and translate them into action by viewing the organization from four perspectives, developing metrics, collecting data, and analyzing the results relative to each of these perspectives. Simplified, and as agreed by the Navy’s SIM Shore Installation Planning Board (SIPB), it provides an improved methodology to gauge overall performance. (Navy, 2003, p. 10-1)

Although not yet fully populated, the SIM scorecard includes seven metrics to manage the Navy’s shore facilities and infrastructure to provide the greatest long-term benefit to the fleet. Following the balanced scorecard approach, the Commander of Naval Installations (CNI) has identified a family of performance measures that link cost and resource management, customer satisfaction, policies and procedures, and human capital management to the CNI’s vision and strategy. The Navy SIM balanced scorecard includes the following metrics:

Customer
- Percent of customers satisfied with performance

Investment
- Program to requirements ratio
- Budget to program ratio
- Execution to budget ratio

Process
- Percent of functional areas with approved standards
- Capability achieved to capability planned ratio

Workforce
- Employee satisfaction and effectiveness

The metrics assess both past performance and planning and budgeting for the future. The ratios are used as general indicators of the accuracy of assessed requirements, program credibility, budget accuracy, and alignment of budget and functional requirements. The Navy notes that to improve its decisions, it needs timely data that relate planning to programming decisions and that show the results of the programming and budgeting cycle in the execution phase. These metrics provide the additional detail needed at the service level to complement the DoD metrics discussed below.
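The three investment ratios can be computed directly from the dollar amounts at successive stages of the planning, programming, budgeting, and execution cycle. The sketch below assumes each ratio is simply the later-stage amount divided by the earlier-stage amount; the Navy report's exact definitions are not reproduced here, and the dollar figures are hypothetical.

```python
from __future__ import annotations


def sim_investment_ratios(requirements: float, program: float,
                          budget: float, execution: float) -> dict[str, float]:
    """Stage-to-stage ratios, each = later-stage dollars / earlier-stage dollars (assumed definition)."""
    if min(requirements, program, budget) <= 0:
        raise ValueError("requirements, program, and budget must be positive")
    return {
        "program_to_requirements": program / requirements,
        "budget_to_program": budget / program,
        "execution_to_budget": execution / budget,
    }


# Hypothetical amounts in $ millions.
ratios = sim_investment_ratios(requirements=500.0, program=400.0, budget=380.0, execution=361.0)
for name, value in ratios.items():
    print(f"{name}: {value:.2f}")
```

Read this way, a ratio well below 1.0 shows the stage at which funding falls away; in this hypothetical case the largest drop is between assessed requirements and the program.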

Although APPA and the Navy both use multiple-metric BSC systems to evaluate performance and support management decisions, their objectives and approaches vary. There is no single example that the committee can recommend for DOE to follow. However, the committee notes that DOE needs to expand the number of metrics it uses and ensure that they are used consistently throughout the department, and that these metrics should be coordinated and verifiable to support F&I management decisions at all levels.

Department of Defense Facilities Management Metrics

Prior to 2001, the Department of Defense (DoD) utilized the Backlog of Maintenance and Repair (BMAR) as the primary metric to ascertain the amount of funds required to sustain its facilities. BMAR provided a measure of what had not been done versus a measure of what should be done. Frustrated by BMAR’s inability to account for the department’s efforts to improve its facility maintenance, DoD developed the Facilities Sustainment Model (FSM) as a department-wide standard for measuring facilities maintenance requirements and improvements.

DoD now uses a comprehensive set of tools that includes the FSM, the Facilities Recapitalization Metric (FRM) to assess the recapitalization rate, and the Installation Readiness Report (IRR) to assess the output of its F&I programs. This set of metrics is used consistently by all defense services and at the departmental level to validate budget planning and funding requests (DoD, 2002).

The IRR is a tool that presents an annual picture of facility conditions and, as such, assists in the management of limited installation resources. The IRR uses the following four ratings:

Q-Ratings (Quality): Facilities deficiencies versus replacement plant value

N-Ratings (Quantity): Facilities assets versus requirements

F-Ratings (Combined): Overall assessment of quality and quantity (lower of N- and Q-ratings)

C-Ratings (Readiness): Relationship of the quantitative and qualitative ratings to mission readiness

FRM is expressed as the number of years it would take to regenerate a physical plant and is calculated as the ratio of the value of assets (i.e., replacement plant value) to the recapitalization programmed for the physical plant. The adequacy of the facility recapitalization budget can be assessed based on an estimated recapitalization requirement of 67 years. FRM is a macrolevel tool and is not applicable to a single facility. The application of FRM to other systems requires adjustment of the recapitalization requirement. Both FRM and FSM are dependent on adequate and correct inventories.
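Because FRM is a simple ratio, its arithmetic, and the annual funding implied by the 67-year benchmark, can be sketched as follows. The figures are hypothetical and, consistent with the text, describe a portfolio rather than a single facility. NASA's Facilities Revitalization Rate, described in the next section, has the same form (CRV divided by annual revitalization funding).

```python
import math

BENCHMARK_YEARS = 67  # recapitalization benchmark cited in the text


def facilities_recapitalization_metric(rpv: float, annual_recap: float) -> float:
    """Years to regenerate the plant: replacement plant value / annual recapitalization."""
    if annual_recap <= 0:
        return math.inf  # nothing programmed: the plant is never regenerated
    return rpv / annual_recap


def recap_funding_gap(rpv: float, annual_recap: float,
                      benchmark_years: float = BENCHMARK_YEARS) -> float:
    """Annual funding needed to meet the benchmark minus the funding programmed.

    A positive result is a shortfall; a negative result is a surplus.
    """
    return rpv / benchmark_years - annual_recap


# Hypothetical portfolio in $ millions: $10 billion RPV, $100 million per year programmed.
frm = facilities_recapitalization_metric(10_000.0, 100.0)
gap = recap_funding_gap(10_000.0, 100.0)
print(f"FRM = {frm:.0f} years; shortfall vs. 67 years = ${gap:.1f}M per year")
```

The 100-year FRM exceeds the 67-year benchmark, so the sketch reports a shortfall of roughly $49 million per year for this hypothetical portfolio.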

National Aeronautics and Space Administration Full Cost Management Model

The National Aeronautics and Space Administration (NASA) considers its real property to be an integral part of its mission and a corporate asset that requires ongoing capital investment (NASA, 2004). Beginning in FY2005, NASA will manage its facilities using a Full Cost Management Model. The model consists of new construction, revitalization/modernization, and sustainment components. The requirements in each category are determined using one of the four tools described below:

- Facilities Sustainment Model (FSM). Parametric model based on facility type and current replacement value (CRV) (another term for replacement plant value, or RPV). The NASA FSM is a large database of sustainment (maintenance and repair) costs adapted from the DoD FSM.
- Deferred Maintenance (DM). Parametric model based on a complete “fence-to-fence” visual inspection and assessment of facilities, and combined performance ratings of nine subsystems divided by the facility CRV. DM replaces the backlog of maintenance and repair (BMAR) as the agency metric.
- Facility Condition Index (FCI). A rating of 1–5 based on DM inspections.
- Facilities Revitalization Rate (FRR). Number of years it will take for a facility to be fully revitalized at a given rate of investment, calculated as CRV/annual revitalization funding.

U.S. Coast Guard Shore Facility Capital Asset Management Metrics

The U.S. Coast Guard (USCG) Office of Civil Engineering has developed a suite of performance metrics to provide information needed to support shore facility capital asset management decisions (Dempsey, 2003). The objective is to link F&I decisions to mission execution while integrating their impact on the cost of doing business and the cost of total ownership. The suite of metrics includes the following:

- Mission dependency index
- Facility condition indices
- Space utilization indices
- Suitability indices
- Physical security index
- Environmental compliance indices
- Real property assessment index
- Building code indices
- Total ownership cost ratio

The metrics are developed from interviews, questionnaires, and asset data for individual buildings and sites and linked to a geographical information system (GIS) database. The combined information can be used to make USCG-wide planning decisions based on the level and urgency of needs.

The mission dependency index (MDI) was originally created by the Naval Facilities Engineering Service Center as an operational risk management metric that relates facilities and infrastructure to mission readiness. The MDI starts with a standard questionnaire to rate the criticality of the mission and of the facilities to the mission. The scores from the questionnaire are weighted and normalized on a scale of 100, which is divided into five levels of mission dependency. This measure, combined with the other measures of facility condition, suitability, and costs, can be used to support rational capital asset management decisions.
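The weighting and normalization step can be sketched as below. The questionnaire items, weights, maximum score, and the use of five equal-width bands are all assumptions made for illustration; the actual MDI questionnaire and level boundaries are not given in this chapter.

```python
from __future__ import annotations


def mission_dependency_index(scores: list[float], weights: list[float],
                             max_score: float) -> float:
    """Weighted questionnaire score normalized to a 0-100 scale (hypothetical scheme)."""
    if not scores or len(scores) != len(weights):
        raise ValueError("need one weight per score")
    if any(not 0 <= s <= max_score for s in scores):
        raise ValueError("scores must lie in [0, max_score]")
    weighted = sum(s * w for s, w in zip(scores, weights))
    return 100.0 * weighted / (max_score * sum(weights))


def dependency_level(mdi: float, levels: int = 5) -> int:
    """Map an MDI to one of `levels` equal-width bands, 1 = lowest dependency (assumed bands)."""
    if not 0 <= mdi <= 100:
        raise ValueError("MDI must lie in [0, 100]")
    width = 100 / levels
    return min(int(mdi // width) + 1, levels)  # a score of exactly 100 stays in the top band


# Hypothetical answers, each scored 0-10: criticality of the mission, and
# criticality of the facility to that mission.
mdi = mission_dependency_index([9, 7], [0.6, 0.4], max_score=10)
print(round(mdi, 1), dependency_level(mdi))
```

Normalizing by `max_score * sum(weights)` keeps the index on a 0 to 100 scale regardless of how many questions are asked or how the weights are scaled.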

Facility Condition Index

The facilities management profession has long embraced the facility condition index (FCI) metric, which is the ratio of the cost of deferred maintenance to the replacement plant value (RPV) of the facility, expressed as a percentage. Building condition is often defined in terms of the FCI; for example, an FCI of 0 to 5 percent is rated good, 5 to 10 percent fair, 10 to 30 percent poor, and greater than 30 percent critical. The FCI is recognized internationally and has almost universal acceptance from facilities professionals in education, governments, institutions, and private industries. The FCI is an indicator of the condition of a single building; building FCIs can be combined arithmetically to assess a group of buildings or an entire portfolio of facilities, and the FCI has been used to determine the budget required to sustain facilities.
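The FCI calculation, the condition bands quoted above, and one way of combining buildings into a portfolio figure can be sketched as follows. Two choices here are assumptions: a value exactly on a band boundary is assigned to the better band, and the portfolio FCI is computed as total deferred maintenance over total RPV (an RPV-weighted combination). The building data are hypothetical.

```python
from __future__ import annotations


def fci_percent(deferred_maintenance: float, rpv: float) -> float:
    """Facility condition index: deferred maintenance / replacement plant value, in percent."""
    if rpv <= 0:
        raise ValueError("RPV must be positive")
    return 100.0 * deferred_maintenance / rpv


def fci_rating(fci: float) -> str:
    """Condition band from the thresholds in the text (boundary values go to the better band)."""
    if fci < 0:
        raise ValueError("FCI cannot be negative")
    if fci <= 5:
        return "good"
    if fci <= 10:
        return "fair"
    if fci <= 30:
        return "poor"
    return "critical"


def portfolio_fci(buildings: list[tuple[float, float]]) -> float:
    """Total deferred maintenance / total RPV, which weights each building by its RPV."""
    total_dm = sum(dm for dm, _ in buildings)
    total_rpv = sum(rpv for _, rpv in buildings)
    return fci_percent(total_dm, total_rpv)


# Hypothetical buildings as (deferred maintenance, RPV) in $ millions.
buildings = [(0.3, 10.0), (1.5, 12.0), (4.0, 8.0)]
for dm, rpv in buildings:
    f = fci_percent(dm, rpv)
    print(f"{f:5.1f}%  {fci_rating(f)}")
total = portfolio_fci(buildings)
print(f"portfolio: {total:.1f}%  {fci_rating(total)}")
```

Weighting by RPV limits the influence of the small building in critical condition: the portfolio FCI is about 19.3 percent, whereas a simple average of the three building FCIs would be about 21.8 percent.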

However, the FCI alone is not sufficient to assess the readiness of a facility to support its mission, as it represents the effectiveness of a maintenance program at a moment in time. The FCI is determined by the value of all the operational maintenance activities necessary to keep an inventory of facilities in good working order. It does not represent what is needed to keep existing facilities up-to-date, relevant to their mission, and compliant with current standards.

Needs Index

Using the FCI is a logical first step; however, another metric, the needs index (NI), provides a more robust and holistic assessment than the FCI alone. The NI was first introduced in 1998 by APPA. The NI is the sum of deferred maintenance and the funding needed for renovation, modernization, regulatory compliance, and other capital renewal requirements, divided by the RPV. NI combines the elements of sustainment and recapitalization to provide a more comprehensive metric for creating a business case for facilities funding.

The concept of sustainment includes regularly scheduled maintenance as well as anticipated repairs or replacement of components periodically over the expected service life of a facility. Recapitalization includes keeping the existing facilities up-to-date and relevant in an environment of changing needs such as code compliance. Recapitalization also includes facility replacement, but does not include facility expansion or other actions that add space to the inventory. It should be noted that the NI does not include any need that is not part of an institution’s current physical structure. In other words, the capital planning process is not part of the performance indicator because the future needs of an institution are not yet part of the institution’s RPV.
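The NI uses the same deferred maintenance and RPV inputs as the FCI, plus the capital renewal items, and excludes expansion of the inventory. A minimal sketch follows; the dollar amounts and category names are hypothetical, and the result is expressed as a percentage to parallel the FCI.

```python
from __future__ import annotations

from collections.abc import Mapping


def needs_index_percent(deferred_maintenance: float,
                        renewal_needs: Mapping[str, float], rpv: float) -> float:
    """NI = (deferred maintenance + capital renewal needs) / RPV, in percent.

    renewal_needs covers renovation, modernization, regulatory compliance, and
    other renewal of existing space. Expansion is deliberately left out because
    new space is not yet part of the current RPV.
    """
    if rpv <= 0:
        raise ValueError("RPV must be positive")
    return 100.0 * (deferred_maintenance + sum(renewal_needs.values())) / rpv


# Hypothetical campus, $ millions.
dm, rpv = 4.0, 50.0
renewal = {"renovation": 2.5, "modernization": 2.0, "regulatory compliance": 1.5}
fci = 100.0 * dm / rpv
ni = needs_index_percent(dm, renewal, rpv)
print(f"FCI = {fci:.1f}%, NI = {ni:.1f}%")
```

In this hypothetical case the FCI alone (8 percent) would suggest a facility in fair condition, while the NI (20 percent) shows that the full sustainment and recapitalization need is two and a half times larger.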

DOE METRICS

Chapter 3 discussed DOE performance metrics in terms of their application to planning and budgeting decisions. This section discusses these metrics in comparison to a set of preferred characteristics for performance metrics and management systems.

Preferred Characteristics of Performance Measures

There are three critical concerns when considering metrics for performance assessments: (1) understanding the nature of measures from an assessment perspective; (2) selecting measures that possess certain desirable properties or attributes; and (3) selecting measures that not only provide an assessment of how the program or system is performing but also support decisions that could improve the program or system.

Four sets of assessment measures are identified in Box 4-1. Although the first three sets (input, process, and outcome) have been discussed at length in the evaluation literature, the literature is not consistent regarding their respective definitions. For this reason, Box 4-1 identifies the measures in greater detail.² Spending a given number of dollars on maintenance of a facility is an input measure and proceeding according to specifications is a process measure, but they may or may not affect the outcome measures of occupant attitude, occupant behavior, or achievement of the program’s objectives, goals, and mission. In general, the input and process measures serve to explain the resultant outcome measures. Input measures alone are of limited usefulness since they indicate only a facility’s potential, not the actual performance. On the other hand, the process measures identify the facility’s performance, but do not consider the impact of that performance. The outcome measures are the most meaningful observations since they reflect the ultimate impacts of the facility on achieving the goals and mission of the programs operated in the facility. In practice, as might be expected, most of the available assessments are fairly explicit about the input measures, less explicit about the process measures, and somewhat fragmentary about the outcome measures.

The fourth set of evaluation measures, systemic measures, can be regarded as impact measures, but they have generally been overlooked in the evaluation literature. The systemic measures allow the facility’s impact to be viewed from a total systems perspective. Box 4-1 lists four systemic contexts in which to view the facility’s impacts. It is also important to view the facility and infrastructure in terms of the organizational context (i.e., National Nuclear Security Administration, Science, Environmental Management, Fossil Energy, Civilian Radioactive Waste Management, Energy Efficiency and Renewable Energy, and Nuclear Energy, Science, and Technology) within which it functions. Thus, the facility’s impact on the immediate organization and on other organizations needs to be assessed. The pertinent input, process, and outcome measures should be viewed over time, from a longitudinal perspective. That is, the impact of the facility must be assessed not only in comparison to an immediate period but also in the context of a longer time horizon. Only in this way can a facility’s condition be observed from a life-cycle perspective.

BOX 4-1
INPUT MEASURES (WHAT? REFLECTS POTENTIAL)
PROCESS MEASURES (HOW? REFLECTS PERFORMANCE)
OUTCOME MEASURES (WHY? REFLECTS NEAR TERM IMPACT)
SYSTEMIC MEASURES (WHY? REFLECTS SYSTEMIC IMPACT)
SOURCE: Adapted from Tien (1999), pp. 811-824.

The first three systemic contexts can be regarded as focused more on facility performance, while the fourth assesses the facility results from a broader, policy-oriented perspective. In addition to assessing the policy implications, it is important to address feasible and beneficial alternatives to the program. The alternatives could range from slight improvements to the existing facility to recommendations for new and different facilities. More importantly, whatever input, process, outcome, and systemic measures are employed, they should all be independently reviewed to ensure that they possess the following five important attributes.

- Measurability. Are the pertinent measures well defined and specific? Are they measurable? Are they valid? Are they easy to interpret and hard to dispute? Are they available? Are they easy to obtain?

- Reliability. Are the sites consistent in the way they define a particular measure? Are the measures obtained in one period or setting statistically the same as those obtained in another period or setting? Are measures that are derived from two or more other measures (e.g., percentages, averages) subject to instability (i.e., a change in the derived measure cannot be explicitly attributed)? Are the measures reliably up-to-date?

- Accuracy. Are the reported measures actually measuring what they should? Have they been checked, double-checked, and perhaps even triple-checked? Are they obtained at the source? Do they suffer from transcription or instrumentation errors?

- Robustness. Are the pertinent measures robust in scope (e.g., averages are not robust because they fail to capture the underlying variability in data, whereas quantile measures may be preferred since they provide a better understanding of inherent variability)? Do the measures reflect critical variability in regard to contamination, cost of clean-up, or other factors?

- Completeness. Does the group of selected measures effectively describe the system’s input, process, and impacts? Does the derived index or combination of indices reflect a complete picture of the system?
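The robustness attribute's point about averages and quantiles can be illustrated with two hypothetical sites whose building FCIs have the same mean but very different spreads. The sketch uses Python's standard `statistics` module (Python 3.8 or later); `statistics.quantiles` uses its default "exclusive" method here, and other quantile conventions give slightly different cut points.

```python
from __future__ import annotations

import statistics


def summarize(values: list[float]) -> dict[str, float]:
    """Mean plus quartiles and maximum, so the spread behind the mean is visible."""
    q1, median, q3 = statistics.quantiles(values, n=4)  # default 'exclusive' method
    return {"mean": statistics.fmean(values), "q1": q1, "median": median,
            "q3": q3, "max": max(values)}


# Hypothetical building FCIs (percent) at two sites with the same average.
site_a = [10.0, 10.0, 10.0, 10.0, 10.0]
site_b = [2.0, 2.0, 2.0, 2.0, 42.0]
for name, data in (("A", site_a), ("B", site_b)):
    print(name, summarize(data))
```

Both sites average an FCI of 10 percent, which would be rated fair, but site B's upper quartile (22 percent) and maximum (42 percent) reveal a building in critical condition that the mean conceals.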

From a systems perspective, performance measures should be used not only to assess or provide feedback on the status or impact of a facility or site but also to support management decisions, through decision support modeling that could improve the entire system of facilities or sites. Such an integrated management system is discussed later in this chapter, with proposed metrics that are measurable, reliable, accurate, robust, and complete. The metrics included in the proposed model can be combined to provide indices that reflect performance (e.g., ACI and AUI) and impact (e.g., mission condition index), and they can also support critical decisions concerning performance evaluation, performance prediction, budget planning, budget allocation, life-cycle cost analysis, and location and construction of new mission-oriented laboratories.

DOE Department-Wide Metrics

DOE Order O 430.1B, Real Property Asset Management (RPAM) (DOE, 2003), defines two corporate-level performance measures, and departmental planning guidance has set a benchmark metric for maintenance funding. The order references requirements for performance measures that link the performance of program goals and budgets to outputs and outcomes, but development of these measures and related performance targets is delegated to the program and site offices.

DOE’s department-wide performance measures include the following:

- The asset utilization index (AUI) is defined in RPAM as “the ratio of the area of operating facilities or land holdings justified through annual utilization surveys (numerator) to the area of all operational and excess facilities or land holdings without a funded disposition plan (denominator).”
- The asset condition index (ACI) is defined in RPAM as “one (1) minus the facility condition index (FCI), where FCI is the ratio of deferred maintenance to replacement plant value (RPV).”
- Departmental planning guidance sets a maintenance funding target at a minimum of 2 percent of RPV.
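The two RPAM indices and the funding target reduce to simple ratios, sketched below with hypothetical site data. One reading is assumed: the AUI denominator is taken as operating area plus excess area that lacks a funded disposition plan. The FCI inside the ACI is used as a ratio, not a percentage.

```python
def asset_utilization_index(justified_area: float, operating_area: float,
                            excess_area_without_funded_plan: float) -> float:
    """AUI: area justified by utilization surveys over operating area plus excess
    area lacking a funded disposition plan (one reading of the RPAM definition)."""
    denominator = operating_area + excess_area_without_funded_plan
    if denominator <= 0:
        raise ValueError("denominator area must be positive")
    return justified_area / denominator


def asset_condition_index(deferred_maintenance: float, rpv: float) -> float:
    """ACI = 1 - FCI, with FCI expressed as a ratio (not a percent)."""
    if rpv <= 0:
        raise ValueError("RPV must be positive")
    return 1.0 - deferred_maintenance / rpv


def minimum_maintenance_funding(rpv: float, rate: float = 0.02) -> float:
    """Departmental planning target: at least 2 percent of RPV."""
    return rate * rpv


# Hypothetical site: areas in square feet, dollars in millions.
aui = asset_utilization_index(900_000, 950_000, 50_000)
aci = asset_condition_index(30.0, 1_000.0)
target = minimum_maintenance_funding(1_000.0)
print(f"AUI = {aui:.2f}, ACI = {aci:.2f}, minimum maintenance = ${target:.0f}M")
```

None of the three numbers indicates what it would cost to move the ACI to a given level or how that change would affect the mission, which is the limitation the committee raises in the paragraph that follows.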

In the context of the preferred characteristics identified above, both the AUI and the ACI are process measures rather than outcome measures (see Box 4-1). Furthermore, both measures lack reliability and robustness and, even coupled with the target funding measure, are not complete enough to adequately describe the F&I management system’s input, process, and impacts, or to guide critical F&I decisions. For example, how does attaining an ACI of 0.95 by 2005 translate into the dollars required to achieve it, or relate to accomplishing DOE’s missions? The committee believes that a more complete set of measures is needed, as described in the integrated facility management system below.

As discussed in Chapter 3, the committee believes that a maintenance funding target based on a rule of thumb of 2 to 4 percent of RPV is of limited value because it is not robust and does not form a complete measurement system when combined with the two process measures. The committee urges DOE to develop a metric that can assess F&I maintenance budget requirements with more precision than a range of $1.5 billion. To increase F&I planning and budget precision, DOE should develop a group, or scorecard, of six to eight measures that include input, process, outcome, and systemic measures that meet the five attributes discussed above.
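A percentage-of-RPV rule leaves an uncertainty that grows in proportion to RPV: a 2 to 4 percent band is always 2 percent of RPV wide. The chapter does not state the RPV behind the $1.5 billion figure. If that figure is the spread between 2 and 4 percent of RPV (our reading), the sketch below shows it corresponds to an RPV of about $75 billion; this is arithmetic on the stated percentages, not a reported DOE value.

```python
from __future__ import annotations


def maintenance_budget_range(rpv: float, low: float = 0.02,
                             high: float = 0.04) -> tuple[float, float]:
    """Rule-of-thumb maintenance budget band, with bounds given as fractions of RPV."""
    return low * rpv, high * rpv


def rpv_for_spread(spread: float, low: float = 0.02, high: float = 0.04) -> float:
    """RPV at which the band between `low` and `high` is `spread` dollars wide."""
    if high <= low:
        raise ValueError("high must exceed low")
    return spread / (high - low)


implied_rpv = rpv_for_spread(1.5e9)
lo, hi = maintenance_budget_range(implied_rpv)
print(f"RPV ${implied_rpv / 1e9:.0f}B -> maintenance ${lo / 1e9:.1f}B to ${hi / 1e9:.1f}B")
```

Narrowing the band, rather than choosing a different midpoint, is what would increase budget precision, which is why the committee asks for a metric grounded in facility-specific data instead of a fixed percentage.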

Congressionally Mandated Metric

Congress requires (U.S. Congress, 2001) that an equivalent square footage of DOE excess facilities be taken down or demolished if a new facility is to be constructed. Although the intent of this mandate is admirable, there have been some less than admirable consequences. The use of a simple metric that only assesses the area of new facilities and the area of demolished facilities has driven decisions that are detrimental to DOE’s facilities and infrastructure objectives. For example, the committee is aware of excess facilities proposed for demolition based on cost without consideration of the impact on the program, such as demolishing an employee cafeteria so that a facility could be constructed at a different location on the same campus. It would have been more beneficial to DOE’s objectives if a hazardous building on the same campus were cleaned up and demolished, even though the comparable expenditure would not have yielded an equivalent square footage.

If the intent of the requirement is to reduce DOE’s inventory of excess real property assets and improve the department’s facilities, the committee hopes that Congress will revise its requirement to address decontamination and demolition by measuring the equivalent dollar value of repair, replacement, demolition, and cleanup to be undertaken if a new facility is to be constructed. Clearly, the intent should be to get rid of hazardous facilities first, before benign facilities are demolished. Unfortunately, if Congress wants to both improve the quality of facilities and reduce the inventory, then funding will need to match the cost of demolition and disposal.

The current metric, AUI, responds to the congressional mandate but, as noted above, is insufficient for effective management of DOE assets. The AUI needs to be supplemented with a measure that addresses the impact of an excess asset on the site and on the DOE complex. This could be accomplished with a risk-adjusted AUI that factors in the relative impact of the excess area, or with an additional measure of excess property liability obtained by dividing the estimated cost for decommissioning and demolition by the RPV.
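Both supplements can be sketched in a few lines. The liability ratio follows the text directly. For the risk-adjusted AUI, the chapter does not say how impact should be factored in, so the scheme below, which scales each excess parcel's area by a hypothetical impact weight (1.0 for benign, larger for hazardous), is an assumption for illustration, as are all of the areas and costs.

```python
from __future__ import annotations


def excess_liability_ratio(dd_cost_estimate: float, rpv: float) -> float:
    """Estimated decommissioning and demolition cost divided by RPV."""
    if rpv <= 0:
        raise ValueError("RPV must be positive")
    return dd_cost_estimate / rpv


def risk_adjusted_aui(justified_area: float, operating_area: float,
                      excess: list[tuple[float, float]]) -> float:
    """AUI with each excess parcel's area scaled by an impact weight
    (1.0 = benign; larger = more hazardous). The weighting scheme is hypothetical."""
    weighted_excess = sum(area * weight for area, weight in excess)
    return justified_area / (operating_area + weighted_excess)


cafeteria = (25_000, 1.0)   # benign excess building, sq ft, weight 1.0
hazardous = (25_000, 3.0)   # contaminated excess building, same size, weight 3.0
base = risk_adjusted_aui(900_000, 950_000, [cafeteria, hazardous])
after_cafeteria = risk_adjusted_aui(900_000, 950_000, [hazardous])
after_hazardous = risk_adjusted_aui(900_000, 950_000, [cafeteria])
print(f"{base:.3f} {after_cafeteria:.3f} {after_hazardous:.3f}")
print(f"liability ratio: {excess_liability_ratio(45.0, 1_000.0):.3f}")
```

Because the two buildings are the same size, a plain square-footage AUI would credit either demolition equally. With impact weights, removing the hazardous building raises the index more, which matches the committee's point that hazardous facilities should be removed first.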

DOE Assessment Data

Timely, informed decisions cannot be made without up-to-date, accurate data. A large number of measures are collected and stored in DOE’s Facilities Information Management System (FIMS). Although the committee is impressed with the quality assurance plan for FIMS, it is not clear whether the FIMS data fully satisfy the five attributes of effective metrics (measurability, reliability, accuracy, robustness, and completeness). Furthermore, as with many data warehouses, the stored data are typically not identified or defined in terms of the information required for critical decision making. The unchecked growth of a data warehouse aggravates a condition known as “data rich and information poor” (DRIP). The DRIP problem highlights the need not only to collect decision-driven data but also to be consistent about data definitions and other data quality concerns, such as the need to integrate data before they are mined for information to support tactical and strategic decisions.

The other DOE F&I data warehouse is the Condition Assessment Information System (CAIS), which contains the Condition Assessment Survey (CAS) results. Unfortunately, as noted in Chapter 3, DOE sites are not required to provide CAIS data, and there is no quality assurance plan for CAIS. As a consequence, CAIS data lack measurability, reliability, accuracy, robustness, and completeness. Moreover, the real property data elements in CAIS are not necessarily consistent with the corresponding FIMS real property data elements. Since CAS (or CAS-equivalent) results constitute an important input to the integrated approach proposed by the committee, the CAIS should become a part of FIMS and subject to FIMS quality assurance mandates. In addition, the integrated FIMS/CAIS database should require standardized and consistent data, subject to well-defined data collection and updating procedures.

INTEGRATED MANAGEMENT APPROACH

As indicated earlier in this chapter, it is important to select performance measures that provide for an assessment of how the program or system is performing, support management decisions, and provide the basis for continuing improvement of the program. The facilities management system (FMS) focuses on performance measures and management systems, which can be part of a balanced scorecard approach to program improvement. The expenditures required to establish an integrated facilities management system for DOE are justified when considering the amount of money that the department spends each year on the operations, maintenance, repair, recapitalization, and replacement of facilities.

In order to minimize the overall costs and maximize the benefits of facilities stewardship, a systematic approach is needed to manage F&I. As discussed in Chapters 2 and 3, facilities management requires a life-cycle process of planning, designing, constructing, operating, renewing, and disposing of facilities in a cost-effective manner. It should combine engineering principles with business practices and economic theory to facilitate a more organized and logical approach to decision making. An effective FMS is composed of operational packages (including methods, procedures, data, software, policies, and decisions) that link and enable all the activities involved in facilities management. In other words, FMS is a logical process composed of an assembly of functional components that support the successful execution of the facilities management process. The FMS described below is intended to provide the same type of decision support information as the suite of metrics used by the U.S. Coast Guard. However, FMS is proposed as an outgrowth of DOE’s current performance measurement system and therefore is a variation of the best practices described above.

FMS integration can make more effective and efficient use of scarce resources, with effectiveness as an indicator of whether a program is successful in meeting its objectives and efficiency as a measure of how well a program is using resources in achieving its objectives. The ultimate goal of FMS integration is to promote efficiency (doing things right) in order to make the program more effective (doing the right thing).

Integrated management of facilities and infrastructure has many advantages, and substantial benefits can be achieved through its implementation. The benefits include the following:

- Free flow of information. Integration yields greater compatibility, which allows data and information to be accessed and shared from one system to another and from one department to another.

- Elimination of redundant data. Duplicate efforts in data collection and storage are eliminated through data sharing, and limited resources can be used more effectively.

- Better solutions. An integrated system allows analysis results from one system to be immediately available to others, and results among systems can be used together to achieve overall optimal decisions.

- Cost reduction in system development and maintenance. Effective integration will reduce the total system development costs through coordinated and standardized software coding. Department-wide standardization of software can also make future system maintenance less expensive.

System integration does not mean creating one huge and complicated system; rather, it is a process in which all the components of subsystems are logically linked together on a common platform with a modular approach.

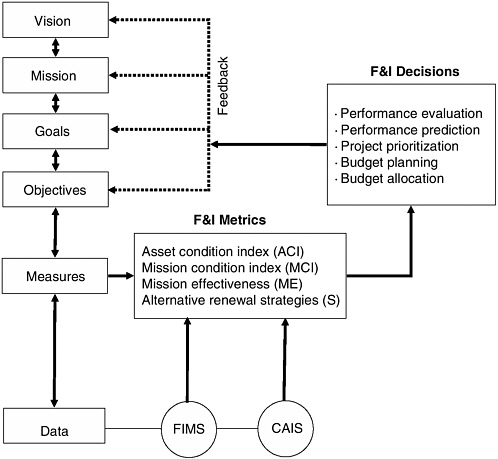

The concept of an integrated management system for DOE F&I is depicted in Figure 4-1. In this system, the F&I-related vision is tied to the program mission, goals, and objectives. In turn, the higher-level decisions are supported by information derived from data in the FIMS/CAIS data warehouse and from the metrics described below. Most importantly, the measures employed to support critical F&I decisions also provide essential feedback for updating and modifying the vision, mission, goals, and objectives. Thus, the data and metrics can be used not only to assess F&I, as DOE is doing with the ACI and AUI metrics, but also to manage the F&I through decision support models.

Metrics and Decisions

This section addresses the F&I metrics and critical F&I decisions that the committee believes are the core of effective F&I management. There are other metrics and decisions that can be considered by DOE, but the purpose here is to illustrate an integrated management system that can be adapted to specific DOE applications.

Metrics

Four metrics are considered: asset condition index (ACI), mission condition index (MCI), mission effectiveness (ME), and alternative renewal strategies (S).

FIGURE 4-1 An integrated management approach for DOE facilities and infrastructure.

Most of the data for these metrics are derived from data that have already been collected and that are available in the FIMS and/or CAIS databases.

Asset Condition Index The ACI of facility f, ACI(f), is a measurement of the physical and structural fitness or integrity of a facility or infrastructure, which typically deteriorates over time due to various factors such as utilization, environment, material degradation, and construction quality. Currently, DOE’s ACI measurements are on a scale from 0 to 1 and are stored in FIMS. If obtained objectively and consistently, the ACI can be a good indicator of the physical condition of a facility. It should be emphasized that the ACI should be measured at the facility level in order to support such decisions as prioritizing renewal projects, and the data need to be based on consistent assumptions and verifiable for use at the departmental level.

Mission Condition Index The mission condition index (MCI) is a measurement of the physical and structural fitness of a facility or infrastructure in fulfilling a particular mission or sub-mission. Facilities are not built simply to house equipment or people but rather to provide support for successfully completing a mission. Therefore, measuring only the physical condition is inadequate. For example, the physical condition of a facility might be perfect (i.e., ACI = 1), but if the facility cannot meet the mission needs at all, then its MCI should be 0.

A facility’s MCI is related to its ACI by a factor k, the mission condition adjustment factor, which is defined on a scale from 0 to 1 to represent the degree to which the facility supports a particular mission or sub-mission. Thus the MCI of facility f for sub-mission sm is expressed as:

$$\mathrm{MCI}(f, sm) = k(f, sm) \times \mathrm{ACI}(f)$$

where k(f, sm) is the mission condition adjustment factor of facility f for sub-mission sm, and ACI(f) is the asset condition index of facility f. Table 4-1 can be used to determine the possible values of k(f, sm). If both k and ACI range from 0 to 1, then so does the MCI.
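A minimal sketch of the MCI calculation, assuming k and ACI are already on the 0-to-1 scale. The band list transcribes the mission-condition column of Table 4-1 (each band keyed by its lower bound), and the function names are hypothetical. The usage lines reproduce the perfect-but-useless facility from the text (ACI = 1, k = 0) and the office building’s initial values from the worked example in Table 4-4.

```python
# Table 4-1 bands as (lower bound of k, mission-condition rating)
TABLE_4_1 = [
    (0.80, "Excellent"),
    (0.60, "Good"),
    (0.40, "Fair"),
    (0.20, "Poor"),
    (0.00, "Very Poor"),
]


def rating_for(k: float) -> str:
    """Return the Table 4-1 mission-condition label for an adjustment factor k."""
    if not 0.0 <= k <= 1.0:
        raise ValueError("k must lie in [0, 1]")
    for lower, label in TABLE_4_1:
        if k >= lower:
            return label
    raise AssertionError("unreachable: the last band starts at 0")


def mission_condition_index(k: float, aci: float) -> float:
    """MCI(f, sm) = k(f, sm) * ACI(f), with both inputs on a 0-1 scale."""
    for name, value in (("k", k), ("ACI", aci)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {value}")
    return k * aci


# A physically perfect facility that cannot support the mission at all.
print(mission_condition_index(0.0, 1.0))
# Office building, initial values from Table 4-4 (k = 0.65, ACI = 0.48).
print(rating_for(0.65), round(mission_condition_index(0.65, 0.48), 3))
```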

Mission Effectiveness Index Mission effectiveness index (ME) is a measurement of how effective a facility is in fulfilling the overall mission. The mission effectiveness of facility f is derived from MCI(f, sm) adjusted by two weighting factors: w(f→sm), the relative criticality of facility f to sub-mission sm, and w(sm→m), the relative criticality of sub-mission sm to the overall mission m. Assuming that all the w weights range from 0 to 1 (i.e., similar to the Table 4-1 weighting scheme), ME(f) is the product of these three factors:

$$\mathrm{ME}(f) = w(sm \to m) \times w(f \to sm) \times \mathrm{MCI}(f, sm)$$

TABLE 4-1 Determining Mission Condition and Effectiveness Adjustment Factors

| Degree of Support: Mission Condition | Degree of Support: Mission Effectiveness | k, Mission Condition Adjustment Factor, or w(sm→m), Mission Effectiveness Factor |
|---|---|---|
| Excellent | Critical | 0.80–1.00 |
| Good | Essential | 0.60–0.79 |
| Fair | Necessary | 0.40–0.59 |
| Poor | Optional | 0.20–0.39 |
| Very Poor | Expendable | 0.00–0.19 |
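The product form of ME can be checked in a few lines. This sketch uses the residence values from the worked example (Table 4-4), where the facility’s combined criticality w(f→m) plays the role of w(f→sm); the function name is illustrative.

```python
def mission_effectiveness(w_sm_to_m: float, w_f_to_sm: float, mci: float) -> float:
    """ME(f) = w(sm->m) * w(f->sm) * MCI(f, sm); every factor on a 0-1 scale."""
    for value in (w_sm_to_m, w_f_to_sm, mci):
        if not 0.0 <= value <= 1.0:
            raise ValueError("all factors must lie in [0, 1]")
    return w_sm_to_m * w_f_to_sm * mci


# Residence from Table 4-4: w(sm->m) = 0.6 (teaching), w(f->m) = 0.15,
# MCI = ko * ACIo = 0.85 * 0.5 = 0.425.
me_residence = mission_effectiveness(0.6, 0.15, 0.85 * 0.5)
print(f"{me_residence:.5f}")  # 0.03825
```

Because every factor is at most 1, ME can never exceed the facility’s MCI; a low-criticality facility in excellent condition still scores low.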

Determining Mission Condition Index and Mission Effectiveness Index Adjustment Factors DOE will need to develop a process for determining the MCI and ME factors that is consistent throughout the department. FIMS and CAIS do not currently contain all the data needed to support the proposed integrated FMS, but fields could be added. Data similar to those used to determine the needs index or the USCG’s suite of performance measures are needed to support adjustment factor decisions, but the process will always require the application of expert judgment. To begin with, DOE will need to develop detailed performance definitions and a cadre of trained personnel who can apply these definitions consistently throughout the department. As more data are collected over time, key performance indicators may be identified that correlate with the adjustment factors.

Alternative Strategies Alternative strategies (S) are possible strategies for the sustainment and renewal of a facility. Each alternative strategy can be defined as a possible action to be taken, together with its corresponding unit cost and expected impact on the facility. An example set of the alternative strategies for the sustainment and renewal of DOE facilities and infrastructure is provided in Table 4-2. The unit cost of the alternative strategies would be approximated by using the average cost of the specific actions in the corresponding strategy category.
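One way to represent the strategy set of Table 4-2 is as a small record type holding a unit cost and the expected change in ACI and k. In this sketch the unit costs and deltas are hypothetical placeholders (Table 4-2 leaves the costs unspecified), the k column is read as a change in k, and disposal/reconstruction is modeled as resetting both ACI and k to 1, consistent with the “Demo & Replace” row of Table 4-4.

```python
from dataclasses import dataclass


def _clip(x: float) -> float:
    """Keep an index on the 0-1 scale."""
    return min(1.0, max(0.0, x))


@dataclass(frozen=True)
class Strategy:
    name: str
    unit_cost: float            # C_s(f), $/ft2 (hypothetical values below)
    delta_aci: float            # expected change in ACI
    delta_k: float              # expected change in k (assumed reading of Table 4-2)
    new_facility: bool = False  # disposal/reconstruction: ACI and k reset to 1

    def apply(self, aci: float, k: float) -> tuple[float, float]:
        """Return (ACI_s, k_s) for a facility currently at (aci, k)."""
        if self.new_facility:
            return 1.0, 1.0
        return _clip(aci + self.delta_aci), _clip(k + self.delta_k)


# Categories follow Table 4-2; costs and deltas are placeholders.
STRATEGIES = [
    Strategy("Do nothing", 0.0, 0.0, 0.0),
    Strategy("Routine maintenance", 5.0, 0.0, 0.0),   # postpones deterioration
    Strategy("Minor renewal", 40.0, 0.1, 0.1),        # delta ACI < 0.2
    Strategy("Major renewal", 120.0, 0.3, 0.2),       # delta ACI >= 0.2
    Strategy("Disposal/reconstruction", 325.0, 0.0, 0.0, new_facility=True),
]

for s in STRATEGIES:
    aci_s, k_s = s.apply(aci=0.5, k=0.65)
    print(f"{s.name:24s} ${s.unit_cost:6.1f}/ft2 -> ACI {aci_s:.2f}, k {k_s:.2f}")
```

In a static analysis “do nothing” and “routine maintenance” look identical; the difference only appears once deterioration over time is modeled.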

Management Decisions

The committee believes that DOE should include formal analysis or modeling processes in its management procedures so that decisions can be made in a consistent and timely manner. The committee has recommended consideration of DoD’s Facilities Sustainment, Restoration, and Modernization (S/RM) construct for department-level planning and budgeting decisions. The proposed FMS considers five facility-level decisions: performance evaluation, performance prediction, project prioritization, budget planning, and budget allocation. If a consistent process is used for these decisions, they can be combined to support decisions for sites and programs, and used with the S/RM for departmental decisions.

TABLE 4-2 Facility Renewal Strategies, Unit Cost, and Impact

| Strategy (S) | Unit Cost, Cs(f), $/ft² | Impact on Facility: ACI | Impact on Facility: k |
|---|---|---|---|
| Do nothing | | No impact | 0 |
| Routine maintenance | | Postpone deterioration (ΔACI = 0) | 0 |
| Minor renewal | | Marginal improvement (ΔACI < 0.2) | < 0.2 |
| Major renewal | | Significant improvement (ΔACI ≥ 0.2) | ≥ 0.2 |
| Disposal/reconstruction | | New facility | 1 |

Facility Performance Evaluation The main purpose of facility performance evaluation is to determine the current condition of a facility in order to make engineering decisions. The measures or indices for characterizing the condition of each subsystem may differ, but the general structure of the evaluation models should be consistent. It is helpful to aggregate detailed individual measures at the subsystem level into a measure to describe the overall performance at the system level. For the purpose of prioritizing facility renewal needs, both the facility’s physical condition (i.e., ACI) and mission condition (i.e., MCI) should be considered in any performance evaluation model.

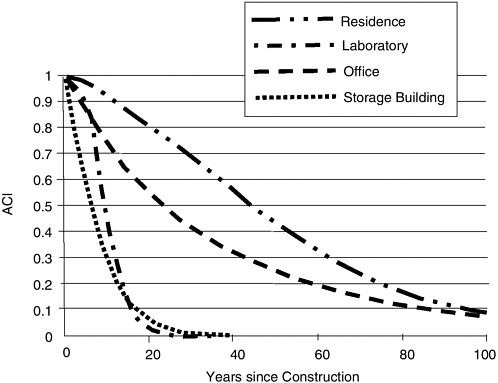

Performance Prediction Performance prediction is based on an understanding of the facility’s life cycle and its deterioration over time. In order to plan for facility sustainment and renewal, it is necessary to predict the future condition of a facility at any given. Developing robust models to predict deterioration and performance over time is a challenging task because of the complexity of factors that affect the performance of facilities. Facility deterioration has been described as a symmetric S-shape curve (Lufkin, 2004). The committee developed a facility deterioration curve (FDC) model by modifying the Pearl curve, which was originally constructed for S-shape growth phenomena,

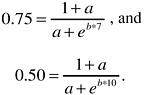

where FDC(f, t) is the facility deterioration curve for facility f at time t, and a and b are constants to be determined through model calibration with observed data. For example, if we know that the condition of a facility, in terms of ACI, has deteriorated from its brand new condition (ACI = 1.0) to ACI = 0.75 at year 7 (i.e., t0.75 = 7) and kept deteriorating to ACI = 0.5 at year 10 (i.e., t0.5 = 10), then we have two data points:

By plugging these data into the S-curve equation ![]() we have:

we have:

From these two equations, we can solve for the two unknown constants a and b with the following results:
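The calibration can be done numerically for any pair (t0.75, t0.5), assuming the curve form FDC(t) = (1 + a)/(a + e^{bt}), which reproduces the parameters in Table 4-3. Substituting the two data points gives a = 3e^{b t0.75} − 4 and a = e^{b t0.5} − 2; eliminating a leaves g(b) = e^{b t0.5} − 3e^{b t0.75} + 2 = 0, whose nonzero root this sketch finds by bisection. A positive root exists, and is unique, exactly when t0.75 < t0.5 < 3 t0.75, which holds for all four curves in Table 4-3. Bisection is our choice of root finder, not necessarily the committee’s method.

```python
import math


def fdc(t: float, a: float, b: float) -> float:
    """Facility deterioration curve: (1 + a) / (a + e^(b t)); equals 1 at t = 0."""
    return (1 + a) / (a + math.exp(b * t))


def calibrate_fdc(t75: float, t50: float) -> tuple[float, float]:
    """Find a, b such that fdc(t75) = 0.75 and fdc(t50) = 0.5."""
    if not t75 < t50 < 3 * t75:
        raise ValueError("a positive solution requires t75 < t50 < 3 * t75")

    # fdc(t75) = 0.75  ->  a = 3 e^(b t75) - 4
    # fdc(t50) = 0.50  ->  a = e^(b t50) - 2
    # Eliminating a gives g(b) = 0; b = 0 is a trivial root, we want b > 0.
    def g(b: float) -> float:
        return math.exp(b * t50) - 3 * math.exp(b * t75) + 2

    lo, hi = 1e-9, 1.0 / t75   # g(lo) < 0 just above the trivial root
    while g(hi) <= 0:          # widen the bracket until g changes sign
        hi *= 2
    for _ in range(100):       # bisection on [lo, hi]
        mid = 0.5 * (lo + hi)
        if g(mid) < 0:
            lo = mid
        else:
            hi = mid
    b = 0.5 * (lo + hi)
    return math.exp(b * t50) - 2, b


# Inputs (t0.75, t0.5) from Table 4-3.
for name, (t75, t50) in {"Residence": (3.5, 7), "Research": (7, 10),
                         "Office": (10, 25), "Storage": (25, 45)}.items():
    a, b = calibrate_fdc(t75, t50)
    print(f"{name:9s} a = {a:8.4f}  b = {b:.5f}  "
          f"check: {fdc(t75, a, b):.3f}, {fdc(t50, a, b):.3f}")
```

Run on the Table 4-3 inputs, this should reproduce the tabulated a and b to within rounding; for the office curve (t0.75 = 10, t0.5 = 25) it gives a ≈ −0.232 and b ≈ 0.0228.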

Four important observations can be made concerning the FDC: (1) it can be shown that for a newly constructed, mission-directed facility, its ACI over time is equal to its FDC, with a value of 1 at time 0; (2) as depicted in Figure 4-2(a), the S-curve for a facility with sustainment would be higher than the corresponding S-curve for the same facility without sustainment (i.e., maintenance tends to extend the facility’s useful life); (3) as depicted in Figure 4-2(b), renewals also tend to extend a facility’s useful life by raising the remaining portions of the S-curve; and (4) when considering a facility’s life cycle, FDC and ACI are functions of time.

FIGURE 4-2 Impact of maintenance and renewals on the asset condition index.

Project Prioritization In general, budgets are insufficient to fund all of an organization’s facilities maintenance and renewal projects, leading to the need to prioritize the projects. The most commonly used approach is to prioritize needs over a particular time horizon (typically a year) by assessing the impact of a predefined set of renewal strategies using a predefined prioritization criterion, usually the ratio of effectiveness to cost. Such a cost-effectiveness analysis can be carried out with relative ease if the data are available to apply decision support metrics.

Budget Planning For the purpose of planning, it is necessary to know the budget needed to keep both individual facilities and the entire complex of facilities at a specified level of service. The estimated budget can serve as the basis for both budget plans and annual budget requests. Mathematically, the budget planning objective is to minimize total cost for facilities given performance constraints—for example, that the average MCI value of all the facilities should be kept above a desired target level. Budget planning models such as the DoD’s S/RM are typically formulated as a linear programming, integer programming, or other optimization problem.
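To make the planning formulation concrete, the sketch below enumerates every combination of per-facility options and keeps the cheapest one whose average MCI meets the target. Exhaustive enumeration stands in here for the linear or integer programming used in practice and is only workable for a handful of facilities; the three facilities, their options, and the use of an unweighted average (an area-weighted average may be more appropriate) are hypothetical.

```python
from itertools import product

# Hypothetical options per facility: (strategy, cost in $ million, resulting MCI)
OPTIONS = {
    "A": [("do nothing", 0.0, 0.30), ("minor renewal", 2.0, 0.45), ("major renewal", 6.0, 0.70)],
    "B": [("do nothing", 0.0, 0.55), ("minor renewal", 1.5, 0.65), ("major renewal", 4.0, 0.85)],
    "C": [("do nothing", 0.0, 0.20), ("minor renewal", 3.0, 0.40), ("major renewal", 9.0, 0.90)],
}


def plan_min_cost(options, target_avg_mci):
    """Cheapest assignment of one option per facility with average MCI >= target.

    Returns (cost, {facility: strategy}, average MCI), or None if infeasible.
    """
    names = list(options)
    best = None
    for combo in product(*(options[n] for n in names)):
        avg_mci = sum(mci for _, _, mci in combo) / len(combo)
        if avg_mci < target_avg_mci:
            continue  # violates the performance constraint
        cost = sum(c for _, c, _ in combo)
        if best is None or cost < best[0]:
            best = (cost, {n: s for n, (s, _, _) in zip(names, combo)}, avg_mci)
    return best


print(plan_min_cost(OPTIONS, target_avg_mci=0.60))
```

The number of combinations grows exponentially with the number of facilities, which is why department-scale planning relies on solvers rather than enumeration.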

Budget Allocation In general, the budget received is different from (usually less than) the budget requested. As a consequence, one of the key functions for facilities and infrastructure management is to select projects from a population of planned maintenance and renewal efforts so that the budget is not exceeded. Budget allocation can be accomplished by using a wide range of methodologies, from the simplest ranking to sophisticated optimization. For budget allocation using a simple ranking process, the adjusted MCI can be used. From the perspective of optimization, the purpose of the budget allocation process is to maximize the total performance of all the facilities over a predetermined analysis period (usually the lifespan of the facilities) under a number of constraints, including, for example, that the budget is not exceeded. Again, as with budget planning, budget allocation models are typically formulated as a linear programming, integer programming, or other optimization problem.
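For the optimization view of budget allocation, a common textbook formulation is the 0/1 knapsack problem: each project is either funded or not, and total benefit is maximized without exceeding the budget. This dynamic-programming sketch assumes integer costs and a single benefit score per project; the projects and scores are hypothetical, and the choice of benefit measure (for instance, a gain in ME) is left to the analyst.

```python
def allocate_knapsack(projects, budget):
    """0/1 knapsack by dynamic programming.

    projects: iterable of (name, cost, benefit) with non-negative integer cost.
    Returns (total benefit, chosen project names) with total cost <= budget.
    """
    # best[c] = best (benefit, names) achievable with total cost at most c
    best = [(0.0, ())] * (budget + 1)
    for name, cost, benefit in projects:
        # iterate capacities downward so each project is used at most once
        for c in range(budget, cost - 1, -1):
            candidate = best[c - cost][0] + benefit
            if candidate > best[c][0]:
                best[c] = (candidate, best[c - cost][1] + (name,))
    return best[budget]


# Hypothetical projects: (name, cost in $ million, benefit score)
PROJECTS = [("P1", 6, 7.0), ("P2", 5, 5.0), ("P3", 5, 5.0)]
print(allocate_knapsack(PROJECTS, budget=10))
```

With a budget of 10, ranking by benefit-to-cost ratio would fund P1 alone (benefit 7), because neither remaining project then fits; the knapsack solution funds P2 and P3 for a benefit of 10. Lumpy project costs relative to the budget are one reason optimization can outperform simple ranking.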

The Navy tracks the performance of its budget decisions by comparing its program to requirements, budget to program, and execution to budgets. These metrics are useful as performance improvement tools, but an FMS is needed to proactively make the best decisions.

An Example Application of an Integrated Facility Management System

The purpose of this hypothetical example is to illustrate how the integrated management approach might be applied in a static manner. When the time variable is included, as it should be in practice, the decision problem becomes dynamic. The time variable can be included in the analysis described below by using numerical integration to calculate the average improvement in the performance measure that will result from a maintenance and repair action. The example uses a hypothetical site with five buildings and two sub-missions, and posits two strategy problems to consider within a cost-effectiveness framework. The application follows an 11-step approach.
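A sketch of the numerical-integration idea, assuming the deterioration-curve form FDC(t) = (1 + a)/(a + e^{bt}) (consistent with the parameters in Table 4-3) and using the storage-building values a = 4.451, b = 0.04143. The renewal model, in which the action rolls the facility’s effective age back to a younger point on the same curve, and the 20-year horizon are assumptions made for illustration; the trapezoidal rule is one simple choice of quadrature.

```python
import math


def fdc(t: float, a: float, b: float) -> float:
    """Facility deterioration curve, (1 + a) / (a + e^(b t))."""
    return (1 + a) / (a + math.exp(b * t))


def average_improvement(age: float, age_after: float, a: float, b: float,
                        horizon: float, steps: int = 1000) -> float:
    """Time-averaged ACI gain over `horizon` years, by the trapezoidal rule.

    Hypothetical renewal model: the action rolls the facility's effective
    age back from `age` to `age_after`, after which it deteriorates along
    the same curve.
    """
    h = horizon / steps
    gains = [fdc(age_after + i * h, a, b) - fdc(age + i * h, a, b)
             for i in range(steps + 1)]
    integral = h * (sum(gains) - 0.5 * (gains[0] + gains[-1]))
    return integral / horizon


# Storage-building curve: a 35-year-old building renewed to an effective
# age of 10 years, evaluated over a 20-year horizon.
static_gain = fdc(10, 4.451, 0.04143) - fdc(35, 4.451, 0.04143)
dynamic_gain = average_improvement(35, 10, 4.451, 0.04143, horizon=20)
print(f"static gain = {static_gain:.3f}, 20-year average gain = {dynamic_gain:.3f}")
```

The static gain is measured at a single instant, whereas the averaged gain reflects how the two curves diverge or converge over the horizon, which is the quantity a dynamic analysis would feed into the cost-effectiveness ratio.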

Step 1. Identify f, the facility to be included in the analysis. The example is a small research site; its mission is, of course, research, and it has two sub-missions, researcher development and public education. There are five buildings: a researcher residence, an office, a storage building, and two laboratories (their respective square footage is shown in Table 4-5). The strategy being considered for each facility is either to replace or to recapitalize.

Step 2. Determine the organization’s sub-missions and w(sm→m), the relative criticality of each sub-mission sm to the organization’s mission m.

Step 3. Determine w(f→sm), the relative criticality of each facility f to each sub-mission sm, and then calculate w(f→m), the relative criticality of facility f to mission m, by summing the component parts.

Step 4. Determine ko(f, m), the initial mission condition adjustment factor for facility f to the mission m, by employing Table 4-1.

Step 5. Determine ACIo(f), the initial asset condition index for facility f, by applying the facility deterioration curve (FDC). A plausible set of S-curves for the four different types of facilities is shown in Figure 4-3; they were determined by solving for the FDC parameters a and b from the years at which each facility’s condition deteriorates from its original condition (ACI = 1.0) to ACI = 0.75 and to ACI = 0.5. The parameter values are summarized in Table 4-3.

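Using the storage building to illustrate how to predict performance using FDCs, the prediction model becomes:

$$\mathrm{FDC}(\text{storage}, t) = \frac{1 + 4.451}{4.451 + e^{0.04143\,t}} = \frac{5.451}{4.451 + e^{0.04143\,t}}$$

With the above equation, we can predict the ACI value for a storage building at a given time since its construction. In this example, we assumed that the time since construction for the storage building was 35 years; thus:

$$\mathrm{ACI}_o(\text{storage}) = \frac{5.451}{4.451 + e^{0.04143 \times 35}} \approx \frac{5.451}{4.451 + 4.263} \approx 0.63$$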
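The same calculation in Python, assuming the curve form (1 + a)/(a + e^{bt}) with the storage-building parameters from Table 4-3. The inverse, t = ln((1 + a)/ACI − a)/b, is added for illustration: it answers the complementary planning question of when a facility will reach a given condition, and as a check it recovers the calibration points t0.75 ≈ 25 and t0.5 ≈ 45 years.

```python
import math

A_STORAGE, B_STORAGE = 4.451, 0.04143   # Table 4-3, storage building


def fdc(t: float, a: float = A_STORAGE, b: float = B_STORAGE) -> float:
    """Predicted ACI at age t years."""
    return (1 + a) / (a + math.exp(b * t))


def years_to_reach(aci: float, a: float = A_STORAGE, b: float = B_STORAGE) -> float:
    """Invert the FDC: age at which the curve falls to the given ACI (0 < aci <= 1)."""
    if not 0 < aci <= 1:
        raise ValueError("aci must be in (0, 1]")
    return math.log((1 + a) / aci - a) / b


print(round(fdc(35), 2))              # 0.63, as in Step 5 of Table 4-4
print(round(years_to_reach(0.5), 1))  # 45.0, matching t0.5 in Table 4-3
```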

FIGURE 4-3 Example of S-shape curves for facility performance prediction.

TABLE 4-3 Parameter Values of Facility Deterioration Curves

| Parameter | Residence | Research | Office | Storage Building |
|---|---|---|---|---|
| t0.75 (years) | 3.5 | 7 | 10 | 25 |
| t0.5 (years) | 7 | 10 | 25 | 45 |
| a | 2 | 29.77 | −0.2318 | 4.451 |
| b | 0.198 | 0.3459 | 0.0228 | 0.04143 |

(See Step 5 of Table 4-4 for the ACI of the other buildings in the hypothetical example.)

TABLE 4-4 Steps in an Example Application: Cost Effectiveness of Alternative Renewal Strategies

| Step 1: Facility, f | Step 2: Sub-mission, sm | Step 2: Sub-mission Criticality, w(sm→m) | Step 3: Facility Criticality, w(f→T) | Step 3: Facility Criticality, w(f→R) | Step 3: Total, w(f→m) = w(f→T) + w(f→R) | Step 4: Mission Condition Adjustment Factor, ko(f, m) (see Table 4-1) | Step 5: Initial Condition, ACIo(f) (facility deterioration formula) |
|---|---|---|---|---|---|---|---|
| Residence | T, Teaching | 0.6 | 0.15 | 0 | 0.15 | 0.85 | 0.5 |
| Office | T, Teaching | 0.6 | 0.8 | 0 | 0.8 | 0.65 | 0.48 |
| Storage Building | T & R | 1 | 0.05 | 0.05 | 0.1 | 0.95 | 0.63 |
| Lab 1 | R, Research | 0.4 | 0 | 0.95 | 0.95 | 0.65 | 0.5 |
| Lab 2 | R, Research | 0.4 | 0 | 0.95 | 0.95 | 0.1 | 0.33 |

Step 6. Determine MCIo(f), the initial mission condition index for facility f, which is equal to:

$$\mathrm{MCI}_o(f) = k_o(f, m) \times \mathrm{ACI}_o(f)$$

Step 7. Determine MEo(f), the initial mission effectiveness for facility f, which is equal to:

$$\mathrm{ME}_o(f) = w(sm \to m) \times w(f \to m) \times \mathrm{MCI}_o(f)$$

Step 8. Identify S, the possible renewal strategies for facility f, their corresponding unit costs Cs(f), their corresponding adjustment factors ks(f, m), and their corresponding asset condition indices, ACIs(f), all based on Table 4-2.

Step 9. Determine MCIs(f), the mission condition index for facility f after strategy s is applied; it is equal to:

$$\mathrm{MCI}_s(f) = k_s(f, m) \times \mathrm{ACI}_s(f)$$

TABLE 4-4 (continued)

| Facility, f | Step 6: Initial Mission Condition Index, MCIo(f) = ko(f, m) × ACIo(f) | Step 7: Initial Mission Effectiveness, MEo(f) = w(sm→m) × w(f→m) × MCIo(f) | Step 8: Renewal Strategy, s | Step 8: Unit Cost, Cs(f) ($/ft²) (see Table 4-2) | Step 8: Adjustment Factor Due to s, ks(f, m) (see Table 4-2) | Step 8: Condition Due to s, ACIs(f) (see Table 4-2) | Step 9: Mission Condition Index Due to s, MCIs(f) = ks(f, m) × ACIs(f) | Step 10: Mission Effectiveness Due to s, MEs(f) = w(sm→m) × w(f→m) × MCIs(f) | Step 11: Cost Effectiveness of s, CEs(f) = (MEs(f) − MEo(f)) / Cs(f) |
|---|---|---|---|---|---|---|---|---|---|
| Residence | 0.425 | 0.03825 | Replace | 90 | 0.9 | 0.7 | 0.63 | 0.0567 | 0.000205 |
| | | | Recap. | 150 | 0.95 | 0.9 | 0.855 | 0.07695 | 0.000258 |
| Office | 0.312 | 0.14976 | Replace | 75 | 0.7 | 0.8 | 0.56 | 0.2688 | 0.001587 |
| | | | Recap. | 100 | 0.9 | 0.95 | 0.855 | 0.4104 | 0.002606 |
| Storage Building | 0.5985 | 0.05985 | Replace | 50 | 0.95 | 0.75 | 0.7125 | 0.07125 | 0.000228 |
| | | | Recap. | 100 | 0.95 | 0.92 | 0.874 | 0.0874 | 0.000276 |
| Lab 1 | 0.325 | 0.1235 | Replace | 250 | 0.75 | 0.6 | 0.45 | 0.171 | 0.000190 |
| | | | Recap. | 275 | 0.8 | 0.85 | 0.68 | 0.2584 | 0.000491 |
| Lab 2 | 0.033 | 0.01254 | Demo & Replace | 325 | 1 | 1 | 1 | 0.38 | 0.001131 |

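Step 10. Determine MEs(f), the mission effectiveness for facility f after strategy s is applied; it is equal to:

$$\mathrm{ME}_s(f) = w(sm \to m) \times w(f \to m) \times \mathrm{MCI}_s(f)$$

Step 11. Calculate CEs(f), the cost effectiveness of applying strategy s to facility f; it is equal to:

$$\mathrm{CE}_s(f) = \frac{\mathrm{ME}_s(f) - \mathrm{ME}_o(f)}{C_s(f)}$$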
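Steps 6 through 11 chain together mechanically once the inputs of Steps 2 through 5 and Step 8 are fixed. This Python sketch transcribes those inputs from Table 4-4 and recomputes the cost-effectiveness column; the results should agree with the table to within its rounding (the storage building’s recapitalization value, 0.0002755, sits exactly on a rounding boundary). Dictionary and function names are illustrative.

```python
# Steps 2-5 inputs from Table 4-4: (w(sm->m), w(f->m), ko(f, m), ACIo(f))
FACILITIES = {
    "Residence":        (0.6, 0.15, 0.85, 0.50),
    "Office":           (0.6, 0.80, 0.65, 0.48),
    "Storage Building": (1.0, 0.10, 0.95, 0.63),  # serves both T and R
    "Lab 1":            (0.4, 0.95, 0.65, 0.50),
    "Lab 2":            (0.4, 0.95, 0.10, 0.33),
}

# Step 8 inputs from Table 4-4: strategy -> (Cs(f) in $/ft2, ks(f, m), ACIs(f))
RENEWAL_OPTIONS = {
    "Residence":        {"Replace": (90, 0.90, 0.70), "Recap.": (150, 0.95, 0.90)},
    "Office":           {"Replace": (75, 0.70, 0.80), "Recap.": (100, 0.90, 0.95)},
    "Storage Building": {"Replace": (50, 0.95, 0.75), "Recap.": (100, 0.95, 0.92)},
    "Lab 1":            {"Replace": (250, 0.75, 0.60), "Recap.": (275, 0.80, 0.85)},
    "Lab 2":            {"Demo & Replace": (325, 1.00, 1.00)},
}


def cost_effectiveness(facility: str) -> dict[str, float]:
    """Steps 6-11 for one facility; returns {strategy: CEs(f)}."""
    w_sm, w_f, k0, aci0 = FACILITIES[facility]
    mci0 = k0 * aci0                         # Step 6
    me0 = w_sm * w_f * mci0                  # Step 7
    result = {}
    for s, (cost, k_s, aci_s) in RENEWAL_OPTIONS[facility].items():  # Step 8
        mci_s = k_s * aci_s                  # Step 9
        me_s = w_sm * w_f * mci_s            # Step 10
        result[s] = (me_s - me0) / cost      # Step 11
    return result


for f in FACILITIES:
    for s, ce in cost_effectiveness(f).items():
        print(f"{f:17s} {s:15s} CE = {ce:.6f}")
```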

The following management decisions for the five buildings are considered based on the hypothetical data.

Performance Evaluation The results in Table 4-4 for Steps 1 through 7 all provide insight into the performance of the individual facilities. For example, the office building has the highest initial mission effectiveness (0.14976) and lab 2 the lowest (0.01254); these results are not surprising, given the underlying values for facility criticality and mission condition adjustment factors.

Performance Prediction As indicated in Step 5, and illustrated in Figure 4-3, the facility deterioration curve is applied to the four types of facilities that are included in this example.

Project Prioritization The five facility renewal projects can be prioritized based on the cost-effectiveness values calculated in Table 4-4. The best project or strategy is the one that yields the highest cost-effectiveness value. For the residence, office, storage building, and lab 1, the recapitalization strategy yields a higher cost-effectiveness value than the replacement strategy. After the most cost-effective strategy is selected for each facility, the five facilities can be ranked in descending order: office, lab 2, lab 1, storage building, and residence. This prioritized list is summarized in Table 4-5.

Budget Planning With the area of each facility and the unit cost for the selected strategy, the total cost for implementing the selected strategies can be calculated. The budget required for this example can then be determined based on the ranking and calculated costs. To complete the renewals for all five of the facilities, a total of $68.75 million would be required. However, if one decides to carry out the renewals for only the top three ranked facilities, the required total budget would be $48.75 million, as indicated in Table 4-5.
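The ranking and budget-planning arithmetic can be reproduced from the Table 4-5 inputs. In this sketch each facility carries the cost effectiveness of its best strategy; multiplying the unit cost ($/ft²) by the area (thousands of ft²) gives thousands of dollars, which the code converts to millions. The cumulative column is the budget needed to fund the top n projects, including the $48.75 million and $68.75 million figures cited above. The function name is illustrative.

```python
# (facility, CE of its best strategy, unit cost $/ft2, area in 1,000 ft2), Table 4-5
PROJECTS = [
    ("Residence", 0.000258, 150, 120),
    ("Office", 0.002606, 100, 50),
    ("Storage Building", 0.000276, 100, 20),
    ("Lab 1", 0.000491, 275, 100),
    ("Lab 2", 0.001131, 325, 50),
]


def planning_table(projects):
    """Rank by cost effectiveness and accumulate the cost of the top-n projects."""
    rows, running = [], 0.0
    ranked = sorted(projects, key=lambda p: p[1], reverse=True)
    for rank, (name, _ce, unit_cost, area_k) in enumerate(ranked, start=1):
        cost_m = unit_cost * area_k / 1000  # ($/ft2 x 1,000 ft2) = $ thousand -> $ million
        running += cost_m
        rows.append((rank, name, cost_m, running))
    return rows


for rank, name, cost_m, cumulative in planning_table(PROJECTS):
    print(f"{rank}. {name:17s} {cost_m:6.2f}  cumulative {cumulative:6.2f}  ($ million)")
```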

Budget Allocation For a given level of available budget, the allocation can be done by selecting projects in accordance with their cost-effectiveness ranking until the budget is exhausted, skipping any project that would exceed the remaining funds. For example, if the total available budget is $24 million, then the office, lab 2, and storage building would be selected for renewals, as shown in Table 4-5. After the office and lab 2 are funded ($21.25 million), lab 1 ($27.50 million) no longer fits within the remaining $2.75 million, but the storage building ($2.00 million) does, for a total allocation of $23.25 million.

TABLE 4-5 Illustration of the Ranking, Budget Planning, and Budget Allocation

| Facility | Cost Effectiveness | Ranking | Unit Cost ($/ft²) | Space (ft² × 1,000) | Total Cost ($ million) | Budget Planning ($ million) | Budget Allocation ($ million) |
|---|---|---|---|---|---|---|---|
| Office | 0.002606 | 1 | 100 | 50 | 5.00 | 5.00 | 5.00 |
| Lab 2 | 0.001131 | 2 | 325 | 50 | 16.25 | 16.25 | 16.25 |
| Lab 1 | 0.000491 | 3 | 275 | 100 | 27.50 | 27.50 | |
| Storage Building | 0.000276 | 4 | 100 | 20 | 2.00 | | 2.00 |
| Residence | 0.000258 | 5 | 150 | 120 | 18.00 | | |
| Total | | | | | 68.75 | 48.75 | 23.25 |
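The ranking-based allocation rule can be sketched as a single pass over the ranked list that funds each project still fitting within the remaining budget. The project tuples restate Table 4-5, and the function name is illustrative. This is a ranking heuristic rather than an optimization, so it can leave money unspent or miss a better combination.

```python
# (facility, CE of its best strategy, unit cost $/ft2, area in 1,000 ft2), Table 4-5
PROJECTS = [
    ("Residence", 0.000258, 150, 120),
    ("Office", 0.002606, 100, 50),
    ("Storage Building", 0.000276, 100, 20),
    ("Lab 1", 0.000491, 275, 100),
    ("Lab 2", 0.001131, 325, 50),
]


def allocate_by_rank(projects, budget_m):
    """Fund projects in descending cost-effectiveness order, skipping any that no longer fit."""
    chosen, spent = [], 0.0
    for name, _ce, unit_cost, area_k in sorted(projects, key=lambda p: p[1], reverse=True):
        cost_m = unit_cost * area_k / 1000  # $ million
        if spent + cost_m <= budget_m:
            chosen.append(name)
            spent += cost_m
    return chosen, spent


print(allocate_by_rank(PROJECTS, budget_m=24.0))
```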

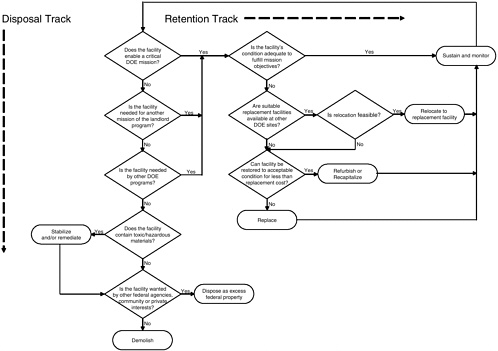

DETERMINING WHETHER TO REPAIR, RENOVATE, OR REPLACE

The decision process for facility sustainment and recapitalization begins with an objective assessment of the role of a specific facility in enabling mission objectives. This initial step in DOE’s planning process is taken during the development of the 10-year plans for each site. The critical question that must be answered is whether a facility and its related infrastructure support a critical DOE mission. The answer to this question can help determine the k factor used in the integrated facility management model discussed above and serve as the starting point for the decision-making process illustrated in Figure 4-4. This process will determine whether the facility is placed on a disposal or retention track.

Disposal Track

If an existing facility is not needed for a critical mission, the next logical question is whether it is needed for other DOE missions or programs. If affirmative, the facility is put on the retention track. If no existing or planned DOE mission or program needs the facility, then it can either be disposed of as surplus federal property or demolished. In both cases, the facility will no longer be part of the DOE inventory and will not require sustainment or recapitalization by the department. However, if the facility contains toxic or hazardous materials, it must be stabilized or remediated before its ultimate disposition.

Retention Track

If the facility enables a critical program or mission need, then it should be evaluated to determine the appropriate long-term sustainment or recapitalization strategy. If the facility’s existing condition is satisfactory, then sustainment of the desired condition is all that is necessary. If the current condition is unacceptable, then the question becomes whether to relocate, recapitalize, or replace. The answer will be determined in part by logistics, economics, and the prevailing and expected regulatory climate. For example, it would probably not be feasible to relocate laboratory facilities that are integral to future production activities even if they were in poor condition and an acceptable substitute was available at another site. Similarly, surrounding communities or regulators might oppose the construction of a new facility of a certain type but accept the restoration of an existing facility to acceptable condition; if the facility were critical to the program mission, then recapitalization would be the appropriate option. Finally, detailed economic analyses of all alternatives are required so that a comprehensive assessment can be made. In all cases, once an appropriate strategy is developed, there must be a commitment to sustain the facility over its lifetime.
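The disposal and retention tracks described above amount to a short decision procedure. The Python sketch below encodes that logic with boolean inputs; the function name, argument names, and returned labels are hypothetical, and in practice each input would come from the site’s 10-year planning and condition assessments rather than a simple yes/no.

```python
def facility_track(critical_mission: bool, other_doe_need: bool,
                   condition_acceptable: bool, hazardous: bool = False) -> str:
    """Hypothetical encoding of the disposal/retention logic described in the text."""
    if critical_mission or other_doe_need:
        # Retention track
        if condition_acceptable:
            return "retain: sustain the current condition"
        return "retain: choose among relocate, recapitalize, or replace"
    # Disposal track: no existing or planned DOE mission needs the facility
    if hazardous:
        return "dispose: stabilize or remediate, then demolish or dispose of as surplus"
    return "dispose: demolish or dispose of as surplus"


print(facility_track(critical_mission=False, other_doe_need=False,
                     condition_acceptable=False, hazardous=True))
```

The relocate, recapitalize, or replace choice is deliberately left as a single outcome here, because the text makes it depend on logistics, economics, regulatory climate, and detailed economic analysis.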

FINDINGS AND RECOMMENDATIONS

Finding 4a. The current metrics defined by RPAM are inadequate for the size and complexity of DOE’s facilities. The committee has identified metrics that have been successfully used by other organizations (the Association of Higher Education Facilities Officers’ Strategic Assessment Model, the Navy’s balanced scorecard approach, the Department of Defense sustainment and recapitalization model, the National Aeronautics and Space Administration’s full cost management model, and the U.S. Coast Guard’s capital asset management approach) and suggests an integrated management system derived from the current DOE metrics, but there is at present no single example that clearly defines the suite of metrics that should be used by DOE.

Recommendation 4a-1. DOE needs to select the most promising metrics from the many alternatives, populate them with real DOE data, and conduct pilot programs to determine the best alternatives. Selected metrics should be agreed to by the responsible parties and the senior managers who will use the data for decision making.

Recommendation 4a-2. DOE should require a consistent set of measures and procedures for departmental decision-making processes. DOE should consider using the National Nuclear Security Administration Ten-Year Comprehensive Site Plan (TYCSP) guidance document (DOE, 2004) throughout the department and should incorporate a set of metrics for an integrated system to proactively manage the sustainment and renewal of F&I. DOE’s suite of measures should include input, process, outcome, and systemic measures that possess the critical attributes of measurability, reliability, accuracy, robustness, and completeness.

Finding 4b. The congressional mandate that DOE must reduce its inventory of excess facilities is admirable, but the metric eliminating one square foot for every square foot of new facility constructed has led to the demolition of benign facilities rather than hazardous facilities that affect the quality of the site.

Recommendation 4b. The mandate for elimination of excess facilities should continue; however, the metric for elimination of excess capital assets should be revised to reflect the full liability of excess property, including sustainment, security, and environmental safety costs.

Finding 4c. Performance measures need to support decisions, provide an assessment of the status of F&I and of how well the management system is performing, and provide direction for continuous process improvement.

Recommendation 4c. DOE should establish goals to support continuous improvement in F&I management and should consider developing a suite of measures to manage F&I process improvement. Whatever specific measures are chosen, the suite should include metrics that assess performance in the following aspects of F&I management:

- Financial
- Customers external to the F&I management organization
- People internal to the F&I management organization
- Organizational internal processes
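The four aspects in Recommendation 4c appear to parallel the perspectives of Kaplan and Norton's balanced scorecard (Kaplan and Norton, 1996). One simple way to check that a candidate suite meets the recommendation is to tag each metric with the aspect it measures and report any aspect left uncovered. The sketch below assumes that tagging scheme; the aspect keys, function names, and metric names are hypothetical and are not drawn from DOE's current metrics.

```python
from typing import Dict, List

# The four aspects of F&I management named in Recommendation 4c (short keys are assumed).
REQUIRED_ASPECTS = (
    "financial",
    "external customers",
    "internal people",
    "internal processes",
)


def group_by_aspect(metrics: Dict[str, str]) -> Dict[str, List[str]]:
    """Group metric names under the aspect each one is tagged with."""
    groups: Dict[str, List[str]] = {aspect: [] for aspect in REQUIRED_ASPECTS}
    for name, aspect in metrics.items():
        if aspect not in groups:
            raise ValueError(f"{name!r} is tagged with unknown aspect {aspect!r}")
        groups[aspect].append(name)
    return groups


def missing_aspects(metrics: Dict[str, str]) -> List[str]:
    """Return the required aspects that no metric in the suite covers."""
    groups = group_by_aspect(metrics)
    return [aspect for aspect in REQUIRED_ASPECTS if not groups[aspect]]


# Hypothetical candidate suite; metric names are illustrative only.
candidate_suite = {
    "maintenance cost per square foot": "financial",
    "deferred maintenance backlog": "financial",
    "tenant satisfaction score": "external customers",
    "training hours per staff member": "internal people",
}

print(missing_aspects(candidate_suite))  # ['internal processes']
```

Here the hypothetical suite is weighted toward financial measures and has nothing for internal processes, which is the kind of gap the recommendation is meant to prevent.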

REFERENCES

APPA (The Association of Higher Education Facilities Officers). 2001. The Strategic Assessment Model. Alexandria, Va.: The Association of Higher Education Facilities Officers.

Dempsey, James J. 2003. U.S. Coast Guard Shore Facility Capital Asset Management. Washington, D.C.: U.S. Coast Guard.

DoD (U.S. Department of Defense). 2002. Facilities Recapitalization Front-End Assessment. Washington, D.C.: Department of Defense.

DOE (U.S. Department of Energy). 2003. Real Property Asset Management (Order O 430.1B). Washington, D.C.: U.S. Department of Energy.

DOE. 2004. FY2006–FY2010 Planning Guidance. Washington, D.C.: U.S. Department of Energy.

Kaplan, Robert S., and David P. Norton. 1996. The Balanced Scorecard. Boston, Mass.: Harvard Business School Press.

Lufkin, Peter S. 2004. An Alternative Review of Facility Depreciation and Recapitalization Costs. Santa Barbara, Calif.: Whitestone Research.

NASA (National Aeronautics and Space Administration). 2004. NASA Facilities Maintenance and Repair Requirements Determination: A Primer. Washington, D.C.: National Aeronautics and Space Administration.

Navy. 2003. Stockholders’ Report: Supporting the Warfighter. Washington, D.C.: U.S. Navy.

NIST (National Institute of Standards and Technology). 2004. Baldrige National Quality Program. National Institute of Standards and Technology. Available online at http://baldrige.nist.gov/. Accessed June 30, 2004.

NRC (National Research Council). 1998. Stewardship of Federal Facilities: A Proactive Strategy for Managing the Nation’s Public Assets. Washington, D.C.: National Academy Press.

Tien, J.M. 1999. Evaluation of Systems. In Handbook of Systems Engineering and Management, A.P. Sage and W.B. Rouse, eds. New York, N.Y.: John Wiley & Sons.

U.S. Congress, House of Representatives, Committee on Appropriations. 2001. Energy and Water Development Appropriations Bill, 2002. House Report 107-112. Washington, D.C.: U.S. Congress.