Capturing and Simulating Physically Accurate Illumination in Computer Graphics

PAUL DEBEVEC

Institute for Creative Technologies

University of Southern California

Marina del Rey, California

Anyone who has seen a recent summer blockbuster has seen the results of dramatic improvements in the realism of computer-generated graphics. Visual-effects supervisors now report that bringing even the most challenging visions of film directors to the screen is no longer in question; with today’s techniques, the only questions are time and cost. Based both on recently developed techniques and techniques that originated in the 1980s, computer-graphics artists can now simulate how light travels within a scene and how light reflects off of and through surfaces.

RADIOSITY AND GLOBAL ILLUMINATION

One of the most important aspects of computer graphics is simulating the illumination in a scene. Computer-generated images are two-dimensional arrays of computed pixel values, with each pixel coordinate having three numbers indicating the amount of red, green, and blue light coming from the corresponding direction in the scene. Figuring out what these numbers should be for a scene is not trivial, because each pixel’s color is based both on how the object at that pixel reflects light and the light that illuminates it. Furthermore, the illumination comes not only directly from the light sources in the scene, but also indirectly from all of the surrounding surfaces in the form of “bounce” light. The complexities of the behavior of light, one reason the world around us appears rich and interesting, make generating “photoreal” images both conceptually and computationally complex.

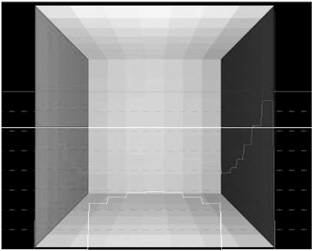

As a simple example, suppose we stand at the back of a square white room in which the left wall is painted red, the right wall is painted blue, and the light comes from the ceiling (Figure 1). If we take a digital picture of this room and examine its pixel values, we will indeed find that the red wall is red and the blue wall is blue. But when we look closely at the white wall in front of us, we will notice that it isn’t perfectly white. Toward the right it becomes bluish, and toward the left it becomes pink. The reason for this is indirect illumination: toward the right, blue light from the blue wall adds to the illumination on the back wall, and toward the left, red light does the same.

Indirect illumination is responsible for more than the somewhat subtle effect of white surfaces picking up the colors of nearby objects—it is often responsible for most, sometimes all, of the illumination on an object or in a scene. If I sit in a white room illuminated by a small skylight in the morning, the indirect light from the patch of sunlight on the wall lights the rest of the room, not the direct light from the sun itself. If light did not bounce between surfaces, the room would be nearly dark!

FIGURE 1 A simulation of indirect illumination in a scene, known as the “Cornell box.” The white wall at the back picks up color from the red and blue walls at the sides. Source: Goral et al., 1984. Reprinted with permission. (The Cornell box may be seen in color at http://www.graphics.cornell.edu/online/box/history.html.)

In early computer graphics, interreflections of light between surfaces in a scene were poorly modeled. Light falling on each surface was computed solely as a function of the light coming directly from light sources, with perhaps a roughly determined amount of “ambient” light added irrespective of the actual colors of light in the scene. The groundbreaking publication showing that indirect illumination could be modeled and computed accurately was presented at SIGGRAPH 84, when Goral et al. of Cornell University described how they had simulated the appearance of the red, white, and blue room example using a technique known as radiosity.

Inspired by physics techniques for simulating heat transfer, the Cornell researchers first divided each wall of the box into a 7 × 7 grid of patches; for each patch, they determined the degree of its visibility to every other patch, noting that patches reflect less light if they are farther apart or facing away from each other. The final light color of each patch could then be written as its inherent surface color times the sum of the light coming from every other patch in the scene. Despite the fact that the illumination arriving at each patch depends on the illumination arriving at (and thus leaving) every other patch, the radiosity equation could be solved in a straightforward way as a linear system of equations.
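The linear system described above can be sketched in a few lines. This is a minimal illustration, not the Cornell implementation: the three-patch scene, its visibility weights (called form factors here), and the function name `solve_radiosity` are all made up for the example, and a simple repeated-substitution solver is swapped in for brevity. Each patch value B_i satisfies B_i = E_i + rho_i * sum_j F_ij * B_j, where E_i is emitted light and rho_i is the surface reflectance; a color image would repeat this once per color channel.

```python
def solve_radiosity(emission, reflectance, form_factors, iterations=200):
    """Iteratively solve B_i = E_i + rho_i * sum_j F_ij * B_j for every patch."""
    n = len(emission)
    radiosity = list(emission)  # start from direct emission only
    for _ in range(iterations):
        # Each pass adds one more "bounce" of light between patches.
        radiosity = [
            emission[i] + reflectance[i] * sum(
                form_factors[i][j] * radiosity[j] for j in range(n))
            for i in range(n)
        ]
    return radiosity


# Hypothetical toy scene: patch 0 is a light, patches 1 and 2 are gray walls.
emission = [1.0, 0.0, 0.0]
reflectance = [0.0, 0.5, 0.5]
form_factors = [  # assumed visibility weights between patches
    [0.0, 0.5, 0.5],
    [0.5, 0.0, 0.5],
    [0.5, 0.5, 0.0],
]

radiosity = solve_radiosity(emission, reflectance, form_factors)
direct_only = reflectance[1] * form_factors[1][0] * emission[0]
print(radiosity[1], direct_only)
```

In this toy scene each wall ends up brighter than it would be from the light alone (about 0.33 instead of 0.25), because it also receives light bounced from the other wall.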

The result that Goral et al. obtained (Figure 1) correctly modeled that the white wall would subtly pick up the red and blue of the neighboring surfaces. Soon after this experiment, when Cornell researchers constructed such a box with real wood and paint, they found that photographs of the box matched their simulations so closely that people could not tell the difference under controlled conditions. The first “photoreal” image had been rendered!

One limitation of this work was that the time required to solve the linear system increased with the cube of the number of patches in the scene, making the technique difficult to use for complex models (especially in 1984). Another limitation was that all of the surfaces in the radiosity model were assumed to be painted in matte colors, with no shine or gloss.

A subsequent watershed work in the field was presented at SIGGRAPH 86, by Jim Kajiya from Caltech, who published “The Rendering Equation,” which generalized the ideas of light transport to any kind of geometry and any sort of surface reflectance. The titular equation of Kajiya’s paper stated in general terms that the light leaving a surface in each direction is a function of the light arriving from all directions upon the surface, convolved by a function that describes how the surface reflects light. The latter function, called the bidirectional reflectance distribution function (BRDF), is constant for diffuse surfaces but varies according to the incoming and outgoing directions of light for surfaces with shine and gloss.
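For readers who want to see the relationship written out, a commonly used hemispherical form is sketched below. It is a textbook restatement rather than Kajiya’s original notation, and the symbol names are chosen here for illustration: L_o is the light leaving point x in direction omega_o, L_e is light emitted by the surface itself, L_i is light arriving from direction omega_i, f_r is the BRDF, n is the surface normal, and Omega is the hemisphere above the surface.

```latex
L_o(x, \omega_o) = L_e(x, \omega_o)
  + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\,
    (n \cdot \omega_i)\, d\omega_i
% For an ideal diffuse surface with reflectance \rho, the BRDF is the constant
% f_r = \rho / \pi.
```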

Kajiya described a process for rendering images according to this equation using a randomized numerical technique known as path tracing. Like the earlier fundamental technique of ray tracing (Whitted, 1980), path tracing generates images by tracing rays from a camera to surfaces in the scene, then tracing rays out from these surfaces to determine the incident illumination on the surfaces. In path tracing, rays are traced not only in the direction of light sources, but also randomly in all directions to account for indirect light from the rest of the scene.
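The random sampling step can be illustrated with a small sketch. It is not Kajiya’s renderer: it covers only a single diffuse surface point with no other geometry, and the names `sample_cosine_hemisphere`, `estimate_reflected_radiance`, and `toy_sky` are hypothetical. Directions are drawn at random over the hemisphere above the surface (favoring directions near the surface normal, a standard choice for diffuse surfaces), and the light found along each direction is averaged.

```python
import math
import random


def sample_cosine_hemisphere(rng):
    """Random unit direction about the +z normal, with pdf = cos(theta) / pi."""
    u1, u2 = rng.random(), rng.random()
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), math.sqrt(1.0 - u1))


def estimate_reflected_radiance(albedo, incoming_radiance, samples, rng):
    """Monte Carlo estimate of light reflected by a diffuse surface point."""
    total = 0.0
    for _ in range(samples):
        direction = sample_cosine_hemisphere(rng)
        # For a diffuse BRDF (albedo / pi), BRDF * cos / pdf reduces to albedo.
        total += albedo * incoming_radiance(direction)
    return total / samples


def toy_sky(direction):
    """Assumed lighting for the example: brightest overhead, dark at the horizon."""
    return direction[2]


estimate = estimate_reflected_radiance(0.5, toy_sky, 20000, random.Random(1))
print(estimate)
```

For this toy sky the exact answer is 1/3, and the estimate should land close to it; using more samples reduces the random noise, which is why path-traced images become cleaner with longer rendering times.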

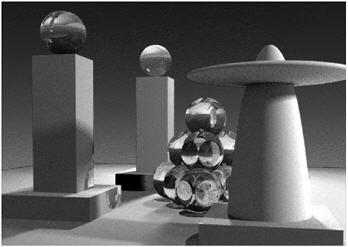

FIGURE 2 A synthetic scene rendered using path tracing. Source: Kajiya, 1986. Reprinted with permission.

The demonstration Kajiya produced for his paper is shown in Figure 2. This simple scene shows realistic light interactions among both diffuse and glossy surfaces, as well as other complex effects, such as light refracting through translucent objects. Although still computationally intensive, Kajiya’s randomized process for estimating solutions to the rendering equation made the problem tractable both conceptually and computationally.

BRINGING REALITY INTO THE COMPUTER

Even with the breakthroughs in rendering techniques developed in the mid-1980s, it was still no simple endeavor to produce synthetic images with the full richness and realism of images in the real world. Photographs appear “real” because shapes in the real world are typically distinctive and detailed, and surfaces in the real world reflect light in interesting ways, with different characteristics that vary across surfaces. Just as importantly, light in the real world is interesting because typically there are different colors and intensities of light coming from every direction, which dramatically and subtly shape the appearance of the forms in a scene. Computer-generated scenes, when constructed from simple shapes, textured with ideal plastic and metallic reflectance properties, and illuminated by simple point and area light sources, lack “realism” no matter how accurate or computationally intensive the lighting simulation. As a result, creating photoreal images was still a matter of skilled artistry rather than advanced technology. Digital artists had to adjust the appearance of scenes manually.

Realistic geometry in computer-generated scenes was considerably advanced in the mid-1980s when 3-D digitizing techniques became available for scanning the shapes of real-world objects into computer-graphics programs. The Cyberware 3-D scanner, an important part of this evolution, transforms objects and human faces into 3-D polygon models in a matter of seconds by moving a stripe of laser light across them. An early use of this scanner in a motion picture was in Star Trek IV for an abstract time-travel sequence showing a collage of 3-D models of the main characters’ heads. 3-D digitization techniques were also used to capture artists’ models of extinct creatures to build the impressive digital dinosaurs for Jurassic Park.

DIGITIZING AND RENDERING WITH REAL-WORLD ILLUMINATION

Realism in computer graphics advanced again with techniques that can capture illumination from the real world and use it to create lighting in computer-generated scenes. If we consider a particular place in a scene, the light at that place can be described as the set of all colors and intensities of light coming toward it from every direction. As it turns out, there is a relatively straightforward way to capture this function for a real-world location by taking an image of a mirrored sphere, which reflects light coming from the whole environment toward the camera. Other techniques for capturing omnidirectional images include fisheye lenses, tiled panoramas, and scanning panoramic cameras.
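To make the mirrored-sphere idea concrete, the sketch below converts a pixel position on the photographed sphere into the direction the reflected light came from. It rests on simplifying assumptions that are not from the text: the camera is treated as orthographic and looking down the negative z axis, the sphere fills the image as a unit disk, and the function name `mirror_ball_direction` is hypothetical.

```python
import math


def mirror_ball_direction(x, y):
    """Direction of the environment seen at image position (x, y) on the sphere.

    (x, y) lies in the unit disk; (0, 0) is the center of the sphere's image.
    Assumes an orthographic camera whose viewing rays travel along (0, 0, -1).
    """
    radius_squared = x * x + y * y
    if radius_squared > 1.0:
        raise ValueError("pixel lies outside the sphere's silhouette")
    # Surface normal of the unit sphere at this pixel is (x, y, nz).
    nz = math.sqrt(1.0 - radius_squared)
    # Mirror reflection of the viewing ray d about the normal: r = d - 2 (d . n) n
    return (2.0 * nz * x, 2.0 * nz * y, 2.0 * nz * nz - 1.0)


center = mirror_ball_direction(0.0, 0.0)            # looks back toward the camera
side = mirror_ball_direction(math.sqrt(0.5), 0.0)   # looks out to the side
rim = mirror_ball_direction(1.0, 0.0)               # looks directly behind the sphere
print(center, side, rim)
```

The center of the sphere reflects the area around the camera, while pixels near the rim reflect what lies behind the sphere, which is how one photograph can cover nearly the whole environment.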

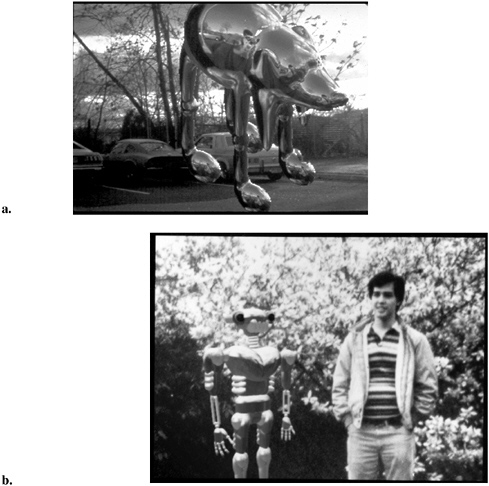

The first and simplest form of lighting from images taken from a mirrored sphere is known as environment mapping. In this technique, the image is directly warped and applied to the surface of the synthetic object. The technique using images of a real scene was used independently by Gene Miller and Mike Chou (Miller and Hoffman, 1984) and Williams (1983). Soon after, the technique was used to simulate reflections on the silvery, computer-generated spaceship in the 1986 film Flight of the Navigator and, most famously, on the metallic T1000 “terminator” character in the 1991 film Terminator 2. In all of these examples, the technique not only produced realistic reflections on the computer-graphics object, but also made the object appear to have truly been in the background environment. This was an important advance for realism in visual effects. Computer-graphics objects now appeared to be illuminated by the light of the environment they were in (Figure 3).

FIGURE 3 Environment-mapped renderings from the early 1980s. a. An environment-mapped shiny dog. Source: Miller and Hoffman, 1984. Reprinted with permission. b. An environment-mapped shiny robot. Source: Williams, 1983. Reprinted with permission.

Environment mapping produced convincing results for shiny objects, but innovations were necessary to extend the technique to more common computer-graphics models, such as creatures, digital humans, and cityscapes. One limitation of environment mapping is that it cannot reproduce the effects of object surfaces shadowing themselves or of light reflecting between surfaces. The reason for this limitation is that the lighting environment is applied directly to the object surface according to its surface orientation, regardless of the degree of visibility of each surface in the environment. For surface points on the convex hull of an object, correct answers can be obtained. However, for more typical points on an object, appearance depends both on which directions of the environment they are visible to and on the light received from other points on the object.

A second limitation of the traditional environment mapping process is that a single digital or digitized photograph of an environment rarely captures the full range of light visible in a scene. In a typical scene, directly visible light sources are usually hundreds or thousands of times brighter than indirect illumination from the rest of the scene, and both types of illumination must be captured to represent the lighting accurately. This wide dynamic range typically exceeds the dynamic range of both digital and film cameras, which are designed to capture a range of brightness values of just a few hundred to one. As a result, light sources typically become “clipped” at the saturation point of the image sensor, leaving no record of their true color or intensity. This is not a major problem for shiny metal surfaces, because shiny reflections would become clipped anyway in the final rendered images. However, when lighting more typical surfaces (those that blur the incident light before reflecting it back toward the camera), the effect of incorrectly capturing the intensity of direct light sources in a scene can be significant.

We developed a technique to capture the full dynamic range of light in a scene, up to and including direct light sources (Debevec and Malik, 1997). Photographs are taken using a series of varying exposure settings on the camera; brightly exposed images record indirect light from the surfaces in the scene, and dimly exposed images record the direct illumination from the light sources without clipping. Using techniques to derive the response curve of the imaging system (i.e., how recorded pixel values correspond to levels of scene brightness), we assemble this series of limited-dynamic-range images into a single high-dynamic-range image representing the full range of illumination for every point in the scene. Using IEEE floating-point numbers for the pixel values of these high-dynamic-range images (called HDR images or HDRIs), ranges exceeding even a million to one can be captured and stored.
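The assembly step can be sketched for a single pixel. This is a simplified illustration rather than the published algorithm: it assumes the response curve has already been applied so that pixel values are linear in scene brightness and scaled to the range 0 to 1, it averages in the linear domain, and the names `hat_weight`, `merge_exposures`, and `simulate_pixel` are hypothetical.

```python
def hat_weight(value, low=0.02, high=0.98):
    """Trust mid-range values; ignore values near the noise floor or clipped."""
    if value <= low or value >= high:
        return 0.0
    return min(value - low, high - value)


def merge_exposures(pixel_values, exposure_times):
    """Combine one pixel's linearized values (0..1) into a relative radiance."""
    weighted_sum = 0.0
    weight_total = 0.0
    for value, seconds in zip(pixel_values, exposure_times):
        weight = hat_weight(value)
        # Dividing by exposure time puts every image on the same radiance scale.
        weighted_sum += weight * (value / seconds)
        weight_total += weight
    if weight_total == 0.0:
        raise ValueError("pixel is unusable in every exposure")
    return weighted_sum / weight_total


def simulate_pixel(radiance, seconds):
    """Assumed ideal sensor for the example: linear response that clips at 1.0."""
    return min(1.0, radiance * seconds)


exposure_times = [1.0, 0.1, 0.001]
bright_light = merge_exposures(
    [simulate_pixel(200.0, t) for t in exposure_times], exposure_times)
dim_wall = merge_exposures(
    [simulate_pixel(0.5, t) for t in exposure_times], exposure_times)
print(bright_light, dim_wall)
```

In this example the light source is clipped in the two longer exposures and recovered from the shortest one, while the dim wall is recovered from the longer exposures, so both values survive in the merged result.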

The following year we presented an approach to illuminating synthetic objects with measurements of real-world illumination known as image-based lighting (IBL), which addresses the remaining limitations of environment mapping (Debevec, 1998). The first step in IBL is to map the image onto the inside of a surface, such as an infinite sphere, surrounding the object, rather than mapping the image directly onto the surface of the object. We then use a global illumination system (such as the path-tracing approach described by Kajiya [1986]) to simulate this image of incident illumination actually lighting the surface of the object. The global illumination algorithm traces rays from each object point out into the scene to determine what is lighting it. Some of the rays have a free path away from the object and thus strike the environmental lighting surface, so the illumination from each visible part of the environment can be accounted for. Other rays strike other parts of the object, which block the light the point would have received from the environment in that direction. If the system computes additional ray bounces, the color of the object at the occluding surface point is computed in a similar way; otherwise, the algorithm approximates the light arriving from this direction as zero. The algorithm sums up all of the light arriving directly and indirectly from the environment at each surface point and uses this sum as the point’s illumination. The elegance of this approach is that it produces all of the effects of the real object’s appearance illuminated by the light of the environment, including self-shadowing, and it can be applied to any material, from metal to plastic to glass.
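The summation at the heart of this process can be sketched for one diffuse surface point. The sketch makes several assumptions that go beyond the text: the environment is reduced to a short list of sampled directions, each with a brightness and a solid angle; occlusion is supplied as a yes-or-no function instead of a real ray tracer; blocked directions contribute zero (no additional bounces); and the names `irradiance_from_environment` and `blocked_by_object` are hypothetical.

```python
import math


def irradiance_from_environment(normal, lights, is_occluded):
    """Sum environment light reaching a surface point with the given normal.

    lights: list of (direction, radiance, solid_angle) environment samples.
    is_occluded(direction): True if a ray in that direction hits the object.
    """
    total = 0.0
    for direction, radiance, solid_angle in lights:
        cosine = sum(n * d for n, d in zip(normal, direction))
        if cosine <= 0.0:
            continue  # this part of the environment is behind the surface
        if is_occluded(direction):
            continue  # self-shadowing: treated as zero light, no extra bounce
        total += radiance * cosine * solid_angle
    return total


s = math.sqrt(0.5)
lights = [  # assumed environment samples for the example
    ((0.0, 0.0, 1.0), 10.0, 0.1),  # bright light overhead
    ((s, 0.0, s), 2.0, 0.1),       # dimmer light 45 degrees to one side
    ((0.0, 0.0, -1.0), 5.0, 0.1),  # light from below the surface
]
normal = (0.0, 0.0, 1.0)


def blocked_by_object(direction):
    """Hypothetical occluder: part of the object covers directions toward +x."""
    return direction[0] > 0.5


unshadowed = irradiance_from_environment(normal, lights, lambda d: False)
shadowed = irradiance_from_environment(normal, lights, blocked_by_object)
print(unshadowed, shadowed)
```

The difference between the two results is exactly the self-shadowing that plain environment mapping cannot reproduce: the surface orientation is the same in both cases, but the shadowed point receives less light.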

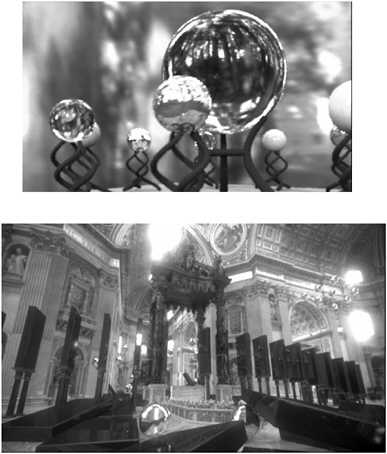

We first demonstrated HDRI and IBL in a short computer animation called Rendering with Natural Light, shown at the SIGGRAPH 98 computer-animation festival (Figure 4, top). The film featured a still life of diffuse, shiny, and translucent spheres on a pedestal illuminated by an omnidirectional HDRI of the light in the eucalyptus grove at UC Berkeley. We later used our lighting capture techniques to record the light in St. Peter’s Basilica, which allowed us to add virtual tumbling monoliths and gleaming spheres to the basilica’s interior for our SIGGRAPH 99 film Fiat Lux (Figure 4, bottom). In Fiat Lux, we used the same lighting techniques to compute how much light the new objects would obstruct from hitting the ground; thus, the synthetic objects cast shadows in the same way they would have if they had actually been there.

The techniques of HDRI and IBL, and the techniques and systems derived from them, are now widely used in the visual-effects industry and have provided visual-effects artists with new lighting and compositing tools that give digital actors, airplanes, cars, and creatures the appearance of actually being present during filming, rather than added later via computer graphics. Examples of elements illuminated in this way include the transforming mutants in X-Men and X-Men 2, virtual cars and stunt actors in The Matrix Reloaded, and whole cityscapes in The Time Machine. In our latest computer animation, we extended the techniques to capture the full range of light of an outdoor illumination environment, from the pre-dawn sky to a direct view of the sun, to illuminate a virtual 3-D model of the Parthenon on the Athenian Acropolis (Figure 5).

FIGURE 4 Still frames from the animations Rendering with Natural Light (top) and Fiat Lux (bottom) showing computer-generated objects illuminated by and integrated into real-world lighting environments.

FIGURE 5 A virtual image of the Parthenon synthetically illuminated with a lighting environment captured at the USC Institute for Creative Technologies.

APPLYING IMAGE-BASED LIGHTING TO ACTORS

In my laboratory’s most recent work, we have examined the problem of illuminating real objects and people, rather than computer-graphics models, with light captured from real-world environments. To accomplish this, we use a series of light stages to measure directly how an object transforms incident environmental illumination into reflected radiance, a data set we call the reflectance field of an object.

The first version of the light stage consisted of a spotlight attached to a two-bar rotation mechanism that rotated the light in a spherical spiral about a person’s face in approximately one minute (Debevec et al., 2000). At the same time, one or more digital video cameras recorded the object’s appearance under every form of directional illumination. From this set of data, we could render the object under any form of complex illumination by computing linear combinations of the color channels of the acquired images. The illumination could be chosen to be measurements of illumination in the real world (Debevec, 1998) or the illumination present in a virtual environment, allowing the image of a real person to be photorealistically composited into a scene with the correct illumination.
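The linear combination mentioned above can be sketched as a weighted sum of photographs. This is an illustration under stated assumptions, not the laboratory’s software: each basis image is assumed to show the subject lit from one direction by a unit-intensity white light, the environment is given as one color per lighting direction, weighting by the solid angle of each direction is left out, and the function name `relight` is hypothetical.

```python
def relight(basis_images, light_colors):
    """Render a subject under new lighting from one-light-at-a-time photographs.

    basis_images[k][p]: (r, g, b) of pixel p when lit only from direction k.
    light_colors[k]: (r, g, b) of the new environment in direction k.
    """
    pixel_count = len(basis_images[0])
    result = [[0.0, 0.0, 0.0] for _ in range(pixel_count)]
    for image, light in zip(basis_images, light_colors):
        for p, pixel in enumerate(image):
            for c in range(3):
                # Light adds linearly, so scaled images can simply be summed.
                result[p][c] += light[c] * pixel[c]
    return result


# Hypothetical data: two lighting directions, two pixels per image.
basis_images = [
    [(1.0, 0.5, 0.0), (0.0, 0.0, 0.0)],  # lit from direction 0
    [(0.0, 0.5, 1.0), (0.2, 0.2, 0.2)],  # lit from direction 1
]
light_colors = [
    (2.0, 2.0, 2.0),  # bright white light from direction 0
    (1.0, 0.0, 0.0),  # red light from direction 1
]

relit = relight(basis_images, light_colors)
print(relit)
```

Because no surface model is involved, shadows, interreflections, and translucency recorded in the basis photographs carry over into the relit image automatically.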

An advantage of this photometric approach for capturing and rendering objects is that the object need not have well defined surfaces or easy-to-model reflectance properties. The object can have arbitrary self-shadowing, interreflection, translucency, and fine geometric detail. This is helpful for modeling and rendering human faces, which exhibit all of these properties, as do many objects we encounter in our everyday lives.

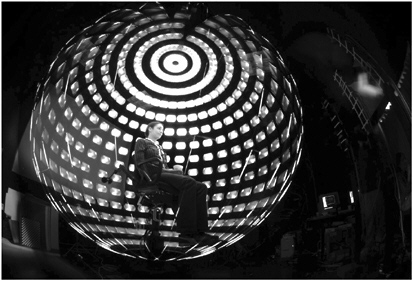

Recently, our group constructed two additional light stages. Light Stage 2 (Figure 6) uses a rotating semicircular arm of strobe lights to illuminate the face from a large number of directions in about eight seconds, much more quickly than Light Stage 1. For this short a period of time, an actor can hold a steady facial expression for the entire capture session. By blending the geometry and reflectance of faces with different facial expressions, we have been able to create novel animated performances that can be realistically rendered from new points of view and under arbitrary illumination (Hawkins et al., 2004). Mark Sagar and his colleagues at Sony Pictures Imageworks used related techniques to create the digital stunt actors of Tobey Maguire and Alfred Molina from light stage data sets for the film Spider-Man 2.

For Light Stage 3, we built a complete sphere of 156 light sources that can illuminate an actor from all directions simultaneously (Debevec et al., 2002). Each light consists of a collection of red, green, and blue LEDs interfaced to a computer so that any light can be set to any color and intensity. The light stage can be used to reproduce the illumination from a captured lighting environment by using the light stage as a 156-pixel display device for the spherical image of incident illumination. A person standing inside the sphere then becomes illuminated by a close approximation of the light that was originally captured. When composited over a background image of the environment, it appears nearly as if the person were there. This technique may improve on how green screens and virtual sets are used today. Actors in a studio can be filmed lit as if they were somewhere else, giving visual-effects artists much more control over the realism of the lighting process.

FIGURE 6 Light Stage 2 is designed to illuminate an object or a person from all possible directions in a short period of time, allowing a digital video camera to capture directly the subject’s reflectance field. Source: Hawkins et al., 2004. Reprinted with permission.

In our latest tests, we use a high-frame-rate camera to capture how an actor appears under several rapidly cycling basis lighting conditions throughout the course of a performance (Debevec et al., 2004). In this way, we can simulate the actor’s appearance under a wide variety of different illumination conditions after filming, providing directors and cinematographers with never-before-available control of the actor’s lighting during postproduction.

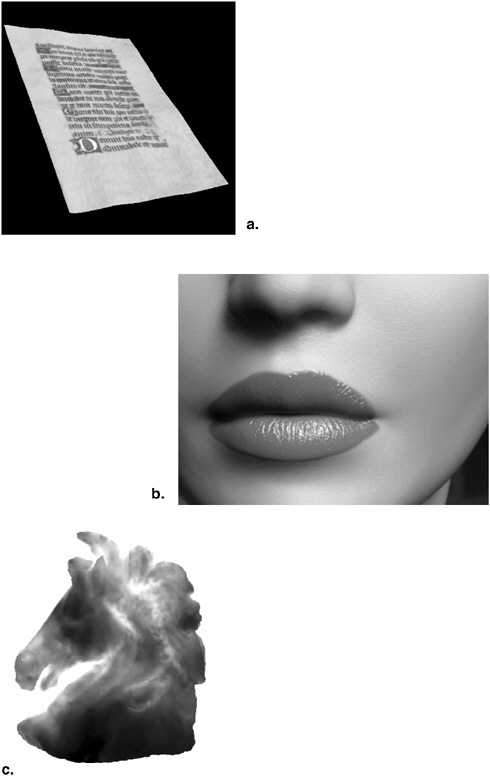

A REMAINING FRONTIER: DIGITIZING REFLECTANCE PROPERTIES

Significant challenges remain in the capture and simulation of physically accurate illumination in computer graphics. Although techniques for capturing object geometry and lighting are maturing, techniques for capturing object reflectance properties—the way the surfaces of a real-world object respond to light—are still weak. In a recent project, our laboratory presented a relatively simple technique for digitizing surfaces with varying color and shininess components (Gardner et al., 2003). We found that by moving a neon tube light source across a relatively flat object and recording the light’s reflections using a video camera we could independently estimate the diffuse color and the specular properties of every point on the object. For example, we digitized a 15th-century illuminated manuscript with colored inks and embossed gold lettering (Figure 7a). Using the derived maps for diffuse and specular reflection, we were able to render computer-graphics versions of the manuscript under any sort of lighting environment with realistic glints and reflections from different object surfaces.

A central complexity in digitizing reflectance properties for more general objects is that the way each point on an object’s surface responds to light is a complex function of the direction of incident light and the viewing direction, known as the surface’s four-dimensional BRDF. In fact, the behavior of many materials and objects is even more complicated than this, in that incident light on other parts of the object may scatter within the object material, an effect known as subsurface scattering (Hanrahan and Krueger, 1993). Because this effect is a significant component of the appearance of human skin, it has been the subject of interest in the visual-effects industry. New techniques for simulating subsurface scattering effects on computer-generated models (Jensen et al., 2001) have led to more realistic renderings of computer-generated actors (Figure 7b) and creatures, such as the Gollum character in Lord of the Rings.

Obtaining models of how real people and objects scatter light in their full generality is a subject of ongoing research. In a recent study, Goesele et al. (2004) used a computer-controlled laser to shine a narrow beam onto every point of a translucent alabaster sculpture (Figure 7c) and recorded images of the resulting light scattering using a specially chosen high-dynamic-range camera. By making the simplifying assumption that any point on the object would respond equally to any incident and radiant light direction, the dimensionality of the problem was reduced from eight to four dimensions, yielding a full characterization of the object’s interaction with light under these assumptions. As research in this area continues, we hope to develop the capability of digitizing anything, no matter what it is made of or how it reflects light, so it can become an easily manipulated, photoreal computer model. For this, we will need new acquisition and analysis techniques and continued increases in computing power and memory capacity.

ACKNOWLEDGMENTS

The author wishes to thank Andrew Gardner, Chris Tchou, Tim Hawkins, Andreas Wenger, Andrew Jones, Maya Martinez, David Wertheimer, and Lora Chen for their support during the preparation of this article. Portions of the work described in this article have been sponsored by a National Science Foundation Graduate Research Fellowship, a MURI Initiative on three-dimensional direct visualization from ONR and BMDO (grant FDN00014-96-1-1200), Interval Research Corporation, the University of Southern California, and U.S. Army contract number DAAD19-99-D-0046. Any opinions, findings, and conclusions or recommendations expressed in this paper do not necessarily reflect the views of the sponsors. More information on many of these projects is available at <http://www.debevec.org/>.

FIGURE 7 a. A digital model of a digitized illuminated manuscript lit by a captured lighting environment. Source: Gardner et al., 2003. Reprinted with permission. b. A synthetic model of a face using a simulation of subsurface scattering. Source: Jensen et al., 2001. Reprinted with permission. c. A digital model of a translucent alabaster sculpture. Source: Goesele et al., 2004. Reprinted with permission.

REFERENCES

Debevec, P. 1998. Rendering synthetic objects into real scenes: bridging traditional and image-based graphics with global illumination and high dynamic range photography. Pp. 189–198 of the Proceedings of the Association for Computing Machinery Special Interest Group on Computer Graphics and Interactive Techniques 98 International Conference. New York: ACM Press/Addison-Wesley.

Debevec, P., A. Gardner, C. Tchou, and T. Hawkins. 2004. Postproduction re-illumination of live action using time-multiplexed lighting. Technical Report No. ICT TR 05.2004. Los Angeles: Institute for Creative Technologies, University of Southern California.

Debevec, P., T. Hawkins, C. Tchou, H.P. Duiker, W. Sarokin, and M. Sagar. 2000. Acquiring the reflectance field of a human face. Pp. 145–156 of the Proceedings of the Association for Computing Machinery Special Interest Group on Computer Graphics and Interactive Techniques 2000 International Conference. New York: ACM Press/Addison-Wesley.

Debevec, P.E., and J. Malik. 1997. Recovering high dynamic range radiance maps from photographs. Pp. 369–378 of the Proceedings of the Association for Computing Machinery Special Interest Group on Computer Graphics and Interactive Techniques 97 International Conference. New York: ACM Press/Addison-Wesley.

Debevec, P., A. Wenger, C. Tchou, A. Gardner, J. Waese, and T. Hawkins. 2002. A lighting reproduction approach to live-action compositing. Association for Computing Machinery Transactions on Graphics 21(3): 547–556.

Gardner, A., C. Tchou, T. Hawkins, and P. Debevec. 2003. Linear light source reflectometry. Association for Computing Machinery Transactions on Graphics 22(3): 749–758.

Goesele, M., H.P.A. Lensch, J. Lang, C. Fuchs, and H-P. Seidel. 2004. DISCO—Acquisition of Translucent Objects. Pp. 835–844 of the Proceedings of the Association for Computing Machinery Special Interest Group on Computer Graphics and Interactive Techniques 31st International Conference, August 8–14, 2004.

Goral, C.M., K.E. Torrance, D.P. Greenberg, and B. Battaile. 1984. Modelling the interaction of light between diffuse surfaces. Association for Computing Machinery Computer Graphics 18(3): 213–222.

Hanrahan, P., and W. Krueger. 1993. Reflection from layered surfaces due to subsurface scattering. Pp. 165–174 of the Proceedings of the Association for Computing Machinery Special Interest Group on Computer Graphics and Interactive Techniques 1993 International Conference. New York: ACM Press/Addison-Wesley.

Hawkins, T., A. Wenger, C. Tchou, A. Gardner, F. Goransson, and P. Debevec. 2004. Animatable facial reflectance fields. Proceedings of the 15th Eurographics Symposium on Rendering in Norrkoping, Sweden, June 21–23, 2004.

Jensen, H.W., S.R. Marschner, M. Levoy, and P. Hanrahan. 2001. A practical model for subsurface light transport. Pp. 511–518 of the Proceedings of the Association for Computing Machinery Special Interest Group on Computer Graphics and Interactive Techniques 2001 International Conference. New York: ACM Press/Addison-Wesley.

Kajiya, J.T. 1986. The rendering equation. Association for Computing Machinery Special Interest Group on Computer Graphics and Interactive Techniques Computer Graphics 20(4): 143–150.

Miller, G.S., and C.R. Hoffman. 1984. Illumination and Reflection Maps: Simulated Objects in Simulated and Real Environments. Course Notes for Advanced Computer Graphics Animation. New York: Association for Computing Machinery Special Interest Group on Computer Graphics and Interactive Techniques Computer Graphics.

Whitted, T. 1980. An improved illumination model for shaded display. Communications of the Association for Computing Machinery 23(6): 343–349.

Williams, L. 1983. Pyramidal parametrics. Association for Computing Machinery Special Interest Group on Computer Graphics and Interactive Techniques Computer Graphics 17(3): 1–11.