Interfaces for Ground and Air Military Robots

Robot: 1 a: a machine that looks like a human being and performs various complex acts (as walking or talking) of a human being; also: a similar but fictional machine whose lack of capacity for human emotions is often emphasized; b: an efficient insensitive person who functions automatically; 2: a device that automatically performs complicated often repetitive tasks; 3: a mechanism guided by automatic controls (Merriam-Webster’s Collegiate Dictionary, Eleventh Edition)

First Law of Robotics: A robot may not injure a human being, or, through inaction, allow a human being to come to harm.

Second Law: A robot must obey orders given it by human beings, except where such orders would conflict with the First Law.

Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Source: Isaac Asimov (1989), p. 40 (Runaround) in I, Robot, Ballantine Books, NY.

U.S. Army Goal: To build intelligent autonomous systems as combat multipliers.

INTRODUCTION

In the early years of robotics and automated vehicles, the fight was against nature and not against a manifestly intelligent opponent. In this context, researchers and engineers in artificial intelligence aspired to design completely autonomous systems. In military environments, however, where prediction and anticipation are complicated by the existence of an intelligent adversary, it is essential to retain human operators in the control loop.

Future military and civilian interface technologies will be influenced greatly by currently evolving autonomous and semiautonomous systems. Operators will act at times as monitors, as controllers, and as supervisors, each role putting different and new demands on their perceptual, motor, and cognitive capacities. In particular, future combat systems will require operators to control and monitor aerial and ground robotic systems and to act as part of larger teams coordinating diverse robotic systems over multiple echelons. The goals for future operator control units are that they be (1) integrated into the soldier’s total task environment, (2) capable of being used to monitor and control multiple systems, and (3) interchangeable, with a minimum of practice, among nonexpert soldiers. Display designers should give consideration to the trade-offs between meeting diverse operational requirements and minimizing display and control requirements.

In this context, in November 2004 the National Research Council’s Committee on Human Factors hosted a workshop with support from the Army Research Laboratory. The workshop addressed the challenges of operator control unit design from the perspectives of engineers who design these interfaces and human factors specialists who provide design guidance based on an understanding of human cognitive and physical capabilities and limitations. The workshop was focused on the operational context at the brigade level and below. Physical design issues ranged from such problems as the size, resolution, and control constraints for small infantry-carried operator control units to the impact of motion for an operator control unit mounted on large armored vehicles. Cognitive requirements related to design issues include the need to support common situation awareness, multitasking, and teaming across multiple echelons. The major goal of the workshop was to identify the most important human-related research and design issues from both the engineering and human factors perspectives and to develop a list of lessons learned and fruitful research directions.

The workshop planning committee was composed of three members of the National Research Council’s Committee on Human Factors: Peter Hancock (workshop chair), University of Central Florida; John Lee, University of Iowa; and Joel Warm, University of Cincinnati. This committee was responsible for organizing the workshop and suggesting topics and presenters. Leading engineers and human factors researchers were chosen based on their work on human-robotic interface design.

The specific objectives, introduced at the workshop by Michael Barnes of the Army Research Laboratory, included:

- Examining scalable interfaces in terms of specialized requirements, 3-D displays, multimodal displays, handheld versus mounted control units, operational constraints, and common look and feel.
- Summarizing “what we know” and “what we need to know” as a precursor for design guidelines.
- Identifying research areas ripe for exploitation.

It is evident from the presentations and discussions that took place throughout the workshop that work on scalability is in its infancy. Rather than presenting definitive design solutions, presentations focused on such research topics as span of control, communication paradigms, multitasking, levels of detail, and size of display or control units. These topics represent different elements that contribute to an overall understanding of interface scalability issues.

Definition of Terms

Throughout this report, we use domain-specific terms such as unmanned vehicle (UV), unmanned (or uninhabited) aerial vehicle (UAV), unmanned (or uninhabited) combat aerial vehicle (UCAV), and unmanned ground vehicle (UGV). Unmanned vehicles refer to the various modes of transportation used without an on-board operator. They can be employed for use on the ground, in the air, at sea, or in space. Unmanned aerial vehicles refer specifically to aircraft operated without an on-board pilot. More recently, unmanned combat aerial vehicles have denoted a specific class of unmanned aerial vehicle, dedicated to tactical airborne applications and including on-board deliverable weapons. With respect to these and other remote entities, we also use the term robot, and the form of communication between such an entity and its human controller is termed human-robotic interaction. While unmanned vehicles or robots are the entities of concern, their control is effected through some form of interface that is usually computer-mediated and that itself contains some degree of intelligence (see Hancock and Chignell, 1989).

An operator control unit (OCU) is the interface by which an operator is able to control a remote, unmanned vehicle. A tactical control unit (TCU) is an interface used by an operator to control a remote, unmanned weapon system. Scalable interfaces are interfaces for robots of different sizes, types, and purposes that share common principles. Design may be guided at different times by principles related to common look and feel, definition of functions, logic of the software and the intelligence behind the robot, and functionality. At present, scalable interfaces are very much contingent on the state-of-the-art of interface design, the evolving nature of the remote system to be controlled, and the resident capacities of the respective military users. Significant change and evolution in scalable interfaces are expected in the near future; the workshop was therefore concerned with such directions and concomitant design recommendations.

Organization of the Report

The workshop included five paper sessions, each with a chair, and two presenters—one an engineer and the other a human factors scientist. Session chairs were Julie Adams, Vanderbilt University; Missy Cummings, Massachusetts Institute of Technology; John Lee, University of Iowa; Geb Thomas, University of Iowa; and Joel Warm, University of Cincinnati. The paper sessions were bracketed by an opening session that set the context and a final session organized as a panel summary and discussion. Presentations are available electronically at http://www7.nationalacademies.org/bcsse/Presentations.html. The workshop agenda and list of participants appear in Appendix A, and biographical sketches of the planning committee and speakers appear in Appendix B.

For this summary, the presentations have been regrouped around issues and themes. The first section sets the context; in general terms, it provides the scope of the current program and describes progress that has been made in the development and application of military robots. The second section summarizes papers on human-robotic interface design issues from theoretical, experimental, and observational perspectives. The third section presents applied research findings and examines the difficulties in robotic field implementations in a variety of domains. The fourth section covers the coordination, delegation, and communication among human team members and between these humans and the robots they control. The final section provides a summary of the key technical and ethical issues raised by presenters and participants.

Setting the Context: Overview of Military Robotics

Charles Shoemaker, Army Research Laboratory

Robotics is a revolutionary technology for America’s military forces. The vision for the future consists of tactical unmanned aerial vehicles, unmanned shooter platforms, robotic seekers, robotic sensors, and robotic control platforms. Over the past 20 years, the budget for robotic-related work has increased from approximately $40 million to $750 million annually. Most of the current funding is invested in research to support work on the future combat environment.

The motivations for high levels of autonomy in military systems are increased survivability, increased span of control (one on many), reduced communication data rate, reduced supervisory workload, and new operational flexibility. The major challenges lie in two areas: (1) the network requirements for information and data processing by the systems and (2) reducing supervisory load.

The urban vision for the Future Force Warrior consists of ground and air units, both manned and unmanned, on a single network (see Figure 1). Examples of unmanned systems include unmanned aerial vehicles, large and small unmanned ground vehicles, unmanned ground stations, backpackable robotics up to 40 lb, mules (a two-ton supply vehicle that follows four-man squads) and packable robotics, and armed robotic vehicles weighing 10 to 15 tons. Finally, an automated navigation system is planned to provide such capabilities to the manned vehicles as well as to the armed robotic vehicles and mules.

The Collaborative Technology Alliance has been established to provide a research base for robotic technology. It is composed of university, government, and private-sector research and engineering laboratories that have been funded to provide key robotics technology to the military through basic and applied research. The alliance is focused on several major areas, including visual perception, intelligent control in complex tactical environments, and human-machine interface.

FIGURE 1 Future combat systems, increment I.

Advances in visual perception include:

- LADAR (laser detection and ranging) processing refinements leading to finer resolution and better separation of the objects from their backgrounds (the evolution of technology now allows near field perception to reach out to 200 meters);
- new stereo techniques tuned to complex environments, such as forests and grassy environments;
- motion stereo for mid-range perception;
- detecting water;
- detecting and identifying thin wires;
- detecting moving objects; and
- 360-degree situation awareness.

With LADAR, it is difficult to see holes in the terrain; however, improvements are being made. The goal is to generate a fused visualization by combining LADAR and other stereo image sources to create a more robust system.

A second focus of the Collaborative Technology Alliance is intelligent control. Advances in this area include local planning, maneuvering in dynamic environments, implementing tactical behaviors, and supporting collaborative operations among manned and unmanned vehicles. The Reference Model Architecture for Unmanned Ground Vehicles, a hierarchical structure of goals and commands, has been developed to provide a world representation at seven hierarchical levels, including planning, replanning, and reacting. A multiresolution map allows data to flow up and down across levels of resolution, thus supporting planning at each level. Problems arise, however, when a time lag occurs in translating the real-time, high-resolution data generated by the robot to the map.
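The upward flow of data in a multiresolution map can be illustrated with a small sketch. It is not drawn from the Reference Model Architecture itself: the occupancy-grid representation, the 2x2 coarsening rule, the number of levels, and the names `coarsen` and `build_pyramid` are all assumptions made for illustration.

```python
# Hypothetical sketch: a pyramid of occupancy grids (1 = obstacle, 0 = free).
# Each coarser level summarizes 2x2 blocks of the level below it, so a
# high-level planner sees a conservative, low-resolution view of the terrain.

def coarsen(grid):
    """Halve the resolution: a coarse cell is blocked if any fine cell in it is."""
    rows, cols = len(grid), len(grid[0])
    return [
        [
            max(
                grid[r + dr][c + dc]
                for dr in (0, 1)
                for dc in (0, 1)
                if r + dr < rows and c + dc < cols
            )
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]


def build_pyramid(fine_grid, levels):
    """Return [finest, ..., coarsest] grids; `levels` is an assumed parameter."""
    pyramid = [fine_grid]
    for _ in range(levels - 1):
        pyramid.append(coarsen(pyramid[-1]))
    return pyramid


fine = [
    [0, 0, 0, 1],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
pyramid = build_pyramid(fine, levels=3)
```

In this sketch a single obstacle detected at the finest level propagates to every coarser level. The time-lag problem described above would correspond to the coarser grids being rebuilt later than the fine grid is updated.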

A third focus is the integration of technologies and human performance testing, including operator control unit configurations. The concept is based on collaboration between ground and air vehicles. The air vehicle provides the baseline and the change (e.g., target location) in the environment, and the ground vehicle autonomously travels toward the change (target location). The goal of the operator is to diagnose the difficulty of the terrain and maximize coverage for a patrol mission, while minimizing both exposure and time. All those features will be available to the planning operator when he or she wants to move the robot from Point A to Point B. In this design and development process, there is a strong ethic of involving soldiers early in the process. Interfaces currently under development include a 17-inch screen, a personal digital assistant (PDA) with similar functionality, and a 12-inch tablet personal computer that can be attached to a mule.

The current level of field performance of the experimental unmanned vehicles indicates that they can run in snow and rain; in some instances, they have traveled 7 kilometers over terrain without operator input. Comparisons of the experimental unmanned vehicles to a manned Humvee show that the two systems perform similarly at night. Of a total of 600 kilometers of runs, the percentage of distance accomplished autonomously was 93 percent overall in the Fort Indiantown Gap environment and 96 percent in the urban area used for the study. Approximately 88 percent of the time, the robot operated autonomously; human interventions lasted 1 to 3 minutes.

HUMAN-ROBOTIC INTERACTION: THEORETICAL, EXPERIMENTAL, AND OBSERVATIONAL PERSPECTIVES

The military has pioneered the development of technology to support remote action using automated and semiautomated robots. However, the degree of benefit derived from such technical developments is restricted by the bandwidth of communication and the effectiveness of the individual and collective interfaces to these remote resources. Specifically, the interaction with the human controller represents a crucial bottleneck for effectiveness. Facilitating this interaction is the domain of human factors.

This section includes four presentations. David Dahn’s presentation covered the work currently being performed by Micro Analysis and Design on interfaces for Army robotic systems. Michael Goodrich’s presentation provided data from laboratory studies on controlling multiple robots. Kevin Bennett’s talk provided guidance and examples of interfaces designed using cognitive engineering principles. Christopher Wickens offered the human factors/human engineering perspective to interface design. Some key points that emerged from these presentations included:

- Unmanned ground vehicles require more detailed imagery than unmanned aerial vehicles. Because the ground environment is more dynamic and uncertain, synthetic vision based on LADAR and radar images becomes very important. Current bandwidth limitations restrict the level of detail that can be transmitted from the vehicle to the remote interface.
- Operator workload capacity is challenged by requiring management of more than one robot at a time or performing more than one task at a time. Overload can result in errors.
- Designs must account for issues of operator attention: either focusing too long on one task or having attention diverted by new and compelling, but not necessarily relevant, information can lead to errors.
- Operator confusion will be minimized by keeping the interface design as simple as feasible for the task at hand. Although standardization can be useful in this regard, it may induce confusion. For example, standardization across platforms may lead to a situation in which the operator does not develop different mental models for each system being controlled, a situation that can lead to errors.
- Direct manipulation graphic interfaces for multitasking merit further consideration.

Designing a Family of Interfaces

David Dahn, Micro Analysis and Design, Inc.

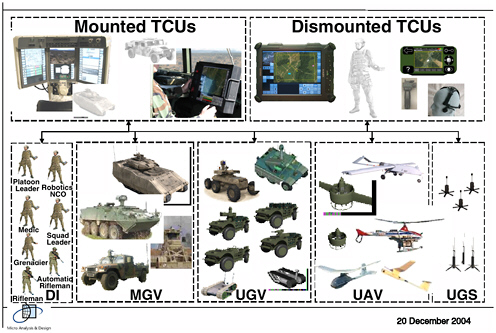

The overall technical objective of the work at Micro Analysis and Design is to provide a family of modern tactical control unit interfaces to weapon systems with unmanned vehicle control capability that are usable in harsh environments while in motion and incorporating supervision and command and control capabilities. Specifically, tactical control units are being designed to provide multimodal input and output capabilities and multifunction displays, at the same time working to minimize warfighter workload. Other desirable features include generating reliable and trusted automation systems and advancing situation awareness. As shown in Figure 2, the family of warfighter tactical control units being developed by the Army is diverse and scalable. They are designed for a wide variety of operators and systems, including dismounted infantry soldiers, manned ground vehicles, unmanned ground and air vehicles, and unmanned ground stations. A central focus is achieving interoperability among these autonomous and manned systems.

FIGURE 2 Family of warfighter tactical control units.

A critical consideration in interface design is operator workload. Switching from one system to another and operating in different modes can easily result in cognitive overload. Furthermore, physical workload and the physical environment can also be significant sources of demand. Tactical control units are being designed to support distributed workload through multimodal displays and controls incorporating vision, audition, speech, and touch, as well as through intelligent aiding. Situation understanding is supported by maps, operational graphic overlays, and weapon system mapping software. The command and control intelligent architecture is a rule-based system that interacts with battlefield information to provide a variety of aids, including warnings, advisories, and route planning assistance. Human factors principles that have been followed in developing design alternatives include analyzing tactical needs, drawing on past operator control unit successes, reducing operator workload through the use of intelligent aids, using common and clear symbology, grouping similar controls, employing a modular design strategy, and tailoring interfaces to warfighters.
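As a rough illustration of how a rule-based aid might turn battlefield information into warnings and advisories, consider the following minimal sketch. It does not describe the Micro Analysis and Design architecture: the `Rule` class, the state keys `fuel_fraction` and `route_blocked`, the 20 percent threshold, and the messages are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    """One hypothetical rule: a condition over the current state plus an aid."""
    kind: str                        # "warning" or "advisory"
    message: str                     # text shown to the operator
    applies: Callable[[dict], bool]  # condition evaluated against the state


# Illustrative rules only; thresholds and state keys are assumptions.
RULES = [
    Rule("warning", "Fuel low on unmanned vehicle",
         lambda state: state.get("fuel_fraction", 1.0) < 0.2),
    Rule("advisory", "Planned route crosses a reported obstacle",
         lambda state: state.get("route_blocked", False)),
]


def evaluate(state, rules=RULES):
    """Return (kind, message) for every rule whose condition holds."""
    return [(rule.kind, rule.message) for rule in rules if rule.applies(state)]


aids = evaluate({"fuel_fraction": 0.1, "route_blocked": True})
```

Keeping each rule as data rather than as branching code is one way to support the modular design strategy mentioned above, since rules can be added or tailored per platform without changing the evaluation loop.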

The tactical behaviors currently under investigation by Micro Analysis and Design are divided into collective tasks and individual tasks. Examples of collective tasks include performing route reconnaissance or conducting a tactical move (of more than one unmanned vehicle). Individual tasks include command and control, communicating, moving, looking, and shooting.

The objectives discussed for future control architecture and the tactical behavior of unmanned systems are:

- Deploying unmanned systems as “co-combatants,” which includes ensuring competent execution of collective tasks, individual tasks, and skills (i.e., tactical behaviors); developing system competency ratings for unmanned vehicles tailored to robotic roles and analogous to Army military occupational specialty/skill levels; communicating as a soldier (two-way receive/transmit); being goal/task oriented (versus prescriptive automation); and working in teams (manned and unmanned).
- Developing integrated, scalable architecture from servo control up to small combat unit to allow autonomous systems to behave tactically (look, move, communicate, shoot) and to interoperate with manned weapons and command, control, communication, computer, surveillance, and reconnaissance (C4ISR) systems.
- Using a common operating system to provide warfighters (i.e., “deciders”) anywhere in the battle space with a common command, control, and communications approach to tasking and managing distributed battlefield sensors and shooters.

Span of Control and Human Robot Teams: An Interface Perspective

Michael A. Goodrich, Brigham Young University

The first part of Goodrich’s presentation focused on controlling multiple robots, and the second described the interface design work in his laboratory.

Research on Multiple Robots

The research question is how many robots, team members, or tasks can a single individual manage in a given time frame? In essence, how long can an operator neglect one management responsibility in favor of another before performance degrades beyond an acceptable limit? This, of course, depends on the complexity of the tasks and the amount of autonomy that can be given to the robot.

To examine these questions, Goodrich developed measures of neglect and recovery. Neglect, measured as neglect time (NT), was defined as the amount of time an operator can turn his or her attention away from the robot and still maintain an adequate level of performance. Different robots have different levels of autonomy, different performance degradation curves, and thus different neglect times. Recovery is measured by interaction time (IT), defined as the time it takes an operator to regain control and reestablish an acceptable performance level. The switch from NT to IT can be more or less costly depending on the diversity of tasks, the complexity of the interface, and the degree of autonomy. Taking all these factors into consideration, equations have been developed to predict how much neglect time is associated with various robot control tasks. For relatively simple, homogeneous tasks, the neglect time will be longer and allow the operator to control more than one robot; for more complex, heterogeneous tasks, the allowable neglect time will be shorter, thus reducing the number of robots that can be controlled.
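The relationship between neglect time, interaction time, and the number of robots one operator can manage can be made concrete with a short sketch. The summary does not reproduce the equations presented at the workshop; the form used here, fan-out = NT / IT + 1, is a commonly cited estimate from the human-robot interaction literature and is an assumption in this context, as are the function name and the example values.

```python
import math


def fan_out(neglect_time_s: float, interaction_time_s: float) -> int:
    """Estimate how many robots one operator can manage.

    Assumed model (not taken from this report): while one robot is safely
    neglected for NT seconds, the operator can service NT / IT other robots,
    each needing IT seconds of attention, giving floor(NT / IT) + 1 in total.
    """
    if neglect_time_s < 0 or interaction_time_s <= 0:
        raise ValueError("NT must be non-negative and IT must be positive")
    return math.floor(neglect_time_s / interaction_time_s) + 1


# Hypothetical values: a simple, homogeneous task versus a complex one.
simple_task = fan_out(neglect_time_s=60, interaction_time_s=15)
complex_task = fan_out(neglect_time_s=10, interaction_time_s=20)
```

Under these illustrative numbers, the longer neglect time of the simple task lets the operator handle several robots, while the complex task leaves room for only one, which matches the qualitative conclusion above.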

Given the research on neglect and task complexity, what can be done with interface design to assist an operator in managing multiple assets? The second part of Goodrich’s presentation dealt with experiments on interface design.

FIGURE 3 The Brigham Young University MagiCC PDA screen.

SOURCE: Quigley, Goodrich, and Beard (2004).

Research on Interface Design

Alternative control schemes were designed for the operation of semiautonomous fixed-wing unmanned aerial vehicles as shown in Figure 3. For the display, a simplified “wing view” of the vehicle was used to convey the roll and the altitude without a moving horizon. Heading and velocity were displayed as gauges that resemble a compass and a speedometer. For the velocity, the operator was only provided with the terms “min” and “max.” In order to keep the vehicle from crashing on the ground, an image of the ground was presented in the visual flight display. The interface maintained constant flight parameters until a new command was received. For all three parameters there was a “desired” and an “actual” visualization.

The control interface included (1) a graphic direct manipulation interface for PDA and full-size computers, (2) a voice recognition system, (3) a force feedback joystick, and (4) a force sensing interface (using IBM TrackPoint, or a novel “physical icon” interaction, in which the operator holds a physical model of the vehicle).

The PDA-based direct manipulation interface resulted in a median performance time of 2.62 seconds, slower than the joystick and the physical icon (1.44 and 1.61 seconds, respectively). The direct manipulation interface offered a compromise between the physical interface and the numeric parameter-based interface. The greatest strength of the direct manipulation interface over the physical interface was the ability of the interaction scheme to function as the user’s attention decreased, that is, it functions during NT (neglect time). With physical objects such as an icon or a joystick, an operator is required to continuously hold the altitude, while for the direct manipulation interface, interaction is not required until a change is needed.

Interface Design: A Cognitive Systems Engineering Perspective

Kevin B. Bennett, Wright State University

Bennett’s presentation focused on interface design from a cognitive systems engineering perspective. He defined a cognitive triad as consisting of an agent, a domain, and an interface. The mapping between domain and interface includes the content critical to describing the work domain and how that content is presented at the interface. The other important mapping is between the interface and the capabilities and limitations of the agent.

In the context of military command and control, there are both intent-driven and law-driven aspects to battlefield action. For example, there is a law-driven technological core in the domain consisting largely of the constraints of physics—bullets fly so far, tanks go so fast, and so forth. Intent-driven aspects include the intent of the commander (agent) and the intent of the enemy. Interpretation of these intents can be ambiguous. In his current research, the assumption was that commanders are highly trained, frequent users. In this context, the most suitable interface was a combination of visual icons and geometrical forms.

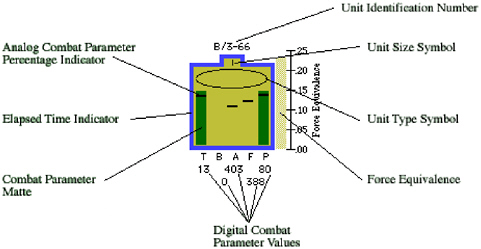

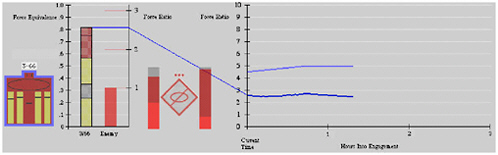

Figure 4 presents the icon that was developed. Combat power is the primary concern in this domain. There are at least five elements that contributed to combat power: tanks, armored personnel carriers, ammunition, fuel, and personnel. Bennett and his colleagues have translated these parameters into elements that are relevant in this domain. For example, instead of gallons of gas, they display kilometers of travel. The background color of the icon provides salient information concerning combat status. The more detailed information appears in the form of an analog percentage indicator. This representation can be used at all levels (e.g., brigade, company, platoon).

FIGURE 4 Interface design: Icon structure.

FIGURE 5 Interface design: Graphical form.

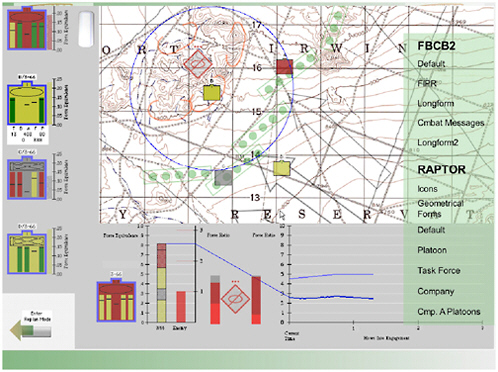

The graphical forms were developed for mission design—the example shown in Figure 5 is an offensive scenario. It is a graphical representation of the overall combat power or force equivalents of the friendly units. While the heights of the bars represent the force equivalents, combat power is also given as a function of time and with respect to the planned mission objectives. The overall view given in Figure 6 includes a map that allows drilling down to access greater detail by pointing and clicking. This provides a brief summary of different echelons, the combat power, and the location of the forces on the map. Bennett’s conclusion is that the most important feature for performance is an intact perception-action loop.

FIGURE 6 Interface design: Top-level display.

Human Factors Observations on Human-Robotic Interfaces

Christopher D. Wickens, University of Illinois at Urbana-Champaign

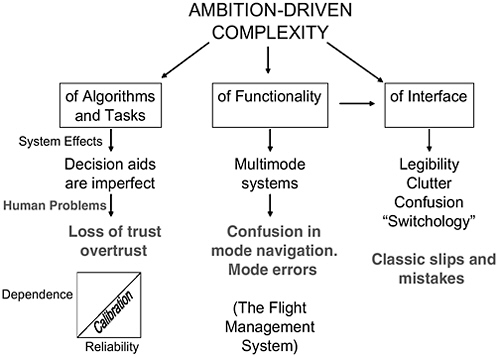

Wickens began with a key principle of interface design—simplicity. His research suggests lowering ambition, filtering out tasks, and providing adequate redundancy to ensure backup when systems fail. Figure 7 shows the pitfalls associated with ambition-driven complexity. Minimizing confusion is critical. For example, it is important to avoid making different functions look too similar or opening two different windows in the same display frame. Furthermore, systems in which adaptive automation makes mode changes without operator consent can increase confusion and may cause the operator to forget with which vehicle he or she is dealing. Despite careful design, operator error is inevitable, so all consequences should be reversible.

A large body of extant human factors research points to the following guidelines for designing complex systems and their interfaces:

-

Only the functions that are reliable enough should be automated (reliability below 70 to 80 percent degrades performance and therefore should not be implemented).

-

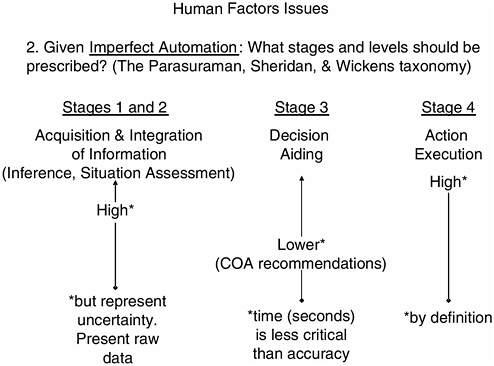

Automation is imperfect. The taxonomy proposed by Parasuraman, Sheridan, and Wickens (2000) provides guidance in deciding which types of functions to automate (see Figure 8).

-

Maintaining situation awareness is critical to effective task performance.

-

Performance errors occur when an operator spends more than optimal engagement time on one task to the detriment of another (cognitive tunneling) or when attention is diverted from an important task by engaging compelling information. Easily identified alerts for neglected tasks can be helpful.

-

The operator will need to access detail at various points during task performance. However, it is important that a global display of the situation is always present.

The research Wickens discussed shows that things will go wrong; therefore, he said, it is critical that the design (and training) accommodate expected imperfections. Human factors in design cannot consist of following a checklist or a set of written standards. It is important to draw on lessons learned from prior human factors Army studies (National Research Council, 1997; Endsley, 1996; Wickens and Rose, 2001).

APPLICATION AND REAL ENVIRONMENTS

The following presentations were grouped together because each presents examples of the implementation and use of humans and robots in the field; together they demonstrate the diversity and complexity of the realm of human-robotic interaction. The first presentation was given by Robin Murphy, one of the first researchers to be involved in robotic urban search and rescue, who headed a team at the World Trade Center on September 11, 2001. John Pye presented lessons learned when human-packable robotic systems are deployed in the field, specifically robots and robotic controllers for U.S. troops serving in Afghanistan. Scott Thayer presented the operational issues associated with robots in a subterranean environment.

A number of key points were raised in these presentations. For Murphy and her colleagues, navigation was not a major problem. In search and rescue, the only decisions to be made relate to finding a victim or identifying a structural problem. In addition, weight is not a key issue and as a result larger monitors can be used for conducting a visual task that can benefit from higher resolution on bigger displays. For dismounted soldiers, as Pye discussed, weight means everything and is critical for the successful implementation of any robotic system. Thayer emphasized the attentional cost of controlling multiple robots and switching among tasks. In current practice, it takes multiple operators to control one robot, rather than one operator for multiple robots (the desired force multiplier).

It became clear across the presentations that the domain of human-robotic interaction is not unitary in demands, needs, or goals. In addition to the cognitive aspect of controlling a robot, there are still many unresolved physical design issues. These include, but are not limited to, resolution, size, weight, and control hardware.

Navigation Is Not the Problem

Robin Murphy, Center for Robot-Assisted Search and Rescue, University of South Florida

The first known actual use of robots for urban search and rescue was at the World Trade Center disaster on September 11, 2001. These robots were used to search for victims; to search for paths through the rubble as a guide to excavation; to inspect structures; and to detect hazardous materials. They were used because they can travel deeper than traditional search equipment (robots can routinely penetrate 5 to 20 meters into the interior of the rubble pile compared with 2 meters for a camera mounted on a pole); they can enter spaces too small for humans or dogs; and they can enter a place still on fire or one posing great risk of structural collapse. All robots were teleoperated due to the unexpected complexity of the environment, the limitations of the sensors, and user acceptance issues.

Search and rescue is a good domain for the study of human-robotic interaction because it involves people in many roles: robot operators, remote consumers of the information from the robot, and “out front” people such as victims and workers. Search and rescue is an emerging domain in which the environment is the natural adversary. The Center for Robot-Assisted Search and Rescue now operates with a budget of $2 million to $3 million per year in the following areas: response team training (certifies over 400 rescuers from 21 states and 10 counties), basic research, prototyping, and field evaluation.

For search and rescue, the navigation choices are not significant. The robot is stationary most of the time, while the operators and consumers of the data are busy identifying and interpreting relevant data transmitted from the robot. In current practice, two observers interact with one robot; the bottleneck is situation awareness, not navigation. Information flow is hierarchical and lateral, and roles change constantly. Shared visual interaction is the common ground (e.g., robot-assisted medical reach-back). The task domain supports physically co-located teams for safety. The team task is to interpret the information from robot sensors and not to drive the robot.

Murphy and her colleagues have conducted two situation awareness (SA) studies measuring the percentage of statements related to various tasks and team members for high-SA teams and low-SA teams. Individuals were assigned to high-SA and low-SA teams by experts who rated each individual’s level on a five-point scale while observing his or her task performance.

The results showed that high-SA teams made significantly more comments, talked more about the search mission, and asked fewer questions of their team members. Operators with high SA were 9 times more likely to locate a victim.

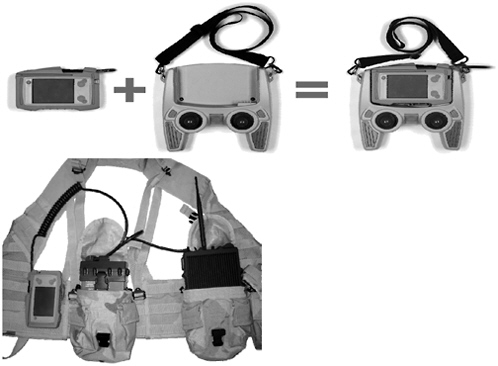

Development and Field Trials of the Advanced Robotic Controller—Lessons Learned

John Pye, Exponent Failure Analysis Associates

Pye and his colleagues at Exponent have developed the advanced robotic controller (ARC) and field-tested it in Afghanistan. The primary goal was to create a robotic controller that would ensure adequate network communication and information sharing among platoon members while remaining at an acceptable weight. The components of the system, as shown in Figure 9, include a PDA, a mesh radio, two joystick controllers with seven buttons, and one battery that runs for approximately 30 hours. The PDA is used to view images from the robot sensor input, including video, maps, and global positioning system locations. The joystick is connected to the mesh radio; its shape and the layout of its buttons were familiar to the soldiers in the field. The ARC was carried by the soldiers assigned to control the robot; the rest of the soldiers in the platoon carried a mesh radio and a PDA. The control interface was not standardized across the deployed robotic systems (unmanned aerial vehicles and unmanned ground vehicles). This decision was based on the fact that there was little task sharing. Each human controller was assigned a specific robot. A controller was required to monitor the PDA for streaming video from the robot as well as to process other data and images of assets in the field. The operator also received messages from other operators and video from the robot. Robots were considered team members and were usually in sight of the controller.

FIGURE 9 Advanced robotic controller components.

A total of 60 ARCs were tested during a one-month period in Afghanistan. Members of the Army Rapid Equipping Force comprised the test group. Their role was to field test new technologies designed to meet operational shortcomings. Several key lessons were learned from the field test. First, with regard to the controller, weight was of central importance: even at 6 lbs., the ARC was judged to be too heavy. Some soldiers indicated that carrying the controller would require getting rid of another piece of equipment that was also important for warfighting and survival. Second, soldiers believed that they could handle more buttons on the joystick controller. Third, the resolution and size of images on the PDA screen were seen as useful but not optimal for use in command missions. Finally, as noted above, networking is critical. The capability for voice communication between devices and the control of the robot is central, as the robot is the sensor feed and this information must be shared by platoon members. In terms of span of control, a minimum of two dismounted infantry were needed to control one robot: one controlling the robot and one attending to the local environment.

Adaptive Interfaces for Robots

Scott M. Thayer, Carnegie Mellon University

Thayer opened his presentation with a review of robot interface properties suggested by a National Research Council report (1992). These include:

- Flexibility: able to control a host of current and planned unmanned platforms.
- Adaptability: able to support different operators and skill levels.
- Robustness: able to succeed despite operations in an uncertain, dynamic, and hostile world.
- Responsiveness: able to provide a mission-centric perspective that enables operators to react in tactical timeframes.

A primary goal of the military robotic programs is to make it possible for one remote operator to control multiple robots on the front line, with the effect of taking the soldiers out of harm’s way. Thayer’s work focuses on subterranean robots. These robots often do not have an interface outside the engineering world; that is, many times they have no control communication from an operator, no global positioning system, and a very high level of autonomy. They are considered disposable. The robot’s job is to search abandoned mines and return with a map or the location of trapped miners. In this real-world environment, the ratio of operators to robots is nine to one.

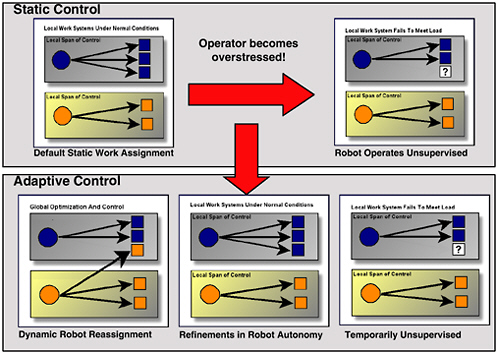

Moving from the subterranean context to the battlefield creates a more complex set of tasks for both the robot and the operator. In a combat situation, the tasks include moving, shooting, communicating, and sustaining. These tasks are accomplished by individuals, by units, by platoons, and so forth. At each level there are rules. Humans can learn the rules and operate autonomously. Robots, in contrast, are far less capable; they require supervisory control by a human operator. The key question is how to effectively extend the span of control of the operator. One proposal is adaptive control in which workload can be automatically shifted from one operator to another or from the operator to the robot, using adjustable or sliding autonomy, when a cognitive overload condition is approached (Figure 10). At one end of the scale is a fully autonomous system and at the other end is a teleoperated system. In between are various combinations of automation and human supervision or control.

FIGURE 10 Static versus adaptive control.
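The sliding-autonomy idea described above can be illustrated with a minimal sketch. It is not Thayer's implementation: the three discrete levels, the normalized workload estimate, and both thresholds are assumptions made only for illustration, and the sketch covers shifting work between operator and robot, not between operators.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Hypothetical discrete points on the teleoperation-to-autonomy scale."""
    TELEOPERATED = 0
    SUPERVISED = 1
    FULLY_AUTONOMOUS = 2


def adjust_autonomy(level, workload, overload=0.8, underload=0.4):
    """Return the next autonomy level for one robot.

    workload is an assumed normalized estimate of operator load in [0, 1];
    the two thresholds are illustrative values, not figures from the workshop.
    """
    if workload >= overload and level < AutonomyLevel.FULLY_AUTONOMOUS:
        # Operator is approaching overload: shift work to the robot.
        return AutonomyLevel(level + 1)
    if workload <= underload and level > AutonomyLevel.TELEOPERATED:
        # Operator has spare capacity: hand some control back.
        return AutonomyLevel(level - 1)
    # Between the thresholds the level is left unchanged.
    return level
```

The gap between the two thresholds keeps the level from oscillating when the workload estimate hovers near a single cutoff; how operator workload would actually be measured is left open here.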

Thayer proposed to invest more in interface design, particularly to ensure compatibility between the spatial and temporal aspects of the task and the tools provided at the interface. One example of a spatial task is route planning using spatial displays rather than command language as an aid to the operator. For temporal planning, Thayer is working on a display that provides events on a time line.

COORDINATION, COMMUNICATION, AND DELEGATION

This section combines three presentations on the ways in which human operators communicate and collaborate among themselves in order to operate the robots and on the ways in which humans and robots should communicate. Nancy Cooke examined coordination among robot team members and sought to define measures of coordination among humans. The other two presentations provided specific approaches to delegation. Christopher Miller discussed delegation in the context of supervisory control. Robert Hoffman examined delegation in terms of the language (policy) of communication between intelligent agents and humans. A central theme running through each of these presentations is the need to understand and interpret the intent of the communicator on the part of the receiver (either human or robot).

Design for Coordination and Control

Nancy Cooke, Arizona State University East

Cooke and her colleagues have conducted research in the context of a simulated ground control station for unmanned aerial vehicles in which three-person teams are required to fly a simulated unmanned aerial vehicle for the purpose of taking reconnaissance photographs. The three roles include the air vehicle operator, the payload operator, and the data exploitation, mission planning, and communication operator. Team members received unique, yet overlapping, training. The roles are interdependent and require coordination and information sharing in order to complete a mission successfully. Operators can be either in a co-located environment in which they see each other and all the displays, or they can be geographically distributed. How team members were distributed significantly affected team process: co-located teams demonstrated “better” team process than the distributed teams. However, under high-workload conditions, team performance was better for distributed teams. Compared with co-located teams, distributed teams spent less time debriefing, talked less, displayed a greater variety of communication flow patterns (less stability), and were more likely to experience difficulties when communication breakdowns occurred.

Team coordination in the context of command and control consists of the timely and adaptive sharing of information among team members. Poor coordination contributes to system-wide failure. In Cooke’s work on human-robot teams in the UAV context, human failures were estimated as the cause of approximately 33 to 43 percent of the failures. A portion of those failures involved coordination problems. An example is provided below.

According to the accident investigation board report, the Predator experienced a fuel problem during its descent. Upon entering instrument meteorological conditions and experiencing aircraft icing, the Predator lost engine power. The two Predator pilots, who control the aircraft from a ground station, executed critical action procedures, but were unable to land the aircraft safely. It crashed in a wooded area.

According to the report, the pilots’ attention became too focused on flying the Predator in icing and weather conditions they had rarely encountered. The report also cites lack of communication between the two pilots during the flight emergency as a cause of the accident.

According to Cooke, technology can hinder coordination. Activities that facilitate communication include:

- Development of coordination-centric cognitive task analysis.
- Empirical work in synthetic testing environments on coordination.
- Usability testing and empirical work to test intervention.
- Development of coordination metrics and models.

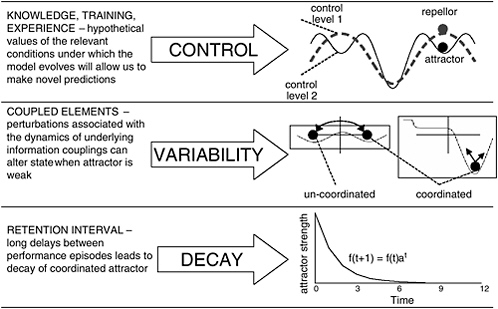

Figure 11 provides a model of coordination proposed by Cooke. At this stage, the model consists of various paradigms adopted from the dynamical systems literature.

FIGURE 11 A model of coordination.

Delegation Approaches for Scalable Robot and Vehicle Control

Christopher Miller, Smart Information Flows Technology

The goal of Miller’s work is to emulate delegation in human-human work relationships to allow the operator to smoothly adjust the level of automation used, depending on such variables as time available, workload, decision criticality, and trust. It is important to preserve the same degree of intent specification when the human is interacting with robots. Delegation means giving to a subordinate the responsibility to perform a task along with some authority and resources. For the relationship to be defined as delegation, the supervisor must retain overall control and authority. The supervisor should have the ability to interact flexibly at multiple levels of control and variable granularity, while maintaining the capabilities of subordinates.

There is a trade-off for delegation between the workload of the supervisor and the ability of the subordinate to adequately carry out the delegated work. Each time the supervisor delegates a task, there will be some degree of uncertainty about performance and competency. It is critical that the subordinate understand the intent of the supervisor and possess the skill or knowledge to complete the task successfully. Intent specification is central.

The metaphor Miller and his colleagues have used in the study of delegation is that of a playbook, an analogy to a sports team’s book of approved plays. In a Playbook™ approach, delegation requires a shared knowledge by supervisor and subordinates of domain goals, tasks, and actions. The supervisor calls plays, and subordinates (robots) have autonomy within the scope of the play. Each play references a defined range of plan or behavior alternatives. Miller briefly described five components of task delegation that can be used in different combinations for different styles of delegation and in different domains. These include goals, plans, constraints, stipulations, and value statements. Each type of task delegation requires a rich declaration of intent.

Finding the appropriate level, set, and range of delegable activities requires traditional human-computer interaction design techniques. The playbook enables a human supervisor to use varying levels of automation for task planning and delegation to a knowledgeable subordinate. Playbook™ accomplishes this by making use of rich and detailed models of the tasks to be performed that are shared by both the supervisor and the automation. Each task is accomplished by specific mixes of humans and automation.
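A playbook-style delegation can be pictured as a small data structure. The sketch below is illustrative only and is not the Playbook™ software: the class names, the field names, and the way constraints, stipulations, and value statements are interpreted (options ruled out, options required, and preference weights, respectively) are all assumptions, since the presentation summary does not define them.

```python
from dataclasses import dataclass, field


@dataclass
class Play:
    """A hypothetical play: a named task with a bounded set of alternatives."""
    name: str
    alternatives: list  # plan or behavior options that the play permits


@dataclass
class Delegation:
    """One delegated task built from the five components Miller listed."""
    goal: str
    play: Play                                         # the plan being called
    constraints: list = field(default_factory=list)    # assumed: options ruled out
    stipulations: list = field(default_factory=list)   # assumed: options required
    values: dict = field(default_factory=dict)         # assumed: option -> preference

    def choose(self):
        """Subordinate picks an alternative within the scope of the play."""
        allowed = [a for a in self.play.alternatives if a not in self.constraints]
        required = [a for a in allowed if a in self.stipulations]
        candidates = required or allowed
        if not candidates:
            raise ValueError("no alternative satisfies the supervisor's intent")
        # Among the remaining options, prefer the one the supervisor values most.
        return max(candidates, key=lambda a: self.values.get(a, 0))


# Example: the supervisor calls a play and narrows it; the robot decides the rest.
recon = Play("route_recon", ["north_road", "river_bed", "ridge_line"])
order = Delegation("observe bridge", recon,
                   constraints=["north_road"],
                   values={"river_bed": 1, "ridge_line": 3})
selected = order.choose()
```

A supervisor who supplies only the goal and the play leaves the subordinate wide latitude; adding constraints, stipulations, or values tightens the declaration of intent without forcing the supervisor down to step-by-step control.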

The Creation of Complex Technology for Navigating Complex Worlds

Robert Hoffman, Institute for Human and Machine Cognition

Hoffman’s work focuses on cognitive demands and requirements for complex cognitive systems. Complex cognitive systems, also known as complex sociotechnical domains, include teams composed of humans and technology. These systems are dynamic and involve continual adaptation, multiple points of view, multiple agendas and ambitions, and heterogeneous capabilities and methods.

Teams of humans and robots represent one type of complex cognitive system. Humans receive input from robots in terms of sensory images and warnings, and they in turn monitor, supervise, and control the action of the robot. In this arrangement, humans are asked to infer and monitor the intent of the robot. Inferring intent is a complex task. Humans are asked to understand progress toward goals in terms that are machine-relevant representations rather than human-centered representations. Both human and robot team members need to make their targets, states, capacities, intentions, changes, and upcoming actions obvious to the other intelligences that supervise and coordinate with them. How does one team member tell another team member, human or robot, that it is having trouble performing a function but is not yet failing to perform? How and when does a team member effectively reveal or communicate that it is moving toward a limit of its capacity? How are goals changed and communicated when the situation changes?

One promising framework proposed by Hoffman for managing team behavior and interaction is “knowledgeable agent-oriented policy services.” This framework was developed to control software agents operating in teams. It provides a set of policy services designed to regulate the behavior of the system without requiring the cooperation of the components that are being governed. Policies specify authorization—an agent in the system is allowed to behave one way and not in another. Policies also specify obligation—there are actions or behaviors that are absolutely required and those that are forbidden. Policies can be assigned a priority, and these can change depending on the situation. The types of policies include rules for controlling resources, monitoring and responding to other team members, sending and receiving messages, sequencing information, and moving from one location to another. Although this work is still in the research stage, it may provide useful direction for thinking about human and robot teams.
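The authorization and obligation policies described above can be sketched as data that is evaluated outside the agents being governed. This is a hedged illustration, not the interface of the framework Hoffman described: the `Policy` record, its field names, and the rule that the highest-priority matching policy wins are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """A hypothetical policy record; field names are illustrative only."""
    agent: str
    action: str
    kind: str       # "permit", "forbid", or "oblige"
    priority: int   # assumed: a higher number wins when policies conflict


def is_authorized(policies, agent, action, default=False):
    """Decide, without asking the agent, whether it may perform an action."""
    matching = [p for p in policies
                if p.agent == agent and p.action == action
                and p.kind in ("permit", "forbid")]
    if not matching:
        return default
    winner = max(matching, key=lambda p: p.priority)
    return winner.kind == "permit"


def obligations(policies, agent):
    """List the actions an agent is required to perform, highest priority first."""
    required = [p for p in policies if p.agent == agent and p.kind == "oblige"]
    return [p.action for p in sorted(required, key=lambda p: -p.priority)]
```

Because the policies live in a list rather than inside the agents, changing a priority or adding a rule as the situation changes alters behavior without modifying the governed component.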

Hoffman left participants with a final thought. In complex cognitive systems, cognitive workload is distributed. Separate intelligences reside in specific individuals, including robots. Coordination might be achieved by process-oriented machines or scatterbot—that is, treating several robots as a single machine with a single shared process but with separate processors. These robots with their common process could roam from agent to agent, growing, changing, adapting their policies, negotiating goals, and coordinating communication from a common place. Thus, the intelligences would navigate across the robots as they proceed.

KEY ISSUES

The final session of the workshop was devoted to a panel discussion to summarize key issues and identify future research directions. The panel members were Richard Pew (chair), Mica Endsley, Peter Hancock, Dennis McBride, and Thomas Sheridan. Following a brief statement from each panelist, there was an open discussion with workshop participants. The following section presents an overview of the primary themes.

The Current State of Scalable Interface Design

A major aim of the workshop was to examine research on scalability factors for interfaces to remote vehicles. As evident from the presentations and discussions, scalability is still a very new area that requires systematic experimental investigation; specifically, it taxes the present state of understanding about interfaces, posing challenges well beyond those needed for traditional static systems control configurations. The system-wide sharing of combat information and the importance of distal situation awareness, combined with single point control, inevitably load current hand-held devices to their operational limits and beyond. Because these interfaces rely heavily on visual representations, the problem of seeing the whole picture requires innovative display solutions. Although there was some discussion of this issue, as detailed below, it is clear that interface format for scalability remains a central barrier to successful implementation and has yet to be adequately addressed. The topics that were addressed, however, such as span of control, communication paradigms, multitasking, level of detail, and size of displays and controls, are all important elements in understanding factors to be considered in designing for scalability.

Workshop participants discussed the idea that scalability could be based on more than a single dimension. One common foundation is the class or type of vehicle. This dimension has had some success in considering scalability in unmanned aerial vehicles. For ground vehicles, however, class definitions and role characteristics are not well defined and thus not yet as effective for considering scalability. Another way to view scalability is in terms of the command structure, the level of command, and the span of control. In this regard, it is important to consider that the structure of information flow in future military operations might not resemble the current hierarchical structure. A third approach is to identify the relevant attributes for scalability based on sets of situations and then define the most appropriate types of interfaces for such situations. In this case, consideration of scalability would include the combined tasks of the operator and the unmanned vehicle and the location in which the tasks were being performed. Participants commented that each of these approaches offers useful directions for future design considerations. It should be noted that a large body of research has been conducted over the years on interface design. It would be useful to review these studies carefully for relevant approaches to the current problems of scalability.

Technical Issues

Missions and Tasks

The mission and the task provide the requirements for the human-robotic interaction. For example, in some tasks, such as moving through an urban environment, navigation of an unmanned vehicle requires a significant effort on the part of the remotely located human operator. In other tasks, such as search and rescue, navigation requires little effort and most of the operator’s time is spent on interpretation of the pictures sent back by the robot. Imagery remains important on almost every kind of mission by supporting different functions, depending on the task. It is important to note that such imagery is multimodal and can be haptic and auditory, as well as visual. Multimodal integration is a significant challenge for the future.

Another example is the distinction between confronting an active adversary, as in the military context, and searching the environment for obstacles to navigation. The requirement for dealing effectively with an adversary and protecting the robot adds a layer of complexity to communication and control.

Managing Intent

Managing intent is of central importance. Mental modelers and artificial intelligence researchers refer constantly to internal or mental models of robot behavior. It is important for the mental model of the operator to correspond to the mental model of the robot. At one extreme, if an operator has a perfect representation of the world, he or she will never require any real-time data from the real world. At the other extreme, if the operator has perfect information flow from the outside world, he or she will never need an internal mental model. Part of the challenge is to synthesize representations from the robot and the operator because, in the real world, both are imperfect, particularly when the world is changing rapidly. The real world is full of nested series of tasks, and operators do not perform well in situations in which the performance of one task is interrupted by the need to perform another task.

Command Language

Another issue concerns developing an effective command language. The command is the output side for the human and the input side for the robot. How does one design a language that is natural to the operator while precise to the robot? How does one communicate frames of reference? One approach is to give commands that are relative, that is, to relate elements to one another and to the environment. Miller’s Playbook™ is presented in relative terms, although it is more relative to the opponents than to the team members themselves. Football players are trained to operate in response to other players and not just to go to a specific location. Standardization of language and its display are important. Chaos results when each individual makes up his or her own display and command structure.
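One way to picture a relative command is as an offset stated in the frame of some reference element (a teammate, a landmark, an opponent) that the system then resolves into world coordinates for the robot. The function below is a minimal sketch under stated assumptions: a flat two-dimensional world, headings measured counterclockwise from the +x axis, and a hypothetical function name and parameter set that do not come from any system discussed at the workshop.

```python
import math


def resolve_relative(ref_x, ref_y, ref_heading_deg, forward, left):
    """Convert an offset given in a reference element's frame to world x, y.

    forward and left are distances ahead of and to the left of the reference
    element, which sits at (ref_x, ref_y) and faces ref_heading_deg
    (assumed convention: degrees counterclockwise from the +x axis).
    """
    h = math.radians(ref_heading_deg)
    # Rotate the (forward, left) offset by the heading, then translate.
    x = ref_x + forward * math.cos(h) - left * math.sin(h)
    y = ref_y + forward * math.sin(h) + left * math.cos(h)
    return x, y
```

With this kind of resolution step, an operator can say "10 meters ahead of the lead vehicle" and the command stays valid as the lead vehicle moves, whereas an absolute coordinate would have to be reissued.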

Teams Versus Individuals

Workshop presenters and participants from varied backgrounds dealt extensively with coordination. The individual operator can see only part of the overall picture, either because the data are not available or because the operator does not have time to view and interpret all the incoming data. In many of the presentations, the point was made that it takes more than one individual to control a robot. The most important consideration for coordination among team members in the field is a common mental model and language supported by an interface that clearly represents the model. Information sharing and team collaboration are key determinants of overall system performance. Supporting team coordination as well as task performance through coordination-centric design is an important interface function.

Intervention

The recovery issue of manual override surfaced frequently. Human operators must have the ability to intervene because robots will reach impasses. The open question is this: when something goes wrong, how does the human drop to a lower level of automation, or intervene completely, and still maintain graceful performance without chaos?

Common Look and Feel

There were differing opinions among workshop participants regarding the need for a common look and feel across interfaces. One approach suggested that common look and feel provides a useful means for reaching across all platforms using common interface elements that can be tailored to the operator needs and tasks. This approach can lead to flexibility in implementation and can be employed for different missions and tasks. However, it is important that the operator performing different tasks receive a clear signal denoting the difference. It is also important to carefully consider situations in which similarity and standardization reduce operator workload and those in which they add to operator confusion.

Field Experience Necessary

A final issue is the lack of field contact by both engineers and human factors researchers. It is important for researchers to stay in touch with active operational environments. Military robots work in “very messy worlds,” which differ from those found in research laboratories. The workshop participants saw this interaction as critical and observed that formal support in terms of grant resources could facilitate such endeavors, perhaps as typified by the structure found in Robin Murphy’s organization.

Ethical and Social Issues

A number of ethical and social issues related to the implementation of robotics technologies were raised throughout the workshop. First, practitioners such as Pye, who applies the technology in the field, were concerned that when a robot or ground vehicle is sent out, the operator has no control over whom it may encounter and whether the engagement is the desired one. From Pye’s experience in Afghanistan, the first to confront the unmanned ground vehicle were the village children, who were curious to see what this obvious novelty was all about.

A second issue concerns the design of future robotic missions and capabilities. At present, most robots are only extensions of perception (e.g., flying or driving a camera). However, the ultimate goal is to effect action at a distance. Peter Hancock pointed out that, as a consequence, there are troublesome moral issues beyond strategic concerns such as what happens when the enemy manages to take control of the assets. Should one pursue the effort for action at a distance?

A third issue concerns the ethical implications of allowing robots greater autonomy. Although release of ordnance is supposedly reserved for human decision makers, how soon will it be before such functions are planned for automation? Hancock observed that it is the moral responsibility of the researchers to consider the long-term effects of their present actions.

A fourth issue concerned the use of video game technology. Because soldiers are familiar with video games, it has been suggested by some in the training community that training devices and interfaces should be designed as video games (particularly with regard to control mechanisms and the physical layout of the devices). Workshop participants expressed concern about this idea because the use of such video games may lead the soldier to feel less engaged on the battlefield. Furthermore, he or she would perhaps feel inclined to pull the trigger quickly and eventually become unable to distinguish real from virtual activities (Hancock, in press). Missy Cummings, an experienced fighter pilot, opposed the proposed game path on the basis that making it fun may serve to diminish moral accountability and sensibility with respect to the task.

A fifth and final issue concerns invasion of privacy. Dennis McBride observed that currently there are many instances in which law enforcement agencies and military agencies cooperate. In the future, such cooperation could lead to situations in which the surveillance technology or robots and unmanned vehicles designed for combating the enemy might be used against American civilians without their knowledge and without the ability to protect their privacy. It is important to be cognizant of future technology developments and guard against possible abuses of these capabilities.