5

Designing Science Assessments

In this report the committee has stressed the importance of considering the assessment system as a whole. However, as was discussed in Chapter 2, the success of a system depends heavily on the nature and quality of the elements that comprise it, in this case, the items, strategies, tasks, situations, or observations that are used to gather evidence of student learning and the methods used to interpret the meaning of students’ performance on those measures.

In keeping with the committee’s conclusion that science education and assessment should be based on a foundation of how students’ understanding of science develops over time with competent instruction, we have taken a developmental approach to science assessment. This approach considers that science learning is not simply a process of acquiring more knowledge and skills, but rather a process of progressing toward greater levels of competence as new knowledge is linked to existing knowledge, and as new understandings build on and replace earlier, naïve conceptions.

This chapter begins with a brief overview of the principal influences on the committee’s thinking about assessment. It concludes with a summary of the work of two design teams that used the strategies and tools outlined in this report to develop assessment frameworks around two scientific ideas: atomic-molecular theory and the concepts underlying evolutionary biology and natural selection.

The chapter does not offer a comprehensive examination of test design, nor a how-to manual for building a test; a number of excellent books provide that kind of information (see, for example, Downing and Haladyna, in press; Irvine and Kyllonen, 2002). Rather, the purpose of this chapter is to help those concerned with the design of science assessments to conceptualize the process in ways that may be somewhat different from their current thinking. The committee emphasizes that, in reshaping their approaches to assessment design, states should at all times adhere to the Standards for Educational and Psychological Testing (American Educational Research Association, American Psychological Association, and the National Council on Measurement in Education, 1999).

DEVELOPMENTAL APPROACH TO ASSESSMENT

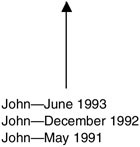

A developmental approach to assessment is the process of monitoring students’ progress through an area of learning over time so that decisions can be made about the best ways to facilitate their further learning. It involves knowing what students know now, and what they need to know in order to progress. This approach to assessment uses a learning progression (see Chapter 3) or some other continuum to provide a frame of reference for monitoring students’ progress over time.¹ Box 5-1 is an example of a science progress map, a continuum that describes in broad strokes a possible path for the development of science understanding over the course of 13 years of education. It can also be used for tracking and reporting students’ progress in ways that are similar to those used by physicians or parents for tracking changes in height and weight over time (see Box 5-2).

Box 5-3 illustrates another conception of a progress map for science learning. The chart that accompanies it describes expectations for student attainment at each level along the continuum in four domains of science subject matter: Earth and Beyond (EB); Energy and Change (EC); Life and Living (LL); and Natural and Processed Materials (NPM). The creators of this learning progression (and the committee) emphasize that any conception of a learning continuum is always hypothetical and should be continuously verified and refined by empirical research and the experiences of master teachers who observe the progress of actual students.

A developmental approach implies the use of multiple sources of information, gathered in a variety of contexts, that can help shed light on student progress over time. These approaches can take a variety of forms ranging from large-scale externally developed and administered tests to informal classroom observations and conversations, or any of the many strategies described throughout this report. Some of the measures could be standardized and thus provide comparable information about student achievement that could be used for accountability purposes; others might only be useful to a student and his or her classroom teacher. A developmental approach provides a framework for thinking about what to assess and when particular constructs might be assessed, and how evidence of understanding would differ as students gain more content knowledge, higher-order and more complex thinking skills, and greater depth of understanding about the concepts and how they can be applied in a variety of contexts.

BOX 5-1

SOURCE: LaPointe, Mead, and Phillips (1989). Reprinted by permission of the Educational Testing Service.

For example, kinetic molecular theory is a big idea that does not usually appear in state standards or assessments until high school. However, important concepts that are essential to understanding this theory should develop earlier. Champagne et al. (National Assessment Governing Board, 2004)² provide the following illustration of how early understandings underpin more sophisticated ways of understanding big ideas.

² Available at http://www.nagb.org/release/iss_paper11_22_04.doc.

Children observe water “disappearing” from a pan being heated on the stove and water droplets “appearing” on the outside of glasses of ice water. They notice the relationships between warm and cold and the behavior of water. They develop models of water, warmth, and cold that they use to make sense of their observations. They reason that the water on the outside of the glass came from inside the glass. But their reasoning is challenged by the observation that droplets don’t form on a glass of water that is room temperature. Does the water really disappear? If so, where did the water droplets come from when a cover is put on the pot, and why doesn’t the water continue disappearing when the cover is on?

These observations, models of matter, warmth and cold, are foundations of the sophisticated understandings of kinetic-molecular theory. Water is composed of molecules, they are in motion, and some have sufficient energy to escape from the surface of the water. This model of matter allows us to explain the observation that water evaporates from open containers. Understanding temperature as a measure of the average kinetic energy of the molecules provides a model for explaining why the rate at which water evaporates is temperature dependent. The higher the temperature of water the greater is the rate of evaporation.

This simple description illustrates that at different points along the learning continuum the understandings and skills that need to be addressed through instruction and assessed are fundamentally different.

INFLUENCES ON THE COMMITTEE’S THINKING

The committee drew on a variety of sources in thinking about the design of developmental science assessments, including the work of the design teams described in Chapter 2 and those described below. We also reviewed work conducted by a variety of others interested in this type of assessment (Wiggins and McTighe, 1998; CASEL, 2005; Wilson, 2005; Wilson and Sloane, 2000; Wilson and Draney, 2004), the work of the Australian Council for Educational Research (Masters and Forster, 1996), and the work that guided the creation of the strand maps included in the Atlas of Science Literacy (AAAS, 2001).³

The Assessment Triangle

Measurement specialists describe assessment as a process of reasoning from evidence—of using a representative performance to infer a wider set of skills or

|

3 |

See Figure 4-1. |

|

Elaborated Framework Science > Earth and Beyond, Energy and Change, Life and Living, Natural and Processed Materials

|

||||||||||||||||||||||||||||||

|

|||||||||||||||||||||||||

SOURCE: Western Australia Curriculum Council. Reprinted by permission. |

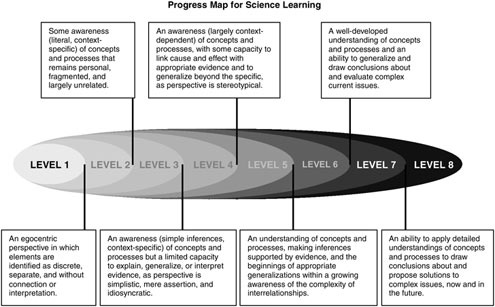

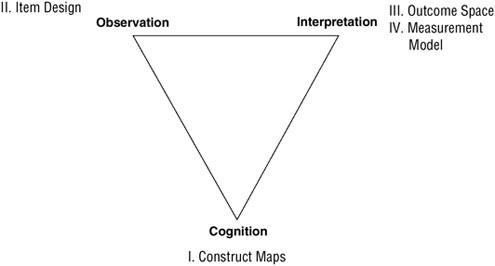

knowledge. The process of collecting evidence to support inferences about what students know is fundamental to all assessments—from classroom quizzes, standardized achievement tests, or computerized tutoring programs, to the conversations students have with their teachers as they work through an experiment (Mislevy, 1996). The NRC’s Committee on the Cognitive Foundations of Assessment portrayed this process of reasoning from evidence in the form of what it called the assessment triangle (NRC 2001b, pp. 44–51) (see Figure 5-1).

The triangle rests on cognition, a “theory or set of beliefs about how students represent knowledge and develop competence in a subject domain” (NRC, 2001b, p. 44). In other words, the design of the assessment begins with a specific understanding not only of which knowledge and skills are to be assessed but also of how understanding develops in the domain of interest. This element of the triangle links assessment to the findings about learning discussed in Chapter 2. In measurement terminology, the aspects of cognition and learning that are the targets for the assessment are referred to as the construct.

A second corner of the triangle is observation, the kinds of tasks that students would be asked to perform that could yield evidence about what they know and can do. The design and selection of the tasks need to be tightly linked to the specific inferences about student learning that the assessment is meant to support. It is important to note here that although there are a variety of questions that some kinds of assessment could answer, an explicit definition of the questions about which information is needed must play a part in the design of the tasks.

The third corner of the triangle is interpretation, the methods and tools used to reason from the observations that have been collected. The method used for a large-scale standardized test might be a statistical model, while for a classroom assessment it could be a less formal, more practical method of drawing conclusions about student understanding based on the teacher’s experience. This vertex of the triangle also may be referred to as the measurement model.

FIGURE 5-1 The assessment triangle.

The purpose of presenting these three elements in the form of a triangle is to emphasize that they are interrelated. In the context of any assessment, each must make sense in terms of the other two for the assessment to produce sound and meaningful results. For example, the questions that dictate the nature of the tasks students are asked to perform should grow logically from an understanding of the ways learning and understanding develop in the domain being assessed. Interpretation of the evidence produced should, in turn, supply insights into students’ progress that match up with those same understandings. Thus, the process of designing an assessment is one in which specific decisions should be considered in light of each of these three elements.

From Concept to Implementation

The assessment triangle is a concept that describes the nature of assessment, but it needs elaboration to be useful for constructing measures. Using the triangle as a foundation, several researchers have developed processes for assessment development that take into account the logic that underlies the assessment triangle. These approaches can be used to create any type of assessment, from a classroom assessment to a large-scale state testing program. They are included here to illustrate the importance of using a systematic approach to assessment design in which consideration is given from the outset to what is to be measured, what would constitute evidence of student competencies, and how to make sense of the results. A systematic process stands in contrast to what the committee found to be a typical strategy for assessment design. These more common approaches tend to focus on the creation of “good items” in isolation from all other important facets of design.

Evidence-Centered Assessment Design

Mislevy and colleagues (see, for example, Almond, Steinberg, and Mislevy, 2002; Mislevy, Steinberg, and Almond, 2002; and Steinberg et al., 2003) have developed and used an approach, evidence-centered assessment design (ECD), for the construction of educational assessments that is based on evidentiary argument. The general form of the argument that underlies ECD (and the assessment triangle discussed above as well as the Wilson construct mapping process discussed below) was outlined by Messick (1994, p. 17):

A construct-centered approach would begin by asking what complex of knowledge, skills, or other attributes should be assessed, presumably because they are tied to explicit or implicit objectives of instruction or are otherwise valued by society. Next what behaviors or performances should reveal those constructs, and what tasks or situations should elicit those behaviors? Thus, the nature of the construct guides the selection and construction of relevant tasks as well as the rational development of construct-based scoring criteria and rubrics.

ECD rests on the understanding that the context and purpose for an educational assessment affect the knowledge and skills to be measured, the conditions under which observations will be made, and the nature of the evidence that will be gathered to support the intended inference. Thus, there is recognition that good assessment tasks cannot be developed in isolation; rather, assessment must be designed from the start around the intended inferences, the observations and performances that are needed to support those inferences, the situations that will elicit those performances, and a chain of reasoning that will connect them.

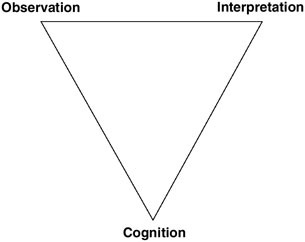

ECD employs a conceptual assessment framework (CAF) that is broken down into multiple pieces (models) and a four-process architecture for assessment delivery systems (see Box 5-4). The CAF serves as a blueprint for assessment design that specifies the knowledge and skills to be measured, the conditions under which observations will be made, and the nature of the evidence that will be gathered to support the intended inferences. Mislevy and colleagues argue that breaking the specifications into smaller pieces allows them to be reassembled in different configurations for different purposes. For example, an assessment that is intended to provide diagnostic information about individual students would need a finer-grained student model than would an assessment designed to provide information on how well groups of students are progressing in meeting state standards. ECD principles allow the same tasks to be used for these different purposes (if the task model is written generally enough) but require that the evidence model differ to provide the level of detail that is consistent with the purpose of the assessment.

BOX 5-4

SOURCE: Almond, Steinberg, and Mislevy (2002); Mislevy, Steinberg, and Almond (2002).

As discussed throughout this report, assessments are delivered in a variety of ways, and ECD provides a generic framework for test delivery that allows assessors to plan for diverse ways of delivering an assessment. The four-process architecture for assessment delivery outlines the processes that the operations of any assessment system must contain in some form or another (see Box 5-4). These processes are selecting the tasks, items, or activities that comprise the assessment (the activity selection process); selecting a means for presenting the tasks to test takers and gathering their responses (the presentation process); scoring the responses to individual items or tasks (response processing); and accumulating evidence of student performance across multiple items and tasks to produce assessment-level (or section-level) scores (the summary scoring process). ECD relies on specific measurement models that are associated with each task for accomplishing the summary scoring process.
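To make the division of labor among the four processes concrete, the following Python sketch chains them together for a toy assessment. It is an illustration only and is not drawn from the ECD literature: the names `Task`, `select_activities`, `present`, `process_responses`, `summarize`, and `run_assessment` are hypothetical, selection is simply "take the first n tasks," scoring is a comparison with a keyed response, and the summary score is a proportion correct standing in for a real measurement model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Task:
    """A hypothetical task: an identifier, a prompt, and a keyed response."""
    task_id: str
    prompt: str
    key: str


def select_activities(pool: List[Task], n: int) -> List[Task]:
    """Activity selection: choose the tasks that make up this assessment."""
    return pool[:n]


def present(tasks: List[Task], respond: Callable[[Task], str]) -> Dict[str, str]:
    """Presentation: show each task to the test taker and gather the response."""
    return {task.task_id: respond(task) for task in tasks}


def process_responses(tasks: List[Task], responses: Dict[str, str]) -> Dict[str, int]:
    """Response processing: score each response on its own (1 = matches key)."""
    return {t.task_id: int(responses.get(t.task_id) == t.key) for t in tasks}


def summarize(item_scores: Dict[str, int]) -> float:
    """Summary scoring: accumulate evidence across tasks.

    A proportion correct is used here only as a placeholder for the
    measurement model that an operational assessment would apply.
    """
    if not item_scores:
        return 0.0
    return sum(item_scores.values()) / len(item_scores)


def run_assessment(pool: List[Task], n: int, respond: Callable[[Task], str]) -> float:
    """Run the four processes in order and return the summary score."""
    tasks = select_activities(pool, n)
    responses = present(tasks, respond)
    item_scores = process_responses(tasks, responses)
    return summarize(item_scores)
```

Because each process is a separate function, one stage can be swapped (for example, a different selection rule or a different summary model) without touching the others, which is the kind of reassembly for different purposes that the framework is meant to support.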

For an example of how the ECD framework was used to create a prototype standards-based assessment, see An Introduction to the BioMass Project (Steinberg et al., 2003). The approach to standards-based assessment that is described in this paper moves from statements of standards in a content area, through statements of the claims about students’ capabilities the standards imply, to the kinds of evidence one would need to justify those claims, and finally to the development of assessment activities that elicit such evidence (p. 9).

Mislevy has also written about how the ECD approach can be used in a program evaluation context (Mislevy, Wilson, Ercikan, and Chudowsky, 2003). More recently, he and a group of colleagues have been working on a computerized test specification and development system that is based on this approach, called PADI (Principled Assessment Design for Inquiry) (Mislevy and Haertel, 2005).

Construct Modeling Approach

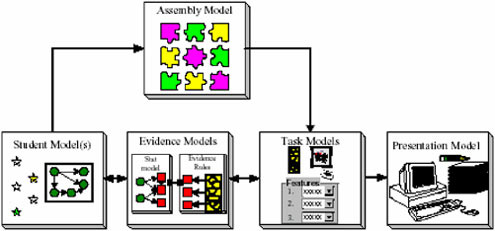

Wilson (2005) also expands on the assessment triangle by proposing another conceptualization, a construct modeling approach, that uses four building blocks to create different assessments that could be used at all levels of an education system (see Figure 5-2). Wilson conceives of the building blocks as a guide to the assessment design process, rather than as a lock-step approach. He is clear that each of the steps might need to be revisited multiple times in the development process in order to refine and revise them in response to feedback. We use these building blocks as a framework for illustrating the assessment design process. The building blocks are:

FIGURE 5-2 Assessment building blocks.

- Specification of the construct(s): the working definitions of what is to be measured.⁴

- Item design: a description of all of the possible forms of items and tasks that can be used to elicit evidence about student knowledge and understanding embodied in the constructs.

- The outcome space: a description of the qualitatively different levels of responses to items and tasks (usually in terms of scores) that are associated with different levels of performance (different levels of performance are often illustrated with examples of student work).

- The measurement model: the basis on which assessors and users associate scores earned on items and tasks with particular levels of performance; that is, the measurement model must relate the scored responses to the construct.

APPLYING THE BUILDING BLOCKS

The committee found that in designing an assessment, the various tasks described by the building blocks would be accomplished differently depending on the purpose of the assessment and who is responsible for its design. For example, in the design of classroom assessment, teachers would most likely be responsible for all aspects of assessment design, from identifying the construct to interpreting the results. However, when a large-scale test is being developed, state personnel would typically identify the constructs to be measured,⁵ and professional test contractors might take primary responsibility for item development, scoring, and applying a measurement model, sometimes in collaboration with the state. (Patz, Reckase, and Martineau, 2005, discuss the division of labor in greater detail.)

Specifying the Construct

Key to the development of any assessment, whether it is a classroom assessment embedded in instruction or a large-scale state test that is administered externally, is a clear specification of the construct that is to be measured. The construct can be broad or specific; for example, science literacy is a construct, as is knowledge of two-digit multiplication. Chapter 3 discussed, in the context of assessing inquiry, the difficulty of developing an assessment when constructs are not clearly specified and their meanings not clearly understood.

Any standards-based assessment should begin with the state content standards. However, most standards documents specify constructs using terms such as “knowing” or “understanding.” For example, state standards might specify that students will know that heat moves in a predictable flow from warmer objects to cooler objects until all objects are at the same temperature, or that students will understand interactions between living things and their environment. But, as was discussed in Chapter 4, most state standards do not provide operational definitions of these terms. Thus, a standard that calls for students to “understand” is open to wide interpretation, both about what should be taught and about what would be accepted as evidence that students have met the goal. The committee urges states to follow the suggestions in Chapter 4 for writing standards so that they convey more than abstract constructs.

The committee found that learning performances, a term adopted by a number of researchers (Reiser, 2002, and Perkins, 1998, among others), provide a way of clarifying what is meant by a standard by suggesting connections between the conceptual knowledge in the standards and related abilities and understandings that can be observed and assessed. Learning performances are a way of elaborating on content standards by specifying what students should be able to do when they achieve a standard. For example, learning performances might indicate that students should be able to describe phenomena, use models to explain patterns in data, construct scientific explanations, or test hypotheses. Smith, Wiser, Anderson, Krajcik, and Coppola (2004) outlined a variety of specific skills⁶ that could provide evidence of understanding under specific conditions and provide examples of what evidence of understanding might look like (see Box 5-5).

BOX 5-5

Some of the key practices that are enabled by scientific knowledge include the following:

SOURCE: Smith et al. (2004).

The following example illustrates how one might elaborate on a standard to create learning performances and identify targets for assessment. Consider the following standard that is adapted from Benchmarks for Science Literacy (AAAS, 1993, p. 124) about differential survival: [The student will understand that] Individual organisms with certain traits are more likely than others to survive and have offspring. The benchmark clearly refers to one of the central mechanisms of evolution, the concept often called “survival of the fittest.” Yet the standard does not indicate which skills and knowledge might be called for in working to attain it. In contrast, Reiser, Krajcik, Moje, and Marx (2003) amplify this single standard as three related learning performances:

- Students identify and represent mathematically the variation on a trait in a population.

- Students hypothesize the function a trait may serve and explain how some variations of the trait are advantageous in the environment.

- Students predict, using evidence, how the variation on the trait will affect the likelihood that individuals in the population will survive an environmental stress.

Reiser and his colleagues contend that this elaboration of the standard more clearly specifies the skills and knowledge that students need to attain the standard and therefore better defines the construct to be assessed. For example, by indicating that students are expected to represent variation mathematically, the elaboration suggests the importance of particular mathematical concepts, such as distribution. Without the elaboration, the need for this important aspect may or may not have been inferred by an assessment developer.
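As a rough illustration of what "representing variation mathematically" could involve, the Python sketch below summarizes a set of trait measurements as a distribution: a mean, a standard deviation, and a binned frequency table. The function name `summarize_variation`, the bin width, and the sample measurements are all hypothetical; they are not taken from Reiser and colleagues, and a classroom task would more likely ask students to build such a summary by hand or with a graphing tool.

```python
from collections import Counter
from statistics import mean, pstdev
from typing import Dict, List


def summarize_variation(measurements: List[float], bin_width: float) -> Dict[str, object]:
    """Describe variation on a trait as a distribution.

    Returns the mean, the population standard deviation, and a histogram
    whose keys are the lower edges of equal-width bins.
    """
    if not measurements or bin_width <= 0:
        raise ValueError("need at least one measurement and a positive bin width")
    # Floor each measurement to the lower edge of its bin, then count.
    bins = Counter((m // bin_width) * bin_width for m in measurements)
    return {
        "mean": mean(measurements),
        "sd": pstdev(measurements),
        "histogram": dict(sorted(bins.items())),
    }


# Hypothetical trait measurements (for example, a length in millimeters).
sample = [8.0, 9.0, 9.5, 10.0, 10.5, 11.0, 12.0]
summary = summarize_variation(sample, bin_width=1.0)
```

An assessment developer reading the elaborated learning performance could use a summary of this kind to decide which ideas (center, spread, shape of the distribution) a task should require students to use.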

Selecting the Tasks

Decisions about the particular assessment strategy to use should not be dictated by the desire to use one particular item type or another, or by untested assumptions about the usefulness of specific item types for tapping specific cognitive skills. Rather, such decisions should be based on the usefulness of the item or task for eliciting evidence about students’ understanding of the construct of interest and for shedding light on students’ progress along a continuum representing how learning related to the construct might reasonably be expected to develop.

Performance assessment is one approach that offers great potential for assessing complex thinking and reasoning abilities, but multiple-choice items also have their strengths. Although many people recognize that multiple-choice items are an efficient and effective means of determining how well students have acquired basic content knowledge, many do not recognize that they also can be used to measure complex cognitive processes. For example, the Force Concept Inventory (Hestenes, Wells, and Swackhamer, 1992) is an assessment that uses multiple-choice items but taps into higher-level cognitive processes. Conversely, many constructed-response items used in large-scale state assessments tap only low-level skills, for example, by asking students to demonstrate declarative knowledge and recall of facts or to supply one-word answers. Metzenberg (2004) provides examples of this phenomenon drawn from current state science tests.

An item or task is useful if it elicits important evidence of the construct it is intended to measure. Groups of items or series of tasks should be assembled with a view to their collective ability to shed light on the full range of the science content knowledge, understandings, and skills included in the construct as elaborated by the related learning performances.

Creating Items⁷ from Learning Performances

When used in a process of backward design, learning performances can guide the development of assessment strategies. Backward design begins with a clear understanding of the construct. It then focuses on what would be compelling evidence or demonstrations of learning (the committee calls these learning performances) and on what evidence of understanding would look like.

Box 5-6 illustrates the process of backward design by expanding a standard into learning performances and using the learning performances to develop assessment tasks that map back to the standard. For each learning performance, several assessment tasks are provided to illustrate how multiple measures of the same construct can provide a richer and more valid estimation of a student’s attainment of the standard. It is possible to imagine that some of these tasks could be used in classroom assessment while others that target the same standard could be included in a statewide large-scale assessment. Smith et al. (2004) have illustrated this process more thoroughly in their paper by outlining learning performances for a series of K–8 standards on atomic-molecular theory. Their task sets include multiple-choice and performance items that are suitable for a variety of assessment purposes, from large-scale annual tests to assessments that can be embedded in instruction.

For each assessment task included in Box 5-6, distractors (incorrect responses) that can shed light on students’ misconceptions are also shown because distractors can provide information on what is needed for student learning to progress. An item design strategy developed by Briggs, Alonzo, Schwab, and Wilson (2004), which they call Ordered Multiple Choice (OMC), expands on this principle.

A unique feature of OMC items is that they are designed in such a way that each of the possible answer choices is linked to developmental levels of student understanding, facilitating the diagnostic interpretation of student responses. OMC items provide information about the developmental understanding of students that may not be available from traditional multiple-choice items. In addition, they are efficient to administer and to score, thus yielding information that can be quickly and reliably provided to schools, teachers, and students. Briggs et al. (2004) see potential for this approach in creating improved large-scale assessments, but they note that considerable research and development are still needed.
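The central idea of an OMC item, that every answer choice is tied to a level of understanding, can be sketched as a small data structure. The Python example below is a hypothetical illustration rather than the format used by Briggs et al. (2004): the class `OMCItem`, the function `level_profile`, the item stems, and the level numbers assigned to each option are all invented for the sketch.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class OMCItem:
    """A hypothetical ordered multiple-choice item.

    option_levels maps each answer choice to the developmental level
    (higher = more sophisticated) that choosing it is taken to indicate.
    """
    stem: str
    option_levels: Dict[str, int]

    def level_for(self, choice: str) -> int:
        """Return the developmental level linked to the selected choice."""
        return self.option_levels[choice]


def level_profile(items: List[OMCItem], choices: List[str]) -> Counter:
    """Count how often a student's choices fall at each developmental level."""
    if len(items) != len(choices):
        raise ValueError("one choice is needed for each item")
    return Counter(item.level_for(choice) for item, choice in zip(items, choices))


# Placeholder items; the stems and level assignments are made up.
items = [
    OMCItem("Hypothetical stem 1", {"A": 1, "B": 3, "C": 2, "D": 4}),
    OMCItem("Hypothetical stem 2", {"A": 2, "B": 4, "C": 1, "D": 3}),
    OMCItem("Hypothetical stem 3", {"A": 3, "B": 1, "C": 4, "D": 2}),
]
profile = level_profile(items, ["B", "D", "A"])
```

Unlike a right/wrong key, the resulting profile reports where a student's responses cluster along the continuum, which is the diagnostic information the design is intended to yield.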

|

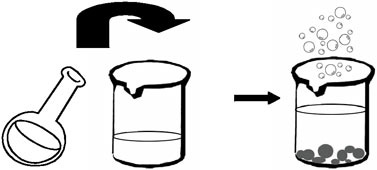

BOX 5-6 Standard: As the result of activities in grades 5–8, all students should develop understanding that substances react chemically in characteristic ways with other substances to form new substances with different characteristic properties (National Research Council, 1996, Content Standard B5-8:1B).1 Further clarification of the standard: Substances have distinct properties and are made of one material throughout. A chemical reaction is a process where new substances are made from old substances. One type of chemical reaction is when two substances are mixed together and they interact to form new substance(s). The properties of the new substance(s) are different from the old substance(s). When scientists talk about “old” substances that interact in the chemical reaction, they call them reactants. When scientists talk about new substances that are produced by the chemical reaction, they call them products. Students differentiate chemical change from other changes, such as phase change, morphological change, etc. Prior knowledge that students need:

Possible misconception that students might hold:

Possible learning performances and associated assessment tasks: The next learning performance makes use of the skill of identifying. Identifying

|

|

involves applying category knowledge to particular exemplars. In identifying, students may consider only one exemplar (Is this particular object made of wax?). Identifying also encompasses the lower range of cognitive performances we want students to accomplish. Learning performance 1: Students identify chemical reactions. Associated Assessment Task #1: Which of the following is an example of a chemical reaction?

Associated Assessment Task #2: A class conducted an experiment in which students mixed two colorless liquids. After mixing the liquids, the students noticed bubbles and a gray solid that had formed at the bottom of the container.

Notice Part B goes beyond the learning performance to include justification for one’s response. The next learning performance makes use of the practice of constructing evidence-based explanations. Constructing explanations involves using scientific theories, models, and principles along with evidence to build explanations of phenomena; it might also entail ruling out alternative hypotheses. Developing an evidence-based explanation is a higher order cognitive task. Learning performance 2: Students construct a scientific explanation that includes a claim about whether a process is a chemical reaction, evidence in the form of properties of the substances and/or signs of a reaction, and reasoning that a chemical reaction is a process in which substances interact to form new substances so that there are different substances with different properties before compared to after the reaction. |

|

Associated Assessment Task #1: Carlos takes some measurements of two liquids—butanic acid and butanol. Then he stirs the two liquids together and heats them. After stirring and heating the liquids, they form two separate layers—layer A and layer B. Carlos uses an eye-dropper to get a sample from each layer and takes some measurements of each sample. Here are his results:

Write a scientific explanation that states whether a chemical reaction occurred when Carlos stirred and heated butanic acid and butanol. SOURCE: Smith et al. (2004). |

||||||||||||||||||||||||||||||||||||||||

Box 5-7 from Briggs et al. (2004) contains a learning progression that identifies common errors. Items that might be used to tap into students’ understandings and misconceptions are included. The explanations of answer choices illustrate how assessment tasks can be made more meaningful if distractors shed light on instructional strategies that are needed to reconstruct student misconceptions.

Describing the Outcome Space

As was discussed earlier, assessment is a process of making inferences about what students know based on observations of what they do in response to defined situations. Interpreting student responses to support these inferences requires two things: a scored response and a way to interpret the score. Scoring multiple-choice items requires comparing the selected response to the scoring key to determine if the answer is correct or not. Scoring performance tasks,⁸ however, requires both judgment and defined criteria on which to base the judgment. We refer to these criteria as a rubric. A rubric includes a description of the dimensions for judging student performance and a scale of values for rating those dimensions. Rubrics are often supplemented with examples of student work at each scale value to further assist in making the judgments. The performance descriptors that are part of the states’ achievement standards could be associated with the rubrics that are developed for individual tests or tasks. A discussion of achievement standards is included in Chapter 4.

Box 5-8 is a progress guide or rubric that is used to evaluate students’ performance on an assessment of the concept of buoyancy. The guide could be useful to teachers and students because it provides information about both current performance and what would be necessary for students to progress.

Delaware has developed a system for gleaning instructionally relevant information from responses to multiple-choice items. The state uses a two-digit scoring rubric modeled after the scoring rubric used in the performance tasks of the Third International Mathematics and Science Study (TIMSS). The first digit of the score indicates whether the answer is correct, incorrect, or partially correct; the second digit of an incorrect or partially correct response score indicates the nature of the misconception that led to the wrong answer. Educators analyze these misconceptions to understand what is lacking in students’ understanding and to shed light on aspects of the curriculum that are not functioning as desired (Box 5-9).
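The two-digit scheme described above can be sketched in a few lines. The specific digit values and misconception labels below are assumptions chosen for illustration; they are not Delaware's actual codes.

```python
from collections import Counter

# Assumed first-digit meanings (illustrative, not the state's actual scheme).
FIRST_DIGIT = {"2": "correct", "1": "partially correct", "7": "incorrect"}

# Hypothetical second-digit diagnostic labels for one item.
DIAGNOSTIC = {
    "1": "confuses mass with volume",
    "2": "lists chemical rather than physical properties",
}


def decode(code):
    """Split a two-digit score into (status, misconception or None)."""
    status = FIRST_DIGIT[code[0]]
    if status == "correct":
        return status, None
    return status, DIAGNOSTIC.get(code[1], "unclassified")


def misconception_counts(codes):
    """Tally misconceptions across all incorrect or partially correct scores."""
    notes = (decode(code)[1] for code in codes)
    return Counter(note for note in notes if note is not None)


# Made-up scores for six students on one item.
codes = ["20", "71", "12", "71", "20", "79"]
counts = misconception_counts(codes)
print(counts.most_common())
```

Tallying the second digit in this way is one route by which educators might see which misunderstanding is most widespread on a given item.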

Determining the Measurement Model

Formal measurement models are statistical and psychometric tools that allow interpreters of assessment results to draw meaning from large data sets about student performance and to express the degree of uncertainty that surrounds the conclusions. Measurement models are a particular form of reasoning from evidence that includes formal rules for how to integrate a variety of data that may be relevant to a particular inference. A variety of measurement models exist, and each carries assumptions along with the inferences that can be drawn when those assumptions are met.

For most of the last century, interpreting test scores was thought of in terms of an assumption that a person’s observed score (O) on a test was made up of two components, true score (T) and error (E), i.e., O = T + E. From that formulation were derived methods of determining how much error was present, and working backward, how much confidence one could have in the observed score. Reliability is a measure of the proportion of variance of observed score that is attributable to the true score rather than to error. The main portions of the traditional psychometrics of test interpretation, test construction, etc. are built on this basis.
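As a small worked illustration of O = T + E and of reliability as the share of observed-score variance attributable to true score, consider the sketch below. The scores are made up, and the errors are deliberately constructed to be uncorrelated with the true scores, which is the standard classical test theory assumption under which the variance of O equals the variance of T plus the variance of E.

```python
from statistics import pvariance

# Made-up true scores and errors for four examinees. The errors are
# built to be uncorrelated with the true scores (an assumption of the
# classical model, imposed here by construction).
true_scores = [1.0, 1.0, 3.0, 3.0]
errors = [-1.0, 1.0, -1.0, 1.0]

# Observed score is true score plus error: O = T + E.
observed = [t + e for t, e in zip(true_scores, errors)]

# Reliability: proportion of observed-score variance due to true score.
reliability = pvariance(true_scores) / pvariance(observed)
print(observed, reliability)
```

In practice true scores are never observed directly, so reliability has to be estimated from the observed data; the sketch only shows what the quantity means.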

BOX 5-7

Sample ordered multiple-choice (OMC) items based upon Earth in the Solar System Progress Variable

Item appropriate for fifth graders:

Item appropriate for eighth graders:

A unique feature of OMC items is that each of the possible answer choices in an OMC item is linked to developmental levels of student understanding, facilitating the diagnostic interpretation of student item responses. OMC items seek to combine the validity advantages of open-ended items with the efficiency advantages of multiple-choice items. On the one hand, OMC items provide information about the developmental understanding of students that is not available with traditional multiple-choice items; on the other hand, this information can be provided to schools, teachers, and students quickly and reliably, unlike traditional open-ended test items.

SOURCE: Briggs, Alonzo, Schwab, and Wilson (2004). Developed by WestEd in conjunction with the BEAR Center at the University of California, Berkeley, with NSF support (REC-0087848). Reprinted with permission.

BOX 5-8 Buoyancy: WTSF Progress Guide

SOURCE: http://www.caesl.org/conference/Progress_Guides.pdf. Reprinted by permission of the Center for Assessment and Evaluation of Student Learning.

Another commonly used type of measurement model is item response theory (IRT), which, as originally conceived, is appropriate to use in situations where the construct is unidimensional (that is, a single underlying trait, such as understanding biology, explains performance on a test item). IRT models make a further assumption: the probability of the observed responses is determined by two types of unobservables, an examinee’s ability and parameters that characterize the items. A range of different mathematical models is used to estimate these parameters. When the assumption of unidimensionality is not met, a more complex version of the item response theory model, multidimensional item response theory, is more appropriate. This model allows for the use of items that measure more than one trait, such as understanding of both biology and chemistry.
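The item response theory assumption described above, that the probability of a response depends on an examinee's ability and on item parameters, can be illustrated with a short sketch. The text does not name a particular model; the two-parameter logistic form used here is one common choice, and the function and parameter names are illustrative assumptions.

```python
import math


def p_correct(ability, difficulty, discrimination=1.0):
    """Probability of a correct response under a two-parameter
    logistic model (one common IRT form, assumed for illustration)."""
    return 1.0 / (1.0 + math.exp(-discrimination * (ability - difficulty)))


def pattern_likelihood(ability, items, responses):
    """Likelihood of a full response pattern, treating items as
    independent once ability is fixed."""
    likelihood = 1.0
    for (difficulty, discrimination), correct in zip(items, responses):
        p = p_correct(ability, difficulty, discrimination)
        likelihood *= p if correct else (1.0 - p)
    return likelihood


# Hypothetical items as (difficulty, discrimination) pairs.
items = [(-1.0, 1.0), (0.0, 1.5), (1.0, 1.0)]
print(p_correct(0.0, 0.0))
print(pattern_likelihood(0.5, items, [True, True, False]))
```

When ability equals an item's difficulty the probability of a correct response is one half, and it rises as ability increases relative to difficulty.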

In large-scale assessment programs it is typical for state personnel to decide on the measurement model that will be used, in consultation with the test development contractor. Most often it will be either classical test theory or one of the IRT models. Other models are available (see for example, Chapter 4 of NRC [2001b] for a recent survey), although these have mainly been confined to research studies rather than large-scale applications. The decision about which measurement model to use is generally based on information provided by the state about the inferences it wants to support with test results, and on the model the contractor typically uses for accomplishing similar goals.

BOX 5-9

Question I: Your mixture is made with three chemicals that you have worked with in this unit. You may not have the same mixture as your neighbor. Using two or more senses, observe your unknown mixture. List at least three physical properties you observed. Do not taste the mixture.

This question measures students’ ability to observe and record the physical properties of a mixture.

Criterion for a complete response:

SOURCE: http://www.scienceassessment.org/pdfxls/chemicaltest/oldpdfs/A6.18.pdf.

EVALUATING THE COGNITIVE VALIDITY OF ASSESSMENT

Educators, policy makers, students, and the public want to know that the inferences that are drawn from the results of science tests are justified. To address the cognitive validity of science achievement tests, Shavelson and colleagues (Ayala, Yin, Shavelson, and Vanides 2002; Ruiz-Primo, Shavelson, Li, and Schultz 2001) have developed a strategy for analyzing science tests to ascertain what they are measuring. The same process can be used to analyze state standards and to compare what an assessment is measuring with a state’s goals for student learning.

At the heart of the process is a heuristic framework for conceptualizing the construct of science achievement as comprising four different but overlapping types of knowledge. The knowledge types are:

- Declarative knowledge is knowing what (for example, knowledge of facts, definitions, or rules).

- Procedural knowledge is knowing how (for example, knowing how to solve an equation, perform a test to identify an acid or base, design a study, or identify the steps involved in other kinds of tasks).

- Schematic knowledge is knowing why (for example, why objects sink or float, or why the seasons change) and includes principles or other mental models that can be used to analyze or explain a set of findings.

- Strategic knowledge is knowing how and when to apply one’s knowledge in a new situation or when assimilating new information (for example, developing problem-solving strategies, setting goals, and monitoring one’s own thinking in approaching a new task or situation).

Using an examinee–test interaction perspective to explain how students bring and apply their knowledge to answer test questions, the researchers developed a method for logically analyzing test items and linking them to the achievement framework of knowledge types (Li, 2001). Each item on a test goes through a series of analyses that are designed to ascertain whether the item will elicit responses that are consistent with what the assessment is intending to measure and if the responses that they elicit can be interpreted to support any intended inferences that the assessor hopes to draw from the results.

Li, Shavelson, and colleagues (Li, 2001; Shavelson and Li, 2001; Shavelson et al., 2004) applied this framework in analyzing the science portions of the Third International Mathematics and Science Study-Repeat (TIMSS-R) (Population 2) and the Delaware Student Testing Program. They found that both tests were heavily weighted on declarative knowledge (almost 60 percent). The remaining items were split between procedural and schematic knowledge. The researchers also analyzed the Delaware science content standards using the achievement framework and found that the state standards were more heavily weighted toward schematic knowledge than was the assessment, indicating that the assessment did not adequately represent the cognitive priorities contained in the state standards. These findings led to changes in the state testing program and the development of a strong curriculum-connected assessment system for improvement of student learning to supplement the state test and provide additional information on students’ science achievement (personal communication, Rachel Wood).
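The kind of comparison described above, between the knowledge-type weighting of a test and that of a set of standards, reduces to simple proportions once each item or standard has been classified. The sketch below uses made-up classifications, not the data from the studies cited, and the function name is hypothetical.

```python
from collections import Counter

KNOWLEDGE_TYPES = ("declarative", "procedural", "schematic", "strategic")


def type_shares(labels):
    """Proportion of items (or standards) assigned to each knowledge type."""
    counts = Counter(labels)
    total = len(labels)
    return {k: counts[k] / total for k in KNOWLEDGE_TYPES}


# Made-up classifications for ten test items and ten standards.
test_items = ["declarative"] * 6 + ["procedural"] * 2 + ["schematic"] * 2
standards = (["declarative"] * 3 + ["procedural"] * 2
             + ["schematic"] * 4 + ["strategic"] * 1)

test_shares = type_shares(test_items)
standard_shares = type_shares(standards)

# Positive gap: the standards emphasize this type more than the test does.
gaps = {k: round(standard_shares[k] - test_shares[k], 2) for k in KNOWLEDGE_TYPES}
print(gaps)
```

A positive gap for schematic knowledge in such a table would correspond to the pattern the researchers reported, in which standards emphasized that knowledge type more than the assessment did.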

BUILDING DEVELOPMENTAL ASSESSMENT AROUND LEARNING

The committee commissioned two design teams that included scientists, science educators, and experts with knowledge of how children learn science to suggest ways of using research on children’s learning to develop large-scale assessments at the national and state levels, and classroom assessments that were coherent with them. The teams were asked to consider the ways in which tools and strategies drawn from research on children’s learning could be used to develop new approaches to elaborating standards and to designing and interpreting assessments.

Each team was asked to lay out a learning progression for an important theory or big idea in the natural sciences. The learning progression was to be based on experimental studies, cognitive theory, and logical analysis of the concepts, principles, and theory. The teams were asked to consider ways in which the learning progression could be used to construct strategies for assessing students’ understanding of the foundations for the theory, as well as their understanding of the theory itself. The assessment strategies (if they developed them) were to be developmental, that is, to test students’ progressively more complex understanding of the various layers of the theory’s foundation in a sequence in which cognitive science suggests it reasonably can be expected to develop. The work of these two groups is summarized below. Copies of their papers can be obtained at http://www7.nationalacademies.org/bota/Test_Design_K-12_Science.html.

Implications of Research on Children’s Learning for Assessment: Matter and Atomic-Molecular Theory9

This team used research on children’s learning about the nature of matter and materials, how matter and materials change, and the atomic structure of matter10 to illustrate a process for developing assessments that reflect research on how students learn and develop understanding of these scientific concepts.

Their first step was to organize the key concepts of atomic molecular theory around six big ideas that form two major clusters: the first two form a macroscopic level cluster and the last four form an atomic-molecular level cluster (Box 5-10 provides further detail on these concepts). The atomic-molecular theory elaborates on the macroscopic big ideas studied earlier and provides deeper explanatory accounts of macroscopic properties and phenomena.

Using research on children’s learning, the team identified pathways (learning progressions) that children might follow as instruction helps them move from naïve ideas to more sophisticated understanding of atomic molecular theory. The group noted that research points to the challenges inherent in moving through the progressions, as they involve macroscopic understandings of materials and substances as well as nanoscopic understandings of atoms and molecules. Box 5-11 contains these progressions as they were conceived by this design team. The team offers the following caveats about this progression. First, learning progressions are not inevitable and there is no one correct order: as children learn, many changes are taking place simultaneously in multiple, interconnected ways, not necessarily in the constrained and ordered way that it appears in a learning progression. Second, any learning progression is inferential or hypothetical as there are no long-term studies of actual children learning a particular concept, and describing students’ reasoning is difficult because different researchers have used different methods and conceptual frameworks.

BOX 5-10

Children’s ability to appreciate the power of the atomic theory requires a number of related understandings about the nature of matter and material kinds, how matter and materials change, and the atomic structure of matter. These understandings are detailed in the standards documents. Smith et al. (2004) organize them around six big ideas that form two major clusters: the first two form a macroscopic level cluster and the last four form an atomic-molecular level cluster. The first cluster is introduced in the earliest grades and elaborated throughout schooling. The second is introduced in middle school and elaborated throughout middle school and high school. The atomic-molecular theory elaborates on the macroscopic big ideas studied earlier and provides deeper explanatory accounts of macroscopic properties and phenomena.

Six Big Ideas of Atomic Molecular Theory That Form Two Major Clusters

SOURCE: Smith et al. (2004).

BOX 5-11

Throughout elementary school, students are working to coordinate the first two levels as they develop a sound macroscopic understanding of matter and materials based on careful measurement. From middle school onward they are coordinating all four levels as they develop an understanding of the atomic-molecular theory and its broad explanatory power.

SOURCE: Smith et al. (2004).

For designing assessments to tap into students’ progress along this learning progression, the team suggested a three-stage process:

- Codify the big ideas into learning performances: types of tasks or activities suitable for classroom settings through which students can demonstrate their understanding of big ideas and scientific practices.

- Use the learning performances to develop clusters of assessment tasks or items, including both traditional and nontraditional items that are (a) connected to principles in the standards and (b) analyzable with psychometric tools.

- Use research on children’s learning as a basis for interpretation of student responses, explaining how responses reveal students’ thinking with respect to big ideas and learning progressions.
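One way to picture the chain from big ideas to learning performances to items is as a simple record structure that keeps each link explicit. The sketch below is a hypothetical illustration only: the class names, fields, and standard identifiers are assumptions and do not come from the design team's paper.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    prompt: str
    standard: str  # hypothetical identifier of the standard the item is tied to


@dataclass
class LearningPerformance:
    big_idea: str
    description: str
    items: list = field(default_factory=list)


def untraced_items(performances, standards):
    """Return prompts of items whose standard is not in the given set."""
    return [
        item.prompt
        for performance in performances
        for item in performance.items
        if item.standard not in standards
    ]


# Made-up standards and one learning performance with two items.
standards = {"S1", "S2"}
performances = [
    LearningPerformance(
        big_idea="Substances can change into new substances",
        description="Construct an explanation of whether a reaction occurred",
        items=[
            Item("Did a chemical reaction occur? Explain.", "S1"),
            Item("Name the layers in the mixture.", "S9"),
        ],
    )
]
missing = untraced_items(performances, standards)
print(missing)
```

Keeping the links in one structure makes it straightforward to check that every item can be traced back to a standard and to a big idea.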

In creating examples to illustrate their process, the team laid out its reasoning at each step in the development process—from national standards to elaborated standards to learning performances to assessment items and interpretations—and about the contributions that research on children’s learning can make at each step. In doing so they illustrate why they believe that classroom and large-scale assessments developed using these methods will have three important qualities that are missing from most current assessments:

- Clear principles for content coverage. Because the assessments are organized around big ideas embodied in key scientific practices and content, their organization and relationship to themes in the curriculum will be clear. Rather than sampling randomly or arbitrarily from a large number of individual standards, assessments developed using these methods can predictably include items that assess students’ understanding of the big ideas and scientific practices.

- Clear relationships between standards and assessment items. Because the reasoning and methods used at each stage of the development process are explicit, the interpretation of standards and the relationships between standards and assessment items are clear and thus easy to examine.

- Providing insights into students’ thinking. The assessments and their results will help teachers to understand and respond to their students’ thinking. For this purpose, the interpretation of student responses is critically important, and reliable interpretations require a research base. Thus, developing items that reveal students’ thinking is far easier for matter and atomic molecular theory than it is for other topics with less extensive research bases.

While this group demonstrates the key role that research on learning can play in the design of high-quality science assessments, they note that for assessors whose primary concern is evaluation and accountability, these qualities may not seem as important as some other qualities, such as efficiency and reliability. They conclude, however, that assessments with these qualities are essential for the long-term improvement of science assessment.

Evolutionary Biology11

While the importance of incorporating research findings about student learning into assessment development is widely recognized, research in many areas of science learning is incomplete. The design team that addressed evolutionary biology argued, however, that waiting for research to close all of the gaps would be unwarranted. To illustrate why waiting may not be necessary, the team developed an approach for producing inferences about student learning that applies a contemporary view of assessment and exploits learning theory. Their approach is to use learning theory to more clearly identify what should be assessed and what tasks or conditions could provide evidence about students’ understanding, so that inferences about students’ knowledge are well founded. The approach has three components.

First, in a standards-based education system, assessment developers rely on standards to define what students should know (the constructs), yet standards often obscure the important disciplinary concepts and practices that are inherent in them. To remedy this, the team suggests that a central conceptual structure be developed around the big ideas contained in the standards as a means to clarify what it is important to assess. Many individual standards may relate to the same big idea, so that focusing on them is a means of condensing standards. Ideally, a big idea is revisited throughout schooling, so that a student’s knowledge is progressively refined and elaborated. This practice potentially simplifies the alignment between curriculum and assessment because both are tied to the same set of constructs.

The team also advocates that big ideas be chosen with prospective pathways of development firmly in mind. They note that these are sometimes available from research in learning, but typically also draw on the opinions of master teachers as well as some inspired guesswork to bridge gaps in the research base.

Second, standards are aligned with the big ideas, so that they can be considered in the context of more central ideas. This practice is another means of pruning standards, and it is a way to develop coherence among individual standards.

Third, standards are elaborated as learning performances. As described earlier, learning performances describe specific cognitive processes and associated practices that are linked to achieving particular standards, and thus help to guide the selection of situations for gathering evidence of understanding as well as clues as to what the evidence means.

The team illustrates its approach by developing a cartography of big ideas and associated learning performances for evolutionary biology for the first eight years of schooling. The cartography traces the development of six related big ideas that support students’ understanding of evolution. The first and most important is diversity: Why is life so diverse? The other core concepts play a supporting role: (a) ecology, (b) structure-function, (c) variation, (d) change, and (e) geologic processes. In addition to these disciplinary constructs, two essential habits of mind are included: mathematical tools that support reasoning about these big ideas, and forms of reasoning that are often employed in studies of evolution, especially model-based reasoning and comparative analysis. At each of three grade bands (K–2, 3–5, 6–8), standards developed by the National Research Council (1996) and American Association for the Advancement of Science (1993) are elaborated to encompass learning performances. As schooling progresses, these learning performances reflect increasing coordination and connectivity among the big ideas. For example, diversity is at first simply treated as an extant quality of the living world but, over years of schooling, is explained by recourse to concepts developing as students learn about structure-function, variation, change, ecology, and geology.

The team chose this topic because of its critical and unifying role in the biological sciences and because learning about evolution requires synthesis and coordination among a network of related concepts and practices, ranging from genetics and ecology to geology, so that understanding evolution is likely to emerge across years of schooling. Thus, learning about evolution will be progressive and involve coordination among otherwise discrete disciplines (by contrast, one could learn about ecology or geology without considering their roles in evolution). Unlike other areas in science education, evolution has not been thoroughly researched. The domain presents significant challenges for those who wish to describe pathways of learning in this area that could guide assessment. Thus, evolution served as a test-bed for the approach.

CONCLUSIONS

Designing high-quality science assessments is an important goal, but a difficult one to achieve. As discussed in Chapter 3, science assessments must target the knowledge, skills, and habits of mind that are necessary for science literacy, and must reflect current scientific knowledge and understanding in ways that are accurate and consistent with the ways in which scientists understand the world. They must assess students’ understanding of science as a content domain and their understanding of science as an approach. They must also provide evidence that students can apply their knowledge appropriately and that they are building on their existing knowledge and skills in ways that will lead to more complete understanding of the key principles and big ideas of science. Adding to the challenge, competence in science is multifaceted and does not follow a singular path. Competency in science develops more like an ecological succession, with changes taking place simultaneously in multiple interconnected ways. Science assessment must address these complexities while also meeting professional technical standards for reliability, validity, and fairness for the purposes for which the results will be used.

The committee therefore concludes that the goal for developing high-quality science assessments will only be achieved through the combined efforts of scientists, science educators, developmental and cognitive psychologists, experts on learning, and educational measurement specialists working collaboratively rather than separately. The experience of the design teams described in this chapter and multiple findings of other NRC committees (NRC, 1996, 2001b, 2002) support this conclusion. Commercial test contractors do not generally have the advantage of these diverse perspectives as they create assessment tools for states. It is for this reason that we suggest in the next chapter that states create their own content-specific advisory boards to assist state personnel who are assigned to work with the contractors. These bodies can advise states on the appropriateness of assessment strategies and the quality and accuracy of the items and tasks included on any externally developed tests.

QUESTIONS FOR STATES

This chapter has described ways of thinking about the design of science assessments that can be applied to assessments at all levels of the system. We offer the following questions to guide states in evaluating their approaches to the development of science assessments:

Question 5-1: Have research and expert professional judgment about the ways in which students learn science been considered in the design of the state’s science assessments?

Question 5-2: Have the science assessments and tasks been created to shed light on how well and to what degree students are progressing over time toward more expert understanding?