5

Review of Instruments

To provide a basis for deliberations, the committee collected and analyzed assessments that have been used or might be used to measure an aspect of technological literacy, even if they were not designed explicitly for that purpose. In fact, only about one-third of the assessment “instruments” collected by the committee were explicitly designed to measure technological literacy. Of these, only a handful were based on a conceptual model of technological literacy like the one presented in Technically Speaking or Standards for Technological Literacy. Indeed, the universe of assessments of technological literacy is very small.

A combination of formal methods (e.g., database searches) and informal methods (e.g., inquiries to knowledgeable individuals and organizations) was used to collect assessment instruments. The committee believes most of the relevant assessment instruments were evaluated, but, because the identification process was imperfect, the portfolio of instruments should not be considered comprehensive.

Altogether, the committee identified 28 assessment instruments of several types, including formal criterion- or norm-referenced tests, performance-based activities intended to measure an aspect of design or problem-solving ability, attitude or opinion surveys, and informal quizzes. Item formats ran the gamut from multiple-choice and short-answer questions to essays and performance tasks. About half the instruments had been used more than once; a very few had been administered many times over the course of a decade or more. The others, such as assessments developed as research for Ph.D. dissertations, had been used once, if at all.

The population of interest for most of the instruments was K–12 students. Teachers were the target population for two, the Praxis Technology Education Test (ETS, 2005) and the Engineering K–12 Center Teacher Survey (ASEE, 2005). The rest were designed to test out-of-school adults. Although the focus of this project is on assessment in the United States, the committee also studied instruments developed in Canada, England, and Taiwan. The approaches to assessment in non-U.S. settings provided useful data for the committee’s analysis.

The purposes of the assessment tools varied as much as the instruments themselves. They included diagnosis and certification of students, input for curriculum development, certification of teachers, resource allocation, program evaluation, guidance for public policy, suitability for employment, and research. The developers of these assessments could be divided into four categories: state or federal agencies, private educational organizations, academic researchers, and test-development or survey companies.

Table 5-1 provides basic information about the instruments, according to target population. More detailed information on each instrument, including sample items and committee observations, is provided in Appendix E.

The committee reviewed each instrument through critiques written by committee members, telephone conferences, and face-to-face discussions. In general, the reviews focused on two aspects of the assessments: (1) the type and quality of individual test items; and (2) the format or design of the assessment. The reviews provided an overview of current approaches to assessing technological understanding and capability and stimulated a discussion about the best way to conduct assessments in this area.

Although a number of the instruments reviewed were thoughtfully designed, no single instrument struck the committee as completely adequate to the task of assessing technological literacy. This is not surprising, considering the general challenge of developing high-quality assessments; the multifaceted nature of technological literacy; the characteristics of the three target populations; the relatively small number of individuals and organizations involved in designing assessments for technological literacy; and the absence of research literature in this area. And as noted, only a few of the instruments under review were designed explicitly to assess technological literacy in the first place.

TABLE 5-1 Technological-Literacy-Related Assessment Instruments

| Name | Developer | Primary Purpose | Frequency of Administration |
|---|---|---|---|
| K–12 Students | | | |
| Assessment of Performance in Design and Technology | Schools Examinations and Assessment Council, London | Curriculum development and research. | Once in 1989. |
| Design Technology | International Baccalaureate Organization | Student achievement (part of qualification for diploma). | Regularly since 2003. |
| Design-Based Science | David Fortus, University of Michigan | Curriculum development and research. | Once in 2001–2002. |
| Design Team Assessments for Engineering Students | Washington State University | Assess students’ knowledge, performance, and evaluation of the design process; evaluate student teamwork and communication skills. | Unknown. |
| Future City Competition—Judges Manual | National Engineers Week | To help rate and rank design projects and essays submitted to the Future City Competition. | Annually since 1992. |
| ICT Literacy Assessmentᵃ | Educational Testing Service | Proficiency testing. | Launched in early 2005. |
| Illinois Standards Achievement Test—Science | Illinois State Board of Education | Measure student achievement in five areas and monitor school performance. | Annually since 2000. |
| Industrial Technology Literacy Testᵇ | Michael Allen Hayden, Iowa State University | Assess the level of industrial-technology literacy among high school students. | Once in 1989 or 1990. |
| Infinity Project Pretest and Final Test | Geoffrey Orsak, Southern Methodist University | Basic aptitude (pretest) and student performance. | Ongoing since 1999. |
| Information Technology in a Global Society | International Baccalaureate Organization | Student evaluation. | Semiannually at the standard level since 2002; higher-level exams will be available in 2006. |
| Massachusetts Comprehensive Assessment System—Science and Technology/Engineering | Massachusetts Department of Education | Monitor individual student achievement, gauge school and district performance, satisfy requirements of No Child Left Behind Act. | Annually since 1998. |
| Multiple Choice Instrument for Monitoring Views on Science-Technology-Society Topics | G.S. Aikenhead and A.G. Ryan, University of Saskatchewan | Curriculum evaluation and research. | Once in September 1987–August 1989. |
| New York State Intermediate Assessment in Technologyᵇ | State Education Department/State University of New York | Curriculum improvement and student evaluation. | Unknown. |
| Provincial Learning Assessment in Technological Literacyᵇ | Saskatchewan Education | Analyze students’ technological literacy to improve their understanding of the relationship between technology and society. | Once in 1999. |
| Pupils’ Attitudes Toward Technology (PATT-USA)ᵇ | E. Allen Bame and William E. Dugger, Jr., Virginia Polytechnic Institute and State University; Marc J. de Vries, Eindhoven University | Assess student attitudes toward and knowledge of technology. | Dozens of times in many countries since 1988. |
| Student Individualized Performance Inventory | Rodney L. Custer, Brigitte G. Valesey, and Barry N. Burke, with funding from the Council on Technology Teacher Education, International Technology Education Association, and the Technical Foundation of America | Develop a model to assess the problem-solving capabilities of students engaged in design activities. | Unknown. |
| Survey of Technological Literacy of Elementary and Junior High School Studentsᵇ | Ta Wei Le, et al., National Taiwan Normal University | Curriculum development and planning. | Once in March 1995. |
| Test of Technological Literacyᵇ | Abdul Hameed, Ohio State University | Research. | Once in April 1988. |
| TL50: Technological Literacy Instrumentᵇ | Michael J. Dyrenfurth, Purdue University | Gauge technological literacy. | Unknown. |
| WorkKeys—Applied Technologyᶜ | American College Testing Program | Measure job skills and workplace readiness. | Multiple times since 1992. |
| K–12 Teachers | | | |
| Engineering K–12 Center Teacher Survey | American Society for Engineering Education | Inform outreach efforts to K–12 teachers. | Continuously available. |
| Praxis Specialty Area Test: Technology Educationᵃ | Educational Testing Service | Teacher licensing. | Regularly. |
| Out-of-School Adults | | | |
| Armed Services Vocational Aptitude Battery | U.S. Department of Defense | Assess potential of military recruits for job specialties in the armed forces and provide a standard for enlistment. | Ongoing in its present form since 1968. |
| Awareness Survey on Genetically Modified Foods | North Carolina Citizens’ Technology Forum Project Team | Research on public involvement in decision making on science and technology issues. | Once in 2001. |
| Eurobarometer: Europeans, Science and Technology | European Union Directorate General for Press and Communication | Monitor changes in public views of science and technology to assist decision making by policy makers. | Surveys on various topics conducted regularly since 1973; this poll was conducted in May/June 2001. |
| European Commission Candidate Countries Eurobarometer: Science and Technology | Gallup Organization of Hungary | Monitor public opinion on science and technology issues of concern to policy makers. | Periodically since 1973; this survey was administered in 2002. |
| Gallup Poll on What Americans Think About Technologyᵇ | International Technology Education Association | Determine public knowledge and perceptions of technology to inform efforts to change and shape public views. | Twice, in 2001 and 2004. |
| Science and Technology: Public Attitudes and Public Understanding | National Science Board | Monitor public attitudes, knowledge, and interest in science and technology issues. | Biennially from 1979 to 2001. |

ᵃ Also administered to community and four-year college students.
ᵇ Designed explicitly to measure some aspects of technological literacy.
ᶜ Also used in community college and workplace settings.

Mapping Existing Instruments to the Dimensions of Technological Literacy

Only about one-third of the instruments collected were developed with the explicit goal of measuring technological literacy. Only two or three of these were designed with the three dimensions of technological literacy spelled out in Technically Speaking in mind. Nevertheless, the committee found the three dimensions to be a useful lens through which to analyze all of the instruments. When viewed this way, some instruments and test items appeared to be more focused on teasing out the knowledge component than on testing capability. Others were more focused on capability or critical thinking and decision making. In some cases, the instruments and items addressed aspects of two or even all three dimensions of technological literacy.

Knowledge Dimension

Every assessment instrument examined by the committee assumed some level of technological knowledge on the part of the person taking the test or participating in the poll or survey. Because the three dimensions are interwoven and overlapping (see Chapter 2), even assessments focused on capability or ways of thinking and acting tap into technological knowledge. The committee did not undertake a precise count but estimated that one-half to three-quarters of the assessment instruments were mostly or entirely designed to measure knowledge.

The knowledge dimension is evident in the handful of state-developed assessments, which are designed to measure content standards or curriculum frameworks that spell out what students should know and be able to do at various points in their school careers. Massachusetts and Illinois, for example, have developed assessments that measure technological understanding as part of testing for science achievement. The Massachusetts Comprehensive Assessment System (MCAS) science assessment instrument (MDE, 2005a,b) reflects the addition in 2001 of “engineering” to the curriculum framework for science and technology (MDE, 2001). In the 2005 science assessment, 9 of the 39 5th-grade items and 10 of the 39 8th-grade items targeted the technology/engineering strand of the curriculum. In the 5th-grade test, 6 of the 9 questions were aligned with state standards for engineering design; the others were aligned with standards for tools and materials. Questions in the 8th-grade exam were related to standards for transportation, construction, bioengineering, and manufacturing technologies; engineering design; and materials, tools, and machines.

Well-crafted multiple-choice items can elicit higher-order thinking. The 2002 8th-grade MCAS, for example, included the following item:

|

An engineer designing a suspension bridge discovers it will need to carry twice the load that was initially estimated. One change the engineer must make to her original design to maintain safety is to increase the

|

To arrive at the suggested correct answer (B), students must be able to define “load” and “tension” in an engineering context. But they must also make the connection between the diameter and strength of the load-bearing structure (the wire in this case). A student would be more likely to be able to answer this question if he or she had participated in design activities in the classroom, such as building a bridge and testing it for load strength.

Open-ended questions can also probe higher-order thinking skills. Although these kinds of questions are more time consuming to respond to and more challenging to score, they can provide opportunities for test takers to demonstrate deeper conceptual understanding. To assess students’ understanding of systems, for instance, a question on the New York State Intermediate Assessment in Technology requires that students fill in a systems-model flow chart for one of four systems (a home heating system, an automotive cooling system, a residential electrical system, or a hydroponic growing system).

Recent versions of the Illinois science assessment (ISBE, 2003) were developed with the Illinois Learning Standards in mind (ISBE, 2001a,b). The standards spell out learning goals related to technological design and relationships among science, technology, and society (STS). Of the 70 multiple-choice items on the 2003 assessment, 14 were devoted to STS topics, and 14 were devoted to “science inquiry,” which includes technological design. As in the Massachusetts assessment, design-related items required that students demonstrate an understanding of the design process, although they were not asked to take part in an actual design task as part of the test.
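The item counts reported for the 2005 Massachusetts and 2003 Illinois assessments are easier to compare as shares of each test. The short sketch below simply restates those counts as percentages; the dictionary labels and the `share` helper are illustrative names, not part of either state's documentation.

```python
# Item counts as reported in the text: (technology-related items, total items).
counts = {
    "MCAS 2005, grade 5 (technology/engineering)": (9, 39),
    "MCAS 2005, grade 8 (technology/engineering)": (10, 39),
    "Illinois 2003 (STS topics)": (14, 70),
    "Illinois 2003 (science inquiry)": (14, 70),
}


def share(part: int, total: int) -> float:
    """Return part/total expressed as a percentage."""
    return 100 * part / total


for label, (part, total) in counts.items():
    print(f"{label}: {part}/{total} = {share(part, total):.1f}%")
```

On these figures, roughly one-quarter of the MCAS science items and one-fifth of the Illinois items in each of the two categories touch on technology-related content.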

Even when learning standards are the basis for the assessment design, the connection between the standards and individual test items is not always clear. A sample question for the 4th-grade Illinois assessment, for instance, asks students to compare the relative energy consumption of four electrical appliances. The exercise is intended to test a standard that suggests students should be able to “apply the concepts, principles, and processes of technological design.” However, the question can be answered without knowing the principles of technological design.

The Illinois State Board of Education has devised a Productive Thinking Scale (PTS) by which test developers can rate prospective test items according to the degree of conceptual skill required to answer them (Box 5-1). Similar in some ways to Bloom’s taxonomy (Bloom et al., 1964), PTS is specifically intended to be used for developing multiple-choice items. The state tries to construct assessments with most questions at level 3 or level 4; level 1 items are omitted completely; level 2 questions are used only if they address central concepts; level 5 items are used sparingly; and level 6 items are not used because the answers are indeterminate. Level 4, 5, and 6 items seem likely to encourage higher-order thinking. Although PTS is used for the development of science assessments, the same approach could be adapted to other subject areas, including technology.
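The selection rules just described can be written down as a small lookup, which may help a test developer apply them consistently. This is a minimal sketch under stated assumptions: the names `PTS_POLICY` and `item_is_eligible` are hypothetical, and the sketch encodes only the level-by-level rules given in the paragraph above, not the content of the scale itself.

```python
# Hypothetical encoding of the item-selection rules described in the text.
PTS_POLICY = {
    1: "omitted",
    2: "used only for central concepts",
    3: "preferred",
    4: "preferred",
    5: "used sparingly",
    6: "not used (answers are indeterminate)",
}


def item_is_eligible(level: int, addresses_central_concept: bool = False) -> bool:
    """Return True if an item rated at this PTS level may appear on the test."""
    if level not in PTS_POLICY:
        raise ValueError(f"PTS level must be between 1 and 6, got {level}")
    if level in (1, 6):
        return False
    if level == 2:
        return addresses_central_concept
    # Levels 3 and 4 are preferred; level 5 is allowed, but only in small numbers.
    return True
```

For example, `item_is_eligible(2)` is false while `item_is_eligible(2, addresses_central_concept=True)` is true. The sketch does not enforce how "sparingly" level 5 items are used, because the text gives no numeric limit.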

|

BOX 5-1 Productive Thinking Scale

Source: ISBE, 2003. |

From 1977 through 1999, the federal National Assessment of Educational Progress (NAEP) periodically asked 13- and 17-year-olds the same set of science questions as part of an effort to gather long-term data on achievement. The committee commissioned an analysis of responses to the few questions from this instrument that measure technological understanding (Box 5-2).

The Canadian Provincial Learning Assessment in Technological Literacy, an instrument administered in 1999 in Saskatchewan, includes a number of items intended to test 5th-, 8th-, and 11th-graders’ conceptions of technology and the effect of that understanding on responsible citizenship, among other issues (Saskatchewan Education, 2001). Student achievement was measured in five increasingly sophisticated

|

BOX 5-2 Selected Data from the NAEP Long-Term Science Assessment, 1977–1999 In 1985, responses to technology-related questions in the 1976–1977 and 1981–1982 NAEP long-term science assessment were analyzed as part of a dissertation study (Hatch, 1985). The analysis included more than 50 questions common to assessments of 13- and 17-year-olds that met the author’s definition of technological literacy. In later editions of the test, which involved about 16,000 students, many of these questions were dropped. As part of its exploration of this “indirect” assessment of technological literacy, the committee asked Dr. Hatch to analyze data from all five times the test was administered, the most recent in 1999. Among the 12 questions common to both age groups, two were of particular interest to the committee:

It is impossible to state with confidence the reasons for the dramatic changes in students’ apparent understanding of the benefits and negative consequences of technology use. The differences undoubtedly have something to do with changes in government and private-sector concerns about energy use and air pollution over this span of time. This example illustrates why items that mention specific technologies must be periodically reviewed for currency. Because storm windows have largely been replaced by double- or triple-glazed windows, a student faced with this same question today might not be able to answer it, simply because she did not understand what was being asked. More important, from the committee’s perspective, this example illustrates the potential value of time-series data for tracking changes in technological literacy. SOURCE: Hatch, 2004. |

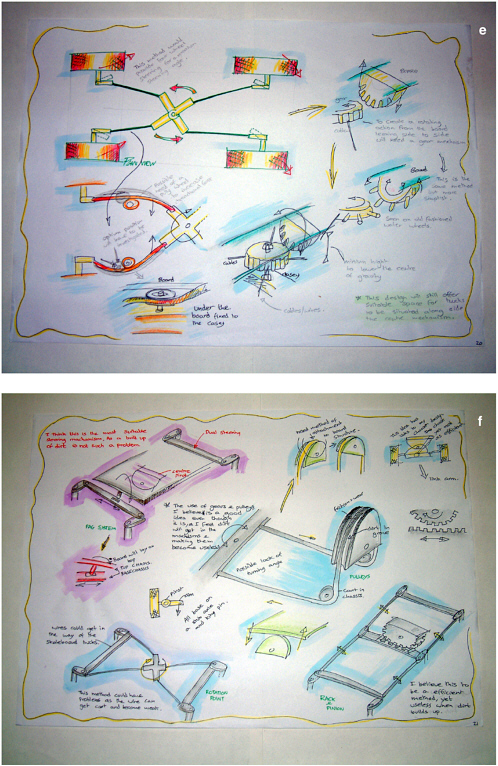

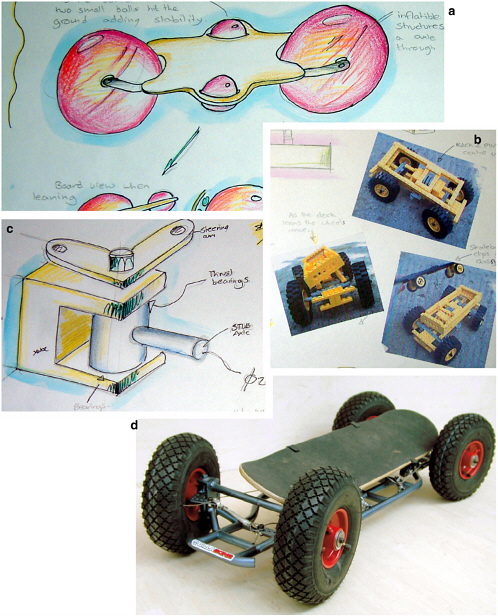

FIGURE 5-1 Level 5 exemplar of eighth-grade student responses to a question about technology, Saskatchewan 1999 Provincial Learning Assessment in Technological Literacy. Source: Saskatchewan Education, 2001.

levels according to a rubric developed by a panel of teachers, business leaders, parents, students, and others. Students who cited computers as the only example of technology, for instance, were classified at the lowest level. Students who had a more comprehensive understanding of technology as artifacts made by people to extend human capabilities scored significantly higher (Figure 5-1).

A great many of the knowledge-focused assessments reviewed by the committee relied heavily on items that required test takers to recall facts or define terms. Although knowledge of certain facts and terminology is essential to the mastery of any subject, this type of item has a major drawback because it does not tap into deeper, conceptual understanding. The following question from an assessment intended for high school students is illustrative (Hayden, 1989).

|

A compact disk can be used to store:

|

In this question, the suggested correct answer is E, all of the above. One might disagree with the wording of the answers (e.g., is “language” or the printed word stored on a CD?). But the significant issue is that the question focuses on the superficial aspects of CD technology rather than the underlying concepts, such as that information can take multiple forms and that digitization facilitates the storage, retrieval, and manipulation of data. A correct answer does little more than demonstrate a familiarity with some of the capabilities of one type of data-storage device. Although there is a place in assessments for testing factual knowledge, questions of this type could easily dominate an assessment given the number of technologies about which one might reasonably ask questions. In addition, because of the pace of technological development, narrowly targeted items may quickly become obsolete as one technology replaces another.

Nearly one-third of the 100 items in the Pupils’ Attitudes Toward Technology instrument address the knowledge dimension of technological literacy. The assessment, developed in the 1980s by a Dutch group headed by Marc de Vries, has been used in many countries, including the United States (Bame et al., 1993). The test includes statements with which students are asked to indicate agreement or disagreement. The statements deal with basic and important ideas about the nature of technology, such as the relationship between technology and science, the influence of technology on daily life, and the role of hands-on work in technological development.

High school students and out-of-school adults considering entering the military can choose to take the Armed Services Vocational Aptitude Battery (ASVAB). The ASVAB has eight sections, including items on auto and shop knowledge, mechanics, and knowledge of electronics. Sample items in ASVAB test-preparation books require mostly technical rather than conceptual understanding (e.g., Kaplan, 2003). This reflects the major purpose of the test, which is to identify individuals suited for specialty jobs in the armed forces. ASVAB is notable because it is available to adult test takers in an online, “adaptive” format. In adaptive testing, a right or wrong answer to a question determines the difficulty of the next question.
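The basic idea of adaptive testing can be illustrated with a toy rule: move up one difficulty level after a correct answer and down one level after an incorrect answer. The sketch below is only an illustration of that idea. The five-level scale, the starting level, and the function names are assumptions, and it is not a description of how the ASVAB actually selects items; operational adaptive tests generally rely on statistical models of item difficulty rather than a fixed step.

```python
from typing import Iterable, List


def next_difficulty(current: int, answered_correctly: bool,
                    lowest: int = 1, highest: int = 5) -> int:
    """Step the difficulty up after a right answer, down after a wrong one."""
    step = 1 if answered_correctly else -1
    # Clamp so the level never leaves the assumed lowest..highest range.
    return max(lowest, min(highest, current + step))


def run_session(responses: Iterable[bool], start: int = 3) -> List[int]:
    """Return the difficulty level presented at each point in a session."""
    levels = [start]
    for correct in responses:
        levels.append(next_difficulty(levels[-1], correct))
    return levels


# Two right answers, one wrong, then one right.
print(run_session([True, True, False, True]))  # [3, 4, 5, 4, 5]
```

Because each test taker follows a different path through the item pool, two people may see quite different questions, which is one reason adaptive instruments need a large bank of items calibrated for difficulty.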

A group of engineering schools, the Transferable Integrated Design Engineering Education Consortium, has developed an instrument for testing knowledge of the design process (TIDEE, 2002). This is the only assessment in the committee’s analysis explicitly intended for college students. The Design Team Knowledge Assessment, parts of which are completed by individuals and parts of which are completed by teams, consists of extended-response and essay questions.

The Praxis Specialty Area Test for Technology Education was designed to assess teachers’ knowledge (ETS, 2005). Seventy percent of the 120 multiple-choice questions on the exam address knowledge of specific categories of technology (e.g., information and communication, construction); the remaining items test familiarity with pedagogical concepts. The focus on specific technologies reflects the historical roots of technology education in industrial arts. According to Educational Testing Service, the Praxis test is being “aligned” more closely with the ITEA Standards for Technological Literacy to reflect a less vocational, more academic and engineering-oriented view of technology studies.

The following sample item from the Praxis test highlights another potential shortcoming of items that assess only factual knowledge.

|

The National Standards for Technology Education published in 2000 by the International Technology Education Association are titled:

|

In this example, the suggested correct answer is B, Standards for Technological Literacy. A stickler might point out that the full title of the ITEA document is Standards for Technological Literacy: Content for the Study of Technology, which means that none of the answers is correct. More important, however, the value of demonstrating knowledge of the title of this document is not evident. An item that tests a prospective teacher’s knowledge of the contents of the standards would have greater value.

In adult populations, assessment instruments tend to take the form of surveys or polls, and the test population is typically a small, randomly selected sample of a larger target population. Two attempts to gather information about what American adults know and think about technology were public opinion polls conducted for ITEA by the Gallup Organization in 2001 and 2004 (ITEA, 2001, 2004). Six questions from the 2001 survey were repeated in the 2004 poll, including two in the knowledge dimension. One was an open-ended question intended to elicit people’s conceptions of technology, the vast majority of which were narrowly focused on computers. The second, a multiple-choice question, was intended to assess people’s knowledge of how everyday technologies, such as portable phones, cars, and microwave ovens, function. Nearly half of the respondents thought, incorrectly, that there was a danger of electrocution if a portable phone were used in the bathtub. One knowledge-related question that appeared only in the 2001 poll was intended to assess people’s conceptions of design, but the bulk of the questions (17 in 2001 and 16 in 2004) were focused on attitudes and opinions about technology and technological literacy.

For more than 20 years, the National Science Board (NSB) sponsored the work of Jon Miller in the development of a time series of national surveys to measure the public understanding of and attitudes toward science and technology. The summary results of these surveys were published in a series of reports called Science and Engineering Indicators (NSB, 1981, 1983, 1986, 1988, 1990, 1992, 1994, 1996, 1998, 2000), and more detailed analyses were published in journals and books (Miller, 1983a,b, 1986, 1987, 1992, 1995, 1998, 2000, 2001, 2004; Miller and Kimmel, 2001; Miller and Pardo, 2000; Miller et al., 1997). Questions about the operating principle of lasers and the role of antibiotics in fighting disease illustrate the kinds of technology-oriented items included in this series.

Adults have also been surveyed about their technology savvy for more specific purposes. In 2001, for example, researchers at North Carolina State University administered a 20-question, multiple-choice test to 45 people taking part in an experimental “citizens’ consensus conference” on genetically modified foods. Questions focused on participants’ understanding of the purposes, limits, and risks of genetic engineering. Although this test was not reviewed by the committee, some of the same researchers were involved more recently in the development of a test to assess adult knowledge of and attitudes toward nanotechnology (Cobb and Macoubrie, 2004).

Capability Dimension

One of the distinguishing characteristics of technological literacy is the importance of “doing.” Described in Technically Speaking as the “capability” dimension, doing includes a range of activities and abilities, including hands-on tool skills and, most significantly, design skills. The ITEA Standards for Technological Literacy suggests that K–12 students should understand the attributes of design and be able to demonstrate design skills. Using an iterative design process (see Figure 3-1) to identify and solve problems provides insights into how technology is created that cannot be gained any other way.¹ The problem-solving nature of design, with its cognitive processes of analysis, comparison, interpretation, evaluation, and synthesis, also encourages higher-order thinking.

Assessing technology-related capability is difficult, however. For one thing, little is known about the psychomotor processes involved. In addition, the cost of developing and administering assessments that involve students in design activities tends to be prohibitive, at least for large-scale assessments, because of the time and personnel required. However, the committee believes there are some promising approaches to measuring design and problem-solving skills that avoid some of these time and resource constraints. (These approaches are discussed in detail in Chapter 7.)

Many attempts have been made to develop standardized instruments for capturing design behavior in educational settings. Custer, Valesey, and Burke (2001) created the Student Individualized Performance Inventory (SIPI), which tests four dimensions: clarification of the problem and design; development of a plan; creation of a model/prototype; and evaluation of the design solution. A student’s performance in each dimension is rated as expert, proficient, competent, beginner, or novice. SIPI has been used several times for research purposes (Rodney Custer, Illinois State University, personal communication, May 5, 2005).

Problem solving in a technological context is the focus of the American College Testing (ACT) WorkKeys applied technology assessment, which is intended to help employers compare an individual’s workplace skills with the skills required for certain technology-intensive jobs. The 32 multiple-choice test items present real-world problems ranging in difficulty from simple to highly complex. Sample items on the ACT website (http://www.act.org/workkeys/assess/tech/) require test takers to decide on the placement of a thermostat in a greenhouse; safely place a load on a trailer pulled by a pickup truck; troubleshoot a bandsaw that will not turn on; and diagnose a hydraulic car lift that is malfunctioning. Multiple-choice tests of capability must be interpreted cautiously, however. A test taker who picks the correct “solution” to a problem-solving task in the constrained environment of multiple choice may not fare nearly as well in an open-ended assessment, where potential solutions may not be spelled out, or in the real world, where the ability to work with tools and materials may come into play. Assessment of capability is one area where computer-based simulation may play a useful role (see Chapter 7).

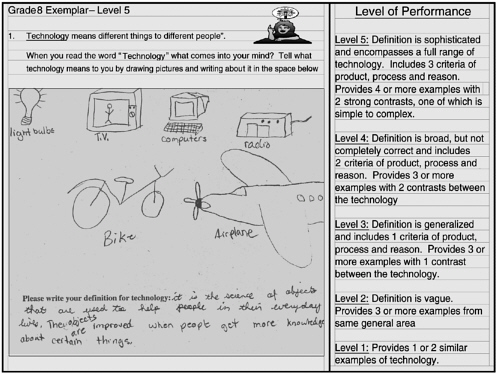

In the United Kingdom, design and technology have been a mandatory part of the pre-college curriculum since 1990. To test students’ design skills, the Assessment of Performance in Design and Technology project developed a 90-minute paper-and-pencil assessment based on carefully tested design tasks intended to measure design capabilities, communication skills, and conceptual understanding of materials, energy, and aesthetics. The assessment was administered to a sample of 10,000 15-year-olds in the United Kingdom in the late 1980s (Kimbell et al., 1991). A small subset of the 10,000 also completed a half-day collaborative modeling exercise, and a subset of these students took part in a design activity that lasted for several months. The research team also developed a rubric for calculating an overall (holistic) score and subtest scores for each student. Figure 5-2 shows an example of a highly rated design effort (holistic score of 5 on a scale of 0 to 5). The rubric has since been adapted for use in assessments of student-selected design and technology projects throughout the United Kingdom.

The British design and technology curriculum centers on doing “authentic” design tasks, activities that represent a believable and—for the student—meaningful challenge. Children in the early grades might be asked to devise a bed for a favorite stuffed animal; children in the middle grades might design a temperature-controlled hutch in which to keep the class rabbit; older students might develop an all-terrain skateboard or an automatic fish feeder for an aquarium.

From an assessment standpoint, performance on the designing and making activity, from the articulation of a design brief to the review of a working prototype, is of primary interest in the United Kingdom. This means that specific knowledge, specific capabilities, and specific ways of critical thinking and decision making are relevant only insofar as they advance a student’s design work. In the British model for assessing technological literacy, there is almost no interest in determining what students know, independent of specific design challenges. However, there is considerable interest in how students use their knowledge, whether they recognize when they are missing key information, and how skillfully they gather new data. In the end, how well or poorly a student scores in the assessment process is a function of how well his or her performance aligns with the elements of good design practice.

FIGURE 5-2 Drawings, models, and final product from a design project for an all-terrain skateboard developed by a 17-year-old student at Saltash Community School in Cornwall, England. The student lived on a farm with no hard surfaces suitable for a traditional skateboard. (a) early-stage concept sketch, (b) Lego model, (c) detail sketch, component of final steering mechanism, (d) completed skateboard, and (e and f, facing page) late-stage concept sketches, steering mechanism.

In contrast, in the United States, curriculum in technology, as in most subjects, is centered on the acquisition of specific knowledge and skills. Although design activities may be used to reinforce or aid students in the acquisition of certain concepts and capabilities, performance on a design task is not usually the primary basis for assessment. Instead, assessments are based mostly on content standards, which represent expert judgments about the most important knowledge and skills for students to master.

The committee found a great deal to commend the British approach to assessing design-related thinking. For one thing, the design-centered method much more closely mimics the process of technology development in the real world and seems likely to promote higher order thinking. Engineers and scientists in industry or in academic or government laboratories identify and then attempt to solve practical problems using some version of an iterative design methodology. This is a fairly open-ended process, at least at the beginning. For another thing, the design-centered method of assessment reinforces many key notions in technology that cannot easily be taught any other way. The ideas that design always involves some degree of uncertainty and that no human-designed product is without shortcomings are more likely to be understood at a deeper level by someone who has engaged in an authentic design challenge than by someone who has not.


The Saskatchewan Education 1999 Provincial Learning Assessment in Technological Literacy required that students demonstrate capability in two areas: (1) the use of information technology; and (2) performance on design-related tasks. Over a period of two to three hours, students used word-processing software and an Internet Web browser, adjusted controls on a clock radio to specified settings, conducted a paper-and-pencil design task (a plan for a playground [grades 5 and 8] and a plan for a small town [grade 8]), and built and tested multipart devices (levers and balances [grade 5] and Lego model cars [grades 8 and 11]). Student performance was rated according to five performance levels that focused almost exclusively on the quality of the product rather than the design process.

In 2001, the International Baccalaureate Organization (IBO) began offering a Design Technology curriculum that emphasizes the use of scientific information and “production techniques” to solve problems (IBO, 2001). End-of-course assessments consist of three paper-and-pencil exams with a mixture of multiple-choice, short-answer, and extended-response items. As part of their coursework, IB students also take part in individual and group design projects. Teachers use a detailed rubric to assess how well students meet expectations related to project planning; data collection, processing and presentation; conclusions and evaluation; manipulative skills; and personal skills.

The committee also reviewed the assessment component of the Future City Competition, an annual extracurricular, design-related challenge available to K–12 students as part of National Engineers Week (http://www.new.org). The Future City Competition encourages teams of middle-school students to design a city using Sim City™ software and, separately, to build a small, model city that satisfies the theme of the contest for that year. The computer-design entries are judged by volunteers with varied backgrounds, including engineering. The score sheet for the computer-designed city records whether the city design incorporates certain features, such as transportation and recreation. The score sheet for the built model assigns points for creativity, accuracy and scale, transportation, a moving-part component, and attractiveness.

The committee found no instruments that required teachers to demonstrate the kind of technological capability envisioned in Technically Speaking and broadened by the committee to include design-related skills. The ACT WorkKeys assessment was the only instrument that targeted technological capability in out-of-school adults, although it is also used in K–12 schools and community colleges. Undoubtedly, there are other assessments that test technical proficiency or skill in job-specific areas, such as computer-network administration or computer numerically controlled milling. But these assessments are beyond the scope of this project.

Critical-Thinking and Decision-Making Dimension

The critical thinking and decision-making dimension of technological literacy suggests a process that includes asking questions, seeking and weighing information, and making decisions based on that information. Critical thinking is a form of higher order thinking that has historical roots dating back to the Greek philosophers. The National Council for Excellence in Critical Thinking Instruction defines critical thinking this way (Paul and Nosich, 2004):

Critical thinking is the intellectually disciplined process of actively and skillfully conceptualizing, applying, analyzing, synthesizing, or evaluating information gathered from, or generated by, observation, experience, reflection, reasoning, or communication, as a guide to belief and action.

Critical thinking and decision making may be the most cognitively complex dimension of technological literacy. Not only does it require knowledge related to the nature and history of technology and the role of engineers and others in its development, it also requires that individuals be aware of what they do not know so they can ask meaningful questions and educate themselves in an appropriate manner.


The committee found very few assessments that addressed this aspect of technological literacy. One of them was the assessment for Information Technology in a Global Society, a program of study developed by IBO, which includes a two-hour-long paper-and-pencil exam with several open-ended questions. The 2002 assessment included questions related to the use of school identification cards, the development of an online business, Web publishing, Web-based advertising, and video camera surveillance in public spaces. As part of each question, students were asked to discuss and weigh the importance of the social and/or ethical concerns raised by the use of that technology. Examiners used a rubric-like marking scheme to score this part of the assessment.

The Saskatchewan Education 1999 Provincial Learning Assessment in Technological Literacy also addressed critical thinking by requiring that students make and defend decisions concerning the uses and management of technology. Eighth- and 11th-grade students, for instance, watched a videotaped drama involving a union leader, Ed, who was forced to decide between retaining jobs for his fellow employees and supporting an expensive solution to his company’s waste-disposal problem. The open-ended assessment asked students to decide which option Ed should select and to discuss how difficult societal issues like this might be solved.

Attitudes Toward Technology

As noted in Chapter 2, a person’s attitudes toward technology can provide a context for interpreting the results of an assessment. The committee found that assessments of general, or public, literacy related to technology tend to focus on people’s awareness, attitudes, beliefs, and opinions rather than on their knowledge, capabilities, or critical thinking skills.

Assessments conducted periodically over an extended period of time can track changes in public views on specific issues, such as the use of nuclear power or the development of genetically modified foods. This is one purpose of the data available in Science and Engineering Indicators published by NSB, which address attitudes toward federal funding of scientific research, as well as specific topics, such as biotechnology and genetic engineering, space exploration, and global warming. They also reveal a good deal about people’s beliefs, as distinct from their attitudes. For instance, in 2004, the Indicators focused on the belief in various pseudosciences, such as astrology (NSB, 2004). Recent versions of the NSB reports have compared the attitudes or beliefs of Americans with those of citizens of other countries.

Assessments of attitudes, beliefs, and opinions are often used by government decision makers and others to gauge the effectiveness of public communication efforts or the need for new policies. In the case of technology, measuring attitudes can provide insights into the level of comfort with technology; the role of the public in the development of a technology; and whether public concerns about technology are being heard by those in positions of power. In order to communicate risk effectively, it is necessary to understand the attitudes of those who are being warned (Morgan et al., 2002). The Eurobarometer surveys, for example, which are conducted periodically in the European Union, attempt to measure public confidence in certain technologies, such as the Internet, genetically modified foods, fuel-cell engines, and nanotechnology. Eurobarometer surveys typically involve about 1,000 people (15 and older) in each member country (currently 25 countries).


The American Society for Engineering Education (ASEE, 2005) developed the Survey of Teachers’ Attitudes About Engineering, an online assessment instrument for gauging the views of K–12 teachers about engineering, engineers, and engineering education (Box 5-3). ASEE plans to use the results of this survey, which includes 44 multiple-choice questions, to improve its outreach efforts to the K–12 education community. As of spring 2005, about 400 teachers had taken the survey. Responses are scored on a five-part Likert scale (strongly agree, agree, neutral, disagree, strongly disagree).

BOX 5-3 Survey of Teachers’ Attitudes Toward Engineering

Examples of Attitudinal Statements

Engineers spend a lot of time working alone.

Engineering has a large impact on my life.

A basic understanding of technology is important for understanding the world around us.

Engineers need to be good at thinking creatively.

Source: ASEE, 2005.
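One common way to summarize responses on a five-part Likert scale like the one described for the ASEE survey is to code each label as a number and average the codes. The sketch below assumes a 5-to-1 coding and uses the hypothetical names `LIKERT_CODES`, `code_response`, and `mean_agreement`; the source does not say how ASEE actually codes or aggregates responses.

```python
from statistics import mean

# Assumed numeric coding: the source lists the five labels but not their values.
LIKERT_CODES = {
    "strongly agree": 5,
    "agree": 4,
    "neutral": 3,
    "disagree": 2,
    "strongly disagree": 1,
}


def code_response(label: str) -> int:
    """Map one Likert label to its assumed numeric code (case-insensitive)."""
    return LIKERT_CODES[label.strip().lower()]


def mean_agreement(labels: list[str]) -> float:
    """Average the coded responses to a single attitudinal statement."""
    return mean(code_response(label) for label in labels)


# Illustrative (made-up) responses to one statement.
responses = ["agree", "strongly agree", "neutral", "disagree"]
print(mean_agreement(responses))
```

Under this coding, a mean above 3 indicates net agreement with a statement. Whether averaging ordinal responses is appropriate at all is a design choice that depends on how the results will be used.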

The Boston Museum of Science, home to the National Center for Technological Literacy (http://www.mos.org/doc/1505/), has developed several instruments intended to elicit student and teacher conceptions (and misconceptions) of engineering and technology (Christine Cunningham, Boston Museum of Science, personal communication, May 10, 2005). Based on the Draw a Scientist Test (Finson, 2002), the museum’s “What Is Technology?” test and the “What Is Engineering?” test ask students to look at 16 pictures accompanied by short descriptions and select the ones that express their ideas about technology or engineering. The museum uses similar tests with teachers who take part in its Engineering Is Elementary Program.

Not all attitudinal assessments or surveys target adults. Views on Science, Technology, and Society (VOSTS), for example, was developed by researchers at the University of Saskatchewan and administered to some 700 Canadian high school students in the early 1990s. The purpose of the assessment was to eliminate ambiguities in student interpretations of multiple-choice questions (Aikenhead and Ryan, 1992). The developers of the 114 VOSTS items relied heavily on student essays and interview responses in developing position statements about science and technology; scientists; and the nature and development of scientific and technological knowledge. The questionnaire reflects the entire range of students’ views. Because there are no obviously wrong answers, students chose the answer that most closely reflected their views (Box 5-4).

BOX 5-4 Views on Science, Technology, and Society

Sample Question

When a new technology is developed (for example, a better type of fertilizer), it may or may not be put into practice. The decision to use a new technology depends on whether the advantages to society outweigh the disadvantages to society. Your position, basically: (Please read from A to G, and then choose one.)

The attitudes portion of the Pupils’ Attitudes Toward Technology instrument consists of 58 statements and a five-part Likert scale for responses. Each item is related to a student’s interest in technology, perception of technology and gender, perception of the difficulty of technology as a school subject, perception of the place of technology in the school curriculum, and ideas about technological professions (Box 5-5).

BOX 5-5 Pupils’ Attitudes Toward Technology (PATT)

Sample Attitudinal Statements

Technology is as difficult for boys as it is for girls.

I would rather not have technology lessons at school.

I would enjoy a job in technology.

Because technology causes pollution, we should use less of it.

Filling the Assessment Matrix

The conceptual matrix proposed in Chapter 2 is intended as guidance for assessment developers. The matrix can be made less theoretical by filling the 12 cells with examples of assessment items that address the content and cognitive specifications of each cell. To this end, the committee reviewed the items in the portfolio of collected assessment instruments to identify those that might fit into the matrix (Table 5-2). For instance, the committee found questions that might be placed in the cell in the upper left-hand corner of the matrix representing the cognitive dimension of knowledge and the content domain related to technology and society.

Some of the items fit the cells better than others, and, because they come from different sources, the style of question and target populations vary. Of course, one would not use this piecemeal approach to design an actual assessment, and the quality of most of the selected items is not nearly as high as for the items one would devise from scratch. Nevertheless, this exercise demonstrates the potential usefulness of a matrix and gives the reader a sense of the scope of the cognitive and content dimensions of an assessment of technological literacy.
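The 12-cell matrix can also be pictured as a simple data structure: three cognitive dimensions crossed with four content areas, each cell holding the items assigned to it. The sketch below is illustrative only; the names `COGNITIVE`, `CONTENT`, `matrix`, `add_item`, and `empty_cells` are hypothetical and are not part of the committee's work.

```python
from itertools import product

# Row and column labels as they appear in Table 5-2.
COGNITIVE = [
    "Knowledge",
    "Capabilities",
    "Critical Thinking and Decision Making",
]
CONTENT = [
    "Technology and Society",
    "Design",
    "Products and Systems",
    "Characteristics, Core Concepts, and Connections",
]

# One list of item stems per (cognitive dimension, content area) cell.
matrix = {cell: [] for cell in product(COGNITIVE, CONTENT)}


def add_item(cognitive: str, content: str, stem: str) -> None:
    """File an assessment item under the cell it is judged to fit."""
    matrix[(cognitive, content)].append(stem)


def empty_cells() -> list:
    """Return the cells that have no items yet."""
    return [cell for cell, items in matrix.items() if not items]


# The upper left-hand cell: knowledge of technology and society.
add_item(
    "Knowledge",
    "Technology and Society",
    "In the late 1800s the railroad was built across Canada. "
    "What effects did this have on life in Saskatchewan?",
)

print(len(matrix), len(empty_cells()))
```

Listing the empty cells is one way to see at a glance where a collection of items leaves part of the matrix uncovered.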


TABLE 5-2 Assessment Matrix for Technological Literacy with Items from Selected Assessment Instruments

|

|

Technology and Society |

Design |

|

Knowledge |

In the late 1800s the railroad was built across Canada. What effects did this have on life in Saskatchewan?

|

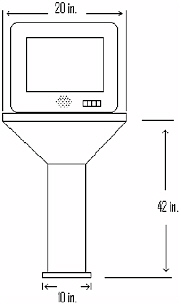

Marcus designed a television stand like the one shown below for his family.  |

|

|

What impact (or effects) do people living longer have?

|

|

|

|

His father is worried that the stand could tip over. Look at the measurements in the drawing. How can Marcus improve the design so the stand would be less likely to tip over?

|

|

|

|

Someone concerned about the environment would prefer to buy

|

|

|

|

If you were designing a product that has to be easily serviced, you would assemble it with

|

|

|

Capabilities |

Business and industry use technology in a variety of ways. Sometimes the technology that is used has negative results. The automobile industry makes |

A group of young people have investigated the needs of people with small gardens and decided to make a floor and wall plant holder. They have |

|

Products and Systems |

Characteristics, Core Concepts, and Connections |

|

Which of the following is a key factor that enables an airplane to lift?

|

Indicate whether you believe each item to be “definitely technology,” “might be technology,” “not technology,” or “don’t know”

|

|

Using a portable phone while in the bathtub creates the possibility of being electrocuted. (True or False)g |

|

|

To find the depth of the ocean, some ships send a sound wave to the ocean floor and record the time it takes to return to the detector. The kind of wave used by this detector is the same as that used by

|

|

|

Which system would locate a lost person with the appropriate signal sending devices?

|

|

|

A bicycle is considered a complex machine because it is

|

|

|

|

Technical developments and scientific principles are related because:

|

|

(This assessment item requires a clock radio)

|

|

|

Technology and Society |

Design |

|

Capabilities |

use of robotics for many reasons: to create products that are uniform, to ensure accuracy, to save time, and to perform dangerous tasks.

|

decided that the plant holder must be able to do the four things shown here. Your task today is to take this idea and develop it as far as you can in the time available. Design a floor and wall plant holder that:

|

|

|

|

An igloo is a structure that is used for survival in extremely cold environments with snowstorms. The structure is typically made of blocks of ice laid one on another in order to form the shape of a dome. Describe how you would test this structure to evaluate its ability to withstand static and dynamic forces: Describe how you would test this structure to evaluate its thermal insulation:o |

|

Critical Thinking and Decision Making |

“Technology makes the world a better place to live in!” Do you totally agree with the above statement? Discuss in full detail, using specific examples, your viewpoint on the impact and responsibilities involving technology and technological developments.q |

A new prototype for a refrigerator that contains a computer and has a computer display screen mounted in its door has been developed. The display screen uses touch-screen technology. As well as standard computer programs, the computer runs a database on which the user can maintain a record of the refrigerator contents including sell-by. Explain three reasons for conducting market research before starting the design of the new refrigerator-computer for sale in the global marketplace.t |

|

|

When a new technology is developed (for example, a better type of fertilizer), it may or may not be put into practice. The decision to use a new technology depends on whether the advantages to society outweigh the disadvantages to society. Your position basically:

|

|

|

|

Why would tightly closed windows be a good design choice in cold climates but not a good design choice in hot climates?u |

|

Products and Systems |

Characteristics, Core Concepts, and Connections |

|

|

|

Explain the benefits of using standards for mobile phones.v |

People have frequently noted that scientific research has created both beneficial and harmful consequences. Would you say that, on balance, the benefits of scientific research have outweighed the harmful results, or have the harmful results of scientific research been greater than its benefits?y |

|

On the internet, people and organizations have no rules to follow and can say just about anything they want. Do you think this is good or bad? Explain.w |

|

|

When you put food in a microwave oven, it heats up rapidly. On the other hand, when you hold an barely warms at all. Give two reasons for this.x |

We always have to make trade-offs (compromises) between the positive and negative effects of science. Your position basically: |

|

|

There are always trade-offs between benefits and negative effects:

|

|

|

Technology and Society |

Design |

|

Critical Thinking and Decision Making |

|

|

|

|

What do you think are the 3 most important pieces of technology ever made?

|

|

|

a1999 Provincial Learning Assessment in Technological Literacy, Saskatchewan Education. Student Test Booklet Day 1, Sample A, page 8, question 24, Intended level: 8th grade. b1999 Provincial Learning Assessment in Technological Literacy, Saskatchewan Education. Student Test Booklet Day 1, Sample A, page 4, question 8. Intended level: 8th grade. cIllinois Standards of Achievement Test–Science. Intended level: 4th grade students (page 37, question 59). dMCAS Spring 2003 Science and Technology/Engineering. Intended level: grade 5, page 235, question 30. eTest of Technological Literacy, Dissertation by Abdul Hameed, Ohio State University, 1988. page 173, question 9. Intended level: 7th and 8th grade. fTechnological Literacy of Elementary and Junior High School Students (Taiwan), question 55. gITEA/Gallup Poll on Americans’ Level of Literacy Related to Technology. Table 11, page 5, March 2002. Intended level: adults. hIllinois Standards of Achievement Test–Science (page 19, question 4). Intended level: 4th grade students. iInternational Baccalaurate: Information Technology in a Global Society. Standard Level, Paper 1, N02/390/S(1), page 3, question 4. Intended level: high school. j1999 Provincial Learning Assessment in Technological Literacy, Saskatchewan Education. Student test booklet day 1, Sample A, Part A, question 1. Intended level: 5th, 8th and 11th grade. kMCAS Spring 2003 Science and technology/Engineering (page 233, question 26). Intended level: grade 5. lThe Development and Validation of a Test of Industrial Technological Literacy (Hayden Dissertation), page 178, question 33. Intended level: high school. mNew York State Intermediate Assessment in Technology. Question 18. Intended level: 7th and 8th grade. nAssessment of Performance in Design and Technology, The Final Report of the APU Design and Technology Project, 1985– 1991, figure 7.5, page 104. Intended Level: students aged 15 years. 
oDesign-Based Science (Fortus Dissertation), Structures for extreme environments content test, question 19. Intended level: 9th and 10th grade. |

||

|

Products and Systems |

Characteristics, Core Concepts, and Connections |

|

|

|

|

|

There are NOT always trade-offs between benefits and negative effects:

|

|

p1999 Provincial Learning Assessment in Technological Literacy, Saskatchewan Education. Performance station test, sample B, performance station 3 clock radio, stage 4, page 9 (questions 1–8). Intended level: 8th grade. q1999 Provincial Learning Assessment in Technological Literacy, Saskatchewan Education. Thread 2, page 33. Intended level: 11th grade. rThe Development of a Multiple Choice Instrument for Monitoring Views on Science-Technology-Society Topics. Intended Level: 12th grade.(80133). s1999 Provincial Learning Assessment in Technological Literacy, Saskatchewan Education. Student Test Booklet Day 2, Sample A, page 7, question 4a, b, c, d. Intended level: 8th grade. tInternational Baccalaureate: Design Technology. Fall 2004 exam N04/DESTE/HP3/ENG/TZ0/XX, Higher Level, Paper 3, page 12, question F4. uDesign-Based Science (Fortus Dissertation). Structure for extreme environments content test, question 17. Intended level: 9th and 10th grade. vInternational Baccalaureate: Design Technology. Fall 2004 exam N04/4/DESTE/HP3/ENG/TZ0/XX, page 20, question H4, Higher Level, Paper 3. w1999 Provincial Learning Assessment in Technological Literacy, Saskatchewan Education. Student Test Booklet Day 2, Sample A, page 4, question 3e. Intended level: 8th grade. xDesign-Based Science (Fortus Dissertation). Safer cell phones content assessment, page 6, question 16. Intended level: 9th and 10th grade. yNSF Indicators, Public Understanding of Science and Technology—2002, page 7–14, Appendix Table 7-18. Intended level: adults. zThe Development of a Multiple Choice Instrument for Monitoring Views on Science-Technology-Society Topics, question 40311. Intended level: 12th grade. |

|

References

AAAS (American Association for the Advancement of Science). 1993. Benchmarks for Science Literacy. Project 2061. New York: Oxford University Press.

Aikenhead, G.S., and A.G. Ryan. 1992. The Development of a New Instrument: Monitoring Views on Science-Technology-Society (VOSTS). Available online at: http://www.usask.ca/education/people/aikenhead/vosts_2.pdf (May 20, 2005).

ASEE (American Society for Engineering Education). 2005. Teachers’ Survey Results. ASEE Engineering K–12 Center. Available online at: http://www.engineeringk12.org/educators/taking_a_closer_look/survey_results.htm (October 5, 2005).

Bame, E.A., M.J. de Vries, and W.E. Dugger, Jr. 1993. Pupils’ attitudes towards technology: PATT-USA. Journal of Technology Studies 19(1): 40–48.

Bloom, B.S., B.B. Mesia, and D.R. Krathwohl. 1964. Taxonomy of Educational Objectives. New York: David McKay.

Cobb, M.D., and J. Macoubrie. 2004. Public perceptions about nanotechnology: risks, benefits and trust. Journal of Nanoparticle Research 6(4): 395–405.

Custer, R.L., G. Valesey, and B.N. Burke. 2001. An assessment model for a design approach to technological problem solving. Journal of Technology Education 12(2): 5–20.

ETS (Educational Testing Service). 2005. Sample Test Questions. The Praxis Series, Specialty Area Tests, Technology Education (0050). Available online at: http://ftp.ets.org/pub/tandl/0050.pdf (May 27, 2005).

Finson, K.D. 2002. Drawing a scientist: what do we know and not know after 50 years of drawing. School Science and Mathematics 102(7): 335–345.

Hatch, L.O. 1985. Technological Literacy: A Secondary Analysis of the NAEP Science Data. Unpublished dissertation, University of Maryland.

Hatch, L.O. 2004. Technological Literacy: Trends in Academic Progress—A Secondary Analysis of the NAEP Long-term Science Data. Draft 6/16/04. Paper commissioned by the National Research Council Committee on Assessing Technological Literacy. Unpublished.

Hayden, M.A. 1989. The Development and Validation of a Test of Industrial Technological Literacy. Unpublished dissertation, Iowa State University.

Hill, R.B. 1997. The design of an instrument to assess problem solving activities in technology education. Journal of Technology Education 9(1): 31–46.

IBO (International Baccalaureate Organization). 2001. Design Technology. February 2001. Geneva, Switzerland: IBO.

ISBE (Illinois State Board of Education). 2001a. Science Performance Descriptors— Grades 1–5. Available online at: http://www.isbe.net/ils/science/word/descriptor_1-5.rtf (April 11, 2005).

ISBE. 2001b. Science Performance Descriptors—Grades 6–12. Available online at: http://www.isbe.net/ils/science/word/descriptor_6-12.rtf (April 11, 2005).

ISBE. 2003. Illinois Standards Achievement Test—Sample Test Items: Illinois Learning Standards for Science. Available online at: http://www.isbe.net/assessment/PDF/2003ScienceSample.pdf (August 31, 2005).

ITEA (International Technology Education Association). 2001. ITEA/Gallup Poll Reveals What Americans Think About Technology. A Report of the Survey Conducted by the Gallup Organization for the International Technology Education Association. Available online at: http://www.iteaconnect.org/TAA/PDFs/Gallupreport.pdf (October 5, 2005).

ITEA. 2004. The Second Installment of the ITEA/Gallup Poll and What It Reveals as to How Americans Think about Technology. A Report of the Second Survey Conducted by the Gallup Organization for the International Technology Education Association. Available online at: http://www.iteaconnect.org/TAA/PDFs/GallupPoll2004.pdf (October 5, 2005).

Kaplan. 2003. ASVAB—The Armed Services Vocational Aptitude Battery. 2004 Edition. New York: Simon and Schuster.

Kimbell, R., K. Stables, T. Wheeler, A. Wosniak, and V. Kelly. 1991. The Assessment of Performance in Design and Technology. The Final Report of the APU Design and Technology Project 1985–1991. London: School Examinations and Assessment Council.

MDE (Massachusetts Department of Education). 2001. Science and Technology/Engineering Curriculum Framework. Available online at: http://www.doe.mass.edu/frameworks/scitech/2001/0501.doc (April 11, 2005).

MDE. 2005a. Science and Technology/Engineering, Grade 5. Released test items. Available online at: http://www.doe.mass.edu/mcas/2005/release/g5sci.pdf (August 31, 2005).

MDE. 2005b. Science and Technology/Engineering, Grade 8. Released test items. Available online at: http://www.doe.mass.edu/mcas/2005/release/g8sci.pdf (August 31, 2005).

Miller, J.D. 1983a. The American People and Science Policy: The Role of Public Attitudes in the Policy Process. New York: Pergamon Press.

Miller, J.D. 1983b. Scientific literacy: a conceptual and empirical review. Daedalus 112(2): 29–48.

Miller, J.D. 1986. Reaching the Attentive and Interested Publics for Science. Pp. 55–69 in Scientists and Journalists: Reporting Science as News, edited by S. Friedman, S. Dunwoody, and C. Rogers. New York: Free Press.

Miller, J.D. 1987. Scientific Literacy in the United States. Pp. 19–40 in Communicating Science to the Public, edited by D. Evered and M. O’Connor. London: John Wiley and Sons.

Miller, J.D. 1992. From Town Meeting to Nuclear Power: The Changing Nature of Citizenship and Democracy in the United States. Pp. 327–328 in The United States Constitution: Roots, Rights, and Responsibilities, edited by A.E.D. Howard. Washington, D.C.: Smithsonian Institution Press.

Miller, J.D. 1995. Scientific Literacy for Effective Citizenship. Pp. 185–204 in Science/Technology/Society as Reform in Science Education, edited by R.E. Yager. New York: State University of New York Press.

Miller, J.D. 1998. The measurement of civic scientific literacy. Public Understanding of Science 7: 1–21.

Miller, J.D. 2000. The Development of Civic Scientific Literacy in the United States. Pp. 21–47 in Science, Technology, and Society: A Sourcebook on Research and Practice, edited by D.D. Kumar and D. Chubin. New York: Plenum Press.

Miller, J.D. 2001. The Acquisition and Retention of Scientific Information by American Adults. Pp. 93–114 in Free-Choice Science Education, edited by J.H. Falk. New York: Teachers College Press.

Miller, J.D. 2004. Public understanding of, and attitudes toward scientific research: what we know and what we need to know. Public Understanding of Science 13: 273–294.

Miller, J.D., and L. Kimmel. 2001. Biomedical Communications: Purposes, Audiences, and Strategies. New York: Academic Press.

Miller, J.D., and R. Pardo. 2000. Civic Scientific Literacy and Attitude to Science and Technology: A Comparative Analysis of the European Union, the United States, Japan, and Canada. Pp. 81–129 in Between Understanding and Trust: The Public, Science, and Technology, edited by M. Dierkes and C. von Grote. Amsterdam: Harwood Academic Publishers.

Miller, J.D., R. Pardo, and F. Niwa. 1997. Public Perceptions of Science and Technology: A Comparative Study of the European Union, the United States, Japan, and Canada. Madrid: BBV Foundation.

Morgan, G., B. Fischhoff, A. Bostrom, and C.J. Atman. 2002. Risk Communication: A Mental Models Approach. New York: Cambridge University Press.

NRC (National Research Council). 1999. How People Learn: Brain, Mind, Experience, and School. Edited by J.D. Bransford, A.L. Brown, and R.R. Cocking. Washington, D.C.: National Academy Press.

NSB (National Science Board). 1981. Science Indicators, 1980. Washington, D.C.: Government Printing Office.

NSB. 1983. Science Indicators, 1982. Washington, D.C.: Government Printing Office.

NSB. 1986. Science Indicators, 1985. Washington, D.C.: Government Printing Office.

NSB. 1988. Science and Engineering Indicators, 1987. Washington, D.C.: Government Printing Office.

NSB. 1990. Science and Engineering Indicators, 1989. Washington, D.C.: Government Printing Office.

NSB. 1992. Science and Engineering Indicators, 1991. Washington, D.C.: Government Printing Office.

NSB. 1994. Science and Engineering Indicators, 1993. Washington, D.C.: Government Printing Office.

NSB. 1996. Science and Engineering Indicators, 1996. Washington, D.C.: Government Printing Office.

NSB. 1998. Science and Engineering Indicators, 1998. Washington, D.C.: Government Printing Office.

NSB. 2000. Science and Engineering Indicators, 2000. Washington, D.C.: Government Printing Office.

NSB. 2004. Science and Engineering Indicators, 2004. Washington, D.C.: Government Printing Office.

Paul, R., and G.M. Nosich. 2004. A Model for the National Assessment of Higher-Order Thinking. Available online at: http://www.criticalthinking.org/resources/articles/a-model-nal-assessment-hot.shtml (January 19, 2006).

Saskatchewan Education. 2001. 1999 Provincial Learning Assessment in Technological Literacy. May 2001. Available online at: http://www.learning.gov.sk.ca/branches/cap_building_acct/afl/docs/plap/techlit/1999techlit.pdf.

TIDEE (Transferable Integrated Design Engineering Education). 2002. Assessments. Available online at: http://www.tidee.cea.wsu.edu/resources/assessments.html (August 31, 2005).