SUMMARY OF THE WORKSHOP

INTRODUCTION

A workshop on the validation of toxicogenomic technologies was held on July 7, 2005, in Washington, DC, by the National Research Council (NRC). The workshop concept was developed during deliberations of the Committee on Emerging Issues and Data on Environmental Contaminants (see Box 1 for a description of the committee and its purpose) and was planned by an ad hoc workshop planning committee (committee membership and biosketches are included in Appendix A). These activities are sponsored by the National Institute of Environmental Health Sciences (NIEHS). The day-long workshop featured invited speakers from industry, academia, and government who discussed the validation practices used in gene-expression (microarray) assays1,2 and other toxicogenomic technologies. The workshop also included roundtable discussions on the current status of these validation efforts and how they might be strengthened.

BOX 1 Overview of the Committee on Emerging Issues and Data on Environmental Contaminants

The Committee on Emerging Issues and Data on Environmental Contaminants was convened by the National Research Council (NRC) at the request of NIEHS. The committee serves to provide a public forum for communication between government, industry, environmental groups, and the academic community about emerging issues in the environmental health sciences. At present, the committee is focused on toxicogenomics and its applications in environmental and pharmaceutical safety assessment, risk communication, and public policy. A primary function of this committee is to sponsor workshops on issues of interest in the evolving field of toxicogenomics. These workshops are developed by ad hoc NRC committees largely composed of members from the standing committee. In addition, the standing committee benefits from input from the Federal Liaison Group. The group, chaired at the time of the meeting by Samuel Wilson, of NIEHS, consists of representatives from various federal agencies with interest in toxicogenomic technologies and applications. Members of the Federal Liaison Group are listed in Appendix C of this report.

The workshop agenda (see Appendix B) had two related sections. Part 1 of the workshop, on current validation strategies and associated issues, provided background presentations on several components essential to the technical validation of toxicogenomic experiments, including experimental design, reproducibility, and statistical analysis. In addition, this session featured a presentation on regulatory considerations in the validation of toxicogenomic technologies. The presentations in Part 2 of the workshop emphasized the validation approaches used in published studies in which microarray technologies were used to evaluate a chemical’s mode of action.3

This summary is intended to provide an overview of the presentations and discussions that took place during the workshop. It describes only those subjects discussed at the workshop and is not intended to be a comprehensive review of the field. To provide greater depth and insight into the presentations from Part 1 of the workshop, original extended abstracts by the presenters are included as Attachments 1 through 4. In addition, the presenters’ slides and the audio from the meeting are available on the Emerging Issues Committee’s Web site.4

WORKSHOP SUMMARY

Introduction

Kenneth S. Ramos, of the University of Louisville and co-chair of the workshop planning committee, opened the workshop with welcoming remarks, background on the standing and workshop planning committees, and speaker introductions. Ramos also provided a brief historical perspective on the technological advances and applications of toxicogenomics. Beginning in the early 1980s, new technologies, such as those based on polymerase chain reaction (PCR),5 began to permit evaluation of the expression of individual genes. Recent technological advances (for instance, the development of microarray technologies) have expanded those evaluations to permit the simultaneous detection of the expression of tens of thousands of genes and to support holistic evaluations of the entire genome. These technologies have enabled researchers to unravel complexities of cell biology and, in conjunction with toxicologic evaluations, to probe and gain insight into questions of toxicologic relevance. As a result, the technologies have become increasingly important for scientists in academia, as well as for regulatory and drug development processes.

John Quackenbush, of the Dana-Farber Cancer Institute and co-chair of the workshop, followed up with a discussion of the workshop concept and goals. The workshop concept was generated in response to the standing committee’s and other groups’ recognition that the promises of toxicogenomic technologies can only be realized if these technologies are validated. The application of toxicogenomic technologies, such as DNA microarrays, to the study of drug and chemical toxicity has improved the ability to understand the biologic spectrum and totality of the toxic response and to elucidate potential modes of toxic action. Although early studies energized the field, some scientists continue to question whether results can be generalized beyond the initial test data sets and what steps are necessary to validate the applications. In recognition of the importance of these issues, the NRC committee dedicated this workshop to reflecting critically on the technologies to more fully understand the issues relevant to the establishment of validated toxicogenomic applications. Because transcript profiling using DNA microarrays to detect changes in patterns of gene expression is in many ways the most advanced and widely used of all toxicogenomic approaches, the workshop focused primarily on validation of mRNA transcript profiling using DNA microarrays. Some of the issues raised may also be relevant to proteomic and metabolic studies.

The meaning of validation depends on context. Quackenbush delineated three components of validation: technical validation, biologic validation, and regulatory validation (see Box 2).6 Because of the broad nature of the topic, the workshop was designed to address primarily the technical aspects of validation. For example, do the technologies actually provide reproducible and reliable results? Are conclusions dependent on the particular technology, platform, or method being used?

Part 1: Current Validation Strategies and Associated Issues

The first session of the workshop was designed to provide background information on the various experimental, statistical, and bioinformatics issues that accompany the technical validation of microarray analyses. Presenters were asked to address a component of technical validation from their perspective and experience; the presentations were not intended to serve as comprehensive reviews. Short summaries of the topics in each presentation and of the discussions between presenters and other workshop participants are presented below. This information is intended to be accessible to a general scientific audience. The reader is referred to the presenters’ attachments to this report for greater technical detail and a comprehensive discussion of each presentation.

BOX 2 Validation: Technical Issues Are the First Consideration in a Much Broader Discussion

In general, the concept of validation is considered at three levels: technical, biologic, and regulatory. Technical validation focuses on whether the technology being used provides reproducible and reliable results. The types of questions addressed are, for example, whether the technologies provide consistent and reproducible answers and whether the answers are dependent on the choice of one particular technology versus another. Biologic validation evaluates whether the underlying biology is reflected in the answers obtained from the technologies. For example, does a microarray response indicate the assayed biologic response (such as toxicity or carcinogenicity)? Regulatory validation begins when technical and biologic validation are established and when the technologies are to be used as a regulatory tool. In this regard, do the new technologies generate information useful for addressing regulatory questions? For example, do the results demonstrate environmental or human health safety?

Experimental Design of Microarray Studies

Kevin Dobbin, of the National Cancer Institute, provided an overview of experimental design issues encountered in conducting microarray assays. Dobbin began by discussing experimental objectives and explaining that there is no one best design for every case because the design must reflect the objective a researcher is trying to achieve and the practical constraints of the experiments being done. Although the high-level goal of many microarray experiments is to identify important pathways or genes associated with a particular disease or treatment, there are different ways to approach this problem. Thus, it is important to clearly define the experimental objectives and to design a study that is driven by those objectives. Experimental approaches in toxicogenomics can typically be grouped into three categories based on objective: class comparison, class prediction, or class discovery (see Box 3 and the description in Attachment 1).

BOX 3 Typical Experimental Objectives in mRNA Microarray Analyses

Class Comparison
Goal: Identify genes differentially expressed among predefined classes of samples.
Example: Measure gene products before and after toxicant exposure to identify mechanisms of action (Hossain et al. 2000).
Example: Compare liver biopsies from individuals with chronic arsenic exposure to those of healthy individuals (Lu et al. 2001).

Class Prediction
Goal: Develop a multigene predictor of class membership.
Example: Identify gene sets predictive of toxic outcome (Thomas et al. 2001).

Class Discovery
Goal: Identify sets of genes (or samples) that share similar patterns of expression and that can be grouped together. Class discovery can also refer to the identification of new classes or subtypes of disease rather than the identification of clusters of genes with similar patterns.
Example: Cluster temporal gene-expression patterns to gain insight into genetic regulation in response to toxic insult (Huang et al. 2001).

Dobbin’s presentation outlined several experimental design issues faced by researchers conducting microarray analyses. He discussed the level of biologic and technical replication7 necessary for making statistically supported comparisons between groups. He also discussed issues related to the study design that arise when using dual-label microarrays,8

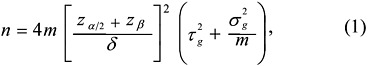

including strategies for the selection of samples to be compared on each microarray, the use of control samples, and issues related to dye bias.9 The costs and benefits of pooling RNA samples for analysis on microarrays were discussed in relation to the study’s design and goals. As an example to help guide investigators, Dobbin presented a sample-size formula to determine the number of arrays needed for a class comparison experiment (see Equation 1). This formula calculates the statistical power of a study based on the variability estimates of the data, the number of arrays, the level of technical replication, the target fold-change in expression that would be considered acceptable, and the desired level of statistical significance to be achieved (see Attachment 1 for further details).

The ensuing workshop discussion on Dobbin’s presentation focused on the interplay between using technical replicates and using biologic replicates. Dobbin emphasized the importance of biologic replication compared with technical replication for making statistically powerful comparisons between groups, because it captures not only the variability in the technology but also samples the variation of gene expression within a population.

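EQUATION 1 Sample-size formula for a class comparison experiment

$$n = \frac{4\,(z_{\alpha/2} + z_{\beta})^{2}\,(m\,\tau_g^{2} + \sigma_g^{2})}{\delta^{2}}$$

where

n = number of arrays needed

m = technical replicates per sample

δ = effect size on base 2 log scale (e.g., 1 = 2-fold)

α = significance level (e.g., .001)

1 − β = power

z = normal percentiles (t percentiles preferable)

$\tau_g^2$ = biological variation within class

$\sigma_g^2$ = technical variation.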
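The sample-size calculation Dobbin described can be sketched in a few lines of Python. This is a minimal sketch, assuming the standard two-class form with equal numbers of arrays per class, n = 4(z_{α/2} + z_β)²(mτ² + σ²)/δ², and normal rather than t percentiles; the function name `arrays_needed` and the variance values in the example are hypothetical, chosen only for illustration.

```python
import math
from statistics import NormalDist


def arrays_needed(delta, alpha, power, tau2, sigma2, m=1):
    """Total number of arrays n for a two-class comparison (assumed formula).

    delta  -- effect size on the log2 scale (1.0 means a 2-fold change)
    alpha  -- two-sided significance level per gene (e.g., 0.001)
    power  -- desired power, 1 - beta
    tau2   -- biological variance within class (log2 scale)
    sigma2 -- technical variance (log2 scale)
    m      -- technical replicates per biological sample
    """
    std_normal = NormalDist()  # normal percentiles; t percentiles would be more conservative
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_beta = std_normal.inv_cdf(power)
    n = 4 * (z_alpha + z_beta) ** 2 * (m * tau2 + sigma2) / delta ** 2
    return math.ceil(n)  # round up to a whole number of arrays


# Hypothetical variance estimates, for illustration only
print(arrays_needed(delta=1.0, alpha=0.001, power=0.95, tau2=0.25, sigma2=0.05, m=1))  # → 30
print(arrays_needed(delta=1.0, alpha=0.001, power=0.95, tau2=0.25, sigma2=0.05, m=2))  # → 54
```

Under these assumed variances, putting every sample on two arrays raises the total from 30 to 54 arrays while reducing the number of biological samples only from 30 to 27, which is in line with Dobbin’s emphasis on biologic rather than technical replication.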

Multiple-Laboratory Comparison of Microarray Platforms

Rafael Irizarry, of Johns Hopkins University, described published studies that examined issues related to reproducibility of microarray analyses and focused on between-laboratory and between-platform comparisons. The presentation examined factors driving the variability of measurements made using different microarray platforms (or other mRNA measurement technologies), including the “lab effect,”10 practitioner experience, and use of different statistical-assessment and data-processing techniques to determine gene-expression levels. Irizarry’s presentation focused on understanding the magnitude of the lab effect, and he described a study in which a number of laboratories analyzed the same RNA samples to assess the variability in results (Irizarry et al. 2005). Overall, the results suggest that labs using Affymetrix microarray systems have better accuracy than labs using two-color platforms, although the most accurate signal measure was attained by a lab using a two-color platform. In this analysis, a small group of genes had relatively large fold differences between platforms. These differences may relate to the lack of accurate transcript information on these genes. As a result, the probes used in different platforms may not be measuring the same transcript. Moreover, disparate results may be due to probes on different platforms querying different regions of the same gene that are subject to alternative splicing or that exhibit divergent transcript stabilities.

Beyond describing the results of the analysis, Irizarry provided suggestions for conducting experiments and analyses to compare various microarray platforms. The suggestions included use of relative, as opposed to absolute, measures of expression; statistical determinations of precision and accuracy; and specific plots to determine whether genes are differentially expressed between samples. These techniques are described in Attachment 2. Irizarry also commented that reverse transcription PCR (RT-PCR) should not be considered the gold standard for measuring gene expression and that the variability in RT-PCR data is very similar to that in microarray data if enough data points are analyzed. In this regard, the large quantity of data produced by microarrays is useful in describing the variability in the technology’s response. However, this attribute is sometimes portrayed as a negative because the data can appear variable. Conversely, RT-PCR produces comparatively few measurements, so its variability cannot readily be assessed.
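Why relative measures travel better between platforms can be illustrated with a toy model. The sketch below assumes, purely for illustration, that the observed signal equals a probe-specific affinity multiplied by the true transcript abundance, with no noise or background; all names and numbers are hypothetical. Under that assumption the two platforms disagree on absolute intensities, but the log2 ratio between treated and control samples is the same on both, because the affinity cancels.

```python
import math

# Hypothetical true transcript abundances in two samples (arbitrary units)
ABUNDANCE = {
    "control": {"geneA": 100.0, "geneB": 40.0, "geneC": 10.0},
    "treated": {"geneA": 400.0, "geneB": 40.0, "geneC": 5.0},
}

# Hypothetical probe affinities: each platform's probe for a gene hybridizes with a different efficiency
AFFINITY = {
    "platform1": {"geneA": 1.0, "geneB": 0.25, "geneC": 2.0},
    "platform2": {"geneA": 0.5, "geneB": 1.5, "geneC": 0.75},
}


def signal(platform, sample, gene):
    """Simplified noise-free model (an assumption): signal = probe affinity x transcript abundance."""
    return AFFINITY[platform][gene] * ABUNDANCE[sample][gene]


def log2_ratio(platform, gene):
    """Relative measure, log2(treated / control); the probe affinity cancels out."""
    return math.log2(signal(platform, "treated", gene) / signal(platform, "control", gene))


for gene in ABUNDANCE["control"]:
    print(gene,
          "absolute:", signal("platform1", "control", gene), signal("platform2", "control", gene),
          "log2 ratio:", log2_ratio("platform1", gene), log2_ratio("platform2", gene))
```

Real platforms add noise, background, and nonlinearity, so agreement is never this clean; the sketch only isolates the probe-affinity effect that relative measures remove.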

Irizarry also commented that a relatively low correspondence between lists of genes generated by different platforms is to be expected when comparing just a few genes selected from the thousands analyzed. On this point, participants asked how and whether researchers can move from the common practice of assessing thousands of genes and selecting only a few as biomarkers to the practice of converging on a smaller number of genes that reliably predict the outcome of interest. Participants also asked whether a high-volume, high-precision platform would be a preferred alternative. Further questions addressed measurement error in microarray analyses and whether, given the magnitude of this error, it is possible to detect small or subtle changes in mRNA expression. In response, Irizarry emphasized the importance of using multiple biologic replicates so that consistent patterns of change could be discerned.

Statistical Analysis of Toxicogenomic Microarray Data

In the next presentation, Wherly Hoffman, of Eli Lilly and Company, discussed the statistical analysis of microarray data. The presentation focused on the Affymetrix platform and covered the microarray technology, statistical hypotheses, and analysis methods for use in data evaluation. Hoffman stated that, like all microarray mRNA expression assays, the Affymetrix technology uses gene probes that hybridize to mRNA (actually to labeled cDNA or cRNA derived from the mRNA) in biologic samples. This hybridization produces a signal with intensity proportional to the amount of mRNA contained in the sample. Various algorithms may be used to separate the hybridized mRNA signal intensity from background signal.

Hoffman emphasized the importance of defining the scientific questions that any given experiment is intended to address and of including statistical expertise early in the process to determine appropriate statistical hypotheses and analyses. During this presentation, three types of experimental questions were addressed along with the statistical techniques for their analysis (as Hoffman noted, these techniques are also described in Deng et al. 2005). The first example presented data from an experiment designed to identify differences in gene expression in animals exposed to a compound at several different doses. Hoffman discussed the statistical techniques used to evaluate differences in expression between exposure levels while considering variation in responses from similarly dosed animals and variation in responses from replicate microarrays. In this analysis (using a one-factor [dose] nested analysis of variance [ANOVA] and t-test), it is essential to define the degrees of freedom accurately. Hoffman pointed out that the degrees of freedom are determined by the number of animal subjects and not the number of chips when the chips are technical replicates (that is, the same biologic sample applied to two or more microarrays). Thus, technical replicates should not be counted when determining the degrees of freedom. If they are, the degrees of freedom are inflated and the P value is biased downward, so that exposure differences appear more significant than they are. The second example included data from an experiment designed to evaluate gene expression over a time course. The statistical analysis of this type of experiment must capture the dose effect, the time effect, and the dose-time interaction; here, a two-factor (dose and time) ANOVA is used. The third example provided by Hoffman was an experiment to determine which genes are affected by different classes of compounds (alpha, beta, or gamma receptor agonists). This analysis evaluated dose-response trends of microarray signal intensities when known peroxisome proliferator-activated receptor (PPAR) agonists were tested on receptor knockout and wild-type mice to determine which probe sets (genes) responded in a dose-response manner. Here, a linear regression model is used to examine the dose-response trends at each probe set. This model considers the type of mouse (wild type or mutant), the dose of the compound, and their interaction.
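Hoffman’s point about degrees of freedom can be made concrete with a small example. The Python sketch below uses hypothetical log2 signals for one probe set from three control and three dosed animals, each hybridized to two chips. It is a simplification, not Hoffman’s analysis: for a balanced design like this one, averaging the technical replicates within each animal and running an ordinary pooled two-sample t test gives the same test of the dose effect as a nested ANOVA that uses the animal-within-dose variation as its error term.

```python
import math
from statistics import mean, variance


def pooled_t(group1, group2):
    """Pooled-variance two-sample t statistic and its degrees of freedom."""
    n1, n2 = len(group1), len(group2)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)) / df
    t = (mean(group2) - mean(group1)) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df


# Hypothetical log2 signals for one probe set: animal -> [chip 1, chip 2] (technical replicates)
control = {"c1": [7.9, 8.1], "c2": [8.4, 8.6], "c3": [7.6, 7.8]}
dosed = {"d1": [8.9, 9.1], "d2": [9.4, 9.5], "d3": [8.6, 8.8]}

# Appropriate: average the chips so that each animal contributes one value
t_ok, df_ok = pooled_t([mean(c) for c in control.values()], [mean(c) for c in dosed.values()])

# Inappropriate: treat every chip as an independent observation
t_bad, df_bad = pooled_t([x for c in control.values() for x in c],
                         [x for c in dosed.values() for x in c])

print(f"animal-level: t = {t_ok:.2f}, df = {df_ok}")  # → animal-level: t = 3.08, df = 4
print(f"chip-level: t = {t_bad:.2f}, df = {df_bad}")  # → chip-level: t = 4.67, df = 10
```

Counting each chip as an independent observation raises the nominal degrees of freedom from 4 to 10 and inflates the t statistic, which produces the downward bias in the P value that Hoffman warned about.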

Hoffman also discussed graphical tools to detect patterns, outliers, and errors in experimental data, including box plots, correlation plots, and principal component analysis (PCA). Other visualization tools, such as clustering analysis and volcano plots, which show the general patterns of microarray analysis results, were also presented. These tools are further discussed in Attachment 3.

Finally, multiplicity issues were discussed. Because a microarray analysis tests the expression of thousands of genes in one experiment, it can produce a high rate of false positives if each gene is tested at a conventional significance level. Hoffman explained various approaches used to control false positives, including the Bonferroni approach, and noted that recent progress has been made in addressing the multiple testing problem, including the false discovery rate approach of Benjamini and Hochberg (1995). (These approaches and their relative advantages and disadvantages are further discussed in Attachment 3.)
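The two corrections mentioned above can be sketched in Python. Bonferroni rejects a hypothesis only if its P value is at most α/m, which controls the family-wise error rate; the Benjamini-Hochberg step-up procedure finds the largest rank k with p_(k) ≤ kq/m and rejects the k smallest P values, which controls the false discovery rate at q. The function names and the ten P values are hypothetical.

```python
def bonferroni(pvalues, alpha=0.05):
    """Reject H0 for a gene only if its P value is at most alpha / m."""
    m = len(pvalues)
    return [p <= alpha / m for p in pvalues]


def benjamini_hochberg(pvalues, q=0.05):
    """Benjamini-Hochberg step-up procedure controlling the false discovery rate at q."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices, smallest P value first
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if pvalues[i] <= rank * q / m:
            k_max = rank  # largest rank meeting the criterion so far
    rejected = [False] * m
    for i in order[:k_max]:  # reject every hypothesis ranked at or below k_max
        rejected[i] = True
    return rejected


# Hypothetical P values for ten genes
pvals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205, 0.212, 0.216]
print(sum(bonferroni(pvals)), sum(benjamini_hochberg(pvals)))  # → 1 2
```

With these values, Bonferroni (threshold 0.005) rejects one hypothesis, whereas Benjamini-Hochberg at q = 0.05 rejects two, illustrating why the false discovery rate approach is the less conservative of the two.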

The short discussion following this presentation centered primarily on the visualization tools presented by Hoffman and the type of information that they convey.

Diagnostic Classifier—Gaining Confidence Through Validation

Clinical diagnosis of disease primarily relies on conventional histological and biochemical evaluations. To use toxicogenomic data in clinical diagnostics, reliable classification methods11 are needed to evaluate the data and provide accurate clinical diagnoses, treatment selections, and prognoses. Weida Tong, of the Food and Drug Administration (FDA), spoke about classification methods used with toxicogenomic approaches in clinical applications. These classification methods (learning methods) are driven by mathematical algorithms and models that “learn” features in a training set (known members of a class) to develop diagnostic classifiers and then classify unknown samples based on those features. Tong’s presentation focused on the issues and challenges associated with sample classification methods using supervised12 learning methods.

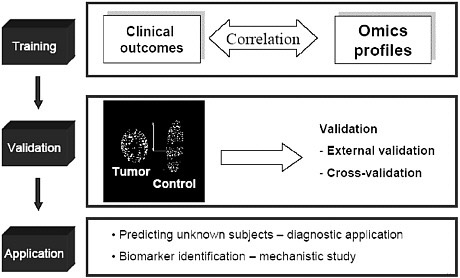

The development of a diagnostic classifier can be divided into three steps: training, where gene expression or other toxicogenomic profiles are correlated with clinical outcomes to develop a classifier; validation, where the classifier is validated using cross-validation13 or external validation14 approaches; and application, where the classifier is used to classify an unknown subject for a clinical diagnosis or for biomarker identification (see Figure 1 and Attachment 4). In this presentation, the “decision forest” method, developed by Tong et al. (2004), was discussed with an emphasis on prediction confidence and chance correlation.15 The decision forest approach is a consensus modeling method; that is, it combines several classifiers instead of relying on a single classifier (hence a decision forest instead of a decision tree) (see Box 4). This technique may be used with microarray, proteomics, and single-nucleotide polymorphism data sets. An example of this technique was presented that used mass spectra from protein analyses of serum to distinguish patients with prostate cancer from healthy individuals. Here, mass spectral peaks were used as independent variables for the classifiers. Initially, a classifier built from only a few peaks was run on the entire pool of healthy individuals and cancer patients; this analysis constitutes a single decision tree and has an associated error (misclassification) rate. Combining several decision trees, each built from a distinct set of peaks, into a decision forest improves the predictive accuracy.
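The consensus idea can be illustrated with a deliberately simplified Python sketch. It is not Tong’s decision forest algorithm: each “tree” here is a one-split decision stump, each stump is built on a different feature so that no variable is reused (a crude version of the heterogeneity requirement), the most accurate stumps are kept, and the forest predicts by majority vote. The three features stand in for hypothetical mass-spectral peak intensities, and all names are illustrative.

```python
def fit_stump(X, y, feature):
    """Fit a depth-1 decision tree (one threshold) on a single feature.

    Returns (training_errors, predict_function).
    """
    values = sorted({row[feature] for row in X})
    if len(values) < 2:
        raise ValueError("feature has no variation to split on")
    best = None
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        for direction in (1, -1):  # 1: predict class 1 above the threshold; -1: below it
            errors = sum(
                (1 if direction * (row[feature] - threshold) > 0 else 0) != label
                for row, label in zip(X, y)
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, direction)
    errors, threshold, direction = best

    def predict(row):
        return 1 if direction * (row[feature] - threshold) > 0 else 0

    return errors, predict


def fit_forest(X, y, n_trees):
    """Keep the n_trees most accurate stumps, each built on a different feature."""
    stumps = sorted((fit_stump(X, y, f) for f in range(len(X[0]))), key=lambda s: s[0])
    trees = [predict for _, predict in stumps[:n_trees]]

    def score(row):
        return sum(tree(row) for tree in trees) / len(trees)  # fraction of trees voting class 1

    return score


# Hypothetical peak intensities (3 peaks) for 3 healthy (0) and 3 diseased (1) subjects
X = [[1.0, 1.0, 6.0], [1.0, 6.0, 1.0], [6.0, 1.0, 1.0],
     [6.0, 6.0, 1.0], [6.0, 1.0, 6.0], [1.0, 6.0, 6.0]]
y = [0, 0, 0, 1, 1, 1]

forest = fit_forest(X, y, n_trees=3)
print([1 if forest(row) > 0.5 else 0 for row in X])  # → [0, 0, 0, 1, 1, 1]
```

Each stump on its own misclassifies two of the six training samples, but because their errors fall on different samples, the majority vote classifies all six correctly. Agreement on the training set says nothing about accuracy on new samples, which is why validation of the combined classifier remains essential.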

Tong emphasized that validating a classifier has three components: the first is determining whether the classifier accurately predicts unknown samples; the second is determining the prediction confidence for classifying different samples or individuals; and the third is establishing that correlations between a diagnostic classifier and disease are not just because of chance (chance correlation). Tong’s presentation focused on the techniques to evaluate predictive confidence and chance correlation and emphasized the usefulness of a 10-fold cross-validation technique in providing an unbiased statistical assessment of prediction confidence and chance correlation (see Attachment 4).
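The two checks Tong emphasized, cross-validated prediction accuracy and chance correlation, can be sketched together. This sketch assumes a nearest-centroid classifier as a stand-in for the decision forest and uses synthetic data; chance correlation is assessed here by a label-permutation test, one common approach, which reruns the same 10-fold cross-validation after shuffling the class labels. All function names and data are hypothetical.

```python
import random
from statistics import mean


def fit_nearest_centroid(X, y):
    """Return a classifier that assigns a row to the class with the closest mean profile."""
    centroids = {}
    for label in set(y):
        rows = [x for x, t in zip(X, y) if t == label]
        centroids[label] = [mean(col) for col in zip(*rows)]

    def predict(row):
        return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(row, centroids[c])))

    return predict


def cv_accuracy(X, y, k=10, seed=0):
    """k-fold cross-validation: each sample is predicted by a model that never saw it."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    correct = 0
    for fold in folds:
        held_out = set(fold)
        train = [i for i in idx if i not in held_out]
        model = fit_nearest_centroid([X[i] for i in train], [y[i] for i in train])
        correct += sum(model(X[i]) == y[i] for i in fold)
    return correct / len(X)


def permutation_test(X, y, n_perm=50, k=10, seed=1):
    """Compare the real CV accuracy with CV accuracies obtained after shuffling the labels."""
    observed = cv_accuracy(X, y, k)
    rng = random.Random(seed)
    null = []
    for _ in range(n_perm):
        shuffled = y[:]
        rng.shuffle(shuffled)
        null.append(cv_accuracy(X, shuffled, k))
    p_value = (1 + sum(s >= observed for s in null)) / (1 + n_perm)
    return observed, null, p_value


# Synthetic data: 40 samples, 5 features; class 1 is shifted upward in the first 3 features
rng = random.Random(42)
y = [0] * 20 + [1] * 20
X = [[rng.gauss(4.0 if (label == 1 and j < 3) else 0.0, 1.0) for j in range(5)] for label in y]

observed, null, p_value = permutation_test(X, y)
print(f"10-fold CV accuracy: {observed:.2f}; mean permuted accuracy: {mean(null):.2f}; p = {p_value:.3f}")
```

An observed accuracy far above the shuffled-label accuracies suggests that the classifier is not merely a chance correlation; as Tong noted, it still describes performance only within the original data set.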

Discussion following Tong’s presentation focused on the distinction between external validation and cross-validation and on the details of the cross-validation methods (described in Attachment 4 and Tong et al. 2004). In addition, questions were raised about the extent to which established classifiers could be extrapolated beyond the original training set. Tong indicated that the results of the cross-validation technique could describe the predictive accuracy of an established classifier within the confines of the original data set but not for new, independent data sets.

FIGURE 1 Three steps in the development of a diagnostic classifier. Source: Tong 2004.

BOX 4 Decision Forest Analysis for Use with Toxicogenomics Data

Decision forest (DF) is a consensus modeling technique that combines multiple decision tree models in a manner that results in more accurate predictions than those derived from an individual tree. Since combining several identical trees produces no gain, the rationale behind decision forests is to use individual trees that are different (that is, heterogeneous) in representing the association between independent variables (gene expression in DNA microarray, m/z peaks in SELDI-TOF data, and structural descriptors in SAR modeling) and the dependent variable (class categories) and yet are comparable in their prediction accuracy. The heterogeneity requirement assures that each tree uniquely contributes to the combined prediction. The quality comparability requirement assures that each tree makes a similar contribution to the combined prediction. Since a certain degree of noise is always present in biologic data, optimizing a tree inherently risks overfitting the noise. Decision forest tries to minimize overfitting by maximizing the difference among individual trees to cancel some random noise in individual trees. The maximum difference between the trees is obtained by constructing each individual tree using a distinct set of independent variables. Source: Modified from Tong 2006.

Toxicogenomics: ICCVAM Fundamentals for Validation and Regulatory Acceptance

The final presentation of the morning session was by Leonard Schechtman, the chair of the Interagency Coordinating Committee on the Validation of Alternative Methods (ICCVAM). This presentation described the validation and regulatory acceptance criteria and guidelines that are currently in place and have been compiled and adopted by ICCVAM and its European counterpart, the European Centre for the Validation of Alternative Methods (ECVAM).

At present, the submission of toxicogenomic data to regulatory agencies is being encouraged (for example, FDA 2005). However, the regulatory agencies generally consider it premature to base regulatory decisions solely on toxicogenomics data, given that the technologies are rapidly evolving and in need of further standardization, validation, and understanding of the biologic relevance. In addition, regulatory acceptability and implementation will in part depend on whether these methods have utility for a given regulatory agency and for the products that that agency regulates.

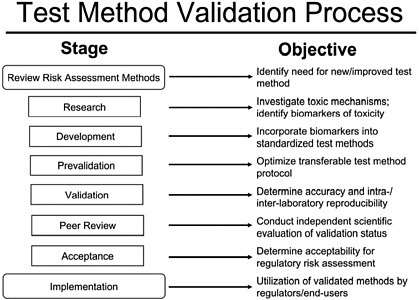

Schechtman described ICCVAM’s 2003 updated guidelines for the nomination and submission of methods (ICCVAM 2003). These guidelines detail ICCVAM validation and regulatory acceptance criteria. Figure 2 outlines the generalized scheme of the validation process, as presented by Schechtman. Components of this process include standardization of protocols, variability assessments, and peer review of the test method. The presentation concluded with the overall comment that validation in the regulatory arena is, for the most part, a prerequisite for regulatory acceptance of a new method.

In response to the presentation, a participant asked whether regulatory agencies were required to go through the ICCVAM process before they could use or accept information from a new test. Schechtman responded that it was not required: the process is made available to help guide a validation effort, and because multiple agencies are part of the ICCVAM process, an ICCVAM-accepted test is likely to be accepted by those agencies. However, he cautioned that acceptance of any given method goes far beyond validation; the ICCVAM process facilitates the validation of a method but does not provide or guarantee regulatory acceptance of that method.

FIGURE 2 ICCVAM test method validation process. Source: ICCVAM 2003.

A participant suggested that one aspect of the test-validation process outlined in the presentation (distribution of chemicals for testing) would not work well in the field of toxicogenomics and that distribution of biologic samples (for mRNA quantification) would be a better alternative. Schechtman clarified that many new technologies did not exist when the ICCVAM process was initiated and that other validation approaches could be used. He emphasized that nothing about the ICCVAM process is inflexible with respect to new or different technologies.

The fundamental differences between the processes for validating new technologies and those used to validate conventional, currently used toxicological methods were discussed next. It was noted that there is an apparent disconnect: a very elaborate validation process is established for new methods, yet thousands of chemicals are currently being evaluated with methods (such as quantitative structure-activity relationships) that would likely not pass through the current ICCVAM validation process. Overall, participants questioned whether this process sets up a system in which “the perfect is the enemy of the good,” given that new technologies can offer useful information, for instance, in a weight-of-evidence evaluation of a chemical. Schechtman responded that he did not believe it was necessary to wait for a final stamp of approval. Indeed, the U.S. Environmental Protection Agency (EPA) and FDA are accepting data and mechanistic information from tests that have not undergone, and probably will never undergo, the ICCVAM validation process. Even the classical toxicological tests themselves have never been validated in this manner.

Part 2: Case Studies: Classification Studies and the Validation Approaches

The second session of the workshop featured case studies in which mRNA expression microarray assays were used to classify compounds according to their toxicological mode of action. Authors of the original papers presented salient details of their studies, emphasizing validation techniques and concepts. The presentations and discussion are described below, and the authors’ PowerPoint slides are available on the committee’s Web site. As mentioned before, this report is intended to present the information at a level accessible to a general scientific audience, so technical details of the presentations are covered only briefly. Readers are referred to the original publications, cited in each section, for greater technical detail and a more comprehensive treatment of specific protocols.

Proof-of-Principle Study on Compound Classification Using Gene Expression

Hisham Hamadeh, of Amgen, outlined a two-part proof-of-principle study on compound classification that used microarray technologies (Hamadeh et al. 2002a,b). This study was initiated in 1999, when many of these technologies were in their infancy and current validation techniques had not yet been devised. However, the experimental design and concepts used for validation and classification in those early studies remain illustrative for discussion. The purpose of the study was to determine whether gene-expression profiles resulting from exposure to various compounds could be used to discriminate between different toxicological classes of compounds. This study evaluated the gene-expression profiles resulting from exposure to two compound classes, peroxisome proliferators (including three test compounds: clofibrate, Wyeth 14,643, and gemfibrozil) and enzyme inducers (modeled by the cytochrome P450 inducer phenobarbital). Hamadeh described the experimental design of the study, highlighting the data analyses used to determine whether gene expression was significantly induced.

Gene induction results were presented using hierarchical clustering,16 principal components analysis, and pairwise correlation. These visualization techniques demonstrated that although phenobarbital-exposed animals exhibited significant interanimal variability, they could be readily distinguished from those exposed to the peroxisome proliferators on the basis of gene expression. To expand on results obtained with this limited data set, the researchers attempted to classify blinded samples based on earlier data. A classifier using 22 genes with the greatest differential expression between the two compound classes was used to classify unknown samples into a compound class. This gene set was determined by statistical analyses of the training set (tests on the model compounds described above) using linear discriminant analysis and a genetic algorithm for pattern recognition (Hamadeh et al. 2002b). Blinded samples were classified initially by visual comparison of the levels of mRNA induction or repression in blind samples to the known compounds. Subsequently, pairwise correlation analysis of expression level of the 22 discriminant genes was also used. Correlations of r ≥ 0.8 between blinded and known samples were used to determine whether the unknown was similar to the known class.
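The correlation step of the blinded-sample classification can be sketched as follows, assuming one reference expression profile per compound class and the r ≥ 0.8 cutoff described above. For brevity the profiles use six hypothetical genes rather than the 22 discriminant genes, and all values are invented for illustration; a blinded sample that reaches the cutoff for no class is left unclassified.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient r between two equal-length profiles."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)  # undefined if either profile is constant


# Hypothetical log2 expression ratios (treated vs control) for six discriminant genes
REFERENCE = {
    "phenobarbital-like": [2.5, 0.1, 1.8, -0.2, 0.0, 1.2],
    "peroxisome proliferator": [0.2, 3.0, -0.1, 2.2, -1.5, 0.1],
}


def classify(profile, references=REFERENCE, r_min=0.8):
    """Return the classes whose reference profile correlates with the sample at r >= r_min."""
    scores = {name: pearson(profile, ref) for name, ref in references.items()}
    matches = [name for name, r in scores.items() if r >= r_min]
    return matches, scores


blinded_a = [2.2, 0.3, 1.5, 0.0, -0.1, 1.0]   # resembles the phenobarbital-like reference
blinded_b = [0.1, -0.2, 0.0, 0.3, 0.2, -0.1]  # resembles neither reference
print(classify(blinded_a)[0])  # → ['phenobarbital-like']
print(classify(blinded_b)[0])  # → []
```

Returning every class that clears the cutoff, rather than only the best match, leaves room for a sample that resembles both classes or neither, which is the outcome the study reported for hexobarbital.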

The analysis successfully discerned the identity of the blinded compounds. Phenytoin, an enzyme inducer similar to phenobarbital, was classified as phenobarbital-like; DEHP, a peroxisome proliferator, was identified as such; and the final compound, hexobarbital, which is structurally similar to phenobarbital but is not an enzyme inducer, was classified as neither phenobarbital-like nor a peroxisome proliferator. Overall, the study concluded that it was possible to separate compounds on the basis of their gene-expression profiles and that it is feasible to gain information on the toxicologic class of blinded samples by interrogating a gene-expression database.

Workshop participants were interested in the suite of discriminant genes used to evaluate chemical class and in whether that number of genes could be reduced. For instance, one participant asked whether the induction profiles of CYP2B and CYP4A17 alone would suffice to indicate the class of the unknowns. Hamadeh reported that this type of evaluation had been conducted and that the number of discriminant genes could indeed be reduced. However, he noted that a larger number of discriminant genes allows increased resolution between compounds. Microarray analysis also provides information on many genes that would not be obtained from a simple evaluation of individual gene transcripts, which is particularly useful when analyzing unknown samples.

The amount and origin of the variability seen within a chemical class were also discussed. Hamadeh explained that there was interanimal variability but that the variability in the microarray responses generally mirrored the variability in the animal responses (for example, whether animals within a group exhibited hypertrophy, necrosis, or lesions). Overall, the level of interanimal variability did not alter the finding that expression profiles differed between the classes.

The discussion emphasized that mRNA expression results contain several layers of intertwined information that can complicate the analysis of factors eliciting gene-expression changes. Beyond the molecular targets that are specifically affected by a compound, there are expression changes associated with the pathology resulting from exposure (for example, necrosis or hypertrophy). Gene-expression changes can also be related to an event that is secondary, or downstream, from the initial toxicologic interaction. A compound may also interact with other targets not associated with its toxic or therapeutic action. In addition, all of these effects may change with time after dosing, which adds another layer of complexity to the analysis. As a result, the gene sets used to screen for chemical classes are generally small and are intended to screen for particular toxicities.

Acute Molecular Markers of Rodent Hepatic Carcinogenesis Identified by Transcription Profiling

Kyle Kolaja, of Iconix Pharmaceuticals, presented a study that sought to identify biomarkers of hepatic carcinogenicity using microarray mRNA expression assays (Kramer et al. 2004). In particular, identifiers of nongenotoxic carcinogenicity were desired because the conventional method for determining this mode of action (a 2-year rodent carcinogenicity assay) is time consuming and expensive. The study evaluated nine well-characterized compounds, including five nongenotoxic rodent carcinogens, one genotoxic carcinogen, one carcinogen that may not act via genotoxicity, a mitogen,18 and a noncarcinogenic toxicant. Rats from the control group and from three groups that received different dose levels of each compound were sacrificed after 5 days of dosing, and liver extracts were tested in microarray assays. The purpose of the analysis was to correlate the short-term changes in gene expression with the long-term incidence of carcinogenicity (known from previous studies of these model compounds). Kolaja highlighted the data analysis used to determine whether gene expression was significantly induced or repressed. Significantly affected genes were then correlated with a carcinogenic index (based on cancer incidence in 2-year rodent carcinogenicity studies).
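The gene-to-index correlation step can be sketched in Python as below. Everything here is simulated: the carcinogenic-index values, the 100 genes, and the two planted genes that track the index. Each compound is collapsed to a single profile (rather than a control group plus three dose groups), and Pearson correlation is an assumed choice of statistic, not necessarily the one used by Kramer et al. (2004).

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical carcinogenic index for nine compounds (invented values).
carcinogenic_index = np.array([0.0, 0.0, 0.1, 0.3, 0.5, 0.6, 0.8, 0.9, 1.0])
n_compounds, n_genes = carcinogenic_index.size, 100

# Simulated day-5 log2 ratios (compound x gene): mostly noise, plus one
# gene that rises with the index and one that falls with it.
log_ratios = rng.normal(0.0, 1.0, size=(n_compounds, n_genes))
log_ratios[:, 0] = 2.0 * carcinogenic_index + rng.normal(0, 0.05, n_compounds)
log_ratios[:, 1] = -1.5 * carcinogenic_index + rng.normal(0, 0.05, n_compounds)

def correlate_with_index(x, index):
    """Pearson r between each gene (column of x) and the index."""
    xc = x - x.mean(axis=0)
    ic = index - index.mean()
    return (ic @ xc) / (np.sqrt((ic ** 2).sum()) * np.sqrt((xc ** 2).sum(axis=0)))

r = correlate_with_index(log_ratios, carcinogenic_index)
ranked = np.argsort(-np.abs(r))  # strongest correlations (either sign) first
for g in ranked[:3]:
    print(f"gene {g}: r = {r[g]:+.2f}")
```

Ranking by the absolute value of r keeps both kinds of candidates, those that rise with carcinogenic potential and those that fall with it.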

The study identified two optimal discriminatory genes (biomarkers): cytochrome P450 reductase (CYP-R) and transforming growth factor-β stimulated clone 22 (TSC-22). TSC-22 expression correlated negatively with carcinogenic potential, whereas CYP-R expression correlated positively with carcinogenicity. The results were initially verified by measuring the same mRNAs with another technique, quantitative PCR (Q-PCR), which showed a strong correlation with the microarray data generated from the same set of samples. From a biologic standpoint, a role for TSC-22 in carcinogenesis is consistent with its involvement in the regulation of cellular growth, development, and differentiation.

The results of this analysis were extended by a “forward validation” of these biomarkers, that is, the independent identification of these genes as carcinogenicity biomarkers by other groups or studies. Kolaja described two independent studies (Iida et al. 2005; Michel et al. 2005), using both rats and mice, that identified TSC-22 as a potential marker of early changes that correlate with carcinogenesis. An additional study at Iconix Pharmaceuticals of 26 nongenotoxic carcinogens and 110 noncarcinogens indicated that the TSC-22 biomarker measured at day 5 after dosing had an accuracy of about 50% for detecting carcinogens and of about 80% for excluding compounds as carcinogens. Kolaja remarked that these results were fairly robust, especially given that the biomarker is a single gene being compared across a very diverse set of compounds. Overall, it is very difficult to find one gene that is a suitable biomarker in terms of predictive performance. It was noted that multiple genes create a more integrated screening biomarker and allow greater predictivity, performance, and accuracy. Kolaja also stated that, in the future, it would be more appropriate for validation strategies to emphasize the biologic rather than the methodologic aspects of validation, because testing a biologic question also captures the technical aspects. Additional tests on treatments and models would then follow, with less emphasis on platforms and methods.
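If the two figures quoted above are read as sensitivity (carcinogens correctly flagged) and specificity (noncarcinogens correctly cleared), which is an interpretation rather than a definition given at the workshop, the arithmetic looks like this. The confusion-matrix counts are back-calculated from the approximate percentages and are illustrative only.

```python
def screening_metrics(tp, fn, tn, fp):
    """Sensitivity, specificity, and overall accuracy from confusion counts."""
    sensitivity = tp / (tp + fn)   # carcinogens correctly flagged
    specificity = tn / (tn + fp)   # noncarcinogens correctly cleared
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy

# Counts back-calculated from "about 50%" of 26 carcinogens and "about 80%"
# of 110 noncarcinogens; illustrative, not the study's confusion matrix.
sens, spec, acc = screening_metrics(tp=13, fn=13, tn=88, fp=22)
print(f"sensitivity={sens:.2f} specificity={spec:.2f} accuracy={acc:.2f}")
```

Because noncarcinogens outnumber carcinogens roughly four to one in this set, the overall accuracy (about 0.74 here) is dominated by specificity, which is one reason a single summary accuracy can mislead for screening biomarkers.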

During the discussion, a participant asked whether TSC-22 might be correlative rather than mechanistic, that is, whether TSC-22 expression was related to another general response (such as liver weight change) rather than to carcinogenesis. Kolaja said that he would not be surprised if liver weight changes were also seen at day 5 and that the possibility that the TSC-22 response was correlative had not been ruled out. Another participant asked whether analyzing data sets with a multiple-gene biomarker was correspondingly more difficult technically than analyzing them with a single-gene biomarker. Kolaja indicated that it was the same type of binary analysis (Is a sample in the class or not?), but with multiple genes, the answer relies on the compendium of genes and on the mathematical modeling. He also noted that recent mathematical algorithms and models have become increasingly good at class separation.

Study Design and Validation Strategies in a Predictive Toxicogenomics Study

Guido Steiner, of Roche Pharmaceuticals, presented a study that used microarray analyses to classify compounds by mode of toxicologic action (Steiner et al. 2004). The goals of the study were to predict the hepatotoxicity of compounds from gene-expression changes in the liver with a model that can be generalized to new compounds, to classify compounds according to their mechanism of toxicity, and to show the viability of a supervised learning approach that uses no prior knowledge about relevant genes.

In this study, six to eight model hepatotoxicants for each known mode of action (for example, steatosis, peroxisome proliferation, and cholestasis) were tested. Rat liver extracts were obtained at various times (typically under 24 hours) after dosing with model compounds and tested for changes in mRNA expression. Clinical chemistry, hematology, and histopathology were used to assess toxicity in each animal. The gene-expression data from tests of the model toxicants became the training set for the supervised learning method (in this study, support vector machines [SVMs]) (see Box 5). One aspect of this study that differed from many comparisons of gene-expression levels is that the commonly used statistical measures denoting significant gene-expression changes (magnitude of change and associated P value) were not used. Rather, the discriminatory features for classification were selected from the microarray results using recursive feature elimination (RFE), a method that uses the output of the SVMs to extract a compact set of relevant genes that, as an ensemble, yield good classification accuracy and are robust to background biologic and experimental variation (see Box 5 and Steiner et al. 2004). Features for a particular class were selected from gene-expression profiles of animals exposed to the model compounds, and each compound’s class was assigned on the basis of the serum chemistry profile and liver histopathology.
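The RFE-plus-SVM idea can be sketched with scikit-learn, assuming `LinearSVC` (whose multiclass mode is one-versus-rest) and `sklearn.feature_selection.RFE`. This is not Steiner et al.'s implementation: the data are simulated, the three class names are reused only as labels, and the marker genes, the 4-unit shift, the 15-gene target, and the 10% elimination step are arbitrary choices.

```python
import numpy as np
from sklearn.feature_selection import RFE
from sklearn.svm import LinearSVC

rng = np.random.default_rng(0)
n_per_class, n_genes, n_informative = 20, 500, 5
classes = ["steatosis", "peroxisome proliferation", "cholestasis"]

# Simulated expression matrix: pure noise except for five hypothetical
# marker genes per class, which are shifted upward in that class only.
X = rng.normal(size=(n_per_class * len(classes), n_genes))
y = np.repeat(np.arange(len(classes)), n_per_class)
for k in range(len(classes)):
    markers = np.arange(k * n_informative, (k + 1) * n_informative)
    X[np.ix_(y == k, markers)] += 4.0

# LinearSVC fits one-versus-rest models for multiclass data; RFE repeatedly
# refits it and drops 10% of the original gene count (50 genes) per round,
# removing those with the smallest squared weights summed over the
# per-class models, until 15 genes remain.
svm = LinearSVC(C=1.0, dual=True, max_iter=10000)
selector = RFE(estimator=svm, n_features_to_select=15, step=0.1)
selector.fit(X, y)

selected = np.flatnonzero(selector.support_)
print("selected genes:", selected)
```

Because RFE ranks genes by their weight in the trained model rather than by fold change or P value, a gene can survive elimination because it is useful in combination with others, which matches the ensemble rationale described above.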

Steiner’s presentation focused on the study design and validation considerations that need to be addressed when conducting this type of study. First, only a small set of compounds within a class is typically available for developing classification algorithms, and it is important to consider whether these compounds adequately represent the class toxicity. This problem is difficult to predict or avoid a priori. In the presented study, some well-characterized treatments were initially selected, and the problem of generalizability within a toxic class was dealt with during the model validation phase. How, then, is a well-characterized training set defined? This question was approached by carefully selecting the compounds using phenotypic anchoring based on the clinical chemistry and histopathologic data, then confirming that the clinical results corresponded with those in the literature, and finally using in the training sets only the higher-dose treatments that showed no ambiguity regarding the toxic manifestation. One implication of this approach is that the “scale” for detecting an effect is set higher (that is, gene-expression signatures in the training set are based on higher-dose, “real” effects at the organ level). Also, although the meaning of this class (in terms of gene expression) is well defined, the ability to extrapolate to lower doses is not known until this question is tested.

Another issue is that the toxicity classes established by researchers may not be accurate. Some tested compounds may show a mixed toxicity. Steiner explained that the model would pick up the various aspects of the toxicity, and indeed, results presented in Steiner et al. (2004) indicated that to be the case.

BOX 5 Classification of Microarray Data Using Algorithms and Learning Methods Various methods are used to analyze large-scale gene-expression data. Unsupervised methods widely reported in the literature include agglomerative clustering (Eisen et al. 1998), divisive clustering (Alon et al. 1999), K-means clustering (Everitt 1974), self-organizing maps (Kohonen 1995), and principal component analysis (Jolliffe 1986). Support vector machines (SVMs), on the other hand, belong to the class of supervised learning algorithms. Originally introduced by Vapnik and co-workers (Boser et al. 1992; Vapnik 1998), they perform well in different areas of biologic analysis (Schölkopf and Smola 2002). Given a set of training examples, SVMs are able to recognize informative patterns in input data and make generalizations on previously unseen samples. Like other supervised methods, SVMs require prior knowledge of the classification problem, which has to be provided in the form of labeled training data. Used in a growing number of applications, SVMs are particularly well suited for the analysis of microarray expression data because of their ability to handle situations where the number of features (genes) is very large compared with the number of training patterns (microarray replicates). Several studies have shown that SVMs tend to outperform other classification techniques in this area (Brown et al. 2000; Furey et al. 2000; Yeang et al. 2001). In addition, the method proved effective in discovering informative features such as genes that are especially relevant for the classification and therefore might be critically important for the biologic processes under investigation. A significant reduction of the gene number used for classification is also crucial if reliable classifiers are to be obtained from microarray data. A proposed method to discriminate the most relevant gene changes from background biologic and experimental variation is gene shaving (Hastie et al. 2000). However, we chose another method, recursive feature elimination (RFE) (Guyon et al. 2002), to create sets of informative genes. Source: Steiner et al. 2004.
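Box 5 contrasts supervised methods with unsupervised ones such as principal component analysis. As a minimal NumPy sketch (simulated data; the group sizes, gene counts, and shift are arbitrary), PCA can be computed from the singular value decomposition of the column-centered matrix, and no class labels are used at any point.

```python
import numpy as np

rng = np.random.default_rng(1)

# Simulated log2 ratios: 8 animals per treatment, 200 genes; the second
# treatment shifts a hypothetical block of 30 genes.
a = rng.normal(size=(8, 200))
b = rng.normal(size=(8, 200))
b[:, :30] += 4.0
X = np.vstack([a, b])

# Principal component analysis via singular value decomposition of the
# column-centered matrix; the group labels are never consulted.
Xc = X - X.mean(axis=0)
U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
scores = U * S                     # sample coordinates on each component
explained = S ** 2 / np.sum(S ** 2)

print("variance explained by PC1-PC3:", np.round(explained[:3], 2))
print("PC1 scores, group A:", np.round(scores[:8, 0], 1))
print("PC1 scores, group B:", np.round(scores[8:, 0], 1))
```

When a treatment moves a coherent block of genes, the first component tends to separate the groups, which is how PCA plots can reveal class structure without being told the classes; supervised methods such as SVMs instead start from the labels.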

Data heterogeneity is also an issue and a primary reason that protocol standardization and chip quality control are essential. Time-matched vehicle controls19 should be used; according to Steiner, they are a necessity because changes occur over an experiment’s time course (for instance, due to variations in circadian rhythms, age, or the vehicle). To handle the known remaining heterogeneities, the SVM models were always trained using a one-versus-all approach in which data points of all toxicity classes are seen at the same time. In this setting, chances are good that any confounding pattern is represented on both sides of the classification boundary. Therefore, the SVM can “learn” the differences that are related to toxicity class (which are designated) and ignore the patterns that are not (experimental factors driving data heterogeneity).
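Time-matched controls matter because a baseline that drifts over the time course can masquerade as, or hide, a treatment effect. The sketch below (Python with NumPy; the function name and the two-time-point, single-gene numbers are hypothetical) computes log2 ratios against the vehicle controls sampled at the same time and contrasts them with a pooled-control baseline.

```python
import numpy as np

def log_ratio_vs_time_matched(treated, controls, treated_times, control_times):
    """log2(treated / mean of vehicle controls sampled at the same time).

    treated, controls: (samples x genes) arrays of positive expression values.
    treated_times, control_times: sampling time for each row (e.g., hours).
    """
    treated = np.asarray(treated, dtype=float)
    controls = np.asarray(controls, dtype=float)
    treated_times = np.asarray(treated_times)
    control_times = np.asarray(control_times)
    out = np.empty_like(treated)
    for t in np.unique(treated_times):
        ctrl = controls[control_times == t]
        if ctrl.size == 0:
            raise ValueError(f"no vehicle control sampled at time {t}")
        baseline = ctrl.mean(axis=0)
        out[treated_times == t] = np.log2(treated[treated_times == t] / baseline)
    return out

# Hypothetical single-gene example: the vehicle-control level doubles
# between 6 h and 24 h (e.g., a circadian effect), independent of treatment.
controls = np.array([[100.0], [100.0], [200.0], [200.0]])
control_times = np.array([6, 6, 24, 24])
treated = np.array([[200.0], [200.0]])
treated_times = np.array([6, 24])

matched = log_ratio_vs_time_matched(treated, controls, treated_times, control_times)
pooled = np.log2(treated / controls.mean(axis=0))
print("time-matched:", matched.ravel())
print("pooled:      ", pooled.ravel())
```

With the invented numbers, the time-matched ratios show induction at 6 hours and no change at 24 hours, whereas the pooled baseline reports the same modest increase at both times.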

Another issue that needs to be considered is overfitting. This can occur when the number of features in the classification model is too great compared with the number of samples, and it can lead to spurious conclusions. In this regard, the SVM technique has been shown to perform well when the training set is small (Steiner et al. 2004). Feature selection for SVMs, such as the aforementioned RFE procedure, also limits the potential for overfitting. However, the true performance of the model has to be demonstrated using a strict validation scheme that also accounts for the fact that a small number of marker genes are selected from a vast excess of available (and largely uninformative) features.
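The need for a strict validation scheme can be illustrated with a well-known pitfall: if genes are selected on all samples before cross-validation, the test folds have already influenced the model. The scikit-learn sketch below uses pure noise (labels unrelated to expression), with RFE and `LinearSVC` as assumed stand-ins for the study's procedure; the sample size, gene count, and 20-gene target are arbitrary, and `make_rfe` is a hypothetical helper.

```python
import numpy as np
from sklearn.feature_selection import RFE
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC

rng = np.random.default_rng(0)
# Pure noise: 40 arrays, 2,000 genes, labels unrelated to expression.
X = rng.normal(size=(40, 2000))
y = np.repeat([0, 1], 20)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

def make_rfe(n_genes=20):
    """Hypothetical helper: RFE over a linear SVM, removing 20% per round."""
    return RFE(LinearSVC(dual=True, max_iter=10000),
               n_features_to_select=n_genes, step=0.2)

# Flawed: genes chosen once on ALL samples, then only the classifier is
# cross-validated, so every test fold has already influenced selection.
selected = make_rfe().fit(X, y).support_
biased = cross_val_score(LinearSVC(dual=True, max_iter=10000),
                         X[:, selected], y, cv=cv).mean()

# Sound: selection is refit inside every training fold via a pipeline.
pipeline = make_pipeline(make_rfe(), LinearSVC(dual=True, max_iter=10000))
unbiased = cross_val_score(pipeline, X, y, cv=cv).mean()

print(f"selection outside CV: {biased:.2f}")
print(f"selection inside CV:  {unbiased:.2f}")
```

On pure noise, selection inside the pipeline should give accuracy near chance, whereas selection outside cross-validation typically reports a much higher, entirely spurious accuracy.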

Steiner also stated that a compound classification model should not confuse gene-expression changes associated with a desired pharmacological effect with those from an unwanted toxic outcome. The SVM model addresses this concern based on the assumption that pharmacological action is compound specific and the toxic mechanism is typical for a whole class; if this is true, then the SVM will downgrade features associated with a compound-specific effect and find features for classification that work for all compounds within a class.

The final issue considered by Steiner was that of sensitivity: a model and classification scheme need to be at least as sensitive as the conventional clinical or histologic evaluations. In this study, a sample that showed no effect by conventional techniques nevertheless showed a small shift from the control group toward the active group in a three-dimensional scatter plot used to visualize class separation. This shift is a hint that gene-expression profiling could be more sensitive than the classical end points used in this study (Steiner et al. 2004).

Discussion from workshop participants included questions about whether the described systems were capable of detecting effects at a lower dose or whether they were only detecting effects at an earlier time point (that is, effects that would have become manifest at that dose, but later). Steiner explained that those assessments had been completed and that the model worked quite well in making correct predictions at doses lower than those that elicit classic indicators of toxicity.

During discussion, it was noted that Steiner’s data set indicated differences in responses between strains of rats, which has important implications for cross-species extrapolation (for example, between rodents and humans). Notable differences in microarray results between two inbred strains of rats might presage the inapplicability of these techniques to humans. Steiner replied that this was an important question not addressed in the study but that the authors did not imply that the effect in humans could be predicted from the rat data. Hamadeh pointed out that the training method used in that example data set did not include both strains in the training set and that identifiers could likely have been found if this had been done. However, extrapolating this classification scheme to humans would create a whole different set of issues because those analyses would be conducted with different microarray chips (human based, not rat based).

Roundtable Discussion

A roundtable discussion, moderated by John Quackenbush and open to all audience members, was held following the invited presentations, and the strengths and limitations of the current validation approaches and methods to strengthen these approaches were considered. Although technical issues and validation techniques were discussed, many of the comments focused on biologic validation, including the extent to which microarray results indicated biologic pathways, the linking of gene-expression changes to biologic events, the different requirements of biologic and technical validation, the impact of individual, species, and environmental variability on microarray results, and the use of microarray assays to evaluate the low-dose effects of chemicals. The primary themes of this discussion are presented here.

Technical Issues and Validation Techniques

John Balbus, of Environmental Defense, commented that most of the presentations during the day were case studies of self-contained data sets from a particular lab or group. However, it is commonly thought that a benefit of obtaining toxicogenomic data is that they will be included in larger databases and mined for further information. In this context, he noted the difficulty of drawing statistically sound conclusions from self-contained data sets and asked whether analyzing a fully populated database would create even greater complexities and whether it was possible to achieve sufficient statistical power from data mining. John Quackenbush suggested that a level of data standardization would be necessary to analyze a compiled database and that the quality of experiments in the database would exert a major influence. In addition, these analyses may require that comparisons be made only between similar technologies or applications. Irizarry suggested that a large database would also serve as a resource of independent data sets for evaluating whether a phenomenon seen in one experiment has been seen in others.

Casimir Kulikowski, of Rutgers University, raised a technical issue relating to the cross-validation techniques used in binary classification models in toxicogenomics. Those models usually contrast very different types of categories: a positive response for a specific compound versus all other possible responses. In cross-validation, most techniques assume symmetry, with random sampling from each class. In Steiner’s presentation, the sampling was appropriately compound specific, but a question arises as to whether it could also take into account confounding issues not known a priori, such as the possibility of a compound differentially affecting different biologic pathways. More generally, cross-validation methods may need to be applied in a more stratified manner for problems dealing with multiple or mixed classes, or where there are relationships between the classes. For instance, there may be a constrained space for the hypothesis of a toxic response affecting a single (regulatory or metabolic) pathway, but one may also wish to focus on other constraints that have not yet been satisfied to generate additional information about other pathways. One approach would be to use causal pathway analysis, together with its counterfactual20 network, to limit the possible outcomes of hypothesis generation. This means that if a set of assertions can be made based on the current state of the art, the investigator can identify the counterfactual questions that might actually be scientifically interesting. (This technique has so far been proposed for biomedical image classification, but it might also apply to microarray-based classification studies.) Kulikowski commented that it was problematic to design classifiers as simple binary classifications and then treat the class representing the mixture of all other responses as if it were the same kind of class as the toxic-response class. It would be more desirable to tease out what that mixture class is and then determine how it should be stratified in a systematic manner.
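Compound-specific sampling of the kind credited to Steiner’s analysis amounts to grouped cross-validation: every sample from a compound is held out together. The NumPy sketch below (hypothetical compounds A to E and mode-of-action labels; `leave_one_compound_out` is a hypothetical helper) also reports whether the held-out compound's mode of action is still represented in training, one simple check related to Kulikowski's concern about the internal makeup of the "all other responses" class.

```python
import numpy as np

def leave_one_compound_out(compound_ids):
    """Yield (held_out, train_idx, test_idx), holding out every sample of
    one compound at a time so no compound is in both training and test."""
    compound_ids = np.asarray(compound_ids)
    for compound in np.unique(compound_ids):
        test = np.flatnonzero(compound_ids == compound)
        train = np.flatnonzero(compound_ids != compound)
        yield compound, train, test

# Hypothetical design: a binary "cholestasis vs. other" task in which the
# "other" class is itself a mixture of two modes of action.
compounds = np.array(["A", "A", "B", "B", "C", "C", "D", "D", "E", "E"])
modes = np.array(["cholestasis"] * 4 + ["steatosis"] * 4
                 + ["peroxisome proliferation"] * 2)
labels = np.where(modes == "cholestasis", "cholestasis", "other")

for held_out, train, test in leave_one_compound_out(compounds):
    held_mode = modes[test][0]
    still_seen = held_mode in set(modes[train])
    print(f"hold out {held_out} ({labels[test][0]}, {held_mode}): "
          f"mode represented in training = {still_seen}")
```

Here, holding out compound E removes the only peroxisome-proliferator examples from the "other" class, a gap that a purely random split of samples would hide. scikit-learn's `LeaveOneGroupOut` splitter provides the same grouping if a library implementation is preferred.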

Microarray Assays for the Analysis of Biologic Pathways

Federico Goodsaid, of FDA, commented that, based on the presentations, the analytical validation of any given platform was fairly straightforward, but the end product of these studies (a set of genes to be used as a marker) is likely to be platform dependent, and these gene sets will not be the same across platforms. However, he noted that identifying identical sets of genes across platforms is not essential as long as the markers are supported by sufficient biologic validation. Another participant provided an example of this concept: in a study of gene sets indicative of breast cancer tumor metastasis, different microarray platforms indicated completely distinct sets of genes as markers. However, when these gene sets were mapped biologically, the pathways in which those genes were involved overlapped completely; thus, there was good agreement in terms of the biologic pathways. John Quackenbush also commented on the results of recent studies presented in Nature Methods,21 in which a variety of platforms were tested using the same biologic samples. In general, these studies indicated that although variability exists between labs and microarray platforms, and different platforms identify different biomarkers, common biologic pathways emerge.
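The distinction between gene-level and pathway-level agreement can be made concrete with a Jaccard index (shared items divided by all items). The gene names, the pathway annotation, and the helper below are hypothetical; in practice the mapping would come from a curated pathway database.

```python
def jaccard(a, b):
    """Size of the intersection divided by size of the union."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if (a | b) else 1.0

# Hypothetical marker lists from two platforms and a hypothetical
# gene-to-pathway annotation; none of these names come from the studies.
platform_1 = ["GENE_A1", "GENE_B1", "GENE_C1"]
platform_2 = ["GENE_A2", "GENE_B2", "GENE_C2"]
gene_to_pathway = {
    "GENE_A1": "cell cycle", "GENE_A2": "cell cycle",
    "GENE_B1": "DNA repair", "GENE_B2": "DNA repair",
    "GENE_C1": "angiogenesis", "GENE_C2": "angiogenesis",
}

pathways_1 = {gene_to_pathway[g] for g in platform_1}
pathways_2 = {gene_to_pathway[g] for g in platform_2}
print("gene-level overlap:   ", jaccard(platform_1, platform_2))
print("pathway-level overlap:", jaccard(pathways_1, pathways_2))
```

In this constructed case the two platforms share no marker genes, yet every pathway is shared, the pattern described for the breast cancer metastasis example.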

Linking Gene-Expression Changes to Biologic Events

Bill Mattes, of Gene Logic,22 commented that it was necessary to understand gene-expression changes in an appropriate biologic context, particularly whether gene-expression changes are causative of a pathology or merely coincident with (or correlative to) it. It is also important to consider the time course, because gene-expression changes vary over time. Overall, when the goal is understanding the etiology of a biologic effect, the gene-expression changes examined should be those preceding, not coincident with, the biologic effect of interest. Joseph DeGeorge, of Merck Pharmaceuticals, also commented on the importance of understanding the biologic context of gene-expression changes in validation efforts. For instance, if a gene-expression signal is obtained from animal tests with no associated pathology, it is important to ask whether the change in mRNA expression is the first step toward pathology or just an adaptive response. It is also important to know whether the response is real, false, or an artifact of screening a multitude of genes, and whether an observed response would be relevant to human risk when extrapolating from animals to humans.
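One standard way to limit false signals that arise simply from screening thousands of genes is to control the false discovery rate. The Benjamini-Hochberg procedure is sketched below in NumPy as one example of such a correction; it was not named at the workshop, and the simulated p-values are for illustration only.

```python
import numpy as np

def benjamini_hochberg(pvals, q=0.05):
    """Return a boolean mask of hypotheses rejected at false discovery rate q."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)                          # indices from smallest p up
    thresholds = q * np.arange(1, m + 1) / m       # (rank / m) * q
    passes = p[order] <= thresholds
    reject = np.zeros(m, dtype=bool)
    if passes.any():
        k = np.flatnonzero(passes).max()           # largest qualifying rank
        reject[order[: k + 1]] = True              # reject it and all smaller p
    return reject

print(benjamini_hochberg([0.039, 0.001, 0.2, 0.008]))

rng = np.random.default_rng(0)
null_p = rng.uniform(size=10_000)  # 10,000 genes, none truly changed
print("unadjusted p < 0.05:", int((null_p < 0.05).sum()))
print("BH rejections at q = 0.05:", int(benjamini_hochberg(null_p).sum()))
```

With 10,000 genes and no true changes, roughly 500 pass an unadjusted 0.05 cutoff by chance alone, whereas the adjusted procedure usually reports none.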

Recognizing Differences in Biologic and Technical Validation

The importance of distinguishing between the very different needs of technical and biologic validation was also discussed by Kenneth S. Ramos. Many of the difficult concepts being addressed relate solely to biologic validation, whereas sufficient technical validation can be readily achieved using multiple compounds tested in a single animal strain under a single set of conditions. This would constitute the technical validation of a biomarker, even though the biology is not fully understood. For biologic validation, by contrast, the needs are quite different and the problems more substantial, as has been discussed.

Geoff Patton, of EPA, said that, in his personal opinion, scientists seeking biologic validation will not rely on one method such as microarray technologies to make statements about biologic mechanisms or pathways. It is not sufficient to auto-validate within the same technique; it is necessary to look for other confirmatory information. He also stated that microarray assays provide multiple opportunities for gaining biologic insight from transcriptomics. For example, insight can be developed by evaluating events upstream of gene expression (such as the transcriptional regulatory elements and transcription factors driving gene-expression results) to solidify the understanding of co-regulated genes. Insight can also be gained by analyzing downstream events, such as the relationship to pathologic markers or the break point between adaptation and frank toxicity. Patton commented that the current understanding of modes of action can be sufficient to manipulate biologic systems and to use other tools (such as proteomics or pathology) to demonstrate the validity of mechanistic or mode-of-action biomarkers. Thus, biologic validation will not be achieved with microarray technologies alone. To achieve it, a framework is needed that describes how to assemble information to carry out external validation of the pathway.

Impact of Individual, Species, and Environmental Variability on Microarray Results

Georgia Dunstan, of Howard University, emphasized the importance of genetic variation when considering toxicogenomic results and of not extrapolating beyond the reference (for example, species and conditions) of the original experiments and platforms. The contribution of genetic variation between model systems and between individuals in response to various stressors cannot be avoided.

Leigh Anderson, of the Plasma Proteome Institute, commented on the lack of microarray studies that characterize individual variation. For example, studies on the variation between inbred rat strains would be useful because understanding interindividual variation will be critical in choosing genes as stable biomarkers. However, although that research is relatively simple, it is not being done, which raises the question of how cross-species extrapolation can be discussed when the variation within a species has not been determined. This indicates that it is still early days for these technologies.

Cheryl Walker, of the M.D. Anderson Cancer Center, commented on the impact of environmental variability on the stability of genomic biomarkers. For instance, what happens to these biomarkers and signatures as conditions depart from a controlled light/dark cycle, diet, and nutritional status? Anderson replied that, at least in the field of proteomics, there is a known series of situations to be avoided (for example, animals undergoing sexual maturation and the use of proteins controlled by cage dominance). He commented that these types of variables and effects should be catalogued for microarray assays as well.

Hisham Hamadeh indicated that there was an ongoing effort at the International Life Sciences Institute (ILSI) to obtain data on control animals from several companies. The ILSI member companies use different strains, feeding regimens, and methodologies. This effort has obtained data from about 450 chips tested on liver samples. Although the analysis is not yet complete, the goal is to identify genes with very low variance, and the results could possibly be extrapolated to other tissues or even other species.
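The goal of identifying very-low-variance genes across control animals can be sketched as ranking genes by their standard deviation on a log2 scale. The data below are simulated (450 arrays to echo the figure quoted above, 1,000 genes with randomly assigned spreads), and the function name is hypothetical; the actual ILSI analysis method was not described at the workshop.

```python
import numpy as np

def most_stable_genes(log_expr, n=5):
    """Rank genes by their standard deviation across control arrays
    (rows = arrays, columns = genes, values on a log2 scale)."""
    sd = log_expr.std(axis=0, ddof=1)
    order = np.argsort(sd)
    return order[:n], sd[order[:n]]

rng = np.random.default_rng(0)
n_arrays, n_genes = 450, 1000
# Simulated control-animal data: each gene gets its own level and its own
# between-array spread (standing in for strain, diet, and lab effects).
levels = rng.uniform(6, 12, n_genes)
spread = rng.uniform(0.05, 1.0, n_genes)
log_expr = levels + rng.normal(size=(n_arrays, n_genes)) * spread

genes, sds = most_stable_genes(log_expr)
print("lowest-variance genes:", genes, np.round(sds, 3))
```

Working on the log2 scale makes a standard deviation comparable across genes expressed at very different levels; on raw intensities, a coefficient of variation would serve a similar purpose.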

Bill Mattes, of Gene Logic, responded that, in his experience, gene expression responds to these environmental variables in predictable ways. In fact, the wealth of information provided by microarray assays can allow researchers to find errors in study implementation, and he mentioned an experience in which perturbations in expression profiles indicated improper animal feeding or watering.

Sarah Gerould, of the U.S. Geological Survey (USGS), commented that Dunstan’s initial comments on cross-species differences were particularly important in ecotoxicology, which focuses on different kinds of fish, birds, and insects. Beyond the variety of organisms and their range of habitats, the organisms are exposed to multiple contaminants, increasing the potential difficulties in ecotoxicologic applications of these technologies.

Evaluating Low-Dose Effects of Chemicals

Jim Bus, of Dow Chemical, commented that most of the presentations during the workshop focused on screening pharmaceutical compounds in an overall attempt to avoid potential adverse outcomes as they enter the therapeutic environment. For the chemical manufacturing industry, however, the questions are of a different nature (although screening is a component) because, unlike pharmaceutical exposures, humans are not intentionally dosed, and the exposures are substantially lower. Therefore, toxicogenomic assays present an opportunity for biologic validation of effects, particularly at the low end of the dose-response curve, where conventional toxicologic animal tests are insufficient. Currently, low-dose effects are addressed by, for example, 10-fold uncertainty factors or a linearized no-threshold model, but these approaches are primarily policy choices rather than biologically driven. Bus referred to “real world” environmental exposures that are thousands of times lower than the doses assessed using conventional toxicologic models. In particular, he was interested in determining how toxicogenomics can help bridge the uncertainty associated with default uncertainty factors and models. These issues emphasize the need for biologic validation of these technologies and the potential for their application in the regulatory arena.
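The two default approaches Bus mentioned reduce to simple arithmetic, sketched below in Python. The NOAEL, point of departure, and exposure values are hypothetical, and the two 10-fold factors (animal-to-human extrapolation and human variability) are the conventional defaults rather than values discussed at the workshop.

```python
def reference_dose(noael, uf_interspecies=10.0, uf_intraspecies=10.0, other_uf=1.0):
    """Default-factor approach: divide a no-observed-adverse-effect level
    (mg/kg-day) by the product of the uncertainty factors."""
    return noael / (uf_interspecies * uf_intraspecies * other_uf)

def linear_extra_risk(dose, pod, risk_at_pod=0.10):
    """Linear no-threshold extrapolation: a straight line from the point of
    departure (the dose giving `risk_at_pod` extra risk) down to zero dose."""
    slope = risk_at_pod / pod
    return slope * dose

# Hypothetical numbers chosen only to show the arithmetic (mg/kg-day).
print(reference_dose(10.0))                 # 10 / (10 * 10)
print(linear_extra_risk(0.005, pod=5.0))    # (0.10 / 5) * 0.005
```

Neither calculation uses any information about biology below the tested doses, which is the gap Bus suggested toxicogenomic data might help fill.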

Federico Goodsaid, of FDA, brought up a related issue, commenting that conventional animal-based toxicology experiments use a limited number of samples and replicates, limiting the ability to see low-dose effects. Further, one of the most daunting tasks in using animal models to try to predict human safety issues is extrapolating from a limited sampling to what would happen with humans. In this effort, toxicogenomics perhaps represents a tool to go beyond what is currently available and to increase the power of the animal models and look at very low doses over a long period of time.
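Goodsaid's point about limited replicates can be quantified with a standard power approximation. The sketch uses a two-sided, two-sample z-test (a normal approximation; a t-test gives somewhat lower power at small sample sizes) and a hypothetical effect of half a standard deviation.

```python
from statistics import NormalDist

def two_group_power(n_per_group, effect_sd, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test to detect a mean
    difference of `effect_sd` standard deviations with n animals per group."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = effect_sd / (2 / n_per_group) ** 0.5
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)

for n in (5, 10, 20, 50, 100):
    print(n, round(two_group_power(n, effect_sd=0.5), 2))
```

Under these assumptions, five animals per group give roughly 12% power, and roughly 85 per group are needed to reach 90%, which illustrates why small low-dose effects are hard to see in conventional designs.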

Summary Statements and Discussion

Kenneth S. Ramos moderated a summary discussion, which he opened by asking whether participants were comfortable with the technical validation of microarray technologies and whether it was appropriate for the field to progress to focusing on biologic validation. As in the roundtable session, the ensuing discussion focused on issues surrounding biologic validation, and some participants revisited themes mentioned earlier, such as the need to define which mRNA expression changes do and do not constitute an adverse effect and the fact that genetic diversity will confound extrapolation between species and among humans. Several participants also commented on the current state of validation efforts. The themes that emerged in this discussion are presented here.

Validation Issues with Microarray Assays Are Not Novel

Several participants suggested that many of the validation issues raised throughout the day were not unique to toxicogenomic assays. Linda Greer, of the Natural Resources Defense Council, noted that in conventional animal bioassays, we often do not understand the biology underlying why, for example, animals may or may not get tumors; we understand neither the individual variation within an inbred animal strain nor how to make comparisons between species. Greer said she was actually relieved to hear so little dispute regarding technical validation of microarray technologies, because a common perception among nonspecialists is that the technology does not produce consistent results. She noted, however, that technical questions have been narrowed and addressed, as demonstrated by, for example, the aforementioned series of papers in Nature Methods.23 Also, the questions regarding biologic validation apply to many toxicology issues. In this regard, toxicogenomic assays are in the same state as many other conventional methodologies.

Rafael Irizarry responded to concerns that microarray assays rely heavily on "black box" mathematical preprocessing, in which complex electrical and optical data are converted to gene-expression levels. He noted that other technologies, for instance, reverse transcription polymerase chain reaction (RT-PCR) and functional magnetic resonance imaging (fMRI), also rely on mathematical algorithms or other preprocessing of raw data. A notable difference, however, is that these other technologies do not produce the volume of data that microarray technologies do, which may explain why the issue is rarely considered for them.
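As an illustration of the kind of preprocessing Irizarry described, the sketch below implements quantile normalization, one widely used step for making intensity distributions comparable across arrays. It is offered as a generic example under stated assumptions, not as the specific procedure discussed at the workshop: the input is assumed to be a matrix of background-corrected log2 intensities with probes as rows and arrays as columns, and the function name `quantile_normalize` and the data are hypothetical.

```python
import numpy as np


def quantile_normalize(intensities: np.ndarray) -> np.ndarray:
    """Quantile-normalize a (probes x arrays) matrix so that every array
    (column) ends up with the same empirical distribution."""
    # Rank of each value within its own array (0 = smallest).
    # Ties are broken arbitrarily; a production method would treat them more carefully.
    ranks = np.argsort(np.argsort(intensities, axis=0), axis=0)
    # Reference distribution: mean of the k-th smallest value across arrays.
    reference = np.sort(intensities, axis=0).mean(axis=1)
    # Replace each value by the reference value with the same rank.
    return reference[ranks]


# Hypothetical log2 intensities: 4 probes measured on 3 arrays.
raw = np.array([[5.0, 4.0, 3.0],
                [2.0, 1.0, 4.0],
                [3.0, 4.0, 6.0],
                [4.0, 2.0, 8.0]])
print(quantile_normalize(raw))
```

Each array in the output contains exactly the same set of values in a different order, so between-array differences in overall intensity distribution are removed while the ranking of probes within each array is preserved.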

Bill Mattes commented on the consistency of microarray results generated in different laboratories. He stated that microarrays are like other technically demanding technologies, in that not everyone is able to produce reliable data. For instance, inexperienced practitioners of histopathology via microscopy, which is considered a "gold standard" for detecting pathologic responses, will not produce reliable results. In this regard, Mattes suggested discussing the development of standards to qualify good practice. Irizarry also suggested that the scientific community use a type of internal validation of practitioners in which, for example, laboratories would periodically hybridize a universal standardized reference and submit the results for comparison with those of other laboratories.
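The proficiency exercise Irizarry suggested could be scored in many ways. One simple possibility, sketched below purely as an assumption, is to correlate each laboratory's expression profile for the shared reference with the median profile of all the other laboratories and flag any laboratory that falls below a cutoff. The function `score_labs`, the 0.9 threshold, and the simulated submissions are all hypothetical.

```python
import numpy as np


def score_labs(results: dict[str, np.ndarray], min_corr: float = 0.9):
    """Correlate each lab's profile for a shared reference sample with the
    median profile of all *other* labs; return (scores, flagged_labs)."""
    labs = list(results)
    matrix = np.vstack([results[lab] for lab in labs])  # labs x genes
    scores = {}
    for i, lab in enumerate(labs):
        others = np.delete(matrix, i, axis=0)    # leave this lab out
        consensus = np.median(others, axis=0)    # robust consensus profile
        scores[lab] = float(np.corrcoef(matrix[i], consensus)[0, 1])
    flagged = sorted(lab for lab, r in scores.items() if r < min_corr)
    return scores, flagged


# Simulated data: three labs track the same profile, one does not.
rng = np.random.default_rng(0)
truth = rng.normal(8.0, 2.0, size=500)
submissions = {
    "lab_A": truth + rng.normal(0.0, 0.2, size=500),
    "lab_B": truth + rng.normal(0.0, 0.2, size=500),
    "lab_C": truth + rng.normal(0.0, 0.2, size=500),
    "lab_D": rng.normal(8.0, 2.0, size=500),
}
scores, flagged = score_labs(submissions)
print(flagged)
```

Leaving each laboratory out of its own consensus keeps a poor submission from pulling the benchmark toward itself, and the median limits the influence of any single outlying laboratory on the others' scores.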

Validation in What Context: Technical, Biologic, or Regulatory

Leonard Schechtman, of FDA, asked which participants had used microarray technologies and whether they were confident that the technologies had been sufficiently validated and were ready for widespread use (or "prime time," as a few participants put it). Ramos suggested that, in this context, "prime time" was perhaps not the best term; the more relevant question was whether these technologies had undergone sufficient validation that they could be understood to generate reliable and reproducible results. Other participants also asserted that it was necessary to understand the context in which the term validation was being used.

Yvonne Dragan, of FDA, said that it has been shown that technical reproducibility can be achieved with microarray technologies within a laboratory, and that reproducibility across laboratories depends on each laboratory's proficiency with the technique; results can be replicated if the laboratories are proficient at the analyses. Whether reproducibility is possible across platforms depends on whether the platforms are measuring the same thing. For example, if two platforms' probe sets target different locations in the same gene, then different questions are being asked. Overall, therefore, the answer depends on the level at which validation is desired. Asking whether microarrays are capable of accurately measuring mRNA levels is one question. Biologic questions are different, however, and one has to ask whether this method is the right one to address the question being asked.

Kerry Dearfield, of USDA, commented that asking whether a technology was ready for prime time really meant asking whether it was ready to be accepted by the regulatory agencies, and in this regard, the microarray technologies were not quite there yet. Technical reproducibility, while important, does not specifically address the types of questions asked in the regulatory field, and accurately answering the biologic questions is essential. It will be necessary to tie expression changes directly to some type of adverse end point and thus be able to address questions of regulatory interest (for example, safety or efficacy). Another risk assessment application will be using toxicogenomics to examine whether effects can be seen earlier after dosing or at lower doses. Tough questions will remain. For example, if expression changes that can be associated with a pathologic effect are seen at low doses where that pathology has not been observed, how will that information be considered in the regulatory arena? Would regulations change based on expression changes? To progress, it is necessary to ensure that the technologies are technically solid and generate reproducible, believable information. That information then has to be linked to the biologic effects that people are concerned about. Technical and biologic validation need to be tied together in this way before the technologies are used in the regulatory arena to address public health concerns. To get to this point, the technology will need to go through some form of internationally recognized process in which, for example, performance measures for the technologies are specified, so that the agencies can use the generated information.

Carol Henry, of the American Chemistry Council, commented on the potential for a group of independent researchers to recommend principles and practices for the technical validation of these technologies, so that the field can advance to the point where the technologies can be used in public health and environmental regulatory settings. Developing these practices could help move the technologies into a formal process through which agencies might actually be able to accept them; at present, toxicogenomic submissions are being considered on a case-by-case basis.

Richard Canady, of the Office of Science and Technology,24 commented that one reason to go through a validation process or certification of practitioners is to improve practice overall and to reduce the odds that questionable data sets would become an accepted part of the weight of evidence for regulatory decisions (particularly when a decision rests almost entirely on those data). He also commented that it is important to recognize the value of data in supporting arguments about, for instance, dose-response extrapolation below the observable range, where biologic validation may not be possible. In these applications, data would be used in weight-of-evidence arguments to help researchers understand the biology. Although it is good to think about validation of assays and perhaps even certification of practitioners, it would be a mistake to close the door and categorically exclude information.

Goodsaid stated that efforts were under way at FDA to develop an efficient, standardized process for receiving genomic information and to minimize confusion regarding potential regulatory applications of the technologies.

Wrap-Up Discussion

To conclude the workshop, John Quackenbush assembled summary statements of the themes he heard emerge from the workshop discussions and projected them for the audience (see Box 6). The statements encapsulated the technical and biologic validation considerations addressed in the speakers' presentations and the discussion that followed. Discussion of the summary statements was brief, and the workshop was adjourned.

|

BOX 6 Summary Statements from John Quackenbush, Harvard University

|

REFERENCES

Alon, U., N. Barkai, D.A. Notterman, K. Gish, S. Ybarra, D. Mack, and A.J. Levine. 1999. Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proc. Natl. Acad. Sci. USA 96(12):6745-6750.

Benjamini, Y., and Y. Hochberg. 1995. Controlling the false discovery rate: A practical and powerful approach to multiple testing. J. Roy. Stat. Soc. B Met. 57(1):289-300.

Boser, B.E., I.M. Guyon, and V.N. Vapnik. 1992. A training algorithm for optimal margin classifiers. Pp. 144-152 in Proceedings of the Fifth Annual International Conference on Computational Learning Theory, 27-29 July 1992, Pittsburgh, PA. Pittsburgh, PA: ACM Press.

Brown, M.P., W.N. Grundy, D. Lin, N. Cristianini, C.W. Sugnet, T.S. Furey, M. Ares, Jr., and D. Haussler. 2000. Knowledge-based analysis of microarray gene expression data by using support vector machines. Proc. Natl. Acad. Sci. USA 97(1):262-267.

Deng, S., T.-M. Chu, Y.K. Truong, and R. Wolfinger. 2005. Statistical methods for gene expression analysis. Computational Methods for High-throughput Genetic Analysis: Expression Profiling. Chapter 5 in Bioinformatics, Vol. 7, Encyclopedia of Genetics, Genomics, Proteomics and Bioinformatics. Wiley [online]. Available: http://www.wiley.com/legacy/wileychi/ggpb/aims-scope.html [accessed April 6, 2006].

Eisen, M.B., P.T. Spellman, P.O. Brown, and D. Botstein. 1998. Cluster analysis and display of genome-wide expression patterns. Proc. Natl. Acad. Sci. USA 95(25):14863-14868.

Everitt, B. 1974. Cluster Analysis. London: Heinemann.

FDA (Food and Drug Administration). 2005. Guidance for Industry: Pharmacogenomic Data Submissions. U.S. Department of Health and Human Services, Food and Drug Administration, Center for Drug Evaluation and Research, Center for Biologics Evaluation and Research, Center for Devices and Radiological Health. March 2005 [online]. Available: http://www.fda.gov/cder/guidance/6400fnl.pdf [accessed April 10, 2006].

Furey, T.S., N. Cristianini, N. Duffy, D.W. Bednarski, M. Schummer, and D. Haussler. 2000. Support vector machine classification and validation of cancer tissue samples using microarray expression data. Bioinformatics 16(10):906-914.

Guyon, I., J. Weston, S. Barnhill, and V.N. Vapnik. 2002. Gene selection for cancer classification using support vector machines. Mach. Learn. 46(1-3):389-422.

Hamadeh, H.K., P.R. Bushel, S. Jayadev, K. Martin, O. DiSorbo, S. Sieber, L. Bennett, R. Tennant, R. Stoll, J.C. Barrett, K. Blanchard, R.S. Paules, and C.A. Afshari. 2002a. Gene expression analysis reveals chemical-specific profiles. Toxicol. Sci. 67(2):219-231.

Hamadeh, H.K., P.R. Bushel, S. Jayadev, O. DiSorbo, L. Bennett, L. Li, R. Tennant, R. Stoll, J.C. Barrett, R.S. Paules, K. Blanchard, and C.A. Afshari. 2002b. Prediction of compound signature using high density gene expression profiling. Toxicol. Sci. 67(2):232-240.

Hastie, T., R. Tibshirani, M.B. Eisen, A. Alizadeh, R. Levy, L. Staudt, W.C. Chan, D. Botstein, and P. Brown. 2000. “Gene shaving” as a method for identifying distinct sets of genes with similar expression patterns. Genome Biol. 1(2):RESEARCH0003.

Hossain, M.A., C.M.L. Bouton, J. Pevsner, and J. Laterra. 2000. Induction of vascular endothelial growth factor in human astrocytes by lead. Involvement of a protein kinase C/activator protein 1 complex-dependent and hypoxia-inducible factor 1-independent signaling pathway. J. Biol. Chem. 275(36):27874-27882.

Huang, Q., R.T. Dunn, II, S. Jayadev, O. DiSorbo, F.D. Pack, S.B. Farr, R.E. Stoll, and K.T. Blanchard. 2001. Assessment of cisplatin-induced nephrotoxicity by microarray technology. Toxicol. Sci. 63(2):196-207.

ICCVAM (Interagency Coordinating Committee on the Validation of Alternative Methods). 2003. Guidelines for Nomination and Submission of New, Revised, and Alternative Test Methods. NIH Publication No. 03-4508. U.S. Department of Health and Human Services, U.S. Public Health Service Research, National Institutes of Health, National Institute of Environmental Health Sciences, Research Triangle Park, NC. September 2003 [online]. Available: http://iccvam.niehs.nih.gov/docs/guidelines/subguide.htm [accessed April 10, 2006].

Iida, M., C.H. Anna, W.M. Holliday, J.B. Collins, M.L. Cunningham, R.C. Sills, and T.R. Devereux. 2005. Unique patterns of gene expression changes in liver after treatment of mice for 2 weeks with different known carcinogens and non-carcinogens. Carcinogenesis 26(3):689-699.

Irizarry, R.A., D. Warren, F. Spencer, I.F. Kim, S. Biswal, B.C. Frank, E. Gabrielson, J.G. Garcia, J. Geoghegan, G. Germino, C. Griffin, S.C. Hilmer, E. Hoffman, A.E. Jedlicka, E. Kawasaki, F. Martinez-Murillo, L. Morsberger, H. Lee, D. Petersen, J. Quackenbush, A. Scott, M. Wilson, Y. Yang, S.Q. Ye, and W. Yu. 2005. Multiple-laboratory comparison of microarray platforms. Nat. Methods 2(5):329-330.

Jolliffe, I.T. 1986. Principal Component Analysis. New York: Springer.

Kohonen, T. 1995. Self-Organizing Maps. Berlin: Springer.

Kramer, J.A., S.W. Curtiss, K.L. Kolaja, C.L. Alden, E.A. Blomme, W.C. Curtiss, J.C. Davila, C.J. Jackson, and R.T. Bunch. 2004. Acute molecular markers of rodent hepatic carcinogenesis identified by transcription profiling. Chem. Res. Toxicol. 17(4):463-470.

Lu, T., J. Liu, E.L. LeCluyse, Y.S. Zhou, M.L. Cheng, and M.P. Waalkes. 2001. Application of cDNA microarray to the study of arsenic-induced liver diseases in the population of Guizhou, China. Toxicol. Sci. 59(1):185-192.

Michel, C., R.A. Roberts, C. Desdouets, K.R. Isaacs, and E. Boitier. 2005. Characterization of an acute molecular marker of nongenotoxic rodent hepatocarcinogenesis by gene expression profiling in a long term clofibric acid study. Chem. Res. Toxicol. 18(4):611-618.

Nature Methods. 2005. May 2005, Volume 2, No. 5.

Schneider, J., and A.W. Moore. 1997. Cross validation. In A Locally Weighted Learning Tutorial Using Vizier 1.0. February 1, 1997 [online]. Available: http://www.cs.cmu.edu/~schneide/tut5/node42.html [accessed April 6, 2006].

Schölkopf, B., and A. Smola. 2002. Learning with Kernels. Cambridge, MA: MIT Press.