The chapters in Part II provide overviews of state-of-the-art methods of human-system integration (HSI) that can be used to inform and guide the design of person-machine systems using the incremental commitment model approach to system development. We have defined three general classes of methods that provide robust representation of multiple HSI concerns and are applicable at varying levels of effort throughout the development life cycle. These broad classes include methods to

- Define context of use. Methods for analyses that characterize early opportunities, early requirements, and the context of use, including the characteristics of users, their tasks, and the broader physical and organizational environment in which they operate, so as to build systems that effectively meet users’ needs and function smoothly in the broader physical and organizational context.
- Define requirements and design solutions. Methods to identify requirements and design alternatives to meet the requirements revealed by prior up-front analysis.
- Evaluate. Methods to evaluate the adequacy of proposed design solutions and propel further design innovation.

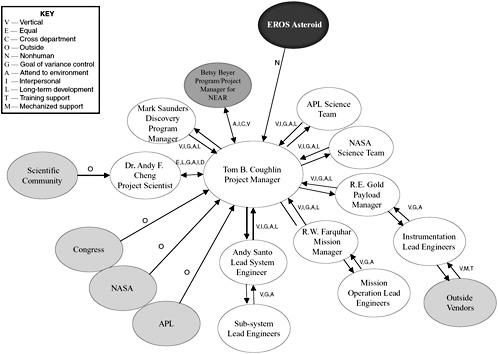

Figure II-1 presents a representative sampling of methods that fall into each activity category and the shared representations that are generated by these methods. A number of points are highlighted in the figure:

- The importance of involving domain practitioners—the individuals who will be using the system to achieve their goals in the target domain—as active partners throughout the design process.
- The importance of involving multidisciplinary design experts and other stakeholders to ensure that multiple perspectives are considered throughout the system design and evaluation process and that stakeholder commitment is achieved at each step.
- The availability of a broad range of methods in each class of activity. Appropriate methods can be selected and tailored to meet the specific needs and scope of the system development project.
- The range of shared representations that can be generated as output of each of the four HSI activities. These representations provide shared views that can be inspected and evaluated by the system stakeholders, including domain practitioners, who will be the target users of the system. The shared representations serve as evidence that can be used to inform risk-driven decision points in the incremental commitment development process.

FIGURE II-1 HSI activities, participants, methods, and shared representations.

We realize that the classification of methods for discussion in the three chapters that follow is to some extent arbitrary, as many of the methods are applied at several points in the system design process and thus logically could be presented in more than one chapter. The assignment of methods to classes and chapters is based on how the methods are most frequently used and where in the design process they make the greatest contribution. As already noted, the presentation of methods is not exhaustive. We have selected representative methods in each class, as well as some less well-known methods that have been used primarily in the private sector and that we think have applicability to military systems as well. Chapter 1 provides other sources of methods.

The committee further recognizes that many of the methods described (e.g., event data analysis methods, user evaluation studies) build on foundational methods derived from the behavioral sciences (e.g., experimental design methodology, survey design methods, psychological scaling techniques, statistics, qualitative research methods). These foundational methods are not explicitly covered in this report because they are well understood in the field, and textbooks that cover the topics are widely available (e.g., Charlton and O’Brien, 2002; Cook and Campbell, 1979; Coolican, 2004; Fowler, 2002; Yin, 2003). However, two categories of foundational methods that are not explicitly covered but deserve some discussion are briefly described below. Both of these method categories—function allocation and performance measurement—are integral to the application of other methods throughout the design process.

Function allocation is the assignment of functions to specific software or hardware modules or to human operators or users. For hardware and software, it is a decision about which functions are sufficiently similar in software requirements or interfunction communication to be grouped together for implementation. For assignment to human users versus software or hardware, it is a matter of evaluating the performance capacities and limitations of the users, the constraints imposed by the software and hardware, and the system requirements that call for human involvement for safety or policy reasons. There is general agreement that function allocation is, at base, a creative aspect of the overall design process that requires hypothesis generation, evaluation, and iteration. In our view, it spans the range of activities represented by the methodologies we are describing and does not, by itself, have particular methodologies associated with it. There have been attempts to systematize the process of function allocation (Price, 1985), but in our view they encompass the several parts of the design process discussed in this section and do not add new substantive information. Readers interested in the topic itself are referred to Price (1985) and to a special issue of the International Journal of Human-Computer Studies (2000) on collaboration, cooperation, and conflict in dialogue systems.

Performance measurement supports nearly every methodology applied to human-system integration. Stakeholders are interested in the quality of performance of the systems under development, and they would like to have predictions of that performance before the system is built. While they may be most interested in overall system performance (output per unit time, mean time to failure, probability of successful operation or mission, etc.), during development itself there is also a need for intermediate measures of the performance of individual system elements, because diagnosing the cause of faulty system performance requires more analytic measures at lower functional levels. From a systems engineering point of view, one may consider system-subsystem-module as the analysis breakdown; when one is concerned with human-system integration, however, the relevant decomposition of performance is goal-task-subtask, because measures of human performance are most meaningful and relevant when expressed in terms of task performance.

TABLE II-1 Types of Performance Measures

| Types of Performance Measures | Potential Uses |
|---|---|
| The values of parameters reflecting the various states of the system as a function of time | |

Table II-1 contains some examples of the kinds of measures that are likely to be of interest.

Since each situation is different, the analyst must consider the context of use under which measurement or prediction is to be undertaken, the goals of the measurement, the characteristics of the users who will be tested or about whom performance will be inferred, and the level of detail of analysis required in order to select specific measures to be used.

6

Defining Opportunities and Context of Use

In the past when new technologies were introduced, the focus was on what new capabilities the technology might bring to the situation. People then had to find ways to cope with integrating their actions across often disparate systems. Over time, as computational capability has increased and become more flexible, one has seen a shift in focus toward understanding what people need in given situations and then finding ways for technology to support their activities. In other words, people no longer need to adapt to the technology—the technology can be designed to do what people and the situation demand. The challenge is to understand human needs in dynamic contexts and respond with solutions that leverage the best of what technology has to offer and at the same time resonate with people’s natural abilities. The emphasis and risks have switched from the technology to the users.

This chapter introduces a range of methods that can be used to gain an understanding of users, their needs and goals, and the broader context in which they operate. The methods provide a rich toolbox to support two of the major classes of human-system integration (HSI) activities that feed into the incremental commitment model (ICM): defining opportunities and requirements and defining context of use. They include methods that focus on the capabilities, tasks, and activities of users (e.g., task analysis methods that characterize the tasks to be performed and their sequential flow, cognitive task analysis methods that define the knowledge and mental strategies that underlie task performance), as well as methods that examine the broader physical, social, and organizational context in which individuals operate (e.g., field observations and ethnography, contextual inquiry, analysis of organizational and environmental context).

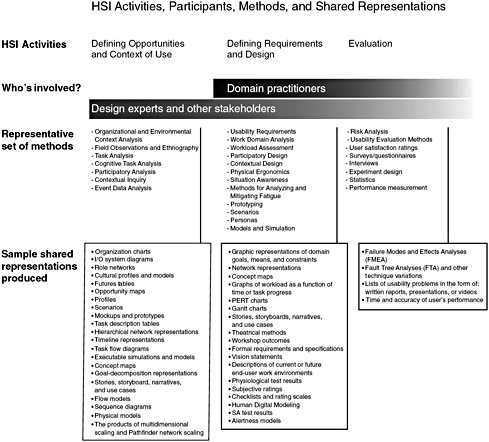

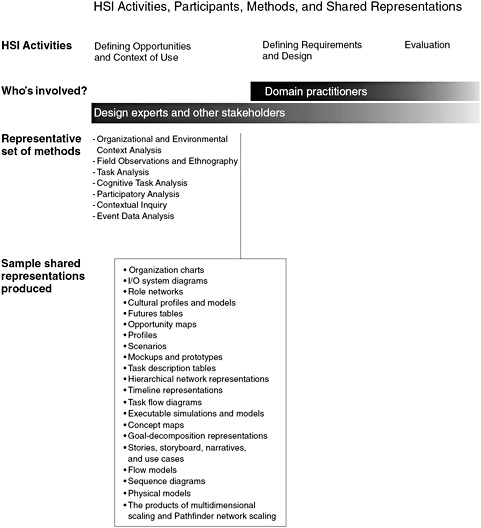

The chapter covers a variety of complementary approaches, ranging from participatory analysis methods, which include domain practitioners as active partners, to event data analysis methods, which offer the potential for more automated and less obtrusive ways of uncovering user activities and needs. The chapter also covers methods for capturing and communicating knowledge about users and the context of use in the form of compelling shared representations, including storyboards, scenarios, role networks, and input/output system diagrams. Figure 6-1 provides an overview. Each method is discussed in terms of use, shared representations, contribution to the system development process, and strengths and limitations.

The extent to which system requirements can be defined at the beginning of a project varies. When requirements are poorly defined, there may be unanticipated opportunities for new features or new applications of the system. Typically, there are more opportunities than resources to respond to them. It is therefore prudent to define the space of opportunities and then to evaluate those opportunities and choose the most promising ones.

In order to build systems that can support users and their tasks effectively, it is important to understand the broader context of use. This strategy can be particularly important if the system needs are poorly understood, or if a new system is to be designed and deployed into a domain in which there is no predecessor system. In these cases, it is easy to engage in a rush to judgment—that is, to design and deploy a system based on assumptions, rather than on the actual opportunities that old assumptions may not reveal. However, even for systems that will occupy a known niche, it is important to understand the context of use, because field conditions and work practices change, and old solutions may no longer fit the current realities.

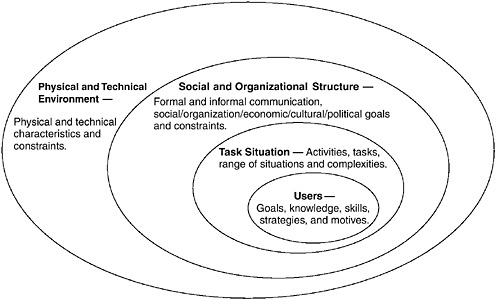

As illustrated in Figure 6-2, the context of use includes understanding the characteristics of the users, their motivations, goals, and strategies; the activities and tasks they perform and the range and complexity of situations that arise and need to be supported; the patterns of formal and informal communication and collaboration that occur and contribute to effective performance; and the broader physical, technical, organizational, and political environment in which the system will be integrated. Understanding the context of use is especially important as one moves toward more complex systems and systems of systems.

FIGURE 6-1 Representative set of methods and sample shared representations for defining opportunities and context of use.

FIGURE 6-2 Context of use encompasses consideration of the user, the task situation, the social and organizational structure within which activities take place, as well as the physical and technical environment that collectively provide opportunities and impose constraints on performance.

Context of use analysis methods are particularly important during the exploration phase of the incremental commitment model, when the focus is on understanding needs and envisioning opportunities. Among the promised benefits of leveraging context of use analyses to inform design are systems that are more likely to be successful when deployed because they address the specific problems facing users and are sensitive to the larger system context. Experience has shown that introduction of new technology does not necessarily guarantee improved human-machine system performance (Woods and Dekker, 2000; Kleiner, Drury, and Palepu, 1998) or the fulfillment of human needs (Muller et al., 1997b; Nardi, 1996; National Research Council, 1997; Rosson and Carroll, 2002; Shneiderman, 2002). Poor use of technology can result in systems that are difficult to learn or use, can create additional workload for system users, or, in the extreme, can result in systems that are more likely to lead to catastrophic errors (e.g., confusion that leads to pilot error and fatal aircraft accidents).

Context of use analysis methods can play an important role in mitigating the risks of these types of design failures by promoting a more complete understanding of needs and design challenges as part of the incremental commitment model. This more complete understanding can help avoid common design pitfalls, such as local optimizations, in which a focus on improving a single aspect of a system in isolation inadvertently degrades the overall system because of unanticipated side effects. It can also help manage “feature creep” (the proliferation of too many disjointed features in a single release) by integrating new ideas into a few powerful innovations. Thus, an important benefit of investing in context of use analysis is reduced risk exposure: it lowers both the risk that the design will fail to meet users’ needs and thus not be adopted and the risk that a design will be put in place that contributes to performance problems with costly economic or safety implications.

While we have focused on the value of context of use analyses during the early exploratory phases of the incremental commitment model, these methods continue to be relevant throughout the system development process, up to and including when systems are fielded. The context of use is constantly evolving, and introduction of new technology can produce operational and organizational changes, not all of which will have been anticipated ahead of time (Woods and Dekker, 2002; Patterson, Cook, and Render, 2002; Roth et al., in press). For example, as part of a recent power plant control room upgrade, computerized procedures were developed that integrated plant parameter information with the procedures so that the lead operator could work through the procedures without having to ask others for plant state information. This had the (anticipated) consequence of improving the lead operator’s situation awareness of plant state and the speed with which the procedures could be executed. However, it decreased the situation awareness of the other crew members (an unanticipated negative consequence) because the lead operator no longer needed to keep them as tightly in the loop. This was discovered during observational studies (O’Hara and Roth, 2005) conducted as part of the initial system introduction. As a consequence, crew operating philosophy and training were completely redefined so as to capitalize on the crew members’ freed-up mental resources (they could now provide an independent and diverse check on plant state), resulting in improved shared situation awareness of the entire team.

This example highlights the importance of continuing to monitor the context of use up to and beyond system introduction to establish that the intended benefits of new technologies are realized and that unintended side effects (e.g., new forms of error, new vulnerabilities to risk) are identified and mitigated. Analyses of context of use can be used to guide midcourse design corrections, as well as to lay the groundwork for next-generation system development.

ORGANIZATIONAL AND ENVIRONMENTAL CONTEXT

Overview

A guiding tenet of work-centered design approaches in human-system integration is that an understanding of the users’ characteristics (including their motivations, goals, and strategies) and of the context of work should be a central driver for the specification of the entire system design, not just the user interface. The advantage of a whole-systems approach is its recognition that an organization is itself a system and that some organizational designs support the organization’s mission and vision better than others (Lytle, 1998). Some context-oriented questions that drive design in a human-centered systems engineering approach include

- Who are the stakeholders, or interested parties, in the system?
- How can the voices of all of the stakeholders be heard?
- How can conflicts among stakeholders’ needs be resolved?
- What are the goals and constraints in the application domain?
- What social and interactive patterns occur in the domain of practice?
- What is the broader organizational/sociopolitical context in which the work is placed?

The success of human-system design and integration depends to a large extent on appropriate consideration of organizational, macroergonomic, and sociotechnical factors in a system-of-systems perspective. Macroergonomics, a subdiscipline of ergonomics, promotes an analysis of work systems at the level of subsystems or contributing factors (i.e., personnel, technological, organizational, environmental, and cultural factors and their interactions) before pursuing traditional microergonomic interventions. At the same time, success also depends on a continual focus on the needs of each stakeholder, as well as an openness to balance and rebalance the design and implementation trade-offs among stakeholder needs. In complex designs with many stakeholders, there may in fact be no global optimum, but rather a series of trade-offs that result in a system that delivers some value to each stakeholder group. It is also important to remember that, whereas requirements may become fixed, application domains seldom remain stable. As a result, any optimization may turn out to be short-lived, because the conditions that were considered in crafting it may themselves change.

Here, we focus on a brief introduction to analyzing the enterprise and the environment as relevant contexts for system development, using the sociotechnical systems perspective and focusing on four general methods with associated sources of data and shared representations (Table 6-1). A guiding assumption adapted from sociotechnical systems theory is that, in evaluating factors in the environment or organization, the analyst can identify variances between what is observed and what is desired, and those responsible for operational or process improvement should then minimize them (Emery and Trist, 1978). In this sense, a variance is an unexpected or unwanted deviation from a standard operating condition, specification, or norm (Emery and Trist, 1978).

TABLE 6-1 Organizational and Environmental Methods and Respective Sources of Data and Shared Representations

| General Method | Source of Data (input) | Shared Representation (output) |
|---|---|---|
| Organizational system scan | Authority and communication analysis | Organization charts; table of gaps (variances) |
| | Mission, vision, principle analysis; input/output analysis | Input/output system model |
| Role analysis | Gap-focused survey, focus groups, and/or interviews | Role network |
| Cultural analysis | Culture survey | Cultural profile |
| Stakeholder analysis | Gap-focused survey, focus groups, and/or interviews | Futures table |

Key variances are those that significantly affect system performance criteria, interact with several other variances, or both. Performance is broadly defined to include technical performance (e.g., efficiency, productivity) as well as social performance (e.g., safety, satisfaction). Typically, 10-20 percent of variances are considered key variances. The notion is similar to the distinction between special and common causes of variance in quality assurance. Special causes are the outliers (in a statistical sense) that should be managed first, in order to bring the system under control. Once outliers are managed, common or system causes of variance can be reduced to improve overall system performance.
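Treating special causes as statistical outliers can be made concrete with a control chart. The sketch below assumes one common quality-assurance choice, a Shewhart individuals (XmR) chart, whose limits are the mean plus or minus 2.66 times the average moving range; the weekly counts are hypothetical, and the chart type is our illustrative choice rather than a method prescribed by the sociotechnical approach.

```python
from statistics import mean


def xmr_limits(values):
    """Return (lower, upper) limits for an individuals (XmR) control chart.

    Limits are the mean plus or minus 2.66 times the average moving range,
    the conventional constant for moving ranges of two successive points.
    """
    if len(values) < 2:
        raise ValueError("need at least two observations")
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    centre = mean(values)
    spread = 2.66 * mean(moving_ranges)
    return centre - spread, centre + spread


def special_causes(values):
    """Indices of observations outside the limits: candidate special causes."""
    lower, upper = xmr_limits(values)
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical weekly counts of one variance, e.g. late maintenance work orders.
weekly_counts = [10, 11, 10, 12, 11, 10, 11, 30, 10, 11]
print(special_causes(weekly_counts))  # [7]
```

Week 7 would be investigated first as a special cause; only after such points are explained does it make sense to work on the common-cause spread of the remaining weeks.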

Shared Representations

The main purpose of this section is to illustrate that understanding, and to some extent evaluating, organizational context is a useful endeavor. The shared representations presented here were selected because they are likely to be appreciated by a wide and diverse audience and because they map to a sociotechnical systems approach to organizational context.

Organization Charts: A widely known but often misused or underused representation is the organization chart, which depicts lines of authority and communication in an organization. In theory, formal, informal, and normative depictions of an organization’s lines of authority and communication can be developed. The formal structure is the published chart. The informal chart represents the actual lines of authority and communication in the organization and relates to the informal organization. The normative structure is the theoretically best structure, given a number of considerations.
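Comparing the formal chart with the informal one amounts to comparing two sets of reporting links. A minimal sketch, assuming each chart is recorded as a set of hypothetical (superior, subordinate) pairs; the role names are invented for illustration:

```python
def chart_gaps(formal, informal):
    """Compare formal and informal charts given as sets of (superior, subordinate) links."""
    return {
        # Published lines of authority that are not used in practice.
        "formal_only": formal - informal,
        # Actual lines of authority that the published chart omits.
        "informal_only": informal - formal,
    }


# Hypothetical charts for a small project office.
formal = {("Director", "Ops Lead"), ("Director", "Eng Lead"), ("Eng Lead", "Analyst")}
informal = {("Director", "Ops Lead"), ("Ops Lead", "Analyst"), ("Eng Lead", "Analyst")}

gaps = chart_gaps(formal, informal)
print(sorted(gaps["formal_only"]))    # [('Director', 'Eng Lead')]
print(sorted(gaps["informal_only"]))  # [('Ops Lead', 'Analyst')]
```

Each link in either set is a candidate variance to discuss with stakeholders; choosing a normative structure is a separate step, made among the alternative organizational types.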

Regarding communication processes, various theories have been proposed in organizational research to explain the emergence, maintenance, and dissolution of communication networks (Monge and Contractor, 1999). Although a detailed presentation is beyond the scope of this report, these include theories of self-interest (social capital theory and transaction cost economics); mutual self-interest and collective action; exchange and dependency theories (social exchange, resource dependency, and network organizational forms); contagion theories (social information processing, social cognitive theory, institutional theory, structural theory of action); cognitive theories (semantic networks, knowledge structures, cognitive social structures, cognitive consistency); theories of homophily (social comparison theory, social identity theory); theories of proximity (physical and electronic propinquity); uncertainty reduction and contingency theories; social support theories; and evolutionary theories. As for a theoretical “best” structure, in practice the state of the art is to choose among a set of alternative types, each with its own strengths and weaknesses. These general types and their associated strengths and weaknesses are discussed below.

Table of Organizational Variances: Mission, vision, and principles represent the identity of an organization. Mission is the purpose of the organization, vision is the envisioned future, and principles are the values or underlying virtues that guide organizational behavior. As the contextual environment for a system, the organization portrays the identity it professes through its published mission, vision, and principles statements, and it also has an actual identity represented by the perceptions of organizational members and other stakeholders or observers. Thus, there is sometimes a difference between the organization’s preferred and actual profiles. A table can be constructed that highlights the gaps between the preferred and the actual mission, vision, and principles and that lists action items or interventions designed to decrease the gaps.
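The gap table lends itself to one record per mission, vision, or principle statement. The sketch below assumes that preferred and actual standing are both expressed as ratings on a 1-5 survey scale and that gaps below a threshold are set aside; the scale, threshold, statements, and field names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class IdentityItem:
    element: str      # "mission", "vision", or "principle"
    statement: str
    preferred: float  # hypothetical target rating on a 1-5 survey scale
    actual: float     # hypothetical mean rating from members and stakeholders
    actions: list = field(default_factory=list)  # interventions to close the gap

    @property
    def gap(self):
        return self.preferred - self.actual


def gap_table(items, min_gap=0.5):
    """Keep items whose gap is at least min_gap (hypothetical threshold), largest first."""
    rows = [item for item in items if item.gap >= min_gap]
    return sorted(rows, key=lambda item: item.gap, reverse=True)


# Hypothetical statements and ratings.
items = [
    IdentityItem("mission", "Deliver mission-ready systems", 5.0, 3.2,
                 ["Brief all staff on how each system supports the mission"]),
    IdentityItem("principle", "Safety first", 5.0, 4.8),
    IdentityItem("vision", "Be the partner of choice for users", 4.5, 3.5),
]
for row in gap_table(items):
    print(f"{row.element:<10} gap={row.gap:.1f}  actions={row.actions}")
```

Sorting by gap size puts the largest discrepancies between professed and perceived identity at the top, which is where action items are most likely to be needed.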

Input/Output System Diagram: An alternative to the organization chart is the system diagram or map, which represents the organization as an input-output model. Such depictions were popularized by Deming (2000), beginning in the reconstruction period following World War II. Rather than depicting who reports to whom, this representation illustrates what the organization does from a process perspective. Opportunities for improvement can then be identified in a focus group or through a survey. Also, since systems operate as input-output transformers, depicting the organization in such terms provides an opportunity to illustrate where the technical system fits in the organizational context. Finally, as described in Kleiner (1997), performance criteria and metrics can be mapped to these systems.

Role Network: A role network, based on a role analysis, is also a useful shared representation. A job within an organization is defined by the formal job description, which is a contract or agreement between the individual and the organization. This is not the same as a work role within the system, which consists of the actual behaviors of a person occupying a position or job in relation to other people. These role behaviors result from the actions and expectations of a number of people in a role set: the people who send expectations and reinforcement to the role occupant. Figure 6-3 is an example of a role network for the Near Earth Asteroid Rendezvous (NEAR), a project managed by the Applied Physics Lab for NASA.
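A role network can also be held as data: a focal role plus one link per member of its role set, carrying the frequency, importance, and direction of each interaction that the role analysis method (described later in this chapter) uses to draw the diagram. In the sketch below, the 1-5 importance rating, the frequency-times-importance weighting, and the length formula are assumptions chosen only so that stronger relationships get shorter lines; the role names are hypothetical and are not taken from the NEAR project.

```python
from dataclasses import dataclass

# Arrow notation for the diagram; "async" is drawn as two one-way arrows
# in opposite directions (asynchronous, different-time communication).
ARROWS = {"out": "-->", "in": "<--", "two_way": "<->", "async": "--> <--"}


@dataclass(frozen=True)
class Link:
    other_role: str
    frequency: int   # hypothetical: interactions per week
    importance: int  # hypothetical: analyst rating, 1 (low) to 5 (high)
    direction: str   # one of the keys of ARROWS


def line_length(link, max_length=10.0):
    """Assumed mapping: more frequent, more important relationships get shorter lines."""
    weight = link.frequency * link.importance
    return max_length / (1 + weight)


def describe_network(focal_role, links):
    """List links from closest to most distant, as they would be drawn."""
    ordered = sorted(links, key=line_length)
    return [
        f"{focal_role} {ARROWS[link.direction]} {link.other_role} "
        f"(length {line_length(link):.2f})"
        for link in ordered
    ]


# Hypothetical role set around a hypothetical focal role.
links = [
    Link("Sponsor liaison", frequency=1, importance=3, direction="async"),
    Link("Spacecraft engineer", frequency=10, importance=5, direction="two_way"),
    Link("Science team", frequency=2, importance=4, direction="out"),
]
for line in describe_network("Mission operations lead", links):
    print(line)
```

Keeping the network as data makes it easy to redraw as interaction patterns change, and to check whether each role that controls a key variance actually appears in the focal role's set.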

Cultural Profile: Shared representations related to culture include organizational culture and climate assessment tools. These typically take the form of the results produced by survey instruments. Schein (1993) describes culture at three levels: the artifacts (what is visible), espoused values (attributes that guide behavior), and basic underlying assumptions (deeply held beliefs).

Futures Table: An environmental scan is the major representation shared during environmental analysis. During a scan or analysis of the subenvironments, the key stakeholders are identified. Their expectations for the system are identified and evaluated and gaps are noted in a futures table. Conflicts and ambiguities are seen as opportunities for system or interface improvement. As with other variance or gap analyses, minimizing the variances is the objective.
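One step of stakeholder analysis, spotting where stakeholders' expectations for the system conflict, can be sketched by grouping expectations by system attribute. The stakeholders, attributes, and expectation values below are hypothetical, and treating any difference in stated values as a conflict is a simplifying assumption; an analyst would still need to judge ambiguities that a string comparison cannot detect.

```python
from collections import defaultdict


def futures_table(expectations):
    """Group expectations by attribute and mark attributes with conflicting views.

    expectations maps stakeholder -> {system attribute: expected value}.
    Returns rows of (attribute, {stakeholder: value}, status), sorted by attribute.
    """
    by_attribute = defaultdict(dict)
    for stakeholder, attributes in expectations.items():
        for attribute, value in attributes.items():
            by_attribute[attribute][stakeholder] = value

    rows = []
    for attribute, views in sorted(by_attribute.items()):
        # Simplifying assumption: differing stated values count as a conflict.
        status = "conflict" if len(set(views.values())) > 1 else "consistent"
        rows.append((attribute, views, status))
    return rows


# Hypothetical stakeholder expectations.
expectations = {
    "Operators": {"update cycle": "real time", "training time": "under 1 day"},
    "Program office": {"update cycle": "hourly", "cost": "within budget"},
    "Safety board": {"training time": "under 1 day"},
}
for attribute, views, status in futures_table(expectations):
    print(f"{attribute:<14} {status:<10} {views}")
```

Rows marked as conflicts correspond to the opportunities for system or interface improvement described above; the objective remains to minimize those variances.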

Uses of Methods

Consistent with the sociotechnical systems approach, we summarize the general methods associated with the presented shared representations. Detailed coverage of nested techniques, such as survey design and analysis or focus group management, is beyond the scope of this report.

Organizational System Scan

The purpose of designing an organizational structure is to create lines of authority and communication in an enterprise in support of a strategy. In the context of system development, these lines of authority and communication establish and define ownership and management of the system in question. This will ultimately serve as a major determinant of the level of system success. The organizational design is also the manner by which an organization distributes its purpose or mission throughout the enterprise. Ideally, a given system supports the mission or purpose of the organization, and the structure facilitates accomplishment of the mission. Also, all employees and users ought to understand the overall purpose of the enterprise, the contributing role of the system, and their personal role in achieving the purposes of the system and enterprise. If the organizational design is appropriate and effective, this is more likely to occur.

Three core dimensions of organizational design underlie all organizational structures and can be analyzed: complexity, formalization, and centralization (Hendrick and Kleiner, 2001). Complexity has two components, differentiation and integration. Differentiation refers to the segmentation of the organizational design. Integration refers to the coordinating mechanisms in an organization, which serve to tie together the various segments. Systems often have an integrative function associated with their purpose. An increase in integration is also believed to increase complexity and therefore cost. Formalization refers to the degree to which there are standard operating procedures, detailed job descriptions, and other systematic processes or controls in the organization. Centralization refers to the degree to which decision making is concentrated in a relatively small number of personnel.

The dimensions noted above manifest themselves in different organizational structures. The functional organizational design classifies workers into common technical specialization domains. This type of design works best in small to moderately sized enterprises (up to 250 employees) that have standardized practices and a stable external environment. Some advantages of the functional organizational design include professional identity, professional development, and the minimization of redundancy. The major weakness associated with this structure is suboptimization, a condition characterized by competition, coordination, and communication challenges laterally across units at the same level in the hierarchy.

The product or divisional organizational design organizes workers by product cluster. In many organizations, divisions characterize the clusters. At the system level, many complex systems are really “products” in a divisional organizational design. The product variation of the functional design attempts to minimize suboptimization. Instead of focusing on functions, which relate to the mission only indirectly, personnel are expected to identify more readily with a product or system, and therefore with the product’s customer. Another intended advantage of this design is that it allows profit centers to be developed and managed: each division (or system) can be operated as a business within the business. However, functions typically reappear within each product cluster or division. Thus, the functional units still exist, although at a lower level. Other variations, such as the geographic structure, have comparable strengths and weaknesses.

Since all of the previously mentioned alternatives are variations of the functional design, all share its major shortcoming: some suboptimization will occur. Enterprise designers therefore derived a new alternative, inspired mostly by a combination of the functional and product structures. The function × product matrix (or function × project matrix) attempts to integrate the best of the functional and product structures. Specifically, the benefits associated with professionalism and lack of redundancy are retained from the functional design, and from the product structure, a focus on the customer reduces the possibility of suboptimization.

The major flaws associated with the matrix structure are the potentially confused lines of authority and communication. For example, a complex military or aerospace system that is managed by a matrix structure could have a conflict between a safety manager (functional authority) and a project manager (project authority). Making a launch deadline at the expense of safety could be a dangerous result. One workaround for such a scenario is to determine a priori which axis has more authority or to specify clearly, by decision type, who has final authority.

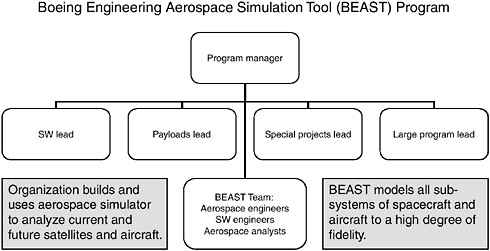

Figure 6-4 illustrates a product-focused matrix organization for developing BEAST, a high-fidelity aerospace simulation tool (Eichensehr, 2006). This organization creates both advantages and challenges for the group that manages the system. The fact that product area leads (software, payloads, special projects, and large programs) all depend on the same matrix of support engineers and programmers means that goals and efforts are more easily aligned. Pockets of team members function across projects and transfer results from the latest studies and the latest software techniques. The matrix allows superior communication and effective cohesiveness across the BEAST product team (Eichensehr, 2006).

System or organizational scanning involves evaluating the organization’s mission, values, history, current change activities, and business environment (Lytle, 1998). It involves defining the workplace in systems terms, including relevant boundaries. The enterprise’s mission is detailed in systems terms (i.e., inputs, outputs, processes, suppliers, customers, internal controls, and feedback mechanisms). The system scan also establishes initial boundaries of the work system. As described by Emery and Trist (1978), there are throughput, territorial, social, and time boundaries to consider. Entities outside the boundaries identified during the system scan are part of the external environment which is discussed below.

FIGURE 6-4 Example of organizational design (used with permission).

Role Analysis

Role analysis addresses who interacts with whom, about what, and how effective these relationships are. In a role network, the focal role (i.e., the role responsible for controlling key variances) is identified first. With the focal role at the center, other roles can be identified and placed on the diagram relative to it. Line length can be varied to reflect the frequency and importance of a given relationship or interaction, with a shorter line representing more frequent or closer interaction. Finally, arrows can be added to indicate the nature of the communication in the interaction. A one-way arrow indicates one-way communication, and a two-way arrow indicates two-way interaction. Two one-way arrows in opposite directions indicate asynchronous (different-time) communication patterns. Labels on the interactions show their content and are used to evaluate whether a set of functional relationships needed to meet functional requirements is present or absent. The labels indicate the goal of controlling variances and might be

-

adaptation to short-term fluctuations.

-

integration of activities to manage internal conflicts and promote smooth interactions among people and tasks.

-

long-term development of knowledge, skills, and motivation in workers.

The presence or absence of particular types of relationships is also identified:

-

vertical hierarchy,

-

equal or peer,

-

cross-boundary,

-

outside, and

-

nonhuman.

The relationships in the role network are then evaluated. Internal and external customers of roles can be interviewed or surveyed for their perceptions of role effectiveness as well.
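A role network can also be kept as a simple data structure alongside the diagram, which makes missing relationship types easy to spot. The Python sketch below is illustrative only: the role names, the 1-5 frequency and importance scales, and the rule that drawn line length is inversely proportional to frequency × importance are hypothetical choices, not part of the method as described. It records the direction of communication, the variance-control labels, and the relationship type of each interaction with the focal role.

```python
from dataclasses import dataclass, field
from enum import Enum


class Direction(Enum):
    ONE_WAY = "one-way"       # single one-way arrow
    TWO_WAY = "two-way"       # two-way arrow
    ASYNCHRONOUS = "async"    # two one-way arrows in opposite directions


class Relationship(Enum):
    VERTICAL = "vertical hierarchy"
    PEER = "equal or peer"
    CROSS_BOUNDARY = "cross-boundary"
    OUTSIDE = "outside"
    NONHUMAN = "nonhuman"


# Labels for the goal of controlling variances (from the list above).
GOAL_LABELS = {"adaptation", "integration", "development"}


@dataclass
class Interaction:
    other_role: str
    frequency: int     # hypothetical 1-5 scale
    importance: int    # hypothetical 1-5 scale
    direction: Direction
    relationship: Relationship
    goals: set = field(default_factory=set)

    def line_length(self, max_length: float = 10.0) -> float:
        # Assumed rule: shorter line = more frequent/important interaction.
        return max_length / (self.frequency * self.importance)


@dataclass
class RoleNetwork:
    focal_role: str
    interactions: list = field(default_factory=list)

    def add(self, interaction: Interaction) -> None:
        unknown = interaction.goals - GOAL_LABELS
        if unknown:
            raise ValueError(f"unknown goal labels: {unknown}")
        self.interactions.append(interaction)

    def missing_relationships(self) -> set:
        """Relationship types with no interaction in the network."""
        present = {i.relationship for i in self.interactions}
        return set(Relationship) - present


# Hypothetical example network.
net = RoleNetwork("shift supervisor")
net.add(Interaction("operator", 5, 4, Direction.TWO_WAY,
                    Relationship.VERTICAL, {"adaptation"}))
net.add(Interaction("maintenance crew", 2, 3, Direction.ASYNCHRONOUS,
                    Relationship.CROSS_BOUNDARY, {"integration"}))
print(net.interactions[0].line_length())  # 0.5
print(sorted(r.value for r in net.missing_relationships()))
# ['equal or peer', 'nonhuman', 'outside']
```

The missing relationship types are only a prompt for the evaluation step; whether an absent peer or outside relationship is a problem is still a judgment for the analyst and the roles' customers.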

Cultural Analysis

A cultural context is needed for effective system development. While this section cannot be exhaustive, we intend to convey the importance of this often-ignored area of system support. Organizational culture is related to the norms, beliefs, unwritten rules, and practices in an organization (Deal and Kennedy, 1982) and is an area of organization that has been significantly understudied (Schein, 1996). According to Schein (1993), organizational culture is a pattern of shared basic assumptions that a group has learned as it solved its problems of external adaptation and internal integration, and that has worked well enough to be considered valid and therefore to be taught to new members as the correct way to perceive, think, and feel in relation to those problems. The fundamental basis of culture has to do with underlying or foundational values. Culture is different from organizational climate: culture is much more permanent and pervasive, whereas climate is the temporary reaction to critical incidents and events.

Culture can theoretically be changed in several ways. First, major policy changes or launches of new systems can affect the culture of an enterprise, as change can be mandated by management. Sometimes, however, policy and strategic changes are invalid reactions to forces from the environment. Second, changing the behaviors of leaders can induce a culture change: leaders need to model expected behavior if they want enterprise members to modify their own behaviors and attitudes. Third, selection and training can help to change cultures. Finally, when appropriate, a comprehensive work system design change can, and often will, result in a culture change. Most large-scale system development launches should be conducted as part of a comprehensive work system design change. A work system design change supported by valid changes in policy, leadership, and training is likely to be the best approach to achieving the desired culture change and effective performance of a new or improved system.

Stakeholder Analysis

In addition to an enterprise context for systems, systems have environments that surround them and the enterprise of which they are a part. The environment is the source of resources (inputs) that are received by systems and enterprises, and it is the stakeholders in the environment that ultimately evaluate the success of the system or enterprise.

An external environment can be further divided into relevant subenvironments. Subenvironment categories typically encompass economic, cultural, technological, educational, political, and other factors. The system itself can be redesigned to align better with external expectations or, conversely, the system owners can attempt to change stakeholders’ expectations to be more consistent with system needs. According to the sociotechnical systems view, the response to variability will be, in part, a function of whether the system owners view the environment as a source of provocation or of inspiration (Pasmore, 1988). The gaps between system and environmental expectations are often gaps of perception, and communication interfaces need to be developed between subenvironment personnel and the system owners or operators.

Contributions to System Design Phases

As part of developing an understanding of the context of use, it is desirable to understand the organizational context, specifically in terms of organizational structure, roles, culture, and the environment. Thus, analyzing these contexts is most useful at the beginning stages of the system design process. Analysis, design, and implementation that fail to include the perspectives of stakeholders can often lead to systems that fail in functional, organizational, or economic terms. Work-centered approaches attempt to prevent these types of design failures by explicitly grounding the design in the broad context of the work relationship and work practices to be performed and the sociotechnical system in which it is placed. But attention to the organization and the environment does not stop once the system is designed. As Carayon (2006) indicates, the design of sociotechnical systems in collaboration with both the workers and the customers requires increasing attention not only to the design and implementation of systems, but also to the continuous adaptation and improvement of systems in collaboration with customers. Thus, an impact can be made during the design phase, implementation, and operation of sociotechnical systems (Carayon, 2006). Table 6-2 illustrates how variances can be identified and data established for analysis when evaluating gaps between managers’ and employees’ perceptions.

Strengths, Limitations, and Gaps

The enterprise and the environment will have a major impact on whether a system is successful in meeting its intended mission and is well received. Hendrick and Kleiner (2001) claim that the environment is four times more powerful than other subsystems as a determinant of success. By assessing the enterprise and environmental contexts in the initial design, the likelihood of a successful outcome will be enhanced. There are several frameworks that promote this type of contextual understanding. A challenge is that the entire context cannot be known, and thus it is difficult to decide how much contextual knowledge is enough.

TABLE 6-2 Examples of Role Variances

| | Key Function from Position Description | Perception by Employer | Perception by Employee | Variance: Degree | Variance: Intensity |
|---|---|---|---|---|---|
| 1 | Ensures that required resources are available for the program(s). | Will supervise cost engineers providing cost estimating and other services. | Only one staff in Northern VA, so doing hands-on cost engineering services (CES). | 3 | −3 |
| 2 | Manage one or more very complex programs or portions of larger programs having a lifetime value greater than $50 million. | Will concentrate on business development activities to grow divisional revenue. | Will do business development as necessary to grow CES staff. | 1 | +1 |
| 3 | Maintains relationships with customer to satisfy requirements and develop new or additional business opportunities. | Will perform as member of proposal teams for work pursued by other divisions and departments. | Will prepare proposals only for CES department. | 3 | −2 |
| 4 | Serves as primary customer contact for government agency or office. | Will attend available seminars, conferences, and engineering society meetings for networking. | Will keep networking activities reasonable so as not to interfere with operations. | 1 | +1 |
| 5 | Selects, trains, motivates, and disciplines key staff. | Will assist other program managers with project controls services. | Will assist and mentor other program managers as well as CES staff. | 1 | +2 |

FIELD OBSERVATIONS AND ETHNOGRAPHY

Overview

Ethnographic Principles

Ethnographic approaches can provide excellent in-depth reports on conditions of use in specific case studies or at specific locations or sites. These approaches are typically used to build an overall context of use for such specific, in-depth cases. It is therefore crucial to select a broad set of cases, so that the in-depth information provides a good range of what is going on in the domain of interest.

The practice of ethnography involves both a relatively obvious set of procedures and a subtler set of orientations and disciplines; without the latter, the procedures by themselves are unlikely to lead to good-quality data. Nardi (1997) notes that “one of the greatest strengths of ethnography is its flexible research design. The study takes shape as the work progresses” (p. 362). However, this flexibility may also present risks if the analyst does not know how to make the numerous choices that are always present in field research. More broadly, Blomberg et al. (2003) state, “The ethnographic method is not simply a toolbox of techniques, but a way of looking at a problem” (p. 967). Note that this stance is almost the exact opposite of that of participatory analysis and design (see below), which is typically a results-oriented set of methods and practices. We therefore begin with a set of principles of the ethnographic way of looking and then describe the practices. These principles are particularly important in defining an opportunity space.

The first principle is that ethnography is holistic, that is, it assumes that all aspects of the work domain are related to one another and that no single aspect can be studied in isolation from the others. As Nardi (1997) explains, these relationships may be complex, including not only similarity and convergence, but also tension, contradiction, and conflict. This principle of holism runs contrary to many experimental laboratory heuristics, which tend to control as many variables as possible, isolate a small number of variables of interest, and then manipulate those variables systematically. By contrast, ethnography may focus on an aspect of interest, but it remains open to discovering how that aspect is related to other aspects, variables, and influences. From the perspective of defining an opportunity space, this orientation can lead to new understandings and syntheses of diverse concepts that more traditional analytic approaches might consider only in isolation.

The second principle is that ethnography is descriptive, rather than evaluative. Nardi (1997) describes some uses of ethnography for evaluation, occurring relatively late in a product life cycle; however, she agrees with Blomberg et al. (2003) that the principal use of ethnography is early in the life cycle, when evaluation would be premature. It is also the case that ethnography is specialized toward nonjudgmental descriptions, and that there are much more powerful evaluative techniques available when those are needed (see Chapter 8). The purpose of taking a descriptive stance is strategic: ethnography avoids judgment in order to remain open to possibilities, and in order to integrate diverse aspects and concepts into a rich picture of the domain (see Monk and Howard, 1998).

The third principle is that ethnography focuses on, and privileges, the point of view of the people whose work or lifeways are being described (the “members,” as anthropologists term them). Most ethnographic reports are intended to take the reader into the mindset of the people who are described. Thus, an ethnographic report tends to require a subsequent step of translation or conversion into a set of engineering requirements. Again, this focus on the members’ perspectives is an advantage when the goal is to define an opportunity space.

Finally, the fourth principle is that ethnography is usually practiced in the members’ natural setting. In the field setting, the analyst can see things that have become so commonplace to the members that no one thinks to talk about them. There the analyst can hypothesize new relationships and can use the flexibility of ethnography to test those relationships by turning the analysis in a new direction.

Ethnographic Practices

Ethnography tends to focus on a small number of cases and to study those cases in depth and detail. It is therefore essential to manage the diversity in those cases, to use that diversity strategically, and to exercise caution in generalizing conclusions to cases that were not studied. There are several strategies and procedures for managing these sampling issues.

As discussed by Blomberg et al. (2003), Bernard (1995) proposed an influential set of disciplined approaches for choosing samples (study sites) for ethnography. In the most controlled of these, the quota approach, the team can determine which types of sites are most representative of their application domain and can obtain as many samples (specific sites) of each type as needed to satisfy their sampling requirements. The purposive approach is similar, except that the team cannot control the number of sites of each required type.

But sometimes a team cannot exercise even this much control of where or how they will collect their ethnographic data. In the convenience approach, teams improvise with whatever sites become available. One potential enhancement of this improvisational strategy is the snowball approach, in which each site helps to recruit other sites for the study.
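The difference between controlled and improvised site selection can be made concrete with a short sketch. Everything here is hypothetical: sites are plain dictionaries with a `type` field, quotas are per-type counts, and referrals are a mapping from each site to the sites it can help recruit. Quota selection stops filling a type once its quota is met and reports any shortfall; snowball selection follows referrals outward from a seed site, breadth first.

```python
from collections import deque


def quota_sample(candidates, quotas):
    """Select sites until each site type reaches its quota (quota approach)."""
    counts = {t: 0 for t in quotas}
    chosen = []
    for site in candidates:
        t = site["type"]
        if t in quotas and counts[t] < quotas[t]:
            chosen.append(site["name"])
            counts[t] += 1
    # Shortfalls show where the available sites could not fill a quota.
    unmet = {t: quotas[t] - counts[t] for t in quotas if counts[t] < quotas[t]}
    return chosen, unmet


def snowball_sample(seed, referrals, limit):
    """Recruit sites by following referrals from a seed site (snowball approach)."""
    chosen, queue, seen = [], deque([seed]), {seed}
    while queue and len(chosen) < limit:
        site = queue.popleft()
        chosen.append(site)
        for nxt in referrals.get(site, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return chosen


candidates = [
    {"name": "Hospital A", "type": "urban"},
    {"name": "Hospital B", "type": "urban"},
    {"name": "Clinic C", "type": "rural"},
    {"name": "Hospital D", "type": "urban"},
]
print(quota_sample(candidates, {"urban": 2, "rural": 2}))
# (['Hospital A', 'Hospital B', 'Clinic C'], {'rural': 1})

referrals = {"Site 1": ["Site 2", "Site 3"], "Site 2": ["Site 4"], "Site 3": ["Site 1"]}
print(snowball_sample("Site 1", referrals, limit=3))
# ['Site 1', 'Site 2', 'Site 3']
```

When the team cannot control how many sites of each type are available (the purposive case), the `unmet` report is the useful part: it records which types are underrepresented and therefore where conclusions should be generalized with the most caution.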

If the analyst cannot prespecify the sites for the study, then what happens to the discipline and systematic sampling advocated by Bernard (1995)? One approach that has gained a strong following is called grounded theory (Glaser and Strauss, 1967), which makes strategic use of the diversity among sites in an ongoing, hypothesis-testing manner. In grounded theory, the analyst begins with a general research question rather than a specific theory and hypothesis. Each site becomes an opportunity for theory creation and theory refinement concerning the research question, and part of the discipline of grounded theory is the hard work of revising the analyst’s theory after each site. Subsequent sites are chosen precisely because they provide a strong test of the current revision of the (evolving) theory.

For example, a military ethnographer might study a first site in a flat desert setting, perhaps drawing some tentative conclusions about how warfighters create defensive perimeters based on experiences at that site. However, the terrain is likely to influence those perimeters. More subtly, the terrain might also influence how warfighters construct those perimeters and might even influence the organizational dynamics of who orders the perimeter, who constructs it, and who depends on it (recall the principle of holism described above). Therefore, the analyst would strategically choose a different terrain for the second field site, perhaps a mountainous terrain. Observations at the second site would lead to a more refined theory. The more refined theory might lead to questions that contrast natural obstacles with human-made environments. The analyst might therefore choose a third site that is in a city. And so on.

In this way, grounded theory makes strategic use of the variability that is available in the world, and strives to maximize the variance in the factors of interest. Note that this subtle discipline is quite different from laboratory studies, in which the goal is usually to minimize and control variability. The maximization of variability naturally leads to more opportunities for insights, and thus can contribute powerfully to defining an opportunity space.
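The strategy of choosing each next site to stress the current theory can be caricatured as a greedy "maximum contrast" rule, replayed here on the desert, mountain, and city example. This is a deliberate simplification, not a procedure prescribed by grounded theory: the factor names, the site records, and the mismatch-count distance are all hypothetical, and in practice the choice rests on the analyst's judgment about which site will most strongly test the evolving theory. Note how the list of factors grows as the theory is refined.

```python
def contrast(site, studied, factors):
    """Smallest number of factors on which `site` differs from any studied site."""
    return min(sum(site[f] != prev[f] for f in factors) for prev in studied)


def next_site(candidates, studied, factors):
    """Pick the candidate most unlike every site already studied (first wins ties)."""
    return max(candidates, key=lambda s: contrast(s, studied, factors))


studied = [{"name": "Site 1", "terrain": "flat desert", "setting": "natural"}]
candidates = [
    {"name": "Site 2", "terrain": "flat desert", "setting": "built"},
    {"name": "Site 3", "terrain": "mountain", "setting": "natural"},
    {"name": "Site 4", "terrain": "urban", "setting": "built"},
]

# Round 1: the initial theory is framed in terms of terrain only.
factors = ["terrain"]
second = next_site(candidates, studied, factors)
print(second["name"])  # Site 3
studied.append(second)
candidates.remove(second)

# Round 2: the refined theory adds natural vs. human-made setting.
factors.append("setting")
third = next_site(candidates, studied, factors)
print(third["name"])  # Site 4
```

Unlike a laboratory design, which holds factors constant, this rule deliberately seeks the candidate that maximizes variation on the factors the current theory cares about.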

Within the bounds of the chosen sampling approach (e.g., quota, purposive, convenience, snowball, or grounded theory), ethnographic practices tend to take a small number of forms. The traditional practices are interviews and observations; however, within ethnography, each of these practices has some important details.

Interviews are typically open, that is, the analyst has a list of topics to ask about, but not a list of specific questions that must be answered before the conclusion of the interview. In contrast to conventional sociological or psychological surveys, ethnographic interviews are not intended to collect a data point on each of a number of preplanned and required dependent measures. Instead, the interview follows the principle of flexibility, aiming to record a rich and holistic description of the members’ perceptions of their work and world.

Observations are similarly open-ended. Ethnographers often describe observation practices along a range from observer-participant to participant-observer. The observer-participant attempts to be as unobtrusive as possible, simply recording as much data as he or she can. By contrast, the participant-observer attempts to join in the activities that she or he is observing and to learn about those activities “from the inside.” In some cases, ethnographers supplement the observer-participant approach with audio and video recording technologies (e.g., Blomberg et al., 1993). In other cases, ethnographers recruit members (the people being studied) to create their own recordings or diaries (Buur et al., 2000; Wasson, 2000). Most traditional ethnographic observations tend to be broad and holistic. When appropriate, however, the analyst may narrow this breadth to focus on a particular person, object, event, or activity.

Ethnographers emphasize that the practice of ethnography requires both (a) knowledge of specific practices and (b) a perspective and orientation that come from in-depth study, preferably in the form of an apprenticeship to a practicing ethnographer (Blomberg et al., 1993, 2003; Glaser and Strauss, 1967; Nardi, 1997). As noted above, the vital flexibility of ethnographic practice becomes a strength in the hands of an expert, but a danger in the improvisations of a novice. The practices are frequently valuable for any analyst; however, we encourage newcomers to these methods to seek advice and, if possible, working collaborations with more experienced practitioners.

Two recent trends are beginning to affect the well-established set of practices in ethnography. First, the Internet itself has become a new influence on culture and cultural practices. Unlike conventional face-to-face ethnographic practice, new practices are required to study people, work, play, collaboration, and spiritual practices on the Internet (e.g., Beaulieu, 2004; see also Hine, 2000; Miller and Slater, 2001; Olsson, 2000; Wittel, 2000). Because our report does not focus on Internet issues, we note this trend but do not describe it in detail.

Second, computer technology has enabled more powerful analytic methods through easy “coding” of field records or transcripts (annotating them with a hierarchy of terms and categories of observations) and easy sharing of coding schemes and coded materials. Some of these coding programs can also be used on multimedia field records (e.g., analog or digital video), extending the power and utility of those media for ethnographic record-keeping and presentation. Several commercial tools have become de facto standards in this area; see recommendations from the American Anthropological Association for details.¹
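The idea of coding field records against a hierarchy of categories can be sketched without reference to any particular tool. The coding scheme, transcript IDs, and excerpts below are hypothetical, and this is not how any specific commercial package stores its data; it only shows how codes attached to transcript segments roll up to their parent categories so that coded material can be counted and compared.

```python
from collections import Counter

# Hypothetical coding scheme: each code is a path in a category hierarchy.
SCHEME = {
    "communication/handoff",
    "communication/radio",
    "workaround/paper-notes",
    "workaround/shortcut",
}

# Each coded segment: (transcript id, excerpt, codes applied to it).
segments = [
    ("T1", "Writes figures on a sticky note before handoff",
     ["workaround/paper-notes", "communication/handoff"]),
    ("T1", "Skips confirmation screen when busy", ["workaround/shortcut"]),
    ("T2", "Calls the shift lead over the radio", ["communication/radio"]),
]


def rollup(segments, scheme):
    """Count code applications at every level of the hierarchy."""
    counts = Counter()
    for _tid, _text, codes in segments:
        for code in codes:
            if code not in scheme:
                raise ValueError(f"code not in scheme: {code}")
            parts = code.split("/")
            # Credit the code itself and each of its parent categories.
            for depth in range(1, len(parts) + 1):
                counts["/".join(parts[:depth])] += 1
    return counts


counts = rollup(segments, SCHEME)
print(counts["communication"], counts["workaround"], counts["workaround/shortcut"])
# 2 2 1
```

Keeping the scheme as an explicit set is what makes it shareable: a second analyst coding new transcripts is rejected if they use a code that is not in the agreed scheme.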

Contributions to the System Design Process

Strong claims have been made for the usefulness of ethnographic work to systems engineering and design (e.g., Hutchins, 1995). For an accessible survey of early success stories, see Hughes et al. (1995). For a more detailed set of accounts, see Button (1992), Luff et al. (2000), and Taylor (2001).

Nardi (1997) and Blomberg et al. (2003) provide detailed discussions of the role of ethnography in system development work. Nardi notes the variety of ways that an ethnographer continually brings users’ issues to the attention of the development team, both in early analysis and even as a proxy for users in early testing.

¹ For example, http://www.stanford.edu/~davidf/ethnography.html contains an updated list of free and commercial coding products, as a service of the American Anthropological Association; more generally, see http://www.aaanet.org/resinet.htm.

Blomberg and colleagues note that the interpretation of ethnographic results is a kind of analysis, carried out according to the principles reviewed above. They describe four types of potential contributions from this type of analysis to system development:

-

Propose, inform, enhance, and update the working models that developers use as they think about the end-users (see also Hughes et al., 1997).

-

Provide generative concepts to support innovation and creativity in developers’ efforts to define new solutions.

-

Provide a critical lens (elsewhere called a framework) with which to evaluate and prioritize feature ideas and solution alternatives.

-

Serve as a reference for development teams.

Shared Representations

Several types of intermediary products or shared representations may be used between ethnographers and their clients (e.g., systems engineers or developers):

-

Experience models are documents or visualizations that help software professionals to understand patterns of human behavior, thought, and communication.

-

Opportunity maps are analytic summaries of the relationship of multiple dimensions, such as human activities versus evolutionary changes in attitudes.

-

Profiles are similar to personas (discussed later in this chapter).

-

Scenarios, as discussed later in this chapter and in Chapter 7.

-

Mock-ups and prototypes, as discussed later in this chapter and in Chapter 7.

Strengths, Limitations, and Gaps

Much has been written about the difficulty of translating ethnographic insights into analytic requirements (e.g., Hughes et al., 1992). Crabtree and Rodden (2002) call this relationship a “perennial problem.” Ethnographic investigations tend to go into great depth in a small number of sites or cases and to restrict their interpretations to these local settings (Nardi, 1997); it is difficult to generalize reliably from these small samples to the larger risk-reduction questions of what features, functions, or technologies are needed across all relevant users and circumstances (see Potts and Hsi, 1997, for a powerful theoretical and practical analysis of ethnographers’ contextualism and engineers’ abstractionism; see also Hughes et al., 1997). Ethnographers often prefer to provide a wealth of detail, whereas systems professionals often desire a more reductionist summary (Crabtree and Rodden, 2002; Somerville et al., 2003).

One of the hallmarks of ethnography is its ability to change focus and direction when faced with new insights (Nardi, 1997), whereas systems engineering prefers a straightforward process model with known steps, milestones, and completion dates. Ethnographic investigations tend to privilege the perspective of the members (the people being described in the analysis) in rich qualitative terms, whereas systems engineering is often a matter of trading off one perspective against another through the use of common or intertranslatable metrics; these metrics are difficult to apply to a description that is couched entirely in the language of the users’ workplace and world (Crabtree et al., 2000).

Much progress has been made more recently in the integration of ethnography into systems engineering. Somerville et al. (2003) provide a thematic analysis and review of issues in this evolving interdisciplinary partnership. They, as well as Hughes et al. (1997), identified three dimensions of the users’ work in which ethnographers and systems engineers have been shown to make mutually beneficial knowledge exchanges: (1) distributed coordination, (2) plans and procedures, and (3) awareness of the work of others. Potts and Hsi (1997) used a similar strategy of identifying several key dimensions that can serve as conceptual landmarks for both ethnographers and engineers: (1) decomposition into goals, agents, and objects; (2) analysis of the relationships among goals, agents, and objects in terms of actions and responsibilities; and (3) a set of conceptual, literal, or historical test cases for system robustness, phrased in terms of obstacles and defenses. By contrast, Millen (2000) recommended that applied industrial ethnography be streamlined (in terms of both process and outcome) into a pragmatic set of practices called “rapid ethnography,” emphasizing (1) strategically constrained focus and scope, (2) selective work with a small number of key informants, (3) convergence of evidence using multiple field data techniques, and (4) collaborative analysis of qualitative data (for related approaches to collaborative analysis of field data, see Holtzblatt, 2003; Holtzblatt et al., 2004).

The wealth of detail that is available through ethnography is undeniable. The translation of that detail into a form that is useful to systems engineers and designers has been a difficult problem, but there are now both guidelines for how to make that translation and convincing success stories of the effectiveness of the translation in a diversity of system and product environments. In the language of Potts and Hsi (1997), there are now effective ways to bring powerful insights from the contextualism of ethnography into the powerful constructive environment of systems engineering and design.

TASK ANALYSIS

Overview

Suppose you were trying to design a new control room (e.g., for a process control plant or a ship command center). How would you know what displays and controls are needed and how they should be physically laid out? Or suppose you were designing a new web site for an organization (e.g., a corporation, a university, a government agency). How would you know what information to include on the web site and how to organize the information? One of the first things you would need to know is what tasks people will be performing using the system and how those tasks are performed, so that you could design the system and related supports (e.g., procedures, training manuals, tools).

Task analysis refers to any process that identifies and examines the tasks that are performed by users when they interact with systems. Kirwan and Ainsworth (1992) provide a comprehensive review of task analysis methods, covering 25 major task analysis techniques.

Typical task analysis methods are used to understand human-machine and human-human interactions by breaking down tasks or scenarios into component task steps or physical (and sometimes also mental) operations. The result is a detailed description of the sequential and simultaneous activities of a person (or multiple people) as they interact with a device or system to achieve specific objectives. In this section we focus on task analysis methods that are particularly suited for defining tasks and the behavioral sequence of activities necessary to accomplish the task. The next section, on cognitive task analysis, describes specific methods for uncovering and representing the knowledge and mental activities that underlie more cognitive performance (e.g., situation assessment, planning, decision making).

The general task analysis process involves identifying tasks to be analyzed, collecting task data, analyzing the results to produce a detailed task description, and then generating an external shared representation of the analyzed tasks. The output is a description that specifies the individual task steps required, the technology used in completing the task (controls, displays, etc.), and the sequence of the task steps involved.

One of the most commonly used task analysis methods is hierarchical task analysis (HTA). HTA involves breaking down the task under analysis into a nested hierarchy of goals, subgoals, plans, and specific (mental or physical) operations (Annett, 2005).

The first step involves data collection to understand the individual(s) performing the task, the equipment or components used to perform the task, and the substeps involved in performing the task, including the stimuli that trigger a task step and the required human response. A variety of methods can be used to collect these data, including

-

Observation of actual task performance.

-

Task walkthroughs or talkthroughs.

-

Verbal protocols.

-

Tabletop analyses of expected interaction given design descriptions.

-

Interviews with domain practitioners.

-

Surveys and questionnaires.

-

User-kept diaries and activity logs.

-

Automated records (e.g., computer logs of web searches, keystroke capture).

The analysis may be based on examination of video or audio recordings of task performance, detailed notes taken during task performance, or quantitative or qualitative summaries of task performance across individuals.

An interesting example is provided by Ritter, Freed, and Haskett (2005), who performed a task analysis to identify the range of tasks that users of a university department web site might want to accomplish. They used multiple converging techniques to identify these tasks, including analysis of existing web sites, review of web search engine logs to determine typical web site search queries, and interviews of a range of different types of users (e.g., current and prospective students, staff, parents, alumni).

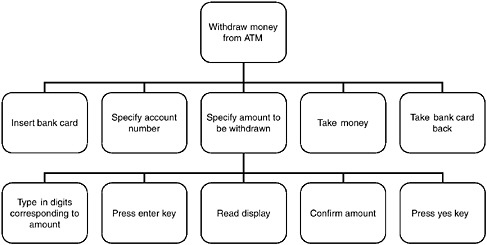

Once the data are collected, they are analyzed to provide a detailed description of the task steps. In the HTA method, the overall goal of the task under analysis is specified at the top of the hierarchy (e.g., start up plant, land aircraft, or withdraw money from an ATM). Once the overall task goal has been specified, the next step is to break it down into subgoals (usually four or five) that constitute subelements of the task. For example, in the case of withdrawing money from an ATM, the subgoals might be (1) insert bank card, (2) specify account to withdraw funds from, (3) specify amount to be withdrawn, (4) take money, and (5) take bank card back. The subgoals are then broken down into more detailed subgoals, until specific actions or operations are identified at the lowest nodes in the hierarchy. For example, in the case of withdrawing money, the lowest nodes may specify (1) type in digits corresponding to desired amount to be withdrawn, (2) press the enter key, (3) read display, (4) confirm that the amount displayed on the screen conforms to desired amount, and (5) press “yes” key.
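The ATM decomposition above can be held as a nested structure and printed with the conventional HTA numbering (0, 1, 1.1, and so on), with a plan recorded for each goal that has subgoals. This is a minimal sketch: placing the five lowest-level operations under subgoal 3 (specify amount) and the wording of the plans are assumptions for illustration, since the text lists the operations without assigning them to a subgoal.

```python
from dataclasses import dataclass, field


@dataclass
class Goal:
    name: str
    plan: str = ""                       # how the subgoals are sequenced
    subgoals: list = field(default_factory=list)


def outline(goal, number="0", depth=0):
    """Return HTA lines numbered 0, 1, 1.1, ... with plans where given."""
    lines = [f"{'  ' * depth}{number} {goal.name}"]
    if goal.plan:
        lines.append(f"{'  ' * (depth + 1)}Plan {number}: {goal.plan}")
    for i, sub in enumerate(goal.subgoals, start=1):
        child = str(i) if number == "0" else f"{number}.{i}"
        lines.extend(outline(sub, child, depth + 1))
    return lines


atm = Goal("Withdraw money from ATM", plan="do 1-5 in order", subgoals=[
    Goal("Insert bank card"),
    Goal("Specify account to withdraw funds from"),
    # Assumed placement of the lowest-level operations from the text.
    Goal("Specify amount to be withdrawn", plan="do 1-5 in order", subgoals=[
        Goal("Type in digits for desired amount"),
        Goal("Press enter key"),
        Goal("Read display"),
        Goal("Confirm displayed amount matches desired amount"),
        Goal("Press 'yes' key"),
    ]),
    Goal("Take money"),
    Goal("Take bank card back"),
])

print("\n".join(outline(atm)))
```

The printed outline is a textual counterpart to the graphic representation in Figure 6-5; plans for goals with conditional or repeated subgoals (e.g., "if PIN rejected, repeat 1") would be written into the `plan` strings in the same way.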

Another typical method is a sequential task flow analysis. A flow diagram representation is used to specify the sequence of steps that would be taken under different conditions. This approach was used in the intravenous infusion pump case study described in Chapter 5 (see Figure 5-5).
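To make the idea of condition-dependent sequences concrete without reproducing Figure 5-5, here is a minimal Python sketch of a task flow for the ATM example. The step names, the `sufficient_funds` condition, the insufficient-funds branch, and the `trace` helper are all hypothetical; each step names either a fixed successor or a rule that chooses one from the scenario's conditions.

```python
from typing import Callable, Dict, List, Optional, Union

# Scenario conditions, e.g. {"sufficient_funds": True}; names are hypothetical.
Conditions = Dict[str, bool]
# A successor is a fixed next step, a rule that picks the next step, or None (end).
Successor = Optional[Union[str, Callable[[Conditions], str]]]

ATM_FLOW: Dict[str, Successor] = {
    "Insert bank card": "Specify account",
    "Specify account": "Specify amount",
    # Decision point: the branch taken depends on the scenario's conditions.
    "Specify amount": lambda c: (
        "Take money" if c["sufficient_funds"] else "Read insufficient-funds message"
    ),
    "Read insufficient-funds message": "Take bank card back",
    "Take money": "Take bank card back",
    "Take bank card back": None,  # end of the flow
}


def trace(flow: Dict[str, Successor], start: str,
          conditions: Conditions, max_steps: int = 50) -> List[str]:
    """Walk the flow from `start` and return the sequence of steps taken."""
    path: List[str] = []
    step: Optional[str] = start
    while step is not None:
        if len(path) >= max_steps:
            raise RuntimeError("flow did not terminate; check for cycles")
        path.append(step)
        successor = flow[step]
        # Evaluate decision rules; plain strings and None pass through unchanged.
        step = successor(conditions) if callable(successor) else successor
    return path


for funds in (True, False):
    print(funds, " -> ".join(trace(ATM_FLOW, "Insert bank card", {"sufficient_funds": funds})))
```

Tracing the same flow under different conditions enumerates the step sequences a flow diagram is meant to expose; the `max_steps` guard simply stops flows that loop back on themselves.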

In the Symbiq™ IV Pump case study, task analysis and contextual inquiry were carried out through multiple nurse-shadowing visits to the most important clinical care areas in several representative hospitals. An important outcome of the task analysis was that it provided the foundation for the use-error risk analysis, in the form of a failure modes and effects analysis (FMEA) (see Table 5-3 for an example). Each task statement from the task analysis became a row in the FMEA of possible use errors and their consequences.
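The step from task statements to FMEA rows can be sketched as follows. This is a minimal Python sketch assuming a hypothetical `FmeaRow` record and `rank_by_risk` helper; the rating columns and the severity × occurrence × detection risk priority number (RPN) are common FMEA conventions assumed here, not details taken from the Symbiq case study or Table 5-3, and the rows themselves are invented for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FmeaRow:
    task: str         # task statement carried over from the task analysis
    use_error: str    # a possible use error while performing that task
    consequence: str  # effect of the error if it is not caught
    severity: int     # assumed 1-10 scale (10 = most severe)
    occurrence: int   # assumed 1-10 scale (10 = most likely)
    detection: int    # assumed 1-10 scale (10 = least likely to be caught)

    @property
    def rpn(self) -> int:
        # Risk priority number, a common FMEA convention (assumed, not from the case study).
        return self.severity * self.occurrence * self.detection


def rank_by_risk(rows: List[FmeaRow]) -> List[FmeaRow]:
    """Order rows so the highest-priority use errors come first."""
    return sorted(rows, key=lambda r: r.rpn, reverse=True)


# Hypothetical rows; task statements, errors, and ratings are illustrative only.
rows = [
    FmeaRow("Program infusion rate", "Misplaces decimal point",
            "Tenfold over- or under-infusion", 9, 3, 5),
    FmeaRow("Load administration set", "Set not fully seated",
            "Delayed or interrupted therapy", 8, 2, 4),
    FmeaRow("Confirm programmed settings", "Confirms without reading display",
            "Programming error goes unnoticed", 7, 4, 6),
]

for row in rank_by_risk(rows):
    print(f"{row.rpn:4d}  {row.task}: {row.use_error} -> {row.consequence}")
```

Keeping the task statement as the first column preserves the traceability the case study describes: every use error in the table can be followed back to a step in the task analysis.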

Shared Representations

A variety of shared representations are used to depict the output of the task analysis. These can take the form of graphical, tabular, or text descriptions (Stanton et al., 2005b; Kirwan and Ainsworth, 1992). Examples include task description tables, hierarchical network representations, timeline representations, and task flow diagrams. Figure 6-5 shows an example of a graphic representation of the output of a hierarchical task analysis for the simple example of an ATM withdrawal of money. Typically the graphic representation would be supplemented with text providing contextual background and details.

Examples of a task flow diagram and a task description table are provided in the risk analysis section of Chapter 8. The outputs of task analyses can also be expressed as executable simulation models (see models and simulation in Chapter 7).

FIGURE 6-5 An example of graphic representation of a portion of a hierarchical task analysis of the “withdraw money from ATM” task. The graphic would be accompanied by textual descriptions that provide contextual background and task details.

Uses of Methods

Task analysis methods are widely used to provide a step-by-step description of the activity under analysis. They provide a basis for assessing characteristics of a task, including the number and complexity of task steps, sequential dependencies among task steps, the temporal characteristics of the task (e.g., mean and distribution of time durations for each step and for the task as a whole), the physical and mental task requirements, equipment requirements, mental and physical workload, and potential for error.
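As an illustration of the temporal characteristics mentioned above, the following minimal Python sketch summarizes step durations and whole-task time. The durations are hypothetical, and the sketch assumes each user performs the steps strictly in sequence with no overlap or pauses, so that a user's task time is the sum of that user's step times.

```python
import statistics
from typing import Dict, List

# Hypothetical observed durations (seconds) for four users; position i in each
# list belongs to the same user, so per-user totals can be formed.
observations: Dict[str, List[float]] = {
    "Insert bank card":    [3.1, 2.8, 4.0, 3.5],
    "Specify account":     [4.2, 5.0, 3.9, 6.1],
    "Specify amount":      [8.5, 7.9, 12.3, 9.1],
    "Take money":          [2.0, 2.4, 1.9, 2.6],
    "Take bank card back": [1.5, 1.8, 1.6, 2.2],
}


def summarize(durations: List[float]) -> Dict[str, float]:
    """Mean, sample standard deviation, and range of a set of durations."""
    return {
        "mean": statistics.mean(durations),
        "sd": statistics.stdev(durations),
        "min": min(durations),
        "max": max(durations),
    }


step_stats = {step: summarize(times) for step, times in observations.items()}

# Assumes strictly sequential steps, so each user's task time is the sum
# of that user's step times.
task_totals = [sum(per_user) for per_user in zip(*observations.values())]
task_stats = summarize(task_totals)

for step, s in step_stats.items():
    print(f"{step:20s} mean {s['mean']:5.2f}s  sd {s['sd']:4.2f}s")
print(f"{'Whole task':20s} mean {task_stats['mean']:5.2f}s  sd {task_stats['sd']:4.2f}s")
```

Under the sequential assumption the mean task time equals the sum of the step means, while the spread of task times reflects how step times vary together across users; steps with the largest means or spreads are natural candidates for closer design attention.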

Contributions to System Design Phases

The results of a task analysis are relevant to multiple phases in the system design process. They are used to inform

-

function allocation.

-

staffing and job organization.

-

task and interface design and evaluation.

-

procedures development.

-

training requirements specification.

-

physical and mental workload assessment.

-

human reliability assessment (i.e., error prediction and analysis).

Strengths, Limitations, and Gaps

Task analysis is one of the most useful and flexible tools available for analyzing and documenting the sequential aspects of task performance. It requires minimal training and is easy to implement. Tasks can be analyzed at different levels of detail, and the output feeds numerous human factors analyses throughout the system development process.

One of the major strengths of task analyses is that they identify when, how, and with what priority information will be needed to perform the tasks that have been analyzed. As such, they provide a powerful tool for creating displays, procedures, and training that support individuals in performing tasks in the range of situations that have been anticipated and analyzed. They help to reduce the risk of device or task mismatches.

A commonly cited disadvantage is that data collection and analysis can be resource intensive when performed thoroughly. Detailed task analyses can be particularly time-consuming to conduct for large, complex tasks (Stanton et al., 2005b). The resource commitment can be amortized if the results of a single task analysis are leveraged to feed into multiple HSI design and analysis activities (e.g., job design, procedure development, training development, human reliability analysis).

Another limitation of sequential task analysis approaches noted by Miller and Vicente (1999) is that they are prone to produce “compiled” procedural knowledge of the steps involved in performing a task, without explicit representation of the deeper rationale for why the task steps work and how they may need to be adapted to cover situations that were not explicitly analyzed. The results of the task analysis may therefore be narrowly applicable to the specific scenarios analyzed. As a consequence, displays, procedures, and training that rely exclusively on the results of the task analysis may be brittle in the face of unforeseen contingencies (Miller and Vicente, 1999). Work domain analysis, described in Chapter 7, provides a complementary analysis technique intended to compensate for the potential limitations of task analysis methods.

COGNITIVE TASK ANALYSIS

Overview

Traditional task analysis approaches break tasks down into a series of external, observable behaviors. For tasks that involve few decision-making requirements (e.g., assembly line jobs, or interacting with consumer products that are expected to be easy to operate, such as ATMs), traditional task analysis methods work well. However, many critical jobs (e.g., air traffic control, military command and control, intelligence analysis, electronics troubleshooting, emergency response) involve complex knowledge and cognitive activities that are not observable and cannot be adequately characterized in terms of sequences of task elements. Examples of cognitive activities include monitoring, situation assessment, planning, deciding, anticipating, and prioritizing. Cognitive task analysis (CTA) methods have emerged that are specifically tailored to uncovering the knowledge and cognitive activities that underlie complex performance. CTA methods provide a means to explicitly identify the requirements of cognitive work so as to anticipate contributors to performance problems (e.g., sources of high workload, contributors to error) and to specify ways to improve individual and team performance (through new forms of training, user interfaces, or decision aids).

CTA methods provide knowledge acquisition techniques for collecting data on the knowledge and strategies that underlie performance as well as methods for analyzing and representing the results. A variety of specific techniques for knowledge acquisition have been developed that draw on basic principles and methods of cognitive psychology (Ericsson and Simon, 1993; Hoffman, 1987; Potter et al., 2000; Cooke, 1994; Roth and Patterson, 2005). These include structured interview techniques, such as applied cognitive task analysis (Militello and Hutton, 2000) and goal-directed task analysis (Endsley, Bolte, and Jones, 2003); critical incident analysis methods that investigate actual incidents that have occurred in the past (Flanagan, 1954; Klein, Calderwood, and MacGregor, 1989; Dekker, 2002); cognitive field observation studies that examine performance in actual environments or in high-fidelity simulators (Woods, 1993; Roth and Patterson, 2005; Woods and Hollnagel, 2006, Ch. 5); “think-aloud” protocol analysis methods in which domain practitioners are asked to think aloud as they solve actual or simulated problems (e.g., Gray and Kirschenbaum, 2000); and simulated task methods in which domain practitioners are observed as they solve analog problems under controlled conditions (Patterson, Roth, and Woods, 2001).

Schraagen, Chipman, and Shalin (2000) provide a broad survey of different CTA approaches. Crandall, Klein, and Hoffman (2006) provide an excellent how-to handbook with detailed practical guidance on how to perform a cognitive task analysis. Comprehensive catalogues of CTA methods and additional guidance can also be found on two active web sites: http://www.ctaresource.com, maintained by Aptima, Inc., and http://www.mentalmodels.mitre.org/, maintained by MITRE Corp.

Representative Methods

One of the most powerful means of uncovering the cognitive demands inherent in a domain, and the knowledge and skills that enable experts to cope with its complexities, is to study actual incidents that have occurred in the past to understand what made them challenging and why the individuals who confronted the situation succeeded or failed (Flanagan, 1954; Dekker, 2002). The critical decision method (CDM) is a structured interview technique that uses this approach (Klein, Calderwood, and MacGregor, 1989; Hoffman, Crandall, and Shadbolt, 1998; Klein and Armstrong, 2005). It is one of the most widely used CTA methods. The CDM approach involves asking domain experts to describe past challenging incidents in which they have participated.

A CDM session includes four interview phases or “sweeps” that examine the incident in successively greater detail. The first sweep identifies a complex incident that has the potential to uncover cognitive and collaborative demands of the domain and the basis of domain expertise. In the second sweep, a detailed incident timeline is developed that shows the sequence of events. The third sweep examines key decision points more deeply using a set of probe questions (e.g., What were you noticing at that point? What was it about the situation that let you know what was going to happen? What were your overriding concerns at that point?). Finally, the fourth sweep uses what-if queries to elicit potential expert-novice differences (e.g., Might someone else, perhaps with less experience, have responded differently?). The output is a description of the subtle cues, knowledge, goals, expectancies, and expert strategies that domain experts use to handle cognitively challenging situations.

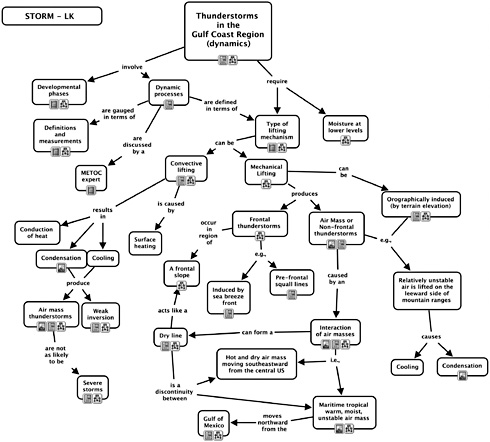

Concept mapping is another interview technique that has been used to uncover and document the knowledge and strategies that underlie expertise (Crandall, Klein, and Hoffman, 2005). In this kind of knowledge elicitation, the CTA analyst helps domain practitioners build up a representation of their domain knowledge using concept maps. Concept maps are directed graphs made up of concept nodes connected by labeled links. They are used to capture the content and structure of domain knowledge that experts employ in problem solving and decision making. Concept mapping sessions are typically conducted in groups that include domain experts (e.g., three to five) and two facilitators. One facilitator provides support in the form of suggestions and probe questions, while the second facilitator creates the concept map based on the participants’ comments for all to review and modify. The output is a graphic representation of expert domain knowledge that can be used as input to the design of training or decision aids. Figure 6-6 is an example of a concept map that depicts a meteorology expert’s knowledge of cold fronts in Gulf Coast weather.
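Because a concept map is a directed graph with labeled links, its content can be stored as a simple adjacency list of concept-link-concept propositions. The following minimal Python sketch uses a hypothetical `ConceptMap` class; it is not the CmapTools data format, and the meteorology fragment is a generic illustration rather than the content of Figure 6-6.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple


class ConceptMap:
    """A directed graph of concept nodes joined by labeled links."""

    def __init__(self) -> None:
        # concept -> list of (link label, target concept)
        self.links: DefaultDict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add(self, source: str, label: str, target: str) -> None:
        """Record one proposition: source --label--> target."""
        self.links[source].append((label, target))

    def propositions(self) -> List[str]:
        """Read each link back as a concept-link-concept statement."""
        return [f"{src} {label} {tgt}"
                for src, outgoing in self.links.items()
                for label, tgt in outgoing]

    def targets(self, concept: str) -> List[str]:
        """Concepts directly linked from `concept` (empty if none)."""
        return [tgt for _, tgt in self.links.get(concept, [])]


# Illustrative fragment only; not the content of Figure 6-6.
cmap = ConceptMap()
cmap.add("Cold fronts", "are the leading edge of", "cooler air masses")
cmap.add("Cold fronts", "can trigger", "thunderstorms")
cmap.add("Cold front passage", "is followed by", "falling temperatures")
cmap.add("Cold front passage", "is followed by", "a wind shift")

for p in cmap.propositions():
    print(p)
```

Reading each link back as a sentence mirrors how participants in a concept mapping session review the map: every proposition should be a statement the experts agree is true of their domain.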

Cognitive task analysis techniques have been developed to explore how changes in technology and training are likely to impact practitioner skills, strategies, and performance vulnerabilities. The introduction of new technologies can often have unanticipated effects. This has been referred to as the “envisioned world” problem (Woods and Dekker, 2000). New, unanticipated complexities can arise that create new sources of workload, problem-solving challenges, and coordination requirements. In turn, individuals in the system will adapt, exploiting the new power provided by the technology in unanticipated ways, and creating clever work-arounds to cope with technology limitations, so as to meet the needs of the work and human purposes.

CTA techniques for exploring how people are likely to adapt to the envisioned world include using concrete scenarios or simulations to reproduce the cognitive demands that are likely to be confronted. Woods and Hollnagel (2006) refer to these methods as “staged world” techniques. One example is a study that used a high-fidelity training simulator to explore how new computerized procedures and advanced alarms were likely to affect the strategies used by nuclear power plant crews to coordinate activities and maintain shared situation awareness (Roth and Patterson, 2005). Another example is a study by Dekker and Woods (1999) that used a future incident technique to explore the potential impact of contemplated future air traffic management architectures on the cognitive demands placed on domain practitioners. Controllers, pilots, and dispatchers were presented with a series of future incidents to resolve jointly. Examination of their problem solving and decision making revealed dilemmas, trade-offs, and points of vulnerability in the contemplated architectures, enabling practitioners and developers to think critically about the requirements for effective performance in these envisioned systems.

FIGURE 6-6 An example of a concept map that represents expert knowledge of the role of cold fronts in the Gulf Coast. It was created using a software suite called CmapTools. Icons below the nodes provide hyperlinks to other resources (e.g., other Cmaps, digital images of radar and satellite pictures, and digital videos of experts). CmapTools was developed at the Institute for Human and Machine Cognition and is available for free download at http://ihmc.us. Figure courtesy of R.R. Hoffman, Institute for Human and Machine Cognition.

Relationship to Task Analysis