4

New Approaches— Learning Systems in Progress

OVERVIEW

Incorporation of data generation, analysis, and application into healthcare delivery can be a major force in the acceleration of our understanding of what constitutes “best care.” Many existing efforts to use technology and create research networks to implement evidence-based medicine have produced scattered examples of successful learning systems. This chapter includes papers on the experiences of the Veterans Administration (VA) and the practice-based research networks (PBRNs) that demonstrate the power of this approach as well as papers outlining the steps needed to knit together these existing systems and expand these efforts nationwide toward the creation of a learning healthcare system. Highlighted in particular are key elements—including leadership, collaboration, and a research-oriented culture—that underlie successful approaches and their continued importance as we take these efforts to scale.

In the first paper, Joel Kupersmith discusses the use of the electronic health record (EHR) to further evidence-based practice and research at the VA. Using diabetes mellitus as an example, he outlines how VistA (Veterans Health Information Systems and Technology Architecture) improves care by providing patient and clinician access to clinical and patient-specific information as well as a platform from which to perform research. The clinical data within EHRs are structured such that data can be aggregated from within VA or with other systems such as Medicare to provide a rich source of longitudinal data for health services research (VA Diabetes Epidemiology Cohort [DEpiC]).

PBRNs are groups of ambulatory practices, often partnered with hospitals, academic health centers, insurers, and others to perform research and improve the quality of primary care. These networks constitute an important portion of the clinical research enterprise by providing insight from research at the “coalface” of clinical care. Robert L. Phillips suggests that many lessons could be learned about essential elements in building learning communities, particularly the organization and resources necessary, as well as how to establish such networks between many unique practice environments. The electronic component of the Primary Care Research Network PBRN has the potential to extend the capacity of existing PBRNs by providing an electronic connection that would enable the performance of randomized controlled trials (RCTs) and many other types of research in primary care practices throughout the United States.

While the work of the VA and PBRNs demonstrates immense potential for the integration of research and practice within our existing, fragmented healthcare system, the papers that follow look at how we might bring their success to a national scale. George Isham of HealthPartners lays out a plan to develop a national architecture for a learning healthcare system and discusses some recent activities by the AQA (formerly the Ambulatory Care Quality Alliance) to promote needed systems cooperation and use of data to bring research and practice closer together. In particular, AQA is focused on developing a common set of standardized measures for quality improvement and a strategy for their implementation; a unified approach to the aggregation and sharing of data; and common principles to improve public reporting.

Citing a critical need for rapid advances in the evidence base for clinical care, Lynn Etheredge makes the case for the potential to create a rapidly learning healthcare system if we build wisely on existing resources and infrastructure. In particular, he focuses on the potential for creating virtual research networks and the improved use of EHR data. Through the creation of national standards, the many EHR research registries and databases from the public and private sectors could become compatible. When coupled with the anticipated expansion of databases and registry development, these resources could be harnessed to provide insights from data that span populations, health conditions, and technologies. Leadership and stable funding are needed along with a shift in how we think about access to data. Etheredge advances the idea of the “economics of the commons” as one to consider for data access, in which researchers would give up exclusive access to some data but benefit from access to a continually expanding database of clinical research data.

IMPLEMENTATION OF EVIDENCE-BASED PRACTICE IN THE VA¹

Joel Kupersmith, M.D.

Veterans Administration

As the largest integrated delivery system in the United States, the Veterans Health Administration serves 5.3 million patients annually across nearly 1,400 sites of care. Although its patients are older, sicker, and poorer than the general U.S. population, VA’s performance now surpasses other health systems on standardized quality measures (Asch et al. 2004; Kerr et al. 2004; Jha et al. 2003). These advances are in part related to VA’s leadership in the development and use of the electronic health record, which has fostered veteran-centered care, continued improvement, and research. Human and system characteristics have been essential to the transformation of VA care.

Adding computers to a care delivery system unprepared to leverage the advantages of health information can create inefficiency and other negative outcomes (Himmelstein and Woolhandler 2005). In contrast, during the period of time in which VA deployed its EHR, the number of veterans seen increased from less than 3 million to nearly 5 million, while costs per patient and full-time employees per patient both decreased (Evans et al. 2006; Perlin et al. 2004). To understand how this could be possible, it is important to highlight historical and organizational factors that were important to the adoption of VA’s EHR.

VA health care is the product of decades of innovation. In 1930, Congress consolidated programs for American veterans under VA. Facing more than 1 million returning troops following World War II, VA partnered with the nation’s medical schools, gaining access to faculty and trainees and adding research and education to its statutory missions. That bold move created an environment uniquely suited to rapid learning. At present, VA has affiliations with 107 medical schools and trains almost 90,000 physicians and associated health professionals annually.

The VA was originally based on inpatient care, and administrative and legal factors created inefficiency and inappropriate utilization. By the 1980s, the public image of the VA was poor. In 1995, facing scrutiny from Congress, VA reorganized into 22 integrated care networks. Incentives were created for providing care in the most appropriate setting, and legislation established universal access to primary care.

¹ This paper is adapted from an article copyrighted and published by Project HOPE/Health Affairs as Kupersmith et al., “Advancing Evidence Based Care in Diabetes Through Health Information Technology: Lessons from the Veterans Health Administration,” Health Affairs, 26(2), w156-w168, 2007. The published article is archived and available online at www.healthaffairs.org.

These changes resulted in a reduction of 40,000 inpatient beds and an increase of 650 community-based care sites. Evidence-based practice guidelines and quality measures were adopted, and safeguards were put in place for vulnerable groups such as the mentally ill and those needing chronic care, while VA’s performance management system held senior managers accountable for evidence-based quality measures. All of these changes created a strong case for robust information systems and spurred dramatic improvements in quality (Jha et al. 2003; Perlin et al. 2004).

VistA: VA’s Electronic Health Record

Because VA was both a payer and a provider of care, its information system was developed to support patient care and its quality with clinical information, rather than merely to capture charges and facilitate billing. In the early 1980s, VA created the Decentralized Hospital Computer Program (DHCP), one of the first EHRs to support multiple sites and healthcare settings. DHCP developers worked incrementally with a network of VA academic clinicians across the country, writing and testing code locally and transmitting successful products electronically to other sites where they could be further refined. Over time, the group created a hospital information system prototype employing common tools for key clinical activities. The system was launched nationally in 1982, and by 1985, DHCP was operational throughout VA.

DHCP evolved to become the system now known as VistA, a suite of more than 100 applications supporting clinical, financial, and administrative functions. Access to VistA was made possible through a graphical user interface known as the Computerized Patient Record System (CPRS). With VistA-CPRS, providers can securely access patient information at the point of care and, through a single interface, update a patient’s medical history, place orders, and review test results and drug prescriptions. Because VistA also stores medical images such as X-rays and photographs directly in the patient record, clinicians have access to all the information needed for diagnosis and treatment. As of December 2005, VistA systems contained 779 million clinical documents, more than 1.5 billion orders, and 425 million images. More than 577,000 new clinical documents, 900,000 orders, and 600,000 images are added each workday—a wealth of information for the clinician, researcher, or healthcare administrator.

Clinicians were engaged at the onset of the change process. This meant working incrementally to ensure usability and integration of the EHR with clinical processes. Both local and national supports were created (e.g., local “superusers” were designated to champion the project), and a national “Veterans Electronic Health University” facilitated collaboration among local, regional, and national sponsors of EHR rollout. National performance measures, as well as the gradual withdrawal of paper records, made use of the EHR an inescapable reality. With reductions in time wasted searching for missing paper records and other benefits, over time, staff came to view VistA-CPRS as an indispensable tool for good clinical care (Brown et al. 2003).

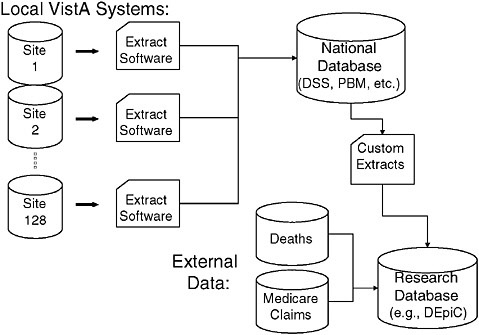

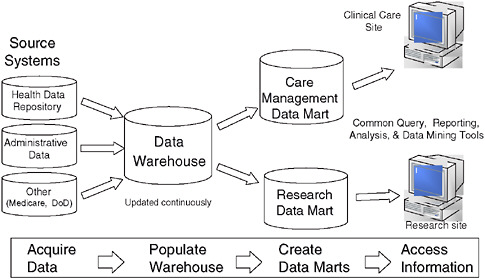

VistA-CPRS allows clinicians to access and generate clinical information about their individual patients, but additional steps are needed to yield insights into population health. Structured clinical data in the EHR can be aggregated within specialized databases, providing a rich source of data for VA administrators and health services researchers (Figure 4-1). Additionally, unstructured text data, such as clinician notes, can be reviewed and abstracted electronically from a central location. This is of particular benefit to researchers: VA multisite clinical trials and observational studies are facilitated by immediate 100 percent chart availability. Furthermore, VA has invested in an External Peer Review Program (EPRP), in which an independent external contractor audits the electronic text records to assess clinical performance using evidence-based performance criteria. Finally, data derived from the EHR can be supplemented by information from other sources such as Medicare utilization data or data from surveys of veterans.

FIGURE 4-1 The sources and flow of the data most often used by VA researchers for national studies. Most data originate from the VistA system but VA data can also be linked with external data sources such as Medicare claims data and the National Death Index.

Leveraging the EHR: Diabetes Care in VA

Much of the work that follows has been supported by VA’s Office of Research and Development through its Health Services Research and Development and Quality Enhancement Research Initiative (QUERI) programs (Krein 2002). Diabetes care in the VA illustrates advantages of a national EHR supported by an intramural research program. Veterans with diabetes comprise about a quarter of those served, and the VA was an early leader in using the EHR for a national diabetes registry containing clinical elements as well as administrative data. While VA’s EHR made a diabetes registry possible, operationalizing data transfer and transforming it into useful information did not come automatically or easily. In the early 1990s, VA began extracting clinical data from each local VA database into a central data repository. By the year 2000, the VA diabetes registry contained data for nearly 600,000 patients receiving care in the VA, including medications, test results, blood pressure values, and vaccinations. This information has subsequently been merged with Medicare claims data to create the DEpiC (Miller et al. 2004).

Of diabetic veterans, 73 percent are eligible for Medicare, and 59 percent of dual eligibles use both systems. Adding Medicare administrative data results in less than 1 percent loss to follow-up, and while these data are not as rich as the clinical information in VA’s EHR, their addition fills gaps in follow-up, complication rates, and resource utilization (Miller, D. Personal communication, March 10, 2006). Combined VA and Medicare data also reveal a prevalence of diabetes among veterans exceeding 25 percent. The impact of the diabetic population on health expenditures is considerable, including total inpatient expenditures (VA plus Medicare) of $3.05 billion ($5,400 per capita) in fiscal year 1999 (Pogach and Miller 2006).
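As a rough consistency check on the expenditure figures above, dividing the total by the per capita amount gives the size of the population the per capita figure implies. The short sketch below is illustrative only: the variable names are ours, and the source does not state which denominator was used.

```python
# Figures quoted in the text for fiscal year 1999 (VA plus Medicare inpatient care).
total_inpatient_usd = 3.05e9   # $3.05 billion
per_capita_usd = 5_400         # $5,400 per capita

# Implied number of patients behind the per capita figure. This is an
# inference from the two quoted numbers, not a value reported in the source.
implied_patients = total_inpatient_usd / per_capita_usd
print(f"Implied population: about {implied_patients:,.0f} patients")
```

The result, roughly 565,000 patients, is of the same order as the nearly 600,000 patients reported for the VA diabetes registry by the year 2000.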

The rich clinical information made possible through the EHR yields other insights. For example, VA has identified a high rate of comorbid mental illness (24.5 percent) among patients with diabetes and is using that information to understand the extent to which newer psychotropic drugs, which promote weight gain, as well as mental illness itself, contribute to poor outcomes (Frayne et al. 2005). The influence of gender and race or ethnicity can also be more fully explored using EHR data (Safford et al. 2003).

The EHR also facilitates delineating and tracking diabetic complications, such as the progression of diabetic kidney disease. Using clinical data from the EHR allows identification of early chronic kidney disease in a third of veterans with diabetes, less than half of whom have renal impairment indicated in the record (Kern et al. 2006). VA is able to use the EHR to identify patients at high risk for amputation and is distributing that information to clinicians in order to better coordinate their care (Robbins, J. Personal communication, February 17, 2006).

EHR-Enabled Approaches to Monitoring Quality and Outcomes

Traditional quality report cards may provide incentives to health providers to disenroll the sickest patients (Hofer et al. 1999). VA’s EHR provides a unique opportunity to construct less “gameable” quality measures that assess how well care is managed for the same individual over time for diseases such as diabetes where metrics of process quality, intermediate outcomes, and complications (vision loss, amputation, renal disease) are well defined.

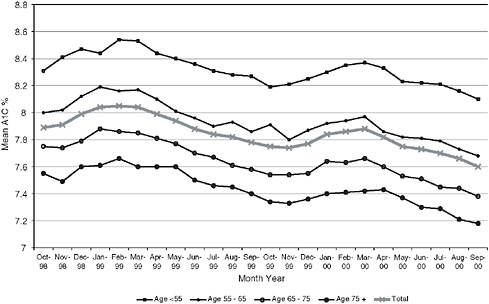

Using the VA diabetes registry, longitudinal changes within individual patients can be tracked. In Figure 4-2, case-mix-adjusted glycosylated hemoglobin values among veterans with diabetes decreased by 0.314 percent (range of change −1.90 to 1.03, p < .0001) over two years, indicating improved glycemic control over time, rather than simply the enrollment of healthier veterans (Thompson et al. 2005). These findings provide a convincing demonstration of effective diabetic care.

FIGURE 4-2 Trends in mean hemoglobin A1c levels.
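The idea of tracking change within the same individuals, rather than comparing successive cross-sections, can be sketched as follows. This is a minimal illustration under stated assumptions: the patient identifiers and values are invented, the function name is ours, and no case-mix adjustment of the kind used in the cited study is attempted.

```python
from statistics import mean

def within_patient_changes(records):
    """Return follow-up minus baseline A1c for each patient with both values."""
    changes = {}
    for patient_id, (baseline, follow_up) in records.items():
        if baseline is not None and follow_up is not None:
            changes[patient_id] = follow_up - baseline
    return changes

# Hypothetical values, invented for illustration only.
example = {
    "A": (8.4, 7.9),
    "B": (7.2, 7.3),
    "C": (9.1, 8.0),
    "D": (7.8, None),  # no follow-up value, so excluded from the comparison
}

changes = within_patient_changes(example)
mean_change = mean(changes.values())
print(f"Patients compared: {len(changes)}, mean change: {mean_change:.2f}")
```

Because each patient serves as his or her own comparison, enrolling healthier patients later does not by itself lower the mean change.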

Longitudinal data have other important uses. For example, knowledge of prior diagnoses and procedures can distinguish new complications from preexisting conditions. This was shown to be the case for estimates of amputation rates among veterans with diabetes, which were 27 percent lower once prior diagnoses and procedures were considered. Thus longitudinal data better reflect the effectiveness of the management of care and can help health systems avoid being unfairly penalized for adverse selection (Tseng et al. 2005). Longitudinal data from the EHR are also important for evaluating the safety and effectiveness of treatments, which are critical insights for national formulary decisions.

Advancing Evidence-Based Care

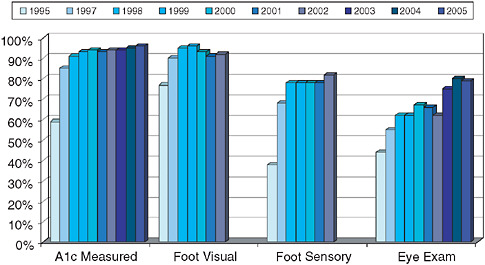

Figure 4-3 shows the trends in VA’s national performance scorecard for diabetes care based on EHR data. In addition to internal benchmarking, this approach has compared VA performance to commercial managed care (Kerr et al. 2004; Sawin et al. 2004). While these performance data are currently obtained by abstracting the electronic chart, the completion of a national Health Data Repository with aggregated relational data will eventually support automatic queries about quality and outcomes ranging from the individual patient to the entire VA population (see below).

FIGURE 4-3 Improvement in VA diabetes care (based on results from the VA External Peer Review Program).

The richness of EHR data allows VA to refine its performance measures. VA investigators were able to demonstrate that annual retinal screening was inefficient for low-risk patients and inadequate for those with established retinopathy (Hayward et al. 2005). As a consequence, VA modified its performance metrics and is developing an approach to risk-stratified screening that will be implemented nationally.

The greatest advantage of the EHR in the VA is its ability to improve performance by influencing the behavior of patients, clinicians, and the system itself. For instance, VA’s diabetes registry has been used to construct performance profiles for administrators, clinical managers, and clinicians. These profiles included comparisons between facilities and identified the proportion of veterans with substantial elevations of glycosylated hemoglobin, cholesterol, and blood pressure. Patient lists also facilitated follow-up with high-risk individuals. Additionally, the EHR allowed consideration of the actions taken by clinicians to intensify therapy in response to elevated levels (e.g., starting or increasing a cholesterol medication when the low-density lipoprotein cholesterol is elevated). This approach credits clinicians for providing optimal treatment and also informs them about what action might be required to improve care (Kerr et al. 2003).

Data from the EHR and diabetes registry also demonstrated the critical importance of defining the level of accountability in diabetes quality reporting. EHR data show that for most measures in the VA, only a small fraction (2 percent) of the variance is attributable to individual primary care providers (PCPs), and unless panel sizes are very large (200 diabetics or more), PCP profiling will be inaccurate. In contrast, much more variation (12-18 percent) is attributable to overall performance at the site of care, a factor of relevance for the design of approaches to rewarding quality. This finding also highlights the important influence of organizational and system factors on provider adherence to guidelines (Krein 2002).
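One way to see why small panels yield unreliable provider profiles is the Spearman-Brown formula, which gives the reliability of an average over n patients when a fraction of the variance lies between providers. The sketch below assumes that the 2 percent figure can be treated as that fraction (an intraclass correlation); the source does not say which method was used, and the function name is ours.

```python
def profile_reliability(icc, panel_size):
    """Spearman-Brown reliability of a mean taken over `panel_size` patients.

    `icc` is the share of total variance attributable to the unit being
    profiled (here assumed to be the 2 percent quoted for individual PCPs).
    """
    return panel_size * icc / (1 + (panel_size - 1) * icc)

pcp_icc = 0.02  # assumption: the quoted 2 percent, read as an intraclass correlation

for n in (25, 50, 100, 200):
    print(f"panel of {n:>3}: reliability {profile_reliability(pcp_icc, n):.2f}")
```

Under these assumptions, reliability reaches about 0.8 only at a panel of roughly 200 patients, which is in keeping with the threshold cited above. Applying the same formula to the 12-18 percent attributed to sites gives much higher reliability at the same sample size.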

The EHR can identify high-risk populations and can facilitate targeted interventions. For instance, poor blood pressure control contributes significantly to cardiovascular complications, the most common cause of death in diabetics. VA investigators are currently working with VA pharmacy leaders to find gaps in medication refills or lack of medication titration and thereby proactively identify patients with inadequate blood pressure control due to poor medication adherence or inadequate medication intensification. Once identified, those patients can be assigned proactive management by clinical pharmacists integrated into primary care teams and trained in behavioral counseling (Choe et al. 2005). Other approaches currently being tested and evaluated using EHR data are group visits, peer counseling, and patient-directed electronic reminders.
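Finding gaps in medication refills from pharmacy records can be sketched as below. This is a hypothetical illustration, not VA's method: the record layout (fill date plus days of supply), the 30-day threshold, and the function name are all assumptions made for the example.

```python
from datetime import date, timedelta

def refill_gaps(fills, min_gap_days=30):
    """Find periods when a patient had no medication on hand.

    `fills` is a list of (fill_date, days_supply) tuples. Returns a list of
    (gap_start, gap_end, gap_days) for gaps of at least `min_gap_days`.
    """
    gaps = []
    covered_until = None
    for fill_date, days_supply in sorted(fills):
        if covered_until is not None:
            gap_days = (fill_date - covered_until).days
            if gap_days >= min_gap_days:
                gaps.append((covered_until, fill_date, gap_days))
        # An early refill extends coverage from the end of the current supply.
        start = fill_date if covered_until is None else max(fill_date, covered_until)
        covered_until = start + timedelta(days=days_supply)
    return gaps

# Invented fill history for one patient.
fills = [
    (date(2005, 1, 1), 30),
    (date(2005, 1, 29), 30),  # early refill, supply carried forward
    (date(2005, 4, 15), 30),  # late refill
]

for gap_start, gap_end, gap_days in refill_gaps(fills):
    print(f"No supply from {gap_start} to {gap_end} ({gap_days} days)")
```

Patients flagged this way could then be placed on a list for follow-up; detecting a lack of medication titration would need dose and blood pressure data as well and is not shown here.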

VistA-CPRS provides additional tools to improve care at the point of service. For example, PCPs get reminders about essential services (e.g., eye exams, influenza vaccinations) at the time they see the patient, and CPRS functions allow providers and patients to view trends in laboratory values and blood pressure control. Perhaps most importantly, the VA’s EHR allows for effective care coordination across providers in order to communicate patients’ needs, goals, and clinical status as well as to avoid duplication of services.

Care Coordination and Telehealth for Diabetes

In-home monitoring devices now can collect and transmit vital data for high-risk patients from the home to a care coordinator who can make early interventions that might prevent the need for institutional intervention (Huddleston and Cobb 2004). Such a coordinated approach is possible only with an EHR. Based on promising pilot data as well as needs projections, VA has implemented a national program of Care Coordination through Home Telehealth (CCHT) (Chumbler et al. 2005).

Information technology also supports cost-effective access to specialized services. VA recently piloted the use of digital retinal imaging to screen for diabetic retinopathy and demonstrated it could be a cost-effective alternative to ophthalmoscopy for detecting proliferative retinopathy (Conlin et al. 2006). Diabetic retinopathy is not only a preventable complication but also a biomarker for other end-organ damage (e.g., kidney damage). In October 2005, VA began implementing a national program of teleretinal imaging to be available on VistA-CPRS for use by clinicians and researchers. In the future, computerized pictorial analysis and new tools for mining text data across millions of patient records have the potential to transform the clinical and research enterprise by identifying biomarkers of chronic illness progression.

Limits of the EHR in VA

Although VA has one of the most sophisticated EHRs in use today, VistA is not a single system, but rather a set of 128 interlinked systems, each with its own database (i.e., a decentralized system with central control). This limits its ability to make queries against all of a patient’s known data. In addition, lack of standardization for laboratory values such as glycosylated hemoglobin and other data elements creates challenges for aggregating available data for administrative and research needs. The VA diabetes registry, while a product of the EHR, took years of effort to ensure data integrity.

A national data standardization project is currently under way to ensure that data elements are compliant with emerging health data standards and data management practices. Extracting data from free-text data fields, a challenge for all electronic records, will be addressed by defining moderately structured data elements for public health surveillance, population health, clinical guidelines compliance, and performance monitoring. Mapping of legitimate local variations to standard representations will allow easier creation of longitudinal registries for a variety of conditions.
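Mapping local variations to a standard representation can be pictured with a small lookup, as sketched below. The local test names, the standard label, and the function names are hypothetical and chosen only to illustrate the idea; actual VistA sites and health data standards use their own vocabularies.

```python
# Hypothetical local test names mapped to one hypothetical standard label.
LOCAL_TO_STANDARD = {
    "hba1c": "HEMOGLOBIN_A1C",
    "hgb a1c": "HEMOGLOBIN_A1C",
    "glycohemoglobin": "HEMOGLOBIN_A1C",
    "glycosylated hemoglobin": "HEMOGLOBIN_A1C",
}

def standardize(local_name):
    """Map a site-specific test name to a standard label, or None if unmapped."""
    key = " ".join(local_name.lower().split())  # normalize case and spacing
    return LOCAL_TO_STANDARD.get(key)

def split_mapped(results):
    """Separate results with a known mapping from those needing manual review."""
    mapped, unmapped = [], []
    for site, local_name, value in results:
        standard = standardize(local_name)
        if standard is None:
            unmapped.append((site, local_name, value))
        else:
            mapped.append((site, standard, value))
    return mapped, unmapped

# Invented results from three sites.
results = [
    ("site1", "HbA1c", 7.2),
    ("site2", " Glycosylated  Hemoglobin ", 8.1),
    ("site3", "A1C-POC", 6.9),
]
mapped, unmapped = split_mapped(results)
print(mapped)
print(unmapped)
```

Keeping unmapped names in a separate list, rather than discarding them, reflects the point that building a registry from local data takes sustained effort to ensure data integrity.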

The care of diabetes is complex and demanding, and delivering all indicated services may require more time than is typically available in a follow-up visit (Parchman, Romero, and Pugh 2006). Studies of the impact of the EHR on workflow and efficiency in VA and other settings have shown conflicting results (Overhage et al. 2001). While it is unlikely that the EHR saves time during the office encounter, downstream benefits such as better care coordination, reduction of duplicative and administrative tasks, and new models of care (e.g., group visits) translate into a “business case” when the reimbursement structure favors population management.

Creating Patient-Centered, Community-Based Care: My HealtheVet

VA’s quality transformation since 1996 involved shifting from inpatient to integrated care. The next phase will involve empowering patients to be more actively engaged and moving care from the clinic to the community and home. Again, health information technology has been designed to support the new delivery system.

My HealtheVet (MHV) is a nationwide initiative intended to improve the overall health of veterans and support greater communication between VA patients and their providers. Through the MHV web portal, veterans can securely view and manage their personal health records online, as well as access health information and electronic services. Veterans can request copies of key portions of their VA health records and store them in a personal “eVAult,” along with self-entered health information and assessments, and can share this information with their healthcare providers and others inside and outside VA. The full functionality of MHV will help patients plan and coordinate their own care through online access to care plans, appointments, laboratory values, and reminders for preventive care (U.S. Department of Veterans Affairs 2006). Research itself can be facilitated by MHV—patients will be able to identify ongoing clinical studies for which they are eligible to enroll, communicate with investigators via encrypted e-mail, have their outcomes tracked through computer-administered “smart surveys,” and even provide suggestions for future studies. In addition, the effectiveness of patient-centered care can be evaluated.

The Twenty-first Century EHR

The next phase of VistA-CPRS will feature open-source applications and relational database structures. One benefit of the conversion will be easier access to national stores of clinical data through a unified Health Data Repository (HDR) that will take the place of the current 128 separately located VistA systems. The HDR is under construction and currently contains records from nearly 16 million unique patients, with more than 900 million vital sign recordings and 461 million separate prescriptions.

Additionally, a clinical observations database linked to SNOMED (Systematized Nomenclature of Medicine) terms and semantic relationships will greatly expand the scope of data available for research data-mining activities. Enhanced decision support capabilities will help clinicians provide care according to guidelines and understand situations where it is appropriate to deviate from guidelines. The reengineered EHR will also link orders and interventions to problems, greatly enhancing VA’s clinical data-mining capabilities.

To support the delivery of consistent information to all business units, VA is developing a Corporate Data Warehouse (CDW; Figure 4-4), which will include the Health Data Repository as the primary source of clinical data but also encompass other administrative and financial datasets (including Medicare data) to create a unified view of the care of veterans. Among other things, the CDW will supplement the capabilities of VistA by providing an integrated analytical system to monitor, analyze, and disseminate performance measures. This will greatly enhance population-based health services research by offering standardized data across all the subjects it contains, tools for rapidly performing hypothesis testing, and ease of data acquisition. Unlike VA’s current diabetes registry, which has been labor-intensive to create and maintain, future registries based on the CDW will be easier to construct and update. The CDW will eventually facilitate personalized medicine by allowing the linkage of genomic information collected from veterans to longitudinal outcome information.

FIGURE 4-4 VA Corporate Data Warehouse architecture.

These changes will introduce a greater degree of central control than was present during the early days of VA’s EHR, but clinicians and researchers will continue their involvement in developing innovations.

VA has been an innovator in the EHR, developing a clinically rich system “from the ground up” that has become so integrated into the delivery of care and the conduct of research that one cannot imagine a VA health system without it. However, many factors in addition to the EHR contributed to VA’s quality transformation, including a culture of academician-clinicians that valued quality, scientific evidence, and accountability (for which EHR became an organizer and facilitator); the presence of embedded researchers who themselves were active clinicians, managers, policy makers, and developers of VistA-CPRS; and a research infrastructure that could be applied to this topic (Greenfield and Kaplan 2004; Perlin 2006). While the data structures themselves are complex and sometimes flawed, they are, because of their user origins, effectively linked to the needs of clinicians and researchers, who in turn incorporate their input into the further evolution of VA’s EHR.

The design of the VA system also ensures that overall incentives are aligned to realize the beneficial externalities of EHR. VA benefits, for instance, by being able to eliminate duplicative test ordering when veterans seek care at different facilities (Kleinke 2005). The cost of maintaining the EHR amounts to approximately $80 per patient per year, roughly the amount saved by eliminating one redundant lab test per patient per year (Perlin 2006). It should be noted that VA also benefits greatly by being an interactive, permeable entity in a free market system—VA is an enrollment system, not an entitlement program or a safety-net provider, and thus has incentives for maintaining high satisfaction and perceived value among those it serves.

For patient care management, VA’s EHR has developed an infrastructure and system for collecting and organizing information from which a diabetes database (DEpiC) evolved to provide valuable information related to disease prevalence, comorbidities, and costs that are necessary for quality improvement, system-wide planning, and research. Longitudinal within-cohort assessment, made possible by the EHR, is a substantial advance in attaining precise measures of quality that mitigate the effects of adverse patient selection and has the potential to facilitate a variety of clinical care advances.

Home telehealth linked to EHR has made possible novel patient-provider interactions of which the care coordination and teleretinal imaging initiatives are among the earliest prototypes. This approach has the capacity to expand care delivery to many others, and the benefits are not limited to the home-bound—a new generation of Internet-savvy veterans will appreciate 24/7 access for health care the same way they do for instant messaging and shopping. MHV, which is in its launch phase, is part of the future plan to give individuals control over their health and includes many possibilities for research.

One more important initiative enabled by the EHR has the capacity to substantially change the practice of medicine: adding genomic information to the medical record. With its voluminous EHR database, VA has an unprecedented opportunity to identify the genetic correlates of disease and drug response, which may transform medical practice from a process of statistical hunches to one of targeted, personalized care. Because of the vastly larger scale of the healthcare enterprise and the changing needs of veterans, VA now has models in place to shift its focus to issues involving clinical decision support, content standardization, and enhanced interaction among patients, VA providers, and other systems. These capabilities are made possible by VA’s EHR, and the VA experience may provide a model for how federal health policies can help the United States create a learning healthcare system.

PRACTICE-BASED RESEARCH NETWORKS

Robert L. Phillips, Jr., M.D., M.S.P.H., The Robert Graham Center

James Mold, M.D., M.P.H., University of Oklahoma

Kevin Peterson, M.D., M.P.H., University of Minnesota

The development of practice-based research networks was a natural response to the disconnect between national biomedical research priorities and intellectual curiosity and questions arising at the “coalface” of clinical care. The physician’s office is where the overwhelming majority of people in the United States seek care for illness and undifferentiated symptoms (White et al. 1961). Forty years later, there has been almost no change in this ecology of medicine (Green et al. 2001). The growth in investment in biomedical research over those same four decades has been tremendous, but it has largely ignored the place where nearly a billion visits to a highly trained professional workforce take place. Curiosity is an innately human property, and many of the early PBRNs formed around collections of clinicians who could not find answers in the literature for the questions that arose in their practices, who did not recognize published epidemiologies, and who did not feel that interventions of proven efficacy in controlled trials could achieve equivalent effectiveness in their practices. PBRNs began to formally appear more than four decades ago to fill the gaps in knowledge identified in primary care and have been called by the Institute of Medicine (IOM) “the most promising infrastructure development that [the committee] could find to support better science in primary care” (Green 2000; IOM 1996). PBRNs are proven clinical laboratories critical to closing the gaps between what is known and what we need to know and between what is possible and what we currently do.

More recently, PBRNs have begun to blur the lines between research and quality improvement, forming learning communities that “use both traditional and nontraditional methods to identify, disseminate, and integrate new knowledge to improve primary care processes and patient outcomes” (Mold and Peterson 2005). The interface of discovery, research, and its application—the enterprise of quality improvement—is a logical outcome when the location of discovery is also the location of care delivery. Networks move quality improvement out of the single practice and into a group process so that the processes and outcomes can be compared and studied across clinics and can be generalized more easily.

The successful combination of attributes that creates a learning community within PBRNs has definite characteristics. Six characteristics of a professional learning community have been identified: (1) a shared mission and values, (2) collective inquiry, (3) collaborative teams, (4) an action orientation including experimentation, (5) continuous improvement, and (6) a results orientation (Mold and Peterson 2005). PBRNs demonstrating these characteristics are among the members of the Institute for Healthcare Improvement’s Breakthrough Series Collaboratives and are recognized by the National Institutes of Health (NIH) Inventory and Evaluation of Clinical Research Networks. The networks in Prescription for Health, a program funded by the Robert Wood Johnson Foundation (RWJF) in collaboration with AHRQ, are innovating to help people change unhealthy behaviors, testing different interventions using common measures that permit pooling data from approximately 100 practices. As other types of clinical networks are developed, they will need similar orientations to realize the benefits of integrating research and practice.

Primary care PBRNs can be instructive to other clinical networks in understanding the resources and organization necessary for successfully integrating research and practice, and for translating external research findings into practice, acting in many ways like any other adaptive, learning entity (Green and Dovey 2001). The Oklahoma Primary Care Research and Resource Network (OKPRN) is a good example of one such learning community. The OKPRN organizes its practices into pods—geographically organized clinic groupings of about eight practices. The pods rely on a central core research and quality improvement (QI) support team that provides grant, analytic, research design, and administration support. Each pod is supported by a Practice Enhancement Assistant (PEA) who acts as a quality improvement coordinator; research assistant; disseminator of ideas between practices; identifier of areas requiring research, development, or education; and facilitator, helping practices apply research findings. This support structure enables the pods and the larger network to do research and integrate findings into practice. The system also promotes the maturation and confidence of clinicians as researchers and leaders of clinical care improvement.

The electronic Primary Care Research Network, ePCRN, is a very different, but likewise instructive PBRN that is testing the capacity of electronic integration to facilitate research and research translation with funding support from the NIH. Beyond the normal PBRN functions, ePCRN’s highly integrated electronic backbone facilitates the performance of randomized controlled trials in primary care practices anywhere in the United States and promotes the rapid integration of new research findings into primary care. This electronic backbone can perform many research and QI functions such as routinely identifying patients eligible for ongoing studies, analyzing patient registries to conduct benchmarking of clinical quality measures, or providing prevention reminders at the point of care. Its robust electronic infrastructure is also instructive for what other learning networks might be capable of accomplishing with the right resources.
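One of the backbone functions named above, routinely identifying patients eligible for ongoing studies, can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `Patient` and `StudyCriteria` records, the field names, the diabetes example, and the thresholds are hypothetical and do not describe ePCRN's actual data model or software.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Set


@dataclass
class Patient:
    """Hypothetical registry record; field names are illustrative only."""
    patient_id: str
    birth_date: date
    diagnoses: Set[str]
    last_hba1c: Optional[float]  # most recent HbA1c in percent, None if never measured


@dataclass
class StudyCriteria:
    """Hypothetical inclusion criteria for an ongoing study."""
    name: str
    min_age: int
    max_age: int
    required_diagnosis: str
    min_hba1c: float


def age_on(birth_date: date, today: date) -> int:
    """Age in completed years on the given day."""
    years = today.year - birth_date.year
    if (today.month, today.day) < (birth_date.month, birth_date.day):
        years -= 1
    return years


def eligible_patients(registry: List[Patient], criteria: StudyCriteria,
                      today: date) -> List[str]:
    """Return the ids of registry patients who meet every inclusion criterion."""
    matches = []
    for p in registry:
        age = age_on(p.birth_date, today)
        if not (criteria.min_age <= age <= criteria.max_age):
            continue
        if criteria.required_diagnosis not in p.diagnoses:
            continue
        # A patient with no recorded value cannot be confirmed eligible.
        if p.last_hba1c is None or p.last_hba1c < criteria.min_hba1c:
            continue
        matches.append(p.patient_id)
    return matches


registry = [
    Patient("A", date(1950, 6, 15), {"diabetes"}, 8.2),
    Patient("B", date(1990, 1, 2), {"diabetes"}, 9.0),      # under the minimum age
    Patient("C", date(1945, 3, 1), {"diabetes"}, None),     # no HbA1c on record
    Patient("D", date(1960, 9, 30), {"hypertension"}, 8.5), # lacks the diagnosis
]
trial = StudyCriteria("hypothetical diabetes trial", 18, 75, "diabetes", 7.5)
matches = eligible_patients(registry, trial, today=date(2007, 1, 1))
print(matches)
```

The same pass over a registry could, in principle, drive benchmarking or point-of-care reminders by swapping the criteria; the sketch deliberately leaves out consent, privacy controls, and data exchange between practices.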

While the practices that participate in PBRNs are not well integrated into the traditional “road map” of research, they are integral to the healthcare system and have many natural connections to entities on the roadmap that could be used more effectively for research and research translation. Many PBRNs do enable practices to partner with hospitals, academic health centers, insurers, specialty societies, quality improvement organizations (e.g., National Committee for Quality Assurance, National Quality Forum [NQF], Quality Improvement Organizations), community organizations, nonprofits, and federal funding entities to perform studies and improve care in integrated efforts. The clinicians who participate in PBRNs have natural connections to the entities that form the traditional research infrastructure, but these connections lack the resources to support learning communities, to support practice-based research, and to translate research into practice. Even if the practices in a learning healthcare system are not organized into formal PBRNs, they will need to share some of the same characteristics and have some of the same resources to be successful. These include (1) expert clinician scientists who are financially supported to stay in practice while formulating researchable questions and executing studies, (2) modernized institutional review board policies, and (3) stabilized funding that is not tied to a particular study, but rather sustains operations and communication systems across and between research projects. There is some evidence that this is beginning to happen:

- Several institutes have formed or are evaluating clinical trial networks including the National Cancer Institute; the National Heart, Lung, and Blood Institute; and the National Institute of Neurological Disorders and Stroke. There have also been collaborations between the National Cancer Institute and the Agency for Healthcare Research and Quality to fund PBRN studies of cancer screening. However, in most of these cases, networks are composed primarily of physicians participating in research on specific diseases and specialty-based offices, and generally provide subsets of the population seen in primary care.

- The National Institute of Dental and Craniofacial Research recently awarded $75 million for three seven-year grants to form a national PBRN for the evaluation of everyday issues in oral health care.

- In 2006, three PBRNs were funded as pilot programs under the NIH Roadmap for the National Electronic Clinical Trials and Research (NECTAR) network: (1) the ePCRN, which potentially includes all primary care clinicians; (2) the Health Maintenance Organization Research Network (HMORN), which includes physician researchers from managed care organizations; and (3) the Regional Health Information Organization (RHIO) Network, which includes providers caring primarily for the underserved. The early success of these efforts resulted in development of important research resources for primary care that could provide a platform for interconnection and interoperability between several thousand participating primary care clinics currently serving approximately 30 million patients. Unfortunately, just one year into funding, the NIH decided to stop funding these programs in favor of supporting other Roadmap initiatives.

- The Agency for Healthcare Research and Quality (AHRQ) is developing a network of 5 to 10 primary care PBRNs engaged in rapid turnaround research leading to new knowledge and information that contributes to improved primary care practice.

An investment of $30 million or more per year for five years would potentially support 15 to 20 PBRNs selected through a competitive Request for Application (RFA) open to eligible primary care PBRNs and supported by a national center. More than 100 primary care PBRNs currently exist. Virtually all existing PBRNs can be identified through registrations and inventories kept at the IECRN (Inventory and Evaluation of Clinical Research Networks), the AHRQ PBRN Resource Center, and the Federation of Practice-Based Research Networks. If each PBRN recruited and trained 250 to 1,000 community clinicians in human subjects protection and in preparation for clinical trials and translational research, nearly 100 million patients would be served by this cadre of up to 50,000 clinical research associates. The PBRNs would provide regional support for clinicians and be coordinated through the national center. Resources would be available to all NIH institutes and centers for appropriate clinical trials and translational research. In effect, this investment would promote the development of clinical learning communities that care for nearly one-third of all Americans.
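The figures in this proposal can be multiplied out in a few lines. The sketch below uses only the numbers quoted in the paragraph, plus one labeled assumption (a U.S. population of roughly 300 million, the approximate 2006 figure). The text does not spell out how the 50,000-clinician figure is derived, so the calculation shows only what the stated ranges imply and how many networks would be needed to reach that figure at each recruitment level.

```python
# Figures quoted in the paragraph above.
annual_investment = 30_000_000      # dollars per year ("$30 million or more")
years = 5
pbrns_low, pbrns_high = 15, 20      # PBRNs supported
clin_low, clin_high = 250, 1_000    # clinicians recruited per PBRN
clinician_target = 50_000           # "up to 50,000 clinical research associates"
patients_served = 100_000_000       # "nearly 100 million patients"

# Assumption, not from the text: approximate U.S. population in 2006.
us_population = 300_000_000

total_investment = annual_investment * years
per_pbrn_low = annual_investment / pbrns_high   # ignores the national center's share
per_pbrn_high = annual_investment / pbrns_low

clinicians_low = pbrns_low * clin_low
clinicians_high = pbrns_high * clin_high

# Number of networks needed to reach the 50,000 figure at each recruitment level.
pbrns_needed_at_max = clinician_target / clin_high
pbrns_needed_at_min = clinician_target / clin_low

patients_per_clinician = patients_served / clinician_target
population_share = patients_served / us_population

print(f"Five-year investment: ${total_investment:,}")
print(f"Per PBRN per year: ${per_pbrn_low:,.0f} to ${per_pbrn_high:,.0f}")
print(f"Clinicians across 15 to 20 PBRNs: {clinicians_low:,} to {clinicians_high:,}")
print(f"PBRNs needed for 50,000 clinicians: {pbrns_needed_at_max:.0f} to {pbrns_needed_at_min:.0f}")
print(f"Implied patients per clinician: {patients_per_clinician:,.0f}")
print(f"Share of U.S. population: {population_share:.0%}")
```

Read this way, the 100 million patient figure corresponds to a panel of about 2,000 patients per clinician, and the one-third share follows from the assumed population.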

The development of a national research infrastructure that provides value and function to the basic scientist and the community clinical investigator is both feasible and practical. This will require the development of new partnerships with academic centers in the discovery of new knowledge and the pursuit of better practice. These partnerships provide the best hope to deliver NIH’s achievements in improving health rapidly and effectively for the American people. Through the NIH Roadmap’s Clinical and Translational Science Awards (CTSA), NIH has begun to develop a home for clinical research. Some of the academic centers receiving this funding, including Duke University, the University of California at San Francisco, and the University of Oregon, are reaching out to their local community clinics or regional clinical research networks, primarily through their community engagement functions. Although the CTSA program will stimulate the development of regionally strong translational centers and some of these centers will provide a pathway for participation of local or regional communities, the promise of transformational change that brings the national primary care community into the clinical research enterprise remains unfulfilled.

The CTSA builds on an academically centered model that presumes new translational resources will be shared with practice-based community investigators over time. Although primary care has made important inroads in some academic centers, many academic centers lack PBRNs and have too few experienced ambulatory care investigators to ensure a bidirectional exchange of information or provide enough sharing of resources to stabilize an ambulatory care research infrastructure.

A learning healthcare system can learn a great deal from PBRNs, particularly for ambulatory care—the bulk of the clinical enterprise, the location most neglected by research and quality improvement efforts, and the setting where most Americans receive medical care. There is a timely opportunity for the NIH, federal agencies, and philanthropic foundations to create an interconnected and interoperable network that assists ambulatory clinicians in integrating discovery into clinical practice. Funding for the initial practice-based research infrastructure would serve an important role for the translation of research into the community and for promoting the integration of the national community of ambulatory care-based investigators into the clinical research enterprise. Such an investment will also support the essential elements of learning communities—a constant state of inquiry and a desire to improve among all clinicians.

NATIONAL QUALITY IMPROVEMENT PROCESS AND ARCHITECTURE

George Isham, M.D., M.S.

HealthPartners

If consistent improvement in health and care is to be achieved across the entire country, individual learning healthcare organizations will need to be knit together by a national infrastructure in a learning system for the nation. If this is not done, individual examples of progress such as the Veterans Administration, Mayo Clinic, Kaiser Permanente, and HealthPartners will remain exceptions in a disconnected, fragmented healthcare system. This paper discusses what is needed to take us beyond these examples and create that learning system for the nation—a National Quality Improvement Process and Architecture (NQIPA). The AQA, previously known as the Ambulatory Care Quality Alliance, is an important element enabling the NQIPA.

That the healthcare system is broken has been highlighted by a number of Institute of Medicine (IOM) reports (IOM 1999, 2001), and arguably, it does not really exist as a system. The IOM’s recent report on performance measurement (IOM 2005) pointed out that “there are many obstacles to rapid progress to improve the quality of health care but none exceeds the fact that the nation lacks a coherent, goal-oriented, and efficient system to assess and report the performance of the healthcare system.”

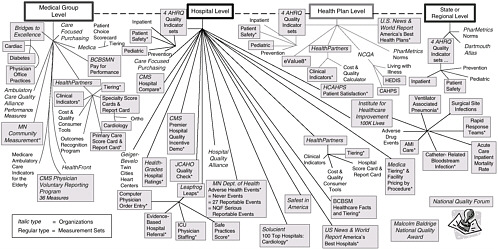

To illustrate this point, HealthPartners’ informatics department put together a map of some of the measurement sets that are relevant to our work as a health organization; see Figure 4-5 (HealthPartners 2006). Each of these standard measurement sets affects one of our activities as a medical group, hospital, health plan, or an organization regulated in Minnesota. Many of these standard sets are similar or measure the same issue or condition slightly differently. As an example, we have been required to measure mammography screening many different ways for different standard measurement sets required by different organizations or standards.
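The mammography example can be made concrete with a small sketch. The two measure definitions below are hypothetical (the names, age ranges, and look-back windows are invented for illustration and are not taken from any real measurement set), as is the five-person population. The point is only that the same underlying records yield different screening rates when the denominator and look-back rules differ.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Woman:
    age: int
    months_since_mammogram: Optional[int]  # None means no mammogram on record


@dataclass
class MeasureSpec:
    """Hypothetical measure definition; not drawn from any real standard set."""
    name: str
    min_age: int
    max_age: int
    lookback_months: int


def screening_rate(population: List[Woman], spec: MeasureSpec) -> Optional[float]:
    """Share of women in the spec's age range screened within its look-back window."""
    denominator = [w for w in population if spec.min_age <= w.age <= spec.max_age]
    if not denominator:
        return None
    numerator = [
        w for w in denominator
        if w.months_since_mammogram is not None
        and w.months_since_mammogram <= spec.lookback_months
    ]
    return len(numerator) / len(denominator)


population = [
    Woman(45, 10),
    Woman(52, 20),
    Woman(60, 30),
    Woman(68, None),
    Woman(72, 12),
]

set_a = MeasureSpec("hypothetical set A", min_age=40, max_age=69, lookback_months=24)
set_b = MeasureSpec("hypothetical set B", min_age=50, max_age=74, lookback_months=12)

rate_a = screening_rate(population, set_a)
rate_b = screening_rate(population, set_b)
print(set_a.name, rate_a)
print(set_b.name, rate_b)
```

With these invented inputs, the first definition reports half of its eligible women as screened and the second reports a quarter, even though nothing about the care delivered has changed. An organization answering to several such sets must compute and maintain each variant separately.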

Other obstacles to creating the needed coherent, goal-oriented, and efficient system include a lack of clear goals and objectives for improving quality in health care, the lack of leadership focus on improving quality, the lack of a culture of quality and safety in our organizations, the lack of comparative information on what works and what doesn’t that is based on scientific evidence, the lack of an environment that creates systematic incentives and supports for improving quality by individual organizations and healthcare professionals, and the lack of the universal availability of an electronic health record system that is interoperable with other EHRs and designed for decision support at the point of care and quality reporting. One of the most critical obstacles is the lack of social mechanisms and institutions on the national and regional levels that enable the collaboration necessary for individual organizations and professionals to work with each other to evaluate and improve the quality of care across the country.

A National Quality Improvement Process and Architecture

To knit together a learning system for the country and create an NQIPA, a national strategy and infrastructure are needed that enable individual healthcare providers and their organizations to know the quality of care they deliver, to have the incentives and tools necessary to improve care, and to provide information critical to individual patients and the public about the quality of care they receive. The work may be described as a seven-step process model for quality improvement (Isham and Amundson October 2002):

- Establish Focus and Target Goals: Set broad population health goals.

- Agree on Guidelines: Develop best-practice guidelines with physicians.

- Devise Standard Measurements: Formulate evaluation standards for each goal.

- Establish Targets: Set clinical care performance improvement targets.

- Align Incentives: Reward medical groups for achieving targets.

- Support Improvement: Assist medical groups in implementation.

- Evaluate and Report on Progress: Disseminate information on outcomes.

Some progress has been made at the national and regional levels on some elements of a national support system to ensure health, safety, and quality (IOM 2006). For example, recently there has been significant progress in establishing this support system for quality improvement in Minnesota. In August 2006, the governor of Minnesota signed an executive order directing the state agencies to use incentives in the purchasing of health care that are based on established statewide goals and that reward performance against specific targets, using standard quality measures grounded in evidence-based clinical standards (Office of Governor Tim Pawlenty 2006). This initiative (QCARE) was designed by a work group that used the seven-step model in developing its recommendations.

Institutions in place in Minnesota that enable this model include the Institute for Clinical Systems Improvement, which is a collaborative of Physician Medical Groups and Health Plans that develops and implements evidence-based clinical practice guidelines and provides technical assistance to improve clinical care (Institute for Clinical Systems Improvement 2006). The Institute for Clinical Systems Improvement is an effective way to engage the support of local practicing physicians for evidence-based standards of care. The Institute for Clinical Systems Improvement involves group practices large and small that represent 75 percent of the non-federal physicians practicing in the state.

Minnesota Community Measurement is a second Minnesota collaborative of medical providers, health plans, purchasers, and consumers. It collects and publicly reports clinical quality using standard measures grounded in the evidence-based clinical standards work of the Institute for Clinical Systems Improvement, and it is also a critical component of the support system for quality in Minnesota (MN Community Measurement 2006). Incentives for working on improving quality are provided not only by the governor’s QCARE program, but also by the private health plans in their pay for performance programs and by Bridges to Excellence, a national pay for performance program (Bridges to Excellence 2006). All of these use the Institute for Clinical Systems Improvement and Minnesota Community Measurement as the common mechanism for creating incentives founded on evidence-based clinical standards, guidelines, targets, and measures. The individual organizational quality results are publicly reported.

The Minnesota experience can be used in the effort to create a national system to support the improvement of quality of care across the country. NQIPA would be most effective as a federation of regional systems with the ability to engage local providers of care that are knit together by a national system of standards and rules and supported by mechanisms to aggregate data from national and regional sources for two purposes. The first is to report quality improvement progress on a national level for Medicare and other national purchasers. The second is to feed back performance at a regional level to enable local healthcare organizations and providers to be engaged in and part of the process of actually improving the quality of care over time. It is also critical that Medicare, national medical specialty societies, national purchasers, and others with national perspectives and needs be a part of and served well by this system. It is important, therefore, that NQIPA implement national standards and priorities uniformly in each region of the country and be able to aggregate data and information at the multiregional and national levels.
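The two purposes described here, national reporting and regional feedback, can be sketched as a simple roll-up of regional results for a uniformly defined measure. The record layout, region names, measure identifier, and counts below are all hypothetical. The sketch assumes each region reports a numerator and denominator under the same national measure definition, which is what makes the counts safe to add.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class RegionalReport:
    """Hypothetical regional submission for one nationally standardized measure."""
    region: str
    measure_id: str
    numerator: int    # patients meeting the measure
    denominator: int  # patients eligible for the measure


def national_rates(reports: List[RegionalReport]) -> Dict[str, float]:
    """Pool counts across regions, then divide; this weights regions by size."""
    totals: Dict[str, Tuple[int, int]] = {}
    for r in reports:
        num, den = totals.get(r.measure_id, (0, 0))
        totals[r.measure_id] = (num + r.numerator, den + r.denominator)
    return {m: num / den for m, (num, den) in totals.items() if den > 0}


def regional_feedback(reports: List[RegionalReport]) -> List[Tuple[str, str, float, float]]:
    """For each region and measure, return its rate and its gap from the national rate."""
    national = national_rates(reports)
    feedback = []
    for r in reports:
        if r.denominator == 0 or r.measure_id not in national:
            continue
        rate = r.numerator / r.denominator
        feedback.append((r.region, r.measure_id, rate, rate - national[r.measure_id]))
    return feedback


reports = [
    RegionalReport("North", "hypothetical_measure_1", 300, 400),
    RegionalReport("South", "hypothetical_measure_1", 100, 200),
]

national = national_rates(reports)
feedback = regional_feedback(reports)
for region, measure, rate, gap in feedback:
    print(f"{region} {measure}: rate={rate:.3f}, gap vs national={gap:+.3f}")
```

Pooling counts instead of averaging regional rates is a design choice: a plain average would treat a small region and a large one as equals. It only works if every region applies the same measure definition, which is the practical reason the text asks for national standards implemented uniformly.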

Progress has been made at the national level, although there is much yet to be done. National goals have been suggested (IOM 2003). What is needed next are the top 10 quality issues and problems by specialty to drive the development of evidence-based guidelines, measures, and incentives by specialty, including underuse, overuse, and misuse issues and problems. Many groups produce useful evidence-based recommendations and guidelines, but what is needed now are more evidence-based reviews of acute care, chronic care, and comparative drug effectiveness.

In addition, many organizations use incentives. In 2005, 81 commercial health plans had pay for performance programs, and the Centers for Medicare and Medicaid Services (CMS) was sponsoring six pay for performance demonstrations (Raths 2006). In August 2006, the President signed an executive order promoting quality and efficient health care in federal government-administered or sponsored healthcare programs (The White House 2006). Already mentioned above is the effort by large employers to support incentives through the national Bridges to Excellence Program, not only in Minnesota but in many states across the country. Needed now are more healthcare purchasing organizations synchronizing their incentives to standard targets against standard measures that address the most important quality issues across the private and public sectors. There are effective national and regional efforts that engage healthcare organizations and individual physicians in improving the quality of care (Institute for Clinical Systems Improvement 2006; Institute for Healthcare Improvement 2006). Unfortunately, not all physician practices and regions of the country are taking advantage of these healthcare improvement resources. More regional collaboratives are necessary to facilitate improvement in care in all regions of the country. Above all, these individual efforts need to be knit together to form a national strategy and support system—that is, NQIPA.

The AQA Alliance

The AQA Alliance (www.aqaalliance.org) is a broad-based national collaborative of physicians, consumers, purchasers, health insurance plans, and others that has been founded to improve healthcare quality and patient safety through a collaborative process. Key stakeholders agree on a strategy for measuring performance of physicians and medical groups, collecting and aggregating data in the least burdensome way, and reporting meaningful information to consumers, physicians, and stakeholders to inform choices and improve outcomes. The effort’s goals are to reach consensus as soon as possible on:

- A set of measures for physician performance that stakeholders can use in private health insurance plan contracts and with government purchasers;

- A multiyear strategy to roll out additional measurement sets and implement measures in the marketplace;

- A model (including framework and governing structure) for aggregating, sharing, and stewarding data; and

- Critical steps needed for reporting useful information to providers, consumers, and purchasers.

Currently there are more than 125 AQA Alliance-affiliated organizations including the American College of Physicians, American Academy of Family Physicians, American College of Surgeons, American Association of Retired Persons, Pacific Business Group on Health, America’s Health Insurance Plans, and many others. Much progress has been made since the AQA Alliance was established in late 2005.

The performance measures workgroup (www.aqaalliance.org/performancewg.htm) has established a framework for selecting measures, principles for the use of registries in clinical practice settings, a guide for the selection of measures for medical subspecialty care, principles for efficiency measures along with a starter set of conditions for which cost of care measures should be developed first, and 26 primary care measures as a starter set. In addition, eight cardiology measures, as well as measures for dermatology, rheumatology, clinical endocrinology, radiology, neurology, ophthalmology, surgery, and orthopedic and cardiac surgery, have been approved. The AQA parameters for the selection of measures emphasize that “measures should be reliable, valid and based on sound scientific evidence. Measures should focus on areas which have the greatest impact in making care safe, effective, patient-centered, timely, efficient or equitable. Measures which have been endorsed by the NQF should be used when available. The measure set should include, but not be limited to, measures that are aligned with the IOM’s priority areas. Performance measures should be developed, selected and implemented through a transparent process” (AQA Alliance 2006).

The data-sharing and aggregation workgroup (www.aqaalliance.org/datawg.htm) has produced principles for data sharing and aggregation; provided a recommendation for a National Health Data Stewardship Entity (NHDSE) to set standards, rules, and policies for data sharing and aggregation; described desirable characteristics of an NHDSE; developed guidelines and key questions for physician data aggregation projects; and established principles to guide the use of information technology systems that support performance measurement and reporting so as to ensure that electronic health record systems can report these data as part of routine practice. As a consequence of this workgroup’s effort, six AQA pilot sites were announced in March 2006. They include the California Cooperative Healthcare Reporting Initiative, Indiana Health Information Exchange, Massachusetts Health Quality Partners, Minnesota Community Measurement, Phoenix Regional Healthcare Value Measurement Initiative, and Wisconsin Collaborative for Healthcare Quality. These pilots are to serve as learning labs to link public and private datasets and assess clinical quality, cost of care, and patient experience. Each of these sites has strong physician leadership, a rich history of collaboration on quality and data initiatives, and the necessary infrastructure and experience to support public and private dataset aggregation. The collaboration across health plans and providers in these six pilot efforts yields a comprehensive view of physician practice. The lessons from the pilot sites can provide valuable input in the establishment of a national framework for measurement, data sharing, and reporting (NQIPA).

The third AQA alliance workgroup is the reporting workgroup (www.aqaalliance/reportingwg.com). It has produced principles for public reporting as well as principles for reporting to clinicians and hospitals, and has had discussions on reporting models and formats.

There are significant opportunities and challenges for the work of the AQA Alliance. Among the opportunities are expansion of the measurement sets to address the critical quality issues in all specialties and expansion of the six pilot sites to form a national network of regional data aggregation collaboratives covering all regions of the country. The engagement and support of all medical and surgical specialty groups as well as physicians and their organizations are critical to the success of this work. Determining a business model and funding sources for the expansion of the pilot sites and the operation of the NHDSE are significant challenges. The expansion of the measurement set to address cost of care, access to care, equity, and patient-centered issues represents a major opportunity as well as a significant methodological challenge. Determining the best legal structure and positioning between the public and private sectors of the NHDSE will be critical to its success in setting standards and rules for data aggregation for the public and private sectors.

Establishing a common vision for the NQIPA will be important for mobilizing the effort necessary to maximize the value of priority setting, evidence-based medicine, target setting, measurement development, data aggregation, incentives for improved performance, and the public reporting of performance. Getting on with the task of implementing this vision is urgent. Every year that goes by without effective action represents another year of a quality chasm not bridged, of lives lost needlessly, of quality of life diminished unnecessarily.

ENVISIONING A RAPID LEARNING HEALTHCARE SYSTEM

Lynn Etheredge

George Washington University

The United States can develop a rapid learning healthcare system. New research capabilities now emerging—large electronic health record databases, predictive computer models, and rapid learning networks—will make it possible to advance clinical care from the experience of tens of millions of patients each year. With collaborative initiatives in the public and private sectors, a national goal could be for the health system to learn about the best uses of new technologies at the same rate that it produces new technologies. This could be termed a rapid learning health system (Health Affairs 2007).

There is still much to be done to reach that goal. Biomedical researchers and technology firms are expanding knowledge and clinical possibilities much faster than the health system’s ability to assess these technologies. Already, there are growing concerns about the evidence base for clinical care, its gaps and biases (Avorn 2004; Kassirer 2005; Abramson 2004; Ioannidis 2005; Deyo and Patrick 2005). Technological change is now the largest factor driving our highest-in-the-world health spending, which is now more than $2 trillion per year. With advances in the understanding of the human genome and a doubling of the NIH research budget to more than $28 billion, there may be an even faster stream of new treatment options. Neither government regulation, healthcare markets, consumers, physicians, nor health plans are going to be able to deal with these technology issues, short of rationing, unless there are more rapid advances in the evidence base for clinical care.

The “inference gap” concept, described by Walter Stewart earlier in this volume, incisively captures the knowledge issues that confront public officials, physicians, and patients for the 85 million enrollees in the Medicare and Medicaid programs. As he notes, the clinical trials database is built from randomized clinical trials mostly using younger populations with single diagnoses. The RCT patients are very different from Medicare and Medicaid enrollees, who are mostly older patients with multiple diagnoses and treatments, women and children, and individuals with seriously disabling conditions. With Medicare and Medicaid now costing more than $600 billion annually—and projected to cost $3.5 trillion over the next five years—there is a fiscal, as well as a medical, imperative to learn more rapidly about what works in clinical care. As a practical matter, we cannot learn all that we would like to know, as rapidly as we need to know it, through RCTs, and we need to find powerful and efficient ways to learn rapidly from practice-based evidence.

Large EHR research databases are the key development that makes it possible to create a rapid learning health system. The VA and Kaiser Permanente are the public and private sector leaders; their new research databases each have more than 8 million EHRs. They are likely to add genomic information. New networks with EHR databases—“virtual research organizations”—add even more to these capacities. For instance, HMORN with 14 HMOs has 40 million enrollees and is sponsoring the Cancer Research Network (which has about 10 million patient EHRs) with the National Cancer Institute, as well as the Vaccine Safety Datalink (which has about 6 million patient records) with the Centers for Disease Control and Prevention (CDC). Institutions with EHR databases and genome data include Children’s Hospital of Philadelphia, Marshfield, Mayo, and Geisinger. Large research projects that need to access paper health records from multiple sites are now administratively complicated, time-consuming, expensive, and done infrequently. In contrast, studies with computerized EHR databases and new research software will be done from a computer terminal in hours, days, or a few weeks. Thousands of large population studies will be doable quickly and inexpensively.

A fully operational national rapid learning system could include many such databases, sponsors, and networks. It could be organized in many different ways, including by enrolled populations (the VA, Medicare, Medicaid, private health plans); by healthcare professions (specialist registries); by institution (multispecialty clinics and academic health centers); by health condition (disease registries and national clinical studies databases); by technology (drug safety and efficacy studies, coverage with evidence development studies); by geographic area (Framingham study); by age cohort (National Children’s Study); or by special population (minorities, genomic studies). With national standards, all EHR research registries and databases could be compatible and multiuse.

The key short-term issues for advancing a rapid learning strategy include leadership and development of learning networks, development of research programs, and funding. As reflected in the spectacularly rapid advances of the Human Genome Project and its sequels, we should be thinking about creating a number of leading-edge networks that cut across traditional organizational boundaries. Among potential new research initiatives, it is notable that large integrated delivery systems, such as Kaiser and VA, have been early leaders and that many parts of NIH could be doing much more to develop ongoing national research networks (see paper by Katz, Chapter 5) and EHR databases. With respect to new databases, the NIH could require reporting of all its publicly funded clinical studies, in EHR-type formats, into national computer-searchable NIH databanks; peer-reviewed journals could require that the datasets of the clinical studies they publish also be available to the scientific community through such NIH databanks (National Cancer Institute 2005). This rapid learning strategy would take advantage of what we economists term the “economics of the commons”; each individual researcher would give up exclusive access to some data, but would benefit, in return, from access to a vast and expanding treasure trove of clinical data from the international research community. With these carefully collected, rich data resources, powerful mathematical modeling approaches will be able to advance systems biology, “virtual” clinical trials, and scientific prediction-based health care much more rapidly. There will also be benefits for research on heterogeneity of treatment responses and the design of “practical clinical trials” to fill evidence gaps (see papers by Tunis, Chapter 1, and by Eddy and Greenfield, Chapter 2). Another important research initiative would be to develop “fast track” learning strategies to evaluate promising new technologies. One model suggested is to establish study designs for new technologies when they are first approved and to review the evidence from patient experience at a specified date (e.g. three years later) to help guide physicians, patients, and future research as these technologies diffuse into wider use (Avorn 2004).

To implement a national learning strategy, the Department of Health and Human Services (HHS) health agencies and the VA could be designers and funders of key public or private initiatives. HHS first-year initiatives could include expanding on the National Cancer Institute’s (NCI’s) Cancer Research Network with NIH networks for heart disease and diabetes; starting national computer-searchable databases for NIH, the Food and Drug Administration (FDA), and other clinical studies; a broad expansion of AHRQ’s research to address Medicare Rx, Medicaid, national health spending, socioeconomic and racial disparities, effectiveness, and quality issues; expanding CDC’s Vaccine Safety Datalink network and FDA’s post-market surveillance into a national FDA-CDC program for evaluation of drug safety and efficacy, including pharmacogenomics; starting national EHR research programs for Medicaid’s special needs populations; and initiating a national “fast track” learning system for evaluating promising new technologies. A first-year budget of $50 million for these initiatives takes into account that research capabilities are still limited by the pace of EHR database and research tool development. Within five years, a national rapid learning strategy could be taken to scale with about $300 million a year.

To move forward, a national learning strategy also needs vision and consensus. The IOM is already playing a key catalytic role through this workshop and publication of these papers. This paper identifies many opportunities for the public and private sectors to collaborate in building a learning healthcare system.

REFERENCES

Abramson, J. 2004. Overdosed America. New York: HarperCollins.

Asch, S, E McGlynn, M Hogan, R Hayward, P Shekelle, L Rubenstein, J Keesey, J Adams, and E Kerr. 2004. Comparison of quality of care for patients in the Veterans Health Administration and patients in a national sample. Annals of Internal Medicine 141(12):938-945.

AQA Alliance. 2006 (April). AQA Parameters for Selecting Measures for Physician Performance. Available from http://www.aqaalliance.org/files/AQAParametersforSelectingAmbulatoryCare.doc. (accessed April 4, 2007).

Avorn, J. 2004. Powerful Medicines. New York: Alfred A. Knopf.

Bridges to Excellence. 2006. Bridges to Excellence Overview. Available from http://www.bridgestoexcellence.org/bte/about_us/home.htm. (accessed November 30, 2006).

Brown, S, M Lincoln, P Groen, and R Kolodner. 2003. VistA-U.S. Department of Veterans Affairs national-scale HIS. International Journal of Medical Informatics 69(2-3):135-156.

Choe, H, S Mitrovich, D Dubay, R Hayward, S Krein, and S Vijan. 2005. Proactive case management of high-risk patients with Type 2 diabetes mellitus by a clinical pharmacist: a randomized controlled trial. American Journal of Managed Care 11(4):253-260.

Chumbler, N, B Neugaard, R Kobb, P Ryan, H Qin, and Y Joo. 2005. Evaluation of a care coordination/home-telehealth program for veterans with diabetes: health services utilization and health-related quality of life. Evaluation and the Health Professions 28(4):464-478.

Conlin, P, B Fisch, J Orcutt, B Hetrick, and A Darkins. 2006. Framework for a national teleretinal imaging program to screen for diabetic retinopathy in Veterans Health Administration patients. Journal of Rehabilitation Research and Development 43(6):741-748.

Deyo, R, and D Patrick. 2005. Hope or Hype. New York: American Management Association/AMACOM Books.

Evans, D, W Nichol, and J Perlin. 2006. Effect of the implementation of an enterprise-wide electronic health record on productivity in the Veterans Health Administration. Health Economics, Policy, and Law 1(2):163-169.

Frayne, S, J Halanych, D Miller, F Wang, H Lin, L Pogach, E Sharkansky, T Keane, K Skinner, C Rosen, and D Berlowitz. 2005. Disparities in diabetes care: impact of mental illness. Archives of Internal Medicine 165(22):2631-2638.

Green, L. 2000. Putting practice into research: a 20-year perspective. Family Medicine 32(6):396-397.

Green, L, and S Dovey. 2001. Practice based primary care research networks. They work and are ready for full development and support. British Medical Journal 322(7286):567-568.

Green, L, G Fryer Jr., B Yawn, D Lanier, and S Dovey. 2001. The ecology of medical care revisited. New England Journal of Medicine 344(26):2021-2025.

Greenfield, S, and S Kaplan. 2004. Creating a culture of quality: the remarkable transformation of the department of Veterans Affairs Health Care System. Annals of Internal Medicine 141(4):316-318.

Hayward, R, C Cowan Jr., V Giri, M Lawrence, and F Makki. 2005. Causes of preventable visual loss in type 2 diabetes mellitus: an evaluation of suboptimally timed retinal photocoagulation. Journal of General Internal Medicine 20(5):467-469.

Health Affairs. 2007. A rapid learning health system. Health Affairs (collection of articles, special web edition) 26(2):w107-w118.

Himmelstein, D, and S Woolhandler. 2005. Hope and hype: predicting the impact of electronic medical records. Health Affairs 24(5):1121-1123.

Hofer, T, R Hayward, S Greenfield, E Wagner, S Kaplan, and W Manning. 1999. The unreliability of individual physician “report cards” for assessing the costs and quality of care of a chronic disease. Journal of the American Medical Association 281(22):2098-2105.

Huddleston, M, and R Cobb. 2004. Emerging technology for at-risk chronically ill veterans. Journal of Healthcare Quality 26(6):12-15, 24.

Institute for Clinical Systems Improvement. 2006. Available from http://www.icsi.org/about/index.asp. (accessed November 30, 2006).

Institute for Healthcare Improvement. 2006. Available from http://www.ihi.org/ihi. (accessed December 3, 2006).

IOM (Institute of Medicine). 1996. Primary Care: America’s Health in a New Era. Washington, DC: National Academy Press.

———. 1999. To Err Is Human: Building a Safer Health System. Washington, DC: National Academy Press.

———. 2001. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academy Press.

———. 2003. Priority Areas for National Action: Transforming Health Care Quality. Washington, DC: The National Academies Press.

———. 2005. Performance Measurement: Accelerating Improvement. Washington, DC: The National Academies Press.

———. 2006. The Richard and Hilda Rosenthal Lectures 2005: The Next Steps Toward Higher Quality Health Care. Washington, DC: The National Academies Press.

Ioannidis, J. 2005. Contradicted and initially stronger effects in highly cited clinical research. Journal of the American Medical Association 294(2):218-228.

Isham, G, and G Amundson. 2002 (October). A seven step process model for quality improvement. Group Practice Journal 40.

Jha, A, J Perlin, K Kizer, and R Dudley. 2003. Effect of the transformation of the Veterans Affairs Health Care System on the quality of care. New England Journal of Medicine 348(22):2218-2227.

Kassirer, J. 2005. On The Take. New York: Oxford University Press.

Kern, E, M Maney, D Miller, C Tseng, A Tiwari, M Rajan, D Aron, and L Pogach. 2006. Failure of ICD-9-CM codes to identify patients with comorbid chronic kidney disease in diabetes. Health Services Research 41(2):564-580.

Kerr, E, D Smith, M Hogan, T Hofer, S Krein, M Bermann, and R Hayward. 2003. Building a better quality measure: are some patients with “poor quality” actually getting good care? Medical Care 41(10):1173-1182.

Kerr, E, R Gerzoff, S Krein, J Selby, J Piette, J Curb, W Herman, D Marrero, K Narayan, M Safford, T Thompson, and C Mangione. 2004. Diabetes care quality in the Veterans Affairs Health Care System and commercial managed care: the TRIAD study. Annals of Internal Medicine 141(4):272-281.

Kleinke, J. 2005. Dot-gov: market failure and the creation of a national health information technology system. Health Affairs 24(5):1246-1262.

Krein, S. 2002. Whom should we profile? Examining diabetes care practice variation among primary care providers, provider groups, and health care facilities. Health Services Research 35(5):1160-1180.

Miller, D, M Safford, and L Pogach. 2004. Who has diabetes? Best estimates of diabetes prevalence in the Department of Veterans Affairs based on computerized patient data. Diabetes Care 27(Suppl. 2):B10-B21.

MN Community Measurement. 2006. Our Community Approach. Available from http://mnhealthcare.org/~wwd.cfm. (accessed November 30, 2006).

Mold, J, and K Peterson. 2005. Primary care practice-based research networks: working at the interface between research and quality improvement. Annals of Family Medicine 3(Suppl. 1):S12-S20.

National Cancer Institute. 2005 (June). Restructuring the National Cancer Clinical Trials Enterprise. Available from http://integratedtrials.nci.nih.gov/ict/. (accessed May 24, 2006).

Office of Governor Tim Pawlenty. 2006 (July 31). Governor Pawlenty Introduces Health Care Initiative to Improve Quality and Save Costs. Available from http://www.governor.state.mn.us/mediacenter/pressreleases/PROD007733.html. (accessed November 30, 2006).

Overhage, J, S Perkins, W Tierney, and C McDonald. 2001. Controlled trial of direct physician order entry: effects on physicians’ time utilization in ambulatory primary care internal medicine practices. Journal of the American Medical Informatics Association 8(4):361-371.

Parchman, M, R Romero, and J Pugh. 2006. Encounters by patients with Type 2 diabetes—complex and demanding: an observational study. Annals of Family Medicine 4(1):40-45.

Perlin, J. 2006. Transformation of the U.S. Veterans Health Administration. Health Economics, Policy, and Law 1(2):99-105.

Perlin, J, R Kolodner, and R Roswell. 2004. The Veterans Health Administration: quality, value, accountability, and information as transforming strategies for patient-centered care. American Journal of Managed Care 10(11.2):828-836.

Pogach, L, and D Miller. 2006. Merged VHA-Medicare Databases: A Tool to Evaluate Outcomes and Expenditures of Chronic Diseases. Poster Session, VHA Health Services Research and Development Conference, February 26, 2006.

Raths, D. 2006 (February). 9 Tech Trends: Pay for Performance. Healthcare Informatics Online. Available from http://healthcare-informatics.com/issues/2006/02/48. (accessed December 2, 2006).

Safford, M, L Eaton, G Hawley, M Brimacombe, M Rajan, H Li, and L Pogach. 2003. Disparities in use of lipid-lowering medications among people with Type 2 diabetes mellitus. Archives of Internal Medicine 163(8):922-928.

Sawin, C, D Walder, D Bross, and L Pogach. 2004. Diabetes process and outcome measures in the Department of Veterans Affairs. Diabetes Care 27(Suppl. 2):B90-B94.

Thompson, W, H Wang, M Xie, J Kolassa, M Rajan, C Tseng, S Crystal, Q Zhang, Y Vardi, L Pogach, and M Safford. 2005. Assessing quality of diabetes care by measuring longitudinal changes in hemoglobin A1c in the Veterans Health Administration. Health Services Research 40(6.1):1818-1835.