2

The Evolving Evidence Base: Methodologic and Policy Challenges

OVERVIEW

An essential component of the learning healthcare system is the capacity to continually improve approaches to gathering and evaluating evidence, taking advantage of new tools and methods. As technology advances and our ability to accumulate large quantities of clinical data increases, new challenges and opportunities to develop evidence on the effectiveness of interventions will emerge. With these expansions comes the possibility of significant improvements in multiple facets of the information that underlies healthcare decision making, including the potential to develop additional insights on risk and effectiveness; an improved understanding of increasingly complex patterns of comorbidity; insights on the effect of genetic variation and heterogeneity on diagnosis and treatment outcomes; and evaluation of interventions in a rapid state of flux such as devices and procedures. A significant challenge will be in piecing together evidence from the full scope of this information to determine what is best for individual patients. This chapter offers an overview of some of the key methodologic and policy challenges that must be addressed as evidence evolves.

In the first paper in this chapter, Robert M. Califf presents an overview of the alternatives to large randomized controlled trials (RCTs), and Telba Irony and David Eddy present three methods that have been developed to augment and improve current approaches to generating evidence. Califf suggests that, while the RCT is a valuable tool, the sheer volume of clinical decisions requires that we understand the best alternative methods to use when RCTs are inapplicable, infeasible, or impractical. He outlines the potential benefits and pitfalls of practical clinical trials (PCTs), cluster randomized trials, observational treatment comparisons, interrupted time series, and instrumental variables analysis. He notes that advances in methodology are important, but that increasing the evidence base will also require expanding our capacity to do clinical research: better organization, clinical trials embedded in a nodal network of health systems with electronic health records, and a critical mass of experts to guide us through study methodologies.

The rapid rate of turnover and improvement of medical devices makes their appraisal especially complicated. Telba Irony discusses the work of the Food and Drug Administration (FDA) in this area through the agency’s Critical Path Initiative and its Medical Device Innovation Initiative. The latter emphasizes the need for improved statistical approaches and techniques to learn about the safety and effectiveness of medical device interventions efficiently, and in ways that can adapt to changes in technology during evaluation periods. She discusses several examples of the use of Bayesian analysis to accelerate the approval process for medical devices. David M. Eddy presents his work with Archimedes to demonstrate how mathematical models offer a promising approach to answering clinical questions, particularly to fill the gaps in empirical evidence. Many current gaps in evidence relate to unresolved questions posed at the conclusion of clinical trials; however, most of these unanswered questions are not specifically addressed in subsequent trials because of factors including cost, feasibility, and clinical interest. Eddy suggests that models can be particularly useful in applying existing clinical trial data to issues such as head-to-head comparisons, combination therapy or dosing, extension of trial results to different settings, longer follow-up times, and heterogeneous populations. Recent work on diabetes prevention in high-risk patients illustrates how the mathematical modeling approach allowed investigators to extend trials in directions that were otherwise not feasible and provided much-needed evidence for truly informed decision making. Access to needed data will increase with the spread of electronic health records (EHRs) as long as person-specific data from existing trials are widely accessible.

As we accumulate increasing amounts of data and pioneer new ways to utilize information for patient benefit, we are also developing an improved understanding of increasingly complex patterns of comorbidity and insights into the effect of genetic variation and heterogeneity on diagnosis and treatment outcomes. Sheldon Greenfield outlines the many factors that lead to heterogeneity of treatment effects (HTE), that is, variations in results produced by the same treatment in different patients. These factors include genetic and environmental differences, adherence, polypharmacy, and competing risk. To improve the specificity of treatment recommendations, Greenfield suggests that prevailing approaches to study design and data analysis in clinical research must change. The authors propose two major strategies to decrease the impact of HTE in clinical research: (1) composite risk scores derived from multivariate models should be considered both in the design of a priori risk stratification groups and in the data analysis of clinical research studies; and (2) the full range of sources of HTE, many of which arise for members of the general population not eligible for trials, should be addressed by integrating the multiple existing phases of clinical research, both before and after an RCT.

In a related paper, David Goldstein gives several examples that illustrate the mounting challenges and opportunities posed by genomics in tailoring treatment appropriately. He highlights recent work on the Clinical Antipsychotic Trials of Intervention Effectiveness (CATIE), which compared the effectiveness of atypical antipsychotics and one typical antipsychotic in the treatment of schizophrenia and Alzheimer’s disease. Results indicated no difference between typical and atypical antipsychotics with respect to discontinuation of treatment; in terms of adverse reactions, however, such as increased body weight or development of the irreversible condition known as tardive dyskinesia, these medications were quite distinct. Pharmacogenetics thus offers the potential ability to identify subpopulations of risk or benefit through the development of clinically useful diagnostics, but only if we begin to amass the data, methods, and resources needed to support pharmacogenetics research.

The final cluster of papers in this chapter engages some of the policy issues in expanding sources of evidence, such as those related to the interoperability of electronic health records, expanding post-market surveillance and the use of registries, and mediating an appropriate balance between patient privacy and access to clinical data. Weisman et al. comment on the rich opportunities presented by interoperable EHRs for post-marketing surveillance data and the development of additional insights on risk and effectiveness. Again, methodologies outside of the RCT will be increasingly instrumental in filling gaps in evidence that arise from the use of data related to interventions in clinical practice because the full value of an intervention cannot truly be appreciated without real-world usage. Expanded systems for post-marketing surveillance offer substantial opportunities to generate evidence; and in defining the approach, we also have an opportunity to align the interests of many healthcare stakeholders. Consumers will have access to technologies as well as information on appropriate use; manufacturers and regulatory agencies might recognize significant benefit from streamlined or harmonized data collection requirements; and decision makers might acquire means to accumulate much-needed data for comparative effectiveness studies or recognition of safety signals. Steve Teutsch and Mark Berger comment on the obvious utility of clinical studies, particularly comparative effectiveness studies that demonstrate which technology is more effective, safer, or beneficial for subpopulations or clinical situations, for informing the decisions of patients, providers, and policy makers. However, they also note several of the inherent difficulties of our current approach to generating needed information, including a lack of consensus on evidence standards and how they might vary depending on circumstance, and a needed advancement in the utilization, improvement, and validation of study methodologies.

An underlying theme in many of the workshop papers is the effect of HIPAA (Health Insurance Portability and Accountability Act) regulation on current research and the possible implications for utilizing data collected at the point of care for generation of evidence on effectiveness of interventions. In light of the substantial gains in quality of care and advances in research possible by linking health information systems and aggregating and sharing data, consideration must be given to how to provide access while maintaining appropriate levels of privacy and security for personal health information. Janlori Goldman and Beth Tossell give an overview of some of the issues that have emerged in response to privacy concerns about shared medical information. While linking medical information offers clear benefits for improving health care, public participation is necessary and will hinge on privacy and security being built in from the outset. The authors suggest a set of first principles regarding identifiers, access, data integrity, and participation that help move the discussion toward a workable solution. This issue has been central to many discussions of how to better streamline the healthcare system and facilitate the process of clinical research, while maximizing the ability to provide privacy and security for patients. A recent Institute of Medicine (IOM) workshop, sponsored by the National Cancer Policy Forum, examined some of the issues surrounding HIPAA and its effect on research, and a formal IOM study on the topic is anticipated in the near future.

EVOLVING METHODS: ALTERNATIVES TO LARGE RANDOMIZED CONTROLLED TRIALS

Robert M. Califf, M.D.

Duke Translational Medicine Institute and the Duke University Medical Center

Researchers and policy makers have used observational analyses to support medical decision making since the beginning of organized medical practice. However, recent advances in information technology have allowed researchers access to huge amounts of tantalizing data in the form of administrative and clinical databases, fueling increased interest in the question of whether alternative analytical methods might offer sufficient validity to elevate observational analysis in the hierarchy of medical knowledge. In fact, 25 years ago, my academic career was initiated with access to one of the first prospective clinical databases, an experience that led to several papers on the use of data from practice and the application of clinical experience to the evaluation and treatment of patients with coronary artery disease (Califf et al. 1983). However, this experience led me to conclude that no amount of statistical analysis can substitute for randomization in ensuring internal validity when comparing alternative approaches to diagnosis or treatment.

Nevertheless, the sheer volume of clinical decisions made in the absence of support from randomized controlled trials requires that we understand the best alternative methods when classical RCTs are unavailable, impractical, or inapplicable. This discussion elaborates upon some of the alternatives to large RCTs, including practical clinical trials, cluster randomized trials, observational treatment comparisons, interrupted time series, and instrumental variables analysis, and reviews some of the potential benefits and pitfalls of each approach.

Practical Clinical Trials

The term “large clinical trial” or “megatrial” conjures an image of a gargantuan undertaking capable of addressing only a few critical questions. The term “practical clinical trial” is greatly preferred because the size of a PCT need be no larger than that required to answer the question posed in terms of health outcomes—whether patients live longer, feel better, or incur fewer medical costs. Such issues are the relevant outcomes that drive patients to use a medical intervention.

Unfortunately, not enough RCTs employ the large knowledge base that was used in developing the principles relevant to conducting a PCT (Tunis et al. 2003). A PCT must include the comparison or alternative therapy that is relevant to the choices that patients and providers will make; all too often, RCTs pick a “weak” comparator or placebo. The populations studied should be representative; that is, they should include patients who would be likely to receive the treatment, rather than including low-risk or narrow populations selected in hopes of optimizing the efficacy or safety profile of the experimental therapy. The time period of the study should include the period relevant to the treatment decision, unlike short-term studies that require hypothetical extrapolation to justify continuous use.

Also, the background therapy should be appropriate for the disease, an issue increasingly relevant in the setting of international trials that include populations from developing countries. Such populations may be composed of “treatment-naïve” patients, who will not offer the kind of therapeutic challenge presented by patients awaiting the new therapy in countries where active treatments are already available. Moreover, patients in developing countries usually do not have access to the treatment after it is marketed. Well-designed PCTs offer a solution to the “outsourcing” of clinical trials to populations of questionable relevance to therapeutic questions better addressed in settings where the treatments are intended to be used. Of course, the growth of clinical trials remains important for therapies that will actually be used in developing countries, and appropriate trials in these countries should be encouraged (Califf 2006a).

Therefore, the first alternative to a “classical” RCT is a properly designed and executed PCT. Research questions should be framed by the clinicians who will use the resulting information, rather than by companies aiming to create an advantage for their products through clever design. Similarly, a common fundamental mistake occurs when scientific experts without current knowledge of clinical circumstances are allowed to design trials. Instead, we need to involve clinical decision makers in the design of trials to ensure they are feasible and attractive to practice, as well as making certain that they include elements critical to providing generalizable knowledge for decision making.

Another fundamental problem is the clinical research enterprise’s lack of organization. In many ways, the venue for the conduct of clinical trials is hardly a system at all, but rather a series of singular experiences in which researchers must deal with hundreds of clinics, health systems, and companies (and their respective data systems). Infrastructure for performing trials should be supported by both the clinical care system and the National Institutes of Health (NIH), with continuous learning about the conduct of trials and constant improvements in their efficiency. However, the way trials are currently conducted is an engineering disaster. We hope that eventually trials will be embedded in a nodal network of health systems with electronic health records combined with specialty registries that cut across health systems (Califf et al. [in press]). Before this can happen, however, not only must EHRs be in place, but common data standards and nomenclature must be developed, and there must be coordination among numerous federal agencies (FDA, NIH, the Centers for Disease Control and Prevention [CDC], the Centers for Medicare and Medicaid Services [CMS]) and private industry to develop regulations that will not only allow, but encourage, use of interoperable data.

Alternatives to Randomized Comparisons

The fundamental need for randomization arises from the existence of treatment biases in practice. Recognizing that random assignment is essential to ensuring the internal validity of a study when the likely effects of an intervention are modest (and therefore subject to confounding by indication), we cannot escape the fact that nonrandomized comparisons will have less internal validity. However, nonrandomized analyses are nonetheless needed, because not every question can be answered by a classical RCT or a PCT, and a high-quality observational study is likely to be more informative than relying solely on clinical experience. For example, interventions come in many forms—drugs, devices, behavioral interventions, and organizational changes. All interventions carry a balance of potential benefit and potential risk; gathering important information on these interventions through an RCT or PCT might not always be feasible.

As an example of organizational changes requiring evaluation, consider the question: How many nurses, attendants, and doctors are needed for an inpatient unit in a hospital? Although standards for staffing have been developed for some environments relatively recently, in the era of computerized entry, EHRs, double-checking for medical errors, and bar coding, the proper allocation of personnel remains uncertain. Yet every day, executives make decisions based on data and trends, usually without a sophisticated understanding of their multivariable and time-oriented nature.

In other words, there is a disassociation between the experts in analysis of observational clinical data and the decision makers. There are also an increasing number of sources of data for decision making, with more and more healthcare systems and multispecialty practices developing data repositories. Instruments to extract data from such systems are also readily available. While these data are potentially useful, questionable data analyses and gluts of information (not all of it necessarily valid or useful) may create problems for decision makers.

Since PCTs are not feasible for answering the questions that underlie a good portion of the decisions made every day by administrators and clinicians, the question is not really whether we should look beyond the PCT. Instead, we should examine how best to integrate various modes of decision making, including both PCTs and other approaches to data analysis, in addition to opinion based on personal experience. We must ask ourselves: Is it better to combine evidence from PCTs with opinion, or is it better to use a layered approach using PCTs for critical questions and nonrandomized analyses to fill in gaps between clear evidence and opinion?

For the latter approach, we must think carefully about the levels of decision making that we must inform every day, the speed required for this, how to adapt the methodology to the level of certainty needed, and ways to organize growing data repositories and the researchers who will analyze them to better develop evidence to support these decisions. Much of the work in this arena is being conducted by the Centers for Education and Research in Therapeutics (CERTs) (Califf 2006b). The Agency for Healthcare Research and Quality (AHRQ) is a primary source of funding for these efforts, although significant increases in support will be needed to permit adequate progress in overcoming methodological and logistical hurdles.

Cluster Randomized Trials

If a PCT is not practical, the second alternative to large RCTs is cluster randomized trials. There is growing interest in this approach among trialists, because health systems increasingly provide venues in which practices vary and large numbers of patients are seen in environments that have good data collection capabilities. A cluster randomized trial performs randomization on the level of a practice rather than the individual patient. For example, certain sites are assigned to intervention A, others use intervention B, and a third group serves as a control. In large regional quality improvement projects, factorial designs can be used to test more than one intervention. This type of approach can yield clear and pragmatic answers, but as with any method, there are limitations that must be considered. Although methods have been developed to adjust for the nonindependence of observations within a practice, these methods are poorly understood and difficult to explain to clinical audiences. Another persistent problem is contamination that occurs when practices are aware of the experiment and alter their practices regardless of the randomized assignment. A further practical issue is obtaining informed consent from patients entering a health system where the practice has been randomized, recognizing that individual patient choice for interventions often enters the equation.
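The cost of the nonindependence of observations within a practice can be illustrated with the standard design effect for equal-sized clusters, 1 + (m - 1) × ICC, where m is the number of patients per cluster and ICC is the intraclass correlation coefficient. The sketch below is a minimal illustration only; the function names and the example figures (20 practices, 50 patients each, ICC of 0.05) are assumptions chosen for this example and do not come from any study cited in this chapter.

```python
def design_effect(cluster_size, icc):
    """Variance inflation from randomizing clusters instead of individuals.

    Assumes equal cluster sizes and an intraclass correlation in [0, 1].
    """
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    if not 0.0 <= icc <= 1.0:
        raise ValueError("icc must lie between 0 and 1 for this sketch")
    return 1.0 + (cluster_size - 1) * icc


def effective_sample_size(n_clusters, cluster_size, icc):
    """Number of independent patients that would carry the same information."""
    total_patients = n_clusters * cluster_size
    return total_patients / design_effect(cluster_size, icc)


# Hypothetical trial: 20 practices, 50 patients each, ICC = 0.05.
deff = design_effect(50, 0.05)
n_eff = effective_sample_size(20, 50, 0.05)
print(f"design effect: {deff:.2f}, effective sample size: {n_eff:.0f}")
```

Under these assumed inputs, 1,000 enrolled patients carry roughly the information of 290 independently randomized patients, which is why even a small within-practice correlation must be accounted for in both sample size planning and analysis.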

There are many examples of well-conducted cluster randomized trials. The Society of Thoracic Surgeons (STS), one of the premier learning organizations in the United States, has a single database containing data on more than 80 percent of all operations performed (Welke et al. 2004). Ferguson and colleagues (Ferguson et al. 2002) performed randomization at the level of surgical practices to test a behavioral intervention to improve use of postoperative beta blockers and the use of the internal thoracic artery as the main conduit for myocardial revascularization. Embedding this study into the ongoing STS registry proved advantageous, because investigators could examine what happened before and what happened after the experiment. They were able to show that both interventions work, that the use of this practice improved surgical outcomes, and that national practice improved after the study was completed.

Variations of this methodologic approach have also been quite successful, such as the amalgamation of different methods described in a recent study by Schneeweiss et al. (2004). This study used both cluster randomization and time sequencing embedded in a single trial to examine nebulized respiratory therapy in adults and the effects of a policy change. Both approaches were found to yield similar results with regard to healthcare utilization, cost, and outcomes.

Observational Treatment Comparisons

A third alternative to RCTs is the observational treatment comparison. This is a potentially powerful technique requiring extensive experience with multiple methodological issues. Unfortunately, the somewhat delicate art of observational treatment comparison is mostly in the hands of naïve practitioners, administrators, and academic investigators who obtain access to databases without the skills to analyze them properly. The underlying assumption of the observational treatment comparison is that if the record includes information on which patients received which treatment, and outcomes have been measured, a simple analysis can evaluate which treatment is better. However, in using observational treatment comparisons, one must always consider not only the possibility of confounding by indication and inception time bias, but also the possibility of missing baseline data needed to adjust for differences, missing follow-up data, and poor characterization of outcomes due to a lack of prespecification. In order to deal with confounding, observational treatment comparisons must include adjustment for known prognostic factors, adjustment for propensity (including consideration of inverse probability weighted estimators for chronic treatments), and employment of time-adjusted covariates when inception time is variable.
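The effect of confounding by indication, and what an inverse probability weighted adjustment does about it, can be sketched with a small constructed cohort. Everything in the example below is hypothetical: the cohort counts, the single binary confounder `severe`, and the function names are assumptions made for illustration, and the propensity is estimated simply as the treated fraction within each severity stratum rather than from a fitted model. The cohort is built so that treatment has no true effect on mortality, but sicker patients are treated more often.

```python
from collections import defaultdict


def build_cohort():
    """Hypothetical cohort as (severe, treated, died) tuples.

    Within each severity stratum, mortality is identical in treated and
    untreated patients, so the true treatment effect is zero.
    """
    # (severe, treated, number of patients, number of deaths)
    cells = [(1, 1, 80, 40), (1, 0, 20, 10), (0, 1, 20, 2), (0, 0, 80, 8)]
    rows = []
    for severe, treated, n, deaths in cells:
        rows += [(severe, treated, 1)] * deaths
        rows += [(severe, treated, 0)] * (n - deaths)
    return rows


def naive_risk_difference(rows):
    """Unadjusted mortality difference, treated minus untreated."""
    risk = {}
    for arm in (0, 1):
        outcomes = [died for _, treated, died in rows if treated == arm]
        risk[arm] = sum(outcomes) / len(outcomes)
    return risk[1] - risk[0]


def ipw_risk_difference(rows):
    """Inverse probability weighted mortality difference.

    The propensity P(treated | severe) is the observed treated fraction
    in each stratum; each patient is weighted by the inverse of the
    probability of the treatment actually received.
    """
    n_in_stratum = defaultdict(int)
    treated_in_stratum = defaultdict(int)
    for severe, treated, _ in rows:
        n_in_stratum[severe] += 1
        treated_in_stratum[severe] += treated
    propensity = {s: treated_in_stratum[s] / n_in_stratum[s] for s in n_in_stratum}

    weighted_deaths = {0: 0.0, 1: 0.0}
    weight_total = {0: 0.0, 1: 0.0}
    for severe, treated, died in rows:
        p = propensity[severe]
        weight = 1.0 / p if treated == 1 else 1.0 / (1.0 - p)
        weighted_deaths[treated] += weight * died
        weight_total[treated] += weight
    return (weighted_deaths[1] / weight_total[1]
            - weighted_deaths[0] / weight_total[0])


cohort = build_cohort()
print(f"naive risk difference: {naive_risk_difference(cohort):+.2f}")
print(f"IPW risk difference:   {ipw_risk_difference(cohort):+.2f}")
```

In this constructed cohort the unadjusted comparison suggests a large excess mortality with treatment, while the weighted comparison recovers the absence of an effect. The adjustment works here only because the sole confounder is measured; weighting cannot correct for prognostic factors that are absent from the record.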

Resolving some of these issues with definitions of outcomes and missing data will be greatly aided by development of interoperable clinical research networks that work together over time with support from government agencies. One example is the National Electronic Clinical Trials and Research (NECTAR) network—a planned NIH network that will link practices in the United States to academic medical centers by means of interoperable data systems. Unfortunately, NECTAR remains years away from actual implementation.

Despite the promise of observational studies, there are limitations that cannot be overcome even by the most experienced of researchers. For example, SUPPORT (Study to Understand Prognoses and Preferences for Outcomes and Risks of Treatment) (Connors et al. 1996; Cowley and Hager 1996) examined use of a right heart catheter (RHC) using prospectively collected data, so there were almost no missing data. After adjusting for all known prognostic factors and using a carefully developed propensity score, this study found an association between use of RHC in critically ill patients and an increased risk of death. Thirty other observational studies came to the same conclusion, even when looking within patient subgroups to ensure that comparisons were being made between comparable groups.

None of the credible observational studies showed a benefit associated with RHC, yet more than a billion dollars’ worth of RHCs were being inserted in the United States every year.

Eventually, five years after publication of the SUPPORT RHC study, the NIH funded a pair of RCTs. One focused on heart disease and the other on medical intensive care. The heart disease study (Binanay et al. 2005; Shah et al. 2005) was a very simple trial in which patients were selected on the basis of admission to a hospital with acute decompensated heart failure. These patients were randomly assigned to receive either an RHC or standard care without an RHC. This trial found no evidence of harm or of benefit attributable to RHC. Moreover, other trials were being conducted around the world; when all the randomized data were in, the point estimate comparing the two treatments was 1.003: as close to “no effect” as we are likely ever to see. In this instance, even with some of the most skillful and experienced researchers in the world working to address the question of whether RHC is a harmful intervention, the observational data clearly pointed to harm, whereas RCTs indicated no particular harm or benefit.

Another example is drawn from the question of the association between hemoglobin and renal dysfunction. It is known that as renal function declines, there is a corresponding decrease in hemoglobin levels; therefore, worse renal function is associated with anemia. Patients with renal dysfunction and anemia have a significantly higher risk of dying, compared to patients with the same degree of renal dysfunction but without anemia. Dozens of different databases all showed the same relationship: the greater the decrease in hemoglobin level, the worse the outcome.

Based on these findings, many clinicians and policy makers assumed that by giving a drug to manage the anemia and improve hematocrit levels, outcomes would also be improved. Thus, erythropoietin treatment was developed and, on the basis of observational studies and very short-term RCTs, has become a national practice standard. There are performance indicators that identify aggressive hemoglobin correction as a best practice; CMS pays for it; and nephrologists have responded by giving billions of dollars’ worth of erythropoietin to individuals with renal failure, with resulting measurable increases in average hemoglobin.

To investigate effects on outcome, the Duke Clinical Research Institute (DCRI) coordinated a PCT in patients who had renal dysfunction but did not require dialysis (Singh et al. 2006). Subjects were randomly assigned to one of two different target levels of hematocrit, normal or below normal. We could not use placebo, because most nephrologists were absolutely convinced of the benefit of erythropoietin therapy. However, when an independent data monitoring committee stopped the study for futility, a trend toward worse outcomes (death, stroke, heart attack, or heart failure) was seen in patients randomized to the more “normal” hematocrit target; when the final data were tallied, patients randomized to the more aggressive target had a significant increase in the composite of death, heart attack, stroke and heart failure. Thus the conclusions drawn from observational comparisons were simply incorrect.

These examples of highly touted observational studies that were ultimately seen to have provided incorrect answers (both positive and negative for different interventions) highlight the need to improve methods aimed at mitigating these methodological pitfalls. We must also consider how best to develop a critical mass of experts to guide us through these study methodologies, and what criteria should be applied to different types of decisions to ensure that the appropriate methods have been used.

Interrupted Time Series and Instrumental Variables

A fourth alternative to large RCTs is the interrupted time series. This study design requires significant expertise because it includes all the potential difficulties of observational treatment comparisons, plus uncertainties about temporal trends. One example is drawn from an analysis of administrative data, in which data were used to assess retrospective drug utilization review and effects on the rate of prescribing errors and on clinical outcomes (Hennessy et al. 2003). This study concluded that, although retrospective drug utilization review is required of all state Medicaid programs, the authors were unable to identify an effect on the rate of exceptions or on clinical outcomes.
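One common way to formalize an interrupted time series is segmented regression, shown here as a general sketch rather than as the model used in the study cited above. The symbols are assumptions introduced for illustration: Y_t is the outcome rate in period t, t_0 is the period in which the policy takes effect, and D_t indicates the post-policy periods.

```latex
Y_t = \beta_0 + \beta_1 t + \beta_2 D_t + \beta_3 (t - t_0) D_t + \varepsilon_t,
\qquad
D_t =
\begin{cases}
0, & t < t_0 \\
1, & t \ge t_0
\end{cases}
```

Here \beta_1 is the underlying temporal trend, \beta_2 is the immediate change in level at the interruption, and \beta_3 is the change in slope afterward. The uncertainties about temporal trends enter through \beta_1 and the error term: if the pre-policy trend is misspecified or the errors are autocorrelated, the estimated policy effects \beta_2 and \beta_3 can be misleading.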

The final alternative to RCTs is the use of instrumental variables, which are variables unrelated to biology that produce a contrast in treatment that can be characterized. A national quality improvement registry of patients with acute coronary syndromes evaluated the outcomes of use of early versus delayed cardiac catheterization using instrumental variable analysis (Ryan et al. 2005). The instrumental variable in this case was whether the patient was admitted to the hospital on the weekend (when catheterization delays were longer) or on a weekday (when time to catheterization was shorter). Results indicated a trend toward greater benefit of early invasive intervention in this high-risk condition. One benefit of this approach is that variables can be embedded in an ongoing registry (e.g., population characteristics in a particular zip code can be used to create an approximation of the socioeconomic status of a group of patients). However, results often are not definitive, and it is common for this type of study design to raise many more questions than it answers.
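For a binary instrument such as weekday versus weekend admission, the simplest instrumental variable estimator is the Wald ratio: the difference in outcome between the two instrument groups divided by the difference in treatment rates between them. The sketch below is illustrative only. The counts are invented for the example and are not the registry results, the function and variable names are assumptions, and the estimate is interpretable only if day of admission affects outcome solely through the timing of catheterization.

```python
def wald_iv_estimate(instrument, treatment, outcome):
    """Wald ratio for a binary instrument.

    Returns (outcome difference between instrument groups) divided by
    (treatment-rate difference between instrument groups).
    """
    def mean_where(values, flag):
        selected = [v for z, v in zip(instrument, values) if z == flag]
        return sum(selected) / len(selected)

    outcome_diff = mean_where(outcome, 1) - mean_where(outcome, 0)
    treatment_diff = mean_where(treatment, 1) - mean_where(treatment, 0)
    if treatment_diff == 0:
        raise ValueError("instrument does not change treatment rates")
    return outcome_diff / treatment_diff


# Hypothetical counts: 100 weekday and 100 weekend admissions.
# Weekday: 70 receive early catheterization, 10 die.
# Weekend: 30 receive early catheterization, 14 die.
weekday = [1] * 100 + [0] * 100
early_cath = [1] * 70 + [0] * 30 + [1] * 30 + [0] * 70
died = [1] * 10 + [0] * 90 + [1] * 14 + [0] * 86

estimate = wald_iv_estimate(weekday, early_cath, died)
print(f"estimated effect of early catheterization on mortality: {estimate:+.2f}")
```

With these invented counts, a 4-point difference in mortality spread over a 40-point difference in early catheterization yields an estimate of about a 10-point reduction in mortality among patients whose timing was determined by day of admission. Because the denominator is often small in practice, such estimates tend to be imprecise, which is consistent with the observation that results often are not definitive.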

Future Directions: Analytical Synthesis

A national network funded by the AHRQ demonstrates a concerted, systematic approach to addressing all these issues in the context of clinical questions that require a synthesis of many types of analysis. The Developing Evidence to Inform Decisions about Effectiveness (DEcIDE) network seeks to inform the decisions that patients, healthcare providers, and administrators make about therapeutic choices. The DCRI’s first project as part of the DEcIDE Network examines the issue of choice of coronary stents. This is a particularly interesting study because, while there are dozens of RCTs addressing this question, new evidence continues to emerge. Briefly, when drug-eluting stents (DES) first became available to clinicians, there was a radical shift in practice from bare metal stents (BMS) to DES. Observational data from our database—now covering about 30 years of practice—are very similar to those reported in RCTs and indicate reduced need for repeat procedures with DES, because they prevent restenosis in the stented area.

The problem, however, is that only one trial has examined long-term outcomes among patients who were systematically instructed to discontinue dual platelet aggregation inhibitors (i.e., aspirin and clopidogrel). This study (Pfisterer et al. In press; Harrington and Califf 2006) was funded by the Swiss government and shows a dramatic increase in abrupt thrombosis in people with DES compared with BMS when clopidogrel was discontinued per the package insert instructions, leaving the patients receiving only aspirin to prevent platelet aggregation. In the year following discontinuation of clopidogrel therapy, the primary composite end point of cardiac death or myocardial infarction occurred significantly more frequently among patients with DES than in the BMS group. This was a small study, but it raises an interesting question: If you could prevent restenosis in 10 out of 100 patients but had 1 case of acute heart attack per 100, how would you make that trade-off? This is precisely the question we are addressing with the DEcIDE project.

Despite all these complex issues, the bottom line is that when evidence is applied systematically to practice improvement, there is a continuous improvement in patient outcomes (Mehta et al. 2002; Bhatt et al. 2004). Thus the application of clinical practice guidelines and performance measures seems to be working, but all of us continue to dream about improving the available evidence base and using this evidence on a continuous basis. However, this can only come to pass when we use informatics to integrate networks, not just within health systems but across the nation. We will need continuous national registries (of which we now have examples), but we also need to link existing networks so that clinical studies can be conducted more effectively. This will help ensure that patients, physicians, and scientists form true “communities of research” as we move from typical networks of academic health center sites linked only by a single data coordinating center to networks where interoperable sites can share data.

A promising example of this kind of integration exists in the developing network for child psychiatry. This is a field that historically has lacked evidence to guide treatments; however, there are currently 200 psychiatrists participating in the continuous collection of data that will help answer important questions, using both randomized and nonrandomized trials (March et al. 2004).

The classical RCT remains an important component of our evidence-generating system. However, it needs to be replaced in many situations by the PCT, which has a distinctly different methodology but includes the critical element of randomization. Given the enormous number of decisions that could be improved by appropriate decision support, however, alternative methods for assessing the relationships between input variables and clinical outcomes must also be used. We now have the technology in place in many health systems and government agencies to incorporate decision support into practice, and methods will evolve with use. An appreciation of both the pitfalls and the advantages of these methods, together with the contributions of experienced analysts, will be critical to avoiding errant conclusions drawn from these complex datasets, in which confounding and nonintuitive answers are the rule rather than the exception.

EVOLVING METHODS: EVALUATING MEDICAL DEVICE INTERVENTIONS IN A RAPID STATE OF FLUX

Telba Irony, Ph.D.

Center for Devices and Radiological Health, Food and Drug Administration

Methodological obstacles slow down the straightforward use of clinical data and experience to assess the safety and effectiveness of new medical device interventions in a rapid state of flux. This paper discusses current and future technology trends, the FDA’s Critical Path Initiative, the Center for Devices and Radiological Health (CDRH) Medical Device Innovation Initiative and, in particular, statistical methodology currently being implemented by CDRH to take better advantage of data generated by clinical studies designed to assess the safety and effectiveness of medical device interventions.

The Critical Path is the FDA’s premier initiative aiming to identify and prioritize the most pressing medical product development problems and the greatest opportunities for rapid improvement in public health benefits. As a major source of breakthrough technology, medical devices are becoming more critical to the delivery of health care in the United States. In addition, they are becoming more and more diverse and complex as improvements are seen in devices ranging from surgical sutures and contact lenses to prosthetic heart valves and diagnostic imaging systems.

There are exciting emerging technology trends on the horizon and our objective is to obtain evidence on the safety and effectiveness of new medical device products as soon as possible to ensure their quick approval and time to market. New trends comprise computer-related technology and molecular medicine including genomics, proteomics, gene therapy, bioinformatics, and personalized medicine. We will also see new developments in wireless systems and robotics to be applied in superhigh-spatial-precision surgery, in vitro sample handling, and prosthetics. We foresee an increase in the development and use of minimally invasive technologies, nanotechnology (extreme miniaturization), new diagnostic procedures (genetic, in vitro, or superhigh-resolution sensors), artificial organ replacements, decentralized health care (home or self-care, closed-loop home systems, and telemedicine), and products that are a combination of devices and drugs.

CDRH’s mission is to establish a reasonable assurance of the safety and effectiveness of medical devices and the safety of radiation-emitting electronic products marketed in the United States. It also includes monitoring medical devices and radiological products for continued safety after they are in use, as well as helping the public receive accurate, evidence-based information needed to improve health. To accomplish its mission, CDRH must perform a balancing act to get safe and effective devices to the market as quickly as possible while ensuring that devices currently on the market remain safe and effective. To better maintain this balance and confront the challenge of evaluating new medical device interventions in a rapid state of flux, CDRH is promoting the Medical Device Innovation Initiative. Through this initiative, CDRH is expanding current efforts to promote scientific innovation in product development, focusing device research on cutting-edge science, modernizing the review of innovative devices, and facilitating a least burdensome approach to clinical trials. Ongoing efforts include the development of guidance documents to improve clinical trials and to maximize the information gathered by such trials, the expansion of laboratory research, a program to improve the quality of the review of submissions to the CDRH, and expansion of the clinical and scientific expertise at the FDA. The Medical Device Critical Path Opportunities report (FDA 2004) identified key opportunities in the development of biomarkers, improvement in clinical trial design, and advances in bioinformatics, device manufacturing, public health needs, and pediatrics.

The “virtual family” is an example of a project encompassed by this initiative. It consists of the development of anatomically and physiologically accurate adult and pediatric virtual circulatory systems to help assess the safety and effectiveness of new stent designs prior to fabrication, physical testing, animal testing, and human trials. This project is based on a computer simulation model that is designed to mimic all physical and physiological responses of a human being to a medical device. It is the first step toward a virtual clinical trial subject. Another example is the development of a new statistical model for predicting the effectiveness of implanted cardiac stents through surrogate outcomes, to measure and improve the long-term safety of these products.

To better generate evidence on which to base clinical decisions, the Medical Device Innovation Initiative emphasizes the need for improved statistical approaches and techniques to learn about the safety and effectiveness of medical device interventions in an efficient way. It seeks to conduct smaller and possibly shorter trials, and to create a better decision-making process.

Well-designed and conducted clinical trials are at the center of clinical decision making today and the clinical trial gold standard is the prospectively planned, randomized, controlled clinical trial. However, it is not always feasible to conduct such a trial, and in many cases, conclusions and decisions must be based on controlled, but not randomized, clinical trials, comparisons of an intervention to a historical control or registry, observational studies, meta-analyses based on publications, and post-market surveillance. There is a crucial need to improve assessment and inference methods to extract as much information as possible from such studies and to deal with different types of evidence.

Statistical methods are evolving as we move to an era of large volumes of data on platforms conducive to analyses. However, being able to easily analyze data can also be dangerous because it can lead to false discoveries, resources wasted chasing false positives, wrong conclusions, and suboptimal or even bad decisions. CDRH is therefore investigating new statistical technology that can help avoid misleading conclusions, provide efficient and faster ways to learn from evidence, and enable better and faster medical decision making. Examples include new methods to adjust for multiplicity to ensure that study findings will be reproduced in practice as well as new methods to deal with subgroup analysis.

A relatively new statistical method that is being used to reduce bias in the comparison of an intervention to a nonrandomized control group is propensity score analysis. It is a method to match patients by finding patients that are equivalent in the treatment and control groups. This statistical method may be used in nonrandomized controlled trials and the control group may be a registry or a historical control. The use of this technique in observational studies attempts to balance the observed covariates. However, unlike trials in which there is random assignment of treatments, this technique cannot balance the unobserved covariates.
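The matching idea described above can be sketched in a few lines. The example below is only an illustration under stated assumptions: the data are synthetic, there is a single made-up covariate, the propensity score is fitted with a hand-written logistic regression, and the greedy nearest-neighbour matching with a caliper of 0.05 is one common choice rather than a method attributed to CDRH. All function names are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_propensity(xs, treated, lr=0.5, epochs=500):
    """Estimate P(treated | x) with a one-covariate logistic regression
    fitted by plain gradient ascent on the log-likelihood."""
    b0 = b1 = 0.0
    n = len(xs)
    for _ in range(epochs):
        g0 = g1 = 0.0
        for x, t in zip(xs, treated):
            resid = t - sigmoid(b0 + b1 * x)
            g0 += resid
            g1 += resid * x
        b0 += lr * g0 / n
        b1 += lr * g1 / n
    return [sigmoid(b0 + b1 * x) for x in xs]

def match_pairs(scores, treated, caliper=0.05):
    """Greedy 1:1 nearest-neighbour matching on the score, without replacement.
    Treated patients with no control inside the caliper stay unmatched."""
    controls = [i for i, t in enumerate(treated) if t == 0]
    pairs = []
    for i in (i for i, t in enumerate(treated) if t == 1):
        if not controls:
            break
        j = min(controls, key=lambda c: abs(scores[c] - scores[i]))
        if abs(scores[j] - scores[i]) <= caliper:
            pairs.append((i, j))
            controls.remove(j)
    return pairs

def mean(values):
    return sum(values) / len(values)

# Synthetic, made-up data: one standardised covariate drives treatment choice,
# so the raw treated and control groups are not comparable.
random.seed(0)
xs = [random.gauss(0.0, 1.0) for _ in range(400)]
treated = [1 if random.random() < sigmoid(x) else 0 for x in xs]

scores = fit_propensity(xs, treated)
pairs = match_pairs(scores, treated)

gap_before = (mean([x for x, t in zip(xs, treated) if t == 1])
              - mean([x for x, t in zip(xs, treated) if t == 0]))
gap_after = mean([xs[i] - xs[j] for i, j in pairs])
print(f"covariate gap before matching: {gap_before:.3f}")
print(f"covariate gap after matching:  {gap_after:.3f} ({len(pairs)} pairs)")
```

The sketch balances only the covariate that was measured and modelled; as the text notes, nothing here can balance covariates that were never observed.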

One of the new statistical methods being used to design and analyze clinical trials is the Bayesian approach, which has been implemented and used at CDRH for the last seven years, giving excellent results. The Bayesian approach is a statistical theory and approach to data analysis that provides a coherent method for learning from evidence as it accumulates. Traditional (also called frequentist) statistical methods formally use prior information only in the design of a clinical trial. In the data analysis stage, prior information is not part of the analysis. In contrast, the Bayesian approach uses a consistent, mathematically formal method called Bayes’ Theorem for combining prior information with current information on a quantity of interest. When good prior information on a clinical use of a medical device exists, the Bayesian approach may enable the FDA to reach the same decision on a device with a smaller-sized or shorter-duration pivotal trial. Good prior information is often available for medical devices. The sources of prior information include the company’s own previous studies, previous generations of the same device, data registries, data on similar products that are available to the public, pilot studies, literature controls, and legally available previous experience using performance characteristics of similar products. The payoff of this approach is the ability to conduct smaller and shorter trials, and to use more information for decision making. Medical device trials are amenable to the use of prior information because the mechanism of action of medical devices is typically physical, making the effects local and not systemic. Local effects are often predictable from prior information when modifications to a device are minor.
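A small worked example may help show how prior information and current data combine. The sketch below assumes the simplest conjugate case, a success rate with a Beta prior and binomial trial data, which is a textbook illustration and not a description of any particular CDRH analysis. The prior counts and trial counts are invented for illustration.

```python
def update_beta(prior_a, prior_b, successes, failures):
    """Bayes' Theorem for a Beta prior and binomial data: the posterior is
    again a Beta distribution with the observed counts added to the prior."""
    return prior_a + successes, prior_b + failures

def beta_mean(a, b):
    return a / (a + b)

def beta_sd(a, b):
    return (a * b / ((a + b) ** 2 * (a + b + 1))) ** 0.5

# Hypothetical new trial: 80 successes among 100 patients.
new_successes, new_failures = 80, 20

# Hypothetical informative prior, e.g. from a previous device generation,
# encoded as Beta(45, 5); and a flat Beta(1, 1) prior for comparison.
informative = update_beta(45, 5, new_successes, new_failures)
flat = update_beta(1, 1, new_successes, new_failures)

for label, (a, b) in (("informative prior", informative), ("flat prior", flat)):
    print(f"{label}: posterior Beta({a}, {b}), "
          f"mean {beta_mean(a, b):.3f}, sd {beta_sd(a, b):.3f}")
```

With the same trial data, the posterior built on the informative prior has a smaller standard deviation than the one built on the flat prior, which is the sense in which good prior information can support a smaller or shorter trial.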

Bayesian methods may be controversial when the prior information is based mainly on personal opinion (often derived by elicitation methods). They are often not controversial when the prior information is based on empirical evidence such as prior clinical trials. Since sample sizes are typically small for device trials, good prior information can have greater impact on the analysis of the trial and thus on the FDA decision process.

The Bayesian approach may also be useful in the absence of informative prior information. First, the approach can provide flexible methods for handling interim analyses and other modifications to trials in midcourse (e.g., changes to the sample size). Conducting an interim analysis during a Bayesian clinical trial and being able to predict the outcome at midcourse enables early stopping either for early success or for futility. Another advantage of the Bayesian approach is that it allows for changing the randomization ratio at mid-trial. This can ensure that more patients in the trial receive the intervention with the highest probability of success, and it is not only ethically preferable but also encourages clinical trial participation. Finally, the Bayesian approach can be useful in complex modeling situations where a frequentist analysis is difficult to implement or does not exist.
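Predicting the outcome at midcourse can be sketched with a predictive probability. The example assumes a single-arm trial with a binary end point, a Beta prior, and a success criterion expressed as a minimum number of successes at the final analysis. The stopping thresholds of 0.95 and 0.05 and all function names are hypothetical, chosen only to make the sketch concrete.

```python
from math import comb, exp, lgamma

def log_beta(a, b):
    return lgamma(a) + lgamma(b) - lgamma(a + b)

def beta_binomial_pmf(k, m, a, b):
    """Probability of k successes among m future patients when the success
    rate has a Beta(a, b) distribution."""
    return comb(m, k) * exp(log_beta(a + k, b + m - k) - log_beta(a, b))

def predictive_probability(s, n, n_max, needed, a=1.0, b=1.0):
    """Chance that the completed trial reaches `needed` successes, given
    s successes among the first n of n_max patients and a Beta(a, b) prior."""
    m = n_max - n                       # patients still to be observed
    a_post, b_post = a + s, b + (n - s)
    k_min = max(needed - s, 0)          # further successes still required
    return sum(beta_binomial_pmf(k, m, a_post, b_post)
               for k in range(k_min, m + 1))

def interim_decision(pp, success_cut=0.95, futility_cut=0.05):
    if pp >= success_cut:
        return "stop: predicted success"
    if pp <= futility_cut:
        return "stop: futility"
    return "continue"

# Hypothetical interim look: 18 successes in the first 20 of 40 patients,
# with 30 successes needed at the final analysis.
pp = predictive_probability(s=18, n=20, n_max=40, needed=30)
print(f"predictive probability of success: {pp:.3f} -> {interim_decision(pp)}")
```

A real design would fix the thresholds in advance and check the operating characteristics of the rule by simulation; none of that is shown here.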

Several devices have been approved through the use of the Bayesian approach. The first example was the INTER FIX™ Threaded Fusion Device by Medtronic Sofamor Danek, which was approved in 1999. That device is indicated for spinal fusion in patients with degenerative disc disease. In that case, a Bayesian predictive analysis was used in order to stop the trial early. The statistical plan used data from 12-month visits combined with partial data from 24-month visits to predict the results for patients who had not yet reached 24 months in the study. After approval, the sponsor completed the follow-up requirements for the patients enrolled in the study. The final results validated the Bayesian predictive analysis, which significantly reduced the time that was needed for completion of the trial (FDA 1999).

Another example is the clinical trial designed to assess the safety and effectiveness of the LT-CAGE™ Tapered Fusion Device, by Medtronic Sofamor Danek, approved in September 2000. This device is also indicated for spinal fusion in patients with degenerative disc disease. The trial to assess safety and effectiveness of the device was planned as Bayesian, and Bayesian statistical methods were used to analyze the results. Data from patients evaluated at both 12 and 24 months were combined with data from patients evaluated only at 12 months in order to make predictions and comparisons for success rates at 24 months. The Bayesian predictions performed during the interim analyses significantly reduced the sample size and the time that was needed for completion of the trial. Again, the results were later confirmed (Lipscomb et al. 2005; FDA 2002).

A third example, in which prior information was used along with interim analyses, is the Bayesian trial for the St. Jude Medical Regent heart valve, which was a modification of the previously approved St. Jude Standard heart valve. The objective of this trial was to assess the safety and effectiveness of the Regent heart valve. The trial used prior information from the St. Jude Standard heart valve by borrowing the information via Bayesian hierarchical models. In addition, the Bayesian experimental design provided a method to determine the stopping time based on the amount of information gathered during the trial and the prediction of what future results would be. The trial stopped early for success (FDA 2006).

In 2006, the FDA issued a draft guidance for industry and FDA staff that elaborates on the use of Bayesian methods. It covers Bayesian statistics, planning a Bayesian clinical trial, analyzing a Bayesian clinical trial, and post-market surveillance. A public meeting for discussion of the guidance took place in July 2006; information about it can be found at http://www.fda.gov/cdrh/meetings/072706-bayesian.html.

In general, adaptive trial designs, either Bayesian or frequentist, constitute an emerging field that seems to hold promise for more ethical and efficient development of medical interventions by allowing fuller integration of available knowledge as trials proceed. However, all aspects and trade-offs of such designs need to be understood before they are widely used. Clearly there are major logistic, procedural, and operational challenges in using adaptive clinical trial designs, not all of them as yet resolved. However, they have the potential to play a large role and be beneficial in the future. The Pharmaceutical Research and Manufacturers of America (PhRMA) and the FDA organized a workshop that took place on November 13 and 14, 2006, in Bethesda, Maryland, to discuss challenges, opportunities, and scope of adaptive trial designs in the development of medical interventions. PhRMA has formed a working group on adaptive designs that aims to facilitate a constructive dialogue on the topic by engaging statisticians, clinicians, and other stakeholders in academia, regulatory agencies, and industry to facilitate broader consideration and implementation of such designs. PhRMA produced a series of articles that have been published in the Drug Information Journal, Volume 40, 2006.

Finally, formal decision analysis is a mathematical tool that should be used when making decisions on whether or not to approve a device. This methodology has the potential to enhance the decision-making process and make it more transparent by better accounting for the magnitude of the benefits as compared with the risks of a medical intervention.
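One very reduced form of such an analysis weighs the probability and size of a benefit against the probability and size of a harm. The sketch below assumes a single benefit and a single harm measured on a common, arbitrary utility scale. The probabilities (a benefit in 10 of 100 patients, a harm in 1 of 100) and the utility weights are purely illustrative, and the function names are hypothetical.

```python
def expected_net_benefit(p_benefit, value_benefit, p_harm, cost_harm):
    """Expected utility per patient of using the device, relative to not
    using it, on an arbitrary common utility scale."""
    return p_benefit * value_benefit - p_harm * cost_harm

def break_even_harm_cost(p_benefit, value_benefit, p_harm):
    """Harm cost at which expected benefit and expected harm exactly cancel."""
    return p_benefit * value_benefit / p_harm

# Illustrative inputs only: one unit of utility per benefit received.
p_benefit, value_benefit, p_harm = 0.10, 1.0, 0.01

threshold = break_even_harm_cost(p_benefit, value_benefit, p_harm)
print(f"break-even harm cost: {threshold:.1f} benefit units")
for cost_harm in (5.0, 20.0):
    net = expected_net_benefit(p_benefit, value_benefit, p_harm, cost_harm)
    print(f"harm cost {cost_harm:>4.1f}: expected net benefit {net:+.3f}")
```

Making the weights explicit is what lends transparency: with these inputs the device is favored only if one harm is judged less than about ten times as bad as one benefit is good, and that judgment can then be debated openly.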

CDRH is also committed to achieving a seamless approach to regulation of medical devices in which the pre-market activities are integrated with continued post-market surveillance and enforcement. In addition, appropriate and timely information is fed back to the public. This regulatory approach encompasses the entire life cycle of a medical device. The “total product life cycle” enhances CDRH’s ability to fulfill its mission to protect and promote public health. CDRH’s pre-market review program cannot guarantee that all legally marketed devices will function perfectly in the post-market setting. Pre-market data provide a reasonable estimate of device performance but may not be large enough to detect the occurrence of rare adverse events. Moreover, device performance can render unanticipated outcomes in post-market use, when the environment is not as controlled as in the pre-market setting. Efforts are made to forecast post-market performance based on pre-market data, but the dynamics of the post-market environment create unpredictable conditions that are impossible to investigate during the pre-market phase. As a consequence, CDRH is committed to a Post-market Transformation Initiative and recently published two documents on the post-market safety of medical devices. One describes CDRH’s post-market tools and the approaches used to monitor and address adverse events and risks associated with the use of medical devices that are currently on the market (see “Ensuring the Safety of Marketed Medical Devices: CDRH’s Medical Device Post-market Safety Framework”). The second document provides a number of recommendations for improving the post-market program (see “Report of the Post-market Transformation Leadership Team: Strengthening FDA’s Post-market Program for Medical Devices”). Both of these documents are available at http://www.fda.gov/cdrh/postmarket/mdpi.html. It is important to mention that one of the recommended actions to transform the way CDRH handles post-market information to assess the performance of marketed medical device products is to design a pilot study to investigate quantitative decision-making techniques to evaluate medical devices throughout the “total product life cycle.”

In conclusion, as the world of medical devices becomes more complex, the Center for Devices and Radiological Health is developing tools to collect information, make decisions, and manage risk in the twenty-first century. Emerging medical device technology will fundamentally transform the healthcare and delivery system, provide new and cutting-edge solutions, challenge existing paradigms, and revolutionize the way treatments are administered.

EVOLVING METHODS: MATHEMATICAL MODELS TO HELP FILL THE GAPS IN EVIDENCE

David M. Eddy, M.D., Ph.D., and David C. Kendrick, M.D., M.P.H.

Archimedes, Inc.

A commitment to evidence-based medicine makes excellent sense. It helps ensure that decisions are founded on empirical observations. It helps ensure that recommended treatments are in fact effective and that ineffective treatments are not recommended. It also helps reduce the burden, uncertainty, and variations that plague decisions based on subjective judgments. Ideally, we would answer every important question with a clinical trial or other equally valid source of empirical observations.

Unfortunately, this is not feasible. Reasons include high costs, long durations, large sample sizes, difficulty getting physicians and patients to participate, large number of options to be studied, speed of technological innovation, and the fact that the questions can change before the trials are completed. For these reasons we need to find alternative ways to answer questions—to fill the gaps in the empirical evidence.

One of these is to use mathematical models. The concept is straightforward. Mathematical models use observations of real events (data) to derive equations that represent the relationships between variables. These equations can then be used to calculate events that have never been directly observed. For a simple example, data on the distances traveled when moving at particular speeds for particular lengths of time can be used to derive the equation “distance = rate × time” (D = RT). Then, that equation can be used to calculate the distance traveled at any other speeds for any other times. Mathematical models have proven themselves enormously valuable in other fields, from calculating mortgage payments, to designing budgets, to flying airplanes, to taking photos of Mars, to e-mail. They have also been successful in medicine, examples being computed tomography (CT) scans and magnetic resonance imaging (MRI), radiation therapy, and electronic health records. Surely there must be a way they can help us improve the evidence base for clinical medicine.
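The D = RT example can be made concrete. The sketch below assumes a model of the form distance = k × rate × time, estimates k from a handful of made-up observations by least squares, and then uses the fitted equation for a speed and duration that were never observed. The data and function names are hypothetical.

```python
def fit_through_origin(xs, ys):
    """Least-squares slope k for the model y = k * x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

# Made-up observations: (rate in km/h, time in h, distance in km).
observations = [(30.0, 1.0, 30.0), (60.0, 2.0, 120.0), (45.0, 0.5, 22.5)]

k = fit_through_origin(
    [rate * time for rate, time, _ in observations],
    [distance for _, _, distance in observations],
)

def predict_distance(rate, time):
    """Use the derived equation for a combination that was never observed."""
    return k * rate * time

print(f"fitted coefficient k = {k:.3f}")
print(f"predicted distance at 70 km/h for 3 h: {predict_distance(70.0, 3.0):.1f} km")
```

Because the invented observations follow D = RT exactly, the fitted coefficient is 1; with noisy clinical data the same step yields an estimate with uncertainty attached, which is one reason validation against real trials matters.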

There is very good reason to believe they can, provided some conditions are met. First, we must understand that models will never be able to completely replace clinical trials. There are several reasons. Most fundamentally, trials are our anchor to reality—they are observations of real events. Models are not directly connected to reality. Indeed, models are built from trials and other sources of empirical observations. They are simplified representations of reality, filtered by observations and constrained by equations and will never be as accurate as reality. Not only are they one step removed from empirical observations, but they cannot exist without them. Thus, if it is feasible to answer a question with a clinical trial, then that is the preferred approach. Models should be used to fill the gaps in evidence only when clinical trials are not feasible.

The second condition is that the model should be validated against the clinical trials that do exist. More specifically, before we rely on a model to answer a question we should ensure that it accurately reproduces or predicts the most important clinical trials that are adjacent to or surround that question. The terms “adjacent to” and “surround” are intended to identify the trials that involve similar populations, interventions, and outcomes. For example, suppose we want to compare the effects of atorvastatin, simvastatin, and pravastatin on the 10-year rate of myocardial infarctions (MIs) in people with coronary artery disease (previous MI, angina, history of percutaneous transluminal coronary angioplasty [PTCA], or bypass). These head-to-head comparisons have never been performed, and it would be extraordinarily difficult to do so, given the long time period (10 years), very large sample sizes required (tens of thousands), and very high costs (hundreds of millions of dollars). However, a mathematical model could help answer these questions if it had already been shown to reproduce or predict the existing trials of these drugs versus placebos in similar populations. In this case the major adjacent trials would include 4S, the Scandinavian Simvastatin Survival Study (Randomised trial of cholesterol lowering in 4,444 patients with coronary heart disease [4S] 1994); WOSCOPS (Shepherd et al. 1995); CARE (Flaker et al. 1999); LIPID (Prevention of cardiovascular events and death with pravastatin in patients with coronary heart disease and a broad range of initial cholesterol levels [LIPID] 1998); PROSPER (Shepherd et al. 2002); CARDS (Colhoun et al. 2004); TNT (LaRosa et al. 2005); and IDEAL (Pedersen et al. 2005).

The methods for selecting the surrounding trials and performing the validations are beyond the scope of this paper, but four important elements are that (1) the trials should be identified or at least reviewed by a third party, (2) the validations should be performed at the highest level of clinical detail of which the model is capable, (3) all the validations should be performed with the same version of the model, and (4) to the greatest extent possible, the validations should be independent in the sense that they were not used to help build the model. On the third point, it would be meaningless if a model were tweaked or parameters were refitted to match the results of each trial. On the fourth point, it is almost inevitable that some trials will have been used to help build a model. In those cases we say that the validation is “dependent”; these validations ensure that the model can faithfully reproduce the assumptions used to build it. If no information from a trial was used to help build the model, we say that a validation against that trial is “independent.” These validations provide insights into the model’s ability to simulate events in new areas, such as new settings, target populations, interventions, outcomes, and durations.

If these conditions are met for a question (that is, it is not feasible to conduct a new trial to answer the question, and there is a model that can reproduce or predict the major trials that are most pertinent to the question), then it is reasonable to use the model to fill in the gaps between the existing trials. While that approach will not be as desirable as conducting a new clinical trial, one can certainly argue that it is better than the alternative, which is clinical or expert judgment.

If a model is used, then the degree of confidence we can place in its results will depend on the number of adjacent trials against which it has been validated, on the “distance” between the questions being asked and the real trials, and on how well the model’s results matched the real results. For example, one could have a fairly high degree of confidence in a model’s results if the question is about a subpopulation of an existing trial whose overall results the model has already predicted. Other examples of analyses about which we could be fairly confident are the following:

- Head-to-head comparisons of different drugs, all of which have been studied in their own placebo-controlled trials, such as comparing atorvastatin, simvastatin, and pravastatin;

- Extension of a trial’s results to settings with different levels of physician performance and patient compliance;

- Studies of different doses of drugs, or combinations of drugs, for which there are good data from phase II trials on biomarkers, and there are other trials connecting the biomarkers to clinical outcomes;

- Extensions of a trial’s results to longer follow-up times; and

- Analyses of different mixtures of patients, such as different proportions of people with CAD, particular race/ethnicities, comorbidities, or use of tobacco, provided the model’s accuracy for these groups has been tested in other trials.

As one moves further from the existing trials and validations, the degree of confidence in the model’s results will decrease. At the extreme, a model that is well validated for, say, Type 2 diabetes cannot be considered valid for a different disease, such as coronary artery disease (CAD), congestive heart failure (CHF), cancer, or even Type 1 diabetes. A corollary of this is that a model is never “validated” in a general sense, as though that were a property of the model that carries with it to every new question. Models are validated for specific purposes, and as each new question is raised, their accuracy in predicting the trials that surround that question needs to be examined.

Example: Prevention of Diabetes in High-Risk People

We can illustrate these concepts with an example. Several studies have indicated that individuals at high risk for developing diabetes can be identified from the general population and that with proper management the onset of diabetes can be delayed, or perhaps even prevented altogether (Tuomilehto et al. 2001; Knowler et al. 2002; Snitker et al. 2004; Chiasson et al. 1998; Gerstein et al. 2006). Although these results indicate the potential value of treating high-risk people, the short durations and limited number of interventions studied in these trials leave many important questions unanswered.

The Diabetes Prevention Program (DPP), for example, showed that among people at high risk of developing diabetes, about 35 percent developed diabetes over a follow-up period of four years (the control arm). Metformin decreased this to about 29 percent, for a relative reduction of about 17 percent. Lifestyle modification decreased it to about 18 percent, for a relative reduction of about 48 percent. Over the mean follow-up period of 2.8 years the relative reduction with lifestyle modification was about 58 percent. This is certainly an encouraging finding and is sufficient to stimulate interest in diabetes prevention. However, 2.8 years or even 4 years is far too short to determine the effects of these interventions on the long-term progression of diabetes or any of its complications; for example:

- Do the prevention programs just postpone diabetes or do they prevent it altogether?

- What are the long-term effects of the prevention programs on the probabilities of micro- and macrovascular complications of diabetes, such as cardiovascular disease, retinopathy, and nephropathy?

- What are the effects on long-term costs, and what are the cost-effectiveness ratios of the prevention programs?

- Are there any other programs that might be more cost effective?

- What would a program have to cost in order to break even (no increase in net cost)?
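The relative reductions quoted for the DPP before this list follow directly from the four-year incidence figures. The short sketch below reproduces that arithmetic from the rounded percentages given in the text, so the results agree with the quoted values only approximately. The function names are hypothetical.

```python
def relative_reduction(control_rate, treated_rate):
    """Fraction of the control-arm risk removed by the intervention."""
    return (control_rate - treated_rate) / control_rate

def absolute_reduction(control_rate, treated_rate):
    """Difference in risk between the control and intervention arms."""
    return control_rate - treated_rate

# Rounded four-year incidence figures as quoted in the text.
control, metformin, lifestyle = 0.35, 0.29, 0.18

for name, rate in (("metformin", metformin), ("lifestyle", lifestyle)):
    print(f"{name}: absolute reduction {absolute_reduction(control, rate):.2f}, "
          f"relative reduction {relative_reduction(control, rate):.1%}")
```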

These new questions need to be answered if we are to plan diabetes prevention programs rationally. Ideally, we would answer them by continuing the DPP for another 20 to 30 years. But that is not possible for obvious reasons. The only possible method is to use a mathematical model to extend the trial. Specifically, if a model contains all the important variables and can demonstrate that it is capable of reproducing the DPP, along with other trials that document the outcomes of diabetes, then we could use it to run a simulated version of the DPP for a much longer period of time. This approach would also enable us to explore other types of prevention activities and see how they compare with metformin and the lifestyle modification program used in the DPP.

An example of such a model is the Archimedes model. Descriptions of the model have been published elsewhere (Schlessinger and Eddy 2002; Eddy and Schlessinger 2003a, 2003b). Basically, the core of the model is a set of ordinary and differential equations that represent human physiology at roughly the level of detail found in general medical textbooks, patient charts, and clinical trials. It is continuous in time, with clinical events occurring at any time. Biological variables are continuous and relate to one another in ways that they are understood to interact in vivo. Building out from this core, the Archimedes model includes the development of signs and symptoms, patient behaviors in seeking care, clinical events such as visits and admissions, protocols, provider behaviors and performance, patient compliance, logistics and utilization, health outcomes, quality of life, and costs. Thus the model simulates a comprehensive health system in which virtual people get virtual diseases, seek care at virtual hospitals and clinics, are seen by virtual healthcare providers, who have virtual behaviors, use virtual equipment and supplies, generate virtual costs, and so forth. An analogy is Electronic Arts’ SimCity game, but starting at the level of detail of the underlying physiologies of each of the people in the simulation rather than city streets and utility systems. This relatively high level of physiological detail enables the model to simulate diseases such as diabetes and their treatments. For example, in the model people have livers, which produce glucose, which is affected by insulin resistance and can be affected by metformin. Similarly, people in the model can change their lifestyles and lose weight, which affects the progression of many things including insulin resistance, blood pressure, cholesterol levels, and so forth. Thus the Archimedes model is well positioned to study the effects of activities to prevent diabetes.

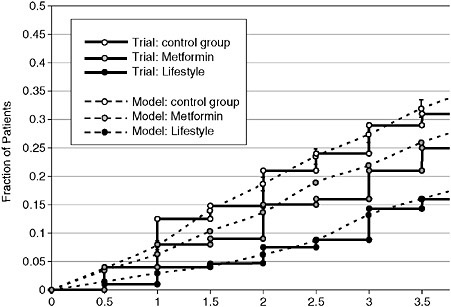

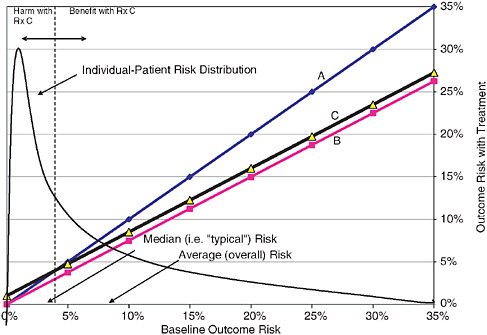

The Archimedes model is validated by using the simulated healthcare system to conduct simulated clinical trials that correspond to real clinical trials (Eddy et al. 2005). This provides the opportunity to compare the outcomes calculated in the model with outcomes seen in the real trials. Thus far the model has been validated against more than 30 trials. The first 18 trials, with seventy-four separate treatment arms and outcomes, were selected by an independent committee appointed by the American Diabetes Association (ADA) and have been published (Eddy et al. 2005). The overall correlation coefficient between the model’s results and those of the actual trials is 0.98. Ten of the eighteen trials in the ADA-chosen validations provided independent validations; they were not used to build the model itself. The correlation coefficient for these independent validations was 0.96. An example of an independent validation that is particularly important for this application is a prospective, independent validation of the DPP trial itself; the published results matched the predicted results quite closely (Figure 2-1). The Archimedes model also accurately simulated several trials that

FIGURE 2-1 Model’s predictions of outcomes in Diabetes Prevention Program. Comparison of proportions of people progressing to diabetes in the control group observed in the real Diabetes Prevention Program (DPP) (solid lines) and in the simulation of the DPP by the Archimedes model (dashed lines).

SOURCE: Eddy et al. Annals of Internal Medicine 2005; 143:251-264.

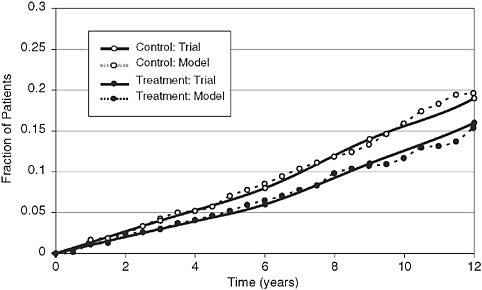

FIGURE 2-2 Comparison of model’s calculations and results of the United Kingdom Prospective Diabetes Study (UKPDS): Rates of myocardial infarctions in control and treated groups.

SOURCE: Eddy et al. Annals of Internal Medicine 2005; 143:251-264.

observed the progression of diabetes, development of complications, and effects of treatment.

An important example is the progression of diabetes and development of coronary artery disease in the United Kingdom Prospective Diabetes Study (Figure 2-2). The ability of the model to simulate or predict a large number of trials relating to diabetes and its complications builds confidence in its results.

Thus the prevention of diabetes in high-risk people meets the criteria outlined above—it is impractical or impossible to answer the important questions with real clinical trials, there is a model capable of addressing the questions at the appropriate level of physiological detail, and the model has been successfully validated against a wide range of adjacent clinical trials.

Methods

Use of the Archimedes model to analyze the prevention of diabetes in high-risk people has been reported in detail elsewhere (Eddy et al. 2005). To summarize, the first step was to create a simulated population that corresponds to the population used in the DPP trial. This was done by starting with a representative sample of the U.S. population from the National Health and Nutrition Examination Survey (NHANES) (National Health and Nutrition Evaluation Survey 1998-2002), and then applying the inclusion and exclusion criteria for the DPP to select a sample that matched the DPP population. Specifically, the DPP defined individuals to be at high risk for developing diabetes and included them in the trial if they had all of the following: body mass index (BMI) > 24, fasting plasma glucose (FPG) 90-125 mg/dL, and oral glucose tolerance test (OGTT) of 140-199 mg/dL. We then created copies or clones of the selected people from NHANES by matching them on approximately 35 variables. A total of 10,000 people were selected and copied. This group was then exposed to three different interventions, corresponding to the arms of the real trial (baseline or control, metformin begun immediately, and the DPP lifestyle program begun immediately). The three groups were then followed for 30 years and observed for progression of diabetes and development of major complications such as myocardial infarction, stroke, end-stage renal disease, and retinopathy. Cost-generating events as well as symptoms and outcomes that affect the quality of life were also measured. The results could then be used to answer the questions about the long-term effects of diabetes prevention.
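The eligibility screen described above can be sketched as a simple filter. This is a minimal illustration and not the Archimedes implementation: the `Person` record, its field names, and the sample values are hypothetical, and only the three thresholds come from the text. Treating the endpoints of the glucose ranges as inclusive is also an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    """Hypothetical survey record; the field names are illustrative only."""
    bmi: float   # body mass index, kg/m^2
    fpg: float   # fasting plasma glucose, mg/dL
    ogtt: float  # oral glucose tolerance test result, mg/dL


def meets_dpp_criteria(p: Person) -> bool:
    """Return True only if all three criteria quoted in the text are met."""
    return p.bmi > 24 and 90 <= p.fpg <= 125 and 140 <= p.ogtt <= 199


def select_cohort(sample: list[Person]) -> list[Person]:
    """Keep the people in the sample who satisfy every criterion."""
    return [p for p in sample if meets_dpp_criteria(p)]


# Made-up records, used only to exercise the filter.
sample = [
    Person(bmi=31.0, fpg=110.0, ogtt=165.0),  # satisfies all three criteria
    Person(bmi=22.5, fpg=110.0, ogtt=165.0),  # BMI not above 24
    Person(bmi=29.0, fpg=130.0, ogtt=165.0),  # FPG above the range
]
cohort = select_cohort(sample)
print(f"{len(cohort)} of {len(sample)} sample records are eligible")
```

In the analysis itself the selected people were then cloned by matching on approximately 35 variables; that step is not attempted here.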

Do the Prevention Programs Just Postpone Diabetes or Do They Prevent It Altogether?

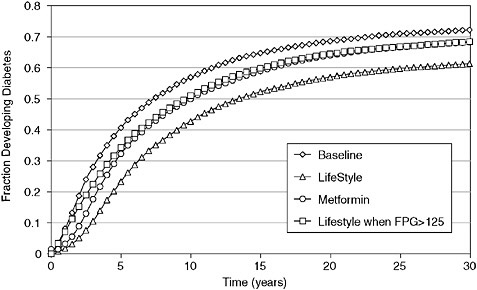

This can be answered by comparing the effects of metformin and lifestyle on the proportion of people who developed diabetes over the 30-year period. The results are shown in Figure 2-3. The natural rate of progression to diabetes, seen in the control group, was 72 percent over the 30-year follow-up period. Lifestyle modification, as offered in the DPP and continued until a person develops diabetes, would reduce the incidence of diabetes to about 61 percent, for a relative reduction of 15 percent. Thus, over a 30-year horizon the DPP lifestyle modification would actually prevent diabetes in about 11 percent of cases, while delaying it in the remaining 61 percent. In the metformin arm, about 4 percent of cases of diabetes would be prevented, for a 5.5 percent relative reduction in the 30-year incidence of diabetes.
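The arithmetic behind these percentages can be checked with a short sketch. The function name is illustrative, the inputs are the rounded figures quoted above, and the 68 percent incidence for the metformin arm is inferred here from "about 4 percent of cases prevented" rather than reported directly.

```python
def risk_reduction(control_rate: float, treated_rate: float) -> tuple[float, float]:
    """Return (absolute reduction, relative reduction) in cumulative incidence."""
    absolute = control_rate - treated_rate
    relative = absolute / control_rate
    return absolute, relative


CONTROL = 0.72    # 30-year incidence in the control group (from the text)
LIFESTYLE = 0.61  # 30-year incidence with the DPP lifestyle program (from the text)
METFORMIN = 0.68  # assumption: 72 percent minus the roughly 4 points prevented

lifestyle_abs, lifestyle_rel = risk_reduction(CONTROL, LIFESTYLE)
metformin_abs, metformin_rel = risk_reduction(CONTROL, METFORMIN)

print(f"Lifestyle: {lifestyle_abs:.1%} absolute, {lifestyle_rel:.1%} relative")
print(f"Metformin: {metformin_abs:.1%} absolute, {metformin_rel:.1%} relative")
```

Because the inputs are rounded, the relative reductions come out near 15 percent and 5.6 percent, close to but not exactly the published 15 and 5.5 percent.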

What Are the Long-Term Effects of the Prevention Programs on the Probabilities of Micro- and Macrovascular Complications of Diabetes, like Cardiovascular Disease, Retinopathy, and Nephropathy?

This question is also readily answered, in this case by counting the number of clinical outcomes that occur in the control and lifestyle groups. The effects of the DPP lifestyle program on long-term complications of diabetes are shown in Table 2-1. The 30-year rate of serious complications (including myocardial infarctions, congestive heart failure, retinopathy, stroke, nephropathy, and neuropathy) was reduced by an absolute 8.4 percent, from about 38.2 percent to about 29.8 percent, or a relative decrease of about 22 percent. The effects on other outcomes are shown in Table 2-1.

FIGURE 2-3 Model’s calculation of progression to diabetes in four programs.

SOURCE: Eddy et al. Annals of Internal Medicine 2005; 143:251-264.

What Are the Effects on Long-Term Costs, and What Are the Cost-Effectiveness Ratios of the Prevention Programs?

The effects of the prevention activities on these outcomes can be determined by tracking all the clinical events and conditions that affect quality of life or that generate costs. Over 30 years, the aggregate per-person cost of providing care for diabetes and its complications in the control group was $37,171. The analogous costs in the metformin and lifestyle groups were $4,018 and $9,969 higher, respectively (see the per-person costs in Table 2-2). The average cost-effectiveness ratios for the metformin and lifestyle groups (both compared to no intervention, or the control group), measured in terms of dollars per quality-adjusted life year (QALY) gained, were $35,523 and $62,602, respectively.

TABLE 2-1 Expected Outcomes Over Various Time Horizons for Typical Person with DPP Characteristics

| Outcome | Baseline (without lifestyle program), 10 years | Baseline, 20 years | Baseline, 30 years | Difference made by lifestyle program, 10 years | Difference, 20 years | Difference, 30 years |
|---|---|---|---|---|---|---|
| Diabetes | 56.91% | 68.55% | 72.18% | −14.26% | −11.58% | −10.84% |
| CAD/CHF | | | | | | |
| Have an MI | 3.98% | 8.53% | 12.02% | −0.39% | −1.07% | −1.65% |
| Develop CHF (systolic or diastolic) | 0.23% | 0.67% | 1.19% | −0.07% | −0.07% | −0.08% |
| Retinopathy | | | | | | |
| Develop “Blindness” (legal) | 0.71% | 2.16% | 3.02% | −0.39% | −1.04% | −1.44% |
| Develop proliferative diabetic retinopathy | 1.38% | 3.15% | 4.33% | −0.68% | −1.36% | −1.40% |
| Develop retinopathy | 1.11% | 2.57% | 3.39% | −0.53% | −1.15% | −1.21% |
| Total serious eye complication | 3.20% | 7.89% | 10.74% | −1.60% | −3.55% | −4.05% |
| Stroke (ischemic or hemorrhagic) | 2.89% | 6.99% | 11.61% | −0.46% | −0.97% | −1.42% |
| Nephropathy | | | | | | |
| Develop ESRD | 0.00% | 0.00% | 0.07% | 0.00% | 0.00% | −0.04% |
| Need dialysis | 0.00% | 0.00% | 0.05% | 0.00% | 0.00% | −0.03% |
| Need a kidney transplant | 0.00% | 0.00% | 0.02% | 0.00% | 0.00% | −0.01% |
| Total serious kidney complication | 0.00% | 0.00% | 0.15% | 0.00% | 0.00% | −0.08% |
| Neuropathy (symptomatic) | | | | | | |
| Develop foot ulcers | 0.68% | 1.43% | 1.78% | −0.38% | −0.65% | −0.74% |

Are There Any Other Programs That Might Be More Cost-Effective?

The DPP had three arms: control, metformin begun immediately (i.e., when the patient is at risk of developing diabetes, but has not yet developed diabetes), and lifestyle modification begun immediately. Given the high cost of the lifestyle intervention as it was implemented in the DPP, it is reasonable to ask what the effect would be of waiting until a person progressed to diabetes and then beginning the lifestyle intervention. It is clearly not possible to go back and restart the DPP with this new treatment arm, but it is fairly easy to add it to a simulated trial. The results are summarized in Table 2-2. Compared to beginning the lifestyle modification immediately, waiting until a person develops diabetes gives up about 0.034 QALY, or about 21 percent of the effectiveness seen with immediate lifestyle modification. However, the delayed lifestyle program increases costs by about $3,066, or about one-third as much as the immediate lifestyle program. Thus the delayed program is more cost-effective in the sense that it delivers a quality-adjusted life year at a lower cost than beginning the lifestyle modification immediately: $24,523 versus $62,602. If the immediate lifestyle program is compared to the delayed lifestyle program, the marginal cost per QALY of the immediate program is about $201,818.
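The average and marginal ratios quoted here follow from a single formula: the difference in cost divided by the difference in QALYs between a program and its comparator. The sketch below applies that formula to the per-person costs and QALYs reported in Table 2-2. The class and function names are illustrative, and the dominance test encodes the usual definition (another option costs no more and delivers at least as many QALYs), which is assumed rather than stated in the text.

```python
from typing import NamedTuple


class Program(NamedTuple):
    name: str
    cost: float  # 30-year cost per person in dollars (Table 2-2)
    qaly: float  # quality-adjusted life years per person (Table 2-2)


def cost_per_qaly(program: Program, comparator: Program) -> float:
    """Extra dollars spent per extra QALY gained, relative to the comparator."""
    return (program.cost - comparator.cost) / (program.qaly - comparator.qaly)


def is_dominated(program: Program, other: Program) -> bool:
    """True if `other` is no worse on cost and QALYs and strictly better on one."""
    no_worse = other.cost <= program.cost and other.qaly >= program.qaly
    strictly_better = other.cost < program.cost or other.qaly > program.qaly
    return no_worse and strictly_better


baseline = Program("Baseline", 37_171, 11.319)
delayed = Program("Lifestyle when FPG>125", 40_237, 11.444)
immediate = Program("DPP Lifestyle", 47_140, 11.478)
metformin = Program("Metformin", 41_189, 11.432)

average_delayed = cost_per_qaly(delayed, baseline)
average_immediate = cost_per_qaly(immediate, baseline)
marginal_immediate = cost_per_qaly(immediate, delayed)
metformin_dominated = is_dominated(metformin, delayed)

print(f"Delayed vs. baseline:   ${average_delayed:,.0f} per QALY")
print(f"Immediate vs. baseline: ${average_immediate:,.0f} per QALY")
print(f"Immediate vs. delayed:  ${marginal_immediate:,.0f} per QALY")
print(f"Metformin dominated by delayed lifestyle: {metformin_dominated}")
```

Because the table values are rounded, the computed ratios (roughly $24,500, $62,700, and $203,000) differ slightly from the published $24,523, $62,602, and $201,818. The dominance check also shows why metformin carries no incremental ratio: the delayed lifestyle program costs less and yields more QALYs.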

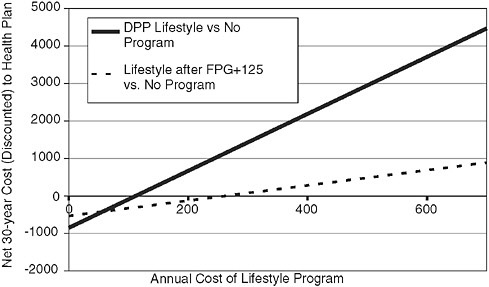

What Would a Program Have to Cost in Order to Break Even—No Increase in Net Cost?

This can be addressed by conducting a sensitivity analysis on the cost of the intervention. Figure 2-4 shows the relationship between the cost of the DPP lifestyle program and the net financial costs. In order to break even, the DPP lifestyle program would have to cost $100 if begun immediately and about $225 if delayed until after a person develops diabetes. In the DPP trial itself, the lifestyle modification program cost $1,356 in the first year and about $672 in subsequent years.

TABLE 2-2 30-Year Costs, QALYs, and Incremental Costs/QALY for Four Programs from Societal Perspective (Discounted 3%)

| Program | Cost per person | QALY per person | Average cost/QALY (a) | Incremental increase in cost | Incremental increase in QALYs | Incremental cost/QALY |
|---|---|---|---|---|---|---|
| Baseline | $37,171 | 11.319 | | | | |
| Lifestyle when FPG>125 (b) | $40,237 | 11.444 | $24,523 | $3,066 | 0.125 | $24,523 |
| DPP Lifestyle (c) | $47,140 | 11.478 | $62,602 | $6,903 | 0.034 | $201,818 |
| Metformin | $41,189 | 11.432 | $35,523 | dominated | dominated | dominated |

(a) Compared to Baseline. (b) Incremental values are compared to Baseline. (c) Incremental values are compared to Lifestyle when FPG>125.

ABBREVIATIONS: QALY = quality-adjusted life-year, FPG = fasting plasma glucose, DPP = Diabetes Prevention Program.

SOURCE: Eddy et al. Annals of Internal Medicine 2005; 143:251-264.

FIGURE 2-4 Costs of two programs for diabetes prevention.

SOURCE: Eddy et al. Annals of Internal Medicine 2005; 143:251-264.

Discussion and Conclusions