1

Introduction

1.1

SMALL BUSINESS INNOVATION RESEARCH PROGRAM CREATION AND ASSESSMENT

Created in 1982 by the Small Business Innovation Development Act, the Small Business Innovation Research (SBIR) program was designed to stimulate technological innovation among small private-sector businesses while providing the government with cost-effective new technical and scientific solutions to challenging mission problems. The SBIR program was also designed to help stimulate the U.S. economy by encouraging small businesses to market innovative technologies in the private sector.1

As the SBIR program approached its twentieth year of existence, the U.S. Congress requested that the National Research Council (NRC) of the National Academies conduct a “comprehensive study of how the SBIR program has stimulated technological innovation and used small businesses to meet Federal research and development needs,” and make recommendations on improvements to the program.2 Mandated as a part of the SBIR program’s renewal in 2000, the NRC study has assessed the SBIR program as administered at the five federal agencies that together make up 96 percent of SBIR program expenditures. The agencies are, in decreasing order of program size, the Department of Defense (DoD), the National Institutes of Health (NIH), the National Aeronautics and Space Administration (NASA), the Department of Energy (DoE), and the National Science Foundation (NSF).

The NRC Committee assessing the SBIR program was not asked to consider if the SBIR program should exist or not—Congress has affirmatively decided this question on three occasions.3 Rather, the Committee was charged with providing assessment-based findings to improve public understanding of the program as well as recommendations to improve the program’s effectiveness.

1.2

SBIR PROGRAM STRUCTURE

Eleven federal agencies are currently required to set aside 2.5 percent of their extramural research and development (R&D) budget exclusively for SBIR contracts. Each year these agencies identify various R&D topics, representing scientific and technical problems requiring innovative solutions, for pursuit by small businesses under the SBIR program. These topics are bundled together into individual agency “solicitations”—publicly announced requests for SBIR proposals from interested small businesses. A small business can identify an appropriate topic it wants to pursue from these solicitations and, in response, propose a project for an SBIR grant. The required format for submitting a proposal is different for each agency. Proposal selection also varies, though peer review of proposals on a competitive basis by experts in the field is typical. Each agency then selects the proposals that are found best to meet program selection criteria and awards contracts or grants to the proposing small businesses.
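As a rough illustration of the set-aside arithmetic described above, the following sketch applies the 2.5 percent rate to an agency's extramural R&D budget. The function name `sbir_set_aside` and the $1 billion budget are hypothetical and chosen only for illustration; they do not describe any actual agency.

```python
from fractions import Fraction

# Set-aside rate stated in the text: 2.5 percent of extramural R&D.
SET_ASIDE_RATE = Fraction(25, 1000)


def sbir_set_aside(extramural_rd_budget: int) -> Fraction:
    """Return the dollars reserved for SBIR from an extramural R&D budget."""
    return SET_ASIDE_RATE * extramural_rd_budget


# Hypothetical budget of $1 billion, used only as an example input.
hypothetical_budget = 1_000_000_000
reserved = sbir_set_aside(hypothetical_budget)
print(f"${int(reserved):,} reserved for SBIR")
```

Exact fractions are used here instead of floating-point numbers so that the percentage calculation carries no rounding error.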

As conceived in the 1982 Small Business Innovation Development Act, the SBIR program’s grant-making process is structured in three phases:

- Phase I grants essentially fund feasibility studies in which award winners undertake a limited amount of research aimed at establishing an idea’s scientific and commercial promise. Today, the legislation anticipates Phase I grants as high as $100,000.4

- Phase II grants are larger, typically about $750,000, and fund more extensive R&D to further develop the scientific and commercial promise of research ideas.

- Phase III. During this phase, companies do not receive further SBIR awards. Instead, grant recipients should be obtaining additional funds from a procurement program at the agency that made the award, from private investors, or from the capital markets. The objective of this phase is to move the technology from the prototype stage to the marketplace.

Obtaining Phase III support is often the most difficult challenge for new firms to overcome. In practice, agencies have developed different approaches to facilitate SBIR grantees’ transition to commercial viability; not least among them are additional SBIR grants.

Previous NRC research has shown that firms have different objectives in applying to the program. Some want to demonstrate the potential of promising research, but they may not seek to commercialize it themselves. Others think they can fulfill agency research requirements more cost-effectively through the SBIR program than through the traditional procurement process. Still others seek a certification of quality (and the investments that can come from such recognition) as they push science-based products toward commercialization.5

1.3

SBIR REAUTHORIZATIONS

The SBIR program approached reauthorization in 1992 amidst continued concerns about the U.S. economy’s capacity to commercialize inventions. Finding that “U.S. technological performance is challenged less in the creation of new technologies than in their commercialization and adoption,” the National Academy of Sciences at the time recommended an increase in SBIR funding as a means to improve the economy’s ability to adopt and commercialize new technologies.6

Following this report, the Small Business Research and Development Enhancement Act (P.L. 102-564), which reauthorized the SBIR program until September 30, 2000, doubled the set-aside rate to 2.5 percent.7 This increase in the percentage of R&D funds allocated to the program was accompanied by a stronger emphasis on encouraging the commercialization of SBIR-funded technologies.8 Legislative language explicitly highlighted commercial potential as a criterion for awarding SBIR grants. For Phase I awards, Congress directed program administrators to assess whether projects have “commercial potential,” in addition to scientific and technical merit, when evaluating SBIR applications.

The 1992 legislation mandated that program administrators consider the existence of second-phase funding commitments from the private sector or other non-SBIR sources when judging Phase II applications. Evidence of third-phase follow-on commitments, along with other indicators of commercial potential, was also to be sought. Moreover, the 1992 reauthorization directed that a small business’s record of commercialization be taken into account when evaluating its Phase II application.9

The Small Business Reauthorization Act of 2000 (P.L. 106-554) extended the SBIR program until September 30, 2008. It called for this assessment by the National Research Council of the broader impacts of the program, including those on employment, health, national security, and national competitiveness.10

1.4

STRUCTURE OF THE NRC STUDY

This NRC assessment of the SBIR program has been conducted in two phases. In the first phase, at the request of the agencies, a research methodology was developed by the NRC. This methodology was then reviewed and approved by an independent National Academies panel of experts.11 Information about the program was also gathered through interviews with SBIR program administrators and during two major conferences where SBIR officials were invited to describe program operations, challenges, and accomplishments.12 These conferences highlighted the important differences in each agency’s SBIR program goals, practices, and evaluations. The conferences also explored the challenges of assessing such a diverse range of program objectives and practices using common metrics.

9 A GAO report had found that agencies had not adopted a uniform method for weighing commercial potential in SBIR applications. See U.S. General Accounting Office, 1999, Federal Research: Evaluations of Small Business Innovation Research Can Be Strengthened, GAO/RCED-99-114, Washington, DC: U.S. General Accounting Office.

10 The current assessment is congruent with the Government Performance and Results Act (GPRA) of 1993: <http://govinfo.library.unt.edu/npr/library/misc/s20.html>. As characterized by the GAO, GPRA seeks to shift the focus of government decision making and accountability away from a preoccupation with the activities that are undertaken (such as grants dispensed or inspections made) to a focus on the results of those activities. See <http://www.gao.gov/new.items/gpra/gpra.htm>.

11 The SBIR methodology report is available on the Web. Access at <http://www7.nationalacademies.org/sbir/SBIR_Methodology_Report.pdf>.

12 The opening conference on October 24, 2002, examined the program’s diversity and assessment challenges. For a published report of this conference, see National Research Council, SBIR: Program Diversity and Assessment Challenges, Charles W. Wessner, ed., Washington, DC: The National Academies Press, 2004. The second conference, held on March 28, 2003, was titled “Identifying Best Practice.” The conference provided a forum for the SBIR program managers from each of the five agencies in the study’s purview to describe their administrative innovations and best practices.

The second phase of the NRC study implemented the approved research methodology. The Committee deployed multiple survey instruments, and its researchers conducted case studies of a broad range of SBIR firms. The Committee then evaluated the results and developed both agency-specific and overall findings and recommendations for improving the effectiveness of the SBIR program. The final report includes complete assessments for each of the five agencies and an overview of the program as a whole.

1.5

SBIR ASSESSMENT CHALLENGES

At its outset, the NRC’s SBIR study identified a series of assessment challenges that must be addressed. As discussed at the October 2002 conference that launched the study, the administrative flexibility found in the SBIR program makes it difficult to make cross-agency assessments. Although each agency’s SBIR program shares the common three-phase structure, the SBIR concept is interpreted uniquely at each agency. This flexibility is a positive attribute in that it permits each agency to adapt its SBIR program to the agency’s particular mission, scale, and working culture. For example, the NSF operates its SBIR program differently than DoD because “research” is often coupled with procurement of goods and services at DoD but rarely at NSF. Programmatic diversity means that each agency’s SBIR activities must be understood in terms of their separate missions and operating procedures. This commendable diversity makes an assessment of the program as a whole more challenging.

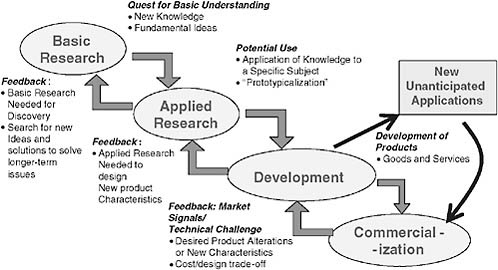

A second challenge concerns the linear process of commercialization implied by the design of SBIR’s three-phase structure.13 In the linear model illustrated in Figure 1-1, innovation begins with basic research supplying a steady stream of fresh and new ideas. Among these ideas, those that show technical feasibility become innovations. Such innovations, when further developed by firms, become marketable products driving economic growth.

As the NSF’s Joseph Bordogna observed at the launch conference, innovation almost never takes place through a protracted linear progression from research to development to market. Research and development drives technological innovation, which, in turn, opens up new frontiers in R&D. True innovation, Bordogna noted, can spur the search for new knowledge and create the context in which the next generation of research identifies new frontiers. This nonlinearity, illustrated in Figure 1-2, makes it difficult to rate the efficiency of the SBIR program. Inputs do not match up with outputs according to a simple function. Figure 1-2, while more complex than Figure 1-1, is itself a highly simplified model. For example, feedback loops can stretch backward or forward by more than one level.

FIGURE 1-1 The Linear Model of Innovation.

FIGURE 1-2 A Feedback Model of Innovation.

A third assessment challenge relates to the measurement of outputs and outcomes. Program realities can and often do complicate the task of data gathering. In some cases, for example, SBIR recipients receive a Phase I award from one agency and a Phase II award from another. In other cases, multiple SBIR awards may have been used to help a particular technology become sufficiently mature to reach the market. Also complicating matters is the possibility that for any particular grantee, an SBIR award may be only one among other federal and nonfederal sources of funding. Causality can thus be difficult, if not impossible, to establish. The task of measuring outcomes is made harder because companies that have garnered SBIR awards can also merge, fail, or change their names before a product reaches the market. In addition, principal investigators or other key individuals can change firms, carrying their knowledge of an SBIR project with them. A technology developed using SBIR funds may eventually achieve commercial success at an entirely different company than that which received the initial SBIR award.

Complications plague even the apparently straightforward task of assessing commercial success. For example, research enabled by a particular SBIR award may take on commercial relevance in new, unanticipated contexts. At the launch conference, Duncan Moore, former Associate Director of Technology at the White House Office of Science and Technology Policy (OSTP), cited the case of SBIR-funded research in gradient index optics that was initially considered a commercial failure when an anticipated market for its application did not emerge. Years later, however, products derived from the research turned out to be a major commercial success.14 Today’s apparent dead end can be a lead to a major achievement tomorrow. Lacking clairvoyance, analysts cannot anticipate or measure such potential SBIR benefits.

Gauging commercialization is also difficult when the product in question is destined for public procurement. The challenge is to develop a satisfactory measure of how useful an SBIR-funded innovation has been to an agency mission. A related challenge is determining how central (or even useful) SBIR awards have proved in developing a particular technology or product. In some cases, the Phase I award can meet the agency’s need—completing the research with no further action required. In other cases, surrogate measures are often required. For example, one way of measuring commercialization success is to count the products developed using SBIR funds that are procured by an agency such as the DoD. In practice, however, large procurements from major suppliers are typically easier to track than products from small suppliers such as SBIR firms. Moreover, successful development of a technology or product does not always translate into successful “uptake” by the procuring agency. Often, the absence of procurement may have little to do with the product’s quality or the potential contribution of the SBIR program.

Understanding failure is equally challenging. By its very nature, an early-stage program such as SBIR should anticipate a high failure rate. The causes of failure are many. The most straightforward, of course, is technical failure, where the research objectives of the award are not achieved. In some cases, the project can be a technical success but a commercial failure. This can occur when a procuring agency changes its mission objectives and hence its procurement priorities. NASA’s new Mars Mission is one example of a mission shift that may result in the cancellation of programs involving SBIR awards to make room for new agency priorities. Cancelled weapons system programs at the Department of Defense can have similar effects. Technologies procured through SBIR may also fail in the transition to acquisition. Some technology developments by small businesses do not survive the long lead times created by complex testing and certification procedures required by the Department of Defense. Indeed, small firms encounter considerable difficulty in penetrating the “procurement thicket” that characterizes defense acquisition.15 In addition to complex federal acquisition procedures, there are strong disincentives for high-profile projects to adopt untried technologies. Technology transfer in commercial markets can be equally difficult. A failure to transfer to commercial markets can occur even when a technology is technically successful: the market may be smaller than anticipated, competing technologies may emerge or prove more competitive than expected, the technology may not be cost competitive, or the product may not be adequately marketed. Understanding and accepting the varied sources of project failure in the high-risk, high-reward environment of cutting-edge R&D is a challenge for analysts and policy makers alike.

This raises the question of the standard by which SBIR programs should be evaluated. An assessment of SBIR must take into account the expected distribution of successes and failures in early-stage finance. As a point of comparison, Gail Cassell, Vice President for Scientific Affairs at Eli Lilly, has noted that only one in ten innovative products in the biotechnology industry will turn out to be a commercial success.16 Similarly, venture capital funds often achieve considerable commercial success on only two or three out of twenty or more investments.17

In setting metrics for SBIR projects, therefore, it is important to have a realistic expectation of the success rate for competitive awards to small firms investing in promising but unproven technologies. Similarly, it is important to have some understanding of what can be reasonably expected (that is, what constitutes “success” for an SBIR award) and some understanding of the constraints and opportunities successful SBIR awardees face in bringing new products to market. From the management perspective, the rate of success also raises the question of appropriate expectations and desired levels of risk taking. A portfolio that always succeeds would not be pushing the technology envelope. A very high rate of “success” would thus, paradoxically, suggest an inappropriate use of the program. Understanding the nature of success and the appropriate benchmarks for a program with this focus is therefore important to understanding the SBIR program and the approach of this study.
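The comparison figures cited above (one in ten biotechnology products, and two or three successes out of twenty venture investments) can be turned into rough benchmark rates. The sketch below is illustrative only. It assumes exactly twenty investments, although the text says "twenty or more," so the venture rates shown are upper bounds. The portfolio of 200 awards and the function name `expected_successes` are hypothetical.

```python
from fractions import Fraction

# Benchmark success rates derived from the figures cited in the text.
BIOTECH_RATE = Fraction(1, 10)   # one in ten innovative biotechnology products
VENTURE_LOW = Fraction(2, 20)    # two successes, assuming exactly twenty investments
VENTURE_HIGH = Fraction(3, 20)   # three successes, assuming exactly twenty investments


def expected_successes(n_awards: int, rate: Fraction) -> Fraction:
    """Expected number of commercial successes among n_awards at a given rate."""
    return n_awards * rate


# Hypothetical portfolio size, used only as an example input.
portfolio = 200
benchmarks = [
    ("biotechnology", BIOTECH_RATE),
    ("venture capital (low)", VENTURE_LOW),
    ("venture capital (high)", VENTURE_HIGH),
]
for label, rate in benchmarks:
    count = expected_successes(portfolio, rate)
    print(f"{label}: {float(rate):.0%} of awards, about {float(count):.0f} of {portfolio}")
```

Under these assumptions, a portfolio whose success rate far exceeds the 10 to 15 percent range would be a candidate for the concern raised above: it may not be taking the level of risk that an early-stage program is meant to bear.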