1

Introduction

Small businesses are a major driver of high-technology innovation and economic growth in the United States, generating significant employment, new markets, and high-growth industries.1 In this era of globalization, optimizing the ability of innovative small businesses to develop and commercialize new products is essential for U.S. competitiveness and national security. Developing better incentives to spur innovative ideas, technologies, and products—and ultimately to bring them to market—is thus a central policy challenge.

Created in 1982 through the Small Business Innovation Development Act, the Small Business Innovation Research (SBIR) program is the nation’s largest innovation program. SBIR offers competition-based awards to stimulate technological innovation among small private-sector businesses while providing government agencies new, cost-effective, technical and scientific solutions to meet their diverse mission needs. The program’s goals are fourfold: “(1) to stimulate technological innovation; (2) to use small business to meet federal research and development needs; (3) to foster and encourage participation by minority and disadvantaged persons in technological innovation; and (4) to increase private sector commercialization derived from federal research and development.”2

A distinguishing feature of SBIR is that it embraces the multiple goals listed above, while maintaining an administrative flexibility that allows very different federal agencies to use the program to address their unique mission needs.

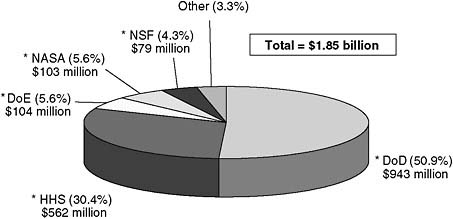

SBIR legislation currently requires federal agencies with extramural R&D budgets in excess of $100 million to set aside 2.5 percent of their extramural R&D funds for SBIR. In 2005, the 11 federal agencies administering the SBIR program disbursed over $1.85 billion in innovation awards. Five agencies administer over 96 percent of the program’s funds: the Department of Defense (DoD), the Department of Health and Human Services (particularly the National Institutes of Health [NIH]), the Department of Energy (DoE), the National Aeronautics and Space Administration (NASA), and the National Science Foundation (NSF). (See Figure 1-1.)
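The set-aside rule described in the paragraph above is simple arithmetic: a budget threshold plus a fixed percentage. The Python sketch below is illustrative only. The function name `sbir_set_aside` and the choice to truncate to whole dollars are assumptions made for the example, and it ignores how agencies actually determine their extramural R&D base.

```python
# Hypothetical illustration of the SBIR set-aside rule as stated in the text:
# agencies with extramural R&D budgets in excess of $100 million set aside
# 2.5 percent of those funds for SBIR. Not an official calculation method.
THRESHOLD_DOLLARS = 100_000_000  # "in excess of $100 million"
RATE_BASIS_POINTS = 250          # 2.5 percent expressed as basis points


def sbir_set_aside(extramural_rd_dollars: int) -> int:
    """Return the set-aside in whole dollars (assumption: truncated to the dollar)."""
    if extramural_rd_dollars <= THRESHOLD_DOLLARS:
        return 0  # at or below the threshold, no set-aside is required
    # Integer arithmetic avoids floating-point rounding in dollar amounts.
    return extramural_rd_dollars * RATE_BASIS_POINTS // 10_000


if __name__ == "__main__":
    for budget in (80_000_000, 100_000_000, 2_000_000_000):
        print(f"${budget:,} -> ${sbir_set_aside(budget):,}")
```

Under this sketch, a hypothetical agency with $2 billion in extramural R&D would set aside $50 million, while an agency at exactly $100 million would set aside nothing, because the rule applies only to budgets in excess of that amount.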

As the Small Business Innovation Research (SBIR) program approached its twentieth year of operation, the U.S. Congress asked the National Research Council (NRC) to carry out a “comprehensive study of how the SBIR program has stimulated technological innovation and used small businesses to meet federal research and development needs” and make recommendations on improvements to the program.3 The NRC’s charge is, thus, to assess the operation of the SBIR program and recommend how it can be improved.4

This report provides an overview of the NRC assessment. It is a complement to a set of five separate reports that describe and assess the SBIR programs at the Departments of Defense and Energy, the National Institutes of Health, the National Aeronautics and Space Administration, and the National Science Foundation.

The purpose of this introduction is to set out the broader context of the SBIR program. Section 1.1 provides an overview of the program’s history and legislative reauthorizations. It also contrasts the common structure of the SBIR program with the diverse ways it is administered across the federal government. Section 1.2 describes the important role played by SBIR in the nation’s innovation system, explaining that SBIR has no public or private sector substitute. Section 1.3 then lists the advantages and limitations of the SBIR concept, including benefits and challenges faced by entrepreneurs and agency officials. Section 1.4 summarizes some of the main challenges of the NRC study and opportunities for improving SBIR. Finally, Section 1.5 looks at the changing perception of SBIR in the United States and the growing recognition of the SBIR concept around the world as an example of global best practice in innovation policy. The increasing adoption of SBIR-type programs in competitive Asian and European economies underlines the need, here at home, to improve upon and take advantage of this unique American innovation partnership program.

FIGURE 1-1 Dimensions of the SBIR program in 2005.

NOTE: Figures do not include STTR funds. Asterisks indicate those departments and agencies reviewed by the National Research Council.

SOURCE: U.S. Small Business Administration, accessed from <http://tech-net.sba.gov>, July 25, 2006.

1.1

PROGRAM HISTORY AND STRUCTURE

In the 1980s, the country’s slow pace in commercializing new technologies, compared with the global manufacturing and marketing success of Japanese firms in autos, steel, and semiconductors, led to serious concern in the United States about the nation’s ability to compete economically. U.S. industrial competitiveness in the 1980s was frequently cast in terms of American industry’s failure “to translate its research prowess into commercial advantage.”5 The pessimism of some was reinforced by evidence of slowing growth at corporate research laboratories that had been leaders of American innovation in the postwar period, and by the apparent success of the cooperative model exemplified by some Japanese keiretsu.6

Yet, a growing body of evidence, starting in the late 1970s and accelerating in the 1980s, began to indicate that small businesses were assuming an increasingly important role in both innovation and job creation. David Birch, a pioneer in entrepreneurship and small business research, and others suggested that national policies should promote and build on the competitive strength offered by small businesses.7

Meanwhile, federal commissions from as early as the 1960s had recommended changing the direction of R&D funds toward innovative small businesses.8 These recommendations were unsurprisingly opposed by traditional recipients of government R&D funding.9 Although small businesses were beginning to be recognized by the late 1970s as a potentially fruitful source of innovation, some in government remained wary of funding small firms focused on high-risk technologies with commercial promise.

The concept of early-stage financial support for high-risk technologies with commercial promise was first advanced by Roland Tibbetts at the National Science Foundation. As early as 1976, Mr. Tibbetts advocated that the NSF should increase the share of its funds going to innovative, technology-based small businesses. When NSF adopted this initiative, small firms were enthused and proceeded to lobby other agencies to follow NSF’s lead. When there was no immediate response to these efforts, small businesses took their case to Congress and to higher levels of the Executive branch.10

6 Richard Rosenbloom and William Spencer, Engines of Innovation: U.S. Industrial Research at the End of an Era. Boston, MA: Harvard Business Press, 1996.

7 David L. Birch, “Who Creates Jobs?” The Public Interest, op. cit. Birch’s work greatly influenced perceptions of the role of small firms. Over the last 20 years, it has been carefully scrutinized, leading to the discovery of some methodological flaws, namely making dynamic inferences from static comparisons, confusing gross and net job creation, and admitting biases from chosen regression techniques. See S. J. Davis, J. Haltiwanger, and S. Schuh, “Small Business and Job Creation: Dissecting the Myth and Reassessing the Facts,” Working Paper No. 4492, Cambridge, MA: National Bureau of Economic Research, 1993. These methodological fallacies, however, “ha[ve] not had a major influence on the empirically based conclusion that small firms are over-represented in job creation,” according to Per Davidsson. See Per Davidsson, “Methodological Concerns in the Estimation of Job Creation in Different Firm Size Classes,” Working Paper, Jönköping International Business School, 1996.

8 For an overview of the origins and history of the SBIR program, see George Brown and James Turner, “The Federal Role in Small Business Research,” Issues in Science and Technology, Summer 1999, pp. 51-58.

9 See Roland Tibbetts, “The Role of Small Firms in Developing and Commercializing New Scientific Instrumentation: Lessons from the U.S. Small Business Innovation Research Program,” in Equipping Science for the 21st Century, John Irvine, Ben Martin, Dorothy Griffiths, and Roel Gathier, eds., Cheltenham UK: Edward Elgar Press, 1997. For a summary of some of the critiques of SBIR, see Section 1.3 of this Introduction.

10 Ibid.

In response, the White House convened a conference on Small Business in January 1980 that recommended a program for small business innovation research. This recommendation was grounded in:

-

Evidence that a declining share of federal R&D was going to small businesses;

-

Broader difficulties among innovative small businesses in raising capital in a period of historically high interest rates; and

-

Research suggesting that small businesses were fertile sources of job creation.

The wide political appeal of seeing R&D dollars “spread a little more widely than they were being spread before” complemented these policy rationales. Congress responded, under the Reagan administration, with the passage of the Small Business Innovation Development Act of 1982, which established the SBIR program.11

1.1.1

The SBIR Development Act of 1982

The new SBIR program initially required agencies with R&D budgets in excess of $100 million to set aside 0.2 percent of their funds for SBIR. This amount totaled $45 million in 1983, the program’s first year of operation. Over the next six years, the set-aside grew to 1.25 percent.12

The legislation authorizing SBIR had two broad goals13:

-

“to more effectively meet R&D needs brought on by the utilization of small innovative firms (which have been consistently shown to be the most prolific sources of new technologies); and

-

“to attract private capital to commercialize the results of federal research.”

11 Additional information regarding SBIR’s legislative history can be accessed from the Library of Congress. See <http://thomas.loc.gov/cgi-bin/bdquery/z?d097:SN00881:@@@L>.

12 The set-aside is currently 2.5 percent of an agency’s extramural R&D budget.

13 U.S. Congress, Senate, Committee on Small Business (1981), Senate Report 97-194, Small Business Research Act of 1981, September 25, 1981.

1.1.2

The SBIR Reauthorizations of 1992 and 2000

The SBIR program approached reauthorization in 1992 amidst continued worries about the U.S. economy’s capacity to commercialize inventions. Finding that “U.S. technological performance is challenged less in the creation of new technologies than in their commercialization and adoption,” the National Academy of Sciences at the time recommended an increase in SBIR funding as a means to improve the economy’s ability to adopt and commercialize new technologies.14

Following this report, the Small Business Research and Development Enhancement Act (P.L. 102-564), which reauthorized the SBIR program until September 30, 2000, doubled the set-aside rate to 2.5 percent. This increase in the percentage of R&D funds allocated to the program was accompanied by a stronger emphasis on encouraging the commercialization of SBIR-funded technologies.15 Legislative language explicitly highlighted commercial potential as a criterion for awarding SBIR grants.16

The Small Business Reauthorization Act of 2000 (P.L. 106-554) extended SBIR until September 30, 2008. It also called for an assessment by the National Research Council of the broader impacts of the program, including those on employment, health, national security, and national competitiveness.17

1.1.3

Previous Research on SBIR

The current NRC assessment represents a significant opportunity to gain a better understanding of one of the largest of the nation’s early-stage finance programs. Despite its size and 24-year history, the SBIR program has not previously been comprehensively examined. While there have been some previous studies, most notably by the General Accounting Office and the Small Business Administration, these have focused on specific aspects or components of the program.18

14 See National Research Council, The Government Role in Civilian Technology: Building a New Alliance, Washington, DC: National Academy Press, 1992, p. 29.

15 See Robert Archibald and David Finifter, “Evaluation of the Department of Defense Small Business Innovation Research Program and the Fast Track Initiative: A Balanced Approach,” in National Research Council, The Small Business Innovation Research Program: An Assessment of the Department of Defense Fast Track Initiative, Charles W. Wessner, ed., Washington, DC: National Academy Press, 2000, pp. 211-250.

16 In reauthorizing the program in 1992 (P.L. 102-564), Congress expanded the purposes to “emphasize the program’s goal of increasing private sector commercialization developed through Federal research and development and to improve the federal government’s dissemination of information concerning the small business innovation, particularly with regard to woman-owned business concerns and by socially and economically disadvantaged small business concerns.”

17 The current assessment is congruent with the Government Performance and Results Act (GPRA) of 1993: <http://govinfo.library.unt.edu/npr/library/misc/s20.html>. As characterized by the GAO, GPRA seeks to shift the focus of government decision making and accountability away from a preoccupation with the activities that are undertaken, such as grants dispensed or inspections made, to a focus on the results of those activities. See <http://www.gao.gov/new.items/gpra/gpra.htm>.

18 An important step in the evaluation of SBIR has been to identify existing evaluations of SBIR. These include U.S. General Accounting Office, Federal Research: Small Business Innovation Research Shows Success But Can be Strengthened, Washington, DC: U.S. General Accounting Office, 1992; and U.S. General Accounting Office, “Evaluation of Small Business Innovation Can Be Strengthened,” Washington, DC: U.S. General Accounting Office, 1999. There is also an unpublished 1999 SBA study on the commercialization of SBIR that surveys Phase II awards made by non-DoD agencies from 1983 to 1993.

There have been few internal assessments of agency programs.19 The academic literature on SBIR is also limited.20

Writing in the 1990s, Joshua Lerner of the Harvard Business School positively assessed the program, finding “that SBIR awardees grew significantly faster than a matched set of firms over a ten-year period.”21 Underscoring the importance of local infrastructure and cluster activity, Lerner’s work also showed that the “positive effects of SBIR awards were confined to firms based in zip codes with substantial venture capital activity.” These findings were consistent with both the corporate finance literature on capital constraints and the growth literature on the importance of localization effects.22

To help fill this assessment gap, and to learn about a large, relatively under-evaluated program, the National Academies’ Committee on Government-Industry Partnerships for the Development of New Technologies was asked by the Department of Defense to convene a symposium to review the SBIR program as a whole, its operation, and current challenges. Under its chairman, Gordon Moore, Chairman Emeritus of Intel, the Committee convened government policymakers, academic researchers, and representatives of small business for the first comprehensive discussion of the SBIR program’s history and rationale, a review of existing research, and the identification of areas for further research and program improvements.23

The Moore Committee reported that:

-

SBIR enjoyed strong support in parts of the federal government, as well as in the country at large.

-

At the same time, the size and significance of SBIR underscored the need for more research on how well it is working and how its operations might be optimized.

-

There should be additional clarification about the primary emphasis on commercialization within SBIR, and about how commercialization is defined.

-

There should also be clarification on how to evaluate SBIR as a single program that is applied by different agencies in different ways.24

Subsequently, the Department of Defense asked the Moore Committee to review the operation of the SBIR program at Defense with a particular focus on the role played by the Fast Track Initiative. This major review involved substantial original field research, with 55 case studies, as well as a large survey of award recipients. The response rate was relatively high, at 72 percent.25 The review found that the SBIR program at Defense was contributing to the achievement of mission goals (funding valuable innovative projects) and that a significant portion of these projects would not have been undertaken in the absence of the SBIR funding.26 The Moore Committee’s assessment also found that the Fast Track Initiative increases the efficiency of the Department of Defense SBIR program by encouraging the commercialization of new technologies and the entry of new firms to the program.27

More broadly, the Moore Committee found that SBIR facilitates the development and utilization of human capital and technological knowledge.28 Case studies have shown that the knowledge and human capital generated by the SBIR program have economic value and can be applied by other firms.29 And, by acting as a “certifier” of promising new technologies, SBIR awards encourage further private sector investment in an award-winning firm’s technology.

Based on this and other assessments of public-private partnerships, the Moore Committee’s Summary Report on U.S. Government-Industry Partnerships recommended that “regular and rigorous program-based evaluations and feedback is essential for effective partnerships and should be a standard feature,” adding that “greater policy attention and resources to the systematic evaluation of U.S. and foreign partnerships should be encouraged.”30

Drawing on these recommendations, the December 2000 legislation mandated the current comprehensive assessment of the nation’s SBIR program. This NRC assessment of SBIR is being conducted in three phases. The first phase developed a research methodology that was reviewed and approved by an independent National Academies panel of experts. Information available about the program was also gathered through interviews with officials at the relevant federal agencies and through two major conferences where these officials were invited to describe program operations, challenges, and accomplishments. These conferences highlighted the important differences in agency goals, practices, and evaluations. They also served to describe the evaluation challenges that arise from the diversity in program objectives and practices.31

BOX 1-1 The Moore Committee Report on Public-Private Partnershipsa

In a program-based analysis led by Gordon Moore, Chairman Emeritus of Intel, the National Academies Committee on Government-Industry Partnerships for the Development of New Technologies found that “public-private partnerships, involving cooperative research and development activities among industry, universities, and government laboratories can play an instrumental role in accelerating the development of new technologies from idea to market.”

Partnerships Contribute to National Missions

“Experience shows that partnerships work—thereby contributing to national missions in health, energy, the environment, and national defense—while also contributing to the nation’s ability to capitalize on its R&D investments. Properly constructed, operated, and evaluated partnerships can provide an effective means for accelerating the progress of technology from the laboratory to the market.”

Partnerships Help Transfer New Ideas to the Market

“Bringing the benefits of new products, new processes, and new knowledge into the market is a key challenge for an innovation system. Partnerships facilitate the transfer of scientific knowledge to real products; they represent one means to improve the output of the U.S. innovation system. Partnerships help by bringing innovations to the point where private actors can introduce them to the market. Accelerated progress in obtaining the benefits of new products, new processes, and new knowledge into the market has positive consequences for economic growth and human welfare.”

Characteristics of Successful Partnerships

“Successful partnerships tend to be characterized by industry initiation and leadership, public commitments that are limited and defined, clear objectives, cost sharing, and learning through sustained evaluations of measurable outcomes, as well as the application of the lessons to program operations.b At the same time, it is important to recognize that although partnerships are a valuable policy instrument, they are not a panacea; their demonstrated utility does not imply that all partnerships will be successful. Indeed, the high-risk—high-payoff nature of innovation research and development assures some disappointment.”

Partnerships Are a Complement to Private Finance

“Partnerships focus on earlier stages of the innovation stream than many venture investments, and often concentrate on technologies that pose greater risks and offer broader returns than the private investor normally finds attractive.c Moreover, the limited scale of most partnerships—compared to private institutional investments—and their sunset provisions tend to ensure early recourse to private funding or national procurement. In terms of project scale and timing in the innovation process, public-private partnerships do not displace private finance. Properly constructed research and development partnerships can actually elicit ‘crowding in’ phenomena, with public investments in R&D providing the needed signals to attract private investment.”d

The second phase of the study implemented the research methodology. The Committee deployed multiple survey instruments and its researchers conducted case studies of a wide variety of SBIR firms. The Committee then evaluated the results, and developed the recommendations and findings found in this report for improving the effectiveness of the SBIR program.

The third phase of the study will provide an update of the survey and related case studies, as well as explore other issues that emerged in the course of this study. It will, in effect, provide a second snapshot of the program and of the agencies’ progress and challenges.

1.1.4

The Structure and Diversity of SBIR

Eleven federal agencies are currently required to set aside 2.5 percent of their extramural research and development budget exclusively for SBIR awards and contracts. Each year these agencies identify various R&D topics, representing scientific and technical problems requiring innovative solutions, for pursuit by small businesses under the SBIR program. These topics are bundled together into individual agency “solicitations”—publicly announced requests for SBIR proposals from interested small businesses.

A small business can identify an appropriate topic that it wants to pursue from these solicitations and, in response, propose a project for an SBIR grant. The required format for submitting a proposal is different for each agency. Proposal selection also varies, though peer review of proposals on a competitive basis by experts in the field is typical. Each agency then selects through a competitive process the proposals that are found to best meet program selection criteria, and awards contracts or grants to the proposing small businesses.

In this way, SBIR helps the nation capitalize more fully on its investments in research and development.

1.1.4.1

A Three-Phase Program

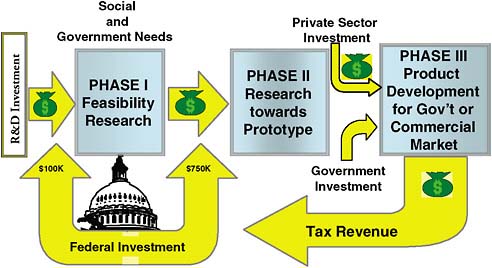

As conceived in the 1982 Act, the SBIR grant-making process is structured in three phases:

-

Phase I grants essentially fund a feasibility study in which award winners undertake a limited amount of research aimed at establishing an idea’s scientific and commercial promise. The 1992 legislation standardized Phase I grants at $100,000. Approximately 15 percent of all small businesses that apply receive a Phase I award.

-

Phase II grants are larger—typically about $500,000 to $850,000—and fund more extensive R&D to develop the scientific and technical merit and the feasibility of research ideas. Approximately 40 percent of Phase I award winners go on to this next step.

FIGURE 1-2 The structure of the SBIR program.

-

Phase III is the period during which Phase II innovation moves from the laboratory into the marketplace. No SBIR funds support this phase. To commercialize their product, small businesses are expected to garner additional funds from private investors, the capital markets, or from the agency that made the initial award. The availability of additional funds and the need to complete rigorous testing and certification requirements at, for example, the Department of Defense or NASA can pose significant challenges for new technologies and products, including those developed using SBIR awards.
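The approximate selection rates given for Phases I and II compound, so only a small share of applicants reach Phase II. The Python sketch below makes that arithmetic concrete. It is a rough illustration under stated assumptions: the function name `expected_awards` is hypothetical, the 15 and 40 percent figures are the approximate program-wide rates from the text rather than agency-specific values, and counts are truncated to whole awards.

```python
# Hypothetical sketch of the approximate SBIR selection "funnel" described in
# the text. Rates are rough program-wide figures, not agency-specific values.
PHASE_I_PERCENT = 15   # ~15 percent of applicants receive a Phase I award
PHASE_II_PERCENT = 40  # ~40 percent of Phase I winners go on to Phase II


def expected_awards(applicants: int) -> tuple[int, int]:
    """Return rough (Phase I, Phase II) award counts, truncated to whole awards."""
    phase_i = applicants * PHASE_I_PERCENT // 100
    phase_ii = phase_i * PHASE_II_PERCENT // 100
    return phase_i, phase_ii


if __name__ == "__main__":
    p1, p2 = expected_awards(1_000)
    # Phase III receives no SBIR funds, so the sketch stops at Phase II.
    print(f"Phase I: {p1}, Phase II: {p2}")
```

For a pool of 1,000 applicants this yields about 150 Phase I awards and 60 Phase II awards, that is, roughly 6 percent of applicants (0.15 × 0.40) reach Phase II under these approximate rates.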

Figure 1-2 provides a schematic of the three phases of SBIR, showing how SBIR helps the nation better leverage the federal government’s substantial investment in research and development. In describing the program’s concept, it also helps to illustrate that the tax system provides a noninvasive means for the federal government to recoup, over time, its investment in small business innovation research: successful small businesses create employment and revenue, and the taxes paid on that payroll and revenue defray some of the program’s cost to the nation’s treasury. Of course, innovation spurred by SBIR and its commercialization create value to the nation far beyond tax revenues. Ultimately, the innovation spurred by SBIR creates products that, in turn, add to consumer surplus in the United States and generate exports around the world.32

1.1.4.2

Significant Program Diversity

Although the SBIR programs at all eleven agencies share the common three-phase structure, they have evolved separately to adapt to the particular missions, scales, and working cultures of the various agencies that administer them. For example, NSF’s operation of its SBIR program differs considerably from that of the Department of Defense, reflecting in large part differences in the size of the agencies as well as the extent to which “research” is coupled with the procurement of goods and services. Within the Department of Defense, in turn, the SBIR program is administered separately by ten different defense organizations, including the Navy, the Air Force, the Army, the Missile Defense Agency, and DARPA. Similarly, 23 institutes and centers at the National Institutes of Health administer their own SBIR programs. This multiplicity of independent operations has led to a diversity of administrative practices, a point discussed below. (See Table 1-1.) This diversity means that the SBIR program at each agency must be understood in its own context, making the task of assessing the overall program a challenging one.33

TABLE 1-1 Variation in Agency Approaches to SBIR


1.1.4.3

The Role of the Small Business Administration

The Small Business Administration (SBA) coordinates the SBIR program across the federal government and is charged with directing its implementation at all 11 participating agencies. Recognizing the broad diversity of the program’s operations, SBA administers the program with commendable flexibility, allowing the agencies to operate their SBIR programs in ways that best address their unique agency missions and cultures.

SBA is charged with reviewing the progress of the program across the federal government. To do this, SBA solicits program information from all participating agencies and publishes it quarterly in a Pre-Solicitation Announcement.34 SBA also operates an online reporting system for the SBIR program that utilizes Tech-Net, a Web-based system linking small technology businesses with opportunities within federal technology programs.35 Through Tech-Net, SBA collects and disseminates essential commercialization and other impact data on SBIR.

33 For a review of the diversity within the SBIR program, see National Research Council, SBIR: Program Diversity and Assessment Challenges, op. cit.

34 Access at <http://www.sba.gov/sbir/mastersch.pdf>.

BOX 1-2 What Is an Innovation Ecosystem?

An innovation ecosystem describes the complex synergies among a variety of collective efforts involved in bringing innovation to market.a These efforts include those organized within, as well as collaboratively across, large and small businesses, universities, research institutes and laboratories, and venture capital firms and financial markets. Innovation ecosystems themselves can vary in size, in composition, and in their impact on other ecosystems. By linking these different elements, SBIR strengthens the innovation ecosystem in the United States, thereby enhancing the nation’s competitiveness.

The idea of an innovation ecosystem builds on the concept of a National Innovation System (NIS) popularized by Richard Nelson of Columbia University. According to Nelson, an NIS is “a set of institutions whose interactions determine the innovative performance … of national firms.”b The idea of an innovation ecosystem highlights the multiple institutional variables that shape how research ideas can find their way to the marketplace. These include, most generally, rules that protect property (including intellectual property) and the regulations and incentives that structure capital, labor, financial, and consumer markets. A given innovation ecosystem is also shaped by shared social norms and value systems, especially those concerning attitudes towards business failure, social mobility, and entrepreneurship.c

In addition to highlighting the interdependencies among the various participants, the idea of an innovation ecosystem also draws attention to their ability to change over time, given different incentives. This dynamic element distinguishes the idea of an “innovation ecosystem” from that of a National Innovation System. In this regard, the term “innovation ecosystem” captures an analytical approach that considers how public policies can improve innovation-led growth by strengthening links within the system. Incentives found within intermediating institutions like SBIR can play a key role in this regard by aligning the self-interest of venture capitalists, entrepreneurs, and other participants with desired national objectives.d

1.2

ROLE OF SBIR IN THE U.S. INNOVATION ECOSYSTEM

By providing scarce pre-venture capital funding on a competitive basis, SBIR encourages new entrepreneurship needed to bring innovative ideas from the laboratory to the market. Further, by creating new information about the feasibility and commercial potential of technologies held by small innovative firms, SBIR awards aid investors in identifying firms with promising technologies. As noted, SBIR awards appear to have a “certification” function, and often act as a stamp of approval for young firms allowing them to obtain resources from outside investors.36

1.2.1

The Importance of Small Business Innovation

Equity-financed small firms are a key feature of the U.S. innovation ecosystem, serving as an effective mechanism for capitalizing on new ideas and bringing them to the market.37 In the United States, small firms are also a leading source of employment growth, generating 60 to 80 percent of net new jobs annually over the past decade.38 These small businesses also employ nearly 40 percent of the United States’ science and engineering workforce.39 Research commissioned by the Small Business Administration has also found that scientists and engineers working in small businesses produce 14 times more patents than their counterparts in large patenting firms in the United States—and these patents tend to be of higher quality and are twice as likely to be cited.40

Small businesses renew the U.S. economy by introducing new products and new, lower-cost ways of doing things, sometimes with substantial economic benefits. They play a key role in introducing technologies to the market, often responding quickly to new market opportunities.41 By contrast, large firms are less prone to pursue technological opportunities in new and emerging areas. They tend to focus more on improving the performance of existing product lines, because they often cannot risk the effect that large losses on failed breakthrough efforts could have on their stock price.42 University research has traditionally focused more on education and publications. The small business entrepreneur often demonstrates the willingness to take on the risks of a new venture, offsetting these risks against the possible rewards of a major (or even moderate) success.

35 Access Tech-Net at <http://tech-net.sba.gov/>.

36 Joshua Lerner, “Public Venture Capital,” in National Research Council, The Small Business Innovation Research Program: Challenges and Opportunities, op. cit.

37 Zoltan J. Acs and David B. Audretsch, Innovation and Small Firms, op. cit.

38 U.S. Small Business Administration, Office of Advocacy, “Small Business by the Numbers,” Washington, DC: U.S. Small Business Administration, 2006. This net gain depends on the interval examined, since small businesses churn more than large ones do. For a discussion of the challenges of measuring small business job creation, see John Haltiwanger and C. J. Krizan, “Small Businesses and Job Creation in the United States: The Role of New and Young Businesses” in Are Small Firms Important? Their Role and Impact, Zoltan J. Acs, ed., Dordrecht: Kluwer, 1999.

39 U.S. Small Business Administration, Office of Advocacy, “Small Business by the Numbers,” op. cit.

40 Ibid.

Indeed, many of the nation’s large, successful, and innovative firms started out as small entrepreneurial firms. Firms such as Microsoft, Intel, AMD, FedEx, Qualcomm, and Adobe, all of which grew rapidly from small beginnings, have transformed how people everywhere work, transact, and communicate. The technologies introduced by these firms continue to create new opportunities for investment and to sustain the rise in the nation’s productivity level.43

These economic and social benefits underscore the need to encourage new equity-based high-technology firms, in the hope that some may develop into larger, more successful firms that create the technological base for the nation’s future competitiveness. This reality is reflected in recent economic theories on the link between economic growth and increased investment in knowledge creation and entrepreneurship. (See Box 1-3.)

1.2.2

Challenges Facing Small Innovative Firms

1.2.2.1

Overcoming Knowledge Asymmetries

Despite their value to the U.S. economy, small business entrepreneurs with new ideas for innovative products often face a variety of challenges in bringing their ideas to market. Because new ideas are by definition unproven, the knowledge that an entrepreneur has about his or her innovation and its commercial potential may not be fully appreciated by prospective investors.44 For example, few investors in the 1980s understood Bill Gates’ vision for Microsoft or, more recently, Larry Page’s and Sergey Brin’s vision for Google.

BOX 1-3 New Growth Theory and the Knowledge-based Economy Neoclassical theories of growth long emphasized the role of labor and capital as inputs.a Technology was treated as exogenous, that is, determined by forces external to the economic system. More recent growth theories, by comparison, emphasize the role of technology and treat it as endogenous, that is, integral to the performance of the economic system. The New Growth Theory, in particular, holds that sustaining growth requires continuing investments in new knowledge creation, calling on policy makers to pay careful attention to the multiple factors that contribute to knowledge creation, including research and development, the education system, entrepreneurship, and openness to trade and investment.b To a considerable extent, knowledge-based economies are distinguished by the changing way that firms do business and by how governments respond in terms of policy.c Key features of a knowledge-based economy include:

1.2.2.2

Overcoming Knowledge Spillovers

Another hurdle for entrepreneurs is the leakage of new knowledge that escapes the boundaries of firms and intellectual property protection. The creator of new knowledge can seldom fully capture the economic value of that knowledge for his or her own firm.45 The benefits of R&D thus accrue to others who did not make the relevant R&D investment. This public-goods problem can inhibit investment in promising technologies for both large and small firms. Overcoming this problem is especially important for small firms focused on a particular product or process.46

1.2.3

The Challenge of Market Commercialization

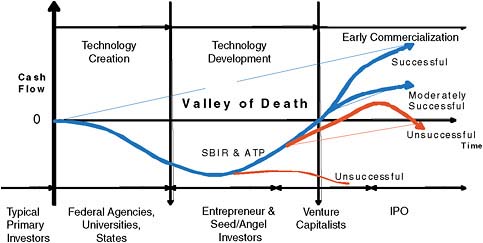

Incomplete and insufficient information for investors, together with the difficulty entrepreneurs face in moving quickly enough to capture a sufficient return on “leaky” investments, can pose substantial obstacles for new firms seeking seed capital. Because attracting investors to support an imperfectly understood, as-yet-undeveloped innovation is especially daunting, the term “Valley of Death” has come to describe the transition period when a developing technology is deemed promising but is too new to validate its commercial potential and thereby attract the capital necessary for its continued development.47 (See Figure 1-3.)

FIGURE 1-3 The Valley of Death.

SOURCE: Adapted from L.M. Murphy and P. L. Edwards, Bridging the Valley of Death—Transitioning from Public to Private Sector Financing, Golden, CO: National Renewable Energy Laboratory, May 2003.

This means that inherent technological value does not lead inevitably to commercialization; many good ideas perish on the way to the market. This reality belies a widespread myth that U.S. venture capital markets are so broad and deep that they are invariably able to identify promising entrepreneurial ideas and finance their transition to market. In reality, angel investors and venture capitalists often have quite limited information on new firms. These potential investors are also often focused on a given geographic area. And, as the recent dot-com boom and bust illustrates, venture capital is also prone to herding tendencies, often following market “fads” for particular sectors or technologies.48

1.2.3.1

The Limits of Angel Investment

Angel investors are typically affluent individuals who provide capital for a business start-up, usually in exchange for equity. Increasingly, they have been organizing themselves into angel networks or angel groups to share research and pool their own investment capital. The U.S. angel investment market accounted for over $25.6 billion in 2006.49 It is a source of start-up capital for many new firms.

ence Policy: A Report to Congress by the House Committee on Science, Washington, DC: Government Printing Office, 1998. Accessed at <http://www.access.gpo.gov/congress/house/science/cp105-b/science105b.pdf>. For an academic analysis of the Valley of Death phenomenon, see Lewis Branscomb and Philip Auerswald, “Valleys of Death and Darwinian Seas: Financing the Invention to Innovation Transition in the United States,” The Journal of Technology Transfer, 28(3-4), August 2003.

48 See Tom Jacobs, “Biotech Follows Dot.com Boom and Bust,” Nature Biotechnology, 20(10):973, October 2002. For an analytical perspective, see Andrea Devenow and Ivo Welch, “Rational Herding in Financial Economics,” European Economic Review, 40(April):603-615, 1996. Devenow and Welch find that when investment managers are assessed on the basis of their performance relative to their peers (rather than against some absolute benchmark), they may end up making investments similar to each other.

Yet the angel market is dispersed and relatively unstructured, with wide variation in investor sophistication, few industry standards and tools, and limited data on performance.50 In addition, most angel investors are highly localized, preferring to invest in new companies that are within driving distance.51 This geographic concentration, the lack of technological focus, and the privacy concerns of many angel investors make angel capital difficult to obtain for many high-technology start-ups, particularly those seeking to provide goods and services to the federal government.

1.2.3.2

The Limits of Venture Capital

Like angel capital, venture capital in the United States is concentrated geographically in the country’s high-technology regions. This clustering pattern creates large gaps in the availability of venture capital in rural areas and in other regions that lack high-technology clusters served by concentrations of venture investors.

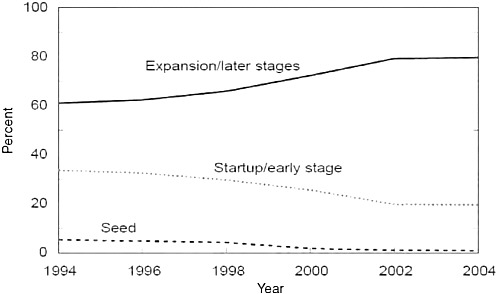

Venture capitalists differ from angel investors, however, in that they typically manage the pooled money of others in a professionally managed fund. Given their obligations to their investors, venture capital firms tend not to invest upstream in the higher-risk early stages of technology commercialization, and they have been moving further downstream in recent years. (See Figure 1-4.) In 2005, venture capitalists in the United States invested $21.7 billion over the course of 2,939 deals. Of this amount, 82 percent went to firms in the later stages of development, with the remaining 18 percent directed to seed and early-stage firms.52

Venture capitalists also typically prefer larger investments, which are easier to manage, than are appropriate for many small innovative technology firms.53 Large venture capital funds are deterred by the cost of meeting due diligence requirements for many small investments with remote and uncertain payoffs. The size of the average venture capital deal was $8.3 million in 2006, whereas the average SBIR Phase III project requires $400,000 to $1 million in funds. Venture capital deal size has also been rising over the past decade,54 and this trend is accelerating.

FIGURE 1-4 Venture capital disbursements by state of financing.

SOURCE: National Science Board, Science and Engineering Indicators 2006, Arlington, VA: National Science Foundation, 2006.

Together, these realities of the angel and venture markets underscore the challenge new firms face in raising private capital to develop and market promising innovations.

1.2.3.3

The Challenge of Federal Procurement

Commercializing SBIR-funded technologies through federal procurement is no less challenging for innovative small companies. Finding private funding to further develop successful SBIR Phase II projects (those innovations that have demonstrated technical and commercial feasibility) for government procurement is often difficult, because the government’s demand for the resulting products is unlikely to be large enough to provide a sufficient return for venture investors.

Venture capitalists also tend to avoid funding firms focused on government contracts, due to the higher costs, regulatory burdens, and limited markets associated with government contracting.55

Institutional biases in federal procurement also hinder the government funding needed to transition promising SBIR technologies. Procurement rules and practices often impose high costs and administrative overheads that favor established suppliers. In addition, many acquisition officers have traditionally viewed the SBIR program as a “tax” on their R&D budgets, representing a “loss” of resources and control, rather than as an opportunity to develop rapid and lower-cost solutions to complex procurement challenges.56 This perception, in turn, can lead to limited managerial attention, weaker mission alignment, and fewer resources being devoted to the program.

Even when government managers see the value of a technology, providing “extra” funding to exploit it in a timely manner can be a challenge, one that requires time, commitment, and, ultimately, the interest of those with budgetary authority for the programs or systems. Attracting such interest and support is not automatic and may often depend on personal relations and advocacy skills rather than on the intrinsic quality of the SBIR project.

1.2.4

The Federal Role in Addressing the Early-stage Financing Gap

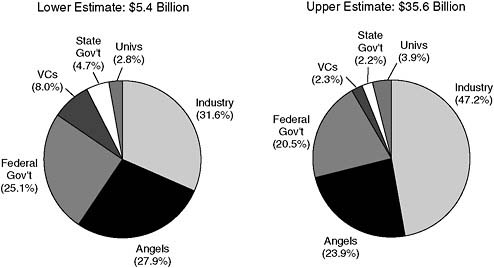

Although business angels and venture capital firms, along with industry, state governments, and universities, provide funding for early-stage technology development, the federal role is significant. Research by Harvard University’s Lewis Branscomb and Philip Auerswald estimates that the federal government provides between 20 and 25 percent of all funds for early-stage technology development.57 (See Figure 1-5.)

This contribution is noteworthy because government awards address segments of the innovation cycle that private investors often decline to fund, finding them too risky or too small.

FIGURE 1-5 Estimated distribution of funding sources for early-stage technology development.

SOURCE: Lewis M. Branscomb and Philip E. Auerswald, Between Invention and Innovation: An Analysis of Funding for Early-Stage Technology Development, Gaithersburg, MD: National Institute of Standards and Technology, 2002, p. 23.

“It does seem that early-stage help by the government in developing platform technologies and financing scientific discoveries is directed exactly at the areas where institutional venture capitalists cannot and will not go.”a

David Morgenthaler, Founding Partner of Morgenthaler Ventures

SBIR is the main source of federal funding for early-stage technology development in the United States. Based on Branscomb and Auerswald’s lower estimate of the distribution of funding sources, SBIR provides over 20 percent of funding for early-stage development from all sources and over 85 percent of federal financial support for direct early-stage development. Moreover, SBIR has no public or private substitutes. (See Box 1-4.) Funding opportunities under SBIR can thus provide early-stage finance for technologies that are not readily supported by venture capitalists, angel investors, or other sources of early-stage funding in the United States.


BOX 1-4 The Federal Role in Comparative Perspective “Small technology firms with 500 or less employees now employ 54.8 percent of all scientists and engineers in US industrial R&D. However, these nearly 6 million scientists and engineers are able to obtain only 4.3 percent of extramural government R&D dollars. In contrast, large and medium firms with more than 500 employees combined employ only 45.2 percent but receive 50.3 percent of government R&D funds. Universities receive 35.3 percent, non-profit research institutions 10.0 percent, and states and foreign countries 1.0 percent. Of the 4.3 percent that goes to small firms 2.5 percent is from SBIR and the related Small Business Technology Transfer (STTR) program. Together they receive less than 10 percent of the funding that large firms receive.”a Roland Tibbetts, “SBIR Renewal and U.S. Economic Security”b


1.3

SBIR: STRENGTHS AND LIMITATIONS

SBIR leverages small business innovation to address government and societal needs in such areas as health, security, the environment, and energy. The strength of the SBIR concept lies in aligning the interests of each participant in the program with the goals of the program. SBIR proposals are industry-initiated, in response to broad solicitations posted by federal agencies. This bottom-up design gives small businesses a direct stake in the outcome of their research. Similarly, each federal agency can use the program to advance its own mission; ownership rests with the many agencies, not with a single “tech agency.”

As described below, both entrepreneurs and agency managers have found SBIR to be a useful tool to help them further their goals in fostering and commercializing new technologies. Although the SBIR concept is a robust one, fundamentally its potential lies in how it is implemented.

1.3.1

SBIR: A Tool for Entrepreneurs to Innovate

A variety of SBIR grant features make the program attractive from the entrepreneur’s perspective. Among these, the awards do not dilute ownership and do not have to be repaid. Apart from the tax system itself, SBIR has no recoupment provisions.


BOX 1-5 Advantages and Limitations of the SBIR Concept

Advantages of the SBIR Concept

SBIR plays a catalytic role at an early stage in the technology development cycle. The awards have the virtue that they are not repayable and they do not dilute ownership or control of a firm’s management. The awards enable the firm to explore new technological options and, in Phase II, can often demonstrate a technology’s potential. The intellectual property rights remain with the firm, creating an opportunity for downstream contracts. Perhaps most importantly, the awards provide a signaling effect, affirming both the quality of the technology and a potential market for it. These advantages are significant and are not matched by private finance or other public-sector mechanisms.

Few private-sector substitutes exist for the SBIR program. The SBIR contributions are also quite distinct from both bank lending and private equity financing of small technology firms. Commercial lending places a financial burden on small businesses with a long product-development cycle. Private equity funding does not require the small business to keep up with interest payments, but it does require the small business to give up a share of ownership and an associated measure of control. Firms that seek equity funding early in their development may be compelled to accept lower valuations that result in a greater loss of control for the same amount of funding than would be the case at a later stage of development.a

SBIR does not compete with the private financial markets. On the contrary, it often facilitates the functioning of financial markets by signaling quality, reducing the information asymmetries that complicate contracting. Such quality signaling partially reduces impediments (discussed above) typically faced by technology entrepreneurs in seeking financing from both private and public sources. In addition to non-SBIR federal funds, the dominant sources of follow-on funding include self-finance, other domestic private companies, foreign companies, and non-venture-capital private equity (angel investors).

Few public-sector substitutes exist for the SBIR program. SBIR continues to offer small firms an opportunity to perform federal R&D in a manner that is minimally burdensome to the firms from a contracting standpoint. While firms in different industries have access to an array of targeted programs to develop technologies for particular government goals, no other government-wide program offers technology-development awards to small business in amounts, or with objectives, comparable to the SBIR program.b

Current Limitations of the SBIR Concept

Overhead Costs. SBIR provides many small awards to small businesses to explore technological options to meet federal mission needs. Inherent in this approach is the disadvantage of high overhead costs for the administering agency, as compared with much larger, “bundled” contracts with a single large provider. Agency managers often require more time and energy to solicit, evaluate, and monitor multiple small awards than for larger contracts. The lack of funding in the program to help defray its management costs works against its acceptance within the host agencies and limits the ability

1.3.1.1

Advantages for Entrepreneurs

Importantly, grant recipients retain rights to intellectual property developed using the SBIR award, with no royalties owed to the government, though the government retains royalty-free use for a period.

As noted previously, being selected to receive SBIR grants also confers a “certification effect” on the small business—a signal to private investors of the technical and commercial promise of the technology held by the small business.58

In these ways, SBIR enhances the opportunities for entrepreneurs to turn an innovative idea into a marketable product. Below, we look at some additional reasons why entrepreneurs find SBIR useful.

Many small innovative businesses see SBIR as a strategic asset in their development, though in different ways. Depending on the firm’s size, relationship to capital, and business development strategy, firms can have quite different objectives in applying to the program. Some seek to demonstrate the potential of promising research. Others seek to fulfill agency research requirements on a cost-effective basis. Still others seek a certification of quality (and the investments that can come from such recognition) as they push science-based products towards commercialization.59

As they strive to move across the Valley of Death, many small firms see SBIR as one element within a more diversified strategy that includes seeking funding from state programs, angel investors, and other sources of early-stage funding, as well as technology validation through collaboration with universities and other companies.

1.3.1.2

SBIR as a Path to Federal Procurement

Many entrepreneurs are at a disadvantage in navigating the federal acquisition process. New firms are often unfamiliar with government regulations and procurement procedures, especially for defense products, and find themselves at a disadvantage vis-à-vis incumbents. To access the federal procurement process, small companies must learn to deal with a complex and sometimes arcane contracting system characterized by many rules and procedures. A major advantage of SBIR awards is that they enable a successful SBIR firm to obtain a “single source” contract for the subsequent development of the technology and product derived from the SBIR award. SBIR thus assists small firms that lack the resources to invest in “contracting overhead” by creating an alternative path for small businesses to enter the government procurement system.

58 This certification effect was initially identified by Josh Lerner, “Public Venture Capital,” in National Research Council, The Small Business Innovation Research Program: Challenges and Opportunities, op. cit.

59 See Reid Cramer, “Patterns of Firm Participation in the Small Business Innovation Research Program in Southwestern and Mountain States,” in National Research Council, The Small Business Innovation Research Program: An Assessment of the Department of Defense Fast Track Initiative, op. cit.

1.3.2

SBIR’s Advantages for Government

SBIR can provide agency officials with technical solutions to help solve operational problems. Faced with an operational puzzle, a program officer can post a solicitation that describes the problem in order to prompt a variety of innovative solutions. These solutions draw on the scientific and engineering expertise found across the nation’s universities and innovative small businesses.

Agency challenges successfully addressed through SBIR solicitations range from rapidly deployable high-performance drones for the Department of Defense (see Box 1-6) to needle-free injectors sought by NIH to facilitate mass immunizations. Drawing on SBIR, the government can leverage private sector ingenuity to address public needs. In the process, it helps to convert ideas into potential products, creating new sources of innovation.

1.3.2.1

A Low-cost Technical Probe

A significant virtue of SBIR is that it can act in some cases as a “low-cost technological probe,” enabling the government to explore, more cheaply, ideas that may hold promise. In some cases, government needs can be met by the “answer” provided through the successful conclusion of the Phase I or Phase II award, either with no further research required or with a product (e.g., an algorithm or software diagnostic) developed. In such cases, a small business successfully completes the requirements and objectives of a Phase II contract, meeting the needs of the customer, without gaining additional commercialization revenues. In other cases, awards can provide valuable negative proofs by identifying dead ends before substantial federal investments are made.

BOX 1-6 Meeting Agency Challenges—The Case of the Navy Unmanned Air Vehicle Originally incorporated as a self-financed start-up firm in 1989, Advanced Ceramics Research (ACR) now manufactures products for a diverse set of industries based on its innovative technologies. Over time, it has participated in the SBIR programs of several federal agencies, including DoD, NASA, the Department of Energy, and the National Science Foundation. ACR is now actively engaged in the development and marketing of the Silver Fox, a small unmanned aerial vehicle (UAV) that was developed with SBIR assistance. While in Washington, DC, in 2000 to discuss projects with Office of Naval Research (ONR) program managers, ACR representatives also had a chance meeting with the Navy’s SBIR program manager, who was interested in small SWARM UAVs. ONR expressed an interest and eventually provided funding to develop a new low-cost small UAV for whale watching around Hawaii, with the objective of avoiding damage from the Navy’s underwater sonic activities. Once developed, however, the UAV’s value as a more general-purpose battlefield surveillance technology became apparent, and the Navy provided additional funding to refine it.

BOX 1-7 Case Study Example: Providing Answers to Practical Problems Aptima, Inc. designed an instructional system for the Navy to improve boat-handling safety by teaching strategies that mitigate shock during challenging wave conditions. The instructional system was also intended to raise skill levels while compressing learning time by creating an innovative learning environment. Phase I of the project developed a training module, and Phase II developed instructional materials, including computer animations, videos, images, and interviews. The concept and the supporting materials were adopted as part of the introductory courses for Special Operations helmsmen, with the goal of reducing injuries and increasing mission effectiveness.

1.3.2.2

Quick Reaction Capability

Because it is flexible and can be organized on an ad hoc basis, SBIR can be an effective means to focus the expertise and innovative technologies dispersed around the country on new national needs rapidly. In its 2002 report, Making the Nation Safer, the National Academies identified SBIR as an existing model of government-industry collaboration that could contribute to current needs, such as the war on terror.60 SBIR, with its established selection procedures and mechanisms for evaluating and granting awards, offers major advantages over funding completely new programs; it can “hit the ground running.”

Speaking at a National Academies conference on how public-private partnerships can help the government respond to terrorism, Carole Heilman of the National Institute of Allergy and Infectious Diseases (NIAID) described how, within a month of the September 11, 2001 terrorist attacks, NIAID put out an SBIR solicitation concerning a particular technical problem encountered in preparing the nation’s biodefense. This solicitation drew about 300 responses within a month, she noted, adding that “it was a phenomenal expression of interest and capability and good application, with extremely thoughtful approaches.”61

1.3.2.3

Diversifying the Government’s Supplier Base

SBIR awards can help government agencies diversify their supplier base. By providing a bridge between small companies and the federal agencies, SBIR can serve as a catalyst for the development of new ideas and new technologies to meet federal missions in health, transport, the environment, and defense.

This potential role of SBIR is particularly relevant to the Department of Defense as it faces new challenges in an era of constrained budgets, stretched manpower, and new threats. Military capabilities in this new era increasingly depend on the invention of new technologies for new systems and platforms. SBIR can play a valuable role in providing innovative technologies that address evolving defense needs.

1.3.3

SBIR’s Role in Knowledge Creation: Publications and Patents

SBIR projects yield a variety of knowledge outputs. These contributions to knowledge are embodied in data, scientific and engineering publications, patents and licenses of patents, presentations, analytical models, algorithms, new research equipment, reference samples, prototype products and processes, spin-off companies, and new “human capital” in the form of enhanced know-how and expertise. One indication of this knowledge creation can be seen in an NRC survey of NIH projects.62 Thirty-four percent of firms that have won SBIR awards from NIH reported having generated at least one patent, and just over half of NIH respondents published at least one peer-reviewed article.63

Projects funded by SBIR grants often involve high technical risk, implying novel and difficult research rather than incremental change. At NSF, for example, of the 54 percent of Phase I projects that did not get a follow-on Phase II, 32 percent did not apply for a Phase II, and of these, half did not do so for technical reasons.64 This suggests a robust program that encourages technical risk, and one that recognizes that not all projects will succeed.

61 See Carole Heilman, “Partnering for Vaccines: The NIAID Perspective” in Charles W. Wessner, Partnering Against Terrorism: Summary of a Workshop, Washington, DC: The National Academies Press, 2005, pp. 67-75.

62 National Research Council, An Assessment of the SBIR Program at the National Institutes of Health, Charles W. Wessner, ed., Washington, DC: The National Academies Press, 2009, Appendix B.

63 Because of data limitations, it was not feasible to apply bibliometric and patent analysis techniques to assess the relative importance of these patents and publications.

64 See National Research Council, An Assessment of the SBIR Program at the National Science Foundation, Charles W. Wessner, ed., Washington, DC: The National Academies Press, 2008.

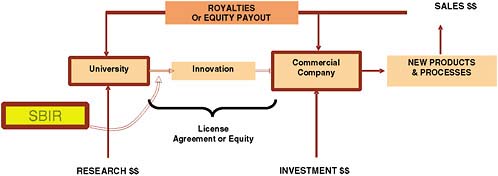

FIGURE 1-6 SBIR and the commercialization of university technology.

SOURCE: Adapted from C. Gabriel, Carnegie Mellon University.

There is also anecdotal evidence concerning “indirect path” effects of SBIR. Investigators and research staff often gain new knowledge and experience from projects funded with SBIR awards that may become relevant in a different context later on—perhaps for another project or another company. These effects are not directly measurable, but interviews and case studies affirm their existence and importance.65

1.3.4

SBIR and the University Connection

SBIR is increasingly recognized as providing a bridge between universities and the marketplace. In the NRC Firm Survey, conducted as a part of this study, over half of respondents reported some university involvement in SBIR projects.66 Of those companies, more than 80 percent reported that at least one founder was previously an academic.

SBIR encourages university researchers to found companies based on their research. Importantly, the availability of the awards, together with the fact that a professor can apply for an SBIR award without founding a company, encourages applications from academics who might not otherwise undertake the commercialization of their own discoveries. In this regard, previous research by the NRC has shown that SBIR awards directly cause the creation of new firms, with positive benefits in employment and growth for the local economy.67 Of course, not all universities in the United States have a strong commercialization culture, and there is great variation in the level of success among those universities that do.68

65 See, for example, the NRC-commissioned case study of National Recovery Technologies, Inc. NRT’s technology, developed with early SBIR support, uses optoelectronics to sort metals and alloys at high speeds and is now being evaluated by the Transportation Security Administration for use in airport security. See National Research Council, An Assessment of the SBIR Program at the National Science Foundation, op. cit.

66 National Research Council, NRC Firm Survey. See Appendix A.

67 National Research Council, The Small Business Innovation Research Program: An Assessment of the Department of Defense Fast Track Initiative, op. cit., p. 35.

1.4 ASSESSING SBIR

Regular program and project analysis of SBIR awards is essential to understanding the program's impact. A focus on analysis is also a means to clarify program goals and objectives, and it requires the development of meaningful metrics and measurable definitions of success. More broadly, regular evaluations contribute to a better appreciation of the role of partnerships among government, universities, and industry. Assessments also help inform the public and policymakers about the opportunities, benefits, and challenges involved in the SBIR innovation award program.

As we have noted before, despite its large size and 25-year history, SBIR has not done especially well with regard to evaluation; as a whole, the program has been the object of relatively limited analysis. This study is the first comprehensive assessment of the SBIR program ever conducted across the departments and agencies charged with managing the bulk of the program's resources.

A major challenge has been the lack of data collection and assessment within the agencies and the limited number and nature of external assessments. Despite the challenges of assessing a diverse and complex program, the NRC assessment has sought to document the program’s achievements, clarify common misconceptions about the program, and suggest practical operational improvements to enhance the nation’s return on the program.

1.4.1 The Challenges of Assessment

At its outset, the NRC’s SBIR study identified a series of assessment challenges that must be addressed.69

1.4.1.1 Recognizing Program Diversity

One major challenge is that the same administrative flexibility that allows each agency to adapt the SBIR program to its particular mission, scale, and working culture makes it difficult, and often inappropriate, to compare programs across agencies. NSF's operation of SBIR differs considerably from that of the Department of Defense, for example, reflecting, in large part, differences in the extent to which “research” is coupled with procurement of goods and services. Although SBIR at each agency shares the common three-phase structure, the SBIR concept is interpreted uniquely at each agency, and each agency's program is best understood in its own context.

68. Donald Siegel, David Waldman, and Albert Link, “Toward a Model of the Effective Transfer of Scientific Knowledge from Academicians to Practitioners: Qualitative Evidence from the Commercialization of University Technologies,” Journal of Engineering and Technology Management, 21(1-2): March-June 2004, pp. 115-142.

69. See National Research Council, SBIR: Program Diversity and Assessment Challenges, op. cit.

1.4.1.2 Different Agencies, Divergent Programs

The SBIR programs operated by the five study agencies (DoD, NIH, NSF, NASA, and DoE) are perhaps as divergent in their objectives, mechanisms, operations, and outcomes as they are similar. Commonalities include:

- The three-phase structure, with an exploratory Phase I focused on feasibility; a longer, better-funded Phase II, usually lasting two years; and, for some agencies, a Phase III in which SBIR projects have some significant advantages in the procurement process but no dedicated funding;
- Program boundaries largely determined by SBA guidelines with regard to funding levels for each phase and eligibility; and
- Shared objectives and authorization from Congress, including adherence to the fundamental congressional objectives for the program and compliance with the 2.5 percent allocation for the program.

However important this shared framework, there is also a profusion of differences among the agencies. In fact, the agencies differ in the objectives assigned to the program, program management structure, award size and duration, selection process, degree of adherence to standard SBA guidelines, and evaluation and assessment activities. No agency shares an electronic application process with any other agency, and there are no shared selection processes, though there are “shared” award-winning companies (but not projects). There are shared outreach activities, but no systematic sharing (or adoption) of best practices.

The following sections summarize some of the most important differences, drawing directly on the more detailed discussion of program management in Chapter 5.

1.4.1.3 Award Size and Duration

Most agencies usually follow the SBA guidelines for award size ($100,000 for Phase I and $750,000 for Phase II) and duration (6 months for Phase I and 2 years for Phase II). However, some agencies have reduced the size of awards (e.g., several DoD components for Phase I, NSF for Phase II), partly to free up funding either to bridge the gap between Phase I and Phase II or to create incentives for companies to find matching funding for an extended Phase II award (e.g., NSF Phase IIB).

NIH, however, has in many cases extended both the size and duration of Phase I and Phase II awards. The 2006 GAO study indicated that more than 60 percent of NIH awards from 2002 to 2005 were above the SBA guidelines; discussions with NIH staff indicate that no-cost extensions have become a standard feature of the NIH SBIR program.

These operational differences reflect the differences in agency objectives, means (or lack thereof) for follow-on funding, and circumstances (e.g., time required for clinical trials at NIH).

1.4.1.4 Balancing Multiple Objectives

Congress, as indicated earlier in this chapter, has mandated four core objectives for the agencies, but has not, understandably, set priorities among them.

Recognizing the importance the Congress has attached to commercialization, all of the agencies have made efforts to increase commercialization from their SBIR programs. They also all make considerable efforts to ensure that SBIR projects are in line with agency research agendas. Nonetheless, there are still important differences among them.

The most significant difference is between acquisition agencies and nonacquisition agencies. The former focus primarily on developing technologies for the agency's own use. At DoD, for example, a primary objective is developing technologies that will eventually be integrated into weapons systems purchased or developed by DoD.

In contrast, the nonacquisition agencies do not, in the main, purchase outputs from their own SBIR programs. These agencies—NIH, NSF, and parts of DoE—are focused on developing technologies for use in the private sector.

This core distinction largely colors the way programs are managed. For example, acquisition programs operate almost exclusively through contracts: award winners contract to perform certain research and to deliver certain specified outputs. Nonacquisition programs operate primarily through grants, which are usually less tightly defined, have less closely specified deliverables, and are viewed quite differently by the agencies, more like the basic research conducted by university faculty in other agency-funded programs.

In short, contract-type research focuses on developing technology to solve specific agency problems and/or provide products, whereas grant-type research funds the activities of researchers.

1.4.1.5 Topics, Solicitations, and Deadlines

Technical topics are used to define acceptable areas of research at all agencies except NIH, where they are viewed as guidelines, not boundaries. There are three kinds of topic-usage structures among the agencies:

- Procurement-oriented approaches, where topics are developed carefully to meet the specific needs of procurement agencies;
- Management-oriented approaches, where topics are used at least partly to limit the number of applications; and
- Investigator-oriented approaches, where topics are used to publicize areas of interest to the agency but not as a boundary condition.

Acquisition agencies (NASA and DoD) use the procurement-oriented approach; NIH uses the investigator-oriented approach; and NSF and DoE use the management-oriented approach.

Agencies publish these topics of interest in their solicitations, the formal notices announcing that awards will be made. Depending on the agency, solicitations can be published annually or more often, and agencies may offer either multiple annual application deadlines (as do NIH and some DoD components) or just one (DoE and, in effect, NSF).

1.4.1.6 Award Selection

Agencies differ in how they select awards. Peer review is widely used, conducted sometimes entirely by reviewers from outside the agency (e.g., NIH), sometimes entirely by internal staff (e.g., DoD), and sometimes by a mix of internal and external reviewers (e.g., NSF). Some agencies use quantitative scoring (e.g., NIH); others do not (e.g., NASA). Some agencies have multiple levels of review, each with specific and significant powers to affect selection; others do not.

Companies unhappy with selection outcomes also have different options. At NIH, they can resubmit their application with modifications at a subsequent deadline. At most other agencies, resubmission is not feasible (where topics are tightly defined, the same topic may not come up again for several years, or at all). Most agencies do not appear to have widely used appeal processes, although this is not well documented.

The agencies also differ in how they handle the specific issue of commercial review—assessing the extent to which the company is likely to be successful in developing a commercial product, in line with the congressional mandate to support such activities.

DoD, for example, has developed a quantitative scorecard to use in assessing the track record of companies that are applying for new SBIR awards. Other agencies do not have such formal mechanisms, and some, such as NIH, do not provide any mechanism for bringing previous commercialization records formally into the selection process.

This brief review of agency differences underscores the need to evaluate the different agency programs separately. At the same time, opportunities to apply best practices concerning selected aspects of the program do exist.

1.4.1.7 Assessing SBIR: Compared to What?

The high-risk nature of investing in early-stage technology means that the SBIR program must be held to an appropriate standard when it is evaluated. An assessment of SBIR should be based on an understanding of the realities of the distribution of successes and failures in early-stage finance. As a point of comparison, Gail Cassell, Vice President for Scientific Affairs at Eli Lilly, has noted that only one in ten innovative products in the biotechnology industry will turn out to be a commercial success. Similarly, venture capital firms generally anticipate that only two or three out of twenty or more investments will produce significant returns.70 In setting metrics for SBIR projects, it is therefore important to have realistic expectations of success rates for new firms, for firms with unproven but promising technologies, and for firms (e.g., those serving DoD and NASA) that are subject to the uncertainties of the procurement process. Systems and missions can be cancelled, and promising technologies may not be taken up, because of perceptions of risk and readiness that understandably condition the acquisition process. Indeed, an unusually high success rate could be a troubling sign, since it might suggest that program managers are not investing in a sufficiently ambitious portfolio of projects.

70. While venture capitalists are a referent group, they are not directly comparable insofar as the bulk of venture capital investments occur in the later stages of firm development. SBIR awards often occur earlier in the technology development cycle than where venture funds normally invest. Nonetheless, returns on venture funding tend to show the same high skew that characterizes commercial returns on the SBIR awards. See John H. Cochrane, “The Risk and Return of Venture Capital,” Journal of Financial Economics, 75(1):3-52, 2005. Drawing on the VentureOne database, Cochrane plots a histogram of net venture capital returns on investments that “shows an extraordinary skewness of returns. Most returns are modest, but there is a long right tail of extraordinary good returns. 15 percent of the firms that go public or are acquired give a return greater than 1,000 percent! It is also interesting how many modest returns there are. About 15 percent of returns are less than 0, and 35 percent are less than 100 percent. An IPO or acquisition is not a guarantee of a huge return. In fact, the modal or ‘most probable’ outcome is about a 25 percent return.” See also Paul A. Gompers and Josh Lerner, “Risk and Reward in Private Equity Investments: The Challenge of Performance Assessment,” Journal of Private Equity, 1(Winter 1997):5-12. Steven D. Carden and Olive Darragh, “A Halo for Angel Investors,” The McKinsey Quarterly, 1, 2004, also show a similar skew in the distribution of returns for venture capital portfolios.

1.4.2 Addressing SBIR Challenges