3

Taking Advantage of New Tools and Techniques

INTRODUCTION

As with virtually every scientific endeavor, clinical effectiveness research can be improved and expedited through innovation. In this case, innovation means the better use of existing tools and techniques as well as the development of entirely new methods and approaches. Understanding these emerging tools and techniques is critical to the discussion of improvements to the clinical effectiveness research paradigm. Better tools and enhanced techniques are fundamental building blocks in redesigning the clinical effectiveness paradigm, and new methods and strategies for evidence development are needed to use these tools to capture and analyze the increasingly complex information and data generated. In turn, better evidence will lead to stronger clinical and policy decisions and set the stage for further research.

Opportunities provided by developments in health information technology are reviewed in Chapter 4. In this chapter we review innovative uses of existing research tools as well as emerging methods and techniques. Part of the reform needed to enhance clinical effectiveness research is a more widespread understanding of different research tools and techniques, including greater clarity about what each can offer the overall research enterprise, both alone and in synergy with other approaches. A further need is broad, substantive support for ongoing development of new approaches and applications of existing tools and techniques that researchers believe may offer more benefits. As noted in Chapter 1, greater attention is needed to understand which approach is best suited for which situation and under what circumstances.

The papers included in this chapter offer observations on improvements needed in the design and interpretation of intervention trials; methods that take better advantage of system-level data; possible improvements in analytic tools, sample size, data quality, organization, and processing; and novel techniques that researchers are beginning to use in conjunction with new information, models, and tools.

Citing models from Duke University, The Society of Thoracic Surgeons (STS), and the Food and Drug Administration’s (FDA’s) Critical Path Clinical Trials Transformation Initiative, Robert M. Califf from Duke University discusses opportunities to improve the efficiency of clinical trials and to reduce their exorbitant costs. Innovations in the structure, strategy, conduct, analysis, and reporting of trials promise to make them less expensive, faster, more inclusive, and more responsive to important questions. Particular attention is needed to identify regulations that improve clinical trial quality and eliminate practices that increase costs without an equal return in value. Finally, establishing “envelopes of creativity” in which innovation is encouraged and supported is essential to maximizing the appropriate use of this methodology.

Confounding is often the biggest issue in effectiveness analyses of large databases. Innovative analytic tools are needed to make the best use of large clinical and administrative databases. Sebastian Schneeweiss from Harvard Medical School observes that instrumental variable analysis is an underused, but promising, approach for effectiveness analyses. Recent developments of note include approaches that exploit the concepts of proxy variables using high-dimensional propensity scores and provider variation in prescribing preference using instrumental variable analysis.

Rejecting any suggestion that “one trial = all trials,” Donald A. Berry from the University of Texas M.D. Anderson Cancer Center makes the case that adaptive and, particularly, Bayesian approaches lend themselves well to synthesizing and combining sources of information, such as meta-analyses, and provide means of modeling and assessing sources of uncertainty appropriately. Therefore, Berry asserts, they are ideally suited for experimental trial design.

Mark S. Roberts of the University of Pittsburgh, representing Archimedes Inc. at the workshop, suggests that physiology-based simulation and predictive models, such as the eponymous Archimedes model, have the potential to augment and enhance knowledge gained from randomized controlled trials (RCTs) and can be used to fill knowledge "gaps" that are difficult or impractical to address using clinical trial methods. Of particular relevance is the potential for these models to perform virtual comparative effectiveness trials.

This chapter concludes with a discussion of the dramatic expansion of information on genetic variation related to common, complex disease and the potential of these insights to improve clinical care. Teri A. Manolio of the National Human Genome Research Institute reviews recent findings from genomewide association studies that will enable examination of inherited genetic variability at an unprecedented level of resolution. She proposes opportunities to better capture and use these data to understand clinical effectiveness.

INNOVATIVE APPROACHES TO CLINICAL TRIALS

Robert M. Califf, M.D.

Vice Chancellor for Clinical Research

Duke University

As we enter the era in which we hope that “learning health systems” (IOM, 2001) will be the norm, the evolution of randomized controlled trials required to meet the tremendous need for high-quality knowledge about diagnostic and therapeutic interventions has emerged as a critical issue. All too often, discussion about medical evidence gravitates toward a comparison of randomized controlled trials and studies based on observational data, rather than toward a serious examination of ways to improve the operational methods of both approaches. My own experience in assessing the relative merits of RCTs versus observational studies dates back more than 25 years (Califf and Rosati, 1981), and recent discussions on this topic remind me of conversations I had as a medical student in 1977 with Eugene Anson Stead, Jr., M.D., the former chair of the Department of Medicine at Duke University. Dr. Stead founded the Duke Cardiovascular Disease Database, which eventually evolved into the Duke Clinical Research Institute; he is credited with helping change cardiovascular medicine from a discipline largely based on anecdotal observation to one based on clinical evidence. Dr. Stead, who was significantly ahead of his time, introduced us to a device not yet in common use—the computer—and urged us to record outcomes data on all of our patients. Further, he stressed that simply collecting information on acute, hospital-based practice was not sufficient; instead, we should add to this computerized collection throughout our patients’ lives.

I firmly believe that this approach, building human systems that take advantage of the power of modern informatics, is the key to improving both RCTs and observational studies. Within the domain of clinical trials, an informatics-based approach holds promise both for pragmatic trials in broad populations and for proof-of-concept (POC) trials intended to elucidate complex biological effects in small groups of people.

In 1988, our research group published a paper in which we concluded that well-designed and carefully executed observational studies could provide research data comparable in quality to those provided by RCTs (Hlatky et al., 1988). We have learned much since then, a point recently driven home during rounds in the Duke Coronary Care Unit (CCU). Time after time, we faced decisions for which a trial would likely have provided invaluable information for our CCU deliberations, had there been one whose enrollment inception time coincided with the point at which we needed to make the clinical decision.

While observational studies can provide useful knowledge, they are inadequate for detecting modest differences in effects between treatments (Peto et al., 1995), because without a common inception point and randomization to equally distribute known and unknown confounding factors, the risk of an invalid answer is substantial (DeMets and Califf, 2002a, 2002b). Innovation in clinical trials, in my view, is mostly concerned with performing them in optimal fashion, so that more knowledge is created more efficiently.

How Can We Foster Quality in Clinical Trials?

The most urgently needed innovation in implementing clinical trials is a more intelligent approach to defining and producing quality. Because randomization is such a powerful tool for comparing alternatives from a common inception point, we should abandon the assumption that the common critiques of RCTs stem from unalterable rules governing the conduct of such trials. Nothing in the nature of clinical trials requires them to be expensive, slow, noninclusive, and irrelevant to the measurement of outcomes that matter to patients and medical decision makers. While innovative statistical methods have provided exciting additions to our capabilities, the main source of innovation in trials must be a focus on the fundamental "blocking and tackling" of clinical trials.

A Structural Framework for Clinical Trials

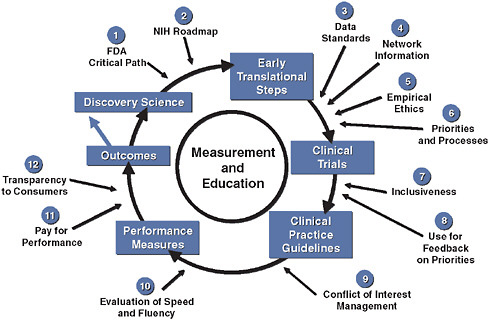

FIGURE 3-1 Innovation in clinical trials: relevance of evidence system.

SOURCE: Copyrighted and published by Project HOPE/Health Affairs as Califf, R. M., R. A. Harrington, L. K. Madre, E. D. Peterson, D. Roth, and K. A. Schulman. 2007. Curbing the cardiovascular disease epidemic: Aligning industry, government, payers, and academics. Health Affairs (Millwood) 26(1):62-74. The published article is archived and available online at www.healthaffairs.org.

We have published a model, shown in simplified form in Figure 3-1, which integrates quantitative measurements of quality and performance into the development cycle of existing and future therapeutics (Califf et al., 2002). Such a model can serve as a basic approach to the development of reliable knowledge about medical care that is necessary but not sufficient for those wishing to provide the best possible care for their patients. Currently, it takes too long to complete this cycle, but if we had continuous, practice-based registries and the ability to randomize within those registries, we could see in real time which patients were included in and excluded from trials. Further, upon completing the study, we could then measure the uptake of the trial's results in practice. Such an approach provides a system wherein everyone contributes to the registry and the results of trials are fed back into the registry in a rapid cycle.

We have invested considerable effort in evaluating the details of the system for generating clinical evidence from the perspective of cardiovascular medicine, where there is a long history of applying scientific discoveries to large clinical trials, which in turn inform clinical practice. Figure 3-1 summarizes the complex interplay of relevant factors. If scientific discoveries are evaluated through proper clinical trials, clinical practice guidelines and performance indicators can be devised, and continuous evaluation through registries can measure improvements in outcomes as the system itself improves. In this context, there are at least a dozen major factors that must be iteratively improved for this system to work more efficiently and at lower cost (Califf et al., 2007).

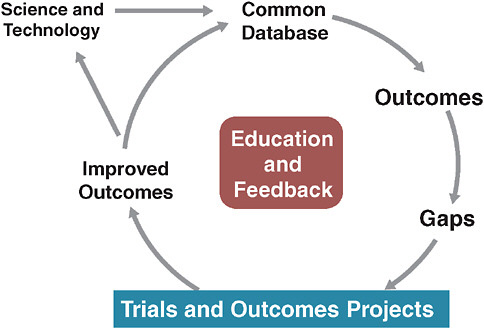

FIGURE 3-2 The Society of Thoracic Surgeons evidence system model.

SOURCE: Derived from Ferguson, T. B., et al. 2000. The STS national database: Current changes and challenges for the new millennium. Committee to establish a national database in cardiothoracic surgery, The Society of Thoracic Surgeons. The Annals of Thoracic Surgery 69(3):680-691.

A specific model of this approach has been implemented by STS (Ferguson et al., 2000). Over time, STS has developed a clinical practice database that is used for quality reporting and, increasingly, for continuously analyzing operative issues and techniques (Figure 3-2). The STS model also allows randomized trials to be conducted within the database.

The most significant aspects of this model lie in its constantly evolving, continuously updated information base and its methods of engaging practitioners in this system by providing continuous education and feedback. Many have assumed that we must wait on fully functional electronic health records (EHRs) for such a system to work. However, we need not wait for some putatively ideal EHR to emerge. Current EHRs have serious shortcomings from the perspective of clinical researchers, since these records must be optimized for individual provider–patient transactions. Consequently, they are significantly suboptimal with respect to coded data with common vocabulary—an essential feature for the kind of clinical research enterprise we envision. This deficit severely hobbles researchers seeking to evaluate aggregated patient information in order to draw inferential conclusions about treatment effects or quality of care. While we await the

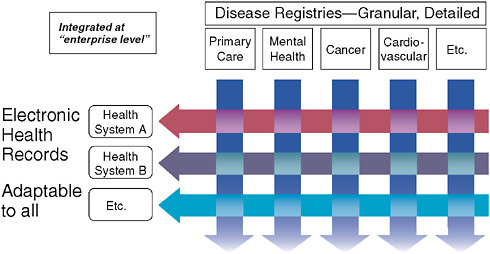

FIGURE 3-3 Fundamental informatics infrastructure—matrix organizational structure.

resolution of issues regarding EHR functionality, the best approach will be to construct a matrix between the EHR and continuous professional-based registries (disease registries) that measure clinical interactions in a much more refined and structured fashion (Figure 3-3). Such a system would allow us to perform five or six times as many trials as can now be done for the same amount of money; even better, such trials would be more relevant to clinical practice. As part of our Clinical and Translational Sciences Award (CTSA) cooperative agreement with the National Institutes of Health (NIH), we are presently working on such a county-wide matrix in Durham County, North Carolina (Michener et al., 2008).

New Strategies for Incorporating Scientific Evidence into Clinical Practice

New efficiencies can be gained by applying innovative informatics-based approaches to the broad pragmatic trials discussed above; however, we also must develop more creative methods of rapidly translating new scientific findings into early human studies. The basis for such POC clinical trials lies in applying an intervention to elucidate whether an intended biological pathway is affected, while simultaneously monitoring for unanticipated effects on unintended biological pathways ("off-target effects"). This process includes acquiring a preliminary indication of whether unintended pathways are also being perturbed, along with a basic understanding of dose–response relationships for both intended and unintended effects. POC studies are performed to advance purely scientific understanding or to inform a decision about whether to proceed to the next stage of clinical investigation. We used to limit ourselves by thinking that we could only perform POC studies in one institution at a time, but we now know that we can perform exactly the same trials, with the same standard operating procedures and the same information systems, in India and Singapore as well as in North Carolina. The basis for this broadened capability, as in pragmatic clinical trials, is the building of clinical research networks that enable common protocols, data structures, and sharing of information across institutions. This broadening of scope affords the ability to rethink the scale, both physical and temporal, of POC clinical trials. The wide variation in costs among these different environments also deserves careful consideration by U.S. researchers.

New Approaches to Old Problems: Conducting Pragmatic Clinical Trials

When considering strategies for fostering innovation in clinical trials, several key points must be borne in mind. The most important is that there exists, particularly in the United States, an entrenched notion that each clinical trial, regardless of circumstances or aims, must be done under precisely the same set of rules, usually codified in the form of standard operating procedures (SOPs). Upon reflection, it is patently obvious that this is not (or should not be) the case; further, acting on this false assumption is impairing the overall efficiency of clinical trials. Instead, the conduct of trials should be tailored to the type of question asked by the trial, and to the circumstances of practice and patient enrollment for which the trial will best be able to answer that question. We need to cultivate environments where creative thought about the pragmatic implementation of clinical trials is encouraged and rewarded (“envelopes of innovation”), and given the existing barriers to changes in trial conduct, financial incentives may be required in order to encourage researchers and clinicians to “break the mold” of entrenched attitudes and practices.

What is the definition of a high-quality clinical trial? It is one that provides a reliable answer to the question that the trial was intended to answer. Seeking "perfection" beyond this goal creates enormous costs while paradoxically reducing the actual quality of the trial by distracting research staff from their primary mission. Obviously, in a trial evaluating a new molecular entity or device for the first time in humans, there are compelling reasons to measure as much as possible about the subjects and their response to the intervention, account for all details, and ensure a very high intensity of data collection. Pragmatic clinical trials, however, require focused data collection in large numbers of subjects; they also take place in the clinical settings where subjects' usual medical interactions occur, which limits the detail of the data that can be collected on each subject. To cite a modified Institute of Medicine definition of quality, "high quality with regard to procedural, recording and analytic errors is reached when the conclusion is no different than if all of these elements had been without error" (Davis, 1999).

Efficacy trials are designed to determine whether a technology (a drug, device, biologic, well-defined behavioral intervention, or decision support algorithm) has a beneficial effect in a specific clinical context. Such investigation requires carefully controlled entry criteria and precise protocols for intervention. Comparisons are often made with a placebo or a less relevant comparator (these types of studies are not sufficiently informative for clinical decision making because they do not measure the balance of risk and benefit over a clinically relevant period of time). Efficacy trials—which speak to the fundamental question, “can the treatment work?”—still require a relatively high level of rigor, because they are intended to establish the effect of an intervention on a specific end-point in a carefully selected population.

In contrast, pragmatic clinical trials determine the balance of risk and benefit in “real world” practice; i.e., “Should this intervention be used in practice compared with relevant alternatives?” (Tunis et al., 2003). The population of such a study is allowed to be “messy” in order to simulate the actual conditions of clinical practice; operational procedures for the trial are designed with these decisions in mind. The comparator is pertinent to choices that patients, doctors, and health systems will face, and outcomes typically are death, clinical events, or quality of life. Relative cost is important and the duration of follow-up must be relevant to the duration that will be recommended for the intervention in practice.

When considering pragmatic clinical trials, I would argue that we actually do not want professional clinical trialists or outstanding practitioners in the field to dominate our pool of investigators. Rather, we want to incorporate real-world conditions by recruiting typical practitioners who practice the way they usually do, with an element of randomization added to the system to provide, at minimum, an inception time and a decision point from which to begin the comparison. A series of recently published papers presents a detailed summary of the principles of pragmatic clinical trials (Armitage et al., 2008; Baigent et al., 2008; Cook et al., 2008; Duley et al., 2008; Eisenstein et al., 2008; Granger et al., 2008; Yusuf et al., 2008).

The Importance of Finding Balance in Assessing Data Quality

If we examine the quality of clinical trials from an evidence-based perspective, we might emerge with a very different system (Yusuf, 2004). We know, for example, that an on-site monitor almost never detects fraud, largely because anyone clever enough to think they can get away with fraud is likely to be adroit at hiding the signs of their deception from inspectors. A better way to detect fraud is through statistical process control, performed from a central location. For example, a common indicator of fraudulent data is that the data appear to be "too perfect." If data in a clinical trial appear ideal, they are unlikely to be valid: that is not the way human beings behave. Table 3-1 summarizes methods for monitoring errors in clinical trials that take advantage of a complete perspective on the design, conduct, and analysis of trials.

TABLE 3-1 Taxonomy of Clinical Errors

| Error Type | Monitoring Method |
|---|---|
| Design error | Peer review, regulatory review, trial committee oversight |
| Procedural error | Training and mentoring during site visits; simulation technology |
| Recording error: random | Central statistical monitoring; focused site monitoring based on performance metrics |
| Recording error: fraud | Central statistical monitoring; focused site monitoring based on unusual data patterns |
| Analytical error | Peer review, trial committees, independent analysis |
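To make central statistical monitoring for "too perfect" data concrete, here is a minimal sketch, assuming each site reports one roughly normally distributed measurement per patient and that all sites share a common true variance. It flags sites whose within-site variance is implausibly small relative to the pooled within-site variance. The function names, the 0.001 threshold, and the example readings are hypothetical; a real monitoring plan would combine many such checks.

```python
from statistics import NormalDist, variance


def pooled_within_site_variance(site_data):
    """Pool the sample variances of all sites, weighting by degrees of freedom."""
    num = sum((len(v) - 1) * variance(v) for v in site_data.values())
    den = sum(len(v) - 1 for v in site_data.values())
    return num / den


def low_variance_p_value(values, pooled_var):
    """Approximate lower-tail p-value for a site variance this small by chance.

    Under normality, (n - 1) * s^2 / sigma^2 is chi-square with k = n - 1
    degrees of freedom; the Wilson-Hilferty cube-root transformation turns it
    into an approximately standard normal z-score.
    """
    k = len(values) - 1
    x = k * variance(values) / pooled_var
    z = ((x / k) ** (1 / 3) - (1 - 2 / (9 * k))) / (2 / (9 * k)) ** 0.5
    return NormalDist().cdf(z)


def flag_too_perfect_sites(site_data, alpha=0.001):
    """Return IDs of sites whose data vary much less than expected."""
    pooled_var = pooled_within_site_variance(site_data)
    return sorted(
        site
        for site, values in site_data.items()
        if low_variance_p_value(values, pooled_var) < alpha
    )


# Hypothetical systolic blood pressure readings (mmHg) from three sites.
example_sites = {
    "A": [100, 135, 118, 142, 125, 110, 130, 98, 145, 122,
          115, 138, 105, 128, 150, 112, 119, 133, 102, 126],
    "B": [128, 104, 141, 117, 133, 96, 122, 139, 110, 147,
          125, 101, 136, 119, 113, 144, 108, 130, 121, 99],
    "C": [120, 121] * 10,  # suspiciously uniform values
}

print(flag_too_perfect_sites(example_sites))  # ['C']
```

A flag of this kind is a prompt for focused site monitoring, as in Table 3-1, not proof of fraud.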

Recent work sheds light on how to take advantage of natural units of practice (Mazor et al., 2007). For example, when a policy rather than an individual treatment is being evaluated, it makes sense to randomize clusters of practices rather than individual patients. Several studies that followed this approach were conducted as embedded experiments within ongoing registries, where the capacity to feed information back immediately within the registry resulted in improvements in practice. Although the system is not perfect, there is no question that it makes possible the rapid improvement of practice and allows us to answer questions with randomized trials in that setting.
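Cluster randomization of this kind can be sketched as follows, assuming hypothetical practice and patient identifiers. Whole practices, not patients, are randomized, and every patient inherits the arm of his or her practice. The sketch also computes the standard design effect, 1 + (m − 1) × ICC, which shows why cluster designs need more patients than individually randomized ones; the intracluster correlation (ICC) of 0.05 is an assumed value for illustration, not an estimate from the studies cited.

```python
import random


def randomize_practices(practice_ids, seed=2008):
    """Shuffle practices with a fixed seed and split them evenly between two arms."""
    rng = random.Random(seed)
    shuffled = sorted(practice_ids)  # sort first so the result depends only on the seed
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {pid: ("policy" if i < half else "usual care") for i, pid in enumerate(shuffled)}


def assign_patients(patients_by_practice, practice_arm):
    """Each patient receives the arm assigned to his or her practice."""
    return {
        patient: practice_arm[practice]
        for practice, patients in patients_by_practice.items()
        for patient in patients
    }


def design_effect(mean_cluster_size, icc):
    """Variance inflation due to clustering: 1 + (m - 1) * ICC."""
    return 1 + (mean_cluster_size - 1) * icc


# Hypothetical registry: 10 practices with 50 patients each.
patients_by_practice = {
    f"practice_{p}": [f"patient_{p}_{i}" for i in range(50)] for p in range(10)
}
practice_arm = randomize_practices(patients_by_practice)
patient_arm = assign_patients(patients_by_practice, practice_arm)

de = design_effect(mean_cluster_size=50, icc=0.05)  # assumed ICC
print(f"design effect = {de:.2f}")
print(f"{len(patient_arm)} patients carry roughly the information of "
      f"{len(patient_arm) / de:.0f} independently randomized patients")
```

With 50 patients per practice and an ICC of 0.05, the design effect is 3.45, so 500 patients carry roughly the information of 145 independently randomized patients.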

Disruptive Technologies and Resistance to Change

All this, however, raises the question: If we are identifying more efficient ways to do clinical trials, why are they not being implemented? The problem lies in the issue of disruptive technology: initiating a new way of doing clinical trials is disruptive to the old way. Such disruption upsets an industry that has become oriented, both financially and philosophically, toward doing things in the accustomed manner. In less highly regulated areas of society, technologies develop in parallel and the "winners" are chosen by the marketplace. Such economic Darwinian selection causes companies that remain wedded to old methods to go out of business when their market is captured by an innovator offering a disruptive technology that works better. In most markets, technological and organizational innovation drives cost and quality improvement. Providing protection for innovation that allows those factors to play out naturally in the context of medical research might lead to improved research practices, thereby generating more high-quality evidence and, eventually, improving outcomes.

In our strictly regulated industry, however, regulators bear the mantle of authority, and the risk that applying new methods will result in lower quality is not easily tolerated. This in turn creates a decided barrier to innovation, given the extraordinarily high stakes. There is a question that is always raised in such discussions: If you do human trials less expensively and more efficiently, can you prove that you are not hurting patient safety?

What effect is all of this having? A major impact is on cost: Many recent cardiovascular clinical outcomes trials have cost more than $350 million to perform. In large part this expense reflects procedures and protocols that are essentially unnecessary and unproductive but are nonetheless required under the prevailing interpretation of clinical trial regulations by pharmaceutical and device companies and the global regulatory community.

Costing out the consequences of the current regulatory regime can yield staggering results. As one small example, a drug already on the market was associated with a side effect that is commonly seen in the disease for which it is prescribed. The manufacturer believed that it was required to send the adverse event report by overnight express to all 2,000 investigators, with instructions that they review it carefully, classify it, and send it to their respective IRBs for further review and classification. The estimated cost of that exercise, for a single event that contributed no new knowledge about the balance of risks and benefits of the drug, was $450,000.

Starting a trial in the United States can cost $14,000 per site before the first patient is enrolled, simply because of current regulations and procedures governing trial initiation, including IRB evaluation and contracting. A Cooperative Study Group funded by the National Cancer Institute recently published an analysis demonstrating that at least 481 discrete processing steps are required for an average Phase II or Phase III cancer protocol to be developed and shepherded through the various approval processes (Dilts et al., 2008). The result is a delay of more than 2 years from the time a protocol is developed until patient enrollment can begin, which means that "the steps required to develop and activate a clinical trial may require as much or more time than the actual completion of a trial."

We must ask, for example: Do the benefits of documenting pre-study evaluation visits or pill counts really outweigh the costs of collecting such data? Do we need 800 different IRBs reviewing protocols for large multicenter trials, or could we conduct studies using central IRBs or collaborative agreements among institutional IRBs? Is all the monitoring and safety reporting that we do really necessary (or even helpful)?

Transforming Clinical Trials

All is not dire, however. One promising new initiative is the Clinical Trials Transformation Initiative (CTTI), a public–private partnership (PPP) under the FDA Critical Path Initiative that is intended to map ways to better trials (www.trialstransformation.org). A collaboration among the FDA, industry, academia, patient advocates, and nonacademic clinical researchers, CTTI is designed to conduct empirical studies that will provide evidence to support redesign of the overall framework of clinical trials and to eliminate practices that increase costs but provide no additional value. CTTI's explicit mission is to identify practices whose adoption will increase the quality and efficiency of clinical trials.

Another model that we could adapt from the business world is the concept of establishing “envelopes of creativity.” In short, we need to create spaces within organizations where people can innovate with a certain degree of creative freedom, and where financial incentives reward this creativity. Pediatric clinical trials offer a good example of this approach. Twenty years ago, clinical trials were rarely undertaken in children; many companies argued that they simply could not be done. Pediatricians led the charge to point out that the end result of such an attitude was a shocking lack of knowledge about the risks and benefits of drugs and devices in children. Congress was persuaded to require pediatric clinical trials and grant patent extensions for companies that performed appropriate trials in children (Benjamin et al., 2006). The result was a significant increase in the number of pediatric trials and a corresponding growth in knowledge about the effects of therapeutics in children (Li et al., 2007).

Conclusions

If we all agree that clinical research must be improved in order to provide society with answers to critical questions about medical technologies and best practices, a significant transformation is needed in the way we conduct the clinical trials that provide us with the most reliable medical evidence. We need not assume that trials must be expensive, slow, noninclusive, and irrelevant to the measurement of important outcomes that matter most to patients and clinicians. Instead, smarter trials will become an integral part of practice in learning health systems as they are embedded into the information systems that form the basis for clinical practice; over time, these trials will increasingly provide the foundation for integrating modern genomics and molecular medicine into the framework of clinical care.

INNOVATIVE ANALYTIC TOOLS FOR LARGE CLINICAL AND ADMINISTRATIVE DATABASES

Sebastian Schneeweiss, M.D., Sc.D.

Harvard Medical School

BWH DEcIDE Research Center on Comparative Effectiveness Research

Instrumental Variable Analyses for Comparative Effectiveness Research Using Clinical and Administrative Databases

Physicians and insurers need to weigh the effectiveness of new drugs against existing therapeutics in routine care to make decisions about treatment and formularies. Because FDA approval of most new drugs requires demonstrating efficacy and safety against placebo, manufacturers have limited interest in conducting head-to-head trials against existing therapeutics. Comparative effectiveness research seeks to provide head-to-head comparisons of treatment outcomes in routine care. Because healthcare utilization databases record drug use and selected health outcomes for large populations in a timely way and reflect routine care, they may be the preferred data source for comparative effectiveness research.

Confounding caused by selective prescribing based on indication, severity, and prognosis threatens the validity of nonrandomized database studies, which often have limited clinical detail. Several recent developments may bring the field closer to acceptable validity, including approaches that exploit the concept of proxy variables using high-dimensional propensity scores and approaches that exploit provider variation in prescribing preference using instrumental variable analysis. This paper provides a brief overview of those two approaches and discusses their strengths, weaknesses, and future developments.
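To illustrate how a high-dimensional propensity score (hd-PS) approach can screen many claims codes as proxy variables, the following minimal sketch ranks candidate binary covariates by the Bross formula for the bias that a single unadjusted confounder could induce. We assume this ranking step as it is commonly described for hd-PS; the full procedure also generates candidate covariates from several data dimensions and then fits a propensity score model, neither of which is shown. The covariate names and numbers are hypothetical, and this is not the author's implementation.

```python
import math


def bross_bias(p_c1, p_c0, rr_cd):
    """Multiplicative bias from one binary confounder (Bross formula).

    p_c1:  prevalence of the covariate among treated patients
    p_c0:  prevalence of the covariate among comparison patients
    rr_cd: relative risk of the outcome with versus without the covariate
    """
    return (p_c1 * (rr_cd - 1) + 1) / (p_c0 * (rr_cd - 1) + 1)


def top_k_covariates(candidates, k):
    """Return the k covariates with the largest |log(bias)|, that is, the
    greatest potential to confound regardless of direction."""
    scored = {
        name: abs(math.log(bross_bias(*params)))
        for name, params in candidates.items()
    }
    return sorted(scored, key=scored.get, reverse=True)[:k]


# Hypothetical codes: (prevalence in treated, prevalence in comparison, RR for outcome)
candidates = {
    "dx_prior_gi_bleed": (0.20, 0.05, 3.0),
    "rx_proton_pump_inhibitor": (0.40, 0.20, 1.5),
    "proc_colonoscopy": (0.10, 0.10, 2.0),
    "dx_hypertension": (0.50, 0.45, 0.8),
}

print(top_k_covariates(candidates, k=2))
```

A code that is equally common in both groups (here the colonoscopy code) scores zero however strongly it predicts the outcome, which mirrors the two-condition definition of a confounder.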

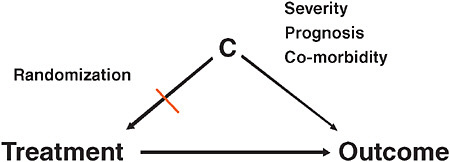

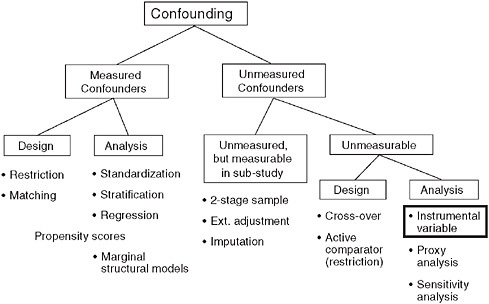

FIGURE 3-4 Explanation of confounding factors in comparative effectiveness research.

Very briefly, what is confounding? Patient factors become confounders ("C" in Figure 3-4) if they are associated with treatment choice and are also independent predictors of the outcome. When researchers are interested in the causal effect of a treatment on an outcome, factors that independently predict the study outcome, such as severity of the underlying condition, prognosis, and comorbidity, also drive the treatment decision. Once both conditions are fulfilled, you have a confounding situation and you get biased results. In analyses of large claims databases, confounding is one of the biggest issues in comparative effectiveness research. Randomization breaks the association between patient factors and treatment assignment. In Figure 3-4, once you break either of the two arms of the tent, you no longer have confounding.
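A small simulation can make the two conditions for confounding concrete. In this sketch all probabilities are invented for illustration: a binary severity marker C raises the outcome risk and, in the observational scenario, also raises the chance of treatment, while the treatment itself has no effect at all. The crude risk difference is therefore pure confounding bias; stratifying on the measured confounder, or randomizing treatment, removes it.

```python
import random


def simulate(n, randomized, seed=1):
    """Return (severe, treated, event) tuples; the true treatment effect is zero."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        severe = rng.random() < 0.3                    # confounder C
        if randomized:
            p_treat = 0.5                              # coin flip, independent of C
        else:
            p_treat = 0.8 if severe else 0.2           # sicker patients treated more often
        treated = rng.random() < p_treat
        p_event = 0.30 if severe else 0.05             # outcome depends on C only
        event = rng.random() < p_event
        rows.append((severe, treated, event))
    return rows


def risk_difference(rows):
    """Event risk among treated minus event risk among untreated."""
    treated = [event for _, t, event in rows if t]
    untreated = [event for _, t, event in rows if not t]
    return sum(treated) / len(treated) - sum(untreated) / len(untreated)


def stratified_risk_difference(rows):
    """Within-stratum risk differences, averaged with weights equal to stratum size."""
    total = 0.0
    for level in (False, True):
        stratum = [r for r in rows if r[0] == level]
        total += len(stratum) * risk_difference(stratum)
    return total / len(rows)


observational = simulate(100_000, randomized=False)
trial = simulate(100_000, randomized=True)
print(f"crude RD, observational:      {risk_difference(observational):+.3f}")
print(f"stratified RD, observational: {stratified_risk_difference(observational):+.3f}")
print(f"crude RD, randomized:         {risk_difference(trial):+.3f}")
```

With these settings the crude observational risk difference should come out near +0.13 even though the treatment does nothing, while the other two estimates should be close to zero. Stratification works here only because C is measured; unrecorded GI symptoms, as in the coxib example, are the kind of confounder it cannot address.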

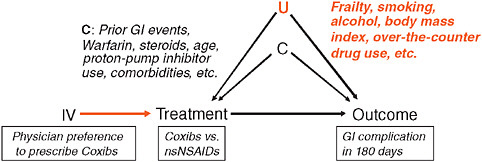

Comparative effectiveness research spans a large continuum: some questions are heavily confounded by design while others are not, and the dividing line usually falls between intended and unintended treatment effects. An example is the use of selective COX-2 inhibitors (coxibs) and the risk of cardiac events. In 1999 and 2000, when coxibs were first marketed, nobody was thinking that independent cardiovascular risk factors would influence the decision of whether to treat with coxibs or with nonselective nonsteroidal anti-inflammatory drugs (nsNSAIDs), so there was no association between those risk factors and treatment choice. Consequently, there is very little potential for confounding when studying unintended cardiovascular outcomes. However, when we studied coxib use and the reduction in gastric toxicity, a heavily marketed advantage of coxibs, risk factors for future gastrointestinal (GI) events drive the decision to use coxibs; GI symptoms, although often quite subtle and likely not recorded in databases, nevertheless drive the treatment decision and may therefore cause confounding.

As Figure 3-5 suggests, epidemiologists have a whole toolbox of techniques to control confounding by measured factors (Schneeweiss, 2006). But what about the unmeasured confounders, such as the subtle GI symptoms that are not recorded in claims data, but nevertheless are driving the treatment decision?

FIGURE 3-5 Dealing with unmeasured confounding factors in claims data analyses.

SOURCE: Schneeweiss, S. 2006. Sensitivity analysis and external adjustment for unmeasured confounders in epidemiologic database studies of therapeutics. Pharmacoepidemiology and Drug Safety 15:291-303. Reprinted with permission from Wiley-Blackwell, Copyright © 2006.

We can sample a subpopulation and collect more detailed data there, but what options are there when such subsampling to measure clinical details is not possible or practical? One strategy is to use instrumental variables. An instrumental variable (IV) is an unconfounded substitute for the actual treatment. In this approach, instead of modeling treatment and outcome, researchers model the relation between the instrument (which is unrelated to patient characteristics and therefore unconfounded) and the outcome, and then rescale the estimate for the correlation between the instrumental variable and the actual treatment.
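The rescaling step can be illustrated with the simple Wald ratio estimator for a binary instrument: the difference in outcome risk between instrument levels divided by the difference in treatment probability between them. The sketch below is a hedged illustration under hypothetical assumptions (a binary instrument such as a preference indicator, one unmeasured confounder `u`, and a true risk difference of -0.05 for treatment that is the same for every patient); the function names and numbers are invented, not the estimator or data of any study cited here.

```python
import random
from statistics import fmean


def simulate_iv(n=200_000, seed=1):
    """Hypothetical (z, t, y) records generated with an unmeasured confounder u."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = int(rng.random() < 0.5)                          # instrument, independent of u
        u = int(rng.random() < 0.3)                          # unmeasured confounder
        t = int(rng.random() < 0.2 + 0.4 * z + 0.3 * u)      # treatment depends on z and u
        y = int(rng.random() < 0.10 + 0.20 * u - 0.05 * t)   # true treatment effect: -0.05
        rows.append((z, t, y))
    return rows


def naive_risk_difference(rows):
    """Treated minus untreated outcome risk, ignoring u (which is unmeasured)."""
    return (fmean(y for z, t, y in rows if t == 1)
            - fmean(y for z, t, y in rows if t == 0))


def wald_iv(rows):
    """Instrument-outcome difference rescaled by the instrument-treatment difference."""
    dy = fmean(y for z, t, y in rows if z == 1) - fmean(y for z, t, y in rows if z == 0)
    dt = fmean(t for z, t, y in rows if z == 1) - fmean(t for z, t, y in rows if z == 0)
    return dy / dt


rows = simulate_iv()
print(round(naive_risk_difference(rows), 3), round(wald_iv(rows), 3))
```

In this made-up setup the confounder pulls the naive contrast toward zero (no apparent benefit), while the Wald ratio lands near the true -0.05, because `z` is independent of `u` and affects `y` only through `t`.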

Key assumptions are that the instrumental variable is not associated with either measured or unmeasured confounders and that it is not related to the outcome other than through the actual treatment. Both conditions are necessary for instrumental variables to produce valid results. Consequently, in working with such instruments, researchers have to identify a kind of quasi-random treatment assignment in the real world. For the purposes of this paper, two sources are readily identifiable:

Interruption in Medical Practice

This quasi-random treatment assignment can be caused by sudden and massive interruptions of treatment patterns, for example by regulatory changes. An example is the FDA advisory on aprotinin, which reduced the medication's use by 50 percent, a massive shift. For the same patient who is a candidate for aprotinin, a cardiac surgeon would likely choose a different course of treatment before and after the advisory. A similar example is the evolution of coronary stents: a patient coming for a percutaneous procedure on one day might be treated with a bare-metal stent, but a year later, after the rapid adoption of drug-eluting stents, that same patient might receive a drug-eluting stent.

Strong Treatment Preference

Several papers have contributed to our understanding of this valuable class of instruments for evaluating the comparative effectiveness of therapeutics, which includes distance to a specialist, geographic area, physician prescribing preference, and hospital formularies (Brookhart et al., 2006; McClellan et al., 1994; Stukel et al., 2007). A valid preference-based instrument reflects a quasi-random treatment choice mechanism. For example, some hospitals have certain drugs on formulary and others don't, but patients do not choose one hospital over another based on whether a particular medication is on formulary.

Figure 3-6 presents an example focused on the use of coxibs and nsNSAIDs, with GI complications as the outcome and physician preference for prescribing coxibs versus nsNSAIDs as the instrument (Schneeweiss et al., 2006). This nightmare for everyone writing treatment guidelines might be the dream of an epidemiologist: The same patients get treated differently by different physicians; some physicians always prescribe coxibs and some physicians never prescribe coxibs to patients who need pain therapy (Schneeweiss et al., 2005; Solomon et al., 2003).
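One way to operationalize physician prescribing preference, in the spirit of the preference-based instruments cited above but assumed here rather than taken from this paper, is to code the instrument for each new prescription as the drug the same physician chose for the previous patient. A minimal sketch, with a hypothetical record layout of `(physician_id, day, drug)` tuples and a hypothetical function name:

```python
def previous_prescription_instrument(records):
    """Attach a preference instrument to each (physician_id, day, drug) record.

    The instrument is 1 if the same physician's previous prescription was a
    coxib, 0 if it was an nsNSAID, and None for a physician's first record.
    """
    last_drug = {}
    result = []
    for physician, day, drug in sorted(records, key=lambda r: (r[0], r[1])):
        previous = last_drug.get(physician)
        instrument = None if previous is None else int(previous == "coxib")
        result.append((physician, day, drug, instrument))
        last_drug[physician] = drug
    return result


records = [("A", 1, "coxib"), ("A", 2, "nsNSAID"), ("B", 1, "nsNSAID"),
           ("A", 3, "coxib"), ("B", 5, "coxib")]
for row in previous_prescription_instrument(records):
    print(row)
```

Records whose instrument is `None` would normally be excluded; prescriptions by the same physician on the same day would need a tie-breaking rule that this sketch does not define.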

FIGURE 3-6 IV estimation of the association between NSAIDs and GI complication.

SOURCE: Adapted by permission from Macmillan Publishers, Ltd. Clinical Pharmacology & Therapeutics 82:143-156, Copyright © 2007.

Some confounders, such as the use of steroids and other medications, can be measured with information drawn from claims data. However, unmeasured confounders will remain, for example body mass index and the use of over-the-counter drugs. Such information is usually not available in claims data, which raises the question of how a conventional multivariate-adjusted analysis compares with an instrumental variable analysis based on physician preference. Data not shown here indicate that the risk difference estimate for GI complications with coxibs in a conventional multivariate analysis is around 0, meaning "no association." What we would expect, of course, is a protective effect. When we did the instrumental variable analysis of coxibs and GI toxicity (not shown), we saw a negative risk difference, indicating a protective effect of coxibs compared with nsNSAIDs. This is an example where the confounding is strong and the confounding factor is either not measured in claims data or is measured only to a small extent.

Let us consider three core assumptions about instrumental variables (Angrist, 1996). The first is that the instrument is related to the actual exposure (otherwise it cannot be an instrument) and is a strong predictor of treatment. Here, the assumption is that physician prescribing preference strongly predicts future treatment choices. This assumption is empirically testable. In comparison with IV analyses from economics, the physician prescribing preference IV is stronger than most, but not all, published examples (Rassen, 2008).
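Because the first assumption is testable, a quick check is how much the treatment probability differs across levels of a binary instrument. The sketch below computes that difference and the squared z-statistic for it, which approximates the first-stage F statistic for a single binary instrument. The function name and counts are hypothetical, and the often-quoted "F above 10" rule of thumb from econometrics is only a rough guide.

```python
import math


def first_stage_strength(pairs):
    """pairs: (z, t) tuples with binary instrument z and binary treatment t.

    Returns the difference in treatment probability between z=1 and z=0 and the
    squared z-statistic for that difference (an approximate first-stage F).
    """
    n1 = sum(1 for z, _ in pairs if z == 1)
    n0 = len(pairs) - n1
    p1 = sum(t for z, t in pairs if z == 1) / n1
    p0 = sum(t for z, t in pairs if z == 0) / n0
    se = math.sqrt(p1 * (1 - p1) / n1 + p0 * (1 - p0) / n0)
    diff = p1 - p0
    return diff, (diff / se) ** 2


# Hypothetical: 70% of patients of "coxib-preferring" physicians get a coxib, versus 30%.
pairs = [(1, 1)] * 70 + [(1, 0)] * 30 + [(0, 1)] * 30 + [(0, 0)] * 70
diff, f_stat = first_stage_strength(pairs)
print(round(diff, 2), round(f_stat, 1))
```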

A second assumption is that the instrument should not be associated with any measured or unmeasured patient characteristics. Assessing this assumption is more difficult than assessing the first. One approach is to examine the extent to which the instrument achieves balance in the measured covariates between the two groups it defines. This involves summarizing all of the measurable individual covariates into a single metric, the Mahalanobis distance, which accounts for the covariance between individual patient factors. In this case, physician preference, across a variety of instrument definitions, led to a substantial reduction in imbalance among observed patient characteristics (Rassen, 2008). The hope is that when the instrument improves balance in the measured covariates, there will be a corresponding improvement in the unmeasured covariates. This differs from the balance achieved by propensity score matching, which is limited to the measured patient characteristics and their correlates (Seeger et al., 2005).
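The balance metric can be sketched as follows: take the difference in mean covariate vectors between two groups (for the instrument check, the groups defined by the instrument) and scale it by the inverse of the pooled within-group covariance matrix. This is a minimal pure-Python sketch with hypothetical data and function names; real analyses would use a numerical library, and the exact metric in the cited work may differ in detail.

```python
import math


def solve(matrix, vector):
    """Solve matrix @ x = vector by Gauss-Jordan elimination with partial pivoting."""
    n = len(vector)
    aug = [row[:] + [vector[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def mahalanobis_imbalance(group_a, group_b):
    """Mahalanobis distance between the covariate means of two groups,
    using the pooled within-group covariance matrix."""
    k = len(group_a[0])

    def means(group):
        return [sum(row[j] for row in group) / len(group) for j in range(k)]

    mean_a, mean_b = means(group_a), means(group_b)
    diff = [mean_a[j] - mean_b[j] for j in range(k)]
    cov = [[0.0] * k for _ in range(k)]
    for group, m in ((group_a, mean_a), (group_b, mean_b)):
        for row in group:
            for i in range(k):
                for j in range(k):
                    cov[i][j] += (row[i] - m[i]) * (row[j] - m[j])
    dof = len(group_a) + len(group_b) - 2
    cov = [[value / dof for value in row] for row in cov]
    x = solve(cov, diff)                       # x = inverse(cov) @ diff
    return math.sqrt(sum(d * xi for d, xi in zip(diff, x)))


# Hypothetical standardized covariates for patients in each instrument group
group_a = [[1, 0], [3, 0], [2, 1], [2, -1]]
group_b = [[0, 0], [2, 0], [1, 1], [1, -1]]
print(mahalanobis_imbalance(group_a, group_b))
```

A smaller distance between instrument groups than between actual treatment groups would be the pattern described in the text.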

A third assumption is that the instrument should have no direct relationship with the outcome other than through the actual treatment. In the case of the treatment preference instrument, one can attempt to test this assumption empirically through what is colloquially called the "good doc/bad doc" model, which suggests that treatment preference may be correlated with other physician characteristics that relate to better outcomes. For example, physicians who generally practice better medicine might have a preference for coxibs over other NSAIDs. This creates a physician-level correlation and therefore introduces confounding. To test this assumption in a study of the effectiveness of antipsychotics, other quality-of-care measures, such as prescribing long-acting benzodiazepines or problematic tricyclic antidepressant prescribing, were assessed. Among general practitioners, preference was not associated with these measures of prescribing quality, reducing the chance of violating this third assumption (Brookhart et al., 2007).

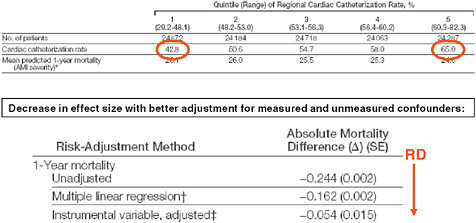

Another example used regional variation in cardiac catheterization rates in patients with acute myocardial infarction as an instrument (Stukel et al., 2007). As seen in Figure 3-7, patients in this study were grouped by quintile of regional cardiac catheterization rate. In the lowest quintile, 43 percent of patients received cardiac catheterization; in the highest quintile, 65 percent did.

One could argue that there shouldn't be anything different between these populations, because patients did not select their residence according to whether their regional cardiac catheterization rate is high. If this argument holds, then some patients who did not receive catheterization would have received it had they happened to live in another region. Thus there is quasi-random treatment assignment for these patients.
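When the instrument is a set of regions or quintiles, one standard estimator (two-stage least squares with the group indicators as instruments) reduces to a size-weighted regression of each group's outcome rate on its treatment rate. The sketch below assumes that formulation; only the 43 and 65 percent catheterization rates come from the text, and every other number, including the mortality rates and group sizes, is invented for illustration.

```python
def grouped_iv_slope(groups):
    """groups: (n_patients, treatment_rate, outcome_rate) for each instrument level.

    Returns the size-weighted least-squares slope of outcome_rate on
    treatment_rate: the change in outcome risk per unit increase in the
    probability of treatment induced by the instrument.
    """
    total = sum(n for n, _, _ in groups)
    t_bar = sum(n * t for n, t, _ in groups) / total
    y_bar = sum(n * y for n, _, y in groups) / total
    cov = sum(n * (t - t_bar) * (y - y_bar) for n, t, y in groups)
    var = sum(n * (t - t_bar) ** 2 for n, t, _ in groups)
    return cov / var


# Hypothetical quintiles: catheterization rates from 43% to 65%, made-up mortality
quintiles = [(25_000, 0.43, 0.257), (25_000, 0.50, 0.250), (25_000, 0.55, 0.245),
             (25_000, 0.60, 0.240), (25_000, 0.65, 0.235)]
print(round(grouped_iv_slope(quintiles), 3))
```

In this invented example the slope of -0.1 would mean about 10 fewer deaths per 100 additional patients catheterized because of where they live.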

FIGURE 3-7 Regional variation in cardiac catheterization and risk of death.

SOURCE: Journal of the American Medical Association 297(3):278-285. Copyright © 2007 American Medical Association. All rights reserved.

FIGURE 3-8 Time as an instrumental variable.

SOURCE: Johnston, K. M., P. Gustafson, A. R. Levy, and P. Grootendorst. 2008. Use of instrumental variables in the analysis of generalized linear models in the presence of unmeasured confounding with applications to epidemiological research. Statistics in Medicine 27(9):1539-1556. Reproduced with permission of John Wiley & Sons, Ltd.

Looking at the effect estimates in Figure 3-7, we find that the protective effect of cardiac catheterization in patients with acute myocardial infarction, 24 fewer deaths per year per 100 patients in an unadjusted analysis, shrinks to 16 deaths prevented in the multivariate-adjusted regression and to only 5 with the instrumental variable regression.
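Converting these absolute differences into the number of patients who would need catheterization to prevent one death (100 divided by deaths prevented per 100 patients) shows how much the conclusion depends on the analytic method. This is simple arithmetic on the three figures quoted above; the function name is illustrative.

```python
def number_needed_to_treat(deaths_prevented_per_100):
    """Patients treated per death prevented, from deaths prevented per 100 patients."""
    return 100 / deaths_prevented_per_100


for method, prevented in [("unadjusted", 24), ("multivariate-adjusted", 16),
                          ("instrumental variable", 5)]:
    print(f"{method}: about {number_needed_to_treat(prevented):.1f} patients per death prevented")
```

The three methods imply roughly 4, 6, and 20 patients per death prevented, a fivefold spread.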

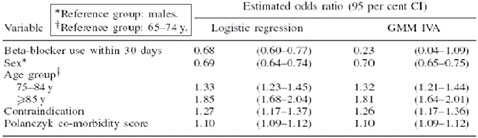

One final example (Figure 3-8) uses time as an instrumental variable. The question here concerns beta-blocker use after hospitalization for heart failure and 1-year mortality, specifically whether beta-blocker use is associated with reduced mortality. After some landmark trials had been published, beta-blocker use in patients with heart failure increased substantially. The investigators defined a binary instrument indicating whether a patient was treated before or after this increase in beta-blocker use. As the figure shows, the odds ratio estimated with standard logistic regression was 0.68, whereas the instrumental variable estimate was 0.23. Without suggesting which is "right," we see that there is a considerable difference between the two estimates.

The most frequently mentioned limitation of instrumental variables is that two of the critical assumptions are not fully testable and must instead be argued using contextual knowledge. Several empirical tests have been suggested to partially evaluate IV assumptions, but ultimately we cannot prove that the assumptions are fully valid. However, readers may be reminded that conventional regression analyses also rest on assumptions, including that the model is specified correctly, i.e., that all confounders are measured and included in the model, an assumption that is inherently untestable. Another limitation is lower statistical efficiency as a consequence of the two-stage estimation process. In large databases with tens of thousands of people exposed to a drug therapy, that is usually a minor issue.
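For a rough sense of the efficiency cost: under standard large-sample approximations (homoskedastic errors and comparable residual variance), the variance of an IV estimate exceeds that of an ordinary regression estimate by about 1/r², where r is the correlation between instrument and treatment. The sketch assumes a binary instrument and a binary treatment; the function name and probabilities are hypothetical.

```python
import math


def iv_variance_inflation(q, p1, p0):
    """Approximate IV-to-regression variance ratio, 1 / corr(Z, T) ** 2.

    q:  P(Z = 1) for the binary instrument Z
    p1: P(T = 1 | Z = 1); p0: P(T = 1 | Z = 0) for the binary treatment T
    """
    p_bar = q * p1 + (1 - q) * p0                      # overall treatment probability
    corr = math.sqrt(q * (1 - q)) * (p1 - p0) / math.sqrt(p_bar * (1 - p_bar))
    return 1 / corr ** 2


print(round(iv_variance_inflation(0.5, 0.69, 0.29), 2))
```

With a 50/50 instrument that moves treatment from 29 to 69 percent, the factor is a little over 6, so an IV analysis needs roughly six times as many patients for the same precision, which tens of thousands of exposed patients can usually absorb.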

Comparative effectiveness research should routinely explore whether a valid instrumental variable is identifiable in settings where important confounders remain unmeasured. One should search for random components in the treatment choice process, which will sometimes lead to a valid instrument. We have found that the physician prescribing preference instrument is worth considering in many situations in drug effectiveness research. We have further recommended that instrumental variable analyses be secondary to conventional regression modeling until we better understand the qualities of preference-based instruments and how best to test IV assumptions empirically. We also suggest performing sensitivity analyses to assess how much violations of IV assumptions may change the primary effect estimate (Brookhart, 2007).
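One simple form of such a sensitivity analysis (a sketch, not necessarily the method of the cited paper) asks how the Wald estimate changes if the instrument had a direct effect `delta` on outcome risk, violating the third assumption: subtract the assumed direct effect from the instrument-outcome difference before rescaling. The function name and inputs are hypothetical.

```python
def iv_sensitivity(dy, dt, direct_effects):
    """Wald IV estimates after removing an assumed direct instrument-outcome effect.

    dy: outcome risk difference between instrument levels
    dt: treatment probability difference between instrument levels
    direct_effects: assumed direct effects (delta) of the instrument on outcome risk
    """
    return {delta: (dy - delta) / dt for delta in direct_effects}


# Hypothetical: the instrument lowers risk by 2 points and raises treatment by 40 points
for delta, estimate in iv_sensitivity(-0.02, 0.40, [-0.01, 0.0, 0.01]).items():
    print(f"delta={delta:+.2f}: IV estimate {estimate:+.3f}")
```

Here a direct effect of just 1 percentage point shifts the estimate by 2.5 points, because any bias in the numerator is amplified by 1/dt.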

In conclusion, instrumental variable analyses are currently underutilized but very promising approaches for comparative effectiveness research using nonrandomized data. They can lead to substantial improvements, particularly in situations with strong unmeasured confounding. The prospect of reducing residual confounding comes at the price of assumptions that are only partly testable. Much research remains to be done, particularly on developing better methods to empirically assess the validity of IV assumptions and on systematic screens for instrument candidates.

ADAPTIVE AND BAYESIAN APPROACHES TO STUDY DESIGN

Donald A. Berry, Ph.D.

Head, Division of Quantitative Sciences

Professor and Frank T. McGraw Memorial Chair for Cancer Research

Chairman, Department of Biostatistics The University of Texas M.D. Anderson Cancer Center

Modern clinical studies are subject to the most rigorous of scientific standards. In particular, modern research relies heavily on the randomized clinical study that was introduced by A. Bradford Hill in the 1940s (MRC Streptomycin in Tuberculosis Studies Committee, 1948). Applying randomization in a clinical research setting was an enormous advance and it revolutionized the notion of treatment comparisons. For a variety of reasons, mostly coincidence, the RCT became tied to the frequentist approach to statistical inference. In this approach the inferential unit is the study itself, and the conventional measure of inference is the level of statistical significance. In the early days of the RCT the sample size was fixed in advance. Over time, preplanned interim analyses were incorporated to allow for stopping the study early for sufficiently conclusive results.

Randomization will continue to be important in clinical research. However, randomization is difficult and expensive to effect, and there are legitimate ways of learning without randomizing. Moreover, learning can take place at any time during a study, not just when accrual is stopped and sufficient follow-up information has been obtained. The goal of this chapter is to describe an approach to clinical study design that improves on randomization in two ways. One is to make RCTs more flexible, using data that accrue during the study to guide the study's course. The other is to incorporate different sources of information to enable better conclusions about comparative effectiveness. Both use the Bayesian approach to statistics (Berry, 1996, 2006). This approach is ideal for both purposes. As regards the first, Bayes' rule provides a formalism for updating knowledge with each new piece of information that is obtained, with updates occurring at any time. As regards the second, the Bayesian approach is inherently synthetic. Its principal measures of inference are the probabilities of hypotheses based on the totality of information available at the time.

Précis for Frequentist Statistics

Historically, the standard statistical measures used in clinical research have been frequentist. Frequentist conclusions are tailored to and driven by the study's design. Probability calculations are restricted to the so-called "sample space," the set of outcomes possible for the design used. Making these calculations requires assuming a particular mechanism that produces the observations. An especially important assumption is that the experimental treatment being evaluated is ineffective, the "null hypothesis." Other hypotheses can be assumed as well, including that the experimental treatment has a particular specified advantage.

The most familiar frequentist inferential measure is the “p-value,” or observed statistical significance level. This is the probability of observations in the sample space as extreme or more extreme than the results actually observed, calculated assuming the null hypothesis. To make this calculation requires finding the probabilities (under the null hypothesis) of results that are potentially observable. It also requires ordering the possible results of the experiment so that “more extreme results” can be identified to enable adding probabilities over these results.
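For a single-arm study with a binary response, the sample space is the number of responders, ordered naturally from fewest to most, and the p-value is an upper-tail sum under the null response rate. A minimal sketch, assuming a one-sided exact binomial test; the function name and numbers are hypothetical.

```python
from math import comb


def binomial_p_value(responses, n, p_null):
    """One-sided p-value: probability under the null response rate p_null of
    observing at least `responses` responders among n patients."""
    return sum(comb(n, k) * p_null ** k * (1 - p_null) ** (n - k)
               for k in range(responses, n + 1))


print(binomial_p_value(8, 20, 0.2))   # 8 responders out of 20, null rate 20%
```

The range `responses .. n` is the "as extreme or more extreme" set; a different design would need a different ordering of its outcomes.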

An important frequentist calculation made in advance of a study is its statistical power. This is the probability of achieving statistical significance in the study (defined as having a p-value of 0.05 or smaller) when the truth is that the experimental treatment has some particular benefit.
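Power for the same kind of exact binomial test can be computed by first finding the smallest responder count that would be significant at the 0.05 level under the null, then asking how likely that count is under an assumed true benefit. Again a sketch, with hypothetical function names and rates.

```python
from math import comb


def upper_tail(c, n, p):
    """P(X >= c) for X ~ Binomial(n, p)."""
    return sum(comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(c, n + 1))


def critical_value(n, p_null, alpha=0.05):
    """Smallest responder count c with P(X >= c | p_null) <= alpha."""
    return next(c for c in range(n + 2) if upper_tail(c, n, p_null) <= alpha)


def power(n, p_null, p_alt, alpha=0.05):
    """Probability of reaching the critical value when the true rate is p_alt."""
    return upper_tail(critical_value(n, p_null, alpha), n, p_alt)


print(critical_value(10, 0.5), round(power(10, 0.5, 0.9), 3))
```

Because the sample space is discrete, the attained significance level (here 11/1024 for 10 patients) can fall well below 0.05.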

In all of the above calculations the design must be completely described in advance, for otherwise the probabilities in the sample space, and even the sample space itself, will be unknown. And the study must be complete, having followed the design as specified in advance. The mathematics are easiest when the sample size is fixed and treatment assignments do not depend on the interim results. But frequentist measures can be calculated (perhaps only via simulation) for any prospective design, however complicated. One potential stumbling block in a complicated study is identifying an ordering of the study results. There is no natural way of ordering study results in the frequentist approach when the study has a complicated design. For example, there is no good frequentist approach to answer questions such as, "Given the current results of the study, how much credibility should I place in the null hypothesis as opposed to competing hypotheses?" That makes it difficult to alter the course of the study on the basis of those results.

Précis for Bayesian Statistics

There are many publications describing the Bayesian approach, for example, Berry (2006) and Spiegelhalter (2004). I will give a brief description here, highlighting some points of special importance in clinical study design. In the Bayesian approach, anything that is unknown, including hypotheses, has a probability. So the null hypothesis has a probability. And this probability can be calculated at any time: at the end of the study, during the study, and at the beginning of the study. The last of these is called a "prior probability." Probabilities calculated during or after a study are based on whatever results are available at the time and are called "posterior probabilities." For example, a Bayesian can always answer the question in the previous paragraph by giving the current (posterior) probability of the null hypothesis.
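A minimal sketch of such a posterior probability, assuming a single-arm study with binary responses and a conjugate Beta prior (uniform by default). The "null hypothesis" is taken to be that the response rate is at most some threshold, and the hypothetical function returns the current probability that the threshold is exceeded. For integer Beta parameters the posterior tail can be computed exactly with binomial sums, which avoids external libraries.

```python
from math import comb


def prob_rate_exceeds(threshold, successes, n, a0=1, b0=1):
    """Posterior probability that the response rate exceeds `threshold` after
    `successes` responses in n patients, starting from a Beta(a0, b0) prior.
    a0 and b0 must be positive integers (Beta(1, 1) is the uniform prior)."""
    a, b = a0 + successes, b0 + n - successes
    m = a + b - 1
    # For integer a, b: P(Beta(a, b) <= x) = P(Binomial(a + b - 1, x) >= a)
    cdf = sum(comb(m, k) * threshold ** k * (1 - threshold) ** (m - k)
              for k in range(a, m + 1))
    return 1 - cdf


print(prob_rate_exceeds(0.3, 0, 0))    # prior probability under a uniform prior
print(prob_rate_exceeds(0.2, 8, 20))   # after 8 responses in 20 hypothetical patients
```

The same function can be called before, during, or after the study; only the counts change.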

The Bayesian approach has a characteristic that is very important in designing clinical studies: It enables calculating probabilities of future observations based on previous observations. Frequentists can calculate probabilities of future observations only by assuming particular hypotheses. In the Bayesian approach predictive probabilities do not require assuming a particular hypothesis because these probabilities are averages with respect to the current posterior probabilities of the various hypotheses.
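For binary outcomes with a Beta posterior, the predictive distribution of the number of responders among the next m patients is beta-binomial: the binomial probability averaged over the posterior for the response rate, so no single hypothesis has to be assumed. A hedged sketch under that conjugate assumption, with hypothetical names and numbers:

```python
from math import comb, exp, lgamma


def log_beta(x, y):
    """Logarithm of the Beta function B(x, y)."""
    return lgamma(x) + lgamma(y) - lgamma(x + y)


def predictive_prob_at_least(r, m, successes, n, a0=1.0, b0=1.0):
    """Predictive probability of at least r responses among the next m patients,
    given `successes` in n patients so far and a Beta(a0, b0) prior."""
    a, b = a0 + successes, b0 + n - successes
    return sum(comb(m, k) * exp(log_beta(k + a, m - k + b) - log_beta(a, b))
               for k in range(r, m + 1))


# Hypothetical: 8 responses in the first 20 patients; chance of >= 10 in the next 20
print(predictive_prob_at_least(10, 20, successes=8, n=20))
```

Quantities like this are what an adaptive design consults when deciding, for example, whether continuing accrual is likely to pay off.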

The online learning aspect of the Bayesian approach makes it ideal for building adaptive designs. If a study’s design is developed as the study is being conducted, which is possible in the Bayesian approach, it is impossible to calculate the study’s false-positive rate. This is why I insist on building designs prospectively. It is more work because one must consider many possibilities that will not arise in the actual trial: “What would I want to do if the data after 40 patients are as follows: …?” The various “operating characteristics” of any prospective study design, including its false-positive rate, can be calculated. Except in the simplest of adaptive designs, such calculation will require simulation.
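The kind of simulation meant here can be sketched for a hypothetical single-arm design: up to 40 patients, a look after every 10, and early stopping for success whenever the posterior probability that the response rate exceeds 20 percent tops 0.975 (uniform prior). Simulating many trials with the true rate set at 20 percent estimates the false-positive rate; setting it higher estimates power. All design values and function names are assumptions for illustration.

```python
import random
from math import comb


def prob_rate_exceeds(threshold, successes, n):
    """Posterior P(response rate > threshold) under a uniform Beta(1, 1) prior."""
    a, b = 1 + successes, 1 + n - successes
    m = a + b - 1
    cdf = sum(comb(m, k) * threshold ** k * (1 - threshold) ** (m - k)
              for k in range(a, m + 1))
    return 1 - cdf


def trial_declares_success(true_rate, rng, looks=(10, 20, 30, 40), p0=0.2, cutoff=0.975):
    """Simulate one trial; stop at the first look where P(rate > p0) > cutoff."""
    successes = enrolled = 0
    for look in looks:
        while enrolled < look:
            successes += rng.random() < true_rate
            enrolled += 1
        if prob_rate_exceeds(p0, successes, enrolled) > cutoff:
            return True
    return False


def rejection_rate(true_rate, n_sims=2000, seed=0):
    """Fraction of simulated trials that declare success."""
    rng = random.Random(seed)
    return sum(trial_declares_success(true_rate, rng) for _ in range(n_sims)) / n_sims


print("false-positive rate:", rejection_rate(0.2))
print("power at a 50% response rate:", rejection_rate(0.5))
```

If the simulated false-positive rate is too high, the cutoff is raised and the simulation rerun, all before the first patient is enrolled.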

Clinical Studies with Adaptive Designs

Clinical studies, including RCTs, are usually static in the sense that sample size and treatment assignment are fixed in advance. Results observed during the study are not used to guide the study's course. There are exceptions. One is a two-stage Phase II cancer trial in which stopping is possible after the first stage if the results are either very promising or very discouraging. Also, Phase III and Phase IV trial designs usually prescribe interim analyses for early stopping in case one treatment arm is performing much better than the other. However, these methods are crude and limited in the design modifications that are possible. In particular, interim analyses are allowed at only a small number of epochs, limiting the ability to adjust course in mid-study. In addition, traditional early stopping criteria in late-phase studies are so conservative that few such trials stop early in practice.
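Operating characteristics of a two-stage Phase II design can be computed exactly. The sketch below assumes one common structure, which allows stopping after stage 1 only for discouraging results: stop if responses are at or below r1 of n1; otherwise continue to n patients and call the drug promising if total responses exceed r. The values 1/10 and 5/29 and the function names are used only as an example.

```python
from math import comb


def binom_pmf(k, n, p):
    """Binomial probability of exactly k responses in n patients."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)


def two_stage_characteristics(p, n1, r1, n, r):
    """Exact operating characteristics at true response rate p.

    Stage 1 enrolls n1 patients and stops if responses <= r1; otherwise the
    trial continues to n patients and the drug is called promising if total
    responses exceed r. Returns (P(early termination), P(called promising)).
    """
    early_stop = sum(binom_pmf(x1, n1, p) for x1 in range(r1 + 1))
    n2 = n - n1
    promising = 0.0
    for x1 in range(r1 + 1, n1 + 1):
        needed = max(0, r + 1 - x1)        # stage-2 responses still required
        promising += binom_pmf(x1, n1, p) * sum(
            binom_pmf(x2, n2, p) for x2 in range(needed, n2 + 1))
    return early_stop, promising


for rate in (0.1, 0.3):   # hypothetical uninteresting and target response rates
    print(rate, two_stage_characteristics(rate, n1=10, r1=1, n=29, r=5))
```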

The simplicity of studies that have static designs makes them appealing inferential tools. But such studies are costly, in both time and resources. Late-phase clinical trials tend to be large. Large clinical trials are expensive, which increases the cost of health care. And large studies use patient resources that might be used more effectively for other investigations. Moreover, a large sample size means exposing many patients to a treatment that may be ineffective and perhaps even harmful. Despite being large, static studies too often reach their full accrual goal and prescribed patient follow-up time only to conclude that the scientific goal was not achieved.

A more flexible approach is to use the information that accrues in a study to modify its subsequent course. Such designs are adaptive in that modifications depend on the interim results. Among the modifications possible are stopping the study early, changing eligibility criteria, expanding accrual (by adding additional clinical sites), extending accrual beyond the study’s original sample size if its conclusion is still not clear, dropping or adding arms (including combinations of other arms) or doses, switching from one clinical phase to another, and shifting focus to subsets of the patient populations (such as responders). Combinations of these are possible. For example, one might learn that an arm performs poorly in one subset of patients and so that arm is dropped within that subset but it continues otherwise. Adaptive designs also include unbalanced randomization (more patients assigned to some of the treatment arms than others based on interim results of the study) where the degree of imbalance depends on the accumulating data. For example, arms that will provide more information or that are performing better than other arms can be weighted more heavily in the randomization. Adaptations are considered in the light of accumulating information concerning the hypotheses in question.
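One way to implement outcome-adaptive randomization is sketched here under assumptions that are not part of this paper: binary responses, independent uniform Beta priors, randomization probabilities proportional to the square root of each arm's posterior probability of being best, and a floor so that no arm is starved before it is formally dropped. The function names and interim data are hypothetical.

```python
import random


def prob_each_arm_best(arm_data, draws=10_000, seed=0):
    """Monte Carlo estimate of P(arm has the highest response rate) for each arm.

    arm_data holds (responses, patients) per arm; each arm gets an independent
    uniform Beta(1, 1) prior, so its posterior is Beta(1 + responses, 1 + failures).
    """
    rng = random.Random(seed)
    wins = [0] * len(arm_data)
    for _ in range(draws):
        samples = [rng.betavariate(1 + s, 1 + n - s) for s, n in arm_data]
        wins[samples.index(max(samples))] += 1
    return [w / draws for w in wins]


def randomization_weights(arm_data, power=0.5, floor=0.05):
    """Randomization probabilities proportional to P(best) ** power, after
    raising any unnormalized weight below `floor` up to `floor`."""
    raw = [max(p ** power, floor) for p in prob_each_arm_best(arm_data)]
    total = sum(raw)
    return [w / total for w in raw]


# Hypothetical interim data: (responses, patients) for three arms
print(randomization_weights([(12, 20), (6, 20), (5, 20)]))
```

The square-root tempering keeps the design from chasing early noise too aggressively; other exponents, or none, are equally plausible choices.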

Consider two examples. The first is a circumstance that occurs commonly in drug studies. Patient accrual and follow-up end without a clear conclusion: the results are neither clearly positive nor clearly negative. For example, the statistical significance level for the primary end-point may be slightly larger than the targeted 5 percent. The company then has to carry out another study. A flexible approach in the original study would include the possibility of continuing to accrue patients, depending on the results available at the targeted end of accrual. (The overall false-positive rate is affected by such analyses, but the final significance levels can be adjusted accordingly.) Allowing for the possibility of extending accrual may increase the study's sample size, but a modest increase in average sample size buys a substantial increase in statistical power. This favorable trade-off arises because accrual is extended only when the available information indicates that such an extension is worthwhile. Most importantly, the possibility of extending accrual minimizes the chance of having to carry out an additional study when the drug is in fact effective. Moreover, any increase in average sample size can be more than offset by incorporating frequent interim analyses with the possibility of stopping for futility (that is, if the results on the experimental agent are not sufficiently promising).

A more extreme example of flexibility has the explicit goal of treating patients in the study as effectively as possible, while learning rapidly about relative therapeutic benefits. Patients are assigned with higher probabilities to therapies that are performing better. Such designs are attractive to patients and so can lead to increased participation in clinical studies. And they lead to rapid learning about better performing therapies. Inferior treatments are dropped from consideration early (Giles et al., 2003). Logistics are more complicated because study databases must be updated as soon as results become available; such updating includes information about early end-points that may be related to the primary long-term end-points.

Adaptations are not limited to the data accumulating in the study in question. Information reported from other studies may also be used to affect a study's course.

Using Multifarious Sources of Information

The Bayesian approach is inherently synthetic. Inferences use all available sources of information. Appropriately combining these sources is seldom easy. Populations may be different. Protocols may be different. Some sources may be clinical trials while others are databases accumulated in clinical practice.
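In the simplest case, where each source can be summarized as a normally distributed estimate of the same quantity, Bayesian synthesis is a precision-weighted average of the prior and the sources. Inflating a source's standard error, a device this paper later describes for the tamoxifen prior to allow for differences between trials and practice, automatically reduces that source's influence. A hedged sketch with a hypothetical function name and made-up numbers:

```python
def combine_normal(prior_mean, prior_sd, estimates):
    """Conjugate normal update.

    prior: N(prior_mean, prior_sd ** 2); estimates: (mean, standard_error) pairs
    treated as independent normal observations of the same quantity.
    Returns the posterior (mean, standard deviation).
    """
    precision = 1 / prior_sd ** 2
    weighted_sum = prior_mean * precision
    for mean, se in estimates:
        precision += 1 / se ** 2
        weighted_sum += mean / se ** 2
    return weighted_sum / precision, precision ** -0.5


# Hypothetical log hazard ratios: a trial summary and a less comparable registry
# estimate whose standard error has been deliberately inflated.
print(combine_normal(0.0, 1.0, [(-0.30, 0.10), (-0.10, 0.30)]))
```

Real syntheses with differing populations and protocols usually need hierarchical models rather than this single-quantity update.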

Because the Bayesian approach is tailored to combining information, it is increasingly used in meta-analyses (Stangl, 2000). But it can be used in much more complicated settings as well. One of the most complicated is the following. Breast cancer mortality in the United States started to drop in about 1990, decreasing by about 24 percent over the decade 1990–2000.

Possible explanations included mammographic screening and adjuvant treatment with tamoxifen and chemotherapy. The National Cancer Institute funded seven groups to sort out the issue, with the goal of proportionally attributing the decrease to these explanations (Berry et al., 2005).

One of the seven groups took a simulation-based Bayesian approach (Berry et al., 2006). We used relevant empirical information from 1975 to 2000, including the use of screening mammography (schedules such as annual, biennial, haphazard) by the woman’s age and year, the characteristics of tumors detected by screening (and which screen) and symptomatically (including interval cancers), the use of tamoxifen by disease stage and the woman’s age (and the tumor’s hormone-receptor status), the use of polychemotherapy by disease stage and age, and the survival benefits of tamoxifen and chemotherapy by disease stage, age, and hormone-receptor status. We did not have longitudinal information on any set of women and so we had to piece together the effects of the various factors.

As in Bayesian modeling more generally, the important unknown parameters (benefits of treatment, survival after breast cancer depending on method of detection, background incidence of cancer [no screening] over time) had prior probability distributions. For example, for the survival benefit of tamoxifen for women with hormone-receptor positive tumors we based the prior distribution on the Oxford Overview of randomized trials, but with much greater standard deviation than that from the Overview to account for the possibility that tamoxifen used in clinical practice might not have the same benefit as in clinical trials. We generated many thousands of cohorts of 2 million U.S. women having the age distribution of U.S. women in 1975. We accounted for emigration and immigration. For each simulation we selected a particular value from each of the various prior distributions. For example, for one cohort we might have chosen a 20 percent reduction in the risk of breast cancer death when using tamoxifen. We assigned non-breast-cancer survival times to each woman consistent with the overall survival pattern of the actual U.S. population. Women in each simulation got breast cancer with probabilities according to their ages and their use of screening, again consistent with the actual U.S. population. Their cancers had characteristics depending on age and method of detection. Their treatment depended on their tumors’ characteristics and was consistent with the mores of the day. We generated breast cancer survival ages for women who were diagnosed with the disease, and these women were recorded as dying of breast cancer if these ages were younger than their non-breast-cancer survival.

For each simulation we tabulated over 1975–2000 the incidence of breast cancer by stage and breast cancer mortality. If these matched the actual U.S. population statistics sufficiently well, then we "accepted" the values of the parameters for that simulation into the posterior distribution of the parameters. Most simulations did not match actual mortality, but some did. We simulated enough cohorts to form reasonable conclusions about the posterior distributions.
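The accept/reject step described here is what is now often called approximate Bayesian computation by rejection. A deliberately tiny sketch, assuming a single unknown parameter (a fractional reduction in mortality with a uniform prior), a made-up baseline mortality of 5 percent, a noisy toy simulator in place of the full cohort model, and a hypothetical observed mortality of 3.8 percent:

```python
import random


def abc_rejection(observed_rate, n_draws=20_000, tolerance=0.001, seed=0):
    """Keep prior draws whose simulated mortality is within `tolerance` of the observed rate."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(n_draws):
        benefit = rng.uniform(0.0, 0.5)              # draw from the prior
        baseline = 0.05                              # hypothetical mortality with no benefit
        simulated = baseline * (1 - benefit) + rng.gauss(0.0, 0.002)  # toy noisy simulator
        if abs(simulated - observed_rate) < tolerance:   # "matched sufficiently well"
            accepted.append(benefit)
    return accepted


posterior = abc_rejection(0.038)
print(len(posterior), sum(posterior) / len(posterior))
```

Most draws are rejected; the accepted ones approximate the posterior, centered here near a 24 percent reduction, and their spread quantifies the remaining uncertainty.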

One set of conclusions in this example was the relative contributions of screening and treatment to the observed decrease in mortality. Another was that despite having access to the various sources of data, our conclusions about the relative contributions of screening and treatment were uncertain. The Bayesian approach allowed for quantifying this uncertainty. The six non-Bayesian models provided point estimates of the relative contributions. Interestingly, these point estimates fell within the range of uncertainty estimated by the Bayesian model.

Still another conclusion from the Bayesian model was that the benefits of tamoxifen and chemotherapy in clinical practice are similar to the benefits seen in the clinical trials. Again, there is some uncertainty in this statement. Although the means of the posterior distributions of these parameters were very similar to the means of the corresponding prior distributions, the posterior standard deviations were not much smaller than the prior standard deviations.

Conclusion

Statistical philosophy and methodology have contributed in important ways to medical research. The standard approaches are rigorous but not very flexible. Such a tack has been critical to establishing medicine as a science. But having achieved a high plateau, we must move even higher. In this chapter I have suggested some ways that medical research can be more flexible and yet maintain scientific rigor. Bayesian thinking and methodology can help in synthesizing information from various sources and in building more efficient designs. Efficiencies include smaller sample sizes, usually, but also greater accuracy in comparing treatment effectiveness.

SIMULATION AND PREDICTIVE MODELING

Mark S. Roberts, M.D., M.P.P.,

University of Pittsburgh

David M. Eddy, M.D., Ph.D.,

Archimedes, Inc.

Randomized clinical trials have substantial advantages in isolating and testing the effect of an intervention. However, RCTs have weaknesses and limitations, including problems with generalizability, duration, and costs. Physiology-based models, such as the Archimedes model, have the potential to augment and enhance knowledge gained from clinical trials and can be used to fill in "gaps": questions that are difficult or impractical to answer using clinical trial methods.

Physiology-based models are mechanistic in nature and model disease processes at a biological level rather than through statistical relationships between observed data and outcomes. When properly constructed, they replicate the results of the studies used to build them, not only in terms of outcomes but also in terms of changes in biomarkers and clinical findings. A unique characteristic of a properly constructed physiology-based model is its ability to predict the results of studies and trials that were not used in the model's construction, a process that provides very strong validation of its predictions.

This paper will describe the Archimedes model as an example of a physiology-based model and will propose uses for such models. The methods for representing and calibrating the mechanistic processes will be described, and comparisons of simulated trials to actual clinical trials as a method of validation will be presented. Multiple uses of the Archimedes model to enhance and extend existing clinical trials as well as to conduct virtual comparative effectiveness trials also will be discussed.

Strengths and Weaknesses of Randomized Controlled Trials

The main strength of randomized controlled trials is that random assignment to treatment and control groups makes those groups comparable and eliminates confounding by indication. The resulting intervention and control groups are balanced, on average, in both known and unknown characteristics. At the same time, strictly controlled protocols isolate the specific effect of the intervention.

The weaknesses of RCTs are well known. They often represent a narrow spectrum of disease, are conducted in specialized, highly controlled environments, and are expensive. Patients and physicians must agree to participate, which produces a selection bias that limits generalizability to other populations. They often require a large number of patients and follow-up times so long that the trial results might be eclipsed by the pace of technologic change. This is true, for example, in HIV disease, in which antiretroviral resistance patterns are rapidly and constantly changing, and the number of HIV drugs is rapidly expanding. Finally, RCTs usually represent efficacy, not effectiveness, as they are typically conducted in tightly controlled settings in which care processes have high levels of compliance and protocol adherence.

Physiology-Based Models

The use of physiology-based or mechanistic models as an adjunct or alternative to RCTs has been increasing in several different fields. Although such models have only recently been used in medicine, there are some interesting examples. They have been applied in sepsis (Day et al., 2006; Reynolds et al., 2006; Vodovotz et al., 2004), in critical care and injury (Clermont et al., 2004b; Saka et al., 2007), and in the acquisition of antiretroviral resistance in HIV disease (Braithwaite et al., 2006, 2008). Another example is the Archimedes model, which currently includes cardiovascular and metabolic diseases (Eddy and Schlessinger, 2003a; Heikes et al., 2007; Sherwin et al., 2004).

Physiology-based models seek to represent the underlying biology of the disease. They are continuous in time and generally model the physiological processes that generate the data observed in the world, rather than simply modeling the statistical relationships between observed variables and outcomes. Physiology-based models can represent many different levels of detail. These range from the physiologic variables and biomarkers that give rise to disease, through anatomy, symptoms, and behaviors, all the way up to interactions with health systems, utilization, and costs.

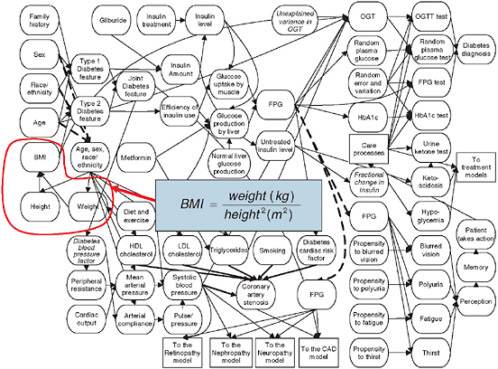

The Archimedes model is designed to represent actual biological relationships and is best illustrated visually, much as these relationships are presented in a standard physiology textbook. Physiological parameters and their relationships are described with influence diagrams at multiple levels of detail: from relationships between whole organs, to processes that occur within organs, to those within cells. In keeping with this design, every virtual individual in the Archimedes model has a virtual heart with four virtual chambers. It also has a virtual circulatory system that has a virtual blood pressure and responds to virtual changes in cardiovascular dynamics. The virtual individual also has a virtual liver that produces virtual glucose, a virtual gut that absorbs virtual nutrients, and a virtual pancreas with virtual beta cells that make virtual insulin. Its virtual muscle mass and virtual fat cell mass utilize glucose as a function of the amount of virtual insulin available.

Figure 3-9 shows a small portion of the model but illustrates the types of variables and relationships the Archimedes model contains. The figure resembles the “bubble diagrams” found in physiology texts and, in this example, represents some of the factors that affect diabetes and other metabolic conditions. Every oval in the figure represents a characteristic, biological parameter, condition, test, intervention, symptom, or other type of clinically important variable. Some of the relationships are trivial and obvious. For example, the relationship between height, weight, and body mass index (BMI) has a simple functional form. Most of the functions are substantially more complicated. They are typically represented as differential equations that relate the instantaneous change in a particular physiological parameter to the levels and changes of many other variables. The equations in the Archimedes model relate the various physiological variables to each other and to specific outcomes, such as the development of diabetes and heart disease. The functional form of the equations and the coefficients on their terms are derived from and calibrated with data from a wide variety of empirical sources. These range from studies of basic biology to large longitudinal trials and datasets. A more complete description of the Archimedes model and its development is available elsewhere (Eddy and Schlessinger, 2003a; Schlessinger and Eddy, 2002).

FIGURE 3-9 Physiological factors affecting development of diabetes. BMI is shown as one such variable, composed of the components height and weight through the indicated equation.

SOURCE: Copyright © 2003 American Diabetes Association. From Diabetes Care, Vol. 26, 2003; 3093-3101. Modified with permission from the American Diabetes Association.
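The two kinds of relationships described above can be contrasted in a short sketch. The first is a simple algebraic function: BMI is weight in kilograms divided by the square of height in meters. The second is a differential equation integrated over time. The equation used here, dR/dt = k · R · (BMI / bmi_ref), and its parameters `k` and `bmi_ref` are hypothetical, chosen only for illustration. They are not taken from the Archimedes model, whose equations are far more detailed and calibrated to data.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight (kg) divided by height (m) squared."""
    return weight_kg / height_m ** 2


def simulate_insulin_resistance(r0: float, bmi_value: float, years: float,
                                dt: float = 0.01, k: float = 0.02,
                                bmi_ref: float = 25.0) -> float:
    """Forward-Euler integration of a HYPOTHETICAL equation
    dR/dt = k * R * (BMI / bmi_ref), where R is an insulin-resistance index.

    k and bmi_ref are illustrative values, not calibrated coefficients.
    """
    r = r0
    steps = int(round(years / dt))
    for _ in range(steps):
        # Instantaneous rate of change depends on the current level of R
        # and on another variable (BMI), as in the text's description.
        dr_dt = k * r * (bmi_value / bmi_ref)
        r += dr_dt * dt
    return r


if __name__ == "__main__":
    b = bmi(85.0, 1.75)
    print(f"BMI: {b:.1f}")
    print(f"Resistance index after 10 years: {simulate_insulin_resistance(1.0, b, 10):.3f}")
```

A real physiology-based model couples many such equations, so that a change in one variable propagates through all the others at each time step.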

Validation of a Physiology-Based Model

One of the most important steps in building and using a model is validation. Confidence in a model’s predictions is necessary if models are to be used for clinical and health policy decisions. In general, model validation starts with demonstrating that the model can replicate the results of the trials and studies that were used to develop and calibrate it. This is called a “dependent” validation, and it is used for both biological and statistical models. However, perhaps the most appropriate “gold standard” of validation is the ability to replicate the results of multiple actual clinical trials that were not used to build or modify the model. This is called an “independent” validation. A clinical trial enrolls real people, administers real treatments (usually by randomizing participants to specific therapies), and records real outcomes a specified time later. The Archimedes model can replicate that process in four steps. It enrolls virtual people with the exact characteristics of their counterparts in the real trial. It randomly assigns them to virtual treatments that represent the real treatments used in the trial. It records virtual outcomes using the same definitions and methods as the trial. Finally, it compares the results of the virtual trial with those of the real trial. Data from separate Phase I or Phase II trials can be used to estimate the effects of the intervention on the relevant biomarkers. The Archimedes model has been validated by successfully replicating more than 50 major clinical trials, and about half of these validations have been independent.
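The virtual-trial procedure described above (enroll, randomize, treat, record outcomes, compare) can be sketched in a few lines. This is a toy illustration under stated assumptions, not the Archimedes implementation. The population distribution, the risk equation `annual_event_risk`, and the treatment's effect on LDL cholesterol (`ldl_drop`) are all hypothetical. In practice, the biomarker effect would be estimated from Phase I or Phase II data.

```python
import random
from dataclasses import dataclass


@dataclass
class VirtualPerson:
    age: float
    ldl: float  # LDL cholesterol, mmol/L


def annual_event_risk(p: VirtualPerson) -> float:
    """HYPOTHETICAL risk equation: annual event probability rising with age and LDL."""
    return min(1.0, 0.002 * (1.03 ** (p.age - 50)) * (1.25 ** (p.ldl - 3.0)))


def run_virtual_trial(n: int, years: int, ldl_drop: float, seed: int = 0) -> float:
    """Enroll n virtual people, randomize 1:1, simulate outcomes, return relative risk."""
    rng = random.Random(seed)
    events = {"treatment": 0, "control": 0}
    counts = {"treatment": 0, "control": 0}
    for _ in range(n):
        # Enrollment: draw characteristics meant to mimic a (hypothetical) trial's population.
        person = VirtualPerson(age=rng.uniform(40, 75), ldl=rng.gauss(3.5, 0.6))
        # Randomization to a virtual treatment or control arm.
        arm = rng.choice(["treatment", "control"])
        counts[arm] += 1
        if arm == "treatment":
            # The virtual treatment acts on the biomarker, not directly on outcomes.
            person.ldl -= ldl_drop
        # Follow-up: record the first event, using the same definition in both arms.
        for _ in range(years):
            if rng.random() < annual_event_risk(person):
                events[arm] += 1
                break
    if events["control"] == 0:
        raise ValueError("no control-arm events; increase n or years")
    risk_t = events["treatment"] / counts["treatment"]
    risk_c = events["control"] / counts["control"]
    return risk_t / risk_c


if __name__ == "__main__":
    print(f"Simulated relative risk: {run_virtual_trial(50_000, 5, ldl_drop=2.0):.2f}")
```

The relative risk produced this way would then be compared with the relative risk reported by the real trial.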

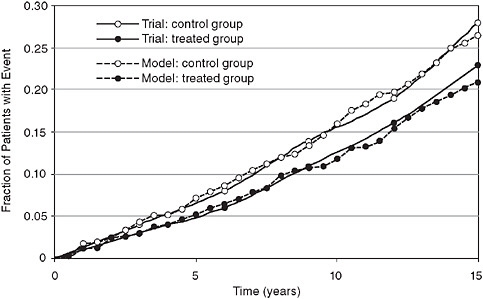

An example of a dependent validation is provided in Figure 3-10, which compares the actual results from the UK Prospective Diabetes Study (UKPDS) with the simulated results calculated by replicating the trial in Archimedes. Although this is technically a dependent validation, it is important to note that the model’s results shown in Figure 3-10 were not “fitted” to the results of the trial. Rather, data from the trial were used to fit only two equations. The first was the rate of progression of insulin resistance in untreated diabetes. The second was the effect of insulin resistance on the progression of plaque in coronary arteries. Simulating the trial involved scores of other equations that were not touched by any data from the trial. Thus, even though it is dependent, this validation tests large parts of the model.

Prospective and independent validations also have been conducted.

FIGURE 3-10 Retrospective (dependent) validation: Simulated UKPDS trial comparing real trial results (fatal and nonfatal myocardial infarction) to a simulated version of the trial using the Archimedes model.

SOURCE: Copyright © 2003 American Diabetes Association. From Diabetes Care, Vol. 26, 2003; 3102-3110. Modified with permission from the American Diabetes Association.

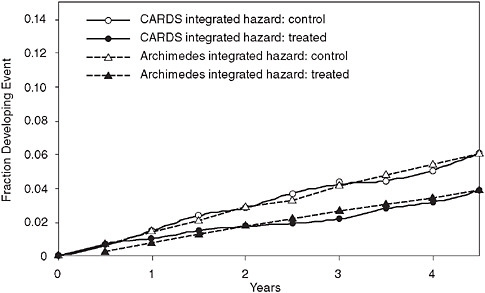

Figure 3-11 shows the results of a validation that was both prospective and independent. It predicted the results of the Collaborative Atorvastatin Diabetes Study (CARDS), which tested the ability of a lipid-lowering medication to reduce cardiovascular events in patients with diabetes. The figure shows the actual trial results for both the intervention and control arms (solid lines) and the predictions of the Archimedes model (dotted lines). In this validation, the model’s results were sent in sealed envelopes to the American Diabetes Association (ADA) and the study investigators before the study’s results were released.

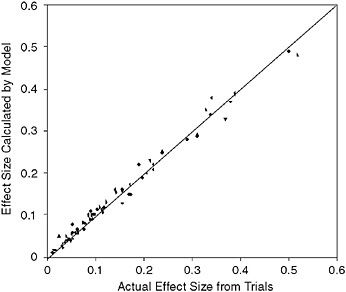

The results for 18 clinical trials have been published. Figure 3-12 compares the results of 74 simulated trials in diabetes, lipid control, and cardiovascular disease. It plots the relative risk found in each actual trial against the result calculated by the Archimedes model. Because the ability to replicate the results of each arm is considered a validation of the model, this graph represents many more validations than the number of clinical trials alone. The correlation coefficient between the actual and predicted results is r = 0.99.
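The correlation coefficient reported above is the Pearson correlation between actual and simulated results. The sketch below computes it from first principles. The relative risks in the example are invented for illustration and are NOT the published validation data.

```python
import math
from typing import Sequence


def pearson_r(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Pearson correlation between actual and model-predicted results."""
    if len(actual) != len(predicted) or len(actual) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    n = len(actual)
    mean_a = sum(actual) / n
    mean_p = sum(predicted) / n
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    if var_a == 0 or var_p == 0:
        raise ValueError("correlation is undefined when either sequence is constant")
    return cov / math.sqrt(var_a * var_p)


# Hypothetical relative risks (actual trial vs. simulated trial), for illustration only.
actual_rr = [0.63, 0.84, 0.75, 1.02, 0.90]
simulated_rr = [0.66, 0.81, 0.77, 0.98, 0.92]

if __name__ == "__main__":
    print(f"r = {pearson_r(actual_rr, simulated_rr):.3f}")
```

Correlation measures linear association rather than exact agreement. For that reason, a scatter plot like Figure 3-12 is also useful for checking that points lie near the line where predicted equals actual.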

FIGURE 3-11 Prospective and independent validation of the CARDS trial comparing real trial results to results predicted by the Archimedes model.

SOURCE: Derived from Mount Hood Modeling Group. 2007. Computer modeling of diabetes and its complications: A report on the fourth Mount Hood challenge meeting. Diabetes Care 30(6):1638-1646. Modified with permission from the American Diabetes Association.

Applications of Physiology-Based Models

There are several ways that physiology-based prediction models can be used to enhance clinical trials. One is to help identify and set priorities for new trials. Another is to facilitate the design of new trials. For example, as the validations described above have shown, the Archimedes model can be used to estimate the rates of outcomes in control groups and the expected magnitude of treatment effects. This information can then be used to help calculate the sample sizes and durations required to detect outcomes with specified power. Another use of physiology-based models is to extend clinical trials to estimate long-term outcomes. Suppose a model has successfully calculated the outcomes in the trial of interest over the duration of that trial. Suppose also that it has successfully calculated the important biomarkers and clinical outcomes in a variety of other trials involving similar populations and interventions. There is then good reason to believe that its projections of the trial’s outcomes over a longer follow-up period will be accurate. At the least, such a trial-validated application is the best available method for estimating longer-term outcomes. Well-validated physiology-based models also have related roles. They can extend a trial’s results to outcomes that were not examined in the original trial, such as logistical or economic outcomes, and they can examine the results for subpopulations.

FIGURE 3-12 Comparison of Archimedes model and multiple trials. The x-axis represents the size of the effect measured in the actual trial; the y-axis is the size of the effect in the simulated version of the trial in Archimedes.

SOURCE: Copyright © 2003 American Diabetes Association. From Diabetes Care, Vol. 26, 2003; 3102-3110. Modified with permission from the American Diabetes Association. Modified from Eddy and Schlessinger, 2003a.
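The trial-design use described above can be illustrated with a standard calculation. A model-estimated control-group event rate and expected treatment effect are plugged into the usual normal-approximation formula for the per-arm sample size needed to compare two proportions. The annual risk of 2 percent and relative risk of 0.75 below are hypothetical stand-ins for model outputs. Applying the relative risk to the cumulative risk, and assuming a constant annual risk, are simplifications made for this sketch.

```python
import math
from statistics import NormalDist


def n_per_arm(p_control: float, p_treatment: float,
              alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-arm sample size for a two-sided comparison of two proportions
    (normal approximation, 1:1 allocation)."""
    z = NormalDist().inv_cdf
    z_total = z(1 - alpha / 2) + z(power)
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    return math.ceil(z_total ** 2 * variance / (p_control - p_treatment) ** 2)


def cumulative_risk(annual_risk: float, years: float) -> float:
    """Cumulative event probability over a follow-up period, assuming constant annual risk."""
    return 1 - (1 - annual_risk) ** years


if __name__ == "__main__":
    annual_control_risk = 0.02   # hypothetical model-estimated control rate
    relative_risk = 0.75         # hypothetical model-estimated treatment effect
    for years in (3, 5):
        p_c = cumulative_risk(annual_control_risk, years)
        print(years, "years:", n_per_arm(p_c, relative_risk * p_c), "per arm")
```

Longer follow-up accumulates more events and so reduces the required sample size. This is the duration-versus-size trade-off that model-based estimates can help a trial designer explore.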

Physiology-based models can also be used to customize the results of a trial to different settings. A model that has been shown to predict the results of the original trial and related trials accurately can be applied to settings with different levels of performance and compliance. It can also address settings with different background protocols and/or cost structures. A common complaint about clinical trials is that they measure efficacy, the effect of a medication or intervention in tightly controlled, highly specialized environments. The effectiveness of these therapies under real-world conditions may be quite different because of different levels of adherence to the intervention or differences in the quality of baseline care. The model also can study variations in the background rates of healthcare practices seen in different settings. For example, suppose we are testing a medication for decreasing cardiovascular risk in diabetic patients. Suppose also that we are concerned about a setting in which patients seen in emergency rooms have a very small chance of being treated with thrombolytics. In that setting, the overall effect on cardiovascular outcomes will differ from the effect seen in a setting in which thrombolytic use is very high. These types of processes can be included in large-scale physiology-based models but are virtually impossible to incorporate in regression-based and Markov models.
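A back-of-the-envelope calculation shows why the same trial result can translate into different real-world benefits. The sketch assumes that only a fraction of patients adhere and that non-adherent patients receive no benefit. It also assumes that adherence is unrelated to baseline risk, and it represents differences in background care (such as thrombolytic use) only as a different baseline risk. All numbers and function names are hypothetical. A physiology-based model would instead simulate adherence and background care person by person rather than use this aggregate shortcut.

```python
def effective_relative_risk(trial_rr: float, adherence: float) -> float:
    """Population relative risk when only a fraction `adherence` of patients
    take the treatment as in the trial; non-adherent patients get no benefit.
    Assumes adherence is unrelated to baseline risk (a simplification)."""
    if not 0.0 <= adherence <= 1.0:
        raise ValueError("adherence must be between 0 and 1")
    return adherence * trial_rr + (1.0 - adherence) * 1.0


def events_prevented(baseline_risk: float, trial_rr: float,
                     adherence: float, population: int) -> float:
    """Expected events prevented in a setting with a given baseline risk."""
    return population * baseline_risk * (1.0 - effective_relative_risk(trial_rr, adherence))


if __name__ == "__main__":
    # Hypothetical: trial RR 0.70, 60% adherence, two settings whose background
    # care yields different baseline risks.
    for setting, risk in (("setting A", 0.05), ("setting B", 0.03)):
        print(setting, round(events_prevented(risk, 0.70, 0.60, 10_000), 1))
```

Here a trial relative risk of 0.70 becomes 0.82 in the population. The number of events prevented also depends on the setting’s baseline risk.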

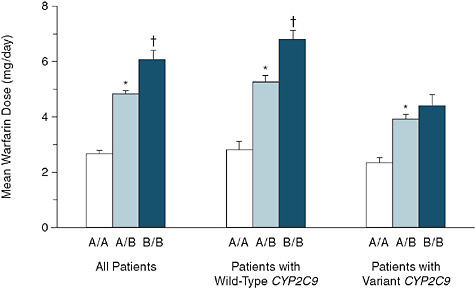

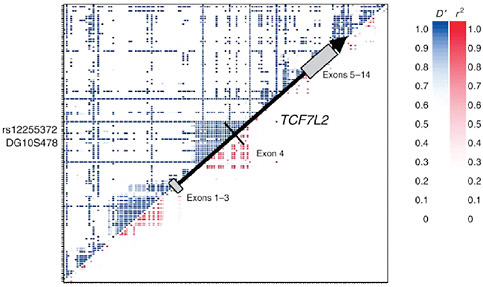

Physiology-based models also can be used for analyzing the comparative effectiveness of different treatments for a condition. Suppose there are trials of Medication A versus placebo and of Medication B versus placebo but no trials directly comparing Medication A with Medication B. A new trial comparing A with B could be extremely expensive and take years, by which time new medications will invariably have been introduced. Instead, physiology-based models that have successfully predicted the two original trials can provide the best currently available estimate of what a real trial of A versus B would be likely to show. This information can then be used for three purposes. It can help in understanding the potential value of a new trial of A versus B and in planning a new trial if one is deemed desirable. It can also inform recommendations about which practices should be followed while awaiting that trial’s results.
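The virtual head-to-head comparison described above rests on one idea: both medications are applied to the same virtual population, so A and B are compared directly even though no real trial compared them. In the sketch below, each drug’s effect on the biomarkers is assumed to have been estimated from its own placebo-controlled trial. The risk equation, population parameters, and drug effects are all hypothetical. The sketch also assumes each drug acts only through the modeled biomarkers, an assumption that a real model’s validation against the original trials is meant to test.

```python
import random


def ten_year_risk(ldl: float, sbp: float) -> float:
    """HYPOTHETICAL 10-year event risk from LDL (mmol/L) and systolic BP (mmHg)."""
    annual = min(1.0, 0.004 * (1.25 ** (ldl - 3.0)) * (1.02 ** (sbp - 130)))
    return 1 - (1 - annual) ** 10


def virtual_population(n: int, seed: int = 1) -> list[tuple[float, float]]:
    """Draw (LDL, systolic BP) pairs for n virtual people (hypothetical distributions)."""
    rng = random.Random(seed)
    return [(rng.gauss(3.6, 0.7), rng.gauss(140, 15)) for _ in range(n)]


def mean_risk(pop: list[tuple[float, float]], ldl_change: float, sbp_change: float) -> float:
    """Average 10-year risk after applying a treatment's biomarker changes to everyone."""
    return sum(ten_year_risk(ldl + ldl_change, sbp + sbp_change) for ldl, sbp in pop) / len(pop)


# Biomarker effects of each drug, as they might be estimated from its own
# placebo-controlled trial (hypothetical values).
DRUG_A = {"ldl_change": -1.2, "sbp_change": 0.0}
DRUG_B = {"ldl_change": -0.4, "sbp_change": -10.0}

pop = virtual_population(10_000)
risk_placebo = mean_risk(pop, 0.0, 0.0)
risk_a = mean_risk(pop, **DRUG_A)
risk_b = mean_risk(pop, **DRUG_B)

if __name__ == "__main__":
    print(f"RR A vs placebo: {risk_a / risk_placebo:.2f}")
    print(f"RR B vs placebo: {risk_b / risk_placebo:.2f}")
    print(f"RR A vs B:       {risk_a / risk_b:.2f}")
```

Because the same virtual people appear in every arm, differences between A and B reflect the treatments rather than differences between trial populations. That population difference is a central weakness of comparing results across separate placebo-controlled trials.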