4

Organizing and Improving Data Utility

INTRODUCTION

An enormous untapped capacity for data analysis is emerging as the research community hones its capacity to collect, store, and study data. We are now generating and have access to vastly larger collections of data than have been available before. The potential for mining these robust databases to expand the evidence base is experiencing commensurate growth. New and emerging design models and tools for data analysis have significant potential to inform clinical effectiveness research. However, further work is needed to fully harness the data and insights these large databases contain. As these methods are tested and developed, they are likely to become an even more valuable part of the overall research arsenal—helping to address inefficiencies in current research practices, providing meaningful complements to existing approaches, and offering means to productively process the increasingly complex information generated as part of the research enterprise today.

This chapter aims to (1) characterize some key implications of these larger electronically accessible health records and databases for research, and (2) identify the most pressing opportunities to apply these data more effectively to clinical effectiveness research. The papers that follow were derived from the workshop session devoted to organizing and improving data utility. These papers identify technological and policy advances needed to better harness these emerging data sources for research relevant to providing the care most appropriate to each patient.

From his perspective at the Geisinger Health System, Ronald A. Paulus describes successful applications of electronic health records (EHRs) and point-of-care data to create delivery-based evidence and make further steps in transforming clinical practice. These data offer the opportunity to support studies needed to complement and fill gaps in randomized controlled trial (RCT) findings. In the next paper, Alexander M. Walker from Worldwide Health Information Science Consultants and the Harvard School of Public Health discusses approaches to the development, application, and shared distribution of information from large administrative databases in clinical effectiveness research. He describes augmented databases that include laboratory and consumer data and discusses approaches to creating an infrastructure for medical record review, implementing methods for automated and quasi-automated examination of masses of data, developing “rapid-cycle” analyses to circumvent the delays of claims processing and adjudication, and opening new initiatives for collaborative sharing of data that respect patients’ and institutions’ legitimate needs for privacy and confidentiality. In the context of the ongoing debate about the relative value of observational data (e.g., as provided by registries) versus RCTs, Alan J. Moskowitz from Columbia University argues that registries provide data that are important complements to randomized trials (including efficacy and so-called pragmatic randomized trials) and to analyses of large administrative datasets. In fact, Moskowitz asserts, registries can assess “real-world” health and economic outcomes to help guide decision making on policies for patient care.

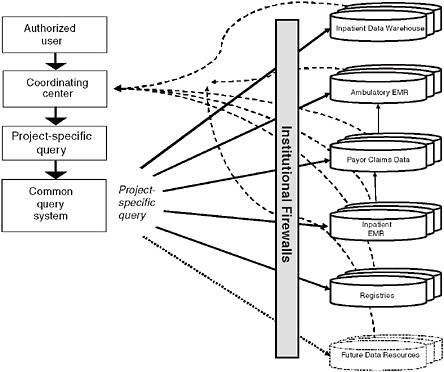

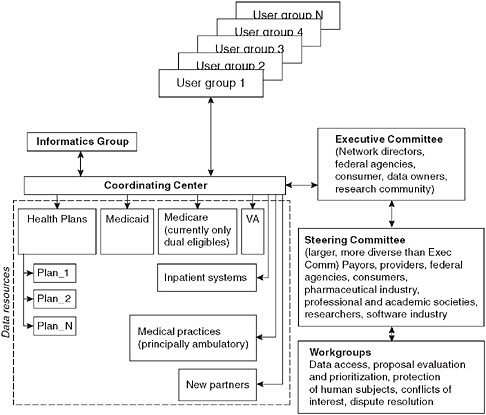

Complicated research questions increasingly need current information derived from a variety of sources. One promising source is distributed research models, which provide multi-user access to enormous stores of highly useful data. Several such models are currently being developed. Richard Platt, from Harvard Pilgrim Health Care and Harvard Medical School, reports on several complex efforts to design and implement distributed research models that draw large stores of useful data from a variety of sources for multiple users.

THE ELECTRONIC HEALTH RECORD AND CARE REENGINEERING: PERFORMANCE IMPROVEMENT REDEFINED

Ronald A. Paulus, M.D., M.B.A.; Walter F. Stewart, Ph.D., M.P.H.; Albert Bothe, Jr., M.D.; Seth Frazier, M.B.A.; Nirav R. Shah, M.D., M.P.H.; and Mark J. Selna, M.D.; Geisinger

Introduction

The U.S. healthcare system has struggled with numerous, seemingly intractable problems including fragmented, uncoordinated, and highly variable care that results in safety risks and waste; consumer dissatisfaction; and the absence of productivity and efficiency gains common in other industries (The Commonwealth Fund Commission on a High Performance Health System, 2005). Multiple stakeholders (patients and families, physicians, payors, employers, and policy makers) have all called for order-of-magnitude improvements in healthcare quality and efficiency. While many industries have leveraged technology to deliver vastly superior value in highly competitive environments over the last several decades, healthcare performance has, on a comparative basis, stagnated. In the absence of the ability to transform performance, health care “competition” has too often focused on delivering more expensive services promoted by better marketing and geographic presence; true outcomes-based competition has been lacking (Porter and Olmsted-Teisberg, 2006). Implications of these failures have been profound for the care delivery system and for all Americans.

Recently, one area of hope has emerged: the adoption of electronic health records. EHRs, if successfully deployed, have tremendous potential to transform care delivery. Despite a primary focus on benefits derived from practice standardization and decision support, diverse uses of EHR data including enhanced quality improvement and research activities may offer an equal or even greater potential for fundamental care delivery transformation. Limits of guideline-based evidence have produced a growing recognition that observational data may be essential to fill gaps in the randomized controlled trial data needed to fulfill this transformation potential. Despite serious challenges, EHR data may offer an invaluable look into interventions and outcomes in clinical practice and offer promise as a complementary source of evidence directly relevant to everyday practice needs.

EHR data also may provide an essential complement to clinical performance improvement initiatives. Healthcare performance improvement activities are defined here as an ongoing cycle of positive change in organization, care process, decision management, workflow, or other components of care, regardless of methodology (collectively PI) (Hartig and Allison, 2007). Despite the underlying logic and history of success in other business sectors, the impact of healthcare performance improvement activities is often negligible or unsustainable. As with the evidence gap, EHR data offer promise as a transformation resource for PI. The inability to achieve broad and systematic quality and operational improvements in our delivery system has left all stakeholders deeply frustrated.

This paper explores a potentially powerful new approach to leverage the latent synergy between EHR-based PI efforts and research and presents a vision of how PI at the clinical enterprise level is being transformed by the EHR and associated data aggregation and analysis activities. In that context, we describe a revision to the classic Plan-Do-Study-Act (PDSA) cycle that reflects this integration and the development of a Performance Improvement Architecture (PI Architecture), a set of reusable parts, components, and modules along with a process methodology that focuses relentlessly on eliminating all unnecessary care steps, safely automating processes, delegating care to the lowest cost, competent caregiver, maximizing supply chain efficiencies, and activating patients in their own self-care. Early Geisinger Health System (Geisinger) experience suggests that use of such a PI Architecture in creating change is likely to provide guidance on what to improve, an enhanced ability to implement and track initiatives and to specifically link discrete elements of change to meaningful outcomes, a simultaneous focus on quality and efficiency, improved utilization of scarce healthcare resources and personnel, dramatic acceleration of the pace of change, and the capacity to maintain and grow that change over time.

Delivery-Based Evidence—A New EHR Role

When doctors care for patients, the very essence of the interaction requires extrapolation from knowledge and experience to tailor care for the particular circumstances at hand (i.e., bridging the “inferential gap”) (Stewart et al., 2007). No two patients are alike. While a certain level of “experimentation” is a part of good care, the knowledge base required for such experimentation is growing at a pace that far exceeds the ongoing learning capacity of primary care providers and even most specialists. Hence, the nature of care provided is dated or experimental, venturing beyond what is known or is optimal.

How do providers move beyond the limits of what they can learn or “trials where n = 1”? Although the RCT serves as the “gold standard” design for making causal inferences from data, there are practical limits to the utility of RCT-based evidence (Brook and Lohr, 1985; Flum et al., 2001; Krumholz et al., 1998). Today, RCTs are largely guided by the Food and Drug Administration (FDA) and related regulatory needs, not necessarily by the most important clinical questions. They are frequently performed in specialized settings (e.g., academic medical centers or the Veterans Administration) that are not representative of the broader arena of care delivery. RCTs are used to test drugs and devices in highly selected populations (i.e., patients with relatively low co-morbid disease burdens), under artificial conditions (i.e., a simple, focused question) that are often unrelated to usual clinical care (i.e., managing complex needs of patients with multiple co-morbidities), and are focused on outcomes that may be incomplete (e.g., short-term outcomes leading to changes in a disease mediator). Efficacy equivalence with existing therapies rather than comparative effectiveness is the dominant focus of most trials, with little or no thought given to economic constraints or consequences. RCTs are not usually positioned to address fundamental questions of need for subgroups with different co-morbidities, and results rarely translate into the clinical effectiveness hoped for under real-world practice conditions (Hayward et al., 1995). As the population continues to age and the prevalence of co-morbidities increases, the gap between what we know from RCTs and what we need to know to support objective clinical decisions is increasing, despite the pace at which new knowledge is being generated. Furthermore, decisions based primarily on randomized trial data do not incorporate local values, knowledge, or patient preferences into care decisions.

From a distance, EHR data offer promise as a complementary source of evidence to more directly address questions relevant to everyday practice needs. However, a closer look at EHR data reveals challenges. Compared to data collection standards established for research, EHR data suffer from many limitations in both quality and completeness. In research settings, specialized staff follow strict data collection protocols; in routine care, even simple measures such as blood pressure or smoking status are measured with many more sources of error. For example, the wording of a question may differ, and responses to even identical questions can be documented in different manners. In routine care, the completeness of data may vary significantly by patient, being directly related to the underlying disease burden and the need for care. Furthermore, physicians may select a particular medication within a class based on the perceived severity of a patient’s disease, resulting in a complex form of bias that is difficult to eliminate (i.e., confounding by indication) (de Koning et al., 2005). In the near term, these and other limitations will raise questions about the credibility of evidence derived from EHR data. However, weaknesses inherent to EHR data as a source of evidence (e.g., false-positive associations) and to the current practice of PI (e.g., initiatives confined to guideline-based knowledge) can be mitigated through replication studies using independent EHRs and by using PI to test and validate EHR-based hypotheses.

Healthcare Quality Improvement

Since the early observations of Shewhart, Juran, and Deming, quality improvement has become routine in most business sectors and has been formalized into a diverse set of methodologies and underlying philosophies such as Total Quality Management, Continuous Quality Improvement, Six Sigma, Lean, Reengineering, and Microsystems (Juran, 1995). While latecomers, healthcare organizations have increasingly adopted these practices in an attempt to optimize outcomes. Healthcare PI involves an ongoing cycle of change in organization, care process, decision management, workflow, or other components of care, evolving from a culture often previously dominated by blame and fault finding (e.g., peer and utilization review) to devising evidence-based “systems” of care.

In general, healthcare PI relies on “planning” or “experimentation” approaches to improve outcomes. These models employ a diversity of philosophies including a commitment to identifying, meeting, and exceeding stakeholder needs; continuously improving in conjunction with escalating performance standards; applying structured, problem-solving processes using statistical and related tools such as control charts, cause-and-effect diagrams, and benchmarking; and empowering all employees to drive quality improvements. Experimentation-based PI typically relies on the PDSA model (Shewhart, 1939), as recently refined by the Institute for Healthcare Improvement (IHI) for the healthcare community (see Box 4-1) (Institute for Healthcare Improvement). Most approaches involve analysis that begins with a “diagnosis” of cause(s), albeit with limited data, followed by new data collection (frequently manual) to validate that the new process improves outcomes. Deployment of these models is often labor-intensive (e.g., evidence gathering, workflow observation), and effectuating change may take months, in part due to lack of dedicated support resources as well as a historical lack of focus on scalability. As a result, each successive iteration may be performed without the ability to reuse previously developed tools, datasets, or analytics.

BOX 4-1 IHI PDSA Cycle

Step 1 (Plan): Plan the test or observation, including a plan for collecting data.

Step 2 (Do): Try out the test on a small scale.

Step 3 (Study): Set aside time to analyze the data and study the results.

Step 4 (Act): Refine the change, based on what was learned from the test.

Limitations to Healthcare Performance Improvement

Despite the underlying logic and history of success in other business sectors, the impact of healthcare PI has too frequently been negligible or unsustainable (Blumenthal and Kilo, 1998). The gap between the potential for PI and results from actual practice has been substantial, as have the consequences of historical failures to improve outcomes. A number of factors explain this gap.

First, PI initiatives are commonly motivated by guideline-based evidence and, as such, are subject to the same limitations as RCT data discussed above. Second, the PI-focused outcome may be only distantly or indirectly related to meaningful change in patient health or to a concrete measure of return on investment (ROI), largely because of the limits to available data and how such initiatives are organizationally motivated and executed. For example, there may not be the organizational will to make change happen or to support change efforts to sustainability. Even when PI is applied to an important problem (e.g., slowing progression of diabetes) in a manner that improves a chosen metric (e.g., ordering an HbA1c lab test), the effort may have only an incomplete or a delayed effect on more relevant outcomes (e.g., fewer complications, reductions in hospital admissions, or improved quality of life). Third, outcomes are usually not evaluated in real time or at frequent intervals, limiting the timeliness, ease, and speed of innovation, as well as the dynamism of the process itself. When change and the associated process unfold in slow motion, participants’ (or their authorizing leaders’) commitment may not rise to or maintain the threshold required to institutionalize new standards of practice. Fourth, validation that a PI intervention actually works may be lacking altogether or lacking in scientific or analytic rigor, leaving inference to the realm of guesswork. Fifth, when human or labor-intensive processes are required to maintain change, performance typically regresses to baseline levels as vigilance wanes. Lastly, without a broad strategic framework, PI can be perceived as the “initiative of the month,” leading to temporary improvements that are quickly lost due to inadequate hardwiring, support systems, vigilance, or PI integration across an organization.

The Geisinger Health System Experience

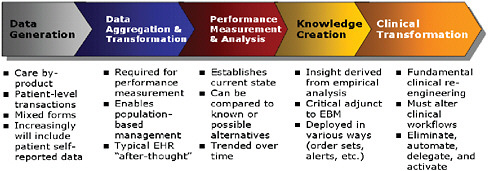

At Geisinger, PI is evolving to become a continuous process involving data generation, performance measurement, and analysis to transform clinical practice, mediated by iterative changes to clinical workflows by elimi-

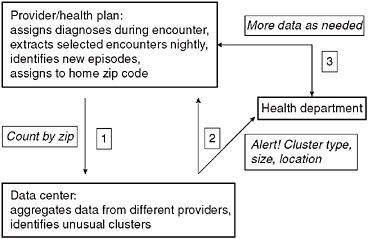

FIGURE 4-1 Transformation infrastructure.

nating, automating, or delegating activities to meet quality and efficiency goals (see Figure 4-1).

By way of background, Geisinger is an integrated delivery system located in central and northeastern Pennsylvania comprising nearly 700 employed physicians across 55 clinical practice sites providing adult and pediatric primary and specialty care; 3 acute care hospitals (one closed, two open staff); several specialty hospitals; a 215,000-member health plan (accounting for approximately one-third of the Geisinger Clinic patient care revenue); and numerous other clinical services and programs. Geisinger serves a population of 2.5 million people, poorer and sicker than national benchmarks, with markedly less in- and out-migration. Organizationally, Geisinger manages through clinical service lines, each co-led by a physician-administrator pair. Strategic functions such as quality and innovation are centralized with matrixed linkage to operational leaders. A commercial EHR platform adopted in 1995 is fully utilized across the system (Epic Systems Corporation, 2008). An integrated database consisting of EHR, financial, operational, claims, and patient satisfaction data serves as the foundation of a Clinical Decision Intelligence System (CDIS).

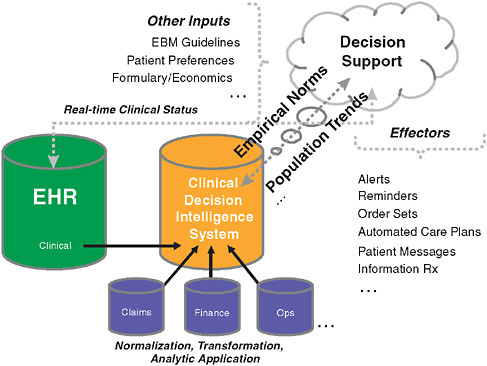

At Geisinger, data are increasingly viewed as a core asset. A very heavy emphasis is placed on the collection, normalization, and application of clinical, financial, operational, claims, and other data to inform, guide, measure, refine, and document the results of PI efforts. These data are combined with other inputs (e.g., evidence-based guidelines, third-party benchmarks) and leveraged via decision support applications as schematically illustrated below (see Figure 4-2).

FIGURE 4-2 Clinical decision intelligence system design.

Transforming Performance Improvement: From a Human Process to a Scalable Performance Improvement Architecture

Early Geisinger experience supports the view that a PI Architecture, including EHR data and associated data warehousing capabilities, can transform healthcare PI, as well as how an organization behaves.

Data, System, and Analytic Requirements

Most performance improvement efforts lack the rich data required to validate outcomes (i.e., test the initial hypothesis) or the integrated data infrastructure required for rapid feedback to refine or modify large-scale interventions. When available at all, data are often limited in scope and consist of simple administrative and/or manually collected elements that may not be generated as part of the routine course of care. By contrast, robust EHRs inherently provide for extensive, longitudinal data (i.e., clinical test results, vital signs, reason for order or other explicit information regarding the intent of the provider, etc.). When used in conjunction with an integrated data warehouse and normalized, searchable electronic data, EHRs can motivate a quantum shift in the PI paradigm. As a core asset, this new PI Architecture is used to ask questions, pose hypotheses, refine understanding, and ultimately develop improvement initiatives that are directly relevant to current practice with a dual focus on quality and efficiency.

Natural “experiments” are intrinsic to EHR data. Patients with essentially the same or similar disease profiles receive different care. For example, one 60-year-old diabetic patient may be prescribed drug A, while a similar diabetic patient may be prescribed drug B because of formulary or practice style differences. When repeated hundreds or thousands of times, routinely collected EHR data offer a unique data mining resource for important clinical and economic insights. When combined with health plan claims and other information, additional questions may be answered such as: Is there a difference in drug fill/refill rates between drugs A and B identified above?
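The fill-rate question above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record layout, the field names (`drug`, `filled`), and the values are hypothetical, and a raw difference of this kind is hypothesis-generating at best, since it does not adjust for confounding by indication.

```python
from collections import defaultdict

# Hypothetical linked records: one row per prescription written in the EHR,
# with a flag derived from health plan claims showing whether it was filled.
# All values are made up for illustration.
prescriptions = [
    {"patient_id": 1, "drug": "A", "filled": True},
    {"patient_id": 2, "drug": "A", "filled": True},
    {"patient_id": 3, "drug": "A", "filled": False},
    {"patient_id": 4, "drug": "A", "filled": True},
    {"patient_id": 5, "drug": "B", "filled": True},
    {"patient_id": 6, "drug": "B", "filled": False},
    {"patient_id": 7, "drug": "B", "filled": False},
    {"patient_id": 8, "drug": "B", "filled": True},
]


def fill_rates(rows):
    """Return {drug: fraction of written prescriptions that were filled}."""
    written = defaultdict(int)
    filled = defaultdict(int)
    for row in rows:
        written[row["drug"]] += 1
        filled[row["drug"]] += int(row["filled"])
    return {drug: filled[drug] / written[drug] for drug in written}


rates = fill_rates(prescriptions)
for drug, rate in sorted(rates.items()):
    print(f"drug {drug}: fill rate {rate:.0%}")
```

In practice the comparison would be restricted to clinically similar patients before any conclusion is drawn from the difference.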

In addition to the need for an EHR, an integrated, normalized data asset simplifies the logistics and cycle time for exploration, development of an ROI argument (e.g., forecasting, simulating), planning and implementation, and performance analysis. While data aggregation, standardization, and normalization are often centralized activities, data access should be as decentralized, simple, and low cost as possible (i.e., no incremental barrier to review). Providing clinical and business end-users with direct, unrestricted access helps to motivate a cultural shift toward identifying opportunities for improving care quality and access and for reducing the cost of care. In this way, everyday clinical hunches (e.g., a patient who used drug X subsequently shows impaired renal function) can be formulated into questions (e.g., “has this phenomenon been observed in the last X hundred patients that we cared for here?”), rapid analysis, and “answers.” This capability to rapidly place in context both the individual patient and the broader population is routinely missing in nearly all healthcare delivery organizations. This frame of reference is important for physicians who have been shown to be overly sensitized by recent patient experience (Greco and Eisenberg, 1993; Poses and Anthony, 1991).
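As a minimal sketch of how such a clinical hunch might become a query, the following Python example uses an in-memory SQLite table. The table name `exposures`, its columns, and the rows are hypothetical and stand in for whatever a real integrated data warehouse would provide.

```python
import sqlite3

# Hypothetical, simplified stand-in for an integrated data warehouse table:
# one row per patient started on a drug, with a flag for impaired renal
# function observed after the start date. All rows are made up.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE exposures ("
    "patient_id INTEGER, drug TEXT, start_date TEXT, "
    "renal_impairment_after INTEGER)"
)
conn.executemany(
    "INSERT INTO exposures VALUES (?, ?, ?, ?)",
    [
        (1, "X", "2007-01-10", 0),
        (2, "X", "2007-03-02", 1),
        (3, "Y", "2007-03-15", 0),
        (4, "X", "2007-05-20", 0),
        (5, "X", "2007-08-01", 1),
        (6, "X", "2007-09-12", 0),
    ],
)


def hunch_summary(conn, drug, last_n):
    """Among the last_n most recent patients started on `drug`, return
    (patients reviewed, patients with later renal impairment)."""
    rows = conn.execute(
        "SELECT renal_impairment_after FROM exposures "
        "WHERE drug = ? ORDER BY start_date DESC LIMIT ?",
        (drug, last_n),
    ).fetchall()
    return len(rows), sum(flag for (flag,) in rows)


reviewed, impaired = hunch_summary(conn, "X", 4)
print(f"{impaired} of the last {reviewed} patients on drug X showed impairment")
```

The count alone does not establish causation; it only places the individual observation in the context of the local population.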

The PI Architecture should be capable of answering previously imponderable questions such as “How many patients with chronic kidney disease do we care for?” and in so doing, compare the results from operationally identified patients (e.g., derived from the Problem List) versus biologically identified patients (e.g., via calculations from laboratory creatinine measurements). This level of data interrogation enables PI teams to be fully grounded in the reality of what actually happens, rather than guided by impressions, selective or hazy memories, or idyllic desires. Similarly, when using benchmarks to compare performance, hypothesis-driven data mining asks “Why are we different?” regardless of whether that difference is positive or negative. As such, it enables even a benchmarking leader to continue to innovate and improve (Gawande, 2004). This approach parallels Berwick’s recent call to “equip the workforce to study the effects of their efforts, actively and objectively, as part of daily work” and creates a “culture of empirical review” as a critical determinant of success (Berwick, 2008).
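The comparison of operationally and biologically identified chronic kidney disease patients can be sketched as a set comparison. Everything specific here is an assumption made for illustration: the patient identifiers and values are invented, estimated glomerular filtration rate (eGFR) values are taken as already calculated from creatinine, and the rule of at least two results below 60 is a simplification rather than a clinical definition.

```python
# Assumed cutoff for a "low" eGFR result, in mL/min/1.73 m^2 (illustrative).
EGFR_THRESHOLD = 60.0


def lab_identified(egfr_by_patient, threshold=EGFR_THRESHOLD, min_low_results=2):
    """Patients with at least `min_low_results` eGFR values below threshold."""
    return {
        pid
        for pid, values in egfr_by_patient.items()
        if sum(v < threshold for v in values) >= min_low_results
    }


def compare_cohorts(problem_list_ids, egfr_by_patient):
    """Split patients by how they were identified: Problem List, labs, or both."""
    lab_ids = lab_identified(egfr_by_patient)
    return {
        "both": problem_list_ids & lab_ids,
        "problem_list_only": problem_list_ids - lab_ids,
        "lab_only": lab_ids - problem_list_ids,
    }


# Hypothetical data: patient IDs on the Problem List and eGFR histories.
problem_list_ckd = {101, 102, 105}
egfr_results = {
    101: [55.0, 52.0],
    102: [75.0, 80.0],
    103: [48.0, 58.0, 45.0],
    104: [90.0],
    105: [59.0, 61.0],
}

cohorts = compare_cohorts(problem_list_ckd, egfr_results)
for name, ids in cohorts.items():
    print(name, sorted(ids))
```

The `lab_only` group is the interesting one for a PI team: patients whose laboratory values suggest disease that has not been documented.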

Organizational Requirements

Global and local organizational requirements are essential to institutionalizing a culture of improvement using a PI Architecture. First, Board and CEO level support for transformation is required to support adoption. PI Architecture investment is not trivial, and several years are required to reach peak output. Stable resourcing and strategic investment are essential to achieve success. Control of and responsibility for the PI process (e.g., selection of issues, control of implementation, and evaluation of outcomes and ongoing feedback) must be entrusted to leaders held accountable for results. Where PI is centralized, local clinical and operational leaders must be engaged from the beginning to be part of and motivated by the opportunities inherent to the care process change. In addition, staff (or teams) should be experienced in change management, workflow analysis, health information technology (HIT) integration, and performance management skills and orientation. The extent to which this group has aligned goals and is free to innovate beyond usual organizational constraints, policies, and practices will dictate the breadth of possible change. Finally, passion for success is a powerful force. We believe that an entrepreneurial approach to PI, a well-established motivation in other business sectors, produces sustainable change, especially when balanced with appropriate skepticism on defining success and the “permission” to fail but with the expectation of ultimately persevering.

At Geisinger, this culture is embedded through formal links between the traditional silos of Innovation, Clinical Effectiveness, Research, and the Clinical Enterprise along with critical underlying support from Information Technology. Innovation’s role is to support a broad range of change initiatives that are designed to fundamentally challenge historical assumptions. Innovation typically reaches for large successes with a focus on knowledge transfer across the organization and on creating a reusable, scalable transformation infrastructure. Clinical Effectiveness often takes a complementary approach to change across a broader swath of the organization with a focus on process redesign and skill development. The Clinical Enterprise represents the “front line” of patient care; its “sources of pain” provide a strong indication of opportunity; its ideas, clinical hunches, and feedback on innovation are essential for success.

At Geisinger, research has a multi-year horizon. Adoption of a traditional research and development model, used in other business sectors, leads to a translation-focused process to bring value to the clinical enterprise, rather than a focus on traditional “knowledge creation.” This model leads to ongoing interactions, where research leverages the insights of Innovation, Clinical Effectiveness, the Clinical Enterprise, the ROI model, and the tactics of implementation. To some degree, PI initiatives serve as the preliminary work for research to pursue a product-oriented process for extending and scaling the PI Architecture that moves beyond the tactics of initiatives, relies less on organizational vigilance and individual learning, and can more easily be scaled within Geisinger and potentially exported elsewhere. The continuum of activities among collaborating divisions offers a unique potential for broader commercial application via Geisinger Ventures, which seeks to capture fundamental breakthrough technologies, techniques, or approaches to care that represent a sensible and more certain means of translating knowledge to practice (i.e., through the commercial marketplace) in a manner that cannot be achieved rapidly by publications, speaking, or collaboratives.

Building a Performance Improvement Architecture

The core feature of the PI Architecture (and associated analytics and process methodology) is to support the following key goals: (1) to rank-order PI initiatives for the largest ROI; (2) to support a simultaneous focus on quality and efficiency; (3) to require the development or refinement of reusable parts, components, and modules from each PI initiative to support future efforts; and (4) to ensure that practitioners evaluate the opportunity to eliminate any unnecessary steps in care, automate processes when safe and effective to do so, delegate care to the least-cost, competent caregiver, and activate the patient as a participant in her own self-care.

Using this model, care processes selected for improvement can be identified proactively via a thoughtful rank-ordering of problems based upon ROI criteria (whether clinical, business, or both). Example ROI-based approaches include selecting those processes with outcomes farthest from benchmark performance; those with the largest impact by patient population or resource consumption; or those with the most significant variation. The absence of an ROI-based selection process often precludes the development of a “clinical business plan” that can meet the requirements of skeptical observers, an activity that is routine in other industries and that, if skipped, makes post-intervention value determination problematic. As Berwick noted, when evaluating areas for PI intervention one must “reconsider thresholds for action on evidence” (Berwick, 2008). In this context an appropriate threshold may be far below the traditional research standard of significance where p < 0.05. Less restrictive interpretations of data and “evidence” are commonplace in other industries, where in the absence of better information, a p-value < 0.5 is often indicative of a reasonable idea for change, and p-values < 0.25 would routinely create sustained success. Of course, evidence at this level may not indicate a true need to change care, but rather the need to more formally study a partially validated question in a more rigorous manner.
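One way to picture the ROI-based rank-ordering described above is a simple scoring pass over candidate care processes. The sketch below is only an illustration: the candidate list, the performance figures, and the scoring rule (gap to benchmark multiplied by patients affected) are assumptions, not Geisinger's actual criteria.

```python
# Hypothetical candidates: observed and benchmark performance are fractions
# of eligible patients meeting a quality measure; all values are made up.
candidates = [
    {"process": "diabetes HbA1c control", "observed": 0.55,
     "benchmark": 0.70, "patients": 20000},
    {"process": "post-MI beta blocker use", "observed": 0.90,
     "benchmark": 0.95, "patients": 1500},
    {"process": "CKD screening", "observed": 0.40,
     "benchmark": 0.60, "patients": 8000},
]


def patients_below_benchmark(candidate):
    """Estimated number of patients who would gain if performance reached
    the benchmark (zero if performance already meets or exceeds it)."""
    gap = max(candidate["benchmark"] - candidate["observed"], 0.0)
    return gap * candidate["patients"]


# Largest estimated impact first.
ranked = sorted(candidates, key=patients_below_benchmark, reverse=True)
for c in ranked:
    print(f'{c["process"]}: ~{patients_below_benchmark(c):.0f} patients')
```

Other criteria named in the text, such as resource consumption or variation across providers, could be swapped in as alternative key functions without changing the overall shape of the ranking.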

Once selected, attractive areas for more detailed PI intervention tend to fall into two broad categories: (1) what should we be doing systematically that we are not? and (2) what should we stop doing that is causing harm or simply not adding value? These questions are fundamentally related to whether or not some aspect of provider-delivered care (e.g., the treatment plan, flow, caregivers, timing, or setting such as inpatient versus outpatient, nursing unit versus nursing unit, etc.) improves the value of healthcare delivery. One structured way to perform this analysis is to review at least the following:

- Missing Elements of Care. Is something missing that seems to provide benefit (e.g., beta blockers post MI, statins for CAD)?

- Potential Diagnostic or Therapeutic Substitutions. Does something (or someone) seem to work better than another (e.g., breast MRI versus mammography in high-risk patients)?

- Excess Diagnostic or Treatment Intensity. What care patterns persist but appear to add no apparent value (e.g., plain film + CT + MRI + PET)?

- Flow Impediments. Does the sequence of care and/or settings seem to make a difference (e.g., weekend care, getting to the right inpatient unit)?

- Supply Chain Inefficiencies. Is care standardized enough to generate maximum supply chain economies and familiarity (e.g., implant devices or benefits of silver-impregnated versus standard foley catheters relative to UTI)?

- Provider Care Team Variation. Are there different outcomes with different providers and/or provider teams (e.g., physician–physician, physician–NP, etc.)?

Box 4-2 defines an update of the PDSA cycle to reflect the availability of a PI Architecture.

Benefits of a Performance Improvement Architecture

Several important benefits from our recent experience evolving this approach are noteworthy as potentially generalizable findings.

BOX 4-2 Performance Improvement Architecture Cycle

Step 1 (Document Focus): Document the current state using local data.

Step 2 (Simulate): Confirm hypothesis via electronic review and simulate results if desired state is achieved.

Step 3 (Iterate): Try out the test on a small scale, but with a strategy for rapid escalation.

Step 4 (Accelerate): Leverage reusable parts from past initiatives and build core infrastructure for future work.

Reduced Cycle Time

First, much of the historical PDSA cycle can be performed electronically. For example, opportunities for improvement can be automatically rank-ordered according to specified criteria (e.g., systematically screening care relative to evidence-based guidelines, with deviations used as objective input for ranking). Also, “clinical hunches,” comparisons of actual performance to guidelines, evaluation of new medical literature findings for local practice, and other comparisons can be tested via database queries in a matter of minutes, rather than taking days, weeks, or months using traditional human-based assessments. If designed appropriately, the impact of proposed interventions can be simulated. Such simulations can provide insight into the need for change and also can help to establish the clinical-business case and anticipate the ROI from any given intervention, again with only limited resource commitments. As a result, those hypotheses that actually make it to a real test of change are much more likely to be important and to have a greater chance for success. Once tests are underway, real-time data access supports rapid change cycles, in which hypotheses can be tested and sequentially refined in increasingly short periods of time.
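As a rough illustration of how a “clinical hunch” might be checked by query, the sketch below loads a few invented rows into an in-memory SQLite table and computes the share of post-MI patients with no beta blocker order. The table name `encounters`, the columns `mi_discharge` and `beta_blocker_rx`, and the data are all hypothetical; this is not the schema or method of any particular health system.

```python
import sqlite3


def care_gap_rate(rows):
    """Return the share of post-MI patients with no beta blocker order.

    rows: iterable of (patient_id, mi_discharge, beta_blocker_rx) tuples,
    where the last two fields are 0/1 flags. Table and column names are
    hypothetical, chosen only for this illustration.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE encounters ("
        "patient_id TEXT, mi_discharge INTEGER, beta_blocker_rx INTEGER)"
    )
    conn.executemany("INSERT INTO encounters VALUES (?, ?, ?)", rows)
    # Count eligible (post-MI) patients and those lacking the therapy.
    eligible, missing = conn.execute(
        "SELECT COUNT(*), "
        "COALESCE(SUM(CASE WHEN beta_blocker_rx = 0 THEN 1 ELSE 0 END), 0) "
        "FROM encounters WHERE mi_discharge = 1"
    ).fetchone()
    conn.close()
    return missing / eligible if eligible else 0.0


# Invented toy data: four post-MI patients, two without a beta blocker.
toy_rows = [
    ("p1", 1, 1),
    ("p2", 1, 0),
    ("p3", 0, 0),
    ("p4", 1, 1),
    ("p5", 1, 0),
]
gap = care_gap_rate(toy_rows)
print(f"Post-MI patients without a beta blocker: {gap:.0%}")
```

A query of this kind returns in moments, which is the contrast the text draws with manual chart-based assessment; a real screen would also need eligibility exclusions and time windows that are omitted here.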

Increased Quality of Hypothesis Generation and Relevance of Initiatives

Second, the purview of inquiry moves beyond guidelines, encompassing questions more directly relevant to practice and the related business case, as well as what an organization should stop doing, recognizing that many components of care are embedded by tradition and offer little or no value. Importantly, metrics can be focused on measures that are directly relevant to patient health (e.g., actual low density lipoprotein levels rather than lab orders) and downstream impact (e.g., cardiac events or visits avoided), substantially improving the saliency of feedback to guide productive change that yields tangible value, holds the attention of organizational leaders, and motivates continued vigilance.

Increased Sustainability

EHRs can be used to “hard wire” process changes, to automatically track and trend important metrics after an intervention has been made, to watch for regression, and to learn of unexpected consequences (whether good or bad). Further, dashboards can serve to link PI efforts to strategic objectives and gain the attention of a much broader community to provide additional incentive to maintain gains from change.

Increased Focus on Return on Investment

Because resources are always constrained, it is critical to focus PI efforts on those interventions that can deliver the most clinical and business return. Under this framework, PI is strongly focused on ROI as evidenced by empirical data. As those data allow for more thoughtful clinical-business planning, more leaders are engaged (e.g., CFO, clinic directors), thereby enabling PI to rapidly evolve its purview to a much broader and more refined set of measurable outcomes that are likely to impact quality and efficiency in a material way.

Enhanced Research Capabilities

A PI Architecture can augment research. First, PI informs opportunities for success. Interventions that appear to be important, impactful, and sustained guide researchers toward opportunities that are likely to be successful for more complete testing via a robust study and for development and testing of tools to replace labor-intensive workflows and processes. Second, the data asset can be used to quickly “confirm or deny” results from newly published trials, whether randomized or observational. Further, when performed proactively, data mining for unintended consequences of new drugs can be an important adjunct to current forms of postmarketing surveillance. Similarly, one can mine such databases (which include reason codes for medication orders) for off-label usage patterns, risks, and benefits. All of these data-driven opportunities would be enhanced even further if disparate health systems using common data standards pooled their data (or results) for such purposes. Finally, EHRs can be used to identify patients who meet criteria for research studies and to capture data elements relevant for analysis.

Summary and Conclusion

Many health systems are experimenting with new approaches to quality improvement that leverage EHR capabilities. In addition to practice standardization and decision support, EHR data provide a new source of hypotheses and evidence for both PI and research. When complemented by a broader data aggregation, analysis infrastructure, and process to create a PI Architecture, the potential is significant. While there are numerous limitations yet to be overcome, the latent potential at the intersection of EHR (and other electronic) data, performance improvement, and research is both significant and exciting. The next decade of work will be transformative; this is an exciting time for health care.

ADMINISTRATIVE DATABASES IN CLINICAL EFFECTIVENESS RESEARCH

Alexander M. Walker, M.D., Dr.P.H.

Harvard School of Public Health

World Health Information Science Consultants, LLC

Background

The most exigent demands for large-scale integration of medical data have come from healthcare administrators and payors. Their needs to create effective payment schemes and basic monitoring of medical resource utilization have been susceptible to ready standardization and have provided immediate financial returns that have in turn justified the investment in the requisite data systems. The many-to-many relations between insurers and providers in the United States, in which an insurer may deal with hundreds of thousands of providers and a provider may deal with tens of insurers, have meant that the only functioning systems are highly standardized and internally consistent.

The resulting progress in the development of administrative databases stands in marked contrast to the world of electronic health records, which capture far more complex clinical and laboratory data, and for which there has been the growth of many competing local standards. While the advantages in patient care with a well-functioning electronic record are evident to practitioners, the cost and complexity of these systems still pose a barrier to implementation. Implemented systems that follow different standards pose even more formidable barriers to standardization.

For all the advantages that a research-enabled electronic health record will one day offer, it is administrative databases that form the heart of large-scale population research for most medical applications. The purpose of this report is to touch on the key features of these resources.

Insurance Claims Data

The most widespread technique for distributing healthcare funds in industrial countries involves some form of fee-for-service reimbursement, in which providers of services turn to private or governmental insurance programs for payment for specified services. Insurance schemes have been advocated as the most effective way to pay for services even in societies with limited medical resources (Second International Conference on Improving Use of Medicines, 2004).

The population definition for an insurance database is contained in the eligibility file, which identifies all covered individuals and basic demographic data such as date of birth, sex, and address. This file will include dates of coverage and may contain some family information in the form of an identified primary contract holder, along with dependents.

The service claims in a typical insurance database include identities of both the provider and recipient of services, the nature and date(s) of services, and presumptive diagnoses that motivated the services. Services may be visits, diagnostic tests, or procedures. The results of laboratory procedures, as opposed to the fact of the test having been performed, are not part of the insurance claims system.

Hospitalizations are a special form of service, typically accompanied by more detailed information, including dates, procedures, and primary and secondary diagnoses. In the United States, physician charges that do not flow through the hospital billing system appear as individual provider claims during a period of hospitalization and can be used to flesh out events during hospitalization.

Pharmacy insurance claims arise for each dispensing, with identities of the pharmacy, the prescribing physician, and the recipient and details on the product supplied, substance, manufacturer, form, dose, quantity, and days supply. The indication for treatment is not typically part of the claim and must typically be inferred from diagnoses recently assigned in conjunction with visits to the prescribing physician.

Insurers may use these data internally for administrative purposes. Researchers in the United States operate under rules set by HIPAA (the Health Insurance Portability and Accountability Act), which circumscribes their permitted activities in order to safeguard individuals’ medical privacy. Under HIPAA, personally identifying data, termed PHI (protected health information), include both obvious identifiers, such as name and address, and data from which persons might be identifiable with the supplementary use of other publicly available information. This includes, for example, exact date of birth. HIPAA provides standards for creating “deidentified” data, which can be exchanged and analyzed without further oversight. If PHI is required, researchers must obtain the permission of a Privacy Board, which is typically constituted under an Institutional Review Board (IRB). The researcher needs to provide details of methods by which the minimum necessary amount of PHI will be employed for the minimum time required and which will safeguard that PHI during its period of use.
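The kind of transformation involved can be sketched in a few lines. The example below drops the two obvious identifiers named above and coarsens an exact date of birth to a year. The field names are hypothetical, and the sketch is illustrative only: it covers just the identifiers mentioned in the text and does not by itself meet the HIPAA deidentification standard.

```python
from datetime import date

# Identifiers named in the text; a real deidentification procedure would
# cover a much longer list. Field names are hypothetical.
DIRECT_IDENTIFIERS = {"name", "address"}


def reduce_identifiers(record):
    """Return a copy of record without direct identifiers and with the
    exact date of birth replaced by year of birth."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    dob = out.pop("date_of_birth", None)
    if dob is not None:
        out["birth_year"] = dob.year
    return out


# Invented example record.
example_record = {
    "name": "Jane Doe",
    "address": "1 Main Street",
    "date_of_birth": date(1950, 3, 14),
    "sex": "F",
}
print(reduce_identifiers(example_record))
```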

Currently available insurance claims databases with full information range in size up to about 20 million persons cross-sectionally; the numbers are substantially larger for cumulative “lives” and for data that may omit one or more of the elements above. U.S. Medicare data, not yet widely available, include claims information on over 40 million persons over the age of 65, with drug data from 2007 forward.

Though well suited to studies of health services utilization, insurance data serve clinical research only with substantial further processing and with caution even at that. Drug use is inferred from dispensing data. Medical conditions must be inferred from patterns of claims for services, treatments, and diagnostic procedures. Thus a recently used algorithm for venous thromboembolism included the occurrence of a suitable diagnosis associated with a physician visit, emergency room or hospital claim, performance of an appropriate imaging procedure, and at least two dispensings of an anticoagulant (Jick et al., 2007; Sands et al., 2006). Algorithms for more subtle conditions may be more complex still. Conditions for which the pattern of care attendant on a “rule out” diagnosis resembles that for a confirmed diagnosis may be impossible to identify with any specificity.
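The structure of such a claims-based case definition can be sketched as follows. This is a simplified illustration of the three criteria described above, not the published algorithm: the code sets are placeholders rather than real billing codes, the `Claim` type is hypothetical, and the time windows that a real definition would impose between diagnosis, imaging, and dispensings are omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    patient_id: str
    kind: str  # "diagnosis", "imaging", or "dispensing"
    code: str  # illustrative label, not a real billing code


# Placeholder code sets; a real study would enumerate actual diagnosis,
# procedure, and drug codes.
VTE_DIAGNOSES = {"VTE_DX"}
VTE_IMAGING = {"LEG_ULTRASOUND", "CHEST_CT"}
ANTICOAGULANTS = {"WARFARIN", "HEPARIN"}


def presumptive_vte_cases(claims):
    """Return ids of patients whose claims satisfy all three criteria:
    a qualifying diagnosis, a qualifying imaging procedure, and at least
    two anticoagulant dispensings."""
    has_dx, has_imaging, dispensings = set(), set(), {}
    for c in claims:
        if c.kind == "diagnosis" and c.code in VTE_DIAGNOSES:
            has_dx.add(c.patient_id)
        elif c.kind == "imaging" and c.code in VTE_IMAGING:
            has_imaging.add(c.patient_id)
        elif c.kind == "dispensing" and c.code in ANTICOAGULANTS:
            dispensings[c.patient_id] = dispensings.get(c.patient_id, 0) + 1
    treated = {p for p, n in dispensings.items() if n >= 2}
    return has_dx & has_imaging & treated


# Invented claims: patient A meets all criteria; patient B has a
# "rule out" pattern (diagnosis and imaging, but no treatment).
example_claims = [
    Claim("A", "diagnosis", "VTE_DX"),
    Claim("A", "imaging", "LEG_ULTRASOUND"),
    Claim("A", "dispensing", "WARFARIN"),
    Claim("A", "dispensing", "WARFARIN"),
    Claim("B", "diagnosis", "VTE_DX"),
    Claim("B", "imaging", "CHEST_CT"),
]
print(presumptive_vte_cases(example_claims))
```

Requiring treatment in addition to diagnosis and imaging is what separates patient A from the “rule out” pattern of patient B in this toy example; where confirmed and ruled-out cases generate similar claims, no such rule is available.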

The advantages of pure insurance claims data include easy access to data on very large numbers of individuals, detailed drug information, and the absence of reporting biases related to knowledge of exposure or outcome. There are substantial drawbacks. There is a lag in the creation of research-ready insurance files that runs from months to a year. The lack of medical record validation means that crucial cases may be missed and that others may be incorrectly ascribed to a condition under study. For nonemergency conditions, it may be very difficult to pinpoint the date of onset and the distinction between recurring, recrudescent, and new-onset conditions may be elusive. Apart from special circumstances involving serious acute outcomes and drug or vaccine exposures, insurance claims data may typically be insufficient for clinical research purposes.

Augmented Claims Data

Research groups within the insurance organizations that generate data have begun to systematically augment these files. Increasingly insurers are negotiating arrangements with independent laboratories under which the analyte results must be submitted with the claim for reimbursement. These are outpatient files and do not represent complete laboratory histories. Since the arrangements are made between the insurer and the laboratory, an individual’s record will contain repeated measures to the extent that he/she returns to the same site for testing. These have been used for example to relate cardiovascular disease to severe anemia (Walker et al., 2006).

Marketing databases contain self-report data on ethnicity and income, which have been linked to insurance data. In the United States there are available files that link postal code information to detailed census data on income and ethnicity as well.

Far more important than laboratory values and income has been the ability to return to providers and patients for direct information. With Privacy Board approval, researchers can approach physicians and institutions holding patients’ medical records to verify diagnoses and treatments, and to eke out information on lifestyle, chronic risk factors, and family history that is not available in the insurance claims history. With IRB approval, they can approach patients themselves for information, biometric data, and even tissue samples. These studies permit analyses carried out with a reasonable certainty that the underlying elements are correct.

A good example of the multifaceted work that augmented claims databases permit is an FDA-mandated program of surveillance of the oral contraceptive Yasmin. The progestational agent in Yasmin is drospirenone, which is functionally related to the potassium-sparing diuretic spironolactone. Though no problems of potassium handling had been seen in clinical trials, the analogy was sufficient to bring the FDA to have the sponsor initiate a program that (1) followed hospitalization and mortality in over 20,000 Yasmin initiators and a two-fold larger comparison group; (2) monitored contraindicated dispensing to patients with adrenal, renal, and hepatic dysfunction; (3) quantified the use of potassium monitoring in certain indicated patients; and (4) ascertained the outcomes of breakthrough pregnancies. Chart reviews, physician interviews, and even interventions with doctors prescribing to contraindicated patients rounded out a clinically useful surveillance program (Eng et al., 2008; Mona Eng et al., 2007; Seeger et al., 2007).

Enhanced claims studies include the insurance claims database advantages of large numbers of subjects, detailed drug exposure information, and lack of reporting bias, and add to these much greater confidence in the nature of events being studied and knowledge of timing. Like insurance claims studies, research programs in augmented databases may still be hindered by a lag in adjudication of claims on the order of months to a year. These data resources serve well for observational studies of outcomes that are highly likely to result in medical care.

Automated and Quasi-Automated Database Review

Many of the research and surveillance activities that take place in insurance files take advantage of repeatedly implemented computer routines, which offer the hope that some of these programs could be automated as decision support tools for both clinical safety and efficacy.

The core idea for creating such tools is to simplify the welter of claims data into manageable units. In part this can come about through routine implementation of algorithms, such as the one described above for venous thromboembolism, into standard units for off-the-shelf programming or routine tabulation. A number of data holders have taken this idea even further with “episode groupers,” programs that recast a broad range of related claims into single clinical entities, such as “community acquired pneumonia.”
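A toy version of the grouping idea is sketched below. It assumes that each claim has already been mapped to a condition label, which is itself a substantial part of what a real episode grouper does, and it uses an arbitrary 30-day gap rule to decide when one episode ends and another begins. The function name and data layout are hypothetical.

```python
from datetime import date, timedelta


def group_episodes(claims, max_gap_days=30):
    """Collapse dated claims into episodes per (patient, condition).

    claims: iterable of (patient_id, condition, service_date) tuples.
    A new episode starts when the gap since the previous claim for the
    same patient and condition exceeds max_gap_days (an arbitrary choice).
    Returns a list of (patient_id, condition, start, end, n_claims).
    """
    episodes = []
    open_episode = {}  # (patient, condition) -> index into episodes
    max_gap = timedelta(days=max_gap_days)
    for patient, condition, day in sorted(claims):
        key = (patient, condition)
        idx = open_episode.get(key)
        if idx is not None and day - episodes[idx][3] <= max_gap:
            # Extend the current episode to include this claim.
            p, c, start, _, n = episodes[idx]
            episodes[idx] = (p, c, start, day, n + 1)
        else:
            # Start a new episode for this patient and condition.
            open_episode[key] = len(episodes)
            episodes.append((patient, condition, day, day, 1))
    return episodes


# Invented claims: three close together in January, one in June.
example_claims = [
    ("A", "pneumonia", date(2008, 1, 5)),
    ("A", "pneumonia", date(2008, 1, 3)),
    ("A", "pneumonia", date(2008, 6, 1)),
    ("A", "pneumonia", date(2008, 1, 20)),
]
for episode in group_episodes(example_claims):
    print(episode)
```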

A second element of routine surveillance computer programs is a standard way of handling the confounding that is so prevalent in observational studies of the outcomes of medical regimens. One approach is to take the wealth of data represented in insurance claims into a multivariate prediction of therapeutic choice, called a propensity score. These models can be rich because they draw on thousands or tens of thousands of observations and can incorporate claims history items that collectively represent strong proxies for confounding factors (Seeger et al., 2005). Propensity-matched groups can be created routinely in advance for new, commonly used therapies, or scores can be calculated and stored with individual records for future use.
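A minimal sketch of the idea follows, assuming a logistic model for treatment choice fitted by plain gradient descent and greedy one-to-one matching within a caliper. The two binary covariates, the toy data, and the caliper of 0.1 are invented for illustration; production analyses would use an established statistics package and many more claims-history variables.

```python
import math


def fit_propensity(X, treated, lr=0.1, epochs=2000):
    """Fit a logistic model P(treated | x) by plain gradient descent."""
    n, k = len(X), len(X[0])
    w = [0.0] * (k + 1)  # w[0] is the intercept
    for _ in range(epochs):
        grad = [0.0] * (k + 1)
        for x, t in zip(X, treated):
            z = w[0] + sum(wj * xj for wj, xj in zip(w[1:], x))
            err = 1.0 / (1.0 + math.exp(-z)) - t
            grad[0] += err
            for j, xj in enumerate(x):
                grad[j + 1] += err * xj
        w = [wj - lr * g / n for wj, g in zip(w, grad)]
    return w


def propensity(w, x):
    """Predicted probability of treatment for covariate vector x."""
    z = w[0] + sum(wj * xj for wj, xj in zip(w[1:], x))
    return 1.0 / (1.0 + math.exp(-z))


def match_pairs(scores, treated, caliper=0.1):
    """Greedy 1:1 nearest-neighbor matching on the propensity score."""
    controls = [i for i, t in enumerate(treated) if t == 0]
    pairs = []
    for i in (i for i, t in enumerate(treated) if t == 1):
        if not controls:
            break
        j = min(controls, key=lambda c: abs(scores[c] - scores[i]))
        if abs(scores[j] - scores[i]) <= caliper:
            pairs.append((i, j))
            controls.remove(j)
    return pairs


# Invented covariates: [prior hospitalization, diabetes claim].
X = [[1, 1], [1, 1], [1, 0], [0, 1], [1, 1], [1, 0], [0, 0], [0, 0]]
treated = [1, 1, 1, 1, 0, 0, 0, 0]
weights = fit_propensity(X, treated)
scores = [propensity(weights, x) for x in X]
pairs = match_pairs(scores, treated)
print(pairs)
```

Storing `scores` alongside each record corresponds to the second option mentioned above, in which scores are computed once and reused in later analyses.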

The final sine qua non of automated surveillance is a plan for dealing with multiplicity of outcomes. Some investigators have proposed restricting attention to a smallish number of disease outcomes previously associated with drug effects, such as hepatitis, rashes, or ocular toxicity. This may be a strategy with little marginal gain, as these will be precisely the drug outcomes for which clinicians are most sensitive and likely to report adverse effects already. Another option is to apply a formal Bonferroni correction to thousands of possible combinations of drugs and outcomes being tested, much in the same way that whole-genome scans are subjected to radical statistical attenuation to reduce false positives. This approach has the drawback of curtailing power to detect true association in proportion to the reduction in risk of false positives (Walker and Wise, 2002).
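The arithmetic behind the Bonferroni approach is simple: with m tests and an overall significance level alpha, each test is judged against alpha / m. The sketch below uses invented drug and outcome labels and invented p-values to show how the cutoff tightens as the number of tested combinations grows.

```python
def bonferroni_threshold(alpha, n_tests):
    """Per-test significance cutoff under a Bonferroni correction."""
    return alpha / n_tests


def surviving_signals(p_values, alpha=0.05):
    """Return the (drug, outcome) pairs whose raw p-value falls below the
    Bonferroni-corrected cutoff.

    p_values: dict mapping (drug, outcome) to a raw p-value.
    """
    cutoff = bonferroni_threshold(alpha, len(p_values))
    return {pair for pair, p in p_values.items() if p < cutoff}


# Invented results for four drug-outcome combinations.
example_p_values = {
    ("drug_a", "hepatitis"): 0.001,
    ("drug_a", "rash"): 0.02,
    ("drug_b", "hepatitis"): 0.04,
    ("drug_b", "rash"): 0.30,
}
print(surviving_signals(example_p_values))
print(bonferroni_threshold(0.05, 10_000))
```

With four tests the cutoff is 0.0125, so the p-values of 0.02 and 0.04 that would pass an uncorrected 0.05 threshold are discarded; with ten thousand combinations the cutoff falls to roughly 0.000005, which illustrates the loss of power noted above.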

A more productive approach to multiplicity in large databases is to apply both statistical and medical logic to the problem of pruning false-positive results. Does the timing look right? Is the outcome plausible in light of the mechanism of action, or perhaps the route of administration of a drug? Are there analogies to be drawn from the experience with similar products?

Decision support tools do not have a promising history. Perhaps the technology for creating them tends to lag the decision-maker needs, or it may be that the enthusiasm required to generate development funding inevitably raises expectations beyond what the technologists can reasonably achieve. It may be that comprehensive indexing, retrieval, and counting functions, and not sophisticated analysis, are the proper goal of massive, automated data integration.

Distributed Processing

Part of the push for greater sensitivity and speed in drug safety surveillance is taking the form of programs to include large numbers of automated databases in common surveillance mechanisms. At the level of database amalgamation, the large U.S. insurance databases would seem to be ideal candidates, as they already operate under common rules for coding and have similar structures, imposed by the common format of the component data items.

There are however major institutional barriers to having holders of large datasets contribute them to a common pool. Giving up the ability to approve of the analyses done in one’s own data underlies some of the reluctance, and it may be that the details of pricing and reimbursement contained in the data are considered sensitive and proprietary.

The most promising solution to both computational and institutional obstacles to very large database research may lie in distributed processing, discussed by Richard Platt in more detail elsewhere in this volume. Under distributed processing models, data holders create standard views of their databases, or even standardized extracts. A central office then distributes computer code to pull out key information from each database, for transmittal back, where the statistical coordinating center assembles the elements into a common analysis.
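The division of labor can be sketched as two functions: one that every data holder runs locally against its own records, returning only aggregate counts, and one that the coordinating center runs on the returned summaries. The record layout (`drug`, `person_years`, `events`) and the example figures are hypothetical, and a real network would add covariate adjustment and safeguards for small cell sizes.

```python
def site_summary(records, drug):
    """Run locally at each data holder; returns aggregate counts only.

    records: iterable of dicts with hypothetical keys 'drug',
    'person_years', and 'events'. No person-level rows leave the site.
    """
    exposed = [r for r in records if r["drug"] == drug]
    return {
        "person_years": sum(r["person_years"] for r in exposed),
        "events": sum(r["events"] for r in exposed),
    }


def pooled_rate(summaries):
    """Run at the coordinating center on the returned summaries."""
    events = sum(s["events"] for s in summaries)
    person_years = sum(s["person_years"] for s in summaries)
    return events / person_years if person_years else None


# Invented records held separately by two data holders.
site_a = [
    {"drug": "X", "person_years": 100.0, "events": 1},
    {"drug": "Y", "person_years": 40.0, "events": 5},
]
site_b = [
    {"drug": "X", "person_years": 50.0, "events": 0},
    {"drug": "X", "person_years": 150.0, "events": 2},
]
summaries = [site_summary(site, "X") for site in (site_a, site_b)]
print(pooled_rate(summaries))
```

Because only the summaries cross institutional boundaries, each data holder keeps control of its person-level data, including any pricing and reimbursement detail, which addresses the reluctance described above.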

A Note of Caution

Observational data, no matter how assembled, require special care in clinical effectiveness research. The likelihood that persons undergoing compared therapies will differ with respect to fundamental predictors of outcome is large and needs to be addressed head-on. There is a growing family of research methodologies, including propensity techniques (mentioned above), proxy variable analysis, and instrumental variables, that are the objects of vigorous methodological research (Schneeweiss, 2007). While these necessary efforts continue, science-based skepticism of non-randomized studies remains highly appropriate, even though unthinking rejection may properly belong to the past.

CLINICAL EFFECTIVENESS RESEARCH: THE PROMISE OF REGISTRIES

Alan J. Moskowitz, M.D., and Annetine C. Gelijns, Ph.D.

Mount Sinai School of Medicine

Introduction

In comparison to other sectors of the economy, modern health care is a technologically highly innovative field. New drugs, devices, procedures, and behavioral interventions continuously emerge from the research and development (R&D) pipeline and then get established into clinical practice. The R&D process in medicine generally involves “premarketing” clinical trials, particularly in the case of drugs, biological products, and devices.

The development process of these new technologies as well as procedures, however, does not end with their introduction and adoption into practice. Over time as these interventions diffuse into widespread use, the medical profession tends to further modify and extend their application—by finding new populations, indications, and long-term effects. These dynamic patterns of adaptation and evolution underscore the importance of measuring the health and economic outcomes of clinical interventions in everyday practice and drive the renewed interest in developing a robust clinical effectiveness research enterprise.

There are various ways of measuring the clinical effectiveness of diagnostic and therapeutic interventions, including so-called pragmatic randomized trials, large administrative dataset analyses, and observational studies using clinical registries (Gliklick and Dreyer, 2007; Tunis et al., 2003). In this paper, we focus on the role and potential of registries in capturing information about “real-world” health and economic outcomes. We also highlight their potential value for assessing quality of care; for instance, through studies of risk-adjusted volume–outcome relationships. Finally, we address an often under-examined benefit of clinical registries; that is, their potential to accumulate information that, in turn, can increase the efficiency of randomized clinical trials, and premarketing studies in general. As such, clinical registries can be an important tool to help to guide decision making for patient care and health policy.

Obviously, clinical registries have their methodological and practical vulnerabilities, and we will review some analytical, organizational, and financial measures to strengthen them. In particular, we will discuss the incentives of stakeholders to support these data collection efforts and new models of public-private partnerships. But first, we will provide a more in-depth rationale for investing in clinical registries, most of which can be found in the dynamics of the medical innovation process itself.

Importance of Downstream Innovation and Learning

Over time, we have seen a move toward more rigorous and well-controlled premarketing studies for all therapeutic and diagnostic modalities. Despite this move, there are practical constraints that limit how much we will learn in the premarketing setting. Randomized trials involve a sampling process and typically minimize heterogeneity of the target population to facilitate the efficient testing of hypotheses. Clinical trials have limited timeframes and usually are underpowered for secondary end-points. Moreover, the skill of the participating centers may be specialized, raising questions about generalizability of trial results to a broader set of healthcare institutions and practitioners. Regulatory (premarket approval) and clinical decisions, therefore, are made in the context of uncertainty and limited information about the ultimate outcomes of an intervention. Dispelling such uncertainties requires measuring outcomes in widespread clinical use (Gelijns et al., 2005).

The focus on general practice allows us to capture outcomes of a broader set of providers and to detect long-term and low-frequency events, such as serious adverse events. In addition to the spreading of a new technology throughout the healthcare system, and its attendant change in outcomes, clinical practice is the locus of much downstream learning and innovation. First, after a new technology is introduced into practice, the medical profession typically expands and shapes the targeted patient population within a particular disease category. A case in point is coronary artery bypass grafting (CABG) surgery. Only 4 percent of patients who were treated with such surgery a decade after its introduction would have met the eligibility criteria of the trials that determined its initial value (Hlatky et al., 1984). These trials excluded the elderly, women, and patients with a range of co-morbidities, all of whom are recipients of CABG surgery today.

Second, the process of postmarketing innovation also includes the discovery of totally new, and often unexpected, indications of use. The history of pharmaceutical innovation is replete with such discoveries (see Table 4-1). A case in point is the alpha blockers, which were first introduced for hypertension and only 20 years later were found to be important agents in the treatment of benign prostatic hyperplasia. We found that the discovery of such new indications of use is an important public health and economic phenomenon, accounting for nearly half of the overall market for blockbuster drugs (Gelijns et al., 1998).

TABLE 4-1 Original and New Indications for Pharmaceuticals

| Drug | Original Indications | New Indications |
|---|---|---|
| Beta-blockers | Angina pectoris, arrhythmias | Hypertension, anxiety, migraine headaches |
| Aspirin | Pain | Stroke, coronary artery disease |
| Anticonvulsants | Seizure disorders | Mood stabilization |
| Alpha blockers | Hypertension | Benign prostatic hyperplasia |
| RU-486 | Abortive agent | Endometriosis, fibroid tumors, benign brain tumors |
| Fluoxetine (Prozac) | Depression | Bulimia, obsessive compulsive disorder |
| Thalidomide | Anti-emetic and tranquilizer | Leprosy; graft-vs-host, Behçet’s, AIDS, ulcers |

A third dimension of downstream learning is that physicians gain further know-how about integrating a technology into the overall management of particular patients. Consider, for example, left ventricular assist devices (LVADs). These devices were FDA approved in 1998 to support end-stage heart failure patients awaiting cardiac transplant as a bridge to transplantation. Subsequently, LVADs were approved for marketing by the FDA in 2002 and for reimbursement by Medicare in 2003 for those patients who were ineligible for transplantation. This indication is also referred to as “destination therapy,” or intended life-long implantation of the LVAD. Whereas LVAD destination therapy was shown to provide a clear survival, functional status, and quality-of-life benefit over medical management, LVADs were plagued by significant serious adverse events, especially bleeding, infections, and thromboembolic events (Rose et al., 2001). Following approval of the device, the expanding experience of clinicians further highlighted shortcomings in its use and safety, which led to subsequent incremental device improvements by the manufacturing community. At the same time, clinicians improved their management of LVAD patients by modifying the operative procedure, developing new ways to prevent driveline infections, and changing anticoagulation regimens, among others. These changes in patient management techniques led to a reduction in the adverse event profile associated with the therapy. Beyond changing clinical outcomes, these changes affected economic outcomes as well. Over time, for example, there has been a 25 percent reduction in the length of stay for the implant hospitalization, the most costly part of the care process, from an average of 44 days in the pivotal FDA trial (with a mean cost of $210,187) to 33 days within 3 years of dissemination (Miller et al., 2006; Oz et al., 2003).
The dissemination to the broader healthcare system, and the changes in technologies, patients, and management techniques over time, argue for ongoing monitoring of health outcomes.

What Can We Learn from Registries?

Clinical registries, as mentioned, are an important means to capture use and outcomes in everyday practice. A recent Agency for Healthcare Research and Quality (AHRQ) report defined registries as an “organized system using observational study methods to collect uniform data to evaluate specified outcomes for a population defined by a particular disease, condition or exposure, and that serves a predetermined scientific, clinical or policy purpose” (Gliklick and Dreyer, 2007). Table 4-2 depicts some registries and their different objectives.

TABLE 4-2 Existing Registry Content and Sponsor Descriptions

| Name | Content | Sponsor |
|---|---|---|
| INTERMACS | National registry of patients receiving mechanical circulatory support device therapy to treat advanced heart failure. (Membership required for Medicare clinical site approval) | Joint effort: National Heart, Lung and Blood Institute (NHLBI), Centers for Medicare & Medicaid Services (CMS), and FDA |
| Cardiac Surgery Reporting System | Detailed information on all CABG surgeries performed in New York State for tracking provider performance. (Reporting mandated for all hospitals in New York State performing CABG) | New York State Department of Health |
| ICD Registry (Implantable Cardioverter Defibrillator Registry) | Detailed information on implantable cardioverter defibrillator implantations. (Meets CMS coverage with evidence development policy) | The Heart Rhythm Society & American College of Cardiology Foundation |
| ICGG (International Collaborative Gaucher Group) | Information on clinical characteristics, natural history, and long-term treatment outcomes of patients with Gaucher Disease, a rare disorder. | Genzyme Corporation |
| CASES-PMS (Carotid Artery Stenting with Emboli Protection Surveillance Post-Marketing Study) | Evaluation of outcomes of carotid artery stenting in periapproval setting. | Cordis Corporation |
| Alpha-1 Antitrypsin Deficiency Research Registry | Registry of patients with alpha-1 antitrypsin deficiency for purposes of recruiting them to clinical trials. | Alpha-1 Foundation |

An important objective of registries is to collect data on long-term outcomes and rare adverse events. This is especially the case where outcomes and adverse events take a long time to manifest themselves; a dramatic example can be found in diethylstilbestrol (DES), where clear cell carcinoma of the vagina only appeared in daughters of the women taking the drug to prevent premature birth. The realization of its side effects subsequently led to a registry for those exposed to DES.

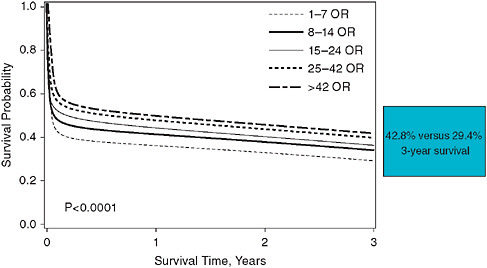

FIGURE 4-3 Survival after open ruptured AAA by hospital volume quintiles (1995–2004, Medicare, n = 41,969).

SOURCE: Reprinted from the Journal of Vascular Surgery, Vol. 48/No. 5, Egorova et al. 2008. National outcomes for the treatment of ruptured abdominal aortic aneurysm: Comparison of open versus endovascular repairs, pp. 1092-1100, with permission from Elsevier.

Another important use of registries is to gather information on the outcomes achieved as a technology spreads to a wide range of practitioners and institutions. As such, registries can measure the quality of care provided. Administrative datasets, which are less costly in terms of data collection, also lend themselves to this purpose. Using the Medicare dataset, for example, we found a significant volume–outcome relationship for open repair in about 42,000 ruptured abdominal aortic aneurysm (AAA) patients treated between 1995 and 2004 (Figure 4-3; Egorova et al., 2008). AAA patients now increasingly receive an endovascular repair, which was approved for reimbursement in 2000. The same volume–outcome relationship holds for high-risk AAA patients treated by an endovascular approach between 2000 and 2006 (Table 4-3). Again, low volume, defined as fewer than 7 procedures per year, is an independent predictor of mortality, increasing the odds of death by 45 percent (Egorova et al., 2009).

In comparison to administrative datasets, however, registries are able to offer the clinical details needed to create richer statistical models that better characterize patient risk factors and process of care variables to predict outcomes. To expand on our aneurysm case, for example, a clinical registry would have been able to provide important information about the anatomical features of the aneurysm, which are not captured in administrative datasets and yet may have an important influence on outcomes. Moreover, in administrative datasets, it is often hard to distinguish between baseline co-morbidities and adverse events (e.g., myocardial infarction or heart failure) during the hospitalization of interest. Registries do not have this problem, and if they capture the whole population they are an important tool for measuring quality of care. If registries are used to measure quality of care among providers, then it is obviously important that they appropriately adjust for differences in the risk of the populations among these providers, and risk-adjustment techniques are improving (see below).

TABLE 4-3 Endovascular Repair AAA Patients (2000–2004, Medicare, n = 39,815)

| Risk Factor | Parameter Estimate | Odds Ratio and 95% CL | P-Value |
|---|---|---|---|
| Renal Failure w/ Dialysis | 1.95 | 7.06 [5.23–9.53] | <.0001 |
| LE Ischemia | 1.27 | 3.55 [2.65–4.75] | <.0001 |
| Age ≥85 | 1.13 | 3.10 [1.57–2.37] | <.0001 |
| Liver Disease | 0.93 | 2.52 [1.54–4.12] | 0.0002 |
| CHF | 0.80 | 2.23 [1.89–2.64] | <.0001 |
| Renal Failure w/o Dialysis | 0.65 | 1.91 [1.45–2.51] | <.0001 |
| Age 80–84 | 0.65 | 1.92 [1.56–2.36] | <.0001 |
| Female | 0.52 | 1.68 [1.42–1.99] | <.0001 |
| Neurological | 0.45 | 1.59 [1.29–1.94] | 0.0001 |
| Chronic Pulmonary | 0.45 | 1.57 [1.35–1.83] | <.0001 |
| Hospital Annual Vol <7 | 0.37 | 1.45 [1.18–1.80] | 0.0005 |
| Age 75–79 | 0.34 | 1.40 [1.14–1.71] | 0.001 |
| Surgeon EVAR Vol <3 | 0.26 | 1.30 [1.04–1.62] | 0.002 |

NOTE: AAA = abdominal aortic aneurysm; CL = confidence limit.

SOURCE: Reprinted from the Journal of Vascular Surgery, Vol. 50/Issue 6, Egorova, Giacovelli et al. 2009. Defining high-risk patients for endovascular aneurysm repair, pp. 1271-1279, with permission from Elsevier.
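The parameter and odds ratio columns of Table 4-3 appear to be related by exponentiation, as is standard for logistic regression. The short Python sketch below assumes that the parameter column holds logistic regression coefficients (log odds), which the table does not state explicitly; the function names are illustrative only. It recomputes a few of the reported odds ratios and shows how an odds ratio of 1.45 for low hospital volume corresponds to the 45 percent increase cited in the text.

```python
import math

# (risk factor, parameter, reported odds ratio), transcribed from Table 4-3.
ROWS = [
    ("Renal Failure w/ Dialysis", 1.95, 7.06),
    ("Hospital Annual Vol <7", 0.37, 1.45),
    ("Surgeon EVAR Vol <3", 0.26, 1.30),
]


def odds_ratio(parameter):
    """Convert a logistic regression coefficient (log odds) to an odds ratio."""
    return math.exp(parameter)


def percent_increase_in_odds(ratio):
    """Express an odds ratio as a percentage change in the odds."""
    return (ratio - 1.0) * 100.0


for name, parameter, reported in ROWS:
    computed = odds_ratio(parameter)
    print(f"{name}: exp({parameter}) = {computed:.2f} (reported {reported})")

print(f"Low hospital volume: {percent_increase_in_odds(1.45):.0f} percent higher odds")
```

Small differences between the recomputed and reported values are expected, because the published parameters are rounded to two decimal places.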

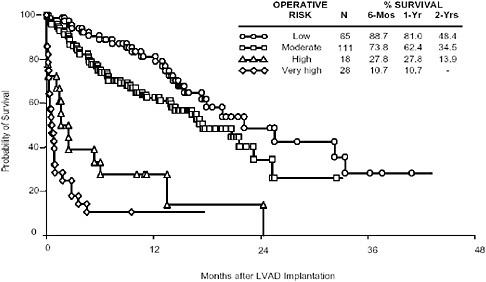

Just as registries are able to capture a broadening of providers, they also can capture the use and outcomes of a technology in a broader set of patients. To return to the LVAD case, the pivotal trial for destination therapy patients demonstrated a significant survival and quality-of-life benefit of the HeartMate (HM) XVE LVAD over optimal medical management. In fact, Kaplan-Meier survival analysis showed a 48 percent reduction in the hazard of all-cause mortality in the LVAD group (hazard ratio = 0.52; 95 percent confidence interval, 0.34–0.78; p = 0.001). In the 2 years following CMS approval for reimbursement (2003), an analysis of an industry-sponsored postmarketing registry showed that the overall survival rate of LVAD patients remained similar to that observed in the trial. However, a multivariable regression analysis of the larger population captured by the registry (n = 262) found that baseline risk factors, such as poor nutrition, hematological abnormalities, and markers of end-organ dysfunction, distinguish patient risk groups (Lietz et al., 2007). Stratification of destination therapy candidates into low, medium, high, and very high risk on the basis of a risk score corresponded with very different 1-year survival rates (81 percent, 62 percent, 28 percent, and 11 percent, respectively; see Figure 4-4). The broader experience of clinical registries, as such, can provide important information to stratify patients on the basis of baseline risk factors and thereby help to refine patient selection criteria.

FIGURE 4-4 Probability of survival after LVAD implantation.

Finally, an important function of clinical registries is to provide comparative effectiveness information. In New York State, for example, registries exist for all patients undergoing CABG surgery (Cardiac Surgery Reporting System) or interventional cardiac procedures (Percutaneous Coronary Intervention Reporting System). Over time, numerous randomized trials have compared CABG surgery to percutaneous transluminal coronary angioplasty (PTCA). However, both procedures have been characterized by a high level of ongoing incremental change (e.g., most trials pre-dated the use of stents) as well as ongoing changes in patient selection criteria, raising questions about the clinical effectiveness of these approaches in particular patient groups. An analysis of nearly 60,000 patients captured by the above-mentioned New York State registries showed that for patients with two or more diseased coronary arteries, CABG is associated with higher adjusted rates of survival than stenting (Hannan et al., 2005).

Strengthening Registries

Enhancing the value of registries for clinical effectiveness research requires obtaining “trial quality” data at low cost and low burden, and here we will review some opportunities for strengthening data elements and data collection.

Target Population

The target patient population needs to be clearly defined, and data should capture its characteristics in terms of medical history and severity of illness. In the case of LVADs, for example, the National Institutes of Health (NIH) provided financial support for the creation of a registry called INTERMACS, with close involvement of CMS, FDA, the clinical community, and industry. This registry targets patients who receive durable mechanical circulatory assist devices (either for bridge to transplantation or destination therapy). The data elements were designed to capture important baseline characteristics of LVAD patients and have resulted in patient profiles that correlate with mortality risk and are useful for clinical communication and treatment planning (INTERMACS, 2008). Even though registries are more apt to capture broader populations than randomized efficacy trials, there is always a risk that patients are entered selectively. Statewide hospital discharge datasets (such as SPARCS in New York State) may offer a means for monitoring the completeness of patient population capture. Linking payment for patient care to data entry is another way to improve capture. By participating in INTERMACS, for example, clinical centers can meet the CMS and Joint Commission on Accreditation of Healthcare Organizations (JCAHO) reporting requirements necessary for certification, which stipulate that centers submit data to a nationally audited registry that tracks lifetime outcomes of all destination LVAD patients (INTERMACS, 2008).

Outcomes

In terms of outcome measures, mortality is a relatively unambiguous end-point, but adverse events (AEs) require standardization of definitions that should not be unique to a registry but should be more generally accepted in the clinical community. INTERMACS, for example, offered much needed standardization of AE definitions and facilitated comparisons of different circulatory support devices, which until recently defined important events, including stroke and major bleeding, differently. Registries can improve data quality by adjudicating adverse events and implementing a monitoring process to ensure data integrity. Functional status and quality of life are critical end-point measures, but they are difficult to capture and analyze longitudinally, even in randomized trials. As with randomized trials, however, using instruments that are self-administered or administered by phone, such as the Rankin scale in stroke, can increase feasibility. For some diseases, such as heart failure, there is a correlation between patient-derived measures of functional status and hospitalizations, which facilitates using hospitalizations as a proxy measure.

Control Group

Critical for measuring comparative effectiveness is defining the control group, which optimally will be internal to the registry being analyzed. While device registries, for example, may facilitate comparing the effectiveness of alternative devices, such registries are unlikely to provide the medical therapy control group needed for evaluating new indications for device use. Such questions are better addressed in a broader disease-based registry; for example, defining the appropriate role of LVADs in managing slightly less sick heart failure patients would require a comparison to patients receiving optimal medical management and expansion of the LVAD registry to an overall heart failure registry. One weakness of observational studies (i.e., nonrandomized studies) is that clinical judgment is the basis for treatment assignment, so the clinical characteristics of the comparison groups may differ substantially, affecting the ability to make fair comparisons. Rigorous techniques to adjust for these differences, such as propensity score-based analyses, have become more common over time. However, our ability to create such adjustment models requires that we have an understanding of the prognostic factors that affect treatment outcomes. With newer forms of treatment, this is not always the case. If there is very rapid technological change, expansion to major new patient populations, and/or little knowledge of prognostic factors, observational studies may no longer be sufficient and randomized trials may be in order.
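To make the idea of propensity score adjustment concrete, the following Python sketch works through a simplified example on synthetic data. Everything in it is an assumption made for illustration: the single "severity" covariate, the simulated treatment and mortality probabilities, the assumed true treatment effect, and the choice of stratifying on propensity score quintiles. It is not the method used in any of the studies cited here. In the simulation, sicker patients are more likely to be treated, so a naive comparison understates the benefit of treatment, while stratifying on the estimated propensity score recovers an estimate closer to the assumed true effect.

```python
import math
import random

TRUE_EFFECT = -0.10  # assumed true change in mortality risk due to treatment


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def simulate(n, seed=0):
    """Synthetic registry: sicker patients are treated more often and die more often."""
    rng = random.Random(seed)
    severity, treated, died = [], [], []
    for _ in range(n):
        x = rng.random()  # hypothetical severity score in [0, 1)
        t = 1 if rng.random() < sigmoid(-1.0 + 2.0 * x) else 0
        y = 1 if rng.random() < 0.15 + 0.50 * x + TRUE_EFFECT * t else 0
        severity.append(x)
        treated.append(t)
        died.append(y)
    return severity, treated, died


def fit_logistic(xs, ts, iterations=15):
    """Fit P(treated | x) = sigmoid(a + b * x) by Newton-Raphson."""
    a, b = 0.0, 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, t in zip(xs, ts):
            p = sigmoid(a + b * x)
            w = p * (1.0 - p)
            g0 += t - p          # gradient, intercept
            g1 += (t - p) * x    # gradient, slope
            h00 += w             # entries of the 2x2 information matrix
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        a += (h11 * g0 - h01 * g1) / det
        b += (h00 * g1 - h01 * g0) / det
    return a, b


def mean(values):
    return sum(values) / len(values)


def naive_effect(ts, ys):
    """Unadjusted difference in mortality, treated minus untreated."""
    return (mean([y for t, y in zip(ts, ys) if t == 1])
            - mean([y for t, y in zip(ts, ys) if t == 0]))


def stratified_effect(scores, ts, ys, n_strata=5):
    """Average the within-stratum differences across propensity score strata."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    size = len(order) // n_strata
    total, weight = 0.0, 0
    for s in range(n_strata):
        end = (s + 1) * size if s < n_strata - 1 else len(order)
        idx = order[s * size:end]
        treated = [ys[i] for i in idx if ts[i] == 1]
        control = [ys[i] for i in idx if ts[i] == 0]
        if treated and control:  # skip strata lacking one of the groups
            total += (mean(treated) - mean(control)) * len(idx)
            weight += len(idx)
    return total / weight


severity, treated, died = simulate(10_000)
a, b = fit_logistic(severity, treated)
scores = [sigmoid(a + b * x) for x in severity]
naive = naive_effect(treated, died)
adjusted = stratified_effect(scores, treated, died)
print(f"assumed true effect: {TRUE_EFFECT:+.3f}")
print(f"naive estimate:      {naive:+.3f}")
print(f"adjusted estimate:   {adjusted:+.3f}")
```

The adjustment works here only because the one factor driving both treatment choice and outcome is measured. As the text notes, when important prognostic factors are unknown or unrecorded, no propensity model can balance them.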

Data Collection Burden and Cost

Improving the efficiency of data collection for registries is crucially dependent on advances in the use of informatics. With the growth and improvement of electronic health records, institutions have the capability of automated transfer of patient, process of care, and outcome data into registries, which may address some of the data collection and cost burden. In the same manner, administrative datasets can be linked to patient records, which would improve their usefulness for clinical effectiveness studies.

The Role of Registries in Improving the Clinical Trials Process

An under-examined benefit of registries may be their potential to increase the efficiency of conducting RCTs. First, registry data can provide a prior estimate of the success distribution in the control group that gets updated by prospectively collected data in a randomized trial (through Bayesian analysis), or concurrent control data can be directly pooled with randomized data. The benefit of either approach could be to allow a higher likelihood of randomization into the experimental group, say a 3:1 or 4:1 randomization ratio (Neaton et al., 2007). This is especially important when there are strong physician and patient preferences for an experimental therapy. This is often the case with major surgical interventions for life-threatening diseases and may constitute a major deterrent to enrollment in a randomized trial.
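One simple way to picture the Bayesian approach is a beta-binomial model, sketched below in Python. This is an illustration under stated assumptions, not the analysis described by Neaton et al.: the registry and trial counts are hypothetical, the `discount` factor that down-weights registry patients relative to randomized patients is an assumed design choice, and the class and function names are invented for the example. The sketch builds a prior for the control group success rate from registry data, updates it with a small randomized control arm, and shows how a 3:1 allocation splits a fixed number of patients.

```python
from dataclasses import dataclass


@dataclass
class BetaDist:
    """Beta distribution summarizing belief about a success probability."""
    alpha: float
    beta: float

    @property
    def mean(self):
        return self.alpha / (self.alpha + self.beta)

    def update(self, successes, failures):
        """Posterior after observing new binomial data (conjugate update)."""
        return BetaDist(self.alpha + successes, self.beta + failures)


def registry_prior(successes, failures, discount=0.5):
    """Prior from registry counts, starting from a uniform Beta(1, 1).

    `discount` (between 0 and 1) is an assumed factor that gives each
    registry patient less weight than a randomized patient.
    """
    return BetaDist(1.0 + discount * successes, 1.0 + discount * failures)


def allocate(n_patients, ratio):
    """Split patients ratio:1 between the experimental and control arms."""
    n_control = round(n_patients / (ratio + 1))
    return n_patients - n_control, n_control


# Hypothetical numbers: 200 registry controls, 120 of them successes.
prior = registry_prior(successes=120, failures=80)

# A 3:1 randomization of 100 patients leaves a small concurrent control arm.
n_experimental, n_control = allocate(100, ratio=3)

# Hypothetical trial result: 14 successes among the randomized controls.
posterior = prior.update(successes=14, failures=n_control - 14)

print(f"experimental arm: {n_experimental}, control arm: {n_control}")
print(f"prior mean control success rate:     {prior.mean:.3f}")
print(f"posterior mean control success rate: {posterior.mean:.3f}")
```

The smaller the discount factor, the more the posterior is driven by the randomized controls, which is one way to express caution about differences between registry and trial populations.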

Registries also may offer a means to eliminate the need for collecting a new control group altogether, which has relevance to premarketing efficacy trials for orphan diseases and small populations. Here registries can provide an empirically derived performance goal or objective performance criterion to facilitate a single arm study. The use of LVADs for bridge to transplantation, for instance, is a so-called orphan indication, with around 500 patients being implanted in the United States annually. INTERMACS is now providing data to establish a performance goal in terms of “survival to transplantation or being alive at 180 days and listed for transplantation” for newer generation devices that are seeking approval for use in Bridge-to-Transplant (BTT) patients. More recently, INTERMACS has been the source for providing a matched control arm.
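A single-arm study judged against a registry-derived performance goal can be analyzed, in its simplest form, with an exact one-sided binomial test. The Python sketch below illustrates that logic only. The performance goal of 65 percent, the sample size, the observed counts, and the significance level are all hypothetical, and an actual device trial would follow a prespecified statistical plan that may differ considerably.

```python
from math import comb


def binomial_upper_tail(successes, n, p0):
    """P(X >= successes) when X ~ Binomial(n, p0)."""
    return sum(
        comb(n, k) * p0 ** k * (1.0 - p0) ** (n - k)
        for k in range(successes, n + 1)
    )


def meets_performance_goal(successes, n, goal, alpha=0.05):
    """True if the observed success rate is significantly above the goal."""
    return binomial_upper_tail(successes, n, goal) < alpha


# Hypothetical single-arm study: 100 patients, with success defined as
# survival to transplantation or being alive at 180 days and listed.
GOAL = 0.65  # assumed registry-derived performance goal
for observed in (66, 80):
    p_value = binomial_upper_tail(observed, 100, GOAL)
    verdict = "meets" if meets_performance_goal(observed, 100, GOAL) else "does not meet"
    print(f"{observed}/100 successes: one-sided p = {p_value:.4f}, {verdict} the goal")
```

Because the comparison is against a fixed number rather than a concurrent control group, the credibility of such a study rests on how well the registry population that produced the goal matches the patients enrolled.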

Finally, the existence of a robust postmarketing infrastructure can balance the acceleration of premarketing trials. This is especially important if drugs or devices are approved under the FDA fast track mechanism. For example, of the 60 cancer drugs approved between 1995 and 2004, a third received accelerated approvals based on surrogate measures of clinical benefit (Roberts and Chabner, 2004).

Concluding Observations

In conclusion, the often underappreciated dynamics of medical innovation, where much of innovation and downstream learning takes place in actual clinical practice itself, argue for capturing the changing outcomes throughout the lifecycle of medical interventions. Registries offer the means to do so, and recently, new opportunities for addressing their traditional weaknesses have emerged in the realm of informatics, analytical techniques, and new models of financing. With the expansion and enhancement of electronic health records comes the possibility of using the clinical encounter to directly populate research registries and decrease the burden of primary data collection. Moreover, efforts to address the traditional weaknesses of observational registry-based studies have led to the increased use of propensity score techniques to adjust for baseline differences between nonrandomized comparison groups. Finally, an important