INTRODUCTION AND OVERVIEW

The demand for better evidence to guide healthcare decision making is increasing rapidly for a variety of reasons, including the adverse consequences of care administered without adequate evidence, emerging insights into the proportion of healthcare interventions that are unnecessary, recognition of the frequency of medical errors, heightened public awareness and concern about the very high costs of medical care, the burden on employers and employees, and the growing proportion of health costs paid out of pocket (Fisher and Wennberg, 2003; Fisher et al., 2003a, 2003b; IOM, 2000, 2001, 2008a; McGlynn et al., 2003; Wennberg et al., 2002). Although nearly $2.5 trillion was spent in 2009 on health and medical care in the United States, only a very small portion of that amount, perhaps less than one tenth of 1 percent, was devoted to learning what works best in health care, for whom, and under what circumstances.

To improve the effectiveness and value of the care delivered, the nation needs to build its capacity for ongoing study and monitoring of the relative effectiveness of clinical interventions and care processes through expanded trials and studies, systematic reviews, innovative research strategies, and clinical registries, as well as improving its ability to apply what is learned from such study through the translation and provision of information and decision support. Several recent initiatives have proposed the development of an entity to support expanded study of the comparative effectiveness of interventions. To inform policy discussions on how to meet the demand for more comparative effectiveness research (CER) as a means of improving the effectiveness and value of health care, the Institute of Medicine (IOM) Roundtable on Value & Science-Driven Health Care convened a workshop on July 30–31, 2008, titled Learning What Works: Infrastructure Required for Comparative Effectiveness Research. Box S-1 describes the issues that motivated the meeting’s discussions: the substantial and growing interest in activities and approaches related to CER; the lack of coordination of key activities, such as the selection and design of studies, synthesis of existing evidence, methods innovation, and translation and dissemination of CER information; shortfalls and widening gaps in the workforce needed in all areas of CER; the opportunities presented by the recent calls for expanded resources for work on the comparative effectiveness of clinical interventions; the growing appreciation of the infrastructure needed to support this work; and the need for a trusted, common venue to identify and characterize the need categories, begin to estimate the shortfalls, consider approaches to addressing the shortfalls, and identify priority next steps.

BOX S-1

Issues Motivating the Discussion

- Substantial demand for greater insights into the comparative clinical effectiveness of clinical interventions and care processes to improve the effectiveness and value of health care.

- Expanded interest and activity in the work needed—e.g., comparative effectiveness research, systematic reviews, innovative research strategies, clinical registries, coverage with evidence development.

- Currently fragmented and largely uncoordinated selection of studies, study design and conduct, evidence synthesis, methods validation and improvement, and development and dissemination of guidelines.

- Expanding gap in workforce with skills to develop data sources and systems, design and conduct innovative studies, translate results, and guide application.

- Opportunities presented by the attention of recent initiatives and the increasing possibility of developing an entity and resources for expanded work on the comparative effectiveness of clinical interventions.

- Growing appreciation of the importance of assessing the infrastructure needed for this work—e.g., workforce needs, data linkage and improvement, new methodologies, research networks, technical assistance.

- Desirability of a trusted, common venue to identify and characterize the need categories, begin to estimate the shortfalls, consider approaches to addressing the shortfalls, and identify priority next steps.

The goal of the workshop was to clarify the elements and nature of the needed capacity, solicit quantitative and qualitative assessments of the needs, and characterize them in a fashion that will facilitate engagement of the issues by policy makers. Two assumptions guided the discussions but were not explored as part of the workshop: resources will be available to expand work on the comparative effectiveness of medical interventions, and, given recent public discourse on the need for a stronger focus on the work, a designated entity would be developed with a formal charge to coordinate the expanded work.

The workshop gathered leading practitioners in health policy, technology assessment, health services research, health economics, information technology (IT), and health professions education and training to explore, through invited presentations, the current and future capacity needed to generate new knowledge and evidence about what works best, including skills and workforce, data linkage and improvement, study coordination and result dissemination, and research methods innovation. Participants explored, in both qualitative and quantitative terms, the nature of the work required, the IT and integrative vehicles required, the skills and training programs required, the priorities to be considered, the role of public–private partnerships, and the strategies for immediate attention while considering the long-term needs and opportunities. Through the course of the workshop, a number of common themes and implications emerged. These are indicated below, along with a number of possible follow-up actions identified for Roundtable consideration.

Since the meeting, three events have occurred with significant implications for the infrastructure necessary for comparative effectiveness research: (1) the American Recovery and Reinvestment Act of 2009 (ARRA) included $1.1 billion for the conduct of CER; (2) formal assessments by the IOM and the federal government have recommended priorities for such research; and (3) the Patient Protection and Affordable Care Act of 2010 (ACA) established an independent Patient-Centered Outcomes Research Institute (PCORI). See Appendixes C, D, and E for additional background. Accordingly, some of the information has been updated as appropriate to bring the text current with 2011 circumstances.

Comparative Effectiveness Research and the Roundtable on Value & Science-Driven Health Care

The IOM’s Roundtable on Value & Science-Driven Health Care provides a trusted venue for key stakeholders to work cooperatively on innovative approaches to the generation and application of evidence that will drive improvements in the effectiveness and efficiency of medical care in the United States. Participants seek the development of a learning health system that enhances the availability and use of the best evidence for the collaborative healthcare choices of each consumer and healthcare professional, that drives the process of discovery as a natural outgrowth of patient care, and that ensures innovation, quality, safety, and value in health care. As leaders in their fields, Roundtable members work with their colleagues to identify issues not being adequately addressed, determine the nature of the barriers and possible solutions, and set priorities for action. They marshal the energy and resources of the sectors represented on the Roundtable to work for sustained public–private cooperation for change.

This work is focused on the three major dimensions of the challenge:

- accelerating progress toward the long-term vision of a learning health system, in which evidence is both applied and developed as a natural product of the care process,

- expanding the capacity to meet the acute, near-term need for evidence of comparative effectiveness to support medical care that is maximally effective and produces the greatest value,

- improving public understanding of the nature of evidence, the dynamic character of evidence development, and the importance of insisting on medical care that reflects the best evidence.

Roundtable members have set a goal that by the year 2020, 90 percent of clinical decisions will be supported by accurate, timely, and up-to-date clinical information and will reflect the best available evidence. To achieve this goal, Roundtable members and their colleagues work to identify priorities for action on those key issues in health care where progress requires cooperative stakeholder engagement. Central to these efforts is the Learning Health System series of workshops and publications that collectively characterize the key elements of a healthcare system that is designed to generate and apply the best evidence about the healthcare choices of patients and providers as well as identify barriers to the development of such a system and opportunities for progress.

Each meeting is summarized in a publication available through the National Academies Press. Workshops in this series include the following:

- The Learning Healthcare System (July 20–21, 2006)

- Judging the Evidence: Standards for Determining Clinical Effectiveness (February 5, 2007)

- Leadership Commitments to Improve Value in Healthcare: Toward Common Ground (July 23–24, 2007)

- Redesigning the Clinical Effectiveness Research Paradigm: Innovation and Practice-Based Approaches (December 12–13, 2007)

- Clinical Data as the Basic Staple of Health Learning: Creating and Protecting a Public Good (February 28–29, 2008)

- Engineering a Learning Healthcare System: A Look to the Future (April 28–29, 2008)

- Learning What Works: Infrastructure Required for Learning Which Care is Best (July 30–31, 2008)

- Value in Health Care: Accounting for Cost, Quality, Safety, Outcomes, and Innovation (November 17–18, 2008)

- The Healthcare Imperative: Lowering Costs and Improving Outcomes (May, July, September, December, 2009)

- Digital Infrastructure for the Learning Health System: The Foundation for Continuous Improvement in Health and Health Care (July, September, October, 2010)

This publication summarizes the proceedings of the seventh workshop in the Learning Health System series, which focused on the infrastructure needs—e.g., methods, coordination capacities, data resources and linkages, workforce—for developing an expanded and efficient national capacity for CER. A synopsis of the key points from each of the sessions is included in this chapter, with more detailed information on session presentations and discussions found in the chapters that follow. Sections of the workshop summary not specifically attributed to an individual are based on the presentations, background papers, and discussions associated with the workshop, and reflect the views of this publication’s rapporteurs, not those of the IOM Roundtable on Value & Science-Driven Health Care.

Day 1 featured two keynote speakers who provided a vision for developing an infrastructure that can contribute to an evidence base of what works best for whom, as well as a sense of some of the potential returns from health care driven by evidence (Chapter 1), and presentations by speakers asked to characterize the nature of the work (Chapter 2), the information networks (Chapter 3), and the talent (Chapter 4) needed to carry out that vision. Day 2 featured discussions focused on identifying priority items for implementation to meet current shortfalls and opportunities to build upon existing public–private partnership efforts (Chapter 5). Chapter 6 provides a summary of the final session’s discussion to outline key elements of a roadmap for progress, suggest some “quick hits” for immediate implementation, and identify opportunities to build needed support; this chapter also highlights common themes from the meeting’s discussions and suggestions on opportunities for follow-up actions by the Roundtable. An overview of the topics discussed in specific manuscripts is provided in Table S-1.

TABLE S-1 Overview of the Specific Aspects of Comparative Effectiveness Research (CER) Infrastructure Addressed in This Publication’s Manuscripts

| Chapter | Manuscript and Author(s) | CER Research Methods and Settings | Clinical Data Development and Use | Health Information Technology | Evidence Review and Synthesis | Coordination and Dissemination | Workforce Education and Training | International CER Efforts |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | The Nation’s Need for Evidence on Comparative Effectiveness in Health Care: Learning What Works Best (J. Michael McGinnis et al.) | | | | | | | |
| | A Vision for the Capacity to Learn What Care Works Best (Mark B. McClellan) | | | | | | | |
| | The Potential Returns from Evidence-Driven Health Care (Gail R. Wilensky) | | | | | | | |
| 2 | The Cost and Volume of Comparative Effectiveness Research (Erin Holve and Patricia Pitman) | | | | | | | |
| | Intervention Studies That Need to Be Conducted (Douglas B. Kamerow) | | | | | | | |
| | Clinical Data Sets That Need to Be Mined (Jesse A. Berlin and Paul E. Stang) | | | | | | | |
| | Knowledge Synthesis and Translation That Need to Be Applied (Richard A. Justman) | | | | | | | |
| | Methods That Need to Be Developed (Eugene H. Blackstone et al.) | | | | | | | |
| | Coordination and Technical Assistance That Need to Be Supported (Jean R. Slutsky) | | | | | | | |
| 3 | Electronic Health Records: Needs, Status, and Costs for U.S. Healthcare Delivery Organizations (Robert H. Miller) | | | | | | | |
| | Data and Information Hub Requirements (Carol C. Diamond) | | | | | | | |
| | Integrative Vehicles Required for Evidence Review and Dissemination (Lorne A. Becker) | | | | | | | |
| 4 | Comparative Effectiveness Workforce—Framework and Assessment (William R. Hersh et al.) | | | | | | | |
| | Toward an Integrated Enterprise—The Ontario, Canada, Case (Sean R. Tunis et al.) | | | | | | | |
| 5 | Information Technology Platform Requirements (Mark E. Frisse) | | | | | | | |
| | Data Resource Development and Analysis Improvement (T. Bruce Ferguson, Jr., and Ansar Hassan) | | | | | | | |
| | Practical Challenges and Infrastructure Priorities for Comparative Effectiveness Research (Daniel E. Ford) | | | | | | | |
| | Transforming Health Professions Education (Benjamin K. Chu) | | | | | | | |
| | Building the Training Capacity for a Health Research Workforce of the Future (Steven A. Wartman and Claire Pomeroy) | | | | | | | |
| | Public–Private Partnerships (Carmella A. Bocchino et al.) | | | | | | | |
| 6 | The Roadmap—Policies, Priorities, Strategies, and Sequencing (Stuart Guterman et al.) | | | | | | | |

A white paper, authored by staff in 2007 and titled Learning What Works Best: The Nation’s Need for Evidence on Comparative Effectiveness in Health Care, provided important context for the workshop discussions. The executive summary of that white paper and the full manuscript are included in Chapter 1 and Appendix A, respectively. Appendix B includes evidence summaries of research questions identified and other materials relevant to discussion in a paper in Chapter 2. Appendixes C and D present the recommendations of two groups for priority studies in CER: Initial National Priorities for Comparative Effectiveness Research, an Institute of Medicine report; and the Federal Coordinating Council for Comparative Effectiveness Research Report to the President and Congress. Appendix E contains the portions of the ACA relevant to the structure, funding, and charge of PCORI. The workshop agenda, biographical sketches of the workshop participants, and a list of workshop attendees can be found in Appendixes F, G, and H, respectively.

COMMON THEMES

Common themes that emerged from the 2 days of discussion are summarized in Box S-2 and elaborated in the text that follows:

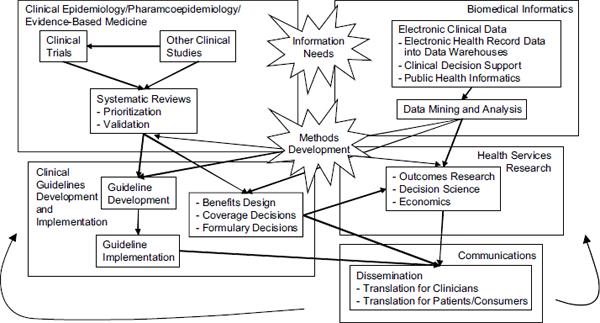

- Care that is effective and efficient stems from the integrity of the infrastructure for learning. The number of medical diagnostics and treatments available to patients and caregivers is increasing, but the knowledge about their effectiveness—in particular, their comparative effectiveness—is not keeping pace. This is in part a function of the rate of change, but it is also a product of capacity that is both underdeveloped and, as several participants noted, substantially fragmented, which leads to gaps, inefficiencies, and inconsistencies in the work. The accelerating rate of change in the interventions requiring effectiveness assessment compels a substantial shoring up in the level of effort, the nature of the effort, and the coordination of the effort in order to produce the necessary insights into the right care for different people under different circumstances.

- Coordinating work and ensuring standards are key components of the evidence infrastructure. Several presentations highlighted the point that substantial activity is currently under way in effectiveness research, including work on comparative effectiveness, but the activities are fragmented and often redundant in both structure and function. The fact that the application of evidence lags behind its production is in part a function of the disparate and “siloed” approaches between and within organizations seeking and developing information. The notions of infrastructure for evidence development therefore also include the capacity for greater coordination in the setting of study priorities; the development of systematic decisions for the conduct of CER, systematic reviews, and guideline development; and the need to ensure the consistent translation of developed information. The identification of priority conditions, evaluation, and evidence gaps is needed in order to target limited resources, especially for high-cost or high-volume procedures and interventions.

BOX S-2

Infrastructure Required for Comparative Effectiveness Research: Common Themes

- Care that is effective and efficient stems from the integrity of the infrastructure for learning.

- Coordinating work and ensuring standards are key components of the evidence infrastructure.

- Learning about effectiveness must continue beyond the transition from testing to practice.

- Timely and dynamic evidence of clinical effectiveness requires bridging research and practice.

- Current infrastructure planning must build to future needs and opportunities.

- Keeping pace with technological innovation compels more than a head-to-head and time-to-time focus.

- Real-time learning depends on health information technology investment.

- Developing and applying tools that foster real-time data analysis is an important element.

- A trained workforce is a vital link in the chain of evidence stewardship.

- Approaches are needed that draw effectively on both public and private capacities.

- Efficiency and effectiveness compel globalizing evidence and localizing decisions.

- Learning about effectiveness must continue beyond the transition from testing to practice. “The learning process cannot stop when the label is approved,” one meeting participant pointed out. Premarket testing for the safety and effectiveness of various interventions cannot assess the results for all populations or the circumstances of use and differences in practice patterns, so gathering information as interventions are applied in practice settings should represent a key focus in designing the infrastructure to learn which care is best. Local coverage decisions and private insurer use of coverage with evidence development approaches were cited as opportunities to learn as a part of the care process.

- Timely and dynamic evidence of clinical effectiveness requires bridging research and practice. Although historical insulation of clinical research from the regular delivery of healthcare services evolved to facilitate data capture and control for confounding factors, it may not adequately inform the real-world setting of clinical practice. With the prospect of enhanced electronic data capture at the point of care on real-world patient populations, and statistical approaches to improve analysis, as well as increasing demand to keep pace with technological innovation, this divide increasingly limits the utility of research results. Efforts under way to better engage health delivery organizations, practitioners, patients, and the community in research prioritization, conduct, and results dissemination should be supported and expanded.

- Current infrastructure planning must build to future needs and opportunities. Research is often driven more by the methods than the questions. In fact, both are important, and infrastructure planning must account for both the key emerging healthcare questions and the key emerging CER opportunities. Emerging questions include those related to the management of multiple co-occurring chronic diseases of increasing prevalence in an aging population, the improved insights into individual variation relevant to both treatments and diagnostics, and the impact of innovation in shortening the lifecycle of any particular intervention. Emerging tools include innovations in trial design, the development of new statistical approaches to data analysis, and the development of electronic medical and personal health records.

- Keeping pace with technological innovation compels more than a head-to-head and time-to-time focus. Much of the current discussion about CER has emphasized the need for more clinical trials and more head-to-head studies. Although there are numerous examples of diagnostic and treatment interventions for which such studies are needed, the notion of a research process that essentially offers periodic and static determinations is inherently limited. Especially with the rapid pace of change in the nature of interventions and the difficulty, expense, and time required to develop studies, and the challenges of ensuring the generalizability of results in the face of limitations of the traditional approach to randomized controlled trials (RCTs), a first-order priority for effectiveness research is the establishment of infrastructure for a more dynamic, real-time approach to learning. Leveraging new tools, such as health information technology (HIT), should allow for a more networked and distributed approach to information sharing and evidence creation.

- Real-time learning depends on HIT investment. It was noted that collecting data is the most time-intensive part of trials and studies, and IT is critical to streamlining this work. Moreover, it is the key to accelerated learning from broader-based clinical experience. We heard that “[t]he type of learning needed cannot be paper based.” The increasing complexity of the factors involved in understanding the effectiveness of clinical options under different circumstances requires a blend of database access and computing power that can only be provided from broadly applied HIT. Although not in itself sufficient to produce the information required for better medical care management, it is a necessity for the continuous improvement expected of a learning health system. A policy framework for privacy and security will be necessary to build and maintain public trust that information will be protected as it is shared.

- Developing and applying tools that foster real-time data analysis is an important element. The scope and scale of evidence needs suggest that innovation is needed across the range of research methods, from making clinical trials faster and less expensive to moving beyond randomized trials to better address practical circumstances, using registries, observational databases, and other emerging data resources. If full advantage is to be taken of HIT, statistical tools and analytic algorithms that can be embedded in databases to allow real-time insights will be important. Similarly, tools are needed that will allow findings to be drawn from databases built on different vendor platforms, using semantic technology to integrate currently disparate medical data, in order to develop the next generation of statistical tools for the analysis of clinical data, including the building of models that allow insights to be generated by virtual studies.

- A trained workforce is a vital link in the chain of evidence stewardship. As in many other domains, progress in CER will relate to the capacity to develop and maintain the broad and diversely skilled workforce needed. Mention was often made of that factor as a determining element as well for progress in development of the learning health system. Given the pace of change in the number and variety of clinical interventions as well as in the tools and approaches to assessing them, there is a need to ensure that these developing opportunities are matched by the skills of the workforce. This includes training and education in the methodologies of research design, the translation of research, guideline development, and the maintenance and mining of clinical records. But it also includes giving attention to reorienting the education of frontline caregivers around their emerging responsibilities for access, interpretation, and discussion with patients of a dynamic evidence base, as well as helping to ensure the availability and integrity of the clinical data that shape conclusions on evidence.

- Approaches are needed that draw effectively on both public and private capacities. Several times in the course of the meeting it was pointed out that although the total investment in CER in the United States is substantial, it is inefficient because of the absence of a vehicle for common priority setting and coordination of efforts and because the work on effectiveness done by private companies in product development and testing is usually not accessible to the broader community. In aggregate, private investment often far exceeds public investment in assessing a given intervention, but even when available, studies associated with an enterprise with a commercial stake may be viewed suspiciously. Several models are in development to establish public–private collaborative efforts to improve the efficiency and effectiveness of the work.

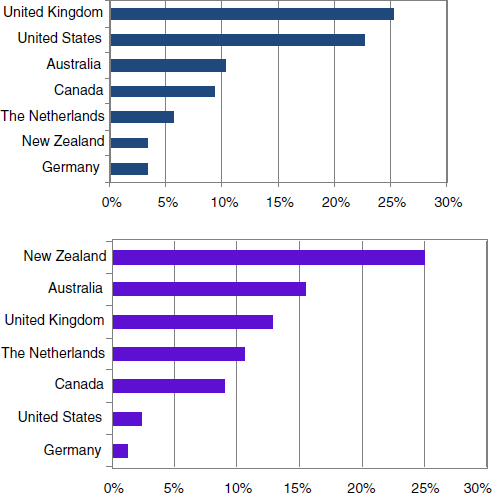

- Efficiency and effectiveness compel globalizing evidence and localizing decisions. Two presentations featured international work, including the Cochrane Collaboration on evidence synthesis, and efforts in Ontario, Canada, to develop and apply insights about the comparative effectiveness of clinical interventions. Reference was made throughout the meeting to work going on elsewhere in the world and, in particular, to work at the National Institute for Health and Clinical Excellence in the United Kingdom. This brought clearly into play the need to ensure that, where possible, common work to assess an intervention’s clinical effectiveness—or collective work to assess the body of evidence—be collaborative and well coordinated across boundaries, while also being mindful that different cultural and policy environments may lead to different decisions at the local level.

Key Factors and Needs

Workshop speakers described a number of implications of the current state of play for the development of an infrastructure for CER (Box S-3). These included the following:

- Several elements are involved in infrastructure development. Developing the infrastructure for CER has at least five dimensions: (1) putting in place the physical capacity, i.e., the hardware; (2) developing the analytic tools and methods; (3) training the workforce needed; (4) establishing processes for efficient and effective operation; and (5) shaping the strategy for attention and phasing. Presentations at the meeting described and discussed in qualitative terms the needs and challenges in each of these dimensions and offered “opening bid” quantitative estimates on the needs for the IT infrastructure, as well as for investments in human capital. Refinements of these first approximations will be needed, as will additional clarity on the analytic tools, processes, and strategies for a stronger infrastructure for research into effective health care.

BOX S-3

Key Factors and Needs for Expanded Comparative Effectiveness Research Capacity

• Several elements are involved in infrastructure development:

o putting in place the physical capacity, i.e., the hardware;

o developing the analytic tools and methods;

o training the workforce needed;

o establishing processes for efficient and effective operation; and

o shaping the strategy for attention and phasing.

• Strategies and priorities for infrastructure application include the following:

o conduct of systematic reviews,

o conduct of primary research,

o clinical registry resources,

o introduction of health information technology throughout practice,

o fostering public and private collaboration, and

o keeping focus on the utility and impact of a networked world.

- Strategies and priorities for infrastructure application. The dimensions noted above represent in certain ways the functional dimensions of relevance to the infrastructure that is needed for effectiveness research. There are phasing considerations as well, in part driven by the ability and need to take actions even without additional resources and in part driven by the time required to set in motion the necessary activities. Suggestions for key strategies and priorities for progress included the following:

o Conduct of systematic reviews. There is an immediate need to improve the conduct, coordination, and consistency of systematic reviews—a point that, in effect, echoed the recommendations of the 2008 IOM report Knowing What Works in Health Care: A Roadmap for the Nation.

o Conduct of primary research. Similarly, the approach to primary research on effectiveness needs a more systematic means of determining priorities, better tools and more streamlined designs, and a deeper bench workforce to do the work.

o Clinical registry resources. In making the transition to a pattern of real-time, continuous learning in health care (in effect, creating a beta approach to clinical data systems that generate learning), the technologies for clinical registries and the field of registry development, maintenance, and improvement will need to be strengthened.

o Introduction of HIT throughout practice. In the area of IT development, the issues include the need to install appropriate hardware in virtually every clinical setting, the incorporation into operating software of design elements that are pegged to research activities and embedded analytic tools, the incorporation of design elements used in decision assistance, and training of the required workforce to work with this technology.

o Fostering public and private collaboration. The longer-term development needed to sustain the growth and improvement of the infrastructure will include the design of approaches that foster meaningful public and private collaboration in support of the research activities.

o Keeping focus on the utility and impact of a networked world. Also important to guide strategy development in the long term are approaches designed to take advantage of the resources emerging in our increasingly networked world—the opportunities for which hints are provided by recent developments, such as the Patients Like Me Web site, the HMO Research Network, the registries of the Society of Thoracic Surgeons, and even information made available by such resources as Google and Wikipedia.

CONTEXT, PRESENTATION, AND DISCUSSION SUMMARIES

Background for workshop discussions was provided by an IOM staff-authored background brief that illustrates the case for expanded CER, provides an overview of current CER activities and needs, and briefly discusses relevant issues not under consideration at the workshop (e.g., financing and structure of a new entity to coordinate CER work). Workshop presentations focused on key infrastructure needs and identified components of and existing capacity for CER, provided qualitative and quantitative assessments of what is needed to meet the demand, and suggested options for strengthening and building upon existing infrastructure. Much of the discussion focused on the prioritization of these needs and how to develop the beginnings of a roadmap of specific immediate steps and priority actions needed to move from where we are to where we need to be. The background brief, workshop presentations, and meeting discussions are summarized below, with expanded discussion included in the main body of the text.

The Need and Potential Returns for Comparative Effectiveness Research

Enhancing the capacity for CER is not an end in itself but is rather a means to begin guiding the development of a healthcare system in which care is evidence driven and focused on providing value to individual patients. The staff-authored issue brief, provided as background for meeting discussions, and two presentations provided an important starting point for workshop discussions by summarizing current CER capacity and by outlining a vision for, and suggesting the potential returns of, an evidence- and value-driven healthcare system.

The Nation’s Need for Comparative Effectiveness Research1

The nation’s capacity has fallen far short of its need for producing reliable and practical information about the care that works best. Medical-care decision making is now strained, at both the level of the individual patient and the level of the population as a whole, by the growing number of diagnostic and therapeutic options for which there is insufficient evidence to make a clear choice (IOM, 2008a). As reviewed in the background paper provided to workshop participants, these developments have fundamental implications for health prospects, and to capture and use them effectively and efficiently will require a proportionate commitment to understand their advantages and appropriate applications. It is a problem in both capacity investment and resource allocation. If only 1 percent of the nation’s healthcare bill were devoted to understanding the effectiveness of the care purchased, the total investment would be more than $20 billion annually. In contrast, even accounting for the support from all private and public sources, the aggregate national commitment to assessing clinical interventions is still likely well under 1 percent.2
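As a rough check on the scale involved, the short sketch below works through the arithmetic behind these percentages. It assumes the rounded figure of about $2.5 trillion in 2009 national health spending cited in the introduction; the function and variable names are illustrative only.

```python
# Approximate 2009 U.S. health spending, as cited in the introduction.
# Assumption: the rounded $2.5 trillion figure is used as the base.
NATIONAL_HEALTH_SPENDING = 2.5e12  # dollars


def share_of_spending(percent: float, total: float = NATIONAL_HEALTH_SPENDING) -> float:
    """Return the dollar amount corresponding to `percent` of `total`."""
    return total * percent / 100.0


# 1 percent of spending: the hypothetical commitment discussed in the text.
one_percent = share_of_spending(1.0)

# One tenth of 1 percent: the upper bound the introduction gives for current effort.
one_tenth_percent = share_of_spending(0.1)

print(f"1 percent of spending:   ${one_percent / 1e9:.1f} billion")
print(f"0.1 percent of spending: ${one_tenth_percent / 1e9:.1f} billion")
```

On this base, 1 percent comes to about $25 billion, which is consistent with the statement that the investment would exceed $20 billion annually.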

_______________

1 At the request of the Roundtable’s sustainable capacity working group, Roundtable staff developed an Issue Overview on national capacity for CER (IOM, 2007). This paper was provided as part of the meeting briefing materials to inform workshop discussion and is summarized briefly here (S-16–S-19). The complete white paper can be found in Appendix A of this publication.

2 The American Recovery and Reinvestment Act of 2009 provided $1.1 billion of funds to the National Institutes of Health, Agency for Healthcare Research and Quality, and the Secretary of Health and Human Services for activities related to CER.

Activities currently under way to assess the effectiveness of healthcare interventions are broad but underresourced and fall far short of the need (IOM, 2007). In addition to the contributions of industry through phase 3 and 4 trials, several government agencies support CER, including the Agency for Healthcare Research and Quality (AHRQ), which has a specific mandate and a small appropriation for CER. The total of all appropriations to all federal agencies—the National Institutes of Health (NIH), the Veterans Health Administration, the Department of Defense, the Centers for Medicare & Medicaid Services (CMS), the Food and Drug Administration (FDA), AHRQ, and the Centers for Disease Control and Prevention—for all health services research amounts to about $1.5 billion, only a modest portion of which is devoted to CER and which is far below the industry level (AcademyHealth, 2005). Additional work, also modest, is undertaken by certain of the larger healthcare delivery organizations. Evidence synthesis activity is supported by the insurance industry, professional societies, healthcare organizations, and government. AHRQ has established a network of 13 AHRQ-sponsored evidence-based practice centers that review literature and produce evidence reports including comparative effectiveness reviews. Organizations interested in evidence reviews will often draw upon syntheses performed by several well-established technology assessment entities (IOM, 2008a).

The most pressing needs of clinicians and their patients center on the development of reliable studies on which to base their decisions. These needs have been characterized in various ways, and they can be grouped into the key areas indicated in Box S-4 (Buto and Juhn, 2006; Clancy, 2006; Health Industry Forum, 2006; Hopayian, 2001; Kupersmith et al., 2005; Rowe et al., 2006). The related key challenges are summarized in Table S-2.

To narrow the rapidly growing gap between the available evidence on clinical effectiveness and the evidence necessary for sound clinical decision making, various organizations and recent public articles have called for the creation of a new entity and a quantum increase—several billion dollars—for CER (IOM, 2008a; Kupersmith et al., 2005; Wilensky, 2005). The various approaches suggested for building the required capacity can be grouped into four categories according to the funding patterns for their support (Box S-5). Each of the approaches is based on an existing or recent model. Although presented as discrete models for discussion purposes, they are not mutually exclusive.

Other implementation considerations include those related to funding and program management. Suggestions for the funding mechanism range from a direct annual federal appropriation or a small set-aside from the Medicare Trust Fund to the structuring of proportionately matching contributions including set-asides from Medicare fund expenditures, from private health insurance premiums, or from manufacturer research and development expenditures (Hopayian, 2001; Health Industry Forum, 2006; Kupersmith et al., 2005; Wilensky, 2005). There can be many variations on these themes, but the key concept is related less to the source of the funds invested than to the value of the return for the outcomes and efficiency of the nation’s health care.

BOX S-4

Prominent Comparative Effectiveness Research Activities and Needs

- Studies of comparative effectiveness (“head to head”)

- Systematic reviews of comparative effectiveness

- Assessment of comparative value/cost effectiveness

- Coordinated priority setting and execution

- Improved study designs and research methods

- Better linkage of studies of efficacy, safety, and effectiveness

- Appropriate evidence standards consistently applied

- Consistent recommendations for clinical practice

- Guidance for coverage and funding

- Dissemination, application, and public communication

SOURCE: IOM, 2007.

TABLE S-2 Prominent Comparative Effectiveness Research Activities and Needs: Key Challenges

| Issue | Key Challenges |

| Head-to-head studies | Scant resources; rapidly increasing need; comparison choice |

| Systematic reviews | Few primary studies; inconsistent methods; uncoordinated |

| Comparative value insights | Little agreement on metrics or role of costs; cost fluctuation |

| Priority setting | Fragmentation; inefficiency; no mechanism for coordination |

| Study designs and tools | Clinical trial time/cost/limits; large dataset mining methods |

| Research life-cycle links | Efficacy–effectiveness disjuncture; postapproval surveillance |

| Evidence standards | Standards not adapted to needs; inconsistency in application |

| Practice guidance | Disparate approaches; conflicting recommendations |

| Coverage guidance | Narrow evidence base; limited means for provisional coverage |

| Application tools | Public misperceptions; incentive structures; decision support |

SOURCE: IOM, 2007.

BOX S-5

Models for Enhancing Capacity

Incremental funding augmentations

- Incremental model

Publicly funded entity

- Executive branch agency model

- Independent government commission model

- Legislative branch office model

Privately funded entity

- Operating foundation model

- Investment tax credit cooperative model

Public–private funded entity

- User fee public model

- Federally funded research and development center public model

- Independent cooperative model

- Independent quasi-governmental authority model

SOURCE: IOM, 2007.

Because of the challenges to increasing CER through a simple appropriation to an existing agency (the difficulty of marshaling an appropriation at a sufficient level, the agency’s lack of political independence, and the limited ability to draw on other agencies), much of the recent discussion has focused on three of the independent models, often with blended public and private funding (Buto and Juhn, 2006; Kupersmith et al., 2005; Wilensky, 2005). As independent entities, each of these approaches assumes the establishment of a governing board composed of stakeholders and charged with priority setting, broad budget allocation, and fiduciary responsibility for execution of the program of activities. These approaches differ in the degree of insulation between the stakeholder priority setting and the conduct of the scientific studies as well as in the ways the studies would be managed, the involvement of existing agencies, and the reporting of results.

To this end, the Patient Protection and Affordable Care Act (ACA) of 2010 established the Patient-Centered Outcomes Research Institute (PCORI) as an independent non-profit organization to assist in informing the health decisions of “patients, clinicians, purchasers, [and] policy-makers.” The ACA appropriated to the PCORI Trust Fund $10 million, $50 million, and $150 million for fiscal years 2010, 2011, and 2012, respectively. Additionally, $150 million plus $1 per Medicare Part A and Part B enrollee has been appropriated for 2013, and $150 million plus $2 per Part A and Part B enrollee for each year from 2014 to 2019. As outlined in the Act, PCORI will set a national agenda for research priorities, fund entities that conduct priority research, improve clinical effectiveness research methods, and ensure transparency and broad dissemination of its findings. It will be overseen by a Governing Board composed of 19 members appointed by the head of the Government Accountability Office, as well as 2 ex officio representatives from the Agency for Healthcare Research and Quality and the National Institutes of Health. For more information on PCORI, see Appendix E.
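The funding schedule described above can be sketched as a simple calculation. The example below is a minimal illustration that uses only the amounts stated in this summary and reads the three fixed amounts as applying to fiscal years 2010, 2011, and 2012 in that order. The function name and the enrollee count are hypothetical, and the sketch does not attempt to reflect any provisions of the Act beyond those quoted here.

```python
# Fixed appropriations by fiscal year, in dollars, as listed in the text.
FIXED_APPROPRIATIONS = {2010: 10_000_000, 2011: 50_000_000, 2012: 150_000_000}

# Base amount that applies from 2013 onward, before the per-enrollee component.
BASE_FROM_2013 = 150_000_000


def pcori_trust_fund_amount(fiscal_year: int, medicare_ab_enrollees: int = 0) -> int:
    """Return the appropriation described in the text for one fiscal year.

    `medicare_ab_enrollees` is the number of Medicare Part A and Part B
    enrollees; it only matters for 2013 through 2019.
    """
    if fiscal_year in FIXED_APPROPRIATIONS:
        return FIXED_APPROPRIATIONS[fiscal_year]
    if fiscal_year == 2013:
        dollars_per_enrollee = 1
    elif 2014 <= fiscal_year <= 2019:
        dollars_per_enrollee = 2
    else:
        raise ValueError(f"No schedule is given in the text for fiscal year {fiscal_year}")
    return BASE_FROM_2013 + dollars_per_enrollee * medicare_ab_enrollees


# Hypothetical placeholder, NOT an actual enrollment figure.
hypothetical_enrollees = 40_000_000

for year in range(2010, 2020):
    amount = pcori_trust_fund_amount(year, hypothetical_enrollees)
    print(f"FY{year}: ${amount / 1e6:,.0f} million")
```

Because the per-enrollee component scales with enrollment, the totals for 2013 and later depend on the enrollment figure supplied, which is why it is left as an explicit input.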

A Vision for the Capacity to Learn Which Care Is Best

The growing support for CER represents an important first step in transforming health care. Mark B. McClellan, director of the Engelberg Center for Health Care Reform at the Brookings Institution, emphasized that as the infrastructure required to expand the nation’s capacity for CER is identified and prioritized, policy makers will need to consider how these elements can serve the longer-term goal of developing a learning health system. Efforts to improve the key infrastructure elements (data networks, methods, and workforce) should also consider how to best build upon current health system capacities. Key advances needed for these elements include supporting a virtual approach to linking databases through the development of needed standards and incentives; advancing innovative approaches to clinical trials to facilitate their conduct in real-world settings, as well as improved statistical and epidemiologic methods; and a focus on developing a broad, cross-disciplinary workforce with capabilities in biostatistics, epidemiology, decision analysis, health economics, health services research, and program evaluation. To take best advantage of the many efforts already under way, infrastructure is also needed to promote sharing of, and learning from, the diverse experiences of all stakeholders. Public–private partnerships are one possible approach to helping organizations share information and learn more quickly about what works best.

McClellan also suggested that for infrastructure development, form should follow function, and he identified four critical evidence gaps that need to be addressed by CER and a learning health system. First, to move beyond evaluating the average impact of a treatment in a population and toward targeted medicine, researchers need a better understanding of current care and how this might vary from patient to patient. Large epidemiologic datasets will be useful to develop the disease models or natural histories that provide such baselines for future evaluations. The development and use of these data resources have several implications for infrastructure, including the need to develop and implement complete standards for data collection, clinical trials, and electronic records.

Establishing the means to monitor the safety of medical therapies and products is another key evidence gap. Developing data networks and requisite methods of analysis will help to support the creation of a national, virtual infrastructure—as endorsed in the FDA Amendments Act of 2007—for monitoring product use, including adverse reactions. Such infrastructure may eventually serve as an important component of infrastructure for evidence generation—by supporting studies that compare the safety and effectiveness of treatments in different subgroups.

A third and related need is developing a reliable and relevant evidence base on the comparative effectiveness of treatment options to help physicians and patients make the best possible healthcare decisions. At present, conducting carefully randomized studies in real-world situations on practical treatment questions can be difficult as well as costly and time consuming. Moreover, by the time a large randomized trial is completed, the information may be outdated. The key challenge is to move beyond approaches that generate evidence about the overall average effect—in one population versus another—to the efficient development of information relevant to particular types of patients. In this respect, work is needed to determine limitations, methodological challenges, and needed improvements in data collection methods, as well as to develop agreement on the amount and type of evidence needed for decision-making purposes.

Finally, infrastructure development must aim to address the evidence gap related to understanding effective treatment strategies and policies. Current capacity cannot contend with the impending exponential growth in the complexity of medical decision making. Subtle differences in the management of chronic diseases and practice patterns affecting chronic disease management often result in broad variations in care delivered. In the absence of information on these technologies and strategies, care provided can be only marginally beneficial or even harmful. McClellan also noted that some suggest it should be possible to reduce costs in Medicare by 20 percent without consequences for patient outcomes if these variations are addressed. However, these practices often cannot be assessed in a simple RCT, but require study in real-world settings. Such studies could be very useful in closing the gap between what we know works and what is delivered in medical practice, as well as in understanding underlying issues related to the coordination and integration of care that constitute a major problem in the current healthcare system. Infrastructure needed to address this challenge should involve broad collaboration among stakeholders, as well as developing consensus on the best methodological approaches. A particular focus is needed on methods development for studies in real-world settings, including observational approaches.

The Potential Returns from Evidence-Driven Health Care

Interest in the potential of comparative clinical effectiveness information to help Americans learn to “spend smarter” is part of a drive towards the increased availability and use of evidence to guide medical practice. According to Gail R. Wilensky, senior fellow at Project HOPE (Health Opportunities for People Everywhere), the potential for better information to improve health outcomes and also to help moderate spending increases is enormous. To capture some of the potential savings that CER could bring, consideration needs to be given to which approaches, data resources, and analyses will be most useful in producing the information needed. Data will be available from many sources, and it will be important to find ways to identify and address issues related to study design limitations and biases, as well as to reduce the costs and time required for the collection of new prospective data for comparative effectiveness trials and studies. Meeting the anticipated need for expanded CER might begin with an initial investment of several hundred million dollars and then ramp up to $4–$6 billion a year. Several steps are critical to ensuring a return on this investment. First, a center should be established and charged with creating better information on comparative clinical effectiveness. Second, priority setting for comparative effectiveness analyses should focus on medical conditions in high-cost, high-volume areas as well as areas that are subject to substantial practice variation. It will also be important to consider issues of clinical relevance, disease burden, and the various subgroups that are particularly affected. Third, it is essential to recognize that all stakeholders need to be a part of the decision-making process, as a slowdown in spending rates will have broad effects across the healthcare system.

Wilensky suggested that an immediate infrastructure priority should be to develop a common national data source that captures what is known about the likely clinical outcomes of various treatments for relevant population subgroups. Moreover, although cost- and clinical-effectiveness information should be considered in reimbursement and even clinical decisions, Wilensky underscored the importance, for technical and political reasons, of keeping these analyses and the places where they are conducted separate. In addition, as important as it is to have information available on clinical and cost effectiveness, the potential gains will not be achieved unless the reimbursement system is changed to make better use of information to reward health care of value rather than just paying more for doing more. In this respect, she emphasized the importance of expecting and allowing for different players to use this information differently, the need to make CER information available to help guide reimbursement policies that reward good clinical outcomes, and the importance of taking advantage of the diversity in healthcare delivery by tying local coverage decisions to evidence development. Finally, it will be particularly important to provide legislative authority to CMS to introduce what is known about clinical and cost effectiveness into its reimbursement decisions.

The Work Required

CER aims to determine what works best for whom under which circumstances to inform the healthcare decisions of patients, physicians, and policy makers. Developing information that is relevant and understandable to these end users requires the efficient conduct of a range of activities, including primary research, synthesis, and translation. Efficient use of resources depends on prioritization of research questions, coordination of disparate but related efforts, and advancing research methods and data resources. The presentations described in Chapter 2 discussed the nature of the specific types of the work required, clarified what is known about the current capacity, illustrated the opportunities to improve care presented by expanded investment, and offered initial suggestions about policies or activities for progress.

Cost and Volume of Current Comparative Effectiveness Research

As policy discussions about CER gather momentum, there continues to be a lack of awareness of the current scale of CER. Erin Holve, senior manager at AcademyHealth, presented the results of a survey of the costs and volume of current CER. The study was based on stakeholder interviews with research funders and researchers as well as a review of databases that track health research. In this work, CER was defined as an examination of the effectiveness, risks, and benefits of two or more healthcare services or treatments (e.g., pharmaceuticals, medical devices, medical procedures, or other treatment modalities) used to treat a specific disease in appropriate real-world settings. Results from both sources suggest there are currently more than 600 studies under way in the area. Three primary research categories were considered: head-to-head trials (including pragmatic trials), observational studies (including registry studies, prospective cohort studies, and database studies), and syntheses and modeling (including systematic reviews). Data from the interviews demonstrate a wide range in the cost of conducting CER, both across and within study designs, although costs clustered by study design (Table S-3). Interviewees emphasized a need for multi-disciplinary training to expose researchers to a variety of methods in trials, observational studies, and syntheses. Finally, the study revealed an absence of clear definitions regarding the scope of comparative effectiveness as well as a limited understanding of the appropriate methods for conducting CER. These definitional and organizational issues may be an impediment to coordinating future CER activities.

TABLE S-3 Costs of Various Comparative Effectiveness Studies

| Type of Study | Study Design | Cost |

| Head to head | Randomized controlled trials: smaller | $2.5m–$5m |

| Head to head | Randomized controlled trials: larger | $15m–$20m |

| Observational | Registry studies | $2m–$4m |

| Observational | Large prospective cohort studies | $800k–$6m |

| Observational | Small retrospective database studies | $100k–$250k |

| Synthesis | Simulation/modeling studies | $100k–$200k |

| Synthesis | Systematic reviews | $200k–$350k |

Intervention Studies That Need to Be Conducted

To illustrate the multifaceted need for comparative effectiveness information on procedures, devices, pharmaceuticals, diagnostics, and health systems and to highlight some of the high-priority studies that might be needed, Douglas B. Kamerow, chief scientist at RTI International, presented results from a stakeholder work group convened to pilot a process for identifying candidate comparative effectiveness studies. The process resulted in the adoption of selection criteria, including the importance of the conditions being treated or prevented, the current availability of effective treatments or preventive interventions, lack of definitive knowledge about the relative effectiveness of available treatments, research plausibility, and study type heterogeneity. These criteria were used to select among candidates nominated in the following comparative effectiveness categories: diagnostic studies, drug–drug comparisons, health services systems studies, preventive interventions, surgical studies, and treatment studies across modalities. Potential comparative effectiveness studies identified using this process are listed in Table S-4, and brief evidence reviews developed by Kamerow for each item are provided in Appendix B. Importantly, this exercise illuminated a number of challenges facing those who seek to prioritize the work needed. Key lessons learned included the existence of many opportunities for research that can make a difference in costs and outcomes; the importance of establishing an explicit and transparent process and of considering stakeholder perspectives and input during the process of nomination, review, and selection; and the need for carefully defined research questions and the utilization of the full range of study designs and methods. Many of these issues and perspectives were similarly reflected in the report on CER priorities mandated under ARRA and conducted by the IOM, the summary of which is found in Appendix C.

Clinical Data Sets That Need to Be Mined

Reflecting on the opportunities to better use routinely captured electronic data to generate insights on benefit and safety, Jesse A. Berlin, vice president of pharmacoepidemiology at Johnson & Johnson, noted that the appropriate use of existing data, as well as creative new uses of existing data collection mechanisms, will be crucial to improving healthcare decision making. Reviewing the major strengths and limitations of currently available administrative data in addressing questions of comparative effectiveness and safety, he noted that most existing databases currently used in observational studies of pharmaceuticals were created for a purpose other than research. These purposes include tracking expenditures in order to manage costs (insurance claims databases, the largest and most prevalent type), managing patients (electronic medical records), and managing purchasing and capacity (facility-specific databases). These databases can provide relatively inexpensive and rapid access to clinical data and analyses of pharmaceutical exposures within a quantifiable source population, and these data reflect healthcare decisions and outcomes as they were actually made (versus the artificial constructs of an RCT). By virtue of reflecting actual, real-world clinical practice, databases offer an external validity that is greater than that of RCTs. However, databases can be limited by missing data (particularly on such confounders as smoking, height, weight, race, over-the-counter drugs, and alcohol consumption), failures to follow up (due to turnover in healthcare plans), and data quality issues (e.g., miscoding of diagnoses). Furthermore, data reflect the healthcare delivery system and, as such, may fail to account for or capture healthcare visits outside of providers using a specific electronic health record (EHR) system, due to benefit design or other issues. The use of these data for research is also complicated by the difficulty of capturing benefits of treatment (e.g., improvement in blood pressure, quality of life) compared to the relatively high capture of treatment risks that are often also codified as “clinical diagnoses.” Regardless, little is understood of how much of an effect these benefits or risks have, or the perceptions of the patients or healthcare providers regarding the benefits and risks.

TABLE S-4 The Comparative Effectiveness Studies Inventory Project Identified 16 Candidate Topics for Comparative Effectiveness Research

| Study Topic | Study Type | Age Group | Condition |

| Treatment of attention deficit hyperactivity disorder in children: drugs, behavioral interventions, no prescription | Comparative effectiveness treatment studies across modalities | Children | Mental diseases |

| Treatment of acute thrombotic/embolic stroke: clot removal, reperfusion drugs | Comparative effectiveness treatment studies across modalities | Adults | Heart and vascular diseases |

| Treatment of chronic atrial fibrillation: drugs, catheter ablation, surgery | Comparative effectiveness treatment studies across modalities | Adults | Heart and vascular diseases |

| Treatment of chronic low back pain | Comparative effectiveness treatment studies across modalities | Adults | Neurological diseases |

| Gamma knife surgery for intracranial lesions vs. surgery and/or whole brain radiation | Comparative effectiveness treatment studies across modalities | Adults | Neurological diseases |

| Treatment of localized prostate cancer: watchful waiting, surgery, radiation, cryotherapy | Comparative effectiveness treatment studies across modalities | Adults | Cancer |

| Diagnosis and prognosis of breast cancer using genetic tests: human epidermal growth factor receptor 2 and others | Diagnostic studies | Adults | Cancer |

| Over-the-counter drug treatment of upper respiratory tract infections in children | Drug–drug and drug–placebo treatment studies | Children | Respiratory disorders |

| Drug treatment of depression in primary care | Drug–drug and drug–placebo treatment studies | Adults | Mental disorders |

| Drug treatment of epilepsy in children | Drug–drug and drug–placebo treatment studies | Children | Neurological diseases |

| Use of erythropoiesis-stimulating agents in the treatment of hematologic cancers | Drug–drug and drug–placebo treatment studies | Adults | Cancer |

| Outcomes of percutaneous coronary interventions in hospitals with and without onsite surgical backup | Health services/systems studies | Adults | Heart and vascular diseases |

| Screening hospital inpatients for methicillin-resistant Staphylococcus aureus infection | Preventive interventions | Adults | Infectious diseases |

| Tobacco cessation: nicotine replacement agents, oral medications, combinations | Preventive interventions | Adults | Preventive interventions |

| Prevention and treatment of pressure ulcers | Surgical studies | Adults | Dermatological diseases |

| Inguinal hernia repair: open vs. minimally invasive | Surgical studies | Adults | Surgical disorders |

NOTE: Study topics are categorized by study type, age group, and condition.

SOURCE: Kamerow, 2009.

Designing targeted studies within databases is a promising direction for research. For example, special data collection screens might pop up on an in-office computer when patients matching a specific set of criteria were under consideration. This idea could be extended to include the conduct of large, simple, randomized studies within the databases. The question is whether additional aspects of data collection can be tailored (as in primary data collection efforts) within the context of an existing data collection system. These ideas are not novel, but neither have they yet been widely adopted. Other ideas discussed include capturing the data at the physician–patient interface and providing data back to clinicians. Feedback to providers could encourage them to enroll in clinical trials and could permit healthcare professionals to better understand their own treatment decisions and the impacts of those decisions.

Knowledge Synthesis and Translation That Need to Be Applied

Currently the United States lacks a single reliable source that people can use to evaluate the safety and effectiveness of medical treatments. In January 2008, the IOM published Knowing What Works in Health Care: A Roadmap for the Nation (IOM, 2008b), which explored the national capacity to use scientific evidence to identify highly effective clinical services. Richard A. Justman, national medical director at UnitedHealthcare served on the report committee, and he discussed key findings related to the state of knowledge synthesis and translation and opportunities to scale up national capacity to meet the anticipated demand. While there are multiple avenues available today to help consumers, physicians, and others decide which treatments are safe and effective, they all have significant limitations. Justman highlighted how the absence of a national comparative effectiveness architecture has led to an evidence base that is replete with gaps, duplications, and contradictions (Table S-5). For example, some systematic reviews of clinical evidence and some clinical practice guidelines lack scientific rigor, relying on a consensus of expert opinion rather than clinical evidence as the basis for their conclusions. The body of clinical evidence for some health services that consumers and physicians are interested in may be weak or totally lacking. Bias and conflict of interest on the part of experts further complicate the understanding of the conclusions that can be drawn from available clinical evidence. Finally, the multiple clinical guidelines available for the treatment of the same condition frequently make differing recommendations. The 2008 report urged Congress to direct the Secretary of Health and Human Services to designate a single entity to ensure the production of credible, unbiased information about what is known and what is not known about clinical effectiveness. 
It also recommended the appointment of a Clinical Effectiveness Advisory Board to oversee the program and the appointment of a Priority Setting Advisory Committee to identify high-priority topics. The report further prescribed the development of evidence-based methodological standards for systematic reviews, including a common language for characterizing the strength of evidence. It recommended that bias be minimized by balancing competing interests, publishing conflict-of-interest disclosures, and prohibiting voting by members with material conflicts.

Methods That Need to Be Developed

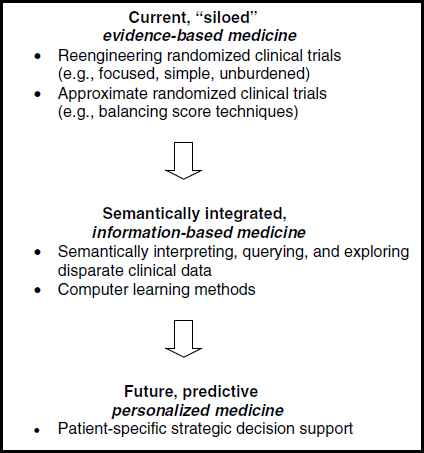

To contend with the growing scope and scale of clinical evidence needs, work is needed to improve and refine current research methods as well as to develop innovative new approaches to ensuring the development of efficient, timely, and relevant information for healthcare decision making. Although randomized clinical trials and meta-analyses of these trials provide the best evidence for use in comparative studies of the effectiveness of clinical interventions and care, it is impossible, difficult, unethical, or prohibitively expensive to randomize all relevant factors. Eugene H. Blackstone, head of clinical investigations at the Cleveland Clinic Heart and Vascular Institute, presented five foundational methodologies that will accelerate movement from the current siloed approach to evidence generation to an approach that enables predictive and personalized medicine (Figure S-1).

Re-engineering clinical trials will require addressing six main pitfalls associated with traditional RCTs: (1) complexity, (2) data capture, (3) generalizability, (4) equipoise, (5) appropriateness, and (6) funding. For the many instances in which even RCTs are not feasible or sufficient to meet information needs, methods for conducting approximate randomized trials using balancing strategies and real-world observational clinical data have become increasingly common—although a number of their important features remain to be explored and understood. Many trials focus on early outcomes or else introduce medicines or devices that bring additional complications. Thus methods for longitudinal surveillance and long-term outcomes analysis—e.g., birth-to-death, patient-centric health records populated with discrete values for variables—are also needed.
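As a minimal illustration of what a balancing strategy does, the sketch below stratifies on a single confounder (disease severity) and compares the naive treated-versus-control difference with the stratum-weighted difference. The counts are invented for illustration and do not come from the workshop; real analyses typically balance on many covariates at once, for example through propensity scores.

```python
# Hypothetical counts, invented for illustration only:
# (severity, arm) -> (number of patients, number with a good outcome)
COUNTS = {
    ("mild", "treated"): (20, 18),
    ("mild", "control"): (80, 64),
    ("severe", "treated"): (80, 40),
    ("severe", "control"): (20, 8),
}


def naive_difference(counts):
    """Difference in good-outcome rates between arms, ignoring severity."""
    rates = {}
    for arm in ("treated", "control"):
        n = sum(c[0] for (_, a), c in counts.items() if a == arm)
        good = sum(c[1] for (_, a), c in counts.items() if a == arm)
        rates[arm] = good / n
    return rates["treated"] - rates["control"]


def stratified_difference(counts):
    """Within-stratum differences, weighted by each stratum's share of patients."""
    strata = sorted({severity for severity, _ in counts})
    total = sum(n for n, _ in counts.values())
    estimate = 0.0
    for severity in strata:
        n_t, good_t = counts[(severity, "treated")]
        n_c, good_c = counts[(severity, "control")]
        weight = (n_t + n_c) / total
        estimate += weight * (good_t / n_t - good_c / n_c)
    return estimate


naive = naive_difference(COUNTS)
adjusted = stratified_difference(COUNTS)
print(f"naive difference:      {naive:+.2f}")
print(f"stratified difference: {adjusted:+.2f}")
```

In these invented data the treated patients are sicker on average, so the naive comparison makes the treatment look harmful even though it is associated with better outcomes within each severity stratum.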

Among the most promising methodologies emerging is the semantic representation of data. The elements of this methodology include the storage of patient data as nodes and arcs (graphs) that can seamlessly link all types of data across current medical silos, from genomics to outcomes; a rich ontology of medicine that permits natural-language queries of complex patient data without the need to understand the underlying data structure; the assembly of this ontology and the assertions that make it useful; and intelligent agents to assist in the discovery of unsuspected relationships and unintended adverse outcomes. An immediate focus should be on supporting a worldwide effort to develop the comprehensive formal ontology of medicine needed to implement semantic databases and knowledge bases.
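The node-and-arc storage described above can be sketched as a tiny in-memory triple store. This is a toy illustration, not a description of any system presented at the workshop; the patient identifiers, predicate names, and the `match` and `patients_with` helpers are all hypothetical.

```python
from typing import Optional

# Each arc is a (subject, predicate, object) triple. All identifiers are hypothetical.
TRIPLES = {
    ("patient:1", "has_variant", "gene:X"),
    ("patient:1", "received", "drug:A"),
    ("patient:1", "had_outcome", "outcome:bleeding"),
    ("patient:2", "has_variant", "gene:X"),
    ("patient:2", "received", "drug:B"),
    ("patient:3", "received", "drug:A"),
    ("patient:3", "had_outcome", "outcome:recovered"),
}


def match(subject: Optional[str] = None,
          predicate: Optional[str] = None,
          obj: Optional[str] = None):
    """Return the triples matching the pattern; None acts as a wildcard."""
    return sorted(
        t for t in TRIPLES
        if (subject is None or t[0] == subject)
        and (predicate is None or t[1] == predicate)
        and (obj is None or t[2] == obj)
    )


def patients_with(predicate: str, obj: str):
    """Set of patient nodes that have the given arc."""
    return {s for s, _, _ in match(predicate=predicate, obj=obj)}


# One query that spans genomic, treatment, and outcome data:
# which patients carry the variant AND received drug A, and what happened to them?
cohort = patients_with("has_variant", "gene:X") & patients_with("received", "drug:A")
outcomes = [o for p in sorted(cohort) for _, _, o in match(p, "had_outcome")]
print(cohort, outcomes)
```

Because every fact is stored in the same shape, adding a new kind of data (for example, imaging findings) means adding new arcs rather than redesigning a schema, which is the property the semantic approach relies on.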

TABLE S-5 Duplicated Efforts by Selected Health Plans and Technology Assessment Firms, 2006

Health plans: UnitedHealthcare, Kaiser Permanente, Aetna, WellPoint. Technology assessment firms: Hayes, Inc.; Technology Evaluation Center; ECRI Institute.

| Type of Service | UnitedHealthcare | Kaiser Permanente | Aetna | WellPoint | Hayes, Inc. | Technology Evaluation Center | ECRI Institute |
|---|---|---|---|---|---|---|---|
| Screening | | | | | | | |
| Genetic testing to predict breast cancer recurrence | √ | √ | √ | √ | √ | √ | √ |
| Proteomic testing for ovarian cancer | √ | √ | √ | √ | √ | | |
| Virtual (computed tomography [CT]) colonoscopy | √ | √ | √ | √ | √ | √ | √ |
| Disease management | | | | | | | |
| Ambulatory blood pressure monitoring | √ | √ | √ | √ | √ | √ | √ |
| Intermittent intravenous insulin therapy | √ | √ | √ | √ | √ | | |
| Diagnosis | | | | | | | |
| CT angiography for suspected coronary artery disease | √ | √ | √ | √ | √ | √ | √ |
| Microvolt T-wave alternans | √ | √ | √ | √ | √ | √ | √ |
| Wireless capsule endoscopy | √ | √ | √ | √ | √ | √ | √ |
| Treatment | | | | | | | |
| Brachytherapy for various cancers: breast, ovarian, and prostate cancer and brain tumors | √ | √ | √ | √ | √ | √ | √ |
| Dysfunctional uterine bleeding and fibroids | √ | √ | √ | √ | √ | √ | √ |
| Fallopian tube occlusion for permanent contraception | √ | √ | √ | √ | √ | | |
| Growth factor–mediated lumbar spinal fusion | √ | √ | √ | √ | √ | | |
| Intracoronary brachytherapy | √ | √ | √ | √ | √ | √ | √ |
| Minimally invasive surgery for low back pain | √ | √ | √ | √ | √ | √ | √ |
| Photodynamic therapy for Barrett’s esophagus and esophageal cancer | √ | √ | √ | √ | √ | √ | |
| Vagus nerve stimulation for intractable depression | √ | √ | √ | √ | √ | √ | √ |
| Devices | | | | | | | |
| Artificial total disc replacement for lumbar and cervical spine | √ | √ | √ | √ | √ | √ | √ |
| Cochlear implants | √ | √ | √ | √ | √ | √ | |
| Total artificial heart | √ | √ | √ | √ | √ | √ | |
| Total hip resurfacing arthroplasty | √ | √ | √ | √ | √ | √ | √ |

NOTE: Not all reviews are comprehensive assessments. Agency for Healthcare Research and Quality evidence-based practice centers have reviewed 5 of the 20 topics listed (ambulatory blood pressure monitoring, CT angiography, proteomic testing for ovarian cancer, spinal fusion for low back pain, and uterine fibroids). The Kaiser Permanente entries represent all Kaiser regions.

SOURCE: IOM, 2008a.

FIGURE S-1 Five foundational methodologies that need to be developed.

Methods are then needed to transform the results of trials, approximate trials, and automated discovery from static publications into dynamic, patient-specific (“personalized”) medical decision support tools (simulation). Although such methodologies are widely used for institutional assessment and ranking, they need to become clinically rich and easily used real-time tools. The discrepancy between the “goodness of fit” of models to data and the minimization of prediction error needs to be addressed to enable accurate decision support. Algorithmic techniques, such as random forests–based methods, are intriguing and promise to fill the gap in accurately predicting a patient’s response to treatment, but they are still in their infancy. Moving beyond randomized trials to the real world, exploiting emerging semantic technology in order to integrate currently disparate medical data, using the knowledge generated for strategic decision support, and developing the next generation of statistical tools for analysis of clinical data are but a few concrete examples of the methods that need to be developed to provide an infrastructure for learning which is the right treatment, for the right patient, at the right time.
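The gap between goodness of fit and prediction error can be seen in a small experiment. The sketch below uses synthetic data and a deliberately overfit one-nearest-neighbor predictor, neither of which comes from the workshop; it compares the error on the data used for fitting with the error on held-out data drawn from the same process.

```python
import random

random.seed(0)


def simulate(n):
    """Synthetic (dose, response) pairs: a linear signal plus Gaussian noise."""
    data = []
    for _ in range(n):
        x = random.uniform(0.0, 10.0)
        data.append((x, 2.0 * x + random.gauss(0.0, 3.0)))
    return data


def nearest_neighbor_predict(train, x):
    """Return the response of the single closest training point (memorizes the data)."""
    return min(train, key=lambda pair: abs(pair[0] - x))[1]


def mean_squared_error(train, evaluate):
    """Mean squared error of the nearest-neighbor predictor on `evaluate`."""
    return sum((y - nearest_neighbor_predict(train, x)) ** 2
               for x, y in evaluate) / len(evaluate)


train, held_out = simulate(200), simulate(200)
fit_error = mean_squared_error(train, train)            # error on the data used for fitting
prediction_error = mean_squared_error(train, held_out)  # error on patients not seen before
print(f"fit error: {fit_error:.2f}, prediction error: {prediction_error:.2f}")
```

A model that reproduces its own training data perfectly can still predict new cases poorly, which is why decision support tools would need to be judged on held-out prediction error rather than on fit alone.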

Some preliminary estimates of the resources needed to spur methodology development are suggested.

Coordination and Technical Assistance That Need to Be Supported

AHRQ has played a leading role in promoting the evidence development, synthesis, and translation activities integral to CER. Jean R. Slutsky, director of AHRQ’s Center for Outcomes and Evidence, noted that CER as a concept and reality has grown rapidly over the past several years. Most of this work has built upon an appreciation for the role of technology assessment, comparative study designs, and HIT in the gathering and dissemination of best evidence to clinical practice; however, the development of the infrastructure needed for an expanded national capacity for CER has received less attention. To plan for such capacity rationally and strategically, one must have an understanding of the range of organizations currently conducting CER activities as well as some idea of which functions might benefit from either centralized or local approaches. Slutsky described lessons learned from AHRQ’s work to support CER, outlined some practical realities of the current state of play, and suggested some priority areas in need of attention if the nation is to better meet the information needs of the diverse healthcare system.

Slutsky noted that priority CER needs include improvements aimed at supporting and training researchers; providing technical assistance in research design, conduct, and implementation; and developing capacities to prioritize, coordinate, fund, and engage stakeholders in CER activities. Training in research design and translation is particularly important to ensure that designs and protocols efficiently and effectively answer research questions and that findings are not used inappropriately and do not have unintended consequences. Because of the impact of CER on many different sectors (e.g., patients, industry, health plans), the research must be well designed, conducted in a fair and transparent manner, and adequately funded and supported. Provisions in the ARRA and ACA hold promise in this respect. In addition, CER-focused public–private partnerships building on the work of other federal agencies (e.g., NIH, CMS, coverage with evidence development, and the Department of Veterans Affairs) are beginning to emerge to address some of these issues. As AHRQ discovered in developing the Section 1013 healthcare program, involving stakeholders early, listening to them, and involving them throughout the process are critically important.

The Information Networks Required

The scale of efficiencies gained through improvements to methods, coordination, and prioritization of CER will be limited by the available capacity to capture, access, and share relevant data and information. Design and development of robust information networks and efforts to foster collaboration around common work are critical aspects of CER infrastructure. This capacity, too, has been addressed in recent legislation. The Health Information Technology for Economic and Clinical Health (HITECH) Act, enacted as part of ARRA in 2009, allocates $20 billion to be used as incentive payments to promote the adoption and “meaningful use” of electronic health records. Along these lines, presentations summarized in Chapter 3 discuss key current issues and needed capacity for networks to support the generation, synthesis, and application of evidence, as well as for providing opportunities to support learning from clinical practice.

Information Technology Requirements

Robust, advanced clinical information systems (CIS), including EHRs, are increasingly viewed as essential support for an evidence-based and learning health system. To provide policy makers with “order of magnitude” CIS estimates of the new or additional spending needed to speed broad adoption in care delivery organizations throughout the nation, Robert H. Miller, professor of health economics at the University of California at San Francisco, described current EHR adoption, future EHR capital and operating expenditure requirements, and prospects for EHR adoption in the $648 billion hospital sector and the $447 billion physician and clinical services sector (spending levels as of 2006). EHR capabilities and estimated adoption levels in hospital inpatient systems are indicated in Table S-6. Miller estimated that roughly $90 billion in new money may be needed over 8 years for robust hospital EHRs. Despite the 1.7 percent increase this represents for total hospital spending, adoption of EHRs will likely increase. There is substantial momentum in this sector, as health systems and larger hospitals increasingly see CIS as a cost of doing business, although public hospitals and unaffiliated hospitals with low or negative margins will likely lag behind.
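The 1.7 percent figure can be reproduced with simple arithmetic, assuming the $90 billion is spread evenly over the 8 years and compared against the 2006 hospital spending level held flat. Both assumptions are simplifications made here for illustration and are not stated in the presentation.

```python
def annual_share_percent(total_new_money_bn, years, sector_spending_bn):
    """Average annual new spending as a percentage of annual sector spending."""
    return 100.0 * (total_new_money_bn / years) / sector_spending_bn


# $90 billion over 8 years against the $648 billion hospital sector (2006 level).
hospital_share = annual_share_percent(total_new_money_bn=90, years=8, sector_spending_bn=648)
print(f"{hospital_share:.1f}%")
```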

Miller used rough estimates of the number of office-based physicians to develop an order of magnitude estimate of $40–$50 billion in new money for robust physician EHRs that may be needed over 8 years. This 1–1.25 percent average increase in physician services expenditure is feasible for most practices, and evidence suggests that the return on such an investment for physician practices could be substantial. Larger physician practices are adopting EHRs relatively rapidly, especially compared to solo/small groups (i.e., 10 physicians or fewer) and community health centers—for whom the business case is not perceived as favorable.

To achieve full EHR adoption, all types of healthcare delivery organizations need to increase CIS; however, more will be needed to achieve

TABLE S-6 Hospital Electronic Health Record Capabilities and Adoption Estimates

| Stage | Description | Estimated adoption, 2008 |
|---|---|---|
| Stage 7 | Medical record fully electronic; healthcare organization able to contribute continuity of care document as by-product of electronic medical record; data warehousing/mining | 0.1% |
| Stage 6 | Physician documentation (structured templates), full clinical decision support, full Radiology Picture Archiving and Communication System (PACS) | 1.0% |
| Stage 5 | Closed loop medication administration | 1.3% |
| Stage 4 | Computerized physician order entry, clinical decision support (clinical protocols) | 1.9% |
| Stage 3 | Clinical documentation (flow sheets), clinical decision support system (error checking), PACS available outside radiology | 32.9% |
| Stage 2 | Clinical data repository, controlled medical vocabulary, clinical decision support system inference engine, may have document imaging | 33.2% |
| Stage 1 | Ancillaries: lab, radiology, pharmacy | 12.5% |
| Stage 0 | All three ancillaries not installed | 17.1% |