INTRODUCTION

Most of the activities integral to comparative effectiveness research (CER) have been conducted on a small scale over the past several decades; yet, meeting an increased demand for CER and the efficient translation and application of CER findings requires more than simply expanding existing programs and infrastructure. In addition to incorporating the new structures, systems, and elements of health information technologies (HITs) into current practice, innovative new approaches will be needed to drive improvements in both research and practice. Work will be increasingly interdisciplinary—requiring coordination and cooperation across professions and healthcare sectors. One of the key themes of workshop discussion was the need for increased funding and support for training a workforce to meet the unique needs of developing and applying comparative effectiveness information.

Papers in this chapter were presented in draft form at the workshop to begin to characterize the workforce needs for the emerging discipline of CER.1 William R. Hersh and colleagues explore the heterogeneous set of activities that contribute to the field of CER and define key workforce components and related training requirements. CER will draw its workforce from a variety of backgrounds (clinical medicine, clinical epidemiology, biomedical informatics, biostatistics, and health policy) and settings, including academic units, university centers, contract research organizations, government, and industry. A key challenge will be developing programs to foster interdisciplinary and cross-sector approaches.

_______________

1 Comments of workshop reactor panel participants guided the development of the manuscript by Hersh and colleagues presented in this chapter. Sector perspective panelists included Jean Paul Gagnon (sanofi-aventis), Bruce H. Hamory (Geisinger Health System), Steve E. Phurrough (Centers for Medicare & Medicaid Services), and Robert J. Temple (Food and Drug Administration). Panelists commenting on training and education needs included Eric B. Bass (Johns Hopkins University), Timothy S. Carey (University of North Carolina at Chapel Hill), Don E. Detmer (American Medical Informatics Association), David H. Hickam (Eisenberg Center), and Richard N. Shiffman (Yale University).

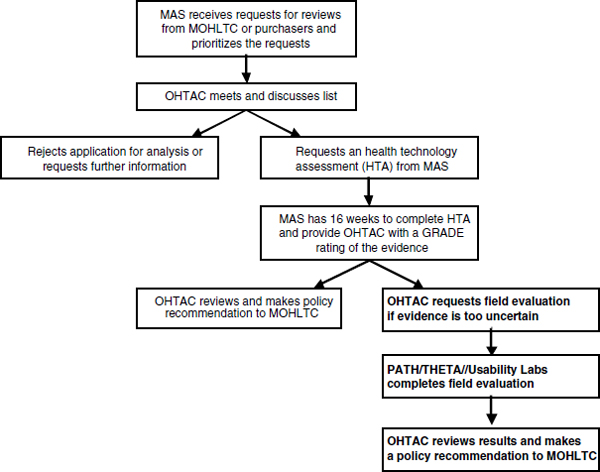

To provide an example of how different workforce elements might be best organized and engaged in a system focused on developing and applying clinical effectiveness information, Sean R. Tunis and colleagues present an overview of a program for health interventions assessment in Ontario, Canada. A direct link between decision makers and CER entities facilitates research timeliness and a clear focus on the information needs of decision makers. Ontario’s experience provides insights on how the United States might best expand CER capacity, offers a model for developing an integrated workforce that addresses important organizational and funding issues, and suggests some possible efficiencies to be gained through international cooperation.

COMPARATIVE EFFECTIVENESS WORKFORCE—FRAMEWORK AND ASSESSMENT

William R. Hersh, M.D., Oregon Health and Science University;

Timothy S. Carey, M.D., M.P.H., University of North Carolina;

Thomas Ricketts, Ph.D., University of North Carolina;

Mark Helfand, M.D., M.P.H., Oregon Health and Science University;

Nicole Floyd, M.P.H., Oregon Health and Science University;

Richard N. Shiffman, M.D., M.C.I.S., Yale University;

David H. Hickam, M.D., M.P.H., Oregon Health and Science University2

Overview

There have been increasing calls for a better understanding of “what works” in health care (IOM, 2008) and for a system that allows learning and improvement based on such an understanding (IOM, 2007).

_______________

2 We thank the following individuals who provided comments, critiques, and additions to early versions of this report: Mark Doescher, M.D., M.P.H., University of Washington; Erin Holve, Ph.D., AcademyHealth; Marian McDonagh, Pharm.D., Oregon Health & Science University; Lloyd Michener, M.D., Duke University; Cynthia Morris, Ph.D., Oregon Health & Science University; LeighAnne Olsen, Ph.D., Institute of Medicine; Robert Reynolds, Sc.D., Pfizer Corp.; Robert Schuff, M.S., Oregon Health & Science University; Carol Simon, Ph.D., The Lewin Group; Brian Strom, M.D., M.P.H., University of Pennsylvania; Jonathan Weiner, Dr.P.H., Johns Hopkins University.

CER is one means of assessing what works. The AcademyHealth Methods Council defines CER as “research studies that compare one or more diagnostic or treatment options to evaluate effectiveness, safety, or outcomes” (EHR Adoption Model, 2008). The goals of this report are to define the many components of CER, to explore the necessary training requirements for a CER workforce, and to provide a framework for developing a strategy for future workforce development.

The objective of CER is to provide a sustainable, replicable approach to identifying effective clinical services (IOM, 2008). However, although the term CER is widely used, there is no consensus on how best to achieve this objective, and the challenges involved in meeting it are poorly understood. There is, for example, wide disagreement about the importance of its different components. The Institute of Medicine (IOM) committee on “knowing what works in health care” emphasizes the central role of comparative effectiveness reviews as a critical link between evidence-based medicine (EBM) and practice guidelines, coverage decision making, clinical practice, and health policy (IOM, 2008), whereas Tunis views CER knowledge as deriving from practical clinical trials that compare interventions head to head in real clinical settings (Tunis, 2007). The IOM Roundtable on Value & Science-Driven Health Care expands the notion of CER to include other forms of learning about health care (IOM, 2007), such as the growing amount of data derived from secondary sources, including electronic health record (EHR) systems, which feeds other analyses, such as health services research (HSR). This knowledge in turn drives the development and implementation of clinical practice guidelines and benefits coverage decisions, and it enables the general dissemination of knowledge to practitioners, policy makers, and patients. The ideal learning health system will feed back knowledge from these activities to inform continued CER.

While some organizations take an optimistic view of the benefits that CER can bring to improving the quality and cost-effectiveness of health care (Swirsky and Cook, 2008), others sound a more cautionary note. CER will not occur without political and economic ramifications. For example, the Congressional Budget Office notes that CER might lower the cost of health care, but only if it is accompanied by changes in the incentives that lead providers and patients to use new, more expensive technologies even when they are not proven to be better than less expensive ones (Ellis et al., 2007). A report from the Biotechnology Industry Organization raises concerns that population-based studies may obscure benefits to individual patients or groups and that, even in the absence of statistically significant differences among interventions, some individuals may benefit more from some treatments than from others (Buckley, 2007). Finally, many argue that CER could turn out to be ineffective unless it is funded and conducted independently of the federal executive branch by a dedicated new entity (Emanuel et al., 2007; Kirschner et al., 2008; Wilensky, 2006).

In the United States, a clear leader in CER has been the Agency for Healthcare Research and Quality (AHRQ). The AHRQ research portfolio includes evidence-based practice centers (EPCs) (Helfand et al., 2005), which perform comparative effectiveness reviews, that is, syntheses of existing research on the effectiveness, comparative effectiveness, and comparative harms of different healthcare interventions (Slutsky et al., 2010). The work of the EPCs feeds AHRQ’s Effective Health Care Program,3 which also supports original CER through the Developing Evidence to Inform Decisions about Effectiveness (DEcIDE) network and disseminates findings through the John M. Eisenberg Clinical Decisions and Communications Science Center (Eisenberg Center). AHRQ has also made a substantial investment in HIT projects to improve the quality and safety of healthcare delivery. The agency also funds health services research as well as pre- and postdoctoral training and career development (K awards) in all of these areas.

Another potential venue for increased CER is the effort by the National Institutes of Health (NIH) to promote clinical and translational research (Zerhouni, 2007). While many think of clinical and translational research as “bench to bedside” (i.e., moving tests and treatments from the lab into the clinical setting), the NIH and others have taken a broader view. Traditional bench-to-bedside translational research is labeled “T1,” and other types of translation are defined as well, such as “T2” (assessing the effectiveness of care shown to be efficacious in controlled settings, or bedside to population) and “T3” (delivering care with quality and accountability) (Woolf, 2008). NIH has sponsored many trials that qualify as CER, and although this type of research is not a primary focus for the agency, the training needed to conduct CER overlaps with that needed for T2 and T3 translation. Thus the Clinical and Translational Science Awards (CTSA) initiative greatly expands training in the clinical research skills needed to conduct CER.4 As CER absorbs researchers and staff, however, it may also compete with other types of research programs in T1 and some T2 areas. Over the past 3 years, the NIH has awarded funding to 38 CTSA centers, with a goal of an eventual steady state of 60 centers. These centers aim to speed the translation of research from the laboratory to clinical implementation and to the community. The work of CER, examining the effectiveness of treatments in real-world settings, including watching for harms to patients with multiple comorbidities, is highly relevant to the CTSA initiative.

_______________

3 For more information, see http://effectivehealthcare.ahrq.gov (accessed September 8, 2010).

4 Since this paper was originally authored, the American Recovery and Reinvestment Act of 2009 provided $1.1 billion for activities related to CER, including $400 million to the Office of the Secretary of the Department of Health and Human Services, $300 million to AHRQ, and $400 million to the NIH.

One challenge for CER is that it currently exists as a heterogeneous field rather than a specific discipline. While this heterogeneity is probably appropriate for an emerging field of study and effort, it also makes planning for workforce needs difficult. Investigators and staff in CER come from many backgrounds, including clinical medicine, clinical epidemiology, biomedical informatics, biostatistics, and health policy. They work in a number of settings, including academic units, university centers, contract research organizations, government, and industry. It is not known how well the current workforce could absorb a marked increase in demand for CER activities. Finally, there is no specific entity that funds CER, despite calls for one (Wilensky, 2006).

Nonetheless, a variety of stakeholders must have access to the best comparative information about medical tests and treatments (Drummond et al., 2008). Physicians need to be able to assess the benefits and harms of various clinical decisions for their patients, who are themselves becoming increasingly involved in decision making. Likewise, policy makers must weigh the evidence for and against coverage of increasingly expensive technologies, especially when marginal costs vastly exceed marginal benefits.

Therefore this report was approached with the assumption that CER should be encouraged as part of the larger learning health system. The authors of this report, leaders with expertise in major known areas of CER, were recruited to define the scope of CER, answer a set of questions concerning the workforce, and work together to develop a framework and a plan for future work. The first task was to reach consensus among ourselves on the components of CER. The next task was to develop a framework for enumerating the workforce and to propose an agenda for defining its required size, skill set, and educational requirements. A draft of this report was presented on July 30–31, 2008, at the workshop described in these proceedings. A reactor panel provided initial feedback, and additional experts were subsequently contacted; all are listed in footnotes 1 and 2 of this chapter. This input led to the final framework and agenda for further research and policy making related to the CER workforce.

Framework for Comparative Effectiveness Research Workforce Characterization

The scope of CER was defined by developing a figure that depicts the subareas of CER and that is organized around the flow of information and knowledge. Next a preliminary model was developed for how workforce needs might be quantified. The knowledge and challenges in each area were elaborated, followed by a discussion of the issues that will arise with efforts to expand the scope and capacity of CER.

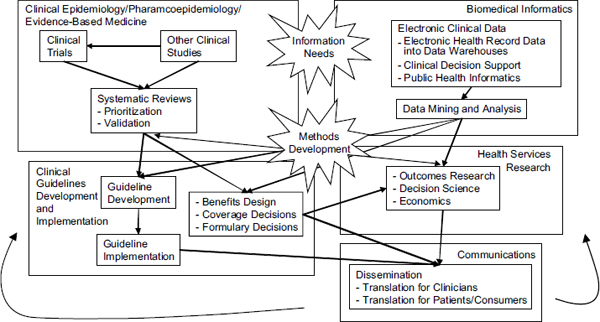

As illustrated in Figure 4-1, information and knowledge originate from clinical trials and other clinical research studies, particularly studies using registries, EHRs, and practice network data, as well as pharmacoepidemiologic studies. This information is synthesized in comparative effectiveness reviews and technology assessments, sometimes including meta-analyses, decision analyses, or economic analyses, which inform the development of evidence-based clinical guidelines and decisions about coverage. HSR evaluates the optimal delivery and the societal health and economic effects of the corresponding changes in the health system. Finally, the information and knowledge are disseminated to both patients and professionals. Each of these components cycles back to its predecessors, and the continuously learning health system maintains a constant interaction among them.

It was also recognized that there are many areas of overlap among the components. For example, experts in biomedical informatics can work synergistically with clinical epidemiologists to determine data requirements and information needs for CER studies. Likewise, clinical guideline developers and implementers can collaborate with health services researchers in technology assessment.

Characterization of Specific Components of the Workforce

The next task was to develop a framework for enumerating the workforce and to make some estimates of its necessary size. Each author was assigned one of the major components of Figure 4-1 and asked to address the workforce needs in that particular area, taking into account the following questions:

- What are the issues and problems for the workforce at present?

- What skill set is needed to address current issues and problems?

- Where are these skills currently developed or obtained?

- What will be the projected needs as CER scales up in healthcare settings? Do we need more people? Do we need to further develop current capacity? What are the training needs?

- What are the recommendations for assessing and measuring the needs for the current and future workforce?

Clinical Epidemiology

A core concept underlying CER is that there is a continuum that begins with research evidence, then moves to systematic review of the overall body of evidence, and then to the interpretation of the strength of the overall evidence that can be used for developing credible clinical practice guidelines (IOM, 2008). While they overlap with other disciplines, the skills required to conduct CER are not widely taught. This section focuses on the four types of research involved in CER analyses as well as the personnel needed to conduct those analyses: (1) practical clinical trials and conventional clinical research, (2) systematic evidence reviews and technology assessment, (3) pharmacoepidemiologic research, and (4) clinical epidemiology methods research.

FIGURE 4-1 Key activity domains for comparative effectiveness research. Workforce development will be critical to support the many primary functions within each of these domains as well as to foster the cross-domain interactions and activities identified (e.g., methods development, identifying information needs).

Practical Clinical Trials and Conventional Clinical Research

A wide variety of studies are useful in CER (Chou et al., 2010). Most would agree, however, that increasing the amount of CER will require expanding the capability for conducting practical, head-to-head “effectiveness” trials. Such trials are distinct from the so-called efficacy or explanatory clinical trials performed in the regulatory approval process. Explanatory trials, which focus on comparison with placebo treatments in highly selected subjects, are a necessary step in evaluating new therapies, but they are usually not an adequate guide for clinical practice. It can be difficult to determine from such trials—and from the systematic reviews that aggregate them—what the “best” treatments are. In contrast, effectiveness trials, such as practical clinical trials, compare treatments in a head-to-head manner in settings that can be applied to real-world clinical practice. The characteristics that distinguish effectiveness from explanatory (efficacy) studies are listed in Box 4-1 (Gartlehner et al., 2006).

Tunis and colleagues note a number of disincentives to performing head-to-head comparisons of treatments, such as the disease-oriented nature of the NIH and the commercial motivations of pharmaceutical and other companies (Tunis et al., 2003). Indeed, few such trials have been performed. In a recent survey, Luce and colleagues identified fewer than 20 such trials in the literature (Luce et al., 2008). A frequently stated goal for comparative effectiveness is to increase the number of effectiveness trials performed each year to 50. As discussed below, accomplishing this goal will require methodological advances in designing and conducting studies as well as training programs devoted to this new type of clinical trial research.

BOX 4-1

Characteristics Distinguishing Effectiveness from Explanatory Studies

- Populations in primary care or general population

- Less stringent eligibility criteria

- Health outcomes

- Long study duration; clinically relevant treatment modalities

- Assessment of adverse events

- Adequate sample size

SOURCE: Gartlehner et al. (2006).
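Box 4-1 can be read as a checklist. The Python sketch below records each characteristic as a yes/no field and counts how many a given trial design satisfies. The field names and the `threshold` cutoff are hypothetical: the box itself states no scoring rule, so any cutoff used to label a trial as an effectiveness study is an assumption that the user must choose and justify.

```python
from dataclasses import dataclass, fields


@dataclass
class TrialDesign:
    """Yes/no answers for the six characteristics listed in Box 4-1 (field names are ours)."""
    primary_care_or_general_population: bool
    less_stringent_eligibility: bool
    health_outcomes: bool
    long_duration_relevant_modalities: bool
    assesses_adverse_events: bool
    adequate_sample_size: bool


def effectiveness_score(design: TrialDesign) -> int:
    """Count how many Box 4-1 characteristics the design has."""
    return sum(bool(getattr(design, f.name)) for f in fields(design))


def classify(design: TrialDesign, threshold: int) -> str:
    """Label a design 'effectiveness' if it meets at least `threshold` characteristics.

    The threshold is a hypothetical, user-chosen cutoff; Box 4-1 gives no scoring rule.
    """
    n_criteria = len(fields(design))
    if not 0 <= threshold <= n_criteria:
        raise ValueError(f"threshold must be between 0 and {n_criteria}")
    return "effectiveness" if effectiveness_score(design) >= threshold else "explanatory"


# Hypothetical placebo-controlled trial in a highly selected population that
# monitors adverse events and is adequately powered.
efficacy_trial = TrialDesign(
    primary_care_or_general_population=False,
    less_stringent_eligibility=False,
    health_outcomes=False,
    long_duration_relevant_modalities=False,
    assesses_adverse_events=True,
    adequate_sample_size=True,
)
print(effectiveness_score(efficacy_trial))    # 2
print(classify(efficacy_trial, threshold=5))  # explanatory (cutoff of 5 is illustrative)
```

A count-based checklist keeps each judgment explicit and auditable, which matters when reviewers must explain why a study was treated as evidence of real-world effectiveness rather than efficacy.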

Because so few effectiveness trials have been performed, training in how to design and conduct them is not widely available. While there is overlap, the expertise and the team composition required for practical clinical trials differ from what is required for smaller efficacy trials. For example, practical clinical trials will need more streamlined, efficient procedures for recruitment and monitoring than those used in large efficacy trials (Califf, 2006). They should take advantage, for instance, of Web-based tools for trial management and the potential for using EHR systems to identify, recruit, and allocate subjects to treatment arms within and across health systems (Bastian, 2005; Langston et al., 2005; Reboussin and Espeland, 2005). Investigators also need to develop methods for involving consumers and, for trials conducted in practice networks, office-based clinicians in the design and conduct of trials. Finally, some practical trials require specialized statistical skills (Berry, 2006).
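The paragraph above mentions using EHR systems to identify, recruit, and allocate subjects to treatment arms. As a minimal sketch, assuming a hypothetical and heavily simplified EHR extract (`PatientRecord` with an age and a set of diagnosis codes), the Python code below screens records against broad eligibility criteria and then assigns eligible patients to two arms by permuted-block randomization. Permuted blocks are a common allocation technique chosen here for illustration only; the text does not specify an allocation method, and a real trial would also need consent, allocation concealment, and integration with an actual EHR interface, none of which is shown.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass
class PatientRecord:
    """Hypothetical, simplified EHR extract; real EHR schemas differ widely."""
    patient_id: str
    age: int
    diagnosis_codes: set[str]


def is_eligible(rec: PatientRecord, target_code: str, min_age: int = 18) -> bool:
    """Broad, practical-trial-style eligibility: an adult with the target diagnosis."""
    return rec.age >= min_age and target_code in rec.diagnosis_codes


def permuted_block_allocation(ids, arms=("A", "B"), block_size=4, seed=None):
    """Assign each id to an arm using randomly permuted blocks.

    Within every complete block each arm appears equally often, which keeps
    arm sizes balanced as enrollment proceeds.
    """
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)
    allocation = {}
    block = []
    for pid in ids:
        if not block:  # start a new shuffled block when the previous one is used up
            block = list(arms) * (block_size // len(arms))
            rng.shuffle(block)
        allocation[pid] = block.pop()
    return allocation


# Hypothetical records; "E11" serves only as an example diagnosis code.
records = [PatientRecord(f"p{i}", 30 + i, {"E11"}) for i in range(6)]
records.append(PatientRecord("p_minor", 16, {"E11"}))  # fails the age criterion
records.append(PatientRecord("p_other", 50, {"I10"}))  # lacks the target diagnosis
eligible = [r.patient_id for r in records if is_eligible(r, "E11")]
arms = permuted_block_allocation(eligible, seed=42)
print(eligible)  # ['p0', 'p1', 'p2', 'p3', 'p4', 'p5']
```

Blocking keeps the arms balanced even if enrollment stops early, which is useful when recruitment runs continuously across practices in a health system.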

Comparative Effectiveness Reviews and Technology Assessments

Comparative effectiveness reviews are a cornerstone of evidence-based decision making (Helfand, 2005). These reviews follow the explicit principles of systematic reviews, but they are more comprehensive and multidisciplinary, requiring a wider range of expertise. As noted in the EPC Guide to Conducting Comparative Effectiveness Reviews, comparative effectiveness reviews “expand the scope of a typical systematic review, which focuses on the effectiveness of a single intervention, by comparing the relative benefits and harms among a range of available treatments or interventions for a given condition. In doing so, [comparative effectiveness reviews] more closely parallel the decisions facing clinicians, patients, and policy makers, who must choose among a variety of alternatives in making diagnostic, treatment, and healthcare delivery decisions” (Methods Reference Guide, 2008). While some technology assessments are similar in scope to a comparative effectiveness review, most are smaller, more focused reviews that require a narrower range of expertise.

Within the emerging, somewhat poorly defined field of CER, conducting comparative effectiveness reviews and technology assessments is the most developed component. In contrast with other components of CER, guiding principles and explicit guidance for the conduct of comparative effectiveness reviews are available and are widely used. Examples include guidance tools from the UK National Institute for Health and Clinical Excellence (NICE)5 and the recently released EPC Guide (Methods Reference Guide, 2008).

The underlying disciplines for conducting comparative effectiveness reviews are clinical epidemiology and clinical medicine. Individual comparative effectiveness reviews are usually conducted by project teams led by a project principal investigator under the oversight of a center director. The center director must have exceptional, in-depth disciplinary knowledge and skills in the underlying core disciplines of clinical epidemiology, clinical medicine, and medical decision making. The director should also have applied experience in addition to theoretical knowledge of these areas. For example, it is essential that the director have experience working with guideline panels, coverage committees, health plans, consumer groups, and other bodies that use evidence in decision making. Without such leadership, comparative effectiveness reviews may miss the mark, failing to address the information needs of the target audiences.

It is also important that the director, or other senior investigators, have experience conducting clinical research studies and not just appraising them. Qualifications for center directors generally include an M.D. degree with additional training leading to a master’s degree plus a record of academic productivity representing outstanding contributions in a field such as clinical research design, literature synthesis, statistics, pharmacoepidemiology, or medical decision making. The most important competencies of the project leader are an understanding of clinical research study designs and clinical decision making. Collectively, the project leader and other investigators and staff must have expertise in various areas, such as interviewing experts (including patients) to identify important questions for the review to address, protocol development, project management, literature retrieval and searching, formal methods to assess the quality and applicability of studies, critical appraisal of studies, quantitative synthesis, and medical writing.

This workforce can be characterized based on the experience of the AHRQ EPCs. Through the Effective Health Care Program, the EPCs have completed 15 comparative effectiveness reviews over a period of approximately 3 years. The average cost of an AHRQ comparative effectiveness review is $250,000 to $350,000, depending on its complexity. In these centers, investigators usually have a Ph.D. in epidemiology, pharmacoepidemiology, or biostatistics, or an M.D. with research fellowship training and a master’s degree in a pertinent field. Ideally, all participants should have experience conducting systematic reviews and an understanding of methodological research on systematic reviews, clinical epidemiology, meta-analysis, or cost-effectiveness analysis. Most importantly, they should be able to work with healthcare decision makers who need information to make better-informed decisions. They should be able to formulate problems carefully, often working with technical experts (including patients and clinicians) to develop an analytic framework and key questions that address the uncertainties underlying controversy or variation in practice. They should take a broad view of eligible evidence, recognizing that the kinds of evidence included in a review depend on the questions asked and on the evidence available to answer them. Finally, they should understand that although systematic reviews do not in themselves dictate decisions, they can help decision makers clarify what is known and what is unknown about the issues surrounding important decisions and, in that way, affect both policy and clinical practice (Helfand, 2005).

_______________

5 See http://www.nice.org.uk/ (accessed September 8, 2010).

Also required for systematic reviews are research librarians who have skills in finding evidence for systematic reviews by using electronic bibliographic databases, citation-tracking resources, regulatory agency data repositories, practice guidelines, unpublished scientific research, Web sites and proprietary databases, bibliographic reviews, expert referrals, and publications of meeting proceedings, as well as hand-searching of key journals. Statisticians are needed who have skills in providing advice and critique on the statistical methods used in published and unpublished clinical studies; in conducting statistical analyses, including meta-analysis and other standard analysis and computation; and in preparing statistical reports, including figures and tables. EPCs also require editors who can improve the readability and standardization of evidence reports. In addition, EPCs require research support staff. Research associates must have the ability to critically assess the effectiveness and safety of medical interventions; experience with systematic reviews of the medical literature; knowledge of the fundamentals of epidemiology, study design, and biostatistics; facility in conceptualizing and structuring tasks; and experience with clinical research methods. Research assistants need skills in maintaining bibliographies; coordinating peer review contacts and documents; and assisting in the development of summary reports, figures, tables, and final reports using particular style guidelines. Table 4-1 shows the typical staffing for a CER evidence report funded by AHRQ for a 1-year period.

Although the number of systematic reviews that is necessary may be among the easier of the “how much” questions to answer, there is no clear answer. The Cochrane Collaboration6 originally estimated a need for 20,000 reviews; to date, it has completed 3,539 and developed 1,868 protocols for reviews that are proposed or under way. The AHRQ EPCs have produced 168 evidence reports and 16 technical reviews.7 The Drug Effectiveness Review Project8 produced 28 original reports and updated 45 reports in its first 3 years.

_______________

6 See http://www.cochrane.org/ (accessed September 8, 2010).

TABLE 4-1 Required Staffing for a Comparative Effectiveness Research Evidence Report

| Role | Activity | Training | Full-Time Equivalents (FTE) |
|---|---|---|---|
| Center director | Leadership | Clinical epidemiology, clinical medicine, decision making | 0.05–0.3 |
| Principal investigator | Leadership | Clinical epidemiology and clinical medicine | 0.4 |
| Co-investigator | Domain expertise | Clinical | 0.2 |
| Co-investigators | Methods expertise | Clinical + fellowship or master’s or Ph.D. | 0.4–0.6, depending on scope |
| Research associate | Critical appraisal | M.S./M.P.H./other master’s | 1.0 |
| Research assistant | Data management | B.S. or M.S. | 0.5 |
| Librarian | Literature searching | M.L.S. | 0.05 |
| Statistician | Statistical analysis | M.S. or Ph.D. | 0.1 |
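Table 4-1 lends itself to the kind of simple arithmetic a workforce model would need. The Python sketch below sums the per-role full-time equivalents to get the staffing for one evidence report and scales that by a number of reports per year. It assumes (a simplification for illustration, not the authors' model) that each report needs its own team for the full year and that the plural "Co-investigators" row already gives the combined FTE for that role; the figure of 10 reports per year in the usage line is purely illustrative.

```python
# FTE ranges (low, high) per role, transcribed from Table 4-1.
# Single values in the table are entered as (value, value).
TABLE_4_1_FTE = {
    "Center director": (0.05, 0.3),
    "Principal investigator": (0.4, 0.4),
    "Co-investigator (domain expertise)": (0.2, 0.2),
    "Co-investigators (methods expertise)": (0.4, 0.6),
    "Research associate": (1.0, 1.0),
    "Research assistant": (0.5, 0.5),
    "Librarian": (0.05, 0.05),
    "Statistician": (0.1, 0.1),
}


def fte_per_report(table=TABLE_4_1_FTE):
    """Return the (low, high) total FTE for one evidence report."""
    low = sum(lo for lo, _ in table.values())
    high = sum(hi for _, hi in table.values())
    return round(low, 2), round(high, 2)


def fte_for_reports(reports_per_year, table=TABLE_4_1_FTE):
    """Scale one report's staffing, assuming each report needs its own 1-year team."""
    low, high = fte_per_report(table)
    return round(reports_per_year * low, 2), round(reports_per_year * high, 2)


print(fte_per_report())     # (2.7, 3.15)
print(fte_for_reports(10))  # illustrative only: 10 reports per year
```

Under these assumptions one report occupies roughly 2.7 to 3.15 FTE for a year, so ten reports per year would call for roughly 27 to 31.5 FTE, before counting updates or staff shared across projects.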

Of course, systematic reviews are not static documents and, as such, require updating when new evidence becomes available. As increasing numbers of reports are completed, the workforce needs will shift from producing reports to updating them. Shojania et al. have noted that systematic reviews published in the medical literature have a half-life of about 5.5 years, with about 23 percent requiring updating within 2 years of publication (Shojania et al., 2007). Moher et al. surveyed the literature on signals that updates are required and noted that few robust methods exist for detecting them. It is clear, however, that the growing number of systematic reviews being performed will require updating as new evidence from CER and related work becomes available (Moher et al., 2007).
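The half-life figure can be turned into a rough estimate of update workload. The Python sketch below assumes, purely for illustration, that reviews go out of date at a constant rate (exponential decay) with the 5.5-year half-life reported by Shojania et al.; this is not how the cited study derived its estimate. Under that assumption the share of reviews needing an update within t years is 1 - 0.5^(t/5.5), and a stock of N current reviews generates about N * ln(2) / 5.5 updates per year.

```python
import math

# Median survival ("half-life") of systematic reviews reported by Shojania et al. (2007).
HALF_LIFE_YEARS = 5.5


def fraction_needing_update(years: float, half_life: float = HALF_LIFE_YEARS) -> float:
    """Share of reviews expected to need updating within `years`.

    Assumes a constant hazard (exponential decay); this is a simplification,
    not the method used in the cited study.
    """
    if years < 0:
        raise ValueError("years must be non-negative")
    return 1.0 - 0.5 ** (years / half_life)


def updates_per_year(stock: int, half_life: float = HALF_LIFE_YEARS) -> float:
    """Approximate annual update workload for `stock` current reviews.

    Under the same assumption the hazard rate is ln(2) / half_life per year.
    """
    return stock * math.log(2) / half_life


print(f"{fraction_needing_update(2):.1%}")  # 22.3%
print(round(updates_per_year(1000)))        # 126
```

With t = 2 the simple model gives about 22 percent, close to the 23 percent reported within 2 years, and a stock of 1,000 reviews (an illustrative number) would imply roughly 126 updates per year.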

As the existence of the Cochrane Collaboration, an international effort, indicates, efforts in other countries may be useful in various areas of CER, especially systematic reviews, which are based on the scientific literature. For example, NICE produces evidence reports for health care, and programs in Canada and Sweden produce them as well.

_______________

7 See http://www.ahrq.gov/clinic/epcix.htm (accessed September 8, 2010).

8 See http://www.ohsu.edu/drugeffectiveness/ (accessed September 8, 2010).

Current training programs are probably adequate to absorb a moderate increase in demand for systematic reviews, but significant expansion of systematic reviews will require more capacity, which will inevitably lead to competition with other clinical research needs. The current training pathways are heterogeneous, and schools of public health and medicine could be much more explicit in developing tracks and certificate programs in systematic review and related areas. These could exist within degree programs in clinical effectiveness, epidemiology, informatics, and so forth. There is a substantial need, however, for biostatisticians and methodologists (who may be in epidemiology or other disciplines) to advance meta-analytic methods in systematic reviews.

Pharmacoepidemiology

An additional area where particular expertise will be needed is pharmacoepidemiology. Recent efforts of the Food and Drug Administration (FDA) to expand drug safety monitoring will require the employment of dozens of pharmacoepidemiologists. The Food and Drug Administration Amendments Act of 20079 calls for expanding the Prescription Drug User Fee Act to devote more effort to drug safety, including in areas such as pharmacoepidemiology (Kirschner et al., 2008). It has been estimated that this could require an additional 80 to 100 pharmacoepidemiologists (Mullin, 2007), creating competition with the growing CER enterprise for pharmacoepidemiologists who could otherwise perform CER work.

An even more challenging problem is that the number of Pharm.D.’s and Ph.D.’s specifically trained in pharmacoepidemiology in North America is small and inadequate to meet growing needs. Meeting the additional demand will take time and a severalfold increase in graduates, which will require expanding existing programs and establishing new ones. No one knows how easily individuals trained in other subfields of epidemiology (infectious disease, cardiovascular, environmental, and so on) can be retrained as pharmacoepidemiologists. Device safety and CER for devices will be even more challenging, given the specialized nature of devices and the paucity of high-quality randomized controlled trials (RCTs). Not all pharmacoepidemiology programs examine devices, so the scope of the field will need to expand at the same time that training is being expanded.

_______________

9 Food and Drug Administration Amendments Act of 2007. 2007. HR 3580, 110th Cong.

Clinical Epidemiology Methods Research

Clinical epidemiology integrates epidemiologic methods with knowledge of clinical practice and decision making in order to develop clinical research methodology and to appraise clinical research (Fletcher and Fletcher, 2005; Haynes et al., 2005). Its purpose is to develop and apply methods of clinical observation that lead to valid conclusions. Few senior clinical epidemiologists are available, and this shortage matters because it limits the capacity to train clinical researchers, conduct practical clinical trials and comparative effectiveness reviews, and develop new methods for clinical research.

To sum up the areas considered so far, analysis of the relevant data on clinical epidemiology, clinical research, pharmacoepidemiology, and EBM shows that the required workforce is likely to be substantial, larger than can be supplied by those currently trained, and dependent on the volume of systematic reviews, clinical trials, and other CER-related work that policy makers and others believe must be funded. Furthermore, for all categories of workers, and especially physicians, CER will compete both for clinical researchers of various types and for clinical practitioners, of whom there is already a looming shortage (Dall et al., 2006). The shortage is most acute in primary care (Goodman, 2008), the area where the need is greatest and from which CER physician researchers are likely to be drawn.

Biomedical Informatics

Another discipline with many contributions to make in CER is biomedical informatics (BMI). This discipline focuses on the acquisition, storage, and use of information in health care and biomedical research, usually assisted by information technology (IT) (Hersh, 2002). Using clinical data for CER is one of a number of “reuses,” or secondary uses, of data derived from the EHR and other sources of patient information (Safran et al., 2007). An example of how this has been done is provided in the learning health system workshop summary (Weissberg, 2007). Other potential reuses of EHR data include public health surveillance, health information exchange, clinical and translational research, and personal health records.

The reuse of clinical data currently accounts for a negligible portion of the effort that healthcare delivery organizations devote to clinical IT implementation. Most of the effort goes to deploying systems and is focused on their optimal use for direct clinical care. Furthermore, many individuals who work in the collection or storage of data potentially useful for CER also have other, and sometimes more prominent, roles in the workforce.

However, new training and skills will be required as additional reuse of clinical data is undertaken.

It must be noted that much of the data in EHR systems are not of research quality. Clinical documentation is often not a high priority for clinicians. Forms and other types of clinical data capture can be cumbersome and time consuming for busy clinicians to use, and clinicians often do not appreciate the importance of entering high-quality data as part of routine clinical care. BMI workers must therefore be well attuned to the needs of CER and related disciplines if they are to meet the informatics needs of CER.

Of course, implementing EHRs and reusing their data are not the only areas of BMI that are of importance to CER. Biomedical informaticians have skills that are needed in a variety of other areas, including the following:

- information needs assessment;

- data mining, text mining, and other forms of knowledge discovery (e.g., tools that help streamline the production of systematic reviews) (Cohen et al., 2006); and

- ontology development and knowledge management (e.g., projects such as the Biomedical Research Integrated Domain Group (BRIDG) Model and other efforts to improve BMI in clinical research) (Fridsma et al., 2008).

Before turning to the informatics workforce for CER specifically, it is useful to look at the HIT workforce more generally. Most research assessing the HIT workforce has looked only at specific settings or professional groups. In developed countries, there are generally three categories of professionals who make up the HIT workforce:

- IT professionals—usually with a technical background, such as computer science or management information systems,

- health information management professionals—the allied health profession historically focused on medical records, and

- biomedical informatics professionals—working at the intersection of IT and health care, usually with a formal background in one or both.

Probably the most comprehensive assessment of the HIT workforce was carried out in England (Eardley, 2006). This analysis estimated that the HIT workforce comprised 25,000 full-time equivalents (FTEs) out of 1.3 million workers in the National Health Service, or about 1 IT staff member per 52 non-IT workers. Studies done in the United States have generally focused on one group in the workforce, such as IT or health information management professionals. Gartner Research assessed IT staff in integrated delivery systems of varying size (Gabler, 2003). Across the 85 organizations studied, the ratio was consistently about 1 IT staff member per 56 non-IT employees, similar to the ratio noted above for England.
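As a rough check on the ratio reported for England, the sketch below divides the NHS headcount by the number of IT FTEs. It uses only the two figures quoted above; the helper name `workers_per_it_fte` is hypothetical. Whether IT staff are first subtracted from the 1.3 million total changes the result by only one worker per IT FTE, so the reported figure of about 1 per 52 holds up under either reading.

```python
def workers_per_it_fte(total_workers: float, it_fte: float, exclude_it: bool = True) -> float:
    """Workers per IT FTE (hypothetical helper for illustration).

    If exclude_it is True, IT staff are subtracted from the total first,
    giving the ratio of non-IT workers to IT FTEs.
    """
    if it_fte <= 0:
        raise ValueError("IT FTE count must be positive")
    others = total_workers - it_fte if exclude_it else total_workers
    return others / it_fte


NHS_WORKERS = 1_300_000  # total NHS workforce quoted in the text
NHS_IT_FTE = 25_000      # HIT workforce (FTEs) quoted in the text

print(round(workers_per_it_fte(NHS_WORKERS, NHS_IT_FTE, exclude_it=False)))  # 52
print(round(workers_per_it_fte(NHS_WORKERS, NHS_IT_FTE)))                    # 51
```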

More recently, Hersh and Wright used the Healthcare Information and Management Systems Society (HIMSS) Analytics Database¹⁰ to analyze hospital IT staff (Hersh and Wright, 2008). This database contains self-reported data from about 5,000 U.S. hospitals, including elements such as number of beds, total staff FTEs, total IT FTEs (overall and by major IT job category), applications, and the vendors used for those applications. A recent addition to the HIMSS Analytics Database is the Electronic Medical Record (EMR) Adoption Model, which uses eight stages to rate how far hospitals have gone toward creating a paperless record environment (EHR Adoption Model, 2007). “Advanced” HIT is generally assumed to be stage 4, which includes computerized physician order entry (CPOE) and other forms of clinical decision support that have been shown to be associated with improvements in the quality and safety of health care (Chaudhry et al., 2006).

Hersh and Wright found the overall IT staffing ratio to be 0.142 IT FTE per hospital bed. Extrapolating to all hospital beds in the United States, this suggests a total current hospital IT workforce of 108,390 FTEs. They also found that average IT staffing ratios varied with EMR Adoption Model score. Average staffing ratios generally increased with adoption score, but hospitals at stage 4 had a higher average staffing ratio than hospitals at stages 5 or 6. If all hospitals in the United States were operating at the same staffing ratio as stage 6 hospitals (0.196 IT FTE per bed), a total of 149,174 IT FTEs would be needed to provide coverage, an increase of 40,784 FTEs over the current hospital IT workforce.
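The Hersh and Wright extrapolation multiplies a per-bed staffing ratio by the national bed count. The source does not state the bed count used, so the sketch below works backward from the reported totals; it uses only figures quoted above, and `implied_beds` is a hypothetical helper. The two implied bed counts differ by roughly 0.3 percent, plausibly because the per-bed ratios are rounded to three decimal places, so recomputing a total from a rounded ratio will not reproduce the reported figures exactly.

```python
"""Back-of-envelope check of the hospital IT staffing extrapolation.

All inputs are figures quoted in the text; the bed count is inferred, not given.
"""


def implied_beds(total_fte: float, fte_per_bed: float) -> float:
    """Bed count implied by a total FTE figure and a per-bed staffing ratio."""
    return total_fte / fte_per_bed


CURRENT_FTE = 108_390   # current U.S. hospital IT workforce (reported)
STAGE6_FTE = 149_174    # workforce if every hospital staffed like stage 6 (reported)
CURRENT_RATIO = 0.142   # overall IT FTE per bed (reported, rounded)
STAGE6_RATIO = 0.196    # stage 6 IT FTE per bed (reported, rounded)

increase = STAGE6_FTE - CURRENT_FTE
pct_increase = 100 * increase / CURRENT_FTE

print(f"Additional IT FTEs: {increase:,}")        # Additional IT FTEs: 40,784
print(f"Relative increase: {pct_increase:.1f}%")  # Relative increase: 37.6%
print(f"Beds implied by current figures: {implied_beds(CURRENT_FTE, CURRENT_RATIO):,.0f}")
print(f"Beds implied by stage 6 figures: {implied_beds(STAGE6_FTE, STAGE6_RATIO):,.0f}")
```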

No studies have quantified the number of BMI professionals, although some studies have qualitatively assessed certain types, such as chief medical information officers (Leviss et al., 2006; Shaffer and Lovelock, 2007). The value of BMI professionals is also suggested by studies showing flawed implementations of HIT leading to adverse clinical outcomes (Han et al., 2005), outcomes that may have been preventable by applying known best practices from informatics (Sittig et al., 2006), and by other analyses showing that most of the benefits from HIT have been limited to a small number of institutions with highly advanced informatics programs (Chaudhry et al., 2006). Others have documented the importance of “special people” in successful HIT implementations (Ash et al., 2003).

With this general framework, it is possible to discuss the needs of the informatics workforce for CER. One place to start is with the institutions funded by the CTSA program. Many of these institutions have developed research data warehouses that make clinical data available for reuse. Workforce needs include people with IT skills in deploying EHR systems, relational databases, and networked applications, as well as people with a more clinical orientation who will actually carry out CER activities. The skill set for CER varies by job. Table 4-2 lists the job titles, job responsibilities, and degrees and skills required for various HIT positions. Unfortunately, there is very little standardization in these jobs, and there is minimal overlap in the skill sets. The jobs can be broadly divided into IT jobs (more technical, requiring less clinical expertise) and BMI jobs (less technical, requiring more clinical expertise).

_______________

10 This database is derived from the Dorenfest IDHS+ Database; see http://www.himssanalytics.com (accessed September 22, 2010).

Where will these BMI skills be developed or obtained? Although some technical and clinical skills are acquired through formal education, much skill development in BMI currently takes place on the job. Because IT and BMI change rapidly, many skills must be learned on the job simply because the relevant applications did not exist when the individual was educated or trained. Employers of IT and BMI personnel repeatedly state that “soft skills” are essential. These include the ability to work in groups and to communicate effectively, both orally and in writing. BMI personnel in particular are often viewed as functioning in a “bridge” capacity between IT and clinical personnel.

What are the projected needs as CER is scaled up in healthcare settings? The research by Hersh and Wright cited above indicates that the need for IT personnel increases with the sophistication of EHR adoption, perhaps leveling off once CPOE and clinical decision support have been implemented. The Hersh and Wright estimates do not include any BMI personnel, because their data source did not include data on these personnel. Likewise, because the data source did not identify staff who specifically perform CER, the estimates do not explicitly account for CER activities. CER informatics work will require both IT and BMI personnel. One common assertion concerning BMI personnel is that there should be one physician and one nurse trained in BMI in each of the 5,000+ hospitals in the United States (Safran and Detmer, 2005). This has led to the 10×10 (“ten by ten”) program of the American Medical Informatics Association, which aims to provide a detailed introduction to BMI to 10,000 individuals by the year 2010 (Hersh and Williamson, 2007).

Of course, there are other needs for BMI professionals and researchers as well. The areas described above, such as information needs assessment, data and text mining, and ontology development and knowledge management, will require even more personnel. Indeed, the BMI field is evolving rapidly, with growing attention paid to the need for professional development and recognition (Hersh, 2006, 2008).

TABLE 4-2 Job Titles, Responsibilities, and Training Required for Health Information Technology Professionals

| Job Title | Job Responsibilities | Degrees and Skills Required |
|---|---|---|
| Information Technology (IT) | | |
| Chief information officer | Oversees all IT operations of organizations | IT, computer science (CS), or management information systems (MIS) |
| Director, clinical research informatics | Oversees clinical research applications, including comparative effectiveness research (CER) | Biomedical informatics (BMI) |
| Data warehouse manager | Oversees development of research data warehouse | IT, CS, or MIS |
| Web designer | Designs Web front end for data access systems | IT, CS, or MIS |
| Web engineer | Deploys Web back end for data access systems | IT, CS, or MIS |
| Research applications programmer | Develops CER and other applications | IT, CS, or MIS |
| Database administrator | Administers research data warehouse | IT, CS, or MIS |
| Project manager | Manages CER and other projects | IT, CS, or MIS |
| Biomedical Informatics | | |
| Chief medical information officer | Oversees clinical IT applications, including research data warehouse | BMI |
| Physician leads | Provide leadership in implementation and use of electronic health records | BMI, formally or informally |
| Medical informatics researcher | Oversees data mining activities for CER | BMI |
| Medical informatics researcher | Oversees information needs assessment for CER | BMI |
| Research analyst | Works with medical informatics researchers to collect and analyze data | Variety |

More research is needed to better characterize the optimal IT, health information management, and BMI staffing for general operational EHR and related systems as well as for CER activities specifically. Such research must measure not only health IT practices as they are now, but also what they may become as the implementation agenda advances. It must sample a wide variety of health organizations to determine, quantitatively (e.g., number of people and the skills and education they require) as well as qualitatively (e.g., needs that surveys do not capture), what they do now, what they plan to do in the future, and what they should be doing to achieve an optimal learning health system.

Clinical Guidelines Development and Implementation

Practice guidelines represent an effector arm of the comparative effectiveness process (IOM, 1990). Once scientific studies are performed and their outcomes are systematically reviewed, multidisciplinary guideline development panels are convened to transform the summarized knowledge into recommendations about appropriate care. Those recommendations are then disseminated and presented to many different types of teams for implementation. Because these terms are used inconsistently, they are defined here as follows. Guideline authoring is the translation of scientific evidence and expert consensus into policy statements. Dissemination refers broadly to the publication and spread of those policy statements. Guideline implementation refers to the operationalization of policies in clinical settings, with the goals of improving specific processes and outcomes of care and of addressing specific barriers and challenges to uptake.

Ideally, guidelines are produced by multidisciplinary teams that together provide a complete skill set. Unfortunately, these teams are often convened for a single purpose, and skills and knowledge accumulated by team members are not reused in subsequent guideline development efforts.

Guideline development requires topic (domain) expertise that varies from one topic to the next. To create evidence-based guidelines, knowledge must be distilled from the scientific literature and combined with expert judgment. Authors typically work from evidence tables, meta-analyses, and systematic reviews to summarize the facts that are known about a topic. Such evidence summaries may be sought externally or produced by experts within the team itself. Often, however, there are “holes” in the evidence base that must be addressed, either by eliciting expert opinion and experience or by developing an agenda for further research.

Even within a single guideline topic there are often multiple clinical perspectives that should be represented, such as primary care and specialty care, medical and surgical approaches, and the insights of paraprofessionals. Guideline authoring teams also require two types of methodologists: those who can help topic experts understand the evidence and its quality, and those who understand the guideline development process, including how evidence quality, benefits, and risks should be weighed to determine the strength of a recommendation. Furthermore, the perspectives of patients who have the condition of interest are often invaluable in formulating recommendations that accommodate the values of the people with the greatest stake. The process of formulating policy from scientific evidence requires yet another skill set. Finally, skills in teambuilding, mediation, project management, and leadership are essential to ensure that a well-designed product emerges from the process in a reasonable time.

Where are these skills currently developed or obtained? Expertise in clinical care comes most often from clinical training and experience. Expertise in judging evidence may come from coursework in epidemiology and study design. For clinicians, expertise in policy development usually derives from experience, but it may also be obtained through formal studies in health policy and public policy. Skill in implementing guideline recommendations is developed in different ways depending on the specific intervention. Expertise in education, evidence-based decision making, marketing, psychological conditioning, informatics, social and organizational behavior, regulation, financial analysis, and healthcare administration may all be useful in various situations, depending on the implementation strategies selected. Also, as noted above, the experience gained in authoring or implementing a specific guideline is often lost because it is not reused.

Because guideline authors tend to focus on policy creation, they pay little attention to how those policies will be implemented. In many situations, authors deliberately introduce vagueness and underspecification because they are unsure how to address such things as gaps in evidence, lack of consensus, and potential legal implications. These limitations in clarity must be identified and resolved before the guideline recommendations can be implemented. The American Academy of Pediatrics is piloting a program called the Partnership for Policy Implementation in which a pediatrician-informatician is made a part of the guideline development team to help ensure that the guideline product can be implemented effectively.

What are the projected needs in this area? Currently, AHRQ’s National Guideline Clearinghouse contains more than 2,000 “evidence-based” guidelines. Based on observations of how soon guidelines become outdated (Shekelle et al., 2001), these guidelines should be reviewed and reaffirmed, revised, or retired every 5 years. Even assuming no growth, at least 400 guideline review teams must reassemble each year. If the advice of the IOM is followed and a central agency is developed to help standardize guideline development efforts, the number of teams creating guidelines could decrease, and “certification” would probably improve the reuse of guideline development skills.
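The figure of 400 review teams per year follows from spreading the review of the guideline stock evenly over the 5-year cycle, with no growth in the number of guidelines. The sketch below is a minimal illustration under those assumptions; the function name is hypothetical, and the 3- and 7-year cycles are illustrative values, not figures from the source. Because the Clearinghouse holds more than 2,000 guidelines, the computed counts are lower bounds.

```python
def review_teams_per_year(n_guidelines: int, review_interval_years: float) -> float:
    """Steady-state number of review teams needed each year (hypothetical helper).

    Assumes every guideline is reviewed once per interval, reviews are spread
    evenly over time, and the number of guidelines stays constant.
    """
    if review_interval_years <= 0:
        raise ValueError("review interval must be positive")
    return n_guidelines / review_interval_years


GUIDELINES = 2_000  # lower bound: the Clearinghouse holds "more than 2,000"

# 5 years is the interval cited in the text; 3 and 7 are hypothetical comparisons.
for years in (3, 5, 7):
    print(f"{years}-year cycle: {review_teams_per_year(GUIDELINES, years):.0f} teams/year")
```

With the 5-year cycle this reproduces the 400 teams per year cited above; shortening the cycle raises the requirement proportionally.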

The guideline authoring process needs more individuals who are skilled in evidence searching, extraction, and filtering, as well as in policy development. The EPCs may be capable of meeting the current needs of requesting organizations, but additional staff will be needed to perform updates and horizon scans and to fill holes in the evidence base. It will also be valuable to have additional staff within the national professional organizations who can coordinate and lead guideline development initiatives.

The lack of a central organization complicates the estimation of workforce needs. There is also little opportunity for guideline developers and implementers to interact: no national organizations or national meetings are attended by both groups. This contributes to the poor communication described above. The Guidelines International Network provides such a venue, but its membership is mostly European. AHRQ and the National Guideline Clearinghouse might consider convening such a forum. The number of workers required in this area would depend first on the number of guidelines needed and then on an assessment of what organizations require to implement them in their local settings.

Health Services Research

HSR is currently a robust and growing field. It draws from a number of disciplines. Health services researchers regularly participate in CER, and organizations and departments using the label “HSR” successfully compete for grants and contracts in this area. Informal conversations with training program directors indicate that graduates today have multiple job opportunities.

There are many programs claiming to train health services researchers. Whether they evolved from a conscious assessment of what the health system required in terms of research or whether they evolved from other beginnings is not necessarily important; what is important is that the field is established, recognized in formal policy, supported by institutions and professions, and capable of guiding its own destiny. The future of HSR is open to speculation—whether it will evolve as a specialized profession with a coherent and formally bounded sphere of influence or remain more an informally defined “point of view” is an open question. The text in this section is derived from a monograph authored by Ricketts (2007).

As CER gains more attention from scientists, practitioners, and funders, health services researchers will likely adapt their skills and content expertise toward issues of CER. There will, however, be particular challenges for health services researchers as they become involved in CER studies. CER requires detailed knowledge of randomized trial design, whereas many HSR studies use observational designs. CER will also require knowledge of the clinical conditions under study and the practice contexts in which the treatments are applied. Nonclinicians will need either to acquire this knowledge or to develop close collaborations with clinicians who have comparable training in methods.

As a field rather than a specific discipline, HSR has skill sets that cut across multiple domains. In the past, HSR has lacked specifically defined core competencies. Over the past several years, AHRQ has partnered with AcademyHealth to define doctoral-level “core competencies” for HSR through a series of white papers and meetings (Forrest et al., 2005, 2009). Table 4-3 shows the current list of competencies.

Some of these competencies extend beyond CER; for example, most CER does not involve primary data collection. Nevertheless, there is substantial overlap between the core competencies in Table 4-3 and the skills needed to conduct CER, including knowledge of study designs, the ability to develop conceptual models, responsible conduct of research, secondary data methods, implementation of protocols, clear scientific communication, and collaboration with stakeholders.

HSR is recognized in universities and research institutes as a pathway for development of advanced inquiry. Academics are recognized by the vocational cognomen “health services researcher” as often as by the more academic discipline titles of “economist” or “sociologist.” There is now an extensive infrastructure in universities, research institutes, and centers for training health services researchers. AcademyHealth, in its inventory of training programs, lists 127 graduate programs in HSR in the United States and Canada. The complexity of the field is reflected in the variety and scope of programs that identify themselves as preparing health services researchers. To complicate matters further, a practicing health services researcher may not actually have been formally trained in a program called “HSR.”

Some of the programs will likely adapt relatively easily to the need to incorporate skill sets important to CER. As noted elsewhere in this paper, meeting future demands for literature synthesis and meta-analysis will probably not be difficult. Other components of CER, such as pharmacoepidemiology and the assessment of treatment harm through the merging of disparate secondary data, may require investment in additional doctoral-level training positions to meet rising demand. One risk of not meeting increased demand is that less well-trained individuals will have to be used to conduct CER.

TABLE 4-3 Competencies for Health Services Research

| Core Competency | Educational Domains |
|---|---|
| Breadth of health services research (HSR) theoretical and conceptual knowledge | Health, financing of health care, organization of health care, health policy, access and use, quality of care, health informatics, literature review |
| In-depth disciplinary knowledge and skills | (Variable depending on the discipline or interdisciplinary area of specialization) |
| Application of HSR foundational knowledge to health policy problems | Health, financing of health care, organization of health care, health policy, access and use, quality of care, health informatics, literature review |
| Pose innovative HSR questions | Scientific method and theory, literature review, proposal development |
| Interventional and observational study designs | Study design, survey research, qualitative research |
| Primary data collection methods | Health informatics, survey research, qualitative research, data acquisition and quality control |
| Secondary data acquisition methods | Health informatics, HSR data sources, data acquisition and quality control |
| Conceptual models and operational measures | Scientific method and theory, measurement and variables |
| Implementation of research protocols | Health informatics, survey research, qualitative research, data acquisition and quality control |
| Responsible conduct of research | Research ethics |
| Multidisciplinary teamwork | Teamwork |
| Data analysis | Advanced HSR analytic methods, economic evaluation and decision sciences |
| Scientific communication | Proposal development, dissemination |
| Stakeholder collaboration and knowledge translation | Health policy, dissemination |

SOURCE: Data derived from Forrest et al., 2005, 2009.

AHRQ and AcademyHealth have recently completed a general assessment of the training issues and needs for HSR professionals (Ricketts, 2007). The authors recognized that CER represented one aspect of HSR but that the field is currently very labile. Three approaches to workforce planning for CER and HSR can be recommended:

- Researchers conducting CER as well as funders and policy makers planning new initiatives should regularly communicate with educators so that new needs for training can be incorporated in a timely fashion. While training programs should not alter curriculums with each new federal request for applications, they should be responsive to changes in the research and policy environment.

- AHRQ and AcademyHealth should continue to conduct periodic surveys (every 2 to 3 years) and key informant interviews to assess the state of the workforce for health services and CER, communicating with researchers in industry, contract research, and academe regarding the quality and availability of personnel at multiple levels of training.

- AHRQ and AcademyHealth should also regularly examine the number and type of training programs in HSR and CER and communicate with funders regarding adequacy of supply and congruence of curriculums with the expressed need of the research organizations conducting CER. Modifications to predoctoral, postdoctoral, and career development (K series) programs can be based on these evaluations.

Dissemination

The purpose of evidence translation and dissemination is to develop practical tools to improve decision making by end users. The group of end users is broadly defined and includes people who have medical problems (patients), their families and caregivers, clinicians, healthcare administrators, governmental policy makers, and employers. Evidence translation is the process of extracting key messages from evidence summaries (systematic reviews or technology assessments) and placing those messages into the context of the decisions made by end users. To be useful, translation needs to lead to the creation of products (such as summaries tailored for particular audiences) that can be accessed by those end users. Dissemination is then the step of making those products accessible to end users. Dissemination can occur through various avenues, including the distribution of printed products, making the products available on Web sites, and other modes of electronic distribution (such as interactive decision aids, podcasts, and e-mail).

To help individuals use clinical evidence in their decisions, summaries of that evidence must be unbiased. The evidence sources are often complex scientific documents that provide detailed and highly nuanced explanations of the body of evidence. The process of evidence translation requires careful analysis and summarization to avoid errors or oversimplification. Evidence summaries are only useful if they are applicable to the actual decisions made by the people involved in health care. This activity requires a thorough knowledge of the methodologies used in clinical research and systematic reviews. It also requires a clear understanding of the clinical context in which the evidence will be applied. Thus, the technical skills required to perform translation are similar to those required for the development of systematic reviews. Individuals must be able to understand the methodologies in order to translate the reports without introducing bias. The process of developing key messages often involves simplification, and this requires careful deconstruction of the information in a systematic review.

After the process of evidence translation has determined the messages that will be useful for decision makers, the next step is to summarize this information in products that can serve as tools to aid decisions. Because such tools can take various forms, the skills are multidisciplinary and include the ability to provide clearly written documents and to design interactive content for electronic distribution. Developing effective decision tools can also benefit from the input of stakeholders and opinion leaders. This input helps to ensure that the evidence translation will meet the critical needs of decision makers. After the decision tools are developed in draft form, testing them with end users provides valuable insight into how they can be modified and improved. Both of these steps (obtaining formative input and performing testing with end users) require qualitative research skills. Finally, after the decision tools are developed and tested with end users, they are ready for public release and dissemination. Dissemination is a specialized activity that requires skills related to public relations, journalism, and communications.

The multidisciplinary team required to perform evidence translation and dissemination includes a variety of individuals who have different skills and who commonly have diverse educational backgrounds. While some individuals may play more than one role (e.g., a clinician with skills in clinical research methodologies), the required skills are so diverse that a multidisciplinary team is needed. Table 4-4 summarizes the individuals who compose the team.

What are the projected needs in this area as CER is scaled up? At present there are relatively few groups doing state-of-the-art evidence translation and dissemination in the United States. As programs to increase CER move forward, there will be a growing need for individuals to perform translational work. Some members of the multidisciplinary team (particularly clinicians and methodologists) will be drawn from the same pool as those performing the work of information synthesis. Thus, the need for translation will increase the need for an infrastructure to train such individuals. For the other team members, the necessary training will come from programs that provide training in informatics, qualitative research, and communication.

The need for additional workers in dissemination-related areas of CER depends on the amount of activity required to distribute CER knowledge most effectively. This in turn is a function of the output from the other activities shown in Figure 4-1 that feed into dissemination. Another factor in quantifying the amount of dissemination required is the range of audiences: different types of healthcare professionals (e.g., physician specialists, physician generalists, nonphysicians) and patients (e.g., those with varying levels of health and general literacy).

TABLE 4-4 Roles, Skill Sets, and Backgrounds of Personnel Involved in Comparative Effectiveness Research Dissemination

| Role | Skill Set | Contribution | Educational Background |
|---|---|---|---|
| Clinician | Understanding of clinical issues | Defining key decisions to which the evidence will be applied | Medicine, nursing, pharmacy |
| Research methodologist | Understanding sources of bias | Defining key messages derived from systematic reviews or technology assessments | Clinical epidemiology |
| Writer | Synthesizing contextual information and key messages | Creating plain language explanations | Health communication |
| Qualitative researcher | Qualitative data collection and synthesis | Interviewing key informants and end users | Qualitative methods |
| Computer programmer | Creating Web-based and other interactive content | Developing electronic tools to aid decision making | Biomedical informatics and/or computer science |
| Dissemination specialist | Understanding audiences and avenues for dissemination | Developing effective dissemination strategies | Communication, public relations, journalism |

Overlapping Areas

Figure 4-1 shows two areas of explicit overlap, and there are likely to be more. Each of these areas is likely to generate additional workforce needs, such as people who work, perform research, and teach at these margins. The first area of overlap is information needs assessment. Trialists and systematic reviewers, for example, must work with domain experts to determine the key aspects of their research questions to be studied. Likewise, guideline implementers and developers must be driven by an information needs assessment process.

The second area of overlap is methodology. As with most research, CER is driven by a diverse set of methods applied in many medical domains. Furthermore, the intersection of these areas may create the need for new methodology, such as methods for obtaining research-quality data from an EHR, where that is possible. Additional workforce will be needed to conceptualize and develop this methodology, followed by practitioners to implement it and faculty to teach it. Technology assessment is one specific area of methodology that will require all of this.

Summarizing and Quantifying

The above analysis of workforce components has identified the broad range of activities that make up CER. CER findings will come not only from traditional clinical research and related areas but also from analysis of the growing amount of data in EHR systems, experience with clinical guideline development and implementation, aspects of HSR, and more widespread dissemination of knowledge. Current research and other work in these areas remain productive, but a substantial scaling up of CER will require better policy coordination, more funding, and (the subject of this paper) an understanding of and plan for workforce needs. There are several challenges to achieving the vision and goals for CER related to workforce needs.

The first challenge in defining the CER workforce is to grapple with the larger question of the quantity of CER that is necessary for the learning health system. To quantify the needs, these questions must be answered:

- What quantity of comparative clinical trials and other clinical research will be required?

- What quantity of CER systematic reviews will need to be performed?

- What amount of pharmacoepidemiological and related analysis will be required, or even possible, especially given the small number of pharmacoepidemiologists?

- How many medical centers will be willing or able to use their EHR systems or local guideline implementation to provide data for CER?

- What quantity of clinical practice guidelines will need to be produced?

- What types and amounts of HSR will be necessary for CER?

- What types and quantities of dissemination will be required for CER? At how many levels will the content require reformatting?

These various aspects of CER do not exist in isolation. This analysis has identified a number of areas that require interaction of activities and skills across different parts of CER. Therefore, it will not be enough simply to plan in discrete areas such as clinical epidemiology, BMI, or HSR. Furthermore, this analysis focused only on the work to be done and not on the leadership required to guide CER work. As in all fields, leadership will be needed to develop and advocate for the vision of CER and the learning health system, to manage its deployment and training, and to interact with the leadership of related and separate disciplines.

Because CER comprises different areas with diverse skill needs, a full accounting of the workforce must quantify the need in each area and then sum those needs across all areas. How much CER is needed, and how much of each component, must be determined by policy makers and others, who must take into account the demand for each type of CER, the supply of the workforce, competition from other tasks these workers might do, and the funding available for CER.

The original intention in this report was to provide a quantitative first approximation of the workforce needs for CER. However, as the authors developed the framework and explored the issues more deeply, it was apparent that there are too many unanswered questions about the scope, breadth, and quantity of CER that need to be clarified in order to achieve the larger goals for a learning health system. This view was validated by many of the experts listed in the acknowledgements, who advised against attempting to quantify needs as long as there is such an unclear picture of, and future for, CER.

There are a number of reasons why a quantitative assessment of the CER workforce is not possible. The main one is that the true scope of CER is not known. For example, even in clinical epidemiology, which probably has the clearest picture of needs of any area assessed, there is no clear answer about how many systematic reviews, practical and other clinical trials, and pharmacoepidemiological analyses are required. While the number of personnel required for systematic reviews is relatively well understood, the requirements for clinical trials and pharmacoepidemiological analyses are much less clear.

Beyond clinical epidemiology, the picture becomes even less certain. While BMI, the development and implementation of clinical guidelines, and dissemination could become major parts of CER, it is not clear how much of each will need to be done, or even what falls under the rubric of CER. Furthermore, in all of these areas, CER would be secondary to larger tasks: maintaining IT systems for clinical care; using guidelines to improve the quality, safety, consistency, and cost-effectiveness of operational clinical care; and disseminating all types of clinical knowledge. How much of this work would actually be CER is neither known nor easy to determine. Even in HSR, the amount of research that could be classified as CER is uncertain. In short, for an analysis conducted under the rubric of “EBM,” there is little evidence on which to base sound judgments about specific workforce needs.

It is certain, however, that CER will require a diverse array of skilled workers to meet its agenda. Any effort to undertake CER in a major way, such as through the establishment of a centralized public agency or private institute, should make the quantification, efficient deployment, and education of the workforce an early research agenda item. Determining how to implement and scale up CER will be a major challenge if its size and scope are to be substantially increased.

This leads to a number of larger policy questions. For example, how will CER be financed? In the case of research studies, who will fund the work comprehensively, especially in light of a national research enterprise that focuses on disease-based and investigator-initiated research? Likewise, who will fund the development of clinical practice guidelines? For studies derived from the secondary use of clinical data, which medical centers and health systems will be required to participate, and how will they be funded? Will it become an expected part of healthcare delivery? Finally, how will the knowledge generated from CER be disseminated? What amount of dissemination will be required, and how will it be funded?

It is also worth noting that CER will face competition from other areas for researchers and their staffs, especially physician researchers. As the baby boomer generation enters the Medicare age group, there is a growing need for physicians and other clinical practitioners. Likewise, physicians are in demand for non-CER research areas, such as those encouraged by the CTSA program, which could either help or hinder CER. The same is true for other areas of CER, such as pharmacoepidemiology, where demand is rising because of the growing amount of drug monitoring and safety work called for in recent FDA-related legislation. As such, any policy or funding that increases CER will need to recognize the competition for workers and skills from related areas, which is likely to drive up the salaries of researchers and their staffs.

There is also competition for workers even within CER work. This analysis has mostly focused on academic settings, but there are others who have an interest in performing CER and related work. This includes government agencies, nonacademic healthcare systems, and manufacturers of drugs, devices, and other medical tests and treatments. One possible silver lining in this competition is the potential to partner with international organizations engaged in similar work, such as NICE. While not all CER work transfers easily across borders, populations, and cultures, there is likely some amount that can.

Research and policy development will also need to support the sites where leadership in CER will be required to pilot leading-edge research, especially in methodologies, and to train the next generation. The best sites for these centers of excellence will probably be those that house EPCs, CTSA centers, AHRQ HSR training, and informatics research and educational programs. As with each of these specific initiatives, national consortia should be established to share the vision, best practices, and policy for developing the pipeline of new researchers.

Determining the scope and size of the workforce required for CER is itself a research agenda. Particularly for the workforce as a whole, and for its components (such as clinical epidemiology, BMI, and HSR), research should be undertaken to identify not only the skills required now but also how the workforce can best be organized in the future for maximum efficiency, best-quality output, and the anticipated expansion. This should be done through a variety of methods, including estimates of quantitative needs (e.g., amount of research, number of researchers and their staff, existing capacity, how much expansion is required) as well as qualitative understanding (e.g., people and organizational challenges, academic homes, career advancement).

Once this research agenda identifies the workforce and the skills it needs, it will also be necessary to determine the types of educational programs required to train those individuals, including their competencies and curricula. This will require policy on how to fund such education, especially for trainees already carrying growing educational debt from their basic education. This may be another area where international partnerships could be helpful.

CER promises an exciting approach to improving the quality of health care while reducing its cost through more efficient use of the most effective approaches to clinical care. Achieving this system will require a coordinated and adequately funded approach. There are many challenges to reaching that goal, including the provision of a workforce that can bring the requisite knowledge and skills to CER problems and solutions. Much further research, policy, and funding will be required to achieve this vision.

TOWARD AN INTEGRATED ENTERPRISE—THE ONTARIO, CANADA, CASE

Danielle Whicher, Kalipso Chalkidou, Irfan Dhalla, Leslie Levin, and Sean R. Tunis

Center for Medical Technology Policy, Baltimore, Maryland, USA

National Institute for Health and Clinical Excellence, London, UK

Department of Medicine, University of Toronto, Ontario, CA

Medical Advisory Secretariat (MAS), Ontario Ministry of Health and Long-Term Care, Toronto, Ontario, CA

Overview