INTRODUCTION

Comparative effectiveness research (CER) is composed of a broad range of activities. Aimed at both individual patient health and overall health system improvement, CER assesses the risks and benefits of competing interventions for a specific disease or condition as well as the system-level opportunities to improve health outcomes. To meet the ultimate goal of providing information that is useful to guide the healthcare decisions of patients, providers, and policy makers, the work required includes conducting primary research (e.g., clinical trials, epidemiologic studies, simulation modeling); developing and maintaining data resources in order to conduct primary research, such as registries or databases for data mining and analysis, or to enhance the conduct of other types of clinical research; and synthesizing and translating a body of existing research via systematic reviews and guideline development methods. To ensure the best return on investment in these efforts, work is also needed to advance the development of new or refined study methodologies that improve the efficiency and relevance of research as well as reduce its costs. Similarly, to guide the overall clinical research enterprise in the efficient production of information of true value to healthcare decision makers requires the identification of priority research questions that need to be addressed, the coordination of the various aspects of evidence development and translation work, and the provision of technical assistance, such as study design and validation.

The papers that follow provide an overview of the nature of the work required, noting lessons learned about the known benefits of the country’s

capacity and experience, and illustrating opportunities to improve care through capacity building. Emerging from these papers is the notion that although a number of diverse, innovative, and talented organizations are engaged in various aspects of this work, additional efforts are needed. Gains in efficiency are possible with improved coordination, prioritization, and attention to the range of methods that can be employed in CER.

Two papers provide a sense of the potential scope and scale of the necessary CER. Erin Holve and Patricia Pittman from AcademyHealth estimate that approximately 600 comparative effectiveness studies were ongoing in 2008, including head-to-head trials, pragmatic trials, observational studies, evidence syntheses, and modeling. Costs for these studies range broadly, but cluster according to study design. Challenges to develop the workforce needed for CER suggest the need for greater attention to infrastructure for training and funding researchers. Providing a sense of the overall need for comparative effectiveness studies, Douglas B. Kamerow from RTI International discusses the work of a stakeholder group to develop a prioritization process for CER topics and some possible criteria for prioritizing evidence needs. This process yielded 16 candidate research topics for a national inventory of priority CER questions. Possible pitfalls of such an evaluation and ranking process are discussed.

Three papers provide an overview of the work needed to support, develop, and synthesize research. Jesse A. Berlin and Paul E. Stang from Johnson & Johnson survey data resources for research, and discuss how appropriate use of data and creative uses of data collection mechanisms are crucial to help inform healthcare decision making. Given the described strengths and limitations of available data, current systems are primarily resources for the generation and strengthening of hypotheses. As the field transitions to electronic health records (EHRs) however, the value of these data could dramatically increase as targeted studies and data capture capabilities are built into existing medical care databases. Richard A. Justman, from the United Health Group, discusses the challenges of evidence synthesis and translation as highlighted in a recent Institute of Medicine (IOM) report (2008). Limitations of evidence synthesis and translation have led to gaps, duplications, and contradictions; and, key findings and recommendations from a recent IOM report provide guidance on infrastructure needs and options for systematic review and guideline development. Eugene H. Blackstone, Douglas B. Lenat, and Hemant Ishwaran from the Cleveland Clinic discuss five foundational methodologies that need to be refined or further developed to move from the current siloed, evidence-based medicine (EBM) to semantically integrated, information-based medicine and on to predictive personalized medicine—including reengineered randomized controlled trials (RCTs), approximate RCTs, semantically exploring disparate

clinical data, computer learning methods, and patient-specific strategic decision support.

Finally, Jean R. Slutsky from the Agency for Healthcare Research and Quality (AHRQ) provides an overview of organizations conducting CER activities and reflects on the importance of coordination and technical assistance capacities to bridge these activities. Particular attention is needed as to which functions might be best supported by centralized versus local, decentralized approaches

THE COST AND VOLUME OF COMPARATIVE EFFECTIVENESS RESEARCH

Erin Holve, Ph.D., M.P.H., Director; Patricia Pittman, Ph.D.,

Executive Vice President, AcademyHealth

Overview

In the ongoing discussion about CER, there has been limited understanding of the current capacity for conducting CER in the United States. This report intends to help fill this gap by providing an environmental scan of the volume and the range of costs of recent CER. This work was funded by the California HealthCare Foundation. Current production of CER is not well understood, perhaps due to the relatively new use of the term, or perhaps as a result of fragmented funding streams.

Comparative Effectiveness Research Environmental Scan

This study sought to determine whether there is a significant body of CER under way so that policy makers interested in improving outcomes can plan appropriate initiatives to bolster CER in the United States. This study does not catalog the universe of CER because existing data sources are limited by the way that research databases are developed. However, it is a first attempt to assess the approximate volume and cost of CER.

The study focused on four major objectives:

- Identify a typology of CER design.

- Characterize the volume of research studies that address comparative effectiveness questions.

- Provide a range of cost estimates for conducting comparative effectiveness studies by type of study design.

- Gather information on training to support the capacity to produce CER.

These efforts relied on three modes of data collection. The first phase included the development of a framework of study designs and topics. The second consisted of a structured search of research projects listed in two databases: www.clinicaltrials.gov and Health Services Research Projects in Progress (HSRProj),1 a database of health services research projects in progress. The third consisted of in-person and telephone interviews with 25 research organizations identified as leaders in comparative effectiveness studies. The number, type, and costs of studies were noted, although only studies cited by funders were included in the estimates of volume because of the possibility of double-counting studies cited by both researchers and funders. Interviews were used to triangulate information on costs and the relative importance of different designs.

An important study limitation was that it was not possible to cross-reference the databases and the interviews. As a result, although these sources are comparable, they should not be aggregated.

In an initial focus group with experts on CER, a definition of CER was developed to guide the project. Though many definitions of CER have been developed,2 this project relies on the following:

- CER is a comparison of the effectiveness of the risks and benefits of two or more healthcare services or treatments used to treat a specific disease or condition (e.g., pharmaceuticals, medical devices, medical procedures, other treatment modalities) in approximate real-world settings

- The comparative effectiveness of organizational and system-level strategies to improve health outcomes is excluded from this definition, as is research that is clearly “efficacy” research. This means that studies that compare an intervention to placebo or to usual care were excluded from our counts.

The expert panel also developed a framework of research designs to make it possible to categorize findings systematically. For the purposes of this study there was general agreement that there are three primary research categories3 applicable to CER:

_______________

1 HSRProj may be accessed at www.nlm.nih.gov/hsrproj/ (accessed September 22, 2010).

2 In a recent report from the Congressional Budget Office, the authors state that comparative effectiveness is “simply a rigorous evaluation of the impact of different treatment options that are available for treating a given medical condition for a particular set of patients” (CBO, 2007). An earlier report by the Congressional Research Service makes an additional distinction that comparative effectiveness is “one form of health technology assessment” (CRS, 2007).

3 During the interviews we attempted to identify research by more specific types, asking questions about pragmatic trials, registry and modeling studies, and systematic reviews.

- head-to-head trials (including pragmatic trials);

- observational studies (including registry studies, prospective cohort studies, and database studies); and

- syntheses and modeling (including systematic reviews).4

Clinicaltrials.gov is the national registry of data on clinical trials, as mandated by the Food and Drug Administration (FDA) reporting process required for drug regulation. Clinicaltrials.gov includes more than 53,000 study records and theoretically provides a complete set of information on all clinical trials of drugs, biologics, and devices subject to FDA regulations (Zarin and Tse, 2008). While the vast majority of trials included in clinicaltrials.gov are controlled experimental studies, there are some observational studies as well.

HSRProj is a database of research projects related to healthcare access, cost, and quality as well as the performance of healthcare systems. Some clinical research may be included in HSRProj if it is focused on effectiveness. HSRProj includes a variety of public and private organizations but only a limited number of projects funded by private or industry sources.

A search was conducted in www.clinicaltrials.gov for phase 3 and phase 4 observational and interventional studies. Phase 4 studies were narrowed by searching only for studies containing the term effectiveness. Studies that were explicitly identified as efficacy studies or those that did not include at least two active comparators were excluded. The HSRProj database was also searched for studies on effectiveness. Because HSRProj does not differentiate between study design phases, a search was also conducted by the types of studies identified in the framework. The studies identified through the process of searching both databases were then searched by hand in order to identify projects that met the definition of CER.

The interview phase of the project included in-person and telephone interviews with research funders and researchers who conduct CER. An initial sample of individuals funding and conducting CER were contacted in response to recommendations by the focus group panel, and these initial

_______________

4 Observational research studies include a variety of research designs but are principally defined by the absence of experimentation or random assignment (Shadish et al., 2002). In the context of CER, cohort studies and registry studies are generally thought of as the most common study types. Prospective cohort studies follow a defined group of individuals over time, often before and after an exposure of interest, to assess their experience or outcomes (Last, 1983), while retrospective cohort studies frequently use existing databases (e.g., medical claims, vital health records, survey records) to evaluate the experience of a group at a point or period in time.

Registry studies are often thought of as a particular type of cohort study based on patient registry data. Patient registries are organized systems using observational study methods to collect patient data in a uniform way. These data are then used to evaluate specific outcomes for a population of interest (Gliklich and Dreyer, 2007).

contacts in turn recommended other respondents—a “snowball” sample. In total, 35 individuals representing 25 research funders or research organizations participated in the project.5

Findings

For the project, 689 comparative effectiveness studies were identified in clinicaltrials.gov and HSRProj. Of these the vast majority are “interventional trials” listed on clinicaltrials.gov; specifically, they are phase 3 trials that compare two or more treatments “head to head,” have real-world elements in their study design, and do not explicitly include efficacy in their description of the study design. Only 19 studies are phase 4 post-marketing studies that compare multiple treatments. Seventy-three CER projects were identified in HSRProj. The process of manually searching project titles confirmed that most studies in this database were observational research, although a handful of studies were specifically identifiable as registry studies, evidence synthesis, or systematic reviews.

The interviews with funders identified 617 comparative effectiveness studies, of which approximately half were observational studies (prospective cohort studies, registry studies, and database studies). Research syntheses (reviews and modeling studies) and experimental head-to-head studies also represent a significant proportion of research activities.

Neither clinicaltrials.gov nor HSRProj publish funding amounts, so interviews with funders and researchers are the sole source of data on cost. As would be expected across the range of study designs covered, there is an extremely broad array of cost for CER studies. However, despite the range, cost estimates provided in the interviews did tend to cluster, particularly by study type. While the cost of conducting head-to-head randomized trials was extremely broad, including studies as costly as $125 million, smaller trials tended to range from $2.5 million to $5 million and larger studies from $15 million to $20 million.

Likewise, the range of cost for observational studies was extremely broad but tended to cluster (Table 2-1). While large prospective cohort studies cost $2 million to $4 million, large registry studies cost between $800,000 and $6 million, with most examples falling at the higher end of this range, although a few were substantially less. Retrospective database studies tended to be less expensive and cost on the order of $100,000

_______________

5 Though an initial group of potential respondents was identified as funders of CER, it was often necessary to speak with multiple individuals to find the appropriate person or group responsible for CER within the organization’s portfolio. For this reason the response rate among individuals is lower than might be expected for a series of key informant interviews. Thirteen organizations were identified that did not suggest they received funding for CER from other sources. This subset is used as the sample of organizations that fund or self-fund CER.

TABLE 2-1 Costs of Various Comparative Effectiveness Studies

|

|

||

|

Type of Study |

Cost |

|

|

|

||

|

Head to head |

Randomized controlled trials: Smaller Larger |

$2.5m–$5m $15m–$20m |

|

|

||

|

Observational |

Registry studies Large prospective cohort studies Small retrospective database studies |

$2m–$4m $800k–$6m $100k–$250k |

|

|

||

|

Synthesis |

Simulation/modeling studies Systematic reviews |

$100k–$200k $200k–$350k |

|

|

||

to $250,000. Systematic reviews and modeling studies tended to be less expensive and have a far narrower range of cost, in part because these studies were based on existing data, with many falling between $100,000 to $200,000. It is important to note, however, that this may not include the cost of procuring data. Systematic reviews cluster around a range of $200,000 to $350,000.

There are, of course, additional activities and costs of involving stakeholders in research agenda setting as well as prioritizing, coordinating, and disseminating research on CER that are not included here.6 These important investments will need to be considered in the process of budgeting for CER.7

_______________

6 Examples of activities designed to prioritize and coordinate research activities include the National Cancer Institute’s CER Cancer Control Planet (http://cancercontrolplanet.cancer.gov/), which serves as a community resource to help public health professionals design, implement, and evaluate CER-control efforts (NCI, 2007). Within the the Agency for Healthcare Research and Quality, the prioritization and research coordination efforts for comparative effectiveness studies are undertaken as part of the Effective Health Care Program. Translation and dissemination of CER findings is handled by the John M. Eisenberg Clinical Decisions and Communications Science Center, which aims to translate research findings to a variety of stakeholder audiences. No budget information is readily available for the Eisenberg Center activities.

7 Examples of stakeholder involvement programs include two programs at the FDA focused on involving patient stakeholders, the Patient Representative Program and the comparative

Finally, the interviews also shed light on two subjects discussed at this workshop (IOM, 2008): (1) the need for additional training in the methods and conduct of CER; and (2) the need to bring researchers together to discuss the relative contributions of RCTs and observational studies in the context of CER.

While interviewees generally commented that they have some capacity to respond to an increase in the demand for CER, some noted that they have had difficulty finding adequately trained researchers to conduct such research. For the moment, training programs are limited primarily to research trainees working with AHRQ’s evidence-based practice centers and the Developing Evidence to Inform Decisions about Effectiveness (DEcIDE) network. Respondents also mentioned two postdoctoral training programs designed to teach researchers how to conduct effectiveness research. The Duke Clinical Research Institute (DCRI) offers a program for fellows and junior faculty. Fellows studying clinical research may take additional coursework and receive a masters of trial services degree in clinical research as part of the Duke Clinical Research Training Program. The Clinical Research Enhancement through Supplemental Training (CREST) program at Boston University is the second program mentioned. CREST trains researchers in aspects of clinical research design, including clinical epidemiology, health services research (HSR), biobehavioral research, and translational research. Both the DCRI and CREST programs have a strong emphasis on clinical research using both randomized experimental study designs and observational designs.

Other funding for training includes the National Institutes of Health (NIH) K30 awards to support career development of clinical investigators and to develop new modes of training in theories and research methods. The program’s goal is to produce researchers capable of conducting patient-oriented research on epidemiologic and behavioral studies and on outcomes or health services research (NIH, 2006). As of January 2006, the Office of Extramural Affairs lists 51 curriculum awards funded through the K30 mechanism. In November 2007 AHRQ released a Special Emphasis Notice

_______________

effectiveness research Drug Development Patient Consultant Program (Avalere Health, 2008; FDA, 2009). Other examples of stakeholder involvement programs include the National Institute for Occupational Safety and Health–National Occupational Research Agenda program, the American Thoracic Society Public Advisory Roundtable, and the National Institutes of Health director’s Council of Public Representatives (COPR). These efforts can represent a sizeable investment in order to assure stakeholder involvement among the potentially diverse group of end users. For example, the COPR is estimated to cost approximately $350,000 per year (Avalere Health, 2008). From an international perspective, the UK’s National Institute for Health and Clinical Excellence (NICE) allocates approximately 4 percent of their annual budget (approximately $775,000) in NICE’s Citizen’s Council and for their “patient involvement unit” (NICE, 2004).

for Career Development (K) Grants focused on CER (AHRQ, 2007). Four career awards are slated to support the development, generation, and translation of scientific evidence by enhancing the understanding and development of methods used to conduct CER.

The challenge of filling the “pipeline” of researchers working on comparative effectiveness is exacerbated by what many of the interviewees viewed as a fundamental philosophical difference between researchers who were academically trained in observational research and those who are trained on the job to conduct clinical trials. Several respondents noted that these differences likely arise because the majority of researchers are trained in either observational study methods or randomized trials, but rarely both. In addition, as noted by Hersh and colleagues,8 there are many unknowns related to assessing the workforce needs for CER, including the unresolved definitional issues and scope of comparative effectiveness. As noted by Hersh, the proportion of CER that is focused on randomized trials, observational research, and syntheses has strong implications for the number of researchers (and the type of training) that will be needed. For this reason it is important to track research production and funding for CER in order to anticipate future needs.

Some respondents noted that differences in training manifest themselves in disagreements about the benefits of various observational study designs. Nevertheless, most individuals interviewed as part of this study felt that RCTs, observational studies (including registry studies, prospective cohort studies, and quasi-experiments), and syntheses (modeling studies and systematic reviews) are complementary strategies to generate useful information to improve the evidence base for health care. Furthermore, many participants agreed that, as CER evolves, it will be critical to develop a balanced research portfolio that builds on the strengths of each study type. To facilitate this balance, some of the interviews, as well as many comments at the IOM’s Roundtable on Value & Science-Driven Health Care (IOM, 2008) suggest that training opportunities to bridge gaps in language and methods used by researchers may be helpful in creating a balanced portfolio of CER.

Conclusion

Findings from this study indicate that there is a greater volume of ongoing CER than may initially have been supposed. The cost of conducting this research varies greatly, although it tends to cluster by type of study.

_______________

8 Hersh, B., T. Carey, T. Ricketts, M. Helfand, N. Floyd, R. Shiffman, and D. Hickam. A framework for the workforce required for comparative effectiveness research. See Chapter 4 of this publication.

The range of studies that are currently being conducted and the cost variation by study type have implications for the mix of activities that may be undertaken by an entity focused on CER. Furthermore, as identified in the interviews, assuring sufficient research capacity to conduct CER is likely to require an investment in multidisciplinary training, with an emphasis on bridging the gap between awareness of the strengths and limitations of randomized trials, observational study designs, and syntheses.

INTERVENTION STUDIES THAT NEED TO BE CONDUCTED

Douglas B. Kamerow, M.D., M.P.H.

Chief Scientist, Health Services and Policy Research, RTI International,

and Professor of Clinical Family Medicine, Georgetown University

Overview

CER compares the impact of different treatments for medical conditions in a rigorous, practical manner. At the request of the IOM’s Roundtable on Value & Science-Driven Health Care, IOM staff convened in 2008 a multisectoral working group to create a national priority assessment inventory. Their charge was to set criteria for choosing appropriate CER topics and then to nominate and review example topics for needed research. An abridged summary of the report is presented in Appendix B. Appendixes C and D, respectively, are the recommended priority CER studies proposed in 2009 by the IOM Committee on Comparative Effectiveness Research Prioritization and the Federal Coordinating Council for Comparative Effectiveness Research.

Introduction

CER has been defined as “rigorous evaluation of the impact of different options that are available for treating a given medical condition for a particular set of patients,” (CBO, 2007) and “the direct comparison of existing healthcare interventions to determine which work best for which patients and which pose the greatest benefits and harms” (Slutsky and Clancy, 2009). Broadly construed, this type of research can involve comparisons of different drug therapies or devices used to treat a particular condition as well as comparisons of different modes of treatment, such as pharmaceuticals versus surgery. It can also be used to compare different systems or locations of care and varied approaches to care, such as different intervals of follow-up or changes in medication dosing levels for a particular condition. CER also may be used to investigate diagnostic and

preventive interventions. All of these studies may include an evaluation of costs as well as an assessment of clinical effectiveness.

Comparative assessment research is especially valuable now, in an era of unprecedented healthcare spending and large, unexplained variations in care for patients with similar conditions. The IOM’s Roundtable on Value & Science-Driven Health Care set a goal for the year 2020 that 90 percent of clinical decisions will be supported by accurate, timely, and up-to-date clinical information that reflects the best available evidence. Currently, there is insufficient evidence about which treatments are most appropriate for certain groups of patients and whether or not those treatments merit their sometimes significant costs. The healthcare community requires improved evidence generation that will address how different interventions compare to one another when applied to target patient populations. CER provides the opportunity to ground clinical care in a foundation of sound evidence.

The current system usually ensures that when a new drug or device is made available, there is evidence to show its effectiveness compared to a placebo in ideal research conditions—that is, its efficacy. But there is often an insufficient body of evidence demonstrating its relative effectiveness compared to existing or alternative treatment options, especially in real-world settings. This limited scope of information increases the likelihood that clinical decisions are not based on evidence but rather on local practice style, institutional tradition, or physician preference. Although the numbers vary, some estimate that less than half—perhaps well less than half—of all clinical decisions are supported by sufficient evidence (IOM, 2007). This lack of evidence also leads to substantial geographic variations in care, further supporting the idea that patients may be subjected to treatments that are unnecessarily invasive—or not aggressive enough—for a variety of conditions. These variations in care partly explain healthcare spending differences across geographic regions that cannot be fully accounted for by price differences or illness rates. Geographic variations in treatment approach are often greater when there is less agreement within the medical community about the appropriate treatment. Variation in treatment approach for a variety of conditions is of significant concern because it has not been demonstrated that areas with higher levels of spending—where presumably patients are treated with more aggressive or expensive options or with simply more treatment—have significantly better health outcomes than areas with lower levels of spending.

The Institute of Medicine and Comparative Effectiveness

The IOM’s Roundtable on Value & Science-Driven Health Care recognized the importance of furthering CER to ensure that all clinical deci-

sions are based on sound evidence. The participants at the Roundtable’s July 2007 meeting of sectoral stakeholders concluded both that current resources to support head-to-head assessments of treatment options are not optimal and that a stronger consensus is needed on the priorities and approaches for assessing the comparative clinical effectiveness of health interventions. Participants at this meeting identified the need for the development of what they initially termed a “national problem list” to illustrate key evidence gaps and to prompt discussions leading to national studies.

National Priority Assessment Inventory

After initial work was done by IOM staff, the proposed project was renamed the National Priority Assessment Inventory. A working group was convened to serve as a steering committee for the project. Nominees for the working group were sought from IOM Roundtable stakeholders representing the different participating sectors—patients, caregivers, integrated care delivery organizations, insurers, regulators, employees and employers, clinical investigators, and healthcare product developers. The working group was composed of physicians and researchers representing different specialties and perspectives, coming from academia, government, private practice, medical specialty societies, and industry. The working group was given three tasks to accomplish in a series of conference calls involving either the entire group or only certain individuals:

- Review and refine selection criteria for identifying and evaluating needed research.

- Solicit and nominate candidate research topics.

- Review and comment on the final list of pilot topics.

Selection Criteria

The working group initially discussed and refined criteria that could be used to identify and evaluate candidate research topics. The final five selection criteria are listed in Box 2-1.

The first criterion selected was the importance of the conditions being treated or prevented. The working group wanted to concentrate on research for problems that were serious, common, or costly. Though not wanting to set quantitative cutoffs, they felt it was important that the studies chosen involved conditions that would be clearly recognized as important by clinicians, policy makers, and patients.

Second, effective treatments or preventive interventions needed to be available for the chosen conditions. Reasonable alternative treatments and resulting variations in practice are basic requirements for CER. Alternatives to be compared could include different drugs, different treatment modalities

BOX 2-1

Selection Criteria to Identify and Evaluate Research Topics

- Importance of the conditions

- Effective treatments or preventive interventions available

- Current knowledge about the relative effectiveness not definitive

- Research must be feasible and realistic

- The selection process should yield a heterogeneous group of topics

(drugs, devices, surgery, etc.), different preventive interventions, or different settings and systems of care.

Third, current knowledge about the relative effectiveness of alternative treatments or modalities must not be definitive, so that uncertainty exists in treatment selection for different settings and populations. In short, while an existing body of research is clearly necessary, unresolved questions should still exist.

Fourth, research to answer selected questions must be feasible and realistic. A head-to-head trial or data analysis that could improve knowledge and guide decision making should be practical to perform, without major design or financial barriers.

Fifth, the work group agreed that this pilot selection process should yield a heterogeneous group of topics. The final topic list was intended to provide examples of treatment, prevention, and HSR on a variety of conditions, using several different modes of treatment, and for conditions in differing demographic groups.

Selection Process

Candidate topics were solicited in a number of ways. Working group members suggested many topics from their respective clinical and administrative experience; they also asked colleagues for suggestions. IOM staff generated topics from prior work they had done as well as from outside sources. A prioritized list of 100 Medicare research priorities generated by the Medicare Evidence Development and Coverage Advisory Committee was also reviewed, as was a list of 14 priority conditions created for AHRQ’s Effective Health Care Program. A special effort was made to identify topics related to the care of children because so much effort is usually concentrated on adults and the elderly.

After this nomination process, about two dozen potential topics and studies were classified by condition, applicable population, type of treatment, setting, and other categories, and then were discussed by the working group. Staff began to evaluate the candidate topics by doing literature searches to determine available literature and feasibility. In an informal iterative process performed with the working group, the list was reduced to 16 topics. This list was then circulated to sectoral representatives for comments, and in July 2008 it was discussed and approved by members of the Roundtable on Value & Science-Driven Health Care. Staff then did further literature reviews and wrote brief summaries of the final example topics.

The 16 study topics are listed in Table 2-2 and are categorized by type of study, condition, and age group. As mentioned previously, the topics were chosen in part to provide examples from each of the categories in these tables.

Study Topic Summaries

Sixteen study topic summaries are included in Appendix B of this publication. All of the summaries are organized in the same manner:

- description of the condition or problem,

- available treatments or interventions,

- current evidence about the treatments or interventions,

- issues needing research and conclusions, and

- brief list of references.

Lessons Learned from This Project

The original, perhaps naïve, intent of this project was to produce a list of the 20 or so “best” or “most important” comparative effectiveness studies that need to be done immediately. However, evaluating and ranking studies proved to be difficult to do. Some nominations were impractical or difficult to operationalize; for others, evidence was not available. Ranking topics by potential national costs of the condition would skew the rankings toward common and expensive adult problems and leave childhood problems out entirely. Comparing topics is often an “apples vs. oranges” exercise, and common metrics are not always available. The process did, however, produce some clear lessons learned:

Much research needs to be done. It was not difficult to gather nominations and information about research that has the potential to make a real difference in costs and outcomes. It bears repeating that the topics chosen are just examples of questions that need to be answered.

Stakeholders should be consulted. A process without input and review

TABLE 2-2 The Comparative Effectiveness Studies Inventory Project Identified 16 Candidate Topics for Comparative Effectiveness Research

| Study Topic | Study Type | Age Group | Condition |

| Treatment of attention deficit hyperactivity disorder in children: drugs, behavioral interventions, no prescription | Comparative effectiveness treatment studies across modalities | Children | Mental diseases |

| Treatment of acute thrombotic/embolic stroke: clot removal, reperfusion drugs | Comparative effectiveness treatment studies across modalities | Adults | Heart and vascular diseases |

| Treatment of chronic atrial fibrillation: drugs, catheter ablation, surgery | Comparative effectiveness treatment studies across modalities | Adults | Heart and vascular diseases |

| Treatment of chronic low back pain | Comparative effectiveness treatment studies across modalities | Adults | Neurological diseases |

| Gamma knife surgery for intracranial lesions vs. surgery and/or whole brain radiation | Comparative effectiveness treatment studies across modalities | Adults | Neurological diseases |

| Treatment of localized prostate cancer: watchful waiting, surgery, radiation, cryotherapy | Comparative effectiveness treatment studies across modalities | Adults | Cancer |

| Diagnosis and prognosis of breast cancer using genetic tests: human epidermal growth factor receptor 2 and others | Diagnostic studies | Adults | Cancer |

| Over-the-counter drug treatment of upper respiratory tract infections in children | Drug–drug and drug–placebo treatment studies | Children | Respiratory disorders |

| Drug treatment of depression in primary care | Drug–drug and drug–placebo treatment studies | Adults | Mental disorders |

| Study Topic | Study Type | Age Group | Condition |

| Drug treatment of epilepsy in children | Drug–drug and drug–placebo treatment studies | Children | Neurological diseases |

| Use of erythropoiesis-stimulating agents in the treatment of hematologic cancers | Drug–drug and drug–placebo treatment studies | Adults | Cancer |

| Outcomes of percutaneous coronary interventions in hospitals with and without onsite surgical backup | Health services/systems studies | Adults | Heart and vascular diseases |

| Screening hospital inpatients for methicillin-resistant Staphylococcus aureus infection | Preventive interventions | Adults | Infectious diseases |

| Tobacco cessation: nicotine replacement agents, oral medications, combinations | Preventive interventions | Adults | Preventive interventions |

| Prevention and treatment of pressure ulcers | Surgical studies | Adults | Dermatological diseases |

| Inguinal hernia repair: open vs. minimally invasive | Surgical studies | Adults | Surgical disorders |

NOTE: Study topics are categorized by study type, age group, and condition.

SOURCE: Kamerow, 2009.

by a broad set of stakeholders risks missing important topics as well as not obtaining different perspectives on all nominated topics. Clinicians ask one kind of question; payers and employers often have different concerns. All perspectives need to be considered in nominating, vetting, and ultimately deciding on research topics. That said, some topics nominated by stakeholders were not confirmed as being important or practical after literature reviews.

Research questions need to be carefully defined. Once general topics had been selected, literature reviews often found either too much or

not enough evidence to support a call for further research. Topics were often reoriented as a result of the state of the evidence, being narrowed, expanded, or abandoned according to what the evidence said.

Different types of studies are needed. Three types of comparative assessment studies are often described. The gold standard for CER is a prospective, randomized head-to-head trial comparing specific treatments or interventions for a condition. Such trials, however, can be expensive and usually take time to complete. In some cases, the extant literature is sufficient to assess comparative effectiveness, and systematic reviews with or without formal meta-analysis can be created from data taken from previous studies. This type of study is usually the fastest to complete. Finally, existing clinical and payment data systems can sometimes be analyzed to compare the effectiveness of drugs or devices without collecting new data. These studies can also be done relatively rapidly. It was often difficult to determine from the state of the literature which type of comparative effectiveness study was appropriate for each of the nominated topics.

An explicit, transparent process is important. Significant funding will be necessary to undertake these studies. Unlimited funding is never available, of course, and there will be limits on the number of projects that can be initiated simultaneously, so priorities must be assigned. While the external validity of this selection and ranking processes has not been proven, the face validity of the process will be important for continued stakeholder support.

Continuous updating is important. Advances and new data appear all the time, so frequent updates of literature reviews will help make sure that the topics selected still need research.

Prioritizing topics is very difficult. As mentioned above, ranking studies by their importance is difficult to do in a manner that is equitable. The burden of suffering caused by a condition, expressed in prevalence or mortality rates, can be determined for many conditions, but it is skewed by greater prevalence of disease in older populations. Taking into account the years of productive life lost can help to correct for some of the age bias, but such calculations do not necessarily reflect costs. Treatment cost data are biased towards conditions whose treatments involve expensive devices or drugs. And so on.

“Indication creep” is challenging to assess. Often drugs or devices that have been proven effective for one indication or condition will be used to treat other, related problems without strong evidence. Questions then arise about their effectiveness for the new indication. Are de novo studies required, or can results from research on similar problems be extrapolated to apply?

Systematic reviews and evidence-based guidelines are important and helpful, as are expert-written editorials. Staff found that medical journal

editorials accompanying research articles often provided a good orientation for subjects being considered. When available, systematic reviews and meta-analyses helped in the assessment of what is known and what needs to be done. Evidence-based clinical practice guidelines were also useful, especially when they included research agendas that emerged from the guideline creation process.

The distinction between efficacy and effectiveness is an important one. More often than not, clinical trials and the resulting meta-analyses report on work done in academic centers with highly selected patients. Except for some very recent trials, most do not include minority or gender diversity. This limits the ability to extrapolate from trial or meta-analysis findings to real-world populations.

Much more needs to be done. This effort is only a preliminary step in setting criteria and providing examples of CER. In several instances a single test or technology was discussed as an example of several needed study topics and areas. A much larger and more comprehensive assessment process is needed to create a truly national inventory of needed research.

CLINICAL DATA SETS THAT NEED TO BE MINED

Jesse A. Berlin, Sc.D., Vice President, Epidemiology; and

Paul E. Stang, Ph.D., Senior Director, Epidemiology,

Johnson & Johnson Pharmaceutical R&D9

Overview

Information is like fish: It’s better when it’s fresh.

Healthcare delivery, by virtue of the evolving ability of systems to capture and store data and the intense interest in analyzing those data, is poised for transformation. The key to the transformation will be how the data and analyses are used to inform decisions to improve health. If successful, the system can be transformed into a learning health system that will provide high-quality data to inform decisions made by policy makers, healthcare providers, and patients, who can then use the same data collection and analytic tools to assess the impact of those decisions. The critical elements needed to transform health care and to create a “learning organization” (Senge, 1990) include experimentation with new approaches, learning from personal and corporate past experience, learning from best practices of

_______________

9 The authors would like to acknowledge the insightful comments of Michael Fitzmaurice on earlier drafts.

others, efficient and rapid knowledge transfer, and a systematic approach to problem solving.

This model has been recast for health care by Etheredge (2007), who espouses the value of observational research in medical care data as a laboratory for testing hypotheses and undertaking research to inform rapid learning and improve the efficiency of healthcare delivery in the United States in addition to addressing a number of other information gaps. However, the current capacity and data resources are only capable of filling the short-term needs and therefore represent a modest initial step toward informing better healthcare decision making. The contribution of electronic data collection to achieving the vision of the learning health system will be directly related to the quality, breadth, and depth of the data capture.

The basic contention underlying this paper is that appropriate use of existing data and creative uses of existing data collection mechanisms will be crucial to operationalizing the above elements, the end result of which will be improvement of healthcare decision making in the near term. With this goal in mind, the strengths and limitations of currently available administrative data to address questions of comparative effectiveness (and safety) will be explored, and additional ways in which the existing infrastructure for these databases might be used to support further data collection efforts, perhaps more specifically targeted, will be proposed.

Currently Available Healthcare Databases:

Claims and Electronic Health Records

Most existing healthcare databases were created for purposes other than research. Currently, payers (including managed care organizations, insurance companies, employers, and governments) use data to track expenditures for multiple purposes. This gives rise to the so-called claims or claims-based databases that contain the coded transactional billing records between a clinician and an organization that allow the healthcare provider to be reimbursed for a given patient. In parallel, the details of the patient–clinician interaction contained within the medical record have slowly been moving from paper to computers, giving rise to EHRs, which can also be aggregated into a database. The EHR may, in some cases, represent all of the care provided to a given patient by an institution or staff health maintenance organization, but in other cases it may represent just that portion of care rendered by the individual clinician with the EHR. The Mayo Clinic, long the keeper of extensive longitudinal paper “dossier” medical records, now uses an electronic system. The General Practices Research Data (GPRD) based in the United Kingdom and records from Kaiser Permanente and the Henry Ford Health Systems are a few other examples of these

EHR databases. These data reflect not only the patient–clinician interaction but also the underlying healthcare delivery system (and its idiosyncrasies).

Existing secondary data sets, such as those listed in Box 2-2, offer a number of advantages for research. Studies are inexpensive and can be done quickly, relative to the cost of doing a clinical study, because the data have already been collected and organized into a database; the data reflect healthcare decisions and outcomes as they were actually made (vs. the artificial constructs of an RCT); and each database represents an identifiable and quantifiable source population, i.e., a “denominator” for the calculation of event rates and mean values. By virtue of reflecting actual, real-world clinical practice, these databases also offer improved external validity relative to RCTs.

Despite all of these advantages for research, secondary data have several important limitations and obvious gaps. For claims data, research would be restricted to coded diagnoses, some of which may be erroneous, may be part of an active workup (e.g., the code may be MI [myocardial infarction], but the interaction was part of a “rule-out” MI), or may omit a code altogether because it is not required for billing (e.g., one of four active problems during the visit). Some relevant data may not have been collected or consistently recorded, particularly those potential confounding factors that may be associated with the choice of therapy and with the outcome of interest. The absence of these data may be critical when making comparisons between specific therapeutic options, as they may strongly influence the study results or the conclusions drawn, but they cannot be managed in the design or analysis of these databases. The problem of confounding of comparisons between therapeutic options is why randomization is such a powerful aspect of clinical trials. Unfortunately, many existing databases lack consistent capture of such factors as smoking status, alcohol consumption, use of over-the-counter (OTC) medications; even weight and height are not always routinely captured in these databases (Box 2-2). As an example, Ilkhanoff and colleagues (2005) used a case-control study that included interview-based primary data collection from participants to show that adjustment for confounding factors not captured in electronic databases—e.g., smoking, family history, years of education—had a substantial impact on estimates of relative risk for MI associated with non-aspirin, non-steroidal anti-inflammatory drug (NANSAID) use, relative to nonusers. The inability of databases to capture OTC use of NANSAIDs and OTC aspirin use also had marked effects on study findings.

There is also a general lack of longitudinality in the patient record that reflects the current healthcare system. Loss to follow-up occurs because people are constantly changing healthcare systems or coverage, either as part of the annual choices provided by employers or because their eligibility for

BOX 2-2

Examples of Existing Healthcare Databases

Healthcare delivery systems: Kaiser Permanente, Group Health Cooperative of Puget Sound, Geisinger Health System, Henry Ford Health System, Rochester Epidemiology Project (Mayo Clinic)

Aggregated claims: PharMetrics, Marketscan (Thompson-Medstat)

Electronic medical record systems independent of a single healthcare delivery system: General Practice Research Database (united Kingdom), The Health Improvement Network (based in the united Kingdom), General Electric (Centricity), Cerner

Hospital: Premier, Cerner

Government-managed or -funded programs: Medicaid, Centers for Medicare & Medicaid Services (Medicare), Veteran’s Administration, Department of Defense

U.S. government surveys: National Health and Nutrition Examination Survey, Medical Expenditure Panel

Specialty data and registries: Surveillance, Epidemiology, and End Results (Cancer Registry), Food and Drug Administration spontaneous adverse event database (voluntary drug adverse event reports), the Nordic countries linkable registries (Sweden, Denmark)

Canadian provincial databases: Saskatchewan, Manitoba, Régie de l’Assurance Maladie du Québec

Prescription-tracking databases: IMS Health, Verispan

coverage changes. About 10 to 20 percent of patients in a given insurance database may leave a given plan during a given year. Having a unique identification number that follows a given patient across all interactions with the healthcare system would alleviate this problem with longitudinality, but it is clear there are appropriate concerns about confidentiality that such a system would trigger.

Finally, there are entire segments of the population and healthcare system that are poorly represented in these data. The interactions of the 49

million Americans without healthcare coverage are essentially lost to the current system since there is no ability to link individual patients with a unique identification number. Similarly, elderly people and those in institutions are essentially overlooked in most analyses because of lack of access to their clinical data, even though these are the very groups that are most at risk for poor coordination of care and less favorable outcomes.

Studying Benefits and Risks in Existing Healthcare Data: Information Asymmetry

Because the data source represents a clinical interaction, any retrospective research will be limited to events that could be characterized as part of the coded or detailed physician interaction. In general, the benefits of treatment are more common than the risks, but unlike risk, benefits are poorly represented in these data sources, as they do not fit into the current diagnostic vernacular. Many of the benefits of treatment (e.g., reduction in blood pressure, improvement in mood or quality of life, return to full mobility, fewer number of seizures per month, reduced symptoms of schizophrenia) are not clinical diagnoses, and they are not usually captured in databases because they cannot be represented in coded claims. It is not always known what impact these other measures have on more serious events, nor is it known how their importance is perceived by patients and providers. The claims do capture use-based measures (e.g., switching drugs, changes in emergency department use, hospitalizations), and the data would include reductions in clinical events (e.g., myocardial infarction). Conversely, most potential harms from therapies are clinical events and would be captured in clinical encounter data (e.g., agranulocytosis, hepatitis, renal failure).

In the context of comparative effectiveness studies, differences in effectiveness (usually considered to be benefits) between two treatments, especially between two drugs in the same class, may be small in magnitude. Evaluating such small differences in effects absolutely requires strong control over potential confounding variables if internal validity is to be maintained. If one fails to control confounding variables, the observed differences in effects (or safety) could be misleading, as they might represent differences in the underlying characteristics of patients exposed to one or the other product rather than true differences in effect between the products. Several efforts, including the Developing Evidence to Inform Decisions about Effectiveness (DEcIDE) network and the Centers for Education and Research on Therapeutics, both of which were set up by AHRQ and the Observational Medical Outcomes Partnership from the Foundation for the NIH, are under way to better understand the strengths and limitations of these databases, the performance characteristics of the methods, and the

ability to use them as the initial line of surveillance for potential safety issues.

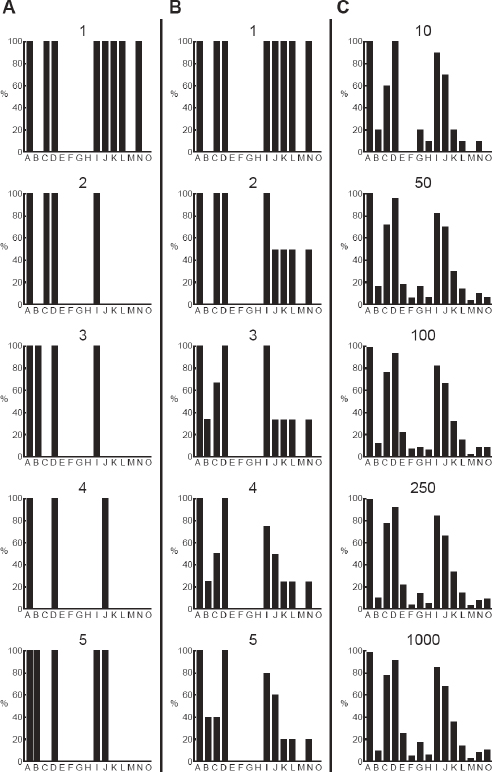

Because these databases reflect care as it is really delivered, generally without the consistent screening and capture of information that exists in a clinical trial, variability and uncertainty can be high. Erkinjuntti and colleagues offer a striking example in which the prevalence of dementia in a single Canadian cohort of patients varied from 3.1 to 29.1 percent simply by applying different accepted case definitions of dementia (Figure 2-1) (Erkinjuntti et al., 1997). With secondary data it is unclear what diagnostic criteria (if any) were applied in the clinical setting to arrive at a diagnosis, and there are other measures that are either inherently prone to variability, such as blood pressure, or are subjective in nature, such as the Tanner score. When measures are highly variable and taken outside the context of a carefully controlled study with standardized measurement techniques, it will often be more difficult to detect differences in these measures between treatment approaches. By engaging the clinician directly at the point of data entry, the record system itself can help standardize the capture of data, can solicit additional detail, or can push summary information and links to additional resources.

Existing databases are generally considered to be reasonably complete with respect to determining “exposure” to particular drugs (and, to a lesser

FIGURE 2-1 The prevalence of dementia across different diagnostic criteria in the same Canadian cohort.

NOTE: CAMDEX = Cambridge Mental Disorders of the Elderly Examination;

DSM = Diagnostic and Statistical Manual of Mental Disorders; ICD = International Classification of Diseases.

SOURCE: Derived from data in Erkinjuntti et al. (1997).

extent, medical devices) (Strom, 2005). However, databases capture only the prescription of a medication (from EHR) or, at most, the prescription plus the dispensing of the prescription (from pharmacy records or billing). The databases do not (cannot) capture whether the drug was actually used or, if used, whether it was used correctly.

While there are a number of challenges in the use of current databases to address questions of comparative effectiveness, it is still possible to learn from current efforts to inform further designs and improvements in the capture of data, governance, and methods. The current systems can best be thought of as hypothesis generating and, potentially, hypothesis strengthening; it is unclear to what extent they can be considered definitive sources of data for confirmation. In some respects, current systems represent databases in search of a question. As the field transitions to EHRs, some of the issues with coding mentioned above will be dramatically reduced, and the value and impact of these data and the evolving methods will improve. The ability to link a patient across data sets will further strengthen the capacity of the data sets to provide more definitive answers, increasing the value of these data sets; the appearance of prompted “pop-ups” to collect or refine data at the point of entry will similarly increase the value of the data sets. Real value will come from the ability to use the EHR system as a data collection vehicle for randomized studies in the populations covered by the data sources. This concept is expanded in the following section.

Looking Ahead

Given the limitations of working with available databases, it seems likely that more robust data collection at the clinical interface would improve insights from, and the quality of, observational databases. Accumulating higher-quality data could help provide insight into current practice and help improve care. Simply adding flags to denote “rule-out” diagnoses would help researchers to better distinguish actual events from clinical workup. Similarly, with respect to general hospitalization data where much effort is focused (because of its high costs), if a separate diagnostic list of those findings was included at the time of admission (rather than the existing discharge diagnoses only), it would have a major impact on the ability to track and determine risks and effectiveness of hospital-based occurrences. Finally, as more devices are implanted, physically coding (e.g., with bar codes) the individual devices with a unique identification would facilitate future identification and the tracking of safety and outcomes. The ongoing Centers for Medicare & Medicaid Services (CMS) payments to hospitals and physicians for reporting quality data and for improved performance on

quality measures may be an appropriate mechanism to help motivate and implement such changes.

However, the innovation that holds the greatest potential for informing change in health care will be the ability to use the existing data infrastructure and healthcare delivery system to take advantage of randomization at points of clinical equipoise to generate new insights into interventions and their outcomes. There are many possible innovative approaches, but the focus here is specifically on the idea of “designing studies into the database.”

In general terms, the goal is to increase the power of the existing data collection through the EHR by enhancing data collection with special data collection forms, e.g., screens that pop up on a computer in the physician’s office for patients who match a specific set of criteria. The basic idea is to tailor additional aspects of data collection (as would be done in a separately designed and implemented primary study) within the context of an existing data collection system. This increases the possibility of going one step further by conducting large simple randomized studies (also called naturalistic or real-world trials) by “randomizing into the database,” a concept that was first described by Sacristan and coworkers (1997) and later employed by Mosis and colleagues in the Dutch Integrated Primary Care Information database (Mosis et al., 2005a, 2005b). Their findings suggest that the technical infrastructure existed but that the requirements for recruitment in general practice for this particular study were inconsistent with the flow of patient care and were too time consuming (Mosis et al., 2006). At a high level, this would again involve the use of a computer in the clinician’s office that, when a patient meeting certain criteria presents, would generate a special screen asking the clinician whether or not he or she is willing to randomize that patient.

This model is being revived across the United Kingdom in the Research Capability Programme in the Connecting for Health initiative, which includes educating the public on the importance of participating in clinical trials as a way of contributing to medical knowledge and advancement. Making such trials practical in the context of primary care may well require either structural changes in the process of care, in order to facilitate patient and physician participation, or adjustments to how these trials are conducted, so that the interruptions to the usual process of care are minimized. Various scenarios might be considered as far as when, in the course of care, computerized “prompts” would be presented to the clinician: during periodic reviews of new data, for example, vs. when a patient presents for any reason vs. when a patient presents with specific symptoms vs. when a prescription for one of the study drugs is written. These various scenarios have potentially very different implications for the acceptability of the trial

to the clinician. Research is ongoing in the UK GPRD to test the feasibility of “randomized trials within the database.”10

The significance is that the same clinical data capture can be used as data capture for randomized (or observational) studies. The database provides part of the infrastructure for conducting a targeted study, thereby reducing time and costs while engaging the clinician in the process. This approach will require a variety of steps if it is to be feasible: modifying the existing infrastructure to produce new data collection tools and integrate those into existing systems, training clinicians to use the new systems, and perhaps even training clinicians in the principles of clinical epidemiology, so that they might better appreciate the value of research based in actual clinical settings.

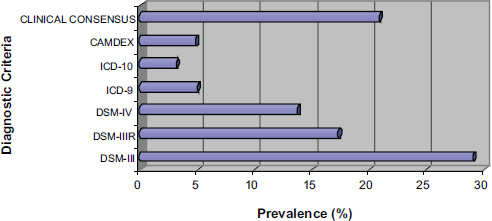

The discussion so far has focused on data collection aimed at specific research questions. However, capturing the data at the physician–patient interface may have a number of other potential applications beyond contributing to larger trials. Data on individual physician practices and outcomes from specific encounters could form a foundation for single-physician-focused efforts that would allow practitioners to see and track what works and what doesn’t, not just for their own patients but for all (similar) patients in the database, and to do a better job of understanding their own treatment decisions and the impact of those decisions. It is essential that data flow not be unidirectional. This concept of data flowing back to practitioners and informing future practice is captured in Figure 2-2. This is consistent with the views expressed by others (Etheredge, 2007; Stewart et al., 2007) and would be the foundation of a learning health system.

To overcome barriers generated by the structure of the healthcare system (particularly in the United States) and the dispersion of healthcare data, a broader view of data collection and integration is required. Comprehensive health records capturing all of the clinical encounters with a given patient will require an infrastructure to link across databases (issuing a unique patient identifier to enable this linkage), perhaps to include the personal health record maintained by the patient himself or herself. This generates more than just information technology (IT) requirements. For example, one must consider privacy concerns, particularly in the United States with its Health Insurance Portability and Accountability Act (HIPAA) regulations. Making links across hospitals, claims, electronic medical records, and other data sources will be best accomplished by using unique personal identifiers (e.g., social security numbers), which would require a reexamination of the country’s HIPAA and protected personal information culture.

An investment in workforce and methods will also be required to realize the full benefit of these changes to the data and IT infrastructure.

_______________

10 See http://www.gprd.com/home/default.asp (accessed September 22, 2010).

FIGURE 2-2 Data flow in a learning system.

SOURCE: Reprinted with permission from Anceta American Medical Group Association’s Collaborative Data Warehouse.

Clinicians will need to be provided with more training to make sure they understand how their contribution of quality data directly affects the value of the information that they and others can retrieve. A variety of specialists, including informaticians, methodologists, and epidemiologists, will also be required to assure that there are continuing improvements to the systems.

Sharing of de-identified, individual-level, clinical trials data could provide an incredibly rich source of data to investigators. Again, however, doing so presents challenges because of privacy and informed consent concerns. Strict de-identification, required under HIPAA, is a time-consuming and costly activity (as opposed to the use of limited data sets from which certain key identifiers have been stripped). Safety analyses of erthyropoietin-stimulating agents are currently being conducted by the Cochrane Hematological Malignancies Group. For this effort, data have been provided by multiple sponsors of trials, including three pharmaceutical companies. There are other ongoing consortia of many types. For example, a Duke/FDA/Industry Cardiac Safety Research Consortium exists, the purpose of which is to create an electrocardiogram (ECG) library from clinical trials that could be used to identify early predictors of cardiac risk (Cardiac Risk ECG Library). Thus there is ample precedent for successful collaborative efforts across academic, government, and private sectors, with the success of each resting on the ability to amass data from a number of different sources.

The comments thus far have focused, at least implicitly, on pharma-

ceuticals, but it is important to remember that comparative effectiveness questions also arise with respect to medical devices. Currently, as far as is known, none of the administrative databases that are publicly available (usually for a fee) can distinguish among devices by manufacturer, so, for example, one may know a coronary stent was used, but much would be unknown, including which company made that stent and specific details around the individual stent (e.g., manufacturing lot number).

Summary

Data can be powerful and, one hopes, represent truth. Existing databases are currently most useful for paying bills and reflecting medical treatment. For research, the most useful applications of administrative data to date have been to support the analysis of potential safety issues. Although a number of statistical methods are available for controlling potential confounders, their contribution may be limited by the availability and accuracy of the required data. This lack of sufficiently detailed information on confounding factors is potentially a bigger problem than any challenges related to statistical methods when studying small-magnitude differences in the effectiveness of two therapies. Existing databases generally lack specific information on effectiveness, except when effectiveness can be represented in terms of clinical events.

Although not necessarily optimal, there are rational options that would improve the existing data collection system and its usefulness to both clinician and researcher. In particular, further development of a system at the point of care that records information, prompts the clinician for additional information, and provides insight and summarization back to the clinician should improve the quality of care by making critical data directly available when the clinician is making treatment decisions. This feedback loop is a critical link in the development of a learning health system that functions both for direct patient care and for the development of a research infrastructure that will help improve quality.

Future directions may include a mix of data quality and infrastructure efforts. Simple data-side adjustments that would improve the usefulness of these data (e.g., a “rule-out” flag that would signal more directly a clinician’s intent rather than leave others to misinterpret a code as an occurrence, a hospital admission findings list, unique identification numbers on all implanted devices) would be smaller steps that could generate huge returns in the quality of patient care. The ultimate goal is to begin both building targeted studies and enhanced data capture capabilities into the framework of existing medical care databases as well as making information flow both from and to healthcare providers in a way that is immediately beneficial and effective in informing their care of the patient.

KNOWLEDGE SYNTHESIS AND TRANSLATION THAT NEED TO BE APPLIED11

Richard A. Justman, UnitedHealthcare

EBM is now the mantra of physicians, consumers, purchasers of health care, regulators, payers, and others who want to know which medical test or treatment works best. Unfortunately, an agreed-upon infrastructure to determine which treatment works best does not exist today. In fact, although everyone agrees that it is a good idea to follow the principles of EBM in deciding which treatment works best, there is no such agreement on the standards of clinical evidence to be used in a given situation. A national system for grading clinical evidence does not exist. This creates an interesting conundrum for persons who want answers to specific questions.

For example, a patient with localized prostate cancer seeking information on which treatment would be best for him is likely to obtain different answers depending upon whether he asks a primary care physician, a urologist, or a radiation oncologist. In fact, an asymptomatic 50-year-old man cannot even obtain definitive answers about whether he should be screened for prostate cancer with an inexpensive, readily available blood test for prostate-specific antigen.

Physicians seeking information based upon grades of clinical evidence face a similar dilemma. For example, microvolt T-wave alternans is an office-based test that predicts the risk of life-threatening cardiac arrhythmia. The American College of Cardiology grades the evidence supporting its use as grade 2a (Zipes et al., 2006). A physician seeking information about the use of bevacizumab to treat breast cancer will learn that the National Cancer Care Network drug compendium grades the evidence supporting its use as grade 2a. However, the definitions of grade 2a given by these two highly respected professional organizations do not match.12

_______________

11 Note: This section is adapted from portions of Knowing What Works in Health Care: A Roadmap for the Nation, a report of the Institute of Medicine Committee on Reviewing Evidence to Identify Highly Effective Clinical Services (IOM, 2008).

12 American College of Cardiology: “Evidence level IIa: conditions for which there is conflicting and/or a divergence of opinion about the usefulness/efficacy or a procedure or treatment. Weight of evidence/opinion is in favor or usefulness/efficacy” (Hunt, et al., 2005). National Comprehensive Cancer Networks (NCCN) Category 2A: “The recommendation is based on lower-level evidence, but despite the absence of higher-level studies, there is uniform consensus that the recommendation is appropriate. Lower-level evidence is interpreted broadly, and runs the gamut from phase 2 to large cohort studies to case series to individual practitioner experience. Importantly, in many instances, the retrospective studies are derived from clinical experience of treating large numbers of patients at a member institution, so NCCN Guidelines panel members have firsthand knowledge of the data. Inevitably, some recommendations must address clinical situations for which limited or no data exist. In these instances the congruence of experience-based judgments provides an informed if not confirmed direction for optimizing

Institute of Medicine Committee to Identify

Highly Effective Clinical Services

In June 2006 the Robert Wood Johnson Foundation asked the Institute of Medicine to do the following:

- Recommend an approach to identifying highly effective clinical services.

- Recommend a process to evaluate and report on clinical effectiveness.

- Recommend an organizational framework for using evidence reports to develop recommendations on appropriate clinical applications for specified populations.

The imperative behind this request derives from the need to constrain healthcare costs, which have been rising faster than the consumer price index without a commensurate improvement in health outcomes; the need to reduce the idiosyncratic geographic variation in the use of healthcare services; the need to improve clinical quality, including health outcomes; the need to give consumers information they need to make healthcare choices; and the need to help purchasers of health care and payers to decide which services to include in their benefit designs.

Clinical Safety and Effectiveness: Current State

While there are multiple avenues available today to help consumers, physicians, and others decide which treatments are safe and effective, they all have significant limitations. In addition, the lack of a national comparative effectiveness architecture leaves the current state replete with gaps, duplications, and contradictions, as noted below (see also Table 2-3).

The FDA reviews the safety and effectiveness of medications. However, comparative effectiveness studies are not required as part of this review, so users lack information on which medication works best for a particular disease scenario. Another division of the FDA, the Center for Devices and Radiological Health (CDRH) reviews medical devices. The level of review performed by the CDRH is determined by the classification of the device. In any event, head-to-head trials and long-term outcome studies are not required in order to approve a medical device for marketing. Physicians commonly prescribe both medications and medical devices for other than the labeled indications (“off label” prescribing). This sometimes leads to

_______________

patient care. These recommendations carry the implicit recognition that they may be superseded as higher-level evidence becomes available or as outcomes-based information becomes more prevalent” (NCCN, 2008).

conflicting and confusing results. For example, bevacizumab is labeled for treatment of breast cancer. It is not labeled for treatment of age-related macular degeneration (AMD). Yet the evidence for improved vision in persons with AMD is stronger than the evidence of prolonged survival for women with breast cancer.

Multiple organizations perform systematic reviews of medical tests and treatments, including the Cochrane Collaboration; Hayes, Inc.; the ECRI Institute; and the Blue Cross Blue Shield Technology Evaluation Center. These respected organizations provide excellent systematic reviews of available clinical evidence. However, many of the topics they review are new or emerging technologies, so there is insufficient information from which valid conclusions can be drawn. Two of these organizations provide brief reports of new technologies, but the lack of available evidence makes these reports of limited use to persons who must make treatment decisions today.

Various agencies of the federal government have performed systematic reviews of available clinical evidence. Two such organizations, the National Center for Health Care Technology and the Office of Technology Assessment, did so in the past, but no longer do so. AHRQ now contracts with evidence-based practice centers to complete systematic reviews. These reports are uniformly excellent, but they address only a small number of topics about which consumers and physicians need to know. AHRQ also maintains the National Guideline Clearinghouse, a repository of clinical practice guidelines developed by other organizations. The Department of Veterans Affairs (VA), CMS, and the NIH provide information about the safety and effectiveness of medical treatments in different ways. However, they, too, lack the infrastructure to offer comprehensive information about the comparative effectiveness of health services.

Professional specialty societies and health plans, among others, develop clinical practice guidelines. Some of these guidelines provide excellent reviews of clinical evidence, noting both the strength of the evidence and the strength of the recommendations they make. Other guidelines are less fastidious in their review of evidence, relying instead on a consensus of expert opinion.