2

Existing Technology Forecasting Methodologies

INTRODUCTION

Technology Forecasting Defined

If individuals from disparate professional backgrounds were asked to define technology forecasting, chances are that the responses would be seemingly unrelated. Today, technology forecasting is used widely by the private sector and by governments for applications ranging from predicting product development or a competitor’s technical capabilities to the creation of scenarios for predicting the impact of future technologies. Given such a range of applications, it is no surprise that technology forecasting has many definitions. In the context of this report, it is “the prediction of the invention, timing, characteristics, dimensions, performance, or rate of diffusion of a machine, material, technique, or process serving some useful purpose.”1 This chapter does not specifically address disruptive technology forecasting but addresses instead the most common methods of general technology forecasting in use today and in the past.

A forecast is developed using techniques designed to extract information and produce conclusions from data sets. Forecasting methods vary in the way they collect and analyze data2 and draw conclusions. The methods used for a technology forecast are typically determined by the availability of data and experts, the context in which the forecast will be used and the needs of the expected users. This chapter will provide a brief history of technology forecasting, discuss methods of assessing the value of forecasts, and give an overview of forecasting methodologies and their applications.

History

Technology forecasting has existed in one form or another for more than a century, but it was not until after World War II (WWII) that it began to evolve as a structured discipline. The motivation for this evolution was the U.S. government’s desire to identify technology areas that would have significant military importance.

In 1945, a report called Toward New Horizons was created for the U.S. Army Air Forces (von Karman, 1945). This report surveyed the technological development resulting from WWII, discussed the implications of that development, and suggested future R&D (Neufeld et al., 1997). Toward New Horizons, written by a committee chaired by Theodore von Karman, arguably represents the beginning of modern technology forecasting.

In the late 1940s, the RAND Corporation was created to assist the Air Force with, among other things, technology forecasting. In the 1950s and 1960s, RAND developed the Delphi method to address some of the weaknesses of the judgment-based forecasting methodologies of that time, which were based on the opinions of a panel of experts. The Delphi method offers a modified structured process for collecting and distilling the knowledge from a group of experts by means of a series of questionnaires interspersed with controlled opinion feedback (Adler and Ziglio, 1996). The development of the Delphi method marked an important point in the evolution of technology forecasting because it improved the value of an entire generation of forecasts (Linstone and Turoff, 1975). The Delphi method is still widely used today.

The use of technology forecasting in the private sector began to increase markedly during the 1960s and 1970s (Balachandra, 1980). It seems likely that the growing adoption of technology forecasting in the private sector, as well as in government agencies outside the military, helped to diversify the application of forecasts as well as the methodologies utilized for developing the forecasts. The advent of more powerful computer hardware and software enabled the processing of larger data sets and facilitated the use of forecasting methodologies that rely on data analysis (Martino, 1999). The development of the Internet and networking in general has also expanded the amount of data available to forecasters and improved the ease of accessing these data. Today, technology forecasting continues to evolve as new techniques and applications are developed and traditional techniques are improved. These newer techniques and applications are looked at later in this chapter.

DEFINING AND MEASURING SUCCESS IN TECHNOLOGY FORECASTING

Some would argue that a good forecast is an accurate forecast. The difficulty with this view is that it is not possible to know a priori whether a given forecast is accurate unless it states something already known. Accuracy, although obviously desirable, is not necessarily required for a successful forecast. A better measure of success is the actionability of the forecast’s conclusions: the content of a forecast matters less than what decision makers do with that content.

Since the purpose of a technology forecast is to aid in decision making, a forecast may be valuable simply if it leads to a more informed and, possibly, better decision. A forecast could lead to decisions that reduce future surprise, but it could also inspire the organization to make decisions that have better outcomes—for instance, to optimize its investment strategy, to pursue a specific line of research, or to change policies to better prepare for the future. A forecast is valuable and successful if the outcome of the decisions based on it is better than if there had been no forecast (Vanston, 2003). Of course, as with assessing accuracy, there is no way to know whether a decision was good without the benefit of historical perspective. This alone necessitates taking great care in the preparation of the forecast, so that decision makers can have confidence in the forecasting methodology and the implementation of its results.

The development of a technology forecast can be divided into three separate actions:

-

Framing the problem and defining the desired outcome of the forecast,

-

Gathering and analyzing the data using a variety of methodologies, and

-

Interpreting the results and assembling the forecast from the available information.

Framing the problem concisely is the first step in generating a forecast. This typically takes the form of a question to be answered. For example, a long-range Delphi forecast reported by RAND in 1964 asked participants to list scientific breakthroughs they regarded as both urgently needed and feasible within the next 50 years (Gordon and Helmer, 1964).

In addition to devising a well-defined statement of task, it is also important to ensure that all concerned parties understand what the ultimate outcome, or deliverable, of the forecast will be. In many cases, the forecaster and the decision maker are not the same individual. If a forecast is to be successful, the decision maker needs to be provided with a product consistent with what was expected when the process was initiated. One of the best ways to assure that this happens is to involve the decision maker in the forecasting, so he or she is aware of the underlying assumptions and deliverables and feels ownership in the process.3

Data are the backbone of any forecast, and the most important characteristic of a data set is its credibility. Using credible data increases the probability that a forecast will be valuable and that better decisions will be made from it. Data can come in a variety of forms, but the data used in forecasting are of two types: statistical and expert opinion. The Vanstons have provided criteria for assessing both data types before they are used in a forecast (Vanston and Vanston, 2004).

For statistical data, the criteria are these:

-

Currency. Is the timeliness of the data consistent with the scope and type of forecast? Historical data are valuable for many types of forecasts, but care should be taken to ensure that the data are sufficiently current, particularly when forecasting in dynamic sectors such as information technology.

-

Completeness. Are the data complete enough for the forecaster(s) to consider all of the information relevant to an informed forecast?

-

Potential bias. Bias is common, and care must be taken to examine how data are generated and to understand what biases may exist. For instance, bias can be expected when gathering data presented by sources who have a specific interest in the way the data are interpreted (Dennis, 1987).

-

Gathering technique. The technique used to gather data can influence the content. For example, subtle changes in the wording of the questions in opinion polls may produce substantially different results.

-

Relevancy. Does a piece of data have an impact on the outcome of the forecast? If not, it should not be included.

For data derived from expert opinion, the criteria are these:

-

Qualifications of the experts. Experts should be carefully chosen to provide input to forecasts based on their demonstrated knowledge in an area relevant to the forecast. It should be noted that some of the best experts may not be those whose expertise or credentials are well advertised.

-

Bias. Like statistical data, expert opinions may contain bias.

-

Balance. A range of expertise is necessary to provide different and, where appropriate, multidisciplinary and cross-cultural viewpoints.

Data used in a forecast should be scrutinized thoroughly. This scrutiny should not necessarily focus on accuracy, although that may be one of the criteria, but should aim to understand the relative strengths and weaknesses of the data using a structured evaluation process. As was already mentioned, it is not possible to ascertain whether a given forecast will result in good decisions. However, the likelihood that this will occur improves when decision makers are confident that a forecast is based on credible data that have been suitably vetted.

It is, unfortunately, possible to generate poor forecasts based on credible data. The data are an input to the forecast, and the conclusions drawn from them depend on the forecasting methodologies. In general, a given forecasting methodology is suited to a particular type of data and will output a particular type of result. To improve completeness and to avoid missing relevant information, it is best to generate forecasts using a range of methodologies and data.

Vanston offers some helpful discussion in this area (Vanston, 2003). He proposes that the forecast be arranged into five views of the future. One view posits that the future is a logical extension of the past. This is called an “extrapolation” and relies on techniques such as trend analyses and learning curves to generate forecasts. A contrasting view posits that the future is too complex to be adequately forecasted using statistical techniques, so it is likely to rely heavily on the opinions or judgments of experts for its forecast. This is called an “intuitive” view. The other three views are termed “pattern analysis,” “goal analysis,” and “counter puncher.” Each type of view is associated with a particular set of methodologies and brings a unique perspective to the forecast. Vanston and his colleagues propose that it is advantageous to examine the problem from at least two of the five views. This multiview approach benefits from using a wider range of data collection and analysis methods for a single forecast. Because the problem has been addressed from several different angles, this approach increases the confidence decision makers can have in the final product. Joseph Martino proposed considering an even broader set of dimensions, including technological, economic, managerial, political, social, cultural, intellectual, religious, and ecological (Martino, 1983). Vanston and Martino share the belief that forecasts must be made from more than one perspective to be reasonably assured of being useful.

TECHNOLOGY FORECASTING METHODOLOGIES

As was discussed earlier, technology forecasting methodologies are processes used to analyze, present, and in some cases, gather data. Forecasting methodologies are of four types:

-

Judgmental or intuitive methods,

-

Extrapolation and trend analysis,

-

Models, and

-

Scenarios and simulations.

Judgmental or Intuitive Methods

Judgmental methods fundamentally rely on opinion to generate a forecast. Typically the opinion is from an expert or panel of experts having knowledge in fields that are relevant to the forecast. In its simplest form, the method asks a single expert to generate a forecast based on his or her own intuition. Sometimes called a “genius forecast,” it is largely dependent on the individual and is particularly vulnerable to bias. The potential for bias may be reduced by incorporating the opinions of multiple experts in a forecast, which also has the benefit of improving balance. This method of group forecasting was used in early reports such as Toward New Horizons (von Karman, 1945).

Forecasts produced by groups have several drawbacks. First, the outcome of the process may be adversely influenced by a dominant individual, who through force of personality, outspokenness, or coercion causes other group members to adjust their own opinions. Second, group discussions may touch on much information that is not relevant to the forecast but that nonetheless affects the outcome. Lastly, groupthink4 can occur when forecasts are generated by groups that interact openly. The shortcomings of group forecasts led to the development of more structured approaches. Among these is the Delphi method, developed by the RAND Corporation in the 1950s and 1960s.

The Delphi Method

The Delphi method is a structured approach to eliciting forecasts from groups of experts, with an emphasis on producing an informed consensus view of the most probable future. The Delphi method has three attributes (anonymity, controlled feedback, and statistical group response5) that are designed to minimize any detrimental effects of group interaction (Dalkey, 1967). In practice, a Delphi study begins with a questionnaire soliciting input on a topic. Participants are also asked to provide a supporting argument for their responses. The questionnaires are collected, responses summarized, and an anonymous summary of the experts’ forecasts is resubmitted to the participants, who are then asked if they would care to modify their initial responses based on those of the other experts. It is believed that during this process the range of the answers will decrease and the group will converge toward a “correct” view of the most probable future. This process continues for several rounds, until the results reach predefined stop criteria. These stop criteria can be the number of rounds, the achievement of consensus, or the stability of results (Rowe and Wright, 1999).

4 Groupthink: the act or practice of reasoning or decision making by a group, especially when characterized by uncritical acceptance or conformity to prevailing points of view. Groupthink occurs when the pressure to conform within a group interferes with that group’s analysis of a problem and causes poor decision making. Available at http://www.answers.com/topic/groupthink. Last accessed June 11, 2009.

5 “Statistical group response” refers to combining the individual responses to the questionnaire into a median response.

The advantages of the Delphi method are that it can address a wide variety of topics, does not require a group to physically meet, and is relatively inexpensive and quick to employ. Delphi studies provide valuable insights regardless of their relation to the status quo. In such studies, decision makers need to understand the reasoning behind the responses to the questions. A potential disadvantage of the Delphi method is its emphasis on achieving consensus (Dalkey et al., 1969). Some researchers believe that potentially valuable information is suppressed for the sake of achieving a representative group opinion (Stewart, 1987).

Because Delphi surveys are topically flexible and can be carried out relatively easily and rapidly, they are particularly well suited to a persistent forecasting system. One might imagine that Delphi surveys could be used in this setting to update forecasts at regular intervals or in response to changes in the data on which the forecasts are based.
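The round structure of a Delphi study can be illustrated with a minimal sketch. It assumes numeric forecasts (for example, a predicted year), uses the median as the statistical group response, and stops on either a maximum number of rounds or a consensus threshold on the interquartile range. The `revise` rule, in which each expert moves halfway toward the group median, is a hypothetical stand-in for real expert behavior, and the names and thresholds are illustrative only.

```python
from statistics import median, quantiles


def summarize(estimates):
    """Statistical group response: the median, plus the spread of opinion."""
    q1, _, q3 = quantiles(estimates, n=4)
    return {"median": median(estimates), "iqr": q3 - q1}


def revise(estimates, group_median, pull=0.5):
    """Hypothetical revision rule: each expert moves part-way to the median."""
    return [e + pull * (group_median - e) for e in estimates]


def run_delphi(initial_estimates, max_rounds=5, iqr_tolerance=6.0):
    """Run questionnaire rounds until a stop criterion is met."""
    estimates = list(initial_estimates)
    history = []
    for _ in range(max_rounds):              # stop criterion: number of rounds
        summary = summarize(estimates)       # anonymous summary fed back
        history.append(summary)
        if summary["iqr"] <= iqr_tolerance:  # stop criterion: consensus
            break
        estimates = revise(estimates, summary["median"])
    return history


# Five anonymous experts forecast the year a capability becomes available.
history = run_delphi([2030, 2035, 2040, 2045, 2060])
for round_no, summary in enumerate(history, start=1):
    print(round_no, summary)
```

The third stop criterion mentioned above, stability of results, is omitted here because the simplified revision rule never moves the median.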

Extrapolation and Trend Analysis

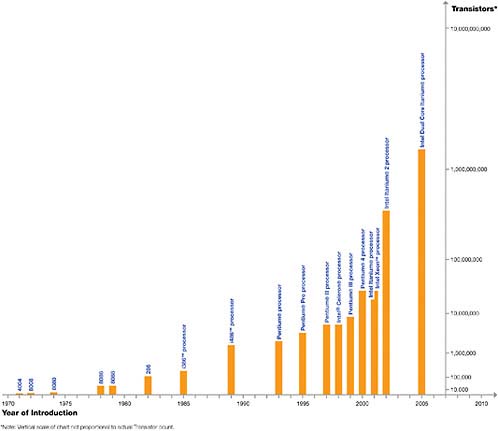

Extrapolation and trend analysis rely on historical data to gain insight into future developments. This type of forecast assumes that the future represents a logical extension of the past and that predictions can be made by identifying and extrapolating the appropriate trends from the available data. This type of forecasting can work well in certain situations, but the driving forces that shaped the historical trends must be carefully considered. If these drivers change substantially it may be more difficult to generate meaningful forecasts from historical data by extrapolation (see Figure 2-1). Trend extrapolation, substitution analysis, analogies, and morphological analysis are four different forecasting approaches that rely on historical data.

Trend Extrapolation

In trend extrapolation, data sets are analyzed with an eye to identifying relevant trends that can be extended in time to predict capability. Tracking changes in the measurements of interest is particularly useful. For example, Moore’s law holds that the historical rate of improvement of computer processing capability is a predictor of future performance (Moore, 1965). Several approaches to trend extrapolation have been developed over the years.
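As a minimal sketch of trend extrapolation, the following fits an exponential trend by ordinary least squares on the logarithm of a capability measure and extends it in time. The data are synthetic (a measure that doubles every two years), and the function names are illustrative assumptions rather than part of any method cited here.

```python
import math


def fit_exponential_trend(years, values):
    """Least-squares fit of ln(value) = a + b * year; returns (a, b)."""
    n = len(years)
    logs = [math.log(v) for v in values]
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def extrapolate(a, b, year):
    """Extend the fitted trend to a year outside the observed range."""
    return math.exp(a + b * year)


# Synthetic data: a capability measure that doubles every two years.
years = [2000, 2002, 2004, 2006, 2008]
values = [1.0, 2.0, 4.0, 8.0, 16.0]

a, b = fit_exponential_trend(years, values)
doubling_time = math.log(2) / b
forecast_2012 = extrapolate(a, b, 2012)
print(f"doubling time: {doubling_time:.2f} years, 2012 forecast: {forecast_2012:.1f}")
```

The forecast is only as good as the assumption that the drivers behind the historical trend persist over the extrapolation horizon.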

Gompertz and Fisher-Pry Substitution Analysis

Gompertz and Fisher-Pry substitution analysis is based on the observation that new technologies tend to follow a specific trend as they are deployed, developed, and reach maturity or market saturation. This trend is called a growth curve or S-curve (Kuznets, 1930). Gompertz and Fisher-Pry analyses are two techniques suited to fitting historical trend data to predict, among other things, when products are nearing maturity and likely to be replaced by new technology (Fisher and Pry, 1970; Lenz, 1970).
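A Fisher-Pry fit can be sketched as follows. The sketch assumes the commonly stated form of the model, f/(1 - f) = exp(b(t - t0)), where f is the market fraction held by the new technology and t0 is the year of 50 percent substitution; taking logarithms makes the relationship linear in t, so an ordinary least-squares line recovers b and t0. The data are synthetic and the function names are illustrative.

```python
import math


def predict_fraction(slope, midpoint, t):
    """Fisher-Pry S-curve: fraction of the market substituted at time t."""
    return 1.0 / (1.0 + math.exp(-slope * (t - midpoint)))


def fit_fisher_pry(times, fractions):
    """Fit ln(f / (1 - f)) = a + b * t; return (b, t0) with t0 = -a / b."""
    n = len(times)
    logits = [math.log(f / (1.0 - f)) for f in fractions]
    mean_t = sum(times) / n
    mean_y = sum(logits) / n
    stt = sum((t - mean_t) ** 2 for t in times)
    sty = sum((t - mean_t) * (y - mean_y) for t, y in zip(times, logits))
    slope = sty / stt
    intercept = mean_y - slope * mean_t
    return slope, -intercept / slope


# Synthetic early-adoption observations generated from a known curve.
times = [2004, 2006, 2008]
fractions = [predict_fraction(0.4, 2010.0, t) for t in times]

slope, midpoint = fit_fisher_pry(times, fractions)
year_90_percent = midpoint + math.log(9.0) / slope  # f = 0.9 gives f/(1-f) = 9
print(f"50% substitution near {midpoint:.1f}, 90% near {year_90_percent:.1f}")
```

A Gompertz curve could be fitted in a similar way with a different linearizing transform; it is not shown here.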

Analogies

Forecasting by analogy involves identifying past situations or technologies similar to the one of current interest and using historical data to project future developments. Research has shown that the accuracy of this forecasting technique can be improved by using a structured approach to identify the best analogies to use, wherein several possible analogies are identified and rated with respect to their relevance to the topic of interest (Green and Armstrong, 2004).

FIGURE 2-1 Moore’s law uses trend analysis to predict the price and performance of central processing units. SOURCE: Available at http://www.cyber-aspect.com/features/feature_article~art~104.htm.

Green and Armstrong proposed a five-step structured judgmental process. The first step is to have an administrator of the forecast define the target situation. An accurate and comprehensive definition is generated based on advice from unbiased experts or from experts with opposing biases. When feasible, a list of possible outcomes for the target is generated. The next step is to have the administrator select experts who are likely to know about situations that are similar to the target situation. Based on prior research, it is suggested that at least five experts participate (Armstrong, 2001). Once selected, experts are asked to identify and describe as many analogies as they can without considering the extent of the similarity to the target situation. Experts then rate how similar the analogies are to the target situation and match the outcomes of the analogies with possible outcomes of the target. An administrator would use a set of predefined rules to derive a forecast from the experts’ information. Predefined rules promote logical consistency and replicability of the forecast. An example of a rule could be to select the analogy that the experts rated as the most similar to the target and adopt the outcome implied by that analogy as the forecast (Green and Armstrong, 2007).
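The example rule described above (adopt the outcome of the analogy rated most similar) can be written down in a few lines. The analogies, the 0 to 10 rating scale, and the outcome labels below are invented for illustration; averaging the ratings across experts is one plausible aggregation choice, not one prescribed by the cited authors.

```python
from statistics import mean

# Hypothetical input: five experts rate each analogy's similarity to the
# target (0 = unrelated, 10 = near-identical) and the administrator records
# the outcome each analogy implies for the target.
analogies = {
    "analogy A": {"ratings": [8, 7, 9, 6, 8], "outcome": "rapid adoption"},
    "analogy B": {"ratings": [5, 6, 4, 7, 5], "outcome": "niche adoption"},
    "analogy C": {"ratings": [3, 2, 4, 3, 5], "outcome": "market failure"},
}


def forecast_by_most_similar(candidates):
    """Predefined rule: adopt the outcome of the highest-rated analogy."""
    best = max(candidates, key=lambda name: mean(candidates[name]["ratings"]))
    return best, candidates[best]["outcome"]


chosen, forecast = forecast_by_most_similar(analogies)
print(f"forecast: {forecast} (based on {chosen})")
```

Because the rule is fixed before the ratings are collected, a second administrator given the same ratings would reach the same forecast.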

Morphological Analysis (TRIZ)

An understanding of how technologies evolve over time can be used to project future developments. One technique, called TRIZ (from the Russian teoriya resheniya izobretatelskikh zadatch, or the “inventor’s problem-solving theory”), uses the Laws of Technological Evolution, which describe how technologies change throughout their lifetimes because of innovation and other factors, leading to new products, applications, and technologies. The technique lends itself to forecasting in that it provides a structured process for projecting the future attributes of a present-day technology by assuming that the technology will change in accordance with the Laws of Technological Evolution, which may be summarized as follows:

-

Increasing degree of ideality. The degree of ideality is related to the cost/benefit ratio. Decreasing price and improving benefits result in improved performance, increased functionality, new applications, and broader adoption. The evolution of GPS from military application to everyday consumer electronics is an example of this law.

-

Nonuniform evolution of subsystems. The various parts of a system evolve based on needs, demands, and applications, resulting in the nonuniform evolution of the subsystem. The more complex the system, the higher the likelihood of nonuniformity of evolution. The development rate of desktop computer subsystems is a good example of nonuniform evolution. Processing speed, disk capacity, printing quality and speed, and communications bandwidth have all improved at nonuniform rates.

-

Transition to a higher level system. “This law explains the evolution of technological systems as the increasing complexity of a product or feature and multi-functionality” (Kappoth, 2007). This law can be used at the subsystem level as well, to identify whether existing hardware and components can be used in higher-level systems and achieve more functionality. The evolution of the microprocessor from Intel’s 4004 into today’s multicore processor is an example of transition to a higher-level system.

-

Increased flexibility. “Product trends show us the typical process of technology systems evolution is based on the dynamization of various components, functionalities, etc.” (Kappoth, 2007). As a technology moves from a rigid mode to a flexible mode, the system can have greater functionality and can adapt more easily to changing parameters.

-

Shortening of energy flow path. The energy flow path can become shorter when energy changes form (for example, thermal energy is transformed into mechanical energy) or when other energy parameters change. The transmission of information also follows this trend (Fey and Rivin, 2005). An example is the transition from physical transmission of text (letters, newspapers, magazines, and books), which requires many transformational and processing stages, to its electronic transmission (tweets, blogs, cellular phone text messaging, e-mail, Web sites, and e-books), which requires few if any transformational or processing stages.

-

Transition from macro- to microscale. System components can be replaced by smaller components and microstructures. The original ENIAC, built in 1946 with subsystems based on vacuum tubes and relays, weighed 27 tons and had only a fraction of the power of today’s ultralight laptop computers, which have silicon-based subsystems and weigh less than 3 pounds.

The TRIZ method is applied in the following stages (Kucharavy and De Guio, 2005):6

-

Analysis of system evolution. This stage involves studying the history of a technology to determine its maturity. It generates curves for metrics related to the maturity level such as the number of related inventions, the level of technical sophistication, and the S-curve, describing the cost/benefit ratio of the technology. Analysis of these curves can help to predict when one technology is likely to be replaced by another.

-

Roadmapping. This is the application of the above laws to forecast specific changes (innovations) related to the technology.

-

Problem formulation. The engineering problems that must be addressed to realize the evolutionary changes predicted in the roadmapping stage are then identified. It is in this stage that technological breakthroughs needed to realize future technologies are specified.

-

Problem solving. Many forecasts would terminate in the problem formulation stage since it is generally not the purpose of a forecast to produce inventions. In spite of this, TRIZ often continues. This last stage involves an attempt to solve the engineering problems associated with the evolution of a technology. Although the attempt might not result in an actual invention, it is likely to come up with valuable information on research directions and the probability of eventual success in overcoming technological hurdles.

6 More information on the TRIZ method is available from http://www.inventioneeringco.com/. Last accessed July 21, 2009.

Models

These methods are analogous to developing and solving a set of equations describing some physical phenomenon. It is assumed sufficient information is available to construct and solve a model that will lead to a forecast at some time in the future; this is sometimes referred to as a “causal” model. The use of computers enables the construction and solution of increasingly complex models, but the complexity is tempered by the lack of a theory describing socioeconomic change, which introduces uncertainty. The specific forecast produced by the model is not as important as the trends it reveals or its response to different inputs and assumptions.

The following sections outline some model-based techniques that may be useful for forecasting disruptive technology. Some of them were used in the past for forecasting technology, with varying success.

Theory of Increasing Returns

Businesses that produce traditional goods may suffer from the law of diminishing returns, which holds that as a product becomes more commonplace, its marginal opportunity cost (the cost of foregoing one more unit of the next best alternative) increases proportionately. This is especially true when goods become commoditized through increased competition, as has happened with DVD players, flat screen televisions, and writable compact discs. Applying the usual laws of economics is often sufficient for forecasting the future behavior of markets. However, modern technology or knowledge-oriented businesses tend not to obey these laws and are instead governed by the law of increasing returns (Arthur, 1996), which holds that networks encourage the successful to be yet more successful. The value of a network explodes as its membership increases, and the value explosion attracts more members, compounding the results (Kelly, 1999). A positive feedback from the market for a certain technological product is often rewarded with a “lock-in.” Google, Facebook, and Apple’s iPhone and iPod are examples of this. A better product is usually unable to replace an older product immediately unless the newer product offers something substantially better in multiple dimensions, including price, quality, and convenience of use. In contrast, this does not happen in the “goods” world, where a slightly cheaper product is likely to threaten the incumbent product.

Although the law of increasing returns helps to model hi-tech knowledge situations, it is still difficult to predict whether a new technology will dislodge an older product. This is because success of the newer product depends on many factors, some not technological. Arthur mentions that people have proposed sophisticated techniques from qualitative dynamics and probability theory for studying the phenomenon of increasing returns and, thus, perhaps to some extent, disruptive technologies.

Chaos Theory and Artificial Neural Networks

Clement Wang and his colleagues propose that there is a strong relationship between chaos theory and technology evolution (Wang et al., 1999). They claim that technology evolution can be modeled as a nonlinear process exhibiting bifurcation, transient chaos, and ordered state. What chaos theory reveals, especially through bifurcation patterns, is that the future performance of a system often follows a complex, repetitive pattern rather than a linear process. They further claim that traditional forecasting techniques fail mainly because they depend on the assumption that there is a logical structure whereby the past can be extrapolated to the future. The authors then report that existing methods for technology forecasting have been shown to be very vulnerable when coping with the real turbulent world (Porter et al., 1991).

Chaos theory characterizes deterministic randomness, which indeed exists in the initial stages of technology phase transition.7 It is during this transition that a technology will have one of three outcomes: It will have a material impact, it will incrementally improve the status quo, or it will fail and go into oblivion. A particular technology exists in one of three states: (1) static equilibrium, (2) instability or a state of phase transition (bifurcation), and (3) bounded irregularity (chaos state). Bifurcations model sudden changes in qualitative behavior in technology evolution and have been classified as pitchfork bifurcation (gradual change, such as the switch from disk operating system [DOS] to Windows or the uniplexed information and computing system [UNIX]); explosive bifurcation (dramatic change, such as the digital camera disrupting the analog camera); and reverse periodic-adding bifurcation (dominant design, such as the Blu-ray Disc superseding the high-definition digital video disc [HD-DVD] format).
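The notions of equilibrium, bifurcation, and bounded irregularity can be illustrated with the logistic map, x(n+1) = r x(n) (1 - x(n)), a standard textbook example of a deterministic system that moves from a stable state through period doubling into chaos as the parameter r grows. This is only an illustration of the vocabulary used above; it is not the model proposed by Wang and colleagues, and the parameter values are the usual textbook choices.

```python
def long_run_values(r, x0=0.2, burn_in=2000, keep=64, digits=6):
    """Iterate x -> r * x * (1 - x) and return the distinct long-run states."""
    x = x0
    for _ in range(burn_in):      # discard the transient behavior
        x = r * x * (1.0 - x)
    seen = set()
    for _ in range(keep):         # record the states the system settles into
        x = r * x * (1.0 - x)
        seen.add(round(x, digits))
    return sorted(seen)


for r in (2.8, 3.2, 3.5, 3.9):
    states = long_run_values(r)
    print(f"r = {r}: {len(states)} distinct long-run state(s)")
```

At r = 2.8 the system rests at a single equilibrium; at r = 3.2 and r = 3.5 it has bifurcated into cycles of two and four states; at r = 3.9 it wanders irregularly within fixed bounds.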

Wang and colleagues propose a promising mathematical model to aid in forecasting that uses a neural network to perform pattern recognition on available data sets. This model is useful because chaos is very sensitive to initial conditions. The basic methodology for applying artificial neural networks to technology forecasting follows these high-level steps: (1) preprocessing the data to reduce the analytic load on the network, (2) training the neural network, (3) testing the network, and (4) forecasting using the neural network. The proposed neural network model contains input and output processing layers, as well as multiple hidden layers connected to the input and output layers by several connections with weights that form the memory of the network. These are trained continuously when existing and historical data are run on the neural networks, perhaps enabling the detection of some basic patterns of technology evolution and improvement of the quality of forecasts.
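The four high-level steps listed above (preprocess, train, test, forecast) can be sketched with a very small network written in plain Python. This is an illustrative toy under stated assumptions, not the model of Wang and colleagues: it uses a single hidden layer rather than several, a synthetic S-shaped series, a sliding window of three past values as input, and arbitrarily chosen sizes and learning rate.

```python
import math
import random


def min_max_scale(series):
    """Step 1, preprocessing: rescale the series to the range [0, 1]."""
    lo, hi = min(series), max(series)
    return [(v - lo) / (hi - lo) for v in series]


def make_windows(series, width):
    """Pair each run of `width` past values with the value that follows."""
    return [(series[i:i + width], series[i + width])
            for i in range(len(series) - width)]


class TinyNetwork:
    """One tanh hidden layer and a linear output; the weights are the memory."""

    def __init__(self, n_inputs, n_hidden, rng):
        self.w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
                         for _ in range(n_hidden)]
        self.b_hidden = [0.0] * n_hidden
        self.w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b_out = 0.0

    def forward(self, inputs):
        hidden = [math.tanh(sum(w * x for w, x in zip(ws, inputs)) + b)
                  for ws, b in zip(self.w_hidden, self.b_hidden)]
        output = sum(w * h for w, h in zip(self.w_out, hidden)) + self.b_out
        return hidden, output

    def train_step(self, inputs, target, lr):
        """One stochastic gradient descent step on squared error."""
        hidden, output = self.forward(inputs)
        error = output - target
        for j, h in enumerate(hidden):
            grad_hidden = error * self.w_out[j] * (1.0 - h * h)
            self.w_out[j] -= lr * error * h
            self.b_hidden[j] -= lr * grad_hidden
            for i, x in enumerate(inputs):
                self.w_hidden[j][i] -= lr * grad_hidden * x
        self.b_out -= lr * error


def mean_squared_error(net, samples):
    return sum((net.forward(x)[1] - y) ** 2 for x, y in samples) / len(samples)


# Synthetic S-shaped performance history (an assumption, not real data).
raw = [100.0 / (1.0 + math.exp(-0.5 * (t - 12))) for t in range(25)]
scaled = min_max_scale(raw)
samples = make_windows(scaled, width=3)
train, test = samples[:16], samples[16:]     # chronological split

net = TinyNetwork(n_inputs=3, n_hidden=4, rng=random.Random(0))
mse_before = mean_squared_error(net, train)
for _ in range(500):                         # Step 2, training
    for x, y in train:
        net.train_step(x, y, lr=0.05)
mse_after = mean_squared_error(net, train)
test_mse = mean_squared_error(net, test)     # Step 3, testing on held-out data
next_scaled = net.forward(scaled[-3:])[1]    # Step 4, forecasting one step ahead
print(f"train MSE {mse_before:.4f} -> {mse_after:.4f}, test MSE {test_mse:.4f}")
```

The held-out samples come from the flat end of the curve, which the network sees little of during training, so the test error is a reminder that a network extrapolates poorly beyond the patterns in its training set.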

It is possible to imagine utilizing some of these approaches for modeling disruptive technology evolution and forecasting future disruptions. They require, however, a great deal of validated data, such as the data available from the Institute for the Future (IFTF), to produce credible results.8 Besides needing large and diverse validated training sets, artificial neural networks require significant computational power. Users of these networks should be mindful that these automated approaches could amplify biases inherent in the algorithms and in the training data set. These networks can reinforce bias patterns found in the data and in their users, causing the network to produce unwarranted correlations and pattern identifications. It is important that both the algorithms and the data be reviewed frequently to reduce bias.

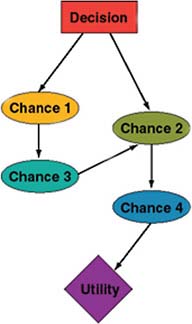

Influence Diagrams

An influence diagram (ID) is a compact graphical and mathematical representation of a decision situation.9 In this approach, the cause-effect relationships and associated uncertainties of the key factors contributing to a decision are modeled by an interconnection graph, known as an influence diagram (Howard and Matheson, 1981). Such diagrams are generalizations of Bayesian networks and therefore useful for solving decision-making problems and probabilistic inference problems. They are considered improved variants of decision trees or game trees.

The formal semantics of an influence diagram are based on the construction of nodes and arcs, which allow specifying all probabilistic independencies between key factors that are likely to influence the success or failure of an outcome. Nodes in the diagram are categorized as (1) decision nodes (corresponding to each decision to be made), (2) uncertainty or chance nodes (corresponding to each uncertainty to be modeled), or (3) value or utility nodes (corresponding to the assigned value or utility to a particular outcome in a certain state), as shown in Figure 2-2. Arcs are classified as (1) functional arcs to a value node, indicating that one of the components of the additively separable utility function is a function of all the nodes at their tails; (2) conditional arcs to an uncertainty node, indicating that the uncertainty at their heads is probabilistically conditioned on all the nodes at their tails; (3) conditional arcs to a deterministic node, indicating that the uncertainty at their heads is deterministically conditioned on all the nodes at their tails; and (4) informational arcs to a decision node, indicating that the decision at their heads is made with the outcome of all the nodes at their tails known beforehand.

FIGURE 2-2 Example of an influence diagram. SOURCE: Adapted from Lee and Bradshaw (2004).

7 “Technology phase transition” is the process in which one technology replaces another.

8 An example of an influence diagram can be found at the IFTF Future Now Web site at http://www.iftf.org/futurenow. Last accessed October 28, 2008.

9 Available at http://en.wikipedia.org/wiki/Influence_diagram. Last accessed July 15, 2009.

In an influence diagram, the decision nodes and incoming information arcs model the alternatives; the uncertainty or deterministic nodes and incoming conditional arcs model the probabilistic and known relationships in the information that is available; and the value nodes and the incoming functional arcs quantify how one outcome is preferred over another. The d-separation criterion of Bayesian networks—meaning that every node is probabilistically independent of its nonsuccessor nodes in the graph given the outcome of its immediate predecessor nodes in the graph—is useful in the analysis of influence diagrams.

An influence diagram can be a useful tool for modeling various factors that influence technology evolution; however, its ability to accurately predict an outcome depends on the quality of the values assigned to uncertainty, utility, and other parameters. If data can be used to help identify the various nodes in the influence diagrams and polled expert opinion can be funneled into the value assignments for uncertainty and utility, then a plethora of tools known to the decision-theoretic community could be used to forecast a proposed event in the future. The error bars in such forecasting will depend on the quality of the values that capture the underlying interrelationships between the different factors and decision subprocesses.

Influence diagrams are an effective way to map and visualize the multiple pathways along which technologies can evolve or from which they can emerge. Forecasters can assess the conditions and likelihoods under which a technology may emerge, develop, and impact a market or system. By using the decision nodes and informational arcs as signposts, they can also use influence diagrams to help track potentially disruptive technologies.

Scenarios and Simulations

Scenarios are tools for understanding the complex interaction of a variety of forces that come together to create an uncertain future. In essence, scenarios are stories about alternative futures focused on the forecasting problem at hand. As a formal methodology, scenarios were first used at the RAND Corporation in the early days of the Cold War. Herman Kahn, who later founded the Hudson Institute, pioneered their implementation as he thought through the logic of nuclear deterrence. His controversial book On Thermonuclear War was one of the first published applications of rigorous scenario planning (Kahn, 1960). The title of the follow-up edition, Thinking About the Unthinkable, suggests the value of the method: forcing oneself to think through alternative possibilities, even those that at first seem unthinkable (Kahn, 1962). It was a methodology particularly well suited to a surprise-filled world. Indeed, Kahn would often begin with a surprise-free scenario and take it from there, recognizing along the way that a surprise-free future was in fact quite unlikely.

In technology forecasting, scenarios have been used to explore the development paths of technologies as well as how they roll out into the world. The first case, using scenarios to anticipate development paths, entails a study of the fundamental science or engineering and its evolution, the tools needed to develop the technology, and the applications that will drive its economics. To forecast the future of the semiconductor while the technology was in its infancy required an understanding of solid state physics, silicon manufacturing, and the need for small guidance systems on U.S. missiles, all of which were critical to the early days of the microchip.

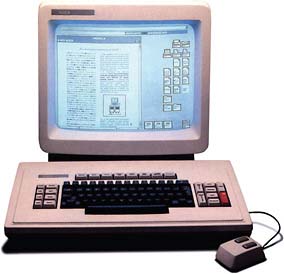

Using scenarios to forecast how technologies will play out in the real world calls for understanding the potential performance of the new technology and how users and other stakeholders will apply it. Early efforts to forecast the future of new technologies such as the personal computer, the cell phone, and the Internet missed the market because forecasters did not imagine that falling prices and network effects would combine to increase the value of the technology. These failed forecasts resulted in enormous business losses. IBM ultimately lost the personal computer market, and AT&T, despite its early dominance, never controlled the cell phone or the Internet market because it could not imagine the potential of the new technology. Xerox never capitalized on its pioneering work in graphical user interfaces (GUIs) because the company’s management never saw how the GUI could be combined with the revolution in personal computing to reshape the core of its document business (see Figure 2-3).

FIGURE 2-3 Xerox’s Star system, which pioneered the GUI and the mouse. SOURCE: Image courtesy of Bruce Damer, DigiBarn Computer Museum, and Xerox Corporation.

In another approach, “backcasting,” planners envision various future scenarios and then go back to explore the paths that could lead them there from the present,10 examining the paths, decisions, investments, and breakthroughs along the way. Backcasting is a unique form of forecasting. Unlike many forecasting methods that begin by analyzing current trends and needs and follow through with a projection of the future, backcasting starts with a projection of the future and works back to the present. The grounding in the future allows the analysis of the paths from the present to a future to be more concrete and constrained.

Forecasting centering on backcasting would start by understanding the concerns of the stakeholders and casting those concerns in the context of alternative futures. Scenarios could then be envisioned that describe what the world would look like then: Sample scenarios could include a world without oil; wars fought principally by automated forces, drones, and robots; or a world with a single currency. Forecasters then would work backward to the present and generate a roadmap for the future scenario. The purpose of the backcast would be to identify signposts or tipping points that might serve as leading indicators. These signposts could be tracked with a system that alerts users to significant events much as Google News monitors the news for topics that interest users.
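The backward walk from a future scenario to the present can be sketched as a traversal of a precondition map. This is only an illustration: the scenario name comes from the sample scenarios above, but every intermediate milestone and the `backcast` helper are hypothetical.

```python
from collections import deque

# Hypothetical precondition map: each milestone lists what must happen first.
PRECONDITIONS = {
    "world without oil": ["cheap grid storage", "electrified transport"],
    "electrified transport": ["low-cost batteries"],
    "cheap grid storage": ["low-cost batteries"],
    "low-cost batteries": ["present"],
}

def backcast(future, preconditions):
    """Walk backward from the future scenario, breadth first.

    Returns (steps_back, milestone) pairs; each milestone is a candidate
    signpost, and steps_back is its distance from the future scenario.
    """
    seen = {future}
    queue = deque([(future, 0)])
    signposts = []
    while queue:
        node, depth = queue.popleft()
        for prereq in preconditions.get(node, []):
            if prereq in seen:
                continue
            seen.add(prereq)
            if prereq != "present":
                signposts.append((depth + 1, prereq))
            queue.append((prereq, depth + 1))
    return signposts

signposts = backcast("world without oil", PRECONDITIONS)
# Reverse so the roadmap reads from the present toward the future scenario.
for steps_back, milestone in reversed(signposts):
    print(steps_back, milestone)
```

Milestones farthest from the future scenario are the ones closest to the present, so they are natural candidates for the earliest leading indicators to track.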

There are many challenges involved in executing an effective backcast. Matching the range of potential alternative futures with the range of stakeholder concerns is difficult but extremely important for making sure the backcast scenarios are relevant. The number of backcasts required for a forecast can become unwieldy, especially if there are numerous stakeholders with diverse needs and concerns and multiple pathways for arriving at a particular future. Backcasting requires an imaginative expert and/or a crowd base to generate truly disruptive scenarios and signposts.

Dynamic Simulations and War Games

Military leaders have attempted to simulate the coming battle as a training exercise since the earliest days of organized combat. The modern war games used in technology forecasting are another way of generating and testing scenarios. The military uses war games and simulations to test new technologies and applications and to better understand how they might change military strategy and tactics. War games are useful tools when human judgment plays an important role in how a technology is deployed. They pit an engineered or technological capability against the responses of various actors, who are played by live people and interact with one another over time to shape the outcome. For example, Monsanto might wish to simulate a political situation to anticipate the reaction to genetically modified seeds.11 Careful selection of the stakeholders involved in the simulation might have anticipated adverse public reaction. A significant market opportunity would be lost if the company faced global opposition to its genetically modified rice.

Other Modern Forecasting Techniques

New capabilities and challenges lead to the creation of new forecasting techniques. For example, the ability of the Internet to create online markets has opened new ways to integrate judgment into a prediction market (see below for a definition). Meanwhile, the rapid advance of engineering in sometimes surprising directions, such as sensing and manipulating matter and energy at a nanometer scale, opens up major discontinuities in potential forecasts, posing particularly difficult problems. The committee suggests considering the following techniques for forecasting disruptive technologies.

Prediction Markets

Prediction markets involve treating the predictions about an event or parameter as assets to be traded on a virtual market that can be accessed by a number of individuals (Wolfers and Zitzewitz, 2004).12 The final market value of the asset is taken to be indicative of its likelihood of occurring. Because prediction markets can run constantly, they are well suited to a persistent forecasting system. The market naturally responds to changes in the data on which it is based, so that the event’s probability and predicted outcome are updated in real time.

10 Available from http://en.wikipedia.org/wiki/Backcasting. Last accessed May 6, 2009.

11 Available from www.planetarks.org/monsanto/goingahead.cfm. Last accessed April 28, 2009.

12 An example of a contemporary prediction market is available from http://www.intrade.com/. Last accessed October 28, 2008.

Prediction markets use a structured approach to aggregate a large number of individual predictions and opinions about the future. Each new individual prediction affects the forecasted outcome. A prediction market automatically recomputes a forecast as soon as a new prediction is put into the system. One advantage of prediction markets over other forecasting techniques such as the Delphi method is that participation does not need to be managed. The participants take part whenever they want, usually when they obtain new information about a prediction or gain an insight into it.
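One way to see how a market can recompute its forecast after every trade is the logarithmic market scoring rule (LMSR), a well-known automated market maker. The report does not say which mechanism any particular market uses, so the choice of LMSR, the `LMSRMarket` class, and the liquidity value below are all assumptions made for illustration.

```python
import math

class LMSRMarket:
    """Automated market maker using the logarithmic market scoring rule.

    Cost function: C(q) = b * ln(sum_i exp(q_i / b)), where q_i is the number
    of shares sold for outcome i and b is a liquidity parameter (assumed here).
    """
    def __init__(self, outcomes, liquidity=100.0):
        self.b = liquidity
        self.shares = {outcome: 0.0 for outcome in outcomes}

    def _cost(self):
        return self.b * math.log(
            sum(math.exp(q / self.b) for q in self.shares.values()))

    def prices(self):
        """Current prices, which sum to 1 and are read as probabilities."""
        weights = {o: math.exp(q / self.b) for o, q in self.shares.items()}
        total = sum(weights.values())
        return {o: w / total for o, w in weights.items()}

    def buy(self, outcome, quantity):
        """Buy shares of one outcome; returns what the trader pays."""
        before = self._cost()
        self.shares[outcome] += quantity
        return self._cost() - before

market = LMSRMarket(["yes", "no"])
print(market.prices())             # starts with equal prices for both outcomes
paid = market.buy("yes", 50.0)     # a participant acts on new information
print(paid, market.prices())       # the forecast shifts toward "yes" at once
```

Each purchase moves the price of the purchased outcome up, so the market's implied probability is updated as soon as a participant trades, with no facilitator managing rounds of input.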

Prediction markets may benefit from other forecasting techniques that generate a signpost, such as backcasting and influence diagrams. Describing the signpost that appears when a particular point has been reached in a prediction market may help to encourage market activity around a prediction (Strong et al., 2007). One shortcoming of prediction markets is that it is difficult to formulate some forecasting problems in the context of a market variable. Some believe, moreover, that prediction markets are not ideal for long-term disruptive technology forecasts, where uncertainty is high, the probabilities of individual outcomes are very low, signals and signposts are sparse, and disruptions caused by technology and signposts can set in rapidly and nonlinearly (Graefe and Weinhardt, 2008).

Alternate Reality Games

With the advent of the ability to create simulated worlds in a highly distributed network, multiplayer system, alternate realities have begun to emerge online. An example of an alternate reality game (ARG) is a simulation run by the Institute for the Future (IFTF), World Without Oil (Figure 2-4). Simulations like this can be used to test possibilities that do not yet exist. ARGs bring together scenarios, war games, and computer simulations in one integrated approach. Progress is made in ARGs when both first-order changes and second-order13 effects are observed and recorded during game play. ARGs can enhance a forecast by revealing the impact of potential alternative future scenarios through game play and role playing.

FIGURE 2-4 Screen shot from World Without Oil. SOURCE: Image courtesy of Ken Eklund, game designer, creative director, and producer.

Online Forecasting Communities

The Internet makes it possible to create online communities that, like prediction markets, engage in continuous forecasting activities. Techcast, a research project at George Washington University led by William E. Halal, has been online since 1998. It has developed into a service billing itself as a “virtual think tank” and provides a range of services for a fee. Like any such service, its success depends on the quality of the individuals it brings together and its effectiveness in integrating their combined judgment. The endurance and continuing refinement of Techcast suggest that it is becoming more useful with time.

Obsolescence Forecasting

Occasionally, major technologies are made obsolete by fundamental advances in new technology. The steam engine, for instance, gave way to electric motors to power factories, and the steamship eliminated commercial sailing. An interesting question about the future would be to ask how key current technologies might be made obsolete. Will the electric drive make the gasoline engine obsolete? Will broadcast television be made obsolete by the Internet? What would make the Internet, the cell phone, or aspirin obsolete? These are questions whose answers can already be foreseen. Asking them may reveal the potential for major discontinuities. Obsolescence forecasting can be considered a form of backcasting, because forecasters envision a scenario intruded on by an obsolescing technology, then explore how it happened.

Time Frame for Technology Forecasts

It is important to understand that the time frame for a forecast will depend on the type of technology as well as on the planning horizon of the decision maker. For example, decisions on software may move much faster than decisions on agricultural genetic research because of different development times and life cycles. Generally, the longer the time frame covered by a technology forecast, the more uncertain the forecast.

Short Term

Short-term forecasts are those that focus on the near future (within 5 years of the present) to gain an understanding of the immediate world based on a reasonably clear picture of available technologies. Most of the critical underpinning elements are understood, and in a large fraction of cases these forecasts support implementations. For example, a decision to invest in a semiconductor fabrication facility is based on a clear understanding of the technologies available within a short time frame.

Medium Term

Medium-term forecasts are for the intermediate future (typically within 5 to 10 years of the present) and can be characterized, albeit with some gaps in information, using a fairly well understood knowledge base of technology trends, environmental conditions, and competitive environments. These forecasts may emerge from ongoing research programs and can take into account understandings of current investments in manufacturing facilities or expected outcomes from short-term R&D efforts. Assumptions derived from understanding the environment for a particular technology can be integrated into a quantitative forecast for the industry to use in revenue assessments and investment decisions.

13 Second-order effects are the unintended consequences of a new technology that often have a more powerful impact on society than the more obvious first-order changes. Available at www.cooper.com/journal/2001/04/the_secondorder_effects_of_wir.html. Last accessed July 12, 2009.

Long Term

Long-term forecasts are forecasts of the deep future. The deep future is characterized by great uncertainty in how current visions, signposts, and events will evolve and the likelihood of unforeseen advances in technology and its applications. These forecasts are critical because they provide scenarios to help frame long-term strategic planning efforts and decisions and can assist in the development of a portfolio approach to long-term resource allocation.

While long-term forecasts are by nature highly uncertain, they help decision makers think about potential futures, strategic choices, and the ramifications of disruptive technologies.

CONCLUSION

Modern technological forecasting has been used only since the end of WWII. In the last 50 years, technology forecasts have helped decision makers better understand potential technological developments and diffusion paths. The range of forecasting methods has grown and includes rigorous mathematical models, organized opinions such as those produced by the Delphi method, and the creative output of scenarios and war games. While each method has strengths, the committee believes no single method of technology forecasting is fully adequate for addressing the range of issues, challenges, and needs that decision makers face today. Instead, it believes that a combination of methods used in a persistent and open forecasting system will improve the accuracy and usefulness of forecasts.

REFERENCES

Adler, M., and E. Ziglio, eds. 1996. Gazing into the Oracle: The Delphi Method and Its Application to Social Policy and Public Health. London: Kingsley.

Armstrong, J.S. 2001. Combining forecasts. In J.S. Armstrong, ed., Principles of Forecasting, Boston, Mass.: Kluwer Academic Publishers.

Arthur, W. Brian. 1996. Increasing returns and the new world of business. Harvard Business Review (July-August).

Balachandra, Ramaiya. 1980. Technological forecasting: Who does it and how useful is it? Technological Forecasting and Social Change 16: 75-85.

Dalkey, Norman C. 1967. DELPHI. Santa Monica, Calif.: RAND Corporation.

Dalkey, Norman C., Bernice B. Brown, and S.W. Cochran. 1969. The Delphi Method, III: Use of Self-Ratings to Improve Group Estimates. Santa Monica, Calif.: RAND Corporation.

Dennis, Robin L. 1987. Forecasting errors: The importance of the decision-making context. Climatic Change 11: 81-96.

Fey, Victor, and Eugene Rivin. 2005. Innovation on Demand: New Product Development Using TRIZ. New York: Cambridge University Press.

Fisher, J.C., and R.H. Pry. 1970. A simple substitution model of technological change. Technological Forecasting and Social Change 3(1): 75-78.

Gordon, Theodore J., and Olaf Helmer. 1964. Report on a Long-Range Forecasting Study. Santa Monica, Calif.: RAND Corporation.

Graefe, Andreas, and Christof Weinhardt. 2008. Long-term forecasting with prediction markets—A field experiment on applicability and expert confidence. The Journal of Prediction Markets 2(2): 71-91.

Green, Kesten C., and J. Scott Armstrong. 2004. Structured analogies for forecasting. Working Paper 17, Monash University Department of Econometrics and Business Statistics. September 16.

Green, Kesten C., and J. Scott Armstrong. 2007. Structured analogies for forecasting. International Journal of Forecasting 23(3): 365-376.

Howard, Ronald A., and James E. Matheson. 1981. Influence diagrams. Pp. 721-762 in Readings on the Principles and Applications of Decision Analysis, vol. I (1984), Howard, Ronald A., and James E. Matheson, eds., Menlo Park, Calif.: Strategic Decisions Group.

Kahn, Herman. 1960. On Thermonuclear War. Princeton, N.J.: Princeton University Press.

Kahn, Herman. 1962. Thinking About the Unthinkable. New York: Horizon Press.

Kappoth, Prakasan. 2007. Design features for next generation technology products. TRIZ Journal. Available at http://www.triz-journal.com/archives/2007/11/05/. Last accessed October 29, 2009.

Kelly, Kevin. 1999. New Rules for the New Economy: 10 Radical Strategies for a Connected World. New York: Penguin Books. Available at http://www.kk.org/newrules/index.php. Last accessed July 21, 2009.

Kucharavy, Dmitry, and Roland De Guio. 2005. Problems of forecast. Presented at ETRIA TRIZ Future 2005, Graz, Austria, November 16-18. Available at http://hal.archives-ouvertes.fr/hal-00282761/fr/. Last accessed November 11, 2008.

Kuznets, S. 1930. Secular Movements in Production and Prices: Their Nature and Their Bearing on Cyclical Fluctuations. Boston, Mass.: Houghton & Mifflin.

Lee, Danny C., and Gay A. Bradshaw. 2004. Making monitoring work for managers. Available at http://www.fs.fed.us/psw/topics/fire_science/craft/craft/Resources/Lee_Bradshaw_monitoring.pdf. Last accessed November 3, 2008.

Lenz, R.C. 1970. Practical application of technical trend forecasting. In The Science of Managing Organized Technology, M.J. Cetron and J.D. Goldhar, eds., New York: Gordon and Breach, pp. 825-854.

Linstone, Harold A., and Murray Turoff. 1975. The Delphi Method: Techniques and Application. Reading, Mass.: Addison-Wesley.

Martino, Joseph P. 1969. An Introduction to Technological Forecasting. London: Gordon and Breach Publishers.

Martino, Joseph P. 1983. Technological Forecasting for Decision Making. Amsterdam, The Netherlands: North-Holland Publishing.

Martino, Joseph P. 1987. Recent developments in technological forecasting. Climatic Change 11: 211-235.

Martino, Joseph P. 1999. Thirty years of change and stability. Technological Forecasting and Social Change 62: 13-18.

Moore, Gordon E. 1965. Cramming more components onto integrated circuits. Electronics 38(8).

Neufeld, Jacob, George M. Watson Jr., and David Chenoweth. 1997. Technology and the Air Force: A Retrospective Assessment. Washington, D.C.: U.S. Government Printing Office, p. 41.

Porter, Alan L., Thomas A. Roper, Thomas W. Mason, Frederick A. Rossini, and Jerry Banks. 1991. Forecasting and Management of Technology. New York: John Wiley & Sons.

Rowe, Gene, and George Wright. 1999. The Delphi technique as a forecasting tool: Issues and analysis. International Journal of Forecasting 15(4): 353-375.

Stewart, Thomas R. 1987. The DELPHI technique and judgmental forecasting. Climatic Change 11: 97-113.

Strong, R., L. Proctor, J. Tang, and R. Zhou. 2007. Signpost generation in strategic technology forecasting. In Proceedings of the 16th International Conference for the International Association of Management Technology (IAMOT), pp. 2312-2331.

Vanston, John H. 2003. Better forecasts, better plans, better results. Research Technology Management 46(1): 47-58.

Vanston, John H., and Lawrence K. Vanston. 2004. Testing the tea leaves: Evaluating the validity of forecasts. Research Technology Management 47(5): 33-39.

von Karman, Theodore. 1945. Science: The Key to Air Supremacy, summary vol., Toward New Horizons: A Report to General of the Army H.H. Arnold, Submitted on behalf of the A.A.F. Scientific Advisory Group (Wright Field, Dayton, Ohio: Air Materiel Command Publications Branch, Intelligence, T-2). 15 December.

Wang, Clement, Xuanrui Liu, and Daoling Xu. 1999. Chaos theory in technology forecasting. Paper MC-07.4. Portland International Conference on the Management of Engineering and Technology. Portland, Oregon. July 25-29.

Wolfers, Justin, and Eric Zitzewitz. 2004. Prediction markets. National Bureau of Economic Research, Working paper W10504. Available at http://www.nber.org/papers/w10504. Last accessed October 28, 2008.