In an ideal world, scientists and the public would have exact, complete, and uncontested information on the factors responsible for pollution-related environmental and human health problems. Such information and analyses would allow decision makers to determine accurately and precisely which regulatory controls would produce measurable benefits to human health and the environment, what those controls would cost, and over what time frame they would reduce exposure. Decision makers would be able to predict accurately whether contemplated regulations would lead to job losses or stimulate new programs, whether communities would be disrupted or energized, and whether regulation would unreasonably burden affected industries, state agencies, or tribes. Where such information was lacking, decision makers would at least have an analysis of all uncertainties in the information and of the expected impacts of those uncertainties on public health, the economy, and the public at large. Furthermore, in that ideal world time and resources would not be limited, and the requisite information and analyses would be available when needed, in the quality and quantity needed, so that decision makers could make decisions consistently on the basis of relevant data.

In reality, however, decision makers do not have perfect information on which to base decisions or to predict their impacts and consequences. Yet decisions must be made despite those uncertainties. The available data on the multiple factors, including human health risk, that shape environmental decisions rarely encompass all relevant considerations. For example, although the toxic effects of a test substance may be definitive and undisputed in laboratory animals, the potential risk to humans from environmental exposure to the same substance may be uncertain and controversial. Exposure levels for certain chemicals may be measurable and reproducible for populations in some locations but available only as modeled estimates in others. Investigators may have data showing that a pollutant is present in the soil at certain locations but lack the data to determine whether residents are exposed to it and, more importantly, whether they are exposed at potentially harmful levels. Sophisticated methods for quantifying uncertainty may be available for some categories of data but untested and of uncertain utility for others. Gathering additional information would require time and resources. As a result, uncertainty is always present in data and analysis, and decision making is invariably based on a combination of well-understood and less-well-understood information.

Consistent with its mission to “protect human health and the environment”1 (Box 1-1) (EPA, 2011), the U.S. Environmental Protection Agency (EPA) estimates the nature, magnitude, and likelihood of risks to human health and the environment; identifies potential regulatory actions for mitigating those risks and best protecting public health2 and the environment; and weighs the need to protect the public along with other factors when deciding on the appropriate regulatory action. Each of those steps involves uncertainties that can be estimated qualitatively, quantitatively, or both. A challenge for EPA is to determine how best to develop such estimates of uncertainty in data and analyses and how best to use them in its decision-making processes.

UNCERTAINTY AND ENVIRONMENTAL DECISION MAKING

Human health risk assessment3 is one of the most powerful tools EPA uses in making regulatory decisions to manage threats to health and the environment. Historically, the analysis and consideration of uncertainty in decision making at EPA have focused on the uncertainties in the data and analyses used in human health risk assessments, including those in the underlying sciences that make up the field, such as exposure science, toxicology, and modeling.

___________________

1 Many of the principles discussed in this report apply also to ecological risk assessment and decision making, but because the committee’s charge focuses on human health, the report addresses issues relating only to the human health component of EPA’s mission.

2 Throughout this report the committee uses the term public health when referring to EPA’s mission. The committee includes in the use of that term the whole population and individuals or individual subgroups within the whole population.

3 Human health risk assessment is a systematic process within which scientific and other information relating to the nature and magnitude of threats to human health is organized and evaluated (NRC, 1983).

BOX 1-1

The Mission of the U.S. Environmental Protection Agency

The mission of the U.S. Environmental Protection Agency (EPA) is to protect human health and the environment. Specifically, EPA’s purpose is to ensure that

• all Americans are protected from significant risks to human health and the environment where they live, learn, and work;

• national efforts to reduce environmental risk are based on the best available scientific information;

• federal laws protecting human health and the environment are enforced fairly and effectively;

• environmental protection is an integral consideration in U.S. policies concerning natural resources, human health, economic growth, energy, transportation, agriculture, industry, and international trade, and these factors are similarly considered in establishing environmental policy;

• all parts of society—communities, individuals, businesses, and state, local, and tribal governments—have access to accurate information sufficient to effectively participate in managing human health and environmental risks;

• environmental protection contributes to making our communities and ecosystems diverse, sustainable, and economically productive; and

• the United States plays a leadership role in working with other nations to protect the global environment.

SOURCE: EPA, 2011.

The typical goal of a human health risk assessment is to develop a statement—the risk characterization—regarding the likelihood or probability that exposures arising from a given source, or in some cases from multiple sources, will harm human health. The risk characterization should include a statement about the scientific uncertainties associated with the assessment and their effect on the assessment, including a clear description of the confidence that the technical experts have in the results. Such information is provided to decision makers at EPA for consideration in their regulatory decisions (EPA, 2000; NRC, 2009). That information is also made available to the public. Uncertainties in the health risk assessment could stem, for example, from questions about how data from animals exposed to a chemical or other agent relate to human exposures or from uncertainties in the relationship between chemical exposures, especially low-dose chemical exposures, and a given adverse health outcome.

The statutes and processes guiding decision making at EPA make it clear that uncertainties in data and analyses are legitimate and predictable aspects of the decision-making process. Congress, the courts, and advisory bodies such as the National Research Council have recognized the inevitability of uncertainties in human health risk assessment and environmental decision making and, in some instances, have urged EPA to give special attention to this aspect of the process. The origins, necessity, and legitimacy of uncertainty as an aspect of EPA decision making, therefore, have both legal and scientific underpinnings. Those bases are discussed below.

Legal Basis

To fulfill its mission, EPA promulgates regulations to administer congressionally mandated programs. Although the statutes that govern EPA do not always refer explicitly to uncertainty in human health risk assessments, a number of statutes clearly imply that the information available to EPA may be uncertain and permit the agency to rely on uncertain information in its rulemaking. In other words, the need for EPA to consider and account for uncertainty when promulgating regulations is implicit in the statutes under which the agency operates. For example, the statutes related to air4 and water5 recognize and allow for uncertainty in decision making by calling for health and environmental standards with an “adequate” or “ample” margin of safety. Other statutes require judgments relating to the “potential” for environmental harm and “a reasonable certainty that no harm will result.”6 Congress’s recognition of the uncertainty inherent in factors other than human health risks is also evident, for example, in such statements as “reasonably ascertainable economic consequences of the rule.”7 Such statements indicate a recognition by Congress that, at the time of a regulatory decision, data and information may be incomplete, controversial, or otherwise open to differing interpretations, or that use of the available data and information may require assumptions about future events and conditions that are unknown or uncertain at the time of the rulemaking. Although the statutory language may seem vague and incomplete, its incorporation into law by Congress indicates a recognition that EPA should have the discretion to interpret the statute and to develop approaches informed by agency experience, expertise, and decision-making needs.

___________________

4 Clean Air Act Amendments of 1990, Pub. L. No. 101-549 (1990).

5 Clean Water Act Amendments, Pub. L. No. 107-377, Sec. 1412(b)(3)(B)(iv) (1972).

6 Federal Insecticide, Fungicide, and Rodenticide Act of 1947, Pub. L. No. 80-104 (1947).

7 Toxic Substances Control Act of 1976, Pub. L. No. 94-469, Sec. 6(c)(D) (1976).

Furthermore, some provisions do explicitly mandate that EPA discuss uncertainties in reports to Congress and other entities. For example, the Clean Air Act (CAA)8 requires EPA to report to Congress on “any uncertainties in risk assessment methodology or other health assessment technique, and any negative health or environmental consequences to the community of efforts to reduce such risks.” The Clean Water Act (CWA) Amendments9 require EPA to specify, in a publicly available document, “to the extent practicable … each significant uncertainty identified in the process of the assessment of public health effects and studies that would assist in resolving the uncertainty.”

In addition, several statutes contain provisions that amplify and clarify legislative objectives with respect to the uncertainties associated with assessing human health risk. For example, the 1996 Food Quality Protection Act (FQPA) specifies the following for pesticide approvals: “In the case of threshold effects … an additional ten-fold margin of safety for the pesticide chemical residues shall be applied for infants and children.”10 The 1996 amendments to the Safe Drinking Water Act (SDWA) are similarly explicit about the presentation of human health risk estimates and uncertainty in those estimates:

The Administrator shall, in a document made available to the public in support of a regulation promulgated under this section, specify, to the extent practicable—

(i) Each population addressed by any estimate of public health effects;

(ii) The expected risk or central estimate of risk for the specific populations;

(iii) Each appropriate upper-bound or lower-bound estimate of risk;

(iv) Each significant uncertainty identified in the process of the assessment of public health effects and studies that would assist in resolving the uncertainty; and

(v) Peer-reviewed studies known to the Administrator that support, are directly relevant to, or fail to support any estimate of public health effects and the methodology used to reconcile inconsistencies in the scientific data.11
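The SDWA provisions above ask for an expected or central estimate of risk together with upper- and lower-bound estimates. As a purely illustrative sketch (not EPA's method), the Python below propagates two hypothetical lognormal inputs, an exposure dose and a dose–response slope, through a simple linear risk model by Monte Carlo simulation. It reports the mean (expected risk) and median as candidate central estimates and the 5th and 95th percentiles as lower and upper bounds. The function name `summarize_risk`, the distributions, and all parameter values are assumptions made for illustration only.

```python
import math
import random
import statistics


def summarize_risk(n_draws=10_000, seed=1):
    """Hypothetical Monte Carlo summary of an individual risk estimate.

    All inputs are illustrative assumptions, not values from any EPA assessment.
    """
    rng = random.Random(seed)  # fixed seed so the summary is reproducible
    risks = []
    for _ in range(n_draws):
        # Hypothetical exposure dose (mg/kg-day): lognormal with median 0.01.
        dose = rng.lognormvariate(math.log(0.01), 0.5)
        # Hypothetical dose-response slope (per mg/kg-day): lognormal with median 0.1.
        slope = rng.lognormvariate(math.log(0.1), 0.7)
        # Simple linear low-dose model: risk = dose x slope.
        risks.append(dose * slope)

    # quantiles(n=20) returns 19 cut points: index 0 is the 5th percentile
    # and the last index is the 95th percentile.
    cuts = statistics.quantiles(risks, n=20)
    return {
        "expected_risk": statistics.fmean(risks),  # mean of the simulated risks
        "central_estimate": statistics.median(risks),
        "lower_bound_5th": cuts[0],
        "upper_bound_95th": cuts[-1],
    }


if __name__ == "__main__":
    for name, value in summarize_risk().items():
        print(f"{name}: {value:.2e}")
```

Percentiles of a simulated distribution are only one way to express bounds; which central estimate (mean or median) and which percentiles to report remain matters of scientific and policy judgment that the quoted statutory text does not specify.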

Since EPA’s earliest days the courts have upheld the agency’s legal authority, and recognized its need, to account for uncertainty in its decision-making process. For example, in 1980 the U.S. Court of Appeals for the District of Columbia Circuit accepted and expanded on EPA’s need to account for uncertainty when it upheld the then-new National Ambient Air Quality Standard (NAAQS) for lead.12

___________________

8 CAA Amendments of 1990, Pub. L. No. 101-549, Sec. 112(f)(1)(C) (1990).

9 CWA Amendments, Pub. L. No. 107-377, Sec. 1412(b)(3)(B)(iv) (1972).

10 42 U.S.C. § 346a(b)(2)(C) (1996).

11 SDWA Amendments of 1996, Pub. L. No. 104-182, § 300g-1(b)(3) (1996).

12 40 CFR pt. 50.

In affirming EPA’s regulation under the CAA, the court pointed to “the Act’s precautionary and preventive orientation”13 and noted that “some uncertainty about the health effects of air pollution is inevitable”14 and that “Congress provided that the Administrator is to use his judgment in setting air quality standards precisely to permit him to act in the face of uncertainty.”15

Scientific Basis

As a discipline, science treats uncertainty as a natural and legitimate part of measurement methodology and, therefore, as an expected aspect of the technical data used in decision making. To make scientific progress, “uncertainty, discrepancy, and inconsistency” are often necessary to point the way to new lines of experimentation and new discoveries (Lindley, 2006). However, this graded and evolving state of scientific understanding and uncertainty differs markedly from the more binary and absolute world of the regulatory and courtroom decisions that surround EPA’s regulatory actions. To help bridge that gap, the scientific community has endeavored to provide regulatory decision makers with a more comprehensive view of risk estimates, and a number of scientific reports have focused on regulatory decision making and, to some extent, on the uncertainties inherent in the information that supports EPA’s human health regulatory decisions and on the implications of that uncertainty.

Over the past three decades two core themes, which were outlined in the seminal report Risk Assessment in the Federal Government: Managing the Process (hereafter the Red Book) (NRC, 1983), have shaped human health risk assessment and decision making at EPA: (1) the special meaning of “uncertainty” in relation to human health risk assessment and decision making; and (2) the interface between risk assessment and risk management, that is, the interface between estimating risks and deciding how to manage them. When discussing uncertainties, the Red Book focused on uncertainty in risk estimates and, to some extent, in economic analyses, stating that “there is often great uncertainty in estimates of the types, probability, and magnitude of health effects associated with a chemical agent, of the economic effects of a proposed regulatory action, and of the extent of current and possible future human exposures” (p. 11; emphasis added). The report highlights the need to include uncertainties in characterizations of health risks, stating, “The summary effects of the uncertainties in the preceding steps are described in this step” (p. 20; emphasis added). It also highlights the need to communicate uncertainty and the paucity of guidance on how to do so, stating, “The final expressions of risk derived in [the risk characterization] will be used by the regulatory decision maker. … Little guidance is available on how to express uncertainties in the underlying data and on which dose–response assessments and exposure estimates should be combined to give a final estimate of possible risks” (p. 36; emphasis added).16

___________________

13 Lead Industries Ass’n, Inc. v. EPA, 647 F.2d 1130, 1155 (D.C.Cir. 1980), 64 [http://openjurist.org/647/f2d/1130/lead-industries-association-inc-v-environmental-protection-agency].

14 Lead Industries Ass’n, Inc. v. EPA, 647 F.2d 1130, 1155 (D.C.Cir. 1980), 63 [http://openjurist.org/647/f2d/1130/lead-industries-association-inc-v-environmental-protection-agency].

15 Lead Industries Ass’n, Inc. v. EPA, 647 F.2d 1130, 1155 (D.C.Cir. 1980), 63 [http://openjurist.org/647/f2d/1130/lead-industries-association-inc-v-environmental-protection-agency].

More than a decade later, Science and Judgment in Risk Assessment (NRC, 1994), in response to its charge, focused on estimates of human health risks and on statistical methods for quantifying the uncertainty in those estimates. In line with those reports, EPA has devoted a great deal of attention to methods for quantifying and expressing the uncertainty in health risk estimates.

Understanding Risk: Informing Decisions in a Democratic Society (hereafter Understanding Risk) (NRC, 1996) discussed the uncertainty inherent in estimates of health risks in the broad context of regulatory decisions, including those made by EPA. That committee wrote, “Significant advances have been made in recent years in the development of analytical methods for evaluating, characterizing, and presenting uncertainty and for analyzing its components, and well documented guidance for conducting an uncertainty analysis is available” (p. 108). The committee focused, however, “on the role of uncertainty in risk characterization and the role that uncertainty analysis can play as part of an effective iterative process for assessing, deliberating, and understanding risks when discussing uncertainties” (p. 108). It also proposed that “[p]erhaps the most important need is to identify and focus on uncertainties that matter to understanding risk situations and making decisions about them,” and emphasized “the critical importance of social, cultural, and institutional factors in determining how uncertainties are considered, addressed, or ignored in the tasks that support risk characterization” (p. 108). In other words, Understanding Risk highlighted the subjective nature of interpreting uncertainty in human health risk estimates and showed how that subjectivity, which is influenced by social and cultural factors such as public values and preconceptions, can affect how the uncertainty is characterized.

___________________

16 Responding to this emphasis in that and subsequent NRC reports, EPA treats risk characterization as a fundamental element in risk assessment and, importantly, the place where uncertainties analyzed in the course of the assessment are collected and described for decision makers. For this reason, risk characterization in EPA guidance documents almost always incorporates uncertainties. Similarly, “transparency” almost always embraces the idea of full disclosure of uncertainties, and the rationale for options considered and choices made, among other things.

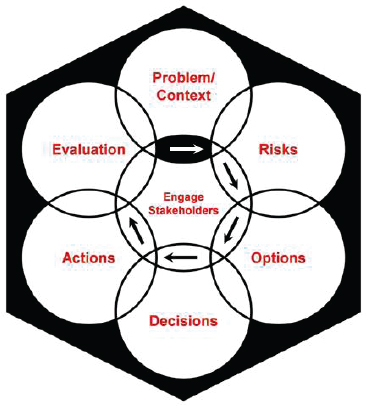

The final report of the Presidential/Congressional Commission on Risk Assessment and Risk Management (1997a) recommended a risk management framework geared toward environmental risk decisions (see Figure 1-1). The three main principles of the framework are (1) putting health

FIGURE 1-1 The Presidential/Congressional Commission on Risk Assessment and Risk Management’s framework for risk management decisions. The commission designed the framework “to help all types of risk managers—government officials, private-sector businesses, individual members of the public—make good risk management decisions.” The framework has six stages: define the problem and put it in context; analyze the risks associated with the problem in context; examine options for addressing the risks; make decisions about which options to implement; take actions to implement the decisions; and conduct an evaluation of the actions’ results. The framework should be conducted in collaboration with stakeholders and should use “iterations if new information is developed that changes the need for or nature of risk management.”

SOURCE: Presidential/Congressional Commission on Risk Assessment and Risk Management, 1997, p. 3.

and environmental problems in their larger, real-world contexts,17 (2) involving stakeholders “to the extent appropriate and feasible during all stages of the risk management process” (p. 6), and (3) providing risk managers (referred to as the decision makers in this report) and stakeholders with opportunities to revisit stages within the framework when new information emerges. When discussing uncertainty in health risk characterizations for routine risk assessments, the report recommended using qualitative descriptions of uncertainty rather than quantitative analyses, because it “is likely to be more understandable and useful than quantitative estimates or models to [decision makers] and the public” (p. 170). In Volume 2 of its report, the commission further discussed uncertainty analyses, focusing on the uncertainties in both health risk estimates and economic analyses (Presidential/Congressional Commission on Risk Assessment and Risk Management, 1997b). Studies have shown, however, that different people interpret qualitative descriptions differently (Budescu et al., 2009; Wallsten and Budescu, 1995; Wallsten et al., 1986). See Chapter 6 for further discussion.

Science and Decisions: Advancing Risk Assessment (NRC, 2009) emphasized that risk assessment is an applied science used to help evaluate risk management options, and, as such, assessments should be conducted with that purpose in mind. It further stated that “descriptions of the uncertainty and variability inherent in all risk assessments may be complex or relatively simple; the level of detail in the descriptions should align with what is needed to inform risk-management decisions” (p. 5). The report recommended that EPA adopt a three-phase framework and employ a “broad-based discussion of risk-management options early in the process, extensive stakeholder participation throughout the process, and consideration of life-cycle approaches in a broader array of agency programs” (p. 260). Phase III of the framework, the risk-management phase, includes identifying the factors other than human health risk estimates that affect and are affected by the regulatory decision. These include the factors discussed above that EPA is required by certain statutes to consider, such as technologies and costs. The report does not, however, discuss the uncertainties in those factors and how any such uncertainties should affect EPA’s decisions.

More recently, A Risk-Characterization Framework for Decision Making at the Food and Drug Administration (NRC, 2011) emphasized that “risk characterization should be decision-focused” and should describe “potential effects of alternative decisions on health rather than on comparing different health and environmental hazards” (p. 21). The report discussed the factors that, in addition to human health risks, are sometimes considered in the decisions of the Food and Drug Administration (FDA): social, political, and economic factors.18 Those factors often are not independent of the estimates of human health risks. The report briefly discussed uncertainty, focusing on the uncertainty in the characterization of risk rather than on the uncertainty in the other factors that play a role in FDA’s decision making.

___________________

17 “Evaluating problems in context involves evaluating different sources of a particular chemical or chemical exposure, considering other chemicals that could affect a particular risk or pose additional risks, assessing other similar risks, and evaluating the extent to which different exposures contribute to a particular health effect of concern” (Presidential/Congressional Commission on Risk Assessment and Risk Management, 1997b, p. 5).

Over the past several decades EPA’s science advisory boards (SABs) have also addressed the importance of considering uncertainties in risk assessment and decision making. For example, the SABs have emphasized the importance of considering uncertainty in radiological assessments (EPA, 1993, 1999b), in work under the CAA (EPA, 2007), in microbial risk assessments (EPA, 2010b), in expert elicitation (EPA, 2010b), and in a comparative risk framework methodology (EPA, 1999a).

These reports have contributed greatly to the science of risk assessment, uncertainty analysis, and environmental regulatory decision making. Although a number of them discuss factors beyond health risk estimates that play a role in regulatory decision making, when they address the analysis of uncertainty and its implications for decision making they focus on the uncertainty in estimates of human health risks. The reports typically do not discuss the uncertainty inherent in the other factors considered in regulatory decisions. This report broadens the discussion to include uncertainty in those other factors as well as in human health risk assessments.

THE CONTEXT OF THIS REPORT AND THE CHARGE TO THE COMMITTEE

The major environmental statutes administered by EPA call for the agency to develop and use scientific data and analyses to evaluate potential human health risks. (See, for example, sections 108 and 109 of the CAA,19 Section 3 of the Federal Insecticide, Fungicide, and Rodenticide Act [FIFRA],20 and Section 4 of the Toxic Substances Control Act [TSCA].21) As a result, the uncertainties inherent in scientific information (that is, in the data and analyses) are incorporated in the health risk assessment process. Although a substantial database may exist for a particular chemical undergoing regulatory review, uncertainties invariably raise questions about the reliability of risk estimates and the scientific credibility of related regulatory decisions. Because some aspects of interpreting uncertainty are subjective, risk assessors, regulators, and observers who approach risk assessments and uncertainty analyses from different perspectives might interpret the results differently.

___________________

18 EPA defines economic factors as the factors that “inform the manager on the cost of risks and the benefits of reducing them, the costs of risk mitigation or remediation options and the distributional effects” (EPA, 2000, p. 52). When discussing economic analysis, EPA includes the examination of net social benefits, impacts on industry, governments, and nonprofit organizations, and effects on various subpopulations, particularly low-income and minority individuals and children, using distributional analyses (EPA, 2010a).

19 CAA of 1963, Pub. L. No. 88-206 (1963).

The presence of uncertainty has delayed decisions because it was thought or argued that more definitive answers to outstanding questions were needed and that research could provide those answers. Research, however, cannot always provide more definitive information. It might not be possible to obtain that information at all, or obtaining it might require more time or resources than are available. In many cases additional research may resolve some forms of uncertainty while revealing new ones. Such delays can threaten public health by postponing the implementation of protective measures. The need to make decisions despite uncertainty is highlighted in these passages from Estimating the Public Health Benefits of Proposed Air Pollution Regulations: “Even great uncertainty does not imply that action to promote or protect public health should be delayed,” and “The potential for improving decisions through research must be balanced against the public health costs because of a delay in the implementation of controls. Complete certainty is an unattainable ideal” (NRC, 2002, p. 127). Delaying risk assessments because of uncertainty also undermines the established practices that provide a scientifically based, consistent approach for assessors to fill gaps when information about a given chemical is lacking (for example, extrapolating from animal data to humans and from high to low doses, using uncertainty factors, developing exposure scenarios, or relying on other defaults). Delaying decisions also undervalues the estimation of uncertainty through simple qualitative or complex quantitative analyses.
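The defaults mentioned above can be made concrete with a small worked example. The sketch below assumes the widely used convention of dividing a point of departure from an animal study by default tenfold factors for animal-to-human extrapolation and for variability among humans, and then applies the additional tenfold margin that the FQPA language quoted earlier specifies for infants and children. The point-of-departure value and the helper name `acceptable_dose` are hypothetical; real assessments select and justify factors case by case.

```python
import math


def acceptable_dose(point_of_departure, factors):
    """Divide a point of departure (mg/kg-day) by the product of uncertainty factors.

    Hypothetical helper for illustration only.
    """
    combined = math.prod(factors.values())  # factors multiply; they do not add
    return point_of_departure / combined


# Hypothetical no-observed-adverse-effect level from an animal study (mg/kg-day).
pod = 5.0

# Common default tenfold factors (assumed here for illustration).
default_factors = {"animal_to_human": 10, "human_variability": 10}

# Additional tenfold margin for infants and children, following the FQPA language.
fqpa_factors = {**default_factors, "infants_and_children": 10}

general = acceptable_dose(pod, default_factors)  # 5.0 / 100
children = acceptable_dose(pod, fqpa_factors)    # 5.0 / 1000
print(f"general population: {general:g} mg/kg-day")
print(f"with FQPA margin:   {children:g} mg/kg-day")
```

Because the factors multiply, each added tenfold factor lowers the resulting dose by an order of magnitude, which is one reason the choice of defaults carries so much weight in a decision.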

There is an increasingly complex set of tools at EPA’s disposal for uncertainty analyses in risk assessments. Those tools pose new challenges for decision making and for communicating EPA’s decisions, including the need to decide deliberately when to use different tools or approaches and how best to communicate complex statistical analyses to nontechnical stakeholders. Uncertainty need not bar decisions; instead, transparency and scientific rigor have been used to help ensure the responsible use of uncertain information. The focus, however, remains on the uncertainty in the human health risk assessment, and the uncertainties inherent in the other factors that play a role in EPA’s decisions are often ignored.

___________________

20 FIFRA of 1947 to regulate the marketing of economic poisons and devices and for other purposes, Pub. L. No. 80-104 (1947).

21 TSCA, Pub. L. No. 94-469 (1976).

Given the challenges in decision making in the face of uncertainty, EPA requested that the Institute of Medicine (IOM) convene a committee to provide guidance to decision makers at EPA—and their partners in the states and localities—on approaches to managing risk in different contexts when confronting uncertainty. EPA also sought guidance on how information on uncertainty should be presented in order to best help decision makers make sound decisions and to increase transparency with the public about those decisions.

Specifically, EPA directed: “Based upon available literature, theory, and experience, the committee will … provide its best judgment and rationale on how best to use quantitative information on uncertainty in the estimates of risk in order to manage environmental risks to human health and for communicating this information.”22 The specific questions that EPA requested the committee to address are presented in Box 1-2.

The IOM convened a committee of experts23 in the fields of risk assessment, public health, health economics, decision analysis, public policy, risk communication, and environmental and public health law (see Appendix B for biographical sketches of committee members). The committee met five times, including two open sessions during which committee members discussed relevant issues with outside experts and discussed the charge with EPA. Appendix C presents the agendas of the open sessions.

___________________

22 Consistent with its charge, the committee focused on “environmental risks to human health” in this report and did not directly address ecological risk assessment. The committee notes, however, that many of the principles discussed and developed in this report would also apply to decision making related to ecological risks.

23 Michael Taylor and Robert Perciasepe resigned from the committee upon consideration of an appointment at the Food and Drug Administration and EPA, respectively. Steven Schneider died in July 2010. All three members contributed a great deal to the intellectual development of the report but were not involved in the approval of the final report.

BOX 1-2 Committee’s Statement of Task

Based upon available literature, theory, and experience, the committee will provide its best judgment and rationale on how best to use quantitative information on the uncertainty in estimates of risk in order to manage environmental risks to human health and for communicating this information.

Specifically, the committee will address the following questions:

• How does uncertainty influence risk management under different public health policy scenarios?

• What are promising tools and techniques from other areas of decision making on public health policy? What are benefits and drawbacks to these approaches for decision makers at EPA and their partners?

• Are there other ways in which EPA can benefit from quantitative characterization of uncertainty (e.g., value of information techniques to inform research priorities)?

• What approaches for communicating uncertainty could be used to ensure the appropriate use of this risk information? Are there communication techniques to enhance the understanding of uncertainty among users of risk information like risk managers, journalists, and citizens?

• What implementation challenges would EPA face in adopting these alternative approaches to decision making and communicating uncertainty? What steps should EPA take to address these challenges? Are there interim approaches that EPA could take?

COMMITTEE’S APPROACH TO THE CHARGE

Audience for the Report

The committee considered decision makers at EPA to be a main audience for this report. In addition, the committee prepared this report for a broader audience, which includes other environmental professionals, journalists, and interested observers.

Questions Within the Statement of Task

The committee found it helpful to clarify the questions within its statement of task. The committee was asked “how … uncertainty influence[s] risk management under different public health policy scenarios?” However, the committee found it more useful to deliberate on how uncertainty can and should influence decisions and help decision makers, rather than focusing on how it currently influences risk-management decisions.

Although the charge emphasizes “how best to use quantitative information on the uncertainty” and how “EPA can benefit from quantitative characterization of uncertainty,” other aspects of the charge necessitate a broader look at uncertainty analysis beyond quantitative analysis. For example, questions about how “uncertainty influence[s] risk management under different public health policy scenarios,” the “tools and techniques from other areas of decision making,” and “what approaches for communicating uncertainty could be used to ensure the appropriate use of this risk information” require looking at both qualitative and quantitative analyses of uncertainty. Descriptive and qualitative narratives about uncertainty are instructive for decision makers and the public and are often more readily available and more comprehensible than the more technical quantitative analyses. In fact, a descriptive narrative, or a combination of descriptive and quantitative approaches, is sometimes more appropriate. Accordingly, the committee interprets the charge as calling for an examination of decision-making uncertainties in both descriptive and quantitative, or narrative and numerical, terms.

Sources of Uncertainty: Factors That Play a Role in Decision Making and Their Uncertainty

Decision making at EPA is a multifaceted process that involves a broad variety of laws, activities, participants, and products. Congressionally enacted statutes and executive orders establish the fundamental principles and primary components for consideration in EPA’s decisions (see Table 1-1 for examples). While health impacts are an important component of all EPA decisions, some statutes require that a decision be based solely on health impacts without consideration of cost or other factors, whereas other statutes require a consideration of technological feasibility, costs, or both. For example, the drinking water standards promulgated under the Safe Drinking Water Act24 require that certain costs and technological availability be considered. In contrast, the National Ambient Air Quality Standards (NAAQS) that EPA promulgates under the Clean Air Act25 consider only the protection of public health, although state regulators can consider costs, feasibility, and other factors when developing implementation plans to meet the NAAQS.

In addition to statutory requirements, executive orders require government agencies, including EPA, to conduct benefit–cost analyses (BCAs) as part of a regulatory analysis when promulgating certain regulations unless otherwise prohibited by statute. (See below for further discussion of BCA.)

___________________

24 SDWA, Pub. L. No. 93-523.

25 42 U.S.C. § 7409(b) (2010).

A series of executive orders (see, for example, Executive Orders 12866 and 13563 for executive agencies26,27 and Executive Order 13579 for independent regulatory agencies) discuss the need for regulatory impact analyses, stating that agencies “should assess all costs and benefits of available regulatory alternatives, including the alternative of not regulating.”28 In 2003 the Office of Management and Budget (OMB) issued Circular A-4, which provides further guidance to agencies conducting such analyses. Executive Order 13563, in addition to discussing cost–benefit analyses, also requires agencies, including EPA, to develop a plan for conducting periodic, retrospective analyses of their regulations.29 OMB also publishes a yearly report to Congress on the benefits and costs of regulations and mandates (OMB, 2011). That document summarizes the costs and benefits of regulations issued by agencies across the government and, to reflect some of the uncertainty, reports ranges of potential benefits.

Executive orders also require a consideration of the effects of regulations on certain populations. For example, Executive Order 13045 requires certain analyses for rules that “concern an environmental health risk or safety risk that an agency has reason to believe may disproportionately affect children.” Executive Order 12898, Federal Actions to Address Environmental Justice in Minority Populations and Low-Income Populations,30 encourages agencies to conduct their activities “in a manner that ensures that such programs, policies, and activities do not have the effect of excluding persons (including populations) from participation in, denying persons (including populations) the benefits of, or subjecting persons (including populations) to discrimination under, such programs, policies, and activities, because of their race, color, or national origin.”31

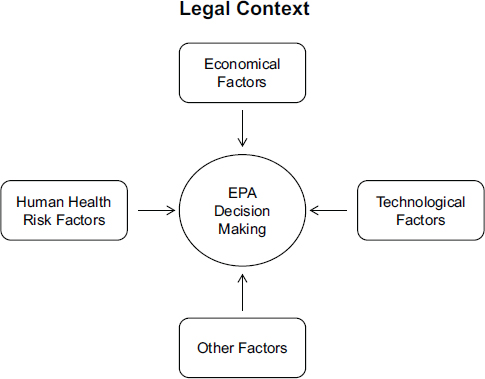

___________________

26 Exec. Order No. 12866. 58 FR 51735 (October 4, 1993).

27 Exec. Order No. 13563. 76 FR 3821 (January 21, 2011).

28 Exec. Order No. 13045. 62 FR 19885 (April 23, 1997).

29 Exec. Order No. 13563. 76 FR 3821 (January 21, 2011).

30 Exec. Order No. 12898. 77 FR 11752 (February 28, 2012).

31 Exec. Order No. 12898 Section 2-2. 77 FR 11752 (February 28, 2012).

| Statute and Program | Considerations Under the Statute |
|---|---|
| Clean Air Act—National Emission Standards for Hazardous Air Pollutants | To determine whether to add or delete a pollutant from the Hazardous Air Pollutants (HAPs) list, EPA must consider whether the “substance may reasonably be anticipated to cause any adverse effects to the human health or adverse environmental effects” but not costs or technical feasibility.a |
| | Costs are permissible considerations in EPA’s determination of maximum achievable control technologies (MACTs) and generally available control technologies (GACTs). |
| | For MACTs, EPA can consider whether the control technology achieves the “maximum degree of reduction in emissions” of the HAPs as well as “the cost of achieving such emission reduction, and any non-air quality health and environmental impacts and energy requirements.”b |
| | For GACTs, EPA may instead elect to use “generally available control technologies or management practices.”c |
| National Ambient Air Quality Standards (NAAQS) | NAAQS themselves must be set to levels “requisite to protect the public health” “allowing for an adequate margin of safety” (for primary standards),d and levels “requisite to protect the public welfare from any known or anticipated adverse effects associated with the presence of such air pollutant in the ambient air” (for secondary standards).e |
| | The statute assigns implementation responsibilities to state regulators, who may consider costs, feasibility, and other factors in developing the required state implementation plans (SIPs).f In an area that does not achieve the NAAQS for a given pollutant, a proposed new source of that pollutant must achieve the lowest achievable emission rateg in order to receive the necessary permit. Thus, as with MACT and GACT determinations, uncertainties in determining costs as well as uncertainties in environmental effects may be relevant to a decision. |
| Safe Drinking Water Act (SDWA) | To regulate a contaminant under the primary drinking water regulations, EPA must first determine that the contaminant has an adverse effect on human health or welfare.h The maximum contaminant level goal must be established at a level at which no known health effects may occur and which allows an adequate margin of safety, without consideration of cost.i |
| | The mandatory, enforceable standard under the SDWA, the maximum contaminant level, is set at a level that is economically and technologically feasible. Establishing that level requires consideration of a number of factors, such as the quantifiable and nonquantifiable health risk reduction benefits,j the quantifiable and nonquantifiable costs,k and the effects of the contaminant on the general population and groups within the general population, such as infants and the elderly.l |

a 42 U.S.C. § 7412(b)(2)(C).

b 42 U.S.C. § 7412(d)(2).

c 42 U.S.C. § 7412(d)(5).

d 42 U.S.C. § 7409(b)(1).

e 42 U.S.C. § 7409(b)(2).

f 42 U.S.C. § 7410; see also Union Elec. Co. v. EPA, 427 U.S. 246, 266-67 (1976) (discussing how, although the U.S. EPA cannot consider costs in deciding whether to approve or disapprove an SIP, a state can do so in structuring its proposed SIP).

g 42 U.S.C. § 7503(a)(2).

h 42 U.S.C. § 300f(1)(B); 300f(2).

i 42 U.S.C. § 300g-1(b)(4)(A).

j 42 U.S.C. § 300g-1(b)(3)(C)(i)(I) & (II).

k 42 U.S.C. § 300g-1(b)(3)(C)(i)(III) & (IV).

l 42 U.S.C. § 300g-1(b)(3)(C)(i)(V).

Factors that are involved in regulatory decision making have been discussed before. For example, EPA’s Risk Characterization Handbook describes seven factors that influence decision making: risk assessment, economic factors, technological factors, legal factors, social factors, political factors, and public values (EPA, 2000). More recently, A Risk-Characterization Framework for Decision Making at the Food and Drug Administration presented a framework for decision makers at FDA that includes four factors considered in FDA’s decisions: factors related to the estimates of risks to public health (that is, the risk-characterization factors), economic factors, social factors, and political factors (NRC, 2011). In Figure 1-2, this committee has modified that framework to illustrate the three main factors that, depending on the legal context, EPA considers in many of its decisions: (1) estimates of human health risks, (2) economics, and (3) technology availability, as well as other factors, such as social factors (for example, environmental justice) and the political context. Some of those factors are discussed in key statutes under which EPA operates, and numerous EPA guidance documents on risk assessments acknowledge those factors. However, the uncertainty embedded in the data and analyses related to those factors, and how those factors and their uncertainty should affect a decision, are rarely discussed, other than in the statement “Once the risk characterization is completed, the focus turns to communicating results to the decision maker. The decision maker uses the results of the risk characterization, other technological factors, and non-technological social and economic considerations in reaching a regulatory decision. … These Guidelines are not intended to give guidance on the nonscientific aspects of risk management decisions” (EPA, 2001, p. 51). In addition, other factors are referred to in Figure 1-2. Those other factors might include the political context of a decision or social factors, such as environmental justice.

FIGURE 1-2 Factors considered in EPA’s decisions.

NOTE: The legal context of a decision (that is, the statutory requirements and constraints in the nation’s environmental laws) shapes the overall decision-making process, with general directives relating to data expectations, schedules and deadlines, public participation, and other considerations. That legal context also, to a large extent, determines the other factors that EPA considers in its decisions, in particular, human health risks and technological and economic factors. At the same time, those laws allow considerable discretion in the development and implementation of environmental regulations. The figure is modeled after a figure in A Risk-Characterization Framework for Decision Making at the Food and Drug Administration (NRC, 2011). Other factors, although more challenging to quantify, can also play a role in EPA’s decisions, including social factors, such as environmental justice, and the political context.

The uncertainty in those other factors can be difficult, if not impossible, to quantify, and therefore the analysis of that uncertainty will not be discussed in depth in this report. Nevertheless, that uncertainty can influence EPA’s decisions and, as discussed in Chapters 5, 6, and 7, it is important that decision makers are aware of those factors and are transparent about how those factors influenced a decision.

EPA has done a great deal of highly skilled and scientific work on uncertainty analysis for estimates of human health risks. Although the committee was not tasked with reviewing the technical aspects of those uncertainty analyses, it did review the uncertainty analyses conducted in some risk assessments for context and as examples (see Chapter 2). It also reviewed a number of guidance documents, advisory committee reports, and advice from the National Research Council (NRC), all of which, as discussed above, focus on those uncertainties dealing with risk estimates, and not on other factors that affect EPA’s decisions. References to uncertainty in decision making typically discuss the uncertainty in estimates of human health risk (NRC, 1983, 1994, 1996, 2009, 2011).

The charge to the committee does not focus solely on uncertainty related to human health risk assessments but rather asks the committee to look more broadly at uncertainty in the decision-making process. For example, the charge asks how “uncertainty influence[s] risk management” and whether there are “other ways in which EPA can benefit from quantitative characterization of uncertainty (e.g., value of information techniques to inform research priorities),” and refers broadly to decision making. The committee was concerned that focusing attention and resources solely on reducing uncertainties in the risk assessment could create a false confidence that the most important uncertainties have been addressed; even intensive efforts to reduce uncertainties in the human health risk assessment might not be sufficient without an attempt to characterize the other factors that are addressed in decision making and their uncertainties. The committee, therefore, examined the assessment of uncertainties in factors beyond the risk to human health and the role of those uncertainties in the decision-making process (see Chapter 3). Although the uncertainty in the data and analyses related to the other factors cannot always be quantified, the report discusses the importance of being aware of those factors, of the potential uncertainties in those factors, and of how they influence decisions, as well as the importance of communicating that information when discussing the rationale for EPA’s decision.

When approaching its charge, the committee was aware that the consideration of uncertainty by EPA in its regulatory decisions could be evaluated in a number of ways. The uncertainty in decisions could be evaluated solely on the standard that is set, that is, whether a standard limiting the amount of ozone in air or arsenic in drinking water, or establishing remediation levels for a hazardous waste site, is adequately protective or overly protective. The decisions could also be evaluated by the quality of their technical and scientific support, such as risk assessments, cost and feasibility analyses, or regulatory impact analyses. They could also be evaluated on the basis of the process by which they were developed, for example, on the opportunities for public participation, the transparency of the decision-making process, and whether social trust was established through the process. Because each of those aspects is integral to the decision-making process and each contributes to an understanding of the role of uncertainty in decision making, the committee looked broadly at those aspects when it considered decision making in the face of uncertainty.

All EPA decisions involve uncertainty, but the types of uncertainty can vary widely among decisions. For an analysis of uncertainty to be useful, a first and critical step is to identify the types of key uncertainties that are involved in a particular decision problem. Understanding the types of uncertainty that are present will help EPA’s decision makers determine when to invest resources to reduce the uncertainty and how to take that uncertainty into account in their decisions.
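One standard way to judge when reducing uncertainty is worth the investment is a value-of-information calculation, which the charge mentions in connection with research priorities. The sketch below is illustrative only and is not an EPA procedure: it assumes a hypothetical choice between two actions under two states of the world (the substance is harmful or harmless), with made-up probabilities and losses in arbitrary units, and computes the expected value of perfect information (EVPI), the drop in expected loss if the true state were known before deciding.

```python
# Expected value of perfect information (EVPI) for a toy regulatory choice.
# All numbers are hypothetical and in arbitrary loss units.

def expected_loss(row, probs):
    """Probability-weighted loss of one action across states of the world."""
    return sum(loss * p for loss, p in zip(row, probs))

def evpi(loss_table, probs):
    """Reduction in expected loss if the true state were known before acting.

    loss_table maps each action to a list of losses, one per state;
    probs gives the current probability of each state.
    """
    # Decide now: pick the action with the lowest expected loss.
    best_now = min(expected_loss(row, probs) for row in loss_table.values())
    # Decide after learning the state: pick the best action in each state.
    best_informed = sum(
        p * min(row[i] for row in loss_table.values())
        for i, p in enumerate(probs)
    )
    return best_now - best_informed

# Hypothetical states: [substance is harmful, substance is harmless]
probs = [0.3, 0.7]
losses = {
    "regulate": [10, 10],        # compliance cost incurred either way
    "do_not_regulate": [50, 0],  # large health loss only if harmful
}
print(evpi(losses, probs))  # → 7.0
```

Under these assumptions, research that fully resolved whether the substance is harmful would be worth undertaking only if it cost less than 7 units. Because real research rarely yields perfect information, EVPI serves as an upper bound on what any study could be worth.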

In this report, the committee classifies uncertainty into two categories: (1) statistical variability and heterogeneity (also called aleatory or exogenous uncertainty), and (2) model and parameter uncertainty (also called epistemic uncertainty). It also discusses a third category of uncertainty, referred to as deep uncertainty (uncertainty about the fundamental processes or assumptions underlying a risk assessment),32 which is defined by the degree of uncertainty rather than by its source. Uncertainty stemming either from statistical variability and heterogeneity or from model and parameter uncertainty can be deep uncertainty. Chemical risk assessors typically consider uncertainty and variability to be separate and distinct, but in other fields uncertainty encompasses statistical variability and heterogeneity as well as model and parameter uncertainty (Swart et al., 2009). The committee discusses its rationale for including variability and heterogeneity as one type of uncertainty in Box 1-3.

The three different types of uncertainty are discussed below.

Statistical Variability and Heterogeneity

Variability and heterogeneity, which together are sometimes referred to as aleatory uncertainty, refer to the natural variations in the environment, exposure paths, and susceptibility of subpopulations (Swart et al., 2009). They are inherent characteristics of the system under study, cannot be controlled by decision makers (NRC, 2009; Swart et al., 2009), and cannot be reduced by collecting more information. Empirical estimates of variability and heterogeneity can, however, be better understood through research, thereby refining such estimates.
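The difference between variability and parameter uncertainty can be seen in a small simulation. The sketch assumes hypothetical individual exposures drawn from a fixed log-normal population (the distribution and its parameters are illustrative choices, not data from the report). As the sample grows, the standard error of the estimated mean, a form of parameter uncertainty, shrinks, while the standard deviation across individuals, the variability, converges to its true value rather than shrinking.

```python
import random
import statistics

def exposure_sample(n, rng):
    """Hypothetical individual exposures drawn from a fixed log-normal population."""
    return [rng.lognormvariate(0.0, 0.5) for _ in range(n)]

def summarize(sample):
    """Return (SD across individuals, standard error of the mean)."""
    sd = statistics.stdev(sample)  # variability: a property of the population
    se = sd / len(sample) ** 0.5   # parameter uncertainty about the mean
    return sd, se

rng = random.Random(42)  # fixed seed so the run is reproducible
results = {}
for n in (20, 200, 2000, 20000):
    results[n] = summarize(exposure_sample(n, rng))
    sd, se = results[n]
    print(f"n={n:>6}  SD across individuals={sd:.3f}  SE of mean={se:.4f}")
```

For these parameters the true population standard deviation is about 0.60; the sample estimate settles near that value as more data are collected, while the standard error keeps falling roughly in proportion to one over the square root of the sample size.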

___________________

32 Deep uncertainty has also been called severe uncertainty and hard uncertainty (CCSP, 2009).

BOX 1-3

Uncertainty Versus Variability and Heterogeneity: The Committee’s Use of the Terms

In the context of chemical risk assessment, uncertainty has typically been defined narrowly. For example, Science and Decisions (NRC, 2009) defines uncertainty as a “[l]ack or incompleteness of information” (p. 97). It defines variability as the “true differences in attributes due to heterogeneity or diversity” (p. 97), and, as with previous reports (NRC, 1983, 1994), it does not consider variability and heterogeneity to be specific types of uncertainty.

In other settings, such as research on climate change, variability is considered a type or nature of uncertainty (CCSP, 2009). In addition, even reports related to chemical risk assessment highlight the importance of evaluating both uncertainty and variability in risk assessments and considering both in the decision-making process (NRC, 1983, 1994, 2009). When EPA makes a regulatory decision it must consider what information it might be missing, the variability and heterogeneity in the information that it has, and the uncertainty in that variability and heterogeneity. The committee, therefore, discusses variability and heterogeneity in this report. When using the term uncertainty generically, it includes variability and heterogeneity in its definition.

Variability occurs within a probability distribution that is known or can be ascertained. It can often be quantified with standard statistical techniques, although it may be necessary to collect additional data. If variability comes, in part, from heterogeneity, populations can be divided into subcategories on the basis of demographic, economic, or geographic characteristics with associated percentages and possibly a probability distribution over the percentages if they are uncertain. That stratification into distinct categories can tighten the probability distribution within each of the categories. Variability in the underlying parameters often depends on personal characteristics, geographic location, or other factors, and there might not be an adequate sample size to detect true underlying differences in populations or to ensure that data are sufficiently representative of the population being studied.
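The effect of stratification described above can be illustrated with a few hypothetical numbers. The sketch assumes two made-up subpopulations with different intake levels and made-up population shares; it compares the variance of the pooled data with the variance within each stratum and combines the stratum means using the shares.

```python
from statistics import mean, pvariance

# Hypothetical daily intake values (arbitrary units) for two subpopulations.
strata = {
    "children": [4.0, 5.0, 6.0, 5.0, 5.0],
    "adults": [1.0, 2.0, 1.5, 2.5, 2.0],
}
# Hypothetical fraction of the population in each stratum.
shares = {"children": 0.25, "adults": 0.75}

# Ignoring the strata mixes two different distributions into one wide one.
pooled = [x for values in strata.values() for x in values]
print(f"pooled variance: {pvariance(pooled):.3f}")

# Within each stratum the distribution is much tighter.
for name, values in strata.items():
    print(f"{name}: mean={mean(values):.2f}, variance={pvariance(values):.3f}")

# Population-level mean built from stratum means and their shares.
overall_mean = sum(shares[name] * mean(values) for name, values in strata.items())
print(f"share-weighted population mean: {overall_mean:.2f}")
```

Here the pooled variance (2.89) is several times larger than the variance within either stratum (0.40 and 0.26), because much of the apparent spread comes from the difference between the groups rather than from variation within them. If the shares were themselves uncertain, a probability distribution over them could replace the fixed values.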

There are many variables outside of the decision maker’s control that can affect the appropriateness of a particular decision or its consequences (for example, socioeconomic factors or comorbidities). Modeling those factors is not always feasible. For example, there may be too many socioeconomic factors that require large samples to analyze, insufficient time to conduct an appropriate statistical survey and analysis, or a prohibition of using some sociodemographic variables in the analysis. A longer-term research agenda can often evaluate such variables, and retrospective

evaluations of specific decisions can help identify their effects and improve the decision-making process.

Model and Parameter Uncertainty

Model33 and parameter uncertainty, which together constitute epistemic uncertainty, include uncertainty from the limited scientific knowledge about the nature of the models that link the causes and effects of environmental risks with risk-reduction actions as well as uncertainty about the specific parameters of the models. There may be various disagreements about the model, such as which model is most appropriate for the application at hand, which variables should be included in the model, the model’s functional form (that is, whether the relationship being modeled is linear, exponential, or some other form), and how generalizable the findings based on data collected in another context are to the problem at hand (for example, the generalizability of findings based on experiments on animals to human populations). Such disagreements add to the uncertainty when making a decision on the basis of that information, and, therefore, the committee considers scientific disagreements when discussing uncertainty.

In theory, model and parameter uncertainty can be reduced by additional research (Swart et al., 2009). Issues related to model specification can sometimes be resolved by literature reviews and by various technical approaches, including meta-analysis. It is often necessary to extrapolate well beyond the observations used to derive a given set of parameter estimates, and the functional form of the model used for that extrapolation can have a large effect on model outputs. Linear or curvilinear forms may yield substantially different projections, and theory rarely provides guidance about which functional form to choose. The best approaches involve reexamining the fit of various functional forms within the data that are observed, comparing projections based on alternative functional forms, or both. Those approaches, however, are not always fruitful.
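The extrapolation problem can be made concrete with a small example. The sketch assumes hypothetical dose–response observations available only at high doses and fits two simple functional forms, a linear model and a quadratic model (both constrained to pass through the origin, a simplifying assumption), by least squares. It then compares how well each fits within the observed range and what each predicts far below it.

```python
# Hypothetical dose-response observations available only at high doses.
doses = [20.0, 40.0, 60.0, 80.0, 100.0]
responses = [0.02, 0.05, 0.09, 0.13, 0.18]

def fit_through_origin(xs, ys, power):
    """Least-squares coefficient k for the model y = k * x**power."""
    num = sum(y * x**power for x, y in zip(xs, ys))
    den = sum(x ** (2 * power) for x in xs)
    return num / den

def rss(xs, ys, k, power):
    """Residual sum of squares of y = k * x**power on the observed data."""
    return sum((y - k * x**power) ** 2 for x, y in zip(xs, ys))

k_lin = fit_through_origin(doses, responses, 1)
k_quad = fit_through_origin(doses, responses, 2)

# Within the observed range, the two forms fit about equally well.
print(f"RSS linear={rss(doses, responses, k_lin, 1):.5f}, "
      f"quadratic={rss(doses, responses, k_quad, 2):.5f}")

# Far below the observed range, their projections diverge sharply.
low_dose = 1.0
lin_pred = k_lin * low_dose
quad_pred = k_quad * low_dose**2
print(f"dose {low_dose}: linear={lin_pred:.2e}, quadratic={quad_pred:.2e}, "
      f"ratio={lin_pred / quad_pred:.0f}x")
```

Both residual sums of squares are small, so the observed data alone barely discriminate between the two forms, yet the projected low-dose responses differ by a factor of about 85. Comparing projections from alternative forms in this way shows how much the choice matters without settling which form is correct.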

___________________

33 A model is defined as a “simplification of reality that is constructed to gain insights into select attributes of a particular physical, biologic, economic, or social system. Mathematical models express the simplification in quantitative terms” (NRC, 2009, p. 96). Model parameters are “[t]erms in a model that determine the specific model form. For computational models, these terms are fixed during a model run or simulation, and they define the model output. They can be changed in different runs as a method of conducting sensitivity analysis or to achieve a calibration goal” (NRC, 2009, p. 97).

Using data from a population that is similar to the population to which the policy will be applied helps address generalizability. In practice this may involve using observational data on human populations to supplement data from controlled experiments in animals. Both animal and human data have important advantages and disadvantages, and ideally both would be available. In some cases, the two types of data may yield similar answers, or it may be possible to interpret the differences between them. Experts in a given field or domain can sometimes quantify model and parameter uncertainty by drawing on existing research, by relying on their professional experience, or by a combination of the two. Such an approach is not a substitute for research, but when it is used, it should build on the best data, models, and estimates available, using experts to integrate and quantify this knowledge. EPA’s peer-review process and its consultation with the Science Advisory Board are examples of this. Those approaches are discussed in Chapter 5.
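One simple way to combine experts’ quantified judgments is an equal-weight linear opinion pool, which averages the probability distributions that each expert assigns to an uncertain parameter. This is a generic technique offered only as an illustration; it is not necessarily the approach the committee or EPA uses. The candidate parameter values, the expert names, and their probabilities below are all hypothetical.

```python
# Hypothetical candidate values for an uncertain model parameter.
candidate_values = [0.1, 0.5, 1.0, 2.0]
# Hypothetical probabilities each expert assigns to those values.
expert_probs = {
    "expert_A": [0.10, 0.60, 0.25, 0.05],
    "expert_B": [0.05, 0.25, 0.50, 0.20],
    "expert_C": [0.40, 0.40, 0.15, 0.05],
}

def linear_pool(dists, weights=None):
    """Weighted average of discrete distributions (equal weights by default)."""
    names = list(dists)
    if weights is None:
        weights = {name: 1 / len(names) for name in names}
    n_bins = len(dists[names[0]])
    return [sum(weights[name] * dists[name][i] for name in names)
            for i in range(n_bins)]

pooled = linear_pool(expert_probs)
pooled_mean = sum(p * v for p, v in zip(pooled, candidate_values))
print([round(p, 3) for p in pooled], round(pooled_mean, 3))

# Each expert's own mean shows how far apart the judgments are.
for name, dist in expert_probs.items():
    print(name, round(sum(p * v for p, v in zip(dist, candidate_values)), 3))
```

The pooled distribution keeps the spread of opinion visible instead of collapsing it to a single value. Unequal weights, for example weights based on how well each expert performed on calibration questions, can be supplied through the `weights` argument.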

Deep Uncertainty

Deep uncertainty is uncertainty that is not likely to be reduced by additional research within the time frame in which a decision is needed (CCSP, 2009). Deep uncertainty typically is present when underlying environmental processes are not understood, when there is fundamental disagreement among scientists about the nature of the environmental processes, and when methods are not available to characterize the processes (such as the measurement and evaluation of chemical mixtures). When deep uncertainty is present, it is unclear how those disagreements can be resolved. In other words, “Deep uncertainty exists when analysts do not know, or the parties to a decision cannot agree on, (1) the appropriate models to describe the interactions among a system’s variables, (2) the probability distributions to represent uncertainty about key variables and parameters in these models and/or (3) how to value the desirability of alternative outcomes” (Lempert et al., 2003, p. 3).

In situations characterized by deep uncertainty, the probabilities associated with various regulatory options and the associated utilities may not be known. Deep uncertainty often occurs when the time horizon for the decision is unusually long, as with climate change or the migration of radionuclides from a geological repository over the coming 100,000 years, or when a major unanticipated event leaves no relevant prior record for analyzing a problem. There can also be substantial disagreement about base-case (that is, the case with no intervention) exposure levels; about the rate of economic growth; about future changes in the relationship between economic activity and exposures; about the losses associated with an adverse outcome; or about how to value such losses (that is, the utility levels given to different magnitudes of losses). For example, experts could disagree about the effects that an oil spill in a body of water has on human health, quality of life, animal populations, and employment. Box 1-4 presents an example of a decision made in the face of deep uncertainty.

BOX 1-4

An Example of a Decision in the Face of Deep Uncertaintya

Decisions that had to be made in the United Kingdom during the outbreak of bovine spongiform encephalopathy (BSE) in British cattle in the mid-1980s illustrate deep uncertainty in a decision. Public health officials had to decide whether potential risks from the fetal calf serum in the media in which vaccines were prepared warranted halting childhood immunization programs against infectious diseases (such as diphtheria and whooping cough). In describing the context of the decision, Sir Michael Rawlins stated:

There was very little science to go on. The presumption that scrapie and BSE were caused by the same prion was just that, a presumption. Experiments to confirm or refute this would take two or three years to complete. There was no information as to whether maternal–fetal transmission of the prion took place. There was no evidence of whether or not vaccines prepared using fetal calf serum contained infected material. Again it would take two or three years to find out. There was no idea of a dose–response relationship and this probability of development of disease. And again two years would pass before such information would be available.

A quick decision was needed despite the deep uncertainty surrounding the risks of creating an epidemic of BSE in infants; there was no time to decrease that uncertainty. The unknown risks of BSE had to be weighed against the risks of “the re-appearance of diphtheria, whooping cough, and other infectious diseases in children.” The risk of lethal infectious diseases if the immunization program were abandoned for three years “approached 100 percent.” The decision was made to continue the immunization program; luckily, there was no outbreak of diseases related to BSE. In his speech, Sir Michael noted that the officials reached the correct conclusion, albeit perhaps not for the right reasons, and drew the moral that all decisions in his field require good underpinning science but also always require judgments to be made.

___________________

a Sir Michael Rawlins described this decision in his acceptance speech for the International Society for Pharmacoeconomics and Outcomes Research Avedis Donabedian Outcomes Research Lifetime Achievement Award on May 21, 2011, in Baltimore, Maryland. The text of the speech is available at http://www.ispor.org/news/articles/July-Aug2011/avedis.asp (accessed March 17, 2012).

Neither the collection nor the analysis of data nor expert elicitation to assess uncertainty is likely to be productive when key parties to a decision do not agree on the system model, prior probabilities, or the cost function. The task is to make decisions despite the presence of deep uncertainty using the available science and judgment, to communicate how those decisions were made, and to revisit those decisions when more information becomes available.
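When no agreed probabilities exist, options can still be compared across scenarios. One classical criterion for doing so is minimax regret: for each scenario, compute how much worse each option does than the best option for that scenario, then choose the option whose worst-case regret is smallest. The sketch below is an illustration of that criterion only, not a method the committee prescribes; the options, scenarios, and losses are all hypothetical.

```python
# Hypothetical losses (arbitrary units) for each regulatory option under
# scenarios to which no agreed probabilities can be assigned.
losses = {
    "strict_standard": {"low_harm": 20, "medium_harm": 22, "high_harm": 25},
    "moderate_standard": {"low_harm": 5, "medium_harm": 12, "high_harm": 40},
    "no_action": {"low_harm": 0, "medium_harm": 25, "high_harm": 90},
}

def max_regret(losses):
    """Worst-case regret of each option across all scenarios."""
    scenarios = list(next(iter(losses.values())))
    # Lowest achievable loss in each scenario.
    best = {s: min(option[s] for option in losses.values()) for s in scenarios}
    # Regret = loss minus the best achievable loss in that scenario.
    return {
        name: max(option[s] - best[s] for s in scenarios)
        for name, option in losses.items()
    }

regrets = max_regret(losses)
choice = min(regrets, key=regrets.get)
print(regrets)
print("minimax-regret choice:", choice)
```

With these numbers the moderate standard is chosen: it is never the best option by a wide margin, but it is also never far from the best, whereas doing nothing risks a very large regret if harm turns out to be high. The criterion does not remove the need for judgment, because the choice of scenarios and loss values still has to be made and communicated.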

As discussed above, uncertainty analysis at EPA has focused on the uncertainty in estimates of risks to human health. In Chapter 2 the committee briefly discusses how EPA evaluates and considers uncertainty in those risk estimates, using case studies to illustrate the effects of those uncertainties on EPA’s decisions. The committee then moves beyond that narrow focus on uncertainty in risk estimates and in Chapter 3 looks at the uncertainty inherent in the other factors that play a role in EPA’s regulatory decisions, that is, the economic, technological, social, and political factors. In Chapter 4 the committee examines decision making in other public health policy settings to determine whether the tools and techniques used in those fields could improve EPA’s decision-making processes. In Chapter 5 the committee applies the information discussed in the previous three chapters to the context of EPA’s regulatory decisions, discussing how uncertainty in the different factors that affect EPA’s decisions should be incorporated into the agency’s decision-making process, including within the three-phase framework presented in Science and Decisions: Advancing Risk Assessment (NRC, 2009). The committee recommends approaches to evaluating the various uncertainties and to prioritizing which uncertainties to account for in EPA’s different decisions. Details of some of those approaches are presented in Appendix A. Scientific expertise is necessary for uncertainty analysis to guide decision making, but it is not enough. Transparency, consideration of community values, and inclusion of stakeholders are prerequisites for building trust in the decisions that are reached and for acceptance of governmental decisions (Kasperson et al., 1999; NRC, 1989, 1996; Presidential/Congressional Commission on Risk Assessment and Risk Management, 1997a). Chapter 6 focuses on those aspects of EPA’s regulatory decision-making process.
Chapter 7 discusses the practical implications of this report and includes the recommendations that derive from the discussions in Chapter 2 through Chapter 6. Appendixes B and C contain biographical sketches of committee members and the agendas of the committee’s open sessions, respectively.

Budescu, D. V., S. Broomell, and H. H. Por. 2009. Improving communication of uncertainty in the reports of the Intergovernmental Panel on Climate Change. Psychological Science 20(3):299–308.

CCSP (Climate Change Science Program). 2009. Best practice approaches for characterizing, communicating, and incorporating scientific uncertainty in decisionmaking. [M. Granger Morgan (lead author), Hadi Dowlatabadi, Max Henrion, David Keith, Robert Lempert, Sandra McBride, Mitchell Small, and Thomas Wilbanks (contributing authors)]. A report by the Climate Change Science Program and the Subcommittee on Global Change Research. National Oceanic and Atmospheric Administration.

EPA (U.S. Environmental Protection Agency). 1993. Re: Quantitative uncertainty analysis for radiological assessment. http://yosemite.EPA.gov/sab/sabproduct.nsf/8FE9A83C1BE1BA7A85257323005C134F/$File/ANALYSIS%20ASSESS%20%20RAC-COM-93-006_93006_5-9-1995_86.pdf (accessed November 20, 2012).

———. 1999a. An SAB report on the National Center for Environmental Assessment’s comparative risk framework methodology. http://yosemite.EPA.gov/sab/sabproduct.nsf/83F6D5FD42385D46852571930054E70E/$File/dwc9916.pdf (accessed November 20, 2012).

———. 1999b. An SAB report: Estimating uncertainties in radiogenic cancer risk. http://yosemite.EPA.gov/sab/sabproduct.nsf/D3511CC996FB97098525718F0064DD44/$File/rac9908.pdf (accessed November 20, 2012).

———. 2000. Risk characterization handbook. Washington, DC: Science Policy Council, EPA.

———. 2001. Guidelines for developmental toxicity risk assessment. Federal Register 56(234):63798–63826.

———. 2007. Benefits and costs of Clean Air Act—direct costs and uncertainty analysis. http://yosemite.EPA.gov/sab/sabproduct.nsf/598C5B0B5A89799A852572F4005C406E/$File/council-07-002.pdf (accessed November 20, 2012).

———. 2010a. Guidelines for preparing economic analyses. http://yosemite.epa.gov/ee/epa/eed.nsf/pages/guidelines.html (accessed January 10, 2013).

———. 2010b. Review of EPA’s microbial risk assessment protocol. http://yosemite.EPA.gov/sab/sabproduct.nsf/07322F6BB8E5E80085257746007DC64F/$File/EPA-SAB-10-008-unsigned.pdf (accessed November 20, 2012).

———. 2011. Our mission and what we do. U.S. Environmental Protection Agency. http://www.EPA.gov/aboutepa/whatwedo.html (accessed November 20, 2012).

Kasperson, R. E., D. Golding, and J. X. Kasperson. 1999. Trust, risk, and democratic theory. In Social trust and the management of risk, edited by G. Cvetkovich and R. Löfstedt. London: Earthscan. Pp. 22–44.

Lempert, R. J., S. W. Popper, and S. C. Bankes. 2003. Shaping the next one hundred years: New methods for quantitative, long-term policy analysis. RAND Corporation.

Lindley, D. 2006. Uncertainty: Einstein, Heisenberg, Bohr, and the struggle for the soul of science. New York: Random House.

NRC (National Research Council). 1983. Risk assessment in the federal government: Managing the process. Washington, DC: National Academy Press.

———. 1989. Improving risk communication. Washington, DC: National Academy Press.

———. 1994. Science and judgment in risk assessment. Washington, DC: National Academy Press.

———. 1996. Understanding risk: Informing decisions in a democratic society. Washington, DC: National Academy Press.

———. 2002. Estimating the public health benefits of proposed air pollution regulations. Washington, DC: The National Academies Press.

———. 2009. Science and decisions: Advancing risk assessment. Washington, DC: The National Academies Press.

———. 2011. A risk-characterization framework for decision making at the Food and Drug Administration. Washington, DC: The National Academies Press.

OMB (Office of Management and Budget). 2011. Report to Congress on the benefits and costs of federal regulations and unfunded mandates on state, local, and tribal entities. Washington, DC: OMB.

Presidential/Congressional Commission on Risk Assessment and Risk Management. 1997a. Risk assessment and risk management in regulatory decision-making. Final report. Volume 1. Washington, DC: Government Printing Office.

———. 1997b. Risk assessment and risk management in regulatory decision-making. Final report. Volume 2. Washington, DC: Government Printing Office.

Swart, R., L. Bernstein, M. Ha-Duong, and A. Petersen. 2009. Agreeing to disagree: Uncertainty management in assessing climate change, impacts and responses by the IPCC. Climatic Change 92:1–29.

Wallsten, T. S., and D. V. Budescu. 1995. A review of human linguistic probability processing: General principles and empirical evidence. The Knowledge Engineering Review 10(1):43–62.

Wallsten, T. S., D. V. Budescu, A. Rapoport, R. Zwick, and B. Forsyth. 1986. Measuring the vague meanings of probability terms. Journal of Experimental Psychology: General 115(4):348–365.