6

Communication of Uncertainty

Communication of uncertainty is an important component of the broader practice of human health risk communication. As discussed by Stirling (2010), conveying the uncertainty in the science related to the decision is crucial not only so that decision makers will understand the range of evidence on which to base a decision, but also because it can make the influences of “deep intractabilities of uncertainty, the perils of group dynamics or the perturbing effect of power … more rigorously explicit and democratically accountable” (p. 1031).

The U.S. Environmental Protection Agency (EPA) requested guidance on communicating uncertainty to ensure the appropriate use of risk information and to enhance the understanding of uncertainty among the users of risk information, such as risk managers (that is, decision makers), journalists, and citizens. Although, as discussed in the previous chapters, a number of factors play a role in EPA’s decisions, most of the research the committee identified on the communication of environmental decisions focuses on communication of the uncertainty in estimates of human health risk, and those uncertainties are the focus of this chapter.

This chapter begins with background information on the communication of those risks. It then discusses the advantages and disadvantages of different formats for the presentation of uncertainty and the considerations that go into determining a communication strategy, such as the purpose of the communication, the stage in the decision-making process, the decision context, the type of the uncertainty, and the characteristics of the audience. The relevant audience characteristics discussed here are the audience’s level of technical expertise, personal and group biases, and social trust. In response to its charge, when discussing audience characteristics, the committee paid special attention to communicating with the media.

COMMUNICATION OF UNCERTAINTY IN RISK ESTIMATES

The science of risk communication and the idea of what is good and appropriate risk communication have evolved over the past decades (Fischhoff, 1995; Leiss, 1996). For example, Fischhoff (1995) described the first three stages in that evolution in terms of how communicators think about the process: “All we have to do is get the numbers right,” “All we have to do is tell them the numbers,” and “All we have to do is explain what we mean by the numbers” (p. 138). With the realization that those factors alone would not lead stakeholders to accept decisions about risks, Fischhoff writes, communication experts changed strategies to include the ideas that “All we have to do is show them that they’ve accepted similar risks in the past,” “All we have to do is show them that it’s a good deal for them,” and “All we have to do is treat them nice” (p. 138). Those approaches eventually evolved to include the current strategy, “All we have to do is make them partners” (p. 138). That most recent strategy includes both the two-way communication and the stakeholder engagement that today are considered hallmarks of good risk communication. In the context of EPA’s decisions, stakeholders include the decision makers at the agency, the industries potentially affected by a regulatory decision, and individuals or groups affected by the decision, including local community members for local issues or all the public for issues of national significance.

Improving Risk Communication (NRC, 1989) emphasized the importance of such two-way communication for agencies such as EPA, defining risk communication as “an interactive process of exchange of information and opinion among individuals, groups, and institutions. It involves multiple messages about the nature of risk and other messages, not strictly about risk, that express concerns, opinions, or reactions to risk messages or to legal and institutional arrangements for risk management” (p. 21). That report noted that risk estimates always have inherent uncertainties and that scientists often disagree about the appropriate estimates of risk. It recommended not minimizing the existence of uncertainty, disclosing scientific disagreements, and communicating “some indication of the level of confidence of estimates and the significance of scientific uncertainty” (p. 170). Other reports have also emphasized the need for engagement of stakeholders in the decision-making process (NRC, 1996, 2009).

Documenting the type and magnitude of uncertainty in a decision is not only important at the time of the decision, as discussed by Bazelon (1974), but it is also important when a decision might be revisited or evaluated in the future.

The committee agrees with many of the concepts discussed and recommended in Improving Risk Communication (NRC, 1989). Many of those concepts have been incorporated into EPA guidance documents on risk communication (see, for example, Covello and Allen, 1988; EPA, 2004, 2007). In 2007, for example, EPA’s Office of Research and Development published Risk Communication in Action (EPA, 2007), which describes the basic concepts of successful risk communication, taking into account differences in values and risk perception, and includes instructions on how best to engage with and present risk information to the public. It is not clear, however, to what extent that and other documents—which are not agency-wide policies—are considered by or implemented in the risk communication practices of different programs and offices at EPA. Other National Research Council (NRC) reports (1996, 2008) have expressed concern that stakeholders have not been adequately involved in EPA decision making, suggesting that two-way risk communication, including communication surrounding uncertainty, may in some instances be inadequate.

The extent to which uncertainty is described and discussed varies among EPA’s decision documents. Chapter 2 discusses EPA’s decisions and supporting documentation around arsenic in drinking water, the Clean Air Interstate Rule (CAIR), and methylmercury, including the uncertainty analyses that EPA conducted and presented for those regulatory decisions. Those examples indicate that EPA does sometimes conduct numerous uncertainty analyses and present those analyses in its documents. Such analyses, however, are often presented in appendixes, and the ranges of potential outcomes are not necessarily presented in the summaries and summary tables. The committee also noted that the uncertainty analyses in those documents focus almost exclusively on the uncertainty in estimates related to human health risks and benefits. Krupnick et al. (2006) reviewed four of EPA’s regulatory impact analyses for air pollution regulations, including CAIR and the Clean Air Mercury Rule. They concluded that although the documents “indicate increased use of uncertainty analysis,” the EPA’s regulatory impact analyses “do not adequately represent uncertainties around ‘best estimates,’ do not incorporate uncertainties into primary analyses, include limited uncertainty and sensitivity analyses, and make little attempt to present the results of these analyses in comprehensive way” (p. 7).

To successfully communicate uncertainty, EPA programs and offices need to develop communication plans that include identification of stakeholder values, perceptions, concerns, and information needs related to uncertainty about the decisions to be made and to the uncertainties to be evaluated. As discussed in Chapter 5, the development of those plans should be initiated in the problem-formulation phase of decision making, and it should continue during the assessment and management phases.

The most widely used formal language of uncertainty in risk estimates is probability1 (Morgan, 2009). As Spiegelhalter et al. (2011) stated, however, “probabilities are notoriously difficult to communicate effectively to lay audiences.” Probabilistic information, and the uncertainties associated with those probabilities, can be communicated using numeric, verbal, or graphic formats, and consideration should be given to which approach is most appropriate. In a recent review Spiegelhalter et al. (2011) pointed out that the available research in this area for the most part is limited to small studies, often on students or self-selected samples. That lack of large, randomized experiments remains years after Bostrom and Löfstedt (2003) “concluded that risk communication was ‘still more art than science, relying as it often does in practice on good intuition rather than well-researched principles’” (Spiegelhalter et al., 2011, p. 1399).

As discussed later in this chapter, the most appropriate approach to communicating uncertainty depends on the circumstances (Fagerlin et al., 2007; Nelson et al., 2009; Spiegelhalter et al., 2011; Visschers et al., 2009). Lipkus (2007) summarized the general strengths and weaknesses of each of the different approaches for conveying probabilistic information, based on a comprehensive literature review and consultation with risk communication experts (see Box 6-1). The committee discusses relevant findings from this research below. Regardless of the format in which the uncertainty is presented, it is important to bound the uncertainty and to describe the effect it might have on a regulatory decision. Presenting the results of analyses such as the sensitivity analyses and scenarios discussed in Chapter 5 is one way to provide some boundaries on the effects of those uncertainties and to educate stakeholders about how those uncertainties might affect a decision. It is important to note that the existence of weaknesses does not necessarily indicate that a given method should not be used, but rather those weaknesses should be considered and adjusted for when developing a communication strategy.

Numeric Presentation of Uncertainty

In general, numeric presentations of probabilistic information—such as presenting information in terms of percentages and frequencies—can lead to more accurate perceptions of risk than verbal and graphic formats (Budescu et al., 2009). Unlike graphic and verbal presentations, numeric information can be put into tables in order to communicate a large amount of information in a single presentation. For example, Table 6-1, created by EPA, compares the expected reduction in nonfatal heart attacks in several age groups from two different strategies for attaining national ambient air quality standards, including the 95 percent confidence interval for all values.

___________________

1 Probability is a form of uncertainty information.

Percentage and frequency formats have been found to be more effective than other formats (such as stating that there is a 1-in-X chance of an occurrence) for some circumstances because they more readily allow readers to conduct mathematical operations, such as comparisons, on risk probabilities (Cuite et al., 2008). Other research, however, found that probabilistic reasoning improves and that the influence of cognitive biases (see further discussion below) decreases when information is presented in the form of natural frequencies (for example, 30/1,000) rather than as proportions and single-event probabilities (for example, 3 percent) (see Brase et al., 1998; Gigerenzer, 2002; Gigerenzer and Edwards, 2003; Hoffrage et al., 2000; Kramer and Gigerenzer, 2005). Hoffrage et al. (2000) tested physicians’ ability to calculate the predictive value of a screening test for colorectal cancer when information was presented in terms of probabilities—a task that required combining multiple probabilities. Only 1 out of 24 physician participants correctly calculated the false-positive rate when provided data as percentages. In contrast, when provided as fractions (for example, 30/10,000 people), 16 out of 24 of the physicians correctly calculated the false-positive rate (Hoffrage et al., 2000).
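The contrast between the two formats can be made concrete with a short calculation. The following is a minimal sketch of a predictive-value calculation done once with single-event probabilities and once with natural frequencies. The prevalence, sensitivity, and false-positive rate are illustrative assumptions chosen to match the "30/10,000" style of the example above; they are not data reported in this chapter, and the function names are hypothetical.

```python
def ppv_from_probabilities(prevalence, sensitivity, false_positive_rate):
    """Positive predictive value from single-event probabilities (Bayes' rule)."""
    true_positive = prevalence * sensitivity
    false_positive = (1.0 - prevalence) * false_positive_rate
    return true_positive / (true_positive + false_positive)


def ppv_from_counts(true_positive_count, false_positive_count):
    """The same quantity from natural frequencies: a ratio of head counts."""
    return true_positive_count / (true_positive_count + false_positive_count)


# Hypothetical screening test; these inputs are illustrative assumptions.
PREVALENCE = 0.003           # 30 out of every 10,000 people have the disease
SENSITIVITY = 0.5            # half of those with the disease test positive
FALSE_POSITIVE_RATE = 0.03   # 3% of those without the disease test positive

# Translate the probabilities into counts of people in a reference population.
POPULATION = 10_000
with_disease = round(POPULATION * PREVALENCE)
true_positives = round(with_disease * SENSITIVITY)
false_positives = round((POPULATION - with_disease) * FALSE_POSITIVE_RATE)

ppv_probability = ppv_from_probabilities(PREVALENCE, SENSITIVITY, FALSE_POSITIVE_RATE)
ppv_frequency = ppv_from_counts(true_positives, false_positives)

print(f"Of {POPULATION:,} people, {with_disease} have the disease.")
print(f"{true_positives} of them test positive; so do {false_positives} people without it.")
print(f"Predictive value from counts:        {ppv_frequency:.3f}")
print(f"Predictive value from probabilities: {ppv_probability:.3f}")
```

Both routes give essentially the same answer (roughly 5 percent under these assumed inputs), but the frequency version reduces the task to comparing two counts of people, which may be why it is easier to reason about.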

Among the disadvantages of numeric presentations are that they are only useful if the people the agency is communicating with are capable of interpreting the numeric information presented and that they may not hold people’s attention as well as verbal and graphic presentations (Krupnick et al., 2006; Lipkus, 2007). The appropriateness of such presentations will depend on with whom EPA is communicating. For example, numeric presentations might be more appropriate for EPA decision makers and stakeholders with technical backgrounds than for stakeholders with less technical backgrounds.

BOX 6-1

Strengths and Weaknesses of Numeric, Verbal, and Visual Communication of Riskᵃ

Numeric communication of risk (e.g., percentages, frequencies)

Strengths

• Is precise and leads to more accurate perceptions of risk than the use of probability phrases and graphical displays
• Conveys aura of scientific credibility
• Can be converted from one metric to another (e.g., 10% = 1 out of 10)
• Can be verified for accuracy (assuming enough observations)
• Can be computed using algorithms, often based on epidemiological and/or clinical data, to provide a summary score

Weaknesses

• Lacks sensitivity for adequately tapping into and expressing gut-level reactions and intuitions
• People have problems understanding and applying mathematical concepts (level of numeracy)
• Algorithms used to derive numbers may be incorrect, untestable, or result in wide confidence intervals that may affect public trust

Verbal communication of risk (e.g., unlikely, possible, almost certain)

Strengths

• Allows for fluidity in communication (is easy and natural to use)
• Expresses the level, source, and imprecision of uncertainty; encourages one to think of reasons why an event will or will not occur (i.e., directionality)
• Unlike numbers, may better capture a person’s emotions and intuitions

Weaknesses

• Especially if the goal is to achieve precision in risk estimates, variability in interpretation may be a problem (e.g., likely may be interpreted as a 60% chance by one person and as an 80% chance by another)

Visual (graphic) communication of risk (e.g., pie charts, scatter plots, line graphs)

Strengths

• Ability to summarize a great deal of data and show patterns in the data that would go undetected using other methods
• Useful for priming automatic mathematic operations (e.g., subtraction in comparing the difference in height between two bars of a histogram)
• Is able to attract and hold people’s attention because it displays data in concrete, visual terms
• May be especially useful to help with visualization of part-to-whole relationships

Weaknesses

• Data patterns may discourage people from attending to details (e.g., numbers)
• Poorly designed or complex graphs may not be well understood, and some individuals may lack the skills or educational resources to learn how to use and interpret graphs
• Graphics can sometimes be challenging to prepare or require specialized technical programs
• The design of graphics can mislead by calling attention to certain elements and away from others

___________________

ᵃ The strengths and weaknesses will vary depending on the stage of the decision, the purpose of the communication, and the audience.

SOURCE: Adapted from Lipkus, 2007.

TABLE 6-1

Reduction in Incidence (95% Confidence Interval)

| Age Interval | 15/35 Attainment Strategy | 14/35 Attainment Strategy |
|---|---|---|
| 18–24 | 1 (1–2) | 4 (2–6) |
| 25–34 | 8 (4–12) | 26 (13–40) |
| 35–44 | 170 (84–250) | 280 (140–430) |
| 45–54 | 520 (260–790) | 930 (460–1,400) |
| 55–64 | 1,300 (630–1,900) | 2,100 (1,100–3,200) |
| 65–74 | 1,500 (770–2,300) | 2,600 (1,300–3,900) |
| 75–84 | 980 (490–1,500) | 1,800 (900–2,800) |
| 85+ | 520 (260–780) | 940 (460–1,400) |
| Total | 5,000 (2,500–7,500) | 8,700 (4,300–13,000) |

NOTE: PM NAAQS = Particulate Matter National Ambient Air Quality Standards.

SOURCE: Modified from EPA, 2008.

As discussed by Peters (2008), decision making is part deliberative (that is, analytical or reason-based) and part affective (that is, intuitive or based on emotional feelings), and using a combination of both approaches is important to good decision making (Damasio, 1994; Slovic and Peters, 2006). The numerical ability, or numeracy, of people varies, however, and this numeracy plays a role in the interpretation of numerical data and in judgments and decisions (Peters et al., 2006). People with higher numeracy are more likely to “retrieve and use appropriate numerical principles and transform numbers presented in one frame to a different frame” (p. 412), and they “tend to draw more affective meaning from probabilities and numerical comparisons than the less numerate [people] do” (pp. 412–413). Both laypeople’s and scientists’ judgments about risks are often influenced by affective feelings, but the format in which risk data are presented can affect the interpretation of results to a greater extent in people with low numeracy. For example, low-numeracy individuals perceive risk to be higher when given the information about risk in frequency formats than when given the information in percentage formats (Peters et al., 2011). Presenting information in a manner that facilitates understanding is therefore important if people are to understand the risks and make decisions using both deliberative and affective approaches (Peters, 2008).
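Because the same probability can be expressed as a percentage, a frequency, or a 1-in-X chance, a communicator may want to generate all three and choose among them for a given audience. The following is a minimal sketch of such a conversion; the function names and the default denominator of 1,000 are assumptions made for illustration, not part of any EPA guidance.

```python
def as_percentage(p, digits=1):
    """Express a probability between 0 and 1 as a percentage string."""
    return f"{p * 100:.{digits}f}%"


def as_frequency(p, denominator=1000):
    """Express a probability as a count out of a fixed reference population."""
    count = round(p * denominator)
    return f"{count:,} out of {denominator:,}"


def as_one_in_x(p):
    """Express a probability as a 1-in-X chance, rounding X to a whole number."""
    if p <= 0:
        raise ValueError("probability must be positive for a 1-in-X format")
    return f"1 in {round(1 / p):,}"


risk = 0.03  # hypothetical risk used only to show the three formats
print(as_percentage(risk))
print(as_frequency(risk))
print(as_one_in_x(risk))
```

Keeping the denominator fixed across all risks being compared is a deliberate choice in this sketch: a frequency format with a shifting denominator forces the reader to do the same arithmetic that the 1-in-X format does.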

Verbal Presentations of Uncertainty

Verbal presentations of risk—for example, messages containing words such as “likely” or “unlikely”—can be used as calibrations of numeric risk. Such representations may do a better job of capturing people’s attention than numeric presentations, and they are also effective for portraying directionality. People are typically familiar with verbal expressions of risk from everyday language (for example, the phrase “It will likely rain tomorrow”), and for some people such presentations may be more user friendly than quantitative portrayals. Furthermore, as discussed by Kloprogge et al. (2007), verbal expressions of uncertainty can be better adapted to the level of understanding of an individual or group than can numeric and graphic presentations.

A major weakness of verbal or linguistic presentations of risk is that studies have shown that the probabilities attributed to words such as “likely” or “very likely” vary among individuals and can even vary for a single individual depending on the scenario being presented (see Wallsten and Budescu, 1995; Wallsten et al., 1986). For example, as discussed by Morgan (2003), in a study that asked members of the executive committee of EPA’s Science Advisory Board about the probabilities attached to the words “likely” and “unlikely” in the context of carcinogenicity, “the minimum probability associated with the word ‘likely’ spans four orders of magnitude, the maximum probability associated with ‘not likely’ spans more than five orders of magnitude,” and there was an overlap between the ranges of probabilities associated with the two words (Morgan, 1998, p. 48). That variation can raise a variety of issues when consistency in the interpretation of a health risk is one of the goals of a communication. However, Erev and Cohen (1990) suggested that such vague verbal presentations of information might lead to a consideration of a wider variety of actions within a group, which could be beneficial to the overall group.

Qualitative descriptions of probability—that is, those that include a description or definition for a category of certainty—are sometimes used instead of such subjective calibrations as “very likely” or “unlikely,” which are open for individual interpretation. The third assessment report of the Intergovernmental Panel on Climate Change (IPCC), published in 2001 (IPCC, 2001), made extensive use of a qualitative table proposed by Moss and Schneider (2000) as well as of more quantitative likelihood scales (see Table 6-2); these presentations were also used in slightly modified forms in the fourth assessment report published in 2007 (IPCC, 2007). The International Agency for Research on Cancer (IARC) also uses defined categories to classify evidence. For example, IARC classifies the relevant evidence of carcinogenicity from human studies for a given chemical as limited evidence of carcinogenicity when “[a] positive association has been observed between exposure to the agent and cancer for which a causal interpretation is considered by the Working Group to be credible, but chance, bias or confounding could not be ruled out with reasonable confidence” (IARC, 2006, p. 19). Such presentations, which provide a description of the state of the science in a given field, can help policy makers with decisions when definitive findings are still pending.

Such use of qualitative likelihood presentations, however, has not been problem-free. Recent research suggests that people may interpret the IPCC qualitative presentations with less precision than intended (Budescu et al., 2009) and that estimates for negatively worded probabilities, such as “very

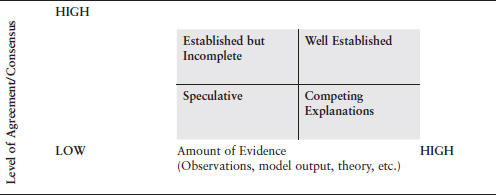

NOTE: Key to qualitative “state of knowledge” descriptors:

Well Established: Models incorporate known processes, observations largely consistent with models for important variables, or multiple lines of evidence support the finding.

Established but incomplete: Models incorporate most known processes, although some parameterizations may not be well tested; observations are somewhat consistent with theoretical or model results but incomplete; current empirical estimates are well founded, but the possibility of changes in governing processes over time is considerable; or only one or a few lines of evidence support the finding.

Competing Explanations: Different model representations account for different aspects of observations or evidence, or incorporate different aspects of key processes, leading to competing explanations.

Speculative: Conceptually plausible ideas that have not received much attention in the literature or that are laced with difficult to reduce uncertainties or have few available observational tests.

SOURCE: Moss and Schneider, 2000.

unlikely,” may be interpreted with greater variability than probability estimates that are positively worded (Smithson et al., 2011). The use of double negatives was especially confounding (Smithson et al., 2011). Budescu et al. (2009) also found that there is interindividual variability in the interpretation of the IPCC categories for certainty, and they recommended using “both verbal terms and numerical values to communicate uncertainty” and adjusting “the width of the numerical ranges to match the uncertainty of the target events” (p. 306). They also recommended describing events as precisely as possible—for example, avoiding the use of subjective terms such as “large”—and specifying the various sources of uncertainty and outlining their type and magnitude.
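The recommendation to pair verbal terms with numerical values can be sketched in a few lines. In the example below, the cut points and terms are modeled on a seven-category likelihood scale of the kind used by the IPCC; they are an assumption for illustration and should be checked against the IPCC guidance before any real use. The function name `describe_likelihood` is hypothetical.

```python
# Each entry: (lower bound of the category, verbal term, range shown to the reader).
# The cut points are assumed for illustration, modeled on an IPCC-style scale.
LIKELIHOOD_SCALE = [
    (0.99, "virtually certain", "more than 99%"),
    (0.90, "very likely", "90% to 99%"),
    (0.66, "likely", "66% to 90%"),
    (0.33, "about as likely as not", "33% to 66%"),
    (0.10, "unlikely", "10% to 33%"),
    (0.01, "very unlikely", "1% to 10%"),
    (0.00, "exceptionally unlikely", "less than 1%"),
]


def describe_likelihood(p):
    """Return a verbal term together with its numeric range for probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    for lower, term, numeric_range in LIKELIHOOD_SCALE:
        if p >= lower:
            return f"{term} ({numeric_range} probability)"


for probability in (0.95, 0.5, 0.005):
    print(probability, "->", describe_likelihood(probability))
```

Printing the numeric range next to every verbal term is the point of the sketch: a reader who would otherwise attach a private meaning to "likely" sees the intended range each time the word appears.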

Graphical Presentation of Uncertainty

Graphical displays of probabilistic information—such as bar charts, pie charts, and line graphs—can summarize more information than other presentations, can capture and hold people’s attention, and can show patterns and whole-to-part relationships (Budescu et al., 1988; Spiegelhalter et al., 2011). Furthermore, uncertainties about the outcomes of an analysis can also be depicted using graphical displays, such as bar charts, pie charts, probability density functions,2 cumulative distribution functions,3 and box-and-whisker plots. There is some evidence that graphic displays of uncertainty can help convey uncertainty to people with low numeracy (Peters et al., 2007). A few studies have explored how well different graphical displays of quantitative uncertainty can convey information and have analyzed the effects of different graphical displays on decision making (Bostrom et al., 2008; Visschers and Siegrist, 2008).

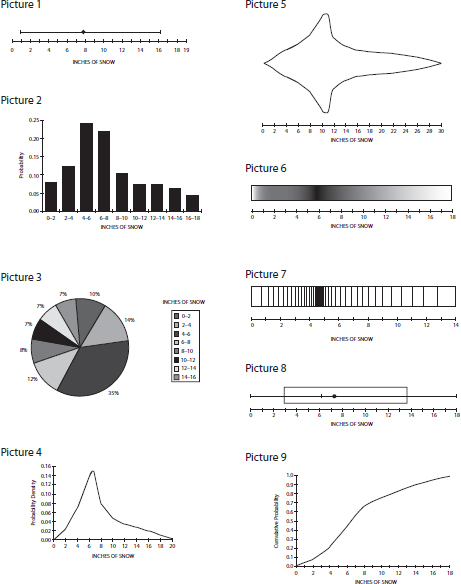

Ibrekk and Morgan (1987) compared nine graphical displays by seeing how well each of them communicated univariate uncertainty to 49 well-educated semitechnical and nontechnical people (see Figure 6-1). Participants were asked to estimate the mean, the probability that a value that occurs will be greater than some stated value (that is, x > a), and the probability that the value that occurs will fall within a stated interval (that is, b > x > a). They were first asked to make those estimates without an explanation of how to use or interpret the displays, and then they were asked again after receiving detailed nontechnical explanations. Participants were most accurate with their estimates when they had been shown graphics that explicitly marked the location of the mean (displays 1 and 8), contained the answers to questions about x > a and b > x > a (displays 2 and 9), or provided the 95 percent confidence interval (display 1). In making judgments about best estimates using displays of probability density, subjects tended to select the mode rather than the mean unless the mean was marked. Subjects reported being most familiar with the bar chart and pie chart (displays 2 and 3, respectively), but there was no relationship between familiarity with a display and how sure subjects were of their responses. The researchers also found that participants with some working knowledge of probability and statistics did not perform significantly better in interpreting the displays than participants without such knowledge. One implication of this research is that it will be important to include nontechnical people and people with knowledge of probability, such as EPA decision makers, in research on the communication of uncertainty.
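The three quantities that participants were asked to estimate, and the gap between the mode and the mean of a skewed distribution, can be illustrated numerically. The following is a minimal sketch that uses a lognormal distribution with assumed parameters; it is not the distribution used by Ibrekk and Morgan, and the thresholds a and b are arbitrary.

```python
import math
import random
import statistics

MU, SIGMA = 0.0, 0.75  # assumed lognormal parameters, chosen only to give a skewed shape

rng = random.Random(0)  # fixed seed so the sketch is repeatable
sample = [rng.lognormvariate(MU, SIGMA) for _ in range(50_000)]


def prob_greater(values, a):
    """Estimate P(x > a) as the fraction of sampled values above a."""
    return sum(v > a for v in values) / len(values)


def prob_between(values, a, b):
    """Estimate P(a < x < b) as the fraction of sampled values inside the interval."""
    return sum(a < v < b for v in values) / len(values)


sample_mean = statistics.fmean(sample)
sample_median = statistics.median(sample)
analytic_mode = math.exp(MU - SIGMA ** 2)      # peak of the lognormal density
analytic_mean = math.exp(MU + SIGMA ** 2 / 2)  # mean of the lognormal distribution

print(f"mode {analytic_mode:.2f} < median {sample_median:.2f} < mean {sample_mean:.2f}")
print(f"P(x > 1)     = {prob_greater(sample, 1.0):.2f}")
print(f"P(1 < x < 2) = {prob_between(sample, 1.0, 2.0):.2f}")
```

For a right-skewed distribution such as this one, the peak of the density sits well to the left of the mean, so a reader who picks the peak as the "best estimate" will understate the mean unless the mean is marked on the display.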

___________________

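2 Probability density functions show the relative likelihood of each possible value; the probability that a value falls within a range (for example, that there will be between 11 and 13 inches of snow) is the area under the curve over that range.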

3 Cumulative distribution functions show the probability of something being less than or equal to a given value, for example, the probability that there will be 12 inches of snow or less.

FIGURE 6-1 Nine displays for communicating uncertain estimates for the value of a single variable used in experiments.

Picture 1: point estimate with an error bar; Picture 2: bar chart; Picture 3: pie chart; Picture 4: conventional probability density function; Picture 5: probability density function of half its regular height together with its mirror image; Picture 6: horizontal bar shaded to display probability density using dots; Picture 7: horizontal bar shaded to display probability density using lines; Picture 8: Tukey box plot modified to exclude the maximum and minimum values and to display the mean with a solid point; Picture 9: conventional cumulative distribution function.

SOURCE: Ibrekk and Morgan, 1987, p. 521. Reprinted with permission of John Wiley & Sons Ltd.

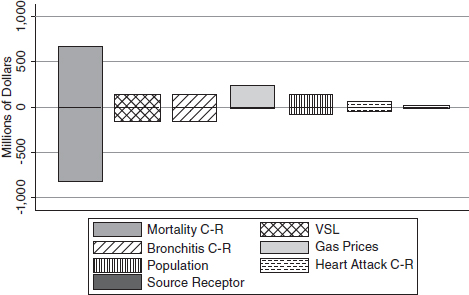

In a small exploratory study, Krupnick et al. (2006) tested the effectiveness of seven different presentations (two tables and five figures)4 of information about uncertainty in helping seven former high-level EPA decision makers make a decision about whether to adopt a hypothetical proposed tightening of the CAIR.5 Based on the presentations, decision makers were asked to decide whether they would choose (a) an option to do nothing, (b) an intermediate option of reducing the nitrogen oxide (NOx) cap by an additional 20 percent below baseline in 2020, or (c) a more stringent option of reducing the NOx cap 40 percent from baseline in 2020. While acknowledging the small sample size, the authors noted that there were a few findings that deserved to be explored further in future research. One finding was that tables and probability density functions appeared to be best suited for informing high-level decision makers about uncertainty in a scenario of choosing between two regulatory options. Participants found tables to be informative and easy to interpret, and they found probability density functions to be the most familiar of the graphic displays. When asked whether probability density functions might create a bias toward tighter spread, almost all participants reported that the probability density function made them inclined to choose the intermediate option. Participants had more difficulty interpreting the cumulative density function, and most of the participants said that the cumulative density function did not help with decision making.6 Participants reported that a graphic that listed the sources of uncertainty and described the impact of each source of uncertainty on the estimates of net benefits (see Figure 6-2) was an important input to decision making, gave them insight into how confident they should be in their decision, and prepared them to argue their choice with critics. 
Participants were able to interpret a pie chart and box-and-whisker plot, but some participants reported that those graphics did not add useful information to what had been provided in the summary tables. The authors were not able to make any generalizations about the impact of the different graphic presentations on decision making; participants’ final policy choices were not unanimous, and each decision option—to do nothing,

___________________

4 The seven presentations, in order, were (1) a table showing the impacts of the two proposed policies in terms of physical health impacts and costs in 2025; (2) a table with results from a cost–benefit analysis showing total benefits, costs, and net benefits in 2025; (3) a pie chart displaying the probabilities that the policies would produce positive net benefits; (4) a box-and-whisker plot; (5) a probability density function; (6) a cumulative density function; and (7) a graph showing the relative contributions of key variables to the uncertainty associated with the estimate of net benefits.

5 EPA issued the Clean Air Interstate Rule (CAIR) on March 10, 2005. CAIR covers 28 states in the eastern United States with an order to reduce air pollution by capping emissions of sulfur dioxide (SO2) and nitrogen oxides (NOx) (EPA, 2008).

6 In an earlier small exploratory study, Thompson and Bloom (2000) also found that EPA risk manager participants preferred the PDF format over other graphical displays.

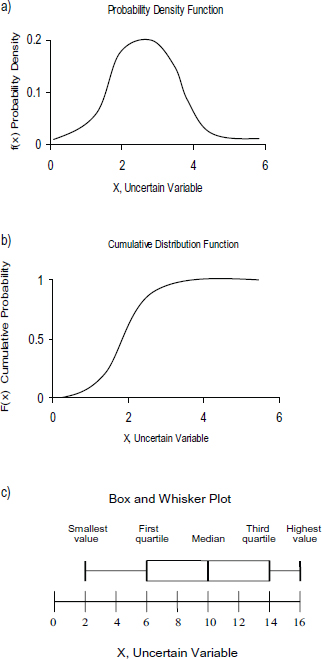

FIGURE 6-2 Examples of the most common graphical displays of uncertainty: (a) a probability density function, (b) a cumulative distribution function, and (c) a box-and-whisker plot.

SOURCE: Adapted from Morgan and Henrion, 1990, p. 221. Reprinted with permission of Cambridge University Press.

FIGURE 6-3 Graphic used by Krupnick et al. (2006) to display sources of uncertainty and to describe the impact of each source of uncertainty on estimates of expected net benefits in 2025.

NOTES: C–R = concentration–response; VSL = value of statistical lives.

SOURCE: Krupnick et al., 2006. Reprinted with permission of Copyright Clearance Center.

intermediate NOx cap, and stringent NOx cap—was selected by at least one study participant.
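A display that ranks sources of uncertainty by their effect on net benefits can be built from a simple one-at-a-time sensitivity calculation. The following is a minimal sketch of that idea, not the method used by Krupnick et al. (2006): the net-benefits model, the input names, and every low, central, and high value are hypothetical.

```python
def net_benefits(cr_slope, vsl, compliance_cost, exposure_change=1000.0):
    """Toy model: avoided deaths times value per statistical life, minus cost."""
    avoided_deaths = cr_slope * exposure_change
    return avoided_deaths * vsl - compliance_cost


# Hypothetical (low, central, high) values for each uncertain input.
INPUTS = {
    "cr_slope": (0.5, 1.0, 1.5),                  # concentration-response slope
    "vsl": (2.0, 6.0, 10.0),                      # value of a statistical life, $ million
    "compliance_cost": (2000.0, 3000.0, 4000.0),  # $ million
}


def one_at_a_time_swings(model, inputs):
    """Vary each input from low to high with the others held at central values."""
    central = {name: values[1] for name, values in inputs.items()}
    swings = {}
    for name, (low, _central, high) in inputs.items():
        at_low = model(**{**central, name: low})
        at_high = model(**{**central, name: high})
        swings[name] = abs(at_high - at_low)
    # Largest swing first, which is the order used in a tornado-style chart.
    return sorted(swings.items(), key=lambda item: item[1], reverse=True)


ranking = one_at_a_time_swings(net_benefits, INPUTS)
for name, swing in ranking:
    print(f"{name:16s} swing in net benefits: {swing:,.0f}")
```

A one-at-a-time calculation ignores interactions among inputs, so a ranking of this kind is best read as a rough guide to which uncertainties matter most rather than as a full uncertainty analysis.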

Krupnick et al. (2006) and Morgan and Henrion (1990) also discussed the strengths and weaknesses of probability density functions, cumulative distribution functions, and the display of selected fractiles, as in box-and-whisker plots (see Figure 6-2). Those are the approaches that are most often used to display uncertainty in probabilistic terms, and each emphasizes different aspects of a probability distribution (Krupnick et al., 2006).7

___________________

7 Uncertainty along more than one dimension can be graphed using a cumulative distribution function, a probability density function, or a box plot using either multiple graphs or superimposing uncertainties along one dimension over another. An alternative is to use error bars within a line graph, where the error bars represent uncertainty, or to use probability surfaces to depict uncertainty three-dimensionally (Krupnick et al., 2006).

Probability density functions (Figure 6-2a) represent a probability distribution in terms of the area under the curve and highlight the relative probabilities of values. The peak in the curve is the mode, and the shape of the curve indicates the shape of the distribution (for example, how skewed the data are) (Krupnick et al., 2006). Probability density functions can be a sensitive indicator of variations in probability density, so their use may be advantageous when it is important to emphasize small variations. On the other hand, this sensitivity may sometimes be a disadvantage in that small variations attributed to random sampling may appear as noise that is of no intrinsic interest. Another disadvantage may be that the area under the curve, rather than the height of the curve, corresponds to probability. Cumulative distribution functions (CDFs), as illustrated in Figure 6-2b, are calculated by taking the integral of the probability density function, and they best display (1) fractiles (including the median), (2) the probability of intervals, (3) stochastic dominance, and (4) mixed continuous and discrete distributions (Morgan and Henrion, 1990). CDFs have the advantage of not showing as much small variation noise as a probability density function does, so that the shape of the distribution may appear much smoother. As discussed above, however, more people have difficulty interpreting CDFs (Ibrekk and Morgan, 1987). Furthermore, with a CDF it is not as easy to judge the shape of the distribution.

Box-and-whisker plots (Figure 6-2c) are effective in displaying summary statistics (medians, ranges, fractiles), but they provide no information about the shape of the distribution except for the presence of asymmetry in the distribution (Krupnick et al., 2006). The first quartile (the left-hand side of the box) represents the median of the lower part of the data, the second quartile (the line through the middle of the box) is the median of all data, and the third quartile (the right-hand side of the box) is the median of the upper part of the data. The ends of the “whiskers” show the smallest and largest data points.
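The relationships among the three displays can be made concrete with a short calculation. The following Python sketch is illustrative only: the lognormal sample, the number of bins, and the function names are assumptions chosen for the example and do not come from Morgan and Henrion (1990) or Krupnick et al. (2006). It builds a histogram estimate of a probability density function, accumulates it bin by bin into a cumulative distribution function, and computes box-and-whisker summary statistics using the "median of each half" description of quartiles given above.

```python
import random
import statistics

def histogram_density(samples, n_bins=20):
    """Return (bin_edges, densities) scaled so the total area equals 1."""
    lo, hi = min(samples), max(samples)
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for x in samples:
        # Clamp the largest value into the last bin.
        idx = min(int((x - lo) / width), n_bins - 1)
        counts[idx] += 1
    densities = [c / (len(samples) * width) for c in counts]
    edges = [lo + i * width for i in range(n_bins + 1)]
    return edges, densities

def cumulative_from_density(edges, densities):
    """Integrate the density bin by bin; returns CDF values at right bin edges."""
    cdf, total = [], 0.0
    for i, d in enumerate(densities):
        total += d * (edges[i + 1] - edges[i])
        cdf.append(total)
    return cdf

def box_plot_summary(samples):
    """Five-number summary; quartiles are the medians of the lower and upper halves."""
    data = sorted(samples)
    n = len(data)
    lower = data[: n // 2]
    upper = data[(n + 1) // 2:]
    return {
        "min": data[0],
        "q1": statistics.median(lower),
        "median": statistics.median(data),
        "q3": statistics.median(upper),
        "max": data[-1],
    }

rng = random.Random(0)
# Hypothetical right-skewed risk estimates, for illustration only.
samples = [rng.lognormvariate(0.0, 0.5) for _ in range(5000)]

edges, pdf = histogram_density(samples)
cdf = cumulative_from_density(edges, pdf)
summary = box_plot_summary(samples)
print(summary)
```

In this sketch the density is read as area (height times bin width), which is why the heights alone do not give probabilities, whereas the accumulated values can be read directly as the probability of falling at or below a given value. Other quartile conventions exist, and plotting software may draw whiskers differently from the minimum and maximum used here.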

Ibrekk and Morgan (1987) concluded that, until future research suggests another strategy is more effective, it may be best to use both cumulative distribution functions and probability density functions with the same horizontal scale and with the location of the mean clearly indicated on each. The decision of which of these displays to use depends on what type of information the user needs to extract, so it is important to understand the information needs of the people the agency is communicating with. One drawback of both types of function, however, is that people, especially those without a strong technical background, may have difficulty extracting summary information.

Despite their advantages, graphic displays do not always explicitly describe conclusions, and they can require more effort to extract information, particularly for people who are not familiar with the mode of presentation or who lack skills in interpreting graphs or in cases where the graphic presents complex data (Kloprogge et al., 2007; Lipkus, 2007).

The interpretation of a graph depends on the “viewer’s familiarity with the content depicted in a graph, and the viewer’s graphicacy skills” as well as the design of the graph (Shah and Freedman, 2009). For example, graph viewers are less likely to discern the relevant results from a graph if the data in the graph are not grouped to form appropriate “visual chunks” (Shah et al., 1999) or exhibited in a format that supports the intended inferences (Shah and Freedman, 2009). In addition, some research indicates that individuals differ in how they use the information in different presentations of data (Boduroglu and Shah, 2009), further complicating the use of graphical presentations.

There is also some evidence that graphic displays increase risk aversion. For example, one study that examined how well visual displays of risk communicated low-probability events found that adding graphics to numeric presentations increased participants’ willingness to pay for risk reductions (Stone et al., 1997). There is no correct level of perceived risk, however, so it is not possible to rank the effectiveness of various displays based on this outcome.

Furthermore, graphs can be designed—either intentionally or unintentionally—to call attention to certain aspects of a message and detract from others. Highlighting the foreground rather than the background can make people more risk averse. For example, people are more risk averse after seeing a bar graph that only shows the differences in the number of people suffering from serious gum disease with the denominator of “per 5,000” people included in the figure legend than after seeing a bar graph that depicts both differences in gum disease and the denominator of 5,000 people (Stone et al., 2003). Even when such foreground–background salience and gain–loss framing (see discussion below) are controlled, however, evidence indicates that graphic displays lead to greater risk aversion than numerical presentations (Slovic and Monahan, 1995; Slovic et al., 2000).

The ability to use interactive visualizations to display information and uncertainty about that information has increased with the evolution of computer technology. Spiegelhalter et al. (2011) point out that “increasing availability of online data and public interest in quantitative information has led to a golden age of infographics” (p. 1399), including the ability to create graphics with interactive features. Such interactive graphics have the potential to increase understanding and retention and to help counteract differences in numeracy, and this potential could be applied in the communication of uncertainty. Spiegelhalter et al. note, however, that while there are huge potential applications and uses for infographics with interactive features, such graphics have not yet been evaluated empirically.

One limitation of most of those graphical presentations is that they display only one variable at a time. For example, they might show how the uncertainty in an estimate of human health risks varies among individuals with different sensitivities to a chemical or show the consequences of different regulatory decisions on human health benefits. In reality, however, most of the problems that EPA faces have many sources of uncertainty and many intermediate outputs that may covary. For example, the consideration of a number of different health endpoints might influence a decision, or there might be estimates for a number of costs and benefits of a rule, each of which has uncertainty associated with it. Tornado diagrams provide “a pictorial representation of the contribution of each input variable to the output of the decision making model” (Daradkeh et al., 2010).
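As a rough illustration of how the data behind a tornado diagram can be produced, the Python sketch below varies one input at a time between a low and a high value while holding the others at their base values, and then ranks the inputs by the size of the resulting swing in the output. The net-benefit model, the input names, and all of the numbers are hypothetical and are not taken from Daradkeh et al. (2010) or from any EPA analysis.

```python
def net_benefit(inputs):
    """Hypothetical model: monetized health benefits minus compliance cost ($ million)."""
    return inputs["cases_avoided"] * inputs["value_per_case"] - inputs["compliance_cost"]

# Hypothetical (low, base, high) values for each uncertain input.
RANGES = {
    "cases_avoided": (50.0, 100.0, 200.0),
    "value_per_case": (4.0, 6.0, 9.0),         # $ million per case
    "compliance_cost": (300.0, 400.0, 450.0),  # $ million
}

def tornado_rows(model, ranges):
    """One-at-a-time sensitivity: returns (input, low output, high output), widest swing first."""
    base = {name: values[1] for name, values in ranges.items()}
    rows = []
    for name, (low, _, high) in ranges.items():
        out_low = model({**base, name: low})
        out_high = model({**base, name: high})
        rows.append((name, min(out_low, out_high), max(out_low, out_high)))
    rows.sort(key=lambda row: row[2] - row[1], reverse=True)
    return rows

rows = tornado_rows(net_benefit, RANGES)
for name, lo, hi in rows:
    print(f"{name:16s} {lo:8.1f} to {hi:8.1f}  (swing {hi - lo:.1f})")
```

Each row corresponds to one horizontal bar in the diagram, with the widest bar at the top. Because the inputs are varied one at a time, this kind of display does not capture covariation among inputs, which is one of the complications noted above.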

Framing Biases

One line of risk perception research that is relevant to EPA’s communication of uncertainty is the study of the effects that alternative ways of framing risk information have on risk perception and decision making. Experts have been found to be just as susceptible to framing effects as is the general population (Slovic et al., 1982). Different ways of framing probabilistic information can leave people with different impressions about a risk estimate and, consequently, different levels of confidence in that estimate. For example, stating that “10 percent of bladder cancer deaths in the population can be attributed to arsenic in the water supply” may leave a different impression than stating that “90 percent of bladder cancer deaths in the population can be attributed to factors other than arsenic in the water supply,” even though both statements contain the same information. Choices based on presentations of a range of uncertainty will be similarly influenced by the way that information is presented. Risk estimates that include a wide range of uncertainties may imply that an adverse outcome is possible, even if the likelihood of the adverse outcome occurring is extremely small (NRC, 1989).

CONSIDERATIONS WHEN DECIDING ON A COMMUNICATIONS APPROACH

Determining the best approach to communicate the uncertainty in a decision needs to be made on a case-by-case basis (Fagerlin et al., 2007; Lipkus, 2007; Nelson et al., 2009; Spiegelhalter et al., 2011; Visschers et al., 2009). There are, however, a number of considerations that should be taken into account when making that decision. The committee discusses the following considerations below: (1) the stage of the decision-making process and the purpose of the communication; (2) the decision context; (3) the type and source of the uncertainty and whether the uncertainty analysis is qualitative or quantitative; and (4) the audience with which EPA is communicating. Testing and evaluating the effectiveness of communication approaches is also important (Fischhoff et al., 2011).

Both the U.S. National Institutes of Health (NCI, 2011)8 and the Netherlands Environmental Assessment Agency have developed guidance on communicating uncertainty (Kloprogge et al., 2007). Although both guidance documents emphasize the need for communication strategies to be developed on a case-by-case basis, they also present generalities about the strengths and weaknesses of different approaches and describe some circumstances under which one approach might be preferable over another. NIH’s workbook includes some detailed suggestions, such as the order in which to present data and color choices (NCI, 2011).

The Stage of the Decision-Making Process and the Purpose of the Communication

The most appropriate strategy for communicating uncertainty will depend in part on the phase of the decision-making process and the purpose of the communication. Chapter 5 identified three phases in the decision-making process: problem formulation, assessment, and implementation. The key to a good communication strategy is initiating communication during the problem-formulation phase and continuing it throughout the decision-making process. The purpose of the communication, however, might differ from one phase to the next.

During the problem-formulation phase, the communication strategy should ensure input from stakeholders on what uncertainties they are aware of and concerned about and on how those uncertainties should be accounted for in the assessment and implementation phases. A key goal of communication about uncertainty during the problem-formulation phase is to develop a common understanding of the decision problem, of the limits or constraints on the decision options, and of the potential uncertainties that exist in the evidence base for the decision.

The understanding gained from the problem-formulation phase will help shape the assessments that occur during the second phase of the decision-making process. Further communication might be needed to clarify issues about uncertainties and to discuss any new uncertainties that are identified during the assessment and how those uncertainties should be considered in the assessment.

During the implementation phase, the assessors will characterize the risks, costs and benefits, and other factors that were assessed during the assessment phase, with EPA’s decision makers being an important audience at this stage. Those characterizations should include a characterization of the uncertainties in the data and analyses that underlie each of the factors that were assessed. One type of communication during the implementation phase will be the agency communicating with stakeholders to discuss its decision and the rationale for its decision, including how uncertainties affect the decision. Another part of the communication process should be the agency getting feedback on the decision and uncertainties as well as having discussions about how and when the decision will be revisited.

___________________

8 The National Institutes of Health’s workbook operationalizes the main points contained in the book Making Data Talk: Communicating Public Health Data to the Public, Policy Makers, and the Press (Nelson et al., 2009).

Decision Context

To develop guidance on communicating uncertainty, the Netherlands Environmental Assessment Agency relied on literature reviews, a workshop on uncertainty communication, and research by the authors of the guidance document (Kloprogge et al., 2007). The guidance emphasizes the importance of decision context. The context of a decision—that is, the characteristics of the setting in which the decision is being made—affects the communication of the decision and of the uncertainty underlying it. Box 6-2 lists some decision contexts in which the communication of the uncertainty surrounding a decision is particularly important. A complex decision based on controversial science or a decision about which stakeholders disagree will benefit from greater attention to communicating uncertainties (Kloprogge et al., 2007).

It can be especially challenging to communicate the uncertainty associated with a decision made in an emergency situation, such as a hazardous chemical spill. Under such circumstances, EPA must communicate not only with those involved in containment and cleanup, but also with members of the public who might be affected by the spill, and the communication may need to be done in coordination with other agencies, with governments, and with stakeholders such as private companies involved in the spill. Such communication, sometimes called crisis communication, is often carried out at a time when there are a number of large uncertainties about the event and its potential consequences for human health and the environment (Reynolds and Matthew, 2005). The time frame within which a decision is needed in an emergency situation can limit the time and opportunities available for communication, and the purpose of communication in such a situation can differ from traditional risk communications in that crisis communication often is principally informative (Reynolds and Matthew, 2005). Although it is generally not possible to predict the timing and extent of an emergency, the nature of many potential emergencies can be, and often is, known and planned for. Communicating with stakeholders about the uncertainties that might follow an emergency during the planning for such an emergency and

BOX 6-2

When Greater Attention to Reporting Uncertainties May Be Needed

Reporting uncertainties may be more policy relevant when

• The outcomes are very uncertain and have a great impact on the policy advice given.

• The outcomes are situated near a policy target, threshold, or standard set by policy.

• A wrong estimate in one direction will have entirely different consequences for policy advice than a wrong estimate in another direction.

• There is a possibility of morally unacceptable damage or “catastrophic events.”

• Controversies among stakeholders are involved.

• There are value-laden choices or assumptions that are in conflict with the views and interests of stakeholders.

Greater attention to reporting uncertainties may also be needed when

• Fright factors or media triggers are involved.

• There are persistent misunderstandings among audiences.

• The audiences are expected to distrust outcomes that point to low risks because the public perception is that the risks are serious.

• The audiences are likely to distrust the results because of low or fragile confidence in the researchers or the organization that performed the assessment.

SOURCE: Kloprogge et al., 2007.

explicitly including the communication of uncertainties in emergency plans can help facilitate communications when an environmental crisis requiring an emergency response occurs. Given the need for a quick decision and the large amount of uncertainty that often occurs in emergency situations, it is important that communication strategies include plans to collect information that might reduce uncertainties or plans to revisit the decision once more data are gathered.

The decision context could also determine whom the agency and its technical staff should communicate with. Furthermore, as discussed below, the characteristics of those with whom EPA is communicating should also affect the strategy for communicating the decision, including the uncertainty in the decision. The communication strategy for a decision that will affect only a small region will differ from the communication strategy for a decision that will have consequences on a national scale. A decision might also have a greater effect on one subgroup than another (for example, a decision that affects the levels of a chemical in fish might affect anglers more than other people). Subgroups that are more at risk should be identified during the problem-formulation phase, and discussions about potential uncertainties should be initiated during that phase.

The Type and Source of the Uncertainty

Some research indicates that, when communicating uncertainties in the results of risk assessments to decision makers, it is valuable to be specific about the nature or types of the uncertainties. Bier (2001b) discusses two types of outcome uncertainties: (1) state of knowledge or assessment uncertainty, and (2) variability or randomness (in other words, uncertainties arising from variability or natural variation elements in such factors as environments, populations and exposure paths), which cannot be controlled and are thus not reducible.9 Those two categories of uncertainties correspond to what the committee refers to as model and parameter uncertainty, and variability and heterogeneity, respectively.10 Bier (2001b) suggests that, when communicating uncertainties to decision makers, it is helpful to distinguish between the two types of the uncertainties so that the decision maker can understand how much of the uncertainty in the decision may be reducible. For example, if it is not possible to wait for research to reduce state-of-knowledge uncertainty, a decision maker may give more priority to a risk for which there is large state-of-knowledge uncertainty and a small population variability rather than to a risk for which there is large population variability and small state-of-knowledge uncertainty (Bier, 2001b). Others have argued, however, that in most instances this distinction is not a useful one to make since it can result in an overly complicated and confusing analysis (Morgan and Henrion, 1990). It is also important to communicate the sources of uncertainty—for example, whether it arises from the estimates of human health, estimates of costs, the availability of technology, or other factors—and to include the relative impact of the different sources on the decision. Such communication should also discuss the results of any sensitivity analyses, so that the uncertainty is bounded.
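One way to illustrate the distinction between state-of-knowledge uncertainty and variability is a nested (two-dimensional) Monte Carlo simulation, in which an outer loop samples an imperfectly known parameter and an inner loop samples variation among individuals. The Python sketch below is a minimal illustration under stated assumptions: the risk model (a potency factor multiplied by an individual exposure), the distributions, and the sample sizes are all hypothetical and are not drawn from Bier (2001b). It uses the law of total variance to split the overall variance into a part that arises from variability and a part that arises from state-of-knowledge uncertainty.

```python
import random
import statistics

def decompose_variance(n_outer=200, n_inner=500, seed=1):
    """Nested Monte Carlo; returns (variability part, knowledge part, total variance)."""
    rng = random.Random(seed)
    within_vars, conditional_means, all_risks = [], [], []
    for _ in range(n_outer):
        # Outer loop: state-of-knowledge uncertainty about a potency parameter (hypothetical range).
        potency = rng.uniform(0.5, 1.5)
        # Inner loop: variability in exposure across individuals (hypothetical distribution).
        risks = [potency * rng.lognormvariate(0.0, 0.3) for _ in range(n_inner)]
        within_vars.append(statistics.pvariance(risks))
        conditional_means.append(statistics.fmean(risks))
        all_risks.extend(risks)
    # Law of total variance: Var(R) = E[Var(R | potency)] + Var(E[R | potency]).
    variability_part = statistics.fmean(within_vars)
    knowledge_part = statistics.pvariance(conditional_means)
    total = statistics.pvariance(all_risks)
    return variability_part, knowledge_part, total

variability_part, knowledge_part, total = decompose_variance()
print(f"share from variability:        {variability_part / total:.2f}")
print(f"share from state of knowledge: {knowledge_part / total:.2f}")
```

Under these assumptions, the second share is a rough indication of how much of the spread could in principle be reduced by further research on the parameter, whereas the first reflects differences among individuals that would remain. Variance is only one possible summary, and the split depends on the assumed model and distributions.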

___________________

9 State-of-knowledge uncertainties are uncertainties due to limited scientific knowledge about the models that link causes and effects of risk and risk-reduction actions as well as about the specific parameters of these models. Variability refers to natural variation elements (environments, populations, exposure paths, etc.) that cannot be controlled.

10 The committee also separates out deep uncertainty, which is not immediately reducible through research (see Chapter 1 for a discussion).

Technical experts often communicate uncertainties to decision makers in aggregate. However, aggregate estimates of uncertainty do not necessarily provide the decision makers with an understanding of the uncertainty and its implications for a decision. Information about the type and sources of uncertainty can help decision makers decide whether further research is warranted to decrease the uncertainty or whether to refine the decision to reduce the effects of the uncertainty. Descriptions of where there are uncertainties can also indicate which groups or stakeholders might bear the burden of a higher-than-anticipated health risk or cost because of the uncertainty. Knowing who is likely to be affected by the uncertainty in the costs and benefits would allow decision makers to design the initial proposed regulations to address or prepare for those potential outcomes in advance. If the individual sources of uncertainty that contribute to the overall uncertainty can be determined, then uncertainty analyses in, for instance, cost–benefit analyses or cost-effectiveness analyses could incorporate graphic representations displaying the relative importance of the different sources of uncertainty sequentially so as to provide an easily interpretable graphic display of the sources of uncertainty (Krupnick et al., 2006).

Audience Characteristics

Level of Technical Expertise

The audience for the communication of uncertainty in environmental decisions, such as those made by EPA, will have a broad array of backgrounds and roles (Wardekker et al., 2008). EPA’s scientific and technical staff communicates about uncertainty in health risk estimates, economic analyses, and other factors with agency decision makers. The agency discusses the uncertainties in its decisions with stakeholders, including individuals who might have little to no technical knowledge, as well as with industry specialists and others with high levels of technical expertise. The uncertainty that affects decisions should be discussed with all stakeholders, but the strategy used for those discussions might vary with the technical expertise of the audience. For example, agency decision makers will often have strong technical backgrounds and might need to see specific numbers to best understand the extent of uncertainty and how it affects their decisions. Industry and advocacy group scientists similarly might prefer specific numbers, as such numbers might provide them with a complete picture and the data needed for them to conduct their own independent analyses. Members of the public without strong technical backgrounds might benefit more from graphic representations of the uncertainties along with discussions about how those uncertainties will be considered in a decision, the potential consequences of those uncertainties, and whether and how EPA plans to decrease those uncertainties. Regardless of the audience, however, EPA should use its communication opportunities to provide audiences with information as well as to gather information from the audience that could help either decrease acknowledged uncertainties or identify additional uncertainties that might affect the decision.

Another potential option now that many documents are available electronically through the Internet is to use layered hypertext for more complex uncertainty analysis. That is, the main body of text and the summary sections of EPA’s decision document could contain a summary of the uncertainty analyses conducted and could also include a link to appendixes or other documents that present full details of the analyses. That would provide summary information for all audiences as well as further details of the uncertainty analyses for technical audiences or others with an interest in seeing all the details.

Biases

Uncertainty information concerning probabilities has been found to be susceptible to biases by both experts and non-experts (Hoffrage et al., 2000; Kloprogge et al., 2007; Slovic, 2000; Slovic et al., 1979, 1981; Tversky and Kahneman, 1974). When people’s judgments about a risk are biased, risk management and communication efforts may not be as effective as they would otherwise be. Biases can stem from the characteristics of an individual or group or can be embedded in the framing of a message; both types can influence the interpretation of a message. Communicators of information about uncertainty cannot completely eliminate these biases, but they should be aware of the potential for biases to influence the acceptance of and reaction to probabilistic information and, to the extent possible, account for these biases by adjusting communication efforts. These types of biases are discussed below.

Personal Biases One bias that can affect how people interpret probabilistic information is termed availability bias. People tend to judge events that are easily recalled as more risky or more likely to occur than events that are not readily available to memory (see Kloprogge et al., 2007; Slovic et al., 1979; Tversky and Kahneman, 1974). An event may have more availability if it occurred recently, if it was a high-profile event, or if it has some other significance for an individual or group. The overestimation of rare causes of death that have been sensationalized by the media is an example of availability bias. One implication of availability bias that communicators of risk and uncertainty information should be aware of is that the discussion of a risk may increase its perceived riskiness, regardless of what the actual risk may be (Kloprogge et al., 2007). For example, evidence indicates that women overestimate their risk of having breast cancer; women believe that their risk of breast cancer is higher than their risk of cardiovascular disease, despite the fact that cardiovascular disease affects and kills more women than breast cancer (Blanchard et al., 2002).

A second bias that can influence the communication of health risks and their uncertainties is confirmation bias. Confirmation bias refers to the filtering of new information to fit previously formed views; in particular, it is the tendency to accept as reliable new information that supports existing views, but to see as unreliable or erroneous and filter out new information that is contrary to current views (Russo et al., 1996). People may ignore or dismiss uncertainty information if it contradicts their current beliefs (Kloprogge et al., 2007). Evidence indicates that probability judgments are subject to confirmation bias (Smithson et al., 2011). Communicators of risk information, therefore, should be aware that people’s preexisting views about a risk, particularly when those views are very strong, may be difficult to change even with what some would consider to be “convincing” evidence with little uncertainty.

A third bias is confidence bias. People have a tendency to be overconfident about the judgments they make based on the use of heuristics. When people judge how well they know an uncertain quantity, they may set the range of their uncertainty too narrowly (Morgan, 2009). Research by Moore and Cain (2007) supports the notion that people may overestimate or underestimate their judgments based on their level of confidence. Referred to as the overconfidence bias, this tendency seems to have its basis in a psychological insensitivity to questioning of the assumptions upon which judgments are based (Slovic et al., 1979, 1981).

Group Biases The literature on public participation emphasizes the importance of interaction among stakeholders as a way of minimizing the cognitive biases that shape how people react to risk information (see Renn, 1999, 2004). Kerr and Tindale (2004), for example, caution that the more homogeneous a group is with respect to knowledge and preferences, the more strongly the knowledge and preferences will affect a group decision. Uncertainty can be either amplified or downplayed, depending on a group’s biases toward the evidence.

Assessment and explicit acknowledgement of the biases of the people that the agency is communicating with might be critical to successful communication. People may be more willing to listen to new information and other points of view after their own concerns have been acknowledged and validated (Bier, 2001a).

EPA’s scientists and technical staff are themselves not immune to these biases. An awareness of the possible biases within EPA and when they occur would be a first step toward identifying biases and helping decrease the possibility that such biases influence the interpretation and presentation of scientific evidence.

Considerations for Communicating with Journalists

The statement of task asks the committee if there are specific communication techniques that could improve understanding of uncertainty among journalists. This is an important question, as most members of the public get their information about risks from the media. Journalists and the media help to identify conflicts about risk, and they can be channels of information during the resolution of those conflicts (NRC, 1989). Journalists do generally care about news accuracy and objectivity (NRC, 1989; Sandman, 1986) and about balance in representation of opinions, but journalists vary widely in their backgrounds, technical expertise, and ability to accurately report and explain environmental decisions. Even those who cover environmental policy making will not necessarily be familiar with the details of risk assessment and its inherent uncertainties, making it challenging to convey the rationale for decisions based, in part, on those assessments.

Uncertainty is not unique to reporting on environmental health risks, of course. Studies of how the U.S. news media handle uncertainty in science in general have found that journalists tend to make science appear more certain and solid than it is (see Fahnestock, 1986; Singer and Endreny, 1993; Weiss et al., 1988). In a quantitative content analysis, for example, Singer and Endreny (1993) found that the media tended to minimize uncertainties of the risks associated with natural and manmade hazards. The issue of which factors might contribute to this tendency to minimize uncertainties has not yet been studied, but the tendency could be related to journalists’ understanding of uncertain information versus their incentive to develop attention-grabbing stories that omit or downplay uncertainties. It should be expected that journalists, just like most other people, will tend to interpret risk messages based on their existing beliefs. The reporting of risk and uncertainty information in the media will be influenced accordingly.

Because journalists and the media are a major avenue for framing risk information and its inherent uncertainty, efforts are needed to ensure that they are well informed of what is known about risks and risk-management options, including the sources and magnitude of uncertainty and its implications; a particularly useful approach would be to provide journalists with short, concise summaries about those implications. Although such summaries can be a challenge to develop, they can be produced. For example, as discussed in Chapter 2, the summary of the regulatory impact analysis for the CAIR (EPA, 2005) contains a summary discussion of the uncertainty analysis. Those who are most familiar with the risk and uncertainties should provide the perspective that the journalists seek and should recognize the limitations and constraints of the media. Although little research has been carried out on the best means of providing journalists with such a perspective, providing agency personnel with training on how to communicate effectively with media representatives about uncertainties may prove helpful to journalists, as might providing journalists with access to the agency officials who were involved in the decision making. Providing the media with summaries of the uncertainties in the risk assessment and risk management in a variety of formats may also help ensure that the uncertainties are conveyed accurately.

Social Trust

An important concept related to stakeholder values and perceptions is social trust. Trust has long been considered of central importance to risk management and communication (Earle, 2010; Earle et al., 2007; Kasperson et al., 1992; Löfstedt, 2009; Renn and Levine, 1991). Slovic (1993) noted an inverse relationship between the level of trust in decision makers and the public’s concern about or perception of a risk—that is, the lower the trust, the higher the perception of risk. The importance of organizational reputation is not unique to EPA; in Reputation and Power: Organizational Image and Pharmaceutical Regulation at the FDA, Carpenter (2010) emphasized the importance that the U.S. Food and Drug Administration’s reputation plays in its regulatory authority.

Frewer and Salter (2012) point out that beliefs about the underlying causes of trust or distrust and about the best approaches for increasing trust have changed over the past few decades. In contrast to the old idea that increasing knowledge will increase trust, Frewer et al. (1996) found that certain inherent aspects of the source of information—such as having a good track record, being truthful, having a history of being concerned with public welfare, and being seen as knowledgeable—lead to increased trust. Similarly, Peters et al. (1997) found that the source of the information being seen as having “knowledge and expertise, honesty and openness, and concern and care” was an important contributor to trust (p. 10). In a study looking at attitudes toward genetically modified foods, however, Frewer et al. (2003) found that neither the information itself nor the strategy for communicating the risks had much effect on people’s attitudes toward genetically modified foods; in this case, people’s attitudes toward genetically modified foods tended to determine their level of trust in the source of information, rather than the trust in the source determining their attitudes toward the foods. It is important to remember, however, that there are reasons to communicate uncertainties beyond the potential to increase social trust (Stirling, 2010).

Earle (2010) reviewed the distinction between trust, which is about relationships between people, and confidence, which concerns a relationship between people and objects, and the role of both in social trust. As Earle (2010) pointed out, although some people believe that decisions should be made on the basis of data or numbers (Baron, 1998; Bazerman et al., 2002; Sunstein, 2005), any “confidence-based approach presupposes a relation of trust” (p. 570). For decisions concerning hazards of high moral importance, that trust does not necessarily exist (Earle, 2010).

As discussed by Fischhoff (1995) and by Leiss (1996), at earlier stages in the evolution of risk communication sciences it was thought that public education via increased communication would lead to an increased understanding of the concept of risk and, subsequently, to increased trust. Furthermore, some research had indicated that a decrease in public confidence in regulatory agencies and scientific institutions—and their motives—led to decreased trust (Frewer and Salter, 2002; Pew Research Center for the People and the Press, 2010). Given those observations, it was thought that increasing transparency would be one way to increase trust. As discussed by Frewer and Salter (2012), however, there is limited evidence that transparency actually does increase trust, although there is evidence that a lack of transparency can lead to increased distrust (Frewer et al., 1996). As highlighted in the discussion of the committee’s framework for decision making in Chapter 5, all aspects of the decision-making process, including the more technical risk assessment process, require value judgments. Thus engaging the public and policy makers, in addition to scientists, in the process of health risk assessments not only improves the assessment, but can also increase both trust in the process and communications about health risks by allowing the perspectives of all stakeholders to inform the assessment. Frewer and Salter (2002) described the communications by the United Kingdom’s regulatory agencies related to the bovine spongiform encephalopathy (BSE) outbreak in the mid-1990s in the United Kingdom as an example of the consequences of inadequate public participation in the decision process. Communications about the outbreak and the outbreak response did not address many of the concerns of the public and led to public outrage about the response.

Concerning the communication of uncertainties in risks, Frewer and Salter (2012) pointed out that distrust in risk assessments will increase when uncertainties are not included in the discussion of the assessments. Although some researchers noted, for the BSE outbreak in the United Kingdom, an apparent view by government officials “that the public [is] unable to conceptualize uncertainty” (Frewer et al., 2002, p. 363), research on risks related to food safety indicates a preference by the public to be informed of uncertainties in risk information (Frewer et al., 2002) and finds that not discussing uncertainties “increases public distrust in institutional activities designed to manage risk” (Frewer and Salter, 2012, p. 153).

Although there is insufficient information to develop guidelines or best practices for communicating the uncertainty and variability in health risk estimates (Frewer and Salter, 2012), there is evidence that the public can differentiate between different types and sources of uncertainty (see below for further discussion). As discussed by Kloprogge et al. (2007), it is possible to communicate to the public various aspects of uncertainty information, such as how uncertainty was dealt with in the analysis, the implications of uncertainties, and what can or cannot be done about them.

The need for a communication plan is increased when there are—or are expected to be—more uncertainties associated with a decision-making process, because there are likely to be more challenges in communicating with stakeholders. Research demonstrates a heightened interest by the public in evaluating the credibility of information sources when they perceive uncertainty (Brashers, 2001; Halfacre et al., 2000; van den Bos, 2001), and studies also indicate that the public is more likely to challenge the reliability and adequacy of risk estimates and be less accepting of reassurances in the presence of uncertainty (Kroll-Smith and Couch, 1991; Rich et al., 1995). Concerns about procedural fairness and trust appear to be even more salient when there is scientific uncertainty (NRC, 2008), and risk communication can serve to facilitate stakeholder trust (Conchie and Burns, 2008; Heath et al., 1998; Peters et al., 1997).

• Although communication is often thought of in terms of communication to an audience, two-way conversations about risks and uncertainties throughout the decision-making process are key to informed environmental decisions that are acceptable to stakeholders. Not only will such communication inform the public and others about decisions, but it will also help to ensure that the decisions take the concerns of various stakeholders into consideration and help to build social trust and broader acceptance of decisions.

RECOMMENDATION 8.1

U.S. Environmental Protection Agency senior managers should be transparent in communicating the basis of the agency’s decisions, including the extent to which uncertainty may have influenced decisions.

• There is no definitive research that can serve as a basis for uniform recommendations as to the best approaches to communicating uncertainty information with all stakeholders. Each situation will likely require a unique communication strategy, determined on a case-by-case basis, and in each case it may require research to determine the most appropriate approach. Communicating decisions made in the presence of deep uncertainty is particularly challenging.

RECOMMENDATION 8.2

U.S. Environmental Protection Agency decision documents and communications to the public should include a discussion of which uncertainties are and are not reducible in the near term. The implications of each to policy making should be provided in other communication documents when it might be useful for readers.

• The best presentation style will depend on the audience and their needs. When communicating with decision makers, for example, because of the problem of variability in interpretation of verbal presentations, such presentations should be accompanied by a numeric representation. When communicating with individuals with limited numeracy or with a variety of stakeholders, providing numeric presentations of uncertainty may be insufficient. Often a combination of numeric, verbal, and graphic displays of uncertainty information may be the best option. In general, however, the most appropriate communication strategy for uncertainty depends on

the decision context;

the purpose of the communication;

the type of uncertainty; and

the characteristics of the audience, including the level of technical expertise, personal and group biases, and the level of social trust.

RECOMMENDATION 9.1

The U.S. Environmental Protection Agency, alone or in collaboration with other relevant agencies, should fund or conduct research on communication of uncertainties for different types of decisions and to different audiences, develop a compilation of best practices, and systematically evaluate its communications.

• Little research has been conducted on communicating the uncertainty associated with technological or economic factors that play a role in environmental decisions, or other influences on decisions that are less readily quantified, such as social factors (for example, environmental justice) and the political context.

RECOMMENDATION 9.2

As part of an initiative evaluating uncertainties in public sentiment and communication, U.S. Environmental Protection Agency senior managers should assess agency expertise in the social and behavioral sciences (for example, communication, decision analysis, and economics), and ensure it is adequate to implement the recommendations in this report.

Baron, J. 1998. Judgment misguided: Intuition and error in public decision making. New York: Oxford University Press.

Bazelon, D. L. 1974. The perils of wizardry. American Journal of Psychiatry 131(12):1317–1322.

Bazerman, M. H., J. Baron, and K. Shonk. 2002. “You can’t enlarge the pie”: The psychology of ineffective government. Cambridge, MA: Basic Books.

Bier, V. M. 2001a. On the state of the art: Risk communication to decision-makers. Reliability Engineering and System Safety 71:151–157.

———. 2001b. On the state of the art: Risk communication to the public. Reliability Engineering and System Safety 71:139–150.

Blanchard, D., J. Erblich, G. H. Montgomery, and D. H. Bovbjerg. 2002. Read all about it: The over-representation of breast cancer in popular magazines. Preventive Medicine 35(4):343–348.

Boduroglu, A., and P. Shah. 2009. Effects of spatial configurations on visual change detection: An account of bias changes. Memory and Cognition 37(8):1120–1131.

Bostrom, A., and R. E. Löfstedt. 2003. Communicating risk: Wireless and hardwired. Risk Analysis 23(2):241–248.

Bostrom, A., L. Anselin, and J. Farris. 2008. Visualizing seismic risk and uncertainty. Annals of the New York Academy of Sciences 1128(1):29–40.

Brase, G. L., L. Cosmides, and J. Tooby. 1998. Individuation, counting, and statistical inference: The role of frequency and whole-object representations in judgment under uncertainty. Journal of Experimental Psychology: General 127(1):3–21.

Brashers, D. E. 2001. Communication and uncertainty management. Journal of Communication 51(3):477–497.

Budescu, D. V., S. Weinberg, and T. S. Wallsten. 1988. Decisions based on numerically and verbally expressed uncertainties. Journal of Experimental Psychology: Human Perception and Performance 14(2):281–294.

Budescu, D. V., S. Broomell, and H. H. Por. 2009. Improving communication of uncertainty in the reports of the Intergovernmental Panel on Climate Change. Psychological Science 20(3):299–308.

Carpenter, D. P. 2010. Reputation and power: Organizational image and pharmaceutical regulation at the FDA. Princeton, NJ: Princeton University Press.

Conchie, S. M., and C. Burns. 2008. Trust and risk communication in high-risk organizations: A test of principles from social risk research. Risk Analysis 28(1):141–149.

Covello, V. T., and F. W. Allen. 1988. Seven cardinal rules of risk communication. Washington, DC: EPA.

Cuite, C. L., N. D. Weinstein, K. Emmons, and G. Colditz. 2008. A test of numeric formats for communicating risk probabilities. Medical Decision Making 28(3):377–384.

Damasio, A. R. 1994. Descartes’ error: Emotion, reason, and the human brain. New York: Putnam.

Daradkeh, M., A. E. McKinnon, and C. Churcher. 2010. Visualisation tools for exploring the uncertainty–risk relationship in the decision-making process: A preliminary empirical evaluation. User Interfaces 106:42–51.

Earle, T. C. 2010. Trust in risk management: A model-based review of empirical research. Risk Analysis 30(4):541–574.

Earle, T. C., M. Siegrist, and H. Gutscher. 2007. Trust, risk perception and the TCC model of cooperation. In Trust in cooperative risk management: Uncertainty and scepticism in the public mind, edited by M. Siegrist, T. C. Earle, and H. Gutscher. London: Earthscan. Pp. 1–49.

EPA (U.S. Environmental Protection Agency). 2004. An examination of EPA risk assessment principles and practices. Washington, DC: Office of the Science Advisor, Environmental Protection Agency.

———. 2005. Regulatory impact analysis for the final Clean Air Interstate Rule. Washington, DC: EPA, Office of Air and Radiation.

———. 2007. Risk communication in action: The risk communication workbook. Washington, DC: EPA.

———. 2008. Clean Air Interstate Rule. http://www.epa.gov/cair (accessed September 10, 2008).

Erev, I., and B. L. Cohen. 1990. Verbal versus numerical probabilities: Efficiency, biases, and the preference paradox. Organizational Behavior and Human Decision Processes 45(1):1–18.

Fagerlin, A., P. A. Ubel, D. M. Smith, and B. J. Zikmund-Fisher. 2007. Making numbers matter: Present and future research in risk communication. American Journal of Health Behavior 31(Suppl 1):S47–S56.

Fahnestock, J. 1986. Accommodating science: The rhetorical life of scientific facts. Written Communication 3(3):275–296.

Fischhoff, B. 1995. Risk perception and communication unplugged: Twenty years of process. Risk Analysis 15(2):137–145.

Fischhoff, B., N. T. Brewer, and J. S. Downs, eds. 2011. Communicating risks and benefits: An evidence-based user’s guide. Washington, DC: Food and Drug Administration, U.S. Department of Health and Human Services.

Frewer, L., and B. Salter. 2002. Public attitudes, scientific advice and the politics of regulatory policy: The case of BSE. Science and Public Policy 29(2):137–145.

———. 2012. Societal trust in risk analysis: Implications for the interface of risk assessment and risk management. In Trust in risk management: Uncertainty and skepticism in the public mind, edited by M. Siegrist, T. C. Earle, and H. Gutscher. London: Earthscan. Pp. 143–158.