CHAPTER FOUR

Tsunami Detection and Forecasting

SUMMARY

An incoming tsunami may be anticipated in many ways, from direct human recognition of cues such as earthquake shaking or an initial recession of the sea, to technological warnings based on environmental sensors and data processing. This chapter reviews and evaluates the technological detection and forecasting capabilities of the U.S. tsunami warning centers (TWCs), paying specific attention to the infrastructure of the earth and ocean observation networks and to the data processing and tsunami modeling that occur at the TWCs. The next chapter discusses the centers’ operations, their human resources, and the infrastructure for their warning functions.

The TWCs’ initial decisions to issue a tsunami advisory, watch, or warning after an earthquake are based on analyses of data from a global seismic detection network, in conjunction with the historical record of tsunami production, if any, at the different seismic zones (see Weinstein, 2008; Whitmore et al., 2008 for greater detail on the steps taken). Although adequate for most medium-sized earthquakes, the initial seismological assessment can underestimate the magnitude of very large earthquakes or tsunami earthquakes1 and lead to errors in assessing the tsunami potential (Appendix G). Far from the tsunami source, data from sea level networks provide the only rapid means to verify the existence of a tsunami and to calibrate numerical models that forecast the subsequent evolution of the tsunami. Near the source, a tsunami can come ashore before its existence is detected by the sparse sea level observation network.

Two separate U.S. TWCs monitor seismic activity and sea levels in order to detect tsunamis and warn of their presence. Based on their own data analysis, the TWCs independently decide whether to issue alerts to the emergency managers in their respective and complementary areas of responsibility (AORs). The TWCs must not only provide timely warnings of destructive tsunamis, but also must avoid needless evacuations that can cost money and even lives. An ideal warning would provide emergency managers with the necessary information to call for an evacuation in a timely fashion at any particular location in the projected tsunami path. The ideal product would also be clearly worded so that the general public easily understands the threat and who is affected by the threat. The necessary information includes predictions of the time of arrival of the ocean waves, the duration of the occurrence of damaging waves, when the largest wave is expected to arrive, the extent of the inundation and run-up, and the appropriate time to cancel the warning. Whether a call for evacuation is practicable, and how soon the “all clear” can be sounded, will depend on many factors, but especially on how soon the tsunami is expected to arrive and how long the damaging waves will continue to come ashore. Therefore, the warning system needs to be prepared to respond to a range of scenarios. These range from a near-field tsunami that arrives minutes after an earthquake to a far-field tsunami that arrives many hours after a triggering, distant earthquake yet lasts for many more hours due to the waves’ scattering and reverberation along their long path to the shore. In the case of the near-field tsunami, major challenges remain in providing warnings on such short timescales.

The committee concludes that the global networks that monitor seismic activity and sea level variations remain essential to the tsunami warning process. The current global seismic network is adequate and sufficiently reliable for the purposes of detecting likely tsunami-producing earthquakes. However, because the majority of the seismic stations are not operated by the TWCs, the availability of this critical data stream is vulnerable to changes outside of the National Oceanic and Atmospheric Administration’s (NOAA’s) control. The complex seismic processing algorithms used by the TWCs, given the available seismic data, quickly yield adequate estimates of earthquake location, depth, and magnitude for the purpose of tsunami warning, but the methodologies are inexact. Recommendations to address these two concerns fall under the following categories: (1) prioritization and advocacy for seismic stations; (2) investigation and testing of additional seismic processing algorithms; and (3) adoption of new technologies.

The tsunami detection and forecasting process requires near-real-time2 observations of tsunamis from both coastal sea level gauges and open-ocean sensors (such as provided by the Deep-ocean Assessment and Reporting of Tsunamis (DART) network). The committee finds that the upgrades enabled by the enactment of the Tsunami Warning and Education Act (P.L. 109-424) to both coastal sea level gauges and the DART network have significantly improved the capacity of the TWCs to issue timely and accurate tsunami advisories, watches, and warnings. Furthermore, these sensors provide researchers with the essential data to test and improve tsunami generation, propagation, and inundation models after the fact.

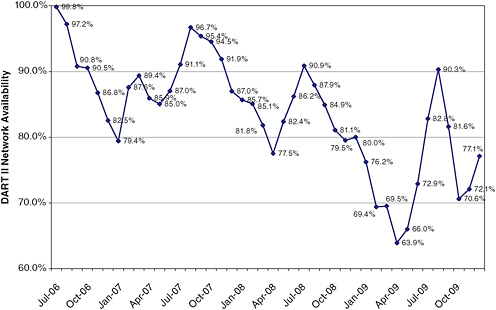

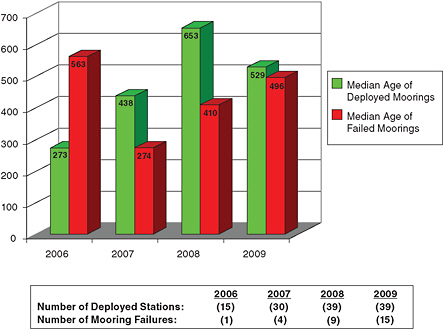

The new and upgraded DART and coastal sea level stations have closed significant gaps in the sea level observation network that had left many U.S. coastal communities subject to uncertain tsunami warnings. Although both sea level gauge networks have already proven their value for tsunami detection, forecasting, and model development, fundamental issues remain concerning gaps in coverage, the value of individual components of the network, and the risk to the warning capability due to coverage gaps, individual component failures, or failures of groups of components. Of special concern is the relatively poor survivability of the DART stations, which currently average a little over one year before failure, compared to a four-year design lifetime. Additional open questions include the dependence of U.S. tsunami warning activities on sea level data supplied by foreign agencies and on sea level data derived from U.S. and foreign gauges that do not meet NOAA’s standards for establishment, operation, and maintenance.

Looking to the future, the committee concludes that the numbers, locations, and prioritizations of the DART stations and coastal sea level gauges should not be considered static, in light of constantly changing fiscal realities, survivability experience, maintenance cost experience, model improvements, new technology developments, and increasing or decreasing international contributions. The committee finds of great value NOAA’s continual encouragement and facilitation of researchers, other federal and state agencies, and nongovernmental organizations (NGOs) who utilize their sea level observations for novel purposes. The committee believes that stations with a broad user base have enhanced sustainability.

The committee is optimistic that continued enhancements to the sea level monitoring component of the U.S. Tsunami Program can measurably mitigate the tsunami hazard and protect human lives and property for far-field events. The committee’s recommendations for the DART and coastal sea level gauge networks fall under the following categories: (1) assessment of network coverage; (2) station prioritization; (3) data stream risk assessment and data availability; (4) cost mitigation and cost prioritization; and (5) sea level network oversight.

Similar to open-ocean tsunami detection, tsunami forecast modeling has only recently become operational at the TWCs, as described below. The committee anticipates that further development and implementation of numerical forecast modeling methodologies at the TWCs will continue to help improve the tsunami warning enterprise.

As described below, the rapid detection of a tsunami striking within minutes to an hour, either for the purpose of providing an initial warning or for confirming any natural warnings that near-field communities have already received, will likely require consideration of alternative detection technologies, such as sensors deployed along undersea cabled observatories and coastal radars that can detect a tsunami’s surface currents tens of kilometers from the shore. Finally, examples of other new technologies and methodologies that have the potential to improve both estimation of earthquake parameters and tsunami detection are discussed at the end of this chapter.

DETECTION OF EARTHQUAKES

All initial tsunami warnings are based on rapid detection and characterization of seismic activity. Because of the fundamental differences in nature between the solid earth in which an earthquake takes place and the fluid ocean where tsunami gravity waves propagate, the vast majority of earthquakes occurring on a daily basis do not trigger appreciable or even measurable tsunamis. Nevertheless, some smaller earthquakes could trigger submarine landslides that can result in local tsunamis. It takes a large event (magnitude >7.0) to generate a damaging tsunami in the near-field and a great earthquake (magnitude >8.0) to generate a tsunami in the far-field. However, the generation of a tsunami is affected not only by the magnitude of an earthquake, but also by material conditions at the source, such as source focal geometry, earthquake source depth, and water depth above the fault-rupture area.

Although estimating the size of a tsunami based on the magnitude of an earthquake has severe limitations (see Appendix G), the initial warning from a seismically generated tsunami is still based on the interpretation of the parent earthquake for several reasons:

- most tsunamis are excited (or initiated) by earthquakes;
- earthquake waves are easy to detect, and seismic instrumentation is available, plentiful, and accessible in near-real time (latencies of seconds to a few minutes);
- most importantly, seismic waves travel faster than tsunamis by a factor of 10 to 50, thereby allowing an earthquake to provide an immediate natural warning for people who feel it while leaving time for instrumental seismology to trigger official warnings for coasts near and far from the tsunami source; and
- earthquakes have been studied, and their sources are reasonably well understood.
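The speed contrast cited in the third reason above can be checked with a rough calculation. The sketch below assumes the standard long-wave (shallow-water) relation for tsunami speed, the square root of gravity times water depth, which is not stated in this chapter, and uses the seismic wave speeds quoted later in the chapter (about 3 to 10 km/sec). The 4,000 m ocean depth and the 1,000 km distance are hypothetical round numbers chosen only for illustration.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def tsunami_speed_km_s(depth_m):
    """Long-wave phase speed sqrt(g * h), converted to km/s (assumed relation)."""
    return math.sqrt(G * depth_m) / 1000.0


def lead_time_min(distance_km, depth_m, seismic_speed_km_s):
    """Minutes between seismic-wave arrival and tsunami arrival at one distance."""
    t_tsunami = distance_km / tsunami_speed_km_s(depth_m)
    t_seismic = distance_km / seismic_speed_km_s
    return (t_tsunami - t_seismic) / 60.0


depth = 4000.0  # hypothetical open-ocean depth, m
c = tsunami_speed_km_s(depth)
for v in (3.0, 10.0):  # surface-wave and body-wave speeds quoted in this chapter
    print(f"seismic {v:4.1f} km/s: speed ratio {v / c:4.1f}, "
          f"lead time over 1000 km {lead_time_min(1000.0, depth, v):5.1f} min")
```

In shallower water the tsunami slows further, so the ratio grows; the sketch ignores variable bathymetry and the curved paths of seismic body waves.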

Although most tsunamis result from earthquakes, some are triggered by landslides or volcanic eruptions. Technological warning of a tsunami that has been generated without a detectable earthquake will likely require detection of the tsunami waves themselves by water-level gauges.

Seismic Networks Used by the Tsunami Warning Centers

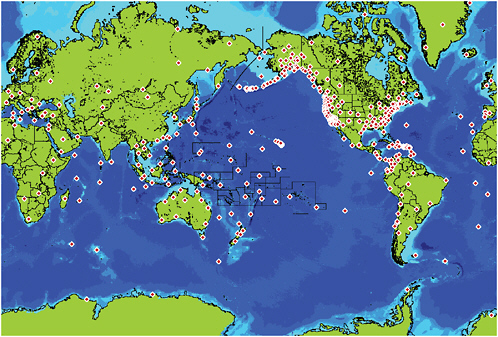

Both TWCs access the same extensive seismic networks that provide near-real-time information on earthquakes from around the world. Currently, about 350 independent channels of seismic data are monitored and recorded by the TWCs (National Oceanic and Atmospheric Administration, 2008a; Figure 4.1). Seismic networks that provide these data are operated and funded by many different agencies and organizations, including the U.S. Geological Survey (USGS), the National Science Foundation (NSF), the National Tsunami Hazard Mitigation Program (NTHMP), the UN Comprehensive Nuclear Test-Ban Treaty Organization (CTBTO), various universities in the United States, non-U.S. networks, and stations run by the Pacific Tsunami Warning Center (PTWC) and the West Coast/Alaska Tsunami Warning Center (WC/ATWC) themselves. Many of the networks used by the TWCs are part of the USGS/NSF Global Seismographic Network (GSN), which currently comprises more than 150 globally distributed, digital seismic stations and provides near-real-time, open access data through the Data Management System (DMS) of the Incorporated Research Institutions for Seismology (IRIS). The IRIS DMS also serves as the primary archive for global seismic data. GSN is a partnership between the NSF/IRIS and the USGS. The TWCs access seismic network data through dedicated circuits, private satellites, and the Internet.

FIGURE 4.1 Data from approximately 350 seismic stations are accessed by the TWCs. SOURCE: West Coast/Alaska Tsunami Warning Center, NOAA.

The GSN is widely recognized as a high-quality network, having achieved global coverage adequate for most purposes, with near-real-time data access as well as data quality control and archiving (National Science Foundation, 2003; Park et al., 2005). GSN stations have proven to be reliable, with current (2009-2010) data return rates of 89 percent. The GSN is sufficiently robust to support warnings for events far from the recording devices and provides good global coverage (U.S. Indian Ocean Tsunami Warning System Program, 2007). The USGS was provided funding through the Emergency Supplemental Appropriations Act for Defense, the Global War on Terror, and Tsunami Relief, 2005 (P.L. 109-13) to expand and upgrade the GSN for tsunami warning. For redundancy, the TWCs also receive seismic data from many other vendors on multiple communication paths. Given the wide array of uses of the existing seismic networks, GSN can generally be viewed as a data network that is likely to be continued, well-maintained, and improved over the long-term. A future broad upgrade of seismometers in the GSN may be important for tsunami warning.

Nevertheless, the TWCs’ heavy reliance on data networks from partnering agencies exposes them to some degree of vulnerability to potential losses of data availability in the future. For example, much of the seismic data crucial to the operation of the TWCs comes from GSN stations whose deployment and maintenance have been and are currently funded primarily from NSF cooperative agreements with IRIS, renewable every five years. The Scripps Institution of Oceanography’s (SIO’s) International Deployment of Accelerometers (IDA) project with NSF/IRIS funding operates 41 of the total 150 GSN stations through this mechanism. There can be no assurance that this funding will be sustained at current levels in the future. GSN stations have been operating since the mid-1980s (see Appendix G); much of their hardware is out of date and increasingly difficult to maintain. Operations and maintenance budgets regularly decrease, and, except after events like the 2004 tsunami, modernization funds, including funds for the necessary hardware, are generally not available to boost data return rates. By comparison, the more modern NSF EarthScope Transportable Array (with more than 400 telemetered broadband stations) boasts data return rates in excess of 99 percent. Unfortunately, the TWCs could be among the most vulnerable of the IRIS clients in a constrained budget environment, because the TWCs are among the users needing some of the most remote seismic stations, which are difficult, hence expensive, to maintain.
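To put the two data return rates quoted above in perspective, the following sketch converts a return rate into expected days of missing data per year and, under the simplifying assumption that station outages are independent, into the chance that at least k of n stations are reporting at a given moment. The independence assumption, the function names, and the choice of 4 out of 5 stations are illustrative and do not come from the report.

```python
from math import comb


def missing_days_per_year(return_rate, days_in_year=365.0):
    """Expected days per year without data for a given data return rate."""
    return (1.0 - return_rate) * days_in_year


def prob_at_least(k, n, p):
    """Probability that at least k of n stations report, each independently
    with probability p (binomial model; independence is an assumption)."""
    return sum(comb(n, i) * p**i * (1.0 - p) ** (n - i) for i in range(k, n + 1))


for label, rate in (("GSN, 2009-2010", 0.89), ("Transportable Array", 0.99)):
    print(f"{label}: {missing_days_per_year(rate):.1f} days/yr missing, "
          f"P(at least 4 of 5 reporting) = {prob_at_least(4, 5, rate):.3f}")
```

Real outages are often correlated (shared telemetry, regional power loss), so the binomial figure is best read as an optimistic bound.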

To meet the requirements for detection of near-field tsunami events, the TWCs have supplemented existing seismic networks with their own local stations. The WC/ATWC maintains a network of 15 sites throughout Alaska, and most stations were upgraded to satellite communications and broadband seismometers after 2005 (National Oceanic and Atmospheric Administration, 2008a). The PTWC, in collaboration with other partners, is also working to enhance an existing seismic network in Hawaii to improve tsunami and other hazard detection capabilities through a Hawaii Integrated Seismic Network (Shiro et al., 2006).

NOAA’s Tsunami Program Strategic Plan (2009-2017; National Oceanic and Atmospheric Administration, 2008b) recommends that the TWCs “monitor critical observing networks, establish performance standards, and develop a reporting protocol with data providers” (e.g., the USGS and the NTHMP) and effect “complete upgrades of Alaska and Hawaii seismic … networks.” The committee agrees with these recommendations; however, to be strategic with limited resources, it is essential to determine and prioritize seismic stations that are critical to tsunami warning (e.g., oceanic stations in known tsunamigenic source regions or within 30°-50° from potential tsunami source areas to allow the more rapid determination of the tsunami potential).

Algorithms for Estimating an Earthquake’s Tsunami Potential

Once data from the seismic networks have been received, the data are analyzed by the TWCs to determine three key parameters for evaluating tsunamigenic potential: location, depth, and magnitude of an earthquake. Algorithms for determining the geographical location and depth of an earthquake source from seismic arrival times are based upon the concept of triangulation (U.S. Indian Ocean Tsunami Warning System Program, 2007). With the network of stations available to the TWCs, automatic horizontal locations are routinely obtained within a few minutes of origin time with accuracy on the order of 30 km. This is more than satisfactory to determine tsunami source locations, given that earthquakes large enough to generate tsunamis have much larger source areas. The three seismic parameters are used for issuing the initial bulletin. The focal mechanism characteristics are later obtained through moment tensor inversion of broadband seismic data if the data quality is adequate (see below). In the present configuration of worldwide networks, the large number of available stations provides robust location determination, although losing a significant number of seismic stations could affect the accuracy of earthquake location and depth.
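The triangulation idea mentioned above can be illustrated with a toy grid search. This is a minimal sketch under strong simplifying assumptions that are not in the report: a flat two-dimensional geometry, a single constant wave speed, no depth, and noise-free arrival times. The station coordinates, the 8 km/sec speed, and the function name `locate` are hypothetical; operational location algorithms use layered earth models and iterative inversion.

```python
import math


def locate(stations, arrivals, speed_km_s, span_km=500.0, step_km=10.0):
    """Grid-search an epicenter (x, y) that best explains the arrival times.

    stations: list of (x_km, y_km); arrivals: arrival times in seconds.
    For each trial point the unknown origin time is taken as the mean
    residual, and the sum of squared remaining residuals is minimized.
    """
    best = None
    n = int(span_km / step_km)
    for i in range(-n, n + 1):
        for j in range(-n, n + 1):
            x, y = i * step_km, j * step_km
            travel = [math.hypot(x - sx, y - sy) / speed_km_s for sx, sy in stations]
            resid = [a - t for a, t in zip(arrivals, travel)]
            t0 = sum(resid) / len(resid)  # best-fit origin time at this point
            misfit = sum((r - t0) ** 2 for r in resid)
            if best is None or misfit < best[0]:
                best = (misfit, x, y, t0)
    return best[1], best[2], best[3]


# Synthetic example with hypothetical stations and a known source.
stations = [(-400.0, -300.0), (350.0, -250.0), (300.0, 400.0),
            (-350.0, 300.0), (0.0, -450.0)]
true_x, true_y, true_t0, speed = 120.0, -80.0, 5.0, 8.0
arrivals = [true_t0 + math.hypot(true_x - sx, true_y - sy) / speed
            for sx, sy in stations]

est_x, est_y, est_t0 = locate(stations, arrivals, speed)
print(f"estimated epicenter: ({est_x:.0f}, {est_y:.0f}) km, origin time {est_t0:.1f} s")
```

With fewer stations or poor azimuthal coverage the misfit surface flattens, which is one way to see why losing many stations would degrade location and depth accuracy.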

A great earthquake on a subduction thrust tends to nucleate beneath shallow water, or even beneath land in the case of the giant 1960 Chile and 1964 Alaska earthquakes. The source of such an earthquake, and of the ensuing tsunami, extends far beyond the earthquake’s point of nucleation (the hypocenter, on the fault plane; the epicenter, if projected to the earth’s surface). What matters for earthquake size, and for tsunami size as well, is the fault-rupture area, which extends seaward into deep water as well as coastwise. The hypocenter is much like the match that initiates a forest fire in which the damage depends on the total area burned. The tendency to instead equate an earthquake with its hypocenter contributed to confusion during the near-field tsunami from the February 27, 2010, Chilean earthquake of magnitude 8.8. Partly because this earthquake’s hypocenter was located near the coast, the Chilean government retracted a tsunami warning before the largest waves came ashore.

Depth determination is crucial to assessing an earthquake’s tsunamigenic potential because sources deeper than about 60 km generally pose no tsunami threat and are well resolved by location algorithms. Finer resolution of depth for shallower earthquakes remains a general seismological challenge, particularly in near-real time. This parameter can have some influence on the generation of tsunamis in the near-field; however, for far-field tsunamis generated by megathrust earthquakes, theoretical studies (Ward, 1980; Okal, 1988) have shown that the dependence of tsunami excitation on source depth is only moderate for depths less than 60 km. This somewhat paradoxical result reflects the fact that a shallower source may create a locally larger deformation of the ocean floor, but over a smaller area. The two effects largely compensate each other in the generation of the tsunami, which is controlled by the integral of the deformation over the whole ocean floor. Given the techniques and data available, the committee found that the location techniques used at the TWCs (Weinstein, 2008; Whitmore et al., 2008) were adequate in the context of tsunami warning.
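The rough thresholds quoted in this chapter (source depth of about 60 km, magnitude above 7.0 for near-field damage, magnitude above 8.0 for far-field tsunamis) can be combined into a first-pass screening rule. The sketch below is purely illustrative: it is not the TWCs' bulletin criteria, the function name and return strings are hypothetical, and it cannot capture tsunami earthquakes or landslide-generated tsunamis, which the chapter notes violate simple magnitude-based rules.

```python
def screen_tsunami_potential(magnitude, depth_km):
    """First-pass screening from magnitude and depth (illustrative thresholds only)."""
    if depth_km > 60.0:
        return "deep source: tsunami threat generally unlikely"
    if magnitude > 8.0:
        return "great shallow earthquake: near-field and far-field tsunami possible"
    if magnitude > 7.0:
        return "large shallow earthquake: damaging near-field tsunami possible"
    return "damaging tsunami unlikely from the earthquake alone"


# Hypothetical test events (magnitude, depth in km).
for mag, depth in ((6.5, 20.0), (7.4, 30.0), (8.8, 35.0), (8.2, 120.0)):
    print(f"M{mag}, {depth:.0f} km: {screen_tsunami_potential(mag, depth)}")
```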

Determining an earthquake’s magnitude is a more problematic aspect of the initial earthquake parameterization. The concept of magnitude is probably the most popular, yet most confusing, parameter in seismology. In simple terms, it seeks to describe the size of an earthquake with a single number. Reliable and well-accepted determinations of earthquake size (the “moment tensor solution,” whose scalar moment is proportional to the product of fault area and the amount of slip) are possible, but these estimates are necessarily based on long-period surface waves arriving too late to be useful for tsunami warning, which strives for initial estimates within five minutes of the first measurements having been received. Most seismologists agree that it is not currently possible to predict how much of a fault will ultimately break based on the seismic waves propagating away from the point of nucleation (the hypocenter), and that only when the slip ends can the true size or moment be inferred. For an event such as the 2004 Sumatra earthquake, the propagation of breakage along the fault surface alone takes nearly eight minutes (e.g., de Groot-Hedlin, 2005; Ishii et al., 2005; Lay et al., 2005; Tolstoy and Bohnenstiehl, 2005; Shearer and Bürgmann, 2010). Magnitudes determined at shorter times will necessarily underestimate the true size of the earthquake.
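The relation between fault area, slip, and earthquake size described above can be made concrete with a short calculation. The sketch assumes the standard definitions, which are not spelled out in this chapter: scalar seismic moment equals rigidity times fault area times average slip, and moment magnitude is (2/3)(log10 M0 - 9.1) with M0 in newton-meters. The rigidity of 30 GPa and all fault dimensions, slip, and rupture speed below are hypothetical round numbers, chosen so that the rupture duration comes out near the eight minutes quoted above; they are not measured values.

```python
import math


def seismic_moment(area_m2, slip_m, rigidity_pa=3.0e10):
    """Scalar seismic moment in N*m: rigidity * fault area * average slip."""
    return rigidity_pa * area_m2 * slip_m


def moment_magnitude(m0_newton_m):
    """Moment magnitude from scalar moment in N*m (standard definition)."""
    return (2.0 / 3.0) * (math.log10(m0_newton_m) - 9.1)


def rupture_duration_min(length_km, rupture_speed_km_s):
    """Time for a rupture front to traverse the fault length, in minutes."""
    return length_km / rupture_speed_km_s / 60.0


# Hypothetical mega-earthquake: 1,200 km x 150 km fault, 10 m average slip.
length_km, width_km, slip_m = 1200.0, 150.0, 10.0
m0 = seismic_moment(length_km * 1e3 * width_km * 1e3, slip_m)
print(f"moment {m0:.2e} N*m, Mw {moment_magnitude(m0):.1f}")
print(f"rupture duration {rupture_duration_min(length_km, 2.5):.1f} min")
```

Because the rupture itself lasts minutes, any estimate made from the first minute or two of data sees only part of the moment release.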

In this regard, the major challenge for tsunami warning is that tsunamis are controlled by the lowest frequency part of a seismic source, with periods of 500 to 2,000 seconds, whereas routinely recorded seismic waves have energy in the treble domain, with periods ranging from 0.1 to 200 seconds, and exceptionally up to 500 seconds. In addition, seismic waves fall into several categories. Body waves travel through the interior of the earth at average velocities of 10 km/sec and take seconds to minutes to reach recording stations; their high-frequency components are a good source of information. By contrast, surface waves travel around the surface at considerably slower speeds (3-4 km/sec) and take as much as 90 minutes to reach the most distant stations. The surface waves carry low-frequency signals, that is, the part of the spectrum most relevant to tsunami warning, although high-frequency body wave methods can also resolve event duration and rupture length (e.g., Ishii et al., 2005; Ni et al., 2005). These high-frequency body wave methods have not yet been exploited by the USGS’s National Earthquake Information Center (NEIC) or the TWCs. In short, the evaluation of earthquake size for tsunami warning faces a double challenge: extrapolating the trebles in the earthquake source to infer the bass, and doing this as quickly as possible to give the warning enough lead time to be useful.
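The timing problem with surface waves can be checked with simple arithmetic. The sketch below assumes a spherical earth of radius 6,371 km and surface waves travelling along the great-circle arc at the 3 to 4 km/sec quoted above; the function name and the choice of distances are illustrative. It shows that even at moderate distances surface waves arrive well after the five-minute target for an initial estimate, and that the antipodal travel time is of the order of the 90 minutes cited.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius, spherical-earth assumption


def surface_wave_travel_min(distance_deg, speed_km_s):
    """Travel time in minutes along a great-circle arc at constant speed."""
    path_km = math.radians(distance_deg) * EARTH_RADIUS_KM
    return path_km / speed_km_s / 60.0


for dist in (30.0, 50.0, 180.0):
    slow = surface_wave_travel_min(dist, 3.0)
    fast = surface_wave_travel_min(dist, 4.0)
    print(f"{dist:5.0f} deg: {fast:5.1f} to {slow:5.1f} min")
```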

Magnitudes can be obtained from various parts of the seismic spectrum, and, as might be expected, the different scales have been “locked” to each other so that an earthquake can be quantified with a single number. This is achieved through the use of “scaling laws,” which assert that the spectrum of a seismic source (the partitioning of its energy between bass and treble) is understood theoretically and can be estimated as a function of earthquake size. However, this universal character of scaling laws is far from proven, especially in its application to mega-earthquakes, which trigger the far-field tsunamis of major concern. In addition, scientists have identified a special class of generally smaller events, dubbed “tsunami earthquakes” by Kanamori (1972), whose source spectra systematically violate scaling laws (see Appendix G). Therefore, characterizing an earthquake source with a single number representing magnitude cannot describe all its properties, especially in the context of tsunami warning.

A detailed technical review of these topics is given in Appendix G, and the special case of tsunami earthquakes is reviewed in Appendix H. The conclusions of Appendix G are summarized as follows:

- Classical magnitudes routinely determined by conventional seismological methods are inadequate for tsunami warning of great and mega-earthquakes.
- The authoritative measurement of earthquake size, the moment tensor solution, is based on normal modes and long-period surface waves arriving too late to be used for tsunami warning.
- The TWCs currently use an algorithm named Mwp, which integrates the long-period components of the first arriving P-waves to infer the low-frequency behavior of the seismic source.
- PTWC has recently implemented the use of the “W-phase” algorithm as well as the Mwp algorithm.

Although the use of Mwp is satisfactory for the majority of the (small, non-tsunamigenic, and medium) events processed, Mwp has very serious shortcomings in its application to great earthquakes (magnitude greater than 8.0), to mega-earthquakes (magnitude greater than 8.5; Appendix G), and to the anomalous tsunami earthquakes (Whitmore et al., 2002; Appendix H).
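The idea of integrating the first-arriving P-wave to infer moment, and the reason a short time window underestimates great earthquakes, can be sketched on synthetic data. This is not the TWCs' operational Mwp code. It assumes the textbook far-field point-source relation in which P-wave displacement equals the moment rate divided by 4πρα³r, omits radiation-pattern and free-surface corrections, and uses assumed values for density (3,400 kg/m³) and P-wave speed (7,900 m/sec). The triangular source pulse, its 20-second duration, the 1e20 N·m moment, and the 3,000 km distance are all hypothetical.

```python
import math


def moment_from_p_wave(displacement_m, dt_s, distance_m, rho=3400.0, alpha=7900.0):
    """Estimate seismic moment (N*m) from far-field P-wave displacement samples.

    Integrates displacement over time with the trapezoid rule, takes the peak
    absolute value of the running integral, and scales by 4*pi*rho*alpha^3*r.
    Radiation-pattern and free-surface corrections are deliberately omitted.
    """
    running, peak = 0.0, 0.0
    for a, b in zip(displacement_m, displacement_m[1:]):
        running += 0.5 * (a + b) * dt_s
        peak = max(peak, abs(running))
    return 4.0 * math.pi * rho * alpha**3 * distance_m * peak


def moment_magnitude(m0_newton_m):
    """Moment magnitude from scalar moment in N*m (standard definition)."""
    return (2.0 / 3.0) * (math.log10(m0_newton_m) - 9.1)


# Synthetic trace: triangular moment-rate pulse with known total moment.
TRUE_M0, DURATION_S, DT_S, R_M = 1.0e20, 20.0, 0.1, 3.0e6  # hypothetical values
scale = 4.0 * math.pi * 3400.0 * 7900.0**3 * R_M
n = round(DURATION_S / DT_S)


def moment_rate(t):
    """Triangle rising to its peak at mid-duration; integrates to TRUE_M0."""
    return (2.0 * TRUE_M0 / DURATION_S) * (1.0 - abs(2.0 * t / DURATION_S - 1.0))


trace = [moment_rate(i * DT_S) / scale for i in range(n + 1)]

m0_full = moment_from_p_wave(trace, DT_S, R_M)                 # whole pulse
m0_half = moment_from_p_wave(trace[: n // 2 + 1], DT_S, R_M)   # first half only
print(f"full window: Mw {moment_magnitude(m0_full):.2f}")
print(f"half window: Mw {moment_magnitude(m0_half):.2f}")
```

Truncating the window before the source has finished radiating recovers only part of the moment, which is the same mechanism by which rapid estimates fall short for ruptures lasting several minutes.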

Thus, the committee is concerned that the TWCs have relied on a single technique applied without sufficient attention to its limitations discussed above. Other approaches are presently being studied including the “W-phase” algorithm, which could eventually be implemented after both the theoretical and operational bases of the approach are established and the limitations of current technologies are understood (Appendix G). Improvements are urgently needed for the determination of the tsunami potential of mega- and tsunami earthquakes.

Potential Use of Earthquake Alerts from the NEIC

While NOAA and the NTHMP lead the efforts relevant to tsunamis, the USGS and the National Earthquake Hazards Reduction Program (NEHRP) lead the efforts in research and reducing impacts from earthquakes. The USGS’s Earthquake Hazards Program provides and applies earthquake science information to mitigate potential losses from earthquakes. This separation of missions runs the risk that tsunami efforts within NOAA neglect the earthquake hazard and that earthquake efforts within the USGS neglect the tsunami hazard.

One service the USGS provides through its NEIC is to rapidly determine the location and size of earthquakes around the world. The NEIC in Golden, Colorado, derives initial solutions, not made public, within seconds after arrival of the seismic data. The NEIC monitors the GSN and other stations and produces accurate seismic analyses within minutes of an event, which it disseminates to a broad range of customers (national and international agencies, academia, and the public). In a development that may influence the methods and roles of the TWCs, U.S. seismology is on the verge of being able to warn of earthquakes while they are still under way. The drive toward such earthquake early warning includes the NEIC. USGS sources say that the NEIC, which began operating 24/7 in January 2006, plans to support this warning function by developing a back-up center at a site other than Golden. At present, the two TWCs do not use the epicentral, hypocentral, or magnitude estimates provided by the NEIC. Instead, each TWC uses its own mix of seismic processing algorithms and, as described above, develops its own seismic solutions. The TWCs may correct their initial estimates, which are often made public faster than the NEIC’s solutions, to be more consistent with the NEIC’s solutions, and at times they confer with NEIC staff during an event to ensure consistency. With the availability of the new tsunami forecasting methods and sea level observations (as described below), the TWCs rely more on sea level data and numerical models than on details of earthquake parameters after the issuance of the initial warning product. Therefore, the committee discussed whether it remains necessary for the TWCs to run their own independent seismic analysis. For the forecast models, the TWCs require little more than location, rough magnitude, and time of the event, which could come directly from the NEIC.

The TWCs’ in-house analysis offers the benefit of obtaining solutions much faster than the NEIC’s publicly available solution, which might take tens of minutes longer. In addition, the TWCs’ assessment of the tsunami potential of any given earthquake depends on knowing the depth of the earthquake and the earthquake’s geometry, neither of which is as high a priority for the NEIC.

Regardless, there are many benefits to leveraging research and development at the TWCs and the NEIC and to finding broader synergies between the tsunami and earthquake hazard reduction programs.

Conclusion: The current global seismic network is adequate and sufficiently reliable for the purposes of detecting likely tsunami-producing earthquakes. Because the majority of the seismic stations are not operated by the TWCs, availability of this critical data stream is vulnerable to changes outside of NOAA’s control. Furthermore, as discussed in Appendix G, many of the STS-1 seismographs in the GSN are now more than two decades old, and because the STS-1 is no longer manufactured, spares are not available.

Recommendation: NOAA and the USGS could jointly prioritize the seismic stations needed for tsunami warnings. These needs could be communicated with partner agencies and organizations to advocate for upgrading and maintenance of these critical stations over the long-term.

Conclusion: The complex seismic processing algorithms used by the TWCs, given the available seismic data, quickly produce adequate estimates of earthquake location, depth, and magnitude for the purpose of tsunami warning. The methodologies are inexact, partly because of the physically variable nature of tsunami-generating earthquakes (one model does not fit all), and partly because of the need for rapid determination of earthquake parameters that may not be certain until the entire rupture process is complete (potentially minutes). For example, the methodologies applied by the TWCs do not properly reflect the tsunami-generating potential of mega-earthquakes or tsunami earthquakes.

Conclusion: In parallel with their own analyses, staff at the TWCs and at the Tsunami Program could avail themselves of earthquake locations and magnitudes that are estimated within minutes of an event from the USGS’s NEIC. An interagency agreement could be established to make these initial estimates available on secure lines between the USGS and NOAA.

Recommendation: Among the methodologies employed by the NEIC is the W-phase algorithm for estimating earthquake magnitude. The committee recommends that the TWCs work jointly with the NEIC to test the potential utility of the W-phase algorithm in the tsunami warning process, using both a sufficient dataset of synthetic seismograms and a set of waveforms from past great earthquakes, paying particular attention to the algorithm’s performance during tsunami earthquakes and to the assessment of a lower-magnitude bound for its domain of applicability.

DETECTION OF TSUNAMIS WITH SEA LEVEL SENSORS

Because the seismic signal is the first observation available to the TWCs, seismic detection provides the basis for the initial evaluation of the potential for a tsunami. The decision about the content of the first message from the TWCs is based solely on seismic parameters and the historical record, if any, of tsunamis emanating from the neighborhood of the earthquake. However, as previously noted, this indirect seismic method is limited in the accuracy of its estimates of the strength of the tsunami, usually underestimating the tsunami potential of large earthquakes and tsunami earthquakes. In acknowledgment of this bias, and because forecasters must err on the side of caution when human lives may be at stake, the TWCs use conservative criteria to trigger advisories, watches, or warnings based on this initial seismic assessment (e.g., Weinstein, 2008), as seen in the PTWC’s far-field forecast of the tsunami from the Chilean earthquake of February 27, 2010 (Appendix J). However, these conservative assessments might cause unwarranted evacuations, which can cost millions of dollars and might threaten lives. A TWC must, therefore, not only provide timely warning of a destructive tsunami, but also must avoid causing unnecessary evacuations with their attendant negative impacts.

The detection and forecasting process requires real-time observations of tsunamis from both coastal sea level gauges and open-ocean sensors (such as provided by the DART stations). The combination of open-ocean and coastal sea level stations, which provide direct observations of tsunami waves, is important for adjusting and canceling warnings as well as for post-tsunami validation of models of tsunami propagation and inundation (U.S. Indian Ocean Tsunami Warning System Program, 2007). These sea level networks can also detect tsunamis from sources that fail to generate seismic waves, as well as tsunamis caused by an earthquake on land that triggers a subaerial and/or seafloor landslide. Progress in expanding the ocean observing network and advances in oceanographic observing technologies allow the TWCs to incorporate the direct oceanographic detection of tsunamis into their decision processes.

Conclusion: An array of coastal and open-ocean sea level sensors is necessary until such time, in some distant future, when the capability exists of observing the entire tsunami wave-front in real-time and with high horizontal resolution (e.g., perhaps with satellites) as it expands outward from its source and comes ashore.

The Tsunami Warning Decision Process Before and After Enactment of Public Law 109-424

A majority of the funds authorized by the Tsunami Warning and Education Act (P.L. 109-424) have been used to manufacture, deploy, and maintain an array of 39 DART stations (not counting the 9 purchased and deployed by foreign agencies; http://www.ndbc.noaa.gov/dart.shtml), establish 16 new coastal sea level gauges, and upgrade 33 existing water level stations (National Tsunami Hazard Mitigation Program, 2008; http://tidesandcurrents.noaa.gov/1mindata.shtml). All these new and upgraded sea level stations, especially the DART sites, have closed large gaps in the sea level observation network that had left many U.S. coastal communities subject to uncertain tsunami warnings. Among TWC personnel and tsunami warning researchers, it is common to find sentiments echoing the following statement in Whitmore et al. (2008): “Since 2005, the amount and quality of both tide gage data and DART data [have] greatly improved. These data are critical to verify the existence of tsunamis and to calibrate models used to forecast amplitudes throughout the basin. Depending on the source location, it can take anywhere from 30 minutes to 3 hours to obtain sufficient sea level data to provide forecasts for wave heights outside the source zone, or to verify that no wave has occurred and cancel the alert. Within the AOR, upgraded sea level networks have dropped the verification time to 30 minutes in some regions.”

The implementation of the EarthVu tsunami forecast system and the Short-term Inundation Forecasting for Tsunamis (SIFT) system into the TWCs (e.g., Weinstein, 2008; see Section Forecasting of a Tsunami Under Way) places additional emphasis on the importance of the proper operation of the sea level stations, especially the open-ocean DART stations whose sea level observations of the tsunami waves are not distorted by bathymetric irregularities and local harbor resonances that affect the coastal sea level observations. With these models and data from the sea level networks, it has become possible to make reasonably accurate predictions of the amplitude of the first tsunami wave that arrives at a given shoreline, enabling the issuance of more timely and more spatially refined watches and warnings (e.g., Titov et al., 2005; Geist et al., 2007; Whitmore et al., 2008).

Furthermore, the array of DART stations, when properly functioning, enables unique and important capabilities for both tsunami detection and forecasting as described below. Whether the current DART and coastal sea level networks are sufficient for both rapid detection of tsunamis and accurate tsunami forecasting with respect to all U.S. coastal territories is addressed below.

Conclusion: The expansion and upgrades to the DART and coastal sea level network have closed large gaps in the sea level observation network that had left many U.S. coastal communities subject to uncertain tsunami warnings. These enhancements to the detection system have significantly improved the TWCs’ ability to detect and forecast tsunamis in a timely and more accurate fashion.

Conclusion: Based on the analysis described below, the coastal and DART sea level gauge networks have proven their value for the forecasting and warning of far-field tsunamis, especially when coupled with numerical propagation and inundation models.

Conclusion: Despite the improvements in detection and forecasting, some fundamental issues remain concerning gaps in coverage, the value of individual components of the network, and the risk to the warning capability due to the gaps and from individual component failures, or failures of groups of components.

The Economic Value of the DART Network

Although the foremost concern for emergency responders is the protection of human lives in the event of large tsunamis, another significant value of the DART stations is to provide assurance that a large wave has not been generated by a seismic event, permitting an initial watch or warning to be canceled expeditiously. Thus, the DART stations help to prevent unnecessary public concern and economic disruption.

Two estimates of economic benefits have been derived for Hawaii. In one, the cost of a needless evacuation in the state of Hawaii was put at $58.2 million in 1996 dollars (Hawaii Research and Economic Analysis Division, 1996, cited in Bernard, 2005). A second estimate is based on nearly identical earthquakes off the Aleutian Islands before and after the existence of the DART network. On May 7, 1986 (pre-DART), a magnitude 8.0 earthquake near the Aleutian Islands precipitated a full coastal evacuation in Hawaii at an estimated cost of $30-$40 million in lost productivity, emergency provider expenses, and other costs (Hawaii Research and Economic Analysis Division, 1996; National Science and Technology Council, 2005), yet tsunami amplitudes did not exceed 0.6 m. On November 17, 2003, a DART station offshore of the Aleutian Islands clearly showed that a sizable tsunami was not generated by a magnitude 7.8 earthquake in a similar location near the Aleutian Islands, and the watch was canceled (the subsequent maximum tsunami height reached only 0.33 m in Hawaii). Adjusting the 1986 figure for inflation, the cost to Hawaii’s government and businesses in 2003 could have been $70 million had an evacuation been ordered. These findings are consistent with cost estimates for unnecessary hurricane evacuations along the U.S. coastline between Maine and Texas (Centrec Consulting Group, LLC, 2007).

Clearly, unwarranted evacuations can cost millions of dollars; and, although the costs associated with the loss in public confidence are less easy to quantify, the effectiveness of a warning system is ultimately grounded in credibility. Therefore, a tsunami warning system should not only provide timely warning of a destructive tsunami, but also should avoid issuing “false alarms.”

Although the DART stations have their greatest value in discerning tsunami propagation characteristics in the open ocean, the inundation problem requires, ideally, sea level sensors along tsunami-prone coastlines because of the spatial variations in tsunami height that are produced by local bathymetry, coastal geometry, and the resultant system responses (e.g., coastal and harbor resonances).

Description of the Coastal Sea Level Gauge Network

Although coastal sea level stations were originally installed for monitoring tides for navigational purposes, most now serve a broad range of uses (including tsunami detection) that have contributed to their continued support and upgrades. Stations are commonly located deep within harbors or bays, where nonlinear hydrodynamic effects and local geographic complexity strongly alter the structure and amplitude of any impinging tsunami waveform. These nonlinear effects hamper the determination of open-ocean tsunami wave parameters (e.g., Titov et al., 2005) without eliminating the stations’ utility for the TWCs (e.g., Whitmore, 2003).

Tide stations were typically configured to measure sea level height in a stilling well, a vertical pipe that is secured to a piling, pier, wharf, or other shore-side structure. These pipes have a small orifice(s) to allow water to enter relatively slowly thus filtering out the short period (3-30 seconds) wind waves, and even tsunamis, so that the hourly recorded sea level values from within the pipe are not aliased by the short period variability. This technology works well for measuring tides and other long period phenomena, but even if the sampling rate is increased from hourly to minutes the true tsunami signal may not be well observed given these filtering effects. Furthermore, a large tsunami can overtop a well and render it useless in extreme events. Consequently, sea level observations intended for tsunami detection are now often accomplished inside a tsunami-hardened station equipped with a rapid-sampling pressure, acoustic, or microwave sensor with an orifice set apart from the structure (National Tsunami Hazard Mitigation Program, 2008).

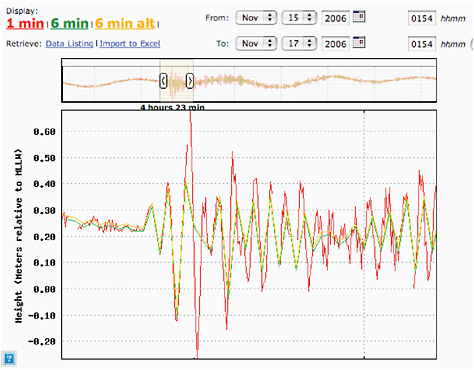

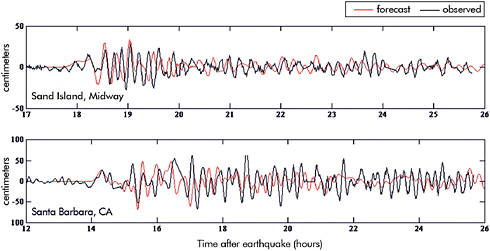

The most important roles for coastal sea level data in the tsunami forecasting and warning process are currently the initial detection of a tsunami, scaling the tsunami forecast models in near-real time, and post-tsunami validation of tsunami models (see Weinstein, 2008; Whitmore et al., 2008). These roles require accurate, rapidly sampled sea level observations delivered in near-real time via an appropriate telemetry system. In practice, these requirements translate into a need for sea level averages at least as often as every minute that are made available in near-real time (U.S. Indian Ocean Tsunami Warning System Program, 2007), and a need for assiduous maintenance of the sea level gauges so that near-real-time data can be trusted and will be available most of the time. Furthermore, subsequent to collection, the data need to be carefully processed through a set of rigorous quality control procedures to maximize the value for model validation after the fact (U.S. Indian Ocean Tsunami Warning System Program, 2007). As an example of the importance of high temporal data resolution, Figure 4.2 shows how sea level data sampled every six minutes completely missed the largest component (the third crest and trough) of the Kuril Islands tsunami of November 15, 2006 (the modeled wave heights of which are shown in Figure 4.3).
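The effect of the sampling interval can be illustrated with a minimal sketch. It does not use the Midway Island record; it assumes a purely hypothetical sinusoidal wave with a 12-minute period and 1 m amplitude, and it takes instantaneous samples rather than the 1-minute averages the gauges report. The function name and all numerical values are illustrative assumptions.

```python
import math

def sample_wave(period_min, amplitude_m, step_min, duration_min, offset_min=0.0):
    """Sample a synthetic sinusoidal sea level signal at a fixed interval."""
    n = int(duration_min // step_min) + 1
    times = [offset_min + i * step_min for i in range(n)]
    return [amplitude_m * math.sin(2.0 * math.pi * t / period_min) for t in times]

# Hypothetical values chosen for illustration only (not observed data).
PERIOD_MIN = 12.0
AMPLITUDE_M = 1.0
DURATION_MIN = 60.0

one_minute = sample_wave(PERIOD_MIN, AMPLITUDE_M, 1.0, DURATION_MIN)
six_minute = sample_wave(PERIOD_MIN, AMPLITUDE_M, 6.0, DURATION_MIN)

# Largest excursion each sampling scheme would report.
peak_1min = max(abs(v) for v in one_minute)
peak_6min = max(abs(v) for v in six_minute)

print(f"peak seen with 1-minute samples: {peak_1min:.2f} m")
print(f"peak seen with 6-minute samples: {peak_6min:.2f} m")
```

In this deliberately unfavorable alignment every 6-minute sample falls on a zero crossing, so the coarse series reports almost no signal while the 1-minute series recovers the full amplitude. A different offset would let the 6-minute series capture part of the amplitude, but not reliably the peak.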

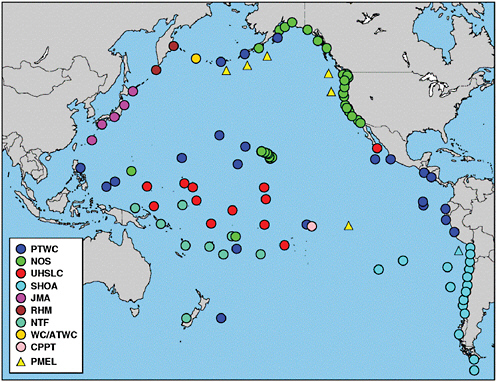

Coastal sea level data used by the TWCs originate from a number of different networks (PTWC, WC/ATWC, National Ocean Service (NOS), and University of Hawaii Sea Level Center (UHSLC)), which are maintained by various national and international organizations (Figure 4.4).

Ideally, these stations are maintained to the standards listed in the Tsunami Warning Center Reference Guide (U.S. Indian Ocean Tsunami Warning System Program, 2007) for sea level stations that are intended to provide data for tsunami warning. For coastal tide gauge stations, the requirements are:

- independent power and communications, for example, solar and satellite;
- fault-tolerant redundant sensors (multiple sensors for tsunami, tides, and climate);
- local logging and readout of data (local back-up of data);
- warning center event trigger (ramping up of sampling rate and transmission upon detection of a tsunami);
- establishment of a system of surveying benchmarks;
- locating gauges in protected areas that are responsive to tsunamis, such as wide-mouthed harbors (sustainability and filtering); and
- standard sampling of 1-minute averages and a continuous 15-minute transmission cycle via the World Meteorological Organization’s (WMO) Global Telecommunications System (GTS) to the Japan Meteorological Agency (JMA), PTWC, and other appropriate warning centers/watch providers.

FIGURE 4.2 Sea level data from Midway Island for a short time period encompassing the arrival of the November 15, 2006, Kuril Islands tsunami. One-minute samples are shown in red; two different gauges providing 6-minute samples are shown in green and orange. Note that the 6-minute samples completely miss the highest amplitude component (the third crest and trough) of the tsunami. SOURCE: http://co-ops.nos.noaa.gov/tsunami/; NOAA.
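The coastal tide gauge requirements listed above lend themselves to a simple per-station checklist. The following is a minimal sketch of one way such a record might be kept; the class, field names, and example values are hypothetical and are not taken from the Reference Guide or from any TWC system.

```python
from dataclasses import dataclass, fields

@dataclass
class StationChecklist:
    """One flag per Reference Guide requirement (field names are illustrative)."""
    independent_power_and_comms: bool
    redundant_sensors: bool
    local_logging: bool
    event_trigger: bool
    surveyed_benchmarks: bool
    protected_responsive_site: bool
    one_minute_avg_15_min_tx: bool

def unmet_requirements(station):
    """Return the names of the requirements the station does not yet satisfy."""
    return [f.name for f in fields(station) if not getattr(station, f.name)]

# Hypothetical station that lacks local logging and the standard transmission cycle.
example = StationChecklist(
    independent_power_and_comms=True,
    redundant_sensors=True,
    local_logging=False,
    event_trigger=True,
    surveyed_benchmarks=True,
    protected_responsive_site=True,
    one_minute_avg_15_min_tx=False,
)

missing = unmet_requirements(example)
print(missing)
```

Keeping one flag per requirement makes it straightforward to list, for any station, which items would need attention before the station could be considered compliant.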

NOS Sea Level Stations for Tsunami Detection

In the several decades leading up to 2004, NOAA’s NOS Center for Operational Oceanographic Products and Services (CO-OPS; http://tidesandcurrents.noaa.gov/) operated long-

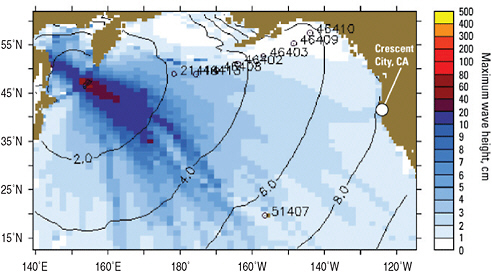

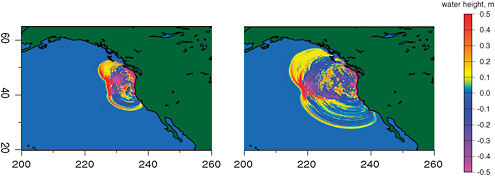

FIGURE 4.3 North Pacific Ocean, showing predicted maximum wave heights (indicated by color) and arrival times (contour lines labeled with numbers representing hours after the triggering earthquake) of tsunami waves generated by a magnitude 8.3 earthquake near the Kuril Islands on November 15, 2006. The predicted wave heights illustrate the phenomenon of “tsunami beaming”—the tendency of tsunami waves in the open ocean to be highest along azimuths approximately perpendicular to the subduction zone where the triggering earthquake occurred. Note the minor beam aimed at Crescent City, California, where the boat harbor was damaged, largely by secondary tsunami waves. SOURCE: Geist et al., 2007; with permission from Vasily Titov, NOAA/PMEL.

term tide stations, and the National Weather Service (NWS) utilized the data to support the national tsunami warning system. However, following the devastating 2004 Indian Ocean tsunami, and with the support authorized in P.L. 109-424, CO-OPS began a system-wide up-grade of its instrumentation. This upgrade increased the rate of data collection to 15-second and 1-minute sampling (National Tsunami Hazard Mitigation Program, 2008) and increased the rate of transmission (to every 6 minutes) at its coastal National Water Level Observation Network (NWLON; http://tidesandcurrents.noaa.gov/nwlon.html) stations. The increased data sampling and transmission rates advance the objectives of tsunami detection and warning, as well as to provide critical inundation model input. In addition to upgrading equipment at 33 existing long-term NWLON stations, CO-OPS collaborated with the TWCs and the Pacific Marine Environmental Laboratory (PMEL) to establish 16 new tide stations at high-priority locations in Alaska, the Pacific Islands, the U.S. West Coast, and the Caribbean, increasing the geographic coverage of water level observations in tsunami-vulnerable locations. This initiative was completed in 2007 (National Tsunami Hazard Mitigation Program, 2008; http://tidesandcurrents.noaa.gov/1mindata.shtml).

FIGURE 4.4 Map of the coastal sea level stations in the Pacific basin that provided sea level data at sufficient temporal resolution and quality for use in the PTWC’s tsunami detection activities in 2008. Color codes indicate the authorities responsible for gauge maintenance. U.S. authorities include PTWC, WC/ATWC, NOS, and UHSLC. Non-U.S. authorities include the following: Centre Polynésien de Prévention des Tsunamis (CPPT; France); Servicio Hidrográfico y Oceanográfico de la Armada de Chile (SHOA); Japan Meteorological Agency (JMA); ROSHYDROMET (RHM; Russia); and National Tidal Facility (NTF; Australia). The positions of the original six DART buoys (yellow triangles) existing in 2005 before the enactment of P.L. 109-424 are also displayed. SOURCE: Weinstein, 2008; Pacific Tsunami Warning Center, NOAA.

At the current time, CO-OPS operates tide stations on all U.S. coasts in support of tsunami warning. Upgraded tide stations are equipped with new hardware and software to enable the collection and dissemination of 1-minute water level sample data. The TWCs can receive these data in near-real time either via Geostationary Operational Environmental Satellites (GOES) over the National Weather Service Telecommunication Gateway (NWSTG) or via the Tsunamis Stations’ website (http://tidesandcurrents.noaa.gov/tsunami/). Although near-real-time data are not subjected to the National Ocean Service’s quality control or quality assurance procedures and do not meet the criteria and standards of official National Ocean Service data, the stringent maintenance procedures for the NWLON stations maximize the probability of a reliable data stream in near-real time. In addition to providing access to raw water level data via satellite transmission, CO-OPS collaborated with the TWCs to develop a webpage (http://co-ops.nos.noaa.gov/1mindata.shtml) to disseminate 1-minute water level data. This webpage allows users to view both 6- and 1-minute data numerically or graphically for all tsunami-capable tide stations in increments of up to 4 days (Figure 4.2 is one example). Like the near-real-time data, all water level data displayed through the CO-OPS tsunami webpage are raw and unverified at this time. However, verified 6-minute sea level data are available through another website (http://tidesandcurrents.noaa.gov/station_retrieve.shtml?type=Historic+Tide+Data), usually within 2 months of collection, which enables the user to easily evaluate the quality of the 1-minute data, although well after the occurrence of the tsunami. The 15-second data, potentially more useful for model validation, are not telemetered on a regular basis, but are available to the TWCs via remote phone dial-in.

The NOAA/NOS has developed and rigorously follows a set of standards for the establishment, operation, and maintenance of its critical NWLON coastal sea level stations. NOAA also describes in its Tsunami Warning Center Reference Guide (U.S. Indian Ocean Tsunami Warning System Program, 2007) the performance and maintenance standards it recommends for sea level stations that are intended to aid tsunami detection, forecasting, and warning activities. Unfortunately, the high-quality NOS NWLON stations make up only a small portion of all the sea level observation stations needed for tsunami detection (Figure 4.4). Whether sea level gauges operated and maintained by other U.S. agencies satisfy, or can be upgraded to, the standards of the NWLON stations, or whether these other U.S. stations should be operated and maintained under the NWLON program, are questions that remain unanswered. In addition, the committee is not aware of any process by which the non-NOS sea level stations (U.S. or international) are evaluated or certified relative to these standards. How much risk results from the TWCs’ reliance on uncertified sea level gauges is not known.

The University of Hawaii Sea Level Center (UHSLC) Stations

The UHSLC (http://ilikai.soest.hawaii.edu/) maintains and/or operates a worldwide array of sea level observing stations, some of which are employed in the tsunami detection and warning process (for the Pacific Ocean, see Figure 4.4). The UHSLC is a research facility of the University of Hawaii/NOAA Joint Institute for Marine and Atmospheric Research (JIMAR) within the School of Ocean and Earth Science and Technology (SOEST). The mission of the UHSLC is to collect, process, distribute, and analyze in-situ sea level gauge data from around the world in support of climate research. Primary funding for the UHSLC comes from NOAA’s Office of Global Programs (OGP). In recent years, the UHSLC, recognizing the potential importance of its stations to tsunami hazard mitigation, has upgraded many of its stations to short period sampling and reporting (http://ilikai.soest.hawaii.edu/RSL1/index.html).

Because of the UHSLC’s climate research mission, which includes ascertaining the small (typically, 1-3 mm) annual sea level rise associated with global warming, the UHSLC strives for high operational standards and data quality. It is not known whether the UHSLC’s operational standards meet or exceed the NOS NWLON maintenance standards.

TWC Sea Level Stations

The TWCs operate a small subset of coastal tide stations (Figure 4.4). The WC/ATWC operates seven stations along southern Alaska and the Aleutian Islands with data being archived for public use at National Geophysical Data Center (NGDC) (http://wcatwc.arh.noaa.gov/WCATWCtide.php). The PTWC stations are distributed throughout the Pacific and Hawaii. In Hawaii, PTWC maintains 14 sea level gauges solely for local predictive and diagnostic value; the data from these gauges are archived under separate NOAA support (http://ilikai.soest.hawaii.edu/arshsl/techrept/arshsl.html). In general, the TWC stations are not maintained to the specifications of the NWLON but have historical precedence and fill gaps in the observing array or fill specific local needs. For example, the PTWC gauges on the Big Island of Hawaii will provide about 20 minutes of warning for Honolulu should a large amplitude tsunami be generated by an earthquake or landslide on the Big Island. The TWCs have indicated they do not have the resources to properly maintain these gauges or to process, distribute, and archive the data.

International Sea Level Stations

The Intergovernmental Oceanographic Commission (IOC) Global Sea Level Observing System (GLOSS) has about 290 stations worldwide, and many are configured for near-real-time reporting of rapidly sampled data relevant to tsunami applications. After the Indian Ocean tsunami of December 26, 2004, the IOC established a centralized Sea Level Station Monitoring Facility (http://www.vliz.be/gauges/), where most of the needed, rapidly sampled coastal sea level observations are now available and reported in near-real time over the World Meteorological Organization’s (WMO’s) Global Telecommunications System (GTS). The website serves as a central clearinghouse of data from a range of international providers, including the data sources mentioned above. The objectives of this service are to provide information about the operational status of global and regional networks of near-real-time sea level stations and to provide a display service for quick inspection of the raw data stream from individual stations.

Since 2007, the Sea Level Station Monitoring Facility (SLSMF) also has the information necessary to determine data stream reliability. SLSMF is an appropriate place to obtain such reliability information because it lists only data that were initially made available in near-real time over the GTS, not what was eventually available after internal memory was finally accessed during a maintenance operation. The sea level data that the TWCs employ in their tsunami detection activities and which are acquired via the GTS are essentially the same data now disseminated and archived at SLSMF, excluding the TWCs’ own stations discussed above. As with the data received by the TWCs via the GTS after a tsunami-producing earthquake, the data flowing through SLSMF are not quality controlled, but the website provides additional metadata for most of the non-U.S. stations. To the committee’s knowledge, the level of adherence of international stations used by the TWCs to either NWLON or Tsunami Warning Center Reference Guide (U.S. Indian Ocean Tsunami Warning System Program, 2007) performance and maintenance standards has not been determined.

Adequacy of the Geographical Coverage of the Coastal Sea Level Gauge Network

Following the disastrous 2004 Indian Ocean tsunami, many additional global sea level observing stations have become available for the purpose of tsunami detection and warning, including those enabled in the United States by P.L. 109-424. Despite this increase in the number of near-real-time-reporting, rapid-sampling coastal sea level gauges, a map of the sea level station coverage (e.g., Figure 4.4 for the Pacific Ocean) reveals that large regions with no coverage remain, such as Central America and southern Mexico, the Kuril Islands north of Japan, and most of the Caribbean Islands, as pointed out previously (Bernard et al., 2007). In addition, this dependence on data supplied by foreign agencies, although mitigated somewhat by the redundancies and overlaps in coverage, exposes a vulnerability of the tsunami detection and warning activities to potential losses in data availability.

A recent earthquake in the Caribbean illustrates the issue of coverage. On May 27, 2009, a magnitude 7.3 earthquake occurred off the coast of northern Honduras. Eight minutes after the earthquake, the PTWC issued a Tsunami Watch for Honduras, Belize, and Guatemala. Worst-case-scenario tsunami forecast models suggested tsunami amplitudes up to nearly 1 m given initial earthquake source parameters. No rapidly sampled, near-real-time sea level gauges exist in the western Caribbean, so the PTWC could only wait for visual reports. After 74 minutes, the PTWC canceled the watch based on the following, in the PTWC’s own words: “ … This center does not have access to any real-time sea level gauges in the region that would be used to quickly detect and evaluate the tsunami if one were present. However, enough time has passed that any nearby areas should already have been impacted. Therefore, this center is canceling the tsunami watch it issued earlier” (Pacific Tsunami Warning Center Message, May 27, 2009).

Gaps in the coastal sea level network exist, as revealed by the Honduran earthquake in May 2009. No analysis has been undertaken to evaluate critical coverage gaps with regard to the tsunami warning decision process. Furthermore, no analysis has been undertaken to determine the relative importance of each existing coastal sea level gauge to the tsunami warning decision and evacuation decision processes. Although there is some degree of redundancy in coverage in the current sea level gauge network for some purposes, there has been no evaluation of the associated risk and the vulnerability of the warning process to failures of single or multiple stations.

The spacing of sea level gauges is sparse for the purpose of tsunami detection, particularly because it is now known that tsunamis can be quite directional, focusing the majority of their energy within a narrow sector perpendicular to the seafloor rupture direction. For instance, Figure 4.3 displays the modeled beam pattern of a small tsunami generated by a large (magnitude 8.3) Kuril Islands earthquake on November 15, 2006. Given the array of sea level gauges in Figure 4.4, it is obvious that the maximum amplitudes of this tsunami were not observed in near-real time. Because DART stations were not yet in place off the Kuril Islands, only the Midway Island (28.2° N, 177.4° W) station at the far northwest end of the Hawaiian archipelago provided significant advance notice to forecasters of the possible size of the tsunami at the main Hawaiian Islands to the southeast. Had the Midway Island station been temporarily inoperative, forecasters would have been forced to issue a warning at Hawaii, given the magnitude of the earthquake, with a subsequent costly and time-consuming evacuation of coastal zones. As it was, the Midway Island record confirmed that the tsunami was not going to significantly threaten lives or property in the main Hawaiian Islands, and no evacuation order was issued.

After a similar Kuril Islands earthquake on October 4, 1994, the lack of direct confirmation of the existence of a tsunami (including lack of high-resolution sea level data from the temporarily inoperative Midway Island station) resulted in the issuance of a warning that precipitated an unnecessary evacuation of Hawaii’s coastal zones.

Although many gaps exist in the sea level network for rapid tsunami detection, limitations in U.S. and international resources preclude immediate closure of all gaps, and some of these gaps are more important than others. A sophisticated analysis is needed to evaluate critical coverage gaps for coastal sea level gauges to inform the warning decision process. Ideally, such a study would include an evaluation of a region’s tsunami-producing potential, sensitivity analysis of source location, tsunami travel time, local population density, timing for initial warning versus evacuation decision process for communities at risk, and warning/evacuation time gained for additional station coverage. Such an analysis could also determine the relative importance of each existing coastal sea level gauge to the tsunami warning decision and evacuation decision processes. Although there is some degree of redundancy in coverage in the current sea level gauge network, there has been no evaluation of the associated risk and the vulnerability of the system to failures of single or multiple stations. It is possible that isolated gauges near historically tsunami-producing seismic zones would be considered highly important, while individual gauges among a relatively compact group of gauges might be considered less important (although the need for at least one gauge within the group might be considered highly important). Such an assessment of the relative importance of existing gauges could then be the basis of prioritization for maintenance schedules and enhancement opportunities, and for the identification of critical stations that are not under U.S. control and may require augmentation with new U.S. gauges as well as operations and maintenance support.
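One small ingredient of such an assessment, the distinction between isolated gauges and gauges within a compact group, can be sketched by ranking stations by the distance to their nearest neighbor. This is only a stand-in for the fuller analysis described above, which would also weigh source potential, travel time, and population at risk. Apart from the Midway Island position quoted in this chapter, the station names and coordinates are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine great-circle distance between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2.0) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2.0) ** 2)
    return 2.0 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))

def nearest_neighbor_km(stations):
    """Distance from each station to its closest neighbor in the same network."""
    distances = {}
    for name, (lat, lon) in stations.items():
        distances[name] = min(
            great_circle_km(lat, lon, other_lat, other_lon)
            for other, (other_lat, other_lon) in stations.items()
            if other != name
        )
    return distances

stations = {
    "midway": (28.2, -177.4),          # position quoted in the text
    "hypothetical_a": (21.3, -157.9),  # illustrative compact group
    "hypothetical_b": (20.9, -156.5),
    "hypothetical_c": (19.7, -155.1),
}

# Most isolated stations first: a crude proxy for "no nearby backup gauge".
ranked = sorted(nearest_neighbor_km(stations).items(),
                key=lambda item: item[1], reverse=True)
for name, km in ranked:
    print(f"{name}: nearest neighbor {km:.0f} km away")
```

A station whose nearest neighbor is far away has no nearby substitute if it fails, which is one reason it might rank high on a priority list even before the other factors are considered.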

In order to mitigate the cost of enhancing and maintaining tsunami-useful sea level monitoring stations, the U.S. Tsunami Program could continue coordinating with other programs interested in monitoring sea level variability for other purposes, such as climate variability. Sea level stations maintained by the NOS, UHSLC, etc., have evolved from their primary missions to include higher sampling and reporting rates to serve the tsunami community. Coastal stations with a broad user base have enhanced sustainability.

Reliability of the Coastal Sea Level Gauge Network

International coastal sea level networks vary greatly in station density, transmission rates, and data quality. Improved near-real-time international sea level data observations are crucial to proper TWC response for events distant to U.S. territories, and are necessary for the TWCs to provide advice to their international customers.

Recommendation: Two important concerns regarding the entire coastal sea level network employed by the TWCs in their warning activities need to be addressed soon, as follows:

- A priority list of the coastal sea level stations should be constructed, based at first on the experience of the TWC forecasters, and later updated from the results of the more objective coverage analysis described in the previous section.
- A risk assessment of the data flow from the highest priority stations should be performed.

U.S. or international stations deemed high priority with a high risk that the data flow could be interrupted for more than very short periods of time should thereafter be carefully monitored and, if possible, upgraded by the appropriate authority (national or international) to meet all requirements for a tsunami monitoring sea level station that are listed in the Tsunami Warning Center Reference Guide (U.S. Indian Ocean Tsunami Warning System Program, 2007). As an example of prioritization, note that as of June 26, 2009, all five DART stations covering the Aleutian Islands west of the Dateline, and the Kuril Islands to Hokkaido, had been inoperative for nearly all of 2009. Such failures meant that the Midway Island coastal station at 28.2° N, 177.4° W, was the only sea level station that forecasters had available during the first six months of 2009 to evaluate whether a tsunami created in the Kuril Islands, and directed toward the southeast (e.g., Figure 4.3), was bearing down on Hawaii. Therefore, the Midway Island station is a strong candidate for high-priority status.

Compliance with the Reference Guide’s recommendations would be a good starting point for assessing the risk in the data flow from each high-priority sea level station. Much of the needed information is now available at the IOC’s SLSMF (http://www.vliz.be/gauges/) discussed previously. SLSMF also has the information needed to determine data stream reliability, at least since 2007. SLSMF is an appropriate place to obtain such reliability information because it lists only data that were initially made available in near-real time over the Global Telecommunications System, not what was eventually available after internal memory was finally accessed during a maintenance operation.
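As a rough illustration of what a data stream reliability figure could look like, the sketch below computes the fraction of expected 1-minute sample slots for which a sample actually arrived in near-real time. The function name, the record layout, and the example outage are assumptions made for illustration; they do not describe the SLSMF's actual data format or metrics.

```python
from datetime import datetime, timedelta

def near_real_time_availability(received_times, start, end,
                                step=timedelta(minutes=1)):
    """Fraction of expected sample slots in [start, end) that were received."""
    expected = []
    t = start
    while t < end:
        expected.append(t)
        t += step
    if not expected:
        return 0.0
    received = set(received_times)
    hits = sum(1 for slot in expected if slot in received)
    return hits / len(expected)

# Hypothetical example: one hour of 1-minute data with a 15-minute outage.
start = datetime(2009, 1, 1, 0, 0)
end = start + timedelta(hours=1)
received = [start + timedelta(minutes=m) for m in range(60) if not 20 <= m < 35]

availability = near_real_time_availability(received, start, end)
print(f"near-real-time availability: {availability:.0%}")
```

Counting only samples that arrived through the near-real-time channel, rather than samples later recovered from a gauge's internal memory, matches the distinction drawn above.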

Coastal Sea Level Data Processing

In January 2008, NTHMP issued a report (National Tsunami Hazard Mitigation Program, 2008) intended to identify vulnerabilities in the U.S. environmental data streams needed by the TWCs to effectively detect tsunamis and make accurate tsunami forecasts. The data streams under consideration included, among others, sea level data from DART buoys and from U.S. coastal gauges. The committee identified findings in NTHMP (2008) with respect to processing, distribution, archiving, and long-term access to tsunami-relevant sea level data that remain highly relevant today including the following issues:

- There is currently no routine acquisition of the 15-second CO-OPS data, which are most relevant for model validation, and there is no routine retention of these data.

- Fifteen-second data are only collected on request and have no quality control or archive.

- One-minute data are not currently quality controlled to the same level as the six-minute data.

- No formal long-term archive for the TWC coastal water level data is in place, although a minimal-service archive of the PTWC Hawaiian sea level data is being maintained and some of the TWC data reach the IOC’s Sea Level Station Monitoring Facility.

- Retrospective data from the TWCs cannot be easily accessed.

The absolute time accuracy of 15-second data (30s Nyquist period) should be 0.035 seconds if archival or even near-real-time data are to be processed between stations using correlation or coherence methods. Time accuracy at this level is required in order to preserve phase relationships at the highest observed frequencies (i.e., 1/(2*15) Hz). Such absolute accuracy is not difficult to achieve.
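The relationship between timing error and phase error can be made concrete with a short calculation. The sketch below is illustrative only: the helper names are hypothetical, and it simply applies the standard relations that the Nyquist frequency is 1/(2Δt) and that a timing error δt at period T corresponds to a phase error of 360° × δt/T.

```python
def nyquist_frequency_hz(sample_interval_s):
    """Highest resolvable frequency for a given sampling interval."""
    return 1.0 / (2.0 * sample_interval_s)

def phase_error_deg(timing_error_s, period_s):
    """Phase error, in degrees, produced by a timing error at a given period."""
    return 360.0 * timing_error_s / period_s

sample_interval_s = 15.0
f_nyquist = nyquist_frequency_hz(sample_interval_s)   # 1/30 Hz
nyquist_period_s = 1.0 / f_nyquist                    # 30 s

# Phase error at the Nyquist period for the 0.035-second accuracy quoted above.
err_deg = phase_error_deg(0.035, nyquist_period_s)    # about 0.42 degrees
```

Under these relations, the stated 0.035-second accuracy corresponds to a phase uncertainty of less than half a degree at the shortest resolvable period, and proportionally less at longer periods.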

Recommendation: The committee endorses the following recommendations of the NTHMP report (National Tsunami Hazard Mitigation Program, 2008) for the TWCs to:

- Create a formal data archive for both CO-OPS and TWC data and metadata, including 15-second data.

- Address 1-minute and 15-second quality control issues in unison with the archive issue to ensure quality of archive.

- Enact Federal Geographic Data Committee (FGDC)-compliant station metadata.

- Create an operational website providing a portal for 15-second tsunami station water level data.

This committee did not undertake an assessment of the processing, distribution, archiving, and long-term access to tsunami-relevant sea level data originating from international sea level stations. As previously stated, the near-real-time, tsunami-relevant sea level data available to the TWCs via the GTS (and archived at the IOC’s SLSMF; http://www.vliz.be/gauges/) are not quality controlled.

Conclusion: Despite the excellent accomplishments by NOAA with respect to improving the processing, distribution, archiving, and long-term access to the tsunami-relevant sea level data that it collects, there remain several inadequacies. There is currently no routine acquisition, quality control, or archiving of the 15-second NOS/CO-OPS data, which are most relevant for model validation. In addition, NOS/CO-OPS 1-minute data are not currently quality controlled to the same level as their 6-minute data; and no formal long-term archive for TWC coastal water level data exists.

Description of the Deep-ocean Assessment and Reporting of Tsunamis (DART) Network

To ensure early detection of tsunamis, especially where the coastal sea level network is sparse or nonexistent, and to acquire data critical to near-real-time forecasts, NOAA has placed

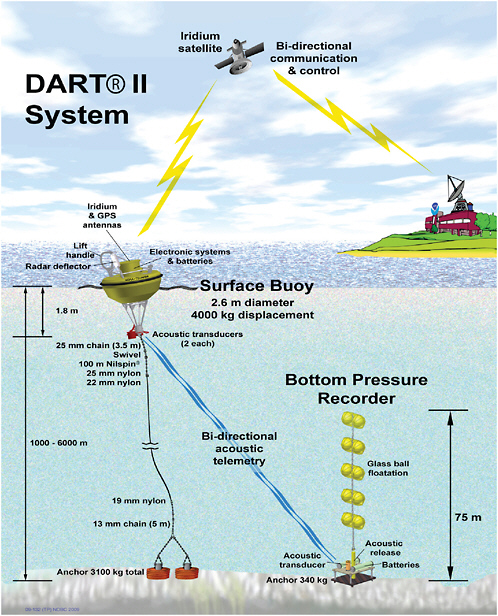

DART stations in regions with a history of generating destructive tsunamis. The DART technology was developed at NOAA’s PMEL under the U.S. National Tsunami Hazard Mitigation Program (González et al., 1998; http://nthmp-history.pmel.noaa.gov/index.html) to provide early detection of tsunamis regardless of the source (http://www.ndbc.noaa.gov/dart/dart.shtm). A DART station comprises an autonomous, battery powered, bottom pressure recorder (BPR) on the seafloor and a companion moored surface buoy that forwards the data it receives acoustically from the BPR to an onshore receiver via satellite links (Figure 4.5; see González et al., 1998). The BPR collects and internally stores pressure and temperature data at 15-second intervals. The stored pressure values are corrected for small temperature-related offsets and converted to an estimated sea-surface height (the height of the ocean surface above the seafloor). The BPR water height resolution is 1 mm in water depths to 6,000 m, and the maximum timing error is 15 seconds per year.
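To give a sense of the magnitudes involved in the pressure-to-height conversion and the timing specification, the following sketch uses a simple hydrostatic approximation. It is not the conversion implemented in the BPR: the constant seawater density, the function names, and the omission of the temperature correction are all assumptions made for illustration, and the input pressure is taken to be that of the water column alone.

```python
G = 9.81               # gravitational acceleration, m/s^2
RHO_SEAWATER = 1030.0  # assumed nominal seawater density, kg/m^3

def water_column_height_m(pressure_pa, rho=RHO_SEAWATER, g=G):
    """Hydrostatic estimate of water height above the sensor (assumption: constant density)."""
    return pressure_pa / (rho * g)

def pressure_change_pa(height_change_m, rho=RHO_SEAWATER, g=G):
    """Pressure change corresponding to a small change in sea-surface height."""
    return rho * g * height_change_m

def max_clock_drift_s(deployment_days, drift_s_per_year=15.0):
    """Upper bound on accumulated timing error for the quoted 15 s/yr maximum."""
    return drift_s_per_year * deployment_days / 365.0

# A 1 mm height change is roughly 10 Pa under these assumptions,
# against a background of roughly 6e7 Pa at 6,000 m depth.
dp_1mm = pressure_change_pa(0.001)
p_6000m = pressure_change_pa(6000.0)
drift_two_years = max_clock_drift_s(730.0)
```

Under these assumptions, resolving 1 mm at 6,000 m means resolving a pressure change of about two parts in ten million of the background pressure.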

The station has two data reporting modes: standard and event. In standard mode, data are transmitted less frequently to conserve battery power. Event mode is triggered when internal detection software in the BPR identifies anomalous pressure fluctuations associated with the passage of a tsunami. During event mode, all 15-second data are transmitted for the first few minutes, followed by 1-minute averages. If no further events are detected, the system returns to standard mode after 4 hours.
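The switching between the two reporting modes can be sketched as a small state machine. This is a simplified illustration, not the detection software that runs in the BPR: the trigger rule (observed height departing from a predicted height by more than a threshold), the threshold value, and the class and method names are assumptions. Only the 4-hour return to standard mode is taken from the description above.

```python
from dataclasses import dataclass
from typing import Optional

EVENT_TIMEOUT_S = 4 * 3600   # return to standard mode 4 hours after the last trigger
THRESHOLD_M = 0.03           # hypothetical trigger threshold, in meters

@dataclass
class DartModeTracker:
    mode: str = "standard"
    last_trigger_s: Optional[float] = None

    def update(self, t_s, observed_m, predicted_m):
        """Advance the mode given one height sample and its predicted value."""
        if abs(observed_m - predicted_m) > THRESHOLD_M:
            # Anomalous fluctuation: enter event mode or restart the timeout.
            self.mode = "event"
            self.last_trigger_s = t_s
        elif self.mode == "event" and t_s - self.last_trigger_s >= EVENT_TIMEOUT_S:
            # No further events for 4 hours: resume standard reporting.
            self.mode = "standard"
        return self.mode

tracker = DartModeTracker()
modes = [
    tracker.update(0.0, 5000.00, 5000.00),              # quiet
    tracker.update(15.0, 5000.05, 5000.00),             # 5 cm anomaly
    tracker.update(15.0 + 3600.0, 5000.00, 5000.00),    # 1 hour later
    tracker.update(15.0 + 4 * 3600.0, 5000.00, 5000.00) # 4 hours later
]
```

In this sketch every new trigger restarts the 4-hour timer, which is one reading of "if no further events are detected".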

There have been two types of operational DART stations: the first generation DART stations (DART I) became operational in 2003, but all six were replaced with the second generation DART stations (DART II) by early 2008. The DART II station has two-way communications between the BPR and the TWCs/National Data Buoy Center (NDBC) using the Iridium commercial satellite communications system (Meinig et al., 2005). The two-way communication allows the TWCs to set stations into event mode in anticipation of possible tsunamis or to retrieve high-resolution (15-second interval) data in 1-hour blocks for detailed analysis, and allows near-real-time troubleshooting and diagnostics. NDBC receives the data from the DART stations and distributes the data in near-real time to the TWCs via NWS secure communications and to other national and international users via the GTS. The data are also available on the NDBC website, and event data are highlighted when a system has been triggered.

Adequacy of the Geographical Coverage of the DART Network

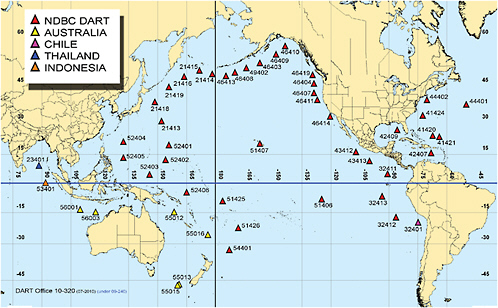

The NDBC completed, in a little more than two years, an upgrade and expansion of the DART array from 6 DART I stations to the present 39 DART II stations, as shown in Figure 4.6. The expansion was supported with funding from the Tsunami Warning and Education Act (P.L. 109-424). In addition, Figure 4.6 shows the locations of 9 DART stations purchased, deployed, maintained, and operated by Chile, Australia, Indonesia, and Thailand.

FIGURE 4.5 Schematic depicting a DART station’s components: the surface buoy with acoustic transducers communicates with the BPR acoustic transducer and then transmits data via the Iridium antenna to satellites; the BPR detects changes in bottom pressure and temperature. SOURCE: http://www.ndbc.noaa.gov/dart/dart.shtml; National Data Buoy Center, NOAA.

FIGURE 4.6 Map displays the locations of DART stations around the world. Red diamonds depict the 39 DART stations maintained and operated by NOAA’s National Data Buoy Center (NDBC). Nine other DART stations are maintained and operated by non-U.S. agencies, as indicated in the legend. SOURCE: http://www.ndbc.noaa.gov/dart.shtml; National Data Buoy Center, NOAA.

Planning for the deployment and siting of the expanded DART network was initiated at a workshop attended by representatives of NOAA, the USGS, and academia on July 6-7, 2005, in Seattle (Geist et al., 2005). The central goal of the workshop was to determine an optimal network configuration that would meet multiple mitigation objectives, while addressing scientific, engineering, operational, logistical, and political constraints. The process that began at this workshop was augmented by an optimization analysis, which was subsequently completed at the NOAA Center for Tsunami Research (NCTR) at PMEL. To the extent that the constraints on siting can be quantified and the benefits expressed in functional form, array design can be approached as a problem in optimization. This avenue was explored using a tool called NOMAD (Nonlinear Optimization for Mixed vAriables and Derivatives; Audet and Dennis, 2006). Although the scheme was tested for only relatively simple cases, the methodology shows promise as an example of a scientifically robust process for siting and prioritizing stations in an operational sensor network.
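The idea of treating array design as an optimization problem can be illustrated with a toy example. The sketch below is not NOMAD and does not reproduce the NCTR analysis: it is an exhaustive search over a handful of hypothetical candidate sites, with a single assumed objective (minimize the worst-case time for a tsunami from any source to reach the nearest selected station). All names and numbers are invented for illustration; the only physics used is the long-wave speed √(g·h).

```python
from itertools import combinations
from math import sqrt

G = 9.81  # gravitational acceleration, m/s^2

def travel_time_s(distance_m, depth_m):
    """Long-wave travel time over a path of uniform depth (speed = sqrt(g * h))."""
    return distance_m / sqrt(G * depth_m)

def worst_case_detection_s(sites, sources, times):
    """Longest time, over all sources, for the wave to reach the nearest selected site."""
    return max(min(times[(src, s)] for s in sites) for src in sources)

def best_array(candidates, sources, times, k):
    """Exhaustively choose the k sites that minimize the worst-case detection time."""
    return min(combinations(candidates, k),
               key=lambda sites: worst_case_detection_s(sites, sources, times))

# Hypothetical travel times in minutes from two sources to three candidate sites.
times = {("S1", "A"): 10, ("S1", "B"): 50, ("S1", "C"): 30,
         ("S2", "A"): 60, ("S2", "B"): 15, ("S2", "C"): 35}
one_site = best_array(["A", "B", "C"], ["S1", "S2"], times, 1)
two_sites = best_array(["A", "B", "C"], ["S1", "S2"], times, 2)
```

Exhaustive search is feasible only for very small problems. A realistic design would add constraints such as signal-to-noise, survivability, and logistics, which is where a dedicated optimizer becomes useful.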

The methodology and final rationale for the siting of DART stations are the subjects of a NOAA technical memorandum (Spillane et al., 2008). The final siting decisions were based on the workshop recommendations, as well as site recommendation reports produced at NCTR in consultation with the TWCs, with input from the USGS, NDBC, and other interested parties. The technical memorandum provides a starting point for continued refinement of the siting decisions and extension of the DART array, if necessary, while also providing information to aid efforts by the international community to extend the network coverage.

The net result of the deliberations on the siting of the DART stations is the current array displayed in Figure 4.6. The prioritization of groups of these sites is presented in Table 4.1 (Spillane et al., 2008). Some of the more important issues involved in site selection are described in Box 4.1.

The committee does not find any serious gaps in the geographic coverage of the DART network as designed, with regard to providing timely and accurate warnings and forecasts of far-field tsunamis on U.S. coasts. It can certainly be argued that denser coverage of open-ocean sensors would provide important redundancy capacity (in light of current reliability problems discussed below) and would provide more opportunities to improve the accuracy of model-generated wave forecasts. From a more global perspective, gaps in coastal sea level station coverage (as revealed in the Caribbean region, for instance; see previous section), which expose vulnerabilities of non-U.S. territories in the TWCs’ AORs, could be filled by DART stations if the resources of international partners are insufficient to fill the gaps with coastal sea level stations. However, the high cost of DART acquisition and maintenance may preclude any significant network growth.

TABLE 4.1 Sub-Region Allocations and Priorities Within the Overall U.S. DART Array

| Array Sub-Group | Instruments Assigned | Pre-Existing Sites | Priority |
| --- | --- | --- | --- |
| Alaska/Aleutians | 6 | 3 | 1 |
| Western Pacific | 6 | 0 | 2 |
| Puerto Rico/Caribbean | 3 | 0 | 3 |
| West Coast | 5 | 2 | 4 |
| Southwest Pacific | 4 | 0 | 5 |
| Central/South America | 4 | 0 | 6 |
| Atlantic | 3 | 0 | 7 |
| Gulf of Mexico | 1 | 0 | 8 |
| Northwest Pacific | 5 | 0 | 9 |
| Hawaii/Mid-Pacific | 2 | 2 | N/A |

SOURCE: Spillane et al., 2008; NOAA.

BOX 4.1 Siting Considerations for DART Stations^a

Tsunami Signal-Timeliness, Signal-to-Noise, and Signal Complexity Issues:

Engineering and Survivability Issues:

... foul weather or ship scheduling. Even though tsunamis do not occur frequently, redundancy in the array is still desirable. The surface buoy has two independent complete communication systems for full redundancy. In addition, in high-risk source regions, a certain amount of overlap in spatial coverage is desirable so that instrument failures may be partially compensated by having more than one DART in the region capable of providing a timely, high-quality signal.

Communication Issues:

Logistical Issues:

Other Issues:

NOAA is to be commended for having developed a prioritization scheme for DART stations and for having rapidly deployed the DART array. Looking to the future, the committee concludes that the numbers, locations, and prioritizations of the DART stations should not be considered static. These parameters of the DART network clearly deserve frequent re-consideration in light of constantly changing fiscal realities, survivability experience, maintenance cost experience, model improvements, new technology developments (even new DART designs), increasing international contributions, and updated information on the entire suite of siting issues listed in Box 4.1. In addition, simulations of the effectiveness of the DART network, under numerous earthquake scenarios and under various DART failure scenarios, should continue to help improve the network design (Spillane et al., 2008). The potential contributions of optimization algorithms to the design process have not been exhausted.
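A simulation of network effectiveness under station failures might, in its simplest form, ask how much the detection time for each source degrades when a given station is lost. The sketch below is a minimal illustration under that assumption only. The function name, the station and source labels, and the travel times are hypothetical, and it does not represent the simulations reported by Spillane et al. (2008).

```python
def failure_impact(stations, sources, times):
    """For each single-station failure, return the worst increase in detection time.

    times maps (source, station) to the travel time from that source to that station.
    """
    baseline = {src: min(times[(src, s)] for s in stations) for src in sources}
    impact = {}
    for failed in stations:
        active = [s for s in stations if s != failed]
        impact[failed] = max(
            min(times[(src, s)] for s in active) - baseline[src]
            for src in sources
        )
    return impact

# Hypothetical travel times in minutes from two sources to three stations.
times = {("S1", "A"): 10, ("S1", "B"): 50, ("S1", "C"): 30,
         ("S2", "A"): 60, ("S2", "B"): 15, ("S2", "C"): 35}
impact = failure_impact(["A", "B", "C"], ["S1", "S2"], times)
```

In this toy case the loss of station C costs nothing as long as A and B are working, while the loss of either A or B delays detection by 20 minutes for one source. Extending the loop to pairs of failures would show where C's redundancy becomes valuable.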

A component of the periodic re-evaluations of the DART network needs to be the re-evaluation of the prioritization of each group of DART stations, not just individual stations, with detailed justifications for these determinations. In particular, the committee questions the rationale for the very low priority of the group of five DART stations deployed in the Northwest Pacific (Table 4.1) that provide coverage from the Dateline along the western Aleutian Islands, and past the Kuril Islands to Hokkaido. The Kuril Islands in particular have been the source of numerous tsunamis large enough to invoke tsunami watches and warnings. At the very least, DART stations covering the Kuril Islands would have a high value for the prevention of false alarms.

DART station prioritization could be refined by first distinguishing prioritization criteria based on the system’s primary function in the detection process. A list of criteria might include:

- detection of a large tsunami,

- detection of a medium to small tsunami (to mitigate false alarms),

- providing data for scaling forecast models during the occurrence of a large tsunami, and

- providing data for forecast model validation after the fact.