2

Scientific Foundations of Continuing Education

In 1967, the National Advisory Committee on Health Manpower recommended that professional associations and government regulatory agencies take steps to ensure the maintenance of competence in health professionals (U.S. Department of Health, Education, and Welfare, 1967). To support this objective, states in the 1970s began to mandate that health professionals receive continuing education (CE). Requirements were applied unevenly across the United States, however, and there now is variability from state to state and profession to profession regarding how much CE is needed, what kind of CE is needed, and how and when CE should be administered (Landers et al., 2005). In the late 1970s, many observers argued that the time was ripe for change in the CE system, and they raised a number of important questions: Can CE guarantee competence? Are mechanisms available to accurately assess the learning needs of health professionals? How can these learning needs best be met? How many annual contact hours are needed to ensure competence? (Mazmanian et al., 1979). Today, it is clear that this call for change went unanswered. CE has evolved organically, without an adequate system in place to ensure that the fundamental questions raised three decades ago could be addressed to inform the development and maintenance of a CE system. These still-relevant questions provide a springboard toward creating a more responsive and comprehensive system.

Pressure from a number of groups, including the Pew Taskforce on Health Care Workforce Regulation (1995) and the Institute of Medicine (IOM), has spurred debate about how best to ensure the continuing competence of health professionals. As discussed in Chapter 1, the IOM report Health Professions Education: A Bridge to Quality (2003) details five core competencies deemed necessary for all health professionals: patient-centered care, interdisciplinary team-based care, evidence-based practice, quality improvement strategies, and the use of health informatics. These competencies are intended to help create a safer, more effective, patient-centered, efficient, timely, and equitable health care system (IOM, 2001). For example, advances in the areas of evidence-based practice and quality improvement require the ability to integrate clinical knowledge with professional practice. Connecting these processes through evidence-based health professional education has the potential to revolutionize the health care system (Berwick, 2004; Cooke et al., 2006).

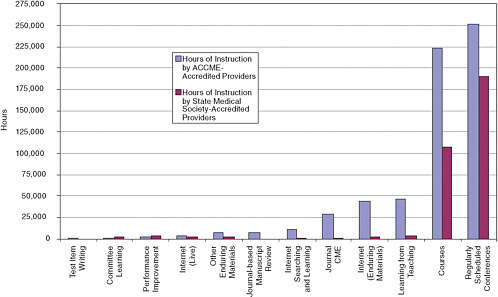

The components of CE—the CE research system, regulatory and quasi-regulatory bodies, and financing entities—are currently ill-equipped to support these core competencies consistently. For example, as this chapter later details, effective CE incorporates feedback and interaction, yet 76 percent of continuing medical education (CME) instruction hours are delivered through lectures and conferences (ACCME, 2008) that typically limit interactive exchange (Forsetlund et al., 2009). Various professions, however, have begun to use different methods of CE, including methods that better take into account the clinical practice setting (Kues et al., 2009; MacIntosh-Murray et al., 2006).

Research on CE methods, together with theories from adult learning, education, sociology, psychology, organizational change, systems engineering, and knowledge translation, has provided an initial evidence base for how CE and continuing professional development should be provided. Additionally, previous works have offered theoretical frameworks for conceptualizing CE and guiding its provision (Davis and Fox, 1994; Fox et al., 1989).

This chapter presents summary data on the ways in which CE is typically provided. The chapter discusses the most common methods of providing CE; details findings on the effectiveness of CE in general, as well as the effectiveness of specific CE methods; discusses theories that support what is known about how adults learn; and describes the attributes of successful CE methods and how theory can be applied to improve these methods.

METHODS OF PROVIDING CONTINUING EDUCATION

In its current form, CE consists primarily of didactic activities that are not always related to clinical settings or patient outcomes. Lectures and conference sessions, long the mainstay of CE, remain the most commonly used CE methods (see Figure 2-1). For physicians, courses and regularly scheduled series (e.g., grand rounds) account for 44.1 percent of total reported activities conducted by providers accredited by the Accreditation Council for Continuing Medical Education (ACCME) and 88.1 percent of total activities presented by providers accredited by state medical societies (ACCME, 2008). More than 82 percent of total hours of instruction are in the form of courses or series.

The committee made a concerted effort to incorporate data regarding methods of CE delivery from all health professions; however, the data collected for most professions are not robust and are not always reported in comparable formats. Consequently, publicly available data on pharmacy, nursing, dentistry, physical therapy, and other allied health professions’ CE are much more limited than in medicine.

In 2007-2008, the Accreditation Council for Pharmacy Education (ACPE) accredited 36,569 activities. Of these, 53 percent were “live activities,” 46 percent were home study, and 11 percent were Internet activities.¹ The category of live activities includes lectures, symposia, teleconferences, workshops, and webcasts, but the share of each of these activity types is unknown. For licensed social workers, survey participation rates provide some insight into the types of CE most often used (Table 2-1). Social workers, like physicians and pharmacists, often participate in formal, didactic workshops. Informal CE activities such as peer consultation, which may not be counted for CE credit by state licensing boards, are the methods that social workers most strongly believe change their practice behavior (Smith et al., 2006). In many health professions, journal reading is a commonly used avenue for completing CE credits.

TABLE 2-1 Methods of CE Reported by Social Workers

| Activity | Participation Rate (%) | N |
|---|---|---|
| Workshops | 97.0 | 223 |
| Peer consultation | 76.5 | 176 |
| Reading books or journals | 73.5 | 169 |
| In-service training | 66.1 | 152 |
| Supervision or mentoring | 47.4 | 109 |
| Academic courses | 7.8 | 18 |

SOURCE: Smith et al., 2006.

CE providers are increasingly using an expanding variety of CE methods. A 2008 survey of academic CME providers found an “increasing diversity” of offerings beyond traditional, didactic conferences, courses, and lectures (Kues et al., 2009, p. 21). CE programs more often use multiple educational methodologies (e.g., interaction, experiential learning) and multiple educational techniques (e.g., questioning, discussion, coaching, role play). Table 2-2 provides a list of common approaches. Data on the use of these approaches are not always available. For example, the rate at which health professionals participate in self-directed learning is not available from CE providers or accreditors because, in most health professions, CE credits (the metric for CE activities) cannot currently be earned for participation in self-directed learning.

The use of e-learning has become increasingly widespread in the training of health professionals. e-Learning modalities include educational programs delivered via electronic asynchronous or real-time communication without the constraints of place, or, in some cases, time (Wakefield et al., 2008). Although some professionals prefer traditional learning formats that include more face-to-face contact (Jianfei et al., 2008; Sargeant et al., 2006), e-learning has the advantage of enabling health professionals to set their own learning pace, review content when needed, and personalize learning experiences (Harden, 2005). Lower costs, potentially greater numbers of participants, and increased interprofessional collaborations are additional benefits of e-learning (Bryant et al., 2005). e-Learning can facilitate, for example, interprofessional team-based simulation training (Segrave and Holt, 2003). The ways in which various formats of e-learning may be used in professional practice are summarized in Table 2-3.

TABLE 2-2 Common Approaches to Providing CE

| Method | Description |
|---|---|
| Experiential and self-directed learning | A professional’s experience factors into learning activities; the structure, planning, implementation, and evaluation of learning are initiated by the learner (Davis and Fox, 1994; Stanton and Grant, 1999) |
| Reflection | An individual marks ideas, exchanges, and events for teaching-learning (Fox et al., 1989; Schön, 1987) |
| Academic detailing | Outreach in which health professionals are visited by another knowledgeable professional to discuss practice issues |
| Simulation | The act of imitating a situation or a process through something analogous. Examples include using an actor to play a patient, a computerized mannequin to imitate the behavior of a patient, a computer program to imitate a case scenario, and an animation to mimic the spread of an infectious disease in a population |
| Reminders | Paper or computer-generated prompts about issues of prevention, diagnosis, or management delivered at the time and point of care |
| Protocols and guidelines | A set of rules generated by piecing together research-based evidence in the medical literature, representing the optimal approaches to managing a medical disease |
| Audit/feedback | Health care performance is measured and the results are presented to the professional |
| Multifaceted methods | Comprehensive programs designed to improve health professional performance or health care outcomes using a variety of methods |
| Educational materials | Publications or mailings of written recommendations for clinical care, including guidelines and educational computer programs |
| Opinion leaders | Individuals recognized by their own community as clinical experts with well-developed interpersonal skills |
| Patient-mediated strategies | Techniques that increase the education of patients and health consumers (e.g., health promotion media campaigns, directed prompts) |

TABLE 2-3 Examples of e-Learning

DEFINING OUTCOME MEASURES

Assessing the effectiveness of CE methods requires clarifying desired outcomes. Traditionally, efforts to measure CE effectiveness were constrained by a lack of consensus around ideal measures for evaluating CE learning outcomes (Dixon, 1978). Participation rates, participant satisfaction, and knowledge gains (as evaluated by postactivity exams) were used to show that participants in a CE activity had reached at least the “knows” outcome level indicated in Table 2-4. The science of measuring outcomes is advancing beyond measuring procedural knowledge as researchers have come to focus on linking CE to patient care and population health (Miller, 1990; Moore et al., 2009; Tian et al., 2007). An effective CE method is now understood to be one that enhances provider performance and thereby improves patient outcomes (Moore, 2007).

The relationships among teaching, learning, clinician competence, clinician performance, and patient outcomes are difficult to measure (Jordan, 2000; Mazmanian et al., 2009; Miller, 1990) and are complicated by the inherent challenges in measuring actual, not just potential or reported, behavior. In health care settings, dependent variables may remain difficult to measure (Eccles et al., 2005) because linking participation in CE to changes in the practice setting is a complex process that cannot easily be tracked using current methods. Additionally, the provision of interprofessional care makes it difficult to attribute a patient outcome to the education of any one professional (Dixon, 1978). Furthermore, due to the nature of some types of professional work, such as social work, evaluating outcomes of client-professional interaction is inherently difficult, if not ethically impossible (Griscti and Jacono, 2006; Jordan, 2000).

TABLE 2-4 Continuum of Outcomes for Planning and Assessing CE Activities

| Miller (1990) | Moore et al. (2009) | Description |
|---|---|---|
| | Participation | The number of health professionals who participated in the CME activity |
| | Satisfaction | The degree to which the expectations of the participants about the setting and delivery of the CME activity were met |
| Knows | Learning: Declarative knowledge | The degree to which participants are able to state what the CE activity intended them to know |
| Knows how | Learning: Procedural knowledge | The degree to which participants are able to state how to do what the CE activity intended them to know how to do |
| Shows how | Competence | The degree to which participants show in an educational setting how to do what the CE activity intended them to be able to do |
| Does | Performance | The degree to which participants do in their practices what the CE activity intended them to be able to do |
| | Patient health | The degree to which the health status of patients improves due to changes in the practice behavior of participants |
| | Community health | The degree to which the health status of a community of patients changes due to changes in the practice behavior of participants |

SOURCE: Adapted from Moore et al., 2009.

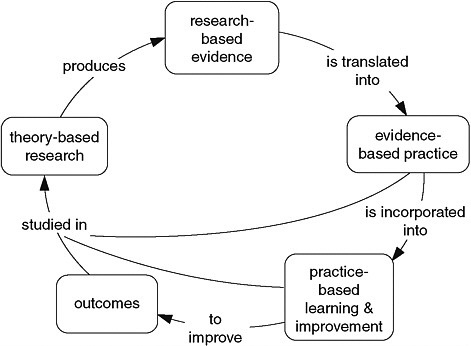

Continuing education is concerned with both health professional learning processes and broader outcomes, including patient outcomes and organizational change. Therefore, CE is itself part of a complex learning system and relies on evidence-based research that is driven by theory. Theories are developed over time and continuously build on evidence-based practice, practice-based learning, and outcomes. However, the transition between research and practice is often difficult. Closing the gaps between research and practice, as depicted in Figure 2-2, may be achieved by blending clinical practice and knowledge (MacIntosh-Murray et al., 2006).

In the absence of data on patient outcomes, health professionals’ self-reported knowledge gains and behavior change resulting from participation in a CE activity may provide insight into learning. In some cases, self-reported gains have been shown to reflect actual behavior change (Curry and Purkis, 1986; Davis et al., 2006; Parker III and Mazmanian, 1992; Pereles et al., 1997; Wakefield et al., 2003), but self-reported knowledge gains and behavior change may not always be accurate and valid (Gordon, 1991). Self-reports afford health professionals a voice in evaluating themselves and their motivations. Although self-reports should never be the sole basis for decision making regarding the general effectiveness of continuing education, they may serve important purposes by enabling CE providers to identify motivations and gaps in knowledge (Eva and Regehr, 2008; Fox and Miner, 1999).

FIGURE 2-2 Closing the research-practice gaps for health care professionals and continuing education professionals.

SOURCE: MacIntosh-Murray et al., 2006.

MEASURING THE EFFECTIVENESS OF CONTINUING EDUCATION

The effectiveness of continuing education has been researched, debated, and discussed for decades. An oft-cited review of CME found that the weakness of most published evaluations limited possible conclusions about the effectiveness of CME (Bertram and Brooks-Bertram, 1977), while a seminal review of eight studies provided evidence that formal CME helped physicians improve their clinical performance (Stein, 1981). Soon after publication of that review, however, a benchmark study in the New England Journal of Medicine stated that “CME does not work” (Sibley et al., 1982). To evaluate these contradictory statements and findings in the contemporary context, the committee reviewed evidence on the effectiveness of CE methods. The committee’s literature search identified more than 18,000 articles from fields including CE, knowledge translation, interprofessional learning and practice, and faculty development; the committee then synthesized the results through three rounds of detailed assessment of each study’s design, methods, outcomes, and conclusions. A total of 62 studies and 20 systematic reviews and meta-analyses relevant to CE methods, cost-effectiveness, or educational theory were included (see Appendix A). Studies from a variety of health professions were included.

The literature review revealed that researchers have used a range of research designs to address a broadly defined research agenda, but the research methods used generally were weak and often lacked valid and reliable outcome measures. Several authors (Davis et al., 1999; Marinopoulos et al., 2007; O’Brien et al., 2001; Wensing et al., 2006) have questioned the suitability of systematic reviews as a tool for CE research because there is no widely accepted taxonomy for comparing CE methods. Indeed, for several decades, authors have maintained that data on CE effectiveness are limited because studies of CE methods do not uniformly document the major elements of the learning process (Stein, 1981). Although 29 of the evaluated studies were randomized controlled trials assessing changes in clinical practice outcomes based on participation in a CE method, none had been validated through replication. While controlled trials produce quantifiable end points, they do not fully explain whether outcomes occur as a result of participation in CE (Davis et al., 1999); thus, a variety of research methods may be necessary, and cohort and case-control designs may be more appropriate (Mazmanian and Davis, 2002). In general, more robust research methods must be developed and used to assess CE effectiveness adequately.

Research studies measuring outcomes in terms of behavior, clinical behavior, and/or patient outcomes were generally weak in both quality and results. Of the 62 studies reviewed, 8 used patient outcomes (e.g., mortality, smoking cessation, cardiac complications) to assess a health professional’s learning resulting from participation in a CE activity. In lieu of outcome measures linked directly to patient outcomes, 9 of the 62 studies used self-reported behavior change to assess effectiveness. Fourteen studies examined prescribing to detect changes in prescription trends that may have resulted from a health professional’s participation in a CE activity.

Overall Effectiveness of CE

Although CE research is fragmented and may focus too heavily on learning outside of clinical settings, there is evidence that CE works, in some cases, to improve clinical practice and patient outcomes. For example, a seminal qualitative study explored how physicians change and found that CME can be a “force for change” within a comprehensive strategy for learning (Fox et al., 1989). A recent comprehensive analysis of CME identified 136 articles and 9 systematic reviews summarizing the evidence regarding CME effectiveness in imparting knowledge and skills, changing attitudes and practice behavior, and improving clinical outcomes (Marinopoulos et al., 2007). Although this analysis could not determine the effectiveness of every CME method covered, it found CME, in general, to be effective for acquiring and sustaining knowledge, attitudes, and skills, for changing behaviors, and for improving clinical outcomes. Some evidence has supported the overall effectiveness of CE in specific instances (Davis et al., 1995; Fox, 2000; Mazmanian and Davis, 2002; Robertson et al., 2003), but too little evidence exists to make a compelling case for the effectiveness of CE under specific circumstances.

Effectiveness of Specific CE Methods

The findings of several notable studies (Davis et al., 1992, 1995, 1999; Grimshaw et al., 2002; Grol, 2002; Grol and Grimshaw, 2003) are in general agreement that some methods are more “predispose[d] toward success” than others (Slotnick and Shershneva, 2002). The committee’s review, like other reviews on CE effectiveness, provides only limited conclusions about the effectiveness of specific methods. Some tentative insights include:

Interactive techniques, such as academic detailing and audit/feedback, generally seem effective. Previous research has indicated that interactive workshops can result in moderately large changes in professional practice behavior, compared with didactic presentations (O’Brien et al., 2001). Print media, such as self-study posters, were generally ineffective. Methods that included multiple exposures to activities tend to produce more positive results than one-time methods, a finding aligned with previous studies indicating that health professionals are more likely to apply what they have learned in practice if they participate in multiple learning activities on a single topic (Davis and Galbraith, 2009; Davis et al., 1992, 1995; Mansouri and Lockyer, 2007; Marinopoulos et al., 2007; Mazmanian et al., 2009).

Simulations appear to be effective in some instances but not in others. Simulations to teach diagnostic techniques generally are more effective than simulations to teach motor skills. Assessing the effectiveness of simulation is complicated by the diversity of simulation types, ranging from case discussions to high-fidelity simulators.

e-Learning offers opportunities to enhance learning and patient care; however, without a comprehensive body of evidence, judging the effectiveness of e-learning methods is difficult. The methods for evaluating e-learning effectiveness are relatively weak, which makes demonstrating the effect of e-learning on patient outcomes difficult. Data may emerge, however, as technology and metrics mature (Cook et al., 2008; Fordis et al., 2005; Maio et al., 2003; Pui et al., 2005; Wutoh et al., 2004). Ultimately, e-learning may be equal to or better than more traditional learning methods for individual health professionals, as measured by learner satisfaction and the acquisition of knowledge and skills.

THEORETICAL UNDERPINNINGS OF CONTINUING EDUCATION

There is a 40-year history of conceptualizing CE (Cervero, 1988; Eraut, 2001; Houle, 1980; Nowlen, 1988). The earliest model, called the “update model,” was built on methods of teaching, whereas a different educational model sought to develop evidence about the ways in which adults learn (Miller, 1967). Since then, research has focused on how adult learning is best accomplished, for example, by involving learners in identifying and solving problems. Considerations of context have also been addressed, particularly regarding how conditions shape CE in practice. One example is the concept that adult learners are influenced by a “double helix” of professional performance shaped by individual ability within complex organizational and cultural circumstances (Nowlen, 1988, p. 73). Many researchers have elaborated and extended this practice-based CE model (Cervero, 1988; Eraut, 1994, 2001; Houle, 1980; Nowlen, 1988), and practice-based learning is now at the forefront of educational agendas throughout the professions (Cervero, 2001; Moore and Pennington, 2003). The principal emphasis is no longer on content but rather on what is attained in knowledge, skills, attitude, and improved performance at the end of a learning activity.

The design of CE activities should be guided by theoretical insights into how learning occurs and what makes the application of new knowledge more likely. Insights can be drawn from the literature of several academic disciplines, including adult education, sociology, psychology, knowledge translation, organizational change, engineering, and systems learning (Bennett et al., 2000). However, CE providers too often fail to base their methods on theoretical perspectives (Olson et al., 2005), despite the fact that many of the most effective CE methods have a theoretical basis in adult education (Carney, 2000; Hartzell, 2007; Mann et al., 2007; Pinney et al., 2007; Pololi et al., 2001).

Select Theories on Learning

A range of theoretical perspectives has been offered on how adults learn (Merriam, 1987; Merriam and Caffarella, 2007). These perspectives cover such topics as what motivates a person to begin a lifelong learning process and how the relevance of the learned material affects how much knowledge is retained. Table 2-5 describes several theories of learning that have been influential in shaping adult education.

TABLE 2-5 Overview of Select Theories of Learning

| Theory | Description |
|---|---|
| Lifelong learning | An approach to learning whereby health professionals continually engage in learning for personal goals |
| Theories of motivation | A body of theories (e.g., discrepancy analysis, proficiency theory) explaining intrinsic motivations for engaging in learning |
| Self-directed learning | An approach to learning whereby the structure, planning, implementation, and evaluation of learning are initiated by the learner |
| Reflection | A learning tool in which an individual evaluates how experiences can guide action |
| Experiential learning | An approach to learning whereby a health professional’s experiences are seen as an educational resource |

Lifelong Learning

Experience is a valuable component of lifelong learning. CE is a dynamic process that moves from inquiry to instruction to performance and continues throughout a health professional’s career (Houle, 1984). Inquiry might start with a health professional’s identification of a clinical question; instruction is the process through which knowledge or skills are then disseminated. Lifelong learning includes formal and informal modes of instruction, such as reflection, casual dialogue with peers, and lectures. CE outcomes may be best achieved when the instruction results in a health professional “internalizing an idea or using a practice habitually” (Houle, 1980, p. 32).

Through the process of lifelong learning, health professionals become aware of the reasoning and evidence that underlie their beliefs, biases, and habits (King and Kitchener, 1994). This approach to learning, in which health professionals continually engage in learning for their own personal goals, contrasts with simply participating in formal CE for the purpose of receiving credit (King and Kitchener, 1994). Because lifelong learners are sophisticated and complex, theorists have sought to explain the implications of these complexities for the provision of CE to health professionals (Davis, 2004; Davis and Simmt, 2003; Davis and Sumara, 2006; Doll, 1993; Osberg, 2005). Complex learners are influenced by their own knowledge base as well as by their collaborative relationships with others. Teachers of complex learners must therefore attend to these various sources of influence (McMurtry, 2008).

Learning experiences are construed by health professionals in light of previous experiences and influences from other professionals and with regard to wider social processes. Thus, CE involves not only explicit curricular goals, but also recognition of unpredictable interactions, ideas, and relationships that emerge from professionals and CE providers. This kind of CE curriculum might, for example, favor collective learning that incorporates and builds on the variety of backgrounds, interests, knowledge, abilities, and personalities within a typical community of practice. The curriculum might favor a dynamic in which the CE provider and the health professional participate together rather than working under a more basic teacher-driven approach (Davis and Simmt, 2003).

Theories of Motivation

Health professionals themselves can influence the effectiveness of CE activities through the attitudes they take during the experience and the methods they select (Moon, 2004). Motivation may come from an external source, such as the need to satisfy CE requirements, or from an internal source, such as curiosity and a desire to learn. A number of theories, including discrepancy analysis, seek to explain the origins of this type of intrinsic motivation. In discrepancy analysis, for example, the learner is assumed to become motivated when he senses a discrepancy between things as they are and things as they ought to be. The learner seeks to increase competency through learning, thereby restoring balance and bringing what is and what ought to be closer in line (Fox and Miner, 1999).

A number of other theories, including proficiency theory, suggest that gaps in knowledge may produce discomfort and therefore motivation to change. Proficiency theory holds that motivation rises when a person sees a gap between the desired level of proficiency and his own (Knox, 1990; Merriam, 1987; Merriam and Caffarella, 2007). A clinician’s ability to carry out learning and change is also related to his degree of confidence in his own abilities. The learner’s sense of self-efficacy—the belief in his own ability to meet prospective challenges—impacts learning because those with greater self-efficacy are likely to make greater efforts and persist longer in seeking to learn (Bandura, 1977).

Self-directed Learning

If health professionals choose to learn only as problems arise, they may not be performing the critical self-examination needed to identify situations before they become problematic. Therefore, internal needs assessment, in which adult learners assess their own learning needs, plays an important role in learning. The underlying theory behind self-directed learning comes from Knowles’ theoretical assumptions regarding adult learners (see Table 2-6). Self-directed learning offers opportunities for health professionals to assess their previous experiences and existing knowledge and then set learning goals, locate resources, decide which learning methods to use, and evaluate processes (Brookfield, 1995). Self-directed learners thus are more purposeful, motivated, and proactive (Knowles, 1975).

Self-directed learning activities are meant to be more closely tailored to the needs of the learner than activities that are controlled and directed by others. Individuals, because they are “inherently self-regulating,” can and do identify clinical questions, set their own learning goals, develop strategies to address them, implement actions, and evaluate the success of those approaches (Mann and Ribble, 1994, p. 71). Evidence suggests that adults consistently seek to direct their own learning (Fox et al., 1989; Long, 1997) and that they plan and direct self-learning projects on a regular basis (Tough, 1971).

The central motivations that lead physicians, for example, to initiate learning include a desire for enhanced competence, the perception that their clinical environment presses for a change, and social pressures arising from relationships with colleagues in the same institution or profession. Additionally, professionalism, which is an internalized set of socially generated expectations, is a powerful agent for change (Fox et al., 1989). These findings indicate that comparing a health professional’s performance against that of his peers can help identify learning needs.

TABLE 2-6 Theoretical Assumptions of Andragogy: “The Art and Science of Helping Adults Learn”

| No. | Assumption |
|---|---|
| 1 | Adults move toward self-direction as they mature (i.e., they become more independent) |
| 2 | Experience is a resource for learning |
| 3 | Motivations to learn are oriented to the usefulness of the knowledge |
| 4 | Orientation to learning shifts from subject-centered to problem-centered |

SOURCE: Knowles, 1980.

Reflection

Reflection is a learning tool with implications for the teaching-learning process (Schön, 1983, 1987), particularly for health professionals who must learn from practice (Benner and Tanner, 1987). Professionals, despite operating in “zones of mastery,” sometimes face unique and complex situations that are not resolved by using habitual practices (Schön, 1983). Through reflection, health professionals can incorporate new knowledge into practice.

Two different methods of reflection can facilitate learning: reflection-in-action and reflection-on-action. Through reflection-in-action, a health professional can reframe an experience while it is unfolding to determine whether or how it fits with his existing knowledge base (Schön, 1983, 1987). Reflection-in-action, which has been termed “thinking on your feet,” problem solving, and “single-loop learning,” involves drawing on past experience, especially when workplace pressure prevents a professional from asking questions or admitting he does not know (Bierema, 2003; Smith, 2001). In contrast, reflection-on-action, also termed “double-loop learning,” occurs after the fact, when the professional evaluates how an experience can guide future action (Argyris, 1991; Schön, 1983). Teaching health professionals to engage in reflection-on-action is a key component in developing the skills necessary to be a self-directed learner.

The point at which a health professional does not know the answer—the point at which he can engage in reflective practice—is a teachable moment that may be ignored by health professionals who are unaware of or choose not to use reflection and self-assessment (Davis et al., 2006; Eva et al., 2004; Hill, 1976). Teachable moments, which are also referred to as clinical questions, are essential to health professionals’ learning (Moore and Pennington, 2003). Learning may best occur in the context of patient care when it is directly applicable to clinical questions (Ebell and Shaughnessy, 2003).

Habits, frames of reference, feelings, and value judgments can influence the way a professional thinks, acts, and reflects (Mezirow, 1990). For example, a recurrent theme in cognitive research on decision making is that humans are prone to biases. For CE, this finding suggests a change in perspective: CE providers might demonstrate how pervasive these biases are and teach professionals to recognize the relatively infrequent situations in which they lead to error (Regehr and Norman, 1996). Reflection allows health professionals to become aware of these underlying assumptions and biases and thereby to influence how they think and act.

Experiential Learning

The start and end of any teachable moment or period of reflection should involve self-assessment, through which the professional critically reflects on how the learning experience should inform future decisions about both clinical care and learning (Gibbs, 1988). Experiential learning links a health professional’s experiences with theory about what should be done (Stanton and Grant, 1999). The cycle of experiential learning begins and ends in active experiences that include the planning, learning, and application of new knowledge (Dennison and Kirk, 1990; Kolb, 1984). The planning phase may include needs assessment and curriculum design, while the learning phase itself may incorporate a variety of educational methods, such as peer appraisal, self-reflection, case studies, and role play (Stanton and Grant, 1999). Experiential learning is based on the idea that teaching should be grounded in learners’ experiences and that these experiences represent a valuable educational resource.

ATTRIBUTES OF SUCCESSFUL CE METHODS

Health professionals face contextual influences when attempting to apply learning in the workplace. Processes, systems, and traditions can facilitate or hinder a learner’s use of new knowledge in practice; the presence or absence of support for change, resources, and opportunities to apply learning can affect whether a learner applies new knowledge (Ottoson and Patterson, 2000). While practice context can affect educational outcomes, so can the ways in which CE is delivered. The committee determined that effective CE activities should:

- Incorporate needs assessments to ensure that the activity is controlled by and meets the needs of health professionals;
- Be interactive (e.g., group reflection, opportunities to practice behaviors);
- Employ ongoing feedback to engage health professionals in the learning process;
- Use multiple methods of learning and provide adequate time to digest and incorporate knowledge; and
- Simulate the clinical setting.

The foundations of these attributes lie in bodies of theory that explain how and why CE prompts health professionals to evaluate their existing knowledge base when faced with clinical questions. These attributes indicate ways in which CE providers can best affect the learning of health professionals and explain, for example, why effective methods (e.g., reminders, academic detailing) tend to be more active and are used as part of a multimethod approach. The attributes also lend credence to providing reinforcement through techniques such as audit and feedback, because these methods may motivate health professionals to change clinical behavior when their behavior is not aligned with best practices or with the behavior of their peers (Eisenberg, 1986; Greco and Eisenberg, 1993).

Professionals themselves, however, are primarily responsible for being self-directed, lifelong learners. They must take personal responsibility for developing their own short- and long-term learning goals and using the best available evidence to address clinical questions. Health professionals give a number of reasons for not engaging in learning outside of required CE, including lack of time, insufficient compensation (e.g., money, CE credits), or poor system support. Some health professionals see little reason to engage in self-directed learning because they believe themselves to be experts by virtue of their titles. To counteract this tendency, health care organizations and CE providers could foster a culture in which health professionals are not complacent about their current performance and recognize the need for additional, advanced learning (Bierema, 2003).

CONCLUSION

The literature review of concepts that span academic disciplines provides evidence that some methods of CE—including some traditional, formal methods; informal methods; and newer, innovative methods—can be conduits for positive change in health professionals’ practice. There also is evidence that health professionals often need multiple learning opportunities and multiple methods of education, such as practicing self-reflection in the workplace, reading journal articles that report new clinical evidence, and participating in formal CE lectures, if they are to most effectively change their performance and, in turn, improve patient outcomes.

The evidence is also strong, however, that continuing education is too often disconnected from theories of how adults learn and from the actual delivery of patient care. As a result, CE in its present form fails to deliver the most useful and important information to health professionals, leaving them unable to adopt evidence-based approaches efficiently to best improve patient outcomes and population health. Closing the gap will require defining research problems (Geiselman, 1984), using rigorous research techniques (Felch, 1993), developing “scholarly practitioners” (Fox, 1995, p. 5), and producing research results relevant to practitioners (Conway and Clancy, 2009).

A comprehensive research agenda should do the following:

- Identify theoretical frameworks. Despite repeated calls for moving toward evidence-based CE (Davis et al., 1992, 1995; Mazmanian and Davis, 2002), appropriate theoretical frameworks have yet to be fully identified and tested.
- Determine proven and innovative CE methods and the degree to which they apply in various contexts. The literature does not conclusively identify the most effective CE delivery methods, the correct mixture of CE methods, or the amount of CE needed to maintain competency and improve clinical outcomes. CE providers have little evidence on which to base the adoption of proven and innovative methods, and health professionals have no dependable basis for choosing one CE method over another.
- Define CE outcome measures. Educational theory emphasizes that effective learning requires matching the curriculum to desired outcomes. While CE aims to improve competence and thus close the gap between evidence and practice, the outcomes of CE (i.e., what CE methods should explicitly aim to achieve) have not been defined for or by CE regulators, providers, or consumers. This makes it difficult for regulatory bodies to hold CE providers accountable.
- Determine influences on learning. The committee identified the following attributes and principles as essential to the effectiveness of a CE method: needs assessments should guide CE, CE should be interactive, ongoing feedback should be employed, and CE should employ multiple learning methods. More research is needed to better understand how internal and external characteristics are associated with CE outcomes. This might include formal inquiry into the reflexivity of learning in professional practice.

REFERENCES

ACCME (Accreditation Council for Continuing Medical Education). 2008. ACCME annual report data 2007. http://www.accme.org/dir_docs/doc_upload/207fa8e2bdbe-47f8-9b65-52477f9faade_uploaddocument.pdf (accessed January 16, 2009).

Argyris, C. 1991. Teaching smart people how to learn. Harvard Business Review May-June:99-109.

Bandura, A. 1977. Social learning theory. Englewood Cliffs, NJ: Prentice Hall.

Benner, P., and C. Tanner. 1987. Clinical judgment: How expert nurses use intuition. American Journal of Nursing 87(1):23-31.

Bennett, N. L., D. A. Davis, W. E. J. Easterling, P. Friedmann, J. S. Green, B. M. Koeppen, P. E. Mazmanian, and H. S. Waxman. 2000. Continuing medical education: A new vision of the professional development of physicians. Academic Medicine 75:1167-1172.

Bertram, D. A., and P. A. Brooks-Bertram. 1977. The evaluation of continuing medical education: A literature review. Health Education Monographs 5:330-362.

Berwick, D. 2004. Escape fire: Designs for the future of health care. San Francisco, CA: Jossey-Bass.

Bierema, L. L. 2003. Systems thinking: A new lens for old problems. Journal of Continuing Education in the Health Professions 23(S1):S27-S33.

Brookfield, S. D. 1995. Becoming a critically reflective teacher. San Francisco, CA: Jossey-Bass.

Bryant, S. L., and T. Ringrose. 2005. Evaluating the doctors.net.uk model of electronic continuing medical education. Work Based Learning in Primary Care 3:129-142.

Carney, P. 2000. Adult learning styles: Implications for practice teaching in social work. Social Work Education 19(6):609-626.

Cervero, R. M. 1988. Effective continuing education for professionals. San Francisco, CA: Jossey-Bass.

———. 2001. Continuing professional education in transition, 1981-2000. International Journal of Lifelong Education 20(1-2):16-30.

Conway, P. H., and C. Clancy. 2009. Transformation of health care at the front line. Journal of the American Medical Association 301(7):763-765.

Cook, D. A., A. J. Levinson, S. Garside, D. M. Dupras, P. J. Erwin, and V. M. Montori. 2008. Internet-based learning in the health professions: A meta-analysis. Journal of the American Medical Association 300(10):1181-1196.

Cooke, M., D. M. Irby, W. Sullivan, and K. M. Ludmerer. 2006. American medical education 100 years after the Flexner report. New England Journal of Medicine 355(13):1339-1344.

Curry, L., and I. E. Purkis. 1986. Validity of self-reports of behavior changes by participants after a CME course. Journal of Medical Education 61:579-584.

Davis, B. 2004. Inventions of teaching: A genealogy. Mahwah, NJ: Lawrence Erlbaum.

Davis, B., and E. Simmt. 2003. Understanding learning systems: Mathematics education and complexity science. Journal for Research in Mathematics Education 34:137-167.

Davis, B., and D. Sumara. 2006. Complexity and education. Mahwah, NJ: Lawrence Erlbaum.

Davis, D., and R. D. Fox. 1994. The physician as learner: Linking research to practice. Chicago, IL: American Medical Association.

Davis, D., and R. Galbraith. 2009. Continuing medical education effect on practice performance: Effectiveness of continuing medical education: American College of Chest Physicians evidence-based educational guidelines. Chest 135(3 Suppl):42S-48S.

Davis, D. A., M. A. Thomson, A. D. Oxman, and R. B. Haynes. 1992. Evidence for the effectiveness of CME: A review of 50 randomized controlled trials. Journal of the American Medical Association 268(9):1111-1117.

———. 1995. Changing physician performance: A systematic review of the effect of continuing medical education strategies. Journal of the American Medical Association 274(9):700-705.

Davis, D., M. A. O’Brien, N. Freemantle, F. M. Wolf, P. Mazmanian, and A. Taylor-Vaisey. 1999. Impact of formal continuing medical education: Do conferences, workshops, rounds, and other traditional continuing education activities change physician behavior or health care outcomes? Journal of the American Medical Association 282(9):867-874.

Davis, D. A., P. E. Mazmanian, M. Fordis, R. Van Harrison, K. E. Thorpe, and L. Perrier. 2006. Accuracy of physician self-assessment compared with observed measures of competence: A systematic review. Journal of the American Medical Association 296(9):1094-1102.

Dennison, B., and B. Kirk. 1990. Do, review, learn, apply: A simple guide to experience-based learning. Oxford, UK: Blackwell.

Dixon, J. 1978. Evaluation criteria in studies of continuing education in the health professions: A critical review and a suggested strategy. Evaluation and the Health Professions 1(2):47-65.

Doll, W. 1993. A post-modern perspective on curriculum. New York: Teachers College Press.

Ebell, M. H., and A. Shaughnessy. 2003. Information mastery: Integrating continuing medical education with the information needs of clinicians. Journal of Continuing Education in the Health Professions 23(S1):S53-S62.

Eisenberg, J. M. 1986. Doctors’ decisions and the cost of medical care. Ann Arbor, MI: Health Administration Press.

Eraut, M. 1994. Developing professional knowledge and competence. London: Falmer.

———. 2001. Do continuing professional development models promote one-dimensional learning? Medical Education 35(1):8-11.

Eva, K. W., and G. Regehr. 2008. “I’ll never play professional football” and other fallacies of self-assessment. Journal of Continuing Education in the Health Professions 28(1):14-19.

Eva, K. W., J. P. Cunnington, H. I. Reiter, D. R. Keane, and G. R. Norman. 2004. How can I know what I don’t know? Poor self assessment in a well-defined domain. Advances in Health Sciences Education 9:211-224.

Felch, W. C. 1993. Plus ça change. Journal of Continuing Education in the Health Professions 13(4):315-316.

Fordis, M., J. E. King, C. M. Ballantyne, P. H. Jones, K. H. Schneider, S. J. Spann, S. B. Greenberg, and A. J. Greisinger. 2005. Comparison of the instructional efficacy of Internet-based CME with live interactive CME workshops: A randomized controlled trial. Journal of the American Medical Association 294(9):1043-1051.

Forsetlund, L., A. Bjørndal, A. Rashidian, G. Jamtvedt, M. A. O’Brien, F. Wolf, D. Davis, J. Odgaard-Jensen, and A. D. Oxman. 2009. Continuing education meetings and workshops: Effects on professional practice and health care outcomes. Cochrane Database of Systematic Reviews (2):CD003030.

Fox, R. D. 1995. Narrowing the gap between research and practice. Journal of Continuing Education in the Health Professions 15(1):1-7.

———. 2000. Using theory and research to shape the practice of continuing professional development. Journal of Continuing Education in the Health Professions 20:238-246.

Fox, R. D., and C. Miner. 1999. Motivation and the facilitation of change, learning, and participation in educational programs for health professionals. Journal of Continuing Education in the Health Professions 19(3):132-141.

Fox, R. D., P. E. Mazmanian, and R. W. Putnam, eds. 1989. Changing and learning in the lives of physicians. New York: Praeger.

Geiselman, L. A. 1984. Editorial. Möbius: A Journal for Continuing Education Professionals in Health Sciences 4(4):1-5.

Gibbs, G. 1988. Learning by doing: A guide to teaching and learning methods. London, UK: Further Education Unit.

Gordon, M. J. 1991. A review of the validity and accuracy of self-assessments in health professions training. Academic Medicine 66(12):762-769.

Greco, P. J., and J. M. Eisenberg. 1993. Changing physicians’ practices. New England Journal of Medicine 329:1271-1275.

Grimshaw, J. M., M. P. Eccles, A. E. Walker, and R. E. Thomas. 2002. Changing physicians’ behavior: What works and thoughts on getting more things to work. Journal of Continuing Education in the Health Professions 22(4):237-243.

Griscti, O., and J. Jacono. 2006. Effectiveness of continuing education programmes in nursing: Literature review. Journal of Advanced Nursing 55(4):8.

Grol, R. 2002. Changing physicians’ competence and performance: Finding the balance between the individual and the organization. Journal of Continuing Education in the Health Professions 22(4):244-251.

Grol, R., and J. Grimshaw. 2003. From best evidence to best practice: Effective implementation of change in patients’ care. Lancet 362(9391):1225-1230.

Harden, R. M. 2005. A new vision for distance learning and continuing medical education. Journal of Continuing Education in the Health Professions 25(1):43-51.

Hartzell, J. 2007. Adult learning theory in medical education. American Journal of Medicine 120(11):e11.

Hill, D. E. 1976. Teachers’ adaptation: “Reading” and “flexing” to students. Journal of Teacher Education 27:268-275.

Houle, C. O. 1980. Continuing learning in the professions. San Francisco, CA: Jossey-Bass.

———. 1984. Patterns of learning. San Francisco, CA: Jossey-Bass.

IOM (Institute of Medicine). 2001. Improving the quality of long-term care. Washington, DC: National Academy Press.

———. 2003. Health professions education: A bridge to quality. Washington, DC: The National Academies Press.

Jianfei, G., S. Tregonning, and L. Keenan. 2008. Social interaction and participation: Formative evaluation of online CME modules. Journal of Continuing Education in the Health Professions 28(3):172-179.

Jordan, S. 2000. Educational input and patient outcomes: Exploring the gap. Journal of Advanced Nursing 31(2):461-471.

King, P. M., and K. S. Kitchener. 1994. Developing reflective judgment. San Francisco, CA: Jossey-Bass.

Knowles, M. S. 1975. Self-directed learning: A guide for learners and teachers. Englewood Cliffs, NJ: Prentice Hall.

———. 1980. The modern practice of adult education. Andragogy versus pedagogy. Englewood Cliffs, NJ: Prentice Hall/Cambridge.

Knox, A. B. 1990. Influences on participation in continuing education. Journal of Continuing Education in the Health Professions 10(3):261-274.

Kolb, D. A. 1984. Experiential learning: Experience as the source of learning and development. Englewood Cliffs, NJ: Prentice Hall.

Kues, J., D. Davis, L. Colburn, M. Fordis, I. Silver, and O. Umuhoza. 2009. Academic CME in North America: The 2008 AAMC/SACME Harrison Survey. Washington, DC: Association of American Medical Colleges.

Landers, M. R., J. W. McWhorter, L. L. Krum, and D. Glovinsky. 2005. Mandatory continuing education in physical therapy: Survey of physical therapists in states with and states without a mandate. Physical Therapy 85(9):861-871.

Long, H. B., ed. 1997. Expanding horizons in self-directed learning. Norman, OK: Public Managers Center, College of Education, University of Oklahoma.

MacIntosh-Murray, A., L. Perrier, and D. Davis. 2006. Research to practice in the Journal of Continuing Education in the Health Professions: A thematic analysis of volumes 1 through 24. Journal of Continuing Education in the Health Professions 26(3):230-243.

Maio, V., D. Belazi, N. I. Goldfarb, A. L. Phillips, and A. G. Crawford. 2003. Use and effectiveness of pharmacy continuing-education materials. American Journal of Health-System Pharmacy 60(16):1644-1649.

Mann, K., and J. Ribble. 1994. The role of motivation in self-directed learning. In The physician as learner: Linking research to practice. Edited by D. A. Davis and R. D. Fox. Chicago, IL: American Medical Association.

Mann, K., J. Gordon, and A. MacLeod. 2007. Reflection and reflective practice in health professions education: A systematic review. Advances in Health Sciences Education 1-27.

Mansouri, M., and J. Lockyer. 2007. A meta-analysis of continuing medical education effectiveness. Journal of Continuing Education in the Health Professions 27(1):6-15.

Marinopoulos, S. S., T. Dorman, N. Ratanawongsa, L. M. Wilson, B. H. Ashar, J. L. Magaziner, R. G. Miller, P. A. Thomas, G. P. Prokopowicz, R. Qayyum, and E. B. Bass. 2007. Effectiveness of continuing medical education. Evidence report/technology assessment no. 149. Rockville, MD: Agency for Healthcare Research and Quality.

Mazmanian, P. E., and D. A. Davis. 2002. Continuing medical education and the physician as a learner: Guide to the evidence. Journal of the American Medical Association 288(9):1057-1060.

Mazmanian, P. E., D. E. Moore, R. M. Mansfield, and M. P. Neal. 1979. Perspectives on mandatory continuing medical education. Southern Medical Journal 72(4):378-380.

Mazmanian, P. E., D. A. Davis, and R. Galbraith. 2009. Continuing medical education effect on clinical outcomes. Chest 135(3 Suppl):49S-55S.

McMurtry, A. 2008. Complexity theory 101 for educators: A fictional account of a graduate seminar. McGill Journal of Education 43(3):265-282.

Merriam, S. B. 1987. Adult learning and theory building: A review. Adult Education Quarterly 37(4):187-198.

Merriam, S. B., and R. S. Caffarella. 2007. Learning in adulthood: A comprehensive guide. San Francisco, CA: Jossey-Bass.

Mezirow, J. 1990. Fostering critical reflection in adulthood. San Francisco, CA: Jossey-Bass.

Miller, G. E. 1967. Continuing education for what? Journal of Medical Education 42(4):320-326.

———. 1990. The assessment of clinical skills/competence/performance. Academic Medicine 65(9 Suppl):S63-S67.

Moon, J. 2004. Using reflective learning to improve the impact of short courses and workshops. Journal of Continuing Education in the Health Professions 24(1):4-11.

Moore, D. E. 2007. How physicians learn and how to design learning experiences for them: An approach based on an interpretive review of evidence. In Continuing education in the health professions: Improving healthcare through lifelong learning. Edited by M. Hager, S. Russell, and S. W. Fletcher. New York: Josiah Macy, Jr. Foundation.

Moore, D. E., and F. C. Pennington. 2003. Practice-based learning and improvement. Journal of Continuing Education in the Health Professions 23(S1):S73-S80.

Moore, D. E., J. S. Green, and H. A. Gallis. 2009. Achieving desired results and improved outcomes: Integrating planning and assessment throughout learning activities. Journal of Continuing Education in the Health Professions 29(1):1-15.

Nowlen, P. M. 1988. A new approach to continuing education for business and the professions: The performance model. New York: Macmillan.

O’Brien, M. A., N. Freemantle, A. D. Oxman, F. Wolf, D. A. Davis, and J. Herrin. 2001. Continuing education meetings and workshops: Effects on professional practice and health care outcomes. Cochrane Database of Systematic Reviews (online) (2).

Olson, C. A., T. R. Tooman, and J. C. Leist. 2005. Contents of a core library in continuing medical education: A Delphi study. Journal of Continuing Education in the Health Professions 25(4):278-288.

Osberg, D. 2005. Redescribing “education” in complex terms. Complicity: An International Journal of Complexity and Education 2(1):81-83.

Ottoson, J. M., and I. Patterson. 2000. Contextual influences on learning application in practice: An extended role for process evaluation. Evaluation & the Health Professions 23(2):194-211.

Parker III, F. W., and P. E. Mazmanian. 1992. Commitments, learning contracts, and seminars in hospital-based CME: Change in knowledge and behavior. Journal of Continuing Education in the Health Professions 12(1):49-63.

Pereles, L., J. Lockyer, D. Hogan, T. Gondocz, and J. Parboosingh. 1997. Effectiveness of commitment contracts in facilitating change in continuing medical education intervention. Journal of Continuing Education in the Health Professions 17(1):27-31.

Pew Taskforce on Health Care Workforce Regulation. 1995. Reforming healthcare workforce regulation: Policy considerations for the 21st century. San Francisco, CA: Pew Health Professions Commission.

Pinney, S., S. Mehta, D. Pratt, J. Sarwark, E. Campion, L. Blakemore, and K. Black. 2007. Orthopaedic surgeons as educators applying principles of adult education to teaching orthopaedic residents. Journal of Bone and Joint Surgery 89.

Pololi, L., M. Clay, M. Lipkin, M. Hewson, C. Kaplan, and R. Frankel. 2001. Reflections on integrating theories of adult education into a medical school faculty development course. Medical Teacher 23(3):276-283.

Pui, M. V., L. Liu, and S. Warren. 2005. Continuing professional education and the Internet: Views of Alberta occupational therapists. Canadian Journal of Occupational Therapy 72(4):234-244.

Regehr, G., and G. R. Norman. 1996. Issues in cognitive psychology: Implications for professional education. Academic Medicine 71(9):988-1001.

Robertson, M. K., K. E. Umble, and R. M. Cervero. 2003. Impact studies in continuing education for health professions: Update. Journal of Continuing Education in the Health Professions 23(3):146-156.

Sargeant, J., V. Curran, M. Allen, S. Jarvis-Selinger, and K. Ho. 2006. Facilitating interpersonal interaction and learning online: Linking theory and practice. Journal of Continuing Education in the Health Professions 26(2):128-136.

Schön, D. 1983. The reflective practitioner: How professionals think in action. New York: Basic Books.

———. 1987. Educating the reflective practitioner. San Francisco, CA: Jossey-Bass.

Segrave, S., and D. Holt. 2003. Contemporary learning environments: Designing e-learning for education in the professions. Distance Education 24(1):7-24.

Sibley, J. C., D. L. Sackett, V. Neufeld, B. Gerrard, K. V. Rudnick, and W. Fraser. 1982. A randomized trial of continuing medical education. New England Journal of Medicine 306(9):511-515.

Slotnick, H. B., and M. B. Shershneva. 2002. Use of theory to interpret elements of change. Journal of Continuing Education in the Health Professions 22(4):197-204.

Smith, C. A., A. Cohen-Callow, D. A. Dia, D. L. Bliss, A. Gantt, L. J. Cornelius, and D. Harrington. 2006. Staying current in a changing profession: Evaluating perceived change resulting from continuing professional education. Journal of Social Work Education 42(3):465-482.

Smith, M. K. 2001. Donald Schön: Learning, reflection and change. http://www.infed.org/thinkers/et-schon.htm (accessed March 4, 2009).

Stanton, F., and J. Grant. 1999. Approaches to experiential learning, course delivery and validation in medicine. A background document. Medical Education 33(4):282-297.

Stein, L. S. 1981. The effectiveness of continuing medical education: Eight research reports. Journal of Medical Education 56(2):103-110.

Tian, J., N. L. Atkinson, B. Portnoy, and R. S. Gold. 2007. A systematic review of evaluation in formal continuing medical education. Journal of Continuing Education in the Health Professions 27(1):16-27.

Tough, A. 1971. The adult’s learning projects: A fresh approach to theory and practice of adult learning. Toronto: Ontario Institute for Studies in Education.

U.S. Department of Health, Education, and Welfare. 1967. Report of the National Advisory Committee on Health Manpower. Washington, DC: U.S. Government Printing Office.

Wakefield, A. B., C. Carlisle, A. G. Hall, and M. J. Attree. 2008. The expectations and experiences of blended learning approaches to patient safety education. Nurse Education in Practice 8(1):54-61.

Wakefield, J., C. P. Herbert, M. Maclure, C. Dormuth, J. M. Wright, J. Legare, P. Brett-MacLean, and J. Premi. 2003. Commitment to change statements can predict actual change in practice. Journal of Continuing Education in the Health Professions 23(2):81-93.

Wensing, M., H. Wollersheim, and R. Grol. 2006. Organizational interventions to implement improvements in patient care: A structured review of reviews. Implementation Science 1(1):1-9.

Wutoh, R., S. A. Boren, and E. A. Balas. 2004. E-learning: A review of Internet-based continuing medical education. Journal of Continuing Education in the Health Professions 24(1):20-30.