1

Introduction

In 1998, the Division of Mathematical Sciences (DMS) at the National Science Foundation (NSF) launched a program of Grants for Vertical Integration of Research and Education in the Mathematical Sciences (VIGRE).1 As stated in the first program solicitation, these were “grants to institutions with PhD-granting departments in the mathematical sciences to carry out high quality educational programs, at all levels, that are vertically integrated with the research activities of these departments.” The goals of the program as initially enunciated were “(1) to prepare undergraduate students, graduate students and postdoctoral fellows for a broad range of opportunities available to individuals with training in the mathematical sciences; and (2) to encourage departments in the mathematical sciences to consider a spectrum of education activities and their integration with research.”2 This key notion of vertical integration was further explained:

The intent of the VIGRE program is to support the development of a community of researchers and scholars in which there is interaction among all the members. This would not only provide meaningful educational experiences for undergraduate and graduate students, but also encourage continuing professional development at the postdoctoral level and beyond. These experiences should take place in an environment in which research and education fit together naturally and reinforce each other and in which interaction takes place among all participants. This is called vertical integration and refers to programs in which research and education are coupled and in which undergraduates, graduate students, postdoctoral fellows, and faculty are mutually supportive.3

1 The title of NSF’s program was originally Grants for Vertical Integration of Research and Education in the Mathematical Sciences (VIGRE). Subsequently, in NSF literature and elsewhere, it has been referred to as Grants for Vertical Integration of Research and Education (VIGRE), or just by the acronym VIGRE.

2 From the first program solicitation: “Grants for Vertical Integration of Research and Education in the Mathematical Sciences (VIGRE),” NSF 97-155, available at http://www.nsf.gov/pubs/1997/nsf97155/nsf97155.htm. Accessed June 12, 2009.

3 Ibid.

COMMITTEE’S CHARGE

In 2007, at the request of the National Science Foundation, the National Research Council (NRC) appointed an ad hoc committee to conduct an assessment of NSF’s program Grants for Vertical Integration of Research and Education in the Mathematical Sciences (see Appendix A for biographies of the committee members). The committee was given the following tasks:

- Review the goals of the VIGRE program and evaluate how well the program is designed to address those goals;
- Evaluate past and current practices at NSF for steering and assessing the VIGRE program;
- Draw tentative conclusions about the program’s achievements based on the data collected to date;
- Evaluate NSF’s plans for future data-driven assessments and identify data collection priorities that will, over time, build understanding of how well the program is attaining its goals; and
- Offer recommendations for improvements to the program and NSF’s ongoing monitoring of it.

EVALUATION OF THE SCOPE AND APPROACH

The NRC’s Committee to Evaluate the NSF’s Vertically Integrated Grants for Research and Education (VIGRE) Program held its first meeting in June 2007. To carry out its charge, the committee began by asking what components of the VIGRE program could be evaluated. Program evaluation is beneficial in assessing the processes or outcomes of a program to determine whether improvements can be made. Evaluations are sometimes planned during the creation of a program. They may also be designed during the program or even retrospectively after the program has ended. The latter types of evaluation may be more difficult to conduct if the assessment’s data needs were not planned for in advance. Finally, evaluations can be longitudinal (for example, comparing an outcome such as the number of students pursuing a mathematics major before, during, or after a program’s lifetime) or cross-sectional (for example, comparing two departments, where one had a particular program—such as an undergraduate research experience—and the other department did not).
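The distinction between longitudinal and cross-sectional comparisons can be illustrated with a minimal sketch. The department names and counts below are hypothetical and are not data from this report; the two helper functions are likewise illustrative names only.

```python
# Hypothetical counts of mathematics majors; these are NOT data from the report.
majors = {
    "Dept A (had program)": {"before": 100, "after": 130},
    "Dept B (no program)": {"before": 100, "after": 110},
}


def longitudinal_change(dept: str) -> int:
    """Change within one department over time (after minus before)."""
    return majors[dept]["after"] - majors[dept]["before"]


def cross_sectional_gap(dept_with: str, dept_without: str, period: str) -> int:
    """Difference between two departments at a single point in time."""
    return majors[dept_with][period] - majors[dept_without][period]


# Longitudinal view: one department compared with itself over time.
change_a = longitudinal_change("Dept A (had program)")

# Cross-sectional view: two departments compared in the same period.
gap_after = cross_sectional_gap(
    "Dept A (had program)", "Dept B (no program)", "after"
)

print(f"Longitudinal change in Dept A: {change_a}")
print(f"Cross-sectional gap after the program: {gap_after}")
```

Neither number by itself establishes that the program caused the difference; each is only a different way of organizing the comparison.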

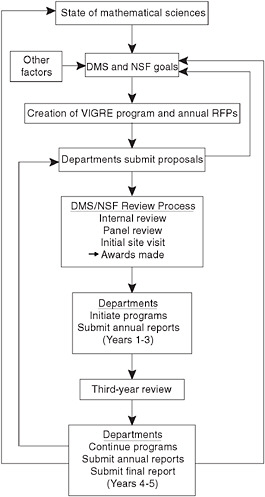

To organize its answers to the questions involved in evaluation, the committee sought to describe the VIGRE program, as captured in the diagram that it developed (Figure 1-1). As the topmost boxes of that figure illustrate, the state of the mathematical sciences as well as other factors (e.g., NSF-wide goals, NSF budget) motivate the VIGRE program, and each year DMS releases a call for proposals from PhD-granting departments in the mathematical sciences in the United States. Departments in applied mathematics, mathematics, and statistics may submit proposals. The proposals are then subjected to a review process at NSF, the end result of which is that some proposals are funded (becoming the “VIGRE awardees”). The awardees carry out the plans developed in their proposals over the first 3 years of the award, submitting annual reports of their progress. In the 3rd year, NSF conducts site visits to determine whether each individual award should be continued for 2 more years. If continuation is approved, the departments proceed with their programs and submit additional annual reports and a final report.

The diagram in Figure 1-1 shows several feedback mechanisms. Recall that the VIGRE program has evolved over time, and most of the processes shown in the diagram can be thought of as occurring annually. The actions of awardees are supposed to have a positive impact on the mathematical sciences and, to the extent that they do, they might alter DMS’s goals for the program. Awardees’ actions might also directly affect DMS’s goals: in response to submissions, DMS could change the submission process; in response to the programs that individual departments are proposing to carry out, DMS could change the goals of the VIGRE program.

Figure 1-1 suggests aspects of the program that could be subject to evaluation, including the following:

- The appropriateness of the goals in the request for proposals (RFPs);
- The information that is requested from departments in submitting proposals;
- The quality of the proposals;
- The departments that do and do not apply;
- NSF’s review process, including decision making and information gathering;
- The actions that departments have taken;
- The quality of departmental reporting;
- The outcomes of departments’ actions; and
- The overall accomplishments of the program.

The committee determined to focus its assessment on three aspects of the VIGRE program: (1) the program’s goals as they have evolved over time, (2) data collection by NSF for purposes of its review process and of NSF’s assessment of the program, and (3) the achievements of the program.

In thinking about the success of VIGRE—both for the VIGRE awardees and, aggregated, for the entire VIGRE program—the committee identified several potential indicators of achievement that could be studied in this evaluation, as noted in Table 1-1. While this table lists only positive achievements, it is also possible that opposite effects could be observed, and those would be important indicators as well.

With respect to the entry for “time to degree” in Table 1-1, there is a conflict between the reduction in time to degree and the reduction in teaching requirements for graduate students encouraged by VIGRE. The committee believes that a careful balance between the two needs to be achieved because teaching as a graduate student not only provides experience necessary for possible academic appointment but is a valuable learning tool for the graduate student.

SOURCES OF INFORMATION

The committee sought out sources of data that could be used to examine the VIGRE program. However, it was aware of certain problems that it would face in conducting its assessment. First, it is difficult to determine causality in an evaluation. For instance, if a goal of the VIGRE program was to interest more U.S. citizens in mathematical sciences and in obtaining advanced degrees, and if over the time of the program (1998 to the present) more U.S. citizens have received doctorates in the mathematical sciences, one cannot be certain whether this is due to the VIGRE program or to some other factor. For example, perhaps the employment outlook in the mathematical sciences has brightened since the earlier years of the program, so that job prospects have attracted more U.S. citizens into mathematics; or the employment outlook has dimmed, encouraging students to postpone getting a job and instead pursue further education. Second, indicators of success are often difficult to measure, and progress toward some of the program goals might not be observed until more time has passed. Finally, for a variety of reasons, many of the data required for the evaluations have never been collected.

The committee was fortunate to have multiple sources of information in carrying out its work. These included information collected by NSF, information collected by other organizations, and information collected on behalf of the committee. Each of these categories is described in more detail below.

TABLE 1-1 Potential Indicators of VIGRE Achievement

| Possible Indicator | Hypothesized Effect (either at VIGRE program after award or compared with non-VIGRE awardees) |
| --- | --- |
| Undergraduate majors | The number of undergraduate majors in the mathematical sciences has increased. |
| Graduate enrollment | The number of U.S. graduate students rises more at departments awarded VIGRE grants than at other departments. |
| PhDs | The percentage of U.S. PhDs rises more at departments awarded VIGRE grants than at other departments. |
| Postdoctorals | Departments awarded VIGRE grants attract more postdoctoral researchers than do other departments. |
| Placement of U.S. undergraduates | Students who have participated in VIGRE make more use of their training in their first positions compared to students who have not participated in VIGRE. |
| | VIGRE departments offer students a broader range of career opportunities than other departments do. |
| | Among those who express an interest in an academic career, students who have participated in VIGRE are more likely to go to graduate school than are students not participating in VIGRE. |
| Placement of U.S. graduate students | Students who have participated in VIGRE are more likely to graduate because of culture, mentoring, and other advantages, and more are successfully recruited for relevant positions than are those who have not participated in VIGRE. |
| Placement of U.S. postdoctorals | Postdoctorals who have participated in VIGRE are more likely to succeed as researchers than those who have not. |
| Undergraduate research experience | More undergraduates at VIGRE departments have research experience at their university than do those at departments not awarded VIGRE grants. |
| Interdisciplinarity | Faculty at VIGRE departments collaborate more in teaching or research with faculty in other departments than do faculty at non-VIGRE departments. |
| | VIGRE students take more upper-level courses outside their department and/or non-mathematics majors take more mathematical science courses than students not participating in VIGRE. |
| Mentoring | Students participating in VIGRE get more mentoring (measured perhaps by number of contacts or time spent) than do those not participating. |
| Productivity | Graduate students produce more at VIGRE departments; postdoctorals produce more at VIGRE departments; VIGRE departments are overall more productive than non-VIGRE departments. |

NSF Sources of Information

NSF data consist of two main categories: data collected as a result of the VIGRE program and data collected by NSF for other purposes (e.g., quantitative data from NSF surveys). As described below, among the NSF data related to the program, and roughly in the order of the VIGRE process outlined in Figure 1-1, are data that come from the following: the RFPs, proposals submitted by mathematical sciences departments, reports of NSF site visits to departments that have submitted proposals, results of NSF proposal review panels, annual reports submitted by awardees, reports of NSF 3rd-year site visits to awardees to determine eligibility for continuation of the program into the 4th and 5th year, and final reports submitted by awardees.

Requests for Proposals

The first RFP for the VIGRE program was issued in 1997, and one has been released annually through 2008. The solicitations evolved until 2005, after which the same request was used. No proposals were considered in 2009 because this NRC review was in progress when departments would normally have been developing their proposals.

Information Provided by Departments in Their Proposals

From the beginning of the VIGRE program, departments submitting proposals were required to include the following data on trainees: (1) a list of PhD recipients during the previous 5 years, along with each individual’s citizenship status, baccalaureate institution, time to degree, post-PhD placement, and thesis adviser; (2) the names of postdoctoral associates (including holders of named instructorships and 2- or 3-year terminal assistant professors) during the previous 5 years, their PhD institutions, postdoctoral mentors, and post-appointment placements; and (3) the dollar amount of funding by federal agencies for research experiences for undergraduates, for graduate students, and for postdoctoral associates in each of the previous 5 years. Departments that were applying for a second (renewal) VIGRE award were required to submit data covering 10 years.

Since the 2005 solicitation, additional data also have been requested as shown in Box 1-1. These data were to be supplied for each of the previous 5 academic years, and for each of the previous 10 academic years if the applying department had already held a VIGRE grant.

Initial Site-Visit Reports

The initial pre-award site-visit reports from the past few years seem to contain more information than did earlier such reports. Beginning in 2004, but more consistently since 2005, NSF posed 10 general questions to departments prior to site visits, and the responses help to inform the site-visit reports. Those questions are listed in Box 4-1 in Chapter 4 of this report. In addition, at its discretion, each site-visit team asks specific questions.

Proposal Review Panels

NSF hosts panels of experts to review the VIGRE proposals each year. Reviewers comment on the proposals, and the comments are collected and summarized.

BOX 1-1 Data Now Requested with Proposals to the National Science Foundation’s Program of Grants for Vertical Integration of Research and Education (VIGRE)

SOURCE: National Science Foundation, 2005, Enhancing the Mathematical Sciences Workforce in the 21st Century (EMSW21) Program Solicitation, NSF 05-595, Arlington, Va.

Annual Reports

Annual progress reports are to be submitted to NSF by each VIGRE awardee, although some awardees have missed some filing requirements. Each awardee is also required to file a final report. (Final reports tend to summarize the annual reports.) Annual reports are supposed to include the following information:

- Names of project participants;
- Names of organizational partners and other collaborators or contacts;
- Lists of activities and findings (including research and education activities, findings, training and development, and outreach activities);
- Lists of journal publications, books or other one-time publications, Web/Internet sites, and other specific products; and
- Lists of contributions within the discipline, to other disciplines, to human resource development, to resources for research and education, and beyond science and engineering.

As noted in Chapter 3 of this report, the VIGRE program was quite specific in its early years as to the quantitative data to be included in annual reports, but that policy appears to have been relaxed somewhat since 2000.

Third-Year Site-Visit Reports

In contrast to the initial site-visit reports, the 3rd-year site-visit reports are quite uniform and detailed, presenting well-reasoned recommendations. For the most part they are organized by the following topics:

- Introduction,
- General observations,
- Graduate programs,
- Undergraduate programs,
- Postdoctoral programs,
- Outreach,
- Life after VIGRE, and
- Recommendation to NSF.

The 3rd-year reports are in part based on a self-assessment in response to direction from NSF asking awardees to furnish the following information:

- Departmental responses to the nine items listed in their VIGRE award letter:
  - How well has the integration of research and education been achieved at all levels?
  - How is the program broadening education at all levels?
  - How has the program improved the instruction skills and communication skills of students and postdoctorals?
  - What has been the effect of the mentoring programs that have been developed?
  - How has the program promoted recruitment into the mathematical sciences?
  - How has the interaction of several levels of students and faculty been enhanced?
  - What is the program doing to affect the time to degree?
  - Has there been effective dissemination to the mathematical sciences community of the results of this activity?
  - Can you identify other changes that the grant has made possible and that may not have occurred without it?
- A list of the previous institution and placement institution for each recipient of a VIGRE stipend during the project,
- A list of the faculty who participated in the VIGRE project and their roles,
- A breakdown, covering the period from 5 years preceding the VIGRE award, of the numbers of trainees involved in the department’s activities,
- Accurate estimates of funds remaining and funds that will be spent in each budget category of the award,
- Any other pertinent information that the department would like the site-visit team to see.

Beginning in 2005, NSF circulated a spreadsheet to be filled in with the information requested in the departmental responses above.

NSF Surveys

A second category of NSF data consists of quantitative data that NSF collects by means of surveys. These data can be used to provide context or background for trends among VIGRE awardees and other PhD-granting mathematical sciences departments. Three surveys are particularly useful in this regard: the Survey of Earned Doctorates (SED), the Survey of Doctorate Recipients (SDR), and the Survey of Graduate Students and Postdoctorates in Science and Engineering (GSS).

According to the NSF Web site, the SED began in 1957-1958 to collect data continuously on the number and characteristics of individuals receiving research doctoral degrees from all accredited U.S. institutions. The results of this annual survey are used to assess characteristics and trends in doctorate education and degrees. Data are now available through 2006 disaggregated by gender, race/ethnicity, and citizenship. However, the online version uses a more limited set of fields, for example “Mathematics and Statistics,” than the full SED field list. Also, the online version is aggregated by institution, not by department/program. The survey does record departmental information, but does not make it public. Data are available online at NSF’s WebCASPAR database, which provides access to statistical data resources for science and engineering (S&E) at U.S. academic institutions.4

The SDR gathers information from individuals who have obtained a doctoral degree in a science, engineering, or health field (SEH). The SDR, which is conducted every 2 years, is a longitudinal survey that follows recipients of research doctorates from U.S. institutions until they reach age 76. This group is of special interest to many decision makers because it represents some of the most educated individuals in the U.S. workforce. The SDR results are used by employers in the education, industry, and government sectors to understand and to predict trends in employment opportunities and salaries in SEH fields for graduates with doctoral degrees. The results are also used to evaluate the effectiveness of equal opportunity efforts. Coverage began in 1973. There was no 2005 survey, but there was a 2006 survey instead. Data are available online at WebCASPAR.5

The GSS provides data on the number and characteristics of students in graduate S&E and health-related fields enrolled in U.S. institutions. NSF uses the results of this annual survey to assess trends in financial support patterns and shifts in graduate enrollment and postdoctoral appointments. The GSS collects data from all U.S. institutions that offer graduate programs in any field of science or engineering and/or in specific health-related fields of interest to the National Institutes of Health (NIH). This survey collects data items at the academic department level, including counts of full-time graduate students by source and mechanism of support, by total, and by gender; number of part-time graduate students by gender; and citizenship and racial/ethnic background of all graduate students, including first-time students. In addition, the survey requests count data on postdoctorates by source of support, gender, and citizenship, with separate data on those holding first-professional doctorates in the health fields; and summary information on other doctorate nonfaculty research personnel. Coverage is academic year 1966 to academic year 2005. Data are available online at WebCASPAR.6

Other Sources of Information

Data similar to those obtained in the NSF surveys for the mathematical sciences are collected by the American Mathematical Society (AMS) in its annual surveys. AMS collects data on the total undergraduate and graduate course enrollments in the fall of each academic year, as reported by departments through surveys conducted each year from fall 1991 through fall 2006. For the years 1991 through 1999, the survey asked departments to report the prior year’s numbers plus the current year’s numbers. Having the 2 years of data will permit one to deal with nonresponse in cases where a department responds in year N but not in year N – 1. There are some issues with the data:

- There are years in which some departments did not reply, sometimes for several years in a row. The number seems to shrink a bit over the years: in 1991 more than 40 departments did not respond; in the mid-2000s, the figure is in the range of 10 to 15.
- In addition, a department is sometimes dropped from the survey and at other times a department is added. For the mathematics departments, this occurs only in Group III.7 There are also changes as to which statistics, biostatistics, and applied mathematics departments are included.
- In some cases, departments returned a form but the specific data either were missing or judged not usable.
- Some departments may not have undergraduate programs (i.e., the department reports graduate enrollment only).

4 See NSF, “Survey of Earned Doctorates,” available at http://www.nsf.gov/statistics/showsrvy.cfm?srvy_CatID=2&srvy_seri=1. Accessed June 15, 2009.

5 See NSF, “Survey of Earned Doctorates,” available at http://www.nsf.gov/statistics/showsrvy.cfm?srvy_CatID=3&srvy_seri=15. Accessed June 15, 2009.

6 See NSF, “Survey of Earned Doctorates,” available at http://www.nsf.gov/statistics/showsrvy.cfm?srvy_CatID=2&srvy_seri=12. Accessed June 15, 2009.
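The use of the two-year reporting in the 1991 through 1999 AMS surveys to deal with nonresponse can be sketched as follows. This is only an illustration under stated assumptions: the record layout, the function name `fill_enrollment`, and all enrollment figures are hypothetical, and nothing here reflects the actual AMS file format or the committee’s own processing.

```python
from typing import Dict, Optional, Tuple

# Hypothetical record layout: for each survey year, a responding department
# reports (prior_year_enrollment, current_year_enrollment); None marks nonresponse.
Report = Optional[Tuple[int, int]]


def fill_enrollment(reports: Dict[int, Report]) -> Dict[int, Optional[int]]:
    """Build a year -> enrollment series, backfilling year N-1 from the year-N report."""
    series: Dict[int, Optional[int]] = {}
    for year, report in reports.items():
        # Prefer the figure the department reported for its own current year.
        series[year] = report[1] if report is not None else None
    for year, report in reports.items():
        prior = year - 1
        # If year N-1 is missing but the year-N form carries a prior-year figure, use it.
        if report is not None and prior in series and series[prior] is None:
            series[prior] = report[0]
    return series


# Made-up numbers for one department that skipped the fall 1993 survey.
example = {
    1992: (1180, 1200),
    1993: None,
    1994: (1250, 1300),
}
filled = fill_enrollment(example)
print(filled)
```

A gap of several consecutive years cannot be closed this way, since only the year immediately before a response is recoverable.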

AMS also collects data on the number of doctorates in the mathematical sciences. Data focus on the number of new doctorates by gender, race/ethnicity, and citizenship, organized by academic calendar year, by institution, and by department. Coverage is from academic year 1991 to academic year 2005. There are a number of technical issues (e.g., values changing for race/ethnicity categories in the 1990s), and there are some indications of inaccuracies.

A second source of information is provided by the Department of Education’s National Center for Education Statistics through its Integrated Postsecondary Education Data System (IPEDS) Peer Analysis System. IPEDS allows users to compare a postsecondary institution to a group of peer institutions, all of which are user-selected. Data of relevance include degree data, enrollment data, and data on enrollment of mathematics majors. Degree data include information on level of degree, gender, and race/ethnicity. Enrollment data are fall enrollment or 12-month enrollment data, and enrollment data on mathematics majors are available by gender, race, attendance status, and level of student. Degree data go back to 1986. General enrollment data go back at least to 1990. Data on enrollment of mathematics majors were collected every 2 years from 1996 through 2006.

Information Collected by the Committee

The study committee sent an e-mail request for information to VIGRE awardees and to other departments of applied mathematics, mathematics, and statistics. The objective of this request was to collect additional information on the following:

- Initial and renewal applications to the VIGRE program,
- Experiences of VIGRE awardees, and
- Basic trends in the departments.

Working with AMS, the committee sent an e-mail to chairs of all PhD-granting departments of applied mathematics, biostatistics, mathematics, and statistics, asking them to submit information on their departments’ experiences. The committee requested information from a total of 288 departments. To facilitate the data collection, a Web site was created to store the information received; the e-mail to the chairs contained a link to this Web site. The initial request was sent in November 2007. Three e-mail follow-ups were sent, the final one in early February 2008. Of 50 VIGRE awardees (departments) that were surveyed, 40 returned the committee’s questionnaire. Of 238 non-awardee departments that received the e-mail, 114 responded. See Appendix C for the questionnaires sent to departments.
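The response rates implied by these counts can be worked out directly. The short sketch below uses only the counts stated in the paragraph above; the percentages are derived arithmetic, and the variable and function names are illustrative.

```python
# Counts reported in the text above.
surveyed = {"VIGRE awardees": 50, "non-awardees": 238}
responded = {"VIGRE awardees": 40, "non-awardees": 114}


def response_rate(returned: int, sent: int) -> float:
    """Return the response rate as a percentage, rounded to one decimal place."""
    return round(100 * returned / sent, 1)


rates = {group: response_rate(responded[group], surveyed[group]) for group in surveyed}
overall = response_rate(sum(responded.values()), sum(surveyed.values()))

for group, rate in rates.items():
    print(f"{group}: {rate}%")
print(f"overall: {overall}%")
```

This gives 80.0 percent for awardees, 47.9 percent for non-awardee departments, and 53.5 percent across all 288 departments.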

On February 29, 2008, three committee members conducted an hour-long conference call with seven professors who had in previous years served on site-visit teams, either at the time of an initial proposal or at the time of the 3-year renewal. Questions included how the professors had conducted their evaluations, what criteria they had used to evaluate the sites, and what guidance the NSF staff had provided. Additionally, the committee sent an e-mail to all non-NSF people who had ever served on a VIGRE site-visit team. That e-mail asked for the recipients’ thoughts about the most helpful and least helpful parts of the site visit in terms of evaluating a proposal or awardee, and for any suggestions for improvements in the site-visit process. One follow-up reminder was sent. About 10 reviewers responded.

7 According to the AMS (http://www.ams.org/employment/groups_des.html; accessed August 6, 2009), Group III contains U.S. mathematics departments reporting a doctoral program that received a ranking of less than 2.0 in the 1995 National Research Council volume Research Doctorate Programs in the United States: Continuity and Change (NRC, 1995) or were not included in the NRC rankings.

During several meetings, the entire committee met with various NSF staff members as well as with faculty from selected mathematical sciences departments who were involved in VIGRE activities at their institutions. The NSF staff included former DMS directors Donald Lewis and William Rundell and current director Peter March. The committee also heard presentations from NSF program officer Henry A. Warchall and from the following representatives of departments with current or former VIGRE grants: Peter May (University of Chicago); Alan Tucker (State University of New York, Stony Brook); Calvin Moore (University of California, Berkeley, Mathematics Department); Deborah Nolan (University of California, Berkeley, Statistics Department); Robert Greene (University of California, Los Angeles); and Jesús de Loera (University of California, Davis). Professors Greene and de Loera were each accompanied by undergraduate and graduate students, who presented their impressions of the VIGRE program’s effect on their department. Appendix E contains a list of presentations.

Some committee members also conducted individual telephone and e-mail interviews with selected faculty from various mathematical sciences departments around the country to learn about the interviewees’ experiences with the VIGRE program. The interviewees included William Goldman (University of Maryland), Barry Simon (California Institute of Technology), David Jerison (Massachusetts Institute of Technology), Rick Durrett (Cornell University), George Papanicolaou (Stanford University), and Peter Jones (Yale University). The committee was unable to interview Philippe Tondeur, a former director of NSF/DMS.

Finally, the committee conducted a literature review related to the VIGRE program. Increasing the Quantity and Quality of the Mathematical Sciences Workforce Through Vertical Integration and Cultural Change (Cozzens, 2008) provides many instances of innovative teaching and research within departments supported by this program. Other commentaries that the committee found useful were an early article by Rick Durrett entitled “VIGRE Turns Three,” in the Notices of the AMS (Durrett, 2002); and the report of the American Mathematical Society, the American Statistical Association, the Mathematical Association of America, and the Society for Industrial and Applied Mathematics 2002 workshop on VIGRE.8

The committee regrets that the committee itself was not allowed access to some NSF source documents, such as proposals to the VIGRE program and reviews of departments with VIGRE grants. Conflicting requirements exist between NSF, whose policy is that these documents not be made public, and the NRC, which is required by law to make public most documents received by a committee in the course of a study. Although access was allowed to NRC staff, who reviewed and summarized some of these documents and provided some statistical analysis, direct access by committee members would have aided the committee in formulating conclusions and recommendations.

OUTLINE OF THE REPORT

In this introductory chapter, the committee defined its interpretation of its charge and described its sources of information and the scope and approach of its evaluation. In Chapter 2 the committee examines the case for the VIGRE program, detailing the state of higher education in the mathematical sciences, as seen by NSF, in the years leading up to the establishment of the program and focusing in particular on several key reports summarizing the state of the field. Chapter 3 describes the VIGRE program, and Chapter 4 discusses the administration, monitoring, and assessment of the program to date. Chapter 5 presents the committee’s assessment of the VIGRE program through its evaluation of successes and some outcomes at individual departments. Finally, Chapter 6 presents the committee’s conclusions and recommendations.

8 Available at http://www.ams.org/amsmtgs/VIGRE-report.pdf. Accessed August 5, 2009.

The report also contains six appendixes:

- Appendix A presents the biographies of members of the Committee to Evaluate the NSF’s Vertically Integrated Grants for Research and Education (VIGRE) Program,
- Appendix B presents tables used to characterize the state of education in the mathematical sciences from 1980 through 1998,
- Appendix C contains the questionnaires sent by the committee to all U.S. PhD-granting institutions in the mathematical sciences,
- Appendix D presents tables and charts needed to describe the changes in the mathematical sciences since 1998,
- Appendix E is a list of presentations at committee meetings, and
- Appendix F defines the acronyms used in this report.

Except where explicitly noted, this report uses the terms “mathematics” and “mathematical sciences” interchangeably. Both include pure mathematics, applied mathematics, and statistics.