4

The Data Collection Program

This chapter focuses on the methods used in collecting information to populate the O*NET database. Because the current survey design and data collection methods are based heavily on earlier experience with the O*NET prototype development project, the chapter begins by describing that experience. After briefly summarizing best practices for survey pretesting, it describes the pretesting conducted in the early stages of O*NET development. The chapter then reviews the O*NET study design, including the establishment and occupation methods of collecting data, the data collection procedures, response rates, and data editing and cleaning. A section on data currency follows. The chapter ends by focusing on the cost-effectiveness and efficiency of data collection and maintaining high levels of data quality.

THE O*NET PROTOTYPE DEVELOPMENT PROJECT

The prototype O*NET content model was complex, including many hundreds of descriptors organized into 10 domains, also referred to as taxonomies. The final stage in the prototype development project was a field test of the content model using real-world occupational data. The first step in the field test was to define the unit of analysis: the research team wrote descriptions for 1,122 occupational units.

Development of the Rating Scales

The next step in the field test of the content model was to develop rating scales that individuals could use to assess the extent to which each descriptor was required for each occupational unit.

Abilities

For each of the 52 Abilities descriptors included in the taxonomy, the scale used to rate the importance for a given occupation ranged from (1) not important to (5) extremely important. The scale used to rate the level of each Ability is defined by “behavioral anchors”—brief descriptions of specific work behaviors—provided to assist individuals in making ratings. To generate the anchors, the development team asked panels of subject-matter experts to suggest multiple examples to illustrate different levels of each Ability. Another, independent set of panels of subject-matter experts was asked, for each Ability descriptor, to place the level represented by each anchor on a quantitative scale. For each Ability descriptor, the development team chose behavioral anchors that covered selected points on the scale, were scaled with high agreement by the subject-matter experts, and were also judged to be relevant for rating occupational (e.g., not educational) requirements. The selected anchors were then included in the final prototype rating scale. Although behavioral anchors for many Abilities descriptors had previously been developed over the course of the Fleishman job analysis research program (Fleishman, 1992), the anchors were apparently rescaled for the O*NET application (Peterson et al., 1999, p. 185).

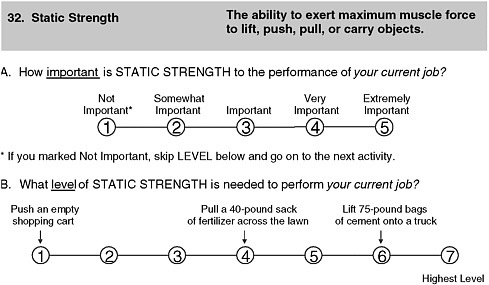

The current rating scales used by trained occupational analysts to assess the importance and level of Abilities descriptors are largely unchanged from those developed in the prototype development project. These scales present problems for users of the resulting data. For example, Figure 4-1 depicts the current scale used to rate the level and importance of the Ability descriptor “static strength.” One issue that becomes apparent in this example emerges from the question posed to the raters. The question, “What level of static strength is needed to perform your current job?” implies a dichotomy of the form “can perform the job” versus “cannot perform the job.” The meaning of perform is unclear. All research on job performance assessment yields a continuous distribution of performance differences across job holders. Does perform mean perform at a minimally acceptable level, an average level, or a very high level? The ability requirements may be different for performing at the minimum level versus performing at a high level, and the performance referent should be made clear to raters.

FIGURE 4-1 Current static strength rating scales.

SOURCE: National Center for O*NET Development (no date). Reprinted with permission.

Work Styles

For the Work Styles domain, the O*NET prototype development team developed rating scales that were similar to those for the Abilities taxonomy, including a 5-point scale for importance and a 7-point rating scale for the level of the Work Style believed to be required for a particular occupation. The current questionnaire includes the 5-point scale for rating importance but does not include a level scale with behavioral anchors.

Occupational Interests

Because the prototype O*NET content model used (as does the current O*NET content model) the model of interests developed in Gottfredson and Holland (1989), and extensive research applying this model to code occupations had already been conducted, the field test did not require additional ratings of the interest requirements of occupations.

Work Values

The prototype development team drew on a taxonomy incorporated in the Minnesota Job Description Questionnaire (Dawis and Lofquist, 1984) to develop rating scales for the Work Values descriptors. The Minnesota Job Description Questionnaire describes occupations according to 21 “need-reinforcers,” or values. In the pilot test of the prototype content model, job incumbents were asked, for each of these 21 values, to use a 5-point scale to rate the degree to which it described their occupation. Each question began with, “Workers on this job….” For example, to rate the level of the value “Ability Utilization,” the job incumbent was presented with the statement, “Workers on this job make use of their individual abilities” (Sager, 1997, Figure 10-3). According to the prototype developers, the reason for including individual interests and values as domains was not to offer them as important determinants of individual performance but to facilitate the person-job match for purposes of enhancing job satisfaction and retention. The National Center for O*NET Development does not routinely collect data on Work Values as part of its main data collection program but does periodically develop data for this domain.

Knowledge

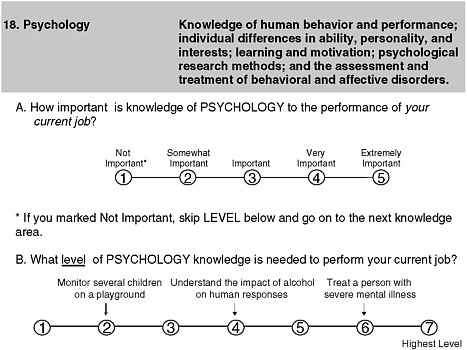

As was the case for Abilities and Work Styles, the prototype rating scales were designed to help individuals rate each Knowledge descriptor in terms of its importance for performance in a particular job and the level of the descriptor needed “to perform the job.” Again, the prototype development team calibrated the level scale with behavioral anchors. Table 4-1 presents an example of a definition and behavioral anchors for rating the level of the psychology knowledge required to perform the job. The current rating scale for this descriptor incorporates the same behavioral anchors and a very similar definition of psychology (see Figure 4-2).

TABLE 4-1 Psychology Definition and Behavioral Anchors

| Psychology: | Knowledge of human behavior and performance, mental processes, psychological research methods, and the assessment and treatment of behavioral and affective disorders. |
|---|---|
| High – | Treating a person with a severe mental illness. |
| Low – | Monitoring several children on a playground. |

SOURCE: Peterson et al. (1997, p. 243, Figure 4-1). Reprinted with permission.

FIGURE 4-2 Current psychology rating scales.

SOURCE: National Center for O*NET Development (no date). Reprinted with permission.

Occupational Preparation

The prototype development team did not develop rating scales to gather information on the level or importance of occupational preparation. Instead, the prototype questionnaire included seven questions related to education, training, licensure/certification, and experience requirements. Each question asked the respondent to select one alternative from among a set of alternatives. The current questionnaire used by the National Center for O*NET Development is similar, presenting five factual questions.

Skills

As discussed in Chapter 2, the O*NET prototype development team struggled to define the Skills taxonomy of descriptors clearly. As a consequence, respondents to the questionnaires may confuse descriptors from this taxonomy with descriptors with similar names found in other taxonomies, including Abilities and Generalized Work Activities. Nevertheless, the research team developed a 7-point rating scale incorporating behavioral anchors to assess the level of each Skills descriptor, along with a 5-point rating scale to assess the importance of each to perform the occupation. An example for the Skill “Reading Comprehension” is discussed later in this chapter.

Generalized Work Activities

The field test of the prototype included three rating scales to be used to describe the generalized work activities of an occupation: (1) a level scale with behavioral anchors that asked, “What level of this activity is needed to perform this job?” (2) an importance scale that asked, “How important is this activity to this job?” and (3) a frequency scale that asked, “How often is this activity performed on this job?” The current questionnaire includes importance scales and level scales with behavioral anchors but does not include the frequency scales. For example, one item asks the respondent, “What level of identifying objects, actions, and events is needed to perform your current job?” followed by a scale. Level 2 of the scale is anchored with “test an automobile transmission,” level 4 with “judge the acceptability of food products,” and level 6 with “determine the reaction of a virus to a new drug” (National Center for O*NET Development, no date).

Work Context

The prototype Work Context taxonomy included three levels, with 39 descriptors at the most detailed level (see Appendix A). In the field test of the prototype content model, the development team created over 50 questions with multiple responses for each of these 39 descriptors, resulting in a very long questionnaire. The current questionnaire includes a series of 5-point rating scales inviting respondents to assess the frequency of some aspects of Work Context (e.g., public speaking, exposure to contaminants) and the level of other aspects (e.g., freedom to determine tasks, priorities, or goals). The rating scales do not include behavioral anchors.

Organizational Context

The prototype development team created a complex taxonomy of Organizational Context descriptors along with rating scales to assess levels of these descriptors required to perform various occupations. Currently, the O*NET Center does not collect data on organizational context.

Occupation-Specific Descriptors: Tasks

The field test targeted 80 occupational units for study. For each unit, the development team reviewed DOT codes within the unit and extracted 7 to 30 critical tasks. The team surveyed job incumbents employed in each occupational unit, asking them to rate the importance and frequency of occurrence of each task listed for their occupation and also to write in additional tasks they thought should be included. The psychometric characteristics of these task ratings appeared promising, but the effort to develop this taxonomy of descriptors stopped there.

Later, the O*NET Center commissioned a study in which researchers reviewed existing task statements from a sample of occupations, analyzed write-in statements provided by job incumbents, and also developed methods for identifying which task statements are critical to the occupation and for analyzing write-in tasks (Van Iddekinge, Tsacoumis, and Donsbach, 2003). The current questionnaires are occupation-specific. They present a list of task statements and ask the respondent to indicate whether the task is relevant and, if relevant, to rate its frequency using a 7-point scale and its importance using a 5-point scale (Research Triangle Institute, 2008). The scales do not include behavioral anchors.

Prototype Data Collection: Applying the Rating Scales

In the field test, the prototype development team used the rating scales to obtain information about the level and importance of each descriptor for each of the 1,122 occupational units from two groups of individuals—trained occupational analysts and job incumbents. Before these field tests began, the project team believed that job incumbents, employed in the occupational unit, would be best positioned to rate the level and importance of each descriptor, based on their familiarity with the occupation (Peterson et al., 1999).

The occupational analyst sample consisted of trained occupational analysts who were graduate students in human resource related disciplines. They used the rating scales described above to rate the level and importance of each O*NET descriptor for performance in each occupational unit. For the job incumbent sample, prescribed sampling techniques (see Peterson et al., 1999) were used to identify a representative field test sample of 80 occupational units, a sample of establishments in which the 80 occupations could be found, and at least 100 incumbents in each occupation who could be surveyed. Not every incumbent completed every questionnaire; a rotation design was used with the expectation that every domain questionnaire would be completed by 33 incumbents and every possible pair of questionnaires would be completed by at least 6 incumbents. The survey package for each incumbent was expected to take 60-90 minutes to complete.

Response Rate in the Prototype Field Test

The response rate for the incumbent surveys proved disappointing. Of the 1,240 establishments selected for the study which had identified a point of contact, 15 percent proved ineligible because they were too small or out of business. Of the 1,054 remaining, 393 dropped out during the planning of the incumbent surveys. At this stage, the field test team sent a total of 15,529 incumbent questionnaires to the points of contact in the remaining 661 establishments, asking them to distribute the questionnaires to the job incumbents within their establishments. However, only 181 of those identified as a point of contact carried out this request, distributing 4,125 questionnaires to job incumbents in these 181 establishments. These points of contact returned 2,489 questionnaires, for a response rate at the incumbent level of approximately 60 percent.
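The attrition described above can be retraced step by step. The following minimal sketch uses only the counts reported in this section; the variable names are illustrative, and the final rate (returns relative to all questionnaires mailed) is an additional denominator chosen here for comparison, not a figure reported by the field test team.

```python
# Counts reported in the text for the prototype field test.
establishments_selected = 1240
ineligible_share = 0.15            # "15 percent proved ineligible"
dropped_during_planning = 393
questionnaires_sent = 15529
establishments_distributing = 181
questionnaires_distributed = 4125
questionnaires_returned = 2489

# Establishments left after removing ineligible ones, then planning dropouts.
eligible = round(establishments_selected * (1 - ineligible_share))
remaining = eligible - dropped_during_planning

# Share of remaining establishments whose point of contact distributed questionnaires.
establishment_rate = establishments_distributing / remaining
# Incumbent-level response rate, relative to questionnaires actually distributed.
incumbent_rate = questionnaires_returned / questionnaires_distributed
# Assumption for illustration: returns relative to every questionnaire mailed out.
overall_rate = questionnaires_returned / questionnaires_sent

print(f"eligible establishments:        {eligible}")
print(f"establishments after dropouts:  {remaining}")
print(f"establishment cooperation rate: {establishment_rate:.1%}")
print(f"incumbent-level response rate:  {incumbent_rate:.1%}")
print(f"returns per questionnaire sent: {overall_rate:.1%}")
```

The 60 percent incumbent-level rate is conditional on a questionnaire having reached an incumbent. Because only 181 of the 661 remaining points of contact distributed questionnaires, the rate at the establishment level is much lower.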

BEST PRACTICES IN QUESTIONNAIRE PRETESTING

Before discussing the pretesting of the pilot O*NET questionnaires, this section briefly describes best practices in survey pretesting. Increasingly, federal agencies and private-sector organizations are applying questionnaire pretesting and evaluation methods as an important step in developing surveys. Methods include expert review (e.g., Forsyth and Lessler, 1991), cognitive testing (e.g., Conrad, 2009; Willis, 2005), and focus group testing (e.g., Krueger, 1988). These methods, grounded in cognitive psychology, help to assess how representatives of a target survey population perform cognitive tasks, including interpreting and following instructions, comprehending question content, and navigating through a survey instrument. More recently, many survey design and pretesting principles have been applied in evaluations of the usability of web-based instruments (e.g., Couper, 2008). Some of these methods were used in the O*NET prototype field test.

Expert Review

Expert review is a quick and efficient appraisal process conducted by survey methodologists. Based on accepted design principles and their prior research experiences, the experts identify possible issues and suggest revisions. In some cases, they use a cognitive appraisal form that identifies specific areas (e.g., conflicting instructions, complex item syntax, overlapping response categories) to review each item on the instrument. A typical outcome of an expert review is improved question wording, response formats, and questionnaire flow to maximize the question clarity and to facilitate response. When usability methodologists conduct an expert review, the focus not only includes the content, but also extends to the application of additional principles to judge ease and efficiency of completion, avoidance of errors, ability to correct mistakes, and how the content is presented (overall appearance and layout of the instrument).

Cognitive Interviewing

Cognitive interviewing is designed to test questionnaire wording, flow, and timing with respondents similar in demographics to those being surveyed. This technique is particularly helpful when survey items are newly developed and items can be tested with a population similar to the one to be surveyed. Information about a respondent’s thought processes is useful because it can be used to identify and refine instructions that are insufficient, overlooked, misinterpreted, or difficult to understand; wordings that are misunderstood or understood differently by different respondents; vague definitions or ambiguous instructions that may be interpreted differently; items that ask for information to which the respondent does not have access; and confusing response options or response formats.

Focus Group Testing

Focus groups are an excellent technique to capture users’ perceptions, feelings, and suggestions. In the context of a redesign effort, questions may be based on the results of an expert review and thus can focus on areas that have been determined to be potentially problematic. In cases in which an existing form or publication is being evaluated, an expert reviewer will identify key areas or features of the document that should be addressed in questioning participants. In the context of designing a larger population-based survey, focus groups are used to elicit important areas that should be addressed by the survey. Comparative information may also be gathered from different segments of the target population.

A focus group is typically a discussion among approximately 10 people who share some common interest, trait, or circumstance. Sessions are ideally 1 to 1½ hours long and are led by a trained moderator. The moderator follows a prepared script that lists questions and issues that are relevant to the focus group topic and are important to the client.

Usability Testing

The primary goal of usability testing with a survey instrument is to uncover problems that respondents may encounter so they can be fixed before it is fielded. Issues that may lead to reporting errors, failure to complete the survey, and break-offs (breaking off before completing and submitting a survey) are of utmost importance. Testing is typically conducted with one individual at a time, while a moderator observes general strategies respondents use to complete the instrument; the path(s) they take (when a linear path is not required); the points at which they become confused and/or frustrated (which can indicate potential for break-off); the errors they make, how they try to correct them, and the success of their attempts; and whether the respondent is able to complete the instrument.

PRETESTING OF THE O*NET PROTOTYPE SURVEYS

Following the disappointing response rates to the surveys of job incumbents used in the field test of the prototype content model, in 1998 the U.S. Department of Labor (DOL) charged a working group with reviewing the questionnaires and making changes that would reduce the respondent burden while keeping the content model intact.

Findings from the Prototype Review

The working group assigned to evaluate and revise the questionnaires used several of the methods described above, including expert review, focus groups, and interviews with respondents.1 Based on findings from these methods, the group created revised questionnaires, which they then pilot tested with a small group of RTI employees in various occupations for the purpose of obtaining time to completion estimates. Hubbard et al. (2000) summarize the types of problems identified in their evaluation and pretesting of the pilot questionnaires:

- Respondents reported difficulty understanding the questions and associated instructions as presented in a box format.
- The rating task itself was very complex, and respondents had difficulty making some of the judgments required.
- The rating scales (e.g., level, importance) and technical terminology were insufficiently defined.
- Anchors used on the behavioral rating scales, although intended to provide examples of levels of performance, were unfamiliar to some respondents and potentially confusing or distracting.
- No explicit referent period was provided, so it was unclear how respondents were interpreting the questions: the job as it is now, in the past, or in the future.
- Some respondents recommended using either a 5-point or a 7-point Likert scale for all the items rather than presenting different response categories for different sets of items.
- Respondents pointed out the redundancy across the various survey questionnaires.
- The directions for each questionnaire were unnecessarily long, and the reading levels were too high for both the directions and many of the items.

Survey Revisions

The pretesting results provided the working group with extremely useful feedback, and revisions were made to many of the questionnaires. A few of the revisions based on respondent feedback are highlighted below (Hubbard et al., 2000):

- Formatting changes, including a modification of the box-format presentation of instructions;
- Reducing the length and detail of the instructions and lowering the reading level to some degree;
- Providing an example at the beginning of each new section;
- Asking importance first, adding a “not relevant” option, followed by the level scale (in the Abilities, Generalized Work Activities [GWAs], Skills, and Knowledge questionnaires);
- Reducing the number of anchors that provided examples of job-related activities and presenting them at specific numbers on the scale (in the GWAs questionnaire);
- The level scale was removed from the Work Styles questionnaire;
- Some rating scales were eliminated on the basis of previous data (Peterson et al., 1999) showing them to be highly intercorrelated;
- A small number of redundant descriptors were combined or aggregated into a single descriptor; and
- A very small number of descriptors was eliminated because no respondents could understand their meaning.

A summary of the changes, comparing the current, revised questionnaires with those used in the field test is shown in Table 4-2.

With regard to the results of the pilot test regarding the estimated time to completion for the revised questionnaires, the times are considerably reduced, and most of the estimated savings, except for the Work Context questionnaire, are due to changes in formats and reading difficulty. The item-by-item changes for all the descriptors for all the domains can be found in Hubbard et al. (2000).

TABLE 4-2 Revisions to O*NET Questionnaires

| Domain | Number of Items (Revised) | Number of Items (Original) | Response Scale Changes |
|---|---|---|---|
| Abilities | 52 | 52 | No changes |
| Work Styles | 16 | 17 | Keep importance scale, drop level scale |
| Work Values | 0 | 21 | Dropped from current O*NET |
| Knowledge | 33 | 33 | Keep level and importance |
| Skills | 35 | 46 | Keep level and importance, drop job entry requirements |
| Education and Training | 5 | 15 | Drop: (a) instructional program required; (b) level of education required in specific subject areas; and (c) licensure, certification, and registration |
| Generalized Work Activities | 41 | 42 | Keep level and importance, drop frequency |
| Work Context | 57 | 97 | Adopt consistent use of 5-point scales (not currently administered) |
| Total Items | 239 | 323 | |

SOURCE: Hubbard et al. (2000, Table 2, p. 25). Reprinted with permission.

In keeping with its charge to reduce the time burden and increase clarity and ease of use without changing the form or substance of the content model, the working group made no real substantive changes to the taxonomies of descriptors that made up the prototype content model.

Despite the findings about respondents’ difficulty with the behavioral anchor format of the level scales, this format was retained, although the number of anchors for a scale was sometimes reduced to achieve greater clarity and ease of use. In addition, the anchors were moved to the closest integer scale point (e.g., 3.0 or 4.0) rather than the actual mean judgment obtained from the previous scaling studies (e.g., 3.13 or 3.81). The working group believed that the loss of precision was more than compensated for by the gain in clarity, as documented by the interview and focus groups.
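The relocation of anchors to integer scale points can be illustrated with the two values cited above. This is a minimal sketch, not the working group's documented procedure: the function name `snap_anchor`, the round-half-up rule, and the clamping to a 1-to-7 range are assumptions made for illustration.

```python
def snap_anchor(mean_rating: float, low: int = 1, high: int = 7) -> int:
    """Move an anchor's scaled mean to the closest integer scale point.

    Assumes positive ratings and rounds halves upward (hypothetical rule).
    """
    nearest = int(mean_rating + 0.5)
    # Keep the result inside the assumed bounds of the level scale.
    return max(low, min(high, nearest))


# Mean judgments used as examples in the text.
examples = [3.13, 3.81]

# New integer position for each anchor.
snapped = {m: snap_anchor(m) for m in examples}
# Displacement introduced by the move, i.e., the precision given up.
shifts = {m: round(snap_anchor(m) - m, 2) for m in examples}

for m in examples:
    print(f"anchor scaled at {m} moves to {snapped[m]} (shift {shifts[m]:+.2f})")
```

Under this rule no anchor moves by more than half a scale point, which bounds the loss of precision the working group accepted in exchange for clarity.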

Finally, the working group decided to retain two different rating scales for four of the seven taxonomies of descriptors, one to measure the importance of the descriptor and another to measure the level required to perform the occupation.

In the panel’s view, the revisions made in response to the pilot test results, the pretest of the O*NET data collection methodologies and instruments, and the Hubbard et al. (2000) review were inadequate to ensure that the surveys are understandable and usable.

DESIGN OF THE O*NET DATA COLLECTION PROGRAM

Like any survey of occupational information, the O*NET Data Collection Program faces trade-offs along three dimensions that affect data quality: the size of the sample collected for each occupation, the number of detailed occupations individually surveyed (rather than subsumed within broader occupation categories), and the time interval between successive waves (or “refreshes”) of the data. Improving the O*NET Data Collection Program along any one of these dimensions increases the total cost of data collection; holding constant data collection costs, improvements on one dimension necessitate cutbacks along either or both of the remaining dimensions.

As a result of the DOL decision to make few revisions to the content model following the field test, the O*NET Center today collects data related to 239 descriptors, using multiple rating scales for many of them, for a total of 400 rating scales2 (see Table 4-3). This amounts to a burdensome data collection effort, for both the O*NET Center and the survey respondents themselves.

To reduce respondent burden, the O*NET Center has developed three separate questionnaires that are administered to job incumbents—one focusing on Knowledge (this questionnaire also includes the short lists of questions related to Education and Training and Work Styles), another for GWAs, and a third for Work Context. The requirement to survey three different sets of job incumbents to obtain data for these five domains elevates the cost of administering the Data Collection Program. The O*NET Center also obtains data for these five domains from occupational experts, who are asked to complete all three questionnaires, and it has developed separate questionnaires for Skills and Abilities, which are completed by occupational analysts.

Rating Scales

One result of DOL’s limited response to the review and pretesting is that the questionnaires currently used to gather data for four domains (Skills, Knowledge, GWAs, and Abilities) include scales for both level and importance that have been subject to criticism.

TABLE 4-3 Current O*NET Questionnaires

Exhibit 2. O*NET Data Collection Program Questionnaires

| O*NET Data Collection Program Questionnaire | Number of Descriptors | Number of Scales per Descriptor | Total Number of Scales | Data Source |
|---|---|---|---|---|
| Skills | 35 | 2 | 70 | Analysts |
| Knowledge | 33 | 2 | 66 | Job incumbents |
| Work Styles (a) | 16 | 1 | 16 | Job incumbents |
| Education and Training (a) | 5 | 1 | 5 | Job incumbents |
| Generalized Work Activities | 41 | 2 | 82 | Job incumbents |
| Work Context | 57 | 1 | 57 | Job incumbents |
| Abilities | 52 | 2 | 104 | Analysts |
| Tasks (b) | Varies | 2 | Varies | Job incumbents |
| Total (not including Tasks) | 239 | NA | 400 | NA |

NOTES: Occupation experts use the same questionnaires as job incumbents for those occupations whose data collection is by the Occupation Expert Method. NA = not applicable. (a) The Knowledge Questionnaire packet also contains the Work Styles Questionnaire and the Education and Training Questionnaire. (b) All job incumbents are asked to complete a Task Questionnaire in addition to the domain questionnaire.

SOURCE: U.S. Department of Labor (2008). Reprinted with permission.
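The totals in Table 4-3 follow directly from the per-questionnaire counts. The short sketch below recomputes them from the table rows (Tasks are excluded because their counts vary by occupation); the dictionary layout and variable names are illustrative only.

```python
# (number of descriptors, scales per descriptor), taken from Table 4-3.
questionnaires = {
    "Skills": (35, 2),
    "Knowledge": (33, 2),
    "Work Styles": (16, 1),
    "Education and Training": (5, 1),
    "Generalized Work Activities": (41, 2),
    "Work Context": (57, 1),
    "Abilities": (52, 2),
}

# Total descriptors across the standardized questionnaires.
total_descriptors = sum(n for n, _ in questionnaires.values())
# Total rating scales: descriptors multiplied by scales per descriptor.
total_scales = sum(n * s for n, s in questionnaires.values())
# Descriptors rated on both importance and level (two scales each).
two_scale_descriptors = sum(n for n, s in questionnaires.values() if s == 2)

print(f"descriptors:           {total_descriptors}")
print(f"rating scales:         {total_scales}")
print(f"two-scale descriptors: {two_scale_descriptors}")
```

The 161 descriptors that carry both an importance and a level scale account for 322 of the 400 scales, so the choice to retain two scales in four domains drives most of the rating burden.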

Level Scales

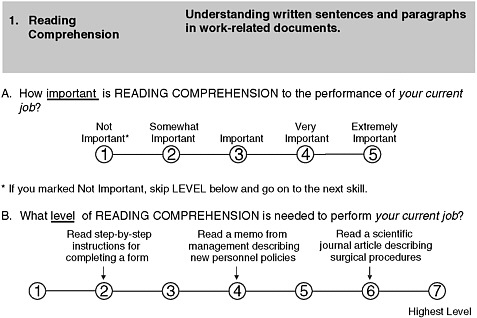

The level scales retain behavioral anchors with complex terminology and technical terms that were unfamiliar to some respondents in the pretesting. As determined by Hubbard et al. (2000), many of the behavioral anchors were examples from occupations unfamiliar to most job incumbents, and the level of difficulty or complexity of the requirements was confounded with the specific performance requirements of a particular occupation. For example, Figure 4-3 shows the current rating scales used for the “Reading Comprehension” skill.

In general, the anchors for the high end of the level scale are embedded in high-level occupations. However, relative to this particular descriptor, anyone with some knowledge of surgery might find comprehending such a journal article quite easy. After all, scientific writing is intended to be clear, direct, and simple. In contrast, an article on surgery might be incomprehensible to a Nobel laureate in literature with a very high level of general reading comprehension but little knowledge of surgery. At the other end of the scale, the level of reading comprehension required to read step-by-step instructions for completing a form may vary, depending on the content of the instructions and the complexity of the form. For example, many people find the step-by-step instructions for completing the Internal Revenue Service’s tax forms for computing the alternative minimum tax virtually incomprehensible. In short, this example illustrates the larger problem identified by Hubbard et al. (2000): the behavioral anchors included in the level scales confound difficulty level with domain specificity.

FIGURE 4-3 Reading comprehension rating scales.

SOURCE: National Center for O*NET Development (no date). Reprinted with permission.

In a study of O*NET commissioned by the Social Security Administration, Gustafson and Rose (2003) found that some of the behavioral anchors do not represent a clear continuum of levels of difficulty of a descriptor. As in the example of reading comprehension above, Gustafson and Rose found that some rating scales are consistently biased, placing behaviors in professional domains viewed as high level (including medicine, law, science, and “corporate”) near the top of the scale, regardless of the actual difficulty of the descriptor being measured. Another example is the anchor at level 6 of the 7-point “oral comprehension” scale, “understanding a lecture on advanced physics.”

Handel (2009) also argues that the anchors for some scales do not reflect equal intervals along a continuum of levels of difficulty. Based on an analysis of ratings of 809 occupations, he identifies a pattern of placing extremely complex behaviors at level 6 in the 7-point rating scales, which discourages respondents from using the upper end of the scale. Anchors placed at level 6 include arguing a case before the Supreme Court, negotiating a treaty as an ambassador, and designing a new personnel system for the Army.

Gustafson and Rose (2003) found that some scale anchors include extraneous life-threatening or urgent situations that are not relevant to difficulty level. For example, the Ability “written comprehension” is defined as “the ability to read and understand ideas presented in writing.” The level 6 anchor, “understand an instruction book on repairing missile guidance systems,” confuses the difficulty of reading the material with the serious implications of a missile system in need of repair. In addition, they found that some behavioral anchors are inconsistent with—or only vaguely related to—the definition of the descriptor being measured, and others conflate learned techniques or skills with high levels of physical abilities.

Importance Scales

It is clear that some of the behaviorally anchored rating scales used to measure the level of descriptors are problematic, and the rating scales for importance have also been subject to some criticism. For example, experts have noted that the meanings of the scale points (e.g., “somewhat important,” “very important”) are not specified. Raters must impose their own internal metric, which they apparently can do with some degree of consistency (Tsacoumis and Van Iddekinge, 2006). However, the raters’ metrics may not correspond to the metrics employed by specific users. In addition, the importance scales cannot be used to reflect substantive occupational requirements; they can be used only for relative comparisons between occupations. Handel (2009, p. 15) notes that “in principle, the concept of level of complexity is more meaningful than importance.” A thorough discussion of this issue can be found in Handel (2009), along with proposals for more concrete metrics.

Correlation Between Level and Importance Scales

As noted above, the questionnaires related to Skills, Knowledge, GWAs, and Abilities include scales for both level and importance. These questionnaires contain 161 two-part items that ask respondents about the importance of the descriptor and, if at least somewhat important, the level of the descriptor needed to perform the job (see Figures 4-1 through 4-3). Analyses of the pretest data indicated that the responses to importance and level items were so highly correlated (r = .95) as to suggest that the two scales were largely redundant (Peterson et al., 1999). Handel’s (2009) analysis of current O*NET data indicates that the two scales remain largely redundant, with a mean correlation between importance and level responses of .92. Respondent burden could be reduced and resources conserved if only a single scale were used to gather data in each of these four domains.
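The redundancy check described above rests on a simple correlation between paired importance and level ratings. The following is a minimal sketch of that calculation, not the analysis used by Peterson et al. or Handel; the function name `pearson_r` and the six pairs of mean ratings are hypothetical and serve only to illustrate the computation.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences of ratings."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Hypothetical mean ratings of one descriptor across six occupations.
importance = [1.8, 2.4, 3.1, 3.9, 4.2, 4.7]  # 5-point importance scale
level = [1.5, 2.9, 3.6, 4.8, 5.1, 6.0]       # 7-point level scale

r = pearson_r(importance, level)
print(f"importance-level correlation: r = {r:.2f}")
```

A correlation close to 1 for most descriptors would indicate that one scale adds little information beyond the other.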

Ratings Provided by Occupational Analysts

The prototype development team believed that job incumbents would be best positioned to rate the level and importance of each descriptor (Peterson et al., 1999). Currently, however, occupational analysts rate the importance and level of the Abilities and Skills descriptors, which comprise 87 of the 239 item descriptors (approximately 37 percent) included in the standardized O*NET questionnaires; items for other domains (Knowledge, GWAs, Work Context, Work Styles) are rated by job incumbents (see Table 4-3). In the first years of data collection, analysts rated only Abilities, but since 2006, they have also rated Skills.

The choice of raters is controversial. In a presentation to the panel, Handel (2009) argued that job incumbents are the most knowledgeable source of information about their own jobs and suggested that the O*NET Center replace the current behaviorally anchored rating scales with different types of questionnaires, including specific questions that he viewed as more understandable to incumbents. In another presentation, Harvey questioned the credibility of ratings provided by analysts, based on his view that they lack extensive firsthand experience with the occupation. He also questioned the use of job incumbents, saying they “arguably represent among the least-credible sources of job analysis ratings” (Harvey, 2009a).

Research on Raters as Sources of Occupational Information

The U.S. Department of Labor (2008, p. 6) notes that the O*NET prototype team expected to rely on job incumbents as the primary data source, based on earlier studies indicating that incumbents were capable of accurately rating occupational characteristics (Fleishman and Mumford, 1988; Peterson et al., 1990, 1999). More recent research indicates that job incumbents can provide psychometrically sound ratings of more concrete characteristics of occupations, such as tasks, but that their ratings of more abstract occupational characteristics, such as those relating to abilities or job-related personality traits, are less reliable (Dierdorff and Morgeson, in press; Dierdorff and Wilson, 2003). These recent findings, however, are clouded by unresolved research issues regarding the interpretation of interrater agreement and reliability in job analysis (Harvey and Wilson, 2000; Sanchez and Levine, 2000; Sanchez et al., 1998). In addition, a variety of factors are believed to influence the degree to which ratings by job incumbents and occupational analysts are interchangeable, including job complexity, rater training, written materials, and the information on which analyst ratings are based (Butler and Harvey, 1988; Cornelius and Lyness, 1980; Lievens and Sanchez, 2007; Lievens, Sanchez, and Da Corte, 2004; Sanchez, Zamora, and Viswesvaran, 1997).

Documentation of the Decision to Use Analysts

According to the U.S. Department of Labor (2008, p. 6), the decision to use analysts to rate Abilities was based on research leading to the O*NET Data Collection Program, which examined various sources and methods for collecting occupational data. The later decision to use analysts to rate Skills was based in part on a study by Tsacoumis and Van Iddekinge (2006), which compared incumbent and analyst ratings of Skill descriptors across a large sample of O*NET occupations. Although the researchers found statistically significant differences between incumbent and analyst ratings—incumbents provided higher mean ratings but demonstrated lower levels of interrater agreement than analysts—these differences were regarded as having minimal practical significance, and they recommended basing the choice of rating source on other factors.

The O*NET Center therefore decided to use analysts to make the Skills ratings based on “considerations of relative practicality, such as cost” (U.S. Department of Labor 2008, p. 6), although it did not provide comparative data on the costs associated with either rating source. Tsacoumis (2009b, p. 1) also alluded to “theoretical and philosophical reasons for preferring one rater group to the other for collecting different types of data.” Tsacoumis further asserted that incumbents are generally more familiar with the day-to-day duties of their job and therefore are the best source of information regarding tasks, GWAs, and work context, whereas trained analysts “understand the ability and skill constructs better than incumbents and therefore should provide the ability and skills data” (p. 1).

Methods Used in Analyst Ratings

Analysts are provided written materials about occupations, referred to as “stimulus” materials because they are designed to stimulate a response in the form of a rating. Analysts do not directly observe or interview incumbents employed in the occupations they provide ratings for. Donsbach and colleagues (2003) describe a process of streamlining the stimulus materials used in rating Abilities. First, the researchers removed O*NET data on the Knowledge, Skills, Education and Training, and Work Styles for the occupation being rated, because these materials were judged to be irrelevant to Ability ratings. Next, GWA and Work Context items that were judged to be relevant to a specific Ability by a panel of eight industrial/organizational psychologists were retained. From this pool of items, those that did not reach a certain cutoff of importance, according to incumbent ratings, were eliminated. This process greatly reduced the amount of stimulus materials provided to analysts to rate Abilities (see Donsbach et al., 2003, Appendix E). Tsacoumis (2009a) indicated that analysts are currently provided with a similar set of rating materials for use in rating Skills. As noted above, research suggests that the type of stimulus materials provided can affect the quality of the ratings.

Tsacoumis and Van Iddekinge (2006) reported that 31 unique analysts had participated in the various cycles of data collection. Eight analysts provided the Skills ratings for the ninth cycle of 106 occupations (Willison and Tsacoumis, 2009). The choice of this number of raters is supported by a series of studies of the psychometric properties of their Skills ratings (Tsacoumis, 2009a), although the manner in which these psychometric properties were computed has been criticized (Harvey, 2009b). Tsacoumis (2009a) also clarified that all analysts received rater training and met certain minimum requirements regarding training and experience.

Unanswered Questions About Analyst Ratings

On the basis of this brief review of the literature and published information from the O*NET Center, the panel identified several questions regarding the use of analysts or incumbents to rate occupational characteristics. Why are analysts used to collect data for the Abilities and Skills domains but not for other domains? If the decision to use analysts for these two domains is based on the argument that analysts are better raters than incumbents of trait-like, abstract aspects of the job, then it is unclear why ratings of Work Styles are still made by incumbents. As noted in Chapter 2, the Work Styles descriptors, such as dependability, stress tolerance, and integrity, resemble abstract personality traits. Similarly, if analysts are preferred on the basis of research indicating that incumbents are likely to inflate their ratings (Dierdorff and Wilson, 2003), it is unclear why they are not used to rate Work Context descriptors, such as the seriousness of a mistake and the results of decisions on other people, which appear equally vulnerable to inflation bias.

Other questions concern how analysts acquire occupational information. For example, how may the streamlining of rating stimulus materials affect the quality of analyst ratings? Would opportunities to directly observe or interview incumbents influence the quality or usefulness of analyst ratings? Might these or other methods used to provide occupational information to analysts influence the degree of convergence or divergence between analyst and incumbent ratings?

Another question concerns what criteria should be used to evaluate the quality of ratings. Researchers have often focused on interrater agreement and interrater reliability (both across and within constructs and occupations), but these have drawbacks as quality metrics. Low levels of interrater agreement among job incumbents may reflect legitimate differences in how different incumbents view their job (Tsacoumis and Van Iddekinge, 2006). Conversely, the relatively high interrater agreement among analysts does not necessarily reflect high validity, because it could result in part from excessive simplification of stimulus materials so that relevant information on Abilities and Skills is lost.

Finally, might it be possible to reduce costs by avoiding the use of analysts? Hybrid approaches capitalize on archival data documenting the empirical relationships among various sets of ratings. For example, known empirical associations between GWA and Ability ratings gathered in the past could be used to mechanically derive Ability ratings using newly collected incumbent-based GWA ratings, thereby eliminating the need to collect analyst ratings with every new wave of incumbent ratings.

Data Collection from Job Incumbents

Job incumbents are the source for most O*NET data (U.S. Department of Labor, 2008). Although they do not rate the importance and level of the Skills and Abilities descriptors, job incumbents do provide data for 6 other domains (Knowledge, GWAs, Work Context, Education and Training, Work Styles, Tasks). The O*NET Center uses two methods to collect information from job incumbents. One method is a carefully designed probability sample of establishments and employees in those establishments, referred to as the “establishment method.” The other method, used for occupations that are more difficult to locate in establishments, is referred to as the “occupational expert method” (U.S. Department of Labor, 2008). O*NET Center staff determine which method is most appropriate for each occupation, using information on the predicted establishment eligibility rate and the predicted establishment response and employee response to quantify the efficiency of the two approaches.

For most domains (except Skills and Abilities), the O*NET Center uses the establishment method to collect data for approximately 75 percent of the occupations and the occupational expert method to collect data for approximately 25 percent of the occupations (U.S. Department of Labor, 2008). The occupational expert method is used when an occupation is difficult to locate in establishments, when employment data for the occupation are not available, or when the number of incumbents is very low, provided that one or more professional organizations are available to identify occupational experts. The updated occupational data collected using these two approaches are not combined, and thus estimates are presented separately.

Establishment Method

Frame, sample design, and sample waves. According to the U.S. Department of Labor (2008), a stratified two-stage design is used to select a sample of workers. The first (primary) stage is a sample of businesses, selected with probability proportional to the expected number of employed workers in the specific occupations being surveyed. From these first-stage sampling units (the businesses), a sample of employees is selected.
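The first-stage selection described above can be illustrated with a small sketch of systematic probability-proportional-to-size (PPS) sampling. This is one common way to implement PPS selection and is an assumption here; the source does not state which PPS algorithm is used. The establishment identifiers, size measures, and function names are hypothetical.

```python
import random

def selection_probability(size, total_size, n):
    """First-stage inclusion probability under PPS: n * size / total size."""
    return n * size / total_size

def pps_systematic_sample(sizes, n, rng):
    """Select n units with probability proportional to size.

    sizes: dict mapping unit id -> positive size measure (here, the expected
    number of workers in the target occupations).
    Assumes no single size exceeds the sampling interval total/n; otherwise
    that unit could be selected more than once.
    """
    total = sum(sizes.values())
    interval = total / n
    start = rng.random() * interval
    targets = [start + k * interval for k in range(n)]

    selected = []
    cumulative = 0.0
    next_target = 0  # index of the next selection point to be matched
    for unit, size in sizes.items():
        cumulative += size
        while next_target < n and targets[next_target] < cumulative:
            selected.append(unit)
            next_target += 1
    return selected

# Hypothetical frame: establishment id -> expected workers in the occupations.
sizes = {"A": 40, "B": 10, "C": 25, "D": 5, "E": 30, "F": 20, "G": 35, "H": 15}
picked = pps_systematic_sample(sizes, 3, random.Random(7))
print(picked)
```

Under this scheme an establishment expected to employ 40 of the 180 workers on the frame has a selection probability of 3 × 40 / 180, or about 0.67, which is why larger employers of the target occupations appear in the sample more often.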

The frame for the primary stage contains approximately 15 million establishments, including the self-employed. Employment statistics published by the U.S. Bureau of Labor Statistics (BLS) are merged with the industry information from Dun and Bradstreet to help identify industries in which particular occupations are employed.

For occupations that are difficult to locate, another frame is used to either supplement or replace the Dun and Bradstreet frame. The National Center for O*NET Development and RTI (2009a) identified three scenarios in which another frame was used: (1) a supplemental frame of job incumbents obtained from the membership list of a professional association; (2) a supplemental frame of employers selected from a targeted listing of establishments that employ workers in the occupation; and (3) a special frame of establishments that provides better coverage of the occupation of interest than the Dun and Bradstreet frame. Dual frame estimation techniques were used for occupations requiring two frames.

Stratification is used to obtain adequate representation for establishments with varying numbers of employees. This is done by undersampling smaller establishments (fewer than 50 employees) and oversampling large establishments (250 or more employees).

The sample design incorporates a wave design to control sample overproduction. Groups of occupations that are expected to occur in a similar set of industries are formed. This approach minimizes the number of establishments to contact. Model-aided sampling is employed, which controls employee sample selection. This approach is beneficial in reducing data collection costs, with minimal effects on the accuracy of the estimators (Berzofsky et al., 2008).

Sample size and selection. For a given occupation, a minimum of 15 valid, completed questionnaires for each of the 3 domain questionnaires is required to meet precision targets, while task and background information is collected via a minimum of 45 respondents. The current precision targets for O*NET are that virtually all 5-point scale ratings have a 95 percent confidence interval no wider than +/−1 and that virtually all 7-point scale ratings have confidence intervals no wider than +/−1.5. In O*NET data collection to date, the average number of respondents per domain questionnaire is approximately 33, and for task and background information, 100. Ninety percent of the 5-point and 7-point scale ratings are within the precision targets (National Center for O*NET Development and RTI, 2009a).
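To make the precision targets concrete, the sketch below computes the half-width of a 95 percent confidence interval for a mean rating and compares it with the stated targets (+/−1 for 5-point scales, +/−1.5 for 7-point scales). It is a deliberate simplification: it assumes simple random sampling and a normal approximation, whereas the actual O*NET estimates are design-based and weighted. The ratings and function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, stdev

# Precision targets from the text: maximum CI half-width by scale length.
TARGET_HALF_WIDTH = {5: 1.0, 7: 1.5}

def ci_half_width(ratings, confidence=0.95):
    """Half-width of a normal-approximation CI for the mean rating.

    Assumes simple random sampling; ignores weights and design effects.
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return z * stdev(ratings) / sqrt(len(ratings))

def meets_target(ratings, scale_points):
    """True if the CI half-width is within the target for this scale."""
    return ci_half_width(ratings) <= TARGET_HALF_WIDTH[scale_points]

# Hypothetical responses from 15 incumbents on a 5-point importance item.
ratings = [3, 4, 4, 5, 3, 4, 2, 4, 5, 3, 4, 4, 3, 5, 4]
print(round(ci_half_width(ratings), 2), meets_target(ratings, 5))
```

With only 15 respondents, an item on which incumbents split between the extremes of a 5-point scale would exceed the target, which is consistent with the report that some ratings fall outside it.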

The establishment method involves multiple sample selection steps (see Data Collection Procedures, below), including sampling establishments at the first stage and employees at the second stage. Probability proportional to size selection is used to select a subsample of establishments from the simple random sample of establishments that were initially selected.

Weighting and estimation. Because the sample selection process for the establishment method is a complicated design, complex procedures are required in the analysis of these data. The weight development and estimation have been carefully outlined (U.S. Department of Labor, 2008, pp. 65-86). The final weights account for the sampling of establishments, occupations, and employee selection; adjustments for early termination of employee sampling activities due to higher-than-expected yields and multiple sample adjustments; adjustments for nonresponses at both the establishment and the employee levels; and adjustments for under- and overcoverage of the population. Weight trimming is used to adjust for extremely large weights.
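The general logic of the weighting steps described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the O*NET procedure documented by the U.S. Department of Labor (2008): it uses a single nonresponse adjustment class, omits the coverage and early-termination adjustments, and trims by simple capping. All probabilities, the cap, and the function names are hypothetical.

```python
def base_weight(p_establishment, p_employee):
    """Inverse of the overall selection probability across both stages."""
    return 1.0 / (p_establishment * p_employee)

def nonresponse_adjust(weights, responded):
    """Inflate respondents' weights so they also represent nonrespondents.

    Uses one adjustment class for everyone; real designs typically adjust
    within classes of similar units. Returns weights for respondents only.
    """
    total = sum(weights)
    respondent_total = sum(w for w, r in zip(weights, responded) if r)
    factor = total / respondent_total
    return [w * factor for w, r in zip(weights, responded) if r]

def trim_weights(weights, cap):
    """Cap extremely large weights (simple trimming, no redistribution)."""
    return [min(w, cap) for w in weights]

# Hypothetical (establishment, employee) selection probabilities.
probabilities = [(0.02, 0.5), (0.05, 0.25), (0.1, 0.5), (0.004, 0.5)]
weights = [base_weight(pe, pw) for pe, pw in probabilities]
adjusted = nonresponse_adjust(weights, [True, False, True, True])
final = trim_weights(adjusted, cap=300.0)
print([round(w, 1) for w in final])
```

Capping lowers the sum of the weights; production procedures usually redistribute the trimmed excess or recalibrate afterward, a step omitted here for brevity.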

Final estimates are produced for each occupation, but no subgroups or domain estimates are produced or released. Standard deviations are available for each item. No item imputation is conducted because of the low item nonresponse (U.S. Department of Labor, 2008, Appendix H). Design-based variances are produced using a widely used survey design software package called SUDAAN, which was developed at RTI. This software accounts for the complex survey structure used in the establishment method. Variances are estimated using the first-order Taylor series approximation of deviations of estimates from their expected values. Standard error estimates and 95 percent confidence intervals are included with all estimates of means and proportions.
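As an illustration of the first-order Taylor series approach mentioned above, the sketch below estimates the standard error of a weighted mean by linearizing the ratio of weighted totals and summing the linearized values within primary sampling units (establishments). It assumes a single stratum, treats establishments as sampled with replacement, and applies no finite population correction; it is not a reproduction of SUDAAN's implementation. The records and function names are hypothetical.

```python
from collections import defaultdict
from math import sqrt

def weighted_mean(records):
    """records: list of (psu_id, weight, rating) tuples."""
    total_weight = sum(w for _, w, _ in records)
    return sum(w * y for _, w, y in records) / total_weight

def linearized_se(records):
    """Taylor-linearization standard error of the weighted mean.

    Assumes one stratum and with-replacement sampling of PSUs.
    """
    total_weight = sum(w for _, w, _ in records)
    mean = weighted_mean(records)

    # Sum the linearized values w * (y - mean) / total_weight within each PSU.
    psu_totals = defaultdict(float)
    for psu, w, y in records:
        psu_totals[psu] += w * (y - mean) / total_weight

    n = len(psu_totals)
    if n < 2:
        raise ValueError("need at least two PSUs to estimate variance")
    z_bar = sum(psu_totals.values()) / n
    variance = n / (n - 1) * sum((z - z_bar) ** 2 for z in psu_totals.values())
    return sqrt(variance)

# Hypothetical data: (establishment id, final weight, rating on a 5-point scale).
records = [
    ("est1", 120.0, 4), ("est1", 120.0, 5),
    ("est2", 80.0, 3), ("est2", 80.0, 4),
    ("est3", 200.0, 2), ("est4", 60.0, 5),
]
mean = weighted_mean(records)
se = linearized_se(records)
print(f"mean = {mean:.2f}, 95% CI = +/- {1.96 * se:.2f}")
```

With equal weights and one respondent per establishment, this estimator reduces to the familiar s/√n, which is a convenient sanity check.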

Occupational Expert Method

According to the National Center for O*NET Development and RTI (2009a), the occupational expert method is used when the establishment method would be problematic due to low rates of employment in some occupations, such as new and emerging occupations. This method is used when the occupation is well represented by one or more professional or trade associations that are willing and able to identify experts in the target population. An occupational expert is knowledgeable about the occupation, has worked in the occupation for at least 1 year, and has 5 years of experience as an incumbent, trainer, or supervisor. The occupational expert must have had experience with the occupation in the past 6 months.

For this method, samples of experts are selected from lists of potential respondents. A stratified random sample of occupational experts is selected from the provided lists to prevent investigator bias in the final selection. Sample sizes are designed to ensure that a minimum of 20 completed questionnaires are available for analysis after data cleaning. According to the U.S. Department of Labor (2008), the goal of 20 questionnaires was set as a reasonable number to enable adequate coverage of experts, occupation subspecialties, and regional distribution. Although the DOL provides estimated standard deviations for the mean estimates based on the occupational expert method, it does not indicate that 20 was selected based on sample size calculations to meet any current measure of variability. Because data collected from using this method are not based on a probability sampling design, unweighted estimates of means and percentages are reported.

Both the establishment and occupational expert approaches are carefully documented (U.S. Department of Labor, 2008). Details of the probability sampling design, stratification, sample sizes, weighting, and estimation for the establishment method are well defined. Steps in the development and analysis seem appropriate. The nonprobability approach for the occupational expert method is distinguished from the probability approach of the establishment method, and it is noted that these estimates should be viewed separately from the establishment method. However, the Data Collection Program relies on model-aided sampling. Although the model-aided sampling approach is discussed in an article published by RTI Press (Berzofsky et al., 2008), this approach would be viewed by a larger audience and gain more exposure if published in a more widely circulated professional journal in the field of survey methodology.

Data Collection Procedures

The O*NET data collection process employs a complex design that first identifies business establishments and then surveys employees in those establishments. The U.S. Department of Labor (2008) describes the 13-step process for both the establishment and the occupational expert methods. RTI International designs, implements, and supervises the survey data collection.

Selection of an employee for the establishment method is a multistep process. Establishments are first selected, and occupations are then assigned in selected establishments. Employees are selected through a point of contact in the establishment who is responsible for coordinating data collection within the establishment and for following up with nonresponding employees. Once the contact agrees to participate, informational materials and questionnaires are mailed to both him or her and the job incumbents.

The O*NET Center and RTI (2009b) report that, in their experience, almost all establishments prefer to coordinate data collection themselves, and their sense is that many establishments would refuse to participate if they were required to provide the names of their employees. The Center and RTI determined that it was not feasible to contact employees directly for nonresponse follow-up purposes, because most employers will not divulge confidential employee contact information.

A number of tools commonly used to improve and monitor response rates are implemented throughout the data collection process. First, the data collection team employs a complex web-based case management system that enables up-to-date tracking of all activities while the survey is being fielded. Incentives are offered to the point of contact, establishments, and employees to encourage participation and improve response rates. Multiple modes of data collection are offered to the employee, including web and mail versions of the surveys. In addition, multiple contacts, another well-known survey procedure to improve response rates, are also in place. Spanish versions of the questionnaires are available (and can be requested by calling a toll-free number) for occupations with a high percentage of Hispanic workers, although these are not distributed in high numbers. The O*NET Center outlines further enhancements that are implemented to maximize response rates (U.S. Department of Labor, 2008, Section B.3).

The steps in the data collection protocol for the occupational expert method are similar to the process followed in the establishment method. However, since occupational experts are enlisted for this approach, there are no verification or sampling calls.

Response Rates

Calculating response rates—the number of eligible sample units that cooperate in a survey—is central to survey research. It is valuable to standardize definitions of response rates in order to allow comparisons of response and nonresponse rates across surveys of different topics and organizations. The American Association for Public Opinion Research (AAPOR) has developed standard definitions that clearly distinguish between the response rate and the cooperation rate, cover different modes of survey administration, discuss the criteria for ineligibility, and specify methods for calculating refusal and contact rates (American Association for Public Opinion Research, 2008).

The U.S. Department of Labor (2008) reports relatively high response rates for both the employees and the establishments contacted using the establishment method. However, it is unclear whether the rates reported are response rates or cooperation rates, as defined by the AAPOR. In response to our questions, the O*NET Center provided more information about response disposition categories but did not provide detailed disposition rates as defined by AAPOR.
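The distinction between a response rate and a cooperation rate can be shown with a short calculation based on the AAPOR Standard Definitions formulas for Response Rate 1 (RR1) and Cooperation Rate 1 (COOP1). The disposition counts below are hypothetical and do not describe O*NET data; the function and parameter names are illustrative.

```python
def response_rate_1(complete, partial, refusal, non_contact, other,
                    unknown_household, unknown_other):
    """AAPOR RR1: completes over all eligible and unknown-eligibility cases."""
    denominator = ((complete + partial)
                   + (refusal + non_contact + other)
                   + (unknown_household + unknown_other))
    return complete / denominator

def cooperation_rate_1(complete, partial, refusal, other):
    """AAPOR COOP1: completes over eligible cases that were contacted."""
    return complete / ((complete + partial) + refusal + other)

# Hypothetical final dispositions for 1,000 sampled cases.
counts = dict(complete=600, partial=50, refusal=150, non_contact=120,
              other=30, unknown_household=40, unknown_other=10)

rr1 = response_rate_1(**counts)
coop1 = cooperation_rate_1(counts["complete"], counts["partial"],
                           counts["refusal"], counts["other"])
print(f"RR1 = {rr1:.3f}, COOP1 = {coop1:.3f}")
```

Because the cooperation rate excludes non-contacts and cases of unknown eligibility from the denominator, it is never lower than the response rate for the same sample, so a reported rate cannot be interpreted without knowing which definition was used.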

Data Editing and Cleaning

This stage of data processing can be another source of nonsampling error. Responses from the mail questionnaires are keyed using double entry and 100 percent key verified, which reduces the potential for data entry error. A final stage of data cleaning, applied to task questionnaire data, is referred to as “deviance analysis.” Deviance analysis identifies cases with response profiles differing from the response profiles of the rest of the cases in the occupation. Such cases are considered “deviant” and are referred to as “outliers.”

Although the O*NET Center provided a written description of this analysis in a response to a question from the panel (National Center for O*NET Development and RTI, 2009c), there does not appear to be any public documentation of this process. It appears that the analysis identifies outliers that have lower task endorsement. For example, cases in which fewer than 33 percent of the tasks are rated as “3 or higher” and fewer than 50 percent of the tasks are rated as “relevant” would be identified as potentially deviant.
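One reading of the example rule above can be expressed as a simple screening function. Because the process is not publicly documented, this sketch is an interpretation only: it assumes a case is flagged when both shares fall below their thresholds, and the data layout, parameter names, and sample values are hypothetical.

```python
def share_true(flags):
    """Proportion of True values in a non-empty list."""
    return sum(1 for f in flags if f) / len(flags)

def is_potentially_deviant(ratings, relevant, rating_cutoff=3,
                           min_rated_share=0.33, min_relevant_share=0.50):
    """Flag a case with low task endorsement.

    ratings:  one rating per task, or None where the task was not rated
    relevant: one boolean per task, True if marked relevant
    A case is flagged only when BOTH shares fall below their thresholds
    (an assumed reading of the rule described in the text).
    """
    rated_high = [r is not None and r >= rating_cutoff for r in ratings]
    return (share_true(rated_high) < min_rated_share
            and share_true(relevant) < min_relevant_share)

# Hypothetical respondent who endorses few of the ten tasks in the occupation.
ratings = [4, None, 2, None, None, 1, None, 3, None, None]
relevant = [True, False, True, False, False, True, False, True, False, False]
print(is_potentially_deviant(ratings, relevant))
```

A flag of this kind marks a case for review rather than automatic removal, since a low endorsement profile may reflect a legitimate subspecialty within the occupation.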

DATA CURRENCY

From 2001 to 2006, the O*NET Center collected and published updated information on approximately 100 occupations twice yearly. Over the past 3 years, with a lower budget, it has collected and published updated information on approximately 100 occupations once each year (Rivkin and Lewis, 2009a). The current O*NET database includes comprehensively updated information for 833 of the 965 occupations for which data are gathered. The 132 occupations that have not yet been updated are among the new and emerging occupations added to the O*NET-SOC taxonomy in 2009. Overall, across all occupations, the average currency of data is 2.59 years.

The O*NET Center prioritizes occupations for waves of data collection and updating on the basis of an extensive list of criteria, including not only when the occupation was last updated, but also whether the occupation is considered a “demand-phase occupation” by DOL. According to the O*NET Center, “demand-phase occupations” are those that (Lewis and Rivkin, 2009a, 2009b):

- Are identified as in demand by DOL;
- Are identified by DOL as a “top 50” occupation on the basis of job openings and median income;
- Are identified with high growth rates and/or large employment numbers;
- Are linked to technology, math, science, computers, engineering, or innovation;
- Are identified in a high “job zone” (i.e., require considerable or extensive preparation); and
- Are identified as a green occupation.

The O*NET Center’s stated goal is to update all demand-phase occupations at least once every 5 years. There is no stated goal for regularly updating other occupations (Lewis and Rivkin, 2009a).

This priority-based sampling approach results in a database with uneven currency across occupations. Although data for most occupations in the current O*NET database are relatively fresh, data for just over 5 percent of occupations have not been updated in 5 years or more (Lewis and Rivkin, 2009a, 2009b).

CONCLUSIONS AND RECOMMENDATIONS

To gather information for most content model domains, the National Center for O*NET Development employs a multimethod sampling approach, whereby respondents for approximately 75 percent of the occupations are identified through probability-based sampling, and respondents for 25 percent of the occupations are identified by other less scientifically rigorous methodologies. To gather information for the Skills and Abilities domains, respondents for all occupations are identified by methodologies other than probability-based sampling. This mixed-method approach results in the collection of occupational data from different types of respondents (occupational analysts, job incumbents, occupational experts) who may or may not represent the work performed in that occupation. It is unclear what impact this has on measurement error, since each type of respondent introduces a different source of error.

Recommendation: The Department of Labor should, with advice and guidance from the technical advisory board recommended in Chapter 2, commission a review of the sampling design to ascertain whether the current methodology represents the optimal strategy for consistently identifying the most knowledgeable respondents in each of the occupations studied and whether the results obtained are generalizable geographically, ethnically, and in other ways. The review should include investigation of the reliability, quality, and usefulness of data collected from different types of respondents and the cost-effectiveness of collecting data from these different groups.

The researchers commissioned by DOL should examine the errors involved in the current combination of probability-based sampling and other sampling approaches and distinguish the inferences made from these two approaches. If they determine that the current mix of methods does not represent the optimal strategy, DOL should commission research to explore other approaches to gather information on rare occupations, such as adaptive sampling designs.

This review should consider other surveys with which O*NET could piggyback, such as the Current Population Survey or other national household surveys.

Surveys administered by the O*NET Center include rating scales with behavioral anchors that define points on the scales. The surveys ask respondents to assess the level and importance of various descriptors required to perform the occupation. Some characteristics of these scales make them difficult to use or result in an inconsistent frame of reference across raters. For example, some scales lack interval properties, and the instructions provided to focus the rater’s task are unclear. In addition, in the scales used to assess the required level of a descriptor, many of the behavioral anchors are taken from occupations that are not familiar to many job incumbent raters.

Recommendation: The Department of Labor should, in coordination with research on the content model discussed in Chapter 2,³ and with advice and guidance from the technical advisory board recommended in Chapter 2, conduct a study of the behaviorally anchored rating scales and alternative rating scales. This research should examine the rater’s understanding of the rating scales and frame of reference for the rating task and should include verbal protocol analysis as well as pretesting and feedback from respondents of different demographic backgrounds, sampled from a variety of occupations. So that researchers can fully evaluate the strengths and weaknesses of the pretest results, the demographic profile of the pretest respondents should be included as part of the study documentation, using appropriate techniques to protect individual privacy.

³ Because the research recommended in Chapter 2 may result in the elimination of some descriptors, there would be no need to study the behaviorally anchored rating scales associated with those descriptors.

If the pretest results indicate problems with comprehension of the survey items, DOL should consider implementing changes that will facilitate a better understanding of the rating task, including revision of instructions, adjustment of the overall reading level, and revision of the rating scales so that the questions are not double barreled (combining two or more issues into a single question).

Most O*NET data are collected through surveys of employees (job incumbents) of a sample of establishments, an approach referred to as the establishment method. The O*NET Center reports relatively high response rates for both the employees and the establishments contacted using this method. However, it is unclear whether these reported rates are response rates or cooperation rates, as defined by AAPOR.

Recommendation: To improve the cost-effectiveness of data collection, conform to best practices for survey design, and comply with current federal requirements for survey samples, the Department of Labor should, with advice and guidance from the technical advisory board recommended in Chapter 2, explore ways of increasing response rates. This research should consider the costs and benefits of pilot-testing all questionnaires on real job incumbents, directly contacting job incumbents rather than relying entirely on the establishment point of contact for access to job incumbents, and using incentives to encourage participation.

As part of this exploration, DOL should consider cost-effective ways to identify nonresponders and encourage their participation. The results of these studies should be released for public use. Because the representativeness of the establishment method sample depends partly on the response rates, the O*NET Center should publish a detailed breakdown of the response disposition using the definitions of AAPOR. Such a detailed breakdown of the response rates would facilitate evaluation of the sample and help the O*NET Center and DOL to target nonresponse research efforts.
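The distinction between a response rate and a cooperation rate can be made concrete with a short calculation. The sketch below follows the simplest AAPOR outcome rates (Response Rate 1 and Cooperation Rate 1) as we understand the published standard definitions. The class name, field names, and case counts are hypothetical and chosen only for illustration; they are not O*NET figures.

```python
from dataclasses import dataclass


@dataclass
class Dispositions:
    """Final case counts grouped by AAPOR-style disposition (hypothetical names)."""
    complete: int             # I: completed questionnaires
    partial: int              # P: partially completed questionnaires
    refusal: int              # R: refusals and break-offs
    non_contact: int          # NC: eligible cases never reached
    other: int                # O: other eligible nonrespondents
    unknown_eligibility: int  # UH + UO: cases whose eligibility is unknown


def response_rate_1(d: Dispositions) -> float:
    """Completes divided by all eligible and possibly eligible cases."""
    denominator = (d.complete + d.partial + d.refusal
                   + d.non_contact + d.other + d.unknown_eligibility)
    return d.complete / denominator


def cooperation_rate_1(d: Dispositions) -> float:
    """Completes divided by eligible cases that were actually contacted."""
    denominator = d.complete + d.partial + d.refusal + d.other
    return d.complete / denominator


# Illustrative counts only (assumed, not taken from any O*NET report).
example = Dispositions(complete=600, partial=50, refusal=150,
                       non_contact=120, other=30, unknown_eligibility=50)

rr1 = response_rate_1(example)
coop1 = cooperation_rate_1(example)
print(f"Response rate 1:    {rr1:.2f}")
print(f"Cooperation rate 1: {coop1:.2f}")
```

Because the cooperation rate leaves non-contacts and cases of unknown eligibility out of the denominator, it can never be lower than the corresponding response rate for the same set of cases. A published disposition breakdown would let readers compute either figure themselves.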

Through data collection over the past 10 years, DOL has achieved its initial goal of populating O*NET with up-to-date information from job incumbents and job analysts, replacing earlier data based on the Dictionary of Occupational Titles. However, short-term policy agendas related to workforce development have at times reduced focus on the core activities of developing, maintaining, and updating a high-quality database. For example, the O*NET Center prioritizes occupations for waves of data collection and updating based on an extensive list of criteria, including not only when the occupation was previously sampled but also whether it has been identified as “in-demand” by DOL, whether it has been identified as a new and emerging occupation, and whether it is one of the “top 50” occupations identified by DOL (based on the numbers of job openings and median income). This priority-based sampling approach results in a database with uneven currency across all occupations. The average currency of data, across all O*NET occupations, is 2.59 years, but over 5 percent of occupations have not been updated for 5 or more years.

Recommendation: The Department of Labor should, with advice and guidance from the technical advisory board recommended in Chapter 2 and the user advisory board recommended in Chapter 6, develop a clear and closely followed policy and set of procedures for refreshing survey data in all O*NET occupations. The policy and procedures should take into account the length of time that occupational data remain viable as well as the need of various O*NET user communities for the most up-to-date information. As part of this effort, the Department of Labor should explore the potential benefits and practical feasibility of adding an O*NET supplement to the ongoing Current Population Survey, in order to provide a sample of fresh data to assess the currency and representativeness of the data in O*NET.

REFERENCES

American Association for Public Opinion Research. (2008). Standard definitions: Final dispositions of case codes and outcome rates for surveys. Available: http://www.aapor.org/uploads/Standard_Definitions_04_08_Final.pdf [accessed July 2009].

Berzofsky, M., Welch, B., Williams, R., and Biemer, P. (2008). Using a model-aided sampling paradigm instead of a traditional sampling paradigm in a nationally representative establishment survey. Research Triangle Park, NC: RTI Press.

Butler, S.K., and Harvey, R.J. (1988). A comparison of holistic versus decomposed ratings of Position Analysis Questionnaire work dimensions. Personnel Psychology, 41, 761-771.

Conrad, F. (2009). Sources of error in cognitive interviews. Public Opinion Quarterly, 73, 32-55.

Cornelius, E.T., and Lyness, K.S. (1980). A comparison of holistic and decomposed judgment strategies in job analysis by job incumbents. Journal of Applied Psychology, 65, 155-163.

Couper, M. (2008). Designing effective web surveys. New York: Cambridge University Press.

Couper, M., Lessler, J., Martin, E., Martin, J., Presser, S., Rothgeb, J., and Singer, E. (2004). Methods for testing and evaluating survey questionnaires. Hoboken, NJ: Wiley-Interscience.

Dawis, R.V., and Lofquist, L.H. (1984). A psychological theory of work adjustment: An individual differences model and its applications. Minneapolis: University of Minnesota Press.

Dierdorff, E.C., and Morgeson, F.P. (in press). Effects of descriptor specificity and observability on incumbent work analyst ratings. Personnel Psychology.

Dierdorff, E.C., and Wilson, M.A. (2003). A meta-analysis of job analysis reliability. Journal of Applied Psychology, 88, 635-646.

Donsbach, J., Tsacoumis, S., Sager, C., and Updegraff, J. (2003). O*NET analyst occupational abilities ratings: Procedures. Raleigh, NC: National Center for O*NET Development. Available: http://www.onetcenter.org/reports/AnalystProc.html [accessed July 2009].

Fleishman, E.A. (1992). The Fleishman-Job Analysis Survey (F-JAS). Palo Alto, CA: Consulting Psychologists Press.

Fleishman, E.A., and Mumford, M.D. (1988). The abilities requirement scales. In S. Gael (Ed.), The job analysis handbook for business, industry, and government. New York: Wiley.

Forsyth, B.H., and Lessler, J.T. (1991). Cognitive laboratory methods: A taxonomy. In P.P. Biemer, R.M. Groves, L.E. Lyberg, N.A. Mathiowetz, and S. Sudman (Eds.), Measurement errors in surveys (pp. 393-418). New York: Wiley.

Gottfredson, G.D., and Holland, J.L. (1989). Dictionary of Holland occupational codes. Second edition. Odessa, FL: Psychological Assessment Resources.

Gustafson, S.B., and Rose, A. (2003). Investigating O*NET’s suitability for the Social Security Administration’s disability determination process. Journal of Forensic Vocational Analysis, 6, 3-15.

Handel, M. (2009). The O*NET content model: Strengths and limitations. Paper prepared for the Panel to Review the Occupational Information Network (O*NET). Available: http://www7.nationalacademies.org/cfe/MHandel%20ONET%20Issues.pdf [accessed July 2009].

Harvey, R.J. (2009a). The O*NET: Flaws, fallacies, and folderol. Presentation to the Panel to Review the Occupational Information Network (O*NET), April 17. Available: http://www7.nationalacademies.org/cfe/O_NET_RJHarvey_Presentation.pdf [accessed August 2009].

Harvey, R.J. (2009b). The O*NET: Do too abstract titles + unverifiable holistic ratings + questionable raters + low agreement + inadequate sampling + aggregation bias = (a) validity, (b) reliability, (c) utility, or (d) none of the above? Paper prepared for the Panel to Review the Occupational Information Network (O*NET). Available: http://www7.nationalacademies.org/cfe/O_NET_RJHArvey_Paper1.pdf [accessed July 2009].

Harvey, R.J., and Wilson, M.A. (2000). Yes Virginia, there is an objective reality in job analysis. Journal of Organizational Behavior, 21, 829-854.

Hubbard, M., McCloy, R., Campbell, J., Nottingham, J., Lewis, P., Rivkin, D., and Levine, J. (2000). Revision of O*NET data collection instruments. Raleigh, NC: National Center for O*NET Development. Available: http://www.onetcenter.org/reports/Data_appnd.html [accessed September 2009].

Krueger, R. (1988). Focus groups: A practical guide for applied research. Newbury Park, CA: Sage.

Lewis, P., and Rivkin, D. (2009a). O*NET program briefing. Presentation to the Panel to Review the Occupational Information Network (O*NET), February 3rd. Available: http://www7.nationalacademies.org/cfe/Rivkin%20and%20Lewis%20ONET%20Center%20presentation.pdf [accessed July 2009].

Lewis, P., and Rivkin, D. (2009b). Criteria considered when selecting occupations for future O*NET data collection waves. Document submitted to the Panel to Review the Occupational Information Network (O*NET). Available: http://www7.nationalacademies.org/cfe/Criteria%20for%20Data%20Collection%20Occupation%20Selection.pdf [accessed July 2009].

Lievens, F., and Sanchez, J.I. (2007). Can training improve the quality of inferences made by raters in competency modeling? A quasi-experiment. Journal of Applied Psychology, 92(3), 812-819.

Lievens, F., Sanchez, J.I., and De Corte, W. (2004). Easing the inferential leap in competency modeling: The effects of task-related information and subject matter expertise. Personnel Psychology, 57(4), 847-879.

National Center for O*NET Development and RTI. (2009a). O*NET survey and sampling questions, 4-01-09. Written response to questions from the Panel to Review the Occupational Information Network (O*NET). Available: http://www7.nationalacademies.org/cfe/O_NET_Survey_Sampling_Questions.pdf [accessed July 2009].

National Center for O*NET Development and RTI. (2009b). Responses to questions from the 4/17/09 O*NET meeting. Available: http://www7.nationalacademies.org/cfe/National_ONET_Response_re_Additional_Statistics_and_Survey_Methods_Questions.pdf [accessed July 2009].

National Center for O*NET Development and RTI. (2009c). O*NET data collection program: Statistical procedures for deviant case detection. Written response to questions from the Panel to Review the Occupational Information Network (O*NET). Available: http://www7.nationalacademies.org/cfe/Deviance%20Testing%20Procedures.pdf [accessed June 2009].

National Center for O*NET Development. (no date). Questionnaires. Available: http://www.onetcenter.org/questionnaires.html [accessed July 2009].

Peterson, N.G., Mumford, M.D., Borman, W.C., Jeanneret, P.R., and Fleishman, E.A. (Eds.). (1999). An occupational information system for the 21st century: The development of O*NET. Washington, DC: American Psychological Association.

Peterson, N.G., Mumford, M.D., Borman, W.C., Jeanneret, P.R., Fleishman, E.A., and Levin, K.Y. (1997). O*NET final technical report. Volumes I-III. Salt Lake City: Utah State Department of Workforce Services, on behalf of the U.S. Department of Labor Employment and Training Administration. Available: http://eric.ed.gov [accessed June 2009].

Peterson, N.G., Owens-Kurtz, C., Hoffman, R.G., Arabian, J.M., and Wetzel, D.C. (1990). Army synthetic validation project. Alexandria, VA: U.S. Army Research Institute for the Behavioral and Social Sciences.

Research Triangle Institute. (2008). O*NET data collection program, PDF questionnaires. Available: https://onet.rti.org/pdf/index.cfm [accessed July 2009].

Sager, C. (1997). Occupational interests and values: Evidence for the reliability and validity of the occupational interest codes and the values measures. In N.G. Peterson, M.D. Mumford, W.C. Borman, P.R. Jeanneret, E.A. Fleishman, and K.Y. Levin (Eds.), O*NET final technical report, volumes I, II and III. Salt Lake City: Utah State Department of Workforce Services on behalf of the U.S. Department of Labor, Employment and Training Administration. Available: http://eric.ed.gov [accessed July 2009].

Sanchez, J.I., and Levine, E.L. (2000). Accuracy or consequential validity: Which is the better standard for job analysis data? Journal of Organizational Behavior, 21, 809-818.

Sanchez, J.I., Prager, I., Wilson, A., and Viswesvaran, C. (1998). Understanding within-job title variance in job-analytic ratings. Journal of Business and Psychology, 12, 407-420.

Sanchez, J.I., Zamora, A., and Viswesvaran, C. (1997). Moderators of agreement between incumbent and nonincumbent ratings of job characteristics. Journal of Occupational and Organizational Psychology, 70, 209-218.

Tsacoumis, S. (2009a). O*NET analyst ratings. Presentation to the Panel to Review the Occupational Information Network (O*NET). Available: http://www7.nationalacademies.org/cfe/O_NET_Suzanne_Tsacoumis_Presentation.pdf [accessed May 2009].

Tsacoumis, S. (2009b). Responses to Harvey’s criticisms of HumRRO’s analysis of the O*NET analysts’ ratings. Paper provided to the Panel to Review the Occupational Information Network (O*NET). Available: http://www7.nationalacademies.org/cfe/Response%20to%20RJ%20Harvey%20Criticism.pdf [accessed August 2009].

Tsacoumis, S., and Van Iddekinge, C. (2006). A comparison of incumbent and analyst ratings of O*NET skills. Available: http://www.onetcenter.org/reports/SkillsComp.html [accessed August 2009].

U.S. Department of Labor, Employment and Training Administration. (2008). O*NET data collection program, Office of Management and Budget clearance package supporting statement, Volumes I and II. Raleigh, NC: Author. Available: http://www.onetcenter.org/dl_files/omb2008/Supporting_Statement2.pdf [accessed June 2009].

Van Iddekinge, C., Tsacoumis, S., and Donsbach, J. (2003). A preliminary analysis of occupational task statements from the O*NET data collection program. Raleigh, NC: National Center for O*NET Development. Available: http://www.onetcenter.org/dl_files/TaskAnalysis.pdf [accessed July 2009].

Willis, G. (2005). Cognitive interviewing: A tool for improving questionnaire design. Newbury Park, CA: Sage.

Willison, S., and Tsacoumis, S. (2009). O*NET analyst occupational abilities ratings: Analysis cycle 9 results. Available: http://www.onetcenter.org/reports/Wave9.html [accessed August 2009].