3

Systems and Software Engineering in Defense Information Technology Acquisition Programs

THE EVOLUTION OF DEPARTMENT OF DEFENSE POLICY AND PRACTICE FOR SOFTWARE DEVELOPMENT

Beyond the sometimes burdensome nature of the oversight process described earlier in this report and the inordinate amount of time that it can take an information technology (IT) program to reach initial operating capability (IOC), there are other fundamental issues afflicting many Department of Defense (DOD) IT programs. Many programs fail to meet end-user expectations even after they finally achieve IOC. This is, of course, not a phenomenon unique to DOD IT programs, but it is certainly exacerbated by the long cycle times associated with DOD acquisition, especially for programs that exceed the dollar threshold above which department-level oversight is required. Such programs are designated as major automated information system (MAIS) programs in the case of IT and as major defense acquisition programs (MDAPs) in the case of weapons systems.

In the DOD, a significant structural factor behind this failure to meet end-user expectations is the influence, over many decades, of what is characterized as the waterfall software development life cycle (SDLC) model. That influence has endured despite a body of work critical of the waterfall mentality, such as the Defense Science Board reports cited below, and despite the issuance of directives identifying alternatives to the waterfall model as the preferred approach. The waterfall model discussed below remains at least implicit in the oversight structure and processes that govern IT acquisition programs in the DOD today.

The waterfall process model for software development has its origins in a 1970 paper by Winston Royce.1 The term waterfall refers to a sequential software development process in which a project flows downward through a series of phases: conception, initiation, analysis, design, construction, testing, and maintenance. Ironically, although the model is attributed to him, Royce was not advocating it in his original paper. He cited the waterfall model as a commonly used but flawed development approach and instead advocated a “do-it-twice” iterative process, with formal customer involvement at multiple points, as a means of mitigating risk in large software development projects.

A paper by Reed Sorenson outlines the evolution of DOD SDLC models in the subsequent decades.2 The early years of that evolution were dominated by military standards such as Military Standard 490 on specification practices and DOD Standard (STD) 1679A on software development. Although DOD-STD-1679A was focused on software development, its origins in hardware and weapons systems development are clearly evident, and it reflects an era in which the waterfall SDLC model predominated.

The evolution continued through DOD-STD-2167 and 2167A in the late 1980s, driven in part by strong criticism of the waterfall model and a growing appreciation for a model not so heavily influenced by hardware and weapons systems thinking. Brooks’s seminal paper “No Silver Bullet—Essence and Accidents of Software Engineering,” published in 1987, was among the first to criticize the notion integral to the waterfall model—specifically, that one can fully specify software systems in advance:

Much of present-day software-acquisition procedure rests upon the assumption that one can specify a satisfactory system in advance, get bids for its construction, have it built, and install it. I think this assumption is fundamentally wrong, and that many software-acquisition problems spring from that fallacy.3

A 1987 Defense Science Board study chaired by Brooks was equally critical of the waterfall mentality contained in the then-in-force DOD-STD-2167 and the then-draft DOD-STD-2167A:

1 Winston Royce, “Managing the Development of Large Software Systems,” pp. 1-9 in Proceedings of the IEEE Westcon, IEEE, Washington, D.C., 1970.

2 Reed Sorenson, “Software Standards: Their Evolution and Current State,” Crosstalk 12:21-25, December 1999. Available at http://www.stsc.hill.af.mil/crosstalk/frames.asp?uri=1999/12/sorensen.asp; accessed December 12, 2009.

3 F.P. Brooks, Jr., “No Silver Bullet—Essence and Accidents of Software Engineering,” Computer 20(4):10-19, April 1987.

DOD Directive 5000.29 and STD 2167 codify the best 1975 thinking about software including a so-called “waterfall” model calling for formal specification, then request for bids, then contracting, delivery, installation and maintenance. In the decade since the waterfall model was developed, our discipline has come to recognize that setting the requirements is the most difficult and crucial part of the software development process, and one that requires iteration between designers and users.4

In 1985 Barry Boehm first published his “spiral model” for software development,5 motivated in part by this same fundamental issue: the ineffectiveness of the traditional waterfall software development process model. In its 1994 and 2000 reports,6,7 the Defense Science Board continued to argue for the abandonment of the waterfall model, the adoption of the spiral model, and the use of iterative development with frequent end-user involvement.

In 2000, DOD Instruction (DODI) 5000.2 was revised. For the first time, the acquisition policy directives identified evolutionary acquisition as the preferred approach for acquisition. In 2002 the Under Secretary of Defense for Acquisition, Technology and Logistics issued a memorandum clarifying the policy on evolutionary acquisition and spiral development and setting forth a model based on multiple delivered increments and multiple spiral cycles within each delivered increment.

The current version of DODI 5000 retains the policy statement that evolutionary acquisition is the preferred approach, and it provides the governance and oversight model for evolutionary development cycles. However, the 5000 series regulations remain dominated by a hardware and weapons systems mentality. For example, the very name of the engineering and manufacturing development phase reflects the hardware and manufacturing focus of the process. In the evolutionary acquisition governance model, each increment repeats every one of the decision milestones A, B, and C and also repeats every program phase.

4 F.P. Brooks, Jr., V. Basili, B. Boehm, E. Bond, N. Eastman, D.L. Evans, A.K. Jones, M. Shaw, and C.A. Zraket, Report of the Defense Science Board Task Force on Military Software, Department of Defense, Washington, D.C., September 1987.

5 Barry Boehm, “A Spiral Model of Software Development and Enhancement,” Proceedings of the International Workshop on Software Processes and Software Environments, ACM Press, 1985; also in ACM Software Engineering Notes 15(5):22-42, August 1986; and IEEE Computer 21(5):61-72, May 1988.

6 Department of Defense, Report of the Defense Science Board on Acquiring Defense Software Commercially, June 1994; available at http://www.acq.osd.mil/dsb/reports/commercialdefensesoftware.pdf; accessed December 12, 2009.

7 Department of Defense, Report of the Defense Science Board Task Force on Defense Software, November 2000; available at http://www.acq.osd.mil/dsb/reports/defensesoftware.pdf; accessed December 12, 2009.

Preliminary design reviews (PDRs) and critical design reviews (CDRs), hallmarks of the waterfall SDLC model, are prescribed for every program, with additional formal Milestone Decision Authority (MDA) decision points after each design review. At least four and potentially five formal MDA reviews and decision points occur in every evolutionary cycle. As a result, although the oversight and governance process of DODI 5000 does not forbid the iterative incremental software development model with frequent end-user interaction, it requires heroics on the part of program managers (PMs) and MDAs to apply iterative, incremental development (IID) successfully within the DODI 5000 framework. (A separate question not addressed by this report is whether the current process is well suited for weapons systems.) Moreover, the IID and evolutionary acquisition approaches address different risks (Box 3.1).

Today, many of the DOD’s large IT programs therefore continue to adopt program structures and software development models closely resembling the waterfall model rather than an IID model with frequent end-user interaction. Even those that plan multiple delivered increments typically attempt to compress a waterfall-like model within each increment.

BOX 3.1 Evolutionary Acquisition Versus Iterative, Incremental Development

Because both are incremental development approaches, evolutionary acquisition (EA) and iterative, incremental development (IID) are sometimes confused, even though the motivation for each approach and the nature of the increments used in each are different. The EA approach is motivated by a need for technology maturation: early increments provide end-user capabilities based on mature technology, whereas work on later increments is deferred until the needed technology has matured. In contrast, IID for information technology (IT) systems is based on mature technology; that is, no science and technology development is needed. For many IT systems, important user-interaction requirements cannot be defined in detail up front and need to be determined and verified on the basis of incremental feedback from users. Accurate feedback cannot be provided unless users interact with actual system capabilities. Increments in IID for IT systems are intended to provide the basis for this requirements refinement; experience shows that without such testing in real-world environments, a delivered IT system is unlikely to be useful to its intended users without extensive rework.

The EA approach is particularly useful when the technology required to support a needed capability is not completely mature: capabilities that can be provided on the basis of mature technology are implemented in initial increments, and the technology needed for later increments is matured while work on the initial increments proceeds. An important difference between the two approaches is the timescale for the increments. For IT systems, each increment should deliver usable capability in less than 18 months. DODI 5000.2 suggests that an increment should be produced in fewer than 5 years (for weapons systems).

Largely the same governance and oversight approach is applied to IT programs as that applied to weapons systems programs except for the financial thresholds used to designate an IT program as one meriting department-level oversight (in DOD parlance, a major automated information system). The expenditure levels necessary for an IT system program to be designated as an MAIS and subjected to very “heavyweight” governance and oversight processes are significantly lower than the expenditure levels necessary for a weapons system program to be designated as an MDAP and subjected to this same level of scrutiny.

This heavyweight governance process includes large numbers of stakeholders whose single-issue equities must be satisfied at each decision point or assessment. As discussed in Chapter 1 of this report, this governance and oversight process results in very long time lines that are fundamentally out of alignment with the pace of change in information technology. And although many stakeholders are permitted to express opinions at each assessment or milestone decision point in the governance and oversight process, the voice of the end user is seldom heard in this process.

ITERATIVE, INCREMENTAL DEVELOPMENT

This section begins with a brief history of iterative, incremental development drawn from Craig Larman and Victor Basili and from Alan MacCormack.8 It is provided as a way to situate agile and related approaches within a broader context and also to demonstrate that IID has a long history of being applied successfully for different types and scales of problems. The section continues with a discussion of various agile software methodologies and their core concepts for how to better and more efficiently develop and acquire IT systems that meet end-user needs.

Emerging from a proposal by Walter Shewhart at Bell Labs, IID began as a quality-control exercise, encapsulated in the phrase plan-do-study-act (PDSA). PDSA was heavily promoted in the 1940s by W. Edwards Deming and was explored for software development by Tom Gilb, who developed the evolutionary project management (Evo) process in the 1960s, and by Richard Zultner. The first project to actively use an IID process successfully was the 1950s X-15 hypersonic jet. IID was later used to develop software for NASA’s Project Mercury. The Project Mercury software was developed through time-boxed,9 half-day iterations, with planning and written tests before each micro-increment.

In 1970, Royce’s article on developing large software systems, later widely cited as the source of the strictly sequential waterfall model, was published.10 Royce actually recommended that the phases he articulated (analysis, design, and development) be done twice. In addition to repetition (or iteration), Royce suggested that projects with longer development time frames begin with a shorter pilot model to examine distinctive and unknown factors. Although not classically IID, Royce’s proposed model was not as limited as the waterfall model would become.

Drawing on ideas introduced in Project Mercury, the IBM Federal Systems Division (FSD) implemented IID in its 1970s-era software projects and enjoyed success in developing large, critical systems for the Department of Defense. The command-and-control system for the U.S. Trident submarine, with more than 1 million lines of code, was the first highly visible application with a documented IID process. The project manager, Don O’Neill, was later awarded the IBM Outstanding Contribution Award for his work in IID (called integration engineering by IBM). IBM continued its success with IID in the mid-1970s, developing the Light Airborne Multi-Purpose System (LAMPS), part of the Navy’s helicopter-to-ship weapons system. LAMPS is notable for its use of approximately 1-month iterations, similar to the 1- to 6-week iterations of currently popular IID methods. LAMPS was ultimately delivered in 45 iterations, on time and under budget.

IBM’s FSD also had success in developing the primary avionics software for NASA’s space shuttle. Owing to shifting needs during the software development process, engineers had to abandon the waterfall model for an IID approach, using a series of 17 iterations over 31 months.

TRW, Inc., also used the IID approach for government contracts in the 1970s. One noteworthy project was the $100 million Army Site Defense software project for ballistic-missile defense. With five longer cycles, the project was not specifically time-boxed and had significant up-front specification work; however, the project was shifted at each iteration to respond to customer feedback. TRW was also home to Barry Boehm, who in the 1980s developed the spiral model described earlier in this chapter.

The System Development Corporation also adopted IID practices while building an air defense system. Originally expected to fit into the DOD’s waterfall standard, the project was built with significant up-front specifications followed by incremental builds. In a paper published in 1984, Carolyn Wong criticized the waterfall process: “Software development is a complex, continuous, iterative, and repetitive process. The [waterfall model] does not reflect this complexity.”11

The early 1980s saw numerous publications extolling the virtues of IID and criticizing the waterfall approach. In 1982, a $100 million military command-and-control project, based on IBM’s Customer Information Control System technology, was built using an IID approach without time-boxed iterations, called evolutionary prototyping. Evolutionary prototyping was often used in the 1980s in active research on artificial intelligence systems, expert systems, and Lisp machines. During the mid-1980s, Tom Gilb was active in supporting a more stringent IID approach that recommended short delivery times, and in 1985 Barry Boehm’s text describing the spiral model was published. In 1987 TRW began building the Command Center Processing and Display System Replacement. Using an IID process that would later become the Rational Unified Process, the 4-year project involved six time-boxed iterations averaging 6 months each. In the 1990s, developers shifted away from the heavy up-front specification still used in the IID approaches of the 1970s and 1980s. In 1995 at the International Conference on Software Engineering, Frederick Brooks delivered a keynote address titled “The Waterfall Model Is Wrong!” to an audience that included many people working on defense software projects.

After near failure using a waterfall method, the next-generation Canadian Automated Air Traffic Control System was successfully built using a risk-driven IID process. Similarly, a large logistics system in Singapore was faltering under the waterfall process; Jeff de Luca revived the project under IID and created the Feature-Driven Development (FDD) process. According to a 1998 report from the Standish Group, the use of the waterfall approach was a top reason for project failure in the 23,000 projects that the group reviewed.12

Commercially, Easel Corporation began developing under an IID process that would become Scrum, an agile software development framework. Easel used 30-day time-boxed iterations based on an approach used for nonsoftware products at major Japanese corporations. Institutionalizing its own IID process, Microsoft Corporation introduced 1-day iterations. Beginning in 1995, Microsoft, driven to garner a greater share of the browser market from Netscape Communications Corporation, developed Internet Explorer using an iterative, component-driven process. After integrating component modules into a working system with only 30 percent of its functionality, Microsoft determined that it could get initial feedback from development partners. After the alpha version of Internet Explorer was released, code changes were integrated daily into a complete product. Feedback on these changes was available in less than 3 hours, so adjustments could be made and new functionality added quickly. With only 50 to 70 percent of its functionality in place, a beta version was released to the public, allowing customers to influence the design while developers could still make changes.

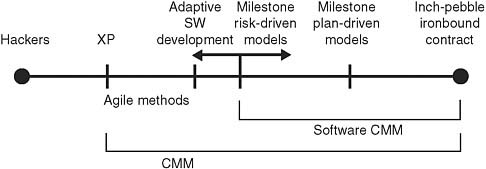

Agile software development (ASD) methodologies, a form of IID, have been gaining acceptance among mainstream software developers since the late 1990s. ASD is geared toward the rapid development of systems whose requirements are highly volatile. In addition, ASD practices emphasize delivering incremental working functionality in short release cycles. “The Manifesto for Agile Software Development” (Box 3.2) presents one group’s view of ASD’s strengths. Figure 3.1 shows Boehm’s view of how ASD fits in the spectrum of development processes; the processes used by the DOD for IT acquisition have traditionally fallen on the right-hand side of that figure.

Today, ASD methodology is established to varying degrees in the academic, educational, and professional software development communities. Particular instantiations include the Scrum, Crystal, and Extreme Programming (XP) approaches. In 2001 the first text on the subject was published under the title Agile Software Development, by Alistair Cockburn.13

Several commercial IT companies are moving toward developing software using ASD processes. For example, a recent randomized survey of 10 percent of the engineers at Microsoft found that around one-third of the respondents use ASD. That survey also found that Scrum is the most popular ASD methodology, that ASD is a relatively new phenomenon to Microsoft, that most projects have employed ASD techniques for fewer than 2 years, and that ASD is used mostly by co-located teams who work on the same floor of the same building.

A fair amount of research has been conducted on teams adopting ASD processes.14,15 From the academic research perspective, Laurie Williams and her research group16 have conducted empirical evaluations of companies adopting XP. This work is summarized in Table 3.1, which highlights the results of four case studies performed on small to medium-size systems. Across all systems, one sees a uniform improvement in post-release quality, which is the most important quality metric, along with general trends toward improved programmer productivity, customer satisfaction, and team morale.

13 A. Cockburn, Agile Software Development, Addison-Wesley, Boston, Mass., 2001.

14 See http://www.controlchaos.com/resources/ for Scrum case studies and several examples of waterfall teams moving to Scrum; accessed November 2, 2009.

15 See http://agile2009.agilealliance.org/; accessed November 2, 2009. The Agile conference series has various experience reports on teams adopting Agile. Agile 2006 and Agile 2007 sold out, with more than 1,100 (predominantly industrial) attendees.

BOX 3.2 Manifesto for Agile Software Development

We are uncovering better ways of developing software by doing it and helping others do it. Through this work we have come to value:

Individuals and interactions over processes and tools

Working software over comprehensive documentation

Customer collaboration over contract negotiation

Responding to change over following a plan

That is, while there is value in the items on the right, we value the items on the left more.

NOTE: The Web site on which this manifesto appears contains the following notice: “© 2001, the above authors. This declaration may be freely copied in any form, but only in its entirety through this notice.”
SOURCE: Available at http://www.agilemanifesto.org/.

FIGURE 3.1 The planning spectrum. Unplanned and undisciplined hacking occupies the extreme left, while micromanaged milestone planning, also known as inch-pebble planning, occupies the extreme right. SOURCE: B. Boehm, “Get Ready for Agile Methods, with Care,” Computer 35(1):64-69, January 2002.

Agile processes are fundamentally different from the practices of traditional development and represent much more than a mere time-compression of the waterfall development process. Common ASD practices drawn from XP and FDD, for example, include test-driven development, continuous integration, collective code ownership, and small releases.

As an example, consider one of the more popular ASD processes, Scrum. Work is structured in cycles called sprints, which typically last 2 to 4 weeks. During each sprint, teams pull work from a prioritized list of customer requirements, often expressed as user stories, so that the features developed first are those of highest value to the customer. User stories are a good way for users (and other stakeholders) to express desired functionality. At the end of each sprint, a potentially shippable product is delivered.17
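To make the pull-from-priority idea concrete, here is a minimal Python sketch of sprint planning under simplifying assumptions: each user story carries a hypothetical numeric customer value and a story-point effort estimate, and the team pulls stories in strict value order until its assumed capacity for the sprint is reached. The `UserStory` and `plan_sprint` names and the sample stories are illustrative only; Scrum itself does not prescribe this particular selection rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserStory:
    """Hypothetical backlog item: a user-facing requirement with an
    estimated customer value and an estimated effort in story points."""
    title: str
    value: int   # relative customer value (higher is more valuable)
    points: int  # estimated effort in story points


def plan_sprint(backlog: list[UserStory], capacity: int) -> list[UserStory]:
    """Pull stories from a value-ordered backlog until the next story
    would exceed the team's (assumed) capacity for this sprint."""
    ordered = sorted(backlog, key=lambda story: story.value, reverse=True)
    sprint: list[UserStory] = []
    used = 0
    for story in ordered:
        if used + story.points > capacity:
            break  # keep strict priority order: never skip ahead
        sprint.append(story)
        used += story.points
    return sprint


# Usage with illustrative stories
backlog = [
    UserStory("Submit readiness report", value=90, points=5),
    UserStory("Export report to PDF", value=40, points=3),
    UserStory("View unit status dashboard", value=80, points=8),
    UserStory("Customize color theme", value=10, points=2),
]
sprint = plan_sprint(backlog, capacity=15)
print([story.title for story in sprint])
# → ['Submit readiness report', 'View unit status dashboard']
```

Stopping at the first story that does not fit keeps the sprint strictly in priority order; a team could instead skip oversized stories to fill remaining capacity, at the cost of occasionally building lower-value features first.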

Scrum essentially entails building a system in small, shippable-product increments. The shorter release cycles allow teams to obtain early feedback from relevant stakeholders on various aspects of the system, such as usage profile, quality, dependencies, integration, and stress loads. This information can then be used to guide corrective action affordably during development. The development team decides the deliverable for each sprint with input from the end user. This allows the end user to understand the quality of the system being delivered and to make changes to the requirements as the project proceeds. Within each sprint, engineers continue to use tested and proven development practices and development tools.

17 See the Scrum Alliance homepage at http://www.scrumalliance.org.

TABLE 3.1 Results of Using Extreme Programming (XP) in Industrial Software Systems: Summary of Four Case Studies

Other ASD processes, such as XP, prescribe in detail particular practices to be used and afford little flexibility, which might present a challenge to their application in the DOD context. More generally, care should be taken when adopting some agile development processes. Some advocate, for example, not documenting code separately (relying instead on so-called self-documenting code) or doing no initial architecture work (as in XP). These practices would likely be catastrophic for large systems that are expected to endure. Moreover, even if specific prescriptions are adapted to the particular context, adopting IID based on ASD methodologies as the default DOD practice would require radical changes to current oversight and management processes.18

As an example of the use of ASD for defense systems, recent work by Crowe and Cloutier19 presents the results of a DOD case study in which a phased approach was used to deploy high-priority capabilities using an incremental agile process. This approach was adopted for the Defense Readiness Reporting System-Army (DRRS-A). By 2006, the U.S. Army Readiness Reporting System was no longer meeting the needs of commanders. Within 9 months, a new system was built that met these needs without the loss of existing capabilities, using an agile approach that integrated all aspects of the software life cycle. The team involved about 60 people from government and several contracting firms and used a development sprint length of 30 days. Besides the importance of communication, coordination, and risk management, key lessons included the need for tight collaboration among the contracting teams, stakeholders, and program offices, and the use of predefined checkpoints to ensure that development met the customers’ needs. The participants viewed all of these as significantly enabled by the agile development process. Table 3.2 lists emergent characteristics of the agile process.

Large software development organizations make use of IID processes in a variety of ways, including for individual logical components of a more complex product set (Box 3.3). There can and will be multiple coding milestones and beta versions in a product release, with features constantly released to end users for feedback. This feedback is then used to improve the quality of the end product iteratively. Within each beta or candidate release, development teams use the normal agile development practices (e.g., sprints) and focus on planning, work allocation, and quality practices such as unit testing and code inspections; each release is equivalent to an individual system release. This approach makes the development process highly transparent and enables project managers to get an early indication of problems in development, slippage in meeting milestones, and quality issues.

The above descriptions are meant to give a flavor of what IID looks like in the context of various agile software methodologies. Although pure agile methods such as Scrum or XP may not be appropriate for DOD software efforts (for example, because of short time lines such as the 30-day sprints advocated in Scrum), the core concepts of these methods are nonetheless applicable to defense IT acquisition. Of particular importance is the idea that the IID cycle must constantly obtain and reflect end-user feedback, especially for software development and commercial off-the-shelf software integration (SDCI) IT programs, so as to acquire systems aligned with end-user expectations and needs. Although the precise IID template for commercial off-the-shelf hardware, software, and services (CHSS) programs is somewhat different, the basic concepts are equally applicable to those programs.

TABLE 3.2 Emergent Characteristics of the Agile Process

| Characteristic | Comments |
|---|---|
| Liberty to be dynamic | Agility needs dynamic processes while adhering to acquisition milestones. |
| Nonlinear, cyclical, and nonsequential | The life-cycle behavior was not like traditional waterfall models or linear frameworks; cycle times decreased. |
| Adaptive | Conform to changes, such as in capability and environment. |
| Simultaneous development of phase components | Rapid fielding time may not lend itself to traditional phase containment (i.e., training and software development proceeding together). |
| Ease of change | Culture shift to support change neutrality; ease of modification built in to architecture and design. |
| Short iterations | Prototyping, demonstrating, and testing can be done in short iterative cycles with a tight user-feedback loop. |
| Lightweight phase attributes | Reduction of heavy process, such as milestone reviews, demonstrations, and risk management. |

SOURCE: Portia Crowe and Robert Cloutier, “Evolutionary Capabilities Developed and Fielded in Nine Months,” CrossTalk 22(4):15-17, May/June 2009.

|

BOX 3.3 Longer-Term Development Processes Can Also Employ Iterative, Incremental Development

For some very large systems, such as the Windows operating system family, the typical development life cycle is long, on the order of 3 years. Nonetheless, agile development processes are used within that span. There is a usable version of the system at any given instant, and new versions with integrated features are produced at regular intervals or milestones. The development process provides for continuous feedback from end users. Each phase has a well-defined release feature set. User feedback drives the planning of each increment and its features, and assessment continues through multiple cycles, culminating in the final release. The process components and steps shown in Figure 3.3.1 are explained as follows:

FIGURE 3.3.1 Development time line for some very large systems.

PLATFORMS AND VIRTUALIZATION: KEY UNDERPINNINGS FOR INFORMATION TECHNOLOGY SYSTEMS

The concepts of platforms and virtualization are key to modern software development and have important implications for DOD IT system acquisition.

A platform20 is an evolving combination of hardware, system software, and applications software on top of which a wide group of individuals and organizations can innovate. Important examples today include Web 2.0 capabilities that enable interactive Web sites, the Windows family of operating systems, and the Intel x86 instruction set (implementations are also available from Advanced Micro Devices).21

Platforms can be defined at a low level in the technology stack (e.g., Ethernet for local area networking) or higher up. The higher up the stack one proceeds in defining a platform, the less commoditized and generic, and the more application-, domain-, and environment-specific, the platform becomes. Specifying a platform at higher layers of the stack for use across a portfolio of related IT programs has the advantage of establishing an architecture with a set of inherent characteristics that all of the capabilities built on top of the platform can then take advantage of. The characteristics that can be established in such a platform architecture include security and information assurance, operational availability, continuity of operations and disaster recovery, scalability, extensibility in provisioning, and extensibility in operations. These are all inherent attributes of an underlying architecture, and there is no reason that every IT program in a portfolio should be required to address them from the ground up. There is a trade-off, of course: the richer the set of services a platform defines, the narrower the set of commercial off-the-shelf (COTS) (or other) offerings that can run on it. Such decisions would need to be made carefully.
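As a rough illustration of the layering argument above, the following Python sketch shows a platform that enforces access control and keeps an audit trail once, so that capabilities registered on it do not each re-implement those concerns. The `Platform` class, its methods, and the sample capability are hypothetical, chosen only to illustrate the idea; they do not model any actual DOD platform or product, and a real platform would address many more characteristics (availability, disaster recovery, scalability, and so on).

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical model: a capability is a function from a request to a response.
Capability = Callable[[str], str]


@dataclass
class Platform:
    """Hypothetical shared platform: provides access control and an audit
    trail once, for every capability built on top of it."""
    authorized_users: set[str]
    audit_log: list[str] = field(default_factory=list, init=False)
    capabilities: dict[str, Capability] = field(default_factory=dict, init=False)

    def register(self, name: str, capability: Capability) -> None:
        """Add a capability; it inherits the platform's security and auditing."""
        self.capabilities[name] = capability

    def invoke(self, user: str, name: str, request: str) -> str:
        """Check authorization and record the call before delegating."""
        if user not in self.authorized_users:
            self.audit_log.append(f"DENY {user} {name}")
            raise PermissionError(f"{user} is not authorized to use {name}")
        self.audit_log.append(f"ALLOW {user} {name}")
        return self.capabilities[name](request)


# Usage: a program team supplies only its domain logic.
platform = Platform(authorized_users={"analyst"})
platform.register("logistics", lambda request: f"supply status for {request}")

print(platform.invoke("analyst", "logistics", "unit A"))
try:
    platform.invoke("visitor", "logistics", "unit A")
except PermissionError as err:
    print(err)
print(platform.audit_log)
```

In this sketch the cross-cutting concerns live in the platform's `invoke` path rather than in each capability. The trade-off noted in the text shows up as well: a capability can run on this platform only if it fits the platform's calling convention.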

Beyond the utility infrastructure layers, which are predominantly CHSS, there are several additional technology stack layers that one can consider incorporating into a platform, including middleware (plus integration code to make it suitable in a particular environment), common applications, and enterprise data repositories.

Middleware appropriate for the application domain constitutes the next logical layer to consider in establishing a platform for use across a portfolio of SDCI IT programs. This layer also includes any additional integration code necessary to make the middleware function in the intended operational domain. It could include, for example, COTS service-oriented architecture middleware plus the domain-specific integration code needed to operate it across bandwidth-limited, widely distributed environments such as those of deployed units, where it might provide reliable messaging or distributed security services. COTS middleware products typically do not accommodate such demanding environments without additional integration effort. Other software layers that can be considered for standardization in a platform include common applications (e.g., database and application servers) and key enterprise information repositories.

At the same time, however, adhering to the standards and design rules of the platform architecture does impose constraints on those building and innovating on the platform. The more specific a standard or design rule becomes as one progresses up the technology stack, the harder problems it creates. It becomes more difficult and time-consuming to gain broad adoption and to evolve the standard to keep pace with relentlessly advancing technology. It also becomes less well suited to new domains. There are also risks from monoculture and from lock-in to a single supplier. These considerations argue for restricting the applicability of a platform to a collection of similar application domains, such as a portfolio of programs. Determining the appropriate granularity at which to use a common platform is important and should take all of these factors into account. Done appropriately in the context of a portfolio of programs, a platform can enable significantly increased efficiency, agility, and speed to capability.

Virtualization refers to the abstraction of computer resources. For example, multiple virtual servers can run on a single physical server, and a virtual private network creates a secure network link on top of an underlying physical network. Virtualization offers a number of benefits. It enables a portfolio of programs to deliver capability independent of hardware deployment and therefore increases efficiency, agility, and speed to capability. These increases provide ample reason for separating the COTS hardware components of an IT program from the software development and COTS software integration elements of an IT program. This is true both for infrastructure-based capabilities that have ample bandwidth to permit the use of centralized computing centers, and for deployed capabilities where bandwidth limitations often dictate a deployed version of a virtualized computing, storage, and network utility infrastructure.

Virtual platforms are of particular value in a deployed environment. There, the resulting efficiencies can lower the lift capacity required to deploy a combat unit. They can also lower the space, weight, power, and cooling required in a ship or aircraft installation. Virtual platforms also have the potential to lower the sustainment costs inherent in program-specific approaches to logistics support. Finally, virtual platforms allow SDCI IT programs to focus exclusively on deployment decisions involving their own systems, delivered as machine images. Those programs then do not have to address production decisions involving the installation of environmentally qualified COTS-based hardware.
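A small calculation can make the consolidation argument concrete. The following is a minimal sketch, not drawn from the report. It packs hypothetical per-program workloads onto shared virtualized hosts using the first-fit decreasing bin-packing heuristic. It then compares the resulting host count with a layout that gives each program its own server. The workload figures, the host capacity, and the function name `consolidate` are illustrative assumptions.

```python
from typing import List


def consolidate(loads: List[int], host_capacity: int) -> List[List[int]]:
    """Pack workloads onto hosts with the first-fit decreasing heuristic.

    Loads and capacity share one hypothetical unit (here, percent of a host).
    Returns one list of placed loads per host.
    """
    if any(load > host_capacity for load in loads):
        raise ValueError("a single workload exceeds host capacity")
    hosts: List[List[int]] = []
    free: List[int] = []  # remaining capacity on each host
    for load in sorted(loads, reverse=True):  # place largest workloads first
        for i, room in enumerate(free):
            if load <= room:  # first existing host with enough room
                hosts[i].append(load)
                free[i] -= load
                break
        else:  # no host had room, so bring another one into the pool
            hosts.append([load])
            free.append(host_capacity - load)
    return hosts


if __name__ == "__main__":
    # Hypothetical peak utilization of eight program-specific servers,
    # each expressed as a percent of one shared host's capacity.
    program_loads = [30, 25, 20, 20, 15, 10, 10, 5]
    shared = consolidate(program_loads, host_capacity=100)
    print(f"dedicated servers: {len(program_loads)}, shared hosts: {len(shared)}")
```

With these assumed numbers the sketch prints `dedicated servers: 8, shared hosts: 2`. First-fit decreasing is a heuristic and is not guaranteed to be optimal. Realistic planning would also weigh memory, I/O, and spare capacity for continuity of operations.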

A RECOMMENDED ACQUISITION MANAGEMENT APPROACH FOR INFORMATION TECHNOLOGY PROGRAMS

Chapters 1 and 2 of this report highlighted the difficulty of applying a governance and oversight regimen based on hardware and weapons system development to IT programs. Advocates of the current governance and oversight structure sometimes assert that it permits tailoring. They also assert that it provides all the flexibility an MDA or a PM needs to adjust the way the process is applied to specific programs. Even if these advocates are right, the rapid and effective acquisition of IT systems is centrally important. Given that, there is no reason that each MDA and each PM should be required to define de novo a development and oversight process attuned to an IT program. Rather, as already argued in Chapter 2, a more effective approach is to establish a separate and distinct program governance and oversight regimen for IT programs. That regimen should leverage the significant body of available research and more than 20 years' worth of past recommendations. It should also conform to widely adopted commercial best practices for development.

IT programs can be defined to focus primarily on the acquisition of developmental software, COTS software integration, COTS hardware, COTS software, commercially available services, or combinations of these. There are fundamentally different classes of issues involved in each of these cases. This examination focuses on the two categories of IT programs identified in the introductory chapter of this report:

- SDCI programs: those focused on the development of new software to provide new functionality or on the development of software to integrate COTS components, and
- CHSS programs: those focused exclusively on COTS hardware, software, or services without modification for DOD purposes (i.e., the capabilities being purchased are determined solely by the marketplace and not by the DOD).

The hardware components of information technology programs are most heavily influenced by Moore’s law, which predicts the doubling of capacity per unit expenditure every 18 months. The resulting advance of networking, computing, and storage capacity in COTS hardware led the DOD to move away from purpose-built military hardware beginning in the 1980s. It embraced COTS hardware for IT programs instead, even for tactical systems. For example, in the Navy, the Desktop Tactical Computer I (DTC I; a Hewlett-Packard 9020) was procured beginning in 1984 for various programs. These included the Joint Operational and Tactical System I, the Integrated Carrier Antisubmarine Warfare Protection System, the Submarine Force Mission Planning Library, and numerous other small programs. The DTC I was followed in 1989 by the DTC II, based on the Sun Series 4/110 (later the Series 4/300). The DTC II was also ruggedized for shipboard use to survive strenuous environmental conditions, including shock and vibration. This change took place against the backdrop of commercial technology migrating from a data-center-based computing environment to a highly networked, client-server environment with substantial computational power available at every desk.

The trend toward COTS was further reinforced in 1994. That year, Secretary of Defense William Perry issued a memorandum prohibiting the use of most military standards without waivers and encouraging in their place the use of performance specifications and industry standards.22 In this commercial market environment, dominated by Moore’s law and a high rate of technology change, hardware obsolescence and supportability have become issues that IT programs must manage.

In contrast to the hardware components, the software components of IT programs are most heavily influenced by the fast pace of technology change in the Internet environment and by the fundamental difficulty in defining requirements23 for many classes of software systems, especially (but not limited to) human-interactive software systems. In the commercial Internet environment that defines the experience base of most young men and women entering the military today, new software capabilities are introduced on a regular and routine basis, often with significant end-user involvement and feedback through early alpha and beta release programs.

Fundamentally different classes of issues are involved when dealing with hardware versus software components of IT programs. Therefore, different strategies are appropriate when addressing the different components. In both cases, rapid change is a fundamental factor that must be addressed, and IID acquisition strategies are indeed appropriate. However, the nature of the capability increments should differ for hardware and software components owing to the different issues driving them.

Although some DOD IT programs are defined as exclusively COTS hardware acquisition programs (e.g., the acquisition of networking, computing, or storage infrastructure) or exclusively software development acquisition programs, others are defined to provide both software-based capabilities and the physical hardware plant on which the software will run. This has been particularly true for IT programs defined to provide deployable capability such as command, control, communications, computers, intelligence, surveillance, and reconnaissance (C4ISR) or combat support programs for deployed land, air, and maritime force elements. During the early days of COTS hardware adoption by the DOD, the capacity of available hardware and the nature of the software environment dictated that many systems be single-purpose.

Several key factors have changed significantly since those early days, however. The capacity available in commodity COTS hardware has grown exponentially, following Moore’s law. Software is becoming increasingly independent of hardware in many DOD IT programs. At the same time, operations and sustainment costs have grown along with the complexity of IT systems. In addition, virtualization technology has matured. This has created a compelling business case for virtualized computing, storage, and network infrastructure utility models in many enterprise environments. The existence of such infrastructure can decouple the time cycles of SDCI capability increments from the need to deploy or refresh hardware, allowing SDCI IT programs or portfolios of programs to deploy capability to end users with significantly increased speed and agility. This is particularly true for server applications. With regard to client-side components, the use of a standardized technology platform on top of a virtualized computing, storage, and network infrastructure can further increase speed and agility.

For all of these reasons, IT programs, even those intended to provide deployed or deployable capability, should be defined as exclusively SDCI IT programs or as exclusively CHSS IT systems programs. Absent a compelling case to the contrary, the CHSS components of IT systems and SDCI software elements of IT systems should be acquired through independent IID acquisition programs with the nature of the capability increments structured to address the different issues associated with hardware and software components. Moreover, platform-based virtualized computing, storage, and networking utility models should be favored wherever possible.

The following subsections offer a modified acquisition management approach recommended by the committee for SDCI IT programs and for CHSS IT programs. They also further define the use of a platform- and virtualization-based approach and show how this approach can be visualized and managed as a combination of these two categories of programs.

Proposed Acquisition Management for SDCI Programs

IT programs that are focusing on SDCI efforts are most heavily influenced by elevated end-user expectations that are based on the pace of technological change experienced by most users every day in the Internet environment, and by the fundamental difficulty in defining requirements for many classes of software systems, especially human-interactive software systems. SDCI IT programs must therefore focus not only on the functional and nonfunctional capabilities that they are chartered to provide, but also on employing IID practices for software development and program management practices that represent the best practices in software engineering.

As discussed earlier in this chapter, the roots of IID software development methods can be traced back many years. Nevertheless, the influence of the waterfall model persists to this day in the current DOD governance and oversight process. Curtis and co-authors24 identified one possible reason for this persistence. Major elements of the document-intensive waterfall method attempt to satisfy management’s goals for accountability, even though many of these elements do not contribute to the successful execution of IT projects. This type of effort places increasing focus on the acquisition process at the expense of focus on the product. To break this hammerlock on software-intensive IT programs, several aspects of the program structure and the governance and oversight regimen would need to be changed through the adoption of the following core principles:

- Emphasis on shorter cycle times to deliver the best IT to the warfighter
  - Time-boxed incremental deliveries of usable capabilities (also known as capability increments).
  - Time-boxed iterations within each capability increment.
  - Early focus on nonfunctional requirements and an architecture suited for the intended operating environment.
- Streamlined processes for requirements definition, budgeting, operational testing, and oversight
  - Focus on “big-R” requirements25 during early planning.
  - Performance of integrated testing and evaluation commensurate with risk and benefit.
- The employment of IID methods for development, contracting, and testing
  - In particular, the voice of the user as a prominent factor throughout each iteration within each capability increment.
  - An acquisition governance process that empowers end users in the acquisition oversight decision processes.
- The decomposition of larger programs into smaller projects or increments that are delivered to the user in an evolutionary manner
  - Deployment decisions driven by risk and benefit.
  - Incremental build-out of the architecture in scope and scale sufficient to meet the needs of the functional requirements of each capability increment.
  - Long-term stable funding across multiple capability increments.

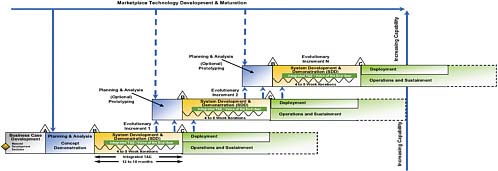

To fully internalize these core principles, SDCI programs should be structured as IID programs with time-boxed capability increments of no longer than 12 to 18 months to deliver meaningful capability to end users. The recommended acquisition management approach for SDCI IT systems programs is shown in Figure 3.2. IID software methods acknowledge that document-centric attempts to fully specify requirements in advance are difficult. They replace such attempts with an iterative, incremental learning and communications process between developers and end users. The capability increments are time-boxed and further broken down into a sequence of time-boxed iterations. Each iteration results in integrated and tested products. At the completion of each iteration, the voice of the end user further refines and reprioritizes the more detailed “small-r” requirements.

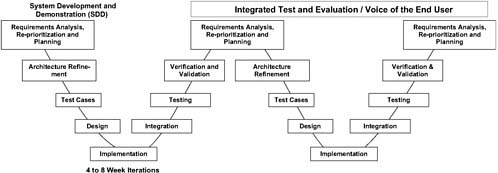

Each iteration will include analysis, design, development, integration, and testing to produce a progressively more defined and capable, fully integrated and tested product. This process is shown in Figure 3.3 as an adaptation of the traditional systems engineering “vee-diagram.” Each iteration would take on a subset of the overall problem of building the desired capability for the increment. It would perform the full set of tasks, including requirements analysis and refinement, architecture formulation or refinement, design formulation or refinement, implementation, integration, and testing. Early increments would naturally spend more time in the front-end processes of architecture and design, while later increments would spend more time in the back-end processes of integration and testing. The integration and testing steps in each iteration are essential. They force a direct understanding of the progress (or lack of progress) actually being made at frequent, well-defined checkpoints.

From one perspective this approach can be regarded as a traditional application of systems engineering processes, but there are several important differences. As just mentioned, testing plays a key role not only in terms of how often and when it is conducted, but also in how it is considered early in each iteration and the manner in which the “voice of the end user” is integrated into the process. Many regard some forms of IID as a test-driven process, since testing is addressed and tests are defined even before design and implementation. Further, when testing is actually conducted, the voice of the end user is integrated directly into the process, with direct effects on the further refinement of requirements and on the planning, and potentially the reprioritization, of subsequent iterations. This sequence of events reflects both a learning cycle and a communications cycle in each iteration.

With regard to integrated test and evaluation (T&E), operational end users are, of course, not the only important stakeholders. Other stakeholders should also be involved in the process, including developmental and operational test stakeholders, security certification and accreditation stakeholders, and interoperability stakeholders. They must all actively participate in testing and a nontraditional form of verification and validation (V&V)—not the traditional version of V&V against a fixed, predefined set of requirements, but rather the learning and communications process of IID that acknowledges the impossibility of complete up-front specification and the need to refine requirements as learning occurs. For such an approach to succeed and materially change the dynamics of the current time-consuming and unsatisfying process, all of the stakeholders that warrant a voice in the ultimate fielding decision should be integrated into this voice-of-the-end-user learning and communications cycle. A case can certainly be made that the operational end user is the most significant among the various entities that have an interest in the outcome and that the voice of end users should therefore be louder than that of the others. Chapter 4 presents more detail on testing and acceptance and on the formulation of an acceptance team that can serve as and also channel the voice of the end user.

The content of early iterations should combine two things. One is the most technically challenging elements of the capability increment. The other is the functional capabilities with the greatest business or warfighting value. The voice of the end user should provide feedback on each iteration for refining and prioritizing requirements, in order to institutionalize the learning and communications process vital to IID. The voice of the end user is to play a prominent role in refining and prioritizing requirements at each iteration within each capability increment. The requirements allocated to each increment should therefore shift from the current focus on detailed functional requirements to a greater focus on objectives, or big-R requirements. This implies a profound change to the Joint Capabilities Integration and Development System process, for example, which is currently biased toward the document-centric, up-front detailed specification of functional requirements.
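One way to picture how a time-boxed iteration might be filled under these priorities is a small planning sketch. It illustrates the idea only; the report does not prescribe any scoring formula. Backlog items, standing in for small-r requirements, are ranked by a combined score of value and technical risk. They are then selected greedily until the iteration's fixed capacity is used. User feedback after an iteration re-rates item values before the next plan is made. The `Item` fields, the 1-to-5 rating scales, the value-plus-risk rule, the effort units, and the example backlog are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Item:
    """A hypothetical small-r requirement in an iteration backlog."""
    name: str
    value: int   # assumed 1-5 rating of business or warfighting value
    risk: int    # assumed 1-5 rating of technical challenge
    effort: int  # assumed effort units consumed within the time box


def plan_iteration(backlog: List[Item], capacity: int) -> List[Item]:
    """Fill one time-boxed iteration: the time box (capacity) is fixed, scope flexes.

    Items are ranked by value + risk (ties broken by value), then taken greedily;
    an item that does not fit is skipped so smaller items can still use the time box.
    """
    ranked = sorted(backlog, key=lambda it: (it.value + it.risk, it.value), reverse=True)
    chosen: List[Item] = []
    used = 0
    for item in ranked:
        if used + item.effort <= capacity:
            chosen.append(item)
            used += item.effort
    return chosen


def apply_feedback(backlog: List[Item], new_values: Dict[str, int]) -> None:
    """Record end-user feedback from an iteration review by re-rating item value."""
    for item in backlog:
        if item.name in new_values:
            item.value = new_values[item.name]


if __name__ == "__main__":
    backlog = [
        Item("low-bandwidth sync", value=4, risk=5, effort=5),
        Item("map overlay", value=5, risk=2, effort=3),
        Item("report export", value=2, risk=1, effort=2),
        Item("role-based access", value=3, risk=4, effort=7),
    ]
    first = plan_iteration(backlog, capacity=8)
    done = {item.name for item in first}
    remaining = [item for item in backlog if item.name not in done]
    # Hypothetical review: users rate export higher and access lower than assumed.
    apply_feedback(remaining, {"report export": 5, "role-based access": 1})
    second = plan_iteration(remaining, capacity=8)
    print([i.name for i in first], [i.name for i in second])
```

In this run the first iteration takes the riskiest item and the highest-value item. Without feedback, the next iteration would take the access-control item. After users re-rate the two remaining items, the same fixed time box pulls in the export feature instead. The time box stays constant, and the scope is what the voice of the end user reshapes.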

Nonfunctional requirements for software-intensive IT systems include security and information assurance, operational availability, scalability, and performance. These are fundamental attributes of system architecture, especially in distributed systems, and the appropriate stakeholders need to communicate them clearly. Similarly, the operational environment in which such a system must function can profoundly affect the system architecture. For SDCI programs of any significant scope and scale, system architecture is likely to represent one of the most significant risks. It should be addressed early in the program, even during the concept development phase before the development of capability increments begins. Here again, however, caution and pragmatism must be exercised to resist the demand that all requirements be fully documented up front.

Although these nonfunctional and environmental requirements will have a profound effect on the system architecture, building out the system architecture is itself a learning and communications process that is best accomplished in an IID fashion. Similarly, although one can perhaps understand the top-level nonfunctional requirements very well up front, their refinement into lower-level requirements that drive design and implementation is also best addressed in an IID fashion. At the same time, this should not be construed to suggest that big-R requirements will not change. Feedback on those is also important and should be accommodated.26

From the outset, any SDCI program must have a clear vision of an end-state target architecture. That architecture must be appropriate to support both the full scope of the intended capability and the full scale of the intended deployment. It is impractical, and in most cases impossible, to implement or even to fully prove the full scope and scale of the architecture early on. That holds both for the early pre-Milestone B concept development phase and for early capability increments or iterations. The architecture, like the other aspects of the software in an IID approach, should be built up in scope to support the planned functionality of each capability increment. It should also be built up in scale to support the planned deployment scope of each capability increment. Any attempt to force more up-front architecture proof or development will substantially delay a program’s ability to deploy useful capability to end users.

Since the voice of the end user can adapt priorities across iterations within each capability increment, the learning that takes place through this process can affect the ability to achieve all of the objectives originally targeted for the increment. Further, T&E in IID is continuous and integral to the learning and communications process. It will therefore generate substantial T&E results as the iterations progress within a capability increment. An integrated approach to T&E is thus a fundamental element of this modified acquisition management approach for IT programs. That approach should encompass the voice of the end user; traditional developmental testing and evaluation; operational testing and evaluation; interoperability certification; and information assurance certification and accreditation equities. As was the case with the requirements process, this implies a profound change in the T&E process used for such programs. The deployment decision (Milestone C) comes at the conclusion of each time-boxed capability increment. Its focus shifts away from evaluating whether the predefined scope allocated to the capability increment was completed. It focuses instead on the risks and benefits of deployment, combined with a reevaluation of the priorities for the objectives of the subsequent capability increment (Milestone B). This integrated approach to test and evaluation is discussed in depth in Chapter 4.

The governance and oversight model for such an IID program must change substantially from the current structure to accommodate this approach. The vast majority of SDCI IT system programs use technology developed and matured in the commercial marketplace. While these programs do develop software, they do not develop fundamental technology. The early phase of the program structure should therefore not focus on technology development, technology readiness levels (TRLs), and technology readiness assessments (TRAs). It should instead use prototyping to demonstrate several things: key concepts that the system is intended to address, key nonfunctional requirements, high-business-or-warfighting-value functional requirements, and an ability to function properly in the intended operational environment for the system, including constrained communications and networking environments. Once these issues are resolved, there is no need to repeat this redefined concept development phase for each capability increment. All that is required for successive capability increments after the first is a much-abbreviated planning and analysis phase to reevaluate the plan for the next increment, given the learning that took place and the results actually achieved in the previous increment.

From a program oversight and governance perspective in the current DODI 5000 approach, there can be multiple oversight bodies, and there is a large number of participants in the program oversight and review process. Each of the oversight groups can require program changes, and, often, each individual representative of a Service or other group has the ability to force changes to a project or require special accommodations or requirements at a very detailed level, often without any justification of the impacts on cost or schedule caused by the changes. This has negative effects: (1) there are too many requirements; (2) the program is not able to effectively prioritize requirements; and (3) the requirements can in fact be contradictory or extremely difficult to implement.

The responsibility, authority, and accountability for program execution all need to be clarified and strengthened. Program authority and accountability are diluted by the scope and complexity of the programs and the resulting program structures. Further, the January 2006 report of the Defense Acquisition Performance Assessment (DAPA) project concluded that “the budget, acquisition and requirements processes [of the Department of Defense] are not connected organizationally at any level below the Deputy Secretary of Defense.”27

To address these issues, the program manager and portfolio management team (PMT), as defined in Chapter 2, should have decision authority, derived explicitly from higher authority, to determine trade-offs among schedule, cost, and functionality. One of the most important tasks of the PM and PMT is to determine priorities in the IID development: what is in the first capability increment, what is in the second, and so on. Further, the PM and PMT decide which requirements are essential and which would be “nice to have.” The PMT’s role is vital to ensuring that appropriate interoperability is maintained across the team’s functional area.

This process is not workable if there is program oversight or accountability to one or more committees, with each member being able to force changes. In terms of functionality, such committees have a constructive role in offering their views, but the PM and PMT should derive authority from a higher source: the milestone decision authority. (In some cases it may be appropriate for the PMT to be delegated the role of MDA.) Governance committees should be strictly advisory, with decision authority given clearly and exclusively to the PM, PMT, and MDA as discussed below for each program phase and decision milestone. This is key to the success of the committee’s recommended approach. Of course, with greater authority must come greater accountability. The PM and PMT are accountable to a senior DOD official in the chain of command, namely the MDA, who in turn is accountable for the success of the program.

Another important element of incremental program management is that the PM and PMT have an effective body to help them make the right decisions on priorities and specific requirements for each stage of the project. As indicated above, this would be an advisory body, and the decision responsibility would reside with the PM and PMT. The user has to have a significant voice here.

Finally, since multiple time-boxed capability increments will fit within each budget cycle of the planning, programming, budgeting, and execution process, and to give end users confidence that their requirements will be addressed (thereby avoiding the unintended but real consequence of users trying to overload their requirements into the first capability increment), IID programs should be provided with a stable budget profile across multiple capability increments.

Appendix B provides a more detailed discussion of each of the program phases and decision milestones presented in Figure 3.2. For each decision milestone, the key objectives of the milestone and the responsibilities of the PM, PMT, and MDA are addressed. (Once again, note that in some cases it may be appropriate for the PMT to be delegated the role of MDA.)

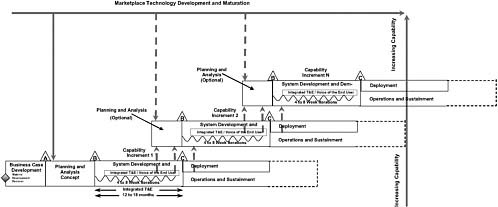

Proposed Acquisition Management for CHSS Programs

The goal of CHSS programs is to exploit commercially available products and services without modification to meet DOD needs, although ruggedization to meet environmental requirements for deployed or deployable systems can be addressed in this category of programs. Key requirements that should be addressed in such programs include capability, capacity, scalability, operational availability, information assurance, and, in the case of deployed or deployable programs acquiring hardware, the environmental qualification of the hardware components and any associated ruggedization. Products in this category are often relatively interchangeable components, with performance characteristics that are completely understandable through specifications or product data sheets and through inspection or experimentation to validate vendor claims. (Therefore, there is no real reason for confusion about the basic capability or service procured, and the areas of differentiation between competing alternatives are entirely knowable and verifiable.)

A key characteristic of commercial components and services is the rapid pace of change driven by the marketplace. For more than three decades, IT hardware in the commercial marketplace has been driven by Moore’s law, which describes the approximate doubling of capacity per unit of expenditure every 18 months. That growth in performance cannot continue indefinitely, but IT capacity will continue to improve. Such improvements need to be factored into the acquisition approach of IT systems programs providing COTS hardware. COTS software is most heavily influenced by the fast pace of technology change in the Internet environment, often driving version upgrades every 12 months or less. Even the most complex COTS software products on the market may have 2-year cycles between major version updates. These products typically have several minor version updates between the major updates, on a cycle of 12 months or less. Finally, one must take into account the case of IT services: capabilities offered over the network on an ongoing basis, as opposed to localized applications. Such services are typically offered based on COTS hardware plus COTS software plus an IT service delivery and management process. To remain competitive in the marketplace, service providers too must regularly refresh the COTS hardware and software providing the basis for their service. They must also innovate in the COTS service delivery and management dimension.
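Read literally, the 18-month doubling figure above implies geometric growth in capacity per unit of expenditure. As a simple worked illustration, let C_0 be today's capacity per unit of expenditure and t the elapsed time in months. This is an idealization, given the caveat that such growth cannot continue indefinitely.

```latex
\begin{aligned}
C(t)  &= C_0 \cdot 2^{t/18} \\
C(18) &= 2\,C_0, \qquad C(36) = 4\,C_0, \qquad C(54) = 8\,C_0
\end{aligned}
```

Under this idealization, the same expenditure buys about four times the capacity after three years and about eight times after four and a half years. That is why a hardware baseline fixed at program start falls quickly behind the marketplace.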

Marketplace-driven change is a dominant factor for this category of IT programs. The structure of these programs should therefore take it explicitly into account through an IID-based acquisition approach with iteration cycles of relatively short duration (18 to 24 months), just as was the case with SDCI programs.

For CHSS programs, this is as much a business strategy issue as it is a technical strategy issue, and both strategies should be addressed and vetted early in the program and revalidated as capability increments proceed. If appropriate virtualization and storage strategies are adopted to enable easy extension of software capability, then the resulting virtualized computing and storage utility must also provide functional support in at least two other areas: provisioning and operations. The utility model should provide not only the necessary capacity to support new and emergent applications, but also a means for operations staff to provision new virtual environments for those applications, to monitor the status of applications in operations, and to initiate corrective action in the event of abnormal behavior.
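The provisioning and operations functions described above for a virtualized computing and storage utility can be sketched as a small model. This is a hypothetical illustration only: the class names, the capacity units (virtual CPUs and gigabytes of storage), and the choice of "restart" as the corrective action are assumptions, not features of any particular product or DOD system.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualEnvironment:
    """Hypothetical virtual environment hosting one application."""
    app_name: str
    vcpus: int
    storage_gb: int
    healthy: bool = True
    restarts: int = 0


@dataclass
class ComputeStorageUtility:
    """Hypothetical model of a shared virtualized computing and storage utility."""
    total_vcpus: int
    total_storage_gb: int
    environments: dict = field(default_factory=dict)

    def free_capacity(self) -> tuple[int, int]:
        """Unallocated (vCPUs, storage GB) remaining in the utility."""
        used_cpu = sum(e.vcpus for e in self.environments.values())
        used_storage = sum(e.storage_gb for e in self.environments.values())
        return self.total_vcpus - used_cpu, self.total_storage_gb - used_storage

    # Provisioning: operations staff carve out a new environment for an application.
    def provision(self, app_name: str, vcpus: int, storage_gb: int) -> VirtualEnvironment:
        if app_name in self.environments:
            raise ValueError(f"{app_name} is already provisioned")
        free_cpu, free_storage = self.free_capacity()
        if vcpus > free_cpu or storage_gb > free_storage:
            raise RuntimeError(f"insufficient capacity for {app_name}")
        env = VirtualEnvironment(app_name, vcpus, storage_gb)
        self.environments[app_name] = env
        return env

    # Operations: monitor status and take corrective action on abnormal behavior.
    def monitor_and_correct(self) -> list[str]:
        corrected = []
        for env in self.environments.values():
            if not env.healthy:
                env.restarts += 1  # assumed corrective action: restart the environment
                env.healthy = True
                corrected.append(env.app_name)
        return corrected


if __name__ == "__main__":
    utility = ComputeStorageUtility(total_vcpus=64, total_storage_gb=2000)
    utility.provision("logistics-app", vcpus=8, storage_gb=200)
    utility.environments["logistics-app"].healthy = False  # simulate abnormal behavior
    print(utility.monitor_and_correct())  # ['logistics-app']
    print(utility.free_capacity())        # (56, 1800)
```

Keeping capacity accounting inside the utility lets the growth capacity provided by each hardware increment be consumed by applications from other programs without those programs managing hardware directly.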

The capability increments for such a CHSS program should be driven by a combination of affordable investment profiles, technology refresh objectives, avoidance of technological obsolescence, and the time required to complete installation across the production inventory objective for the program. Although Moore’s law has historically operated on an 18-month time cycle, the useful life of networking, computing, and storage hardware is at least two to three times that duration. Additionally, if the IT program has a significant production inventory objective, it will take time to complete installation on all target units in the inventory objective, especially if shipyard or aviation depot facilities are required to accomplish the installations. At the same time, however, hardware becomes increasingly difficult to maintain as it ages, as vendor support diminishes, and as spares become harder to buy. If a virtualized computing and storage utility model is being employed as described earlier, the need to avoid technological obsolescence and to provide growth capacity for a more rapidly changing collection of software applications produced by other IT programs also pushes toward more frequent refresh, counteracting the long useful life of the hardware. This leads to an IID-based acquisition model with three elements: capability increments driven by the roughly 18-month cycle of Moore’s law; a production rollout schedule driven by affordable investment profiles and by the availability of target units in the inventory objective for installation; and a technology refresh across the inventory objective, also driven by affordable investment profiles. In all cases the CHSS acquisition model should adhere to the philosophy of deploying “then year” technology in all new or upgraded production installations; that is, each installation should use the production baseline established in the most recent IID capability increment.

For example, the combination of the sustainable investment profile and the availability of deploying units may dictate a deployment schedule of 4 years or longer for equipping the full inventory objective. At the same time, it would not be prudent to install technology that is more than 4 years old on the last group of units to be equipped. For hardware, it would make more sense to update the technology incrementally and to requalify the updated equipment every 18 months. This could lead to a strategy of deploying increment 1 of a capability to the first third of the inventory objective in the first 18-month period of the program, increment 2 to the second third in the second 18-month period, and increment 3 to the final third in the third 18-month period, and then initiating technology refresh on the first third of the inventory objective with increment 4 in the fourth 18-month period, and so on.28
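The staggered rollout in this example can be expressed as a simple schedule in which increment k is installed on group ((k − 1) mod 3) + 1 during the k-th 18-month period. The sketch below assumes equal-sized thirds and strictly back-to-back periods, as in the example; the function and constant names are hypothetical.

```python
PERIOD_MONTHS = 18  # one IID capability increment per period (from the example)
GROUPS = 3          # inventory objective split into thirds (from the example)


def rollout_schedule(num_periods: int) -> list[tuple[int, int, int, int]]:
    """Return (increment, group, start_month, end_month) for each period.

    Increment k is installed on group ((k - 1) mod GROUPS) + 1, so once every
    group has been equipped, later increments act as technology refresh.
    """
    schedule = []
    for k in range(1, num_periods + 1):
        group = (k - 1) % GROUPS + 1
        start = (k - 1) * PERIOD_MONTHS
        schedule.append((k, group, start, start + PERIOD_MONTHS))
    return schedule


def refresh_interval_months() -> int:
    """Months between successive installations on the same group."""
    return GROUPS * PERIOD_MONTHS


if __name__ == "__main__":
    for k, group, start, end in rollout_schedule(6):
        kind = "initial installation" if k <= GROUPS else "technology refresh"
        print(f"Increment {k}: group {group}, months {start}-{end} ({kind})")
    print("Refresh interval per group:", refresh_interval_months(), "months")
```

Under these assumptions the first pass over the full inventory objective ends at month 54 (4.5 years), no group ever receives a baseline more than one increment old at installation, and each group is refreshed every 54 months, which is consistent with the statement that hardware useful life is at least two to three times the 18-month cycle (36 to 54 months).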

The structure of a CHSS IT program is as much a question of investment and business strategy as it is a question of technical strategy; all of these topics should be addressed early in the program and revalidated as capability increments proceed. The governance and oversight model for such an IID-based IT program can be substantially simpler than the current one described in Chapter 1. The technology development phase of the current regimen, with its focus on TRAs, TRLs, and technology risk, is not applicable to COTS hardware-focused IT programs. Such an IT program would not be attempting to push the state of the art in information technology and would be using mainstream, or even commodity, COTS hardware and software. The Milestone A and B decisions that bracket the technology development phase can therefore be combined and accomplished together. The engineering and manufacturing development phase can likewise be simplified. For COTS software programs, it becomes largely a COTS configuration effort. For COTS hardware programs, it becomes largely a COTS hardware integration effort or, at most, a ruggedization effort. Such an effort would aim to make the COTS hardware baseline survivable in the target production environment, followed by environmental qualification testing to arrive at a qualified hardware component. Qualification may address factors such as shock, vibration, high or low temperature extremes, blowing sand, high humidity, and other environmental factors significant in the target operating environment of the IT system, especially for systems destined for deployed or deployable units.

Developmental and operational testing and evaluation for such an IT program can similarly be simplified to focus on validating that capacity objectives have been met, that environmental qualifications have been achieved, that provisioning and operations support functions are effective, and that operational availability and other integrated logistics support objectives have been achieved. The vast majority of the operational testing and evaluation can be accomplished outside an operational environment, with only a final verification test taking place on an operational unit in a real-world operational environment. The resulting overall governance and oversight structure for such a COTS hardware-based IT program is shown in Figure 3.4.
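The simplified test focus can be summarized as a short checklist evaluated against measured results. The sketch below uses the common definition of operational availability, Ao = uptime / (uptime + downtime); the field names, capacity units, and threshold values are hypothetical, and integrated logistics support objectives other than Ao are not modeled.

```python
from dataclasses import dataclass


@dataclass
class TestResults:
    """Hypothetical summary of test measurements for one CHSS increment."""
    measured_capacity: float          # program-defined units (assumed)
    required_capacity: float
    env_qualification_passed: bool    # shock, vibration, temperature, sand, humidity, ...
    provisioning_ops_effective: bool
    uptime_hours: float
    downtime_hours: float
    required_ao: float                # operational availability threshold, 0 to 1


def operational_availability(uptime_hours: float, downtime_hours: float) -> float:
    """Ao = uptime / (uptime + downtime)."""
    return uptime_hours / (uptime_hours + downtime_hours)


def unmet_objectives(r: TestResults) -> list[str]:
    """Return the names of test objectives that were not achieved."""
    failures = []
    if r.measured_capacity < r.required_capacity:
        failures.append("capacity")
    if not r.env_qualification_passed:
        failures.append("environmental qualification")
    if not r.provisioning_ops_effective:
        failures.append("provisioning/operations support")
    if operational_availability(r.uptime_hours, r.downtime_hours) < r.required_ao:
        failures.append("operational availability")
    return failures


if __name__ == "__main__":
    results = TestResults(
        measured_capacity=1200, required_capacity=1000,
        env_qualification_passed=True, provisioning_ops_effective=True,
        uptime_hours=990, downtime_hours=10, required_ao=0.98,
    )
    print(round(operational_availability(990, 10), 3))  # 0.99
    print(unmet_objectives(results))                     # []
```

Because each objective is a measurable pass/fail criterion, most of these checks can be run in a laboratory or integration facility, leaving only final verification for an operational unit.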

Appendix C provides a more detailed discussion of each of the program phases and decision milestones presented in Figure 3.4. For each decision milestone, the key objectives of the milestone and the responsibilities of the PM, PMT, and MDA are addressed. The discussion in Appendix C focuses on the differences between this category of programs and the SDCI category discussed previously.