4

Defining the Problem: The Importance of Taking a Systems Perspective

KEY MESSAGES

Obesity is a multifaceted problem that warrants complex thinking and a broad systems perspective to frame the problem, understand potential causes, identify critical leverage points of influence, and take effective action. Linear approaches to complex public health problems such as the obesity crisis are clearly useful, but cannot address the multiple dimensions of the real world and the many influences on the energy balance equation (Foresight, 2007). It is necessary to embrace complexity and to develop strategies and implement change at multiple levels to influence human behavior and reverse the current upward trends in weight. A systems perspective offers a new approach to obesity research and action that can meet this challenge (Huang et al., 2009).

The systems approach has a history of nearly 50 years, dating to its development by Forrester at the Massachusetts Institute of Technology (Forrester, 1991). Increasingly, obesity scholars are looking to other disciplines that use this approach, from biology and psychology to computer science and engineering. In the health arena, the approach has been used to elucidate seemingly intractable problems, including cardiovascular disease (Homer et al., 2004), diabetes (Milstein et al., 2007), mental health (Smith et al., 2004), public health emergencies (Hoard et al., 2005), and tobacco control (National Cancer Institute, 2007).

The complex issue of obesity lends itself well to a systems approach. As with tobacco control, which employed diverse and multilevel strategies (Abrams et al., 2003, 2010), progress in the obesity field will require a paradigm shift toward an interdisciplinary knowledge base that integrates systems theory with concepts and practice from community development, social ecology, social networks, and public health (Best et al., 2003).

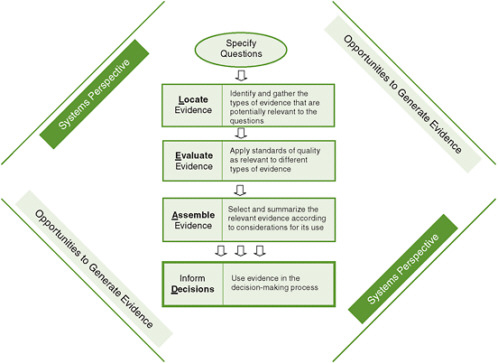

This chapter explains how systems thinking expands upon the multilevel, multisector strategies already proposed or in use to prevent obesity. It provides a primer on the concepts of such thinking and examines how the systems approach can be applied to identify the determinants, strategies, and actions that must be considered to address the obesity crisis. The chapter provides several practical examples of how systems thinking can be used in both small and large ways to expand the boundaries of current models and advance effective change in public health. The chapter also links the systems approach and its application to the L.E.A.D. framework (Figure 4-1), describing how it enhances the ability to generate, use, and learn from evidence and explaining how specific content pertaining to each step of the framework will differ according to the system on which one is focusing. Box 4-1 defines the key systems concepts pertinent to the discussion.

UNDERSTANDING A SYSTEMS APPROACH

As explained in Chapter 2, multilevel, multisector strategies, often based on ecological models (e.g., Figure 1-5 in Chapter 1),1 have gained widespread acceptance for understanding the determinants of obesity and for framing prevention and control activities (Glass and McAtee, 2006). Although these models acknowledge the multiple levels of a system and show their interrelationships, they may not always be complex enough to capture the dynamic interactions and the short- and long-term feedback loops among the many influences on energy balance (Foresight, 2007; Sterman, 2006). Systems investigation can complement other methods by capturing this complexity, translating it into actions that can have an impact on the obesity problem, and making it possible to predict unintended consequences and time-delayed effects (Mabry et al., 2008).

FIGURE 4-1 The Locate Evidence, Evaluate Evidence, Assemble Evidence, Inform Decisions (L.E.A.D.) framework for obesity prevention decision making.

NOTE: The element of the framework addressed in this chapter is highlighted.

A systems approach requires seeing the whole picture and not just a fragment, understanding the broader context, appreciating interactions among levels, and taking an interdisciplinary approach (Leischow and Milstein, 2006). A systems approach highlights the importance of the circumstances, or context, in which an action is taken in order to understand its implementation and potential impact. Thus, while investigators must, for practical reasons, establish boundaries to define the system being studied, they must also recognize that each system exists within and interacts with a hierarchy of nested systems (Midgley, 2000). In addition, appreciating leverage points, or points of power within a system, can help explain how a small shift in one element of a complex system can produce larger changes in other elements (Meadows, 1999). These advantages of systems investigation are particularly important for interventions targeting obesity, given their far-reaching impact on the population; solutions should be designed to maximize benefit and minimize negative consequences.

The systems approach offers a further advantage with respect to the well-recognized gap between research and practice, which limits the extent to which advances in research translate into improvements in public health. Most efforts to link research to practice and policy have merely highlighted the challenges of transferring knowledge from single-discipline, highly controlled research to practice settings. Interdisciplinary investigation using a systems approach can potentially help close this gap (Mabry et al., 2008).

Box 4-1 Definition of Key Systems Concepts

Systems approach: A paradigm or perspective involving a focus on the whole picture and not just a single element, awareness of the wider context, an appreciation for interactions among different components, and transdisciplinary thinking (Leischow and Milstein, 2006).

Systems investigation: A promising new frontier for research and action in response to complex and critical challenges (Leischow and Milstein, 2006).

Systems thinking: An iterative learning process in which one takes a broad, holistic, long-term perspective of the world and examines the linkages and interactions among its elements (Sterman, 2006).

Systems theory: An interdisciplinary theory that requires the merging of multiple perspectives and sources of information and deals with complex systems in technology, society, and science (Best et al., 2003).

Systems science: Research related to systems theory that offers insight into the nature of the whole system that often cannot be gained by studying the system’s component parts in isolation (Mabry et al., 2008).

System dynamics: A methodology for mapping and modeling the forces of change in a complex system in order to better understand their interaction and govern the direction of the system; it enables stakeholders to combine input into a dynamic hypothesis that uses computer simulation to compare various scenarios for achieving change (Milstein and Homer, 2006).

A systems approach to solving health problems requires new tools, including data, methods, theories, and statistical analysis different from those traditionally used in linear approaches. No single discipline can provide these tools. Therefore, it is necessary to approach health research with a collaborative team of investigators who bring knowledge and expertise from a variety of disciplines and sectors (Leischow et al., 2008). The theoretical frameworks and methodologies that result from such collaboration can generate new conceptual syntheses, new measurement techniques (e.g., social network analysis), and interdisciplinary fields of inquiry (e.g., behavioral genetics) with the capacity to tackle complex population health problems (Fowler et al., 2009).

Sterman (2006) explains how the dynamics of a system work, using policy resistance as an example. His explanation, reproduced in Box 4-2, encompasses the key concepts and variables in systems thinking: stocks, flows, feedback processes (positive or self-reinforcing and negative or self-correcting), side effects, and time delays.
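A minimal sketch can make two of these concepts concrete. The Python below simulates one stock that a decision maker steers toward a goal through a balancing (self-correcting) loop. The corrective flow responds to the stock's value a fixed number of periods earlier, a deliberately simple way to represent a time delay. The function name and every parameter value (`goal`, `adjustment_rate`, `delay`) are hypothetical and are not drawn from Sterman (2006) or from any obesity model.

```python
def simulate_balancing_loop(goal=100.0, initial=0.0, adjustment_rate=0.3,
                            delay=0, steps=40):
    """Steer a stock toward `goal` through a balancing (self-correcting) loop.

    Each period the corrective flow is proportional to the gap between the goal
    and the stock as it was `delay` periods earlier (a hypothetical perception
    delay). Returns the stock's value at every period.
    """
    history = [initial]
    for t in range(steps):
        # Stock as perceived by the decision maker, `delay` periods out of date.
        perceived = history[max(0, t - delay)]
        # Balancing loop: the larger the perceived gap, the larger the correction.
        flow = adjustment_rate * (goal - perceived)
        history.append(history[-1] + flow)
    return history


no_delay = simulate_balancing_loop(delay=0)
with_delay = simulate_balancing_loop(delay=3)
print("overshoots goal without delay:", max(no_delay) > 100.0)
print("overshoots goal with 3-period delay:", max(with_delay) > 100.0)
```

With no delay, the stock approaches the goal smoothly without crossing it. With a three-period delay, corrections keep arriving after the gap has already closed, so the stock overshoots and then oscillates, the pattern of overshoot and oscillation that Sterman describes.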

USES OF SYSTEMS THINKING, APPROACHES, MAPPING, AND MODELING

Systems can be small or large and often coincide with the levels defined in an ecological model. For example, a school can be thought of as a micro-system within a larger community; as a meso-system within the even larger national, political, and social milieu; or as a macro-system within a global-system context. This section provides several examples of how systems thinking pertains to public health problems: body mass index (BMI) screening in schools, tobacco control in the United States, obesity modeling in the United States, and obesity prevention in the United Kingdom.

BMI Screening in Schools

The monitoring of childhood growth has been a contentious issue for several decades (James and Lobstein, 2009). In recent years, school districts have been under pressure to respond to the childhood obesity epidemic. Despite limited evidence on the value of schools providing individually directed help for children with higher BMIs, the establishment of school-based surveillance to document obesity prevalence and to inform the development of prevention and treatment policies has been recommended (e.g., Massachusetts Department of Public Health, 2009). Although measuring weight and height in schools appears relatively simple and has in fact been done for decades, the development of rigorous measurement and reporting protocols was limited until recently. This lack of a well-defined process, together with the failure to take a systems perspective, can result in a number of unintended consequences and perturbations to the system. For example, children may feel embarrassed or stigmatized during the process, parents may feel unequipped to act on the information they receive, health care providers in the community may not be educated about obesity treatment, the community may lack adequate pediatric programming to which children can be referred, and schools may forgo other screening programs to make room for BMI screening (for example, hearing and vision screenings were cut back when the Massachusetts Public Health Council voted to require BMI screening of schoolchildren [Mullen, 2009]). In addition, even where obesity rates are high, insufficient funds or a lack of political will may prevent the school system from obtaining the resources and assistance needed to address these unintended consequences, leaving the community feeling frustrated and helpless.

The Tobacco Control Movement

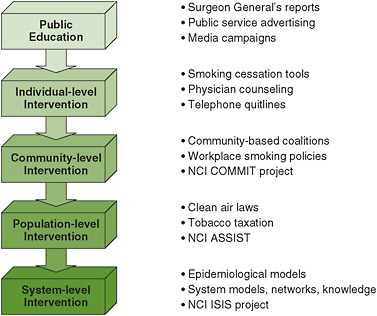

Although the tobacco control movement historically targeted individuals and their behaviors, it evolved into a multilevel systems approach to the problem (Abrams, 2007). Individual behavior change remained the goal, but strategies involving industry, legislation, public health programming and messaging, and the health care system worked together to create that change. None of the strategies implemented as part of the movement worked alone. State by state, it was demonstrated that a combination of strategies was better than any single intervention, and that the more components were used, the better (CDC, 1999; Levy et al., 2007). The movement thus offers a useful example to inform the field of obesity prevention.

Box 4-2 Primer on Concepts and Variables in Systems Thinking

Social systems contain intricate networks of feedback processes, both self-reinforcing (positive) and self-correcting (negative) loops. Failure to focus on feedback in policy design has critical consequences. Suppose the hospital you run faces a deficit, caught between rising costs and increasing numbers of uninsured patients. In response, you might initiate quality improvement programs to boost productivity, announce a round of layoffs, and accelerate plans to offer new high-margin elective surgical services. Your advisors and spreadsheets suggest that these decisions will cut costs and boost income. Problem solved—or so it seems.

Contrary to the open-loop model behind these decisions, the world reacts to our interventions (Figure). There is feedback: our actions alter the environment and, therefore, the decisions we take tomorrow. Our actions may trigger so-called side effects that we did not anticipate. Other agents, seeking to achieve their goals, act to restore the balance that we have upset; their actions also generate intended and unintended consequences. Goals are also endogenous, evolving in response to changing circumstances. For example, we strive to earn more in a quest for greater happiness, but habituation and social comparison rapidly erode any increase in subjective well-being (Kahneman et al., 1999).

Policy resistance arises because we do not understand the full range of feedbacks surrounding—and created by—our decisions. The improvement initiatives you mandated never get off the ground because layoffs destroyed morale and increased the workload for the remaining employees. New services were rushed to market before all the kinks were worked out; unfavorable word of mouth causes the number of lucrative elective procedures to fall as patients flock to competitors. More chronically ill patients show up in your ER with complications after staff cuts slashed resources for patient education and follow-up; the additional workload forces still greater cuts in prevention. Stressed by long hours and continual crisis, your most experienced nurses and doctors leave for jobs with competitors, further raising the workload and undercutting quality of care. Hospital-acquired infections and preventable errors increase. Malpractice claims multiply. Yesterday’s solutions become today’s problems.

NOTE: Arrows indicate causation, e.g., our actions alter the environment. Thin arrows show the basic feedback loop through which we seek to bring the state of the system in line with our goals. Policy resistance (thick arrows) arises when we fail to account for the so-called “side effects” of our actions, the responses of other agents in the system (and the unanticipated consequences of these), the ways in which experience shapes our goals, and the time delays often present in these feedbacks.

Ignoring the feedbacks in which we are embedded leads to policy resistance as we persistently react to the symptoms of difficulty, intervening at low leverage points and triggering delayed and distant effects. The problem intensifies, and we react by pulling those same policy levers still harder in an unrecognized vicious cycle. Policy resistance breeds cynicism about our ability to change the world for the better. Systems thinking requires us to see how our actions feed back to shape our environment. The greater challenge is to do so in a way that empowers us rather than reinforcing the belief that we are helpless victims of forces that we neither influence nor comprehend.

Time Delays

Time delays in feedback processes are common and particularly troublesome. Most obviously, delays slow the accumulation of evidence. More problematic, the short- and long-run impacts of our policies are often different (smoking gives immediate pleasure, while lung cancer develops over decades). Delays also create instability and fluctuations that confound our ability to learn. Driving a car, drinking alcohol, and building a new semiconductor plant all involve time delays between the initiation of a control action (accelerating/braking, deciding to “have another,” the decision to build) and its effects on the state of the system. As a result, decision makers often continue to intervene to correct apparent discrepancies between the desired and actual state of the system even after sufficient corrective actions have been taken to restore equilibrium. The result is overshoot and oscillation: stop-and-go traffic, drunkenness, and high-tech boom and bust cycles (Sterman, 1989). Public health systems are not immune to these dynamics, from oscillations in incidence of infectious diseases, such as measles (Anderson et al., 1984) and syphilis (Grassley et al., 2005), to the 2004-2005 flu vaccine fiasco, with scarcity and rationing followed within months by surplus stocks (U.S. House Committee on Government Reform, 2005).

Stocks and Flows

Stocks and the flows that alter them (the concepts of prevalence and incidence in epidemiology) are fundamental in disciplines from accounting to zoology: a population is increased by births and decreased by mortality; the burden of mercury in a child’s body is increased by ingestion and decreased by excretion. The movement and transformation of material among states is central to the dynamics of complex systems. In physical and biological systems, resources are usually tangible: the stock of glucose in the blood; the number of active smokers in a population. The performance of public health systems, however, is also determined by resources such as physician skills, patient knowledge, community norms, and other forms of human, social, and political capital.

Research shows people’s intuitive understanding of stocks and flows is poor in two ways. First, narrow mental model boundaries mean that people are often unaware of the networks of stocks and flows that supply resources and absorb wastes. California’s Air Resources Board seeks to reduce air pollution by promoting so-called zero emission vehicles (California Air Resources Board, 2010). True, zero emission vehicles need no tailpipe. But the plants required to make the electricity or hydrogen to run them do generate pollution. California is actually promoting displaced emission vehicles, whose wastes would blow downwind to other states or accumulate in nuclear waste dumps outside its borders. Air pollution causes substantial mortality, and fuel cells may prove to be an environmental boon compared with internal combustion. But no technology is free of environmental impact, and no legislature can repeal the second law of thermodynamics.

Second, people have poor intuitive understanding of the process of accumulation. Most people assume that system inputs and outputs are correlated (e.g., the higher the federal budget deficit, the greater the national debt will be) (Booth Sweeny and Sterman, 2000). However, stocks integrate (accumulate) their net inflows. A stock rises even as its net inflow falls, as long as the net inflow is positive: the national debt rises even as the deficit falls—debt falls only when the government runs a surplus; the number of people living with HIV continues to rise even as incidence falls—prevalence falls only when infection falls below mortality. Poor understanding of accumulation has significant consequences for public health and economic welfare. Surveys show most Americans believe climate change poses serious risks, but they also believe that reductions in GHG emissions sufficient to stabilize atmospheric GHG concentrations can be deferred until there is greater evidence that climate change is harmful (Sterman and Booth Sweeny, 2007). Federal policy makers likewise argue that it is prudent to wait and see whether climate change will cause substantial economic harm before undertaking policies to reduce emissions (Bush, 2002). Such wait-and-see policies erroneously presume that climate change can be reversed quickly should harm become evident, underestimating immense delays in the climate’s response to GHG emissions. Emissions are now about twice the rate at which natural processes remove GHGs from the atmosphere (Houghton et al., 2001). GHG concentrations will therefore continue to rise even if emissions fall, stabilizing only when emissions equal removal. In contrast, experiments with highly educated adults—graduate students at MIT—show that most believe atmospheric GHG concentrations can be stabilized while emissions into the atmosphere continuously exceed the removal of GHGs from it (Sterman and Booth Sweeny, 2007). Such beliefs are analogous to arguing that a bathtub filled faster than it drains will never overflow. They violate conservation of matter, and the violation matters: wait-and-see policies guarantee that atmospheric GHG concentrations, already greater than any in the past 420,000 years (Houghton et al., 2001), will rise far higher, increasing the risk of dangerous changes in climate that may significantly harm public health and human welfare.

NOTE: ER = emergency room; GHG = greenhouse gas; MIT = Massachusetts Institute of Technology.

SOURCE: Excerpt from Sterman, 2006. Reprinted with permission of the American Public Health Association. Sterman, J.D., Learning from evidence in a complex world, American Journal of Public Health 96(3) 505-513, 2006, copyright © 2006 by the American Public Health Association.
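The accumulation point in Box 4-2 can be checked with a few lines of arithmetic. The Python sketch below assumes a hypothetical inflow that falls by a fixed amount each period and an outflow equal to a fixed fraction of the stock, and integrates the stock one period at a time. It illustrates the bathtub logic only; the function name and all numbers are invented and correspond to no real epidemic.

```python
def integrate_stock(initial_stock, inflows, outflow_fraction):
    """Integrate a stock period by period: stock += inflow - outflow.

    The outflow is assumed to be a fixed fraction of the current stock.
    Returns the stock at the start of each period (plus the final value)
    and the net flow applied in each period.
    """
    stock = initial_stock
    stocks, net_flows = [stock], []
    for inflow in inflows:
        net_flow = inflow - outflow_fraction * stock
        stock += net_flow
        stocks.append(stock)
        net_flows.append(net_flow)
    return stocks, net_flows


# Hypothetical inflow (new cases per period) that falls in every period.
inflows = [100 - 4 * t for t in range(25)]
stocks, net_flows = integrate_stock(500.0, inflows, outflow_fraction=0.05)

peak_period = stocks.index(max(stocks))
first_negative = next(t for t, net in enumerate(net_flows) if net <= 0)
print(f"inflow falls every period, yet the stock peaks in period {peak_period}")
print(f"net inflow first turns non-positive in period {first_negative}")
```

Although the inflow falls in every period, the stock keeps rising as long as the net inflow is positive and peaks only in the period when inflow first drops below outflow, just as prevalence keeps rising while incidence falls.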

To explore this paradigm shift, a pilot project, the Initiative on the Study and Implementation of Systems (ISIS), was conducted with funding from the National Cancer Institute (NCI) (Best et al., 2006). ISIS was designed to (1) explore how systems thinking approaches might improve understanding of the factors contributing to tobacco use, (2) inform strategic decision making on which efforts might be most effective for reducing tobacco use, and (3) serve as an exemplar for addressing other public health problems. Contextually, tobacco control can be viewed as a system comprising smaller systems and existing within the broader systems of public health; economics; and society at the local, regional, and global levels. Figure 4-2 shows the evolution of tobacco control approaches toward systems thinking (NCI, 2007).

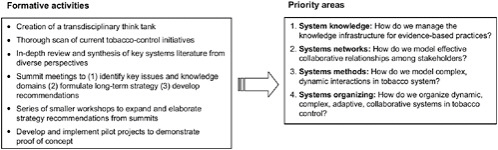

The ISIS project sought to understand the problem of tobacco use comprehensively and, ultimately, to address it through systems change. As a result of strategic planning, the ISIS group identified four priority areas (Figure 4-3) that together serve as a synergistic foundation for understanding and improving public health from a systems perspective (Leischow et al., 2008; NCI, 2007). Simulation modeling, conducted in the third of ISIS’s priority areas, has been useful for exploring how changes in various parameters of the complex systems underlying tobacco use behaviors and policies affect population-level outcomes (Abrams et al., 2010; Levy et al., 2010a,b).

FIGURE 4-2 Evolution of tobacco control approaches toward systems thinking.

NOTES: Quitlines indicate telephone hotlines for smoking cessation. ASSIST = American Stop Smoking Intervention Study for Cancer Prevention; COMMIT = Community Intervention Trial for Smoking Cessation; ISIS = Initiative on the Study and Implementation of Systems; NCI = National Cancer Institute.

SOURCE: NCI, 2007.

FIGURE 4-3 Priority areas identified by the Initiative on the Study and Implementation of Systems (ISIS) group.

SOURCE: Reprinted from Leischow et al., Copyright © 2008, with permission from Elsevier.

Mapping of Obesity Causality in the United States

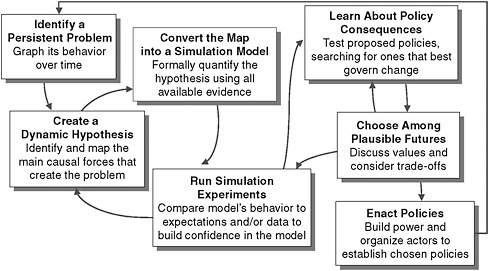

System dynamics modeling can help map causality by addressing risks and outcomes; when performed prospectively, it can be used to predict future outcomes, and when performed retrospectively, it can be used to understand how strategies and delivery systems interacted with a population during an intervention. The process proceeds iteratively through the general steps shown in Figure 4-4, beginning with the identification of a persistent problem (Milstein and Homer, 2006). Milstein and Homer

FIGURE 4-4 Iterative steps in system dynamics modeling.

SOURCE: Milstein and Homer, 2006.

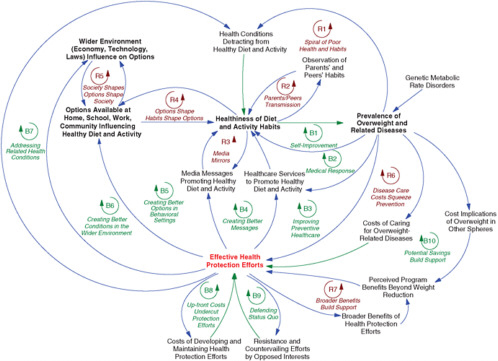

(2009) use system dynamics modeling to map the forces that contribute to the persistent obesity problem (see Figure 4-5). This exercise helps in understanding the causes of obesity, the broader systems to which they belong, and how they are thought to interact. Such mapping can therefore elucidate potential mechanisms and dynamic pathways on which intervention strategies should focus.
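The loop labels in Figure 4-5 follow a standard causal-loop convention: a closed loop is reinforcing (R) when it contains an even number of opposite-direction links and balancing (B) when it contains an odd number, which is equivalent to taking the product of the link signs. The Python sketch below applies that rule to a tiny hypothetical map whose links are paraphrased from the hospital example in Box 4-2, not taken from Figure 4-5; `LINKS`, `find_loops`, and `loop_polarity` are illustrative names.

```python
# Hypothetical signed links paraphrased from the hospital example in Box 4-2.
# +1 = same-direction link, -1 = opposite-direction link.
LINKS = {
    ("deficit", "layoffs"): +1,
    ("layoffs", "costs"): -1,
    ("costs", "deficit"): +1,
    ("layoffs", "staff"): -1,
    ("staff", "workload"): -1,
    ("workload", "departures"): +1,
    ("departures", "staff"): -1,
}


def find_loops(links):
    """Return every simple cycle exactly once, as a list of variable names."""
    graph = {}
    for src, dst in links:
        graph.setdefault(src, []).append(dst)
    nodes = sorted({name for edge in links for name in edge})
    loops = []

    def dfs(start, node, path):
        for nxt in graph.get(node, []):
            if nxt == start:
                loops.append(path[:])
            elif nxt not in path and nxt > start:
                # Only visit nodes ordered after `start` so each cycle is found once.
                dfs(start, nxt, path + [nxt])

    for start in nodes:
        dfs(start, start, [start])
    return loops


def loop_polarity(loop, links):
    """'R' (reinforcing) if the product of link signs is positive, else 'B'."""
    sign = 1
    for src, dst in zip(loop, loop[1:] + loop[:1]):
        sign *= links[(src, dst)]
    return "R" if sign > 0 else "B"


for loop in find_loops(LINKS):
    print(loop_polarity(loop, LINKS), " -> ".join(loop + loop[:1]))
```

Run as written, it labels the deficit, layoffs, and costs loop as balancing and the staff, workload, and departures loop as reinforcing, mirroring how layoffs meant to close a deficit can also set off a vicious cycle.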

Once such maps have been developed, they can be converted into computer simulation models that can be used to identify interventions and policies with the potential to alleviate the problem. These experiments are followed by sensitivity analyses to assess areas of uncertainty in the models and to guide future research. Once the models have been finalized, stakeholders are convened to participate in “action labs,” in which the models allow them to discover for themselves the likely consequences of alternative policy scenarios (Milstein and Homer, 2009).
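The scenario-and-sensitivity workflow described above can be sketched with a deliberately tiny model. The Python below assumes a single hypothetical stock (the prevalence of a condition) that grows through onset and shrinks through remission, compares a baseline run with a policy run that cuts the onset rate, and then repeats the comparison across several values of the uncertain remission rate. The model structure, the function names, and all parameter values are assumptions made for illustration; they are not from Milstein and Homer (2009).

```python
def run_scenario(onset_rate, remission_rate, policy_effect=0.0,
                 policy_start=10, years=30, initial_prevalence=0.2):
    """Return prevalence after `years` annual steps of a one-stock model.

    Hypothetical structure: inflow = onset_rate * (1 - prevalence),
    outflow = remission_rate * prevalence. From `policy_start` on, the
    policy is assumed to cut onset_rate by the fraction `policy_effect`.
    """
    prevalence = initial_prevalence
    for year in range(years):
        rate = onset_rate * (1 - policy_effect) if year >= policy_start else onset_rate
        inflow = rate * (1 - prevalence)
        outflow = remission_rate * prevalence
        prevalence += inflow - outflow
    return prevalence


def sensitivity(remission_rates, onset_rate=0.03, policy_effect=0.3):
    """One-at-a-time sensitivity: rerun both scenarios for each remission rate."""
    results = {}
    for remission in remission_rates:
        baseline = run_scenario(onset_rate, remission)
        with_policy = run_scenario(onset_rate, remission, policy_effect=policy_effect)
        results[remission] = (baseline, with_policy)
    return results


for remission, (baseline, with_policy) in sensitivity([0.03, 0.05, 0.07]).items():
    print(f"remission={remission:.2f}  baseline={baseline:.3f}  "
          f"policy={with_policy:.3f}  averted={baseline - with_policy:.3f}")
```

If the estimated policy effect changes little across plausible values of the uncertain parameter, a decision can rest on it with more confidence; if it swings widely, that parameter becomes a candidate for future research, which is the role the sensitivity analyses above play in guiding the next round of work.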

A broad array of modeling techniques is used for different purposes in many fields. Given the complexity of the obesity problem, the most suitable modeling techniques will share several characteristics (Hammond, 2009). First, because of the scale of the epidemic, models may provide the most insight if they capture multiple levels of analysis. Second, to capture the dynamics of such a complex system, models must be able to incorporate individual heterogeneity and adaptation over time. Finally, models must not only provide a better understanding of the problem and the mechanisms behind it but also aid in the design of new and better interventions to slow and reverse the epidemic.
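Agent-based modeling is one family of techniques that can represent individual heterogeneity and adaptation directly. The Python sketch below assumes 20 hypothetical agents arranged in a ring, each with its own randomly drawn baseline behavior score (heterogeneity), who in every period blend that baseline with the average current behavior of their two neighbors (adaptation through social influence). An intervention that raises the baselines of five agents then spills over to agents who were never targeted. The network, the update rule, `simulate_agents`, and all parameters are illustrative assumptions, not a model proposed by Hammond (2009).

```python
import random


def simulate_agents(baselines, social_weight=0.5, steps=50):
    """Each agent blends its own baseline with its two ring neighbors' behavior."""
    n = len(baselines)
    behavior = list(baselines)
    for _ in range(steps):
        # Build the new list from last period's behavior (synchronous update).
        behavior = [
            (1 - social_weight) * baselines[i]
            + social_weight * (behavior[i - 1] + behavior[(i + 1) % n]) / 2
            for i in range(n)
        ]
    return behavior


rng = random.Random(42)
# Heterogeneity: each hypothetical agent gets its own baseline behavior score.
baselines = [rng.gauss(0.0, 1.0) for _ in range(20)]
# Hypothetical intervention: raise the baselines of agents 0-4 by one unit.
targeted = set(range(5))
treated = [b + 1.0 if i in targeted else b for i, b in enumerate(baselines)]

before = simulate_agents(baselines)
after = simulate_agents(treated)
spillover = [a - b for i, (a, b) in enumerate(zip(after, before)) if i not in targeted]
print(f"mean spillover to untargeted agents: {sum(spillover) / len(spillover):.3f}")
```

The point of the design is that effects on untargeted agents arise only from the interaction rule, which a model of isolated individuals or of population averages alone would not show.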

Obesity Prevention in the United Kingdom

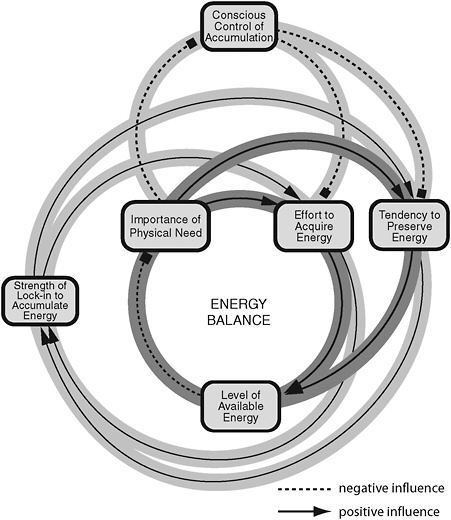

The most comprehensive effort to both understand and map the obesity epidemic and formulate a national action plan was carried out by the Foresight Group in the United Kingdom (Foresight, 2007). The Foresight Tackling Obesities: Future Choices Project was aimed at producing a sustainable response to obesity in the United Kingdom over the 40 years following the plan’s release. Using a systems approach, the group pursued objectives that included using the scientific evidence base across a wide range of disciplines to identify the many factors that influence obesity, looking beyond the obvious to achieve an integrated understanding of the relationships among these factors and their relative importance, building on this evidence to identify effective interventions, analyzing how future levels of obesity might change, and identifying what the most effective future responses might be. A detailed causal loop obesity system map was produced to display the interrelationships among the various contributors to energy balance; a simplified version of this map is shown in Figure 4-6.

In addition, a strategic framework was developed to identify gaps in current initiatives that would have to be filled to mount an integrated policy response. Its authors identify six key elements of this framework (Jebb, 2009):

- systematic change that addresses the diverse determinants of obesity simultaneously to minimize the risk of compensatory actions;
- integrated interventions at all levels of society (individual, family, local, national, and international), recognizing that individual choices are shaped by the wider context;
- interventions across the life course to reinforce and sustain long-term behavior change;
- diverse interventions that combine focused initiatives (which impose change), “enablers” (which inform and facilitate change), and “amplifiers” (which address social norms and the cultural context);
- actions planned over time such that early initiatives build a climate for subsequent interventions; and
- ongoing evidence gathering, including population-level surveillance and evaluation of interventions.

FIGURE 4-5 The obesity “system”: a broad causal map.

NOTES: Blue arrows indicate same-direction links; green arrows indicate opposite-direction links; R loops indicate reinforcing processes; B loops indicate balancing processes. All parameters vary by such factors as age, sex, race/ethnicity, income, and geography.

SOURCE: Milstein and Homer, 2009. Reprinted with permission.

FIGURE 4-6 Simplified version of the causal loop obesity system map showing the interrelationships among various contributors to energy balance. The map was developed by the UK Foresight Group to understand and chart the obesity epidemic in order to inform a national action plan.

SOURCE: Vandenbroeck et al., 2007.

The Foresight Group identified as a key research challenge “the evaluation of new policy initiatives at all levels (process audits, behaviors and biomarkers, long-term health and economic outcomes)” (Jebb, 2009, p. 39). Causal loops of this complexity magnify the need for new ways of evaluating and incorporating evidence, drawn not only from research studies but also from the real-world experience of obesity initiatives undertaken both within a given country and around the world.

Although outcomes of systems approaches such as the Foresight Group’s causal loop system map appear complex, they are useful for informing practical, real-world intervention strategies. For example, Foresight’s Tackling Obesities: Future Choices Project was used to inform a cross-government strategy for England that was part of a sustained program to reduce obesity and support healthy weight maintenance (Cross-Government Obesity Unit, 2010).

RELATION TO THE L.E.A.D. FRAMEWORK

The idea that evidence should be identified, evaluated, and summarized from a systems perspective is fundamental to the framework proposed in this report. A systems perspective broadens the traditional approach to locating, evaluating, and assembling evidence (which generally limits the evidence to results of rigorous randomized controlled trials) to encompass evidence that reflects the complexity of the problem. Users of the framework are encouraged to approach every aspect of decision making with a comprehensive lens, considering the complex context in which programs and policies will be implemented and how it may affect their implementation and impact. A systems perspective enables the decision maker to understand interactions among smaller systems within the larger system and identify potential synergies or harms that should be explored before implementation. Creating “what if” scenarios based on systems maps can help decision makers and stakeholders think about various approaches and where to focus efforts, as well as potential costs; elements that are critical in the design of interventions or program and policy evaluations; and feedback loops that can be sources of evaluation data (see Chapter 6).

APPLICATION AND FUTURE DIRECTIONS

According to Hammond (2009), the most effective models for addressing the obesity epidemic are likely to be those that capture multiple mechanisms at multiple levels, integrate micro and macro data and dynamics, account for significant heterogeneities, and allow for policy experimentation. To fully realize the potential of systems theory for obesity prevention, a number of strategies will be required. First, current and future leaders should be trained in the science of systems and its application to the obesity crisis. This training would include causal mapping, conceptualization of interventions, and computational and simulation modeling techniques. Applying these methods to the obesity epidemic will be challenging: important data are not yet available, a number of assumptions are uncertain, and the inner workings of many key mechanisms are unknown (Hammond, 2009). In some cases, smaller systems will have to be studied independently, perhaps with relatively homogeneous populations, before being integrated into a more comprehensive model. Various combinations of these narrower models can then be explored and tested against the same outcome data, building gradually toward a model that encompasses the full breadth of the system. Second, empirical research should be funded and executed using systems theory as a guide. Focused studies can be used to confirm and quantify relationships and to test their effects. Ideally, this research would be carried out in conjunction with modeling studies to produce the most informative data and to guide future research. Third, both knowledge generators and users must collaborate across disciplines to build interdisciplinary capacity.

Models must be used with caution, and their application must be linked to empirical research. The interplay between systems theory and research requires high-quality experimental and quasi-experimental designs. Systems thinking puts researchers in a better position to ask the right questions; empirical research, in turn, helps ensure that a systems model makes the right predictions.

In conclusion, systems thinking in public health cannot be encompassed by a single discipline or even a single “systems thinking” approach (e.g., system dynamics models). Rather, it requires interdisciplinary integration of approaches to public health aimed at understanding and reconciling linear and nonlinear, qualitative and quantitative, and reductionistic and holistic thinking and methods to create a federation of systems approaches (NCI, 2007).

REFERENCES

Abrams, D. B. 2007. Comprehensive smoking cessation policy for all smokers: Systems integration to save lives and money. In Ending the tobacco problem: A blueprint for the nation, edited by R. J. Bonnie, K. Stratton, and R. B. Wallace. Washington, DC: The National Academies Press. Pp. A1-A50.

Abrams, D. B., F. Leslie, R. Mermelstein, K. Kobus, and R. R. Clayton. 2003. Transdisciplinary tobacco use research. Nicotine and Tobacco Research 5(Supplement 1):S5-S10.

Abrams, D. B., A. L. Graham, D. T. Levy, P. L. Mabry, and C. T. Orleans. 2010. Boosting population quits through evidence-based cessation treatment and policy. American Journal of Preventive Medicine 38(3, Supplement 1):S351-S363.

Anderson, R. M., B. T. Grenfell, and R. M. May. 1984. Oscillatory fluctuations in the incidence of infectious disease and the impact of vaccination: Time series analysis. Journal of Hygiene (London) 93(3):587-608.

Best, A., G. Moor, B. Holmes, P. I. Clark, T. Bruce, S. Leischow, K. Buchholz, and J. Krajnak. 2003. Health promotion dissemination and systems thinking: Towards an integrative model. American Journal of Health Behavior 27(Supplement 3).

Best, A., R. Tenkasi, W. Trochim, F. Lau, B. Holmes, T. Huerta, S. Moor, S. Leischow, and P. Clark. 2006. Systemic transformational change in tobacco control: An overview of the Initiative for the Study and Implementation of Systems (ISIS). In Innovations in health care: A reality check, edited by A. L. Casebeer, A. Harrison, and A. L. Mark. New York: Palgrave Macmillan.

Booth Sweeney, L., and J. D. Sterman. 2000. Bathtub dynamics: Initial results of a systems thinking inventory. System Dynamics Review 16:249-294.

Bush, G. W. 2002. President announces clear skies and global climate change initiatives. http://georgewbush-whitehouse.archives.gov/news/releases/2002/02/20020214-5.html (accessed March 16, 2010).

California Air Resources Board. 2010. Zero emission vehicle program. http://www.arb.ca.gov/msprog/zevprog/zevprog.htm (accessed March 16, 2010).

CDC (Centers for Disease Control and Prevention). 1999. Best practices for comprehensive tobacco control programs—April 1999. Atlanta, GA: U.S. Department of Health and Human Services, National Center for Chronic Disease Prevention and Health Promotion, Office on Smoking and Health.

Cross-Government Obesity Unit. 2010. Healthy weight, healthy lives: Two years on. http://www.dh.gov.uk/prod_consum_dh/groups/dh_digitalassets/documents/digitalasset/dh_113495.pdf (accessed March 4, 2010).

Foresight. 2007. Tackling obesities: Future choices. Project report, 2nd edition. London: U.K. Government Office for Science.

Forrester, J. W. 1991. System dynamics and the lessons of 35 years. In The systemic basis of policy making in the 1990s, edited by K. B. De Greene. Cambridge, MA: MIT Press.

Fowler, J. H., C. T. Dawes, and N. A. Christakis. 2009. Model of genetic variation in human social networks. Proceedings of the National Academy of Sciences of the United States of America 106(6):1720-1724.

Glass, T. A., and M. J. McAtee. 2006. Behavioral science at the crossroads in public health: Extending horizons, envisioning the future. Social Science and Medicine 62(7):1650-1671.

Grassley, N. C., C. Fraser, and G. P. Garnett. 2005. Host immunity and synchronized epidemics of syphilis across the United States. Nature 433(7024):417-421.

Hammond, R. A. 2009. Complex systems modeling for obesity research. Preventing Chronic Disease 6(3):A96.

Hoard, M., J. Homer, W. Manley, P. Furbee, A. Haque, and J. Helmkamp. 2005. Systems modeling in support of evidence-based disaster planning for rural areas. International Journal of Hygiene and Environmental Health 208(1-2):117-125.

Homer, J., G. Hirsch, M. Minniti, and M. Pierson. 2004. Models for collaboration: How system dynamics helped a community organize cost-effective care for chronic illness. System Dynamics Review 20(3):199-222.

Houghton, J., Y. Ding, D. Griggs, M. Noguer, P. J. van der Linden, X. Dai, K. Maskell, and C. A. Johnson. 2001. Climate change 2001: The scientific basis. Cambridge, UK: Cambridge University Press.

Huang, T. T., A. Drewnowski, S. K. Kumanyika, and T. A. Glass. 2009. A systems-oriented multilevel framework for addressing obesity in the 21st century. Preventing Chronic Disease 6(3):A82.

James, W. P. T., and T. Lobstein. 2009. BMI screening and surveillance: An international perspective. Pediatrics 124(Supplement 1).

Jebb, S. 2009. Developing a strategic framework to prevent obesity. Presented at the IOM Workshop on the Application of Systems Thinking to the Development and Use of Evidence in Obesity Prevention Decision-Making, March 16, Irvine, CA.

Kahneman, D., E. Diener, and N. Schwarz. 1999. Well-being: The foundations of hedonic psychology. New York: Russell Sage Foundation.

Leischow, S. J., and B. Milstein. 2006. Systems thinking and modeling for public health practice. American Journal of Public Health 96(3):403-405.

Leischow, S. J., A. Best, W. M. Trochim, P. I. Clark, R. S. Gallagher, S. E. Marcus, and E. Matthews. 2008. Systems thinking to improve the public’s health. American Journal of Preventive Medicine 35(2, Supplement 1):S196-S203.

Levy, D. T., H. Ross, L. Powell, J. E. Bauer, and H. R. Lee. 2007. The role of public policies in reducing smoking prevalence and deaths caused by smoking in Arizona: Results from the Arizona tobacco policy simulation model. Journal of Public Health Management and Practice 13(1):59-67.

Levy, D. T., A. L. Graham, P. L. Mabry, D. B. Abrams, and C. T. Orleans. 2010a. Modeling the impact of smoking-cessation treatment policies on quit rates. American Journal of Preventive Medicine 38(3, Supplement 1).

Levy, D. T., P. L. Mabry, A. L. Graham, C. T. Orleans, and D. B. Abrams. 2010b. Reaching Healthy People 2010 by 2013: A SimSmoke simulation. American Journal of Preventive Medicine 38(3, Supplement 1).

Mabry, P. L., D. H. Olster, G. D. Morgan, and D. B. Abrams. 2008. Interdisciplinarity and systems science to improve population health. A view from the NIH Office of Behavioral and Social Sciences Research. American Journal of Preventive Medicine 35(Supplement 2).

Massachusetts Department of Public Health. 2009. BMI screening guidelines for schools. http://www.mass.gov/Eeohhs2/docs/dph/mass_in_motion/community_school_screening.pdf (accessed April 5, 2010).

Meadows, D. 1999. Leverage points: Places to intervene in a system. Hartland, VT: The Sustainability Institute.

Midgley, G. 2000. Systemic intervention: Philosophy, methodology and practice. New York: Kluwer Academic/Plenum.

Milstein, B., and J. Homer. 2006. Background on system dynamics simulation modeling with a summary of major public health studies. Atlanta, GA: CDC. http://www.caldiabetes.org/get_file.cfm?contentID=501&ContentFilesID=389 (accessed February 3, 2010).

Milstein, B., and J. Homer. 2009. System dynamics simulation in support of obesity prevention decision-making. Presented at the Institute of Medicine Workshop on the Application of Systems Thinking to the Development and Use of Evidence in Obesity Prevention Decision-Making, March 16, Irvine, CA. http://www.iom.edu/~/media/Files/Activity%20Files/PublicHealth/ObesFramework/IOMIrvine16Mar09v52MilsteinHomer.ashx (accessed April 20, 2010).

Milstein, B., A. Jones, J. B. Homer, D. Murphy, J. Essien, and D. Seville. 2007. Charting plausible futures for diabetes prevalence in the United States: A role for system dynamics simulation modeling. Preventing Chronic Disease 4(3):1-8.

Mullen, J. 2009. Proposed Amendments to 105 CMR 200.000: Physical Examination of School Children. Letter to Massachusetts Public Health Council from Director, Bureau of Community Health Access and Promotion, Massachusetts Department of Public Health.

NCI (National Cancer Institute). 2007. Greater than the sum: Systems thinking in tobacco control. Tobacco control monograph no. 18. NCI Tobacco Control Monograph Series. NIH Pub. No. 06-6085. Bethesda, MD: U.S. Department of Health and Human Services, National Institutes of Health, National Cancer Institute.

Smith, G., E. F. Wolstenholme, D. McKelvie, and D. Monk. 2004. Using system dynamics in modeling mental health issues in the UK. Paper presented at 22nd International Conference of the System Dynamics Society, Oxford, England.

Sterman, J. D. 1989. Modeling managerial behavior: Misperceptions of feedback in a dynamic decision making experiment. Management Science 35:321-339.

Sterman, J. D. 2006. Learning from evidence in a complex world. American Journal of Public Health 96(3):505-514.

Sterman, J. D., and L. Booth Sweeney. 2007. Understanding public complacency about climate change: Adults' mental models of climate change violate conservation of matter. Climatic Change 80(3-4):213-238.

U.S. House Committee on Government Reform. 2005. The perplexing shift from shortage to surplus: Managing this season’s flu shot supply and preparing for the future. 1st Session, 109th Congress, February 10, 2005.

Vandenbroeck, I. P., J. Goossens, and M. Clemens. 2007. Obesity system atlas. Foresight tackling obesities: Future choices.