2

Methodology

CRITERIA FOR SELECTION OF COUNTRIES

The six countries selected by the sponsor for this review (Japan, Brazil, Russia, India, China, and Singapore, or JBRICS) are a select few among the many countries whose science and technology (S&T) policies are of potential interest to the United States. These particular countries were chosen because they vary in size, level of development, and perceived status as global attractors for S&T innovation. The BRIC countries (Brazil, Russia, India, and China) share several qualities: each has a large land area and is rich in natural resources, and each aims to position its political and economic systems to embrace and benefit from global capitalism. The other two countries, Japan and Singapore, although they contrast in size and notably lack natural resources, have made similar economic reforms in the relatively recent past and are widely considered models of success. The sponsor hoped that, by looking at these countries together, the Committee on Global Science and Technology Strategies and Their Effect on U.S. National Security would be able to develop a portrait of how a country uses S&T policy to transition from a developing to a developed country and to build a technical capability equal to or surpassing that of the United States. The committee was also asked to identify factors present in countries that have created successful environments for innovation.

Although many countries have S&T infrastructures that are of interest and could be studied, the limited time allotted for this review and the sponsor’s direction restricted the committee’s consideration to the six countries listed above.

CRITERIA FOR HIGH-IMPACT/KEY TECHNICAL AREAS

One of the committee’s goals was to identify, for each country, the technology areas with the greatest potential impact on that country’s future and on the United States. Paraphrasing the sponsor, the committee was asked to identify which technologies the six countries are picking as “winners”: what appear to be the key technologies on which the countries are focusing for future success? High-impact or key technology areas could be those that have received increased funding or have shown rapid or recent improvement in measured indicators. In most cases, the key technology areas were those areas of research and development (R&D) that the country has identified for industrial development or for improving the national standard of living. In some countries, however, the key technology areas were closely tied to military as well as economic competitiveness. The extent to which the committee evaluated such ties depended on how much information was available in the open literature.
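
The screening criterion described above (increased funding, or rapid improvement in a measured indicator) can be sketched as a simple filter. This is illustrative only: the `AreaTrend` record, the use of compound annual growth, the threshold values, and the sample data are all hypothetical assumptions, not the committee’s actual procedure or figures.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AreaTrend:
    """Hypothetical yearly series for one technology area in one country."""
    name: str
    funding: List[float]    # R&D funding per year, in any consistent unit
    indicator: List[float]  # a measured indicator per year, e.g., publications or patents


def growth_rate(series: List[float]) -> float:
    """Compound annual growth rate between the first and last values of a series."""
    years = len(series) - 1
    if years < 1 or series[0] <= 0:
        raise ValueError("need at least two years and a positive starting value")
    return (series[-1] / series[0]) ** (1 / years) - 1


def candidate_key_areas(areas, funding_threshold=0.10, indicator_threshold=0.15):
    """Flag areas whose funding OR indicator grew at least as fast as a threshold.

    The default thresholds are illustrative assumptions only.
    """
    return [
        area.name
        for area in areas
        if growth_rate(area.funding) >= funding_threshold
        or growth_rate(area.indicator) >= indicator_threshold
    ]


if __name__ == "__main__":
    # Made-up numbers for a hypothetical country, for illustration only.
    areas = [
        AreaTrend("nanotechnology", funding=[100, 115, 130], indicator=[50, 51, 52]),
        AreaTrend("biotechnology", funding=[80, 81, 82], indicator=[30, 36, 45]),
        AreaTrend("textiles", funding=[60, 59, 58], indicator=[20, 20, 19]),
    ]
    print(candidate_key_areas(areas))  # ['nanotechnology', 'biotechnology']
```

The `or` in the filter mirrors the text: an area qualifies on funding growth alone or on indicator growth alone. A flagged area is only a candidate; the country’s stated plans and the available resources would still need to be weighed.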

Committee members reviewed each country’s S&T plans alongside its observed S&T progress and common indicators to identify the technology areas that could have the greatest impact. These areas are addressed in the country-specific chapters (Chapters 3 through 8). Where possible, the committee tried to assess whether there are better indicators of progress for each individual country and whether each country has the resources to make significant or economic advances in the identified key technology areas. Because the committee focused on country-specific S&T strategies rather than on particular areas of S&T, the report’s coverage of important S&T developments is uneven across the countries.

METHOD OF INFORMATION GATHERING AND EVALUATION

Information was gathered by reading available S&T plans from the countries of interest, listening to experts’ presentations on S&T focus areas in the six countries and the United States, and reading other publicly available documents on the S&T enterprises of the six countries. The committee reviewed rankings, indices, and trends compiled by other organizations for items such as patents filed, journal articles published, degrees earned in S&T fields, and funding devoted to research and infrastructure. The committee also reviewed the countries’ stated S&T policies, as published in their S&T plans, and, when possible, their S&T spending. In all cases, the funding figures primarily reflect nonmilitary R&D spending. References are included in the applicable country sections.

The committee focused not only on gathering information but also on assessing and interpreting it to understand how S&T are being developed in other countries and which existing or emerging technologies may pose threats to U.S. security. To evaluate the information collected, committee members relied on their own expertise, experience, and judgment, as well as on personal conversations with experts.

One evaluation method was to compare a country’s S&T plans with information on its S&T spending. Investment in R&D as a percentage of gross domestic product (GDP) can indicate the level of national commitment to S&T development. If spending in an area is not sufficient to support stable R&D (or progress in that area), then that area may actually be a low priority for the country, even if its policy states otherwise. Although useful, such measures offer an incomplete portrait of available resources: they do not reflect cost differences between local economies, the impact of technology transfer, or the level of innovation in a given country.

The committee also tried to identify indicators that capture the major S&T-related trends in each country. Commonly used indicators include patents, publications, degrees, and spending. However, interpreting these data in the context of a country’s unique and evolving circumstances is a considerable challenge. Traditional academic and economic measures may be unreliable in some cases because a country’s standards, or its level of involvement in the global economy or the Western academic establishment, may differ. For example, academic advancement in China is linked to the number of papers published, which gives scientists an incentive to produce large numbers of lower-quality papers that do not necessarily indicate more (or higher-quality) research. One potential solution to this problem is to focus on communities of researchers, as revealed by co-authorship, rather than on counts of individual papers (Klavans and Boyack, 2009).
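
One simple way to count communities rather than papers is to treat authors as nodes, link any two who appear on the same paper, and count the connected groups. The sketch below does this with a union-find structure. It is a deliberately simplified stand-in, not the method of Klavans and Boyack (2009); the function name `coauthor_communities` and the toy paper list are hypothetical.

```python
from collections import defaultdict


def coauthor_communities(papers):
    """Group authors into communities linked by chains of co-authorship.

    `papers` is a list of author lists. Two authors end up in the same
    community if they co-wrote a paper or are connected through co-authors
    (connected components of the co-authorship graph, found by union-find).
    """
    parent = {}

    def find(author):
        parent.setdefault(author, author)
        while parent[author] != author:
            parent[author] = parent[parent[author]]  # path halving
            author = parent[author]
        return author

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    for authors in papers:
        for author in authors:
            find(author)  # registers authors of single-author papers too
        for coauthor in authors[1:]:
            union(authors[0], coauthor)  # linking each to the first author joins them all

    communities = defaultdict(set)
    for author in list(parent):
        communities[find(author)].add(author)
    return list(communities.values())


if __name__ == "__main__":
    # Toy data: six papers, but only two research communities.
    papers = [["A"], ["A"], ["A"], ["A"], ["B", "C"], ["C", "D"]]
    communities = coauthor_communities(papers)
    print(len(papers), len(communities))  # 6 2
```

In this toy case, four papers by a single author count as one community, which is the point of the approach: raw paper counts reward volume, whereas community counts do not.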

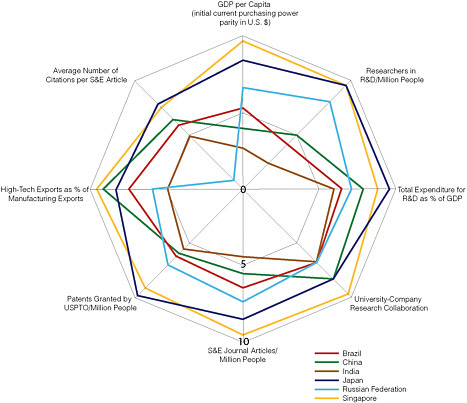

The World Bank has developed a benchmarking tool called the Knowledge Assessment Methodology (KAM) to help countries identify challenges and opportunities. The KAM rates countries on 83 variables identified as necessary elements of a functioning knowledge economy, scoring each country on each variable on a scale from 1 (weakest) to 10 (strongest). Figure 2-1 shows the KAM innovation scorecards for the JBRICS countries on several traditionally cited indicators and compares them with the scorecard for the United States.
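
To make the 1-to-10 scale concrete, the sketch below rescales one raw variable across a set of countries by rank: a country’s score depends on how many countries perform worse, so the strongest receives 10 and the weakest receives 1. The rank-based rule, the `normalize_scores` name, and the placeholder data are hypothetical assumptions for illustration; the World Bank’s own normalization formula may differ.

```python
def normalize_scores(values, higher_is_better=True):
    """Rescale one variable onto a 1 (weakest) to 10 (strongest) scale by rank.

    score = 1 + 9 * (number of countries doing worse) / (N - 1),
    so tied countries receive the same score.
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two countries to rank")
    scores = {}
    for country, value in values.items():
        if higher_is_better:
            worse = sum(1 for other in values.values() if other < value)
        else:
            worse = sum(1 for other in values.values() if other > value)
        scores[country] = 1 + 9 * worse / (n - 1)
    return scores


if __name__ == "__main__":
    # Placeholder raw values for one hypothetical variable; not real KAM data.
    raw = {"Country A": 3.0, "Country B": 1.0, "Country C": 2.0}
    print(normalize_scores(raw))
    # {'Country A': 10.0, 'Country B': 1.0, 'Country C': 5.5}
```

Passing `higher_is_better=False` handles variables for which a lower raw value is stronger. Because the score depends only on rank, it says nothing about how far apart two countries are on the underlying variable.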

Economic measures are designed to track patterns in the output of goods from material resources. They do not translate well to measuring innovation, which is not easily quantified. Unlike manufacturing, health policy, or education policy, where records can be collected and analyzed, innovation lacks reliable data on the factors that produce it or encourage its adoption. Research on the impacts of organizational structure and decision making is also very limited. R&D investment, often used to predict industry output, may be an unreliable indicator: an increase in R&D spending does not always increase output or improve other indicators. For example, between 1992 and 1995, a series of R&D-focused recovery packages in Japan failed to reverse a decline in industrial R&D because of policies that discouraged innovation and university-industry collaboration (OECD, 2009). Sweden, too, has seen little growth in recent years despite population growth and heavy investment in R&D (Lane, 2009).

FIGURE 2-1 Comparison of supply and output indicators in JBRICS countries using Knowledge Assessment Methodology. SOURCE: World Bank. 2009. Knowledge for Development (K4D) Website: Custom Scorecards (KAM 2009). Tool available at http://info.worldbank.org/etools/kam2/KAM_page3.asp?default=1. Last accessed June 14, 2010.

A constellation of cultural, economic, and policy factors must be considered when evaluating the capability of a country in S&T innovation.

In its review, the committee attempted to identify nation-specific indicators that should be used alongside the more globally relevant observables discussed above (e.g., patents, publications, degrees awarded, and S&T budgets) to better monitor, track, and quantify S&T development in other countries and the United States in the future. These nation-specific indicators are discussed in the individual country chapters and summarized in Chapter 9.

RESEARCH TIMEFRAME

The Committee on Global Science and Technology Strategies and Their Effect on U.S. National Security (see Appendix A) started research for the report at its first meeting in November 2009 and completed research in March 2010. During the first three meetings, committee members received briefings and discussed the report structure and approach. Writing and research were primarily performed outside the meetings, and the final meeting was devoted to preparing the first version of the report for review. See Appendix B for a full listing of speakers and briefings.

REFERENCES

Klavans, R., and K. W. Boyack. 2009. Toward an objective, reliable, and accurate method for measuring research leadership. Scientometrics 82(3):539-553.

Lane, J. 2009. Assessing the impact of science funding. Science 324:1273-1275.

OECD (Organisation for Economic Co-operation and Development). 2009. A Forward-Looking Response to the Crisis: Fostering an Innovation-Led, Sustainable Recovery. Paris: OECD. Available at http://www.ioe-emp.org/fileadmin/user_upload/documents_pdf/globaljobscrisis/generaldocs/Fostering_an_Innovation-led_and_Sustainable_Recovery.pdf. Last accessed March 17, 2010.