5

The Path Forward

Recommendation: To make progress in incorporating risk analysis into the Department of Homeland Security (DHS), the committee recommends that the agency focus on the following five actions:

- Build a strong risk capability and expertise at DHS.
- Incorporate the Risk = f(T,V,C) framework, fully appreciating the complexity involved with each term in the case of terrorism.
- Develop a strong social science capability and incorporate the results fully in risk analyses and risk management practices.
- Build a strong risk culture at DHS.
- Adopt strong scientific practices and procedures, such as careful documentation, transparency, and independent outside peer review.

BUILD A STRONG RISK CAPABILITY AND EXPERTISE AT DHS

Improve the Technical Capabilities of Staff

The Statement of Task for this study requires attention to staffing issues, to answer both element (b), regarding DHS’s capability to implement and appropriately use risk analysis methods to represent and analyze risks, and element (c), regarding DHS’s capability to use risk analysis methods to support DHS decision making. The right human resources are essential for effective risk analysis. The goal should be a strong, multi-disciplinary staff in the Office of Risk Management and Analysis (RMA), and throughout DHS, with diverse experience in risk analysis. Although RMA has made an effort to hire people with diverse backgrounds, most do not come to the office with previous experience in risk analysis.

As part of its responsibility in implementing the Integrated Risk Management Framework (IRMF), RMA could be spearheading DHS efforts to develop a solid foundation of expertise in all aspects of risk analysis. This role stems in part from “Delegation 17001” from the DHS Secretary, which, among other things, assigns RMA the responsibility of providing core analytical and computational capabilities to support all department components in assessing and quantifying risks.

The long-term success of risk analysis throughout DHS and the improvement of scientific practice both depend on the continued development of an adequate in-house workforce of well-trained risk assessment experts. The need for technical personnel skilled in risk analysis throughout DHS should be addressed on a continuing basis. Decision support requires the ready availability of such resources, to ensure that specific risk assessments are carried out according to the appropriate guidelines and their results are clear to risk managers. Such a staff would also be responsible for the development and periodic revision of risk analysis guidelines. As DHS expands its commitment to risk analysis, personnel who are up to date on scientifically grounded methods for carrying out such analyses will be increasingly in demand. In the course of its study, the committee saw little evidence of DHS being aware of the full range of state-of-the-art risk analysis and decision support tools, including those designed specifically to deal with deep uncertainty. Recruitment of additional personnel is clearly needed. Two other paths should also be followed: continuing education for personnel currently in DHS and for those working for contractors, and personnel exchange programs between DHS and other stakeholders.

To fully develop its capabilities for making strong risk-based decisions, DHS is critically dependent on staff with a good understanding of the principles of risk analysis who are thoughtful about how to improve DHS processes. DHS needs more such people; the lessons provided by the experience of the Environmental Protection Agency (EPA) and similar risk-informed agencies are instructive. At present, DHS is heavily dependent on private contractors, academic institutions, and government laboratories for the development, testing, and use of models; acquisition of data for incorporation into models; interpretation of results of modeling efforts; and preparation of risk analyses. While there are advantages in relying on external expertise that is not available within DHS, in-house specialists should be fully aware of the technical content of such work. In particular, they need to ensure the scientific integrity of the approaches and understand the uncertainties of the results that are associated with risk models and the products of these models. Contractor support will remain essential, but the direction of such work should be under the tight control of in-house staff. In-house staff would also provide the linkages with other agencies that are so critical to success and ensure that these interagency efforts are scientifically coordinated and appropriately targeted.

To truly become a risk-informed organization, DHS needs a long-term effort in recruiting and retaining people with strong expertise relevant to the multidisciplinary field of risk management, along with programs designed to build up a risk-aware culture. For example, there are pitfalls in the use of risk analysis tools (Cox, 2008). Sometimes they are minor, but other times they might invalidate the results. DHS needs deep knowledge of risk analysis to guard against misapplication of tools and data and to ensure that its risk analysis produces valid information. The need to build up a risk-aware culture might very well extend to taking responsibility for training DHS partners in states, localities, tribal areas, and territories, as well as owners and operators of Critical Infrastructure and Key Resources (CIKR) assets.

To offset the department’s recognized shortage of risk analysis personnel, which was understandable when DHS was first established, DHS has elected to outsource a good deal of risk-related technical work. Other federal agencies have adopted the same strategy, but the approach has pitfalls, especially when work of a specialized technical character is outsourced. For example, it is difficult for agency staff to stay current in a technical discipline if they are not actively engaged in such work. Serving as a technical liaison is not enough to maintain or expand one’s skill base. Sooner or later, DHS staff will find that they lack enough insight to sort through competing claims from contractors, choose the contractor for a given technical task, specify and guide the work, evaluate the work, and implement the results. Moreover, a paucity of skills at the program level can undercut the ability of senior leadership to make effective use of contractor work. In the case of two models reviewed by the committee, Biological Threat Risk Assessment (BTRA) and Terrorism Risk Assessment and Management (TRAM), it was not always clear that DHS leadership fully understood the fundamental scientific concerns with the risk analysis methodology chosen, the underlying assumptions used, the data inputs required, or how to use the analysis outputs.

Some risk models developed by DHS contractors remain proprietary, which introduces additional problems. How will DHS enable institutional learning if data management, models, and/or analysis capabilities are proprietary? In addition, proprietary work could slow the adoption and appropriate utilization of risk analysis models, methods, and decision support systems.

At present, DHS has a very thin base of expertise in risk analysis—many staff members are learning on the job—and a heavy reliance on external contractors. The Government Accountability Office (GAO) considers that DHS has significant organizational risk, due to the high volume of contractors in key positions supporting DHS activities, the higher-than-desired turnover of key DHS personnel, and a lack of continuity in knowledge development and transfer.1 The committee agrees with that assessment. This combination of heavy reliance on contractors and a small in-house skill base also tends to produce risk-modeling tools that require a contractor’s expertise and intervention for running and interpreting. This is an undesirable situation for risk management, which is most useful when the assumptions behind the model are transparent to the risk manager or decision maker and that person can directly exercise the model in order to explore “what-if” scenarios.

These concerns lead to the following recommendation:

Recommendation: DHS should have a sufficient number of in-house experts, who also have adequate time, to define and guide the efforts of external contractors and other supporting organizations. DHS’ internal technical expertise should encompass all aspects of risk analysis, including the social sciences. DHS should also evaluate its dependence on contractors and the possible drawbacks of any proprietary arrangements.

The committee recognizes that hiring appropriate technical people is difficult for DHS. Staff from both the Homeland Infrastructure Threat and Risk Analysis Center (HITRAC) and RMA voiced frustration in recruiting technical talent. This was especially true for people with advanced degrees and prior work experience across a wide variety of risk analysis fields, such as engineering disciplines, social sciences, physical sciences, public health, public policy, and intelligence threat analysis. Both units identified two constraints on their ability to make offers and bring personnel on board in a time frame competitive with the private sector: the longer lead times of 6-12 months required for security clearances, and the DHS Human Resources process.

RMA has used the competitive Presidential Management Fellows Program to manage lead times in recruiting graduating M.S. and Ph.D. students. HITRAC works with key contractors to access technical staff while waiting for the DHS Human Resources recruiting process. Both RMA and HITRAC are actively educating job candidates and working on candidate communications to manage the longer lead times required in recruiting and signing technical talent. The committee hopes that DHS can improve its human resources recruiting process and close the time gap for recruiting top technical talent.

It is unlikely that DHS can fully develop a capability for strong risk-based decision making without such a staff; again, the lessons provided by the experience of EPA and similar agencies are instructive. Contractor support will remain essential, but the direction of such work should be under the tight control of in-house staff.

Such an in-house staff would also provide the linkages with other agencies that are so critical to success and ensure that those interagency efforts are scientifically coordinated and appropriately targeted.

Recommendation: DHS should convene an internal working group of risk analysis experts to work with RMA and Human Resources. The group should develop a long-term plan for building a multidisciplinary risk analysis staff throughout the department, along with practical steps for ensuring such a capability on a continuing basis. The nature and size of the staff and the rate of staffing should be matched to the department’s long-term objectives for risk-based decision making.

DHS needs an office that can set high standards for risk analysis and provide guidance and resources for achieving those standards across the department. This office would have the mandate, depth of experience, and external connections to set a course toward risk analysis of the caliber that is needed for DHS’s complex challenges. These challenges are putting new demands on the discipline of risk analysis, so DHS needs the capability to recognize best practices elsewhere and understand how to adapt them. This requires deep technical skill, with not only competence in existing methods but also enough insight to discern whether new extensions are feasible or overoptimistic. It also requires strong capabilities in the practice of science and engineering. These include an understanding of the importance of clear models, uncertainty estimates, and model validation, and the use of peer review and high-caliber outside advice to sort through technical differences of opinion. The office that leads this effort must earn the respect of risk analysts throughout DHS and elsewhere. If RMA is to be the steward of DHS’s risk analysis capabilities, it has to play by those rules and be an intellectual leader in this field. This will be difficult.

It is not obvious that RMA is having much impact: in conversations with a number of DHS offices and components about topics within risk analysis, RMA is rarely mentioned. This could simply reflect the fact that Enterprise Risk Management (ERM) processes generally aim to leverage unit-level risk with a light touch and avoid overlaying processes or otherwise exerting too much oversight. However, it could also be an indication that RMA is not adding value to DHS’s distributed efforts in risk analysis.

Discard the Idea of a National Risk Officer

The director of RMA suggested to the committee that the DHS Secretary, who already serves as the Domestic Incident Manager during certain events, could serve as the “country’s Chief Risk Officer,” establishing policy and coordinating and managing national homeland security risk efforts.2 A congressional staff member supported the concept of establishing a Chief Risk Officer for the nation.3

The committee has serious reservations.

Risk assessments are done for many issues, including health effects, technology, and engineered structures. The approaches taken differ depending on the issues and the agency requirements. The disciplinary knowledge required to address these issues ranges from detailed engineering and physical sciences to social sciences and law. For a single entity to address this broad range wisely and adequately would require a large, perhaps separate, agency. In addition, as other National Research Council (1989; 1996) studies have shown, risk analysis is best done with interactions between the risk analysts and stakeholders, including the involved government agencies. Care must obviously be taken not to let pressures from the stakeholders warp the analysis, but interactions with stakeholders can improve the analysis. To be effective, such interactions require federal agency staff to understand the issues and the values of stakeholders (NRC, 1989). Each federal agency is responsible for this; some do it well, others poorly. However, locating all of this work in one agency and requiring its personnel to stay current across every field is unlikely to succeed.

In the previous administration, the White House Office of Management and Budget (OMB) attempted to develop a single approach to risk assessment to be used by all federal agencies. That effort was withdrawn following strong criticism by an NRC (2007b) report.

The President will be issuing a revision to Executive Order 12866, Regulatory Planning and Review.4 This executive order may describe how risk assessment is to be done. It certainly will serve as guidance for some risk analysis. What a DHS office for a National Risk Officer could add is difficult to imagine. More likely such an office would lead to inefficiency and interminable bureaucratic arguments. DHS has been afflicted with shifting personnel and priorities. Neither would be of benefit in attempting to establish a single entity for risk management across all federal agencies.

Recommendation: Until risk management across DHS has been shown to be well coordinated and done effectively, it is premature to consider expanding DHS’s mandate to include risk management in all federal agencies.

INCORPORATE THE RISK = f(T,V,C) FRAMEWORK, FULLY APPRECIATING THE COMPLEXITY INVOLVED WITH EACH TERM IN THE CASE OF TERRORISM

Even if DHS can properly characterize its basic data and has confidence in those data, the department faces a significant challenge in adopting risk analysis to support risk-informed decision making because there are many different tools, techniques, modeling approaches, and analysis methods to assess risk. Quantitative risk analysis is not the only answer, and it is not always the best approach. A good discussion of this is given in Paté-Cornell (1996). DHS should recognize that quantitative models are only one type of method and may not be the most appropriate for all risk situations.

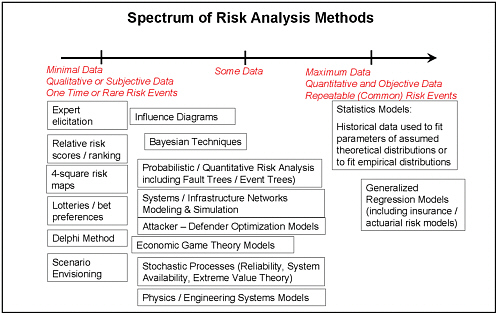

Figure 5-1 shows a spectrum of more traditional risk analysis methods, from the more qualitative or subjective methods on the left to methods that rely heavily on data of high quality, volume, and objectivity on the right. The figure also attempts to capture the rapidly developing capabilities in modeling terrorism and intelligent adversaries, through tools such as attacker-defender (or defender-attacker-defender) models, game theory applied to terrorism, and systems or infrastructure network models and simulation. Given the type and amount of available input data and the type of decision to be made, the figure suggests which types of models and methods are available for risk analysis.

FIGURE 5-1 Spectrum of traditional risk analysis methods.

Rarely is there a single “right” risk analysis tool, method, or model to provide “correct” analysis to support decision making. In general, a risk analysis is intended to combine data and modeling techniques with subject matter expertise in a logical fashion to yield outputs that differentiate among decision options and help the decision maker improve his or her decision over what could be accomplished merely with experience and intuition. Such models can be used to gain understanding of underlying system behavior and how risk events “shock” and change the behavior of the system of interest; to evaluate detection, protection, and prevention options (risk-based cost-benefit analysis); or to simply characterize and compare the likelihood and severity of various risk events.
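To illustrate one use listed above, risk-based cost-benefit comparison of protection options, the following Python sketch scores three hypothetical options by expected loss avoided minus cost. It is a minimal sketch under stated assumptions, not a DHS method. Every name and number is invented for illustration, including `BASELINE`, `OPTIONS`, the ten-year horizon, and the absence of discounting. The sketch also holds T fixed when V changes, an assumption that is questionable for terrorism.

```python
# Hypothetical baseline inputs for one asset: annual attack probability (t),
# probability an attack succeeds (v), and consequence in arbitrary loss units (c).
BASELINE = {"t": 0.02, "v": 0.6, "c": 500.0}

# Hypothetical options: one-time cost (same loss units) and fractional cut in v.
OPTIONS = {
    "perimeter_barriers": {"cost": 2.0, "v_reduction": 0.30},
    "detection_sensors": {"cost": 5.0, "v_reduction": 0.50},
    "staff_training": {"cost": 0.5, "v_reduction": 0.10},
}


def annual_risk(t: float, v: float, c: float) -> float:
    """Expected annual loss under the simple product form (T held fixed)."""
    return t * v * c


def net_benefit(option: dict, horizon_years: int = 10) -> float:
    """Loss avoided over the horizon (undiscounted, an assumption) minus cost."""
    base = annual_risk(**BASELINE)
    reduced = annual_risk(
        BASELINE["t"], BASELINE["v"] * (1 - option["v_reduction"]), BASELINE["c"]
    )
    return (base - reduced) * horizon_years - option["cost"]


def rank_options() -> list:
    """Option names ordered from largest to smallest net benefit."""
    return sorted(OPTIONS, key=lambda name: net_benefit(OPTIONS[name]), reverse=True)


if __name__ == "__main__":
    for name in rank_options():
        print(f"{name}: net benefit {net_benefit(OPTIONS[name]):.1f}")
```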

Many of the models and analyses reviewed by the committee treat chemical, biological, radiological, and nuclear (CBRN) terrorism and weapons of mass destruction (WMD) events as separate cases from natural hazards and industrial accidents. That may be because, in a quantitative assessment based solely on financial loss and loss of life, the risk of terrorist events tends to rank substantially lower than the risk of natural hazards and accidents. The quantitative assessments cannot capture the social costs of terrorism—the terror of terrorism.

A wide body of literature is available on appropriate methods for developing and applying technical models for policy analysis and decision support in a variety of disciplines (NRC, 1989, 1994, 1996, 2007d). The EPA, for example, provides support for environmental modeling in a number of its program and research offices, with a coordinated agency-wide effort led by the EPA Council for Regulatory Environmental Modeling (CREM) (http://www.epa.gov/CREM/index.html).

The purpose of the CREM is to

- Establish and implement criteria so that model-based decisions satisfy regulatory requirements and agency guidelines;
- Document and implement best management practices to use models consistently and appropriately;
- Document and communicate the data, algorithms, and expert judgments used to develop models;
- Facilitate information exchange among model developers and users so that models can be iteratively and continuously improved; and
- Proactively anticipate scientific and technological developments so EPA is prepared for the next generation of environmental models.

Of particular note to DHS, the EPA (2009) Guidance Document on the Development, Evaluation and Application of Environmental Models addresses the use and evaluation of proprietary models, which DHS relies on in some contexts:

…To promote the transparency with which decisions are made, EPA prefers non-proprietary models when available. However, the Agency acknowledges there will be times when the use of proprietary models provides the most reliable and best-accepted characterization of a system. When a proprietary model is used, its use should be accompanied by comprehensive, publicly available documentation. This documentation should describe:

- The conceptual model and the theoretical basis for the model.
- The techniques and procedures used to verify that the proprietary model is free from numerical problems or “bugs” and that it truly represents the conceptual model.
- The process used to evaluate the model and the basis for concluding that the model and its analytical results are of a quality sufficient to serve as the basis for a decision.
- To the extent practicable, access to input and output data such that third parties can replicate the model results. [Available online at: http://www.epa.gov/crem/library/cred_guidance_0309.pdf, pp. 31-34]

Proprietary models used in DHS include at least the BTRA, the CTRA, and the Transportation Security Administration’s (TSA’s) Risk Management Tool (RMAT). For those many areas where DHS models risk as a function of T, V, and C, the use of non-proprietary models would mean that the best models for each of these elements (T, V, and C) could be applied to the particular decision being addressed, rather than relying on one fixed risk model.

The DHS Risk Analysis Framework

In many cases, the DHS approach to risk analysis involves application of the simple framework that Risk = Threat × Vulnerability × Consequences (or R = T × V × C). The Congressional Research Service’s 2007 report on the Homeland Security Grant Program (HSGP), The Department of Homeland Security’s Risk Assessment Methodology: Evolution, Issues, and Options for Congress (Masse et al., 2007), describes how this conceptual framework has evolved in that context through three stages of development:
- In the first stage, risk was generally equated with population.
- In the second stage, risk was assessed, primarily in additive form, as the sum of threat, critical infrastructure vulnerability, and population density.
- In the third and current stage, the R = T × V × C framework is used and the probability of threat events has been introduced, although with unresolved issues (see below).

The general framework of R = T × V × C builds upon accepted practice in risk assessment where, at its simplest level, risk has often been equated with the probability of events multiplied by the magnitude of expected consequences. The incorporation of vulnerability into this equation is entirely appropriate because it provides needed detail on how events lead to different types and magnitudes of consequences. In its 2005 review, GAO evaluated the R = T × V × C framework as containing the key elements required for a sound assessment of risk. The committee concurs with this judgment: Risk = A Function of Threat, Vulnerability, and Consequences (Risk = f(T,V,C)) is a suitable philosophical decomposition framework for organizing information about risks. Such a conceptual approach to analyzing risks from natural and man-made hazards is not new, and the special case of Risk = T × V × C has been in various stages of development and refinement for many years. However, the committee concludes that the multiplicative formula, Risk = T × V × C, is not an adequate calculation tool for estimating risk in the terrorism domain, within which independence of threats, vulnerabilities, and consequences does not typically hold.

While the basic structure of the R = f(T,V,C) framework is sound, its operationalization by DHS has been seriously deficient in a number of respects. In particular, problems exist with how each term of the equation has been conceptualized and measured, beginning with defining and estimating the probabilities of particular threats in the case of terrorism. The variables, indicators, and measures employed in calculating T, V, and C can be crude, simplistic, and misleading. Defining such threats and estimating probabilities are inherently challenging for three reasons: the lack of experience with such events, the associated absence of a database from which reliable estimates of probabilities may be made, and the presence of an intelligent adversary who may seek to defeat preparedness and coping measures. DHS has employed a variety of methods to compensate for this lack of data, including game theory, “red-team” analysis, scenario construction, and subjective estimates of both risks and consequences. Even here, however, DHS has often failed to use state-of-the-art methods, such as the expert elicitation methods pioneered at Carnegie Mellon University. As a result, the full range of threats and their associated probabilities remains highly uncertain, a situation that is unlikely to change in the near term, even with substantial efforts and expenditures for more research and analysis. This reality seriously limits what can be learned from the application of risk assessment techniques as undertaken by DHS.

The DHS assessment of vulnerabilities has focused on critical infrastructure and the subjectively assessed likelihood that particular elements of critical infrastructure will become targets for terrorist attacks. Subjective expert opinion, including that of the managers of these facilities, has been used to estimate the “attractiveness” of targets for terrorists. Attractiveness is judged to provide a measure of the likelihood of exposure (i.e., the likelihood that a target may be attacked). However, this addresses only one of the three dimensions generally accepted in risk analysis as contributing to vulnerability: exposure. The other two dimensions, coping capacity and resilience (or long-term adaptation), are generally overlooked in DHS vulnerability analyses. As a result, the DHS program to reduce the nation’s vulnerabilities to terrorist attack has focused heavily on the “hardening of facilities.”

This tendency to concentrate on hardware and facilities and to neglect behavior and the response of people remains a major gap in the DHS approach to risk and vulnerability. This omission results in a partial analysis of a complex problem.

Uncertainty and variability analyses are essential ingredients in a sound assessment of risk. This was most recently pointed out at length in the NRC (2009) report Science and Decisions: Advancing Risk Assessment, and there is general consensus in the field of risk analysis on this issue. The issue is particularly important in DHS risk-related work, especially for terrorism, where uncertainty is very large. As many authoritative studies have noted, it is essential to assess the levels of uncertainty associated with components of the risk assessment and to communicate these uncertainties forthrightly to users and decision makers. Regrettably, this has not been achieved in much of the DHS analysis of risk. Instead of estimating the types and levels of uncertainty, DHS has frequently chosen to weight its consequence analyses heavily, because magnitudes of effects can be more easily estimated there. It has correspondingly reduced the weight attached to threats, where the uncertainties are large. This is not an acceptable way of dealing with uncertainty. Rather, the large uncertainties associated with the difficult problem of threat assessment should be forthrightly stated and communicated. It would be helpful to have further assessment of the types of uncertainty involved. Such an assessment would distinguish uncertainties that can be reduced by further data gathering and research from those that lie in the domain of “deep uncertainty,” where further research in the near term is unlikely to change substantially the existing levels of uncertainty. Sensitivity analyses tied to particular risk management strategies could help identify which types of data would be most important.
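A minimal Python sketch of the alternative described above, stating uncertainty rather than down-weighting threat, follows. It propagates assumed ranges for T, V, and C through the product form by Monte Carlo sampling and reports a 90 percent interval. It then runs a crude one-at-a-time sensitivity check that shows which input's uncertainty dominates. The ranges, the uniform distributions, and the independence of the three inputs are all illustrative assumptions. For terrorism, independence is exactly what may fail.

```python
import random

# Hypothetical input ranges for a single scenario (illustrative assumptions only).
T_RANGE = (0.001, 0.05)     # annual attack probability: wide, deeply uncertain
V_RANGE = (0.3, 0.6)        # probability that an attack succeeds
C_RANGE = (800.0, 1200.0)   # consequence in arbitrary loss units


def sample_risk(rng, t_range=T_RANGE, v_range=V_RANGE, c_range=C_RANGE) -> float:
    """One draw of T x V x C, treating the inputs as independent uniforms (assumed)."""
    return rng.uniform(*t_range) * rng.uniform(*v_range) * rng.uniform(*c_range)


def risk_interval(n: int = 20_000, seed: int = 1, **ranges) -> tuple:
    """5th, 50th, and 95th percentiles of the simulated risk."""
    rng = random.Random(seed)
    draws = sorted(sample_risk(rng, **ranges) for _ in range(n))
    return draws[int(0.05 * n)], draws[int(0.50 * n)], draws[int(0.95 * n)]


def sensitivity(n: int = 20_000, seed: int = 1) -> dict:
    """Width of the 90% interval after fixing each input at its midpoint.

    The input whose fixing narrows the interval most is the one where better
    data would reduce overall uncertainty most (a crude one-at-a-time measure).
    """
    widths = {}
    for name, key, bounds in (
        ("T", "t_range", T_RANGE),
        ("V", "v_range", V_RANGE),
        ("C", "c_range", C_RANGE),
    ):
        mid = (bounds[0] + bounds[1]) / 2
        lo, _, hi = risk_interval(n, seed, **{key: (mid, mid)})
        widths[name] = hi - lo
    return widths


if __name__ == "__main__":
    lo, med, hi = risk_interval()
    print(f"risk: median {med:.2f}, 90% interval {lo:.2f} to {hi:.2f}")
    for name, width in sensitivity().items():
        print(f"fix {name} at midpoint -> interval width {width:.2f}")
```

With these assumed ranges, fixing T narrows the interval far more than fixing V or C. That result is a toy version of using sensitivity analysis to decide where more data would matter most.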

Even a sound framework of risk leaves unresolved what the decision rule should be for combining terms into an integrative, or multidimensional, metric of risk. In the risk field, it is commonly assumed that the terms should be unweighted unless there is compelling evidence to support a weighting process, and that the terms should be multiplied by each other. In DHS applications, it is unclear how risk is to be calculated. Sometimes terms are multiplied; other times they are added. These two rules give inconsistent and incomparable results about risk. A coherent and consistent process needs to be developed to which all assessments adhere.
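How far the additive and multiplicative rules can diverge is easy to show with a small Python sketch over three hypothetical sites whose T, V, and C scores are normalized to a 0-to-1 scale. The site names and scores are invented for illustration. Site A has high threat and vulnerability but negligible consequence. It ranks second under addition and last under multiplication, because a near-zero factor pulls the whole product toward zero.

```python
# Hypothetical normalized (T, V, C) scores, each on a 0-to-1 scale.
SITES = {
    "A": (0.9, 0.9, 0.05),
    "B": (0.4, 0.4, 0.4),
    "C": (0.1, 0.9, 0.9),
}


def additive(t: float, v: float, c: float) -> float:
    """Second-stage style combination: unweighted sum of the terms."""
    return t + v + c


def multiplicative(t: float, v: float, c: float) -> float:
    """Product form R = T x V x C."""
    return t * v * c


def rank(rule) -> list:
    """Site names ordered from highest to lowest risk under the given rule."""
    return sorted(SITES, key=lambda s: rule(*SITES[s]), reverse=True)


if __name__ == "__main__":
    print("additive:      ", rank(additive))
    print("multiplicative:", rank(multiplicative))
```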

Conclusions:

- The basic risk framework of Risk = f(T,V,C) used by DHS is sound and in accord with accepted practice in the risk analysis field.
- DHS’ operationalization of that framework (its assessment of individual components of risk and the integration of these components into a measure of risk) is in many cases seriously deficient and is in need of major revision.
- More attention is urgently needed at DHS to assessing and communicating the assumptions and uncertainties surrounding analyses of risk, particularly those involved with terrorism.

Until these deficiencies are corrected, only low confidence should be placed in most of the risk analyses conducted by DHS.

The FY 2009 Homeland Security Grant Guidance describes the DHS approach to risk assessment as (DHS, 2009):

Risk will be evaluated at the Federal level using a risk analysis model developed by DHS in conjunction with other Federal entities. Risk is defined as the product [emphasis added] of three principal variables:

Threat—the likelihood of an attack occurring

Vulnerability—the relative exposure to an attack

Consequence—the expected impact of an attack

The committee says that DHS “tends to prefer” the definition Risk = T × V × C because it is unclear whether that formula is followed in the grants program: that program’s weighting of threat by 0.10 or 0.20 does not make sense unless some other formulation is used.5 Moreover, committee discussions with staff from the Office of Infrastructure Protection (IP) and the National Infrastructure Simulation and Analysis Center (NISAC) illuminated how T, V, and C are assessed, but there does not seem to be a standard protocol for developing a measure of risk from those component assessments. RMA defines risk in the DHS Risk Lexicon as “the potential for an adverse outcome assessed as a function of threats, vulnerabilities, and consequences associated with an incident, event, or occurrence [emphasis added]” (DHS-RSC, 2008).
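The point about the grant program's threat weight can be checked with a small Python sketch. Under a pure product, multiplying T by any constant rescales every site's score by the same factor, so rankings cannot change. A weight only changes rankings under some other form, here a weighted sum. The two sites and their scores are hypothetical, as is the equal split of the remaining weight between V and C. The sketch does not reproduce the formula the grant program actually used.

```python
# Hypothetical normalized (T, V, C) scores for two sites.
SITES = {"A": (0.8, 0.5, 0.2), "B": (0.2, 0.6, 0.9)}


def weighted_product(t: float, v: float, c: float, w_t: float) -> float:
    """Product form with a constant weight applied to threat."""
    return (w_t * t) * v * c


def weighted_sum(t: float, v: float, c: float, w_t: float) -> float:
    """Additive form; the remaining weight is split equally between V and C (assumed)."""
    w_other = (1.0 - w_t) / 2.0
    return w_t * t + w_other * v + w_other * c


def top_site(rule, w_t: float) -> str:
    """Name of the highest-scoring site under the given rule and threat weight."""
    return max(SITES, key=lambda s: rule(*SITES[s], w_t))


if __name__ == "__main__":
    for w_t in (0.1, 0.2, 0.9):
        print(
            f"w_t={w_t}: product -> {top_site(weighted_product, w_t)}, "
            f"sum -> {top_site(weighted_sum, w_t)}"
        )
```

Under the product, site B stays on top for every threat weight. Under the weighted sum, the top site flips from B to A as the threat weight rises.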

The definition Risk = T × V × C makes sense when T, V, and C are independent variables, in particular when threat is independent of vulnerabilities and consequences, as is the case for natural hazards. The formulation assumes this independence. For example, the threat against a given facility does not change if its vulnerability goes up or the consequences of damage increase, although the overall risk to that facility will increase in either case. Given the independence of T, V, and C, making a multiplicative assessment of risk is relatively straightforward, provided the probabilities can be estimated with some confidence and the consequences evaluated on some type of consistent metric. However, to state the obvious, a terrorist would be attracted by a soft target, whereas a storm strikes at random. Also, vulnerability and consequences are highly correlated for terrorism but not for natural disasters. Intelligent adversaries exploit these dependencies. Challenges exist in any instance where T, V, and C are poorly characterized, which can be the case even with the risk of natural disasters and accidents. Yet this is the normal state with regard to terrorism, where T tends to be very subjective and not transparent, V is difficult to measure, and we do not know how to estimate the full extent of consequences.
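The contrast between a storm and an adversary can be sketched in Python with four hypothetical targets, each given an assumed vulnerability and consequence. The attacker model is a deliberately crude assumption: the attacker always strikes the target with the largest V × C. After the softest, highest-value target is hardened, a model that holds threat fixed predicts a residual loss of 10. An attacker who re-targets instead shifts to the next most attractive target and produces a loss of 24. That shift is the dependence of T on V that the independence assumption ignores.

```python
# Hypothetical targets: (vulnerability, consequence in arbitrary loss units).
TARGETS = {
    "soft_high": (0.9, 100.0),
    "soft_low": (0.8, 20.0),
    "hard_high": (0.2, 120.0),
    "hard_low": (0.1, 10.0),
}


def loss(targets: dict, name: str) -> float:
    """Expected loss if the named target is struck: V x C."""
    v, c = targets[name]
    return v * c


def random_strike_loss(targets: dict) -> float:
    """Storm-like hazard: each target equally likely to be hit, independent of V and C."""
    return sum(loss(targets, n) for n in targets) / len(targets)


def adversary_choice(targets: dict) -> str:
    """Assumed attacker model: strike the target with the largest V x C."""
    return max(targets, key=lambda n: loss(targets, n))


def harden(targets: dict, name: str, new_v: float) -> dict:
    """Return a copy of the targets with one target's vulnerability reduced."""
    updated = dict(targets)
    updated[name] = (new_v, targets[name][1])
    return updated


if __name__ == "__main__":
    after = harden(TARGETS, "soft_high", 0.1)
    # Threat held fixed, as the independence assumption implies: the attacker
    # is still assumed to aim at the target chosen before hardening.
    static = loss(after, adversary_choice(TARGETS))
    # The attacker re-optimizes against the hardened set of targets.
    adaptive = loss(after, adversary_choice(after))
    print(f"random strike: {random_strike_loss(TARGETS):.2f} -> {random_strike_loss(after):.2f}")
    print(f"adversary, threat held fixed: {static:.1f}")
    print(f"adversary, re-targeting: {adaptive:.1f} at {adversary_choice(after)}")
```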

Multiattribute utility theory (as used in economics and decision analysis) is one way to combine multiple attributes into a single metric for a single decision maker with a unique set of preferences. However, Arrow’s impossibility theorem (Arrow, 1950) shows that there is no unique consensus way to combine different attributes in “group” decision theory when the members of the group have different priorities or weights on the various attributes. (In other words, the relative importance of different attributes is a political question, not a scientific question.) So even if we had reliable methods of risk analysis for terrorism, those methods would not in general yield a unique ranking of different terrorist threats; rather, different rankings would result, depending on the weights placed on the attributes by particular stakeholders. (In addition, utility theory might not work well for events with extremely large consequences and extremely small probabilities.) Risk methods should not prejudge the answers to trade-off questions that are inherently political or preclude input by decision makers and other stakeholders.
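The dependence of rankings on stakeholder weights can be illustrated with a short Python sketch. It uses a weighted additive value model, one simple multiattribute form, and is not a demonstration of Arrow's theorem itself. Three hypothetical stakeholders apply their own weights to the same three hypothetical threat scenarios, and each ends up ranking a different scenario as most severe. The attribute names, scores, and weights are all invented for illustration.

```python
# Hypothetical attribute scores (0 to 1, higher is worse) for three threat scenarios.
SCENARIOS = {
    "S1": {"fatalities": 0.9, "economic": 0.2, "public_fear": 0.3},
    "S2": {"fatalities": 0.3, "economic": 0.9, "public_fear": 0.2},
    "S3": {"fatalities": 0.4, "economic": 0.3, "public_fear": 0.9},
}

# Hypothetical stakeholders, each with its own weights (summing to 1).
STAKEHOLDER_WEIGHTS = {
    "stakeholder_health": {"fatalities": 0.7, "economic": 0.1, "public_fear": 0.2},
    "stakeholder_economy": {"fatalities": 0.2, "economic": 0.7, "public_fear": 0.1},
    "stakeholder_community": {"fatalities": 0.3, "economic": 0.1, "public_fear": 0.6},
}


def score(attributes: dict, weights: dict) -> float:
    """Weighted additive value: sum of weight x attribute score."""
    return sum(weights[k] * attributes[k] for k in weights)


def ranking(weights: dict) -> list:
    """Scenario names ordered from most to least severe for one set of weights."""
    return sorted(SCENARIOS, key=lambda s: score(SCENARIOS[s], weights), reverse=True)


if __name__ == "__main__":
    for who, weights in STAKEHOLDER_WEIGHTS.items():
        print(who, ranking(weights))
```

The underlying scores are the same for everyone. Only the weights differ, and they encode the political trade-off the paragraph above says risk methods should not prejudge.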

Based on these concerns, the committee makes the following recommendations:

Recommendation: DHS should rapidly change its lingua franca from “Risk = T × V × C” to “Risk = f(T,V,C)” to emphasize that functional interdependence must be considered in modeling and analysis.

Recommendation: DHS should support research into how best to combine T, V, and C into a measure of risk in different circumstances. This research should include methods of game theory, Bayesian methods, red teams to evaluate T, and so on. The success of all approaches depends on the availability and quality of data.
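As one illustration of the game-theoretic methods mentioned, the following is a minimal Stackelberg-style (leader-follower) defender-attacker sketch: the defender commits to an allocation of protection, the attacker observes it and strikes the target with the largest V × C, and the defender picks the allocation that minimizes that worst case by brute force. The targets, budget, and the rule that each unit halves vulnerability are hypothetical assumptions.

```python
from itertools import product

# Hypothetical targets: name -> (baseline vulnerability, consequence)
TARGETS = {"port": (0.6, 500.0), "stadium": (0.8, 300.0), "plant": (0.4, 900.0)}
BUDGET = 3        # hypothetical number of indivisible protection units
REDUCTION = 0.5   # assumed: each unit halves a target's vulnerability


def vulnerability(base: float, units: int) -> float:
    return base * REDUCTION ** units


def attacker_response(allocation: dict[str, int]) -> tuple[str, float]:
    """Attacker observes the defense and picks the target with the largest V x C."""
    losses = {name: vulnerability(v, allocation[name]) * c
              for name, (v, c) in TARGETS.items()}
    target = max(losses, key=losses.get)
    return target, losses[target]


def defender_choice() -> tuple[dict[str, int], str, float]:
    """Brute-force the allocation that minimizes the attacker's best expected loss."""
    names = list(TARGETS)
    best = None
    for units in product(range(BUDGET + 1), repeat=len(names)):
        if sum(units) != BUDGET:
            continue  # only allocations that spend the whole budget
        allocation = dict(zip(names, units))
        target, loss = attacker_response(allocation)
        if best is None or loss < best[2]:
            best = (allocation, target, loss)
    return best


allocation, target, loss = defender_choice()
```

With these assumptions the search spreads one unit to each target, leaving a worst-case expected loss of 180 at the plant. Putting all three units on the plant, the highest-risk target before any defense, would leave the port exposed at 300.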

DEVELOP A STRONG SOCIAL SCIENCE CAPABILITY AND INCORPORATE THE RESULTS FULLY IN RISK ANALYSES AND RISK MANAGEMENT PRACTICES

A particular concern of the committee is an apparent lack of expertise in social sciences, certainly in RMA but apparently also in other DHS units responsible for risk analysis. Social science expertise is critical to understanding terrorism risk and to properly modeling societal responses to any type of hazardous scenario, and its absence is a major gap in DHS expertise. Other kinds of social science expertise are fundamental to developing and guiding reliable expert elicitation processes, which are essential to the success of DHS risk analysis, and to designing and executing effective programs of risk communication that take into account public perception and societal responses. The Study of Terrorism and Response to Terrorism (START) Center of Excellence supported by DHS-S&T (Science and Technology Directorate) has expertise in understanding terrorism risk, but the committee saw no evidence that this expertise was influencing the thinking of DHS personnel dealing with risk analysis. The Center for Risk and Economic Analysis of Terrorism Events (CREATE) Center of Excellence has apparently had more impact (for example, it helped strengthen the expert elicitation processes used for the BTRA), but otherwise its work seems to have little effect on DHS risk analysis. Neither the Centers of Excellence nor S&T’s Human Factors Division is devoting much effort to the two areas discussed next: understanding societal responses and risk communication. Without this expertise, and especially without knowledge of these areas being front and center in the minds of DHS risk analysts, it is unlikely that DHS will attain its goal of being an effective risk-informed organization.

Social Science Skills Are Essential to Improving Consequence Modeling

The possible consequences of some types of terrorist attacks and natural disasters can span several orders of magnitude. For example, exactly the same scenario can result in only a few fatalities or in thousands, depending on when and how the event unfolds, the effectiveness of the emergency response, and even random factors such as wind direction. These latter factors (effectiveness of response and wind direction, for example) also affect the consequences associated with natural hazards; yet an intelligent adversary will select the conditions that maximize consequences, to the degree that he or she can, and thus the analyst’s ability to estimate those consequences may be much poorer than in the case of natural hazards. However, many methods in routine use by DHS require an analyst to provide a single point estimate of consequences or at least a single category of consequence severity. This can easily lead to misleading results, especially if the decision maker’s preference (i.e., utility function) is nonlinear in the relevant consequence measure, which might well be the case. This is not inherently a daunting problem for risk analysis, because rigorous methods exist for performing uncertainty analysis, even with extremely broad probability distributions for consequences. However, these methods might not be cost-effective for widespread application. Also, the probability distributions for consequences might be difficult to assess based on expert opinion, especially on a routine basis for large numbers of problems, by analysts with moderate levels of capability and resources.
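The effect of a nonlinear preference on a point estimate can be sketched in a few lines of Python. The lognormal distribution of fatalities, its parameters, and the convex disutility function (fatalities raised to the power 1.5) are hypothetical assumptions chosen only to show the mechanism.

```python
import random
import statistics


def disutility(fatalities: float) -> float:
    # Hypothetical convex disutility: large events weigh more than proportionally.
    return fatalities ** 1.5


def point_vs_distribution(mu: float = 3.0, sigma: float = 1.0,
                          n: int = 50_000, seed: int = 7) -> tuple[float, float, float]:
    """Compare the disutility of a point estimate with the expected disutility."""
    rng = random.Random(seed)  # fixed seed so the comparison is reproducible
    # Assumed consequence distribution: lognormal fatalities (hypothetical parameters).
    samples = [rng.lognormvariate(mu, sigma) for _ in range(n)]
    point_estimate = statistics.fmean(samples)
    disutility_of_point = disutility(point_estimate)
    expected_disutility = statistics.fmean(disutility(x) for x in samples)
    return point_estimate, disutility_of_point, expected_disutility


point, d_point, d_expected = point_vs_distribution()
```

Because the assumed disutility is convex, the expected disutility computed over the full distribution exceeds the disutility of the mean (Jensen's inequality), so reducing the distribution to a single point estimate understates the concern of a decision maker who weighs large losses more than proportionally.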

In two specific cases examined by the committee (infrastructure protection and TRAM), DHS’s consequence modeling is in general too limited in what it considers. That is not always wrong for a particular stakeholder’s needs, but it is misleading if the modeling should illuminate the full extent of homeland security risk.

For example, social disruption is probably a common goal for terrorists, but the committee did not see any consequence analysis at DHS that includes this. In fact, it encountered few, if any, DHS staffers who seem concerned about this gap. What DHS is doing now is not necessarily wrong (and its decisions might be robust enough despite the coarseness of this approach), but DHS should be aware that important factors are being overlooked.

Immediately following 9/11 and the nearly concurrent mailings of the anthrax letters, U.S. government agencies and the government and nongovernmental scientific communities expanded many of their research efforts to focus on (1) the psychological impacts of terrorist attacks on people and (2) the short- and long-term economic consequences of such attacks. With the disruptions following Hurricane Katrina, the need to improve understanding of the responses of affected populations to natural disasters also received a new emphasis within government in anticipation of future catastrophic events. These three experiences were important wake-up calls to the broad scope of the consequences of disasters.

Such consequences include not only destruction over large geographic areas but also disruptions to sprawling social networks and multifaceted economic systems within and beyond the geographic impact zones. In addition to resulting in bodily harm, such incidents can indirectly devastate the lives of other individuals who are not killed or physically injured during the events (see, for example, Chapters 9 to 11 of NRC, 2002), and the consequences can affect the entire national economy.

Of particular concern is the practice of labeling those persons directly affected by such events as the “public,” which the government then too often treats as a homogeneous entity. Different groups are affected in different ways. Prior personal experiences, which vary from individual to individual, are important. The ability of members of the affected population to cope with tragedy varies significantly, and their personal prior experiences cannot easily be aggregated in a meaningful fashion. Among significant differentiating factors are previous experiences in dealing with disasters, types of education, levels of confidence in government plans for coping with disasters, availability of personal economic resources for recovery activities, and attachments of residents to the physically affected geographical areas and facilities through family, professional, and social networks.

Behavioral and emotional responses to natural disasters and terrorist attacks are difficult to predict because there are so many scenarios, each with its own set of impacts that condition the nature of the responses. Examples of responses to terrorist attacks include fear of additional attacks, outrage calling for retaliation, lack of confidence in government to provide protection, proactive steps by neighbors to assist one another, and eagerness to leave an affected area. Similarly, natural disasters can provoke a variety of responses, perhaps driven by lack of trust in the government: apprehensions about personal economic losses, frustration with evacuation planning and implementation, and concern over the lack of communication about the safety of families and friends. There has been considerable research in this area (see, e.g., Mileti, 1999; Slovic, 2000, 2002).

In regard to consequence assessment, a perennial problem in risk analysis is the question of completeness: What are the consequences of concern to decision makers and publics? A recent NRC report on radiological terrorism in Russia identified as a major issue the need for “a risk-based methodology that considers the psychological consequences and economic damage of radiological terrorism as well as the threat to human life and human health” (NRC, 2007e). This same need should be recognized as a major one for DHS, because it has often been observed that a primary purpose, if not the primary purpose, of terrorism is to produce a sense of terror in the population. Clearly, social disruption is an essential part of any sound consequence analysis of terrorism. Personnel at several DHS Centers of Excellence are certainly attuned to this. Yet, despite that, DHS risk analysts concentrate almost exclusively on damage to critical infrastructure and the need to “harden” facilities, leaving this important domain of consequences unassessed. Accordingly, the partial approach to risk analysis employed at DHS carries the risk that DHS is working on the wrong problems, because terrorists might be aiming for an entirely different set of consequences than those driving DHS priorities.

As to economic impacts, 9/11 demonstrated the far-reaching effects of damage in a central financial district. The U.S. economy bore significant costs, both through business losses attributable to the attack and through the hundreds of billions of dollars spent to harden facilities across the country. While such a huge expenditure is unlikely in the future, any attack will certainly trigger unanticipated government expenditures to prevent repetition and will disrupt businesses that depend in part on unencumbered activities in the impact zone.

Since DHS was established, a number of its components have been involved in efforts to reduce the adverse social and economic consequences of disasters over a broad range of impacts. The Federal Emergency Management Agency (FEMA), for example, has an array of programs to respond to the needs of affected populations. DHS’s Science and Technology Directorate has established a program to support research in the behavioral sciences through a Human Factors Division and several university Centers of Excellence. This research is devoted to understanding the nature of the terrorist threat, designing measures to reduce the likelihood of successful attacks, and providing guidance in responding to the needs of populations affected by terrorist attacks or natural disasters (DHS-S&T, 2009). As a third example, the Office of Infrastructure Protection works closely with the private sector to minimize the economic disruption that follows disasters, whether naturally occurring or attributable to terrorist attacks (DHS-IP, 2009).

At the same time, however, DHS clearly gives much higher priority to hardening physical infrastructures (e.g., critical buildings and transportation and communications systems) than to preparing society on a broader basis to better withstand the effects of disasters. An important reason for this lack of balance in addressing consequences is that DHS has not devoted sufficient effort to the development of staff capabilities for adequately assessing the significance of the broad social and economic dimensions of enhancing homeland security.

DHS is in the early stages of embracing social and economic issues as major elements of homeland security. This belated development of capabilities in the social and economic sciences should be strongly encouraged. An increased reliance on such capabilities can upgrade DHS efforts to use quantitative modeling for anticipating a broader range of consequences of catastrophic events than in the past, particularly those consequences that lead to large-scale social and economic disruptions.

To improve preparations for managing a broad range of consequences, quantitative risk analyses should take into account the diverse ramifications to the extent possible. Of course, such estimates are inherently difficult; many new scenarios will have no precedents. More common scenarios might have different impacts in different geographic settings. Often there are difficulties in conceptualizing social and economic impacts, let alone characterizing the details of the consequences of an event. Nevertheless, these aspects of risk analysis must be recognized because in some cases, particularly with terrorism, social and economic impacts can be more significant than physical destruction and even loss of life.

There are many gaps in our ability to estimate short-term and long-term social and economic impacts of natural disasters and terrorist attacks. However, researchers have made good progress in recent years in the quantification of social and economic issues.6 Those results should be used in modeling efforts within DHS. Considerable data concerning consequences are available to help in validating efforts to model the consequences of disasters. Incorporating such considerations in pre-event planning and response preparedness should pay off when events occur.

Recommendation: DHS should have a well-funded research program to address social and economic impacts of natural disasters and terrorist attacks and should take steps to ensure that results from the research program are incorporated into DHS’s risk analyses.

Recommendation: In characterizing risk, DHS should consider a full range of public health, safety, social, psychological, economic, political, and strategic outcomes. When certain outcomes are deemed unimportant in a specific application, reasons for omitting this aspect of the risk assessment should be presented explicitly. If certain analyses involve combining multiple dimensions of risk (e.g., as a weighted sum), estimates of the underlying individual attributes should be maintained and reported.
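One way to honor this recommendation in software is sketched below: a record that refuses to be created unless every outcome dimension is either estimated or explicitly omitted with a stated reason, and that always reports the underlying attributes alongside any weighted sum. The class, field names, and dimension identifiers are hypothetical, not an existing DHS data structure.

```python
from dataclasses import dataclass, field

# Outcome dimensions named in the recommendation; identifiers are hypothetical.
FULL_RANGE = ("public_health", "safety", "social", "psychological",
              "economic", "political", "strategic")


@dataclass
class RiskCharacterization:
    """Keeps per-dimension estimates and explicit omission reasons next to any aggregate."""
    attributes: dict[str, float]
    omitted: dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Every dimension must be either estimated or omitted with a stated reason.
        unaccounted = set(FULL_RANGE) - set(self.attributes) - set(self.omitted)
        if unaccounted:
            raise ValueError(f"dimensions neither estimated nor justified: {sorted(unaccounted)}")
        blank = [name for name, reason in self.omitted.items() if not reason.strip()]
        if blank:
            raise ValueError(f"omitted dimensions need a reason: {blank}")

    def report(self, weights: dict[str, float]) -> dict:
        """Return a weighted-sum aggregate together with the underlying attributes."""
        missing = set(weights) - set(self.attributes)
        if missing:
            raise ValueError(f"no estimate for weighted dimensions: {sorted(missing)}")
        aggregate = sum(w * self.attributes[k] for k, w in weights.items())
        return {"aggregate": aggregate,
                "attributes": dict(self.attributes),
                "omitted": dict(self.omitted)}


rc = RiskCharacterization(
    attributes={"public_health": 0.6, "safety": 0.4, "social": 0.7,
                "psychological": 0.8, "economic": 0.5},
    omitted={"political": "hypothetical reason: outside the scope of this decision",
             "strategic": "hypothetical reason: no credible estimate available"},
)
summary = rc.report({"public_health": 0.5, "economic": 0.5})
```

The aggregate is never returned on its own, so a reader of the report can always see which attributes drove it and why any dimension was left out.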

Social Science Skills Are Essential to Improving Risk Communication

Element (d) of the Statement of Task calls for the committee to “make recommendations for best practices, including outreach and communications.” In the large, highly dispersed domain of actors with which DHS deals, and with diverse publics who may be at risk, risk communication is a critical part of the DHS risk management program. Assembling and sharing common information are essential for coherent risk management. Indeed, DHS recognizes this in its IRMF, which identifies the critical DHS need to “develop information-sharing structures and processes that make risk information available among components and at the enterprise level, when and where it is required.” The DHS focus since its inception has been on information sharing with decision makers. However, there is a much bigger job to be done to provide not only information but analysis and aids to thinking that prepare those who may be at risk to cope better with risk events that may occur. Those at risk are very diverse—tribal members, urban dwellers, state officials, and others. A concerted effort to prepare various publics for risk events has yet to be forthcoming, although aspects of needed work have been accomplished, such as producing a lexicon for risk terminology that should lessen the risk of confusion in information sharing. As DHS moves to the next stages of risk communication—which will have to go far beyond information sharing and include understanding the perceptions and needs of the recipients of various risk-related communications so that the messages can be tailored to fit the audiences—a well-developed risk communication strategy document and program, adequately staffed and funded, will be needed.

Recommendation: The DHS risk communication strategy and program must treat at minimum the following:

-

An identification of stakeholders and those at risk who need information and analysis;

-

An assessment of the needs of these people to be met by the communication program (sometimes framed as “Who are the audiences?”);

-

Strategies for two-way communication with these groups;

-

Ongoing evaluation of program effectiveness and needed strategy changes;

-

Learning from experience as events occur and changes in communication are suggested;

-

Links between communications and actions people can take; and

-

Resulting outcomes, including cost and time considerations.

The program should be developed with careful involvement of national experts and with external peer review. It should be accompanied, indeed anticipated, by public perception research.

Effective risk communication is quite difficult. It should be done by staff who understand the issues and details of the risk analyses. This is not public relations work, as some may believe, but work that requires participation by technically knowledgeable staff. DHS does not seem to understand the lessons painfully learned by other agencies, such as the Department of Energy (DOE), EPA, and the Nuclear Regulatory Commission.

In developing best practices for communicating risk, DHS should understand four audiences: DHS employees, other federal employees, members and staff of Congress, and the general public. The knowledge bases and information needs of these audiences differ, although the fundamental principles of risk communication apply to all. Another important aspect of DHS responsibilities—but beyond the domain of risk analysis—is communication during emergencies.

A 1989 NRC report, Improving Risk Communication, recommended the following best practices:

-

Relate the message to the audiences’ perspectives: “risk messages should closely reflect the perspectives, technical capacity, and concerns of the target audiences. A message should (1) emphasize information relevant to any practical actions that individuals can take; (2) be couched in clear and plain language; (3) respect the audience and its concerns; and (4) seek to inform the recipient …. One of the most difficult issues in risk communication in a democratic society is the extent to which public officials should attempt to influence individuals …”.

-

“Risk message and supporting materials should not minimize the existence of uncertainty. Some indication of the level of confidence of estimates and the significance of scientific uncertainty should be conveyed.”

-

“Risk comparisons can be helpful, but they should be presented with caution. Comparison must be seen as only one of several inputs to risk decisions, not as the primary determinant” (pp. 11-12).

-

“Risk communication should be a two-way street. Organizations that communicate risk should ensure effective dialogue with potentially affected outsiders … [T]hose within the organization who interact directly with outside participants should be good listeners” (pp. 151, 153).

Other good sources on this topic include Bennett and Calman (1999) and Pidgeon et al. (2003). Effective risk communication relies on the involvement of competent people who understand the activities about which they speak.

It is worth noting that DHS has adopted a National Strategy for Information Sharing (DHS, 2008). This is fine as far as it goes, but it has to go much deeper into the issues of stakeholder and public needs, as suggested above. Recognition is also needed that the communication issues associated with terrorism and natural hazards are fundamentally different and will require quite different approaches, both in preparedness and in emergency response.

BUILD A STRONG RISK CULTURE AT DHS

The committee is concerned about the lack of any real risk analysis depth at DHS or in RMA and does not see this situation improving in recent hiring or training programs.

The challenges in building a risk culture in a federal agency or corporation are major, requiring a serious effort. At the DuPont Corporation, for example, this involved high-level commitment, diffusion of values throughout the corporation, routines and procedures, recruitment of people, and reward structures. It was not clear to the committee whether DHS has any serious plan for how this will happen or any serious ongoing evaluation of progress.

DHS would find benefit from many of the recommendations offered over the past 25 years to the EPA and other federal agencies that rely on risk analysis, as well as study of the practices that the EPA, in particular, has put into place to implement them. Of particular importance is the need for DHS to specify with complete clarity the specific uses to which risk analysis results will be put. This echoes the first recommendation of the NRC’s 2007 Interim Report on Methodological Improvements to the Department of Homeland Security’s Biological Agent Risk Analysis, which called for DHS to “establish a clear statement of the long-term purposes of its bioterrorism risk analysis” (NRC, 2007c):

A clear statement of the long-term purposes of the bioterrorism risk analysis is needed to enunciate how it can serve as a tool to inform risk assessment, risk perception, and especially risk-management decision making. Criteria and measures should be specified for assessing how well these purposes are achieved. Key issues to be addressed by such a statement should include the following: who the key stakeholders are; what their short- and long-term values, goals, and objectives are; how these values, goals, and objectives change over time; how the stakeholders perceive the risks; how they can communicate their concerns about these risks more effectively; and what they need from the risk assessment in order to make better (more effective, confident, rational, and defensible) resource-allocation decisions. Other important issues are who should perform the analyses (contractors, government, both) and how DHS should incorporate new information into the analyses so that its assessments are updated in a timely fashion.

As part of its effort to build a risk culture and also improve its scientific practices (see next section), DHS should work to build stronger two-way ties with good academic programs in risk analysis of all types. To address these needs, DHS could develop programs that encourage its employees to spend a semester at one of the DHS university Centers of Excellence in order to strengthen their skills in a discipline of relevance to homeland security. Such a program should be bilateral in the sense that students at those Centers of Excellence should also be encouraged to spend time at DHS, either in rotating assignments or as employees after graduation. The goal is to implement technology transfer, from universities to the homeland security workforce, while keeping those universities grounded in the real needs of DHS. Improving risk modeling at DHS will require commensurate building up of academic ties, training of DHS people, and tech transfer routes to the DHS user community.

RMA and Enterprise Risk Management

Enterprise Risk Management as a field of management expertise began in the financial services sector in the late 1990s and is still evolving rapidly. Chapter 2 gives an introduction to ERM and explains how RMA is working to develop the three dimensions of a successful ERM system—governance, processes, and culture—with its Integrated Risk Management Framework. Generally speaking, RMA’s primary focus has been on processes—facilitating more coherence across the preexisting risk practices within DHS components—and this is appropriate. Its development of a risk lexicon and an interim IRMF are reasonable starting points in the process, although the committee believes that the IRMF must be made more specific before it begins to provide value. Creating an inventory of risk models and risk processes throughout the department was also a logical and necessary early step. RMA’s primary action in support of ERM governance was the establishment of the Risk Steering Committee. RMA has done little to date to help establish a risk-aware culture within DHS, although its existence and activities represent a beginning.

A central tenet of ERM (almost a tautology) is that it be driven from the top. An enterprise’s top leadership must first show itself to be fully supportive of ERM, so that ERM practices are seen as fundamental to the organization’s mission and goals. That has been done at DHS, with both Secretary Chertoff and Secretary Napolitano emphasizing the centrality of risk-informed decision making to the department’s success. For example, Secretary Napolitano included the following in her terms of reference for the 2009 Quadrennial Homeland Security Review:

Development and implementation of a process and methodology to assess national risk is a fundamental and critical element of an overall risk management process, with the ultimate goal of improving the ability of decision makers to make rational judgments about tradeoffs between courses of action to manage homeland security risk.

Other federal organizations that have mature risk cultures and processes, such as the EPA and Nuclear Regulatory Commission, took years or decades to mature. The development of a mature risk culture at DHS will likely require similar time, and DHS should attempt to learn from the experience of other departments and agencies that have trod the path before. Despite the relative success of risk management in the corporate world, DHS should not naively adopt best practices in risk management from industry. Risk management in financial services and insurance relies on some key assumptions that do not necessarily hold for DHS. For example, the law of large numbers may be of little practical use to DHS, precisely because DHS does not get to observe millions of risk events, as would be available in auto insurance losses or financial instrument trades. Further, the financial services and insurance sectors rely on being able to collect and group data into relatively homogeneous groups and to have independence of risk events. DHS often has heterogeneous groups, and independence certainly does not hold for interdependent CIKR sectors or networks of operations.
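The practical limits of the law of large numbers can be sketched with a small simulation. Each observation period has a 1 percent chance of an event with a heavy-tailed (Pareto, shape 2.5) loss; the model and all parameters are hypothetical and serve only to compare how much an estimated average loss varies with few versus many observations.

```python
import random
import statistics


def estimate_mean_loss(n_obs: int, p_event: float, rng: random.Random) -> float:
    """Average loss per period from n_obs periods of a hypothetical rare-event model."""
    total = 0.0
    for _ in range(n_obs):
        if rng.random() < p_event:
            # Assumed heavy-tailed severity: Pareto with shape 2.5 (minimum loss 1).
            total += rng.paretovariate(2.5)
    return total / n_obs


def spread_of_estimates(n_obs: int, p_event: float = 0.01,
                        replications: int = 200, seed: int = 1) -> float:
    """Standard deviation of the estimated mean loss across repeated samples."""
    rng = random.Random(seed)
    estimates = [estimate_mean_loss(n_obs, p_event, rng) for _ in range(replications)]
    return statistics.pstdev(estimates)


true_mean = 0.01 * 2.5 / 1.5   # p_event times the Pareto mean alpha / (alpha - 1)
few = spread_of_estimates(n_obs=20)       # e.g., two decades of experience
many = spread_of_estimates(n_obs=5_000)   # an insurer-like volume of observations
print(f"true mean {true_mean:.4f}; spread with 20 obs {few:.4f}; with 5000 obs {many:.4f}")
```

Under these assumptions the spread of the estimate from 20 observations is many times larger than the true mean itself, and most replications with 20 observations see no event at all; only the insurer-like volume pins the average down.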

Even the cultural best practices of risk management from the private sector need to be modified for DHS adoption. Losses occur in the private sector, but not on the magnitude of DHS’s decision scale and not with the nonfinancial consequences that DHS must consider in managing risk. Societal expectations of DHS are vastly different from those of an investment firm, and DHS must take many more stakeholder perspectives into account in managing risks. Lastly, the private sector relies on making decisions under uncertainty and adapting strategy and tactics over time as the future becomes clearer. Likewise, Congress should expect DHS to demonstrate adaptive learning over time to address and better manage the ever-changing portfolio of homeland security risks.

ADOPT STRONG SCIENTIFIC PRACTICES AND PROCEDURES, SUCH AS CAREFUL DOCUMENTATION, TRANSPARENCY, AND INDEPENDENT OUTSIDE PEER REVIEW

Develop Science-Based Guidelines for Different Types of DHS Risk Analyses

A key tool, which could be of value to DHS, would be peer-reviewed guidelines that spell out not only how different types of risk analysis are to be carried out, but also which decisions those analyses are meant to inform. Clear, science-based guidelines have contributed greatly to the success of the U.S. Environmental Protection Agency in developing capabilities, over a 20-year period, for using risk analysis to inform strategic decision making and decisions about risk reduction. Such guidelines, when well developed, provide both the scientific basis for methods and guidance on the sources and types of data needed to complete each type of analysis. See Appendix B for a general discussion of how risk analysis evolved at EPA.

EPA has invested heavily in the development of its guidelines. Fundamental building blocks for this success include the development of clear characterization of the kinds of decisions that must be addressed by risk analysis, clear understanding about how to address each kind of decision, and an understanding of how to treat uncertainties in both data and models. (Appendix A contains background on uncertainty characterization.) EPA also has a strong focus on transparency, providing clear documentation of how it moves from risk analysis to decisions, and it has developed a fairly clear taxonomy of decisions. To enable these steps, EPA has developed a large professional staff that supports the guidelines by, for example, tracking the literature and continually improving the guidelines. The development of guidelines is based heavily on the published (primary) scientific literature, with gaps in that literature fully discussed to provide guidance for future research. Peer review is essential before guidelines are accepted for use.

Development of these guidelines should be preceded by elucidation of the specific types of risk-based decisions that the department is required to make and identification of the types of risk assessments most useful for those decisions. Guidelines should include the scientific bases, drawn from the literature, for the methods used and full discussion of the sources and types of data necessary to complete an assessment. Guidance on how uncertainties are to be treated should be central to the guidelines. Guidelines should be implemented only after they have been subjected to independent peer review. The ways in which the results of risk assessment support decisions should always be explicitly described.

While DHS is developing some guidelines for risk analysis (as addenda to the Integrated Risk Management Framework), they do not have the focus recommended here.

Recommendation: DHS should prepare scientific guidelines for risk analyses, recognizing that different categories of decisions require different approaches to risk analysis and that strict reliance on quantitative models is not always the best approach.

The committee suggests as a starting point the development of specific guidelines for how to perform reliable, transparent risk analyses for each of the illustrative risk scenarios (Table 5-1) that have been adopted by DHS to help guide the efforts to address such scenarios.

TABLE 5-1 National Planning Scenarios

| Scenario | Description |
|---|---|
| Scenario 1 | Nuclear Detonation: Improvised Nuclear Device |
| Scenario 2 | Biological Attack: Aerosol Anthrax |
| Scenario 3 | Biological Disease Outbreak: Pandemic Influenza |
| Scenario 4 | Biological Attack: Plague |
| Scenario 5 | Chemical Attack: Blister Agent |
| Scenario 6 | Chemical Attack: Toxic Industrial Chemicals |
| Scenario 7 | Chemical Attack: Nerve Agent |
| Scenario 8 | Chemical Attack: Chlorine Tank Explosion |
| Scenario 9 | Natural Disaster: Major Earthquake |
| Scenario 10 | Natural Disaster: Major Hurricane |
| Scenario 11 | Radiological Attack: Radiological Dispersal Devices |
| Scenario 12 | Explosives Attack: Bombing Using IED |
| Scenario 13 | Biological Attack: Food Contamination |
| Scenario 14 | Biological Attack: Foreign Animal Disease |
| Scenario 15 | Cyber Attack |

Improve Scientific Practice

The charge for this study was to evaluate how well DHS is doing risk analysis. DHS has not been following critical scientific practices of documentation, validation, peer review, and publishing. Without that discipline, it is very difficult to know precisely how DHS risk analyses are being done and whether their results are trustworthy and of utility in guiding decisions.

As illustrated in the sections on uncertainty and avoiding false precision in Chapter 4 and in the discussion about how T, V, and C are combined to measure risk in different circumstances, it is not easy to determine exactly what DHS is doing in some risk analyses because of inadequate documentation, and the details can be critical for determining the quality of the method. It is one thing to evaluate whether a risk model has a logical purpose and structure (the kind of information that can be conveyed through a briefing) but quite another to understand the critical inputs and sensitivities that affect implementation. That deeper understanding comes from scrutiny of the mathematical model, evaluation of a detailed discussion of the model implementation, and review of some model results, preferably when the model is exercised against simple bounding situations and, where possible, through retrospective validation. Good scientific practice for model-based scientific work includes the following:

-

Clear definition of model purpose and decisions to be supported;

-

Comparison of the model with known theory and/or simple test cases or extreme situations;

-

Documentation and peer review of the mathematical model, generally through a published paper that describes in some detail the structure and mathematical validity of the model’s calculations; and

-

Some verification and validation steps, to ensure that the software implementation is an accurate representation of the model and that the resulting software is a reliable representation of reality.
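Comparing a model with simple test cases or extreme situations can be made routine by encoding the expected answers as automated checks. The sketch below is illustrative only: it assumes a toy multiplicative model, R = T × V × C, with T and V as probabilities and C as an expected consequence, and the function names are hypothetical rather than taken from any DHS tool.

```python
"""Bounding-case checks for a toy multiplicative risk model.

Assumption: R = T * V * C, with T and V as probabilities in [0, 1] and C as
an expected consequence. This product form is illustrative only and is not
the formulation of any particular DHS model.
"""


def risk(threat: float, vulnerability: float, consequence: float) -> float:
    """Return R = T * V * C after validating the input ranges."""
    if not 0.0 <= threat <= 1.0:
        raise ValueError("threat must be a probability in [0, 1]")
    if not 0.0 <= vulnerability <= 1.0:
        raise ValueError("vulnerability must be a probability in [0, 1]")
    if consequence < 0.0:
        raise ValueError("consequence must be non-negative")
    return threat * vulnerability * consequence


def run_bounding_checks() -> list[str]:
    """Exercise the model against extreme cases whose answers are known."""
    failures = []
    # No threat means no risk, regardless of V and C.
    if risk(0.0, 1.0, 1e9) != 0.0:
        failures.append("zero threat should give zero risk")
    # A perfectly protected target (V = 0) carries no risk.
    if risk(1.0, 0.0, 1e9) != 0.0:
        failures.append("zero vulnerability should give zero risk")
    # A certain, successful attack yields the full consequence.
    if risk(1.0, 1.0, 500.0) != 500.0:
        failures.append("T = V = 1 should return C")
    # Risk must not decrease when vulnerability increases.
    if risk(0.1, 0.6, 100.0) < risk(0.1, 0.3, 100.0):
        failures.append("risk should be monotone in vulnerability")
    return failures


if __name__ == "__main__":
    problems = run_bounding_checks()
    print("all bounding checks passed" if not problems else problems)
```

Checks like these do not validate a model against reality, but they catch implementation errors cheaply and document what behavior the modelers expect.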

In the absence of these steps, one cannot assess the quality and reliability of the risk analyses. As noted above, it is not adequate to simply ask subject matter experts (SMEs) whether they see anything odd about the model’s results. DHS has generally done a poor job of documenting its risk analyses. The NRC committee that authored the BTRA review could really understand what the software was doing only by sitting down with the software developers and asking questions. No description has ever been published. That committee’s report includes the following characterization (NRC, 2008, p. 37):

The committee also finds the documentation for the model used in the BTRA of 2006 to be incomplete, uneven, and extremely difficult to understand. The BTRA of 2006 was done in a short time frame. However, deficiencies in documentation, in addition to missing data for key parameters, would make reproducing the results of the model impossible for independent scientific analysis. For example, although Latin Hypercube Sampling is mentioned in the description of the model many times as a key feature, no actual sample design is specified … insufficient details are provided on how or where these numbers are generated, precluding a third party, with suitable software and expertise, from reproducing the results—violating a basic principle of the scientific method.
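The quoted criticism concerns a sampling scheme that was named but never specified. A documented sample design can be quite small. The following is a minimal sketch under stated assumptions: independent inputs on the unit interval, a hand-written Latin Hypercube sampler built on Python's standard `random` module, and a hypothetical sample size, parameter list, and seed chosen only to show what a design record should pin down.

```python
"""A fully specified, reproducible Latin Hypercube sample design.

Assumptions: inputs are independent and sampled on the unit interval; the
sample size, parameter names, and seed below are hypothetical values.
"""
import random


def latin_hypercube(n_samples: int, n_dims: int, seed: int) -> list[list[float]]:
    """Return n_samples points in [0, 1)^n_dims, one per stratum per dimension."""
    rng = random.Random(seed)  # a recorded seed makes the draw reproducible
    columns = []
    for _ in range(n_dims):
        # Split [0, 1) into n_samples equal strata and draw one point in each.
        column = [(i + rng.random()) / n_samples for i in range(n_samples)]
        rng.shuffle(column)  # randomly pair strata across dimensions
        columns.append(column)
    # Transpose so that each row is one sample point.
    return [list(point) for point in zip(*columns)]


# Everything a third party needs to regenerate the same sample (hypothetical).
DESIGN = {"n_samples": 10, "parameters": ["threat", "vulnerability"], "seed": 2006}

if __name__ == "__main__":
    sample = latin_hypercube(
        DESIGN["n_samples"], len(DESIGN["parameters"]), DESIGN["seed"]
    )
    for row in sample:
        print([round(x, 3) for x in row])
```

Publishing the design record alongside the sampler, including the seed and the mapping from unit-interval values to parameter distributions, is what would let an independent analyst reproduce the results.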

The NRC report quoted above also lists what adequate documentation of a risk analysis needs to capture (Brisson and Edmunds, 2006, as cited in NRC, 2008):

It is essential that analysts document the following: (1) how they construct risk assessment models, (2) what assumptions are made to characterize relationships among variables and parameters and the justifications for these, (3) the mathematical foundations of the analysis, (4) the source of values assigned to parameters for which there are no available data, and (5) the anticipated impact of uncertainty for assumptions and parameters.
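One way to keep these five items from being overlooked is to track them as required fields in a documentation record. The sketch below is a hypothetical illustration: the class and field names are invented here, with one field per item in the list above, and the example values are placeholders rather than a description of any real DHS model.

```python
"""A checklist record for the five documentation items listed above.

The class name, field names, and completeness check are hypothetical; each
field simply corresponds to one item in the Brisson and Edmunds (2006) list.
"""
from dataclasses import dataclass, fields


@dataclass
class RiskModelDocumentation:
    model_construction: str = ""            # (1) how the model is constructed
    assumptions_and_rationale: str = ""     # (2) assumed relationships and justifications
    mathematical_foundations: str = ""      # (3) mathematical basis of the analysis
    unobserved_parameter_sources: str = ""  # (4) source of values lacking data
    uncertainty_impact: str = ""            # (5) anticipated impact of uncertainty

    def missing_items(self) -> list[str]:
        """Return the names of items that are still blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


if __name__ == "__main__":
    doc = RiskModelDocumentation(
        model_construction="Event tree over attack scenarios (placeholder)",
        mathematical_foundations="R = T * V * C per scenario (placeholder)",
    )
    print(doc.missing_items())
```

A record like this does not make documentation good, but it makes gaps visible before a model is circulated or used.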

TRAM and Risk Analysis and Management for Critical Asset Protection (RAMCAP) are described primarily through manuals that are not widely available, and TRAM has never been peer-reviewed. The committee was not provided with any documentation about the risk calculations behind the grants programs (DHS instead offered the name of a specific individual who could be contacted to answer questions), nor has it been given or pointed to detailed documentation of the modeling behind the Maritime Security Risk Analysis Model (MSRAM), CIKR vulnerability analyses, the TSA RMAT model, and so on. The committee has not seen or heard of validation studies of any DHS risk model. These gaps do not appear to stem from security concerns, and it is not necessary to publish in the open literature in order to reap the value of documentation and peer review.

Recommendation: DHS should adopt recognized scientific practices for its risk analyses:

-

DHS should create detailed documentation for all of its risk models, including rigorous mathematical formulations, and subject them to technical and scholarly peer review by experts external to DHS.

-

Documentation should include simple worked-out numerical examples to show how a methodology is applied and how calculations are performed.

-

DHS should consider a central repository to enable DHS staff and collaborators to access model documentation and data.

-

DHS should ensure that models undergo verification and validation—or sensitivity analysis at the least. Models that do not meet traditional standards of scientific validation through peer review by experts external to DHS should not be used or accepted by DHS.

-

DHS should use models whose results are reproducible and easily updated or refreshed.

-

DHS should continue to work toward a clear, unambiguous risk lexicon.
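The recommendations above call for simple worked numerical examples and, at a minimum, sensitivity analysis. Both can be shown in a few lines. This is a minimal sketch that assumes the product form R = T × V × C and uses invented baseline values and an assumed ±20 percent swing; it demonstrates one-at-a-time sensitivity analysis, which is only one of several sensitivity methods.

```python
"""Worked example plus one-at-a-time sensitivity analysis.

Assumptions: risk is the product R = T * V * C; the baseline values and the
+/- 20 percent swing are invented for illustration only.
"""


def risk(t: float, v: float, c: float) -> float:
    return t * v * c


BASELINE = {"t": 0.01, "v": 0.5, "c": 2_000_000.0}  # hypothetical inputs


def one_at_a_time(baseline: dict, swing: float = 0.2) -> dict:
    """Vary each input by +/- swing (a fraction), holding the others fixed."""
    results = {}
    for name in baseline:
        low = dict(baseline, **{name: baseline[name] * (1 - swing)})
        high = dict(baseline, **{name: baseline[name] * (1 + swing)})
        results[name] = (risk(**low), risk(**high))
    return results


if __name__ == "__main__":
    base = risk(**BASELINE)
    # Worked by hand: 0.01 * 0.5 = 0.005, and 0.005 * 2,000,000 = 10,000.
    print(f"baseline risk: {base:,.0f}")
    for name, (lo, hi) in one_at_a_time(BASELINE).items():
        print(f"{name}: {lo:,.0f} to {hi:,.0f}")
```

In a pure product every input has the same proportional effect, so each swing runs from 8,000 to 12,000. That is itself a useful check: a model whose structure is more complex would show unequal swings, and the documentation should explain why.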

The committee recognizes that security concerns at DHS constrain the extent to which some model assumptions and results are made public, but some type of formal review is still required for all elements of models if they are to be used with confidence by the department and others. Such a requirement is consistent with the core criteria for risk analysis as specified in the 2009 NIPP (DHS-IP, 2009).

The Assumptions Embedded in Risk Analyses Must Be Visible to Decision Makers

Of special importance is transparency with respect to decision makers. Making visible the assumptions behind a model, the quality of the data provided as inputs, and the uncertainties that can be anticipated is essential to establishing the model's credibility. Also important are periodic reviews and evaluations of the results being obtained from both relatively new and older models. These reviews should involve specialists in modeling and in the problems being addressed. They should examine the structure of the models, the types and certainty of the data required (e.g., historical data or formally elicited expert judgments), and how the models are intended to be used.

Because of the many uncertainties attendant on risk analysis, especially risk analysis related to terrorism, it is crucial that DHS provide transparency for the decision maker. When decision makers must weigh a broad range of risks—including some with very large uncertainties, as in the case of terrorism risk—transparency is even more important because otherwise the decision maker will be hard-pressed to perform the necessary comparison. The analysis needs to communicate the uncertainties to decision makers. In one sense, DHS risk-related processes can be helpful in this regard: in most cases, those who are close to the risk management function will have to be involved in producing vulnerability analyses. This is certainly the case for CIKR sectors, and it is also true for users of TRAM, MSRAM, and probably other risk packages. Conducting a vulnerability analysis requires many hours of focused attention on vulnerabilities and threats, which can also be a very beneficial process for making operations staff more attuned to the risks facing their facilities.
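One simple way to communicate uncertainty to a decision maker is to report a range instead of a single number. The sketch below assumes the product form R = T × V × C, treats each input as known only to lie within a range, and samples the inputs uniformly and independently with plain Monte Carlo. The ranges, sample size, and seed are hypothetical, and the uniform and independence assumptions would themselves need to be documented and justified.

```python
"""Reporting a risk estimate as a range rather than a single number.

Assumptions: R = T * V * C; each input is known only to lie within a range
and is sampled uniformly and independently. Ranges, sample size, and seed
are hypothetical.
"""
import random
import statistics

RANGES = {"t": (0.001, 0.02), "v": (0.2, 0.8), "c": (1e6, 5e6)}


def simulate_risk(ranges: dict, n: int = 20_000, seed: int = 1) -> list[float]:
    """Draw n risk values by sampling each input uniformly within its range."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n):
        t, v, c = (rng.uniform(*ranges[k]) for k in ("t", "v", "c"))
        draws.append(t * v * c)
    return draws


def summarize(draws: list[float]) -> dict:
    """Return the 5th, 50th, and 95th percentiles for presentation."""
    cuts = statistics.quantiles(draws, n=20)  # 19 cut points at 5% steps
    return {"p05": cuts[0], "median": statistics.median(draws), "p95": cuts[-1]}


if __name__ == "__main__":
    s = summarize(simulate_risk(RANGES))
    print(f"risk likely between {s['p05']:,.0f} and {s['p95']:,.0f} "
          f"(median {s['median']:,.0f})")
```

A range of this kind signals to the decision maker how much weight a point estimate can bear, which matters most when comparing risks whose uncertainties differ greatly.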

TRAM and some of the models for evaluating infrastructure vulnerabilities are overly complex, which detracts from their transparency. Moreover, it seems that nearly all of DHS’s risk models must be run by, or with the help of, a specialist. The only exception mentioned to the committee was a spreadsheet-based model under development by a contractor for the FEMA grants program, which is intended to be used by grant applicants. The ideal risk analysis tool would be one that a risk manager or decision maker can (1) understand conceptually, (2) trust, and (3) get quick turnaround on what-if scenarios and risk mitigation trade-offs. These attributes should be attainable.
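A tool meeting these three attributes could be as simple as a transparent what-if calculation. The following is a hypothetical sketch, assuming R = T × V × C and modeling each mitigation option only as a change in vulnerability at a stated cost. The option names, costs, and effects are invented for illustration.

```python
"""Quick what-if comparison of mitigation options.

Assumptions: R = T * V * C; each option is modeled only as a change to
vulnerability at a stated cost. Options, costs, and effects are invented.
"""

BASELINE = {"t": 0.05, "v": 0.6, "c": 1_000_000.0}

# Hypothetical options: (name, new vulnerability, annual cost in dollars)
OPTIONS = [("fencing", 0.45, 2_000.0), ("guards", 0.30, 10_000.0)]


def risk(t: float, v: float, c: float) -> float:
    return t * v * c


def compare(baseline: dict, options: list) -> list[tuple[str, float, float]]:
    """Return (option, risk reduction, reduction per dollar), best ratio first."""
    base = risk(**baseline)
    rows = []
    for name, new_v, cost in options:
        reduced = base - risk(baseline["t"], new_v, baseline["c"])
        rows.append((name, reduced, reduced / cost))
    return sorted(rows, key=lambda row: row[2], reverse=True)


if __name__ == "__main__":
    for name, reduced, ratio in compare(BASELINE, OPTIONS):
        print(f"{name}: risk reduced by {reduced:,.0f} ({ratio:.2f} per dollar)")
```

Here the cheaper option gives more risk reduction per dollar while the costlier one gives the larger absolute reduction. Showing both numbers, rather than a single ranking, leaves that trade-off with the decision maker.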

Another limitation on transparency is the difficult one posed by classified information. Because many of DHS’s “customers” lack security clearances, much threat information cannot be passed on to them. Even when customers hold the right clearances, the availability of secure communications networks and equipment, including telephones, faxes, and computers, is limited. Vulnerability information is subject to similar constraints. Common sense, as well as the desire of private sector owners and operators to treat some details as proprietary, dictates that such information be given limited distribution.

However, the committee did hear concerns that information about vulnerability from one CIKR sector is not normally shared with those outside that sector, which can limit the insights available to risk managers in sectors (e.g., public health) that are affected by risks to other sectors (e.g., electrical and water supplies).

Recommendation: To maximize transparency of DHS risk analyses for decision makers, DHS should aim to document its risk analyses as clearly as possible and distribute them with as few constraints as possible. As part of this recommendation, DHS should work toward greater sharing of vulnerability and consequence assessments across infrastructure sectors so that related risk analyses are built on common assessments.