6

Opportunities for Better Assessment

The present moment—when states are moving toward adopting common standards and a federal initiative is pushing them to focus on their assessment systems—seems to present a rare opportunity for improving assessments. Presenters were asked to consider the most promising ways for states to move toward assessing more challenging content and providing better information to teachers and policy makers.

IMPROVEMENT TARGETS

Laurie Wise began with a reminder of the issues that had already been raised: assessments need to support a wide range of policy uses; current tests have limited diagnostic value and are not well integrated with instruction or with interim assessments, and they do not provide optimal information for accountability purposes because they cover only a limited range of what is and should be taught. Improvements are also needed in validity, reliability, and fairness, he said.

There is little evidence that current tests are good indicators of instructional effectiveness or good predictors of students’ readiness for subsequent levels of instruction. Their reliability is limited because they are generally targeted to very broad content specifications, and limited progress has been made in assessing all students accurately. Improvements such as computer-based testing and automated scoring need to become both more feasible in the short run and more sustainable in the long run. Wise pointed out that widespread adoption of common standards might help with these challenges in two ways: by pooling their resources, states could get more for the money they spend on assessment, and interstate collaboration is likely to facilitate deeper cognitive analysis of standards and objectives for student performance than is possible with separate standards.

Cost Savings

The question of how much states could save by collaborating on assessment begins with the question of how much they are currently spending. Savings would probably be limited to test development, since many per-student costs for administration, scoring, and reporting would not be affected. Wise discussed an informal survey he had done of development costs (Wise, 2009). The survey covered 15 state testing programs and a few test developers and counted only total contract costs, not internal staff costs. The results are shown in Table 6-1.

Perhaps most notable in the data is the wide range in what states are spending, as shown in the minimum and maximum columns. Wise also noted that on average the states surveyed were spending well over $1 million annually to develop assessments that require human scoring and $26 per student to score them.

TABLE 6-1 Average State Development and Administration Costs by Assessment Type

A total of $350 million will be awarded to states through the Race to the Top initiative. That money, plus savings that winning states or consortia could achieve by pooling their resources, together with potential savings from such new efficiencies as computer delivery, would likely yield for a number of states as much as $13 million each to spend on ongoing development without increasing their own current costs, Wise calculated.

Edward Roeber also examined in detail the question of how a jurisdiction might afford the cost of a new approach to assessment. He began by briefly discussing the idea of a “balanced assessment system,” which is one of the top current catch phrases in education policy conversations. He pointed out that users seem to have different ideas of what a coherent system might be. He focused on vertical coherence and said that a balanced system is one that incorporates three broad assessment types: (1) state, national, or even international summative assessments; (2) instructionally relevant interim benchmark assessments; and (3) formative assessments that are embedded in instruction. For him, the key is balance among these three elements, while the current focus is almost entirely on the summative assessments. Interim assessments are not used well, and they tend to simply consist of elements of the large-scale summative assessments. Formative assessments barely register as important in most systems. By and large, he said, teachers are not educated about the range of strategies necessary for the continuous process of assessing their students’ progress and identifying areas in which they need more support. The result is that the summative assessments overpower the system.

Others focus on a horizontal balance, Roeber observed, which in practice means that they focus on the skills covered on state assessments. The coherence is between what is included on tests and what is emphasized in classrooms. The emphasis is on preparing students to succeed on the tests, and on providing speedy results, rather than on the quality of the information they provide. Most programs rely too much on multiple-choice questions, which exacerbates the constraining influence of the summative assessments. If states used a broader array of constructed-response and performance items, assessments could have a more positive influence on instruction and could also provide a model for the development of interim assessments that are more relevant to high-quality instruction.

To provide context for discussion of changes states might make in their approaches to assessment, Roeber and his colleagues Barry Topol and John Olson compared the costs of a typical state assessment program with those for a high-quality assessment system (see Topol, Olson, and Roeber, 2010). They hoped to identify strategies for reducing the costs of a higher-quality system. For this analysis, they considered only mathematics, reading, and writing and made the assumption that states would use the common core standards and the same testing contractor. The characteristics of the designs they compared are shown in Tables 6-2 and 6-3. Roeber and his colleagues calculated the cost of a typical assessment program at $20 per student and the cost of a high-quality one at $55 per student (including start-up development costs).

TABLE 6-2 Numbers of Items of Each Type in Typical Assessment by Design Assessment Type

| Subject | Multiple-Choice Items | Short Constructed-Response Items | Extended Constructed-Response Items | Performance Event | Performance Tasks |
|---|---|---|---|---|---|
| Mathematics | 50 | 0 | 2 | 0 | 0 |
| Reading | 50 | 0 | 2 | 0 | 0 |
| Writing | 10 | 0 | 1 | 0 | 0 |
| Mathematics (Interim) | 40 | 0 | 0 | 0 | 0 |
| English/Language Arts (Interim) | 40 | 0 | 0 | 0 | 0 |

SOURCE: Reprinted from a 2010 paper, “The Cost of New High-Quality Assessments: A Comprehensive Analysis of the Potential Costs for Future State Assessments,” with permission by authors Dr. Barry Topol, Dr. John Olson, and Dr. Ed Roeber.

TABLE 6-3 Numbers of Items of Each Type in High-Quality Assessment by Design Assessment Type

| Subject | Multiple-Choice Items | Short Constructed-Response Items | Extended Constructed-Response Items | Performance Event | Performance Task |
|---|---|---|---|---|---|
| Mathematics | 25 | 2 (1 in grade 3) | 2 (0 in grade 3, 1 in grade 4) | 2 | 2 (0 in grade 3, 1 in grade 4) |
| Reading | 25 | 2 (1 in grades 3 and 4) | 2 (1 in grades 3 and 4) | 2 | 1 |
| Writing | 10 | 2 (1 in grades 3 and 4) | 2 (1 in grades 3 and 4) | 2 | 0 |
| Mathematics (Interim) | 25 | 2 | 1 (0 in grade 3) | 1 | 1 (0 in grade 3) |
| English/Language Arts (Interim) | 25 | 2 | 1 | 1 | 1 |

SOURCE: Reprinted from a 2010 paper, “The Cost of New High-Quality Assessments: A Comprehensive Analysis of the Potential Costs for Future State Assessments,” with permission by authors Dr. Barry Topol, Dr. John Olson, and Dr. Ed Roeber.

Since most states are unlikely to be able to afford a near tripling of their assessment costs, Roeber and his colleagues explored several means of streamlining the cost of the high-quality approach.

First, they considered the savings likely to be possible to a state that collaborated with others, as the states applying in consortia for Race to the Top funding plan to do. They calculated that the potential economies of scale would save states an average of $15 per student. New uses of technology, such as online test delivery and automated scoring, would yield immediate savings of $3 to $4 per student, and further savings would be likely with future enhancements of the technology. Roeber observed that some overhead costs associated with converting to a computer-based system would be likely to decline as testing contractors begin to compete more consistently for this work.

They also considered two possible approaches to enlisting teachers to score the constructed-response items. This work might be treated as professional development, in which case there would be no cost beyond that of the usual professional development days. Alternatively, teachers might be paid a stipend ($125 per day per teacher was the figure assumed) for this work. Depending on which approach is taken, the saving would be an additional $10 to $20 per student. Altogether, these measures (assuming teachers are paid for their scoring work) would yield a cost of $21 per student for the high-quality assessment. Moreover, several participants noted, because the experience of scoring is a valuable one for teachers, it is a nonmonetary benefit to the system.

This analysis also showed that the development of a new assessment system would be relatively inexpensive in relation to the total cost: it is the ongoing administration costs that will determine whether states can afford to adopt and sustain new, improved assessment systems. Participation in a consortium is likely to yield the greatest cost savings. The bottom line, for Roeber, is that implementing a high-quality assessment system would be possible for most states if they proceed carefully, seeking a balance among various kinds of items with different costs and considering cost-reduction strategies.

Improved Cognitive Analysis

Wise noted that the goal for the common core standards is that they will be better than existing state standards: more crisply defined, clearer, and more rigorous. They are intended to describe the way learning should progress from kindergarten through 12th grade to prepare students for college and work. Assuming that the common standards meet these criteria, states could collaborate to conduct careful cognitive analysis of the skills to be mastered and how they might best be assessed. Working together, states might have the opportunity to explore the learning trajectories in greater detail, for example, in order to pinpoint both milestones and common obstacles to mastery, which could in turn guide decisions about assessment. Clear models for the learning that should take place across years and within grades could support the development of integrated interim assessments, diagnostic assessments, and other tools for meeting assessment goals.

The combination of increased funding for assessments and improved content analyses would, in turn, Wise suggested, support the development of more meaningful reporting. The numerical scales that are now commonly used offer very little meaningful information beyond identifying students above and below an arbitrary cut point. A scale that was linked to detailed learning trajectories (which would presumably be supported by the common standards and elaborated through further analysis) might identify milestones that better convey what students can do and how ready they are for the next stage of learning.

Computer-adaptive testing would be particularly useful in this regard since it provides an easy way to pinpoint an individual student’s understanding; in contrast, a uniform assessment may provide almost no information about a student who is performing well above or below grade level. Thus, reports of both short- and long-term growth would be easier, and results could become available more quickly. This faster and better diagnostic information, in turn, could also improve teacher engagement. Another benefit would be increased potential for establishing assessment validity. Test results that closely map onto defined learning trajectories could support much stronger inferences about what students have mastered than are possible with current data, and they could also better support inferences about the relationship between instruction and learning outcomes.
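The way an adaptive test homes in on an individual student's level can be illustrated with a minimal sketch. It assumes a one-parameter (Rasch) response model, a small item bank with known difficulties, and a grid-based posterior-mean ability estimate. These modeling choices, the function names, and the `answer` callback are illustrative assumptions only; they are not drawn from the workshop or from any operational testing program.

```python
import math

def p_correct(theta, b):
    """Rasch model: chance that a student of ability theta answers an item of difficulty b."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def next_item(theta, difficulties, used):
    """Choose the unused item with the most information, p * (1 - p), at the current estimate."""
    def information(i):
        p = p_correct(theta, difficulties[i])
        return p * (1.0 - p)
    candidates = [i for i in range(len(difficulties)) if i not in used]
    return max(candidates, key=information)

def estimate_theta(responses, difficulties, grid=None):
    """Posterior-mean ability estimate on a grid, using a standard normal prior (an assumption)."""
    grid = grid or [g / 10 for g in range(-40, 41)]
    weights = []
    for t in grid:
        w = math.exp(-0.5 * t * t)  # unnormalized prior weight
        for item, correct in responses:
            p = p_correct(t, difficulties[item])
            w *= p if correct else (1.0 - p)
        weights.append(w)
    return sum(t * w for t, w in zip(grid, weights)) / sum(weights)

def run_adaptive_test(answer, difficulties, n_items=5):
    """Administer up to n_items items; answer(item_index) returns True for a correct response."""
    theta, responses, used = 0.0, [], set()
    for _ in range(min(n_items, len(difficulties))):
        item = next_item(theta, difficulties, used)
        used.add(item)
        responses.append((item, answer(item)))
        theta = estimate_theta(responses, difficulties)
    return theta, responses
```

Under these assumptions, each response shifts the ability estimate, and the next item is the one whose difficulty lies closest to that estimate. A fixed form, by contrast, would give every student the same items regardless of how far above or below grade level the student is performing.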

It is clear that common standards can support significant improvements in state assessments, Wise said. The potential cost advantages are apparent. But perhaps more important is that concentrating available brain power and resources on the elaboration of one set of thoughtful standards (as would be possible if a number of states were assessing the same set of standards) would allow researchers to work together for faster progress on assessment development and better data.

IMPLICATIONS FOR SPECIAL POPULATIONS

Much progress has been made in the accurate assessment of special populations—students with disabilities and English language learners (ELLs). Nevertheless, new approaches to assessment may offer the possibility of finding ways to much more accurately measure their learning and to target some of the specific challenges that have hampered past efforts. Robert Linquanti addressed the issues and opportunities innovative assessments present with regard to ELLs, and Martha Thurlow addressed the issues as they relate to students with disabilities.

English Language Learners

Linquanti began by stressing that although ELLs are often referred to as a monolithic entity, they are in fact a very diverse group. This fast-growing group represents 10 percent of the K-12 public school population. Of these 5 million students, 80 percent are Spanish speaking, and approximately 50 percent were born in the United States. They vary in terms of their degree of proficiency in English (and proficiency may vary across each of the four skills of listening, speaking, reading, and writing), the time they have spent in U.S. schools, their level of literacy in their first language, the consistency of their school attendance, and in many social and cultural ways.

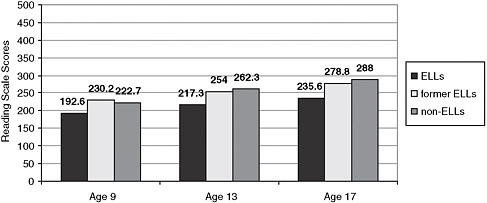

Linquanti pointed out that the performance of ELLs on English/language arts is the second most common reason why schools in California (home to many such students) fail to make adequate yearly progress. He also noted that current means of reporting on the performance of ELLs in the National Assessment of Educational Progress (NAEP) skews the picture somewhat, as shown in Figure 6-1. The NAEP reporting does not distinguish among ELLs who have very different levels of academic proficiency. In other words, the data are organized to “create a population that is performing low by definition,” Linquanti explained.

It would be better, he said, to have data that provide a finer measure of

FIGURE 6-1 Average reading scores for current and former ELLs, and non-ELLs on the National Assessment of Educational Progress.

NOTE: ELLs = English language learners.

SOURCE: From Judith Wilde, a paper presented at the 2010 American Educational Research Association annual meeting: Comparing results of the NAEP Long-Term Trend Assessment: ELLs, Former ELLs, and English-Proficient Students. Reprinted with permission by the author.

how well these students are doing academically and help to pinpoint the ways their learning is affected by their language proficiency. Though in practice many schools focus on building these students’ English skills before addressing their academic needs, that approach is not what the law requires and is not good practice—students need both at the same time.

New approaches to assessment offer several important opportunities for this population, Linquanti said. First, a fresh look at content standards, and particularly at the language demands they will entail, is an opportunity to make more explicit the benchmarks for ELLs to succeed academically. Developing academic language proficiency, he noted, is a key foundation for these students, and the instruction they receive in language support classes (English as a second language, or English language development) is not sufficient for academic success. The sorts of skills being specified more explicitly in the common core standards—for example, analyzing, describing, defining, comparing and contrasting, developing hypotheses, and persuading—are manifested through sophisticated use of language. Some kinds of academic language are discipline specific and others cross disciplines, and making these expectations much more explicit will help teachers identify the skills they need to teach and encourage them to provide students with sufficient opportunities to develop them.

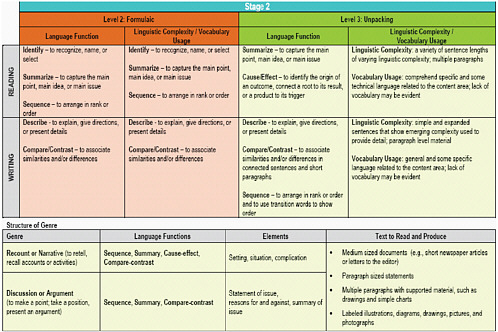

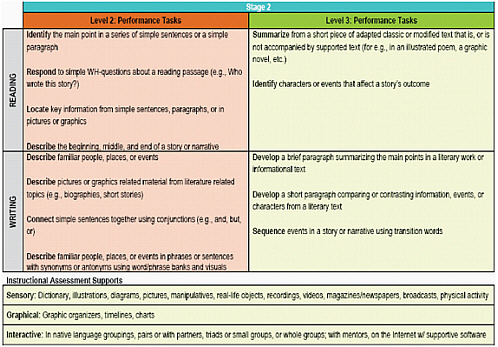

At the same time, the kinds of formative assessment that have been described throughout the workshop will also promote—and enable teachers to monitor—the development of academic language within each subject. If learning targets for language are clearly defined, teachers will be better able to distinguish them from other learning objectives. Linquanti discussed a program called the Formative Language Assessment Records for English Language Learners (FLARE), which identifies specific targets for language learning as well as performance tasks and instructional assessment supports based on them: see Figures 6-2 and 6-3 (see http://flareassessment.org/assessments/learningTargets.aspx [accessed June 2010]). This program, which is now being developed for use in three districts, is designed to actively engage teachers in understanding the language functions required for different kinds of academic proficiency, developing performance tasks, and identifying instructional supports that link to the assessments. Linquanti also welcomed the explicit definitions of language demands for all subjects that are being incorporated in the common core standards, such as those for grades 9-10 in science, which include such tasks as analyzing and summarizing hypotheses and explanations; making inferences; and identifying the relationships among terms, processes, and concepts. If the common core standards are widely adopted, it will be very useful for states to revisit their standards for English language proficiency to ensure that they are well aligned with the academic standards. In Linquanti’s view, these language skills must be seen as part of the core, foundational material that students need to master, and teachers

FIGURE 6-2 FLARE language functions and genre structures: Levels 2 and 3.

SOURCE: Reprinted with permission from Dr. Gary Cook, principal investigator for FLARE Language Learning Targets, © 2010 Board of Regents of the University of Wisconsin System. FLARE is a 3-year formative assessment grant project (2009-2011) funded by the Carnegie Corporation of New York.

FIGURE 6-3 FLARE performance tasks and instructional assessment supports: Levels 2 and 3.

SOURCE: Reprinted with permission from Dr. Gary Cook, principal investigator for FLARE Language Learning Targets, © 2010 Board of Regents of the University of Wisconsin System. FLARE is a 3-year formative assessment grant project (2009-2011) funded by the Carnegie Corporation of New York.

need to be guided in incorporating these targets into their instruction. This greater clarity will help teachers distinguish whether poor performance is the result of insufficient language skills to demonstrate the other skills or knowledge that a student has, lack of those other knowledge and skills, unnecessarily complex language in the assessment, or other factors (such as cultural difference, dialect variation, or rater misinterpretation).

Above all, Linquanti stressed, it is critical not to treat the participation of ELLs in new, innovative assessments as an afterthought—the role of language in every aspect of the system should be a prime concern throughout design and development. This point is also especially important for any high-stakes uses of assessments. “We have to calibrate the demands of the performance with the provisions of support—and make sure we are clarifying and monitoring the expectations we have for kids,” he said.

Students with Disabilities

Martha Thurlow observed that many of the issues Linquanti had raised also apply to students with disabilities, and she agreed that the opportunity to include all students from the beginning, rather than retrofitting a system to accommodate them, is invaluable. She stressed that the sorts of assessments being described at the workshop already incorporate the key to assessing students with disabilities: not to devise special instruments for particular groups, but to have a system that is flexible enough to measure a wide range of students.

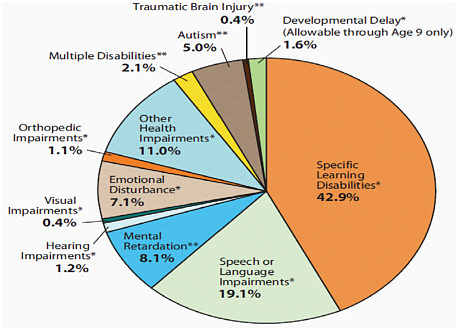

She noted that, like ELLs, students with disabilities are not well understood as a group. Figure 6-4 shows the proportions of students receiving special education services who have been classified in each of 12 categories of disability; Thurlow pointed out that approximately 85 percent of them do not have any intellectual impairment. Moreover, she noted, even students with severe cognitive disabilities can learn much more than many people realize, as recent upward trends in theoir performance on NAEP suggest.

Thurlow noted that universal design, as described by the National Accessible Reading Assessment Projects of the U.S. Department of Education (Accessibility Principles for Reading (see http://www.narap.info/ [accessed June 2010]), means considering all students beginning with the design of standards and continuing throughout the design, field testing, and implementation of an assessment.1 Accommodations and alternate assessments that support valid and reliable measurement of the performance of students with disabilities are key elements of this approach. However, developing effective, fair accommodations and alternate

|

1 |

Additional information about universal design can be found at the website of the National Center on Educational Outcomes (see http://www.cehd.umn.edu/nceo/ [accessed June 2010]). |

FIGURE 6-4 Students who receive special education services by disability category.

SOURCE: Reprinted with permission from the National Center for Learning Disabilities’ publication, Challenging Change: How Schools and Districts Are Improving the Performance of Special Education Students © 2008.

assessments that are based on achievement levels modified to fit the capacities of student with various disabilities remains a challenge.

Lack of access to curriculum and instruction also continues to confound interpretation of assessment results for this group, Thurlow added, and to limit expectations for what they can master. Specialists and researchers continue to struggle with questions about what it means for students with different sorts of disabilities to have access to curriculum at their grade levels.

These are some of the challenges that exist as possibilities for new kinds of assessment are contemplated, Thurlow said. The opportunity to have more continuous monitoring of student progress that could come with a greater emphasis on formative assessment, for example, would clearly be a significant benefit for students with disabilities, in her view. Similarly, computer-adaptive testing is an attractive possibility, though it will be important to explore whether the algorithms that guide the generation of items account for unusual patterns of knowledge or thinking. Above all, Thurlow stressed, “we have to remember that students with disabilities can learn … and take a principled approach to their inclusion in innovative assessments.”

TECHNOLOGY

Randy Bennett began by noting that new technology is already being used in K-12 assessments. Computerized adaptive testing is being administered in thousands of districts at every grade level to assess reading, mathematics, science, and languages. At the national level, NAEP will soon offer an online writing assessment for the 8th and 12th grades, and the Programme for International Student Assessment (PISA) exams now include a computerized reading assessment that relies on local schools’ infrastructure. So technology-based assessment is no longer a “wild idea,” Bennett observed. Because it is likely that technology will soon become a central force in assessment, he said, it is important to ensure that its development be guided by substantive concerns, rather than efficiency concerns. “If we focus exclusively, or even primarily, on efficiency concerns,” he argued, “we may end up with nothing more than the ability to make arguably mediocre tests faster, cheaper, and in greater numbers.” Increasing efficiency may be a worthy short-term goal, but only as a means to reach the goal of substantively driven technology-based innovation.

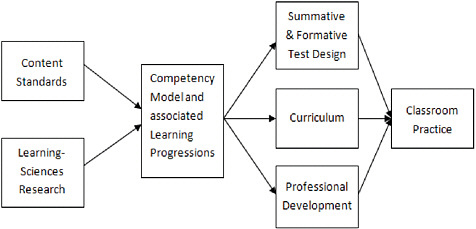

Moreover, he said, current standards cannot by themselves provide enough guidance for the design of assessments in a way that is consistent with the results of the research on learning discussed earlier in the workshop (see Chapter 2). Two key needs, Bennett said, are: competency models that identify the components of proficiency in a domain (that is, key knowledge, processes, strategies, or habits of mind) and also describe how those competencies work together to facilitate skilled performance; and learning progressions that identify the ways learning develops sequentially (see Chapter 2). Figure 6-5 shows how technology fits in a model of coherent instruction and assessment.

With that model in mind, Bennett identified 11 propositions that he believes should guide the use of technology in assessment, goals.

-

Technology should be used to give students more substantively meaningful tasks than might be feasible through traditional approaches, by presenting rich content about which they can be asked to reason, read, write, or do other tasks. He offered as an example an item developed for the Educational Testing Service’s CBAL (Cognitively Based Assessment of, for, and as Learning). In this task on the topic of electronic waste (discarded electronic devices, which often contain heavy metals and other pollutants), which is designed to be conducted over days or weeks, depending on how it is integrated into other instruction, students are asked to perform tasks that include: listening to an online radio news report and taking notes on its content; evaluating websites that contain relevant information; reading articles about the topic and writing responses and other pieces; using a graphic organizer to manage information from different sources; and working collaboratively to develop an informational poster. Many of the tasks are primarily formative assessments, but a subset can be used to provide summative data. The cost of presenting this range of material on paper

FIGURE 6-5 Model of a coherent system: Where technology fits.

SOURCE: Reprinted with permission from the Educational Testing Service © 2010.

-

would be prohibitive, and the logistics of managing it would be a significant challenge not only for schools and teachers, but even for the students who interact with the materials. But more important than the convenience, Bennett said, is that the complexity of the materials makes it possible to engage students in higher-order skills and also to build their skills and knowledge.

-

Technology-based assessment should model good instructional practice for teachers and learning practice for students by including the tools that proficient performers typically use and reflecting the ways they represent knowledge. Such tasks should encourage the habits of mind common to experts in the domain being tested. In another example from the electronic waste task, students work with an interactive screen to locate, prioritize, and organize information, to develop an outline, and to draft an essay. They have the option of selecting from a variety of organizing strategies. They may be given details and asked to develop general statements from them or given such statements and asked to locate and fill in relevant supporting details. The focus is on modeling the use of criteria to help students think critically in an online context, where the amount of information is far greater and the quality far more variable than they are likely to find in their classrooms or local libraries. Thus, the tasks both teach methods of organizing and writing and reinforce concepts, such as criteria for evaluating the quality of sources.

-

Technology should be used to assess important (higher- and lower-order) competencies that are not easily measured by conventional means. Examples could include having students read orally; use simulations of dynamic systems to interpret evidence, discover relationships, infer causes, or pose solutions; use spreadsheet for mathematical modeling of complex problem situations;

-

read and write on the computer in a nonlinear task; or digitally document the products of an extended project.

-

Technology should be used to measure students’ skills at using technology for problem solving. Successful performance in advanced academic settings and in workplaces will require skill and flexibility in using technology, so this domain should become part of what schools measure.

-

Technology should be used to collect student responses that can support more sophisticated interpretation of their knowledge and skills than is typical with traditional tests. For example, the time taken in answering questions can indicate how automatic basic skills have become or the degree of motivations a student has to answer correctly. Tracking of other aspects of students’ responses and decisions may also illuminate their problem-solving processes. Using a sample item, Bennett showed how assessors could evaluate the search terms students used and the relevance of the web pages they chose to visit, which were related to the quality of their constructed responses.

-

Technology should be used to make assessments fairer for all students, including those with disabilities and ELLs. Incorporating vocabulary links for difficult words in an assessment that is not measuring vocabulary, offering alternate representations of information (e.g., text, speech, verbal descriptions of illustrations), or alternate questions measuring the same skills are all tools that can yield improved measures of students’ learning.

-

Adaptive testing could be enhanced to assess a fuller range of competencies than it currently does. Current adaptive tests rely on multiple-choice items because real-time scoring of constructed responses has not been feasible, but automatic scoring is now an option. Students could also be routed to appropriately difficult extended constructed-response items, which would then be scored after the test administration.

-

Technology can support more frequent measurement, so that information collected over time for formative purposes can be aggregated for summative purposes. When assessment tasks are substantive, model good learning and teaching practice, and provide useful interim information, they can offer better information for decision making than a single end-of-year test.

-

Technology can be used to improve the quality of scoring. Online human scoring makes it possible for monitors to track the performance of raters and flag those who are straying from rubrics or scoring too quickly given the complexity of the responses. As noted earlier, he said, progress has also been made with automated scoring of short text responses, essays, mathematics equations or other numerical or graphic responses, and spoken language. However, Bennett cautioned, automated scoring can easily be misused, if, for example, it rewards proxies for good performance, such as essay length. Thus, he advocated that the technology’s users probe carefully to be sure that the program rewards key competencies rather than simply predicting the operational behavior of human scorers.

-

Technology can allow assessors to report results quickly and provide useful information for instructional decision making. Classroom-level information and common errors can be reported immediately, for example, and other results (such as those that require human scoring) can be phased in as they become available. Electronic results can also be structured to link closely to the standards they are measuring, for example, by showing progress along a learning progression. The results could also be linked to a competency model, instructional materials suitable for the next steps a student needs to take, exemplars of good performance at the next level, and so forth. The information could be presented in a hierarchical web page format, so users can see essential information quickly and dig for details as they need them.

-

Technology can be used to help teachers and students understand the characteristics of good performance by participating in online scoring. Students could score their own or others’ anonymous work as an instructional exercise. Teachers may gain formative information from scoring their own students’ work and that of other students as part of a structured, ongoing professional development experience.

Bennett acknowledged that pursuing these 11 goals would pose a number of challenges. First, current infrastructure is not yet sufficient to support efficient, secure testing of large groups of students in the ways he described, and innovative technology-based assessments are very costly to develop. Because students bring a range of computer skills to the classroom, it is possible that those with weaker skills would be less able to demonstrate their content skills and knowledge, and their scores would underestimate what they know. Many of the stimuli that computer graphics make possible could be inaccessible to students with certain disabilities. Interactive assessments also make it possible to collect a range of information, but researchers have not yet identified reliable ways to extract meaningful information from all of these data.

None of these issues is intractable, Bennett argued, but they are likely to make the promise of computer-based assessment difficult to fulfill in the near term. Nevertheless, he suggested, “if you don’t think big enough you may well succeed at things that in the long run really weren’t worth achieving.”

State Perspectives

Wendy Pickett provided perspective from Delaware, a state that has been a good place to practice new technology because of its small size. Delaware, which has approximately 10,000 students per grade, is currently phasing in a new online, adaptive system. It will include benchmark interim assessments as well as end-of-course assessments, and the state has positioned itself to move forward quickly with other options, such as computerized teacher evaluations, as it considers the implications of the Race to the Top awards, adoption of the common core standards, and other developments.

She noted that, as in many states, there are pressures to meet numerous goals with the state assessment, including immediate scores, individualized diagnostic information, and summative evaluations. At the same time, the landscape at the local, state, and national levels is changing rapidly, she observed: developing the new assessment has felt like “flying an airplane while redesigning the wings and the engine.”

However, the state’s small size makes it easy to maintain good communication among the 19 districts and 18 charter schools. All the district superintendents can meet once or twice a month, and technology has helped them share information quickly. The state has also had a tradition of introducing new tests to the public through an open-house structure, in which samples are available at malls and restaurants. The state will do the same with the new online test, Pickett said, using mobile computer labs, and it plans to use the labs to introduce state legislators to the new technology as well.

Among the features Delaware is incorporating in its new assessment are items in which students will create graphs on the screen and use online calculators, rulers, and formula sheets. The state is working to guide teachers to ensure that all their students are familiar with the operations they will need to perform for the assessment tasks. The state also has a data warehouse that makes information easily accessible and allows users to pursue a variety of links. The danger, she cautioned, is that “you can slice and dice things in so many ways that you can overinterpret” the information, and she echoed others in highlighting the importance of training for teachers in data analysis. Pickett closed with the observation that “the technology can help us be more transparent, but we owe it to all our stakeholders to be very clear about our goals and how we are using technology to accomplish them.”

Tony Alpert focused on the needs of states that have not yet “bridged the technology gap,” noting how difficult it is to make the initial move from paper-based to technology-based assessment. He said that the experiences of Delaware, Hawaii, and other states that are farther along can be invaluable in highlighting lessons learned and sensible ways to phase in the change. In his view, the most important step is to provide professional development not just to teachers, but also to state-level staff so that they will be equipped to build and support the system. States’ experiences with logistical challenges, such as monitoring the functioning of an application that is being used with multiple operating systems, could save others a lot of headaches as well.

Alpert also commented on Bennett’s view that assessment tasks can be engaging and educational even as they provide richer information about students’ knowledge and skills. He observed that somehow the most interesting items tend to get blocked by the sensitivity and bias committees, perhaps in part because they may favor students with particular knowledge. It will be an ongoing challenge, in his view, to continue to develop complex and engaging tasks that will be fair to every student.

Participants noted other challenges, including the fast pace at which new devices are being developed. Students may quickly adapt to new technologies, but assessment developers will need to be mindful of ways to design for evolving screen types and other variations in hardware because, for example, images may render very differently on future devices than on those currently available. Other challenges with compatibility were noted, but most participants agreed that the key is to proceed in incremental steps toward a long-term goal that is grounded in objectives for teaching and learning.