1

Preacquisition Technology Development for Air Force Weapon Systems

The National Research Council (NRC) issued a report in 2008 entitled Pre-Milestone A and Early-Phase Systems Engineering: A Retrospective Review and Benefits for Future Air Force Systems Acquisition (hereinafter referred to as the Kaminski report, after Paul G. Kaminski, the chair of that report’s study committee).1 The Kaminski report emphasized the importance of systems engineering early in the Department of Defense (DoD) acquisition life cycle and urged the revitalization of the systems engineering and Development Planning (DP) disciplines throughout the DoD.2

No less important to the future combat capability of the armed services is the development of new, cutting-edge technology. This is particularly true for the United States Air Force (USAF), which from its very inception has sought to capitalize on technological and scientific advances. Even before there was a United States Air Force, Henry H. “Hap” Arnold, as Chief of the U.S. Army Air Corps, was committed to giving his forces a decisive technological edge:

Arnold … intended to leave to his beloved air arm a heritage of science and technology so deeply imbued in the institution that the weapons it would fight with would always be the best the state of the art could provide and those on its drawing boards would be prodigies of futuristic thought.3

General Arnold realized his dream of laying the foundations of an Air Force that was second to none technologically. Dramatic innovations in aeronautics, and later in space, were fielded on schedules that today seem impossible to achieve. The first U-2 flew just 18 months after it was ordered in 1953, and it was operational just 9 months after that first flight.4 The SR-71, even more radical, was developed with similar speed, going from contract award to operational status in less than 3 years.5 In the space domain, innovation was pursued just as quickly: for example, the Atlas A, America’s first intercontinental ballistic missile, required only 30 months from contract award in January 1955 to first launch in June 1957.6

At that time, the American military and defense industry set the standard in the effective management of new technology. In fact, the entire field known today as “project management” springs from the management of those missile development programs carried out by the Air Force, the United States Navy, and later the National Aeronautics and Space Administration (NASA). Tools used routinely throughout the project management world today, such as Program Evaluation and Review Technique (PERT) and Critical Path Method (CPM) scheduling systems, Earned Value Management (EVM), and Cost/Schedule Control System Criteria (C/SCSC), trace back largely to the work of the Air Force, the Navy, and NASA in those years.7

Clearly, those days are gone. The Kaminski report cites compelling statistics that describe dramatic cost and schedule overruns in specific, individual programs. Viewed as a whole, the picture for major system acquisition is no better:

The time required to execute large, government-sponsored systems development programs has more than doubled over the past 30 years, and the cost growth has been at least as great.8

STATEMENT OF TASK AND COMMITTEE FORMATION

The Air Force requested that the National Research Council review current conditions and make recommendations on how to regain the technological expertise so characteristic of the Air Force’s earlier years. Such outside studies have long been part of the Air Force’s quest for improvement in technology. For example, General Hap Arnold, in his autobiography, described the difficulties that he faced leading the pre-World War II United States Army Air Corps:

Still, in spite of our smallness and the perpetual discouragements, it was not all bad. Progress in engineering, development, and research was fine. At my old stamping grounds in Dayton, I found the Materiel Division doing an excellent job within the limits of its funds. [General Oscar] Westover was calling on the National Research Council for problems too tough for our Air Corps engineers to handle….9

4 Clarence L. “Kelly” Johnson. 1989. More Than My Share of It All. Washington, D.C.: Smithsonian Institution Scholarly Press.

5 Ibid.

6 For additional information, see http://www.fas.org/nuke/guide/usa/icbm/sm-65.htm. Accessed May 10, 2010.

7 For additional information, see http://www.mosaicprojects.com.au/PDF_Papers/P050_Origins_of_Modern_PM.pdf. Accessed May 10, 2010.

8 NRC. 2008. Pre-Milestone A and Early-Phase Systems Engineering: A Retrospective Review and Benefits for Future Air Force Systems Acquisition. Washington, D.C.: The National Academies Press, p. 14.

Early in the present study, the Air Force pointed to three elements of the existing acquisition process as examples of things that required improvement. First, evolutionary technology transition has suffered from less-than-adequate early (pre-Milestone A) planning activities that manifest themselves later in problems of cost, schedule, and technical performance. Second, revolutionary transition too often competes with evolutionary transition, as reflected in efforts to rush advanced technology to the field while failing to recognize and repair chronic underfunding of evolutionary Air Force acquisition efforts. Third, there appears to be no single Air Force research and development (R&D) champion designated to address these issues.

Although technology plays a part in all Air Force activities, from operations to sustainment and systems modification, the task for the present study centered on the development and acquisition of new major systems. Accordingly, this study focuses on how to improve the ability to specify, develop, test, and insert new technology into new Air Force systems, primarily before Milestone B. Box 1-1 contains the statement of task for this study.

To address the statement of task, the Committee on Evaluation of U.S. Air Force Preacquisition Technology Development was formed. Biographical sketches of the committee members are included in Appendix A.

THE PARAMETERS OF THIS STUDY

The statement of task specifically requires assessment of relevant DoD processes and policies, both current and historical, and invites proposed changes to Air Force workforce, organization, policies, processes, and resources. Issues of particular concern include resourcing alternatives for pre-Milestone B activities and the role of technology demonstrations. Previous NRC studies, and studies by other groups, have addressed portions of the material covered in this report.10,11,12,13,14,15,16 Importantly, however, no previous report was expressly limited to addressing the topic of early-phase technology development that is the focus of this study.

Studies of major defense systems acquisition are certainly not in short supply. Over the past half-century there have been literally scores of such assessments, and their findings are remarkably similar: weapons systems are too expensive, they take too long to develop, and they often fail to live up to expectations. A central question for any reader of this report, then, is: What makes this study any different?

The answer is, in a word, technology, and its development and integration into Air Force systems. From those early days of Hap Arnold, it was the capable development, planning, and use of technology that set the Air Force and its predecessors apart from the other services. That technological reputation needs to be preserved—some would say recaptured—if the Air Force is to continue to excel in the air, space, and cyberspace domains discussed later in this chapter.

One cause of this technological challenge is that, for a variety of reasons, the Air Force has lost focus on technology development over the past two decades. The Kaminski report makes clear that Air Force capabilities in the critical areas of systems engineering and Development Planning were allowed to atrophy. These declines had their origins in legislative actions, financial pressures, demographics, workforce development, and a host of other sources. Taken together, these factors led to a decline in the Air Force’s ability to successfully integrate technology into weapons systems in a timely and cost-effective manner.

10 NRC. 2008. Pre-Milestone A and Early-Phase Systems Engineering: A Retrospective Review and Benefits for Future Air Force Systems Acquisition. Washington, D.C.: The National Academies Press.

11 NRC. 2010. Achieving Effective Acquisition of Information Technology in the Department of Defense. Washington, D.C.: The National Academies Press.

12 Assessment Panel of the Defense Acquisition Performance Assessment Project. 2006. Defense Acquisition Performance Assessment Report. A Report by the Assessment Panel of the Defense Acquisition Performance Assessment Project for the Deputy Secretary of Defense. Available at https://acc.dau.mil/CommunityBrowser.aspx?id=18554. Accessed June 10, 2010.

13 Gary E. Christle, Danny M. Davis, and Gene H. Porter. 2009. CNA Independent Assessment. Air Force Acquisition: Return to Excellence. Alexandria, Va.: CNA Analysis & Solutions.

14 Business Executives for National Security. 2009. Getting to Best: Reforming the Defense Acquisition Enterprise. A Business Imperative for Change from the Task Force on Defense Acquisition Law and Oversight. Available at http://www.bens.org/mis_support/Reforming%20the%20Defense.pdf. Accessed June 10, 2010.

15 USAF. 2008. Analysis of Alternatives (AoA) Handbook: A Practical Guide to Analysis of Alternatives. Kirtland Air Force Base, N.Mex.: Air Force Materiel Command’s (AFMC’s) Office of Aerospace Studies. Available at http://www.oas.kirtland.af.mil/AoAHandbook/AoA%20Handbook%20Final.pdf. Accessed June 10, 2010.

16 DoD. 2009. Technology Readiness Assessment (TRA) Deskbook. Prepared by the Director, Research Directorate (DRD), Office of the Director, Defense Research and Engineering (DDR&E). Washington, D.C.: Department of Defense. Available at http://www.dod.mil/ddre/doc/DoD_TRA_July_2009_Read_Version.pdf. Accessed September 2, 2010.

BOX 1-1 Statement of Task

The NRC will:

This study was also commissioned at a time of increased interest in technology management outside the Air Force and beyond the DoD. Disappointed by acquisition programs that underperformed in terms of cost and schedule, Congress enacted the Weapon Systems Acquisition Reform Act of 2009 (WSARA; Public Law 111-23). One of WSARA’s major goals is to reduce the likelihood of future programmatic failure by reducing concurrency (i.e., the simultaneity of two or more phases of the DoD acquisition process) and thereby ensuring that systems and major subsystems are technologically mature before entering production. This congressional intent is apparent in WSARA’s emphasis on systems engineering and development planning, and in related mandates for technology demonstrations, competitive prototypes, and preliminary design reviews earlier in the acquisition cycle, before costly system development and production decisions are made.

COMMITTEE APPROACH TO THE STUDY

Throughout the study, the committee met with numerous Air Force stakeholders to gain a fuller understanding of the sponsor’s needs and expectations relating to the elements contained in the statement of task. The full committee met four times to receive briefings from academic, government, and industry experts in technology development, and it conducted a number of visits during which subgroups of the committee met with various stakeholders. The committee met two additional times to discuss the issues and to finalize its report. Appendix B lists specific meetings, individual participants, and participating organizations.

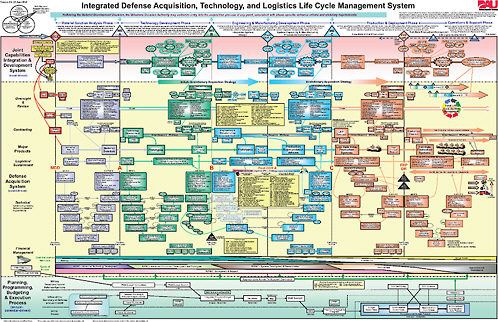

The almost absurd complexity of Figure 1-1 illustrates how daunting the DoD acquisition system is. (A clearer image is not available.) Given the intricacy of the system, coupled with the relatively short timeline of the study, the committee endeavored at the outset to distill this complexity into a basic touchstone mission statement: how to improve the Air Force’s ability to specify, develop, test, and insert new technology into Air Force systems.

THREE DOMAINS OF THE AIR FORCE

The mission of the United States Air Force is to fly, fight, and win … in air, space, and cyberspace.17

This mission statement, set forth in a joint September 2008 letter from the Secretary of the Air Force and the Air Force Chief of Staff, emphasizes the importance of all three domains in which the Air Force must operate. Each domain involves special considerations and challenges. Consequently, each of the three domains represents a unique environment in terms of science and technology (S&T) and major systems acquisition.

Air

The air domain is perhaps most frequently associated with Air Force major systems acquisition. It is characterized by relatively low (and declining) numbers of new major systems, with a relatively small number of industry contractors competing fiercely to win each new award. In this realm, relationships between government and industry tend to be at arm’s length and sometimes adversarial. The duration of acquisition programs tends to be long, often measured in decades, whereas “buy quantities” have declined dramatically over time.18

17 “U.S. Air Force Mission Statement and Priorities.” September 15, 2008. Available at http://www.af.mil/information/viewpoints/jvp.asp?id=401. Accessed May 19, 2010.

FIGURE 1-1

Department of Defense acquisition process. SOURCE: Defense Acquisition University. Available at https://ilc.dau.mil/default_nf.aspx. Accessed June 11, 2010.

In the air domain, not all technology insertion takes place before the initial delivery of a system. Aircraft now stay in the active inventory far longer than in years past. For example, the newest B-52 is now 48 years old, and most KC-135 aerial refueling tankers are even older. The advancing age of such aircraft means that numerous carefully planned and executed technology insertions are required to upgrade and extend the lives of aging fleets.

These post-acquisition technology-based activities are in themselves both militarily necessary and economically significant, and they are increasingly characteristic of the air domain. The financial impacts of these activities are especially noteworthy. For example, the periodic overhauls of B-2 stealth bombers require 1 year and $60 million—per aircraft. Yet the military imperatives leave the Air Force little choice:

“Although there is nothing else like the B-2, it’s still a plane from the 1980s built with 1980s technology,” said Peter W. Singer, a senior fellow at the Brookings Institution think tank. Other countries have developed new ways to expose the B-2 on radar screens, so the Air Force has to upgrade the bomber in order to stay ahead. “Technology doesn’t stand still; it’s always moving forward,” he said. “It may cost an arm and a leg, but you don’t want the B-2 to fall behind the times.”19

Major aeronautical systems are thus characterized by large expenditures for research and development, as well as for the initial procurement and periodic updates of the end items themselves. But the largest expenditures tend to be in operations and support (O&S) costs over the life of a system. This is increasingly true, as systems are kept in the inventory for longer periods: The initial purchase price of the Air Force’s B-52 bomber was about $6 million in 1962; that sum is dwarfed by the resources required to operate and support the bomber over the last half-century.20

Space

“Space is different.” This idea was raised repeatedly during the course of this study. The challenges of the space world are, in fact, significantly different from those in the air domain. Some of those differences are obvious. For example, space presents an extraordinarily unforgiving environment in which few “do-overs” are possible; by comparison, the air domain offers the luxury of maturing complex technology prototypes through a sequence of relatively rapid “fly-fix-fly” spirals during the development phase. This “spiral development process” for aircraft allows complex technologies to be refined in response to empirical observations. The space domain offers few such opportunities. Furthermore, unlike aircraft, which are built on production lines, space systems tend to be produced in small quantities by skilled craftspeople.

18 Following, for example, are approximate buy quantities in the world of multi-engine bombers, from World War II to today: 18,000 B-24s; 12,000 B-17s; 4,000 B-29s; 1,200 B-47s; 800 B-52s; 100 B-1s; 21 B-2s. Similar purchasing patterns exist for fighter and cargo aircraft.

19 W.J. Hennigan. 2010. “B-2 Stealth Bombers Get Meticulous Makeovers.” Los Angeles Times, June 10. Available at http://articles.latimes.com/2010/jun/10/business/la-fi-stealth-bomber-20100610. Accessed June 22, 2010.

20 Information available at https://acc.dau.mil/CommunityBrowser.aspx?id=241468. Accessed May 18, 2010.

Additionally, in the space domain there are few if any “flight test vehicles,” in that every launch is an operational mission, and failures in the always-critical launch phase tend to be spectacular and irreversible. Therefore, space development programs rely on “proto-qualification” or engineering models and must “test like you fly” in order to maximize the opportunity for on-orbit mission success. Once on orbit, a space platform must work for its lifetime as designed, since the opportunities for in-space rework, repair, and refurbishment are limited.

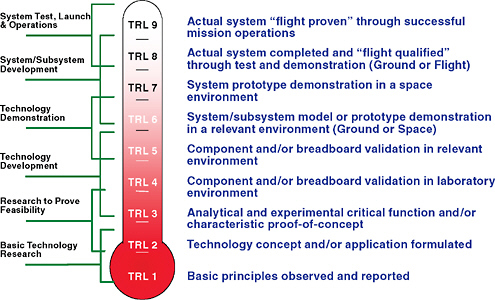

In consideration of these factors, the space domain has led the way in developing measures of technological stability. With the stakes so high and with so little ability to rectify problems once a spacecraft is in operational use, space developers and operators have found it necessary to ensure that only tested and stable systems and components make their way into space. Thus, NASA developed the concept of Technology Readiness Levels (TRLs) in the 1980s.21 Based on the idea that incorporating unproven technology into critical space systems was neither safe nor cost-effective, NASA used the seven-tiered TRL process (later expanded to nine tiers) to assess objectively the maturity and stability of components prior to placing them in space systems (illustrated in Figure 1-2). The TRL concept later spread to the military and commercial worlds and inspired an entire family of related assessment tools, including Manufacturing Readiness Levels, Integration Readiness Levels, and Systems Readiness Levels.

As with the air domain, the R&D phase in the space domain is expensive, as is the purchase of a space vehicle itself. Unlike aircraft, however, space systems tend to have relatively low O&S costs throughout their lives, with operational expenses generally limited to ground station management and communication with the space vehicle. According to Defense Acquisition University, O&S costs consume 41 percent of a fixed-wing aircraft’s life-cycle cost (LCC), but only 16 percent of the LCC for the average spacecraft.22

21 Additional information on TRL definitions is available through the NASA Web site at http://esto.nasa.gov/files/TRL_definitions.pdf. Accessed June 22, 2010.

22 Information available at https://acc.dau.mil/CommunityBrowser.aspx?id=241468. Accessed May 18, 2010.

FIGURE 1-2

Technology Readiness Level (TRL) descriptions. SOURCE: NASA, modified from http://www.hq.nasa.gov/office/codeq/trl/trlchrt.pdf. Accessed June 22, 2010.

Compared to an aircraft system that can be modified to extend its life for many years, a spacecraft has a finite life on orbit, limited by the operating environment of space and the amount of fuel onboard. As a result, space systems tend to be in a constant state of acquisition. As an example, the Global Positioning System (GPS) Program, managed by the Space and Missile Systems Center, is responsible for flying the current generation of satellites on orbit, for producing the next generation of satellites, and for developing the follow-on GPS system—all at the same time.

The space domain’s heavy reliance on Federally Funded Research and Development Centers (FFRDCs) is another characteristic that sets it apart from the air domain. The Aerospace Corporation has partnered with the Air Force since 1960 to provide five core technological competencies to the Air Force’s space efforts:

The Aerospace FFRDC provides scientific and engineering support for launch, space, and related ground systems. It also provides the specialized facilities and continuity of effort required for programs that often take decades to complete. This end-to-end involvement reduces development risks and costs, and allows for a high probability of mission success. The Department of Defense has identified five core competencies for the Aerospace FFRDC: launch certification, system-of-systems engineering, systems development and acquisition, process implementation, and technology application. The primary customers are the Space and Missile Systems Center of Air Force Space Command and the National Reconnaissance Office, although work is performed for civil agencies as well as international organizations and governments in the national interest.23

Senior warfighters have raised concerns about the overall health of the space industrial base and its ability to meet the needs of U.S. national security. In particular, studies have pointed to inconsistent performance and reliability among third- and fourth-tier suppliers, many of which perform space contracts intermittently and cannot sustain design, engineering, and manufacturing capabilities in the absence of continual work.24,25,26,27,28

Cyberspace

In the early years of the Air Force’s space era, there was much uncertainty about roles and missions, about organizational structure, about boundaries and policies and processes. It took decades to resolve these matters, and in fact issues still arise from time to time—an example being the recently resolved issue of whether strategic missiles logically belong to the Air Combat Command, or to the Global Strike Command, or to the Space Command.

That same level of uncertainty now characterizes the Air Force’s cyberspace efforts. For example, in August 2009, a joint letter from the Secretary of the Air Force (SAF) and the Chief of Staff of the Air Force (CSAF) countermanded previous guidance and set up a new command—the 24th Air Force—as “the Air Force service component to the USCYBERCOM [United States Cyber Command], aligning authorities and responsibilities to enable seamless cyberspace operations.”29

23 Information from the Aerospace Corporation. Available at http://www.aero.org/corporation/ffrdc.html. Accessed May 18, 2010.

24 Information from Booz Allen Hamilton. May 19, 2003. Available at www.boozallen.com/consulting/industries_article/659130. Accessed May 27, 2010.

25 Eric R. Sterner and William B. Adkins. 2010. “R&D Can Revitalize the Space Industrial Base.” Space News, February 22.

26 Aerospace Industries Association. 2010. Tipping Point: Maintaining the Health of the National Security Space Industrial Base. September. Available at http://www.aia-aerospace.org/assets/aia_report_tipping_point.pdf. Accessed January 29, 2011.

27 Jay DeFrank. 2006. The National Security Space Industrial Base: Understanding and Addressing Concerns at the Sub-Prime Contractor Level. The Space Foundation. April 4. Available at http://www.spacefoundation.org/docs/The_National_Security_Space_Industrial_Base.pdf. Accessed January 29, 2011.

28 Defense Science Board. 2003. Acquisition of National Security Space Programs. Washington, D.C.: Office of the Under Secretary of Defense (Acquisition, Technology, and Logistics). Available at http://www.globalsecurity.org/space/library/report/2003/space.pdf. Accessed January 29, 2011.

29 Available at http://www.24af.af.mil/shared/media/document/AFD-090821-046.pdf. Accessed May 18, 2010.

But as late as August 2010, much about Air Force efforts in cyberspace remained unresolved, as a 24th Air Force Web site explained:

At this time, we do not yet know the full complement of wings, centers and/or other units to be assigned to 24th Air Force. The organization of the required capabilities is still being determined…. The exact size of 24th Air Force is unknown at this time…. The final numbers for Headquarters 24th Air Force are yet to be determined.30

Similarly, there is much yet to be learned about systems acquisition in the cyberspace domain. However, some themes can be deduced.

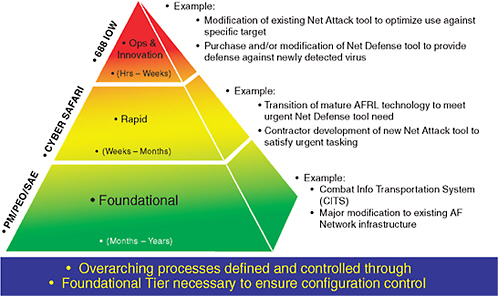

The first theme is the need for speed and agility in a world where threats can arise in days, or even hours. Throughout the course of this study, considerable time was devoted to learning about rapid-reaction acquisition efforts in organizations such as Lockheed Martin’s Skunk Works, the Air Force’s Big Safari organization, and the Joint Improvised Explosive Device Defeat Organization (JIEDDO). Common to all of these efforts was a strong sense of urgency, with program durations often measured in weeks or months rather than years and decades. But cyberspace reaction cycles are often even shorter, which for some raises the question: Is the term “major system acquisition” even relevant in the cyberspace domain?31

Program offices like Big Safari and JIEDDO highlight the need to keep pace with an agile and adaptive enemy; in such programs rapid acquisition processes are vital to the safeguarding of military forces and thus to the national interest. The cyberspace domain has similarly short time horizons, and these can be expected to place special demands on the acquisition of cyberspace technology (see Figure 1-3).

A second likely theme is that success in cyberspace acquisition will depend on building and rewarding a culture of innovation, and in that sense it will require more risk tolerance and failure tolerance than are commonly found in bureaucratic organizations. In 2008, the Secretary of the Air Force, the Honorable Michael Wynne, said that a new cyberspace organization would need to encourage innovation from the bottom ranks to the top:

Calling innovation the top goal of the command, Wynne said the fledgling organization must be “more agile than any other in the Department of Defense” if it is to succeed at fighting in a climate where technology and tactics are often obsolete just months after being introduced…. to compete in the cyber battlefield, AFCYBER [Air Force Cyber] must be able to rapidly invent, develop and field new technologies, sometimes in a matter of weeks. To do this, the command will have to adopt a culture that encourages risk taking, especially among its young officers…. “Innovation will almost always come from the lower ranks,” he said, “from those who have not internalized any agreed upon ways of doing things.”

30 Available at http://www.24af.af.mil/questions/topic.asp?id=1666. Accessed August 25, 2010.

31 Jon Goding, Principal Engineering Fellow, Raytheon. 2010. “Improving Technology Development in Cyber: Challenges and Ideas.” Presentation to the committee, June 7, 2010.

FIGURE 1-3

Cyberspace agile acquisition construct recently adopted by the United States Air Force. NOTE: Program Manager (PM), Program Executive Officer (PEO), Service Acquisition Executive (SAE), Information Operations Wing (IOW). SOURCE: Air Force Materiel Command/Electronic Systems Center.

Wynne called on the command to foster officers who make mistakes and take risks that will lead to innovation. “Not only must you allow good ideas to percolate up, you must make your officers’ careers dependent upon demonstrating innovation. Being a flawless officer in Cyber Command should lead to early retirement.”32

A third likely characteristic of cyberspace acquisition is even closer collaboration among government, industry, and academic institutions, both domestic and international. The FFRDC model discussed in the preceding subsection on the space domain is already a critical part of the cyberspace domain, as exemplified by the MITRE Corporation’s long-standing support of the Electronic Systems Center and the Software Engineering Institute’s support of the DoD. The need for ready access to highly specialized cyberspace expertise closely resembles the needs found in the space domain. Additionally, commercial industry must be brought into the collaboration, because commercial communities such as financial services and computer security have a long history of dealing with cyberspace security, integration, and testing, and thus can contribute usefully to the Air Force’s operational capability.

32 Inside the Air Force. Available at http://integrator.hanscom.af.mil/2008/June/06262008/06262008-08.htm. Accessed May 18, 2010.

Technology development for the air and space domains is driven by domestically based defense contractors, focusing largely on military applications. In the cyberspace world, however, the commercial market dwarfs that of the military, and both threats and resources are largely unaffected by political or geographic boundaries.33 Cyberspace acquisitions will therefore require much tighter cooperation between technology development communities, foreign and domestic, inside and outside the military-industrial complex. A recent paper from the Air Force illustrates some of the benefits of collaborative approaches to cyberspace acquisition:

A collaborative environment and integrated network that enables rapid reach-back into a broad and diverse array of cyber experts throughout the nation, giving the warfighter access to cutting edge technology and expertise that otherwise would be unavailable to the military…. A process to discover world-class cyber experts, who may be either unaware of the military cyberspace requirements or overlooked because they work for smaller, less-known firms.34

It may also be useful to recognize that the cyberspace domain today resembles the nascent air domain around 1910, or the fledgling space world of 1960.

AIR FORCE SCIENCE AND TECHNOLOGY STRATEGIC PLANNING

During this study, technology transition was described as a “contact sport: every successful technology hand-off requires both a provider and a receiver.”35 The probability of a successful technology handoff increases when the provider and the receiver work together in a disciplined way to identify capability needs and match them to an S&T portfolio.

Approximately 30 percent of the Air Force Research Laboratory’s (AFRL’s) technology development efforts are “technology-push” efforts, driven by technologists who perceive how an emerging technology might enable a new operational capability in advance of a stated user need. These contrast with “technology-pull” efforts, in which technology is developed in response to a known capability need. The technology-pull portion of AFRL technology development is motivated by user requirements, which originate from multiple sources, including Major Commands (MAJCOMs), Product Centers, and air logistics centers.36

33 Jon Goding, Principal Engineering Fellow, Raytheon. 2010. “Improving Technology Development in Cyber: Challenges and Ideas.” Presentation to the committee, June 7, 2010.

34 Available at http://www.docstoc.com/docs/30607347/The-Collaboration-Imperative-for-Cyberspace-Stakeholders. Accessed May 18, 2010.

35 Michael Kuliasha, Chief Technologist, Air Force Research Laboratory. 2010. “AFRL Perspective on Improving Technology Development and Transition.” Presentation to the committee, May 13, 2010.

In some cases, user needs are assessed and prioritized before they are provided to the AFRL. For example, Product Centers are again working with their warfighter partners to prioritize requirements, which are influenced, in part, by their understanding of technology enablers emerging from the Air Force, industry, and university laboratories. These needs are prioritized within a particular MAJCOM and Product Center channel, but there is no mechanism that can adequately filter and prioritize needs across the Air Force today. To its credit, the Air Force recognizes this deficiency and is taking steps to develop a more robust corporate mechanism for technology needs assessment and prioritization.37

THE “THREE R” FRAMEWORK

At the beginning of the study, the committee found it useful to organize its thinking around simple axiomatic principles. This resulted in a framework built on (1) Requirements, (2) Resources, and (3) the Right People, or the “Three Rs.” The framework is a concise expression of unarguable criteria for successful program execution. If all three components are favorable, program success is possible; if any of the three is unfavorable, the program will most likely fail to deliver as expected. The framework is summarized in Box 1-2, and each principle is considered in the subsections below.

Requirements

The importance of clear, stable, feasible, and universally understood requirements has long been recognized and has been validated by countless studies. Requirements also need to be trade-off tolerant; that is, they need to be flexible enough to permit meaningful analysis of alternative solutions. Inadequately defined requirements drive program instability through late design changes that increase costs and slip schedules, which in turn erode political support for the program. These ripples do not stop at the boundary of a troubled program: as costs rise and schedules slide, the impact spreads to other programs, which then bear the costs imposed to save the original troubled system.

BOX 1-2 The “Three Rs”

Early in this study, the committee developed a framework, the “Three Rs,” for organizing its findings and recommendations. The framework describes characteristics that, in the committee’s judgment, need to be addressed fully in order for successful technology development to occur. That framework is composed of the following:

Our assessment is that the current requirements process does not meet the needs of the current security environment or the standards of a successful acquisition process. Requirements take too long to develop, are derived from Joint Staff and Service views of the Combatant Commands’ needs and often rest on immature technologies and overly optimistic estimates of future resource needs and availability. This fact introduces instability into the system when the lengthy and insufficiently advised requirement development process results in capabilities that do not meet warfighter needs or the capabilities that are delivered “late-to-need.”38

A second cause of difficulty in the area of requirements is that there can be a large disconnect between what the warfighter wants (“desirements,” as one presenter to the committee put it) and what the laws of science permit. In those cases, overly optimistic estimates early in a project’s life can end up requiring miracles, or worse, sequential miracles, in order to become reality. In the words of the Defense Acquisition Performance Assessment Report (called the DAPA report; commissioned by Acting Deputy Secretary of Defense Gordon England in June 2005):

Neither the Joint Capabilities Integration and Development System nor the Services requirement development processes are well informed about the maturity of technologies that underlie achievement of the requirement or the resources necessary to realize their development. No time-phased, fiscally and technically informed capabilities development and divestment plan exists to guide and prioritize the development and understanding of weapon system requirements.39

38 Assessment Panel of the Defense Acquisition Performance Assessment Project. 2006. Defense Acquisition Performance Assessment Report. A Report by the Assessment Panel of the Defense Acquisition Performance Assessment Project for the Deputy Secretary of Defense, p. 35. Available at http://www.frontline-canada.com/Defence/pdfs/DAPA-Report-web.pdf. Accessed January 29, 2011.

In sum, then, a successful program requires the vigilant management of the requirements process. The Government Accountability Office (GAO) summed it up well in its 2010 report Defense Acquisitions: Strong Leadership Is Key to Planning and Executing Stable Weapon Programs, which studied 13 successful acquisition programs and drew lessons from those successes:

The stable programs we studied exhibited the key elements of a sound knowledge-based business plan at program development start. These programs pursued capabilities through evolutionary or incremental acquisition strategies, had clear and well-defined requirements, leveraged mature technologies and production techniques, and established realistic cost and schedule estimates that accounted for risk. They then executed their business plans in a disciplined manner, resisting pressures for new requirements and maintaining stable funding. The programs we reviewed typically took an evolutionary acquisition approach, addressing capability needs in achievable increments that were based on well-defined requirements. To determine what was achievable, the programs invested in systems engineering resources early on and generally worked closely with industry to ensure that requirements were clearly defined. Performing this up-front requirements analysis provided the knowledge for making trade-offs and resolving performance and resource gaps by either reducing the proposed requirements or deferring them to the future. The programs were also grounded in well-understood concepts of how the weapon systems would be used.40

Resources

As is the case with requirements, stability of resources is essential to program success. Turbulence in any of the following areas (technology, budgets, acquisition regulation, legislation, policy, or processes) contributes to program failure, as the resulting uncertainty deprives government and industry of the ability to execute programs as planned. One key area is technological maturity, whose value in predicting program success the GAO has examined. In a 1999 review of 23 technology development efforts in government and commercial projects, the GAO concluded that using formal approaches to assess technological maturity, such as the NASA-developed Technology Readiness Level (TRL) scale discussed elsewhere in this report, was crucial to program success. As the 1999 GAO report stated:

[D]emonstrating a high level of maturity before new technologies are incorporated into product development programs puts those programs in a better position to succeed. The TRLs, as applied to the 23 technologies, reconciled the different maturity levels with subsequent product development experiences. They also revealed when gaps occurred between a technology’s maturity and the intended product’s requirements. For technologies that were successfully incorporated into a product, the gap was recognized and closed before product development began, improving the chances for successful cost and schedule outcomes. The closing of the gap was a managed result. It is a rare program that can proceed with a gap between product requirements and the maturity of key technologies and still be delivered on time and within costs.41

39 Ibid., p. 36.

40 GAO. 2010. Defense Acquisitions: Strong Leadership Is Key to Planning and Executing Stable Weapon Programs. Washington, D.C.: GAO, p. 16. Available at http://www.gao.gov/new.items/d10522.pdf. Accessed June 11, 2010.

Additional emphasis on technological maturity was mandated by the Weapon Systems Acquisition Reform Act of 2009 (WSARA), which requires, among many other provisions, that Major Defense Acquisition Programs (MDAPs) carry out competitive prototyping of the system or of critical subsystems and complete their Preliminary Design Review before Milestone B.42

Just as technological maturity is needed, the financial resources available to a program manager must be stable and predictable. During this study, frequent reference was made to the Valley of Death, the graveyard for technology development efforts that survive the early exploratory R&D phases but then fall victim to a lack of funding to bridge the gap to system development and production.43 With its longer time horizons, the DoD’s Planning, Programming, Budgeting, and Execution System (PPBES) is ill equipped to handle problems like the Valley of Death or the rapid acquisitions often required today. A 2006 study from the Center for Strategic and International Studies focused on joint programs, but its message applies to all acquisition efforts:

The current Planning, Programming, Budgeting, and Execution System (PPBES) resource allocation process is not integrated with the requirements process and does not provide sufficient resources for joint programs, especially in critical early stages of coordination between and perturbations in resource planning and requirements planning frequently result in program funding instability. Such instability increases program costs and triggers schedule slippages across DoD acquisition programs. Chronic under-funding of joint programs is endemic to the current resource allocation system.44

41 GAO. 1999. Best Practices: Better Management of Technology Development Can Improve Weapon System Outcomes. GAO NSIAD-99-162. Washington, D.C.: General Accounting Office, p. 3. Available at http://www.gao.gov/archive/1999/ns99162.pdf. Accessed June 11, 2010.

42 Weapon Systems Acquisition Reform Act of 2009 (Public Law 111-23, May 22, 2009).

43 Dwyer Dennis, Brigadier General, Director, Intelligence and Requirements Directorate, Headquarters Air Force Materiel Command, Wright-Patterson Air Force Base, Ohio. 2010. “Development Planning.” Presentation to the committee, March 31, 2010.

44 David Scruggs, Clark Murdock, and David Berteau. 2006. Beyond Goldwater Nichols: Department of Defense Acquisition and Planning, Programming, Budgeting, and Execution System Reform. Washington, D.C.: Center for Strategic and International Studies. Available at http://csis.org/files/media/csis/pubs/bgnannotatedbrief.pdf. Accessed June 11, 2010.

Among the most critical resources are robust processes, from the very conception of a program. For both government and industry, well-defined and well-understood work processes in all phases of program management are essential to successful technological development. Repeatedly during this study, evidence was presented that within the Air Force some of these processes have been diluted in significant ways in the past decade and are only now beginning to be reinvigorated.

In particular, there was general agreement that the systems engineering field had declined.45 Systems engineering has since been revived and received additional attention in the 2009 WSARA legislation. But once a field has been allowed to atrophy, for whatever reason, redeveloping that capability is a long and arduous task.

A similar situation exists with the field of Development Planning. For decades, Product Centers had DP functions (“Product Center Development Planning Organizations,” or, in headquarters shorthand, XRs) that worked with warfighter commands to address alternatives for meeting future needs. These offices operated in the early conceptual phase, before a program of record existed, to help a using command clarify its requirements, assess the feasibility of alternatives, and settle on the preferred way to meet those requirements. Often, the DP staff who worked on the early stages of a program later formed the initial cadre of its program office, if one was ultimately established.

As with the rebirth of systems engineering, the disestablishment of Development Planning is being rectified. Product Center DP directors provided valuable input to this study, and although it is clear that their function is being reborn, it is equally obvious that a capability can be eliminated quickly by one decision but can only be revived with time and with great difficulty.

The Right People

The third critical element for a successful program is the right people: program managers and key staff with the right skills and experience, in the right numbers, to lead programs successfully. This category also includes the right personnel policies and the “right” cultures, both of which can contribute to program success.

The acquisition workforce has been buffeted by change for decades. Every acquisition setback has generated a new round of “fixes,” which by now have so constrained the system that some wonder it functions at all. This workforce has been downsized, outsourced, and reorganized to the point of distraction, yet there is little or no evidence that discernible improvements have resulted.

This disruption in the acquisition workforce was well recognized by those close to it. Beginning in the early 1990s, many of the best and brightest Air Force acquisition professionals chose to retire—many of them early—to take jobs with advisory and assistance services (A&AS) contractors. As these highly competent and experienced performers left, they were often not replaced, and so an enormous “bathtub” developed: Air Force acquisition specialties became understaffed, and many of the people who did remain were either in very senior oversight positions, or were very junior and, lacking mentors, very inexperienced. The middle of the force, the journeymen and junior managers, quite literally disappeared.

This was recognized by the authors of the DAPA report.46 Released in 2006, that report accurately described the state of the acquisition workforce:

Key Department of Defense acquisition personnel who are responsible for requirements, budget and acquisition do not have sufficient experience, tenure and training to meet current acquisition challenges. Personnel stability in these key positions is not sufficient to develop or maintain adequate understanding of programs and program issues. System engineering capability within the Department is not sufficient to develop joint architectures and interfaces, to clearly define the interdependencies of program activities, and to manage large scale integration efforts. Experience and expertise in all functional areas [have] been de-valued and contribute to a “Conspiracy of Hope” in which we understate cost, risk and technical readiness and, as a result, embark on programs that are not executable within initial estimates. This lack of experience and expertise is especially true for our program management cadre. The Department of Defense exacerbates these problems by not having an acquisition career path that provides sufficient experience and adequate incentives for advancement. The aging science and engineering workforce and declining numbers of science and engineering graduates willing to enter either industry or government will further enforce the negative impact on the Department’s ability to address these concerns. With the decrease in government employees, there has been a concomitant increase in contract support with resulting loss of core competencies among government personnel.47

In May 2009, 3 years after the DAPA release and after being rocked by two major failed source selections in the previous year, the Air Force released its Acquisition Improvement Plan.48 It cited five shortcomings of the acquisition process, all of which pointed, in whole or in part, to failures on the human side of the acquisition enterprise:

- Degraded training, experience and quantity of the acquisition workforce;
- Overstated and unstable requirements that are difficult to evaluate during source selection;
- Under-budgeted programs, changing of budgets without acknowledging impacts on program execution, and inadequate contractor cost discipline;
- Incomplete source selection training that has lacked “lessons learned” from the current acquisition environment, and delegation of decisions on leadership and team assignments for MDAP source selections too low; and
- Unclear and cumbersome internal Air Force organization for acquisition and Program Executive Officer (PEO) oversight.49

46 Assessment Panel of the Defense Acquisition Performance Assessment Project. 2006. Defense Acquisition Performance Assessment Report. A Report by the Assessment Panel of the Defense Acquisition Performance Assessment Project for the Deputy Secretary of Defense. Available at http://www.frontline-canada.com/Defence/pdfs/DAPA-Report-web.pdf. Accessed January 29, 2011.

47 Ibid., p. 29.

48 USAF. 2009. Acquisition Improvement Plan. Washington, D.C.: USAF. Available at http://www.dodbuzz.com/wp-content/uploads/2009/05/acquisition-improvement-plan-4-may-09.pdf. Accessed June 11, 2010.

Clearly the two failed source selections had been a major blow to the Air Force’s reputation in acquisition. The Acquisition Improvement Plan closes with a call to recapture the successes of yesterday:

We will develop, shape, and size our workforce, and ensure adequate and continuous training of our acquisition, financial management, and requirements generation professionals. In so doing we will re-establish the acquisition excellence in the Department of the Air Force that effectively delivered the Intercontinental Ballistic Missile; the early reconnaissance, weather, and communication satellites; the long-range bombers like the venerable B-52; and fighters like the ground-breaking F-117A….50

Those steps are under way. Evidence presented during this study indicated that expedited hiring was being used to fill empty positions, in accordance with the DAPA recommendations.51 However, rebuilding a skilled and experienced workforce poses challenges much like those facing efforts to reinvigorate systems engineering and Development Planning: as with those critical processes, a skilled workforce can shrink quickly but will take decades or more to rebuild and mature.

REPORT ORGANIZATION

The remainder of this report is organized to correspond to the four main paragraphs of the statement of task. Chapter 2, “The Current State of the Air Force’s Acquisition Policies, Processes, and Workforce,” addresses the first paragraph of the statement of task. Chapter 3, “Government and Industry Best Practices,” addresses the third paragraph. Chapter 4, “The Recommended Path Forward,” responds to the second and fourth paragraphs. Importantly, the committee chose to present its findings in Chapters 2 and 3 and to consolidate the associated recommendations, together with the relevant findings reiterated from those chapters, in Chapter 4. Finally, Appendixes C and D provide background information related to the subjects addressed in Chapter 2.

49 Ibid.

50 Ibid., p. 14.

51 Assessment Panel of the Defense Acquisition Performance Assessment Project. 2006. Defense Acquisition Performance Assessment Report. A Report by the Assessment Panel of the Defense Acquisition Performance Assessment Project for the Deputy Secretary of Defense. Available at https://acc.dau.mil/CommunityBrowser.aspx?id=18554. Accessed June 11, 2010.