2

Key Technology Considerations

Radio-frequency (RF) communication advanced steadily throughout the 20th century. In recent years, it has been transformed profoundly by technological advances, both in the capabilities of individual radios and in the design of networks and other systems of radios. This discussion presents some highlights of recent advances and their implications for the design of radios and radio systems and for regulation and policy. It does not aim to describe the full range of technical challenges associated with wireless communications; the interested reader is referred to the 1997 NRC report The Evolution of Untethered Communications,1 which describes many of the fundamental challenges associated with wireless communications, or, for a more recent view of the technology and its applications, to several recent textbooks on wireless communications.2

TECHNOLOGICAL ADVANCES IN RADIOS AND SYSTEMS OF RADIOS

Digital Signal Processing and Radio Implementation in CMOS

Modern communications technologies and systems, including those that are wireless, are mostly digital. However, all RF communications ultimately involve transmitting and receiving analog signals; Box 2.1 describes the relationship between digital and analog communication.

Digital signal processing (Box 2.2) is increasingly used to detect the desired signal and reject other “interfering” signals. This shift has been enabled by several trends:

- Increasing use of complementary metal oxide semiconductor (CMOS) integrated circuits (Box 2.3) in place of discrete components;
- The application of dense, low-cost digital logic (spawned primarily by the computer and data networking revolutions) for signal processing;
- New algorithms for signal processing;
- Advances in practical implementation of signal processing for antenna arrays; and
- Novel RF filter methods.

The shift relies on an important tradeoff: although the RF performance of analog components on a CMOS chip is worse than that of discrete analog components, more sophisticated computation can compensate for these limitations. Moreover, the capabilities of radios built using CMOS can be expected to continue to improve.

The use of digital logic implies greater programmability.3 It is likely that radios with a high degree of flexibility in frequency, bandwidth, and modulation will become available, based on highly parallel architectures programmed with special languages and compilers. These software-defined radios will use software and an underlying architecture that is quite different from conventional desktop and laptop computers, but they will nonetheless have the ability to be programmed to support new applications.

High degrees of flexibility do come at a cost—both financial and in terms of power consumption and heat dissipation. As a result, the wireless transceiver portion (as opposed to the application software that communicates using that transceiver) of low-cost consumer devices is unlikely to become highly programmable, at least in the near future. On the other


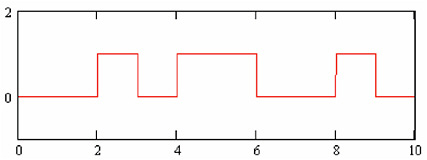

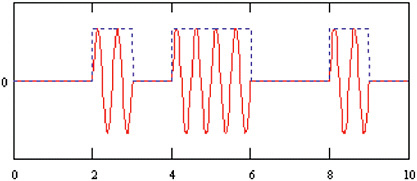

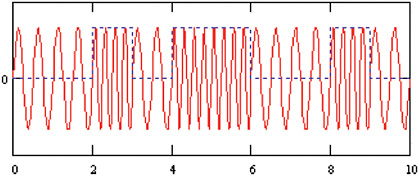

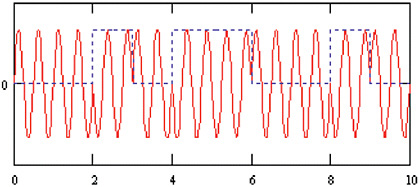

BOX 2.1 Analog Versus Digital Communications

In common usage, the term “analog” has come to mean simply “not digital,” as in “analog wristwatch” or “analog cable TV.” But for the purposes of this report it is useful to trace the meaning to its original technical usage, in early computing. From about 1945 to 1965, an era when digital computers were very slow and very costly, differential equations describing a hypothetical physical system were solved (one might say modeled) by an interconnected network of properly weighted passive components (resistors and capacitors) and small amplifiers, so that the smoothly time-varying voltages at various points in this network were precisely analogous to the time behavior of the corresponding variables (velocity, acceleration, flow, and so on) of the system being modeled. Today, we solve these same equations numerically on a digital computer, very quickly and at low cost. In a similar way, for roughly 100 years, signals were transmitted in analog form (over wires or wirelessly) as a smoothly varying signal representing the changing level and pitch of voice; the hue, saturation, and brightness of each point in a video image; and so forth. But just as high-speed, low-cost numerical representations and digital computations replaced analog computing, it likewise became much more reliable and less expensive to transmit digitally coded numerical samples of a signal, to be reconstituted at the receiver, than to faithfully transmit a continuously varying analog representation. In digital communications, information is encoded into groups of ones and zeroes that represent time-sampled numerical values of the original (voice, music, video, and so on) signal. Ironically, in the wireless domain, once the analog signal has been encoded into a sequence of digital values, smoothly varying forms for the ones and the zeroes must be generated so that the transmitted signal will propagate.

Figure 2.1.1 shows a digital sequence of ones and zeroes. The sharp on-off pulses that work so well inside a computer do not work well at all when sent through space between antennas. Instead, groups of ones and zeroes are represented by smooth changes in the frequency, phase, or amplitude of a sinusoidal carrier, the ideal waveform for propagation. Three schemes are illustrated in Figures 2.1.2 through 2.1.4: amplitude shift keying of the carrier wave between 1 volt and 0 volts (Figure 2.1.2), frequency shift keying of the transmission frequency between f0 and f1 (Figure 2.1.3), and phase shift keying, which shifts the carrier phase by 180 degrees (Figure 2.1.4). At the receiver, these ones and zeroes are interpreted in groups of eight or more bits, representing the numerical value or other symbol transmitted.

FIGURE 2.1.1 Digital sequence of ones and zeroes: 0010110010. SOURCE: Charan Langton, “Tutorial 8—All About Modulation—Part 1,” available at http://www.complextoreal.com. Used with permission.

FIGURE 2.1.2 Amplitude shift keying. SOURCE: Charan Langton, “Tutorial 8—All About Modulation—Part 1,” available at http://www.complextoreal.com. Used with permission.

FIGURE 2.1.3 Frequency shift keying. SOURCE: Charan Langton, “Tutorial 8—All About Modulation—Part 1,” available at http://www.complextoreal.com. Used with permission.

FIGURE 2.1.4 Phase shift keying. SOURCE: Charan Langton, “Tutorial 8—All About Modulation—Part 1,” available at http://www.complextoreal.com. Used with permission.
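A minimal Python sketch of the three keying schemes in Box 2.1, assuming one carrier cycle per bit at f0, f1 equal to twice f0, and (as an arbitrary labeling choice, not taken from the figures) a one sent as 1 volt, as f1, or as a 180-degree phase shift. The function names and parameters are hypothetical. A simple correlating receiver for the phase-shift case shows how the ones and zeroes can be recovered from the smooth waveform.

```python
import math

def modulate(bits, scheme, f0=1.0, f1=2.0, samples_per_bit=16):
    """Return carrier samples for a bit string such as "0010110010".

    Frequencies are in carrier cycles per bit period (hypothetical values);
    the carrier amplitude is 1 volt.
    """
    samples = []
    for i, b in enumerate(bits):
        for s in range(samples_per_bit):
            t = i + s / samples_per_bit  # time measured in bit periods
            if scheme == "ASK":    # amplitude shift keying: 1 V for a one, 0 V for a zero
                amplitude = 1.0 if b == "1" else 0.0
                samples.append(amplitude * math.sin(2 * math.pi * f0 * t))
            elif scheme == "FSK":  # frequency shift keying: f1 for a one, f0 for a zero
                f = f1 if b == "1" else f0
                samples.append(math.sin(2 * math.pi * f * t))
            elif scheme == "PSK":  # phase shift keying: 180-degree shift for a one
                phase = math.pi if b == "1" else 0.0
                samples.append(math.sin(2 * math.pi * f0 * t + phase))
            else:
                raise ValueError(f"unknown scheme: {scheme}")
    return samples

def demodulate_psk(samples, f0=1.0, samples_per_bit=16):
    """Correlate each bit period with the zero-phase carrier; the sign gives the bit.

    Reusing the same reference in every bit period is valid here because f0
    is a whole number of carrier cycles per bit.
    """
    bits = []
    for start in range(0, len(samples), samples_per_bit):
        corr = sum(samples[start + s] * math.sin(2 * math.pi * f0 * s / samples_per_bit)
                   for s in range(samples_per_bit))
        bits.append("0" if corr > 0 else "1")
    return "".join(bits)

bits = "0010110010"  # the sequence shown in Figure 2.1.1
waveforms = {name: modulate(bits, name) for name in ("ASK", "FSK", "PSK")}
recovered = demodulate_psk(waveforms["PSK"])  # equals bits
```

Each waveform is continuous and sinusoidal; only the amplitude, frequency, or phase carries the data.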


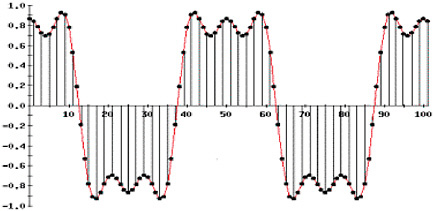

BOX 2.2 Digital Signal Processing

For the continuous sinusoidal signals that propagate from transmitter to receiver to be encoded, modulated, demodulated, and decoded using digital technology, they must be converted to digital form by an analog-to-digital converter (ADC) and returned to analog form by a digital-to-analog converter (DAC). For example, an ADC might take 500 million samples per second with a resolution of 10 bits (an accuracy of 1 part in 1,024). The continuous signal being received would then be represented by a series of samples spaced 2 nanoseconds apart. Such a series of dots approximately represents the continuous function shown in Figure 2.2.1. To find the frequency domain representation of this function, we can calculate its Fourier transform. But because the signal is now a sequence of discrete samples rather than a continuous mathematical function, we use an algorithm known as the discrete Fourier transform (DFT). For $N$ time domain samples $x_n$, it has the form

$$X_k = \sum_{n=0}^{N-1} x_n \, e^{-j 2\pi k n / N}, \qquad k = 0, 1, \ldots, N-1$$

and the inverse DFT has the form

$$x_n = \frac{1}{N} \sum_{k=0}^{N-1} X_k \, e^{j 2\pi k n / N}, \qquad n = 0, 1, \ldots, N-1$$

In these two expressions, we use $N$ time domain samples to compute $N$ frequency components, and vice versa. A huge improvement on the DFT is the fast Fourier transform (FFT) and the inverse FFT (IFFT). By always using $N$ equal to a power of 2 (16, 32, 64, 128…), the calculation is greatly simplified: the number of arithmetic operations falls from the order of $N^2$ for the direct DFT to the order of $N \log_2 N$. The FFT and IFFT are the foundation of modern digital signal processing, made possible by high-speed, low-cost digital CMOS (see Box 2.3).

FIGURE 2.2.1 Representation of continuous function as series of digital samples. SOURCE: Charan Langton, “Tutorial 6—Fourier Analysis Made Easy—Part 3,” available at http://www.complextoreal.com. Used with permission.
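The following Python sketch, for illustration only, computes the DFT directly as $X_k = \sum_n x_n e^{-j 2\pi k n / N}$, its inverse with a $1/N$ factor, and a recursive radix-2 FFT that exploits a power-of-2 $N$ by splitting the samples into even- and odd-indexed halves. The sample rate matches the 500-million-samples-per-second example in Box 2.2; the 62.5 MHz test tone and the 16-point length are assumptions chosen so that the tone falls exactly on one frequency bin.

```python
import cmath
import math

def dft(x):
    """Direct DFT: on the order of N^2 complex multiplications."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N))
            for k in range(N)]

def idft(X):
    """Inverse DFT, including the 1/N scale factor."""
    N = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]

def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of 2."""
    N = len(x)
    if N == 1:
        return [complex(x[0])]
    if N % 2:
        raise ValueError("length must be a power of 2")
    even, odd = fft(x[0::2]), fft(x[1::2])  # two half-size transforms
    out = [0j] * N
    for k in range(N // 2):
        twiddle = cmath.exp(-2j * math.pi * k / N) * odd[k]
        out[k] = even[k] + twiddle           # butterfly: reuse each half-size result twice
        out[k + N // 2] = even[k] - twiddle
    return out

# 16 samples of a 62.5 MHz tone taken 2 ns apart (500 million samples per second)
fs, f, N = 500e6, 62.5e6, 16
x = [math.cos(2 * math.pi * f * n / fs) for n in range(N)]
X = fft(x)
peak_bin = max(range(N // 2), key=lambda k: abs(X[k]))  # bin k = f * N / fs = 2
```

The FFT returns the same numbers as the direct DFT; it simply reorganizes the arithmetic so that each half-size result is used twice.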


BOX 2.3 Complementary Metal Oxide Semiconductor Technology

The transformation of communications from analog to digital, with the related dramatic reduction in costs and increase in performance, is a consequence of the revolution in semiconductor design and manufacturing brought about by the emergence of the personal computer (PC) industry. In particular, the remarkable and steady doubling of the number of transistors that can be placed on a chip roughly every 18 to 24 months, generally known as Moore’s law, together with the accompanying increases in performance and reductions in feature size, has driven aggressive innovation far beyond the PC industry. By far the largest share of the investment enabling this progress has gone into the design and process development of complementary metal oxide semiconductor (CMOS) technology. Introduced in the 1960s, CMOS is now used widely in microprocessors, microcontrollers, and other digital logic circuits as well as in a wide variety of analog circuits. This technology for constructing integrated circuits uses complementary and symmetrical pairs of p-type and n-type metal oxide semiconductor field-effect transistors. These investments also spawned a new industry structure: “fabless” companies, which design, market, and sell innovative products, and silicon foundries, which manufacture the chips for these companies, spreading the capital investment in exotic equipment over large volumes. For example, today even a new, small company can design a complex part in CMOS and have a foundry charge $1,000 to process a silicon wafer yielding, say, 5,000 chips (20 cents each). Adding 10 cents for packaging and testing gives a cost of 30 cents for a part that is sold to a cell phone manufacturer for 40 to 60 cents. Well over 1 billion cell phones are sold each year.
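The wafer arithmetic in Box 2.3 can be checked with a few lines of Python. The function is a hypothetical helper; working in cents keeps the numbers exact, and, like the example, it assumes that all 5,000 chips on the wafer are usable.

```python
def chip_economics(wafer_cost_cents, good_chips_per_wafer, package_test_cents, price_cents):
    """Return (die cost, unit cost, margin) per part, all in cents."""
    die_cents = wafer_cost_cents / good_chips_per_wafer  # wafer cost spread over its chips
    unit_cents = die_cents + package_test_cents          # add packaging and testing
    return die_cents, unit_cents, price_cents - unit_cents

die, unit, margin_low = chip_economics(100_000, 5_000, 10, 40)  # 20.0, 30.0, 10.0 cents
_, _, margin_high = chip_economics(100_000, 5_000, 10, 60)      # 30.0 cents
```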

hand, there are other applications, such as cellular base stations, where concurrent support of multiple standards and upgradability to new standards make transceiver programmability highly desirable.

Also, the decreasing cost of computation and memory opens up new possibilities for network and application design. The low cost of memory, for example, makes store-and-forward voice practical as an alternative to always-on voice. This capability creates new opportunities for modest-latency rather than real-time communication and may be of increasing importance to applications such as public safety communications. Digital signal processing of the audio can also, for example, be used to enhance intelligibility in (acoustically) noisy environments.4

The pace of improvement in digital logic stands in contrast to the much slower pace of improvement in analog components. One consequence of this trend is that it becomes increasingly attractive to reduce the portion of a radio that uses discrete analog devices and instead to apply digital signal processing over very wide bandwidths. However, doing so presents significant technical challenges. As a result, at least for now, the development of radios remains tied to the pace of improvement in analog components as well as to the rapid advances expected in digital logic, although promising areas of research may eventually overcome these challenges.

Digital Modulation and Coding

Modulation is the process of encoding a digital information signal into the amplitude and/or phase of the transmitted signal. This encoding process defines the bandwidth of the transmitted signal and its robustness to channel impairments. Box 2.4 describes how waveforms can be constructed as a superposition of sinusoidal waves, and Box 2.5 describes several modern modulation schemes in use today.

More sophisticated digital schemes now in widespread use, such as CDMA, in which users sharing the same frequency band are distinguished by mathematical codes, and OFDM, in which data are carried on many closely spaced orthogonal subcarriers, have further transformed radio communications (see Box 2.6).

Many important advances have also been made in channel coding, which reduces the average probability of a bit error by introducing redundancy in the transmitted bit stream, thus allowing the transmit power to be reduced or the data rate increased for a given signal bandwidth. Although some of the advances come from the ability to utilize ever-improving digital processing capacity, others have come from innovative new coding schemes (Box 2.7).

Low Cost and Modularity

The low cost and modularity (e.g., WiFi transceivers on a chip) that have resulted from the shift to largely digital radios built using CMOS technology make it cheaper and easier to include wireless capabilities in consumer electronic devices. As a result, developing and deploying novel, low-cost, specialized radios have become much easier, and many more people are capable of doing so. A likely consequence is continued growth in the number of wireless devices and in demand for wireless communications.


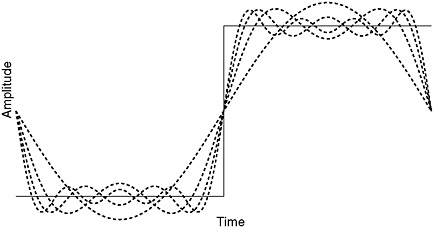

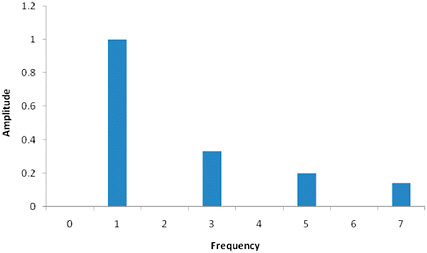

BOX 2.4 Power Spectra and the Frequency Domain Late in the 1600s, Josef Baron Fourier first proved that any periodic waveform can be represented by a (possibly infinite) sum of pure sinusoidal functions of various amplitudes. This result is surprising but true, however little the original waveform may resemble a smooth sine or cosine function. For example, a perfect square wave x(t) can be represented by the infinite series Figure 2.4.1 shows that adding the waveforms of just the first four terms of this equation already begins to approximate the square wave, an approximation that improves as more terms are added. This square wave can be composed by adding an increasing number of sine waves that are odd harmonics of the basic frequency of the square wave—that is 3, 5, 7, and so forth times the frequency—and 1/3, 1/5, 1/7, and so forth times the amplitude. Needless to say, it is impossible in practice to combine an infinite number of sine waves, but then it is also impossible to produce a perfect square wave, rising and falling in zero time. But we certainly can generate waves with very, very fast rise and fall times, and the faster they are the larger the number of harmonics they contain. Consider just the simple case of the 3rd, 5th, and 7th harmonics. This collection of sine waves can be represented in another way, by showing the amplitude of each frequency component visually. This amplitude spectrum (Figure 2.4.2) represents the signal amplitude in the frequency domain. A signal  FIGURE 2.4.1 Representation of square wave (solid line) by the sum of 1, 2, 3, and 4 sinusoidal waveforms (dashed lines). |

|

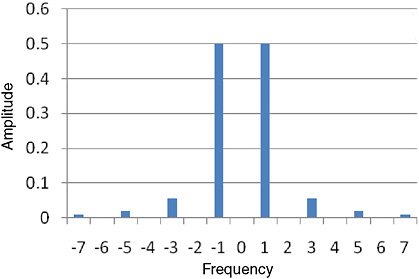

also has a frequency domain representation of the power in a signal, which is proportional to the square of the amplitude. Especially in the case of signals radiating from an antenna, we usually show the signal power spectrum as consisting of equal positive and negative frequencies or sidebands, with half of the power in each sideband. Thus, the power spectrum of the signal from Figure 2.4.1 would look like the spectrum shown in Figure 2.4.3. These ideal-looking spectra result from combining perfectly stable, pure sine waves of precise frequencies, which are also impossible to achieve in practice. Nevertheless, the spectra do illustrate the relationship between the coefficients of the time-domain harmonics in the Fourier series, and the frequency-domain components in the amplitude and power spectra. These are more clearly related by the Fourier transform, which accepts a time domain representation of a signal, such as x(t), and returns a frequency domain representation:  FIGURE 2.4.2 Signal amplitude represented in the frequency domain. |

|

The inverse Fourier transform accepts a frequency domain representation These two transformations are extremely important in modern wireless, because they allow information to be encoded by including or excluding different frequencies from a transmitted signal and then detecting these at the receiver, in order to symbolize data in a way that is very resistant to interference and noise. These continuous integral equations form the basis for the discrete computations described in Box 2.2. This requires high-speed, specialized computations.  FIGURE 2.4.3 Power spectrum representation of the signal shown in Figure 2.2.1. |
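A short Python sketch of the square-wave example in Box 2.4, assuming a square wave of amplitude ±1 and fundamental frequency f0, whose series is (4/π) times the sum of sin(2π n f0 t)/n over odd n. The function names are illustrative. Evaluating the partial sum in the middle of a positive half-cycle (t = 0.25/f0) shows the approximation approaching 1 as terms are added; the spectrum helpers list the odd-harmonic amplitudes and a two-sided power spectrum, with power taken as proportional to amplitude squared and split equally between the positive and negative sidebands.

```python
import math

def square_wave_partial_sum(t, f0=1.0, n_terms=4):
    """First n_terms of (4/pi) * sum over odd n of sin(2*pi*n*f0*t) / n."""
    return (4 / math.pi) * sum(
        math.sin(2 * math.pi * n * f0 * t) / n for n in range(1, 2 * n_terms, 2)
    )

def amplitude_spectrum(n_terms=4, f0=1.0):
    """(frequency, amplitude) pairs for the fundamental and its odd harmonics."""
    return [(n * f0, 4 / (math.pi * n)) for n in range(1, 2 * n_terms, 2)]

def two_sided_power_spectrum(n_terms=4, f0=1.0):
    """Relative power (amplitude squared), half at -f and half at +f."""
    spectrum = []
    for f, a in amplitude_spectrum(n_terms, f0):
        spectrum += [(-f, a * a / 2), (f, a * a / 2)]
    return sorted(spectrum)

# The error at the middle of the half-cycle shrinks as more harmonics are included
errors = {terms: abs(square_wave_partial_sum(0.25, n_terms=terms) - 1) for terms in (1, 4, 50)}
```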


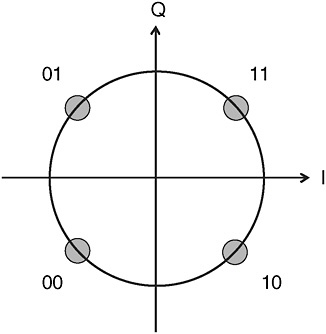

BOX 2.5 Modern Modulation Techniques Gaussian minimum shift keying (GMSK) is the most widely used form of frequency shift keying (see Box 2.1), as a result of its adoption in the Global System for Mobile Communications (GSM), the standard second-generation (2G) air interface used by 80 percent of cellular phones worldwide. The sharp digital pulse used to perform the frequency shift is first softened to a Gaussian shape, reducing unwanted harmonics. The dominant worldwide cellular phone system GSM uses a simple constant-amplitude sine wave, with all modulation done by GMSK. Quadrature phase shift keying (QPSK) is a technique that allows two bits of information to be sent concurrently. Two identical carriers 90 degrees out  FIGURE 2.5.1 Possible pairs of bits transmitted using quadrature phase shift keying. |

|

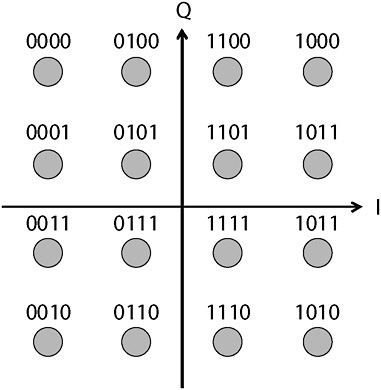

of phase (in-phase, I and quadrature, Q) are each modulated with a 0 degree or 180 degree phase shift to represent a one or a zero. These two modulated carriers are then added and transmitted, giving four different values, or two bits of information, when received and decoded, as shown in Figure 2.5.1. Quadrature amplitude modulation (QAM) is a technique that, like QPSK, uses two carriers 90 degrees apart (I, Q). But instead of phase modulation, QAM uses amplitude modulation. For example, 16-QAM has four amplitude values for I and four values for Q. When the two are combined and transmitted, there are 16 possible combinations, corresponding to 4 bits, as shown in Figure 2.5.2.  FIGURE 2.5.2 Possible groups of four bits transmitted using quadrature amplitude modulation. |
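A Python sketch of the QPSK and 16-QAM mappings in Box 2.5, with each symbol written as a complex number I + jQ. The assignment of bit patterns to constellation points is an assumption: QPSK here uses +1 for a zero and −1 for a one on each carrier, and 16-QAM uses a Gray-coded set of four levels (−3, −1, +1, +3) per carrier so that neighboring points differ by only one bit; real standards define their own mappings. The demapper picks the nearest level on each carrier, which is how a receiver can tolerate modest noise.

```python
def qpsk_map(bits):
    """Each bit pair sets the sign of the I and Q carriers (0 -> +1, 1 -> -1)."""
    assert len(bits) % 2 == 0
    return [complex(1 - 2 * bits[i], 1 - 2 * bits[i + 1]) for i in range(0, len(bits), 2)]

# Hypothetical Gray-coded four-level mapping used on each carrier
GRAY_LEVELS = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}

def qam16_map(bits):
    """Each group of 4 bits picks one of four I levels and one of four Q levels."""
    assert len(bits) % 4 == 0
    symbols = []
    for i in range(0, len(bits), 4):
        i_level = GRAY_LEVELS[(bits[i], bits[i + 1])]
        q_level = GRAY_LEVELS[(bits[i + 2], bits[i + 3])]
        symbols.append(complex(i_level, q_level))
    return symbols

def qam16_demap(symbols):
    """Nearest-level decision on I and on Q, then look up the bit pair for each."""
    inverse = {level: pair for pair, level in GRAY_LEVELS.items()}
    levels = sorted(inverse)
    bits = []
    for s in symbols:
        for component in (s.real, s.imag):
            nearest = min(levels, key=lambda lv: abs(component - lv))
            bits.extend(inverse[nearest])
    return bits
```

With levels spaced 2 apart, any noise smaller than 1 on each carrier still decodes correctly.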


BOX 2.6 Code Division Multiple Access and Orthogonal Frequency Division Multiple Access

Because spectrum, particularly expensively licensed cellular spectrum, must be used efficiently, it is important that as many users as possible be able to access a given frequency band without interfering with one another. Traditionally, this has been accomplished by assigning a narrowband subfrequency “channel” to each user (frequency division multiple access; FDMA) or by giving each user in turn brief, high-data-rate recurring time slices or slots (time division multiple access; TDMA). As both the number of concurrent users and the demand for higher data rates have increased, new techniques have emerged that exploit digital processing to meet these requirements without increasing interference.

Code division multiple access (CDMA) has become increasingly important as the basis for higher-data-rate and more spectrally efficient third-generation (3G) mobile phone systems. CDMA is a spread spectrum multiple access technique. In CDMA, a locally generated pulse train code runs at a much higher rate than the data to be transmitted. The data for transmission are combined with this faster pulse train code using an exclusive-OR logical operation. Each user in a CDMA system uses a different code to modulate the signal. The signals are separated by digitally correlating the received signal with the locally generated code of the desired user. If the signal matches the desired user’s code, the correlation will be high and the system can extract that signal. If the signal has nothing in common with the desired user’s code, the correlation will be low and the signal is rejected; it appears to the receiver as noise, although it is actually suppressed interference. Thus a large number of users can occupy the same band of frequencies and each receiver can still separate out its desired signal.
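The spreading and correlation steps for CDMA can be sketched in Python. This sketch assumes Walsh-Hadamard codes, perfectly synchronized users, and a noiseless channel, and it represents bits and chips as +1 and −1 (0 maps to +1, 1 maps to −1) so that the exclusive-OR operation becomes multiplication. The function names are hypothetical.

```python
def walsh_codes(n):
    """Rows of an n x n Hadamard matrix (n a power of 2), as +1/-1 chips."""
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-c for c in row] for row in h]
    return h

def spread(bits, code):
    """XOR each data bit with every chip of the faster code (multiplication in +1/-1 form)."""
    chips = []
    for b in bits:
        symbol = 1 if b == 0 else -1
        chips.extend(symbol * c for c in code)
    return chips

def despread(received, code):
    """Correlate each code-length block with the desired user's code; the sign gives the bit."""
    L = len(code)
    bits = []
    for i in range(0, len(received), L):
        corr = sum(r * c for r, c in zip(received[i:i + L], code))
        bits.append(0 if corr > 0 else 1)
    return bits

codes = walsh_codes(4)
user_a, user_b = [1, 0, 1, 1], [0, 0, 1, 0]
# Both users transmit in the same band at the same time; the channel adds their chips
channel = [a + b for a, b in zip(spread(user_a, codes[1]), spread(user_b, codes[2]))]
decoded_a = despread(channel, codes[1])  # user B's contribution correlates to zero
decoded_b = despread(channel, codes[2])
```

Because the codes are orthogonal, the other user's chips contribute nothing to the correlation; with nonorthogonal codes or noise they would contribute a small residual that looks like noise.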
In CDMA systems, the coded signals are carried using various modulation techniques such as quadrature amplitude modulation (QAM), phase-shift keying (PSK), and the like.

Orthogonal frequency division multiple access (OFDMA) uses a group of closely spaced, harmonically related subcarriers, each of which can be modulated by PSK, QAM, or other methods. The subcarriers are then added and transmitted. Because the subcarriers are harmonically related, they are said to be orthogonal and can easily be separated using a fast Fourier transform and decoded. Systems often use as many as a few thousand subcarriers in a single frequency band. Rather than a particular subcarrier being assigned rigidly to each user, as in FDMA, these subcarriers can be dynamically allocated among users, providing more subcarriers to different classes of users to give higher data rates, lower error rates, or other quality-of-service choices. Also, transmitting a given payload in a given period of time over multiple channels at a lower data rate (e.g., 1 Mbps on each of 16 channels) is much more power efficient than transmitting over a single channel at a higher data rate (e.g., 16 Mbps over a single channel). OFDMA is the access method in the 3GPP Long Term Evolution (LTE) and IEEE 802.16 (WiMAX) standards. The basic OFDM technique is also used in wireless LANs (IEEE 802.11, WiFi), terrestrial Digital Audio Broadcasting, terrestrial digital television (DVB-T and T-DMB standards), and mobile digital television (DVB-H and ISDB-T standards).
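A minimal Python sketch of the OFDMA idea in Box 2.6, assuming 8 subcarriers, QPSK symbols, an ideal channel, and a hypothetical allocation that gives one user more subcarriers than the other. For brevity it uses a direct inverse DFT at the transmitter and a DFT at the receiver; an IFFT and FFT compute the same results with far fewer operations.

```python
import cmath

def idft(X):
    N = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]

def dft(x):
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

N = 8
# Hypothetical dynamic allocation: user A gets six subcarriers, user B gets two
allocation = {"A": [0, 1, 2, 3, 4, 5], "B": [6, 7]}
data = {"A": [1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j, 1 + 1j, -1 - 1j],
        "B": [1 - 1j, -1 + 1j]}

X = [0j] * N
for user, subcarriers in allocation.items():
    for k, symbol in zip(subcarriers, data[user]):
        X[k] = symbol        # each QPSK symbol modulates one subcarrier

tx = idft(X)                 # one OFDM symbol: the sum of N orthogonal subcarriers
rx = dft(tx)                 # receiver separates the subcarriers again
recovered = {u: [complex(round(rx[k].real), round(rx[k].imag)) for k in ks]
             for u, ks in allocation.items()}
```

Each user reads only its own subcarriers; orthogonality means the other subcarriers add nothing at those frequency bins.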


BOX 2.7 Evolution of Coding Schemes

A major advantage of the increased use of digital CMOS technology is the ability to encode information before modulation and transmission so that errors introduced into the radio signal by noise or interference during transmission and reception can be detected and corrected during decoding at the receiver. These techniques have made possible the accurate recovery of very weak signals in the presence of heavy interference and noise. Although deep-space communication was the original source of these ideas, they have since been incorporated as a fundamental enabler of modern wireless communications, ranging from wireless local area networking to mobile phones and satellite radio and television. For four decades, the workhorse combination making these advances possible has been the convolutional coder and the Viterbi decoder, which remain the mainstay of many systems. The convolutional coder is a simple linear state machine of shift registers and exclusive-OR gates, which can be made longer and more elaborate as the expected transmission environment requires. It expands the data prior to modulation to include additional bits that, when decoded, permit error detection and correction. The Viterbi decoder is a state machine that calculates metrics based on the current and prior received signals and makes the most likely decoding choice among the possible transmitted symbols. This scheme is the basis for CDMA and Global System for Mobile Communications (GSM) digital cellular coding, as well as WiFi and various modems. For the extremes of deep-space missions and certain other applications where very long codes are required, the Viterbi algorithm becomes too complex, and a more recent technique, turbo coding, is combined with Reed-Solomon error-correcting codes. It is likely that turbo coding will gradually assume a central role in broadband mobile applications.

In applications that are tolerant of latency, closely related low-density parity-check (LDPC) codes can provide even lower error rates.
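The convolutional coder and Viterbi decoder pairing described in Box 2.7 can be sketched in Python. The rate-1/2, constraint-length-3 code with generators 7 and 5 (octal) is a common textbook example chosen for illustration; it is not claimed to be the code used in CDMA, GSM, or WiFi, which use longer codes. The decoder uses hard decisions, with Hamming distance as its path metric.

```python
K = 3                    # constraint length (hypothetical textbook choice)
G = (0b111, 0b101)       # generator taps, 7 and 5 in octal
N_STATES = 1 << (K - 1)

def parity(x):
    return bin(x).count("1") & 1

def conv_encode(bits):
    """Shift register plus XOR gates: two coded bits out for every data bit in."""
    state, out = 0, []   # state holds the K-1 most recent input bits
    for b in bits + [0] * (K - 1):           # zero tail returns the encoder to state 0
        reg = (b << (K - 1)) | state         # newest bit in the most significant position
        out.extend(parity(reg & g) for g in G)
        state = reg >> 1
    return out

def viterbi_decode(received, n_bits):
    """Keep, for each encoder state, the surviving path with the smallest Hamming distance."""
    INF = float("inf")
    metrics = [0] + [INF] * (N_STATES - 1)   # the encoder starts in state 0
    paths = [[] for _ in range(N_STATES)]
    for t in range(0, len(received), 2):
        r = received[t:t + 2]
        new_metrics, new_paths = [INF] * N_STATES, [None] * N_STATES
        for state in range(N_STATES):
            if metrics[state] == INF:
                continue                     # state not reachable yet
            for b in (0, 1):                 # try both possible input bits
                reg = (b << (K - 1)) | state
                expected = [parity(reg & g) for g in G]
                m = metrics[state] + sum(e != x for e, x in zip(expected, r))
                nxt = reg >> 1
                if m < new_metrics[nxt]:
                    new_metrics[nxt], new_paths[nxt] = m, paths[state] + [b]
        metrics, paths = new_metrics, new_paths
    return paths[0][:n_bits]                 # drop the zero tail bits

message = [1, 0, 1, 1, 0, 0, 1, 0]
coded = conv_encode(message)                 # 2 * (8 + 2) = 20 coded bits
corrupted = coded[:]
corrupted[3] ^= 1                            # one bit flipped by "noise"
decoded = viterbi_decode(corrupted, len(message))  # equals message
```

This code's minimum distance of 5 lets the decoder correct any single bit error in the block.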

New Radio System Architectures

New networking technologies are transforming the architectures of radio systems, as seen in the introduction of more distributed, often Internet Protocol (IP)-based networks alongside networks that rely on centralized switching. Cell phones foreshadowed this shift, since every mobile phone is a transmitter as well as a receiver, but the shift goes further, to architectures that lack the centralized control of cellular systems. What was once a population of deployed radios consisting of a small number of transmitters and many receivers (which placed a premium on low-cost receivers and did not impose tight cost constraints on transmitters) is changing to a population that contains many more transceivers. Especially in more densely populated areas, services that have relied on transmission from high-power central sites are giving way to more localized transmissions using ever-smaller cells5 and mesh networks (Box 2.8) that provide much greater capacity by enabling frequencies to be reused at a fine-grained level.

BOX 2.8 Capacity Scaling of Mesh Networks

Much has been claimed about the scalability of mesh networks (wireless networks with no central control). Leaving aside issues of cost and latency, the possibility that they could scale linearly is enticing: that is, that adding more nodes would increase network throughput in proportion to the number of nodes added. If mesh networks were to scale linearly, they would offer effectively unlimited capacity, which would have profound consequences for spectrum management policy. However, research showing limitations to their scalability has cast considerable doubt on such claims. Research by Gupta and Kumar (2000) examined the question of capacity in mesh networks.1 Their research showed that, under certain assumptions about how current technology operates, there was indeed a constraint on the ability of mesh networks to scale and that the “cause of the throughput constriction is not the formation of hot spots, but the pervasive need for all nodes to share the channel locally with other nodes.”2 It considered networks where nodes were arbitrarily located as well as networks where nodes were uniformly distributed. These results were obtained assuming perfect information about node location and traffic demand and with stationary (nonmobile) nodes; capacity would decrease further should any of these assumptions not hold. The capacity limitations did not change whether the network nodes were located in a two-dimensional plane or on the surface of a sphere. The research further showed that splitting the communication channel into several subchannels did not change any of the results. Although scaling was not linear, the results did show that networks composed of nodes with mostly close-range transactions and sparse long-range demands, such as those envisaged for smart homes, are feasible. That is, where nodes need to communicate only with nearby nodes, all nodes can transmit data to nearby nodes at a bit rate that does not decrease with the number of nodes.

Further research has explored the theoretical limits of scaling wireless networks if current technological limitations could be eliminated and optimal operational strategies could be devised.3 For instance, no assumptions were made that interference must be regarded as noise or that packet collision between nearby transmitters must necessarily be destructive. One result of this research was to show that different network architectures for information transport achieve better scaling depending on attenuation. For relatively high attenuation, a multihop transport mode where load can be balanced across nodes appears to have the best scaling characteristics. For relatively low attenuation, multistage relaying with interference subtracted from the signal at each stage could theoretically attain unbounded transport capacity. Yet achieving these theoretical limits would require not only overcoming existing technical limitations but also achieving fundamental advances in information theory to understand complex modes of cooperation between nodes in a network.

Dynamic Exploitation of All Degrees of Freedom

Another important shift in radios has been the ability to use new techniques to permit greater dynamic exploitation of all available degrees of freedom. The theoretical communications capacity is the number of independent channels multiplied by the Shannon limit for each channel. In practice, the capacity (data rate) of an individual channel will be limited by the particular choice of modulation, coding scheme, and transmission power, given a particular profile of background channel noise.
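As a worked example of the capacity statement, the Python sketch below evaluates the Shannon limit C = B log2(1 + S/N) for a channel with additive white Gaussian noise and multiplies it by the number of independent channels. The 20 MHz bandwidth, the 20 dB signal-to-noise ratio, and equal SNR on every channel are assumptions for illustration.

```python
import math

def shannon_capacity_bps(bandwidth_hz, snr_db):
    """Shannon limit for one channel with additive white Gaussian noise."""
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_hz * math.log2(1 + snr_linear)

def total_capacity_bps(n_channels, bandwidth_hz, snr_db):
    """Number of independent channels times the per-channel Shannon limit."""
    return n_channels * shannon_capacity_bps(bandwidth_hz, snr_db)

one = total_capacity_bps(1, 20e6, 20)   # about 133 Mbit/s
two = total_capacity_bps(2, 20e6, 20)   # about 266 Mbit/s, e.g., two independent spatial channels
```

Capacity grows linearly with the number of independent channels but only logarithmically with signal-to-noise ratio, which is why exploiting additional degrees of freedom is so attractive.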

Four independent degrees of freedom can be used to establish independent channels: frequency, time, space, and polarization.6 In the past, technology and the regulatory schemes that govern it have relied principally on a static separation by frequency and space. Advances in digital signal processing and control make it possible for radios to exploit the available degrees of freedom dynamically and to coordinate their own use of them so as to coexist with one another and with uncoordinated spectrum occupants. Antenna arrays enable more sophisticated spatial separation through beam forming in all three dimensions. Today’s radio technologies can thus, in principle, take greater advantage of all the degrees of freedom (frequency, time, space, and polarization) to distinguish signals and to do so in a dynamic, fine-grained fashion. An important consequence is that a wider set of parameters (beyond the conventional separation in frequency and space) can be used to introduce new options for allocating usage rights (i.e., defining what a user can do and what the user must tolerate) based on all of these degrees of freedom.
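The phase-adjustment idea behind beam forming can be illustrated with a Python sketch of a uniform linear array. It assumes 8 elements spaced half a wavelength apart, a single plane wave, and steering in one plane only (a simplification of the three-dimensional case); the function names are hypothetical. Matching the weights to the phase progression of the desired direction makes the element signals add in phase there and partly cancel elsewhere.

```python
import cmath
import math

def steering_vector(n_elements, angle_deg, spacing_wavelengths=0.5):
    """Relative phase at each element for a plane wave arriving from angle_deg."""
    phase_step = 2 * math.pi * spacing_wavelengths * math.sin(math.radians(angle_deg))
    return [cmath.exp(1j * n * phase_step) for n in range(n_elements)]

def array_gain(weights, angle_deg, spacing_wavelengths=0.5):
    """Magnitude of the weighted sum of element signals for a wave from angle_deg."""
    a = steering_vector(len(weights), angle_deg, spacing_wavelengths)
    return abs(sum(w.conjugate() * x for w, x in zip(weights, a)))

weights = steering_vector(8, 30.0)       # steer the beam toward 30 degrees
on_target = array_gain(weights, 30.0)    # 8: all element signals add in phase
off_target = array_gain(weights, -20.0)  # much smaller: the signals partly cancel
```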

Flexibility and Adaptability

The agility and flexibility of radios are improving along with advances in the ability to measure communication channels more accurately (sensing), share channels (coordination), and adapt to the operational environment in real time (adaptation). More agile radios can change their operating frequency, modulation, or coding scheme; can sense and respond to their environment; and can cooperate to make more dynamic, shared, and independently coordinated use of spectrum. Advances in digital logic make it possible for radios to incorporate significant and growing computing power for coordinating their use of the available degrees of freedom. Since much of the processing is performed digitally, the performance improvements popularly associated with Moore’s law in the computer industry are likely to apply to this type of processing as well. The result is that radios and systems of radios will be able to operate in an increasingly dynamic and autonomous manner.

Increased flexibility also poses both opportunities and challenges for regulators. Although flexible radios are more complex, costly, and power-hungry, flexibility makes it possible to build radios that can better coexist with existing radio systems. Coexistence is sometimes divided into underlay (low-power use intended to have a minimal impact on the primary user) and overlay (agile use by a secondary user of “holes” in time and space in the primary user’s use). Such overlays and underlays might be introduced by rules that require such sharing or by rules that enable licensees to agree to it in exchange for a market price.
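An overlay radio's channel decision can be sketched in Python as simple energy detection. The threshold value, channel numbers, and measurements below are hypothetical, and practical sensing schemes are considerably more elaborate; the point is only that a secondary user transmits in a channel that appears idle and otherwise stays silent.

```python
def choose_overlay_channel(measured_dbm, threshold_dbm=-85.0):
    """Return the quietest channel whose measured energy is below threshold_dbm, or None."""
    idle = {ch: p for ch, p in measured_dbm.items() if p < threshold_dbm}
    if not idle:
        return None  # no "hole" found: stay silent rather than risk interfering
    return min(idle, key=idle.get)

# Hypothetical energy measurements (dBm) from one sensing sweep over four channels
sweep = {36: -62.0, 40: -91.5, 44: -88.0, 48: -70.3}
choice = choose_overlay_channel(sweep)  # 40: below the threshold and the quietest
```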

Moreover, flexibility makes it possible to build radios whose operating parameters can be modified to comply with future policy or rule changes or future service requirements. That is, devices are able to instantiate and operate on specified policies, and the policies (and the devices’ operation) can be modified.

Besides providing regulators and system operators with a valuable new tool, this malleability poses new challenges, such as how to assure a radio’s security in the face of potential (possibly malicious) attempts to modify its software. Possible scenarios include rogue software silently placing calls constantly (thus congesting the control channel) or altering the parameters of a cell phone’s transmitter so as to jam transmissions of cellular or other services. Information system security experience from other applications suggests that it will be possible, with significant effort, to provide reasonable security (i.e., against casual efforts to break it) but that it would be quite difficult using today’s state of the art to provide highly robust security against a determined attacker.7

Antennas

Work has been done for many years on antennas that can operate over very wide frequency ranges. Early theoretical work in this area on mode coupling of radiation into materials by such authors as Chu,8 Harrington,9 and Hansen10 still stands today, and advanced research continues on such topics as fractal and non-resonant antennas. Commercial products approximating wideband antenna technology include patch antennas, meander antennas for use at 2.4 and 5 GHz, and extreme spectrum antennas in the 2 to 6 GHz bands.
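A widely cited result from Chu's line of work, later refined by McLean, explains why small wideband antennas are hard to build. The result is a lower bound on the radiation Q of an antenna that fits inside a sphere of radius a. For a linearly polarized antenna the bound is Q ≥ 1/(ka)^3 + 1/(ka), where k = 2π/λ. Usable fractional bandwidth scales roughly as 1/Q, so shrinking an antenna relative to the wavelength sharply narrows its bandwidth. This is a sketch of that bound only. The 1/Q bandwidth rule is a rough approximation, and the 1 cm example size is arbitrary.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def chu_min_q(radius_m: float, freq_hz: float) -> float:
    """Chu-McLean lower bound on radiation Q for a linearly polarized antenna
    that fits inside a sphere of the given radius."""
    ka = 2 * math.pi * freq_hz / C * radius_m
    return 1 / ka**3 + 1 / ka


def rough_max_fractional_bandwidth(radius_m: float, freq_hz: float) -> float:
    # Rule of thumb only: usable fractional bandwidth scales roughly as 1/Q.
    return 1 / chu_min_q(radius_m, freq_hz)


for f in (700e6, 2.4e9, 5.8e9):
    print(f"{f / 1e9:.1f} GHz, 1 cm sphere: Q >= {chu_min_q(0.01, f):.1f}")
```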

In the past decade, an interesting new approach to improved wireless communication began to develop, based on using multiple antennas at both transmitter and receiver. Advances in analog and digital processing have made it possible to individually adjust the amplitude and phase of the signal on each member of an array of antennas. When the approach is used to increase data rates, it is called multiple-input, multiple-output (MIMO), and when it is used to extend range, it is called beam forming. The most basic form of MIMO is spatial multiplexing, in which a high-data-rate signal is split into lower-rate streams and each is broadcast concurrently from a different antenna. (More generally, multiple antennas can be used to obtain the desired degree of enhancement in both data rate and range.) These schemes require significant “baseband” (i.e., digital) processing before transmission and after reception, but they are able to provide increased range or data rates without using additional bandwidth or power. They provide link diversity, which improves reliability, and they enable more efficient use of spectrum. This approach is used in a number of commercially deployed technologies, including 802.11n (a wireless LAN standard), WiMax (a last-mile wireless local-access technology), and Long Term Evolution (LTE, a technology for fourth-generation mobile telephony).
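Spatial multiplexing can be sketched with two transmit and two receive antennas. The bit stream is split round-robin into two half-rate streams. Both streams are sent at once on the same frequency. The receiver then separates them by inverting the channel matrix.

This sketch makes several assumptions:
- The channel matrix `H` is made up.
- The receiver knows `H` perfectly.
- There is no noise.
- Zero forcing is only the simplest of several detection methods, and real receivers must also estimate the channel.

```python
def demux(bits, n_streams):
    """Split one high-rate bit stream into n lower-rate streams (round-robin)."""
    return [bits[i::n_streams] for i in range(n_streams)]


def bpsk(bits):
    """Map bits to antipodal symbols: 1 -> +1, 0 -> -1."""
    return [1.0 if b else -1.0 for b in bits]


def zero_forcing_2x2(h, y0, y1):
    """Invert a known, invertible 2x2 channel matrix h to separate two streams."""
    (a, b), (c, d) = h
    det = a * d - b * c
    return (d * y0 - b * y1) / det, (-c * y0 + a * y1) / det


def transmit_and_recover(bits, h):
    s0, s1 = (bpsk(s) for s in demux(bits, 2))  # one stream per transmit antenna
    recovered = []
    for x0, x1 in zip(s0, s1):
        # Both antennas transmit at once on the same frequency; each receive
        # antenna sees a different linear mix of the two streams (noise omitted).
        y0 = h[0][0] * x0 + h[0][1] * x1
        y1 = h[1][0] * x0 + h[1][1] * x1
        e0, e1 = zero_forcing_2x2(h, y0, y1)
        recovered.extend(int(e.real > 0) for e in (e0, e1))
    return recovered


H = [[0.9 + 0.2j, 0.4 - 0.1j], [0.3 + 0.5j, 1.1 + 0.0j]]  # assumed channel gains
bits = [1, 0, 1, 1, 0, 0, 1, 0]
print(transmit_and_recover(bits, H) == bits)  # True
```

Each stream runs at half the original rate, yet the pair delivers the full rate without extra bandwidth. This works only because the two receive antennas see the two streams mixed in different proportions.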

LOW-COST, PORTABLE RADIOS AT FREQUENCIES OF 60 GHZ AND ABOVE

The use of CMOS and digital processing together with other advances in RF technology opens up opportunities in the form of low-cost, portable radios that are becoming increasingly practical at frequencies of 60 GHz and above. Technological progress may extend this up to 100 GHz and beyond.

Radios operating in this domain confront a number of challenges. At these frequencies, propagation distances are very short in free space and even shorter where there is foliage. Penetration through and diffraction around building walls or other structures are also very limited. On the other hand, operation at these frequencies also has some attractive properties. Only at these frequencies are very large bandwidths available, making them the only practical option to support wireless applications that require extremely high data rates. For example, technology developed for in-room video transmission uses data rates of up to 4 gigabits per second (Gbps).
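Two textbook formulas quantify the tradeoff described above. The Friis free-space path loss between isotropic antennas grows as 20 log10(f). The Shannon bound C = B log2(1 + SNR) grows linearly with bandwidth B.

This is a sketch under simplifying assumptions:
- The antennas are isotropic.
- The 10 m distance, 10 dB SNR, and 2 GHz channel width are illustrative.
- Oxygen absorption near 60 GHz is not modeled.

For a fixed physical antenna size, higher frequencies permit more antenna gain, which is one reason beam forming is attractive in these bands.

```python
import math

C = 299_792_458.0  # m/s


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss between isotropic antennas (Friis formula)."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / C)


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Shannon bound on error-free rate over an additive white Gaussian noise channel."""
    return bandwidth_hz * math.log2(1 + 10 ** (snr_db / 10))


extra_loss_db = fspl_db(10, 60e9) - fspl_db(10, 2.4e9)   # same 10 m link
narrow = shannon_capacity_bps(20e6, 10)   # 20 MHz channel at 10 dB SNR
wide = shannon_capacity_bps(2e9, 10)      # 2 GHz channel at the same SNR
print(f"extra loss at 60 GHz: {extra_loss_db:.1f} dB")
print(f"20 MHz: {narrow / 1e6:.0f} Mbps, 2 GHz: {wide / 1e9:.2f} Gbps")
```

The extra loss equals 20 log10(25), about 28 dB. The wide channel's capacity bound is exactly 100 times that of the narrow one, which puts multigigabit rates within reach.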

Another attractive feature of operation at these frequencies is diminished potential for interference. Short propagation distances and limited penetration of buildings are one reason. The high path losses also lead indirectly to a second interference advantage. A likely solution to the path-loss problem is to use adaptive beam forming to provide high antenna gain, that is, directing transmitted energy along a chosen path and preferentially receiving a signal from a chosen path. If both transmission and reception are thus tightly focused, the potential for interference among different pairs of transmitters and receivers is markedly reduced.11 Using these frequencies for mobile devices therefore becomes technically challenging because very narrow beams must be dynamically steered, a capability that is now being deployed in commercial products providing links of up to 4 Gbps.12 Note that technological advances in these areas, which open up the bands between 20 and 100 GHz to practical use, will also open up other attractive options for using antenna arrays at lower frequencies, such as the use of MIMO or the adaptation of 802.11n technology to operate at higher-than-present frequencies.
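How phase adjustment focuses reception can be sketched with a uniform linear array. Steering weights cancel the phase progression for one chosen direction, so signals from that direction add coherently. Signals from other directions largely cancel.

This is a sketch under stated assumptions:
- The elements are identical and isotropic.
- Weights are phase-only with unit magnitude.
- Noise is independent on each element.
- Spacing is half a wavelength, and 16 elements is an illustrative array size.

```python
from __future__ import annotations

import cmath
import math


def _phase_step(spacing_wl: float, angle_deg: float) -> float:
    # Phase difference between adjacent elements for a plane wave from angle_deg
    # (measured from broadside), with element spacing given in wavelengths.
    return 2 * math.pi * spacing_wl * math.sin(math.radians(angle_deg))


def steering_weights(n: int, spacing_wl: float, steer_deg: float) -> list[complex]:
    """Phase-only weights that align an n-element uniform linear array toward steer_deg."""
    step = _phase_step(spacing_wl, steer_deg)
    return [cmath.exp(1j * step * k) for k in range(n)]


def array_gain_db(weights: list[complex], spacing_wl: float, angle_deg: float) -> float:
    """Receive SNR gain over a single element for a signal arriving from angle_deg
    (unit-magnitude weights, independent noise on each element)."""
    step = _phase_step(spacing_wl, angle_deg)
    response = sum(w.conjugate() * cmath.exp(1j * step * k) for k, w in enumerate(weights))
    # Floor at -120 dB so exact nulls do not produce log10(0).
    return 10 * math.log10(max(abs(response) ** 2 / len(weights), 1e-12))


w = steering_weights(16, 0.5, 0.0)   # 16 elements at half-wavelength spacing, aimed at broadside
for angle in (0, 5, 10, 30):
    print(angle, round(array_gain_db(w, 0.5, angle), 1))
```

The main beam gets a gain of 10 log10(16), about 12 dB. For this geometry, an exact null happens to fall at 30 degrees. This is why two tightly focused links can share a band with little mutual interference.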

What applications might operation in these newly accessible frequencies have? In the short term, applications that require very large bandwidth over short range appear promising. Examples include devices that let computers transfer data at high speed across a desktop and devices that transmit high-definition video from one side of a room to another.

Short propagation distances make these frequencies less viable for wide-area infrastructure or applications where in-building signal propagation is important. Looking ahead, it is possible that new architectures, such as very small cells, could make it possible to use these frequencies to provide wider-coverage services. Realizing this vision would depend on several factors not yet present—devices that operate at 20 to 100 GHz becoming cheap enough to be ubiquitous, a sufficiently widespread and cheap wired network infrastructure that would connect these devices, and the development of new business models for such services.

INTERFERENCE AS A PROPERTY OF RADIOS AND RADIO SYSTEMS, NOT RADIO SIGNALS

It is commonplace to talk about radio signals interfering with one another, a usage that mirrors the common experience of broadcast radio signals on the same channel interfering with each other. Thus, the term “interference” might suggest that multiple radio signals cancel each other out, making their reception harder or impossible. However, this view is misleading because radio signals themselves do not, generally speaking, interfere with each other in the sense that information is destroyed. In fact, interference is a property of a receiver, reflecting the receiver’s inability to disambiguate the desired and undesired signals.

Radio signals are electromagnetic waves whose behavior, as described by Maxwell’s equations, is linear. One consequence of this behavior is that radio signals do not, in general, cancel each other out. Each new communication signal is superposed on the entire field.13 Actual destruction of information requires energy input at the point of destruction. That energy must be applied very precisely to cancel out the signal’s vector field in all six dimensions. Such cancellation is a low-probability event, and even then it applies only at a single point in space.
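Linearity means that a weak signal survives intact inside the sum, however strong the other signal is. A minimal numerical sketch shows this. It assumes an idealized receiver holding a perfect replica of the strong signal, which real receivers do not have. The tone frequencies, amplitudes, and sample rate are arbitrary.

```python
import math


def tone(freq_hz, amplitude, n_samples, fs_hz):
    """Sampled sinusoid."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / fs_hz) for n in range(n_samples)]


fs, n = 1000.0, 200
desired = tone(50.0, 1e-3, n, fs)   # weak signal
strong = tone(60.0, 10.0, n, fs)    # strong signal, 80 dB higher in power
field = [d + s for d, s in zip(desired, strong)]   # linear superposition at the antenna

# If the receiver had a perfect replica of the strong signal, subtracting it would
# return the weak signal to within floating-point rounding: nothing was destroyed.
residual = [f - s for f, s in zip(field, strong)]
max_error = max(abs(r - d) for r, d in zip(residual, desired))
print(max_error)
```

The practical difficulty lies in obtaining that replica, or something close to it. This is the ambiguity discussed next.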

As a result, the superposition of any number of radio signals should be thought of not in terms of destroying information but rather in terms of the ambiguity it creates for a radio trying to receive any one specific signal. The difficulty of resolving the ambiguity relates to the energy emitted by other radios (with the implication that each radio sees multiple signals) and the unpredictability of the signals (which makes the individual signals harder to separate).

Even though the information is present in the superposed field, receivers discard it, primarily for the following reasons:

-

Dynamic range—large interfering signals inhibit a receiver’s ability to detect a small signal. A small signal cannot be amplified above certain noise levels without the larger signal saturating the receiver. Moreover, desensitization circuits will reduce gain in the presence of strong interfering signals. Finally, the resolution of analog-to-digital converters is limited, which means that a weak signal cannot be digitally represented when a strong interfering signal is also present.

-

Nonlinearity of receiver components—the desired and the interfering signals will interfere with each other inside the receiver. (See below.)

-

Inadequate separation in signal space (within the various degrees of freedom and code).
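The analog-to-digital converter limit in the first item can be quantified with the standard ideal-ADC figure. For a full-scale sine wave, the signal-to-quantization-noise ratio is 6.02N + 1.76 dB for an N-bit converter.

This sketch makes the following assumptions:
- The converter is ideal.
- The strong interferer sets full scale, ignoring crest factor.
- Quantization noise is white across the Nyquist band, so narrowband digital filtering recovers some processing gain.
- The bit count and power levels are illustrative.

Real converters deliver fewer effective bits.

```python
import math


def ideal_adc_sqnr_db(bits: int) -> float:
    """Signal-to-quantization-noise ratio of an ideal ADC driven by a full-scale sine."""
    return 6.02 * bits + 1.76


def weak_signal_snr_db(bits, interferer_above_weak_db, nyquist_bw_hz, signal_bw_hz):
    """SNR left for a weak signal when a strong interferer sets the ADC's full scale.

    Quantization noise is assumed white across the Nyquist band, so digitally
    filtering down to the weak signal's bandwidth recovers some processing gain.
    """
    processing_gain_db = 10 * math.log10(nyquist_bw_hz / signal_bw_hz)
    return ideal_adc_sqnr_db(bits) - interferer_above_weak_db + processing_gain_db


# 12-bit converter, interferer 80 dB stronger than the wanted signal:
print(weak_signal_snr_db(12, 80, 100e6, 100e6))   # signal fills the band: about -6 dB
print(weak_signal_snr_db(12, 80, 100e6, 1e6))     # 1 MHz signal: about 14 dB
```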

Moreover, the extent to which signal processing can be used to separate signals with the required sensitivity, accuracy, and latency is limited by the computational power available in a radio. Removing signal ambiguity thus entails investment in one or more of the following: better radio components, additional radio complexity, additional integrated circuit area, additional antennas, additional computation, and/or additional power consumption.

Another area for potential improvement is in systems of radios. With more and more radios capable of transmitting and receiving, behavioral schemes (rules governing when and how radios transmit, such as listening before talking) can be used to mediate among radios. Also, because it is fundamentally easier to separate out known (and thus predictable) signals than random ones, mechanisms that allow waveform and modulation information to be registered or otherwise shared may prove useful. However, there will always be practical limits to what can be shared or coordinated.
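Knowing a waveform in advance makes cancellation possible. A receiver can correlate against the known waveform, estimate its amplitude by least squares, and subtract it, leaving a much weaker unknown signal readable.

This is a minimal sketch under stated assumptions:
- The strong waveform is a hypothetical shared ±1 sequence.
- Timing is perfectly aligned.
- The channel introduces no noise or distortion.

Had the strong signal been random and unknown, there would be nothing to correlate against.

```python
import random


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def cancel_known(received, reference):
    """Least-squares estimate of a known waveform's amplitude, then subtract it."""
    amp = dot(received, reference) / dot(reference, reference)
    return amp, [r - amp * s for r, s in zip(received, reference)]


rng = random.Random(1)
n = 1000
known = [rng.choice((-1.0, 1.0)) for _ in range(n)]    # waveform shared in advance (hypothetical)
weak = [rng.choice((-0.05, 0.05)) for _ in range(n)]   # unknown signal, about 52 dB weaker
received = [20.0 * k + w for k, w in zip(known, weak)]

amp, residual = cancel_known(received, known)
# After cancellation, the sign of every residual sample matches the weak signal.
print(round(amp, 3), all((r > 0) == (w > 0) for r, w in zip(residual, weak)))
```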

The costs of disambiguating signals are, thus, ultimately reflected in a number of ways, including in the complexity of a radio’s (or system’s) design, the cost of its hardware, its size, the power it consumes, and (for mobile devices) the lifetime of the battery. Disambiguation thus involves tradeoffs, given that a radio is built to meet many requirements, only one of which is dealing with signal ambiguity.

ENDURING TECHNICAL CHALLENGES

Even as the capabilities and performance of radios continue to improve, a number of hard technical problems can be expected to persist.

Power Consumption

The power required to operate increasingly complex and sophisticated radios will continue to represent an important boundary condition, especially for mobile devices, where it dictates the cost, capacity, dimensions, and weight of their batteries as well as the interval between charges. Even for nonmobile devices, excess power results in heat that requires space or costly cooling components to dissipate. The design of practical radios will continue to reflect difficult tradeoffs between power consumption and other desired attributes and capabilities.

Nonlinearity

Real-world radio elements are not perfectly linear—that is, the output of an element is not exactly proportional to the input. Nonlinearity results in signal distortion and, when more than one signal is present in a nonlinear element, the creation of new, unwanted products of the original signals—an effect known as intermodulation distortion. The result is a degraded ability to separate a desired signal from other signals, which constrains the extent to which a receiver can mitigate interference.
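Intermodulation can be demonstrated numerically. Pass two strong tones at frequencies f1 and f2 through a weakly nonlinear element. New third-order products appear at 2f1 - f2 and 2f2 - f1, which can land inside a channel that neither original tone occupied.

This sketch has the following assumptions:
- The element is modeled as y = x + A3·x³ with a hypothetical coefficient A3.
- Frequencies are expressed as integer DFT bins so that each tone falls exactly on a bin.
- Theory predicts each third-order product at an amplitude of 3·A3/4 = 0.075.

```python
import cmath
import math

N = 256                  # samples per block
F1, F2 = 40, 45          # two strong interferers, in DFT bins
A3 = 0.1                 # assumed cubic coefficient: y = x + A3 * x**3

x = [math.cos(2 * math.pi * F1 * n / N) + math.cos(2 * math.pi * F2 * n / N) for n in range(N)]
y = [v + A3 * v**3 for v in x]   # weakly nonlinear amplifier


def tone_amplitude(signal, k):
    """Amplitude of the real sinusoid at DFT bin k (0 < k < N/2)."""
    X = sum(s * cmath.exp(-2j * math.pi * k * n / N) for n, s in enumerate(signal))
    return 2 * abs(X) / N


for k in (2 * F1 - F2, 2 * F2 - F1):   # third-order products at bins 35 and 50
    print(k, round(tone_amplitude(x, k), 6), round(tone_amplitude(y, k), 6))
```

Suppose a weak desired signal sat at bin 35. The linear input would leave that bin empty, but the nonlinear output would place interference there. No amount of later digital filtering can remove it.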

Radio designers use several strategies to mitigate these effects. One is to use filters that separate out signals at other frequencies from the range of signals that are to be detected. In particular, filters allow relatively strong signals to be separated out so that a relatively weak signal can be detected. Another is to use components that are close to linear over a wider range of signal strengths.

Nonlinearity has always been a significant challenge to radio designers. It is a particular challenge to realizing the vision of radios that dynamically adapt to the presence of other radios by changing their frequency and other operating parameters. One might imagine building a radio that uses digital signal processing over very wide frequency ranges to separate out desired signals from potentially interfering signals. Doing so would allow one to leverage improvements in digital logic and better digital signal processing techniques to mitigate interference. However, the extent to which this approach can be used is constrained by the intermodulation distortion associated with real-world radio components, which limits the bandwidth that can be handled practically using digital signal processing alone.

Researchers are pursuing a variety of avenues to overcome these constraints. One of them has long been of interest but has not been realized in commercial products: the use of narrow filters that are tunable under digital control over a wide range, perhaps using microelectromechanical systems (MEMS) technology.

Nomadic Operation and Mobility

Supporting nomadic operation and mobility requires more dynamic adaptation of radio operating parameters than is needed for fixed radios, which only need to cope with changes in environmental conditions. Moreover, nomadic operation and mobility make it more difficult to neatly segment space or frequency, and they complicate dynamic market approaches because they make it more difficult to buy and sell rights at the rate at which radios can move between segments.

Heterogeneity of Capabilities

As more sophisticated radios are deployed, the heterogeneity of capabilities, especially the existence of radios with much poorer performance than others, will present growing challenges. At any point in time, there will be a legacy of deployed equipment, existing frequency allocations, and existing businesses and government operations that are being made obsolete, in some sense, by new capabilities. The problem is not new. However, the rapid pace of technological advancement and the concomitant explosion of applications, especially applications with different purposes and capabilities, magnify the challenges.

Not all heterogeneity will arise from legacy systems. Some applications will have cost and/or power requirements that preclude the use of highly sophisticated radios that coordinate their behavior. For example, the cost and power constraints on embedded networked sensors rule out radios capable of the sophisticated signal processing or complex computation needed to coordinate their behavior. Another manifestation of heterogeneity is the contrast between active use, which involves both transmitter(s) and receiver(s), and passive spectrum use (e.g., remote sensing and radio astronomy), which involves receivers only.14 Figuring out how to simultaneously accommodate both more sophisticated, adaptable radios and those that are necessarily less sophisticated will be an ongoing challenge.

TIMESCALES FOR TECHNOLOGY DEPLOYMENT

A particular challenge in contemplating changes to policy or regulatory practice is determining just how quickly promising new technologies will actually be deployable as practical devices and systems and thus how quickly, and in what directions, policy should be adjusted.

Rate for Deployment of New Technologies as Practical Devices and Systems

As is natural with all rapidly advancing technology areas, concepts and prototypes are often well ahead of what has been proven feasible or commercially viable. The potential of adaptive radios, for example, has been explored (particularly for military use), but the technology has not yet been used in mainstream commercial devices or services. As described above, there is reason to expect the capabilities of radios to improve and their hardware costs to steadily decline, but many important details of operation and protocols must be worked out in parallel with technical development and regulatory change. Moreover, although great technical progress has been made in recent years, resulting in the deployment of new wireless services, wireless communications will remain a fertile environment for future basic research as well as product and service development.

Timescales for Technology Turnover

Different wireless services are characterized by the different timescales on which technology can be upgraded. The factors influencing the turnover time include the time to build out the infrastructure and the time to convince existing users (who may be entrenched and politically powerful) to make a shift. For instance, public safety users tend to have a long evolution cycle, as government procurement cycles are long and products are made to last a long time. Cellular turnover is rapid by comparison, and technology can be changed out relatively readily (a 2-year handset half-life and a 5- to 7-year time frame for a shift to new technology are typical). The digital television transition that finally occurred in the United States in 2009 is emblematic of the challenge of making a transition where technology turnover is very slow, in part because of expectations raised by static technology and services that were developed over many decades.

Importantly, the rate at which turnover is possible depends on the incentives for upgrading as well as the size of the installed base. For instance, firms operating cellular networks have demonstrated an ability to upgrade their technology fairly quickly despite having an enormous user base. Aviation, by contrast, has a relatively small set of users but a very slow turnover rate, having yet to transition from essentially 1940s radio voice technology. The primary driver of successful upgrades is for users to see tangible benefits and for service providers to have an incentive to push for the switch. Cellular subscribers gain tangible benefits from newer capabilities commensurate with the added costs. Private pilots, however, would incur a large capital cost and have to learn a new system even though the existing technology already meets their requirements. (Also, U.S. mobile operators generally subsidize handset costs, somewhat offsetting the visible costs to the end user, because doing so makes it easier to upgrade their network technologies and increase system capacity.)15

TALENT AND TECHNOLOGY BASE FOR DEVELOPING FUTURE RADIO TECHNOLOGY

The changing nature of radios is creating new demands for training and education. Research and development (R&D) for radios depend on skills that span both the analog and the digital realms and encompass multiple traditional disciplines in electrical and computer engineering. Similarly, making progress in wireless networks often requires expertise from both electrical engineering and computer science. It is thus not straightforward for a student to obtain the appropriate education and training through a traditional degree program. The nature of modern radios presents another barrier to advanced education and university-based research, because the CMOS chips that lie at their heart require very large-scale fabrication facilities, presenting a significant logistical barrier to university-based groups that seek to test and evaluate new techniques.

This report assumes a continued stream of innovation in radio technology. Such sustained innovation depends on the availability of scientific and engineering talent and on sustained R&D efforts. Considerable attention has been focused in recent years on broad concerns about the declining base of scientific and engineering talent and levels of research support in the United States, and on their implications for competitiveness, including in the area of telecommunications. For a broad look at trends and their implications for science, engineering, and innovation, see Rising Above the Gathering Storm: Energizing and Employing America for a Brighter Economic Future;16 for a study focused on telecommunications research, see Renewing U.S. Telecommunications Research.17

The issues and opportunities described in this report involve considerations of many areas of science and engineering—including RF engineering, CMOS, networking, communications system theory, computer architecture, applications, communications policy, and economics. Addressing the challenges and realizing the opportunities will require a cadre of broad systems-oriented thinkers. Building this talent will be a major national advantage.

Radio engineering is an important area for consideration in this context, given that wireless is a fast-moving, high-technology industry that is economically important in its own right and that has much broader economic impacts. Moreover, wireless engineering encompasses an extensive skill set—including RF engineering, an ability to do RF work in CMOS technology, and an ability to work on designs that integrate RF and digital logic components—that is difficult to learn in a conventional degree program. Similarly, wireless networks involve expertise that spans both electrical engineering and computer science.

Finally, for R&D to be effective, it is important to be able to implement and experiment with new ideas in actual radios and systems of radios. Work on new radio designs requires access to facilities for IC design and fabrication. Work on new radio system architectures also benefits from access to test beds that allow ideas to be tested at scale. Given the high cost of such facilities, university R&D can be enhanced by collaboration with industry.

MEASUREMENTS OF SPECTRUM USE

The standard reference in the United States for the use of spectrum is the U.S. Frequency Allocation Chart that is published by the NTIA. The chart separates the spectrum from 30 MHz to 300 GHz into federal or nonfederal use and indicates the current frequency allocations for a multitude of services (cellular, radiolocation, marine, land mobile radio, military systems, and so on).

Although this chart is an invaluable reference in providing a comprehensive view of what frequencies are potentially in use for various services and in giving some indication of the complexity of frequency use, it does not shed light on a particularly critical issue: the actual density of use of the spectrum. That is, are there blank spaces in frequency, time, and space that could potentially be used for other purposes?

It is increasingly asserted that much spectrum goes unused or is used inefficiently. Yet relatively little is known about actual spectrum utilization. Licensees and users are not required to track their use of spectrum. There are no data available from any sort of ongoing, comprehensive measurement program. And when spectrum measurements have been made, they were often aimed at addressing a specific problem. Proxy measurements, such as the number of licenses issued in a frequency range, have been used to characterize trends and extrapolate likely use, but they do not measure actual use and do not, of course, yield any insight into unlicensed use.18

Why Spectrum Measurement Is Hard

Perhaps the greatest challenge is that no measurement program can be fully comprehensive across all the degrees of freedom that would need to be measured. Measurements can be made only at specific locations and times; measurements at one place may not reveal much about even nearby points. Results obtained by one set of measurements are not easily applied to a different situation. The full scope of measurement is suggested by the electrospace model, in which one specifies the frequency, time, angle of arrival (azimuth, elevation angle), and spatial location (latitude, longitude, elevation) to be measured.19 Other measurement considerations include polarization, modulation scheme, location type (e.g., urban, suburban, or rural),20 and which signals are being measured (known signals, unknown signals, or noise).
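A single measurement in the electrospace model has seven coordinates plus the measured power. Even a simple occupancy statistic depends on the detection threshold chosen.

This sketch assumes a hypothetical record layout and defines occupancy as the fraction of in-band samples above a threshold. It returns `None` rather than zero for unmeasured bands, so that missing data is not mistaken for non-use. The sample values are made up.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ElectrospaceSample:
    """One measurement in the electrospace (hypothetical record layout)."""

    freq_hz: float
    time_s: float
    azimuth_deg: float
    elevation_angle_deg: float
    lat_deg: float
    lon_deg: float
    height_m: float
    power_dbm: float


def occupancy(samples, freq_lo_hz, freq_hi_hz, threshold_dbm) -> Optional[float]:
    """Fraction of in-band samples whose power exceeds a detection threshold.

    Returns None when the band was never sampled: no data means "unknown",
    not "unused".
    """
    in_band = [s for s in samples if freq_lo_hz <= s.freq_hz < freq_hi_hz]
    if not in_band:
        return None
    busy = sum(1 for s in in_band if s.power_dbm > threshold_dbm)
    return busy / len(in_band)


# Four samples at one site, antenna pointing north, in a 10 MHz band (values made up).
samples = [
    ElectrospaceSample(455e6 + i * 1e6, float(i), 0.0, 0.0, 38.9, -77.0, 10.0, p)
    for i, p in enumerate([-100.0, -80.0, -60.0, -95.0])
]
print(occupancy(samples, 450e6, 460e6, -90.0))  # 0.5
print(occupancy(samples, 450e6, 460e6, -70.0))  # 0.25
```

The same data yields different occupancy figures under different thresholds. This is one concrete source of the subjectivity noted below.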

Many radio systems are designed to operate with very low average power levels, and naive spectrum measurement techniques may miss use by such low-power devices.21 Moreover, a directional signal will be missed if the receiver is not pointing in the right direction. Point-to-point microwave links and radar systems, which are directional and often operate at low average power levels, are examples of use that may be missed by spectrum measurement efforts. Radars emit narrow high-power pulses infrequently, which makes those pulses easy to miss. Some uses, such as public safety communications, are inherently sporadic and random in time and location. Because they are normally confined to military installations, defense uses may take place in well-defined locations but will vary considerably over time.
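The difficulty of catching radar pulses can be made quantitative with simple geometry. A receiver dwells on a frequency for a time D starting at a random moment. It overlaps a periodic pulse train of width w and repetition interval T with probability (D + w)/T.

This sketch assumes the following:
- The pulse train is strictly periodic.
- The receiver is tuned to the radar's frequency and inside its beam.
- Dwells are independent.
- The pulse and dwell timings are illustrative.

A scanning radar that points at the receiver only briefly would make detection even less likely.

```python
def intercept_probability(dwell_s: float, pulse_width_s: float, pri_s: float) -> float:
    """Chance that one dwell, started at a random time, overlaps at least one pulse.

    Assumes a strictly periodic pulse train and a receiver that is tuned to the
    radar's frequency and inside its beam for the whole dwell.
    """
    # The dwell [t, t + dwell] hits a pulse starting at 0 iff t falls in
    # (-dwell, pulse_width): a window of length dwell + pulse_width per period.
    return min(1.0, (dwell_s + pulse_width_s) / pri_s)


def miss_probability(dwell_s, pulse_width_s, pri_s, n_dwells):
    """Chance that n independent, randomly timed dwells all miss the pulses."""
    return (1.0 - intercept_probability(dwell_s, pulse_width_s, pri_s)) ** n_dwells


# Assumed radar: 1 microsecond pulses every 1 ms; sweeping receiver dwells 10 microseconds.
print(intercept_probability(10e-6, 1e-6, 1e-3))      # about 0.011
print(miss_probability(10e-6, 1e-6, 1e-3, 100))      # about 0.33
```

Even after 100 looks, there is roughly a one-in-three chance that the band would be recorded as empty.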

Also, measurements by definition capture only active use of the spectrum. Passive uses such as remote sensing cannot be detected. Worse, they could be misinterpreted as non-use, making the bands they rely on appear empty. Similarly, without careful interpretation, guard bands established to mitigate interference for existing services could be interpreted as unused portions of the spectrum even though these bands are in a real sense being used to enable those services.

These considerations suggest that spectrum measurement is a challenging endeavor that requires measurements at many points in space and time and the collection of a very large amount of data. They also suggest that spectrum measurement has an inherent element of subjectivity, because results may depend significantly on the particular assumptions made and methods employed.

Looking forward, measurement might be improved over the long term by requiring systems to provide usage statistics, as might the development and adoption of a formal framework for measuring, characterizing, and modeling spectrum utilization. Such a framework might provide researchers a way to cogently discuss spectrum utilization and provide policy makers with evidence-based information about technical factors affecting efficient utilization.22

Results from Some Measurement Activities

The NTIA has a long history of spectrum measurement work going back to at least 1973.23 Those early efforts included measurements of federal land mobile radio use in the 162-174 MHz and 406-420 MHz ranges and of Federal Aviation Administration radar bands in the 2.7-2.9 GHz range. These projects were generally considered successful because the measurements focused on a definite problem and were able to address specific questions. Examples include whether claimed interference was real and whether minor changes to receivers could mitigate the problem of overcrowded use. The NTIA conducted a number of broadband spectrum surveys in different cities in the 1990s.24

An NTIA report from 1993 (updated in 2000) used proxy information as a “measurement” of spectrum usage for fixed services (e.g., common carriers).25 That report examined historical license data and observations about market and technology factors likely to affect spectrum use. The aim was to gain insight into the degree to which the existing fixed-service spectrum bands would continue to be needed for their allocated services. One conclusion to be drawn from that report is that point-to-point microwave bands are probably underused and that the growth expected when these bands were allocated decades ago did not occur.26 Much of the traffic once anticipated for point-to-point microwave has moved to optical fiber instead. Microwave links are still used in many rural areas where the traffic does not justify the cost of laying fiber.

A number of research projects have attempted to measure spectrum utilization directly.27 Shared Spectrum Company, a developer of spectrum-sensing cognitive radio technology, has conducted several measurement studies since 2000. These include occupancy measurements in urban settings such as New York City and Chicago, suburban settings such as northern Virginia, and rural environments in Maine and West Virginia.28 Spectrum measurements for the New York City study were made during a period of expected high occupancy, the Republican National Convention.29 The studies aimed to determine how much spectrum might be allocated for more sophisticated wireless applications and secondary users relative to primary (licensed) users.

Some important conclusions can be drawn from these measurements. The measurements indicate that some frequency bands are very heavily used and that some other currently assigned frequency bands are only lightly used, at least over some degrees of freedom. Above all, the measurements made to date clearly show that frequency allocation and assignment charts are misleading. The charts suggest that little spectrum is available for new applications and services. In fact, considerably more could be available if the right sharing or interference mitigation measures were put in place. One might legitimately quibble over the details or the precise level of use. The real point is that there is a good deal of empty space, provided that ways of safely detecting and using it can be found.

Another broad conclusion is that the density of use becomes lower at higher frequencies. The advent of low-cost radios that can operate at frequencies in the tens of gigahertz points to a promising arena for introducing new services.

Finally, measurements of spectrum use do not capture the value of use. In addition, suppose a licensee internalizes the opportunity cost of underutilized spectrum and has a way to mitigate that cost. In that case there is no need for centralized measurement and management. Empty space may exist, but the best way to use it is not necessarily for the government to allow additional users.

CHALLENGES FACING REGULATORS

Technology advances bring new issues before regulators that require careful analysis. Some require a subtle understanding of the ways in which new technology may necessitate new regulatory approaches and a reexamination of past assumptions about limitations and constraints. Several examples are discussed below.

Use of White Space to Increase Spectrum Utilization

The basic goal of “white space” utilization is to let lower-priority operators use spectrum that higher-priority users leave unoccupied. From a technical perspective, this approach requires adding sensing capability to devices so that they can determine whether a higher-priority user is using the spectral band (or bands). Such dynamic use of spectrum has not been supported in past regulatory models.

In the dynamic situation envisaged in the white-space model, several new questions and considerations have to be addressed. For instance, “occupancy” must be defined thoughtfully. Higher-priority users opposed to the use of white space might say that any use of their spectrum could cause harm to their transmissions, so that only “no interference” is acceptable. Yet achieving no interference has never been possible, because all radios transmit some energy outside their allowed bands and can interfere with users in adjacent bands. Therefore, the only question, ultimately, is the degree to which interference is allowed. Without a clear technical analysis of when a given level of interference actually causes significant degradation of signal, it is difficult to determine an acceptable level. Determining that level matters for formulating rules to open up spectrum. It also matters for private parties negotiating what level of interference they would accept in return for a market price.

A clear technical analysis requires that several factors be considered. First, estimating the total interference load depends on a realistic statistical model with four elements:
- the number of likely secondary users;
- the transmitted power spectrum of each user;
- the susceptibility of the primary occupant’s receivers to these secondary signals; and
- the ability of the primary user to adapt its transmissions to reduce the impacts of the secondary users.

Given that the analysis is statistical in nature, it may be useful to approach the question in terms of a probability of degradation that should not be exceeded. If the likelihood of degradation by secondary users falls below this probability, then those secondary users would be considered as not occupying the band of the primary user. An analysis done from this perspective would help avoid situations in which highly improbable scenarios (as opposed to situations that can reasonably be expected to cause a problem) lead to the rejection of sharing arrangements.
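The probabilistic criterion can be sketched as a Monte Carlo estimate. Repeatedly drop secondary users at random, sum their interference at the primary receiver, and count how often the sum exceeds what the receiver tolerates.

This sketch rests on simplifying assumptions:
- Users are placed uniformly over an annulus, with a guard radius around the primary receiver.
- Power law path loss applies, with a 1 m reference distance.
- Every user transmits at the same power.
- The receiver has a single tolerance limit.
- The primary is assumed not to adapt.
- All numbers, including the 1% target, are hypothetical.

```python
import math
import random


def p_degradation(n_users, radius_m, guard_m, tx_mw, pathloss_exp, limit_mw,
                  trials=10_000, seed=0):
    """Monte Carlo estimate of P(aggregate secondary interference > limit_mw).

    Hypothetical model: n_users secondaries dropped uniformly over an annulus
    from guard_m to radius_m around the primary receiver, each radiating tx_mw,
    with received power falling off as distance**-pathloss_exp (1 m reference).
    The primary's ability to adapt is not modeled.
    """
    rng = random.Random(seed)
    exceed = 0
    for _ in range(trials):
        total_mw = 0.0
        for _ in range(n_users):
            # Uniform over area: draw r**2 uniformly, then take the square root.
            r = math.sqrt(rng.uniform(guard_m**2, radius_m**2))
            total_mw += tx_mw * r ** -pathloss_exp
        if total_mw > limit_mw:
            exceed += 1
    return exceed / trials


p = p_degradation(n_users=20, radius_m=2000.0, guard_m=200.0, tx_mw=100.0,
                  pathloss_exp=3.5, limit_mw=1e-6, trials=5000)
target = 0.01  # assumed tolerable probability of degradation
print(f"P(degradation) = {p:.4f}:", "acceptable" if p < target else "exceeds target")
```

Framing the result as a probability makes it explicit how rare a harmful configuration must be before it is accepted. This helps keep improbable worst cases from dominating the decision.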

Second, considering frequency as the only degree of freedom available to separate users makes for simpler technical analysis but is highly limiting. Radios built to perform dynamic beam forming, for instance, allow highly sophisticated spatial separation. Also, if sensing is fast enough, then it is possible to exploit white spaces in time. Thus frequency, time, and space could all be considered as tools to reduce the effects of interference to below the level of degradation defined as noninterference.

Third, spectral emissions regulations have historically treated each transmitter as working independently. Yet sensing performed cooperatively by a network of devices might offer much greater opportunity for more efficient spectrum use. Just how much might be gained from such an approach is not well understood, because it depends on the statistical correlation between sensing at different locations. Considering such an approach requires the same change of mind-set described previously, one that allows for statistically based improvements.
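Fusing reports from several sensors is one way to see both the potential gain and why correlation matters. A common rule declares a channel occupied when at least k of n sensors say so.

This sketch assumes statistically independent sensors, which is the most favorable case. Sensors behind the same obstruction would be correlated and would gain far less; fully correlated sensors behave like one. The single-sensor probabilities are illustrative.

```python
import math


def k_out_of_n(p_single: float, n: int, k: int) -> float:
    """P(at least k of n sensors report 'occupied'), assuming independent sensors."""
    return sum(math.comb(n, j) * p_single**j * (1 - p_single) ** (n - j) for j in range(k, n + 1))


p_detect, p_false = 0.6, 0.01   # assumed single-sensor performance (e.g., a shadowed sensor)
for k in (1, 3, 5):
    print(f"{k}-of-5: P_d = {k_out_of_n(p_detect, 5, k):.3f}, P_fa = {k_out_of_n(p_false, 5, k):.4f}")
```

The "any one sensor" rule (k = 1) lifts detection from 0.6 to about 0.99 under independence. It also raises the false-alarm rate, so the fusion rule trades one against the other.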

Finally, there is the issue of detection sensitivity. Greater sensitivity increases the probability of detection but also greatly increases the probability of false alarms. In other words, beyond some point, increasing sensitivity causes random noise to appear as occupancy. A proper analysis requires an understanding of sensing that goes beyond measuring the energy in a spectral band. Most signals have distinctive signatures that can be used to differentiate them from noise or other spurious emissions.
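The sensitivity/false-alarm tradeoff can be seen in the baseline case of a plain energy detector, which is exactly the kind of sensing the passage says is insufficient on its own. The sketch below uses the standard central-limit (Gaussian) approximation, assuming complex Gaussian noise of known power and a complex Gaussian primary signal; it does not model signature-based (feature) detection. The function names, the SNR, and the sample count are assumptions for illustration.

```python
import math


def q_function(x: float) -> float:
    """Gaussian tail probability Q(x) = P(Z > x) for a standard normal Z."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def energy_detector(threshold: float, snr: float, n_samples: int,
                    noise_power: float = 1.0) -> tuple[float, float]:
    """Return (P_false_alarm, P_detection) for an averaged energy detector.

    The test statistic is the mean of |x|**2 over n_samples. Gaussian
    approximation, assuming complex Gaussian noise and a complex Gaussian
    signal with linear SNR `snr`:
      noise only:       mean = N0,           std = N0 / sqrt(n)
      signal present:   mean = N0*(1+snr),   std = N0*(1+snr) / sqrt(n)
    """
    lam = threshold / noise_power
    root_n = math.sqrt(n_samples)
    p_fa = q_function((lam - 1.0) * root_n)
    p_d = q_function((lam - 1.0 - snr) * root_n / (1.0 + snr))
    return p_fa, p_d


if __name__ == "__main__":
    snr = 10 ** (-10 / 10)  # hypothetical -10 dB primary signal at the sensor
    # Lowering the threshold (more sensitivity) raises P_d and P_fa together.
    for threshold in (1.20, 1.10, 1.05, 1.02):
        p_fa, p_d = energy_detector(threshold, snr, n_samples=500)
        print(f"threshold={threshold:.2f}  P_fa={p_fa:.3f}  P_d={p_d:.3f}")
```

Because an energy detector sees only total power, its only lever is the threshold; detectors that exploit a signal's distinctive structure can, in principle, separate signal from noise more reliably at the same sensitivity.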

One opportunity to make use of white space is in the broadcast television bands. To that end, in late 2008, the FCC issued a set of rules30 under which devices use geolocation and access to an online database of television broadcasters, together with spectrum-sensing technology, to avoid interfering with broadcasters and other users of the television bands. (The ruling also permits devices that rely solely on sensing, provided that they meet more rigorous standards.) Debate and litigation followed the 2008 order on such issues as how to establish and operate a database of broadcaster locations. In a second order, issued in 2010 to finalize the rules, the FCC dropped the requirement that devices incorporating geolocation and database access must also employ sensing.31
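The geolocation-plus-database approach can be sketched as a lookup: the device knows its position, retrieves nearby protected stations, and excludes any channel whose protected area it falls inside. Everything below is hypothetical. The record format, the circular keep-out zones, the channel numbers, and the coordinates are invented for illustration; the actual FCC rules define protection in terms of service contours and separation distances that this sketch does not reproduce, and adjacent-channel protection is ignored.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass(frozen=True)
class ProtectedStation:
    """Hypothetical database record: a channel and a circular keep-out zone."""
    channel: int
    lat_deg: float
    lon_deg: float
    keep_out_km: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def available_channels(lat: float, lon: float, candidate_channels: list[int],
                       database: list[ProtectedStation]) -> list[int]:
    """Channels not blocked by any protected station covering this location."""
    blocked = {
        s.channel
        for s in database
        if haversine_km(lat, lon, s.lat_deg, s.lon_deg) < s.keep_out_km
    }
    return sorted(ch for ch in candidate_channels if ch not in blocked)


if __name__ == "__main__":
    # Made-up stations and device position, for illustration only.
    db = [
        ProtectedStation(channel=21, lat_deg=40.0, lon_deg=-75.0, keep_out_km=80.0),
        ProtectedStation(channel=30, lat_deg=41.5, lon_deg=-75.0, keep_out_km=80.0),
    ]
    print(available_channels(40.3, -75.1, [21, 22, 30, 31], db))
```

In this model the device makes no measurement at all, which mirrors the direction of the 2010 order: for database-equipped devices, protection comes from location and the database rather than from sensing.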

Adaptive Antenna Arrays and Power Limits

Antenna arrays at transmitters and receivers are increasingly being used to provide greater range, robustness, and capacity. Yet the basic regulatory strategy of defining an equivalent isotropically radiated power level for transmitters ignores many of the special characteristics of antenna arrays. For example, this regulatory approach does not encourage the use of beam forming, which offers considerable advantages over omnidirectional antennas in reducing interference. Because such a limit caps the power radiated in the strongest direction, it gives no credit for the reduced emissions in all other directions that beam forming provides.
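A small calculation shows why an equivalent isotropically radiated power (EIRP) limit sits awkwardly with arrays. The sketch assumes an idealized uniform linear array of isotropic elements at half-wavelength spacing, equal power per element, no mutual coupling, and phase steering toward one direction; these are textbook simplifications, not details from the report. The function names and the 8-element, 20 dBm example are hypothetical.

```python
import cmath
import math


def array_power_gain(n_elements: int, steer_deg: float, look_deg: float) -> float:
    """Linear power gain, relative to a single isotropic antenna radiating the
    same total power, of an ideal half-wavelength-spaced uniform linear array
    phased toward steer_deg and observed from look_deg."""
    # Phase difference between adjacent elements (k * d = pi for d = lambda/2).
    psi = math.pi * (math.sin(math.radians(look_deg)) - math.sin(math.radians(steer_deg)))
    field = sum(cmath.exp(1j * n * psi) for n in range(n_elements))
    # Each element carries 1/n of the power, hence division by n.
    return abs(field) ** 2 / n_elements


def eirp_dbm(total_tx_dbm: float, n_elements: int, steer_deg: float, look_deg: float) -> float:
    """EIRP in a given direction for a fixed total transmit power."""
    gain = array_power_gain(n_elements, steer_deg, look_deg)
    return total_tx_dbm + 10 * math.log10(gain) if gain > 0 else float("-inf")


if __name__ == "__main__":
    total_dbm, n = 20.0, 8  # hypothetical transmitter: 20 dBm total, 8 elements
    for look in (0.0, 10.0, 45.0, 70.0):
        print(f"look={look:5.1f} deg  EIRP={eirp_dbm(total_dbm, n, 0.0, look):7.2f} dBm")
    # Under a cap applied to the main-beam EIRP, total power must drop by the
    # array gain, even though emissions toward other directions are far lower.
    print(f"required back-off: {10 * math.log10(n):.2f} dB")
```

With equal total power, the main beam gains about 10 log10(N) dB while most other directions receive much less. An EIRP rule counts only the former, so the array must back off its total power, forfeiting range that the reduced off-axis interference might otherwise justify.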

Decreasing Cost of Microwave Radio Links

As described earlier in this report, standard CMOS technology can now be used to transmit in the microwave bands (60-GHz links have been demonstrated). As desired data rates rise into the gigabit-per-second range, adaptive antenna arrays are expected to be used to obtain the necessary received power for both mobile and fixed devices. As with the previous examples, this technology differs markedly from what has been in use until now.
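A rough link-budget calculation indicates why arrays become necessary at 60 GHz. The sketch compares free-space path loss at 60 GHz and 2.4 GHz using the Friis free-space formula, computes the thermal noise floor for a wide gigabit-class channel (using the standard -174 dBm/Hz at room temperature), and estimates how many array elements per end would recover the extra loss. It assumes ideal array gain of 10 log10(N) dB at each end and ignores oxygen absorption, which is significant near 60 GHz; the function names and example numbers are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s
THERMAL_NOISE_DBM_PER_HZ = -174.0  # kT at about 290 K


def free_space_path_loss_db(distance_m: float, freq_hz: float) -> float:
    """Friis free-space path loss between isotropic antennas, in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


def thermal_noise_dbm(bandwidth_hz: float, noise_figure_db: float = 0.0) -> float:
    """Receiver noise floor: kTB plus the receiver noise figure."""
    return THERMAL_NOISE_DBM_PER_HZ + 10 * math.log10(bandwidth_hz) + noise_figure_db


def elements_per_end(extra_gain_db: float) -> int:
    """Elements needed at each end, assuming ideal N-element arrays at both
    ends so that the combined array gain is 20*log10(N) dB."""
    return max(1, math.ceil(10 ** (extra_gain_db / 20)))


if __name__ == "__main__":
    d = 10.0  # hypothetical 10 m indoor link
    loss_60 = free_space_path_loss_db(d, 60e9)
    loss_24 = free_space_path_loss_db(d, 2.4e9)
    extra = loss_60 - loss_24
    print(f"FSPL at 60 GHz: {loss_60:.1f} dB, at 2.4 GHz: {loss_24:.1f} dB")
    print(f"extra loss: {extra:.1f} dB -> about {elements_per_end(extra)} elements per end")
    print(f"noise floor for 2 GHz channel: {thermal_noise_dbm(2e9):.1f} dBm")
```

Two effects compound: the higher carrier frequency adds path loss between fixed-gain antennas, and the wider channel needed for gigabit rates raises the noise floor. Because the wavelength at 60 GHz is only about 5 mm, many elements fit in a small area, which is what makes array gain a practical remedy.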

ENGINEERING ALONE IS OFTEN NO SOLUTION

The previous section describes several specific issues where engineering insights would help to inform future policy and regulation. At the same time, it is important not to oversell the extent to which better engineering or understanding of the technology alone can yield solutions. In the end, an engineering analysis depends on knowledge of the possible scenarios and of which outcomes are acceptable. These inform a complex set of business, marketing, and political judgments about value and risk. For example,

-

Engineering alone does not determine whether a service supporting aviation merits greater protection from interference than a service delivering entertainment.

-

The density and the distribution of a constellation of mobile devices (which affect their ability to interfere) cannot be determined fully a priori. They will reflect market and consumer behavior, and moreover they will change over time.