4

Standards for Synthesizing the Body of Evidence

Abstract: This chapter addresses the qualitative and quantitative synthesis (meta-analysis) of the body of evidence. The committee recommends four related standards. The systematic review (SR) should use prespecified methods; include a qualitative synthesis based on essential characteristics of study quality (risk of bias, consistency, precision, directness, reporting bias, and for observational studies, dose–response association, plausible confounding that would change an observed effect, and strength of association); and make an explicit judgment of whether a meta-analysis is appropriate. If conducting meta-analyses, expert methodologists should develop, execute, and peer review the meta-analyses. The meta-analyses should address heterogeneity among study effects, accompany all estimates with measures of statistical uncertainty, and assess the sensitivity of conclusions to changes in the protocol, assumptions, and study selection (sensitivity analysis). An SR that uses rigorous and transparent methods will enable patients, clinicians, and other decision makers to discern what is known and not known about an intervention’s effectiveness and how the evidence applies to particular population groups and clinical situations.

More than a century ago, Nobel prize-winning physicist J. W. Strutt Lord Rayleigh observed that “the work which deserves … the most credit is that in which discovery and explanation go hand in hand, in which not only are new facts presented, but their relation to old ones is pointed out” (Rayleigh, 1884). In other words, the contribution of any singular piece of research draws not only from its own unique discoveries, but also from its relationship to previous research (Glasziou et al., 2004; Mulrow and Lohr, 2001). Thus, the synthesis and assessment of a body of evidence is at the heart of a systematic review (SR) of comparative effectiveness research (CER).

The previous chapter described the considerable challenges involved in assembling all the individual studies that comprise current knowledge on the effectiveness of a healthcare intervention: the “body of evidence.” This chapter begins with the assumption that the body of evidence was identified in an optimal manner and that the risk of bias in each individual study was assessed appropriately—both according to the committee’s standards. This chapter addresses the synthesis and assessment of the collected evidence, focusing on those aspects that are most salient to setting standards. The science of SR is rapidly evolving; much has yet to be learned. The purpose of standards for evidence synthesis and assessment—as in other SR methods—is to set performance expectations and to promote accountability for meeting those expectations without stifling innovation in methods. Thus, the emphasis is not on specifying preferred technical methods, but rather the building blocks that help ensure objectivity, transparency, and scientific rigor.

As it did elsewhere in this report, the committee developed this chapter’s standards and elements of performance based on available evidence and expert guidance from the Agency for Healthcare Research and Quality (AHRQ) Effective Health Care Program, the Centre for Reviews and Dissemination (CRD, part of University of York, UK), and the Cochrane Collaboration (Chou et al., 2010; CRD, 2009; Deeks et al., 2008; Fu et al., 2010; Lefebvre et al., 2008; Owens et al., 2010). Guidance on assessing quality of evidence from the Grading of Recommendations Assessment, Development, and Evaluation (GRADE) Working Group was another key source of information (Guyatt et al., 2010; Schünemann et al., 2009). See Appendix F for a detailed summary of AHRQ, CRD, and Cochrane guidance for the assessment and synthesis of a body of evidence.

The committee had several opportunities for learning the perspectives of stakeholders on issues related to this chapter. SR experts and representatives from medical specialty associations, payers, and consumer groups provided both written responses to the committee’s questions and oral testimony in a public workshop (see Appendix C).

In addition, staff conducted informal, structured interviews with other key stakeholders.

The committee recommends four standards for the assessment and qualitative and quantitative synthesis of an SR’s body of evidence. Each standard consists of two parts: first, a brief statement describing the related SR step and, second, one or more elements of performance that are fundamental to carrying out the step. Box 4-1 lists all of the chapter’s recommended standards. This chapter provides the background and rationale for the recommended standards and elements of performance, first outlining the key considerations in assessing a body of evidence and then turning to the fundamental components of qualitative and quantitative synthesis. The order of the chapter’s standards and the presentation of the discussion do not necessarily indicate the sequence in which the various steps should be conducted. Although an SR synthesis should always include a qualitative component, the feasibility of a quantitative synthesis (meta-analysis) depends on the available data. If a meta-analysis is conducted, its interpretation should be included in the qualitative synthesis. Moreover, the overall assessment of the body of evidence cannot be done until the syntheses are complete.

In the context of CER, SRs are produced to help consumers, clinicians, developers of clinical practice guidelines, purchasers, and policy makers to make informed healthcare decisions (Federal Coordinating Council for Comparative Effectiveness Research, 2009; IOM, 2009). Thus, the assessment and synthesis of a body of evidence in the SR should be approached with the decision makers in mind. An SR using rigorous and transparent methods allows decision makers to discern what is known and not known about an intervention’s effectiveness and how the evidence applies to particular population groups and clinical situations (Helfand, 2005). Making evidence-based decisions—such as when a guideline developer recommends what should and should not be done in specific clinical circumstances—is a distinct and separate process from the SR and is outside the scope of this report. It is the focus of a companion IOM study on developing standards for trustworthy clinical practice guidelines.1

A NOTE ON TERMINOLOGY

The SR field lacks an agreed-on lexicon for some of its most fundamental terms and concepts, including what actually constitutes the quality of a body of evidence. This leads to considerable confusion. Because this report focuses on SRs for the purposes of CER and clinical decision making, the committee uses the term “quality of the body of evidence” to describe the extent to which one can be confident that the estimate of an intervention’s effectiveness is correct. This terminology is designed to support clinical decision making and is similar to that used by GRADE and adopted by the Cochrane Collaboration and other organizations for the same purpose (Guyatt et al., 2010; Schünemann et al., 2008, 2009).

1 The IOM report, Clinical Practice Guidelines We Can Trust, is available at the National Academies Press website: http://www.nap.edu/.

Quality encompasses summary assessments of a number of characteristics of a body of evidence, such as within-study bias (methodological quality), consistency, precision, directness or applicability of the evidence, and others (Schünemann et al., 2009). Synthesis is the collation, combination, and summary of the findings of a body of evidence (CRD, 2009). In an SR, the synthesis of the body of evidence should always include a qualitative component and, if the data permit, a quantitative synthesis (meta-analysis).

Standard 4.3 Decide if, in addition to a qualitative analysis, the systematic review will include a quantitative analysis (meta-analysis)

Standard 4.4 If conducting a meta-analysis, then do the following:

Required elements:

NOTE: The order of the standards does not indicate the sequence in which they are carried out.

The following section presents the background and rationale for the committee’s recommended standard and performance elements for prespecifying the assessment methods.

A Need for Clarity and Consistency

Neither empirical evidence nor agreement among experts is available to support the committee’s endorsement of a specific approach for assessing and describing the quality of a body of evidence. Medical specialty societies, U.S. and other national government agencies, private research groups, and others have created a multitude of systems for assessing and characterizing the quality of a body of evidence (AAN, 2004; ACCF/AHA, 2009; ACCP, 2009; CEBM, 2009; Chalmers et al., 1990; Ebell et al., 2004; Faraday et al., 2009; Guirguis-Blake et al., 2007; Guyatt et al., 2004; ICSI, 2003; NCCN, 2008; NZGG, 2007; Owens et al., 2010; Schünemann et al., 2009; SIGN, 2009; USPSTF, 2008). The various systems share common features, but employ conflicting evidence hierarchies; emphasize different factors in assessing the quality of research; and use a confusing array of letters, codes, and symbols to convey investigators’ conclusions about the overall quality of a body of evidence (Atkins et al., 2004a, 2004b; Schünemann et al., 2003; West et al., 2002). The reader cannot make sense of the differences (Table 4-1). Through public testimony and interviews, the committee heard that numerous producers and users of SRs were frustrated by the number, variation, complexity, and lack of transparency in existing systems.

One comprehensive review documented 40 different systems for grading the strength of a body of evidence (West et al., 2002). Another review, conducted several years later, found that more than 50 evidence-grading systems and 230 quality assessment instruments were in use (COMPUS, 2005).

Early systems for evaluating the quality of a body of evidence used simple hierarchies of study design to judge the internal validity (risk of bias) of a body of evidence (Guyatt et al., 1995). For example, a body of evidence that included two or more randomized controlled trials (RCTs) was assumed to be “high-quality,” “level 1,” or “grade A” evidence whether or not the trials met scientific standards. Quasi-experimental research, observational studies, case series, and other qualitative research designs were automatically considered lower quality evidence. As research documented the variable quality of trials and widespread reporting bias in the publication of trial findings, it became clear that such hierarchies are too simplistic because they do not assess the extent to which the design and implementation of RCTs (or other study designs) avoid biases that may reduce confidence in the measures of effectiveness (Atkins et al., 2004b; Coleman et al., 2009; Harris et al., 2001).

TABLE 4-1 Examples of Approaches to Assessing the Body of Evidence for Therapeutic Interventions*

| System | Rating | System for Assessing the Body of Evidence |
| --- | --- | --- |
| Agency for Healthcare Research and Quality | High | High confidence that the evidence reflects the true effect. Further research is very unlikely to change our confidence in the estimate of effect. |
| | Moderate | Moderate confidence that the evidence reflects the true effect. Further research may change our confidence in the estimate of effect and may change the estimate. |
| | Low | Low confidence that the evidence reflects the true effect. Further research is likely to change the confidence in the estimate of effect and is likely to change the estimate. |
| | Insufficient | Evidence either is unavailable or does not permit a conclusion. |
| American College of Chest Physicians | High | Randomized controlled trials (RCTs) without important limitations or overwhelming evidence from observational studies. |
| | Moderate | RCTs with important limitations (inconsistent results, methodological flaws, indirect, or imprecise) or exceptionally strong evidence from observational studies. |
| | Low | Observational studies or case series. |
| American Heart Association/American College of Cardiology | A | Multiple RCTs or meta-analyses. |
| | B | Single RCT, or nonrandomized studies. |
| | C | Consensus opinion of experts, case studies, or standard of care. |
| Grading of Recommendations Assessment, Development and Evaluation (GRADE) | | Starting points for evaluating quality level: |
| | | Factors that may decrease or increase the quality level of a body of evidence: |
| | High | Further research is very unlikely to change our confidence in the estimate of effect. |
| | Moderate | Further research is likely to have an important impact on our confidence in the estimate of effect and may change the estimate. |
| | Low | Further research is very likely to have an important impact on our confidence in the estimate of effect and is likely to change the estimate. |
| | Very low | Any estimate of effect is very uncertain. |
| National Comprehensive Cancer Network | High | High-powered RCTs or meta-analysis. |
| | Lower | Ranges from Phase II Trials to large cohort studies to case series to individual practitioner experience. |
| Oxford Centre for Evidence-Based Medicine | | Varies with type of question. Level may be graded down on the basis of study quality, imprecision, indirectness, inconsistency between studies, or because the absolute effect size is very small. Level may be graded up if there is a large or very large effect size. |
| | Level 1 | Systematic review (SR) of randomized trials or n-of-1 trial. For rare harms: SR of case-control studies, or studies revealing dramatic effects. |
| | Level 2 | SR of nested case-control or dramatic effect. For rare harms: Randomized trial or (exceptionally) observational study with dramatic effect. |
| | Level 3 | Nonrandomized controlled cohort/follow-up study. |
| | Level 4 | Case-control studies, historically controlled studies. |
| | Level 5 | Opinion without explicit critical appraisal, based on limited/undocumented experience, or based on mechanisms. |
| Scottish Intercollegiate Guidelines Network | 1++ | High-quality meta-analyses, SRs of RCTs, or RCTs with a very low risk of bias. |
| | 1+ | Well-conducted meta-analyses, SRs, or RCTs with a low risk of bias. |
| | 1− | Meta-analyses, SRs, or RCTs with a high risk of bias. |
| | 2++ | High-quality SRs of case control or cohort studies. High-quality case control or cohort studies with a very low risk of confounding or bias and a high probability that the relationship is causal. |
| | 2− | Case control or cohort studies with a high risk of confounding or bias and a significant risk that the relationship is not causal. |
| | 3 | Nonanalytic studies, e.g., case reports, case series. |
| | 4 | Expert opinion. |

* Some systems use different grading schemes depending on the type of intervention (e.g., preventive service, diagnostic tests, and therapies). This table includes systems for therapeutic interventions.

SOURCES: ACCF/AHA (2009); ACCP (2009); CEBM (2009); NCCN (2008); Owens et al. (2010); Schünemann et al. (2009); SIGN (2009).

The early hierarchies produced conflicting conclusions about effectiveness. A study by Ferreira and colleagues analyzed the effect of applying different “levels of evidence” systems to the conclusions of six Cochrane SRs of interventions for low back pain (Ferreira et al., 2002). They found that the conclusions of the reviews were highly dependent on the system used to evaluate the evidence primarily because of differences in the number and quality of trials required for a particular level of evidence. In many cases, the differences in the conclusions were so substantial that they could lead to contradictory clinical advice. For example, for one intervention, “back school,”2 the conclusions ranged from “strong evidence that back schools are effective” to “no evidence” on the effectiveness of back schools.

One reason for these discrepancies was failure to distinguish between the quality of the evidence and the magnitude of net benefit. For example, an SR and meta-analysis might highlight a dramatic effect size regardless of the risk of bias in the body of evidence. Conversely, use of a rigid hierarchy gave the impression that any effect based on randomized trial evidence was clinically important, regardless of the size of the effect. In 2001, the U.S. Preventive Services Task Force broke new ground when it updated its review methods, separating its assessment of the quality of evidence from its assessment of the magnitude of effect (Harris et al., 2001).

What Are the Characteristics of Quality for a Body of Evidence?

Experts in SR methodology agree on the conceptual underpinnings for the systematic assessment of a body of evidence. The committee identified eight basic characteristics of quality, described below, that are integral to assessing and characterizing the quality of a body of evidence. These characteristics (risk of bias, consistency, precision, directness, and reporting bias, and for observational studies, dose–response association, plausible confounding that would change an observed effect, and strength of association) are used by GRADE; the Cochrane Collaboration, which has adopted the GRADE approach; and the AHRQ Effective Health Care Program, which adopted a modified version of the GRADE approach (Owens et al., 2010; Balshem et al., 2011; Falck-Ytter et al., 2010; Schünemann et al., 2008). Although their terminology varies somewhat, Falck-Ytter and his GRADE colleagues describe any differences between the GRADE and AHRQ quality characteristics as essentially semantic (Falck-Ytter et al., 2010). Owens and his AHRQ colleagues appear to agree (Owens et al., 2010). As Boxes 4-2 and 4-3 indicate, the two approaches are quite similar.3

BOX 4-2 Key Concepts Used in the GRADE Approach to Assessing the Quality of a Body of Evidence

The Grading of Recommendations Assessment, Development, and Evaluation (GRADE) Working Group uses a point system to upgrade or downgrade the ratings for each quality characteristic. A grade of high, moderate, low, or very low is assigned to the body of evidence for each outcome. Eight characteristics of the quality of evidence are assessed for each outcome.

Five characteristics can lower the quality rating for the body of evidence:

Three factors can increase the quality rating for the body of evidence because they raise confidence in the certainty of estimates (particularly for observational studies):

SOURCES: Atkins et al. (2004a); Balshem et al. (2011); Falck-Ytter et al. (2010); Schünemann et al. (2009).
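The upgrade and downgrade logic summarized in Box 4-2 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the GRADE Working Group's procedure: the function name `grade_rating`, the factor labels, and the numeric scale are hypothetical. The sketch assumes the usual GRADE starting points (a body of randomized trials begins at high, a body of observational studies at low) and treats each reviewer judgment as a whole number of levels to subtract or add.

```python
# Hypothetical sketch of a GRADE-style rating; names and scale are assumptions.
LEVELS = ["very low", "low", "moderate", "high"]

# Five characteristics that can lower the rating.
DOWNGRADE_FACTORS = {
    "risk_of_bias", "inconsistency", "imprecision",
    "indirectness", "reporting_bias",
}
# Three factors that can raise the rating (mainly observational studies).
UPGRADE_FACTORS = {
    "dose_response", "plausible_confounding", "strength_of_association",
}
# Assumed starting points: index into LEVELS.
START = {"rct": 3, "observational": 1}


def grade_rating(design, downgrades=None, upgrades=None):
    """Return a quality label for one outcome.

    design: "rct" or "observational".
    downgrades / upgrades: dicts mapping a factor name to the number of
    levels (a non-negative integer) the reviewers judged appropriate.
    """
    downgrades = downgrades or {}
    upgrades = upgrades or {}
    unknown = (set(downgrades) - DOWNGRADE_FACTORS) | (set(upgrades) - UPGRADE_FACTORS)
    if unknown:
        raise ValueError(f"unknown factors: {sorted(unknown)}")
    score = START[design] - sum(downgrades.values()) + sum(upgrades.values())
    # Clamp to the four available levels.
    score = max(0, min(score, len(LEVELS) - 1))
    return LEVELS[score]


if __name__ == "__main__":
    # Trials with serious risk of bias and serious imprecision.
    print(grade_rating("rct", downgrades={"risk_of_bias": 1, "imprecision": 1}))
    # Observational evidence raised one level for a dose-response association.
    print(grade_rating("observational", upgrades={"dose_response": 1}))
```

The arithmetic only records the result of the reviewers' judgments; deciding whether a limitation is serious enough to move the rating remains a qualitative assessment that the code does not replace.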

Risk of Bias

In the context of a body of evidence, risk of bias refers to the extent to which flaws in the design and execution of a collection of studies could bias the estimate of effect for each outcome under study.

3 For detailed descriptions of the AHRQ and GRADE methods, see the GRADE Handbook for Grading Quality of Evidence and Strength of Recommendations (Schünemann et al., 2009) and “Grading the Strength of a Body of Evidence When Comparing Medical Interventions—AHRQ and the Effective Health Care Program” (Owens et al., 2010).

BOX 4-3 Key Concepts Used in the AHRQ Approach to Assessing the Quality of a Body of Evidence

The Agency for Healthcare Research and Quality (AHRQ) Effective Health Care Program refers to the evidence evaluation process as grading the “strength” of a body of evidence. It requires that the body of evidence for each major outcome and comparison of interest be assessed according to the concepts listed below. After a global assessment of the concepts, AHRQ systematic review teams assign a grade of high, moderate, low, or insufficient to the body of evidence for each outcome.

Evaluation components in all systematic reviews:

Other considerations (particularly with respect to observational studies):

SOURCE: Owens et al. (2010).

Chapter 3 describes the factors related to the design and conduct of randomized trials and observational studies that may influence the magnitude and direction of bias for a particular outcome (e.g., sequence generation, allocation concealment, blinding, incomplete data, selective reporting of outcomes, and confounding),4 as well as available tools for assessing risk of bias in individual studies. Assessing risk of bias for a body of evidence requires a cumulative assessment of the risk of bias across all individual studies for each specific outcome of interest. Study biases are outcome dependent in that potential sources of bias impact different outcomes in different ways; for example, blinding of outcome assessors to treatment group might be less important for a study of the effect of an intervention on mortality than for a study measuring pain relief. The degree of confidence in the summary estimate of effect will depend on the extent to which specific biases in the included studies affect a specific outcome.

4 Sequence generation refers to the method used to generate the random assignment of study participants in a trial. A trial is “blind” if participants are not told to which arm of the trial they have been assigned. Allocation concealment is a method used to prevent selection bias in clinical trials by concealing the allocation sequence from those assigning participants to intervention groups. Allocation concealment prevents researchers from (unconsciously or otherwise) influencing the intervention group to which each participant is assigned.

Consistency

For the appraisal of a body of evidence, consistency refers to the degree of similarity in the direction and estimated size of an intervention’s effect on specific outcomes.5 SRs and meta-analyses can provide clear and convincing evidence of a treatment’s effect when the individual studies in the body of evidence show consistent, clinically important effects of similar magnitude (Higgins et al., 2003). Often, however, the results differ in the included studies. Large and unexplained differences (inconsistency) are of concern especially when some studies suggest substantial benefit, but other studies indicate no effect or possible harm (Guyatt et al., 2010).

However, inconsistency across studies may be due to true differences in a treatment’s effect related to variability in the included studies’ populations (e.g., differences in health status), interventions (e.g., differences in drug doses, cointerventions, or comparison interventions), and health outcomes (e.g., diminishing treatment effect with time). Examples of inconsistency in a body of evidence include statistically significant effects in opposite directions, confidence intervals that are wide or fail to overlap, and clinical or statistical heterogeneity that cannot be explained. When differences in estimates across studies reflect true differences in a treatment’s effect, then inconsistency provides the opportunity to understand and characterize those differences, which may have important implications for clinical practice. If the inconsistency results from biases in study design or improper study execution, then a thorough assessment of these differences may inform future study design.
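Statistical heterogeneity of the kind described above is often summarized with Cochran's Q and the I² statistic, which expresses the share of variability in study estimates that exceeds what chance alone would produce. The following Python sketch shows the standard fixed-effect calculation. The function name `heterogeneity` and the input layout (one effect estimate and one standard error per study, on a common scale such as the log risk ratio) are assumptions for illustration.

```python
# Hypothetical sketch: Cochran's Q and I-squared from study-level estimates.

def heterogeneity(effects, std_errors):
    """Return (pooled_effect, Q, I2_percent) using inverse-variance weights."""
    if len(effects) != len(std_errors) or len(effects) < 2:
        raise ValueError("need matching lists with at least two studies")
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    # Inverse-variance weighted (fixed-effect) pooled estimate.
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    # Q: weighted sum of squared deviations from the pooled estimate.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I2 = (Q - df) / Q, truncated at zero, as a percentage.
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    return pooled, q, i2


if __name__ == "__main__":
    # Illustrative numbers only; not taken from any study in this chapter.
    log_rr = [-0.35, -0.10, 0.20, -0.42]
    se = [0.12, 0.15, 0.18, 0.20]
    pooled, q, i2 = heterogeneity(log_rr, se)
    print(f"pooled={pooled:.3f}  Q={q:.2f}  I2={i2:.1f}%")
```

A high I² flags inconsistency but does not explain it; whether the differences reflect populations, interventions, outcomes, or bias still has to be examined in the qualitative synthesis.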

5 In analyses involving indirect comparisons, network meta-analyses, or mixed-treatment comparisons, the term consistency refers to the degree to which the direct comparisons (head-to-head comparisons) and the indirect comparisons agree with each other with respect to the magnitude of the treatment effect of interest.

Precision

A measure of the likelihood of random errors in the estimates of effect, precision refers to the degree of certainty about the estimates for specific outcomes. Confidence intervals about the estimate of effect from each study are one way of expressing precision, with a narrower confidence interval meaning more precision.
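As a worked illustration of how a confidence interval expresses precision, the Python sketch below computes a risk ratio and its approximate 95 percent confidence interval from the event counts in two trial arms, using the usual large-sample standard error on the log scale. The function name `risk_ratio_ci` and the example counts are hypothetical, and the sketch assumes at least one event in each arm.

```python
# Hypothetical sketch: risk ratio with a large-sample confidence interval.
import math


def risk_ratio_ci(events_t, n_t, events_c, n_c, z=1.96):
    """Return (rr, lower, upper) for treatment vs. control.

    Assumes events_t > 0 and events_c > 0; z=1.96 gives roughly 95% coverage.
    """
    rr = (events_t / n_t) / (events_c / n_c)
    # Standard error of log(RR).
    se_log = math.sqrt(1 / events_t - 1 / n_t + 1 / events_c - 1 / n_c)
    lower = math.exp(math.log(rr) - z * se_log)
    upper = math.exp(math.log(rr) + z * se_log)
    return rr, lower, upper


if __name__ == "__main__":
    # Same event proportions, tenfold difference in sample size.
    for counts in [(10, 100, 20, 100), (100, 1000, 200, 1000)]:
        rr, lo, hi = risk_ratio_ci(*counts)
        print(f"n per arm={counts[1]:>4}: RR={rr:.2f}, 95% CI {lo:.2f} to {hi:.2f}")
```

Both examples have the same point estimate, but the larger trial yields a narrower interval, which is what is meant by greater precision.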

Directness

The concept of directness has two dimensions, depending on the context:

- When interventions are compared, directness refers to the extent to which the individual studies were designed to address the link between the healthcare intervention and a specific health outcome. A body of evidence is considered indirect if the included studies only address surrogate or biological outcomes or if head-to-head (direct) comparisons of interventions are not available (e.g., intervention A is compared to intervention C, and intervention B is compared to C, when comparisons of A vs. B studies are of primary interest, but not available).

- The other dimension of “directness” is applicability (also referred to as generalizability or external validity).6 A body of evidence is applicable if it focuses on the specific condition, patient population, intervention, comparators, and health outcomes that are the focus of the SR’s research protocol. SRs should assess the applicability of the evidence to patients seen in everyday clinical settings. This is especially important because numerous clinically relevant factors distinguish clinical trial participants from most patients, such as health status and comorbidities as well as age, gender, race, and ethnicity (Pham et al., 2007; Slone Survey, 2006; Vogeli et al., 2007).

6 As noted in Chapter 1, applicability is one of seven criteria that the committee used to guide its selection of SR standards. In that context, applicability relates to the aim of CER, that is, to help consumers, clinicians, purchasers, and policy makers to make informed decisions that will improve health care at both the individual and population levels. The other criteria are acceptability/credibility, efficiency of conducting the review, patient-centeredness, scientific rigor, timeliness, and transparency.

Reporting Bias

Chapter 3 describes the extent of reporting bias in the biomedical literature. Depending on the nature and direction of a study’s results, research findings or findings for specific outcomes are often selectively published (publication bias and outcome reporting bias), published in a particular language (language bias), or released in journals with different ease of access (location bias) (Dickersin, 1990; Dwan et al., 2008; Gluud, 2006; Hopewell et al., 2008, 2009; Kirkham et al., 2010; Song et al., 2009, 2010; Turner et al., 2008). Thus, for each outcome, the SR should assess the probability of a biased subset of studies comprising the collected body of evidence.

Dose–Response Association

When findings from similar studies suggest a dose–response relationship across studies, it may increase confidence in the overall body of evidence. “Dose–response association” is defined as a consistent association across similar studies of a larger effect with greater exposure to the intervention. For a drug, a dose–response relationship might be observed with the treatment dosage, intensity, or duration. The concept of dose–response also applies to non-drug exposures. For example, in an SR of nutritional counseling to encourage a healthy diet, dose was measured as “the number and length of counseling contacts, the magnitude and complexity of educational materials provided, and the use of supplemental intervention elements, such as support groups sessions or cooking classes” (Ammerman et al., 2002, p. 6). Care needs to be exercised in the interpretation of dose–response relationships that are defined across, rather than within, studies. Cross-study comparisons of different “doses” may reflect other differences among studies in addition to dose; that is, dose may be confounded with other study characteristics, the populations included, or other aspects of the intervention.

The absence of a dose–response effect, in the observed range of doses, does not rule out a true causal relationship. For example, drugs are not always available in a wide range of doses. In some instances, any dose above a particular threshold may be sufficient for effectiveness.
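A cross-study dose–response pattern can be examined with a simple inverse-variance weighted regression of each study's effect estimate on its dose. The Python sketch below is one minimal way to do this, assuming one dose value, one effect estimate, and one standard error per study; the function name `weighted_dose_slope` and the example numbers are hypothetical. It does not adjust for the cross-study confounding cautioned against above, so a nonzero slope is a prompt for scrutiny rather than proof of a dose effect.

```python
# Hypothetical sketch: weighted least-squares slope of effect on dose.

def weighted_dose_slope(doses, effects, std_errors):
    """Return (slope, intercept) from an inverse-variance weighted fit."""
    if not (len(doses) == len(effects) == len(std_errors)) or len(doses) < 2:
        raise ValueError("need matching lists with at least two studies")
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    # Weighted means of dose and effect.
    x_bar = sum(w * x for w, x in zip(weights, doses)) / total
    y_bar = sum(w * y for w, y in zip(weights, effects)) / total
    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(weights, doses))
    if sxx == 0:
        raise ValueError("all studies used the same dose")
    sxy = sum(w * (x - x_bar) * (y - y_bar)
              for w, x, y in zip(weights, doses, effects))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


if __name__ == "__main__":
    # Illustrative values: number of counseling contacts and effect size.
    contacts = [1, 3, 6, 10]
    effect = [0.05, 0.12, 0.20, 0.31]
    se = [0.06, 0.05, 0.07, 0.09]
    slope, intercept = weighted_dose_slope(contacts, effect, se)
    print(f"change in effect per additional contact: {slope:.3f}")
```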

Plausible Confounding That Would Change an Observed Effect

Although controlled trials generally minimize confounding by randomizing subjects to intervention and control groups, observational studies are particularly prone to selection bias, especially when there is little or no adjustment for potential confounding factors among comparison groups (Norris et al., 2010). This characteristic of quality refers to the extent to which systematic differences in baseline characteristics, prognostic factors, or co-occurring interventions among comparison groups may reduce or increase an observed effect. Generally, confounding results in effect sizes that are overestimated. However, sometimes, particularly in observational studies, confounding factors may lead to an underestimation of the effect of an intervention. If the confounding variables were not present, the measured effect would have been even larger. The AHRQ and GRADE systems use the term “plausible confounding that would decrease observed effect” to describe such situations. The GRADE Handbook provides the following examples (Schünemann et al., 2009, p. 125):

- A rigorous systematic review of observational studies including a total of 38 million patients demonstrated higher death rates in private for-profit versus private not-for-profit hospitals (Devereaux et al., 2004). One possible bias relates to different disease severity in patients in the two hospital types. It is likely, however, that patients in the not-for-profit hospitals were sicker than those in the for-profit hospitals. Thus, to the extent that residual confounding existed, it would bias results against the not-for-profit hospitals. The second likely bias was the possibility that higher numbers of patients with excellent private insurance coverage could lead to a hospital having more resources and a spill-over effect that would benefit those without such coverage. Since for-profit hospitals are [more] likely to admit a larger proportion of such well-insured patients than not-for-profit hospitals, the bias is once again against the not-for-profit hospitals. Because the plausible biases would all diminish the demonstrated intervention effect, one might consider the evidence from these observational studies as moderate rather than low quality.

- A parallel situation exists when observational studies have failed to demonstrate an association but all plausible biases would have increased an intervention effect. This situation will usually arise in the exploration of apparent harmful effects. For example, because the hypoglycemic drug phenformin causes lactic acidosis, the related agent metformin is under suspicion for the same toxicity. Nevertheless, very large observational studies have failed to demonstrate an association (Salpeter et al., 2004). Given the likelihood that clinicians would be more alert to lactic acidosis in the presence of the agent and overreport its occurrence, one might consider this moderate, or even high-quality evidence refuting a causal relationship between typical therapeutic doses of metformin and lactic acidosis.

Strength of Association

Because observational studies are subject to many confounding factors (e.g., patients’ health status, demographic characteristics) and greater risk of bias compared to controlled trials, the design, execution, and statistical analyses in each study should be assessed carefully to determine the influence of potential confounding factors on the observed effect. Strength of association refers to the likelihood that a large observed effect in an observational study is not due to bias from potential confounding factors.

Evidence on Assessment Methods Is Elusive

Applying the above concepts in a systematic way across multiple interventions and numerous outcomes is clearly challenging. Although many SR experts agree on the concepts that should underpin the assessment of the quality of a body of evidence, the committee did not find any research to support existing methods for applying these basic concepts systematically, such as the GRADE and AHRQ approaches. The GRADE Working Group reports that 50 organizations have either endorsed or are using an adapted version of their system (GRADE Working Group, 2010). However, the reliability and validity of the GRADE and AHRQ methods have not been evaluated, and not much literature assesses other approaches. Furthermore, many GRADE users are apparently selecting aspects of the system to suit their needs rather than adopting the entire method. The AHRQ method is one adaptation.

The committee heard considerable anecdotal evidence suggesting that many SR producers and users had difficulty using GRADE. Some organizations seem reluctant to adopt a new, more complex system that has not been sufficiently evaluated. Others are concerned that GRADE is too time consuming and difficult to implement. There are also complaints about the method’s subjectivity. GRADE advocates acknowledge that the system does not eliminate subjectivity, but argue that a strength of the system is that, unlike other approaches, it makes transparent any judgments or disagreements about evidence (Brozek et al., 2009).

RECOMMENDED STANDARD FOR ASSESSING AND DESCRIBING THE QUALITY OF A BODY OF EVIDENCE

The committee recommends the following standard for assessing and describing the quality of a body of evidence. As noted earlier, this overall assessment should be done once the qualitative and quantitative syntheses are completed (see Standards 4.2–4.4 below). The order of this chapter’s standards does not indicate the sequence in which the various steps should be conducted. Standard 4.1 is presented first to reflect the committee’s recommendation that the SR specifies its methods a priori in the research protocol.7

Standard 4.1—Use a prespecified method to evaluate the body of evidence

Required elements:

4.1.1 For each outcome, systematically assess the following characteristics of the body of evidence:

- Risk of bias
- Consistency
- Precision
- Directness
- Reporting bias

4.1.2 For bodies of evidence that include observational research, also systematically assess the following characteristics for each outcome:

- Dose–response association
- Plausible confounding that would change an observed effect
- Strength of association

4.1.3 For each outcome specified in the protocol, use consistent language to characterize the level of confidence in the estimates of the effect of an intervention

Rationale

If an SR is to be objective, it should use prespecified, analytic methods. If the SR’s assessment of the quality of a body of evidence is to be credible and true to scientific principles, it should be based on agreed-on concepts of study quality. If the SR is to be comprehensible, it should use unambiguous language, free from jargon, to describe the quality of evidence for each outcome. Decision makers—whether clinicians, patients, or others—should not have to decipher undefined and possibly conflicting terms and symbols in order to understand the methods and findings of SRs.

7 See Chapter 2 for the committee’s recommended standards for developing the SR research protocol.

Clearly, the assessment of the quality of a body of evidence—for each outcome in the SR—must incorporate multiple dimensions of study quality. Without a sound conceptual framework for scrutinizing the body of evidence, the SR can lead to the wrong conclusions about an intervention’s effectiveness, with potentially serious implications for clinical practice.

The lack of an evidence-based system for assessing and characterizing the quality of a body of evidence is clearly problematic. A plethora of systems are in use, none have been evaluated, and all have their proponents and critics. The committee’s recommended quality characteristics are well-established concepts for evaluating quality; however, the SR field needs unambiguous, jargon-free language for systematically applying these concepts. GRADE merits consideration, but should be rigorously evaluated before it becomes a required component of SRs in the United States. Until a well-validated standard language is developed, SR authors should use their chosen lexicon and provide clear definitions of their terms.

QUALITATIVE SYNTHESIS OF THE BODY OF EVIDENCE

As noted earlier, the term “synthesis” refers to the collation, combination, and summary of the results of an SR. The committee uses the term “qualitative synthesis” to refer to an assessment of the body of evidence that goes beyond factual descriptions or tables that, for example, simply detail how many studies were assessed, the reasons for excluding other studies, the range of study sizes and treatments compared, or quality scores of each study as measured by a risk of bias tool. While an accurate description of the body of evidence is essential, it is not sufficient (Atkins, 2007; Mulrow and Lohr, 2001).

The primary focus of the qualitative synthesis should be to develop and to convey a deeper understanding of how an intervention works, for whom, and under what circumstances. The committee identified nine key purposes of the qualitative synthesis (Table 4-2).

TABLE 4-2 Key Purposes of the Qualitative Synthesis

| Purpose | Relevant Content in the Systematic Review (SR) |
|---|---|
| To orient the reader to the clinical landscape | A description of the clinical environment in which the research was conducted. It should enable the reader to grasp the relevance of the body of evidence to specific patients and clinical circumstances. It should describe the settings in which care was provided, how the intervention was delivered, by whom, and to whom. |
| To describe what actually happened to subjects during the course of the studies | A description of the actual care and experience of the study participants (in contrast with the original study protocol). |
| To critique the strengths and weaknesses of the body of evidence | A description of the strengths and weaknesses of the individual studies’ design and execution, including their common features and differences. It should highlight well-designed and executed studies, contrasting them with others, and include an assessment of the extent to which the risk of bias affects summary estimates of the intervention’s effect. It should also include a succinct summary of the issues that lead to the use of particular adjectives (e.g., “poor,” “fair,” “low quality,” “high risk of bias,” etc.) in describing the quality of the evidence. |
| To identify differences in the design and execution of the individual studies that explain why their results differ | An examination of how heterogeneity in the treatment’s effects may be due to clinical differences in the study population (e.g., demographics, coexisting conditions, or treatments) as well as methodological differences in the studies’ designs. |
| To describe how the design and execution of the individual studies affect their relevance to real-world clinical settings | A description of the applicability of the studies’ health conditions, patient population, intervention, comparators, and health outcomes to the SR research question. It should also address how adherence of patients and providers may limit the applicability of the results. For example, the use of prescribed medications, as directed, may differ substantially between patients in the community compared with study participants. |
| To integrate the general summary of the evidence and the subgroup analyses based on setting and patient populations | For each important outcome, an overview of the nested subgroup analyses, as well as a presentation of the overall summary and assessment of the evidence. |
| To call attention to patient populations that have been inadequately studied or for whom results differ | A description of important patient subgroups (e.g., by comorbidity, age, gender, race, or ethnicity) that are unaddressed in the body of evidence. |
| To interpret and assess the robustness of the meta-analysis results | A clear synthesis of the evidence that goes beyond presentation of summary statistics. The summary statistics should not dominate the discussion; instead, the synthesis of the evidence should be carefully articulated, using the summary statistics to support the key conclusions. |
| To describe how the SR findings contrast with conventional wisdom | Sometimes commonly held notions about an intervention or a type of study design are not supported by the body of evidence. If this occurs, the qualitative synthesis should clearly explain how the SR findings differ from the conventional wisdom. |

If crafted to inform clinicians, patients, and other decision makers, the qualitative synthesis would enable the reader to judge the relevance and validity of the body of evidence for specific clinical decisions and circumstances. Guidance from the Editors of Annals of Internal Medicine is noteworthy:

We are disappointed when a systematic review simply lists the characteristics and findings of a series of single studies without attempting, in a sophisticated and clinically meaningful manner, to discover the pattern in a body of evidence. Although we greatly value meta-analyses, we look askance if they seem to be mechanistically produced without careful consideration of the appropriateness of pooling results or little attempt to integrate the finds into the contextual background. We want all reviews, including meta-analyses to include rich qualitative synthesis. (Editors, 2005, p. 1019)

Judgments and Transparency Are Key

Although the qualitative synthesis of CER studies should be based in systematic and scientifically rigorous methods, it nonetheless involves numerous judgments—judgments about the relevance, legitimacy, and relative uncertainty of some aspects of the evidence; the implications of missing evidence (a commonplace occurrence); the soundness of technical methods; and the appropriateness of conducting a meta-analysis (Mulrow et al., 1997). Such judgments may be inherently subjective, but they are always valuable and essential to the SR process. If the SR team approaches the literature from an open-minded perspective, team members are uniquely positioned to discover and describe patterns in a body of evidence that can yield a deeper understanding of the underlying science and help readers to interpret the findings of the quantitative synthesis (if conducted). However, the SR team should exercise extreme care to keep such discussions appropriately balanced and, whenever possible, driven by the underlying data.

RECOMMENDED STANDARDS FOR QUALITATIVE SYNTHESIS

The committee recommends the following standard and elements of performance for conducting the qualitative synthesis.

4.2.2 Describe the strengths and limitations of individual studies and patterns across studies

4.2.3 Describe, in plain terms, how flaws in the design or execution of the study (or groups of studies) could bias the results, explaining the reasoning behind these judgments

4.2.4 Describe the relationships between the characteristics of the individual studies and their reported findings and patterns across studies

4.2.5 Discuss the relevance of individual studies to the populations, comparisons, cointerventions, settings, and outcomes or measures of interest

Rationale

The qualitative synthesis is an often undervalued component of an SR. Many SRs lack a qualitative synthesis altogether or simply provide a nonanalytic recitation of the facts (Atkins, 2007). Patients, clinicians, and others should feel confident that SRs accurately reflect what is known and not known about the effects of a healthcare intervention. To give readers a clear understanding of how the evidence applies to real-world clinical circumstances and specific patient populations, SRs should describe—in easy-to-understand language—the clinical and methodological characteristics of the individual studies, including their strengths and weaknesses and their relevance to particular populations and clinical settings.

META-ANALYSIS

This section of the chapter presents the background and rationale for the committee’s recommended standards for conducting a meta-analysis: first, considering the issues that determine whether a meta-analysis is appropriate, and second, exploring the fundamental considerations in undertaking a meta-analysis. A detailed description of meta-analysis methodology is beyond the scope of this report; however, excellent reference texts are available (Borenstein, 2009; Cooper et al., 2009; Egger et al., 2001; Rothstein et al., 2005; Sutton et al., 2000). This discussion draws from these sources as well as guidance from the AHRQ Effective Health Care Program, CRD, and the Cochrane Collaboration (CRD, 2009; Deeks et al., 2008; Fu et al., 2010).

Meta-analysis is the statistical combination of results from multiple individual studies. Meta-analytic techniques have been used for more than a century for a variety of purposes (Sutton and Higgins, 2008). The nomenclature for SRs and meta-analysis has evolved over time. Although often used as a synonym for SR in the past, meta-analysis has come to mean the quantitative analysis of data in an SR. As noted earlier, the committee views “meta-analysis” as a broad term that encompasses a wide variety of methodological approaches whose goal is to quantitatively synthesize and summarize data across a set of studies. In the context of CER, meta-analyses are undertaken to combine and summarize existing evidence comparing the effectiveness of multiple healthcare interventions (Fu et al., 2010). Typically, the objective of the analysis is to increase the precision and power of the overall estimated effect of an intervention by producing a single pooled estimate, such as an odds ratio. In CER, large numbers are often required to detect what may be modest or even small treatment effects. Many studies are themselves too small to yield conclusive results. By combining the results of multiple studies in a meta-analysis, the increased number of study participants can reduce random error, improve precision, and increase the likelihood of detecting a real effect (CRD, 2009).

Fundamentally, a meta-analysis provides a weighted average of treatment effects from the studies in the SR. While varying in details, the weights are set up so that the most informative studies have the greatest impact on the average. While the term “most informative” is vague, it is usually expressed in terms of the sample size and precision of the study. The largest and most precisely estimated studies receive the greatest weights. In addition to an estimate of the average effect, a measure of the uncertainty of this estimate that reflects random variation is necessary for a proper summary.
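The weighted average described above can be sketched in a few lines. The example below is a minimal illustration, not a method prescribed by the committee: it assumes inverse-variance weights (one common way of expressing "most informative"), a normal approximation for the 95 percent confidence interval, and hypothetical log odds ratios and standard errors for three studies. The function name `pooled_fixed_effect` is introduced here for illustration only.

```python
import math


def pooled_fixed_effect(effects, std_errors):
    """Inverse-variance weighted average of study effects.

    Each study is weighted by 1 / SE^2, so larger and more precisely
    estimated studies contribute more to the pooled estimate.
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    # Normal-approximation 95% interval (an assumption of this sketch).
    ci = (estimate - 1.96 * pooled_se, estimate + 1.96 * pooled_se)
    return estimate, pooled_se, ci


# Hypothetical log odds ratios and standard errors (illustrative only).
log_or = [-0.40, -0.10, -0.25]
se = [0.20, 0.10, 0.40]

est, pooled_se, (lo, hi) = pooled_fixed_effect(log_or, se)
print(f"pooled log OR = {est:.3f}, SE = {pooled_se:.3f}, 95% CI ({lo:.3f}, {hi:.3f})")
```

In this sketch the second study, which has the smallest standard error, dominates the average, and the pooled standard error is smaller than that of any single study.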

In many circumstances, CER meta-analyses focus on the average effect of the difference between two treatments across all studies, reflecting the common practice in RCTs of providing a single number summary. While a meta-analysis is itself a nonrandomized study, even if the individual studies in the SR are themselves randomized, it can fill a confirmatory or an exploratory role (Anello and Fleiss, 1995). Although it has been underused for this purpose, meta-analysis is a valuable tool for assessing the pattern of results across studies and for identifying the need for primary research (CRD, 2009; Sutton and Higgins, 2008).

In other circumstances, individual studies in SRs of more than two treatments evaluate different subsets of treatments so that direct, head-to-head comparisons between two treatments of interest, for example, are limited. Treatment networks allow indirect comparisons in which the two treatments are each compared to a common third treatment (e.g., a placebo). The indirect treatment estimate then consists of the difference between the two comparisons with the common treatment. The network is said to be consistent if the indirect estimates are the same as the direct estimates (Lu and Ades, 2004). Consistency is most easily tested when some studies test all three treatments. Finding consistency increases confidence that the estimated effects are valid. Inconsistency suggests a bias in either or both of the indirect or direct estimates. While the direct estimate is often preferred, bias in the design of the direct comparison studies may suggest that the indirect estimate is better (Salanti et al., 2010). Proper consideration of indirect evidence requires that the full network be considered. This facilitates determining which treatments work best for which reported outcomes.
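A simple three-treatment case illustrates the arithmetic of an indirect comparison. The sketch below assumes treatments A and B have each been compared with a common comparator C on the same effect scale (for example, log odds ratios), that the two sets of studies are independent so their variances add, and that a rough z statistic is an acceptable first look at consistency. All numbers and function names are hypothetical; a full network analysis would require more than this.

```python
import math


def indirect_estimate(d_ac, se_ac, d_bc, se_bc):
    """Indirect A-versus-B effect via common comparator C.

    The estimate is the difference of the two comparisons with C;
    the variances of the two independent comparisons add.
    """
    d_ab = d_ac - d_bc
    se_ab = math.sqrt(se_ac ** 2 + se_bc ** 2)
    return d_ab, se_ab


def inconsistency_z(d_direct, se_direct, d_indirect, se_indirect):
    """z statistic for the gap between direct and indirect estimates."""
    gap = d_direct - d_indirect
    return gap / math.sqrt(se_direct ** 2 + se_indirect ** 2)


# Hypothetical pooled log odds ratios (illustrative only).
d_ab_ind, se_ab_ind = indirect_estimate(d_ac=-0.50, se_ac=0.15, d_bc=-0.20, se_bc=0.20)
z = inconsistency_z(d_direct=-0.35, se_direct=0.30,
                    d_indirect=d_ab_ind, se_indirect=se_ab_ind)
print(f"indirect A vs B = {d_ab_ind:.2f} (SE {se_ab_ind:.2f}), inconsistency z = {z:.2f}")
```

Note that the indirect estimate is less precise than either of the comparisons it is built from, which is one reason a small inconsistency statistic should not be read as proof of consistency.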

Many clinical readers view meta-analyses as confirmatory summaries that resolve conflicting evidence from previous studies. In this role, all the potential decision-making errors in clinical trials (e.g., Type 1 and Type 2 errors or excessive subgroup analyses)8 apply to meta-analyses as well. However, in an exploratory role, meta-analysis may be more useful as a means to explore heterogeneity among study findings, recognize types of patients who might differentially benefit from (or be harmed by) treatment or treatment protocols that may work more effectively, identify gaps in knowledge, and suggest new avenues for research (Lau et al., 1998). Many of the methodological developments in meta-analysis in recent years have been motivated by the desire to use the information available from a meta-analysis for multiple purposes.

When Is Meta-Analysis Appropriate?

Meta-analysis has the potential to inform and explain, but it also has the potential to mislead if, for example, the individual studies are not similar, are biased, or publication or reporting biases are large (Deeks et al., 2008). A meta-analysis should not be assumed to always be an appropriate step in an SR. The decision to conduct a meta-analysis is neither purely analytical nor statistical in nature. It will depend on a number of factors, such as the availability of suitable data and the likelihood that the analysis could inform clinical decision making. Ultimately, it is a subjective judgment that should be made in consultation with the entire SR team, including both clinical and methodological perspectives. For purposes of transparency, the review team should clearly explain the rationale for each subjective determination (Fu et al., 2010).

Data Considerations

Conceptually a meta-analysis may make sense, and the studies may appear sufficiently similar, but without unbiased data that are in (or may be transformed into) similar metrics, the meta-analysis simply may not be feasible. There is no agreed-on definition of “similarity” with respect to CER data. Experts agree that similarity should be judged across three dimensions (Deeks et al., 2008; Fu et al., 2010): First, are the studies clinically similar, with comparable study population characteristics, interventions, and outcome measures? Second, are the studies alike methodologically in study design, conduct, and quality? Third, are the observed treatment effects statistically similar? All three of these questions should be considered before deciding a meta-analysis is appropriate.

Many meta-analyses use aggregate summary data for the comparison groups in each trial. Meta-analysis can be much more powerful when outcome, treatment, and patient data—individual patient data (IPD)—are available from individual patients. IPD, the raw data for each study participant, permit data cleaning and harmonization of variable definitions across studies as well as reanalysis of primary studies so that they are more readily combined (e.g., clinical measurement reported at a common time). IPD also allow valid analyses for effect modification by factors that change at the patient level, such as age and gender, for which analyses of aggregate data are susceptible to ecological bias (Berlin et al., 2002; Schmid et al., 2004). By permitting individual modeling in each study, IPD also focus attention on study-level differences that may contribute to heterogeneity of treatment effects across studies. When IPD are available from only some of the studies in the meta-analysis, they can be analyzed together with summary data from the other studies (Riley and Steyerberg, 2010). The IPD inform the individual-level effects and both types of data inform the study-level effects. The increasing availability of data repositories and registries may make this hybrid modeling the norm in the future.

Advances in health information technology, such as electronic health records (EHRs) and disease registries, promise new sources of evidence on the effectiveness of health interventions. As these data sources become more readily accessible to investigators, they are likely to supplement or even replace clinical trials data in SRs of CER. As with other data sources, however, the potential for bias and confounding will need to be addressed.

The Food and Drug Administration Sentinel Initiative and related activities (e.g., Observational Medical Outcomes Partnership) may be an important new data source for future SRs. When operational, the Sentinel Initiative will be a national, integrated, electronic database built on EHRs and claims records databases for as many as 100 million individuals (HHS, 2010; Platt et al., 2009). Although the principal objective of the system is to detect adverse effects of drugs and other medical products, it may also be useful for SRs of CER questions. A “Mini-Sentinel” pilot is currently under development at Harvard Pilgrim Health Care (Platt, 2010). The system will be a distributed network, meaning that separate data holders will contribute to the network, but the data will never be put into one common repository. Instead, all database holders will convert their data into a common data model and retain control over their own data. This allows a single “program” to be run (e.g., a statistical analysis in SAS) on all the disparate datasets, generating an estimated relative risk (or other measure) from each database. These then can be viewed as a type of meta-analysis.

Will the Findings Be Useful?

The fact that available data are conducive to pooling is not in itself sufficient reason to conduct a meta-analysis (Fu et al., 2010). The meta-analysis should not be undertaken unless the anticipated results are likely to produce meaningful answers that are useful to patients, clinicians, or other decision makers. For example, if the same outcomes are measured differently in the individual studies and the measures cannot be converted to a common scale, doing a meta-analysis may not be appropriate (Cummings, 2004). This situation may occur in studies comparing the effect of an intervention on a variety of important patient outcomes such as pain, mental health status, or pulmonary function.

Conducting the Meta-Analysis

Addressing Heterogeneity

Good statistical analyses quantify the amount of variability in the data in order to gauge the precision with which effects can be estimated. Large amounts of variability reduce our confidence that effects are accurately measured. In meta-analysis, variability arises from three sources—clinical diversity, methodological diversity, and statistical heterogeneity—which should be separately considered in presentation and discussion (Fu et al., 2010). Clinical diversity describes variability in study population characteristics, interventions, and outcome ascertainments. Methodological diversity encompasses variability in study design, conduct, and quality, such as blinding and concealment of allocation. Statistical heterogeneity, relating to the variability in observed treatment effects across studies, may occur because of random chance, but may also arise from real clinical and methodological diversity and bias.

Assessing the amount of variability is fundamental to determining the relevance of the individual studies to the SR’s research questions. It is also key to choosing which statistical model to use in the quantitative synthesis. Large amounts of variability may suggest a poorly formulated question or many sources of uncertainty that can influence effects. As noted above, if the individual studies are too diverse in terms of populations, interventions, comparators, outcomes, time lines, and/or settings, summary data will not yield clinically meaningful conclusions about the effect of an intervention for important subgroups of the population (West et al., 2010).

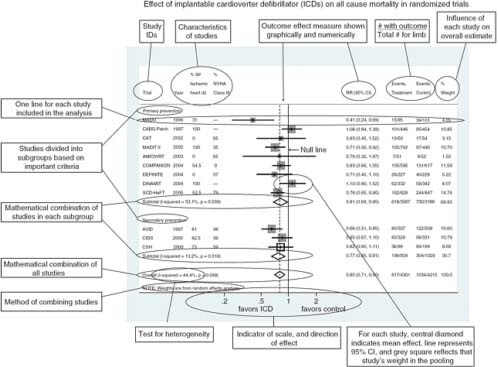

In general, quantifying heterogeneity helps determine whether and how the data may be combined, but specific tests of the presence of heterogeneity can be misleading and should not be used because of their poor statistical properties and because an assumption of complete homogeneity is nearly always unrealistic (Higgins et al., 2003). Graphical representations of among-study variation such as forest plots can be informative (Figure 4-1) (Anzures-Cabrera and Higgins, 2010).
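One widely used way to quantify, rather than test for, heterogeneity is the I² statistic, which expresses the share of the observed variation in study effects that exceeds what chance alone would produce. The sketch below is illustrative only: it assumes inverse-variance weights, uses hypothetical effect sizes, and introduces the function name `q_and_i2` for convenience. It is not presented as the committee's required approach.

```python
def q_and_i2(effects, std_errors):
    """Return Cochran's Q and the I-squared statistic.

    Q sums the weighted squared deviations of each study effect from
    the inverse-variance pooled effect. I^2 = max(0, (Q - df) / Q).
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return q, i2


# Hypothetical study effects and standard errors (illustrative only).
effects = [-0.60, -0.05, -0.30, 0.10]
ses = [0.15, 0.10, 0.20, 0.25]

q, i2 = q_and_i2(effects, ses)
print(f"Q = {q:.2f} on {len(effects) - 1} df, I^2 = {100 * i2:.0f}%")
```

With only a handful of studies, I² is itself imprecise, so it is best read alongside a forest plot rather than as a threshold for deciding whether to pool.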

When pooling is feasible, investigators typically use one of two statistical techniques—fixed-effects or random-effects models—to analyze and integrate the data, depending on the extent of heterogeneity. Each model has strengths and limitations. A fixed-effects model assumes that the treatment effect is the same for each study. A random-effects model assumes that some heterogeneity is present and acceptable, and the data can be pooled. Exploring the potential sources of heterogeneity may be more important than a decision about the use of fixed- or random-effects models. Although the committee does not believe that any single statistical technique should be a methodological standard, it is essential that the SR team clearly explain and justify the reasons why it chose the technique actually used.
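The practical difference between the two models can be seen by computing both on the same data. The sketch below uses the DerSimonian and Laird moment estimator of the between-study variance, which is one common choice among several and is an assumption of this example, not a method endorsed in the text. The data are hypothetical and the function name is introduced for illustration.

```python
import math


def dersimonian_laird(effects, std_errors):
    """Fixed-effect and random-effects pooled estimates.

    tau2 is the DerSimonian-Laird moment estimate of between-study
    variance; random-effects weights are 1 / (SE^2 + tau2).
    """
    if len(effects) < 2:
        raise ValueError("at least two studies are required")
    variances = [se ** 2 for se in std_errors]
    w = [1.0 / v for v in variances]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)
    w_star = [1.0 / (v + tau2) for v in variances]
    sw_star = sum(w_star)
    random_est = sum(wi * yi for wi, yi in zip(w_star, effects)) / sw_star
    return {
        "fixed": fixed,
        "fixed_se": math.sqrt(1.0 / sw),
        "random": random_est,
        "random_se": math.sqrt(1.0 / sw_star),
        "tau2": tau2,
    }


# Hypothetical study effects and standard errors (illustrative only).
result = dersimonian_laird([-0.60, -0.05, -0.30, 0.10], [0.15, 0.10, 0.20, 0.25])
for name, value in result.items():
    print(f"{name}: {value:.4f}")
```

When the estimated between-study variance is positive, the random-effects weights are more nearly equal across studies and the pooled standard error is larger, so the choice of model can change both the estimate and its precision. This is why the rationale for the choice should be reported.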

Statistical Uncertainty

In meta-analyses, the amount of within- and between-study variation determines how precisely study and aggregate treatment effects are estimated. Estimates of effects without accompanying measures of their uncertainty, such as confidence intervals, cannot be correctly interpreted. A forest plot can provide a succinct representation of the size and precision of individual study effects and aggregated effects. When effects are heterogeneous, more than one summary effect may be necessary to fully describe the data. Measures of uncertainty should also be presented for estimates of heterogeneity and for statistics that quantify relationships between treatment effects and sources of heterogeneity.

Between-study heterogeneity is common in meta-analysis because studies differ in their protocols, target populations, settings, and ages of included subjects. This type of heterogeneity provides evidence about potential variability in treatment effects. Therefore, heterogeneity is not a nuisance or an undesirable feature, but rather an important source of information to be carefully analyzed (Lau et al., 1998). Instead of eliminating heterogeneity by restricting study inclusion criteria or scope, which can limit the utility of the review, heterogeneity of effect sizes can be quantified, and related to aspects of study populations or design features through statistical techniques such as meta-regression, which associates the size of treatment effects with effect modifiers. Meta-regression is most useful in explaining variation that occurs from sources that have no effect within studies, but big effects among studies (e.g., use of randomization or dose employed). Except in rare cases, meta-regression analyses are exploratory, motivated by the need to explain heterogeneity, and not by prespecification in the protocol. Meta-regression is observational in nature, and if the results of meta-regression are to be considered valid, they should be clinically plausible and supported by other external evidence. Because the number of studies in a meta-regression is often small, the technique has low power. The technique is subject to spurious findings because many potential covariates may be available, and adjustments to levels of significance may be necessary (Higgins and Thompson, 2004). Users should also be careful of relationships driven by anomalies in one or two studies. Such influential data do not provide solid evidence of strong relationships.
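As a rough illustration of meta-regression, the sketch below fits a weighted least squares line relating study effect size to a single study-level covariate. It makes several simplifying assumptions that should be stated plainly: weights are inverse variances (residual between-study variance is ignored), there is one covariate, and the data, including the "dose" values, are hypothetical. With so few studies, any slope from such a fit is exploratory at best.

```python
import math


def meta_regression(effects, std_errors, covariate):
    """Weighted least squares of study effect on one study-level covariate.

    Weights are 1 / SE^2, a fixed-effect simplification. Returns the
    intercept, the slope, and the standard error of the slope.
    """
    w = [1.0 / se ** 2 for se in std_errors]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, covariate)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, covariate))
    if sxx == 0:
        raise ValueError("covariate must vary across studies")
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar)
              for wi, xi, yi in zip(w, covariate, effects))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    se_slope = math.sqrt(1.0 / sxx)
    return intercept, slope, se_slope


# Hypothetical data: log relative risks at four study doses (illustrative only).
dose = [10.0, 20.0, 40.0, 80.0]
log_rr = [-0.05, -0.12, -0.20, -0.45]
se = [0.10, 0.12, 0.15, 0.20]

intercept, slope, se_slope = meta_regression(log_rr, se, dose)
print(f"slope per unit dose = {slope:.4f} (SE {se_slope:.4f}), intercept = {intercept:.3f}")
```

Refitting after dropping each study in turn is a quick way to see whether an apparent relationship rests on one or two anomalous studies.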

Research Trends in Meta-Analysis

As mentioned previously, a detailed discussion of meta-analysis methodology is beyond the scope of this report. There are many unresolved questions regarding meta-analysis methods. Fortunately, meta-analysis methodological research is vibrant and ongoing. Box 4-4 describes some of the research trends in meta-analysis and provides relevant references for the interested reader.

Sensitivity of Conclusions

Meta-analysis entails combining information from different studies; thus, the data may come from very different study designs. A small number of studies in conjunction with a variety of study designs contribute to heterogeneity in results. Consequently, verifying that conclusions are robust to small changes in the data and to changes in modeling assumptions solidifies the belief that they are robust to new information that could appear. Without a sensitivity analysis, the credibility of the meta-analysis is reduced.

Results are considered robust if small changes in the meta-analytic protocol, in modeling assumptions, and in study selection do not affect the conclusions. Robust estimates increase confidence in the SR’s findings. Sensitivity analyses subject conclusions to such tests by perturbing these characteristics in various ways.

The sensitivity analysis could, for example, assess whether the results change when the meta-analysis is rerun leaving one study out at a time. One statistical test for stability is to check that the predictive distribution of a new study from a meta-analysis with one of the studies omitted would include the results of the omitted study (Deeks et al., 2008). Failure to meet this criterion implies that the result of the omitted study is unexpected given the remaining studies. Another common criterion is to determine whether the estimated average treatment effect changes substantially upon omission of one of the studies. A common definition of substantial involves change in the determination of statistical significance of the summary effect, although this definition is problematic because a significance threshold may be crossed with an unimportant change in the magnitude or precision of the effect (i.e., loss of statistical significance may result from omission of a large study that reduces the precision, but not the magnitude, of the effect).
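A leave-one-out analysis of the kind described above can be sketched as follows. The example assumes inverse-variance (fixed-effect) pooling and a normal-approximation 95 percent interval, and it reports the shift in the pooled estimate and its interval, not a change in statistical significance, for the reason given in the preceding paragraph. The data and function names are hypothetical.

```python
import math


def pool(effects, std_errors):
    """Inverse-variance pooled estimate and its standard error."""
    w = [1.0 / se ** 2 for se in std_errors]
    sw = sum(w)
    est = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    return est, math.sqrt(1.0 / sw)


def leave_one_out(effects, std_errors):
    """Re-pool the data with each study omitted in turn.

    Returns a list of (omitted_index, estimate, ci_low, ci_high).
    """
    results = []
    for i in range(len(effects)):
        kept_effects = effects[:i] + effects[i + 1:]
        kept_ses = std_errors[:i] + std_errors[i + 1:]
        est, se = pool(kept_effects, kept_ses)
        results.append((i, est, est - 1.96 * se, est + 1.96 * se))
    return results


# Hypothetical study effects and standard errors (illustrative only).
effects = [-0.60, -0.05, -0.30, 0.10]
ses = [0.15, 0.10, 0.20, 0.25]

full_est, full_se = pool(effects, ses)
results = leave_one_out(effects, ses)
print(f"all studies: {full_est:.3f}")
for i, est, lo, hi in results:
    print(f"omit study {i}: {est:.3f} ({lo:.3f}, {hi:.3f}), shift {est - full_est:+.3f}")
```

A large shift when a single study is omitted signals that the conclusion depends heavily on that study and deserves discussion in the qualitative synthesis.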

In addition to checking sensitivity to inclusion of single studies, it is important to evaluate the effect of changes in the protocol that may alter the composition of the studies in the meta-analysis. Changes to the inclusion and exclusion criteria—such as the inclusion of non-English literature or the exclusion of studies that enroll some participants not in the target population or the focus on studies with low risk of bias—may all modify results sufficiently to question robustness of inferences.

|

BOX 4-4
Research Trends in Meta-Analysis

Meta-analytic research is a dynamic and rapidly changing field. The following describes key areas of research with recommended citations for additional reading:

Prospective meta-analysis: In this approach, studies are identified and evaluated prior to the results of any individual studies being known. Prospective meta-analysis (PMA) allows selection criteria and hypotheses to be defined a priori to the trials being concluded. PMA can implement standardization across studies so that heterogeneity is decreased. In addition, small studies that lack statistical power individually can be conducted if large studies are not feasible. See for example: Berlin and Ghersi, 2004, 2005; Ghersi et al., 2008; The Cochrane Collaboration, 2010.

Meta-regression: In this method, potential sources of heterogeneity are represented as predictors in a regression model, thereby enabling estimation of their relationship with treatment effects. Such analyses are exploratory in the majority of cases, motivated by the need to explain heterogeneity. See for example: Schmid et al., 2004; Smith et al., 1997; Sterne et al., 2002; Thompson and Higgins, 2002.

Bayesian methods in meta-analysis: In these approaches, as in Bayesian approaches in other settings, both the data and parameters in the meta-analytic model are considered random variables. This approach allows the incorporation of prior information into subsequent analyses, and may be more flexible in complex situations than standard methodologies. See for example: Berry et al., 2010; O’Rourke and Altman, 2005; Schmid, 2001; Smith et al., 1995; Sutton and Abrams, 2001; Warn et al., 2002.

Meta-analysis of multiple treatments: In this setting, direct treatment comparisons are not available, but an indirect comparison through a common comparator is. Multiple treatment models, also called mixed comparison models or network meta-analysis, may be used to more efficiently model treatment comparisons of interest. See for example: Cooper et al., 2009; Dias et al., 2010; Salanti et al., 2009.

Individual participant data meta-analysis: In some cases, study data may include outcomes, treatments, and characteristics of individual participants. Meta-analysis with such individual participant data (IPD) offers many advantages over meta-analysis of aggregate study-level data. See for example: Berlin et al., 2002; Simmonds et al., 2005; Smith et al., 1997; Sterne et al., 2002; Stewart, 1995; Thompson and Higgins, 2002; Tierney et al., 2000.
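As an illustration of the indirect comparison through a common comparator mentioned in Box 4-4, the following minimal sketch estimates the effect of treatment A versus treatment B from two sets of trials that each used comparator C. It uses the simple adjusted indirect comparison (difference of the two effects, sum of the two variances), which is only one of several possible approaches and is far simpler than the network models cited in the box. The function name and all numbers are hypothetical, and the sketch assumes the two estimates are independent and on an additive scale such as the log odds ratio.

```python
import math

def indirect_comparison(d_ac, se_ac, d_bc, se_bc):
    """Estimate A vs. B from A vs. C and B vs. C (common comparator C).

    Assumes independent estimates on an additive scale (e.g., log odds ratio).
    Returns the point estimate, its standard error, and a 95% interval.
    """
    d_ab = d_ac - d_bc
    # Variances add, so the indirect estimate is less precise than either input.
    se_ab = math.sqrt(se_ac ** 2 + se_bc ** 2)
    interval = (d_ab - 1.96 * se_ab, d_ab + 1.96 * se_ab)
    return d_ab, se_ab, interval

# Hypothetical pooled log odds ratios and standard errors.
d_ab, se_ab, interval = indirect_comparison(d_ac=-0.50, se_ac=0.15,
                                            d_bc=-0.20, se_bc=0.20)
print(f"A vs. B: {d_ab:.2f} (SE {se_ab:.2f}), "
      f"95% CI {interval[0]:.2f} to {interval[1]:.2f}")
```

The wider interval of the indirect estimate relative to either input is one reason such comparisons call for cautious interpretation.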

Another good practice is to evaluate sensitivity to choices about outcome metrics and statistical models. While one metric and one model may in the end be chosen as best for scientific reasons, results that are highly model dependent require more trust in the modeler and may be more prone to being overturned with new data. In any case, support for the metrics and models chosen should be provided.
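One way to examine sensitivity to the choice of statistical model is to pool the same studies under two common models and compare the results. The sketch below contrasts an inverse-variance fixed-effect estimate with a DerSimonian-Laird random-effects estimate. These two models are chosen here only for illustration; the data are hypothetical log odds ratios, and the function names are not drawn from any particular library.

```python
import math

def pool_fixed(effects, variances):
    """Inverse-variance fixed-effect pooled estimate and its standard error."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total
    return estimate, math.sqrt(1.0 / total)

def pool_random_dl(effects, variances):
    """DerSimonian-Laird random-effects estimate, standard error, and tau^2."""
    if len(effects) < 2:
        raise ValueError("at least two studies are needed to estimate tau^2")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fixed, _ = pool_fixed(effects, variances)
    # Cochran's Q measures dispersion of study effects around the fixed estimate.
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, effects))
    c = total - sum(w * w for w in weights) / total
    tau2 = max(0.0, (q - (len(effects) - 1)) / c)
    # Between-study variance is added to each study's own variance.
    re_weights = [1.0 / (v + tau2) for v in variances]
    re_total = sum(re_weights)
    estimate = sum(w * y for w, y in zip(re_weights, effects)) / re_total
    return estimate, math.sqrt(1.0 / re_total), tau2

# Hypothetical log odds ratios and within-study variances.
log_or = [-0.60, -0.10, -0.45, 0.15, -0.30]
var = [0.04, 0.01, 0.09, 0.02, 0.06]

fixed_est, fixed_se = pool_fixed(log_or, var)
rand_est, rand_se, tau2 = pool_random_dl(log_or, var)

for label, est, se in [("fixed", fixed_est, fixed_se),
                       ("random", rand_est, rand_se)]:
    print(f"{label:>6}: {est:.3f} "
          f"(95% CI {est - 1.96 * se:.3f} to {est + 1.96 * se:.3f})")
```

If the two models lead to different conclusions, the result is model dependent in the sense described above, and the justification for the chosen model deserves explicit discussion.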

Meta-analyses are also frequently sensitive to assumptions about missing data. In meta-analysis, missing data include not only missing outcomes or predictors, but also missing variances and correlations needed when constructing weights based on study precision. As with any statistical analysis, missing data pose two threats: reduced power and bias. Because the number of studies is often small, loss of even a single study’s data can seriously affect the ability to draw conclusive inferences from a meta-analysis. Bias poses an even more dangerous problem. Seemingly conclusive analyses may give the wrong answer if studies that were excluded—because of missing data—differ from the studies that supplied the data. The conclusion that the treatment improved one outcome, but not another, may result solely from the different studies used. Interpreting such results requires care and caution.
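The effect of excluding studies with missing variances can be explored with a small sketch. Below, studies whose variances were not reported are either dropped (a complete-case analysis) or retained with an imputed variance. The imputation rule used here, assigning the largest reported variance, is purely an illustrative assumption and not a recommended method. All data are hypothetical.

```python
import math

def inverse_variance_pool(studies):
    """Pool (effect, variance) pairs with weights equal to 1 / variance."""
    weights = [1.0 / v for _, v in studies]
    total = sum(weights)
    estimate = sum(w * y for w, (y, _) in zip(weights, studies)) / total
    return estimate, math.sqrt(1.0 / total)

# Hypothetical (effect, variance) pairs; None marks an unreported variance.
reported = [(-0.40, 0.05), (-0.25, 0.03), (0.10, None),
            (-0.35, 0.08), (0.05, None)]

# Complete-case analysis: studies without a variance cannot be weighted.
complete = [(y, v) for y, v in reported if v is not None]

# Illustrative assumption: give missing variances the largest reported value.
largest = max(v for _, v in complete)
imputed = [(y, v if v is not None else largest) for y, v in reported]

cc_est, cc_se = inverse_variance_pool(complete)
imp_est, imp_se = inverse_variance_pool(imputed)

print(f"complete cases ({len(complete)} studies): {cc_est:.3f} (SE {cc_se:.3f})")
print(f"with imputation ({len(imputed)} studies): {imp_est:.3f} (SE {imp_se:.3f})")
```

In this constructed example the excluded studies differ from those that supplied variances, so the complete-case estimate is shifted relative to the analysis that retains them, which is the bias mechanism described in the preceding paragraph.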

RECOMMENDED STANDARDS FOR META-ANALYSIS

The committee recommends the following standards and elements of performance for conducting the quantitative synthesis.

Standard 4.3—Decide if, in addition to a qualitative analysis, the systematic review will include a quantitative analysis (meta-analysis)

Required element:

4.3.1 Explain why a pooled estimate might be useful to decision makers

Standard 4.4—If conducting a meta-analysis, then do the following:

Required elements:

4.4.1 Use expert methodologists to develop, execute, and peer review the meta-analyses

4.4.2 Address heterogeneity among study effects

4.4.3 Accompany all estimates with measures of statistical uncertainty

4.4.4 Assess the sensitivity of conclusions to changes in the protocol, assumptions, and study selection (sensitivity analysis)

Rationale

A meta-analysis is usually desirable in an SR because it provides reproducible summaries of the individual study results and has the potential to offer valuable insights into the patterns of results across studies. However, many published analyses have important methodological shortcomings and lack scientific rigor (Bailar, 1997; Gerber et al., 2007; Mullen and Ramirez, 2006). One must always look beyond the simple fact that an SR contains a meta-analysis to examine the details of how it was planned and conducted. A strong meta-analysis emanates from a well-conducted SR, identifies and clearly describes its subjective components, scrutinizes the individual studies for sources of heterogeneity, and tests the sensitivity of the findings to changes in the assumptions and set of studies (Greenland, 1994; Walker et al., 2008).
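Elements 4.4.2 through 4.4.4 can be illustrated with a minimal sketch that reports heterogeneity statistics, attaches a 95 percent confidence interval to each estimate, and repeats the analysis with each study removed in turn. Cochran's Q, the I² statistic, and leave-one-out analysis are used here as familiar examples only; the standards do not prescribe particular techniques. The data and function names are hypothetical.

```python
import math

def fixed_effect(effects, variances):
    """Inverse-variance pooled estimate and its standard error."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total
    return estimate, math.sqrt(1.0 / total)

def cochran_q_and_i2(effects, variances):
    """Cochran's Q and the I^2 statistic (percent), truncated at zero."""
    estimate, _ = fixed_effect(effects, variances)
    q = sum((y - estimate) ** 2 / v for y, v in zip(effects, variances))
    df = len(effects) - 1
    i2 = 100.0 * max(0.0, (q - df) / q) if q > 0 else 0.0
    return q, i2

def leave_one_out(effects, variances):
    """Re-pool after omitting each study; returns (index, estimate, low, high)."""
    rows = []
    for i in range(len(effects)):
        rest_y = effects[:i] + effects[i + 1:]
        rest_v = variances[:i] + variances[i + 1:]
        est, se = fixed_effect(rest_y, rest_v)
        rows.append((i, est, est - 1.96 * se, est + 1.96 * se))
    return rows

# Hypothetical log odds ratios and within-study variances.
log_or = [-0.60, -0.10, -0.45, 0.15, -0.30]
var = [0.04, 0.01, 0.09, 0.02, 0.06]

full_est, full_se = fixed_effect(log_or, var)
q, i2 = cochran_q_and_i2(log_or, var)
loo = leave_one_out(log_or, var)

print(f"all studies: {full_est:.3f} (SE {full_se:.3f}), Q = {q:.2f}, I2 = {i2:.0f}%")
for index, est, low, high in loo:
    print(f"omit study {index}: {est:.3f} (95% CI {low:.3f} to {high:.3f})")
```

A conclusion that changes when a single study is omitted signals that the findings are sensitive to study selection and should be reported as such.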

REFERENCES

AAN (American Academy of Neurology). 2004. Clinical practice guidelines process manual. http://www.aan.com/globals/axon/assets/3749.pdf (accessed February 1, 2011).

ACCF/AHA. 2009. Methodology manual for ACCF/AHA guideline writing committees. http://www.americanheart.org/downloadable/heart/12378388766452009MethodologyManualACCF_AHAGuidelineWritingCommittees.pdf (accessed July 29, 2009).

ACCP (American College of Chest Physicians). 2009. The ACCP grading system for guideline recommendations. http://www.chestnet.org/education/hsp/gradingSystem.php (accessed February 1, 2011).

Ammerman, A., M. Pignone, L. Fernandez, K. Lohr, A. D. Jacobs, C. Nester, T. Orleans, N. Pender, S. Woolf, S. F. Sutton, L. J. Lux, and L. Whitener. 2002. Counseling to promote a healthy diet. http://www.ahrq.gov/downloads/pub/prevent/pdfser/dietser.pdf (accessed September 26, 2010).

Anello, C., and J. L. Fleiss. 1995. Exploratory or analytic meta-analysis: Should we distinguish between them? Journal of Clinical Epidemiology 48(1):109–116.

Anzures-Cabrera, J., and J. P. T. Higgins. 2010. Graphical displays for meta-analysis: An overview with suggestions for practice. Research Synthesis Methods 1(1):66–89.

Atkins, D. 2007. Creating and synthesizing evidence with decision makers in mind: Integrating evidence from clinical trials and other study designs. Medical Care 45(10 Suppl 2):S16–S22.

Atkins, D., D. Best, P. A. Briss, M. Eccles, Y. Falck-Ytter, S. Flottorp, and GRADE Working Group. 2004a. Grading quality of evidence and strength of recommendations. BMJ 328(7454):1490–1497.

Atkins, D., M. Eccles, S. Flottorp, G. Guyatt, D. Henry, S. Hill, A. Liberati, D. O’Connell, A. D. Oxman, B. Phillips, H. Schünemann, T. T. Edejer, G. Vist, J. Williams, and the GRADE Working Group. 2004b. Systems for grading the quality of evidence and the strength of recommendations I: Critical appraisal of existing approaches. BMC Health Services Research 4(1):38.

Bailar, J. C., III. 1997. The promise and problems of meta-analysis. New England Journal of Medicine 337(8):559–561.

Balshem, H., M. Helfand, H. J. Schünemann, A. D. Oxman, R. Kunz, J. Brozek, G. E. Vist, Y. Falck-Ytter, J. Meerpohl, S. Norris, and G. H. Guyatt. 2011. GRADE guidelines: 3. Rating the quality of evidence. Journal of Clinical Epidemiology (In press).

Berlin, J. A., J. Santanna, C. H. Schmid, L. A. Szczech, H. I. Feldman, and the Anti-lymphocyte Antibody Induction Therapy Study Group. 2002. Individual patient versus group-level data meta-regressions for the investigation of treatment effect modifiers: Ecological bias rears its ugly head. Statistics in Medicine 21(3):371–387.

Berlin, J., and D. Ghersi. 2004. Prospective meta-analysis in dentistry. The Journal of Evidence-Based Dental Practice 4(1):59–64.

———. 2005. Preventing publication bias: Registries and prospective meta-analysis. Publication bias in meta-analysis: Prevention, assessment and adjustments, edited by H. R. Rothstein, A. J. Sutton, and M. Borenstein, pp. 35–48.

Berry, S., K. Ishak, B. Luce, and D. Berry. 2010. Bayesian meta-analyses for comparative effectiveness and informing coverage decisions. Medical Care 48(6):S137.

Borenstein, M. 2009. Introduction to meta-analysis. West Sussex, U.K.: John Wiley & Sons.

Brozek, J. L., E. A. Akl, P. Alonso-Coello, D. Lang, R. Jaeschke, J. W. Williams, B. Phillips, M. Lelgemann, A. Lethaby, J. Bousquet, G. Guyatt, H. J. Schünemann, and the GRADE Working Group. 2009. Grading quality of evidence and strength of recommendations in clinical practice guidelines: Part 1 of 3. An overview of the GRADE approach and grading quality of evidence about interventions. Allergy 64(5):669–677.

CEBM (Centre for Evidence-based Medicine). 2009. Oxford Centre for Evidence-based Medicine – Levels of evidence (March 2009). http://www.cebm.net/index.aspx?o=1025 (accessed February 1, 2011).

Chalmers, I., M. Adams, K. Dickersin, J. Hetherington, W. Tarnow-Mordi, C. Meinert, S. Tonascia, and T. C. Chalmers. 1990. A cohort study of summary reports of controlled trials. JAMA 263(10):1401–1405.

Chou, R., N. Aronson, D. Atkins, A. S. Ismaila, P. Santaguida, D. H. Smith, E. Whitlock, T. J. Wilt, and D. Moher. 2010. AHRQ series paper 4: Assessing harms when comparing medical interventions: AHRQ and the Effective Health Care Program. Journal of Clinical Epidemiology 63(5):502–512.

Cochrane Collaboration. 2010. Cochrane prospective meta-analysis methods group. http://pma.cochrane.org/ (accessed January 27, 2011).

Coleman, C. I., R. Talati, and C. M. White. 2009. A clinician’s perspective on rating the strength of evidence in a systematic review. Pharmacotherapy 29(9):1017–1029.

COMPUS (Canadian Optimal Medication Prescribing and Utilization Service). 2005. Evaluation tools for Canadian Optimal Medication Prescribing and Utilization Service. http://www.cadth.ca/media/compus/pdf/COMPUS_Evaluation_Methodology_final_e.pdf (accessed September 6, 2010).

Cooper, H. M., L. V. Hedges, and J. C. Valentine. 2009. The handbook of research synthesis and meta-analysis, 2nd ed. New York: Russell Sage Foundation.

Cooper, N., A. Sutton, D. Morris, A. Ades, and N. Welton. 2009. Addressing between-study heterogeneity and inconsistency in mixed treatment comparisons: Application to stroke prevention treatments in individuals with non-rheumatic atrial fibrillation. Statistics in Medicine 28(14):1861–1881.

CRD (Centre for Reviews and Dissemination). 2009. Systematic reviews: CRD’s guidance for undertaking reviews in health care. York, U.K.: York Publishing Services, Ltd.

Cummings, P. 2004. Meta-analysis based on standardized effects is unreliable. Archives of Pediatrics & Adolescent Medicine 158(6):595–597.

Deeks, J., J. Higgins, and D. Altman, eds. 2008. Chapter 9: Analysing data and undertaking meta-analyses. In Cochrane handbook for systematic reviews of interventions, edited by J. P. T. Higgins and S. Green. Chichester, UK: John Wiley & Sons.

Devereaux, P. J., D. Heels-Ansdell, C. Lacchetti, T. Haines, K. E. Burns, D. J. Cook, N. Ravindran, S. D. Walter, H. McDonald, S. B. Stone, R. Patel, M. Bhandari, H. J. Schünemann, P. T. Choi, A. M. Bayoumi, J. N. Lavis, T. Sullivan, G. Stoddart, and G. H. Guyatt. 2004. Payments for care at private for-profit and private not-for-profit hospitals: A systematic review and meta-analysis. Canadian Medical Association Journal 170(12):1817–1824.

Dias, S., N. Welton, D. Caldwell, and A. Ades. 2010. Checking consistency in mixed treatment comparison meta-analysis. Statistics in Medicine 29(7–8):932–944.

Dickersin, K. 1990. The existence of publication bias and risk factors for its occurrence. JAMA 263(10):1385–1389.

Dwan, K., D. G. Altman, J. A. Arnaiz, J. Bloom, A.-W. Chan, E. Cronin, E. Decullier, P. J. Easterbrook, E. Von Elm, C. Gamble, D. Ghersi, J. P. A. Ioannidis, J. Simes, and P. R. Williamson. 2008. Systematic review of the empirical evidence of study publication bias and outcome reporting bias. PLoS ONE 3(8):e3081.

Ebell, M. H., J. Siwek, B. D. Weiss, S. H. Woolf, J. Susman, B. Ewigman, and M. Bowman. 2004. Strength of recommendation taxonomy (SORT): A patient-centered approach to grading evidence in medical literature. American Family Physician 69(3):548–556.

Editors. 2005. Reviews: Making sense of an often tangled skein of evidence. Annals of Internal Medicine 142(12 Pt 1):1019–1020.

Egger, M., G. D. Smith, and D. G. Altman. 2001. Systematic reviews in health care: Meta-analysis in context. London, U.K.: BMJ Publishing Group.

Falck-Ytter, Y., H. Schünemann, and G. Guyatt. 2010. AHRQ series commentary 1: Rating the evidence in comparative effectiveness reviews. Journal of Clinical Epidemiology 63(5):474–475.

Faraday, M., H. Hubbard, B. Kosiak, and R. Dmochowski. 2009. Staying at the cutting edge: A review and analysis of evidence reporting and grading; The recommendations of the American Urological Association. BJU International 104(3):294–297.

Federal Coordinating Council for Comparative Effectiveness Research. 2009. Report to the President and the Congress. Available from http://www.hhs.gov/recovery/programs/cer/cerannualrpt.pdf.

Ferreira, P. H., M. L. Ferreira, C. G. Maher, K. Refshauge, R. D. Herbert, and J. Latimer. 2002. Effect of applying different “levels of evidence” criteria on conclusions of Cochrane reviews of interventions for low back pain. Journal of Clinical Epidemiology 55(11):1126–1129.

Fu, R., G. Gartlehner, M. Grant, T. Shamliyan, A. Sedrakyan, T. J. Wilt, L. Griffith, M. Oremus, P. Raina, A. Ismaila, P. Santaguida, J. Lau, and T. A. Trikalinos. 2010. Conducting quantitative synthesis when comparing medical interventions: AHRQ and the Effective Health Care Program. In Methods guide for comparative effectiveness reviews, edited by Agency for Healthcare Research and Quality. http://www.effectivehealthcare.ahrq.gov/index.cfm/search-for-guidesreviews-and-reports/?pageaction=displayProduct&productID=554 (accessed January 19, 2011).